Abstract

Water quality affects many aspects of water availability, from precluding use to societal perceptions of fit-for-purpose. Pathogen source and transport processes are drivers of water quality because they have been responsible for numerous outbreaks resulting in large economic losses due to illness and, in some cases, loss of life. Outbreaks result from very small exposure (e.g., less than 20 viruses) from very strong sources (e.g., trillions of viruses shed by a single infected individual). Thus, unlike solute contaminants, an acute exposure to a very small amount of contaminated water can cause immediate adverse health effects. Similarly, pathogens are larger than solutes. Thus, interactions with surfaces and settling become important even as processes important for solutes such as diffusion become less important. These differences are articulated in “Colloid Filtration Theory”, a separate branch of pore-scale transport. Consequently, understanding pathogen processes requires changes in how groundwater systems are typically characterized, where the focus is on the leading edges of plumes and preferential flow paths, even if such features move only a very small fraction of the aquifer flow. Moreover, the relatively short survival times of pathogens in the subsurface require greater attention to very fast (<10 year) flow paths. By better understanding the differences between pathogen and solute transport mechanisms discussed here, a more encompassing view of water quality and source water protection is attained. With this more holistic view and theoretical understanding, better evaluations can be made regarding drinking water vulnerability and the relation between groundwater and human health.

Résumé

La qualité de l’eau impacte par de nombreux aspects les questions de disponibilité de l’eau, depuis la proscription de son usage jusqu’aux perceptions sociales relatives à l’adéquation des besoins par rapport à l’usage prévu. Les sources de pathogènes et les processus de transport conditionnent la qualité de l’eau, du fait qu’ils sont à l’origine de nombreux foyers infectieux entraînant des pertes économiques importantes dues à la maladie et, dans certains cas, à des pertes de vie. Les foyers infections sont le résultat d’une très petite exposition (par ex., moins de 20 virus) à des sources très virulents (par ex. des trillions de virus transmis par un seul individu infecté). Ainsi, contrairement aux contaminants de type soluté, une exposition aiguë à une très petite quantité d’eau contaminée peut avoir des effets négatifs immédiats sur la santé. De même, les pathogènes sont plus nombreux que les solutés. Par conséquent les interactions entre les surfaces et les zones de dépôts deviennent importantes alors que des processus importants pour les solutés comme la diffusion deviennent moins importants. Ces différences sont définies dans la « Théorie de la Filtration des Colloïdes », une partie distincte du transport à l’échelle porale. Par conséquent, la compréhension des processus des pathogènes exige des modifications dans la manière de caractériser classiquement les systèmes aquifères, où la cible est située sur les bordures avant des panaches et au niveau des chenaux d’écoulement préférentiel, même si de telles structures mobilisent seulement une petite fraction du flux de l’aquifère. De plus, les temps de survie relativement courts des pathogènes dans le milieu souterrain nécessite de porter une plus grande attention aux voies d’écoulement très rapides (<10 ans). 
A travers une meilleure compréhension des différences entre les mécanismes de transport des pathogènes et des solutés telles que discutée ici, la qualité de l’eau et la protection des ressources sont approchées de manière plus large. Avec cette approche plus holistique et cette compréhension théorique, de meilleures évaluations peuvent être faites concernant la vulnérabilité de l’eau potable et la relation entre les eaux souterraines et la santé humaine.

Resumen

La calidad del agua afecta muchos aspectos de la disponibilidad de agua, por excluir del uso que sirve a la sociedad. La fuente de los patógenos y los procesos de transporte son factores determinantes de la calidad del agua, ya que han sido responsables de numerosos brotes que han provocado grandes pérdidas económicas por enfermedad y, en algunos casos, pérdida de vidas. Los brotes resultan de una exposición muy pequeña (por ejemplo, menos de 20 virus) de fuentes muy fuertes (por ejemplo, trillones de virus desprendidos por un solo individuo infectado). Así, a diferencia de los contaminantes de soluto, una exposición aguda a una cantidad muy pequeña de agua contaminada puede causar efectos adversos inmediatos para la salud. Del mismo modo, los patógenos son más grandes que los solutos. Por lo tanto, las interacciones entre las superficies y la sedimentación se vuelven importantes incluso cuando procesos importantes para los solutos tales como la difusión llegan a ser menos importantes. Estas diferencias se articulan en la “Colloid Filtration Theory”, una rama separada del transporte a escala de poros. En consecuencia, la comprensión de los procesos patógenos requiere cambios en la forma en que los sistemas de agua subterránea son normalmente caracterizados, donde el foco está en los límites delanteros de las plumas y las trayectorias preferenciales de flujo, incluso si tales características mueven sólo una pequeña fracción del flujo del acuífero. Además, los tiempos relativamente cortos de supervivencia de los patógenos en la subsuperficie requieren una mayor atención a las trayectorias rápidas de flujo (<10 años). Mediante una mejor comprensión de las diferencias entre los mecanismos de transporte de patógenos y de soluto que aquí se discuten, se alcanza una visión más amplia de la calidad del agua y la protección del agua a la fuente. 
Con esta visión más holística y la comprensión teórica, se pueden hacer mejores evaluaciones con respecto a la vulnerabilidad del agua potable y la relación entre el agua subterránea y la salud humana.

摘要

水质影响谁可用性的很多方面,从妨碍水的利用到符合目的的社会认知。病原体源及运移过程是水质的驱动者,因为它们对众多的疾病爆发负责,这些爆发引起疾病蔓延、有些情况下导致人员丧命,致使蒙受重大的经济损失。爆发起因于非常强的来源(例如从单个感染的个体中分裂出的亿万个病毒)非常小的暴露(例如不到20个病毒)。因此,和溶质污染物不同,短时间暴露于非常少量的污染水可立即引起对健康不利的影响。同样,病原体比溶质更大。因此,与表面的相互作用及着附就非常重要,正如过程对溶质溶质非常重要一样,而扩散就不显得那么重要。这些区别在孔隙尺度运移的单独分支“胶体过滤理论”中有明确的论述。所以,了解病原体过程需要在怎样典型描述地下水系统中有所改变,重点放在主要羽状物边缘及优先流通道,即使这样的特征影响到很小一部分含水层水流。此外,地下病原体相对短的存活时间需要更加注意非常快(<10年)的水流通道。通过更好地了解这里探讨的病原体和溶质运移机理之间的差异,可以获取更加包容的水质和源水保护方面的认识。有了更加全面的整体观和理论认识,可以对饮用水可用性及地下水和人类健康之间的关系作出更好的评价。

Resumo

A qualidade da água afeta diversos aspectos da disponibilidade de água, do impedimento de sua utilização a percepções sociais da adequação do uso. Fontes de patógenos e processos de transporte são condicionantes da qualidade da água pois eles têm sido responsáveis por numerosos surtos resultando em grandes perdas econômicas em razão de enfermidades e, em alguns casos, perdas de vidas. Epidemias resultam de exposições muito pequenas (p. ex. menos que 20 vírus) a fontes muito fortes (p. ex. trilhões de vírus transmitidos por um único indivíduo infectado). Assim, ao contrário dos contaminantes de soluto, uma exposição aguda a uma quantidade muito pequena de água contaminada pode causar efeitos adversos imediatos à saúde. Da mesma forma, os patógenos são maiores do que os solutos. Assim, as interações com superfícies e sedimentação tornam-se importantes mesmo quando os processos importantes para solutos como a difusão se tornam menos importantes. Essas diferenças são expressas na “Teoria de Filteração de Colóides”, um ramo separado de transporte na escala do poro. Consequentemente, a compreensão dos processos patogénicos requer alterações na forma como os sistemas de águas subterrâneas são tipicamente caracterizados, onde o foco está nos limites das plumas e caminhos de fluxo preferencial, mesmo que essas características movam apenas uma fracção muito pequena do fluxo do aquífero. Além disso, os tempos de sobrevivência relativamente curtos dos agentes patogénicos na subsuperfície requerem uma maior atenção para caminhos de fluxo muito rápidos (<10 anos). Ao compreender melhor as diferenças entre os mecanismos de transporte de agentes patogénicos e de soluto aqui discutidos, alcança-se uma visão mais abrangente da qualidade da água e da protecção das fontes de água. Com esta visão mais holística e compreensão teórica, podem ser feitas melhores avaliações sobre a vulnerabilidade da água potável e a relação entre as águas subterrâneas e a saúde humana.

Introduction

In recent years, the concept of sustainable water use, and water availability, has come to the forefront. To be considered available, however, the water must have a suitable quality (Warner et al. 2016); moreover, the definition of suitable quality can evolve over time as analysis techniques improve and societal perceptions change. Recent advances in the understanding of pathogens in groundwater are prime examples of these concepts. Pathogens are here defined as bacteria, viruses, or other microorganisms that can cause disease. Pathogens are responsible for nearly all episodic disease outbreaks, and are present in many groundwater supplies (e.g., Abbaszadegan et al. 2003; Borchardt et al. 2003; Fout et al. 2003; USEPA 2006; Craun et al. 2010). The economic cost of pathogen-related illness can be appreciable, on the order of hundreds of thousands of US dollars per year for an individual US state from human enteric viruses alone (Warner et al. 2016). Clearly, increased understanding of pathogen source and transport can only serve to facilitate improved human health and associated economic benefits.

Pathogen transport has been extensively investigated (e.g., Gerba et al. 1975; Vilker 1978, 1980; Vilker and Burge 1980; Gerba 1983; Lance and Gerba 1984; Yates et al. 1987; Harvey and Garabedian 1991; Bales et al. 1989, 1993; Powelson et al. 1993; Harvey 1997; Pieper et al. 1997; DeBorde et al. 1998, 1999; Woessner et al. 2001), although this work often focused on easy-to-measure targets (bacteriophage, inorganic tracers) because other targets such as viruses were both difficult and expensive to analyze. As a result, understanding of pathogen source and transport also evolves as analytical techniques improve. For example, as recently as the early 2000s, typical characterization consisted of detecting virus presence versus absence (not concentration) by conventional polymerase chain reaction (PCR; e.g., Abbaszadegan et al. 1999). Quantification of virus concentration could only be accomplished, if at all, by laborious and expensive culture methods restricted to a small subset of virus types. Now, with the advancement of real-time quantitative PCR (qPCR), the quantities of many virus types can be reliably measured with high throughput, at low cost, and with less labor (Hunt et al. 2014). Detailed genetic information on virus subtypes can also be obtained with high-throughput sequencers, which are widely available; therefore, from a theoretical standpoint, this newly developed technology has facilitated new insights into the behavior of viruses in the environment and the associated vulnerability of drinking water supplies. Over this same period, theory to predict pathogen transport has advanced to account for transport behaviors that are observed for pathogens but not typically seen for solutes.

Classical contaminant transport theory describes the advection and dispersion of solutes, and typically employs equilibrium distribution constants to describe the transport of solutes that interact with porous media surfaces. Examples include solutes such as DDT, PCBs, and dioxins, as well as a host of other chemicals potentially dangerous to human health. When considering these chemical contaminants, it is important to note that acute exposure generally poses little immediate risk to human health. Rather, it is the chronic exposure that is of most concern, for example in cases where the risk of exposure to a solute contaminant is assessed using a prescribed number of decades over which one drinks a specified volume of contaminated water. Pathogen risk assessment, on the other hand, focuses on acute risks, which influences the determination of which transport behaviors are important. In most cases, for example, pathogen transport is concerned with characterizing short-term, exceedingly small fractions of the bulk contamination, often at the leading edge of the plume. In contrast, risk assessments of solute contamination typically have a longer-term focus on the bulk transport behavior of contamination.

Here, traditional solute transport approaches are formally contrasted with approaches needed to characterize pathogen transport. The focus here is primarily on pathogen transport and how it differs from the more widely understood (and extensively published) applied-solute-transport concepts. The hope is that awareness of such contrasts provides a more holistic understanding of the fundamental processes governing the transport behaviors of solutes and pathogens. This holistic view, in turn, allows practitioners to obtain a more complete assessment of vulnerability, and availability, of groundwater resources in the 21st century.

Pathogen sources and importance for transport

Pathogen contamination requires a fundamental shift from the classical view of contaminant transport (Hunt et al. 2010). Small concentrations of contaminant solute molecules (or “toxic solutes”) are typically not considered an actionable health risk because they cannot replicate, and because physiological systems have at least modest ability to prevent toxic outcomes; thus, contaminant removal during water treatment focuses on reducing them to a concentration below a level set by regulation, such as a “maximum contaminant level” (MCL). The lowest MCLs commonly lie within the parts per million (ppm) and parts per billion (ppb) range. Although one might consider such concentrations to be very low, the number of contaminant molecules is appreciable: for example, the benzene MCL of 5 ppb equates to the presence of 38 × 10^15 benzene molecules per liter (38 quadrillion per liter). Pathogens, on the other hand, can cause infection at exceedingly low levels of exposure, i.e., it may take only a very small number of virus particles (e.g., <20; CDC 2016) to make a healthy individual ill. Rather than attaining an MCL, the treatment goal is to remove all pathogens from water during treatment. Because total removal is not technologically feasible or practical, such a protective goal is typically stated as a “4-log reduction” (i.e., 4 orders of magnitude reduction) in pathogen concentration.
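The molecule count quoted above can be checked with a short calculation. This is a minimal sketch, assuming that 5 ppb is read as 5 μg/L and using a standard molar mass for benzene; neither value is stated in the text, and the function name is purely illustrative.

```python
AVOGADRO = 6.02214076e23      # molecules per mole
BENZENE_MOLAR_MASS = 78.11    # g/mol (assumed standard value, not given in the text)

def molecules_per_liter(conc_ug_per_l, molar_mass_g_per_mol):
    """Convert a mass concentration in ug/L to a number of molecules per liter."""
    grams_per_liter = conc_ug_per_l * 1e-6
    return grams_per_liter / molar_mass_g_per_mol * AVOGADRO

# 5 ppb is taken here as 5 ug/L (assumption for dilute aqueous solutions).
mcl_molecules = molecules_per_liter(5.0, BENZENE_MOLAR_MASS)
print(f"{mcl_molecules:.2e} benzene molecules per liter at the MCL")
```

Under these assumptions the result is on the order of 10^16 molecules per liter, consistent with the tens of quadrillions quoted above.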

There are also important differences with respect to contaminant source: solute contaminants do not reproduce, whereas pathogen contaminants can. Using the aforementioned example, a pure liquid benzene spill can theoretically produce a toxic solute concentration of 1,100 μg/L in water, which equates to 8.5 × 10^18 benzene molecules per liter (8,500 quadrillion per liter), or about 220 times the MCL for benzene. In contrast, a small virus exposure can result in an infected person who sheds very large numbers of viruses, numbering in the quadrillions per liter of feces (e.g., Feachem et al. 1983). Even if one assumes dilution by a factor of 1,000 during mixing with water, the contaminated water may contain virus concentrations in the trillions per liter range, which is a factor of one trillion (10^12) greater than, for example, a goal of 1 pathogen per liter. The contrast is vast: a removal factor of 220 for the highest possible concentration of benzene versus a removal factor of one trillion for a high concentration of viruses. Fortunately, in addition to dilution, pathogens undergo death or inactivation; the result of death, inactivation, and other processes is that most pathogens are commonly degraded in the subsurface over relatively short time horizons (months to < 10 years; e.g., John and Rose 2005; Seitz et al. 2011).
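The contrast in required removal can be expressed in orders of magnitude. The sketch below uses only the figures quoted above, with 10^15 viruses per liter of feces taken as a round stand-in for "quadrillions" (an assumption); the helper name `log_removal` is hypothetical.

```python
import math

def log_removal(source, target):
    """Orders of magnitude (log10) of reduction needed to go from source to target."""
    return math.log10(source / target)

# Benzene: highest concentration quoted in the text versus the 5 ug/L MCL.
benzene_factor = 1100.0 / 5.0

# Viruses: 1e15 per liter of feces (round number, assumed), diluted 1000-fold,
# compared with an illustrative goal of 1 virus per liter.
virus_in_water = 1e15 / 1000.0
virus_factor = virus_in_water / 1.0

print(f"benzene: factor {benzene_factor:.0f} ({log_removal(1100.0, 5.0):.1f} log)")
print(f"viruses: factor {virus_factor:.0e} ({log_removal(virus_in_water, 1.0):.0f} log)")
```

Seen this way, the benzene case calls for a little over 2 log of removal, whereas the virus case calls for about 12 log, well beyond a 4-log treatment goal on its own.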

Sources for pathogens can be exceedingly varied and widespread. For example, both sanitary sewer exfiltration (e.g., Hunt et al. 2010; Bradbury et al. 2013) and surface water downstream of a wastewater treatment plant (e.g., Borchardt et al. 2004) can be important sources of human enteric viruses to groundwater. The geometry and distribution of pathogen contamination can also be difficult to characterize. Sanitary sewer lines underlie most medium to large communities, typically in gravel-lined trenches, and they are known to leak. Sanitary sewer leakage has been estimated at 30% of system flow as a result of infrastructure deterioration; in local areas, leakage has been reported to be as high as 50% of the system flow (USEPA 1989). The ubiquity of pathogen sources can be expected to cause widespread pathogen occurrence in adjacent groundwater systems. In one recent investigation of 14 community drinking-water supplies, groundwater from each community had virus contamination (Borchardt et al. 2012).

The low numbers of pathogens needed to cause illness, and the variety and distribution of potential sources, result in pathogen contamination manifesting itself differently from solute contamination. Rather than a risk-based approach that considers chronic exposure, pathogen exposures are instead characterized by acute episodes that can result in asymptomatic infection, sporadic illness in the exposed population, or disease outbreaks. The minimum infective dose can vary widely among pathogens, as can their ability to move in the subsurface; thus, the temporal nature of the pathogen occurrence itself in a groundwater system can be complex (Hunt et al. 2010).

It is common for groundwater wells in a pathogen source area to show pathogen occurrence given sufficient sampling over time. It is rare, however, for all wells to show simultaneous occurrence. The degree of pathogen-related illness in a human population is well recognized as being temporally variable (e.g., “flu season”). Moreover, low-level pathogen contamination is also more dynamic than toxic solute contamination because mobilization of pathogens can vary over time. Bradbury et al. (2013) noted that times of expected high groundwater recharge were commonly followed within weeks by virus presence in municipal well water. Moreover, the type of virus at the well was similar to what was seen in the waste stream (sewerage system) near the time of the groundwater recharge. This suggests that unsaturated zone transport may be limiting, where the unsaturated zone may serve as a holding reservoir between pathogen mobilization events (e.g., Tafuri and Selvakumar 2002), where the mobilization becomes rapid during recharge (e.g., McCarthy and McKay 2004; Bradford et al. 2013; Gotkowitz et al. 2016). As a result of these factors, pathogen sources can be very dynamic and not suited for more stable source terms appropriate for many solute contamination applications. This lack of stationarity holds true even if the source location itself is not variable, as is the case of a wastewater-treatment-plant outfall or a recurring leak in an urban sanitary sewer system.

Transport of pathogens versus solutes: underlying nanoscale mechanisms

This section examines attributes of pathogens that originate in nanoscale interactions but which influence their macroscale transport and produce well-noted contrasting behaviors for pathogens relative to solutes. A primary property of pathogens is their large size relative to solutes, which ripples through to additional characteristics that influence their transport. Solute sizes range from 0.1 nm for simple ions (e.g., chloride) to several nm for proteins. Excluding prions (pathogenic proteins), viruses are the smallest among pathogens (27–75 nm). Using an average size of 50 nm, viruses are about 20 times larger than proteins and ~500 times larger than simple ions. Bacteria (~1 μm) are about 400 and 10,000 times larger than proteins and simple ions, respectively, while Cryptosporidium oocysts (~5 μm) and Giardia (~10 μm) are larger still. The large size of these “colloids” relative to solutes yields two primary characteristics that govern how their transport contrasts with that of solutes: (1) low diffusion rates relative to solutes, which limit their ability to get near, or “find”, surfaces; and (2) longer time for interactions with surfaces relative to solutes, which holds, or “lands”, them on surfaces. Note that another impact of the large size of pathogens relative to solutes is their potential to be strained, that is, removed from water by entrapment in pore throats too small for them to pass. Straining depends primarily on colloid and pore throat size, and is discussed further in the following. In this section, non-straining removal is described: the nanoscale interactions that contribute to contrasting transport behaviors of pathogens versus solutes even when both are far smaller than the smallest pore throats in a porous medium.

The “finding” of surfaces in porous media is often a direct function of diffusion. The relatively high diffusion rates associated with solutes result in random trajectories that are not predictable at the pore scale. Although diffusion occurs in the direction from higher to lower solute concentration, this directed flux is driven by the small-scale random motion of solutes that causes the molecular “cloud” to spread from higher to lower solute concentration. The greater range of diffusive motion of solutes (relative to pathogens) ensures they will reach grain surfaces under typical groundwater conditions (e.g., pore widths in the ~100-μm size range and average pore-water velocities in the ~1-m/day range or less). Although the highly diffusive nature of solutes makes their trajectories random and unpredictable at the pore scale, it makes their macroscale transport relatively simple to simulate. In contrast, the relatively low diffusion of pathogens and other colloids makes their pore-scale transport predictable, because their likelihood of reaching grain surfaces largely depends on whether they are situated on a fluid streamline that brings them sufficiently close to grain surfaces to intercept them and allow attachment.
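A rough calculation illustrates why solutes reliably "find" surfaces while colloids may not. The sketch below is a hedged illustration, not part of the original analysis: it assumes the Stokes-Einstein relation for a sphere (only approximate for simple ions), water near 20 °C, and a one-dimensional root-mean-square displacement of sqrt(2Dt). The pore width and velocity are the typical values quoted above; the particle sizes come from the preceding section.

```python
import math

K_B = 1.380649e-23     # Boltzmann constant, J/K
TEMP = 293.15          # K (assumed groundwater temperature of 20 C)
VISCOSITY = 1.0e-3     # Pa*s (assumed, water near 20 C)

def stokes_einstein(diameter_m):
    """Diffusion coefficient (m^2/s) of a sphere with the given diameter."""
    return K_B * TEMP / (3.0 * math.pi * VISCOSITY * diameter_m)

def diffusion_length(diff_coeff, time_s):
    """Root-mean-square one-dimensional displacement sqrt(2 D t), in meters."""
    return math.sqrt(2.0 * diff_coeff * time_s)

PORE_WIDTH = 100e-6                    # m (~100 um, from the text)
VELOCITY = 1.0 / 86400.0               # m/s (~1 m/day, from the text)
transit_time = PORE_WIDTH / VELOCITY   # time for water to travel one pore width

sizes = {"simple ion": 0.1e-9, "virus": 50e-9, "bacterium": 1e-6}
for name, diameter in sizes.items():
    reach = diffusion_length(stokes_einstein(diameter), transit_time)
    print(f"{name:10s} diffusive reach / pore width = {reach / PORE_WIDTH:.2f}")
```

With these assumed values, the diffusive reach of a simple ion during one pore transit exceeds the pore width, whereas the reach of a virus or bacterium is only a small fraction of it, so whether a colloid is intercepted depends mainly on the streamline it occupies.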

A primary concept of pore-scale fluid flow fields is the forward flow stagnation zone (FFSZ) of a grain (Yao et al. 1971; Rajagopalan and Tien 1976), where flow diverges as it approaches the upstream grain surface (Fig. 1). At the pore scale, the fluid streamlines most likely to allow colloids to intercept the grain surface are those closest to the FFSZ axis. Coupled to this influence of fluid streamlines on colloid delivery to grain surfaces is the ability of colloids to cross streamlines to reach surfaces via diffusion and gravitational settling. Colloid filtration theory (CFT) predicts particle attachment rate constants based on particle trajectories determined via a mechanistic force and torque balance involving diffusion, settling, fluid drag, and colloid-surface interaction forces in a flow field representing porous media (Yao et al. 1971; Rajagopalan and Tien 1976).

Fig. 1 (a) Schematic of a Happel sphere-in-cell flow field geometry, and (b) scale-up of the Happel collector efficiency (η) to the continuum rate constant (k_f), using the relationship for relative concentration (C/C_0) from the advection-dispersion equation (ADE) under steady-state conditions. N_c: number of collectors; L: length of transport; v: average pore-water velocity. Modified from Johnson and Hilpert (2013)

The predominantly used representative flow field is the Happel sphere-in-cell (Happel 1958), a spherical fluid envelope with thickness scaled to represent desired porosity, and with flow divergence and convergence on its upstream and downstream sides, respectively, and radial symmetry about the flow stagnation axis (Fig. 1). Performing mechanistic trajectory simulations for many starting locations in a plane upstream of the grain results in a pore-scale collector efficiency (η), which represents the number of particles intercepted relative to the number of particles introduced for a given set of conditions (colloid size, fluid velocity, etc.). Heuristic expressions to predict η across a range of conditions have been developed to approximate the mechanistic simulations (e.g., Nelson and Ginn 2011). The collector efficiency is upscaled to an attachment rate constant (k_f) for use in the continuum advection-dispersion equation, assuming that all interception equals attachment and that porous media can be represented as a series of Happel collectors as shown in Fig. 1. CFT predicts k_f well for colloids of opposite charge to porous media surfaces (Elimelech and O’Melia 1990), with the presumption that colloids and porous media grains are spheroidal and uniformly sized (Long and Hilpert 2009; Pazmino et al. 2011).
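The upscaling step can be sketched numerically. The example below is a simplified illustration under stated assumptions: each collector in a series of N_c collectors is taken to remove a fraction η of the colloids reaching it, dispersion is neglected, and the resulting (1 − η)^N_c is equated with the steady-state continuum expression exp(−k_f L / v). How N_c relates to grain size and porosity in the Happel geometry is not reproduced here, so `collectors_per_meter` and the numerical values are hypothetical.

```python
import math

def rate_constant_from_eta(eta, collectors_per_meter, velocity):
    """Return k_f such that exp(-k_f * L / v) equals (1 - eta) ** N_c."""
    if not 0.0 <= eta < 1.0:
        raise ValueError("eta must lie in [0, 1)")
    return -collectors_per_meter * velocity * math.log(1.0 - eta)

eta = 0.01                    # hypothetical collector efficiency
collectors_per_meter = 2000   # hypothetical N_c / L
velocity = 1.0                # m/day
length = 1.0                  # m

k_f = rate_constant_from_eta(eta, collectors_per_meter, velocity)
series = (1.0 - eta) ** (collectors_per_meter * length)   # collector-by-collector view
continuum = math.exp(-k_f * length / velocity)            # continuum (rate constant) view
print(f"k_f = {k_f:.2f} per day; C/C_0 series = {series:.3e}, continuum = {continuum:.3e}")
```

The two views give the same relative concentration by construction, which is the sense in which a pore-scale efficiency can stand in for a continuum rate constant.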

Because pathogens and other colloids themselves occupy a range of sizes spanning more than two orders of magnitude (e.g., ~50–10,000 nm), the smallest colloids have relatively high diffusion, whereas the largest colloids have relatively high gravitational settling. Both of these processes enhance interception of grain surfaces. The result is that, under typical groundwater conditions, the ~1,000–2,000-nm colloid size range is predicted to undergo the least interception because this size range is least assisted by combined diffusion and settling that enhance interception at surfaces. In the laboratory, CFT predicts well the observed non-linear relationship between fluid velocity and colloid breakthrough, at least under conditions where no colloid-surface repulsion exists, and all colloids “land” upon “finding” the surface (e.g., Tong and Johnson 2006).

Colloid “landing” on surfaces after “finding” them, however, is an extremely complex process that is difficult to predict. Colloids interact with grain surfaces via a complex combination of forces that vary with colloid-surface separation distance. These forces include van der Waals attraction and electric double layer repulsion, which act across tens to hundreds of nm separation distances, as well as other shorter-range forces (e.g., Born, steric, Lewis acid-base; Israelachvili 2011). All of these forces influence whether a colloid will experience net attraction or repulsion to a surface, and because they have different distances over which they extend, they together produce a complex profile of net attraction and repulsion that varies with colloid-grain separation distance (Israelachvili 2011; Fig. 2). Because most pathogens and environmental surfaces are negatively charged, the electric double layer interactions are typically repulsive, leading to a profile for which colloid-grain interactions are net attractive at the largest separation distances where weak van der Waals attraction dominates (e.g., tens to hundreds of nm) to form a zone of “secondary minimum” attraction (Fig. 2). At closer separation distances, electric double layer repulsion dominates, creating a “barrier” to attachment. At still closer separation distances (e.g., within a few nm), van der Waals attraction is sufficiently strong to overwhelm electric double layer repulsion, forming a strongly attractive “primary minimum” wherein a colloid may attach to (“land on”) the surface (Fig. 2).

For given surface and solution properties, the magnitudes of the forces increase directly with colloid size such that colloids experience stronger primary minimum attraction relative to solutes. For a given colloid size and surface properties, the barrier magnitude is inversely related to solution ionic strength (Israelachvili 2011). This classic description of colloid-surface interactions shows qualitative correspondence with many observed influences of surface and solution chemistry on colloid transport in porous media; e.g., colloid breakthrough concentrations are inversely related to solution ionic strength (e.g., Elimelech and O’Melia 1990; Li et al. 2004; Tufenkji and Elimelech 2004). However, this classic approach assumes that measured surface properties are homogeneous such that bulk repulsive surfaces are everywhere repulsive. As such, this “mean field theory” fails to predict colloid attachment to grain surfaces under environmental conditions because it fails to recognize the nanoscale surface chemical heterogeneity and roughness that change the balance of attraction and repulsion experienced by colloids interacting with surfaces. This complex subject is not described further here; in brief, chemical heterogeneity and roughness on surfaces in both field and laboratory settings create local nanoscale zones of attraction that allow colloids to “land” on bulk repulsive surfaces (e.g., Johnson et al. 2010; Pazmino et al. 2014b), and colloid transport theory is now catching up to these observations (e.g., Bhattacharjee et al. 1998; Hoek et al. 2003; Duffadar et al. 2009; Ma et al. 2011; Bendersky and Davis 2011; Pazmino et al. 2014a, b; Trauscht et al. 2015).
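The classic mean-field profile described above can be sketched with textbook expressions. The example below is an illustration under several assumptions that do not come from the source: a non-retarded sphere-plate van der Waals term, a linear-superposition double-layer term for a 1:1 electrolyte, and hypothetical values for the Hamaker constant, zeta potentials, and colloid radius. Born and other short-range forces are omitted, so the primary minimum appears only as the unbounded attraction at vanishing separation. Because the surfaces are treated as uniform, the sketch inherits the mean-field limitation discussed above.

```python
import math

K_B, E_CHARGE, N_A = 1.380649e-23, 1.602176634e-19, 6.02214076e23
EPS = 78.5 * 8.8541878128e-12   # permittivity of water, F/m (assumed, 25 C)
KT = K_B * 298.15               # thermal energy, J

def debye_kappa(ionic_strength_molar):
    """Inverse Debye length (1/m) for a 1:1 electrolyte."""
    return math.sqrt(2.0 * N_A * E_CHARGE**2 * ionic_strength_molar * 1000.0 / (EPS * KT))

def net_energy_kt(h, radius, zeta_colloid, zeta_grain, ionic_strength, hamaker=1e-20):
    """Sphere-plate van der Waals plus double-layer energy at separation h, in kT."""
    vdw = -hamaker * radius / (6.0 * h)
    gamma_c = math.tanh(E_CHARGE * zeta_colloid / (4.0 * KT))
    gamma_g = math.tanh(E_CHARGE * zeta_grain / (4.0 * KT))
    edl = (64.0 * math.pi * EPS * radius * (KT / E_CHARGE) ** 2
           * gamma_c * gamma_g * math.exp(-debye_kappa(ionic_strength) * h))
    return (vdw + edl) / KT

def barrier_and_secondary_minimum(ionic_strength, radius=0.5e-6, zeta=-0.030):
    """Scan 0.3 nm to ~110 nm; return (barrier, h_barrier, sec_min, h_sec_min)."""
    seps = [0.3e-9 * 1.02 ** i for i in range(300)]
    energies = [net_energy_kt(h, radius, zeta, zeta, ionic_strength) for h in seps]
    i_bar = max(range(len(seps)), key=energies.__getitem__)
    i_sec = min(range(i_bar, len(seps)), key=energies.__getitem__)
    return energies[i_bar], seps[i_bar], energies[i_sec], seps[i_sec]

for ionic in (0.01, 0.1):   # mol/L, hypothetical solution chemistries
    barrier, h_bar, sec_min, h_sec = barrier_and_secondary_minimum(ionic)
    print(f"I = {ionic} M: barrier {barrier:.0f} kT at {h_bar * 1e9:.1f} nm, "
          f"secondary minimum {sec_min:.1f} kT at {h_sec * 1e9:.0f} nm")
```

For these hypothetical parameters the scan finds a repulsive barrier at roughly nanometer separation with a shallow secondary minimum farther out, and the barrier is lower at the higher ionic strength, in line with the qualitative trends described in the text.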

Transport of pathogens versus solutes: macroscale behaviors

Pore-scale trajectories of solutes are random and unpredictable; however, high diffusion largely guarantees that solutes will reach porous media surfaces under many groundwater conditions. Furthermore, the high diffusion and relatively short-range attractive interaction forces holding solutes to surfaces allow them to rapidly swap onto and off of surfaces, facilitating equilibrium for the distribution of solute between water and the porous media. Such equilibrium is well observed for ion exchange or hydrophobic partitioning processes, which are commonly described by linear equilibrium distribution coefficients (K_d) that are measured in the laboratory for a given solute with a given porous medium. Incorporation of K_d into the advection-dispersion equation for transport produces a retardation factor (R), which describes the longer travel time of a sorbing solute relative to bulk groundwater (Fig. 3). This process is often described using breakthrough to a given relative concentration (C/C_0) in an aquifer with a given volumetric water content (θ) and bulk density (ρ_b), and a constant source concentration of contaminant (C_0).
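For linear equilibrium sorption, the retardation factor follows the standard relationship R = 1 + ρ_b K_d / θ. A minimal sketch is given below; the parameter values and the 100-day groundwater travel time are hypothetical and chosen only to show the arithmetic.

```python
def retardation_factor(k_d, bulk_density, water_content):
    """R = 1 + rho_b * K_d / theta; dimensionless when units are consistent."""
    return 1.0 + bulk_density * k_d / water_content

# Hypothetical values: K_d = 0.5 L/kg, rho_b = 1.6 kg/L, theta = 0.3
R = retardation_factor(0.5, 1.6, 0.3)

# A sorbing solute takes R times longer than bulk groundwater to arrive.
travel_time_water_days = 100.0
print(f"R = {R:.2f}; solute arrives after about {R * travel_time_water_days:.0f} days")
```

Note that retardation delays arrival but, for a constant source, does not lower the concentration that is eventually reached.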

Fig. 3 Typical breakthrough curve profiles that illustrate how equilibrium partitioning (i.e., retardation; solid green line) and filtration (i.e., kinetic removal; blue line) impact breakthrough behavior relative to a conservative tracer (red line). The variables in the equations are defined as: C effluent concentration; v pore-water velocity; t time; x position; θ porosity; D dispersivity; K_d partition coefficient; ρ_b bulk density; R retardation coefficient; k_f filtration rate coefficient; C_0 initial (injection) concentration; and L transport distance. Reproduced from Molnar et al. 2015

In contrast, retention of pathogens and other colloids on surfaces is largely irreversible (in the absence of perturbations in flow or chemistry) due to strong van der Waals attraction and relatively weak back diffusion for colloids relative to solutes (Johnson et al. 2010; Pazmino et al. 2014a). Therefore, pathogens (and other colloids) do not rapidly swap onto and off of surfaces like solutes. Thus, rather than the equilibrium K_d representative of a solute, the predicted likelihood of colloids finding and landing on attachment sites is represented by an irreversible attachment rate constant (k_f). The rate constant, k_f, is derived by upscaling the likelihood of colloids finding surfaces (via interception, as already described and shown in Fig. 1) and conceptualizing macroscale porous media as a series of FFSZs (Nelson and Ginn 2011; Johnson and Hilpert 2013). When k_f is employed in the advection-dispersion equation, it produces a reduced steady-state breakthrough that always results in C/C_0 < 1. That is, for colloids, the concentration passing a given transport distance will be lower than the source concentration even under steady-state conditions (Fig. 3), and this relative concentration will be further reduced with increasing transport distance. With sufficient distance, the mobile concentration is effectively reduced to zero.
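The steady-state reduction with distance can be illustrated with a simple first-order expression. The sketch below assumes that dispersion is negligible, so that C/C_0 = exp(−k_f L / v); the rate constant and velocity are hypothetical, and the function names are illustrative only.

```python
import math

def steady_state_c_over_c0(k_f, distance, velocity):
    """Relative concentration after a given travel distance (dispersion neglected)."""
    return math.exp(-k_f * distance / velocity)

def distance_for_log_removal(n_log, k_f, velocity):
    """Travel distance needed for an n-log (10**-n) reduction in concentration."""
    return n_log * math.log(10.0) * velocity / k_f

k_f = 0.5        # 1/day, hypothetical attachment rate constant
velocity = 1.0   # m/day, typical value quoted earlier in the text

d4 = distance_for_log_removal(4, k_f, velocity)
print(f"4-log removal requires about {d4:.1f} m of travel")
print(f"C/C_0 at that distance: {steady_state_c_over_c0(k_f, d4, velocity):.1e}")
```

Because the travel distance required for a given log reduction scales with v / k_f in this simplified picture, fast preferential flow paths with little surface contact would need far longer distances to achieve the same removal.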

These concepts form an important conceptual difference between many solutes and pathogens: equilibrium (fully reversible) linear sorption that retards contaminant breakthrough to later times is not characteristic of pathogenic contaminants. Rather, irreversible pathogen “landing” on grains results in pathogen breakthrough at steady-state concentrations that are lower than the source (Fig. 3). The concepts discussed here are most easily seen during steady-state flow conditions with constant source concentration; transient flow and/or source concentration, however, can complicate these distinctions. Furthermore, the aforementioned contrast in behaviors between solutes and pathogens blurs when one considers irreversible processes for solutes (e.g., degradation, chemical precipitation). Still, understanding these contrasts in “textbook” behavior is useful for describing the contrasting transport behaviors of pathogens versus solutes.

Although it is true that pathogen sorption is typically considered irreversible, this association can be reversed by perturbations such as increased flow and reduced solution ionic strength (Hahn and O’Melia 2004; Shen et al. 2007; Pazmino et al. 2014a). Mechanistic simulations (e.g., Pazmino et al. 2014a) demonstrate that reduced ionic strength can increase net colloid-grain repulsive interactions, and increased fluid velocity increases the torque driving colloid mobilization. Such a nanoscale phenomenon likely contributes to the macroscale observation that disease outbreaks are typically associated with heavy rainfall (e.g., citations in Pazmino et al. 2014a). These processes likely act in concert with hydrologic impacts such as enhanced unsaturated zone transport resulting from higher precipitation-driven infiltration (e.g., McCarthy and McKay 2004).

To this point the focus has been on CFT physicochemical filtration of pathogens (colloids) as a means of explaining their macroscale transport behaviors relative to solutes; however, in natural porous media with fine or non-uniform grain sizes, one can expect straining to serve as an important mechanism of colloid capture. Furthermore, although CFT physicochemical filtration may be the primary capture process in clean porous media, the continued capture of colloids on surfaces will eventually constrict pore throats, and the mechanism of capture will evolve toward straining. It should be noted that straining is a purely physical process related to the pore size being smaller than the colloid. The influence of pore-scale flow field attributes such as FFSZs, diffusion/settling, and colloid-surface interaction, as captured in CFT (the “finding” of and “landing” on surfaces), is not relevant to straining. Simply put, colloids larger than pore throats will get stuck, unequivocally. The most fundamental driver of the straining process is the pore-throat-size distribution. Because straining further closes pore throats, flow through a porous medium will eventually be reduced and require greater driving pressure, which is why engineered granular filters must eventually be back-flushed.

With respect to capture mechanisms for pathogens in granular aquifers, physicochemical filtration versus straining processes may seemingly result in contrasting outcomes. Although not representative, for simplicity let it be assumed that no repulsion exists between pathogens and grain surfaces in aquifers. In that case physicochemical filtration would predict that colloids in the size range of bacteria (~1,000–2,000 nm) should be most prevalent in groundwater because of their relatively low combined diffusion and sedimentation relative to smaller (e.g., viruses) and larger (e.g., protists) colloids. In contrast, straining as the primary capture mechanism predicts the greatest mobility for the smallest colloids because they are least likely to be strained. In fact, viruses are commonly observed in porous media and drinking water wells when larger pathogens are not (e.g., Borchardt et al. 2004; Wu et al. 2011), which supports straining as a primary capture mechanism in aquifers. However, when one no longer assumes that repulsion is absent between pathogens and surfaces, physicochemical filtration may not predict 1,000–2,000-nm-sized bacteria to be most prevalent once repulsion (and the influence of heterogeneity) is accurately incorporated into models. Furthermore, in practice, the different source concentrations, longevities, and ecological differences between viruses and bacteria may increase the prevalence of smaller pathogens.
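The size dependence of physicochemical filtration in the absence of repulsion can be sketched with the classic single-collector efficiency terms of Yao et al. (1971) for diffusion, interception, and sedimentation. This is only one of several published correlations (later ones differ in detail), and the grain size, approach velocity, temperature, and particle density below are assumed values chosen for illustration.

```python
BOLTZMANN = 1.380649e-23  # J/K


def collector_efficiency(d_p, d_c=5.0e-4, approach_velocity=1.157e-5,
                         temperature=293.15, viscosity=1.0e-3,
                         rho_particle=1050.0, rho_fluid=1000.0, g=9.81):
    """Single-collector contact efficiency, summing the Yao et al. (1971) terms.

    d_p: colloid diameter (m); d_c: grain (collector) diameter (m);
    approach_velocity in m/s (default is about 1 m/day). All defaults are
    hypothetical illustration values. Assumes no colloid-surface repulsion.
    """
    eta_diffusion = 0.9 * (BOLTZMANN * temperature
                           / (viscosity * d_p * d_c * approach_velocity)) ** (2.0 / 3.0)
    eta_interception = 1.5 * (d_p / d_c) ** 2
    eta_sedimentation = ((rho_particle - rho_fluid) * g * d_p ** 2
                         / (18.0 * viscosity * approach_velocity))
    return eta_diffusion + eta_interception + eta_sedimentation


sizes_nm = [30, 100, 300, 1000, 2000, 5000, 10000]
etas = {d: collector_efficiency(d * 1e-9) for d in sizes_nm}
for d, eta in etas.items():
    print(f"{d:6d} nm  eta = {eta:.2e}")

least_captured_nm = min(etas, key=etas.get)
print(f"Lowest predicted contact efficiency at {least_captured_nm} nm")
```

With these assumed values, diffusion dominates capture of virus-sized colloids and sedimentation dominates capture of protist-sized colloids, leaving a minimum in predicted capture near the size of bacteria.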

One practical result of the difference in processes is this: if straining is the primary capture mechanism operating in natural granular aquifers, then the long-term removal of pathogens in aquifers and/or hyporheic zones necessarily involves regeneration of clogged pores via degradation and/or flushing of accumulated material (e.g., during dynamic conditions such as flooding). Likewise, the association of disease outbreaks with heavy rainfall would be interpreted as being more closely related to hydrologic processes (e.g., mobilization of vadose zone water) than physicochemical processes such as changes in ionic strength; however, the effect of lower ionic strength likely assists pathogen mobilization, working in concert with more strictly physical processes. As may now be apparent from this discussion, opportunities to better understand the relative roles of physicochemical filtration versus straining in pathogen removal in natural media, and the processes that support this ecosystem service over the long term, are vast, and our understanding is rapidly evolving.

Solute and pathogen transport also differ in macroscopically heterogeneous media (e.g., heterogeneity arising from stratigraphy, fractures, and bioturbation resulting from root traces and burrows) that are characterized by preferential flow paths. Pathogen transport in preferential flow paths is demonstrably rapid and robust relative to the transport of solutes (McKay et al. 1993; McKay 2011; Borchardt et al. 2011). Notably, this contrast arises for the same reasons as the contrasting transport behaviors described in the preceding section at the pore scale in relatively uniform media. Advective flux in heterogeneous media may occur predominantly within the preferential flow paths; hence, the relatively low diffusion of colloids largely prevents their mass transfer from fractures into the matrix pore space, whereas the relatively high diffusion of solutes facilitates their entry into matrix pore space. Moreover, the matrix pore advection is relatively slow despite it typically occupying a significant or even large portion of the overall porosity of the media; therefore, colloids in heterogeneous media often show early breakthrough relative to solutes (e.g., Bales et al. 1989; Powelson et al. 1993; DeBorde et al. 1998). Notably, even in uniform media, colloids may show somewhat faster transport than solutes because their size (and bulk repulsion) tends to keep them at least one radius from grain surfaces such that they do not experience the lowest fluid drag at the closest distances to grain surfaces, whereas the lowest fluid drag is experienced by solutes diffusing rapidly across the pore fluid (e.g., Zhang et al. 2001a).

Finally, recall that solute and pathogenic contaminants can have differences in macroscale transport due to their potential for degradation. Solutes may be degraded (e.g., hydrolyzed or biodegraded by bacteria), transforming them to by-products that may or may not be innocuous. Similarly, pathogens exist within an ecological context in which they may be “preyed” upon, for example, from below by viruses that are pathogenic to bacteria, or from above by protozoa that use bacteria as a food source (Zhang et al. 2001b; Kinner et al. 1998); however, the degradation endpoint can be different because some solutes have a relatively simple chemical structure compared to the biochemical complexity of a pathogen. These simple solutes might be returned in a form in which they are still recognizable even after they are removed from the fluid (e.g., arsenic As, lead Pb, and mercury Hg). However, when complex pathogenic molecules are degraded, decayed, or consumed by other organisms at the grain surface, the complex biological molecules are broken down to simpler molecules that are not easily reconstituted back to an infectious pathogen; thus, when a pathogen leaves the fluid, it is more likely to be rendered innocuous.

Implications for groundwater and health

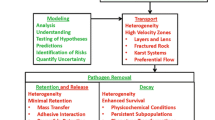

As shown, conceptual and practical differences between pathogen transport and traditional chemical contaminant transport can be appreciable. Consequently, a practitioner interested in pathogens needs an approach more encompassing than those used for solute transport. First, the area of interest within the plume is different. Solute contaminant transport often has a focus on the travel of the plume center-of-mass and hot spots, which reflect bulk properties of the aquifer and well-understood concepts of retardation. Such a focus is appropriate because the risk from solute contamination depends on long-term/chronic exposure related to slowly moving plumes. Pathogen transport, however, is different because adverse health effects can only occur while a pathogen is still infectious. As a result, assessment of pathogen transport is not focused on long-term plumes where centers of mass move from a source area of concern. Rather, it focuses on the potential emergence of a small fraction of the bulk contamination, and transport in preferential flow paths that can quickly move a low-concentration leading edge of a plume from a source to a receptor. Rather than groundwater vulnerability being assessed using decade-scale water movement, it is the fast pathway properties of the aquifer that are most important for understanding the vulnerability to pathogens and the risk for disease transmission.

Hunt et al. (2010) characterize this difference as follows: traditional solute contaminant hydrology focuses on “high yield-slow transport” pathways; pathogen transport requires a focus on “low yield-fast transport” pathways. Yield, as used by Hunt et al. (2010), refers to the percentage of the total water moved in an aquifer or to a well, where “high yield” refers to the dominant source of water and “low yield” to a non-dominant source of water. Consequently, the ability to infect at very low dose, and a limited time of infectivity in the subsurface, requires emphasis on fast pathways even if they contribute a very low percentage of the total volume of water that moves (e.g., red rectangle in Fig. 4). Rather than the slow changes to the center of mass, the outermost edges of contamination, and the temporal perturbations that may mobilize pathogens, are most important for pathogen transport. Much of applied solute transport work is less concerned with these spatial and temporal “fringes” because that is not where the risk associated with contamination traditionally resides. Indeed, with pathogen transport’s focus on very small amounts moving very quickly in preferential flow paths or in response to perturbations, the concept of the plume edge changes to one that cannot easily be defined by a smooth isochemical-like contour.

Fig. 4 Schematic diagram of modeled distribution of groundwater ages from a sample collected from an idealized aquifer with dispersive flow (modified from Jurgens et al. 2012 and Hunt et al. 2014). Short travel times associated with pathogens (red-shaded rectangle designating the 0–3 year time interval on the modeled distribution) are expected to be a very small component of the water pumped from a well.
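The idea that very young water is a small fraction of a well's discharge can be illustrated with a lumped-parameter age distribution. The sketch below assumes a dispersion-model age distribution, which is mathematically an inverse Gaussian distribution with mean equal to the mean age and shape parameter equal to the mean age divided by twice the dispersion parameter. The mean age, dispersion parameter, and function names are hypothetical; this is not a reproduction of the figure or of any fitted model.

```python
import math


def std_normal_cdf(z):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def fraction_younger_than(age, mean_age, dispersion_parameter):
    """Fraction of sampled water younger than `age` under a dispersion model.

    Uses the closed-form inverse Gaussian CDF with mean = mean_age and
    shape lam = mean_age / (2 * dispersion_parameter). Ages in years.
    """
    lam = mean_age / (2.0 * dispersion_parameter)
    a = math.sqrt(lam / age)
    return (std_normal_cdf(a * (age / mean_age - 1.0))
            + math.exp(2.0 * lam / mean_age)
            * std_normal_cdf(-a * (age / mean_age + 1.0)))


# Hypothetical values, for illustration only
mean_age_years = 30.0
dispersion_param = 0.5

young_fraction = fraction_younger_than(3.0, mean_age_years, dispersion_param)
print(f"Fraction of water younger than 3 years: {young_fraction:.2%}")
```

Under these assumptions the 0–3 year window holds well under a few percent of the pumped water, yet that is the window of concern for pathogens.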

Extending this distinction further: how fast is “fast transport”? For purposes of discussion, consider the approximately 3-year time for a virus to become undetectable in groundwater reported by Seitz et al. (2011). In many unstressed groundwater systems, distances traveled in 3 years are short, typically less than 100 m (e.g., Alley et al. 1999); however, distances on the order of kilometers can be traveled in short timeframes in karst and fractured rock aquifers (e.g., Borchardt et al. 2011). Even in porous media aquifers, stress applied to the groundwater system, such as that resulting from high-capacity pumping, significantly depressurizes groundwater systems and can create large hydraulic gradients. These gradients, in turn, induce faster local groundwater velocities than occur in unstressed groundwater flow systems. In such settings, travel times and transport are perhaps better conceptualized as groundwater moving through preferential flow paths that act like a network of interconnected pipes rather than the bulk aquifer. This could explain, in part, why virus contamination frequency tends to be greater in high-capacity wells (e.g., 50% of samples, Borchardt et al. 2004) than in private domestic wells (e.g., 8% of samples, Borchardt et al. 2003). This conceptualization can also describe observations of virus transport to deep supply wells cased through an aquitard occurring in several weeks (Bradbury et al. 2013). Such fast travel times are rarely documented in solute contamination, due to both the differences in transport described here, as well as orders of magnitude higher minimum analytical detection level of solutes (Hunt et al. 2010).
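A back-of-the-envelope calculation shows how the hydraulic gradient controls the distance reachable within a 3-year window. The sketch below assumes simple Darcy flow through a homogeneous porous medium, with average linear velocity equal to hydraulic conductivity times gradient divided by effective porosity. All values and names are hypothetical, and preferential flow paths could be far faster than this bulk estimate.

```python
DAYS_PER_YEAR = 365.25


def pore_velocity(hydraulic_conductivity, gradient, effective_porosity):
    """Average linear groundwater velocity (m/day) from Darcy's law."""
    return hydraulic_conductivity * gradient / effective_porosity


def travel_distance(velocity_m_per_day, years):
    """Advective distance (m) traveled in the given number of years."""
    return velocity_m_per_day * years * DAYS_PER_YEAR


# Hypothetical values, for illustration only
K = 10.0               # hydraulic conductivity, m/day
n_e = 0.25             # effective porosity
window_years = 3.0     # approximate time for a virus to become undetectable

gradients = {"unstressed": 0.002, "pumped": 0.02}
distances = {
    label: travel_distance(pore_velocity(K, i, n_e), window_years)
    for label, i in gradients.items()
}
for label, d in distances.items():
    print(f"{label:>10s}: {d:7.1f} m in {window_years:.0f} years")
```

In this sketch a tenfold steeper gradient near a pumping well moves the 3-year travel distance from tens of meters to hundreds of meters, before any preferential flow is considered.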

Episodic outbreaks of groundwater-borne pathogens demonstrate that worrisome combinations of pathogen source and transport conditions can happen given the right circumstances. More concerning, long-term annual/quarterly monitoring of the groundwater quality typically gave no indication of potential pathogen threats related to the groundwater (e.g., Hrudey and Hrudey 2004, 2007). Predicting when and where drinking water is vulnerable is difficult. Potential pathogen sources are widely distributed; for example, most countries have many kilometers of sanitary infrastructure adjacent or near to the same infrastructure that supplies drinking water. In addition to large potential source areas, rates of pathogen movement in groundwater can vary widely. Also, different pathogens can have different sizes and transportability, even in the same aquifer; thus, periodically sampled standard microbiological indicators of water sanitary quality (total coliform bacteria and E. coli) are rarely correlated with virus detection (Wu et al. 2011). Finally, accurate characterization of the edge of the plume and extensive networks of preferential flow paths within an aquifer is difficult.

Thus, true assessments of drinking water vulnerability are difficult and costly. They must include both traditional solute contamination moving through high yield/slow transport paths and fast transport pathways even if those pathways comprise a very small amount of total water moved. Vulnerability must consider both the relatively stable threat of slow moving solutes of a sufficient concentration and episodic and highly variable pathogen concentrations that are difficult to evaluate without an intensive sampling regime over an extended period. Vulnerability characterization must use both traditional solute contaminant transport theory and extensions of the less formalized and less widely understood pathogen transport concepts described herein. Finally, vulnerability assessments of groundwater supplies must encompass the well-accepted risk-based epidemiology of solute contamination and the less known epidemiological effects of pathogens. Although this may seem overwhelming, pathogens require an extension of existing approaches, not their replacement.

Fortunately, in one sense additional uncertainty added to predictions of drinking water vulnerability can be addressed in a straightforward manner by protecting the public through multiple safety barriers, including relatively low-cost approaches such as chlorination. That is, as Hunt et al. (2010) suggest: rather than costly and imperfect predictions of vulnerability at every drinking water supply, it may be more expedient and effective for protection of public health to assume that pathogen contamination is present at least some of the time. Then societal efforts can leverage the understanding presented in this work to optimize safety barriers that can be used to protect drinking water rather than incorrectly asserting that no safety barriers are needed at all.

References

Abbaszadegan M, Stewart PW, LeChevallier MW (1999) A strategy for the detection of viruses in water by PCR. Appl Environ Microbiol 65:444–449

Abbaszadegan M, LeChevallier MW, Gerba CP (2003) Occurrence of viruses in US groundwaters. J Am Water Works Assoc 95:107–120

Alley WM, Reilly TE, Franke OL (1999) Sustainability of ground-water resources. US Geol Surv Circ 1186, 79 pp

Bales RC, Gerba CP, Grondin GH, Jensen SL (1989) Bacteriophage transport in sandy soil and fractured tuff. Appl Environ Microbiol 55(8):2061–2067

Bales RC, Li S, Maguire KM, Yahya MT, Gerba CP (1993) MS-2 and poliovirus transport in porous media: hydrophobic effects and chemical perturbations. Water Resour Res 29(4):957–963. doi:10.1029/92WR02986

Bendersky M, Davis JM (2011) DLVO interaction of colloidal particles with topographically and chemically heterogeneous surfaces. J Colloid Interface Sci 353(1):87–97

Bhattacharjee S, Ko C-H, Elimelech M (1998) DLVO interaction between rough surfaces. Langmuir 14(12):3365–3375

Borchardt MA, Bertz PD, Spencer SK, Battigelli DA (2003) Incidence of enteric viruses in groundwater from household wells in Wisconsin. Appl Environ Microbiol 69:1172–1180

Borchardt MA, Haas NL, Hunt RJ (2004) Vulnerability of drinking-water wells in La Crosse, Wisconsin to enteric-virus contamination from surface water contributions. Appl Environ Microbiol 70(10):5937–5946. doi:10.1128/AEM.70.10.5937-5946.2004

Borchardt MA, Bradbury KR, Alexander EC, Kolberg RJ, Alexander SC, Archer JR, Braatz LA, Forest BM, Green JA, Spencer SK (2011) Norovirus outbreak caused by a new septic system in a dolomite aquifer. Groundwater 49(1):85–97. doi:10.1111/j.1745-6584.2010.00686.x

Borchardt MA, Spencer SK, Kieke BA Jr, Lambertini E, Loge FJ (2012) Viruses in non-disinfected drinking water from municipal wells and community incidence of acute gastrointestinal illness. Environ Health Perspect 120:1272–1279

Bradbury KR, Borchardt MA, Gotkowitz M, Spencer SK, Zhu J, Hunt RJ (2013) Source and transport of human enteric viruses in deep municipal water supply wells. Environ Sci Technol 47(9):4096–4103

Bradford SA, Morales VL, Zhang W, Harvey RW, Packman AI, Mohanram A, Welty C (2013) Transport and fate of microbial pathogens in agricultural settings. Crit Rev Environ Sci Technol 43:775–893. doi:10.1080/10643389.2012.710449

CDC (Centers for Disease Control and Prevention) (2016) Norovirus and working with food. http://www.cdc.gov/norovirus/food-handlers/work-with-food.html. Accessed 14 Nov 2016

Craun GF, Brunkard JM, Yoder JS, Roberts VA, Carpenter J, Wade T, Calderon RL, Roberts JM, Breach MJ, Roy SL (2010) Causes of outbreaks associated with drinking water in the United States from 1971 to 2006. Clin Microbiol Rev 23(3):507–528. doi:10.1128/CMR.00077-09

DeBorde DC, Woessner WW, Lauerman B, Ball PN (1998) Virus occurrence and transport in a school septic system and unconfined aquifer. Groundwater 36(5):825–834. doi:10.1111/j.1745-6584.1998.tb02201.x

DeBorde DC, Woessner WW, Kiley QT, Ball P (1999) Rapid transport of viruses in a floodplain aquifer. Water Res 33(10):2229–2238. doi:10.1016/S0043-1354(98)00450-3

Duffadar R, Kalasin S, Davis JM, Santore MM (2009) The impact of nanoscale chemical features on micron-scale adhesion: crossover from heterogeneity-dominated to mean-field behavior. J Colloid Interface Sci 337(2):396–407

Elimelech M, O’Melia CR (1990) Kinetics of deposition of colloidal particles in porous media. Environ Sci Technol 24(10):1528–1536

Feachem RG, Bradley DJ, Garelick H, Mara DD (1983) Sanitation and disease: health aspects of excreta and wastewater management. World Bank Studies in Water Supply and Sanitation, vol 3. Wiley, Chichester, UK, 530 pp

Fout GS, Martinson BC, Moyer MWN, Dahling DR (2003) A multiplex reverse transcription-PCR method for detection of human enteric viruses in groundwater. Appl Environ Microbiol 69:3158–3164

Gerba CP (1983) Virus survival and transport in groundwater. Dev Ind Microbiol 24:247–251

Gerba CP, Wallis C, Melnick JL (1975) The fate of wastewater bacteria and viruses in soil. J Irrig Drain Div ASCE 101:157–174

Gotkowitz MB, Bradbury KR, Borchardt MA, Zhu J, Spencer SK (2016) Effects of climate and sewer condition on virus transport to groundwater. Environ Sci Technol. doi:10.1021/acs.est.6b01422

Hahn MW, O’Melia CR (2004) Deposition and reentrainment of Brownian particles in porous media under unfavorable chemical conditions: some concepts and applications. Environ Sci Technol 38(1):210–220

Happel J (1958) Viscous flow in multiparticle systems: slow motion of fluids relative to beds of spherical particles. AIChE J 4(2):197–201

Harvey RW (1997) In situ and laboratory methods to study subsurface microbial transport. In: Hurst CJ (ed) Manual of environmental microbiology. American Society for Microbiology Press, Washington, DC, pp 586–599

Harvey RW, Garabedian SP (1991) Use of colloid filtration theory in modeling movement of bacteria through a contaminated sandy aquifer. Environ Sci Technol 25:178–185

Hoek EMV, Bhattacharjee S, Elimelech M (2003) Effect of membrane surface roughness on colloid–membrane DLVO interactions. Langmuir 19(11):4836–4847

Hrudey SE, Hrudey EJ (2004) Safe drinking water: lessons from recent outbreaks in affluent nations. IWA, London, 512 pp

Hrudey SE, Hrudey EJ (2007) Published case studies of waterborne disease outbreaks: evidence of a recurrent threat. Water Environ Res 79(3):233–245

Hunt RJ, Borchardt MA, Richards KD, Spencer SK (2010) Assessment of sewer source contamination of drinking water wells using tracers and human enteric viruses. Environ Sci Technol 44(20):7956–7963. doi:10.1021/es100698m

Hunt RJ, Borchardt MA, Bradbury KR (2014) Viruses as groundwater tracers: using ecohydrology to characterize short travel times in aquifers. Groundwater 52(2):187–193. doi:10.1111/gwat.12158

Israelachvili JN (2011) Solvation, structural, and hydration forces, chap 15. In: Israelachvili JN (ed) Intermolecular and surface forces, 3rd edn. Academic, San Diego, pp 341–380

John DE, Rose JB (2005) Review of factors affecting microbial survival in groundwater. Environ Sci Technol 39(19):7345–7356

Johnson WP, Hilpert M (2013) Upscaling colloid transport and retention under unfavorable conditions: linking mass transfer to pore and grain topology. Water Resour Res 49(9):5328–5341

Johnson WP, Pazmino E, Ma HL (2010) Direct observations of colloid retention in granular media in the presence of energy barriers, and implications for inferred mechanisms from indirect observations. Water Res 44(4):1158–1169

Jurgens BC, Bohlke JK, Eberts SM (2012) TracerLPM: an Excel® workbook for interpreting groundwater age distributions from environmental tracer data. US Geol Surv Tech Methods Rep 4-F3, p 60

Kinner NE, Harvey RW, Blakeslee K, Novarino G, Meeker LD (1998) Size-selective predation on groundwater bacteria by nanoflagellates in an organic-contaminated aquifer. Appl Environ Microbiol 64(2):618–625

Lance JC, Gerba CP (1984) Virus movement in soil during saturated and unsaturated flow. Appl Environ Microbiol 47(2):335–337

Li XQ, Scheibe TD, Johnson WP (2004) Apparent decreases in colloid deposition rate coefficients with distance of transport under unfavorable deposition conditions: a general phenomenon. Environ Sci Technol 38(21):5616–5625

Long W, Hilpert MA (2009) Correlation for the collector efficiency of Brownian particles in clean-bed filtration in sphere packings by a lattice-Boltzmann method. Environ Sci Technol 43(12):4419–4424

Ma H, Pazmino E, Johnson WP (2011) Surface heterogeneity on hemispheres-in-cell model yields all experimentally-observed nonstraining colloid retention mechanisms in porous media in the presence of energy barriers. Langmuir 27(24):14982–14994

McCarthy JF, McKay LD (2004) Colloid transport in the subsurface: past, present, and future challenges. Vadose Zone J 3(2):326–337. doi:10.2113/3.2.326

McKay LD (2011) Foreword: pathogens and fecal indicators in groundwater. Groundwater 49(1):1–3. doi:10.1111/j.1745-6584.2010.00763.x

McKay LD, Gillham RW, Cherry JA (1993) Field experiments in a fractured clay till: 1. solute and colloid transport. Water Resour Res 29(12):3879–3880

Molnar IL, Johnson WP, Gerhard JI, Willson CS, O’Carroll DM (2015) Predicting colloid transport through saturated porous media: a critical review. Water Resour Res 51(9):6804–6845

Nelson KE, Ginn TR (2011) New collector efficiency equation for colloid filtration in both natural and engineered flow conditions. Water Resour Res 47:W05543. doi:10.1029/2010WR009587

Pazmino EF, Ma H, Johnson WP (2011) Applicability of colloid filtration theory in size-distributed, reduced porosity, granular media in the absence of energy barriers. Environ Sci Technol 45(24):10401–10407

Pazmino EF, Trauscht J, Johnson WP (2014a) Release of colloids from primary minimum contact under unfavorable conditions by perturbations in ionic strength and flow rate. Environ Sci Technol 48(16):9227–9235

Pazmino EF, Trauscht J, Dame B, Johnson WP (2014b) Power law size-distributed heterogeneity explains colloid retention on soda lime glass in the presence of energy barriers. Langmuir 30(19):5412–5421

Pieper AP, Ryan JN, Harvey RW, Amy GL, Illangasekare TH, Metge DW (1997) Transport and recovery of Bacteriophage PRD1 in a sand and gravel aquifer: effect of sewage-derived organic matter. Environ Sci Technol 31(4):1163–1170. doi:10.1021/es960670y

Powelson DK, Gerba CP, Yahya MT (1993) Virus transport and removal in wastewater during aquifer recharge. Water Res 27(4):583–590

Rajagopalan R, Tien C (1976) Trajectory analysis of deep-bed filtration with the sphere-in-cell porous media model. AIChE J 22(3):523–533

Seitz SR, Leon JS, Schwab KJ, Lyon GM, Dowd M, McDaniels M, Abdulhafid G, Fernandez NL, Lindesmith LC, Baric RS, Moe CL (2011) Norovirus infectivity in humans and persistence in water. Appl Environ Microbiol 77(19):6884–6888

Shen C, Baoguo L, Huang Y, Jin Y (2007) Kinetics of coupled primary- and secondary-minimum deposition of colloids under unfavorable chemical conditions. Environ Sci Technol 41(20):6976–6982

Tafuri AN, Selvakumar A (2002) Wastewater collection system infrastructure research needs in the USA. Urban Water J 4:21–29

Tong M, Johnson WP (2006) Excess colloid retention in porous media as a function of colloid size, fluid velocity, and grain angularity. Environ Sci Technol 40(24):7725–7731. doi:10.1021/es061201r

Trauscht J, Pazmino EF, Johnson WP (2015) Prediction of nanoparticle and colloid attachment on unfavorable mineral surfaces using representative discrete heterogeneity. Langmuir 31(34):9366–9378

Tufenkji N, Elimelech M (2004) Deviation from the classical colloid filtration theory in the presence of repulsive DLVO interactions. Langmuir 20(25):10818–10828

USEPA (1989) Results of the evaluation of groundwater impacts of sewer exfiltration. Engineering Science Pb95-158358. US Environmental Protection Agency, Washington, DC

USEPA (2006) Occurrence and monitoring document for final ground water rule. USEPA publication no. 815-R-06-012. Office of Water, US Environmental Protection Agency, Washington, DC

Vilker VL (1978) An adsorption model for prediction of virus breakthrough from fixed beds. In: Proceedings of International Symposium on Land Treatment of Wastewater, vol 2. US Army Corps of Engineers Cold Regions Research and Engineering Laboratory, Hanover, NH, pp 381–388

Vilker VL (1980) Simulating virus movement in soils. In: Iskandar IK (ed) Modeling wastewater renovation: land treatment. Wiley, New York, pp 223–253

Vilker VL, Burge WD (1980) Adsorption mass transfer model for virus transport in soils. Water Res 14:783–790

Warner KL, Barataud F, Hunt RJ, Benoit M, Anglade J, Borchardt MA (2016) Interactions of water quality and integrated groundwater management: examples from the United States and Europe. In: Jakeman AJ, Barreteau O, Hunt RJ, Rinaudo J-D, Ross A (eds) Integrated groundwater management: concepts, approaches and challenges. Springer, Heidelberg, Germany, p 953

Woessner WW, Ball PN, DeBorde DC, Troy TL (2001) Viral transport in a sand and gravel aquifer under field pumping conditions. Groundwater 39(6):886–894. doi:10.1111/j.1745-6584.2001.tb02476.x

Wu J, Long SC, Das D, Dorner SM (2011) Are microbial indicators and pathogens correlated? A statistical analysis of 40 years of research. J Water Health 9:265–278

Yao KM, Habibian MT, O’Melia CR (1971) Water and waste water filtration: concepts and applications. Environ Sci Technol 5:1105–1112

Yates MV, Yates SR, Wagner J, Gerba CP (1987) Modeling virus survival and transport in the subsurface. J Contam Hydrol 1(3):329–345

Zhang P, Johnson WP, Piana MJ, Fuller CC, Naftz DL (2001a) Potential artifacts in interpretation of differential breakthrough of colloids and dissolved tracers in the context of transport in a zero-valent iron permeable reactive barrier. Groundwater 39(6):831–840

Zhang P, Johnson WP, Scheibe TD, Choi KH, Dobbs FC, Mailloux BJ (2001b) Extended tailing of bacteria following breakthrough at the Narrow Channel focus area, Oyster, Virginia. Water Resour Res 37(11):2687–2698

Acknowledgements

Ron Harvey (USGS), Mark Borchardt (USDA-ARS), and an anonymous reviewer are thanked for their review of the manuscript.

Additional information

Published in the special issue “Hydrogeology and Human Health”

Cite this article

Hunt, R.J., Johnson, W.P. Pathogen transport in groundwater systems: contrasts with traditional solute transport. Hydrogeol J 25, 921–930 (2017). https://doi.org/10.1007/s10040-016-1502-z

DOI: https://doi.org/10.1007/s10040-016-1502-z