Abstract

The critical role of forests in the global carbon cycle is well known, but significant uncertainties remain about the specific role of disturbance, in part because of the challenge of incorporating spatial and temporal detail in the characterization of disturbance processes. In this study, we link forest inventory data to remote sensing data to derive estimates of pre- and post-disturbance biomass, and then use near-annual remote sensing observations of forest disturbance to characterize biomass loss associated with disturbance across the conterminous U.S. between 1986 and 2004. Nationally, year-to-year variability in the amount of live aboveground carbon lost as a result of disturbance ranged from a low of 61 Tg C (±16) in 1991 to a high of 84 Tg C (±33) in 2003. Eastern and western forest strata were relatively balanced in terms of their proportional contribution to national-level trends, despite eastern forests having more than twice the area of western forests. In the eastern forest stratum, annual biomass loss tracked closely with the area of disturbance, whereas in the western forest stratum, annual biomass loss showed more year-to-year variability that did not directly correspond to the area of disturbance, suggesting that the biomass density of forests affected by disturbance in the west was more spatially and temporally variable. Eastern and western forest strata exhibited somewhat opposing trends in biomass loss, potentially corresponding to the implementation of the Northwest Forest Plan in the mid-1990s, which resulted in a shift of timber harvesting from public lands in the northwest to private lands in the south. Overall, these observations document modest increases in disturbance rates and associated carbon consequences over the 18-year period. These changes are likely not large enough to weaken the growing forest carbon sink in the conterminous U.S., which is driven largely by increased forest growth rates and biomass densities.


Introduction

The critical role of forests in the global carbon cycle is well known, but significant uncertainties remain about the specific role of disturbance (CCSP 2007; U.S. EPA 2011). In U.S. forests, a growing carbon sink, equivalent in magnitude to over 10% of U.S. greenhouse gas emissions (Pan and others 2011a; U.S. EPA 2011), is associated with recovery from past disturbances (Pan and others 2011a). However, the fate of this sink is highly uncertain in the face of continued forest maturation (Pan and others 2011b) and increased rates of forest disturbance (Kurz and others 2008; Westerling and others 2006; Masek and others 2013). The importance of forest disturbance to carbon dynamics has been well documented for specific disturbance factors and locations. For example, the ongoing mountain pine beetle epidemic in western Canada is responsible for turning Canadian forests from a net carbon sink into a net carbon source, as a cumulative 270 Tg of carbon are estimated to be released by 2020 as a result of forest mortality (Kurz and others 2008). Elsewhere, the single storm event of Hurricane Katrina was estimated to cause forest mortality and a loss of carbon equivalent to 50–140% of the net annual U.S. carbon sink in forest trees (Chambers and others 2007). Masek and others (2006) estimated that in the southeastern U.S., forest harvest accounts for approximately 25% of interannual variability in net ecosystem productivity (NEP), with climate accounting for the remaining variability.

An important element of the uncertainty about the role of forest disturbance in the carbon cycle is the challenge of incorporating spatial and temporal detail in the characterization of disturbance processes. In this study, we take a step in that direction by linking spatially and temporally explicit maps of disturbance to estimates of the biomass affected.

Two common approaches to characterizing carbon flux at broad spatial and temporal scales are the stock change method and the age structure–carbon accumulation method. The stock change method, so called because biomass stocks are periodically re-measured at forest inventory plots, is currently the cornerstone for large-area monitoring and reporting to the United Nations Framework Convention on Climate Change (U.S. EPA 2011). Periodic re-measurement of biomass stocks yields estimates of net change only, so disturbance processes are captured only implicitly (Houghton 2005) and mortality is difficult to separate from growth. Direct attribution of change to disturbance processes (forest management, land use, fire, insects, and so on) is therefore challenging (U.S. Agriculture and Forestry Greenhouse Gas Inventory 1990–2008; U.S. EPA 2011). Moreover, the stock change method is constrained by the re-measurement interval (up to 10 years in the western U.S.) and the spatial distribution of plot samples. Thus, periodic short-term pulses of forest disturbance or changes on marginal forest lands might not be adequately captured (Houghton 2005; Campbell and others 2012), despite their dramatic impacts on the carbon cycle (Chambers and others 2007; Westerling and others 2006).
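A toy calculation makes the stock change limitation concrete. In this minimal sketch, with hypothetical plot values and a hypothetical helper `net_stock_change`, two plots re-measured over the same interval report the same net change even though one of them lost substantial biomass to mortality; re-measurement alone cannot tell them apart.

```python
def net_stock_change(growth, mortality):
    """Net biomass change seen by plot re-measurement (Mg/ha): gross growth minus mortality."""
    return growth - mortality

# Hypothetical plots over one re-measurement interval (Mg/ha of biomass)
quiet_plot = net_stock_change(growth=10.0, mortality=0.0)
disturbed_plot = net_stock_change(growth=40.0, mortality=30.0)

# Both report +10 Mg/ha, so the inventory alone sees no difference between them
print(quiet_plot, disturbed_plot)
```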

The age structure–carbon accumulation method (Williams and others 2012) relies upon scaled-up inventory-based estimates of forest-age distributions to simulate the growth of biomass across a landscape. In conjunction with a process-based model, the age structure–carbon accumulation method can explicitly partition the separate effects of forest growth and mortality on net carbon balance (Williams and others 2012). However, because the spatial locations of disturbance are not typically known, generalized assumptions about biomass densities are required to estimate the effect of disturbance on biomass stocks. Even when the spatial locations of disturbance are used to characterize carbon flux, as in a hybrid approach that uses the age structure–carbon accumulation approach in conjunction with maps of land-cover change and forest disturbance (Zheng and others 2011), spatially aggregated biomass densities derived from inventory data are still used to estimate the effect of disturbance on biomass stocks. These spatially generic approaches highlight the challenges of incorporating detailed disturbance characterization into carbon accounting and underscore the need for spatially and temporally explicit maps of biomass and biomass change at the resolution of land management to better guide carbon accounting and, ultimately, policy and management.

Remote sensing approaches can help to reveal when, where, and at what intensity disturbances occur, but a direct linkage to forest inventory data is essential for understanding the impact of disturbance on carbon flux. Forest growth and carbon accumulation after disturbance can be monitored with remote sensing up to a point, but typically require forest growth modeling. Carbon uptake by forests is the net difference between losses to disturbance and gains from regrowth, and a more complete accounting of the relative magnitudes of these processes is critical for improved understanding of how future disturbances and regrowth, and their interactions with forest management and climate change, will affect what is currently a net carbon sink in North American forests. In this paper, we link forest inventory data to remote sensing data to derive estimates of pre- and post-disturbance biomass, and then use near-annual remote sensing observations of forest disturbance to characterize biomass loss associated with disturbance.

What our approach highlights is the need for the continued development of a national-scale carbon monitoring system (for example, NASA Carbon Monitoring System: http://carbon.nasa.gov/), which would include spatially comprehensive and ongoing disturbance detection. Ideally, this system will be tied to the annual forest inventory data system as well as models that combine growth simulators and other available data (including lidar) to explicitly partition growth and disturbance effects. In this paper, we focus on steps toward development of the part of a national-scale system that includes a linkage between disturbance mapping and forest inventory data.

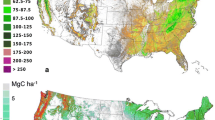

Remote sensing approaches can provide spatially and temporally explicit maps of disturbance dynamics (Huang and others 2010; Kennedy and others 2012), but not all datasets or analyses are sufficient for characterizing both disturbance and biomass over time. Previous studies have also successfully mapped biomass stocks at broad scales with passive optical remote sensing imagery (Kellndorfer and others 2004; Blackard and others 2008), active remote sensing imagery (Lefsky and others 2005; Toan and others 2004), and fusion approaches involving both passive and active imagery (Saatchi and others 2007; Kellndorfer and others 2010). However, these approaches typically lack the historical view required to estimate decadal-scale biomass flux (for example, Cohen and others 1996). Archived AVHRR data have been used to estimate biomass flux since the early 1980s (Myneni and others 2001), but the coarse spatial resolution of these data does not adequately capture changes in biomass stocks attributable to forest management or disturbance (Coops and others 2009).

Here, we employ Landsat satellite data, which are unique in that they are capable of mapping both disturbance and biomass stocks at the scale of land management, with nearly 40 years of archived observations (Cohen and Goward 2004; Powell and others 2010). We report on the results of sample-based observation of aboveground biomass loss in forests as a result of disturbance across the conterminous U.S. between 1986 and 2004. Biomass loss in the context of our study is a generic concept, including both natural and anthropogenic disturbances, and does not strictly equate to carbon flux, as the eventual fate of the lost live aboveground biomass is unknown (for example, it might be removed from a site following harvesting or it might be left on site to decompose following a fire). Landsat remote sensing-based estimates of disturbance rates and associated biomass consequences afford a consistent and synoptic assessment of the trends and relative importance of disturbance to the forest carbon sink.

This study combines two components of the North American Forest Dynamics (NAFD) project (Goward and others 2008; Masek and others 2013). In the first phase, dense time series of Landsat imagery were assembled for 50 sample scenes across North America to estimate rates of forest disturbance (Masek and others 2013). Subsequently, we developed methods to translate the time series of spectral imagery into biomass with empirical models based on U.S.D.A. Forest Service Forest Inventory and Analysis (FIA) field measurements of aboveground biomass (Powell and others 2010). In this paper, we combine the biomass maps with biennial maps of forest disturbance for the sample scenes across the conterminous U.S. (Huang and others 2010; Masek and others 2013), which form the basis for sample-based estimation of forest biomass loss as a result of disturbance in eastern and western U.S. strata. Research is currently underway to operationalize wall-to-wall coverage of Landsat time series across the conterminous U.S. for disturbance quantification.

Our approach employs two strategies to improve estimation of forest biomass from the relationships between optical remote sensing and inventory data. First, we apply a pixel-level curve-fitting approach to time series of biomass estimates to reduce noise and improve predictions of biomass stocks and fluxes (Kennedy and others 2010; Powell and others 2010). Second, we focus the predictions of biomass change only on disturbed areas of the landscape, which captures the most dynamic loss and gain periods in the biomass cycle and maximizes the signal-to-noise ratio. Despite the known limitations of optical remote sensing of biomass, the spatial and temporal resolutions of the nearly 40 years of archived Landsat imagery enable consistent, repeatable measurement of biomass flux over large areas and long time frames, at the appropriate resolution of land management and disturbance (Cohen and Goward 2004). Overall, this approach has the potential to serve as an empirical check on other estimates of national-level biomass loss as a result of disturbance.

The overall goal of this study is to derive empirical estimates of the rates of biomass loss as a result of disturbance in the conterminous U.S. for the period 1986–2004. The specific objectives are to:

1) Assess geographic and temporal trends in biomass loss as a result of disturbance.
2) Compare estimates of biomass loss among studies to better understand the strengths and weaknesses of various carbon accounting approaches.
3) Evaluate trends in biomass loss in the context of a large and growing forest carbon sink in the U.S.

Methods

Empirical Biomass Modeling with Forest Inventory and Analysis Plots

The methodological basis for biomass estimation was developed on two Landsat scenes (Powell and others 2010). In this paper, we extend those established methods to a sample (explained below) of 50 Landsat scenes across the conterminous U.S. (Figure 1). For each of the 50 sample scenes, we developed an empirical regression tree model of live, aboveground biomass based on spectral reflectance and biophysical variables. The biomass reference data were derived from FIA tree-level observations of tree species and diameter at breast height (DBH). We converted these observations to live, aboveground biomass using the Jenkins equations, a national-scale set of ten DBH-based biomass allometric equations developed through a meta-analysis of published equations (Jenkins and others 2003). Tree-level biomass observations were aggregated to the plot level using the tree expansion factor, and only homogeneous “single-condition” forested plots were used to develop empirical biomass models. For each plot location, we extracted a suite of spectral and ancillary data to be used as inputs to the empirical model. From temporally coincident Landsat surface-reflectance imagery, we extracted native spectral bands and several derived spectral indices, including the tasseled cap indices brightness, greenness, wetness, angle, and distance (Powell and others 2010), and the Normalized Difference Vegetation Index (NDVI). From other spatial data layers, we extracted elevation, slope, potential relative solar radiation, temperature, precipitation, growing degree days, water vapor pressure, and shortwave radiation. The empirical models were developed using RandomForest regression tree models (Breiman 2001), a non-parametric ensemble method that includes an internal (out-of-bag) error assessment. RandomForest models and maps were developed in the R package ModelMap (Freeman 2009).
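The tree-to-plot conversion can be sketched as follows. The Jenkins equations share the form biomass (kg) = exp(β0 + β1 ln DBH), with DBH in cm and coefficients that vary by species group. The two coefficient pairs below are illustrative and should be checked against Jenkins and others (2003) before use; the helper names, and the assumption that the expansion factor is expressed in trees per hectare (FIA reports trees per acre), are ours.

```python
import math

# Illustrative Jenkins-form coefficients (beta0, beta1) for two species groups.
# ASSUMED values for demonstration only; verify against Jenkins and others (2003).
COEFFS = {
    "pine": (-2.5356, 2.4349),
    "hard_maple_oak": (-2.0127, 2.4342),
}

def tree_biomass_kg(group, dbh_cm):
    """Jenkins-form allometry: aboveground biomass (kg) = exp(b0 + b1 * ln(DBH in cm))."""
    b0, b1 = COEFFS[group]
    return math.exp(b0 + b1 * math.log(dbh_cm))

def plot_biomass_mg_ha(trees):
    """Scale each tree by its expansion factor (ASSUMED trees/ha) and sum, in Mg/ha.

    trees: iterable of (species_group, dbh_cm, trees_per_ha) tuples (hypothetical layout).
    """
    total_kg_per_ha = sum(tree_biomass_kg(g, dbh) * tph for g, dbh, tph in trees)
    return total_kg_per_ha / 1000.0  # kg -> Mg

# Hypothetical two-tree plot record
plot = [("pine", 30.0, 15.0), ("hard_maple_oak", 25.0, 15.0)]
print(round(plot_biomass_mg_ha(plot), 1))
```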
Each individual biomass model was assessed in terms of the root-mean-square error (RMSE) and bias between observed and predicted biomass. We calculated RMSE as follows:

\[ \mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_{i} - y_{i}\right)^{2}} \]

where \( \hat{y}_{i} \) was the predicted biomass on the ith plot, \( y_{i} \) the observed biomass on the ith plot, and \( n \) the number of plots. Bias was calculated as the difference between the mean-predicted \( (\hat{\bar{y}}) \) and the mean-observed \( (\bar{y}) \) biomass:

\[ \mathrm{Bias} = \hat{\bar{y}} - \bar{y} \]
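The two accuracy statistics, plus the range-relative RMSE reported in the Results (RMSE divided by the observed max–min), can be computed as in this minimal sketch; the function names and plot values are hypothetical.

```python
import math

def rmse(predicted, observed):
    """Root-mean-square error over n plots."""
    n = len(observed)
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / n)

def bias(predicted, observed):
    """Mean predicted minus mean observed; negative values indicate under-prediction."""
    return sum(predicted) / len(predicted) - sum(observed) / len(observed)

def relative_rmse(predicted, observed):
    """RMSE as a fraction of the observed biomass range (max - min)."""
    return rmse(predicted, observed) / (max(observed) - min(observed))

# Hypothetical plot-level biomass (Mg/ha)
observed = [50.0, 120.0, 200.0, 310.0]
predicted = [70.0, 115.0, 190.0, 280.0]
print(rmse(predicted, observed), bias(predicted, observed), relative_rmse(predicted, observed))
```

For these values, RMSE is about 18.9 Mg/ha and bias is −6.25 Mg/ha, a slight under-prediction.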

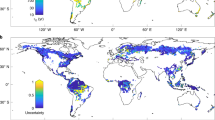

NAFD sample scenes across the conterminous U.S. Each sample is a Landsat scene Voronoi polygon labeled with the path/row. Samples are overlaid on a map of forest types developed by the USDA Forest Service (http://fsgeodata.fs.fed.us/rastergateway/forest_type/).

Independent Corroboration

In addition to internal model error assessment, we compared approximately one-half of the predicted maps of biomass (23 phase 1 samples) against three independent data sets: The National Biomass Carbon Dataset (NBCD2000) (Kellndorfer and others 2004), The FIA National Biomass Map (Blackard and others 2008), and FIA inventory-based estimates based on plot data alone. For each of the comparisons, we calculated the RMSE and bias of the scene-level mean biomass predictions based on a large sample of FIA plot locations.

Landsat Time Series Stacks

Each of the 50 sample scenes consisted of a Landsat time series stack (LTSS) of near-annual imagery (Masek and others 2013). Each LTSS was developed using the highest quality, least cloudy images acquired as close to the peak-growing-season anniversary dates as possible to minimize the effects of exogenous factors such as vegetation phenology and sun-angle differences (Huang and others 2009). The raw images were geometrically corrected to achieve subpixel geolocation accuracy (that is, within 28.5 m) using the Automated Registration and Orthorectification Package (AROP) (Gao and others 2009) and radiometrically calibrated to surface reflectance using a MODIS-like 6S-based atmospheric correction algorithm implemented as part of the Landsat Ecosystem Disturbance Adaptive Processing System (LEDAPS) (Masek and others 2006). Each image was then radiometrically normalized to a common reference image using the Multivariate Alteration Detection (MAD) method (Canty and others 2004; Schroeder and others 2006) to further minimize spurious temporal spectral variability. Finally, the empirical biomass models were applied to every image date in their respective radiometrically normalized LTSS to obtain biomass time series between 1986 and 2004 (Healey and others 2006).
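MAD itself identifies invariant (no-change) pixels through canonical correlation analysis of the two images, and Canty and others (2004) then fit an orthogonal regression on those pixels. The sketch below covers only that final step and substitutes ordinary least squares for orthogonal regression for brevity; it assumes the invariant pixels have already been selected, and the function names are hypothetical.

```python
def fit_gain_offset(target_band, reference_band):
    """OLS gain/offset mapping target reflectance onto the reference image.

    Both inputs are reflectance values of the SAME invariant pixels (assumed pre-selected).
    """
    n = len(target_band)
    mx = sum(target_band) / n
    my = sum(reference_band) / n
    sxx = sum((x - mx) ** 2 for x in target_band)
    sxy = sum((x - mx) * (y - my) for x, y in zip(target_band, reference_band))
    gain = sxy / sxx
    offset = my - gain * mx
    return gain, offset

def normalize(band, gain, offset):
    """Apply the fitted linear transform to every pixel of a target band."""
    return [gain * v + offset for v in band]

# Hypothetical invariant-pixel reflectances for one band
gain, offset = fit_gain_offset([0.10, 0.20, 0.30], [0.25, 0.45, 0.65])
print(gain, offset)
```

In practice the fit is done band by band, and the same gain and offset are then applied to the whole target image.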

LandTrendr Smoothing

After temporal prediction, we applied a pixel-level smoothing algorithm to each biomass time series to minimize errors associated with year-to-year variability in spectral reflectance due to exogenous factors. We modified the LandTrendr algorithm (Kennedy and others 2010) specifically for this purpose to smooth the Landsat biomass trajectories. LandTrendr in this context operates by identifying a candidate set of vertices that represent the biomass trajectory and then selecting the segmented model that best balances minimization of residual error against increased model complexity.
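The idea of choosing vertices by trading residual error against complexity can be sketched with an exhaustive search over small vertex sets. This is a simplified stand-in, not the LandTrendr algorithm: it draws straight segments between observed values at the vertices and adds a fixed, hypothetical penalty (`vertex_penalty`, in squared Mg/ha) per interior vertex, whereas LandTrendr uses its own fitting and model-selection statistics. The biomass trajectory is hypothetical.

```python
from itertools import combinations

def interpolate(years, values, vertices):
    """Piecewise-linear trajectory through the observed values at the vertex indices."""
    fitted = []
    for start, end in zip(vertices[:-1], vertices[1:]):
        x0, x1 = years[start], years[end]
        y0, y1 = values[start], values[end]
        for i in range(start, end):
            fitted.append(y0 + (y1 - y0) * (years[i] - x0) / (x1 - x0))
    fitted.append(values[vertices[-1]])
    return fitted

def fit_trajectory(years, values, max_interior=3, vertex_penalty=200.0):
    """Choose the vertex set minimizing SSE + penalty * (number of interior vertices)."""
    n = len(values)
    best = None
    for k in range(max_interior + 1):
        for interior in combinations(range(1, n - 1), k):
            vertices = [0, *interior, n - 1]
            fitted = interpolate(years, values, vertices)
            sse = sum((f - v) ** 2 for f, v in zip(fitted, values))
            score = sse + vertex_penalty * k
            if best is None or score < best[0]:
                best = (score, vertices, fitted)
    return best[1], best[2]

years = list(range(1986, 1995))
biomass = [200, 205, 198, 203, 60, 80, 100, 118, 140]  # hypothetical Mg/ha, harvest in 1990
vertices, smoothed = fit_trajectory(years, biomass)
print([years[i] for i in vertices])  # -> [1986, 1989, 1990, 1994]
```

With a harvest in 1990, the penalized search keeps vertices at the last pre-disturbance year and the disturbance year, and treats the small year-to-year wiggles as noise.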

Disturbance Maps

To isolate only the biomass lost through disturbance, each of the biomass time series stacks was intersected with a map of forest disturbance derived from the Vegetation Change Tracker (VCT) algorithm applied to the same LTSS (Huang and others 2010). The VCT operates by quantifying changes in the disturbance-related direction of a spectral index for pixels identified as forested and labeling the year of change as year-of-disturbance. Independent validation of VCT maps across several of the sample scenes indicated accuracy of over 92% for change versus no-change (Thomas and others 2011). We opted to use these disturbance maps to estimate disturbance rates to ensure consistency with NAFD estimates of rates of forest disturbance across the conterminous U.S. (Masek and others 2013). Biomass loss (that is, max biomass–min biomass) was quantified only for pixels labeled as disturbed by VCT (Figure 2).
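Restricting loss to VCT-flagged pixels and summing by year of disturbance might look like the sketch below. The per-pixel input structure and function name are hypothetical; the 0.09 ha pixel area follows from the 30 m Landsat pixel size.

```python
from collections import defaultdict

PIXEL_AREA_HA = 0.09  # one 30 m x 30 m Landsat pixel

def loss_by_year(pixels):
    """Sum biomass loss (Mg) by VCT disturbance year; undisturbed pixels are skipped.

    pixels: iterable of (smoothed_trajectory_mg_ha, disturbance_year_or_None).
    """
    totals = defaultdict(float)
    for trajectory, dist_year in pixels:
        if dist_year is None:
            continue  # only pixels flagged as disturbed contribute
        loss_mg_ha = max(trajectory) - min(trajectory)  # max biomass - min biomass
        totals[dist_year] += loss_mg_ha * PIXEL_AREA_HA
    return dict(totals)

# Hypothetical smoothed trajectories (Mg/ha) with VCT labels
pixels = [
    ([200.0, 60.0, 80.0], 1990),
    ([150.0, 152.0, 151.0], None),
    ([100.0, 40.0, 50.0], 1990),
    ([120.0, 20.0], 1995),
]
print(loss_by_year(pixels))
```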

National-Level Estimation

We estimated the national-level and stratum-level (eastern and western U.S.) rates of biomass loss based on the sampling and estimation framework used to quantify rates of forest disturbance (Masek and others 2013). Under this framework, the samples for the two strata were selected with unequal probabilities of selection that targeted geographic scene dispersion, forest type diversity, total forest area, and inclusion of some fixed scenes. Biomass loss as a result of disturbance within each stratum was estimated using the generalized Horvitz–Thompson estimator, which accounts for the unequal probability of sample selection by weighting each sampled response by the inverse of its inclusion probability (Horvitz and Thompson 1952). Biomass estimates were converted to carbon estimates based on the assumption that carbon content equaled 50% of biomass (Smith and others 2003). In this context, biomass loss estimates do not equate directly to CO2 emissions, as the immediate fate of the lost biomass is unknown.
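The core of the Horvitz–Thompson estimator is an inverse-probability-weighted sum. The minimal sketch below estimates a stratum total from sampled scenes and applies the 50% carbon fraction; it does not reproduce the generalized form or the variance estimation used in the NAFD framework, and the inclusion probabilities in the usage are hypothetical.

```python
def horvitz_thompson_total(sample_values, inclusion_probs):
    """Estimate a stratum total: each sampled scene's value weighted by 1 / pi_i."""
    return sum(y / p for y, p in zip(sample_values, inclusion_probs))

def biomass_to_carbon(biomass):
    """Carbon content assumed to equal 50% of biomass (Smith and others 2003)."""
    return 0.5 * biomass

# Hypothetical: two sampled scenes with biomass loss (Tg) and inclusion probabilities
stratum_loss = horvitz_thompson_total([10.0, 20.0], [0.5, 0.25])  # 20 + 80 = 100
stratum_carbon = biomass_to_carbon(stratum_loss)
print(stratum_loss, stratum_carbon)
```

Scenes with a low probability of selection stand in for many unsampled scenes, so they receive proportionally larger weights.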

Results

Empirical Biomass Models

Across the 50 empirical biomass models, RMSEs ranged from a low of 22 Mg/ha of biomass to a high of 221 Mg/ha of biomass (Table 1), with a mean of 80 Mg/ha of biomass. Relative to the range of observed biomass (max–min) within each of the sample scenes, RMSEs ranged from 9 to 25% (Figure 3). The average bias across all 50 scenes was 1.77 Mg/ha of biomass, ranging from a low of 0.02 Mg/ha to a high of 9.8 Mg/ha (Table 1). Only four scenes had a slight negative bias, indicating under-prediction relative to the mean observed biomass.

Comparison to Other Biomass Maps

Comparison of predicted Landsat scene-level biomass to three independent sources yielded favorable results, with mean scene-level biomass RMSEs ranging from 27 to 35 Mg/ha (Figure 4). Our scene-level biomass predictions were generally higher than the FIA plot-based biomass estimates (bias = 21 Mg/ha), especially for one notable outlier (central Oregon p45/r29). Likewise, our predicted biomass was generally higher than the FIA National Biomass Map estimates (bias = 24 Mg/ha). Compared to the NBCD2000 biomass map, predictions were more evenly distributed around the 1:1 line (bias = 1 Mg/ha).

Biomass Loss Estimates

Nationally, year-to-year variability in the amount of aboveground carbon lost as a result of disturbance ranged from a low of 61 Tg C (±16) in 1991 to a high of 84 Tg C (±33) in 2003 (Table 2; Figure 5). Eastern and western forest strata were relatively balanced in terms of their proportional contribution to national-level trends, despite eastern forests (181 million ha) having more than twice the area of western forests (73 million ha). On average, disturbance in eastern forests led to a loss of 0.19 Mg C ha−1 y−1, whereas in western forests the rate was 0.53 Mg C ha−1 y−1. Extrapolated across the area of forest in each stratum, eastern forests lost an average of 35 Tg C y−1 (±5) via disturbance and western forests lost an average of 39 Tg C y−1 (±14), for a national-level average annual total of 74 Tg C y−1 (±19) between 1986 and 2004.
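The extrapolation from per-hectare rates to stratum totals is simple arithmetic, since million ha multiplied by Mg C/ha gives Tg C. The sketch below uses the rounded figures quoted above; the small gaps relative to the reported 35, 39, and 74 Tg C y−1 presumably come from rounding of the per-hectare rates.

```python
# Stratum forest area (million ha) and mean carbon loss rate (Mg C/ha/yr), from the text
strata = {"east": (181, 0.19), "west": (73, 0.53)}

# million ha x Mg C/ha = million Mg C = Tg C
loss_tg = {name: area * rate for name, (area, rate) in strata.items()}
national = sum(loss_tg.values())
print({k: round(v, 1) for k, v in loss_tg.items()}, round(national, 1))
# -> {'east': 34.4, 'west': 38.7} 73.1
```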

Regional Trends

In the eastern forest stratum, annual biomass loss tracks closely (inversely) with the area of disturbance (Figure 6), suggesting a relative consistency of biomass density affected by disturbances, which in the eastern stratum are largely harvest. In contrast, in the western forest stratum, annual biomass loss shows more year-to-year variability that does not directly correspond to the area of disturbance, particularly in the post-2000 era (Figure 7). This pattern suggests that the biomass density of forests affected by disturbance in the west is more spatially and temporally variable.
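The reasoning can be made explicit: dividing annual biomass loss by annual disturbed area gives the mean biomass density of the forest that was disturbed each year. A low coefficient of variation of that ratio means loss tracks area, as in the eastern stratum; a high one means the density of disturbed forest varies, as inferred for the west. The sketch below uses hypothetical numbers and function names.

```python
from statistics import mean, pstdev

def disturbed_biomass_density(annual_loss, annual_area):
    """Mean biomass lost per unit disturbed area, year by year (loss / area)."""
    return [loss / area for loss, area in zip(annual_loss, annual_area)]

def coefficient_of_variation(values):
    """Population standard deviation divided by the mean."""
    return pstdev(values) / mean(values)

# Hypothetical strata: loss scales with area in the east, but not in the west
east = disturbed_biomass_density([100.0, 120.0, 110.0], [10.0, 12.0, 11.0])
west = disturbed_biomass_density([50.0, 100.0, 75.0], [5.0, 5.0, 5.0])
print(coefficient_of_variation(east), coefficient_of_variation(west))
```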

We examined the sample-level trends in biomass loss by region (East-North, East-South, Interior West, and Pacific Coast) to better understand the regional drivers of the stratum-level and national-level trends (Figure 8). The 12 samples from the East-North showed little temporal variability in biomass loss between 1986 and 2004 and a low overall magnitude of biomass loss as a result of disturbance. The 12 samples from the Interior West similarly showed a low magnitude of biomass loss as a result of disturbance but, in contrast, exhibited two pronounced pulses of increased biomass loss, in the mid-1990s and again in the early 2000s, largely related to wildfire events. The 13 East-South samples and the 13 Pacific Coast samples exhibited contrasting trends in biomass loss between 1986 and 2004: while the Pacific Coast samples exhibited a pronounced decrease in biomass loss throughout the 1990s, the East-South samples exhibited an increase.

Discussion

Spatially and temporally explicit characterization of the carbon consequences of disturbance is critical for accurate assessment of continental-scale carbon flux. Combining maps of disturbance with estimates of biomass loss demonstrates the importance of quantifying the geographic variability of biomass density with respect to disturbance. Although quantifying biomass loss as a result of disturbance is not strictly equivalent to quantifying carbon flux, it is an important precursor to quantifying how carbon is ultimately redistributed among carbon storage pools and the atmosphere. This critical baseline information is only one part of the overall carbon budget, but it is difficult to estimate by conventional methods (for example, inventory-based approaches). Inventory-based approaches do not explicitly account for disturbance; rather, repeat measurements of field plots implicitly include both growth and mortality. As a result, the inventory-based approach alone makes it difficult to address one of the key uncertainties about what drives the magnitude and fate of the carbon sink (Masek and Healey 2013): is the estimated growth in the North American carbon sink a result of increased forest growth or reduced disturbance?

Optical remote sensing-based methods for carbon accounting, like inventory-based approaches, cannot by themselves be relied upon to estimate all of the key elements of the carbon budget. However, spatially and temporally detailed mapping of disturbance is a noted strength of the Landsat data archive (Huang and others 2010; Kennedy and others 2010; Masek and others 2013), and, when coupled with empirical estimates of biomass loss, it provides needed insight into this critical part of the carbon cycle. Other studies have demonstrated that at the regional scale, Landsat-based estimates of harvest volume correspond well with Timber Products Output (TPO) harvest records (Healey and others 2009).

Spatial and Temporal Trends in Biomass Loss

We document a nearly 20-year trend in biomass loss as a result of forest disturbance across the conterminous U.S., including harvest, fire, hurricane, land-cover conversion, and numerous other disturbance processes. Each of these disturbance types leaves a unique imprint on the carbon cycle, though we make no attempt here at disturbance attribution. We do observe, however, a stronger coherence between trends in biomass loss and disturbance area in eastern forests than in western forests, potentially highlighting key differences in stand age heterogeneity and disturbance impacts (for example, even-aged harvest in eastern forests versus mixed-age disturbances such as insect outbreaks and fire in western forests). Other, more formal research into disturbance attribution is ongoing (Stewart and others 2009; Schroeder and others 2011). The general trend in the latter half of the time series is of increasing biomass loss (Figure 5), driven in large part by increasing disturbance over this time period (Masek and others 2013). Eastern and western forest strata exhibit somewhat opposing trends in biomass loss: as the western stratum declines in the mid-portion of the time series, the eastern stratum increases (Figures 6, 7). The timing of these opposing trends corresponds to the implementation of the Northwest Forest Plan in the Pacific Northwest in the mid-1990s, which resulted in a shift of timber harvesting from public lands in the northwest to private lands in the south (Smith and others 2009; Healey and others 2008). The decreasing trend in biomass loss in the western forest stratum is largely driven by the Pacific samples, which on a per-sample scene basis contribute significantly more to biomass loss than the interior west samples (P = 0.002) (Figure 8). This pattern is consistent with forest carbon stocks being highest in the Pacific states, lowest in the Great Plains, and intermediate in the Rocky Mountains and eastern states (U.S. Agriculture and Forestry Greenhouse Gas Inventory 1990–2008). The increase in biomass loss for the western stratum near the end of the time series is likely associated with notable wildfire events in both interior west and Pacific samples. The eastern stratum trends are driven almost entirely by the southern samples. The northern samples show little year-to-year variability in biomass loss and contribute little to the overall magnitude of biomass loss for the eastern stratum.

Comparison of Estimates of Biomass Loss Among Studies

A number of other studies have estimated disturbance and biomass loss using a variety of approaches. The 2011 EPA Greenhouse Gas Inventory (U.S. EPA 2011) estimated biomass loss as a result of disturbance (harvest and fire only) ranging from 146 to 202 Tg C y−1 between 1990 and 2004. Domestic harvests ranged from 125 to 144 Tg C y−1 and fire (wild and prescribed) ranged from 11 to 67 Tg C y−1. For the purposes of comparison, we plotted our estimates of biomass loss against the EPA estimates for the period 1990–2004 (Figure 9). Most of the variability in the EPA disturbance estimates stems from year-to-year fluctuations in estimated wildfire emissions. Annual domestic harvest rates are relatively consistent but declined between 1990 and 2004. The EPA estimate of biomass removed as a result of harvest is based on calculations of harvested wood product end uses (Skog 2008). For fire emissions, average biomass densities and combustion factors were applied to the area of forest that burned in a given year (41 Mg C/ha was estimated to be emitted by wildfire regardless of forest type, pre-fire biomass, fire severity, and so on).

Comparison of estimates of biomass loss as a result of disturbance between 1990 and 2004 against EPA estimates of biomass flux as a result of harvest and fire for the same time period. For reference, the estimated net aboveground, live biomass flux from EPA is shown. Positive numbers indicate carbon uptake; negative numbers indicate carbon loss.

Zheng and others (2011) estimated that disturbances reduced the forest carbon sink in the contiguous U.S. by 36% from 1992 through 2001, relative to the sink without disturbances. On an annual basis, this estimate equated to 233 Tg C y−1 removed as a result of disturbance, over three times the amount that we report here, though importantly we only report on live, aboveground tree carbon pools, whereas Zheng and others (2011) include standing dead, down dead, forest floor, and soil carbon pools. The methods that they used to quantify the carbon consequences of disturbance relied on a combination of approaches with varying assumptions about spatial and temporal precision. Carbon removed as a result of deforestation was estimated by first calculating the area of deforestation using the National Land Cover Database (NLCD) 1992–2001 Retrofit Change Map, then multiplying by the mean forest carbon density for a given county and assuming a carbon loss of 80% following deforestation. Carbon removed by harvest was estimated based on the volume of timber reported by the FIA as part of the TPO data (USDA Forest Service 2010). Western harvests were represented by the average state-level estimate for the period 1996–2001 and, therefore, lacked temporal variability. Forest fire carbon losses were calculated by using the Monitoring Trends in Burn Severity (MTBS) (Eidenshink and others 2007) maps to estimate burned area, multiplying this estimate by the average state-level carbon density from Smith and others (2006), and assuming 20, 40, and 60% carbon emission for low, moderate, and high severity fire classes, respectively. Burned area estimates from MTBS were taken as average annual burned area and therefore, as above, lacked temporal variability.
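The Zheng and others (2011) recipe for deforestation and fire, as described above, reduces to a few multiplications. The sketch below encodes it with hypothetical inputs (area in ha, carbon density in Mg C/ha) and hypothetical function names; note that every disturbed hectare in a county or state receives the same density.

```python
# Loss fractions as described for Zheng and others (2011)
SEVERITY_EMISSION = {"low": 0.20, "moderate": 0.40, "high": 0.60}
DEFORESTATION_LOSS = 0.80

def deforestation_loss(area_ha, county_mean_density):
    """Deforested area x county-mean carbon density x 80% loss (Mg C)."""
    return area_ha * county_mean_density * DEFORESTATION_LOSS

def fire_loss(burned_area_by_severity, state_mean_density):
    """Burned area per severity class x state-mean density x class emission fraction (Mg C)."""
    return sum(area * state_mean_density * SEVERITY_EMISSION[cls]
               for cls, area in burned_area_by_severity.items())

# Hypothetical county and state inputs
print(deforestation_loss(100.0, 50.0))
print(fire_loss({"low": 10.0, "high": 10.0}, 100.0))
```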

Williams and others (2012) estimated biomass loss by forest type, region, and productivity class as a function of the area of forested land assigned a stand age of one year based on the FIA age histogram, the pre-disturbance aboveground biomass, and the assumption that 80% of the “killed” biomass was removed from the site via harvest or fire. Across the conterminous U.S. for the year 2005, they estimated that 117 Tg C y−1 were removed as a result of harvest (107 Tg C y−1) and fire (10 Tg C y−1). Wildfire emissions were based on the Global Fire Emissions Database V3 (GFED3) (van der Werf and others 2010).

What these carbon accounting approaches highlight is the uncertainty regarding the spatial and temporal characterization of biomass change with respect to disturbance. The assumption that disturbances impact forests with spatially averaged biomass densities can lead to gross under- or over-estimation of biomass loss if disturbances are in fact taking place in areas that diverge from “average” conditions (Houghton and others 2009).
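A small hypothetical example shows the size of the effect. If the landscape averages 100 Mg/ha but disturbance falls on stands carrying 250 Mg/ha, the averaged approach under-estimates loss by a factor of 2.5; the reverse happens when disturbance concentrates in low-biomass stands. Function names and values are illustrative.

```python
PIXEL_AREA_HA = 0.09  # one 30 m Landsat pixel

def loss_with_mean_density(disturbed_area_ha, landscape_mean_mg_ha):
    """Spatially averaged approach: every disturbed hectare carries the landscape mean."""
    return disturbed_area_ha * landscape_mean_mg_ha

def loss_pixel_explicit(disturbed_densities_mg_ha, pixel_area_ha=PIXEL_AREA_HA):
    """Spatially explicit approach: sum the actual density of each disturbed pixel."""
    return sum(d * pixel_area_ha for d in disturbed_densities_mg_ha)

# Hypothetical: 1000 disturbed pixels, all in high-biomass stands (250 Mg/ha)
densities = [250.0] * 1000
averaged = loss_with_mean_density(len(densities) * PIXEL_AREA_HA, 100.0)
explicit = loss_pixel_explicit(densities)
print(averaged, explicit, explicit / averaged)
```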

Study Limitations and Potential Sources of Error

Our empirical, remote sensing-based estimates of biomass loss as a result of disturbance are lower than those of the other studies reported here. Importantly, however, our estimates provide a spatially and temporally explicit characterization derived with a consistent and synoptic method. We recognize the many potential sources of error that affect our estimates of biomass loss, many of which likely result in under-estimation. The major sources of error include sampling error and errors associated with Landsat data and empirical modeling (including model bias). Sampling error is explicitly accounted for as standard deviations around the estimates of biomass loss. Landsat and empirical modeling errors, on the other hand, are not explicitly accounted for here. Below we describe these individual sources of error and how each would likely affect the estimates of biomass loss.

Disturbance Map

The VCT-derived disturbance map underwent rigorous independent validation for six sample scenes, enabling an approximation of overall map error (Thomas and others 2011). Our approach to estimating biomass loss did not hinge on the accuracy of the map’s year of disturbance, but rather on the accuracy of the disturbed versus non-disturbed classification. Even so, the disturbance maps under-estimated the amount of disturbance, and therefore biomass loss, in five of the six validation scenes. Map bias was calculated as the difference between the proportion of map disturbance and the proportion of reference disturbance, so negative values indicate under-estimation. Across the six scenes, the average bias was −6.4% (−7.7% in the east and −4.0% in the west). Disturbance map commission errors (false positives) also likely resulted in under-estimation of biomass loss, because we calculated the mean biomass loss across all pixels labeled as disturbance in a given year by VCT, including falsely labeled pixels that lost little or no biomass.
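Both map effects can be sketched in Python under two simplifying assumptions that are not from the validation itself: bias is taken as the map proportion minus the reference proportion (so a negative value, like the reported −6.4%, means under-mapping), and commission pixels are assumed to have lost no biomass. The scene values are hypothetical.

```python
# Sketch of two disturbance-map effects discussed in the text. Assumes bias is
# defined as map minus reference proportion, and that commission (false
# positive) pixels lost no biomass; both are simplifying assumptions.

def map_bias(map_proportion, reference_proportion):
    """Negative values mean the map under-estimates disturbance."""
    return map_proportion - reference_proportion


def mean_loss_over_labeled(true_disturbance_losses, n_false_positives):
    """Mean loss across all pixels labeled disturbed.

    True disturbances contribute their actual loss; false positives are
    assumed to contribute zero, which dilutes the mean.
    """
    n_labeled = len(true_disturbance_losses) + n_false_positives
    return sum(true_disturbance_losses) / n_labeled


# Hypothetical scene: 90 correctly labeled pixels losing 100 units each,
# plus 10 false-positive pixels.
losses = [100.0] * 90
print(map_bias(0.05, 0.10))
print(mean_loss_over_labeled(losses, 0), mean_loss_over_labeled(losses, 10))
```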

Biomass Modeling

A number of sources of error involve empirical biomass modeling. The limitations of optical remote sensing of biomass are well understood: in closed-canopy forests especially, optical remote sensing data saturate at high levels of biomass. Furthermore, Random Forest regression tree models tend to over-predict in low-biomass forests and under-predict in high-biomass forests (Powell and others 2010). Because disturbance typically moves a pixel from high pre-disturbance biomass to low post-disturbance biomass, errors at both ends of the range compress the estimated difference, so our estimates of biomass loss are likely biased low. In addition, our estimates account only for live, aboveground biomass pools. We make no attempt to address the other pools, including dead biomass (standing dead and coarse woody debris), belowground biomass, non-tree biomass (for example, grasses and shrubs), or soil carbon.
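The compression argument can be illustrated with a toy Python model. This is not Random Forest: a linear pull toward the training mean is used as a hypothetical stand-in for its tendency to over-predict low biomass and under-predict high biomass, and the slope and biomass values are invented. Under that model the estimated loss is exactly the slope times the true loss, whatever the training mean.

```python
# Toy illustration (not Random Forest itself) of why over-predicting low
# biomass and under-predicting high biomass shrinks estimated loss. A linear
# pull toward the training mean stands in for that bias pattern; the slope
# and biomass values are hypothetical.

def shrunk_prediction(true_biomass, training_mean, slope):
    """Prediction pulled toward the training mean when 0 < slope < 1."""
    return training_mean + slope * (true_biomass - training_mean)


def predicted_loss(pre, post, training_mean, slope):
    """Estimated loss as the difference of two shrunk predictions."""
    return (shrunk_prediction(pre, training_mean, slope)
            - shrunk_prediction(post, training_mean, slope))


# Stand with 300 units of biomass before disturbance and 20 after.
true_loss = 300 - 20
estimated = predicted_loss(300, 20, training_mean=150, slope=0.7)
print(true_loss, round(estimated, 6))  # estimated equals slope * true_loss
```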

Biomass Allometry

Biomass allometry is another potential source of error. For national-level consistency, we used the Jenkins allometric equations (Jenkins and others 2003), which are based solely on DBH. A recent comparison of biomass allometric approaches found that, for 20 tree species, the Jenkins method overestimated carbon stocks by an average of 16% compared with an approach that incorporated tree height into species-specific tree volume models (Domke and others 2012).
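The DBH-only allometric form and the effect of a uniform overestimate can be sketched in Python. The coefficients in the usage line are placeholders, not published species-group values, and treating the 16% average from Domke and others (2012) as a uniform scaling factor is a simplifying assumption. Under that assumption, plot biomass used to train the models, and therefore modeled pre- and post-disturbance biomass and their difference, would all be inflated by the same factor.

```python
import math

# Sketch of the DBH-only allometric form used by Jenkins and others (2003):
# bm = exp(beta0 + beta1 * ln(dbh)), with dbh in cm and bm in kg dry weight.
# Coefficients vary by species group; the values used below are placeholders,
# not published coefficients.

def jenkins_biomass_kg(dbh_cm, beta0, beta1):
    """Total aboveground biomass (kg dry weight) for one tree."""
    if dbh_cm <= 0:
        raise ValueError("dbh_cm must be positive")
    return math.exp(beta0 + beta1 * math.log(dbh_cm))


def rescale_for_reported_overestimate(estimate, relative_overestimate=0.16):
    """Rescale an estimate assumed to be (1 + relative_overestimate) times too high.

    0.16 reflects the average difference reported by Domke and others (2012);
    applying it uniformly is a simplifying assumption.
    """
    return estimate / (1.0 + relative_overestimate)


# Placeholder coefficients (hypothetical, for illustration only).
tree_kg = jenkins_biomass_kg(30.0, beta0=-2.5, beta1=2.45)
print(round(tree_kg, 1), round(rescale_for_reported_overestimate(tree_kg), 1))
```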

Additional Sources

Finally, several challenges of empirical modeling with plot data introduce further uncertainty and error, though their overall effects on biomass loss estimates are unknown. Chief among them is the possibility that geolocation inaccuracies between the plot data and the satellite imagery obscure the empirical relationships between biomass and spectral data. In addition, although we attempted to minimize the various sources of error that can affect Landsat time series analyses, residual effects of exogenous factors such as differences in sun angle, phenology, and atmospheric conditions were inevitable.

Trends in Biomass Loss in the Context of the U.S. Forest Carbon Sink

How do we reconcile our estimates of increasing rates of forest disturbance and biomass loss with a significant and growing forest carbon sink? Pan and others (2011a) estimated that the U.S. forest carbon sink increased from 179 Tg C y−1 in 1990–1999 to 239 Tg C y−1 in 2000–2007, with large increases in the biomass and soil carbon pools. One explanation for this pattern is that, despite increasing rates of forest disturbance, a relatively small percentage (~1%) of conterminous U.S. forest is disturbed annually (Masek and others 2013), so the associated carbon consequences are relatively minor. For example, the EPA estimated total live aboveground forest biomass stocks in 2003 at 16,295 Tg C (U.S. EPA 2011). In that same year, our study estimated that 84 Tg C was lost as a result of disturbance, just over 0.5% of live aboveground forest biomass stocks. Even acknowledging that we likely underestimate the amount of biomass lost as a result of disturbance, the relative consistency of these results suggests that disturbance alone is unlikely to significantly weaken a growing forest carbon sink. A second explanation is that the area of forest land is increasing, albeit slowly (U.S. EPA 2011). A third is that current forest management practices, combined with prior land-use changes, are increasing forest growth rates, and hence carbon uptake and biomass density (U.S. EPA 2011). This interpretation is consistent with the recent increase in aboveground live biomass flux documented by the EPA (U.S. EPA 2011) (Figure 9). Despite increases in forest mortality, particularly in the Rocky Mountain region, net annual growth of U.S. forests is increasing (Smith and others 2009), with southern forests a major contributor.
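The “just over 0.5%” figure follows directly from the two values reported above; this short Python check uses only those numbers, and the variable names are illustrative.

```python
# Share of the 2003 live aboveground forest carbon stock (EPA estimate) lost
# to disturbance in 2003, using the two values reported in the text.
stock_2003_tg_c = 16_295  # U.S. EPA (2011) live aboveground stock, Tg C
loss_2003_tg_c = 84       # this study's 2003 disturbance loss, Tg C

share_lost = loss_2003_tg_c / stock_2003_tg_c
print(f"{share_lost:.2%}")  # prints 0.52%
```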
Eastern forests contain larger carbon stores than western forests, but western forests are sequestering carbon at a higher per-hectare rate (U.S. Agriculture and Forestry Greenhouse Gas Inventory 1990–2008). The overall balance between net growth and loss suggests a mechanism for a growing carbon sink: growing stock removals across the U.S. have been relatively stable over the past several decades, whereas net growth has continued to increase (Smith and others 2009). The stability (and even recent decline) of timber harvests masks a highly variable and potentially growing rate of non-harvest disturbance (Goward and others 2008; Westerling and others 2006; Masek and others 2013). Overall, our data suggest that the modest increases in disturbance rates and associated carbon consequences over the 18-year study period are not large enough to weaken a growing forest carbon sink in the conterminous U.S., which is driven largely by increased forest growth rates and biomass densities.

References

Blackard JA, Finco MV, Helmer EH, Holden GR, Hoppus ML, Jacobs DM, Lister AJ, Moisen GG, Nelson MD, Riemann R, Ruefenacht B, Salajanu D, Weyermann DL, Winterberger KC, Brandeis TJ, Czaplewski RL, McRoberts RE, Patterson PL, Tymcio RP. 2008. Mapping US forest biomass using nationwide forest inventory data and moderate resolution information. Remote Sens Environ 112:1658–77.

Breiman L. 2001. Random Forests. Mach Learn 45:5–32.

Campbell JL, Kennedy RE, Cohen WB, Miller R. 2012. Assessing the carbon consequences of western juniper encroachment across Oregon, USA. Range Ecol Manag 65(3):223–31.

Canty MJ, Nielsen AA, Schmidt M. 2004. Automatic radiometric normalization of multitemporal satellite imagery. Remote Sens Environ 91(3–4):441–51.

Chambers JQ, Fisher JI, Zeng H, Chapman EL, Baker DB, Hurtt GC. 2007. Hurricane Katrina’s carbon footprint on U.S. Gulf Coast forests. Science 318:1107.

Climate Change Science Program. 2007. The first State of the Carbon Cycle Report (SOCCR): the North American carbon budget and implications for the global carbon cycle. In: King AW, Dilling L, Zimmerman GP, Fairman DM, Houghton RA, Marland G, Rose RA, Wilbanks TJ, Eds. A report by the U.S. Climate Change Science Program and the Subcommittee on Global Change Research. Asheville, NC: National Oceanic and Atmospheric Administration, National Climate Data Center. 242 pp.

Cohen WB, Goward SN. 2004. Landsat’s role in ecological applications of remote sensing. Bioscience 54:535–45.

Cohen WB, Harmon ME, Wallin DO, Fiorella M. 1996. Two decades of carbon flux from forests of the Pacific Northwest. Bioscience 46:836–44.

Coops NC, Wulder MA, Iwanicka D. 2009. Large area monitoring with a MODIS-based Disturbance Index (DI) sensitive to annual and seasonal variations. Remote Sens Environ 113:1250–61.

Domke GM, Woodall CW, Smith JE, Westfall JA, McRoberts RE. 2012. Consequences of alternative tree-level biomass estimation procedures on U.S. forest carbon stock estimates. For Ecol Manage 270:108–16.

Eidenshink J, Schwind B, Brewer K, Zhu Z, Quayle B, Howard S. 2007. A project for monitoring trends in burn severity. Fire Ecol Spec Issue 3(1):3–21.

Freeman EA, Frescino TA. 2009. ModelMap: an R package for modeling and map production using Random Forest and Stochastic Gradient Boosting. Ogden, UT: USDA Forest Service, Rocky Mountain Research Station. http://CRAN.R-project.org.

Gao F, Masek J, Wolfe R. 2009. An automated registration and orthorectification package for Landsat and Landsat-like data processing. J Appl Remote Sens 3:033515. doi:10.1117/1.3104620.

Goward SN, Masek JG, Cohen W, Moisen G, Collatz GJ, Healey S, Houghton RA, Huang C, Kennedy R, Law B, Powell S, Turner D, Wulder MA. 2008. Forest disturbance and North American carbon flux. EOS 89(11):105–6.

Healey SP, Yang Z, Cohen WB, Pierce DJ. 2006. Application of two regression-based methods to estimate the effects of partial harvest on forest structure using Landsat data. Remote Sens Environ 101:115–26.

Healey SP, Cohen WB, Spies TA, Moeur M, Pflugmacher D, Whitley MG, Lefsky M. 2008. The relative impact of harvest and fire upon landscape-level dynamics of older forests: lessons from the Northwest Forest Plan. Ecosystems 11(7):1106–19.

Healey SP, Blackard JA, Morgan TA, Loeffler D, Jones G, Songster J, Brandt JP, Moisen GG, DeBlander LT. 2009. Changes in timber haul emissions in the context of shifting forest management and infrastructure. Carbon Balance Manage 4:9.

Horvitz DG, Thompson DJ. 1952. A generalization of sampling without replacement from a finite universe. J Am Stat Assoc 47:663.

Houghton RA. 2005. Aboveground forest biomass and the global carbon balance. Glob Change Biol 11:945–58.

Houghton RA, Hall F, Goetz SJ. 2009. Importance of biomass in the global carbon cycle. J Geophys Res 114:G00E03. doi:10.1029/2009JG000935.

Huang C, Goward SN, Masek JG, Gao F, Vermote EF, Thomas N, Schleeweis K, Kennedy RE, Zhu Z, Eidenshink JC, Townshend JRG. 2009. Development of time series stacks of Landsat images for reconstructing forest disturbance history. Int J Digit Earth 2:195–218.

Huang C, Goward SN, Masek JG, Thomas N, Zhu Z, Vogelmann JE. 2010. An automated approach for reconstructing recent forest disturbance history using dense Landsat time series stacks. Remote Sens Environ 114:183–98.

Jenkins JC, Chojnacky DC, Heath LS, Birdsey RA. 2003. National-scale biomass equations for United States tree species. For Sci 49(1):12–35.

Kellndorfer JM, Walker WS, Pierce LE, Dobson MC, Fites J, Hunsaker C, Vona J, Clutter M. 2004. Vegetation height derivation from Shuttle Radar Topography Mission and National Elevation data sets. Remote Sens Environ 93(3):339–58.

Kellndorfer JM, Walker WS, LaPoint E, Kirsch K, Bishop J, Fiske G. 2010. Statistical fusion of lidar, InSAR, and optical remote sensing data for forest stand height characterization: a regional-scale method based on LVIS, SRTM, Landsat ETM+, and ancillary data sets. J Geophys Res 115:G00E08. doi:10.1029/2009JG000997.

Kennedy RE, Yang Z, Cohen WB. 2010. Detecting trends in disturbance and recovery using yearly Landsat time series: 1. LandTrendr—temporal segmentation. Remote Sens Environ 114:2897–910.

Kennedy RE, Yang Z, Cohen WB, Pfaff E, Braaten J, Nelson P. 2012. Spatial and temporal patterns of forest disturbance and regrowth within the area of the Northwest Forest Plan. Remote Sens Environ 122:117–33.

Kurz WA, Dymond CC, Stinson G, Rampley GJ, Neilson ET, Carroll AL, Ebata T, Safranyik L. 2008. Mountain pine beetle and forest carbon feedback to climate change. Nature 452. doi:10.1038/nature06777.

Lefsky MA, Harding DJ, Keller M, Cohen WB, Carabajal CC, Del Bom Espirito-Santo F, Hunter MO, de Oliveira Jr R. 2005. Estimates of forest canopy height and aboveground biomass using ICESat. Geophys Res Lett 32. doi:10.1029/2005GL023971.

Masek JG, Healey SP. 2013. Monitoring U.S. forest dynamics with Landsat. In: Achard F, Hansen MC, Eds. Global forest monitoring from earth observation. Boca Raton, FL: CRC Press.

Masek JG, Vermote EF, Saleous N, Wolfe R, Hall FG, Huemmrich F, Gao F, Kutler J, Lim TK. 2006. Landsat surface reflectance data set for North America, 1990–2000. Geosci Remote Sens Lett 3:68–72.

Masek JG, Goward SN, Kennedy RE, Cohen WB, Moisen GG, Schleeweis K, Huang C. 2013. United States forest disturbance trends observed using Landsat time series. Ecosystems 16:1087–104.

Myneni RB et al. 2001. A large carbon sink in the woody biomass of Northern forests. Proc Natl Acad Sci USA 98(26):14784–9.

Pan Y, Birdsey RA, Fang J, Houghton R, Kauppi PE, Kurz WA, Phillips OL, Shvidenko A, Lewis SL, Canadell JG, Ciais P, Jackson RB, Pacala SW, McGuire AD, Piao S, Rautiainen A, Sitch S, Hayes D. 2011a. A large and persistent carbon sink in the world’s forests. Science 333:988–93.

Pan Y, Chen JM, Birdsey R, McCullough K, He L, Deng F. 2011b. Age structure and disturbance legacy of North American forests. Biogeosciences 8:715–32.

Powell SL, Cohen WB, Healey SP, Kennedy RE, Moisen GG, Pierce KB, Ohmann JL. 2010. Quantification of live aboveground forest biomass dynamics with Landsat time-series and field inventory data: a comparison of empirical modeling approaches. Remote Sens Environ 114:1053–68.

Saatchi SS, Houghton RA, Dos Santos Alvala RC, Soares JV, Yu Y. 2007. Distribution of aboveground live biomass in the Amazon basin. Glob Change Biol 13:816–37.

Schroeder TA, Cohen WB, Song C, Canty MJ, Yang Z. 2006. Radiometric calibration of Landsat data for characterization of early successional forest patterns in western Oregon. Remote Sens Environ 103:16–26.

Schroeder TA, Wulder MA, Healey SP, Moisen GG. 2011. Mapping wildfire and clearcut harvest disturbances in boreal forests with Landsat time-series data. Remote Sens Environ 115(6):1421–33.

Skog KE. 2008. Sequestration of carbon in harvested wood products for the United States. For Prod J 58:56–72.

Smith JE, Heath LS, Jenkins JC. 2003. Forest volume-to-biomass models and estimates of mass for live and standing dead trees of U.S. forests. Gen. Tech. Rep. NE-298. Newtown Square, PA: USDA Forest Service, Northeastern Research Station.

Smith JE, Heath LS, Skog KE, Birdsey RA. 2006. Methods for calculating forest ecosystem and harvested carbon with standard estimates for forest types of the United States (NE-GTR-343). Newtown Square, PA: USDA Forest Service, Northeastern Research Station.

Smith WB, Miles PD, Perry CH, Pugh CH. 2009. Forest resources of the United States, 2007. Gen. Tech. Rep. WO-78. Washington, DC: U.S. Department of Agriculture, Forest Service, Washington Office. 336 pp.

Stewart BP, Wulder MA, McDermid GJ, Nelson T. 2009. Disturbance capture and attribution through the integration of Landsat and IRS-1C imagery. Can J Remote Sens 35:523–33.

Thomas NE, Huang C, Goward SN, Powell SL, Rishmawi K, Schleeweis K, Hinds A. 2011. Validation of North American forest disturbance dynamics derived from Landsat time series stacks. Remote Sens Environ 115:19–32.

Toan TL, Quegan S, Woodward I, Lomas M, Delbart N, Picard G. 2004. Relating radar remote sensing of biomass to modeling of forest carbon budgets. Clim Chang 67:379–402.

U.S. Agriculture and Forestry Greenhouse Gas Inventory. 1990–2008. Climate Change Program Office, Office of the Chief Economist, U.S. Department of Agriculture. Technical Bulletin No. 1930. 159 pp. June, 2011.

U.S. EPA. 2011. Inventory of U.S. greenhouse gas emissions and sinks: 1990–2009. Washington, DC: U.S. Environmental Protection Agency.

USDA Forest Service. 2010. Forest timber product output (TPO) reports. Washington, DC: USDA Forest Service. http://srsfia2.fs.fed.us/php/tpo_2009/tpo_rpa_int1.php.

van der Werf GR, Randerson JT, Giglio L, Collatz GJ, Mu M, Kasibhatla PS, Morton DC, DeFries RS, Jin Y, van Leeuwen TT. 2010. Global fire emissions and the contribution of deforestation, savanna, forest, agricultural, and peat fires (1997–2009). Atmos Chem Phys 10:11707–35.

Westerling AL, Hidalgo HG, Cayan DR, Swetnam TW. 2006. Warming and earlier spring increase western U.S. forest wildfire activity. Science 313:940–3.

Williams CA, Collatz GJ, Masek J, Goward SN. 2012. Carbon consequences of forest disturbance and recovery across the conterminous United States. Global Biogeochem Cy 26:GB1005. doi:10.1029/2010GB003947.

Zheng D, Heath LS, Ducey MJ, Smith JE. 2011. Carbon changes in conterminous US forests associated with growth and major disturbances: 1992–2001. Environ Res Lett 6. doi:10.1088/1748-9326/6/1/014012.

Acknowledgments

We would like to thank NASA’s Terrestrial Ecology Program for funding this study. We would also like to thank a number of team members and collaborators for overall project management, guidance, and support, specifically Sam Goward, Nancy Thomas, and Karen Schleeweis of the University of Maryland; Jeff Masek and Jim Collatz of NASA Goddard; and Gretchen Moisen of the Rocky Mountain Research Station. Special thanks go to Elizabeth LaPointe of the USDA Forest Service FIA National Spatial Data Services for all of her help with the FIA data. Thanks also to Maureen Duane and Yang Zhiqiang at Oregon State University for their assistance and support.

Additional information

Author Contributions

SLP, WBC, REK, SPH, and CH conceived the study and developed the methods; SLP, REK, and CH analyzed the data; SLP and WBC wrote the article; REK, SPH, and CH contributed to writing the article.

About this article

Cite this article

Powell, S.L., Cohen, W.B., Kennedy, R.E. et al. Observation of Trends in Biomass Loss as a Result of Disturbance in the Conterminous U.S.: 1986–2004. Ecosystems 17, 142–157 (2014). https://doi.org/10.1007/s10021-013-9713-9

DOI: https://doi.org/10.1007/s10021-013-9713-9