Abstract

Low flow drainage from a river system, in the absence of precipitation or snowmelt, derives directly from the water stored in the upstream aquifers in the basin; therefore, observations of the trends of the annual lowest flows can serve to deduce quantitative estimates of the evolution of the basin-scale groundwater storage over the period of the streamflow record. Application of this method has made it possible, for the first time, to determine the magnitudes of the trends in groundwater storage over the past two-thirds of a century in some 41 large prototypical basins in the United States east of the Rocky Mountains. It was found that during the period 1940–2007 groundwater storage generally increased in most areas; these positive trends were especially pronounced in the Ohio and Upper Mississippi Water Resources Regions, but weaker in most other regions. Notable exceptions are northern New England and especially the South Atlantic-Gulf region, which saw prolonged declines in groundwater levels over this nearly 70-year period. These long-term trends are generally in agreement with previous studies of trends in other components of the water cycle, such as precipitation, total runoff, and terrestrial evaporation. Over the most recent 20 years, from 1988 through 2007, most regions except the Ohio and the Souris-Red-Rainy regions experienced declining average groundwater levels to varying degrees, with maximal values of the order of −0.2 mm a⁻¹.

1 Introduction

Although precipitation is the only reliable and sustainable source of water supply over large regions, short periods of drought and surges in demand can usually be overcome or buffered by various storage mechanisms in the system. Among the different forms of storage, groundwater far outstrips all others in magnitude and availability, and thus in practical importance. On a global scale, for instance, active groundwater storage has been estimated (e.g. Brutsaert 2005) to be at least an order of magnitude larger than the water stored in all lakes and reservoirs; and although at least as much water, if not more, is stored in ice caps and glaciers as in the world's aquifers, that water is not in a state that allows direct and immediate transport and consumption.

Recently, the notion has become increasingly accepted that human activities, mainly those related to industrialization, have globally resulted in radiative forcing and in an average net warming effect over the past century. Even though this warming is well documented (e.g. Hansen et al. 2006; Santer et al. 2008), any concurrent changes in hydrologic cycle activity and water resources are more uncertain and unsettled (e.g. IPCC 2007). This is especially the case for groundwater, as it is normally inaccessible for direct observation. Thus, in light of the different possible scenarios of global change in the hydrologic cycle, it is now of critical importance not just to monitor groundwater storage in real time, but to unravel the historical evolution of this valuable resource over the long term as well. Unfortunately, at present such long-term water storage records, based on direct measurements in observation wells, are available for only a few regions on Earth. In the past decade, some exciting developments in satellite gravity measurement technology have taken place, which now allow the large-scale monitoring of changes in terrestrial water storage, even in the most inaccessible and remote regions of the globe (e.g. Swenson et al. 2006; Syed et al. 2008), from which groundwater storage may be deduced. However, the resulting records are still much too short to allow meaningful inferences, as required in the global change context.

To obtain the necessary information, it is therefore imperative to use alternative methods that can provide it in an indirect yet reliable way. One such method uses streamflow measurements to infer changes in groundwater storage in the upstream aquifers of the basin; it was proposed in Brutsaert (2008), tested locally in two basins in Illinois, and has subsequently been applied successfully elsewhere as well (Brutsaert and Sugita 2008; Sugita and Brutsaert 2009). The method has two advantages: first, it provides storage values integrated over the entire upstream basin area, rather than point values as obtained from observation wells; and second, because routine streamflow measurements usually started much earlier, it produces long-term time series that predate most groundwater observations, where these exist at all, by many years.

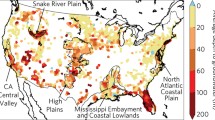

The objective of this paper is to apply this technique of Brutsaert (2008) more broadly to the entire eastern half of the conterminous United States, in order to gain some insight into the evolution of groundwater storage over the past two-thirds of a century; this region east of the Rocky Mountains is characterized mostly by a humid continental climate. For this purpose, some 41 large prototypical basins distributed over the different water resources regions were selected so as to be, first, sufficiently unregulated and free of controls, and therefore representative of natural flow conditions; and second, large enough to be illustrative and typical of the larger area surrounding them. While this study is primarily intended as an exploratory overview to pave the way for broader implementation, it will be shown that the groundwater storage trends during the past 70 years derived this way, and their spatial distribution, are generally consistent with the trends observed in other components of the hydrologic cycle over this large region.

2 The dependence of baseflow on groundwater storage

2.1 Low flow parameterization

The flow in a natural river system resulting from groundwater outflows from the upstream riparian aquifers, in the absence of precipitation or artificial storage releases, is referred to as baseflow; it is also variously called drought flow, low flow, or dry-weather flow. Probably the most commonly used assumption to describe this type of flow, which goes back at least to the pioneering work of Boussinesq (1877), is that it is an exponential decay phenomenon. To allow direct comparison with other components of the hydrologic cycle, such as precipitation and evaporation, this can conveniently be written as

\[ y = y_0 \exp\left( -t/K \right) \tag{1} \]

where \( y = Q/A \) is the rate of flow in the stream per unit of catchment area [LT⁻¹], Q is the volumetric rate of flow in the stream [L³T⁻¹], A is the area of the basin [L²], \( y_0 \) is the value of y at the arbitrarily selected time origin t = 0, and K is the characteristic time scale of the catchment drainage process [T], also commonly referred to as the storage coefficient. As shown earlier in Brutsaert (2008), the form of Eq. 1 immediately implies the following linear relationship between storage and outflow rate

\[ S = K y \tag{2} \]

in which S, the underground water storage volume per unit catchment area [L], can also be visualized as the average thickness of a layer of water above the zero-flow level spread over the area A. Thus the rate of change of groundwater storage in the basin can be estimated from the temporal trend in baseflows observed at the outlet of the river basin by means of

\[ \frac{dS}{dt} = K \frac{dy}{dt} \tag{3} \]

The application of Eq. 3 requires knowledge of K and dy/dt; their estimation is treated next in Sections 2.2 and 2.3, respectively.
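A minimal numerical sketch of Eqs. 1, 2 and 3 follows (Python, standard library only). The initial flow `y0` is a hypothetical value, and K = 45 days is the working value adopted later in Section 2.2. The central-difference check illustrates that, under the exponential recession of Eq. 1, the storage of Eq. 2 declines at the rate of outflow, −dS/dt = y, which is why a trend in baseflow can be read as a trend in storage.

```python
import math

K = 45.0   # drainage time scale [d], working value adopted in Section 2.2
y0 = 0.5   # hypothetical baseflow at t = 0 [mm/d]


def baseflow(t: float) -> float:
    """Eq. 1: exponential recession y = y0 exp(-t/K), in mm/d (t in days)."""
    return y0 * math.exp(-t / K)


def storage(y: float) -> float:
    """Eq. 2: linear storage-outflow relation S = K y, in mm."""
    return K * y


def storage_trend(dy_dt: float) -> float:
    """Eq. 3: dS/dt = K dy/dt; dy/dt in mm/d per year gives dS/dt in mm per year."""
    return K * dy_dt


# Central-difference check of the mass balance -dS/dt = y during a recession.
t, h = 30.0, 1e-3
dS_dt = (storage(baseflow(t + h)) - storage(baseflow(t - h))) / (2 * h)
print(f"-dS/dt = {-dS_dt:.6f} mm/d, y = {baseflow(t):.6f} mm/d")
```

The same `storage_trend` function applied to an annual trend of the lowest flows gives the storage trend used throughout the rest of the paper.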

2.2 Characteristic basin drainage time scale

Over the past 50 to 60 years, numerous procedures have been developed to estimate K for a given river basin from streamflow measurements. However, most if not all of them are somewhat subjective in their application. The first reason is undoubtedly that Eq. 1 is an idealization that cannot capture all the intricacies of the drainage processes in natural catchments. Second, any procedure to estimate K must ensure that no storm runoff is present and that the streamflow results only from upstream groundwater outflows; this requires careful scrutiny of concurrent precipitation records. This requirement is hard to satisfy, because rain gage networks in large basins are never dense enough to capture all events everywhere in the basin. Third, low flows in a river, when close to zero, are unavoidably subject to measurement error and often to other uncertainties.

It so happens, however, that, as demonstrated in Brutsaert (2008) both conceptually and experimentally, the variability of K for large basins is relatively small, so that it can be considered a fairly robust parameter. Conceptually, on the basis of classical hydraulic groundwater theory (e.g. Brutsaert 2005, p 404) and some additional simplifying assumptions, K can be shown to be related to basin characteristics as follows

\[ K = \frac{n_{\text{e}}}{\pi^2 T_{\text{e}} D_{\text{d}}^2} \tag{4} \]

where \( n_{\text{e}} \) is the effective drainable porosity of the riparian aquifer materials in the basin; \( T_{\text{e}} = k_0 p D \) is their effective hydraulic transmissivity [L²T⁻¹], in which \( k_0 \) is the hydraulic conductivity [LT⁻¹] and p is the average fraction of the vertical aquifer thickness D [L] occupied by flowing water; and \( D_{\text{d}} = L/A \) is the drainage density, in which L is the total length of all stream channels in the river basin and A is its area.

A cursory inspection of Eq. 4 indicates that K values in different basins can be expected to vary within a relatively narrow range. Within regions of homogeneous lithology, the drainage density \( D_{\text{d}} \) depends only weakly, if at all, on drainage area A; this has been documented in different types of landscapes, for example the Appalachian Plateau with different degrees of relief and ruggedness (Morisawa 1962), the Southern Great Plains in Oklahoma (USA; Brutsaert and Lopez 1998), and the tidal marshlands of the lagoon of Venice (Italy), Petaluma Bay (CA, USA), and Barnstable marsh (MA, USA) (Marani et al. 2003). While the matter may involve other considerations (e.g. Gregory and Gardiner 1975; Gardiner et al. 1977), many basins exhibit some kind of self-similarity or fractal behavior in their drainage pattern, and in such basins \( D_{\text{d}} \) can be taken as a constant (e.g. Smart 1972, p 334; Maritan et al. 1996). Moreover, highly transmissive terrain, that is, terrain with high values of \( T_{\text{e}} = k_0 p D \), will tend to require fewer channels to drain the excess precipitation, and thus have a smaller drainage density \( D_{\text{d}} \) (and vice versa); the net result is that the product \( \left( {{T_{\text{e}}}D_{\text{d}}^2} \right) \) will tend to be even less variable and more independent of terrain than either factor by itself. Interestingly, the near-constancy of \( \left( {{T_{\text{e}}}D_{\text{d}}^2} \right) \) is not a new idea; it was already implicit some 45 years ago in the work of Carlston (1963, 1966), in a somewhat different context, namely following a steady-state groundwater description by Jacob (1943). The drainable porosity \( n_{\text{e}} \) rarely exhibits large variability. Even so, since \( n_{\text{e}} \), like \( T_{\text{e}} \), can be expected to be larger for coarser, more permeable aquifer materials than for finer, less permeable ones, the variability of the ratio \( (n_{\text{e}}/T_{\text{e}}) \) will usually also be smaller than that of each term separately.
Finally, the larger a basin is, the more likely it is to consist of many different types of soils and geological formations; therefore, the main controlling variables \( n_{\text{e}} \), \( D_{\text{d}} \), and \( T_{\text{e}} \) will not only compensate for each other, as suggested by the structure of Eq. 4, but will also tend to average out to some typical values. As a result, in large basins within regions with a similar climate, K can be expected to be relatively invariant.
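The compensation argument can be illustrated numerically. The sketch below assumes that Eq. 4 has the linearized-Boussinesq form K = n_e/(π² T_e D_d²), and the basin properties are hypothetical, chosen only to be of plausible magnitude. Doubling the transmissivity while reducing the drainage density by a factor √2 leaves the product T_e D_d², and hence K, unchanged.

```python
import math


def drainage_time_scale(n_e: float, T_e: float, D_d: float) -> float:
    """K [d] from Eq. 4, assumed here to read K = n_e / (pi^2 T_e D_d^2).

    n_e: effective drainable porosity [-]
    T_e: effective transmissivity k0*p*D [m^2/d]
    D_d: drainage density L/A [1/m]
    """
    return n_e / (math.pi ** 2 * T_e * D_d ** 2)


# Hypothetical reference basin: n_e = 0.02, T_e = 45 m^2/d, D_d = 1 km^-1 = 1e-3 m^-1.
K_ref = drainage_time_scale(n_e=0.02, T_e=45.0, D_d=1.0e-3)
# More transmissive terrain drained by fewer channels: T_e doubled, D_d divided by sqrt(2).
K_alt = drainage_time_scale(n_e=0.02, T_e=90.0, D_d=1.0e-3 / math.sqrt(2))
print(f"K_ref = {K_ref:.1f} d, K_alt = {K_alt:.1f} d")
```

With these illustrative values both cases give K of about 45 days.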

These conceptual considerations are corroborated by numerous field observations of K. A first review of published values of K for large basins, obtained with different procedures, was presented in Brutsaert (2008). It was concluded there that for the very lowest flows from large basins with mature river network patterns, well-developed aquifers, and generally mild surface slopes, the decay parameter in Eq. 1 tends to be of the order of 1.5 months, with an uncertainty of about 2 weeks.

Meanwhile, some additional results have come to light that largely confirm these values and are worth citing here. Chapman and Peck (1997, Table 5) analyzed recessions at a number of gaging stations on the Chattahoochee River in Georgia; at the two stations with the largest drainage areas, they obtained for the summer flows (the season considered in this study) K = 43 days (at A = 6,265 km²) and K = 52 days (at A = 3,754 km²), and for the winter flows K = 56 days and K = 65 days, respectively. Van de Griend et al. (2002) analyzed baseflow from a number of catchments, most of which were in mountainous terrain and very small, of the order of 1 km²; however, for the 133 km² basin of the Groenlose Slinge in Gelderland, which comes closest to satisfying the requirements of the present analysis, K was observed to vary as a function of discharge rate between a maximum of 58.6 days and a minimum of 31.5 days, with a mean value of K = 45 days over the entire range. Dewandel et al. (2003) studied hydrograph recession curves of an ophiolite hard-rock aquifer measured in Wadi Khafifah, Sultanate of Oman; they found that this aquifer was sufficiently fissured that, at the scale of this 37 km² basin, it could be considered an equivalent porous medium, and that K = 62.5 days when analyzed with Eq. 1. Brutsaert and Sugita (2008) analyzed flow rates at three gaging stations on the Kherlen River in Mongolia, obtaining K = 41 days at Baganuur, K = 43 days at Undurkhaan, and K = 48 days at Choibalsan.
At these stations the respective drainage areas are A = 7,350 km², 39,400 km², and 71,500 km²; the respective elevations are H = 1,297 m, 1,033 m, and 738 m AMSL; and the respective distances upstream of the river's end at Hulun Lake are L = 940 km, 829 km, and 390 km. Although these K values show a slight dependence on travel time in the river, they mainly reflect the groundwater drainage time, as they are all very close to their average value of K = 44 days. Tague and Grant (2009) analyzed seasonal recessions after snowmelt in two watersheds in the Cascades in Oregon and in two watersheds in the California Sierras; not surprisingly for small catchments, the K values covered a wide range, between 26 and 100 days, but the area-weighted average was 50 days.

For the present climatic analysis, mainly the sign and the order of magnitude of the groundwater storage trends are of interest, not their exact values. Therefore, in light of the relative invariance of K and the difficulty of determining it, the typical value K = 45 days was adopted as a working assumption in what follows.

2.3 Estimation of drought flow trend

For the present study, the annual values of y to be used in Eq. 3 to calculate dS/dt were obtained with the method proposed in Brutsaert (2008). In principle, the long-term baseflow trend could have been taken as the trend of the lowest daily flows of each year; but since daily flows are normally subject to error and other uncertainties, the annual lowest 7-day mean flows, denoted \( y_{\text{L7}} \), were used instead as a more robust measure. This quantity can be used to track the long-term evolution of groundwater storage over many years, because it results directly from the lowest storage level each year, that is, the non-depleted reserve carried over to the next year. Moreover, since these \( y_{\text{L7}} \) values are the lowest of the low flows, they lie in the range where the linear representation of Eq. 2 is most likely to be valid. With this definition of the annual low flows, Eq. 3 can, for convenient reference, be rewritten as

\[ \frac{dS}{dt} = K \frac{dy_{\text{L7}}}{dt} \tag{5} \]

In the practical implementation of Eq. 5, the lowest 7-day mean flow for each year of record was first determined as the lowest value of the 7-day running averages during that year. The temporal trends of these annual drought flows were then calculated directly by simple linear regression.
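The two steps just described can be sketched as follows. This is an illustrative reconstruction, not the code used in the study: the helper names are hypothetical, the daily record is a synthetic series with a built-in rise of 0.001 mm d⁻¹ per year, and a gap-free record within the April 15 to October 31 window of Section 3.1 is assumed. The recovered regression slope is then converted to a storage trend with Eq. 5.

```python
from datetime import date, timedelta

K = 45.0  # drainage time scale [d], working value of Section 2.2


def season_days(year):
    """All dates from April 15 to October 31 of `year` (the ice-free window)."""
    start, end = date(year, 4, 15), date(year, 10, 31)
    return [start + timedelta(days=i) for i in range((end - start).days + 1)]


def annual_lowest_7day(daily, year):
    """Lowest 7-day running mean of daily flow [mm/d] within the window.

    `daily` maps datetime.date -> flow; a gap-free record is assumed.
    """
    flows = [daily[d] for d in season_days(year)]
    return min(sum(flows[i:i + 7]) / 7 for i in range(len(flows) - 6))


def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


# Synthetic record: V-shaped late-summer minimum on a base level rising 0.001 mm/d per year.
years = list(range(1990, 2000))
daily = {}
for yr in years:
    for k, d in enumerate(season_days(yr)):
        daily[d] = 0.2 + 0.001 * (yr - 1990) + 0.1 * abs(k - 100) / 100

y_L7 = [annual_lowest_7day(daily, yr) for yr in years]
dy_dt = ols_slope(years, y_L7)  # trend of y_L7 [mm d^-1 a^-1]
dS_dt = K * dy_dt               # Eq. 5: storage trend [mm a^-1]
print(f"dy_L7/dt = {dy_dt:.5f} mm/d per year, dS/dt = {dS_dt:.3f} mm/a")
```

A real application would read gauged daily discharges, divide by basin area, and handle missing days explicitly rather than assuming a complete record.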

3 Selected large-scale river basins

3.1 Criteria for selection

The basins selected for the present study were required to satisfy a number of criteria that would warrant valid conclusions. First, their streamflow records had to be long enough to be relevant for detecting long-term changes. In the United States only very few streamflow records are more than a century long, but many cover of the order of two-thirds to three-quarters of a century. Thus, to allow comparison among as many basins as possible, basins were selected with records going back at least to 1940. Second, all basins had to be large enough to embody not merely local but more broadly representative features of the water resources regions they are meant to exemplify. Besides, nonlinear effects, which might invalidate Eqs. 1 and 2, are usually more pronounced in smaller basins (e.g. Brutsaert 2005, Fig. 12.3) and tend to weaken as the drainage area increases. Therefore, all selected basins have drainage areas of the order of several thousand square kilometers or more, the smallest being about 2,000 km². Third, to allow the application of Eq. 5, only basins with continuous, uninterrupted flow were selected, that is, without any no-flow episodes during which the contact between the stream and the riparian aquifers would be temporarily suspended. By this criterion nearly all basins are situated in the humid continental climate zone, where streams keep flowing throughout the year. For the same reason, only river discharge data recorded between April 15 and October 31 were used in the analyses, to avoid the possibility of ice conditions. Fourth, many basins in the United States have undergone some human impact or have been subject to anthropogenic influences that may diminish or hide any climate-related signals. To avoid this, all basins were taken from the Hydro-Climatic Data Network, developed by the US Geological Survey (Slack and Landwehr 1992; Slack et al. 1993) for the purpose of studying the variation in surface-water conditions free of confounding anthropogenic influences. With these stringent criteria, only a few large basins were left available for analysis. These were mostly well separated from each other and can therefore be considered independent of, and uncorrelated in their hydrologic behavior with, their neighbors within each water resources region. A consequence of this relative independence is that spatial cross-correlation effects between the individual flow records (e.g. Douglas et al. 2000) were not an issue in the present analyses. The selected basins are listed in Table 1, and their corresponding water resources regions are shown in Fig. 1.
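For illustration only, the four criteria can be expressed as a simple filter over gauge summaries. The `Gauge` record, its fields, and the candidate values below are hypothetical; the thresholds are those stated above (record starting in 1940 or earlier, roughly 2,000 km² or more, no zero flows in the ice-free season, and membership in the Hydro-Climatic Data Network).

```python
from dataclasses import dataclass


@dataclass
class Gauge:
    """Hypothetical summary record for a candidate streamflow gauge."""
    name: str
    area_km2: float
    first_year: int
    in_hcdn: bool            # listed in the USGS Hydro-Climatic Data Network
    min_season_flow: float   # lowest daily flow between Apr 15 and Oct 31 [mm/d]


def is_eligible(g: Gauge) -> bool:
    """Apply the four selection criteria of Section 3.1."""
    return (
        g.first_year <= 1940          # record reaches back to at least 1940
        and g.area_km2 >= 2000.0      # large basin (smallest selected is about 2,000 km^2)
        and g.min_season_flow > 0.0   # no zero-flow episodes in the ice-free season
        and g.in_hcdn                 # free of confounding anthropogenic influence
    )


candidates = [
    Gauge("A", 20000.0, 1910, True, 0.05),
    Gauge("B", 1200.0, 1930, True, 0.08),    # too small
    Gauge("C", 8000.0, 1955, True, 0.10),    # record too short
    Gauge("D", 5000.0, 1935, True, 0.0),     # goes dry in some summers
    Gauge("E", 30000.0, 1920, False, 0.30),  # regulated, not in the network
]
print([g.name for g in candidates if is_eligible(g)])
```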

3.2 Validation of the method

The specific implementation of Eq. 5 was first explored and validated in an earlier study with data recorded in two large river basins in Illinois (Brutsaert 2008), one of the few regions in the world where groundwater levels, besides streamflow, have been observed since the late 1950s. It was found that the groundwater storage changes inferred from the baseflows by means of Eq. 5 are generally consistent with the average groundwater level changes measured in the observation well network over the same area. As an illustration for readers who may not have easy access to the Illinois study, Fig. 2 compares the evolution of the annual lowest 7-day flows \( y_{\text{L7}} = Q_{\text{L7}}/A \) (in mm d⁻¹) of the Rock River basin (drainage area A = 24,721 km²) with the evolution of the average values of the lowest water table heights \( \left\langle \eta \right\rangle \) (in meters, with the ground surface as reference) at four well stations distributed over the same area in Illinois, for the period 1965–2000. The two trend lines, obtained by linear least squares regression, are \( dy_{\text{L7}}/dt = 0.00404 \) mm d⁻¹ a⁻¹ and \( d\left\langle \eta \right\rangle /dt = 10.423{\text{ mm }}{{\text{a}}^{ - 1}} \); the correlation coefficient between the two time series is ρ = 0.808. This good agreement at the basin scale supports the validity of the method used in the present study. As an aside, the two trends depicted in Fig. 2 also allow the large-scale drainable porosity to be determined, as follows

\[ n_{\text{e}} = \frac{dS/dt}{d\left\langle \eta \right\rangle /dt} \tag{6} \]

Fig. 2 Comparison of the evolution of the annual lowest 7-day flows \( y_{\text{L7}} = Q_{\text{L7}}/A \) (in mm d⁻¹) of the Rock River near Joslin, Illinois (circles; basin area A = 24,721 km²), with the evolution of the average values of the lowest water table heights \( \left\langle \eta \right\rangle \) (in m, with the ground surface as reference) at four well stations distributed over the same area in Illinois (triangles). The two trend lines are obtained by linear least squares regression. The trends are \( dy_{\text{L7}}/dt = 0.00404 \) mm d⁻¹ a⁻¹ and \( d\left\langle \eta \right\rangle /dt = 10.423{\text{ mm }}{{\text{a}}^{ - 1}} \); the correlation coefficient between the two time series is ρ = 0.808

which in the present case, with Eq. 2 and K = 45 days, immediately yields \( n_{\text{e}} = 0.0174 \); this value is fairly typical for basin-scale analyses and is of the same order as values obtained elsewhere (e.g. Brutsaert and Lopez 1998; Brutsaert 2008).
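As a quick arithmetic check of this step, the following lines combine the two Rock River trends from Fig. 2 with Eq. 5 (or equivalently Eq. 2 differentiated in time) and Eq. 6, and reproduce the quoted porosity:

```python
K = 45.0          # drainage time scale [d]
dy_dt = 0.00404   # Rock River trend of y_L7 [mm d^-1 a^-1] (Fig. 2)
deta_dt = 10.423  # trend of mean lowest water-table height [mm a^-1] (Fig. 2)

dS_dt = K * dy_dt       # Eq. 5: storage trend [mm a^-1]
n_e = dS_dt / deta_dt   # Eq. 6: large-scale drainable porosity [-]
print(f"dS/dt = {dS_dt:.4f} mm/a, n_e = {n_e:.4f}")
```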

4 Analysis and results

The trends of groundwater storage (dS/dt) were calculated as described in Sections 2.2 and 2.3 for several periods, namely the period of record of each individual station; the past two-thirds of a century, 1940–2007; the second half of the twentieth century, 1951–2000, which is often used as a benchmark in climate studies; and the most recent 20 years, 1988–2007. The results are shown in Table 1 for the 41 basins investigated. As a summary, Table 2 shows the average trends, weighted by drainage area, for each of the 11 water resources regions.
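The drainage-area weighting used for the regional summary can be sketched as below. The three basins are hypothetical and serve only to show the computation: each basin's trend counts in proportion to its share of the total drainage area of the region.

```python
def area_weighted_trend(basins):
    """Regional mean of dS/dt [mm/a], weighted by drainage area.

    `basins` is a list of (area_km2, dS_dt_mm_per_a) pairs.
    """
    total_area = sum(area for area, _ in basins)
    return sum(area * trend for area, trend in basins) / total_area


# Hypothetical region with three basins (areas in km^2, trends in mm/a).
region = [(2000.0, 0.10), (6000.0, -0.02), (12000.0, 0.05)]
print(f"{area_weighted_trend(region):.4f} mm/a")
```

Weighting by area makes the regional value a storage trend for the combined gauged area, so a single large basin counts more than several small ones.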

Tables 1 and 2 show that in nine of the 11 water resources regions the trends in groundwater storage over the past two-thirds of a century are positive; the same holds for the trends over the second half of the twentieth century. The strongest positive trends were obtained for the Ohio and Upper Mississippi regions. Also positive, but somewhat weaker, are the trends observed for the Mid Atlantic, Great Lakes, Tennessee, Lower Mississippi, Souris-Red-Rainy, Missouri, and Arkansas-White-Red regions. The selected basins in the northern New England and South Atlantic-Gulf regions had generally negative trends over these same two periods. Remarkably, however, over the most recent 20 years most of the stations, namely 29 of 41 or 71%, experienced negative trends. The maximal values of these most recent negative trends are of the order of −0.2 mm a⁻¹.

How reliable are these results? Table 1 also shows that 27 of the 41 basins have one or more trends that are significant at the 0.05 level, and that an additional four basins have at least one trend significant at the 0.10 level. This means that for about one quarter of the basins (10 of 41) none of the observed trends is significant even at the 0.10 level. On the other hand, within each water resources region the trends for any given period are by and large either mostly positive or mostly negative; this consistency provides some additional confidence in the general validity of the observations in the previous paragraph. The basins with the most significant trends are mostly those in the Ohio and Upper Mississippi regions, which are also the regions with some of the largest positive trends in the second half of the twentieth century.

As an example of a positive trend, Fig. 3 shows the annual 7-day low flows of the Cedar River at Cedar Rapids, Iowa, in the Upper Mississippi Region; this station is of some interest because it has the longest record, having been in operation for more than a century. The long-term trend from 1903 through 2007 is quite strong and, as indicated in Table 1, significantly different from zero. However, the figure also shows that the intermediate trends over shorter periods of the order of 20 to 25 years can take many different values, positive or negative, depending on the selected period. This illustrates climate variability and underscores that it is hazardous to draw definite conclusions about the severity or persistence of a drought on the basis of only two or three decades of data. As shown in Table 1, the total storage change over the 105-year period of record amounts to about 5.73 mm of water; according to Eq. 6, this is equivalent to an average water table rise of 0.328 m, i.e. around 1 ft in a century, if the typical drainable porosity value \( n_{\text{e}} \approx 0.017 \) derived from Fig. 2 is adopted for this example.

Fig. 3 Evolution of the annual lowest 7-day flows \( y_{\text{L7}} = Q_{\text{L7}}/A \) (in mm d⁻¹) of the Cedar River at Cedar Rapids, Iowa, in the Upper Mississippi Region. The long straight line represents the regression over the period of record 1903–2007 and indicates a significantly (p = 0.006) positive trend \( dy_{\text{L7}}/dt = +0.00121 \) mm d⁻¹ a⁻¹. The short straight line segments are the regressions over the indicated periods. The upstream drainage area at this gaging station is A = 16,854 km²

As an example of a persistently negative trend, Fig. 4 displays the annual lowest groundwater storage values for the Altamaha River at Doctortown, Georgia, in the South Atlantic-Gulf Region. At this station, too, the long-term trend over the period of record 1932–2007, namely −0.0489 mm a⁻¹, is quite pronounced and different from zero at the 0.05 significance level. This amounts to a total loss of 3.72 mm of water over 76 years, which according to Eq. 6 is equivalent to an average water table drop of approximately 0.22 m if, again for the sake of illustration, the typical large-scale drainable porosity value of 0.017 is adopted.

Fig. 4 Evolution of the annual lowest groundwater storage S, in millimeters above the zero-flow level, over the past three-quarters of a century in the Altamaha River basin upstream of Doctortown, Georgia (with K = 45 days), in the South Atlantic-Gulf Region. The straight line represents the regression over the period of record 1932–2007 and indicates a significantly (p = 0.003) negative trend dS/dt = −0.0489 mm a⁻¹. The upstream drainage area at this gaging station is A = 35,209 km²
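The storage and water-table figures quoted for the Cedar River and Altamaha River examples can be checked with Eqs. 5 and 6, assuming a constant trend over the whole record. For the Cedar River the unrounded porosity 0.0174 from Fig. 2 is used, which reproduces the quoted 0.328 m; for the Altamaha the rounded value 0.017 stated in the text is used. Small differences in the last digit reflect rounding of the published slopes.

```python
K = 45.0  # drainage time scale [d]


def total_storage_change(dS_dt, years):
    """Storage change [mm] accumulated over `years` at a constant trend dS/dt [mm/a]."""
    return dS_dt * years


def water_table_change(dS_mm, n_e):
    """Equivalent mean water-table change [m] from Eq. 6: d<eta> = dS / n_e."""
    return dS_mm / n_e / 1000.0


# Cedar River at Cedar Rapids: dy_L7/dt = +0.00121 mm/d per year over 1903-2007 (105 a).
cedar_dS = total_storage_change(K * 0.00121, 105)  # Eq. 5: dS/dt = K dy_L7/dt
cedar_eta = water_table_change(cedar_dS, 0.0174)
# Altamaha River at Doctortown: dS/dt = -0.0489 mm/a over 1932-2007 (76 a).
alt_dS = total_storage_change(-0.0489, 76)
alt_eta = water_table_change(alt_dS, 0.017)
print(f"Cedar: {cedar_dS:.2f} mm, {cedar_eta:+.3f} m")
print(f"Altamaha: {alt_dS:.2f} mm, {alt_eta:+.3f} m")
```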

5 Discussion

The trends observed here for groundwater storage are consistent with those found over the past half century in previous studies of overall water availability and of other measured components of the hydrologic cycle. These studies indicate that the major terms in the hydrologic budget have generally increased over large portions of the United States east of the Rocky Mountains, except in the coastal southeast and perhaps in a small area in the northeast as well. This is certainly the case for precipitation and surface runoff, for which most previous data analyses also appear to indicate that the observed increases were largest over the Upper Mississippi basin and in regions adjacent to the Great Lakes, and that they were most pronounced for fall precipitation and for the annual low flows (e.g. Lins and Slack 1999, Fig. 2; Lawrimore and Peterson 2000, Fig. 1; Groisman et al. 2004, Fig. 6, Table 5; Small et al. 2006). Similar conclusions were obtained in simulation studies. For instance, drought conditions based on modeled runoff and soil moisture were found generally to have decreased in the eastern USA, except broadly in the southeast and in sections of Missouri (Andreadis and Lettenmaier 2006, Figs. 3 and 4); and a model-based analysis of observed precipitation increases from 1948 to 2004 found positive trends in both runoff and evapotranspiration over the Mississippi basin (Qian et al. 2007).

Evaporation, too, is generally agreed to have increased over the past 50 or so years. No long-term direct measurements of terrestrial evaporation are available, but all indirect estimates indicate that it has increased over major areas of the eastern United States. Probably most relevant in the present context are the trend estimates based on pan evaporation. Indeed, it is now generally accepted that under a wide range of natural conditions, pan evaporation tends to exhibit a complementary relationship with actual terrestrial evaporation (e.g. Brutsaert and Parlange 1998; Kahler and Brutsaert 2006; Brutsaert 2006). Accordingly, several studies have shown that the trends of terrestrial evaporation have been positive over most of the eastern United States, except in major portions of the southeast (Lawrimore and Peterson 2000, Fig. 1) and perhaps also, to a less significant extent, in the northeast (Golubev et al. 2001, Table 1; Hobbins et al. 2004, Fig. 2b). Water budget-based estimates of terrestrial evaporation, as the difference between precipitation and runoff, are generally in agreement with these pan-based findings for the Mississippi basin (Milly and Dunne 2001) and for several other large river basins in the conterminous United States, but not for the southeast (Walter et al. 2004), in contrast with the aforementioned pan-based studies.

The strongest and most significant positive long-term trends in groundwater storage shown in Tables 1 and 2 occurred in the Upper Mississippi and Ohio regions. However, these changes may not be solely the result of climate change. This was brought out in Potter's (1991) analysis of peak and daily river flow data in a watershed in the Driftless Area of southwestern Wisconsin, which showed that since 1940 peak flows had been decreasing while baseflow had been increasing. It was determined that these changes were not likely due to climatic changes, reservoir construction, or land use changes, but probably to the adoption of various soil conservation practices, such as gully control and different tillage methods. These observations were largely confirmed by Juckem et al. (2008), who noted that in the same Driftless Area baseflow increases, accompanied by decreased stormflow volumes, were larger than could be expected from concurrent changes in precipitation; they concluded that the positive changes in baseflow were not the result of climatic changes alone but were likely amplified by land management practices.

As can also be seen in Tables 1 and 2, the basins in the Missouri and Arkansas-White-Red regions have generally weaker trends than the other regions with positive groundwater storage trends, such as the Ohio and Upper Mississippi regions; in fact, some basins in these regions listed in the tables even display negative trends. One possible reason for these relatively smaller positive trends, among others, may be that a few percent of the areas of these regions are occupied by the High Plains (or Ogallala) aquifer. This aquifer has a total area of roughly 450,000 km², and between 1950 and 2005 it provided some 311 km³ of irrigation water (e.g. McGuire 2007); this amounts to a groundwater outflow rate of the order of 12.3 mm a⁻¹ over the entire area of the aquifer. Nevertheless, although this drawdown has had severe local repercussions, the present results indicate that its climatic implications have been less pronounced.
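The quoted outflow rate follows from spreading the withdrawn volume over the aquifer area and dividing by the length of the period. The sketch below assumes that 1950–2005 is counted inclusively as 56 years, which reproduces 12.3 mm a⁻¹; dividing by 55 years would give about 12.6 mm a⁻¹ instead.

```python
volume_km3 = 311.0       # irrigation withdrawals, 1950-2005 (McGuire 2007)
area_km2 = 450_000.0     # approximate area of the High Plains aquifer
years = 2005 - 1950 + 1  # 56 years, counting both end years (assumption)

depth_mm = volume_km3 / area_km2 * 1.0e6  # km^3 / km^2 = km, and 1 km = 1e6 mm
rate_mm_per_a = depth_mm / years
print(f"equivalent depth {depth_mm:.0f} mm, rate {rate_mm_per_a:.1f} mm/a")
```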

The present results, and also the analyses of evaporation by Golubev et al. (2001, Table 2) and Hobbins et al. (2004, Fig. 2b), indicate that, besides the southeast, there may also be a small area with a slowing hydrologic cycle in the extreme northeastern part of the United States. While the trends in the latter region may not be significant in the usual sense, they are consistent with the finding by Dery and Wood (2005) of a 10% decrease from 1964 to 2003 in the total annual river discharge to the Arctic and North Atlantic Oceans in Canada, and with the apparent dipole-like pattern in annual precipitation variations from 1948 to 2003 identified by Jutla et al. (2006) over eastern North America between 30°N and 60°N. In other words, these studies suggest that over the past 50 years annual precipitation has been increasing in the eastern United States and decreasing in southeastern Canada, with, as the present results indicate, conceivably a small spill-over of this decrease into Maine. Jutla et al. (2006) further raised the possibility that this anticorrelation between positive and negative trends along the eastern seaboard might be influenced by sea surface temperature anomalies in the adjacent areas of the Atlantic Ocean.

6 Conclusions

A method proposed by Brutsaert (2008) was implemented herein to infer groundwater storage changes in the eastern half of the conterminous United States from river flow measurements in a number of large river basins. The 41 selected basins, which were all free of human disturbances, were chosen large enough, that is, at least some 2,000 km2, to be representative of their respective water resources regions and to justify adopting a typical drainage time scale of 45 days in the calculations. The method can be considered reliable because in the earlier study (Brutsaert 2008) it was shown to produce excellent results when compared with local groundwater level measurements in two basins in Illinois.

It was found that groundwater storage has generally been increasing during the past two-thirds of a century, and perhaps even longer, in the United States east of the Rocky Mountains. The strongest and most significant trends, of the order of 0.1 mm a−2, were observed in the Ohio and Upper Mississippi regions. Weaker and less significant trends, but still generally positive and on average mostly of the order of 0.02 to 0.03 mm a−2, were observed in the Mid Atlantic, Great Lakes, Tennessee, Lower Mississippi, Souris-Red-Rainy, Missouri, and Arkansas-White-Red regions. Negative trends were generally obtained in the South Atlantic-Gulf region and, to a lesser extent, in the New England region. Noteworthy is that most recently, from 1988 to 2007, the observed trends were negative in 29 of the 41 basins, or 71%; more than half of the exceptions, that is, the basins that persisted with positive trends during the past 20 years, tended to lie in the Ohio and Upper Mississippi regions, but the most significant positive trends during this recent period were observed in the Souris-Red-Rainy region in northern Minnesota.

The present results on groundwater storage are fully consistent with previous studies dealing with changes of other measures of hydrologic cycle activity, namely with the trends in precipitation, total surface runoff, and terrestrial evaporation. The consensus of these studies is that the water cycle has generally been accelerating during the past half century over most of the United States east of the Rockies, the two major exceptions being the South Atlantic-Gulf region and possibly also a smaller area in New England.

References

Andreadis KM, Lettenmaier DP (2006) Trends in 20th century drought over the continental United States. Geophys Res Lett 33:L10403. doi:10.1029/2006GL025711

Boussinesq J (1877) Essai sur la théorie des eaux courantes. Mém Acad Sci Inst France 23, footnote 252–260

Brutsaert W (2005) Hydrology: an introduction. Cambridge Univ Press, Cambridge, 605 pp

Brutsaert W (2006) Indications of increasing land surface evaporation during the second half of the 20th century. Geophys Res Lett 33:L20403. doi:10.1029/2006GL027532

Brutsaert W (2008) Long-term groundwater storage trends estimated from streamflow records: climatic perspective. Water Resour Res 44:W02409. doi:10.1029/2007WR006518

Brutsaert W, Lopez JP (1998) Basin-scale geohydrologic drought flow features of riparian aquifers in the southern Great Plains. Water Resour Res 34:233–240

Brutsaert W, Parlange MB (1998) Hydrologic cycle explains the evaporation paradox. Nature 396:30

Brutsaert W, Sugita M (2008) Is Mongolia’s groundwater increasing or decreasing? The case of the Kherlen River Basin. Hydrol Sci J 53:1221–1229

Carlston CW (1963) Drainage density and streamflow. US Geol Survey Prof Paper 422-C:1–8

Carlston CW (1966) The effect of climate on drainage density and streamflow. Bull Internat Assoc Scientif Hydrol 11:62–69

Chapman MJ, Peck MF (1997) Ground-water resources of the upper Chattahoochee River basin in Georgia. US Geol Survey Open-File Rept 96–363:43

Dery SJ, Wood EF (2005) Decreasing river discharge in Northern Canada. Geophys Res Lett 32:L10401. doi:10.1029/2005GL022845

Dewandel B, Lachassagne P, Bakalowicz M, Weng PH, Al-Malki A (2003) Evaluation of aquifer thickness by analysing recession hydrographs. Application to the Oman ophiolite hard-rock aquifer. J Hydrol 274:248–269

Douglas EM, Vogel RM, Kroll CN (2000) Trends in floods and low flows in the United States: impact of spatial correlation. J Hydrol 240:90–105

Gardiner V, Gregory KJ, Walling DE (1977) Further notes on the drainage density—basin area relationship. Area 9:117–121

Golubev VS, Lawrimore JH, Groisman PY, Speranskaya NA, Zhuravin SA, Menne MJ, Peterson TC, Malone RW (2001) Evaporation changes over the contiguous United States and the former USSR: a reassessment. Geophys Res Lett 28:2665–2668

Gregory KJ, Gardiner V (1975) Drainage density and climate. Z Geomorph 19:287–298

Groisman PYa, Knight RW, Karl TR, Easterling DR, Sun B, Lawrimore JH (2004) Contemporary changes of the hydrological cycle over the contiguous United States: trends derived from in situ observations. J Hydromet 5:64–85

Hansen J, Sato M, Ruedy R, Lo K, Lea DW, Medina-Elizade M (2006) Global temperature change. Proc Nat Acad Sci 103:14288–14293

Hobbins MT, Ramírez JA, Brown TC (2004) Trends in pan evaporation and actual evapotranspiration across the conterminous U.S.: paradoxical or complementary? Geophys Res Lett 31:L13503. doi:10.1029/2004GL019846

IPCC (2007) Climate Change 2007: the physical science basis. Contribution of Working Group I to the fourth assessment report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, UK (also: <http://ipcc-wg1.ucar.edu/wg1/wg1-report.html>)

Jacob CE (1943) Correlation of ground-water levels and precipitation on Long Island, N. Y.: Pt. 1. Theory. Trans Amer Geophys Un 24:564–573

Juckem PF, Hunt RJ, Anderson MP, Robertson DM (2008) Effects of climate and land management change on streamflow in the driftless area of Wisconsin. J Hydrol 355:123–130

Jutla AS, Small D, Islam S (2006) A precipitation dipole in eastern North America. Geophys Res Lett 33:L21703. doi:10.1029/2006GL027500

Kahler DM, Brutsaert W (2006) Complementary relationship between daily evaporation in the environment and pan evaporation. Water Resour Res 42:W05413. doi:10.1029/2005WR004541

Lawrimore JH, Peterson TC (2000) Pan evaporation in dry and humid regions of the United States. J Hydromet 1:543–546

Lins H, Slack J (1999) Streamflow trends in the United States. Geophys Res Lett 26:227–230

Marani M, Belluco E, D’Alpaos A, Defina A, Lanzoni S, Rinaldo A (2003) On the drainage density of tidal networks. Water Resour Res 39:1040. doi:10.1029/2001WR001051

Maritan A, Rinaldo A, Rigon R, Giacometti A, Rodríguez-Iturbe I (1996) Scaling laws for river networks. Phys Rev E 53:1510–1515

McGuire VL (2007) Water-level changes in the high plains aquifer, predevelopment to 2005 and 2003 to 2005. US Geol Survey Scient Invest Rept 2006–5324, 7 pp (http://pubs.usgs.gov/sir/2006/5324/pdf/SIR20065324.pdf)

Milly PCD, Dunne KA (2001) Trends in evaporation and surface cooling in the Mississippi River basin. Geophys Res Lett 28:1219–1222

Morisawa M (1962) Quantitative geomorphology of some watersheds in the Appalachian Plateau. Geolog Soc Amer Bull 73:1025–1046

Potter KW (1991) Hydrological impacts of changing land management practices in a moderate-sized agricultural catchment. Water Resour Res 27:845–855

Qian T, Dai A, Trenberth K (2007) Hydroclimatic trends in the Mississippi River basin from 1948 to 2004. J Climate 20:4599–4614

Santer BD, Thorne PW, Haimberger L et al (2008) Consistency of modelled and observed temperature trends in the tropical troposphere. Int J Climatol 28:1703–1722. doi:10.1002/joc.1756

Seaber PR, Kapinos FP, Knapp GL (1987) Hydrologic unit maps. US Geol Survey Water-Supply Paper 2294, 63 pp (http://pubs.usgs.gov/wsp/wsp2294/)

Slack JR, Landwehr JM (1992) Hydro-climatic data network: A U.S. Geological Survey streamflow data set for the United States for the study of climate variations, 1874–1988. US Geol Survey Open-File Rept 92–129, 193 pp (http://pubs.usgs.gov/of/1992/ofr92-129/)

Slack JR, Lumb AM, Landwehr JM (1993) Hydro-climatic data network (HCDN): streamflow data set, 1874–1988. US Geol Survey Water-Resour Invest Rept 93–4076, CD-ROM (http://pubs.usgs.gov/wri/wri934076/)

Small D, Islam S, Vogel RM (2006) Trends in precipitation and streamflow in the eastern U.S.: paradox or perception? Geophys Res Lett 33:L03403. doi:10.1029/2005GL024995

Smart JS (1972) Channel networks. Adv Hydrosci 8:305–346

Sugita M, Brutsaert W (2009) Recent low-flow and groundwater storage changes in upland watersheds of the Kanto region, Japan. J Hydrol Eng 14:280–285

Swenson SC, Yeh PJ-F, Wahr J, Famiglietti JS (2006) A comparison of terrestrial water storage variations from GRACE with in situ measurements from Illinois. Geophys Res Lett 33:L16401. doi:10.1029/2006GL026962

Syed TH, Famiglietti JS, Rodell M, Chen J, Wilson CR (2008) Analysis of terrestrial water storage changes from GRACE and GLDAS. Water Resour Res 44:W02433. doi:10.1029/2006WR005779

Tague C, Grant GE (2009) Groundwater dynamics mediate low-flow response to global warming in snow-dominated alpine regions. Water Resour Res 45. doi:10.1029/2008WR007179

Van de Griend AA, De Vries JJ, Seyhan E (2002) Groundwater discharge from areas with a variable specific drainage resistance. J Hydrol 259:203–220

Walter MT, Wilks DS, Parlange J-Y, Schneider RL (2004) Increasing evapotranspiration from the conterminous United States. J Hydromet 5:405–408

Cite this article

Brutsaert, W. Annual drought flow and groundwater storage trends in the eastern half of the United States during the past two-third century. Theor Appl Climatol 100, 93–103 (2010). https://doi.org/10.1007/s00704-009-0180-3