Abstract

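This paper is concerned with the one-dimensional periodic-parabolic eigenvalue problem

$$\begin{aligned} \left\{ \begin{array}{ll} \varphi _t-Da(x,t)\varphi _{xx}-\alpha h(x,t)\varphi _x+V(x,t)\varphi =\lambda \varphi , &{} 0<x<L,\ t\in {\mathbb {R}},\\ \varphi _x(0,t)=\varphi _x(L,t)=0, &{} t\in {\mathbb {R}},\\ \varphi (x,t)=\varphi (x,t+T), &{} 0\le x\le L,\ t\in {\mathbb {R}}, \end{array}\right. \end{aligned}$$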
where \(D,\,\alpha ,\,L\) are constants with \(D,\,L>0\), \(a,\,h\) and \(V\) are continuous functions which are periodic in \(t\) with a common period \(T\) and \(a,\,h>0\) on \([0,L]\times {\mathbb {R}}\). The dependence of the principal eigenvalue on the diffusion and advection coefficients \(D\) and \(\alpha \) is investigated. In particular, the asymptotic behaviors of the principal eigenvalue as \(D\) and \(\alpha \) go to zero or infinity are derived. These qualitative results are then applied to a nonlocal reaction–diffusion–advection equation, which describes the spatiotemporal evolution of a single phytoplankton species with periodic incident light intensity.


1 Introduction

The principal eigenvalue is a basic concept in the field of reaction–diffusion partial differential equations. In recent decades, a large body of research has been devoted to the qualitative properties of the principal eigenvalue and its eigenfunction for second-order linear elliptic operators; see, e.g., [5–7, 10–13, 20, 21, 30, 56] and the references therein. For nonautonomous periodic-parabolic operators, however, to the best of our knowledge much less is known about the associated principal eigenvalue, especially when an advection term is present. The principal eigenvalue of linear periodic-parabolic operators is important not only because it includes the autonomous elliptic case, but also because it is of interest in its own right when a time-periodic environment is involved.

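In this paper, we consider the linear periodic-parabolic eigenvalue problem in one space dimension:

$$\begin{aligned} \left\{ \begin{array}{ll} \varphi _t-Da(x,t)\varphi _{xx}-\alpha h(x,t)\varphi _x+V(x,t)\varphi =\lambda \varphi , &{} 0<x<L,\ t\in {\mathbb {R}},\\ \varphi _x(0,t)=\varphi _x(L,t)=0, &{} t\in {\mathbb {R}},\\ \varphi (x,t)=\varphi (x,t+T), &{} 0\le x\le L,\ t\in {\mathbb {R}}, \end{array}\right. \end{aligned}\tag{1.1}$$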
where \(D,\,\alpha ,\,L\) are constants with \(D,\,L>0\), and the functions \(a,\,h\) and \(V\) are continuous and periodic in \(t\) with the same period \(T\) (i.e., \(a(x,t)=a(x,t+T)\) for all \(x\in [0,L],\,t\in {\mathbb {R}}\), etc.). The constants \(D\) and \(\alpha \) stand for the diffusion and advection (or drift) coefficients, respectively. Throughout the paper, unless otherwise stated, it is assumed that \(a(x,t),\,h(x,t)>0\) for all \((x,t)\in [0,L]\times [0,T]\).

It is well known (see, e.g., [33]) that, given \(D,\,L>0\), \(\alpha \in {\mathbb {R}}\) and continuous \(T\)-periodic functions \(a,\,h,\,V\), problem (1.1) admits a principal eigenvalue \(\lambda =\lambda _1\in {\mathbb {R}}\), which is unique in the sense that it is the only eigenvalue corresponding to a positive eigenfunction \(\varphi \) (and \(\varphi \) is unique up to multiplication by a positive constant). Such a function \(\varphi \) is usually called a principal eigenfunction.

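From (1.1), it is easy to see that

$$\begin{aligned} {1\over T}\int _0^T \min _{x\in [0,L]}V(x,t)\,dt\le \lambda _1\le {1\over T}\int _0^T \max _{x\in [0,L]}V(x,t)\,dt. \end{aligned}\tag{1.2}$$

In particular, when \(V(x,t)\) depends on the time variable alone, that is, \(V(x,t)=V(t)\) for all \(x\in [0,L]\), we have

$$\begin{aligned} \lambda _1={1\over T}\int _0^T V(t)\,dt. \end{aligned}$$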
Nevertheless, the estimates (1.2) are insufficient in most applications, since the sign of \(\lambda _1\) plays the decisive role there; see [9, 11] for detailed discussions. It is therefore important to obtain more precise estimates for \(\lambda _1\) when \(V\) depends on both the spatial and temporal variables.
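The identity for time-dependent-only \(V\) can be verified directly. The short computation below is a sketch that assumes (1.1) has the non-divergence form \(\varphi _t-Da\varphi _{xx}-\alpha h\varphi _x+V\varphi =\lambda \varphi \) with zero Neumann boundary conditions and \(T\)-periodicity in \(t\); the notation \(\overline{V}\) is introduced only for this check. For \(V=V(t)\), set

$$\begin{aligned} \overline{V}:={1\over T}\int _0^T V(s)\,ds,\qquad \varphi (t):=\exp \Big (\int _0^t \big (\overline{V}-V(s)\big )\,ds\Big ). \end{aligned}$$

Then \(\varphi >0\), \(\varphi (0)=\varphi (T)=1\) because the exponent vanishes at \(t=T\), and \(\varphi _x=\varphi _{xx}=0\), so the Neumann and periodicity conditions hold and

$$\begin{aligned} \varphi _t-Da\varphi _{xx}-\alpha h\varphi _x+V\varphi =\big (\overline{V}-V(t)\big )\varphi +V(t)\varphi =\overline{V}\varphi . \end{aligned}$$

Thus \(\varphi \) is a positive eigenfunction with eigenvalue \(\overline{V}\), and the uniqueness of the principal eigenvalue gives \(\lambda _1=\overline{V}\), independently of \(D\), \(\alpha \), \(a\) and \(h\).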

The main goal of the present paper is to investigate the dependence of the principal eigenvalue \(\lambda _1\) on the diffusion and advection coefficients \(D\) and \(\alpha \). We are especially interested in the asymptotic behavior of \(\lambda _1\) as \(D\) goes to zero or infinity, or as \(|\alpha |\) goes to infinity. Our work is motivated by the qualitative study of a one-dimensional nonlocal reaction–diffusion–advection phytoplankton model with periodic incident light intensity, in which the sign of the principal eigenvalue of the problem linearized at zero determines the dynamical behavior of the model (as is the case for a large class of reaction–diffusion equations). See Sect. 5 below for concrete applications of our results to this model.

Our present study is also motivated by the recent works [1, 5, 10, 11]. Chen and Lou [10, 11] investigated the following elliptic eigenvalue problem in divergence form in the multi-dimensional case:

subject to the Neumann boundary condition on \(\partial \Omega \), where \(\Omega \) is a bounded smooth domain in \({\mathbb {R}}^N\,(N\ge 1)\), and \(m,\,V\) are smooth functions on \(\overline{\Omega }\). They obtained important results on the asymptotic behavior of the principal eigenvalue \(\lambda =\lambda _1\) of (1.3) and its eigenfunction as the diffusion and advection coefficients \(D\) and \(\alpha \) approach zero or infinity. Their results have found significant new applications to the Lotka–Volterra reaction–diffusion–advection model as well as to a nonlocal reaction–diffusion–advection phytoplankton problem. In [1], Aleja and López-Gómez derived further properties of this principal eigenvalue when \(V(x)=m(x)\); by applying these analytic results to a class of degenerate diffusive logistic equations, they observed several paradoxical effects of large advection (see [1] for the details). On the other hand, Berestycki et al. [5] dealt with elliptic eigenvalue problems like (1.3) with large drift and the Neumann boundary condition. They focused on the situation where the drift velocity (advection term) \(\mathbf {v}\) is divergence-free and \(\mathbf {v}\cdot \nu =0\) on \(\partial \Omega \), where \(\nu \) denotes the unit exterior normal to \(\partial \Omega \). Among other things, they established the equivalence between the boundedness of the principal eigenvalue and the existence of first integrals of the velocity field \(\mathbf {v}\).

To analyze the qualitative behavior of the principal eigenvalue of the elliptic operators mentioned above, the approaches developed in [5, 10, 11] rely heavily on the variational characterization of the principal eigenvalue. For the nonautonomous eigenvalue problem (1.1), however, these techniques no longer apply, because the periodic-parabolic operator lacks a variational structure. Although our results on the asymptotic behavior of the principal eigenvalue for the periodic-parabolic problem can be regarded as generalizations of those for the elliptic problem considered in [10, 11], the mathematical techniques involved in the two kinds of problems are essentially different. Indeed, to obtain the asymptotic behavior of the principal eigenvalue of (1.1) as \(D\) and \(\alpha \) go to zero or infinity, we need new ideas and techniques to overcome the nontrivial difficulties caused by the nonautonomous character of (1.1) and its advection term. Although the present paper focuses only on a one-dimensional spatial domain, it may serve as a starting point for the study of the principal eigenvalue in higher-dimensional domains, a challenging problem that we leave for future investigation.

As pointed out in [10, 11], much more of the literature concerns the principal eigenvalue of elliptic operators with Dirichlet or Robin boundary conditions; see [5–8, 16, 17, 20, 21, 30, 38, 46, 47, 56] among many others. In particular, in the extensive survey [32], Grebenkov and Nguyen summarized recent progress in this direction. For the periodic-parabolic eigenvalue problem, one may refer to the classical book [33] by Hess. In a recent work [39], Hutson et al. derived the limiting behavior of the principal eigenvalue \(\lambda _1\) in any space dimension as the diffusion coefficient goes to zero or infinity for the Neumann problem (1.1) without the drift term, and their results found interesting applications to a Lotka–Volterra competition system. Moreover, in the absence of advection, Du and Peng [27, 28] considered the case \(V(x,t)=\mu v(x,t)\), where \(v(x,t)\ge 0,\,\not \equiv 0\) may vanish somewhere, and studied the asymptotic behavior of \(\lambda _1\) as \(\mu \rightarrow \infty \), which turns out to be essentially different from that of the autonomous elliptic counterpart. For other recent work on periodic-parabolic eigenvalue problems and related applications, one may further refer to [14, 18, 19, 37, 40, 48, 50]; the principal eigenvalue of cooperative systems is studied in [2–4, 12, 13, 15] and the references therein.

We are now in a position to state the main results obtained in this paper. In order to emphasize the dependence of \(\lambda _1\) on the parameters \(\alpha ,\,D\) and the boundary condition, in what follows, we write \(\lambda _1=\lambda _1^\mathcal {N}(\alpha ,D)\).

Our first result concerns the case of large \(|\alpha |\).

Theorem 1.1

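For any given \(D,\,L>0\), there holds

$$\begin{aligned} \lim _{\alpha \rightarrow \infty }\lambda _1^\mathcal {N}(\alpha ,D)={1\over T}\int _0^T V(L,t)\,dt\quad \text {and}\quad \lim _{\alpha \rightarrow -\infty }\lambda _1^\mathcal {N}(\alpha ,D)={1\over T}\int _0^T V(0,t)\,dt. \end{aligned}$$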
We then consider the case of small \(D\), and obtain

Theorem 1.2

For any given \(L>0\) and \(\alpha \in {\mathbb {R}}\), the following assertions hold.

(i) If \(\alpha >0\), then

$$\begin{aligned} \lim _{D\rightarrow 0}\lambda _1^\mathcal {N}(\alpha ,D)={1\over T}\int _0^T V(L,t) dt. \end{aligned}$$

(ii) If \(a(x,t)\) is a positive constant and \(\alpha =0\), then

$$\begin{aligned} \lim _{D\rightarrow 0}\lambda _1^\mathcal {N}(\alpha ,D)={1\over T}\min _{x\in [0,L]}\int _0^T V(x,t)dt. \end{aligned}$$

(iii) If \(\alpha <0\), then

$$\begin{aligned} \lim _{D\rightarrow 0}\lambda _1^\mathcal {N}(\alpha ,D)={1\over T}\int _0^T V(0,t)dt. \end{aligned}$$

Clearly, Theorem 1.2 shows that, in general, \(\lim _{D\rightarrow 0}\lambda _1^\mathcal {N}(\alpha ,D)\) is discontinuous at \(\alpha =0\). We note that assertion (ii) of Theorem 1.2 was derived in [39] for the Laplacian in a space domain of any dimension, whereas the technique needed to obtain assertions (i) and (iii) of Theorem 1.2 is significantly different.
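The limits in Theorems 1.1 and 1.2(i) can be observed numerically. The sketch below is not taken from the paper: it assumes that (1.1) has the non-divergence form \(\varphi _t-Da\varphi _{xx}-\alpha h\varphi _x+V\varphi =\lambda \varphi \) with zero Neumann and \(T\)-periodic conditions, and the discretization (ghost-point Neumann conditions, upwind differences for the drift, implicit Euler in time) as well as the helper name `principal_eigenvalue` are our own choices. It uses the fact that \(u=e^{-\lambda _1 t}\varphi \) solves \(u_t=Dau_{xx}+\alpha hu_x-Vu\), so the period map \(u(\cdot ,0)\mapsto u(\cdot ,T)\) has spectral radius \(e^{-\lambda _1T}\).

```python
import numpy as np


def principal_eigenvalue(D, alpha, V, L=1.0, T=1.0, a=None, h=None, nx=101, nt=400):
    """Approximate lambda_1 of the (assumed) problem
        phi_t - D a phi_xx - alpha h phi_x + V phi = lambda phi  on (0, L) x R,
        phi_x(0, t) = phi_x(L, t) = 0,  phi(x, t + T) = phi(x, t).
    V(x, t), a(x, t), h(x, t) take the grid array x and a scalar t and return
    arrays of the same shape; a and h default to 1.
    """
    x = np.linspace(0.0, L, nx)
    dx, dt = x[1] - x[0], T / nt
    rows = np.arange(nx)
    left = np.where(rows > 0, rows - 1, 1)             # ghost node x_{-1} -> x_1
    right = np.where(rows < nx - 1, rows + 1, nx - 2)  # ghost node x_{nx} -> x_{nx-2}
    inner = rows[1:-1]
    P = np.eye(nx)  # discrete period map, built one time step at a time
    for n in range(1, nt + 1):
        t = n * dt
        diff = D * (np.ones(nx) if a is None else a(x, t)) / dx**2
        drift = alpha * (np.ones(nx) if h is None else h(x, t))
        K = np.zeros((nx, nx))  # discretization of D a u_xx + alpha h u_x
        K[rows, left] += diff
        K[rows, right] += diff
        K[rows, rows] -= 2.0 * diff
        # Upwind difference for the drift, omitted at the endpoints where u_x = 0;
        # it keeps the off-diagonal entries of K nonnegative and row sums zero.
        up = np.where(drift[inner] > 0, inner + 1, inner - 1)
        K[inner, up] += np.abs(drift[inner]) / dx
        K[inner, inner] -= np.abs(drift[inner]) / dx
        # Implicit Euler step for u_t = K u - V u.
        A = np.eye(nx) - dt * K + dt * np.diag(V(x, t))
        P = np.linalg.solve(A, P)
    # u = exp(-lambda_1 t) phi, so the period map has spectral radius exp(-lambda_1 T).
    rho = np.max(np.abs(np.linalg.eigvals(P)))
    return -np.log(rho) / T


if __name__ == "__main__":
    V = lambda x, t: x + 0.5 * np.sin(2.0 * np.pi * t)
    for D, alpha in [(1.0, 50.0), (1.0, -50.0), (0.02, 1.0)]:
        print(f"D={D}, alpha={alpha}: lambda_1 ~ {principal_eigenvalue(D, alpha, V):.4f}")
```

For \(V(x,t)=x+{1\over 2}\sin (2\pi t)\) on \([0,1]\) with \(T=1\), one should see values near \({1\over T}\int _0^TV(1,t)dt=1\) for large \(\alpha >0\) or for small \(D\) with \(\alpha >0\), and near \({1\over T}\int _0^TV(0,t)dt=0\) for large \(-\alpha \). The upwind scheme adds numerical diffusion of order \(|\alpha |h\,\Delta x/2\), so the grid should be refined as \(|\alpha |/D\) grows.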

We further remark that, although Theorems 1.1 and 1.2 assume \(h(x,t)>0\) for all \((x,t)\in [0,L]\times [0,T]\), the case \(h<0\) reduces to the case \(h>0\) by replacing \(\alpha \) and \(h\) with \(-\alpha \) and \(-h\), respectively.

We next study the asymptotic behavior of \(\lambda _1^\mathcal {N}(\alpha ,D)\) when either \(D\) alone or both \(D\) and \(\alpha \) are large. Due to mathematical difficulties, we can only handle the case where \(a(x,t)=a(t)\) depends on the time variable alone, but positivity of \(h(x,t)\) is no longer required. Our first result reads as follows.

Theorem 1.3

Assume that \(a(x,t)=a(t),\,\forall (x,t)\in [0,L]\times [0,T]\). Then for any given \(L>0\),

We can improve the above result when \(a\) is a positive constant and \(h(x,t)\) depends on the spatial variable alone. Indeed, we have

Theorem 1.4

Assume that \(a\) is a positive constant and \(h(x,t)=h(x),\, \forall (x,t)\in [0,L]\times [0,T]\). Let \(D\rightarrow \infty ,\,\alpha ^2/D\rightarrow k\in [0,\infty )\). Then for any given \(L>0\), there holds

In what follows, we discuss the monotonicity of the principal eigenvalue with respect to \(\alpha ,\,D\) and \(L\). To stress the dependence of \(\lambda _1^\mathcal {N}(\alpha ,D)\) on \(L\) explicitly, in the rest of this section we write \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) in place of \(\lambda _1^\mathcal {N}(\alpha ,D)\).

Before presenting our monotonicity result, we point out that, in general, \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is not monotone in any of \(D\), \(\alpha \) or \(L\). Indeed, Hutson et al. [39] showed that, unlike the autonomous case, \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is not monotone in \(D>0\) even when \(h\equiv 0\). In [34], Hsu and Lou proved that \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is not monotone in \(\alpha >0\) when \(a(x,t)=h(x,t)\equiv 1\). It is also clear that \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) cannot be strictly monotone in \(\alpha ,\,D\) or \(L>0\) in general, since \(\lambda _1^\mathcal {N}(\alpha ,D,L)={1\over T}\int _0^T V(t) dt\) is a constant if \(V(x,t)\equiv V(t)\). Therefore, monotonicity of \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) with respect to \(D\), \(\alpha \) or \(L\) can hold only under additional conditions on \(a,\,h\) and \(V\). Under such conditions, we can state the following result.

Theorem 1.5

Assume that \(a,\,h\) are positive constants, \(V_x\in C([0,L]\times [0,T])\) and \(V_x(x,t)\ge 0\) on \([0,L]\times [0,T]\). Then the following assertions hold.

(i) For any given \(D>0\) and \(L>0\), \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is strictly monotone increasing in \(\alpha \).

(ii) For any given \(D>0\) and \(\alpha \in {\mathbb {R}}\), \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is strictly monotone increasing in \(L\).

(iii) For any given \(L>0\) and \(\alpha \le 0\), \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is strictly monotone increasing in \(D\).

We remark that when \(h(x,t)\equiv 0\) and \(a(x,t)=a(t)\), one can apply the same analysis as in Sect. 4 to show that the assertions (ii) and (iii) of Theorem 1.5 remain true. We also mention that though the proof of Theorem 1.5 mainly follows [34], considerable modifications are needed.

The rest of the paper is organized as follows. In Sect. 2, we provide some preliminary results, which are of independent interest. In Sect. 3, we analyze the asymptotic behavior of \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) and prove Theorems 1.1–1.4. Section 4 is devoted to the monotonicity of \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) and the proof of Theorem 1.5. Finally, in Sect. 5, as an application of the theoretical results, we study a nonlocal reaction–diffusion–advection equation with periodic incident light intensity, which models the evolution of a single phytoplankton species.

2 Preliminaries

In this section, we introduce some definitions and preliminary results, which will be used frequently in the subsequent sections and may be of independent interest.

Throughout this paper, we use \(\Omega \subset {\mathbb {R}}^N\,(N\ge 1)\) to denote a bounded domain with the smooth boundary \(\partial \Omega \), and let \(\mathcal {A}=\mathcal {A}(x,t)\) given by

be uniformly elliptic in the usual sense for each \(t\in [0,T]\). We assume that \(a_{ij}=a_{ji},\,(1\le i,\,j\le N)\), and \(a_{ij}, a_i, a_0\in C(\overline{\Omega }\times [0,T])\) and are \(T\)-periodic in \(t\).

To present the general result below for three types of boundary conditions in a unified manner, we assume that the boundary \(\partial \Omega \) consists of \(\Gamma _1\) and \(\Gamma _2\) which are two disjoint open and closed subsets of \(\partial \Omega \). Define the boundary operator

where \(\nu \) is the unit exterior normal to \(\partial \Omega \), and the nonnegative function \(b_0\in C^{1+\theta _0}(\overline{\Omega })\) for some \(0<\theta _0<1\). Note that \(\mathcal {B}=\mathcal {B}(x)\) is independent of \(t\). Either \(\Gamma _1\) or \(\Gamma _2\) may be empty.

According to [33], the following eigenvalue problem

admits a principal eigenvalue \(\lambda =\lambda _1(\mathcal {L})\), and every other eigenvalue \(\lambda \) satisfies \(\mathrm{{Re}}(\lambda )>\lambda _1(\mathcal {L})\).

To emphasize the dependence on the zeroth-order coefficient \(a_0\) and on the boundary condition, we sometimes write \(\lambda _1^\mathcal {D}(a_0)\) and \(\lambda _1^\mathcal {N}(a_0)\) for the principal eigenvalue of (2.1) over \(\Omega \times (0,T)\) under the zero Dirichlet boundary condition (i.e., \(\Gamma _2\) is empty) and under the zero Neumann boundary condition (i.e., \(\Gamma _1\) is empty and \(b_0\equiv 0\)), respectively. It is well known that \(\lambda _1^\mathcal {D}(a_0)>\lambda _1^\mathcal {N}(a_0)\).

For generality, and for later use, we work in the following Sobolev space:

By the standard Sobolev embedding theorem, every \(u\in X\) belongs to \(C^{1+\theta ,\theta /2}(\overline{\Omega }\times [0,T])\) for some \(\theta \in (0,1)\).

Definition 2.1

A function \(\overline{w}\in X\) is called a supersolution of \(\mathcal {L}\) if \(\overline{w}\) satisfies

The function \(\overline{w}\) is called a strict supersolution if it is a supersolution but not a solution. A subsolution \(\underline{w}\) is defined by reversing the inequality signs in (2.2).

Definition 2.2

We say that \(\mathcal {L}\) admits the strong maximum principle if \(w\in X\) satisfying (2.2) implies \(w>0\) in \(\Omega \times [0,T]\) unless \(w\equiv 0\).

With the above preparations, we are ready to prove the equivalence between the positivity of the principal eigenvalue, the strong maximum principle, and the existence of a positive strict supersolution. The analogous result for elliptic operators is well known (see, e.g., [3, 22, 45, 55]). On the other hand, Anton and López-Gómez [4] already established such equivalences for a class of linear cooperative periodic-parabolic systems subject to zero Dirichlet boundary conditions. It is worth pointing out that the supersolutions (and subsolutions) in [4] were assumed to be \(T\)-periodic, whereas here only the inequality \(\overline{w}(x,0)\ge \overline{w}(x,T)\) is required.

Proposition 2.1

The following statements are equivalent.

(i) \(\mathcal {L}\) admits the strong maximum principle property;

(ii) \(\lambda _1(\mathcal {L})>0\);

(iii) \(\mathcal {L}\) has a strict supersolution which is positive in \(\Omega \times [0,T]\).

Proof

The idea of the proof below can be traced back to Walter [55] for elliptic operators under zero Dirichlet boundary conditions, and was later extended by López-Gómez [45] to general boundary conditions. For completeness and the reader’s convenience, we provide a detailed proof that includes the necessary modifications.

\(\hbox {(i)}\Rightarrow \hbox {(ii)}\). Suppose that \(\lambda _1(\mathcal {L})\le 0\). Then, for the corresponding principal eigenfunction \(\phi >0\) in \(\Omega \times [0,T]\), clearly, \(-\phi <0\) and solves (2.1) for \(\lambda =\lambda _1(\mathcal {L})\). So we notice that

Thus, applying the strong maximum principle in Definition 2.2 to \(-\phi \), we find \(-\phi >0\) in \(\Omega \times [0,T]\), contradicting the positivity of \(\phi \).

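\(\hbox {(ii)}\Rightarrow \hbox {(iii)}\). The corresponding principal eigenfunction \(\phi >0\) solves (2.1) with \(\lambda =\lambda _1(\mathcal {L})>0\), so the right-hand side \(\lambda _1(\mathcal {L})\phi \) is positive; hence \(\phi \) is a strict supersolution of \(\mathcal {L}\).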

\(\hbox {(iii)}\Rightarrow \hbox {(i)}\). Assume that \(\overline{w}\in X\) is a strict supersolution which is positive in \(\Omega \times [0,T]\). To prove the desired conclusion, we first establish two claims.

We first claim that \(\overline{w}>0\) on \(\Gamma _2\times [0,T]\). Otherwise, we can find \((x_0,t_0)\in \Gamma _2\times [0,T]\) such that \(\overline{w}(x_0,t_0)=0\). Since \(\overline{w}(x,0)\ge \overline{w}(x,T)\) in \(\Omega \), we may assume that \(t_0\in (0,T]\). Then the Hopf boundary lemma for parabolic equations (see, e.g., [52]) gives \(\partial _\nu \overline{w}(x_0,t_0)<0\), which contradicts the boundary condition \(\mathcal {B}\overline{w}(x_0,t_0)\ge 0\) on \(\Gamma _2\times (0,T]\). Hence, our claim is verified.

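Assume now that \(\Gamma _1\) is nonempty. From now on, whenever \(\Gamma _1\) is nonempty, we denote by \(d(x,\Gamma _1)\) the usual distance between the point \(x\in \overline{\Omega }\) and the set \(\Gamma _1\). We then claim that there exists a constant \(\delta _0>0\) such that

$$\begin{aligned} \overline{w}(x,t)\ge \delta _0\, d(x,\Gamma _1),\quad \forall (x,t)\in \overline{\Omega }\times [0,T]. \end{aligned}\tag{2.3}$$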

Indeed, if (2.3) is false, then we can find a sequence \((x_n,t_n)\in \overline{\Omega }\times [0,T]\) such that \(\overline{w}(x_n,t_n)< {1\over n}d(x_n,\Gamma _1)\). Passing to a subsequence, we may assume that \(x_n\rightarrow x_0\in \overline{\Omega }\) and \(t_n\rightarrow t_0\in [0,T]\). Letting \(n\rightarrow \infty \), we get \(\overline{w}(x_0,t_0)=0\). Using \(\overline{w}(x,0)\ge \overline{w}(x,T)\) in \(\Omega \) again, we may further assume that \(t_0\in (0,T]\). Recall that \(\overline{w}>0\) on \((\Omega \cup \Gamma _2)\times [0,T]\). Thus, \(x_0\in \Gamma _1\), and so \(\overline{w}\) attains its minimum at \((x_0,t_0)\in \Gamma _1\times (0,T]\). As a result, the Hopf boundary lemma gives \(\partial _\nu \overline{w}(x_0,t_0)<0\). On the other hand, since \(\partial \Omega \) is smooth and \(d(x_n,\Gamma _1)\rightarrow 0\), for each large \(n\) there is a unique \(y_n\in \Gamma _1\) such that \(|x_n-y_n|=d(x_n, \Gamma _1)\). For each such \(n\), we also observe that

Since \(\overline{w}\in X\), the standard Sobolev embedding theorem guarantees that \(\overline{w}\in C^{1+\theta ,\theta /2}(\overline{\Omega }\times [0,T])\) for some \(\theta \in (0,1)\); in particular, the map \(t\mapsto \overline{w}(\cdot ,t)\) is continuous from \([0,T]\) to \(C^1(\overline{\Omega })\). Together with the choice of \(y_n\), this allows us to conclude that

Thus, in view of (2.4), (2.5) and \(|x_n-y_n|=d(x_n, \Gamma _1)\), we deduce

which contradicts our assumption \(\overline{w}(x_n,t_n)<{1\over n}d(x_n,\Gamma _1)\) for all \(n\). This proves the claim (2.3).

We are now in a position to show that \(\mathcal {L}\) has the strong maximum principle property. To this end, we first consider the case where \(\Gamma _1\) is nonempty.

Suppose that \(w\in X\), \(w\not \equiv 0\), satisfies (2.2). Then \(w\in C^{1+\theta ,\theta /2}(\overline{\Omega }\times [0,T])\) for some \(\theta \in (0,1)\). First of all, an argument similar to that for (2.3) shows that

In the sequel, we distinguish two cases: (a) \(w\ge 0\) on \(\overline{\Omega }\times [0,T]\); (b) \(\min _{\overline{\Omega }\times [0,T]}w<0\).

We first consider case (a). Assume that there exists \((x_0,t_0)\in \Omega \times [0,T]\) such that \(w(x_0,t_0)=0\). If \(t_0>0\), then \(w\) attains its minimum at the interior point \((x_0,t_0)\), and so the strong maximum principle (see, e.g., [52] or [43, 44]) gives \(w(x,t)=0\) for all \((x,t)\in \overline{\Omega }\times [0,t_0]\). In particular \(w(x,0)=0\), so the conditions \(w(x,0)\ge w(x,T)\) in \(\Omega \) and \(w\ge 0\) on \(\overline{\Omega }\times [0,T]\) yield \(w(x,T)=0\) for all \(x\in \Omega \). By the maximum principle again, \(w\equiv 0\) on \(\overline{\Omega }\times [0,T]\), contradicting our assumption \(w\not \equiv 0\). If \(t_0=0\), then \(w(x_0,0)\ge w(x_0,T)\ge 0\) gives \(w(x_0,T)=0\), and the maximum principle again yields \(w\equiv 0\) on \(\overline{\Omega }\times [0,T]\), the same contradiction. Hence, in case (a), \(w>0\) on \(\Omega \times [0,T]\), as desired.

We next show that case (b) is impossible. Let \(w(x_0,t_0)=\min _{\overline{\Omega }\times [0,T]}w<0\) for some \((x_0,t_0)\in \overline{\Omega }\times [0,T]\). Then, (2.6), together with (2.3), yields

Let us define

Since \(w(x_0,t_0)<0\), we have \({C^*\over \delta _0}\ge \xi ^*>0\). By continuity, \(w^*:=w+\xi ^*\overline{w}\ge 0\) on \(\overline{\Omega }\times [0,T]\). We observe that \(w^*\) is a nonnegative strict supersolution of \(\mathcal {L}\). Moreover, \(w^*\) is not identically zero; otherwise \(\overline{w}=-{1\over \xi ^*}w\) would be both a supersolution and a subsolution, hence a positive solution of (2.1) with \(\lambda =0\), contradicting the hypothesis that \(\overline{w}\) is a strict supersolution. Therefore, the argument of the previous paragraph gives \(w^*>0\) in \(\Omega \times [0,T]\). Then, as in the proof of (2.3), there exists \(\delta ^0>0\) such that \(w^*\ge \delta ^0d(x,\Gamma _1)\) on \(\overline{\Omega }\times [0,T]\). This and (2.6) immediately give

which contradicts the definition of \(\xi ^*\). Hence case (b) cannot happen.

When \(\Gamma _1\) is empty, our previous argument shows that \(\overline{w}>0\) on \(\overline{\Omega }\times [0,T]\). We then take \(d(x,\Gamma _1)\equiv 1\) in (2.3) and (2.6), so that (2.3) holds and (2.6) is fulfilled automatically. A similar (but simpler) analysis then shows that \(w>0\) on \(\overline{\Omega }\times [0,T]\). Hence, \(\mathcal {L}\) has the strong maximum principle property. The proof is now complete. \(\square \)

It follows easily from Proposition 2.1 that \(\lambda _1(\mathcal {L})>0\) if and only if \(\mathcal {L}\) has a strict subsolution which is negative in \(\Omega \times [0,T]\). Moreover, if \(\lambda _1(\mathcal {L})<0\), then a principal eigenfunction of \(\lambda _1(\mathcal {L})\) is itself a positive strict subsolution of \(\mathcal {L}\). We shall also use the following assertion.

Corollary 2.1

Assume that \(\mathcal {L}\) has a subsolution \(\underline{w}\) with \(\underline{w}>0\) in \(\Omega \times [0,T]\). Then we have \(\lambda _1(\mathcal {L})\le 0\).

Proof

If \(\underline{w}\) is a solution of (2.1) with \(\lambda =0\), then clearly \(\lambda _1(\mathcal {L})=0\) by the uniqueness of the principal eigenvalue. Otherwise, \(\underline{w}\) is a positive strict subsolution. Suppose, by contradiction, that \(\lambda _1(\mathcal {L})>0\). Since \(\underline{w}=0\) on \(\Gamma _1\times [0,T]\) (by the definition of a subsolution together with \(\underline{w}\ge 0\) on \(\overline{\Omega }\times [0,T]\)), the function \(-\underline{w}\) satisfies (2.2), and the strong maximum principle property of Proposition 2.1 yields \(-\underline{w}>0\) in \(\Omega \times [0,T]\), contradicting the positivity of \(\underline{w}\). \(\square \)
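Proposition 2.1 has a familiar finite-dimensional analogue from M-matrix theory, which may help build intuition. The sketch below is only an illustration under that analogy and is not part of the argument above: a matrix \(A\) with nonpositive off-diagonal entries (a Z-matrix) plays the role of \(\mathcal {L}\), "supersolution" means \(Av\ge 0\) componentwise, and for an irreducible such \(A\) the statements "the eigenvalue of least real part is positive", "\(Av\ge 0\), \(Av\ne 0\) implies \(v>0\)" and "some \(v>0\) has \(Av>0\)" are equivalent. The helpers `neumann_z_matrix`, `least_eigenvalue` and `positive_strict_supersolution` are hypothetical names introduced here.

```python
import numpy as np


def neumann_z_matrix(q, s):
    """Hypothetical test matrix A = -K + diag(q) + s*I, where K is the second
    difference with reflecting (Neumann-type) ghost points and unit spacing.
    Off-diagonal entries are <= 0, so A is an irreducible Z-matrix."""
    n = len(q)
    i = np.arange(n)
    K = np.zeros((n, n))
    K[i, np.where(i > 0, i - 1, 1)] += 1.0
    K[i, np.where(i < n - 1, i + 1, n - 2)] += 1.0
    K[i, i] -= 2.0
    return -K + np.diag(q) + s * np.eye(n)


def least_eigenvalue(A):
    """Analogue of lambda_1: for an irreducible Z-matrix, the eigenvalue of least
    real part is real (Perron-Frobenius applied to c*I - A)."""
    return np.min(np.linalg.eigvals(A).real)


def positive_strict_supersolution(A):
    """Analogue of statement (iii): solve A v = (1, ..., 1) > 0 and return v
    if it is componentwise positive, otherwise None."""
    v = np.linalg.solve(A, np.ones(A.shape[0]))
    return v if np.all(v > 0) else None


if __name__ == "__main__":
    q = np.cos(np.linspace(0.0, 2.0 * np.pi, 40))
    for s in (-1.0, 1.5):
        A = neumann_z_matrix(q, s)
        print(s, least_eigenvalue(A), positive_strict_supersolution(A) is not None)
```

Shifting \(s\) moves the least eigenvalue across zero, and a positive \(v\) with \(Av>0\) appears exactly when that eigenvalue becomes positive, mirroring (ii) \(\Leftrightarrow \) (iii).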

3 Asymptotic behavior of \(\lambda _1\): proof of Theorems 1.1–1.4

Proof of Theorem 1.1

We first note that the second limit in Theorem 1.1 follows from the first one by applying the change of variable \(y=-x\) to (1.1). Thus, it suffices to verify the first limit. For the sake of clarity, we divide the proof into two main steps.
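The reduction via \(y=-x\) can be made explicit. The following is a sketch assuming (1.1) has the non-divergence form \(\varphi _t-Da\varphi _{xx}-\alpha h\varphi _x+V\varphi =\lambda \varphi \) with zero Neumann conditions; the tilde notation is ours. For \(y\in [-L,0]\), set \(\psi (y,t):=\varphi (-y,t)\), \(\tilde{a}(y,t):=a(-y,t)\), and similarly \(\tilde{h}\) and \(\tilde{V}\). Since \(\psi _y=-\varphi _x\) and \(\psi _{yy}=\varphi _{xx}\),

$$\begin{aligned} \psi _t-D\tilde{a}\psi _{yy}-(-\alpha )\tilde{h}\psi _y+\tilde{V}\psi =\lambda \psi \ \ \text {in } (-L,0)\times {\mathbb {R}},\qquad \psi _y(-L,t)=\psi _y(0,t)=0. \end{aligned}$$

Hence \(\lambda _1^\mathcal {N}(\alpha ,D)\) for \((a,h,V)\) on \([0,L]\) coincides with the principal eigenvalue for \((\tilde{a},\tilde{h},\tilde{V})\) on \([-L,0]\) with advection coefficient \(-\alpha \), and \(\tilde{h}>0\) still holds. As \(\alpha \rightarrow -\infty \), i.e., \(-\alpha \rightarrow \infty \), the first limit, which is unaffected by translating the interval, applies on \([-L,0]\), whose right endpoint is \(y=0\), and yields \({1\over T}\int _0^T\tilde{V}(0,t)dt={1\over T}\int _0^TV(0,t)dt\).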

Step 1. We first prove

For any given small constant \(\epsilon >0\), let us consider the following auxiliary eigenvalue problem

where

Denote by \(\lambda _1^\mathcal {N}(\overline{\Psi }_\epsilon )\) the principal eigenvalue of (3.2).

To show (3.1), we construct a positive strict supersolution \(\overline{w}\) of the operator

in the sense of Sect. 2 (subject to the zero Neumann boundary condition). For simplicity, we shall just call \(\overline{w}\) a positive strict supersolution of (3.2). Once such a supersolution \(\overline{w}\) is found, we can apply Proposition 2.1. To this end, let us rewrite \(\overline{\Psi }_\epsilon \) as

This motivates us to construct a positive strict supersolution of variable-separated form:

where \(f\) satisfies

Up to a positive multiplicative constant, \(f\) is given explicitly by

As a result, it is easy to check that if for large \(\alpha >0\), we can find \(\overline{z}\) such that \(\overline{z}>0\) on \([0,L]\) and satisfies

for all \(t\in [0,T]\), then \(\overline{w}\) is a strict positive supersolution to (3.2).

Let us assume, for the moment, that such a function \(\overline{z}\) exists. Then Proposition 2.1, applied to (3.2), immediately gives \(\lambda _1^\mathcal {N}(\overline{\Psi }_\epsilon )\ge 0\), and hence

This therefore implies (3.1) by sending \(\epsilon \rightarrow 0\).

Thus, to prove (3.1), it suffices to find a function \(\overline{z}\) satisfying (3.4). For simplicity, we define the operator

with

As a preparation, we also choose a positive constant \(\epsilon _0<\epsilon \) and denote

and

Clearly, \(\underline{a},\,\overline{a}>0\), \(c_1\) does not depend on small \(\epsilon \), and \(c_2\) is decreasing with respect to \(\epsilon _0\in (0,\epsilon )\) and \(c_2>0\) if \(\epsilon _0\) is sufficiently small.

We now take

for some large constant \(M_1>0\) to be determined later. Then,

So \(\overline{z}'(0)=0\). Furthermore, we require \( L-M_1x^2\ge L/2\) on \([0,\epsilon _0]\). Thus,

By direct calculation, for \(x\in [0,\epsilon _0]\) and \(t\in [0,T]\), we have

if \(M_1\ge {{c_1L}\over {2\underline{a}D}}\) and \(\alpha \ge 0\). Hence, in view of the first inequality in (3.5), we take \(\epsilon _0\) smaller if necessary so that \({{c_1L}\over {2\underline{a}D}}\le {L\over {2\epsilon _0^2}}\). Moreover, we choose
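The role of the two conditions on \(M_1\) can be seen from a short computation. We sketch it assuming, as the constant \(c_1\) suggests, that the left-hand side of (3.4) has the form \(-Da\overline{z}''-\alpha h\overline{z}'+q\overline{z}\) with a zeroth-order coefficient \(q(x,t)\ge -c_1\) on \([0,\epsilon _0]\times [0,T]\); the symbol \(q\) is our notation, not the paper's. With \(\overline{z}=L-M_1x^2\) on \([0,\epsilon _0]\), we have \(\overline{z}'=-2M_1x\le 0\) and \(\overline{z}''=-2M_1\), so

$$\begin{aligned} -Da\overline{z}''-\alpha h\overline{z}'+q\overline{z}&=2DaM_1+2\alpha hM_1x+q\overline{z}\\ &\ge 2\underline{a}DM_1-c_1L\ \ge 0\quad \text {on } [0,\epsilon _0]\times [0,T], \end{aligned}$$

where we used \(\alpha \ge 0\), \(h>0\), \(x\ge 0\), \(a\ge \underline{a}\), \(0<\overline{z}\le L\) and \(M_1\ge {{c_1L}\over {2\underline{a}D}}\). On the other hand, \(L-M_1x^2\ge L/2\) on \([0,\epsilon _0]\) amounts to \(M_1\le {L\over {2\epsilon _0^2}}\), and the two requirements on \(M_1\) are compatible exactly when \(\epsilon _0^2\le {{\underline{a}D}\over {c_1}}\).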

Next, we choose

where the positive constant \(M_2\) will be determined later. Then \(\overline{z}'(x)=2(x-L)\) and \(\overline{z}''(x)=2\) on \([L-\epsilon _0,L]\). So \(\overline{z}'(L)=0\). Moreover, when \(x\in [L-\epsilon _0,L]\) and \(t\in [0,T]\), for any \(\alpha \ge 0\), we have

if

We then consider \(x\in [\epsilon _0,L-\epsilon _0]\). Denote

According to the previous choice for \(\overline{z}\), \(\overline{z}\) is strictly decreasing in \(x\) on \([0,\epsilon _0]\) and \([L-\epsilon _0,L]\), and

We further notice from (3.7) and (3.9) that \(M_1\) decreases in \(\epsilon _0\) while \(M_2\) increases in \(\epsilon _0\). Additionally, neither \(M_1\) nor \(M_2\) depends on \(\alpha \) as long as \(\alpha \ge 0\). Thus, by taking \(\epsilon _0\) smaller, we can ensure that \(M_1\) is much larger than \(M_2\), so that

Now, with \(\epsilon _0>0\) fixed as above, we can extend \(\overline{z}\) to a function in \(C^2([0,L])\) that is strictly decreasing on \([0,L]\) and has the following properties on \([\epsilon _0,L-\epsilon _0]\):

In the above, the positive constants \(c_3,c_4\) are independent of \(\alpha \). Consequently, on \([\epsilon _0,L-\epsilon _0]\), if \(\alpha \) satisfies

we then find, for all \((x,t)\in [\epsilon _0,L-\epsilon _0]\times [0,T]\),

As a consequence, in view of (3.6), (3.8) and (3.11), for \(\alpha \) satisfying (3.10), the above chosen \(\overline{z}\) satisfies (3.4), which in turn implies that \(\overline{w}=\overline{z} f\) is a desired strict supersolution of (3.2). Therefore, we can conclude that (3.1) holds.

Step 2. We next prove

We employ a strategy similar to that of step 1. For arbitrarily small \(\epsilon >0\), we first consider the following periodic-parabolic eigenvalue problem

where

Denote by \(\lambda _1^\mathcal {N}(\underline{\Psi }_\epsilon )\) the principal eigenvalue of (3.13).

To obtain (3.12), we construct a positive strict subsolution \(\underline{w}\) of (3.13) and then apply Corollary 2.1. The same analysis as in step 1 shows that if, for large \(\alpha >0\), one can find \(\underline{z}\) such that \(\underline{z}>0\) on \([0,L]\) and satisfies

for all \(t\in [0,T]\), then

is a strict positive subsolution to (3.13), where \(f\) is determined by (3.3).

Suppose that such \(\underline{z}\) exists. In light of Corollary 2.1, as applied to (3.13), we deduce that \(\lambda _1^\mathcal {N}(\underline{\Psi }_\epsilon )\le 0\), and so

which then yields (3.12) due to the arbitrariness of \(\epsilon \).

It remains to find such a function \(\underline{z}\). For simplicity, for \(w\in C^{2,1}([0,L]\times [0,T])\), we define the operator

with

We take a small constant \(0<\epsilon _0<\epsilon \). Let \(\underline{a},\,\overline{a}\) be defined as in step 1, and

Clearly, \(\tilde{c}_1\) does not depend on small \(\epsilon \). Furthermore, one can require \(\epsilon _0\) to be small enough so that \( -2\epsilon <\tilde{c}_2<-{1\over 2}\epsilon \).

For such \(\epsilon _0\), we first choose

Then, by elementary calculation, we have that \(\underline{z}'(0)=0\), and for \((x,t)\in [0,\epsilon _0]\times [0,T]\) and \(\alpha \ge 0\),

if \(\epsilon _0\le \min \{\epsilon ,\,({{2\underline{a}D} \over {\tilde{c}_1}})^{1/2}\}\).

With \(\epsilon _0\) fixed as above, we then take

for some large positive constant \(\tilde{M}_2\) to be determined below. Then \(\underline{z}'(L)=0\). Moreover, when \((x,t)\in [L-\epsilon _0,L]\times [0,T]\) and \(\alpha \ge 0\), we have

if \(\tilde{M}_2=\epsilon _0^2+{{4\overline{a} D}\over {\epsilon }}\).

We finally consider \(x\in [\epsilon _0,L-\epsilon _0]\). By the above choice, \(\underline{z}\) is strictly increasing in \(x\) on \([0,\epsilon _0]\) and \([L-\epsilon _0,L]\), and

Therefore, for the above chosen \(\epsilon _0,\,\tilde{M}_2\), we can extend \(\underline{z}\) to \([\epsilon _0,L-\epsilon _0]\) so that \(\underline{z}\in C^2([0,L])\) is strictly increasing on \([0,L]\) and additionally satisfies

It is easily seen that the positive constants \(\tilde{c}_3=\tilde{c}_3(\epsilon _0),\,\tilde{c}_4=\tilde{c}_4(\epsilon _0)\) are independent of \(\alpha \). Hence, for \((x,t)\in [\epsilon _0,L-\epsilon _0]\times [0,T]\), we obtain

if

Here, \(h_0>0\) is as in step 1. Thus, combining (3.15), (3.16) and (3.17), we obtain a positive strict subsolution \(\underline{w}=\underline{z} f\), and so (3.12) holds. Therefore, Theorem 1.1 follows from (3.1) and (3.12). \(\square \)

Proof of Theorem 1.2

Assertion (ii) of Theorem 1.2 follows from [39, Lemma 2.4], and assertion (iii) follows directly from assertion (i) by applying the change of variable \(y=-x\) to (1.1). Thus, we need only verify assertion (i) of Theorem 1.2.

We prove assertion (i) of Theorem 1.2 in the same spirit as the proof of Theorem 1.1. The analysis consists of two steps. From now on, we fix \(\alpha >0\).

Step 1. We prove

We shall use the same notation as in step 1 of the proof of Theorem 1.1. Then, to show (3.18), it is sufficient to prove that, for fixed \(\epsilon >0\),

Again, we construct a positive function of the form \(\overline{w}(x,t)=\overline{z}(x)f(t)\) that is a strict supersolution of (3.2). To find a suitable \(\overline{z}\), we first seek an auxiliary function \(\overline{v}\) and then take \(\overline{z}=e^{\overline{v}}\).

For a small \(0<\epsilon _0<\min \{\epsilon ,\,({{\alpha h_0}\over {ec_1}})^{1/2}\}\), we first choose

and

Hence, \(\overline{v}'(0)=-{1\over {\epsilon _0^2}}<0\) and \(\overline{v}'(L)=0\).

We next consider \(x\in [\epsilon _0,L-\epsilon _0]\). Note that \(\overline{v}\) is strictly decreasing in \(x\) on \([0,\epsilon _0]\) and \([L-\epsilon _0,L]\). Furthermore, simple calculations give that

and

Therefore, we can find a strictly decreasing \(\overline{v}\in C^2([0,L])\), which enjoys the following properties on \([0,L]\):

where the positive constants \(\hat{c}_3=\hat{c}_3(\epsilon _0),\,\hat{c}_5=\hat{c}_5(\epsilon _0)\) are independent of \(D\), and the positive constant \(\hat{c}_4\) is independent of \(D\) and \(\epsilon _0\).
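Since \(\overline{z}=e^{\overline{v}}\) (as announced at the start of this step, assuming no further scaling enters the definition below), the required properties of \(\overline{z}\) follow from those of \(\overline{v}\) through the chain rule:

```latex
\overline{z}'=\overline{v}'\,e^{\overline{v}},\qquad
\overline{z}''=\bigl(\overline{v}''+(\overline{v}')^{2}\bigr)\,e^{\overline{v}}.
```

In particular, \(\overline{z}\) inherits the strict monotonicity of \(\overline{v}\), and \(\overline{z}'\) has the same sign as \(\overline{v}'\) at every point, including \(x=0\) and \(x=L\).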

Now, we set

Then, we have

Thus, \(\overline{z}\) is also decreasing on \([0,L]\), \(\overline{z}'(0)<0\) and \(\overline{z}'(L)=0\). In addition, there holds

As a result, on \([0,\epsilon _0]\times [0,T]\), by (3.20) and the expression of \(\overline{v}\) we find

provided that

If \((x,t)\in [L-\epsilon _0,L]\times [0,T]\), it is easy to check that

provided that

Finally, on \([\epsilon _0,L-\epsilon _0]\times [0,T]\), according to (3.20) and the choice of \(\overline{v}\) we have

provided that

by requiring \(\epsilon _0<({{\alpha h_0\hat{c}_4}\over {c_1}})^2\).

As a consequence, from (3.21)–(3.26), it follows that when

the above chosen \(\overline{z}\) satisfies (3.4) and hence \(\overline{w}=\overline{z}f\) is a desired strict supersolution. Therefore, by Proposition 2.1, (3.4) holds and so (3.19) is valid. Thus (3.18) has been proved.

Step 2. We now verify

In what follows, we adopt the same notation as in step 2 of the proof of Theorem 1.1.

In order to establish (3.27), as in step 2 of the proof of Theorem 1.1, it is enough to find a positive strict subsolution \(\underline{w}=\underline{z}f\) to (3.13), where \(\underline{z}\) satisfies (3.14).

As in step 1 above, we first construct an auxiliary function \(\underline{v}\) and then take \(\underline{z}=e^{\underline{v}}\). For this purpose, we let

and

Clearly, \(\underline{v}\) is strictly increasing on \([0,\epsilon _0]\) and \([L-\epsilon _0,L]\). In addition, we have

and

Therefore, we can find a strictly increasing function \(\underline{v}\in C^2([0,L])\) such that, on \([\epsilon _0,L-\epsilon _0]\), \(\underline{v}\) satisfies

where the positive constant \(\hat{c}_3=\hat{c}_3(\epsilon _0)\) is independent of \(D\), and the positive constant \(\hat{c}_4\) is independent of \(\epsilon _0\) and \(D\).

We now set

Thus, we have \(\underline{z}'(0)>0,\,\underline{z}'(L)=0\), and

By a direct calculation, when \((x,t)\in [0,\epsilon _0]\times [0,T]\), it follows from (3.28) that

if \(\epsilon _0\le \min \{\epsilon ,\,{{\alpha h_0}\over {\tilde{c}_1}}\}\).

When \((x,t)\in [L-\epsilon _0,L]\times [0,T]\), it is easy to check that

if \(D\le {{\epsilon _0^5\epsilon }\over {4\overline{a}}}\), since \(\underline{\Phi }_\epsilon \le -{1\over 2}\epsilon \) on \([L-\epsilon _0,L]\times [0,T]\).

If \((x,t)\in [\epsilon _0,L-\epsilon _0]\times [0,T]\), making use of (3.28) and the choice of \(\underline{v}\) we obtain

provided that

Thus, combining (3.29), (3.30) and (3.31), when

we have found a positive strict subsolution \(\underline{w}=\underline{z}f\) and so (3.27) holds. Therefore, Theorem 1.2 follows from (3.18) and (3.27). The proof is now complete. \(\square \)

Proof of Theorem 1.3

We employ the approach used in [39]. Let \(\varphi \) be the principal eigenfunction corresponding to \(\lambda _1^\mathcal {N}(\alpha ,D)\). For simplicity, we write \(\lambda _1^\mathcal {N}=\lambda _1^\mathcal {N}(\alpha ,D)\). We also normalize \(\varphi \) such that

and set

Thus, \(\overline{h}\ge 0\) is independent of \(D,\,\alpha \).

In the case \(a(x,t)=a(t)\), (1.1) becomes

We multiply the equation of (3.33) by \(\varphi \) and then integrate over \((0,L)\times (0,T)\) to obtain

From (3.32), (1.2) and the Hölder inequality, it then follows that

Hereafter, the positive constant \(c\) is independent of \(D,\alpha \) and may vary from place to place. Thus, when \(D\) is sufficiently large, it is easy to see that

On the other hand, we define

Clearly,

which allows one to use the well-known Poincaré inequality to conclude that

Therefore, as \(\varphi ^*_x=\varphi _x\), making use of (3.34), we get

This, together with (3.34) and the Hölder inequality, also yields

In addition, integrating the equation of (3.33) over \((0,L)\), we find

Due to (3.36), we easily see that

Hence, by solving the ordinary differential equation (3.37), we obtain

where

Taking \(t=T\) in (3.38) and using the \(T\)-periodicity of \(\varphi _*\), we see that

As a consequence, when \(D\rightarrow \infty ,\,{\alpha ^2/D}\rightarrow 0\), either \(\varphi _*(0)\rightarrow 0\), or

We claim that (3.39) must hold, which yields the desired assertion of Theorem 1.3. Suppose, on the contrary, that \(\varphi _*(0)\rightarrow 0\) as \(D\rightarrow \infty ,\,{\alpha ^2/D}\rightarrow 0\). Then (3.38) implies that \(\varphi _*(t)\rightarrow 0\) uniformly on \([0,T]\). This, combined with (3.35), ensures

which contradicts (3.32). \(\square \)
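Two analytic ingredients of this proof can be checked numerically. The Python/NumPy sketch below is illustrative only: the helper names and test functions are hypothetical, \(\varphi^*\) is taken to be the zero-mean part \(\varphi-\varphi_*\) (as the use of the Poincaré inequality suggests), and the scalar equation \(y'=(\lambda-m(t))y\) is a simplified stand-in for (3.37), not the equation of the paper. The first function evaluates the ratio in the Poincaré–Wirtinger inequality \(\int_0^L|\varphi-\bar\varphi|^2dx\le (L/\pi)^2\int_0^L|\varphi_x|^2dx\), whose constant is sharp and attained by \(\cos(\pi x/L)\). The second computes the period map of the scalar ODE, for which \(y(T)=y(0)\exp(\lambda T-\int_0^T m\,dt)\), so a positive \(T\)-periodic solution exists only when \(\lambda\) equals the time average of \(m\).

```python
import numpy as np


def _trap(y, x):
    """Composite trapezoidal rule on the grid x."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)


def poincare_wirtinger_ratio(f, L=1.0, n=2001):
    """Ratio  int |f - mean f|^2  /  ((L/pi)^2 int |f'|^2)  on [0, L].

    The Poincare-Wirtinger inequality says this ratio is at most 1.
    """
    x = np.linspace(0.0, L, n)
    y = f(x)
    y_star = y - _trap(y, x) / L      # zero-mean part (analogue of phi^*)
    dy = np.gradient(y, x)            # finite-difference approximation of f'
    return _trap(y_star**2, x) / ((L / np.pi) ** 2 * _trap(dy**2, x))


def period_map(lam, m, T=1.0, steps=4000):
    """Integrate y' = (lam - m(t)) y from y(0) = 1 to t = T with classical RK4."""
    h = T / steps
    t, y = 0.0, 1.0
    rhs = lambda s, v: (lam - m(s)) * v
    for _ in range(steps):
        k1 = rhs(t, y)
        k2 = rhs(t + h / 2, y + h * k1 / 2)
        k3 = rhs(t + h / 2, y + h * k2 / 2)
        k4 = rhs(t + h, y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return y


# Hypothetical periodic coefficient with time average 2 over T = 1.
m = lambda t: 2.0 + np.sin(2 * np.pi * t)
print(poincare_wirtinger_ratio(lambda x: np.cos(np.pi * x)))  # close to 1 (extremal case)
print(period_map(2.0, m))  # close to 1: y(T) = y(0) when lam equals the mean of m
```

Replacing \(\lambda\) by the mean of \(m\) plus or minus \(\delta\) makes the period map return roughly \(e^{\pm\delta T}\), which mirrors, in this simplified setting, why taking \(t=T\) and using periodicity pins down the limit of the eigenvalue.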

Proof of Theorem 1.4

We need only modify the proof of Theorem 1.3. We use the same notation as in the proof of Theorem 1.3 and assume that (3.32) holds. Without loss of generality, we take \(a(x,t)=1\). Since \(a(x,t)=1\) and \(h(x,t)=h(x)\), (1.1) reduces to

In (3.40), we can rewrite the equation of \(\varphi \) as follows

As in the proof of Theorem 1.3, multiplying (3.41) by \(\varphi \) and then integrating over \((0,L)\times (0,T)\), we can easily deduce

for some positive constant \(c\) independent of \(D,\alpha \). This therefore yields

Now, in the present situation, we find that \(\varphi _*\) solves

Proceeding similarly to the proof of Theorem 1.3, we can use the above equation to obtain

as wanted. \(\square \)

Remark 3.1

Results analogous to Theorems 1.3 and 1.4 extend easily to the principal eigenvalue of the following periodic-parabolic eigenvalue problem:

where \(\Omega \subset {\mathbb {R}}^N\) is a bounded smooth domain, \(a_{ij}(x,t)=a_{ji}(x,t)\) satisfy the usual uniformly elliptic condition for each \(t\in [0,T]\), and \(a_{ij}, a_i, a_0\) are \(T\)-periodic and smooth functions on \(\overline{\Omega }\times [0,T]\).

4 Monotonicity of \(\lambda _1\): Proof of Theorem 1.5

In this section, without loss of generality, we take \(a=h\equiv 1\). Thus, the eigenvalue problem (1.1) reads as

which can be rewritten as

To prove Theorem 1.5, we need an auxiliary problem. By [33, Chapter II], the adjoint problem of (4.2),

equivalently,

has the same principal eigenvalue \(\lambda =\lambda _1^\mathcal {N}(\alpha ,D,L)\), with a principal eigenfunction \(\psi \in C^{2,1}([0,L]\times [0,T])\).

In this section, unless otherwise stated, we always assume that \(V_x(x,t)\ge 0,\ \forall (x,t)\in [0,L]\times [0,T]\).

Lemma 4.1

For any given \(D,\,L>0\) and \(\alpha \in {\mathbb {R}}\), \(\varphi _x(x,t)<0\) in \((0,L)\) for all \(t\).

Proof

We define \(w(x,t)=\varphi _x(x,t)\). To stress the dependence of the principal eigenvalue on the weight function \(V\), we shall use \(\lambda _1^\mathcal {N}(V)\) instead of \(\lambda _1^\mathcal {N}(\alpha ,D,L)\).

Differentiating (4.1) with respect to \(x\), we see that \(w\) solves

Since \(V,\,V_x\in C([0,L]\times [0,T])\), the standard regularity theory for parabolic equations ensures that \(w\in W^{2,1}_p((0,L)\times (0,T))\) for any \(1<p<\infty \). Furthermore, using the assumption of \(V_x(x,t)\ge 0\) for \((x,t)\in [0,L]\times [0,T]\), we get from (4.5) that

On the other hand, according to our notation, it follows that \(\lambda _1^\mathcal {N}(V-\lambda _1^\mathcal {N}(V))=0\), and so

In light of this, Proposition 2.1 applied to (4.6) gives \(-w>0\) in \((0,L)\times [0,T]\), which proves the lemma. \(\square \)

Lemma 4.2

For given \(D,\,L>0\), \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is strictly monotone increasing in \(\alpha \).

Proof

For simplicity, we write \(\lambda _1=\lambda _1^\mathcal {N}(\alpha ,D,L)\). It is well known that \(\lambda _1\) and the associated principal eigenfunction \(\varphi \) are \(C^1\)-functions of \(\alpha \). For notational simplicity, we denote \({{\partial \varphi }\over {\partial \alpha }}\) by \(\varphi '\) and \({{\partial \lambda _1}\over {\partial \alpha }}\) by \(\lambda _1'\). Then, we differentiate (4.1) with respect to \(\alpha \) to obtain

We rewrite (4.7) as

On the other hand, in (4.4) we take \(\psi \) to be the positive eigenfunction corresponding to \(\lambda _1\). Then \(\psi \) solves

We now multiply the equation of (4.8) by \(\psi \) and integrate the resulting equation to get

Because of the equation of \(\psi \) in (4.9), we find

which, together with (4.10), immediately gives

Thanks to Lemma 4.1 and the positivity of \(\varphi ,\,\psi \), we have \(\lambda _1'>0\). \(\square \)

Lemma 4.3

For any given \(D>0\) and \(\alpha \in {\mathbb {R}}\), \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is strictly monotone increasing in \(L\).

Proof

For simplicity, we write \(\lambda _1(L) =\lambda _1^\mathcal {N}(\alpha ,D,L)\). Given \(0<L_1<L_2\), we want to show \(\lambda _1(L_1)<\lambda _1(L_2)\). We denote by \(\varphi \) the principal eigenfunction of (4.1) corresponding to \(\lambda _1(L_2)\) with \(L=L_2\), and by \(\psi \) the principal eigenfunction of (4.4) corresponding to \(\lambda _1(L_1)\) with \(L=L_1\), respectively. According to our notation, \((\varphi ,L_2)\) satisfies

and \((\psi ,L_1)\) satisfies

We now multiply the equation of \(\varphi \) by \(\psi \), the equation of \(\psi \) by \(\varphi \), and subtract the resulting equations to obtain

Integrating the above equality over \((0,L_1)\times (0,T)\) and using the boundary conditions of \(\varphi ,\,\psi \) at \(x=0\) for all \(t\) and their \(T\)-periodicity, we then find

Here we have used the fact that \(\varphi _x(x,t)<0\) for all \((x,t)\in (0,L_1]\times [0,T]\), which follows from Lemma 4.1. Hence, \(\lambda _1(L_1)<\lambda _1(L_2)\), as wanted. \(\square \)
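The multiply-and-subtract step above relies only on elementary product-rule identities, recorded below for smooth \(\varphi,\psi\). The block assumes, as for an operator and its adjoint, that the time-derivative and drift terms enter the equations of \(\varphi\) and \(\psi\) with opposite signs, so that they combine into the total derivatives on the first and third lines:

```latex
\begin{aligned}
\psi\,\varphi_t+\varphi\,\psi_t &= (\varphi\psi)_t,\\
\psi\,\varphi_{xx}-\varphi\,\psi_{xx} &= \bigl(\psi\,\varphi_x-\varphi\,\psi_x\bigr)_x,\\
\psi\,\varphi_x+\varphi\,\psi_x &= (\varphi\psi)_x .
\end{aligned}
```

After integration over \((0,L_1)\times (0,T)\), the first line vanishes by \(T\)-periodicity and the other two reduce to boundary terms at \(x=0\) and \(x=L_1\); the terms at \(x=0\) are removed by the boundary conditions, and the sign of those at \(x=L_1\) is presumably where Lemma 4.1 enters.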

Lemma 4.4

For any given \(D>0\) and \(\alpha \le 0\), \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) is strictly monotone increasing in \(D\).

Proof

Let \(\lambda _1:=\lambda _1^\mathcal {N}(\alpha ,D,L)\) and the associated principal eigenfunction \(\varphi \) satisfy (4.1). Clearly, \(\lambda _1^\mathcal {N}(\alpha ,D,L)\) and \(\varphi \) are \(C^1\)-functions of \(D\). For simplicity, we denote \({{\partial \varphi }\over {\partial D}}\) by \(\varphi '\) and \({{\partial \lambda _1}\over {\partial D}}\) by \(\lambda '_1\). Then, differentiating (4.1) with respect to \(D\), we have

We rewrite (4.11) as

Let \(\psi \) be the principal eigenfunction corresponding to \(\lambda _1\), which satisfies (4.9). Multiplying the equation of (4.12) by \(\psi \) and integrating the resulting equation, we obtain

Substituting \(-\psi _t=D\psi _{xx}+\alpha \psi _x-V(x,t)\psi +\lambda _1\psi \) into (4.13), we readily get

By virtue of (4.9), it is easy to see that \(\xi (x,t)=\psi (x,-t)\) solves

Since \(V_x(x,t)\ge 0\) for \((x,t)\in [0,L]\times [0,T]\), the same reasoning as in the proof of Lemma 4.1 shows that

In light of this fact and Lemma 4.1, (4.14) implies \(\lambda _1'>0\) if \(\alpha \le 0\). \(\square \)

Now it is clear that Theorem 1.5 follows from Lemmas 4.2, 4.3 and 4.4.

5 Applications to a phytoplankton model

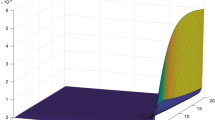

In this section, we will apply the theoretical results obtained in this paper to the following reaction–diffusion–advection equation, which was proposed by Shigesada and Okubo [54] to describe the population dynamics of a single phytoplankton species in a water column:

with zero flux boundary conditions at \(x=0\) and \(x=L\) and nonnegative initial condition at time \(t=0\). Here, \(u(x,t)\) stands for the population density of the phytoplankton species at location \(x\) and time \(t\), \(D>0\) is the vertical turbulent diffusion coefficient, \(\alpha \) is the sinking velocity (\(\alpha >0\)) or the buoyant velocity (\(\alpha <0\)), \(L>0\) is the depth of the water column and \(d>0\) is the death rate.

By the Lambert–Beer law, the light intensity \(I\) is governed by

where \(I_0\) is the incident light intensity, \(k_0\) is the background turbidity and \(k_1\) is the absorption coefficient of the phytoplankton species. The function \(g(I)\) is the specific growth rate of phytoplankton as a function of the light intensity \(I(x,t)\). In view of its biological meaning, \(g(I)\) is usually assumed to satisfy

Problem (5.1) has been studied extensively, both numerically and theoretically; see, e.g., [22–26, 29, 31, 34–36, 41, 42, 53, 54] and the references therein. In all of these papers, a common assumption is that the incident light intensity \(I_0(t)=I_0\) is a positive constant.
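For concreteness, the Lambert–Beer relation can be evaluated on a depth grid. The sketch below assumes the form \(I(x,t)=I_0(t)\exp\bigl(-k_0x-k_1\int_0^x u(s,t)\,ds\bigr)\), standard in the Shigesada–Okubo model, with \(x=0\) the water surface. The helper name `light_intensity` and the periodic incident light \(I_0(t)=1+0.5\sin(2\pi t)\) used in the example are hypothetical illustrations, not data from the paper.

```python
import numpy as np


def light_intensity(u, x, t, I0, k0, k1):
    """Lambert-Beer profile I(x, t) = I0(t) * exp(-k0 x - k1 * int_0^x u(s, t) ds).

    u  : phytoplankton density sampled on the depth grid x at time t (x[0] = 0)
    I0 : callable giving the incident light intensity at the surface
    """
    # Cumulative trapezoidal integral of u from the surface to each grid point.
    cum = np.concatenate(([0.0], np.cumsum((u[1:] + u[:-1]) * np.diff(x) / 2.0)))
    return I0(t) * np.exp(-k0 * x - k1 * cum)


# Hypothetical example: period T = 1, uniform density 3 on a column of depth 2.
I0 = lambda t: 1.0 + 0.5 * np.sin(2 * np.pi * t)
x = np.linspace(0.0, 2.0, 201)
I = light_intensity(np.full_like(x, 3.0), x, 0.25, I0, k0=0.5, k1=0.2)
```

Whenever \(u\ge 0\), the computed \(I\) is nonincreasing in depth, so for an increasing growth function \(g\) the rate \(g(I(x,t))\) is nonincreasing in \(x\); this is the mechanism behind the monotonicity of the weight in \(x\) used below.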

As pointed out in [54], a constant incident light intensity \(I_0(t)\equiv I_0\) represents an idealized environment. In general, the light intensity at the water surface varies with time; in particular, it changes periodically with the day-night cycle. We therefore only assume that

Therefore, we are led to consider the following nonlocal reaction–diffusion–advection equation

As in [25, 34], it is easy to show that, given the initial data \(u_0\in C([0,L])\) with \(u_0\ge 0,\not \equiv 0\), (5.5) admits a unique solution, which exists globally in time and is positive for all \(x\in [0,L]\) and \(t>0\).

The main concern for (5.5) (or (5.1)) is whether the species uniformly persists (blooms) or goes extinct in the long run. To this end, given \(V\in C([0,L]\times [0,T])\) that is \(T\)-periodic in \(t\), we consider the following eigenvalue problem

To make the results in the previous sections directly applicable to problem (5.5), we set

and so (5.6) becomes

Let us denote by \(\lambda =\lambda _1^\mathcal {N}(\alpha ,D,L;V)\) the principal eigenvalue of (5.7) and by \(\varphi \in C^{2,1}([0,L]\times [0,T])\) the corresponding principal eigenfunction.

In what follows, we denote

Note that hypothesis (5.3) guarantees that \(V_0\) is monotone increasing in \(x\) for each \(t\in [0,T]\). In addition, for every \(\alpha \in {\mathbb {R}}\) and \(D,L>0\), \(\lambda _1^\mathcal {N}(\alpha ,D,L;V_0)>0\), and it corresponds to the principal eigenvalue of the linearized problem of (5.5) at zero.

For simplicity, from now on, we also define

Using comparison arguments and the persistence theory for periodic semiflows (see, e.g., [57]), one can prove the following result (see [51] for details).

Theorem 5.1

Let \(u\) be the unique solution of (5.5). Then the following hold:

- (i) If \(d_*\le d\), then \(\lim _{t\rightarrow \infty }u(x,t)=0\) uniformly for \(x\in [0,L]\);

- (ii) If \(0<d<d_*\), then (5.5) admits at least one positive \(T\)-periodic solution, and there exists a positive constant \(\eta \), independent of \(u_0(x)\), such that \(\liminf _{t\rightarrow \infty }u(x,t)\ge \eta \) uniformly for \(x\in [0,L]\).

In fact, one can prove that if \(d\le 0\), then \(u(x,t)\rightarrow \infty \) as \(t\rightarrow \infty \) uniformly for \(x\in [0,L]\), although this case has no meaningful biological interpretation. In [25, 26, 34], when \(I_0(t)\equiv I_0\) is a positive constant, the steady-state problem for (5.5) is elliptic, and the authors proved that (5.5) has a unique positive steady state, which is a global attractor for (5.5). We conjecture that an analogous conclusion holds in the general case where (5.4) holds.

In view of Theorem 5.1, the phytoplankton model (5.5) exhibits threshold-type dynamics with respect to the death rate \(d\): when \(d\) is smaller than the critical value \(d_*\), the species blooms in the long run, whereas it eventually becomes extinct if \(d\ge d_*\).

In the sequel, we focus on the effects of the advection and diffusion parameters \(\alpha ,\,D\) and the depth \(L\) of the water column on the persistence and extinction behavior of (5.5).

First of all, from (1.2) we have the following simple observation for the value \(d_*(\alpha ,D,L)\).

Lemma 5.1

For any given \(D,\,L>0\) and \(\alpha \in {\mathbb {R}}\), the following holds:

and

Proof

Notice that if \(k_0>0\)

It then follows from the monotonicity of the principal eigenvalue with respect to the weight function that if \(k_0>0\),

In addition, we have

which, combined with (5.12), yields the desired estimates. When \(k_0=0\), (5.11) follows from (1.2). This completes the proof. \(\square \)

Equality (5.11) implies that, when (5.5) reduces to the self-shading case (i.e., \(k_0=0\)), there are no critical values of \(D,\,\alpha \), or \(L\) beyond which the species cannot persist. This differs essentially from the case \(k_0>0\), where critical values of \(D,\,\alpha \), or \(L\) may exist; see Theorems 5.2, 5.3 and 5.4 below for a detailed discussion of this case.

In view of (5.11), in the rest of this section, we always assume that \(k_0>0\).

Using Lemma 4.1, we can improve the estimates (5.10) in Lemma 5.1 when \(\alpha \le 0\).

Lemma 5.2

For any given \(D,\,L>0\) and \(\alpha \le 0\), the following holds:

Proof

According to Lemma 5.1, it remains to verify the left-side inequality in (5.13). Recall that \((\lambda _1^\mathcal {N}(\alpha ,D,L;V_0),\varphi )\) satisfies

Thus, dividing the above equation by \(\varphi \) and then integrating over \((0,L)\times (0,T)\) by parts, we obtain

By virtue of Lemma 4.1, when \(\alpha \le 0\), we have

It then follows that

This completes the proof. \(\square \)
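The step "dividing by \(\varphi \) and integrating by parts" rests on the following elementary identities, valid for any positive, \(T\)-periodic \(\varphi \in C^{2,1}([0,L]\times [0,T])\) (the last line additionally uses \(\varphi _x<0\) in \((0,L)\) from Lemma 4.1):

```latex
\begin{aligned}
&\int_0^T\frac{\varphi_t}{\varphi}\,dt=\ln\varphi(x,T)-\ln\varphi(x,0)=0,\\
&\frac{\varphi_{xx}}{\varphi}=\Bigl(\frac{\varphi_x}{\varphi}\Bigr)_x+\Bigl(\frac{\varphi_x}{\varphi}\Bigr)^2,\\
&\int_0^L\frac{\varphi_x}{\varphi}\,dx=\ln\varphi(L,t)-\ln\varphi(0,t)<0 .
\end{aligned}
```

The last inequality is presumably where the sign condition \(\alpha \le 0\) enters: it makes \(\alpha \int _0^T\!\int _0^L \varphi _x/\varphi \,dx\,dt\) nonnegative.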

Biologically, Lemma 5.2 implies that, for given \(D\) and \(L\), a buoyant species (i.e., \(\alpha <0\)) can bloom over a larger range of death rates \(d\) than a sinking species with a large advection coefficient \(\alpha >0\).

Let us now study the effect of the advection coefficient \(\alpha \) on the dynamics of (5.5). In the following, for simplicity we denote

and

Clearly, \(0<g_*<\overline{g}<g^*\).

By Theorem 1.1, we see that

On the other hand, Theorem 1.5 shows that \(d_*(\alpha ,D,L)\) is strictly monotone decreasing for \(\alpha \in {\mathbb {R}}\). Therefore, for each fixed \(d\in (g_*,g^*)\), there exists a unique \(\alpha _*:=\alpha _*(d,D,L)\) such that \(d=d_*(\alpha _*,D,L)\). Furthermore, \(\alpha _*\) satisfies

By Theorems 1.1, 1.5 and 5.1 and the definition of \(\alpha _*\), our main results concerning the effect of the advection coefficient \(\alpha \) can be summarized as follows.

Theorem 5.2

For any given \(D,\,L>0\), the following hold:

- (i) If \(0<d\le g_*\), then (5.5) has at least one positive \(T\)-periodic solution and the uniform persistence property holds for (5.5) for each \(\alpha \in {\mathbb {R}}\).

- (ii) If \(d\in (g_*,g^*)\), then (5.5) has at least one positive \(T\)-periodic solution and the uniform persistence property holds for (5.5) provided that \(\alpha <\alpha _*\); while zero is the unique nonnegative \(T\)-periodic solution to (5.5) and the extinction property holds for (5.5) provided that \(\alpha \ge \alpha _*\).

- (iii) If \(d\ge g^*\), then zero is the unique nonnegative \(T\)-periodic solution to (5.5) and the extinction property holds for (5.5) for each \(\alpha \in {\mathbb {R}}\).

Theorem 5.2 shows that whether a critical sinking/buoyant velocity \(\alpha _*\) exists depends on the death rate \(d\), and that whenever it exists, it is unique. Indeed, if \(d\) is suitably small (i.e., \(d\le g_*\)), the phytoplankton can bloom for every sinking/buoyant velocity, so there is no critical velocity in this case. Only when \(d\) lies in an intermediate range (i.e., \(d\in (g_*,g^*)\)) does the unique critical velocity \(\alpha _*\) exist, and then the phytoplankton blooms if and only if \(\alpha <\alpha _*\). When \(d\) is suitably large (i.e., \(d\ge g^*\)), the phytoplankton cannot bloom for any sinking/buoyant velocity \(\alpha \).
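Theorems 5.2 to 5.4 rest on the same elementary fact: a continuous, strictly decreasing function that crosses the level \(d\) does so exactly once. The hypothetical sketch below locates such a crossing by bisection. The stand-in `d_star` is an invented logistic curve whose limits play the roles of \(g^*\) and \(g_*\); it is not the principal-eigenvalue map \(\alpha \mapsto d_*(\alpha ,D,L)\) of the paper, which would have to be computed numerically.

```python
import math


def critical_parameter(d_star, d, lo, hi, tol=1e-10, max_iter=200):
    """Bisection for the unique p in [lo, hi] with d_star(p) = d.

    Assumes d_star is continuous and strictly decreasing on [lo, hi]
    and that d_star(lo) > d > d_star(hi), so the crossing is unique.
    """
    if not (d_star(lo) > d > d_star(hi)):
        raise ValueError("d is not bracketed by d_star(lo) and d_star(hi)")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if d_star(mid) > d:   # persistence side: d < d_star(mid)
            lo = mid
        else:                 # extinction side: d >= d_star(mid)
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


# Hypothetical stand-in: strictly decreasing, tending to 1.0 (role of g^*)
# as alpha -> -inf and to 0.2 (role of g_*) as alpha -> +inf.
d_star = lambda a: 0.2 + 0.8 / (1.0 + math.exp(a))
alpha_star = critical_parameter(d_star, 0.6, -50.0, 50.0)
print(alpha_star)  # approximately 0.0, since d_star(0) = 0.6
```

The same routine would apply to \(L\mapsto d_*(\alpha ,D,L)\) and, for \(\alpha \le 0\), to \(D\mapsto d_*(\alpha ,D,L)\), with the bracket chosen inside the range where the crossing is known to exist.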

We next explore the effect of the water column depth \(L\). We first prepare two lemmas.

Lemma 5.3

For any given \(D>0\) and \(\alpha \in {\mathbb {R}}\), there holds

Proof

For any given \(\epsilon >0\), we restrict \(0<L<\epsilon \). Then, the monotonicity of \(g\) implies

Thus, according to our notation, it is easy to check that

By sending \(\epsilon \rightarrow 0\), one gets

This and (5.10) of Lemma 5.1 imply (5.14). \(\square \)

Lemma 5.4

For any given \(D>0\) and \(\alpha \in {\mathbb {R}}\), we have \(\lim _{L\rightarrow \infty }d_*(\alpha ,D,L)=d_\infty (\alpha ,D)\ge 0\), where \(d_\infty (\alpha ,D)\) is monotone non-increasing with respect to \(\alpha \in {\mathbb {R}}\). Moreover, if \(I_0(t)>0\) for \(t\in [0,T]\) and \(g(I)\ge aI^{\gamma }\) for \(I\in [0,\max _{t\in [0,T]}I_0(t)]\) for some positive constants \(a\) and \(\gamma \), then there exists a unique \(\alpha ^*>0\) such that \(d_\infty (\alpha ,D)>0\) if \(\alpha <\alpha ^*\) and \(d_\infty (\alpha ,D)=0\) if \(\alpha \ge \alpha ^*\).

Proof

Clearly, Theorem 1.5 asserts that \(d_*(\alpha ,D,L)\) is strictly monotone decreasing in \(L>0\) and \(\alpha \in {\mathbb {R}}\). In addition, by Lemma 5.1, \(\lim _{L\rightarrow \infty }d_*(\alpha ,D,L)=d_\infty (\alpha ,D)\) exists, and so \(d_\infty (\alpha ,D)\ge 0\) is monotone non-increasing with respect to \(\alpha \in {\mathbb {R}}\).

For simplicity, let us denote

Then by the monotonicity of the principal eigenvalue with respect to the weight function, we find

It follows from [34, Lemma 6.3] that

On the other hand, we have

From [26, Theorem 3.19], it follows that

In view of (5.15) to (5.18), together with the monotonicity of \(d_\infty (\alpha ,D)\) in \(\alpha \in {\mathbb {R}}\), it easily follows that \(d_\infty (\alpha ,D)>0\) if \(\alpha <\alpha ^*\), and \(d_\infty (\alpha ,D)=0\) if \(\alpha \ge \alpha ^*\) for some \(\alpha ^*>0\). \(\square \)

From Lemmas 5.3 and 5.4, given \(\alpha \in {\mathbb {R}}\) and \(D>0\), if \(d\in (d_\infty (\alpha ,D),g^*)\), then there exists a unique \(L_*:=L_*(d,\alpha ,D)\) such that \(d=d_*(\alpha ,D,L_*)\). With the help of the above two lemmas and Theorem 5.1, we obtain the following result.

Theorem 5.3

For any given \(D>0\) and \(\alpha \in {\mathbb {R}}\), there hold

where \(d_\infty (\alpha ,D)\) is a nonnegative, monotone non-increasing function of \(\alpha \in {\mathbb {R}}\), and if \(I_0(t)>0\) for \(t\in [0,T]\) and \(g(I)\ge aI^{\gamma }\) for \(I\in [0,\max _{t\in [0,T]}I_0(t)]\) for some positive constants \(a\) and \(\gamma \), then there exists a unique \(\alpha ^*>0\) such that \(d_\infty (\alpha ,D)>0\) if \(\alpha <\alpha ^*\) and \(d_\infty (\alpha ,D)=0\) if \(\alpha \ge \alpha ^*\). Moreover, the following hold:

- (i) If \(0<d\le d_\infty (\alpha ,D)\), then (5.5) has at least one positive \(T\)-periodic solution and the uniform persistence property holds for (5.5) for each \(L>0\).

- (ii) If \(d\in (d_\infty (\alpha ,D),g^*)\), then (5.5) has at least one positive \(T\)-periodic solution and the uniform persistence property holds for (5.5) provided that \(0<L<L_*\); while zero is the unique nonnegative \(T\)-periodic solution to (5.5) and the extinction property holds for (5.5) provided that \(L\ge L_*\).

- (iii) If \(d\ge g^*\), then zero is the unique nonnegative \(T\)-periodic solution to (5.5) and the extinction property holds for (5.5) for each \(L>0\).

Theorem 5.3 also shows that a critical water column depth may or may not exist, depending on the values of \(\alpha \) and \(d\); if it exists, it is unique. Assume that \(I_0(t)>0\) on \([0,T]\) and \(g(I)\ge aI^{\gamma }\) for \(I\in [0,\max _{t\in [0,T]}I_0(t)]\) for some positive constants \(a\) and \(\gamma \). Then, according to Theorem 5.3, there exists a unique \(\alpha ^*>0\) with the following properties. For every \(\alpha <\alpha ^*\), if \(0<d\le d_\infty (\alpha ,D)\), there is no critical water column depth and the phytoplankton can bloom for any water column depth; while if \(d_\infty (\alpha ,D)<d<g^*\), there exists a critical water column depth \(L_*\) such that the phytoplankton is uniformly persistent if and only if the water column depth \(L\) is less than \(L_*\). For every \(\alpha \ge \alpha ^*\), if \(0<d<g^*\), the critical water column depth exists and the phytoplankton persists if and only if \(L<L_*\). However, once \(d\) is suitably large (i.e., \(d\ge g^*\)), the phytoplankton cannot bloom for any water depth \(L\). We believe that these assertions remain true assuming only (5.3).

Lastly, let us discuss the existence of a critical turbulent diffusion coefficient. It turns out that this case is more subtle, as shown in [34] and [29] when \(I_0(t)=I_0\) is a positive constant. First of all, if \(\alpha \le 0\), Theorem 1.5 shows that, given \(L>0\), \(d_*(\alpha ,D,L)\) is strictly monotone decreasing in \(D>0\). Moreover, due to Theorem 1.1, it follows that

for any given \(L>0\) and \(\alpha \le 0\). On the other hand, by Lemma 5.1, we have \(\limsup _{D\rightarrow 0}d_*(\alpha ,D,L)\le g^*\). Hence, given \(L>0\) and \(\alpha \le 0\), we obtain

Making use of Theorem 1.3, given \(\alpha \in {\mathbb {R}}\) and \(L>0\), there holds

These facts tell us that, given \(\alpha \le 0\) and \(L>0\), for every \(d\in (\overline{g},g^*)\), there is a unique \(D_*=D_*(d,\alpha ,L)\) such that \(d=d_*(\alpha ,D_*,L)\).

When \(\alpha >0\), Theorem 1.2 states, for any fixed \(L>0\),

As a consequence of the above results and Theorem 5.1, we have

Theorem 5.4

For any given \(\alpha \in {\mathbb {R}}\) and \(L>0\), there holds

For any \(\alpha >0\) and \(L>0\), there holds

while for any \(\alpha \le 0\) and \(L>0\), we have

Moreover, given \(\alpha \le 0\) and \(L>0\), the following hold:

- (i) If \(0<d\le \overline{g}\), then (5.5) has at least one positive \(T\)-periodic solution and the uniform persistence property holds for (5.5) for each \(D>0\).

- (ii) If \(d\in (\overline{g},g^*)\), then (5.5) has at least one positive \(T\)-periodic solution and the uniform persistence property holds for (5.5) provided that \(0<D<D_*\); while zero is the unique nonnegative \(T\)-periodic solution to (5.5) and the extinction property holds for (5.5) provided that \(D\ge D_*\).

- (iii) If \(d\ge g^*\), then zero is the unique nonnegative \(T\)-periodic solution to (5.5) and the extinction property holds for (5.5) for each \(D>0\).

Theorem 5.4 shows that, for buoyant species (i.e., \(\alpha <0\)), a critical turbulent diffusion rate may or may not exist, and whenever it exists, it is unique. For sinking species (i.e., \(\alpha >0\)), however, our results say nothing about the existence of critical turbulent diffusion rates. In [34], when \(I_0(t)\) is a positive constant, the authors showed that there may exist two critical turbulent diffusion rates, a phenomenon first observed numerically in [29]. It would be interesting to determine whether this remains true when (5.4) holds.

References

Aleja, D., López-Gómez, J.: Some paradoxical effects of the advection on a class of diffusive equations in ecology. Discrete Contin. Dyn. Syst. B 19, 3031–3056 (2014)

Álvarez-Caudevilla, P., Du, Y., Peng, R.: Qualitative analysis of a cooperative reaction-diffusion system in spatiotemporally degenerate environment. SIAM J. Math. Anal. 46, 499–531 (2014)

Amann, H., López-Gómez, J.: A priori bounds and multiple solutions for superlinear indefinite elliptic problems. J. Differ. Equ. 146, 336–374 (1998)

Anton, I., López-Gómez, J.: The strong maximum principle for cooperative periodic-parabolic systems and the existence of principal eigenvalues. Proceedings First WCNA, Tampa (1992)

Berestycki, H., Hamel, F., Nadirashvili, N.: Elliptic eigenvalue problems with large drift and applications to nonlinear propagation phenomena. Commun. Math. Phys. 253, 451–480 (2005)

Berestycki, H., Nirenberg, L., Varadhan, S.R.S.: The principal eigenvalue and maximum principle for second-order elliptic operators in general domains. Commun. Pure Appl. Math. 47, 47–92 (1994)

Berestycki, H., Rossi, L.: On the principal eigenvalue of elliptic operators in \(\mathbb{R}^N\) and applications. J. Eur. Math. Soc. 8, 195–215 (2006)

Bucur, D., Daners, D.: An alternative approach to the Faber–Krahn inequality for Robin problems. Cal. Var. Partial Differ. Equ. 37, 75–86 (2010)

Cantrell, R.S., Cosner, C.: Spatial Ecology via Reaction–Diffusion Equations, Series in Mathematical and Computational Biology. Wiley, Chichester (2003)

Chen, X.F., Lou, Y.: Principal eigenvalue and eigenfunctions of an elliptic operator with large advection and its application to a competition model. Indiana Univ. Math. J. 57, 627–658 (2008)

Chen, X.F., Lou, Y.: Effects of diffusion and advection on the smallest eigenvalue of an elliptic operator and their applications. Indiana Univ. Math. J. 61, 45–80 (2012)

Dancer, E.N.: On the principal eigenvalue of linear cooperating elliptic systems with small diffusion. J. Evol. Equ. 9, 419–428 (2009)

Dancer, E.N.: On the least point of the spectrum of certain cooperative linear systems with a large parameter. J. Differ. Equ. 250, 33–38 (2011)

Dancer, E.N., Hess, P.: Behaviour of a semilinear periodic-parabolic problem when a parameter is small. In: Functional analytic methods for partial differential equations, Lecture Notes in Mathematics, vol. 1450. Springer, Berlin, pp. 12–19

Daners, D.: Eigenvalue problems for a cooperative system with a large parameter. Adv. Nonlinear Studies 13, 137–148 (2013)

Daners, D.: A Faber–Krahn inequality for Robin problems in any space dimension. Math. Ann. 335, 767–785 (2006)

Daners, D., Kennedy, J.: Uniqueness in the Faber–Krahn inequality for Robin problems. SIAM J. Math. Anal. 39, 1191–1207 (2007)

Daners, D., Koch Medina, P.: Abstract evolution equations, periodic problems and applications, Pitman Research Notes in Mathematics Series, vol. 279. Longman Scientific & Technical, Harlow (copublished in the United States with Wiley, New York) (1992)

Daners, D., López-Gómez, J.: The singular perturbation problem for the periodic-parabolic logistic equation with indefinite weight functions. J. Dyn. Differ. Equ. 6, 659–670 (1994)

Devinatz, A., Ellis, R., Friedman, A.: The asymptotic behaviour of the first real eigenvalue of the second-order elliptic operator with a small parameter in the higher derivatives, II. Indiana Univ. Math. J. 23, 991–1011 (1973/1974)

Devinatz, A., Friedman, A.: Asymptotic behavior of the principal eigenfunction for a singularly perturbed Dirichlet problem. Indiana Univ. Math. J. 27, 143–157 (1978)

Du, Y.: Order Structure and Topological Methods in Nonlinear Partial Differential Equations. Maximum Principles and Applications, vol. 1. World Scientific, Singapore (2006)

Du, Y., Hsu, S.-B.: Concentration phenomena in a nonlocal quasi-linear problem modeling phytoplankton: I. Existence. SIAM J. Math. Anal. 40, 1419–1440 (2008)

Du, Y., Hsu, S.-B.: Concentration phenomena in a nonlocal quasi-linear problem modeling phytoplankton: II. Limiting profile. SIAM J. Math. Anal. 40, 1441–1470 (2008)

Du, Y., Hsu, S.-B.: On a nonlocal reaction-diffusion problem arising from the modeling of phytoplankton growth. SIAM J. Math. Anal. 42, 1305–1333 (2010)

Du, Y., Mei, L.F.: On a nonlocal reaction–diffusion–advection equation modelling phytoplankton dynamics. Nonlinearity 24, 319–349 (2011)

Du, Y., Peng, R.: The periodic logistic equation with spatial and temporal degeneracies. Trans. Am. Math. Soc. 364, 6039–6070 (2012)

Du, Y., Peng, R.: Sharp spatiotemporal patterns in the diffusive time-periodic logistic equation. J. Differ. Equ. 254, 3794–3816 (2013)

Ebert, U., Arrayas, M., Temme, N., Sommeijer, B., Huisman, J.: Critical condition for phytoplankton blooms. Bull. Math. Biol. 63, 1095–1124 (2001)

Friedman, A.: The asymptotic behaviour of the first real eigenvalue of a second order elliptic operator with a small parameter in the highest derivatives. Indiana Univ. Math. J. 22, 1005–1015 (1973)

Gerla, D.J., Wolf, W.M., Huisman, J.: Photoinhibition and the assembly of light-limited phytoplankton communities. Oikos 120, 359–368 (2011)

Grebenkov, D.S., Nguyen, B.-T.: Geometrical structure of Laplacian eigenfunctions. SIAM Rev. 55, 601–667 (2013)

Hess, P.: Periodic-parabolic Boundary Value Problems and Positivity, Pitman Research Notes in Mathematics, vol. 247. Longman Sci. Tech., Harlow (1991)

Hsu, S.-B., Lou, Y.: Single phytoplankton species growth with light and advection in a water column. SIAM J. Appl. Math. 70, 2942–2974 (2010)

Huisman, J., Arrayas, M., Ebert, U., Sommeijer, B.: How do sinking phytoplankton species manage to persist? Am. Nat. 159, 245–254 (2002)

Huisman, J., van Oostveen, P., Weissing, F.J.: Species dynamics in phytoplankton blooms: incomplete mixing and competition for light. Am. Nat. 154, 46–67 (1999)

Húska, J.: Exponential separation and principal Floquet bundles for linear parabolic equations on general bounded domains: nondivergence case. Trans. Am. Math. Soc. 360, 4639–4679 (2008)

Hutson, V., López-Gómez, J., Mischaikow, K., Vickers, G.: Limiting behaviour for a competing species problem with diffusion. WSSIAA 4, 343–358 (1995)

Hutson, V., Mischaikow, K., Poláčik, P.: The evolution of dispersal rates in a heterogeneous time-periodic environment. J. Math. Biol. 43, 501–533 (2001)

Hutson, V., Shen, W., Vickers, G.T.: Estimates for the principal spectrum point for certain time-dependent parabolic operators. Proc. Am. Math. Soc. 129, 1669–1679 (2000)

Ishii, H., Takagi, I.: Global stability of stationary solutions to a nonlinear diffusion equation in phytoplankton dynamics. J. Math. Biol. 16, 1–24 (1982/1983)

Kolokolnikov, T., Ou, C.H., Yuan, Y.: Phytoplankton depth profiles and their transitions near the critical sinking velocity. J. Math. Biol. 59, 105–122 (2009)

Ladyzenskaja, O.A., Solonnikov, V.A., Uralceva, N.N.: Linear and Quasi-Linear Equations of Parabolic Type. AMS, Providence (1968)

Lieberman, G.M.: Second Order Parabolic Differential Equations. World Scientific Publ. Co. Inc, River Edge (1996)

López-Gómez, J.: Classifying smooth supersolutions for a general class of elliptic boundary value problems. Adv. Differ. Equ. 8, 1025–1042 (2003)

López-Gómez, J.: Linear Second Order Elliptic Operators. WSP, Singapore (2013)

López-Gómez, J., Montenegro, M.: The effects of transport on the maximum principle. J. Math. Anal. Appl. 403, 547–557 (2013)

Nadin, G.: The principal eigenvalue of a space-time periodic parabolic operator. Ann. Mat. Pura Appl. 188, 269–295 (2009)

Peng, R.: Long-time behavior of a cooperative periodic-parabolic system: temporal degeneracy versus spatial degeneracy. Calc. Var. Partial Differ. Equ. (2014). doi:10.1007/s00526-014-0745-6

Peng, R., Zhao, X.-Q.: A reaction-diffusion SIS epidemic model in a time-periodic environment. Nonlinearity 25, 1451–1471 (2012)

Peng, R., Zhao, X.-Q.: A nonlocal and periodic reaction–diffusion–advection model of a single phytoplankton species (2014, preprint)

Protter, M.H., Weinberger, H.F.: Maximum Principles in Differential Equations. Springer, New York (1984)

Ryabov, A.B., Rudolf, L., Blasius, B.: Vertical distribution and composition of phytoplankton under the influence of an upper mixed layer. J. Theor. Biol. 263, 120–133 (2010)

Shigesada, N., Okubo, A.: Analysis of the self-shading effect on algal vertical distribution in natural waters. J. Math. Biol. 12, 311–326 (1981)

Walter, W.: A theorem on elliptic differential inequalities and applications to gradient bounds. Math. Z. 200, 293–299 (1989)

Wentzell, A.D.: On the asymptotic behavior of the first eigenvalue of a second order differential operator with small parameter in higher derivatives. Theory Probab. Appl. 20, 599–602 (1975)

Zhao, X.-Q.: Dynamical Systems in Population Biology. Springer, New York (2003)

Acknowledgments

We are very grateful to the anonymous referee for careful reading and helpful comments on our original manuscript, especially for pointing out to us several relevant references.

Additional information

Communicated by P. Rabinowitz.

R. Peng was partially supported by NSF of China (11271167, 11171319), the Program for New Century Excellent Talents in University (NCET-11-0995), the Priority Academic Program Development of Jiangsu Higher Education Institutions, and the Natural Science Fund for Distinguished Young Scholars of Jiangsu Province (BK20130002); X.-Q. Zhao was partially supported by the NSERC of Canada and the URP fund of Memorial University.

About this article

Cite this article

Peng, R., Zhao, XQ. Effects of diffusion and advection on the principal eigenvalue of a periodic-parabolic problem with applications. Calc. Var. 54, 1611–1642 (2015). https://doi.org/10.1007/s00526-015-0838-x

DOI: https://doi.org/10.1007/s00526-015-0838-x