Abstract

In this paper, the global matrix-projective synchronization of delayed fractional-order competitive neural networks with different time scales is investigated for the first time by means of adaptive control. First, fractional-order global matrix-projective synchronization is defined. Then, a sufficient condition for matrix-projective synchronization under an adaptive controller is obtained, and its validity is proved by combining the fractional-order Barbalat lemma with a suitable Lyapunov–Krasovskii functional and some fractional-order differential inequalities. All unknown parameters are identified, and their estimates converge to fixed constants. Finally, a numerical example with simulations demonstrates the feasibility and efficiency of the proposed synchronization analysis.


1 Introduction

Fractional calculus, which deals with integration and differentiation of arbitrary non-integer order, has become a powerful mathematical tool for studying practical problems [1]. Fractional-order systems have strong memory and hereditary characteristics, and the order of the derivative is an additional parameter that can improve system performance, so they can describe the dynamical behavior and internal structure of many practical problems better than integer-order systems. So far, many valuable results on fractional-order dynamical systems have been obtained and widely applied in areas such as mathematical physics [2,3,4,5,6,7,8,9,10,11,12], optimal control theory [13], financial problems [14], anomalous diffusion [15], secure communication [16], biological systems [17], and neural networks [18,19,20,21,22,23,24,25].

In the past decades, well-known neural networks such as the Hopfield neural network, the Cohen–Grossberg neural network, the cellular neural network, and the bidirectional associative memory neural network have appeared one after another to solve practical problems. These networks have a single time scale, which means that they only consider the state variables of neuron activity. In a dynamical neural network, however, the synaptic weights also change with time owing to the learning process, and the variation of the connection weights may affect the dynamics. Between 1996 and 2010, Meyer-Bäse and co-workers first presented the competitive neural network with different time scales and analyzed its stability [26,27,28]. This model combines the additive activation dynamics of the Hopfield neural network with a competitive learning rule for synaptic modification, so it reduces to the Hopfield neural network when learning is absent. The network has two types of state variables, the short-term memory (STM) and the long-term memory (LTM), which describe the rapid neural activity and the slow unsupervised synaptic modification, respectively. The competitive neural network therefore has two time scales: the rapid change of the state and the slow change of the synapses under external stimulation. Because of its wide applications in image processing, signal processing, pattern recognition, and control theory, many researchers have explored the dynamics of delayed competitive neural networks with different time scales, including global asymptotic stability [29], existence and exponential stability [30], multistability and instability [31, 32], adaptive synchronization [33], cluster synchronization [34], exponential synchronization [35], and finite-time synchronization [36].

However, all of the above works focus on integer-order competitive neural networks. Recently, with the introduction of fractional calculus, fractional-order competitive neural networks have attracted increasing attention. In [37,38,39], multistability, global stability, and complete synchronization of fractional-order competitive neural networks are studied. In [40], Pratap and co-workers investigate finite-time synchronization of delayed fractional-order memristive competitive neural networks. These studies have advanced the field, but research on fractional-order competitive neural networks is still at an exploratory stage.

Projective synchronization, in which the drive and response systems are synchronized up to a scaling factor, can be applied in many areas of physics and engineering, especially in communication, for example secure communication, signal encryption, affine ciphers, image encryption, and text encryption [41,42,43]. With the development of fractional calculus, projective synchronization of fractional-order neural networks has also attracted much attention. In [44], the authors used the comparison principle to design a suitable controller and achieved projective synchronization of delayed fractional-order neural networks. In [45, 46], projective and quasi-projective synchronization of fractional-order neural networks in the complex domain were explored. In [47, 48], new sufficient conditions and suitable controllers were given to ensure projective synchronization of delayed fractional-order memristor-based neural networks. In [49], Wu and co-workers introduced new sliding mode control laws and achieved finite-time projective synchronization of non-identical fractional-order neural networks.

However, most of the existing literature, including the works above, concentrates on projective synchronization in which the scaling factor is a fixed constant or a diagonal matrix, and such a simple scaling factor cannot guarantee high security in communication. In addition, because of the non-local property and the weakly singular kernel of fractional-order derivatives, fractional-order competitive neural networks give a better and more comprehensive description of the dynamical behavior of neurons. In this paper, we therefore study matrix-projective synchronization of fractional-order competitive neural networks. In particular, the complexity and unpredictability of the matrix scaling factor can effectively enhance the security of secure communication.

Based on the above discussion, the main contributions of this paper are summarized as follows: (1) Our work extends the projective scaling factor to an arbitrary matrix and proposes matrix-projective synchronization for delayed fractional-order competitive neural networks with different time scales. In practical applications, fractional matrix-projective synchronization offers strong real-time performance and high security, which allows more secure image and text encryption in secure communication. (2) Most existing works on chaotic synchronization of neural networks assume that the system parameters are known in advance. In many applications, however, the parameters are not exactly known, and these uncertainties can destroy the synchronization. To solve this problem, we use an adaptive control scheme to achieve matrix-projective synchronization of delayed fractional-order competitive neural networks with unknown parameters. (3) Time delays always occur in signal transmission between neurons. Owing to the finite propagation velocity of neural signals and the finite switching speed of amplifiers (neurons), time delays are omnipresent in artificial neural networks and, in particular, in their electronic hardware implementations. Time delays make the dynamical behavior of the system more complicated and can destroy stable equilibria and the synchronization process. Time delay is therefore included in our model, which makes it more consistent with actual competitive neural networks.

In Sect. 2, some lemmas of fractional calculus are introduced and the n-dimensional delayed fractional-order competitive neural network with different time scales is constructed. In Sect. 3, an adaptive feedback controller is designed to achieve matrix-projective synchronization of this network. In Sect. 4, as an application, matrix-projective synchronization of a 2-dimensional fractional-order competitive neural network is achieved and the estimates of its unknown parameters converge to fixed constants. Section 5 summarizes the whole work.

2 Preliminaries and system description

Definition 1

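[1] The fractional integral of order \(\alpha > 0\) of an integrable function \(f:\left[ {t_{0} , + \infty } \right) \to {\mathbb{R}}\) is defined by

\[ {}_{{t_{0} }} I_{t}^{\alpha } f\left( t \right) = \frac{1}{{\varGamma \left( \alpha \right)}}\int_{{t_{0} }}^{t} {\left( {t - \xi } \right)^{\alpha - 1} f\left( \xi \right){\text{d}}\xi } , \qquad (1) \]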

where \({\mathbb{R}}\) is the space of real number and \(\varGamma \left( \alpha \right) = \int_{0}^{ + \infty } {e^{ - t} t^{\alpha - 1} } {\text{d}}t\) is Gamma function.

Definition 2

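[1] The Caputo derivative of order \(\alpha\) of a function \(f\left( t \right) \in C^{n} \left( {\left[ {t_{0} , + \infty } \right),{\mathbb{R}}} \right)\) is defined by

\[ {}_{{t_{0} }}^{C} D_{t}^{\alpha } f\left( t \right) = \frac{1}{{\varGamma \left( {n - \alpha } \right)}}\int_{{t_{0} }}^{t} {\frac{{f^{\left( n \right)} \left( \xi \right)}}{{\left( {t - \xi } \right)^{\alpha - n + 1} }}{\text{d}}\xi } , \qquad (2) \]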

where \(C^{n} \left( {\left[ {t_{0} , + \infty } \right),{\mathbb{R}}} \right)\) represents the space of n times continuously differentiable functions from \(\left[ {t_{0} , + \infty } \right)\) to \({\mathbb{R}}\), \(f^{\left( n \right)}\) represents the nth-order derivative of \(f\left( t \right)\), and \(n\) is the positive integer satisfying \(n - 1 < \alpha < n\).

Especially, when \(0 < \alpha < 1\), \({}_{{t_{0} }}^{C} D_{t}^{\alpha } f\left( t \right) = \frac{1}{{\varGamma \left( {1 - \alpha } \right)}}\int_{{t_{0} }}^{t} {\frac{{f^{'} \left( \xi \right)}}{{\left( {t - \xi } \right)^{\alpha } }}{\text{d}}\xi }\). If we choose derivative order \(\alpha = 1\), the Caputo derivative operation \({}_{{t_{0} }}^{C} D_{t}^{\alpha } f\left( t \right)\) coincides with the integer-order derivative \(\frac{{{\text{d}}f\left( t \right)}}{{{\text{d}}t}}\).
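As an illustration of Definition 2 for \(0 < \alpha < 1\), the Caputo derivative can be approximated on a uniform grid with the classical L1 scheme, which replaces \(f'\) by a difference quotient on each grid cell and integrates the kernel exactly. The following Python sketch is not part of the original analysis; the function name `caputo_l1`, the step size, and the test function \(f(t) = t^{2}\) with \(t_{0} = 0\) are assumptions chosen for illustration. The result is compared with the closed form \({}_{0}^{C} D_{t}^{\alpha } t^{2} = 2t^{2 - \alpha } /\varGamma \left( {3 - \alpha } \right)\).

```python
import math
import numpy as np

def caputo_l1(f_vals, h, alpha):
    """L1 approximation of the Caputo derivative (0 < alpha < 1) on a uniform grid.

    f_vals[k] holds f(t0 + k*h). Entry 0 of the result is set to 0,
    because the defining integral is taken over an empty interval there.
    """
    f_vals = np.asarray(f_vals, dtype=float)
    n_pts = f_vals.size
    df = np.diff(f_vals)                                # f_{k+1} - f_k
    j = np.arange(n_pts)
    a = (j + 1.0) ** (1 - alpha) - j ** (1 - alpha)     # L1 weights a_j
    scale = h ** (-alpha) / math.gamma(2 - alpha)
    out = np.zeros(n_pts)
    for n in range(1, n_pts):
        # sum over k = 0..n-1 of a_{n-1-k} * (f_{k+1} - f_k)
        out[n] = scale * np.dot(a[n - 1::-1], df[:n])
    return out

alpha, h = 0.5, 1e-3
t = np.arange(0.0, 1.0 + h / 2, h)
approx = caputo_l1(t ** 2, h, alpha)
exact = 2.0 / math.gamma(3 - alpha) * t ** (2 - alpha)
max_err = float(np.max(np.abs(approx - exact)))
print(f"maximum deviation from the closed form: {max_err:.2e}")
```

For smooth functions the L1 scheme is known to converge with order \(2 - \alpha\) in the step size, so halving `h` should reduce `max_err` accordingly.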

Lemma 1

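[1] If the Caputo derivative \({}_{{t_{0} }}^{C} D_{t}^{\alpha } f\left( t \right)\) is integrable, then

\[ {}_{{t_{0} }} I_{t}^{\alpha } \, {}_{{t_{0} }}^{C} D_{t}^{\alpha } f\left( t \right) = f\left( t \right) - \sum\limits_{k = 0}^{n - 1} {\frac{{f^{\left( k \right)} \left( {t_{0} } \right)}}{k!}\left( {t - t_{0} } \right)^{k} } . \qquad (3) \]

In particular, for \(0 < \alpha < 1\), one obtains

\[ {}_{{t_{0} }} I_{t}^{\alpha } \, {}_{{t_{0} }}^{C} D_{t}^{\alpha } f\left( t \right) = f\left( t \right) - f\left( {t_{0} } \right). \qquad (4) \]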

Lemma 2

[2] If \(x\left( t \right) \in {\mathbb{R}}\) is continuously differentiable, then for any time instant \(t \ge 0\), the relationship \(\frac{1}{2}{}_{a}^{C} D_{t}^{\alpha } \left[ {x^{2} \left( t \right)} \right] \le x\left( t \right){}_{a}^{C} D_{t}^{\alpha } x\left( t \right)\), \(0 < \alpha < 1\), holds.
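The inequality of Lemma 2 can be observed numerically. The Python sketch below is an illustration only: it uses the L1 discretization of the Caputo derivative with lower terminal \(a = 0\), and the helper name `caputo_l1_last`, the test signal, the order \(\alpha = 0.8\), and the grid are assumptions. It evaluates the gap \(x\left( t \right){}_{0}^{C} D_{t}^{\alpha } x\left( t \right) - \frac{1}{2}{}_{0}^{C} D_{t}^{\alpha } \left[ {x^{2} \left( t \right)} \right]\) at every grid point and records its minimum.

```python
import math
import numpy as np

def caputo_l1_last(x, h, alpha):
    """L1 approximation of the Caputo derivative at the last grid point of x."""
    n = len(x) - 1
    j = np.arange(n)
    a = (j + 1.0) ** (1 - alpha) - j ** (1 - alpha)   # L1 weights a_0..a_{n-1}
    # sum over k = 0..n-1 of a_{n-1-k} * (x_{k+1} - x_k)
    return h ** (-alpha) / math.gamma(2 - alpha) * np.dot(a[::-1], np.diff(x))

alpha, h = 0.8, 0.01
t = np.arange(0.0, 10.0 + h / 2, h)
x = np.sin(3 * t) * np.exp(-0.2 * t) + 0.5            # illustrative test signal

# gap(t_n) = x(t_n) * D^alpha x(t_n) - 0.5 * D^alpha [x^2](t_n)
gap = np.array([
    x[n] * caputo_l1_last(x[: n + 1], h, alpha)
    - 0.5 * caputo_l1_last(x[: n + 1] ** 2, h, alpha)
    for n in range(1, len(x))
])
min_gap = float(gap.min())
print(f"smallest gap on the grid: {min_gap:.3e}")
```

With the L1 weights the discrete gap can be written as a weighted sum of squares \(\left( {x_{n} - x_{k} } \right)^{2}\) with positive weights, so it is non-negative up to rounding, which mirrors the continuous statement.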

Lemma 3

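[3] For any given vectors \(a,b \in {\mathbb{R}}^{N}\) and any positive definite matrix \(\varOmega \in {\mathbb{R}}^{N \times N}\), the following relationship holds:

\[ 2a^{T} b \le a^{T} \varOmega a + b^{T} \varOmega^{ - 1} b. \qquad (5) \]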

Lemma 4

[4] (Fractional-order Barbalat lemma) Let \(V\left( t \right) \in C^{1} \left( {{\mathbb{R}}^{ + } } \right)\) be a bounded, uniformly continuous function with \(\mathop {\lim }\nolimits_{t \to \infty } I^{\alpha } V\left( t \right) = L\), where \(L\) is an arbitrary constant. If \(V\left( t \right)\) is a positive function, then \(V\left( t \right)\) converges to zero as \(t\) goes to infinity.
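Among the lemmas above, the matrix inequality of Lemma 3 is easy to sanity-check numerically. The Python sketch below assumes that the lemma takes the standard form \(2a^{T} b \le a^{T} \varOmega a + b^{T} \varOmega^{ - 1} b\); the dimension, the random seed, and the way \(\varOmega\) is generated are illustrative assumptions. The gap between the two sides equals \(\left\| {\varOmega^{1/2} a - \varOmega^{ - 1/2} b} \right\|^{2}\), so it vanishes for \(b = \varOmega a\).

```python
import numpy as np

rng = np.random.default_rng(0)
N = 4
M = rng.standard_normal((N, N))
Omega = M @ M.T + N * np.eye(N)      # symmetric positive definite by construction
Omega_inv = np.linalg.inv(Omega)

def lemma3_gap(a, b):
    """Right-hand side minus left-hand side of the assumed inequality."""
    return a @ Omega @ a + b @ Omega_inv @ b - 2 * a @ b

# Random trials: the gap should never be negative.
gaps = [lemma3_gap(rng.standard_normal(N), rng.standard_normal(N))
        for _ in range(1000)]
min_random_gap = min(gaps)

# Equality case b = Omega a: the gap should vanish up to rounding.
a0 = rng.standard_normal(N)
equality_gap = lemma3_gap(a0, Omega @ a0)
print(min_random_gap, equality_gap)
```

In Lyapunov-type arguments this inequality is typically used to split a cross term into two quadratic forms, with \(\varOmega\) left as a free design matrix.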

The Caputo delayed fractional-order competitive network with different time scales is described by the following state equations

where \(N\) and \(P\) denote the number of STM states and the number of constant external stimuli, respectively; \(X_{i} \left( t \right)\) represents the current activity level of the ith neuron; \(F_{i} \left( \cdot \right)\) is the output of the neuron; \(M_{ij} \left( \cdot \right)\) represents the synaptic efficiency; \(\sigma_{j}\) is the constant external stimulus; \(A_{i} > 0\) is the time constant of the neuron; \(B_{i}\) represents the strength of the external stimulus; \(C_{i} > 0\) is the disposable scaling constant; \(D_{ik}\) and \(D_{ik}^{\tau }\) represent, respectively, the connection weight and the synaptic weight of the delayed feedback between the ith and kth neurons; \(\varepsilon > 0\) is the time scale of the STM state [27]; and \(\tau > 0\) is the time delay. Additionally, \(\varepsilon ,\tau\) and \(\sigma_{j}\) are assumed to be known a priori, while the parameters \(A_{i} ,B_{i} ,C_{i} ,D_{ik} ,D_{ik}^{\tau }\) are unknown.

To facilitate the subsequent synchronization analysis, we rewrite system (6) in the following form. Let

where \(M_{i} \left( t \right) = \left( {M_{i1} \left( t \right),M_{i2} \left( t \right), \ldots ,M_{ip} \left( t \right)} \right)^{T} ,\)\(\sigma = \left( {\sigma_{1} ,\sigma_{2} , \ldots ,\sigma_{p} } \right)^{T} ,\) then system (6) is written as

where \(\left| \sigma \right|^{2} = \sigma_{1}^{2} + \sigma_{2}^{2} + \cdots + \sigma_{p}^{2}\) is a constant. Without loss of generality, the input stimulus vector \(\sigma\) can be normalized to unit magnitude, \(\left| \sigma \right|^{2} = 1\). System (8) then simplifies to

and its compact form is

where \(A = diag\left( {A_{1} ,A_{2} , \ldots ,A_{N} } \right),\)\(B = diag\left( {B_{1} ,B_{2} , \ldots ,B_{N} } \right),\)\(C = diag\left( {C_{1} ,C_{2} , \ldots ,C_{N} } \right),\)\(D = \left( {D_{ik} } \right)_{N \times N} ,\)\(D^{\tau } = \left( {D_{ik}^{\tau } } \right)_{N \times N} ,\)\(X\left( t \right) = \left( {X_{1} \left( t \right),X_{2} \left( t \right), \ldots ,X_{N} \left( t \right)} \right)^{T} ,\)\(S\left( t \right) = \left( {S_{1} \left( t \right),S_{2} \left( t \right), \ldots ,S_{N} \left( t \right)} \right)^{T} ,\)\(F\left( {X\left( t \right)} \right) = \left( {F_{1} \left( {X_{1} \left( t \right)} \right),F_{2} \left( {X_{2} \left( t \right)} \right), \ldots ,F_{N} \left( {X_{N} \left( t \right)} \right)} \right)^{T} ,\)\(F\left( {X\left( {t - \tau } \right)} \right) = \left( {F_{1} \left( {X_{1} \left( {t - \tau } \right)} \right),F_{2} \left( {X_{2} \left( {t - \tau } \right)} \right), \ldots ,F_{N} \left( {X_{N} \left( {t - \tau } \right)} \right)} \right)^{T} .\)

Throughout this paper, we will focus on studying the matrix-projective synchronization problem of (10), so the response system is designed as

where \(Y\left( t \right) = \left( {Y_{1} \left( t \right),Y_{2} \left( t \right), \ldots ,Y_{N} \left( t \right)} \right)^{T}\) and \(R\left( t \right) = \left( {R_{1} \left( t \right),R_{2} \left( t \right), \ldots ,R_{N} \left( t \right)} \right)^{T}\) are the state vectors of the response system; \(\hat{A} = {\text{diag}}\left( {\hat{A}_{1} ,\hat{A}_{2} , \ldots ,\hat{A}_{N} } \right),\) \(\hat{B} = {\text{diag}}\left( {\hat{B}_{1} ,\hat{B}_{2} , \ldots ,\hat{B}_{N} } \right),\) \(\hat{C} = {\text{diag}}\left( {\hat{C}_{1} ,\hat{C}_{2} , \ldots ,\hat{C}_{N} } \right),\) \(\hat{D} = \left( {\hat{D}_{ik} } \right)_{N \times N}\) and \(\hat{D}^{\tau } = \left( {\hat{D}_{ik}^{\tau } } \right)_{N \times N}\) are, respectively, the estimates of the unknown matrices \(A,B,C,D\) and \(D^{\tau }\); and \(U_{1} \left( t \right)\) and \(U_{2} \left( t \right)\) are the control inputs.

Assumption 1

There exist positive constants \(F_{i} > 0\) that make the output functions \(f_{i} ( \cdot )\) meet Lipschitz conditions:

3 Fractional global matrix-projective synchronization analysis

In this part, by using the adaptive control techniques, we will explore the matrix-projective synchronization problem for two delayed fractional competitive neural networks (10) and (11).

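The error states of matrix-projective synchronization are

\[ e\left( t \right) = Y\left( t \right) - \varLambda X\left( t \right),\quad w\left( t \right) = R\left( t \right) - \varLambda S\left( t \right), \qquad (13) \]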

where \(\varLambda = \left( {\varLambda_{ij} } \right)_{N \times N}\) is an arbitrary constant projective matrix whose entries in each row are not all zero, \(e = \left( {e_{1} ,e_{2} , \ldots ,e_{N} } \right)^{T}\), and \(w = \left( {w_{1} ,w_{2} , \ldots ,w_{N} } \right)^{T}\). Next, we give the definition of matrix-projective synchronization.

Definition 3

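Systems (10) and (11) are said to achieve matrix-projective synchronization if their solutions satisfy

\[ \mathop {\lim }\limits_{t \to + \infty } \left\| {Y\left( t \right) - \varLambda X\left( t \right)} \right\| = 0,\quad \mathop {\lim }\limits_{t \to + \infty } \left\| {R\left( t \right) - \varLambda S\left( t \right)} \right\| = 0. \qquad (14) \]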

Theorem 1

The two delayed fractional competitive neural networks (10) and (11) achieve matrix-projective synchronization under the following controller

where the feedback strengths \(W = {\text{diag}}\left( {W_{1} ,W_{2} , \ldots ,W_{N} } \right)\) and \(V = {\text{diag}}\left( {V_{1} ,V_{2} , \ldots ,V_{N} } \right)\) are adjusted according to the update rules

and

where \(\lambda_{i} ,\zeta_{i} ,\alpha_{i} ,\beta_{ik} ,\gamma_{ik} ,\xi_{i} ,\eta_{i}\) are arbitrary positive constants.

Proof

Taking Caputo derivative of both sides of error function (13), respectively, and using (10) and (11), one can obtain the error system as

Now, we introduce the Lyapunov–Krasovskii functional as

where \(Q\) is a positive definite matrix and \(\phi_{i} ,\varphi_{i}\) are constants to be determined. Taking the Caputo derivative of \(V\left( t \right)\) along the trajectories of system (18), using Lemma 2, and substituting the controller (15), one gets

According to Lemma 3 and Assumption 1, the following inequality holds

where \(G = {\text{diag}}\left( {G_{1} ,G_{2} , \ldots ,G_{N} } \right)\). Substituting (21)–(24) into (20) gives

Let

then one has

After taking the fractional integral on both sides of (29), we have

Using definition (1), Lemma 1 and Eq. (30), we can get

so \(V\left( t \right) \le V\left( {t_{0} } \right)\) for all \(t \ge t_{0}\). Together with (19), this implies that \(e_{i} \left( t \right),w_{i} \left( t \right),W_{i} \left( t \right),V_{i} \left( t \right),\) \(\hat{A}_{i} \left( t \right),\hat{D}_{ik} \left( t \right),\hat{D}_{ik}^{\tau } \left( t \right),\hat{B}_{i} \left( t \right)\) and \(\hat{C}_{i} \left( t \right)\) are bounded on \(t \ge t_{0}\). Then, according to the error system (18), \({}_{0}^{C} D_{t}^{\alpha } \left( {e^{T} \left( t \right)e\left( t \right) + w^{T} \left( t \right)w\left( t \right)} \right)\) is bounded, that is, \({}_{0}^{C} D_{t}^{\alpha } \left( {e^{T} \left( t \right)e\left( t \right) + w^{T} \left( t \right)w\left( t \right)} \right) \le \varXi\), where \(\varXi\) is a positive constant.

Next, we will prove \(e^{T} \left( t \right)e\left( t \right) + w^{T} \left( t \right)w\left( t \right)\) is uniformly continuous. For \(t_{0} \le T_{1} < T_{2}\), by using definition (1) and Lemma 1, we get

where \(\left| {T_{2} - T_{1} } \right| < \delta \left( \varepsilon \right) = \left( {\frac{{\varepsilon \varGamma \left( {\alpha + 1} \right)}}{2\varXi }} \right)^{{\frac{1}{\alpha }}}\). So \(e^{T} \left( t \right)e\left( t \right) + w^{T} \left( t \right)w\left( t \right)\) is uniformly continuous. In addition, from (29), we know there is a non-negative function \(m\left( t \right)\) that satisfies

After taking the fractional integral on both sides of (33), we get

i.e.,

Hence, \(\mathop {\lim }\limits_{t \to + \infty } \left( {e^{T} \left( t \right)e\left( t \right) + w^{T} \left( t \right)w\left( t \right)} \right)\) is bounded. According to Lemma 4, one can conclude that \(e^{T} \left( t \right)e\left( t \right) + w^{T} \left( t \right)w\left( t \right) \to 0\), i.e.,

Then, according to Definition 3 of matrix-projective synchronization, the trivial solution of error system (18) is globally asymptotically stable, so drive system (10) and response system (11) achieve matrix-projective synchronization. At the same time, by using (17), we get \({}_{0}^{C} D_{t}^{\alpha } \hat{A}_{i} ,\) \({}_{0}^{C} D_{t}^{\alpha } \hat{B}_{i} ,\) \({}_{0}^{C} D_{t}^{\alpha } \hat{C}_{i} ,\) \({}_{0}^{C} D_{t}^{\alpha } \hat{D}_{ik} ,\) \({}_{0}^{C} D_{t}^{\alpha } \hat{D}_{ik}^{\tau } \to 0\), which means that \(\hat{A}_{i} ,\hat{B}_{i} ,\hat{C}_{i} ,\hat{D}_{ik}\) and \(\hat{D}_{ik}^{\tau }\) tend to fixed constants.

Theorem 1 and its proof process constitute the theoretical framework of matrix-projective synchronization for delayed fractional-order competitive neural network with different time scales and unknown parameters.

Remark 1

Substituting controller (15) into (11), the response system (11) becomes

According to drive system (10), controlled response system (37), and error system (18), one can study the behavior of matrix-projective synchronization between the fractional competitive neural networks (10) and (11).

In particular, because matrix-projective synchronization is very general, it reduces to the following special synchronization types for particular choices of the projective matrix and controller.

Remark 2

If the projective matrix \(\varLambda = I,\) systems (10) and (11) achieve complete synchronization; if \(\varLambda = - I,\) they achieve anti-synchronization; if \(\varLambda = mI\) with \(m \in {\mathbb{R}}\) and \(m \ne 0, \pm 1,\) they achieve projective synchronization; if \(\varLambda = {\text{diag}}\left( {m_{1} ,m_{2} , \ldots ,m_{n} } \right)\) with \(m_{i} \ne m_{j}\) for at least one pair \(i \ne j\), they achieve modified projective synchronization.
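The special cases of Remark 2 can be made concrete with a short Python sketch. It is an illustration only and assumes the error convention \(e = Y - \varLambda X\), \(w = R - \varLambda S\); the function names `projective_errors` and `synchronization_type` and the tolerance are hypothetical. The example uses the projective matrix and the initial states of Sect. 4.

```python
import numpy as np

def projective_errors(X, S, Y, R, Lam):
    """Error states under the assumed convention e = Y - Lam X, w = R - Lam S."""
    Lam = np.asarray(Lam, dtype=float)
    return np.asarray(Y) - Lam @ np.asarray(X), np.asarray(R) - Lam @ np.asarray(S)

def synchronization_type(Lam, tol=1e-12):
    """Name the special case of Remark 2 that a projective matrix corresponds to."""
    Lam = np.asarray(Lam, dtype=float)
    if np.any(np.all(np.abs(Lam) <= tol, axis=1)):
        raise ValueError("each row of the projective matrix needs a nonzero entry")
    diag = np.diag(Lam)
    if not np.all(np.abs(Lam - np.diag(diag)) <= tol):
        return "matrix-projective synchronization"      # general (non-diagonal) case
    if np.all(np.abs(diag - 1.0) <= tol):
        return "complete synchronization"                # Lam = I
    if np.all(np.abs(diag + 1.0) <= tol):
        return "anti-synchronization"                    # Lam = -I
    if np.all(np.abs(diag - diag[0]) <= tol):
        return "projective synchronization"              # Lam = m I
    return "modified projective synchronization"         # distinct diagonal entries

Lam = np.array([[-1.0, 2.0], [-1.0, 2.0]])               # projective matrix of Sect. 4
X, S = np.array([0.5, -0.3]), np.array([-0.4, 0.8])      # initial drive states of Sect. 4
# An ideally synchronized response Y = Lam X, R = Lam S gives zero errors.
e, w = projective_errors(X, S, Lam @ X, Lam @ S, Lam)
kind = synchronization_type(Lam)
print(kind, e, w)
```

Because a full matrix mixes all drive states into each response state, it cannot be reproduced by any of the diagonal special cases, which is the sense in which the matrix-projective scheme is more general.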

Remark 3

In [25], the authors pointed out that the convergence rate is affected by the fractional order \(\alpha\) of the fractional system. In this paper, we verify numerically that the convergence of the matrix-projective synchronization errors accelerates as the fractional order \(\alpha\) increases. In the numerical application below, Fig. 3a, b shows the synchronization errors for different fractional orders, and one can observe that the errors for \(\alpha = 0.95\) converge faster than those for \(\alpha = 0.8\), which is one of the novel aspects of this work.
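The dependence of the decay on the fractional order can be illustrated on a scalar test equation. The Python sketch below is only an analogy and is not the error system (18): it integrates \({}_{0}^{C} D_{t}^{\alpha } e\left( t \right) = - ke\left( t \right)\) with an explicit fractional Euler (rectangular product-integration) rule, and the gain \(k = 1\), the step size, the horizon \(t = 10\), and the function name `frac_euler_decay` are assumptions. The comparison is made only at the final time.

```python
import math
import numpy as np

def frac_euler_decay(alpha, k=1.0, e0=1.0, h=0.01, t_end=10.0):
    """Explicit fractional Euler scheme for D^alpha e = -k e, e(0) = e0."""
    n_steps = int(round(t_end / h))
    e = np.empty(n_steps + 1)
    e[0] = e0
    m = np.arange(1, n_steps + 1)
    b = m ** alpha - (m - 1.0) ** alpha          # b[m-1] = m^alpha - (m-1)^alpha
    c = h ** alpha / math.gamma(alpha + 1)
    for n in range(n_steps):
        # e_{n+1} = e_0 + c * sum_{j=0}^{n} b_{n+1-j} * (-k e_j)
        e[n + 1] = e0 - k * c * (b[n::-1] @ e[: n + 1])
    return e

e_080 = frac_euler_decay(0.80)
e_095 = frac_euler_decay(0.95)
print(f"e(10) for alpha = 0.80: {e_080[-1]:.4f}")
print(f"e(10) for alpha = 0.95: {e_095[-1]:.4f}")
```

For this test equation the solution has an algebraic tail of order \(t^{ - \alpha }\), so at the final time the residual for \(\alpha = 0.95\) is smaller than for \(\alpha = 0.8\), in line with the trend described in Remark 3.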

Remark 4

For \(\alpha = 1\), Theorem 1 and its proof reduce to matrix-projective synchronization of integer-order delayed competitive neural networks with different time scales and unknown parameters, which is also a new result. When \(\alpha = 1\) and \(\varLambda = I\), matrix-projective synchronization of the fractional-order competitive neural network reduces to the usual complete synchronization of the integer-order competitive neural network [33,34,35,36]. Recently, some researchers have studied projective synchronization of fractional-order neural networks, but the scaling factor there is only a fixed constant or a diagonal matrix and the system parameters are assumed to be known in advance, which is rarely the case in real applications [38,39,40,41,42,43,44,45,46,47,48,49]. Therefore, this work generalizes the relevant results on chaotic synchronization and on integer-order competitive neural networks.

4 Application

In this part, a numerical example for the Caputo delayed fractional-order competitive neural network is presented. In the simulation, the hyperbolic tangent \(F\left( X \right) = \tanh \left( X \right)\) is chosen as the activation function, and the parameters of system (10) are taken as

\(A = \left( {\begin{array}{*{20}c} {A_{1} } & 0 \\ 0 & {A_{2} } \\ \end{array} } \right) = \left( {\begin{array}{*{20}c} 1 & 0 \\ 0 & 1 \\ \end{array} } \right),\) \(D = \left( {\begin{array}{*{20}c} {D_{11} } & {D_{12} } \\ {D_{21} } & {D_{22} } \\ \end{array} } \right) = \left( {\begin{array}{*{20}c} { - 3} & 2 \\ { - 3} & { - 1} \\ \end{array} } \right),\) \(D^{\tau } = \left( {\begin{array}{*{20}c} {D_{11}^{\tau } } & {D_{12}^{\tau } } \\ {D_{21}^{\tau } } & {D_{22}^{\tau } } \\ \end{array} } \right) = \left( {\begin{array}{*{20}c} { - 1} & { - 1} \\ { - 3} & { - 2} \\ \end{array} } \right),\) \(B = \left( {\begin{array}{*{20}c} {B_{1} } & 0 \\ 0 & {B_{2} } \\ \end{array} } \right) = \left( {\begin{array}{*{20}c} 1 & 0 \\ 0 & 1 \\ \end{array} } \right),\) \(C = \left( {\begin{array}{*{20}c} {C_{1} } & 0 \\ 0 & {C_{2} } \\ \end{array} } \right) = \left( {\begin{array}{*{20}c} 1 & 0 \\ 0 & 1 \\ \end{array} } \right),\) \(\tau = 2,\) \(\varepsilon = 1.\)

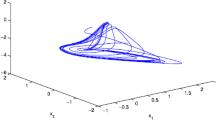

For a fractional-order model of a practical problem, the fractional order affects the dynamical properties of the model. In the following numerical analysis, we select the fractional order \(\alpha = 0.95\); the phase diagrams of system (10) are depicted in Fig. 1a, b for the initial conditions \(\left[ {X_{1} \left( 0 \right),X_{2} \left( 0 \right),S_{1} \left( 0 \right),S_{2} \left( 0 \right)} \right] = \left[ {0.5, - 0.3, - 0.4,0.8} \right]\).

Fig. 1 Phase diagrams of system (10) at \(\alpha = 0.95\). a \(X_{1} - X_{2}\) phase diagram. b \(S_{1} - S_{2}\) phase diagram
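A trajectory of the drive system with the parameter values above can be sketched in Python. Several points are assumptions rather than statements of the original text: the compact model is taken to be \(\varepsilon \,{}_{0}^{C} D_{t}^{\alpha } X = - AX + DF\left( {X\left( t \right)} \right) + D^{\tau } F\left( {X\left( {t - \tau } \right)} \right) + BS\), \({}_{0}^{C} D_{t}^{\alpha } S = - CS + F\left( {X\left( t \right)} \right)\); the history is taken constant, \(X\left( t \right) = X\left( 0 \right)\) on \(\left[ { - \tau ,0} \right]\); and the integrator is a plain explicit fractional Euler rule, not necessarily the solver used for Fig. 1. The function name `simulate_drive` and the step size are hypothetical.

```python
import math
import numpy as np

def simulate_drive(alpha=0.95, tau=2.0, eps=1.0, h=0.01, t_end=20.0,
                   x0=(0.5, -0.3), s0=(-0.4, 0.8)):
    """Explicit fractional Euler integration of the ASSUMED drive model
        eps * D^alpha X = -A X + D tanh(X(t)) + D_tau tanh(X(t - tau)) + B S,
              D^alpha S = -C S + tanh(X(t)),
    with constant history X(t) = X(0) for t in [-tau, 0]."""
    A, B, C = np.eye(2), np.eye(2), np.eye(2)
    D = np.array([[-3.0, 2.0], [-3.0, -1.0]])
    D_tau = np.array([[-1.0, -1.0], [-3.0, -2.0]])
    n_steps = int(round(t_end / h))
    lag = int(round(tau / h))                   # delay measured in grid steps
    Z = np.zeros((n_steps + 1, 4))              # columns: X1, X2, S1, S2
    Z[0] = [*x0, *s0]
    F = np.zeros((n_steps, 4))                  # stored right-hand sides
    m = np.arange(1, n_steps + 1)
    b = m ** alpha - (m - 1.0) ** alpha         # b[m-1] = m^alpha - (m-1)^alpha
    c = h ** alpha / math.gamma(alpha + 1)
    for n in range(n_steps):
        X, S = Z[n, :2], Z[n, 2:]
        X_del = Z[max(n - lag, 0), :2]          # constant history before t = 0
        F[n, :2] = (-A @ X + D @ np.tanh(X) + D_tau @ np.tanh(X_del) + B @ S) / eps
        F[n, 2:] = -C @ S + np.tanh(X)
        # y_{n+1} = y_0 + c * sum_{k=0}^{n} b_{n+1-k} * f_k
        Z[n + 1] = Z[0] + c * (b[n::-1] @ F[: n + 1])
    return np.arange(n_steps + 1) * h, Z

t, Z = simulate_drive(t_end=10.0)
print(Z[-1])   # state (X1, X2, S1, S2) at the final time
```

Plotting `Z[:, 0]` against `Z[:, 1]` and `Z[:, 2]` against `Z[:, 3]` gives phase portraits of the kind described in the caption; the memory term makes the cost quadratic in the number of steps, which is why a moderate horizon is used here.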

According to Definition 3, for ease of discussion and numerical simulation, the projective matrix is selected as \(\varLambda = \left( {\varLambda_{ij} } \right)_{2 \times 2} = \left( {\begin{array}{*{20}c} { - 1} & 2 \\ { - 1} & 2 \\ \end{array} } \right)\). Any other 2-dimensional projective matrix can of course be chosen according to actual needs. Then, the error function of matrix-projective synchronization is computed as

\[ e_{1} = Y_{1} + X_{1} - 2X_{2} ,\quad e_{2} = Y_{2} + X_{1} - 2X_{2} ,\quad w_{1} = R_{1} + S_{1} - 2S_{2} ,\quad w_{2} = R_{2} + S_{1} - 2S_{2} . \]

In order to achieve matrix-projective synchronization between systems (10) and (11), we set \(\lambda_{i} = \zeta_{i} = \alpha_{i} = \xi_{i} = \eta_{i} = \beta_{ik} = \gamma_{ik} = 10\) \(\left( {i,k = 1,2} \right)\) and the initial values of the unknown parameters as \(\hat{A}\left( 0 \right) = \left( {\begin{array}{*{20}c} {0.8} & 0 \\ 0 & {0.5} \\ \end{array} } \right),\) \(\hat{D}\left( 0 \right) = \left( {\begin{array}{*{20}c} {0.8} & {0.7} \\ { - 0.4} & { - 0.8} \\ \end{array} } \right),\) \(\hat{D}^{\tau } \left( 0 \right) = \left( {\begin{array}{*{20}c} { - 1.2} & {0.5} \\ { - 0.3} & {0.9} \\ \end{array} } \right),\) \(\hat{B}\left( 0 \right) = \left( {\begin{array}{*{20}c} {0.5} & 0 \\ 0 & { - 1} \\ \end{array} } \right),\) \(\hat{C}\left( 0 \right) = \left( {\begin{array}{*{20}c} {0.1} & 0 \\ 0 & {0.1} \\ \end{array} } \right)\) and \(W\left( 0 \right) = V\left( 0 \right) = \left( {\begin{array}{*{20}c} { - 0.5} & 0 \\ 0 & { - 0.5} \\ \end{array} } \right)\). Next, by using the adaptive control strategy (15)–(17), we analyze and simulate the synchronization behavior between systems (10) and (11).

Figure 2a–d shows the time trajectories of matrix-projective synchronization for the variables \(X_{1} \sim Y_{1}\), \(X_{2} \sim Y_{2}\), \(S_{1} \sim R_{1}\) and \(S_{2} \sim R_{2}\). At the same time, Fig. 2e–h clearly shows that the variables \(- X_{1} + 2X_{2} \sim Y_{1}\), \(- X_{1} + 2X_{2} \sim Y_{2}\), \(- S_{1} + 2S_{2} \sim R_{1}\) and \(- S_{1} + 2S_{2} \sim R_{2}\) achieve complete synchronization. Figure 3a, b shows the time trajectories of the synchronization errors for the fractional orders \(\alpha = 0.8\) and \(\alpha = 0.95\), which supports Remark 3. Figure 4a–e shows that each of the adaptive parameters \(\hat{A},\hat{B},\hat{C},\hat{D}\) and \(\hat{D}^{\tau }\) converges to a fixed constant. As can be seen from Fig. 4f, g, when systems (10) and (11) are synchronized, the adaptive control gains \(W_{i} ,V_{i}\) \(\left( {i = 1,2} \right)\) each tend to a positive constant. These simulations demonstrate the effectiveness and applicability of the adaptive controller for matrix-projective synchronization of delayed fractional competitive neural networks with unknown parameters.

Fig. 2 Matrix-projective synchronization trajectories of state variables \(X \sim Y\) and \(S \sim R\). a Time history of \(X_{1} ,Y_{1}\). b Time history of \(X_{2} ,Y_{2}\). c Time history of \(S_{1} ,R_{1}\). d Time history of \(S_{2} ,R_{2}\). e Time history of \(- X_{1} + 2X_{2} ,Y_{1}\). f Time history of \(- X_{1} + 2X_{2} ,Y_{2}\). g Time history of \(- S_{1} + 2S_{2} ,R_{1}\). h Time history of \(- S_{1} + 2S_{2} ,R_{2}\)

Fig. 4 Time history of adaptive parameters \(\hat{A},\hat{B},\hat{C},\hat{D},\hat{D}^{\tau } ,W\) and \(V\). a Estimation of parameter \(\hat{A}\). b Estimation of parameter \(\hat{B}\). c Estimation of parameter \(\hat{C}\). d Estimation of parameter \(\hat{D}\). e Estimation of parameter \(\hat{D}^{\tau }\). f Time history of \(W\). g Time history of \(V\)

5 Conclusions

In this paper, using the fractional-order Barbalat lemma, we have proved global matrix-projective synchronization of delayed fractional-order competitive neural networks under adaptive control and have estimated the unknown parameters of the systems. In addition, a numerical example with simulations illustrates the feasibility of the synchronization analysis.

Chaotic synchronization has become a key technology of secure communication. Since the unpredictability and flexibility of the projective matrix can strengthen the security of secure communication, matrix-projective synchronization of delayed fractional-order competitive neural networks has clear advantages over other synchronization types. In future work, we will continue to study the application of matrix-projective synchronization in secure communication and propose two secure communication schemes, one that combines fractional matrix-projective synchronization with parameter modulation and another that combines coupled fractional matrix-projective synchronization with chaos hiding. As fractional matrix-projective synchronization is a new synchronization type, research on its application in secure communication is still at the stage of theoretical simulation. How to use the theoretical results to design a secure communication system with superior performance, and thus combine theory and practice, will be an important research topic. The matrix-projective synchronization proposed in this paper can bring new insights into the synchronization of delayed fractional-order competitive neural networks with different time scales. Recently, Zhang and co-workers have studied stability and synchronization problems for integer-order neural networks by applying new integral inequalities and inequality techniques [50,51,52,53,54], which is important and interesting. These works also motivate us to study the synchronization problem for fractional-order neural networks with time delay by using these methods in future work.

References

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier, New York

Aguila-Camacho N, Duarte-Mermoud M, Gallegos J (2014) Lyapunov functions for fractional order systems. Commun Nonlinear Sci Numer Simul 19:2951–2957

Boyd S, El Ghaoui L, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. SIAM, Philadelphia

Gallegos JA, Duarte-Mermoud MA, Aguila-Camacho N, Castro-Linares R (2015) On fractional extensions of Barbalat Lemma. Syst Control Lett 84:7–12

Baleanu D, Inc M, Yusuf A, Aliyu AI (2017) Time fractional third-order evolution equation: symmetry analysis, explicit solutions, and conservation laws. J Comput Nonlin Dyn 13:021011

Wu GC, Baleanu D, Huang LL (2018) Novel Mittag-Leffler stability of linear fractional delay difference equations with impulse. Appl Math Lett 82:71–78

Ouannas A, Wang X, Pham VT, Grassi G, Huynh VV (2019) Synchronization results for a class of fractional-order spatiotemporal partial differential systems based on fractional Lyapunov approach. Bound Value Probl 2019:74

Inc M, Yusuf A, Aliyu AI, Baleanu D (2018) Lie symmetry analysis and explicit solutions for the time fractional generalized Burgers-Huxley equation. Opt Quant Electron 50:94

He JM, Chen FQ, Lei TF (2018) Fractional matrix and inverse matrix projective synchronization methods for synchronizing the disturbed fractional-order hyperchaotic system. Math Method Appl Sci 41:6907–6920

Yusuf A, Inc M, Aliyu AI, Baleanu D (2018) Conservation laws, soliton-like and stability analysis for the time fractional dispersive long-wave equation. Adv Differ Equ 2018:319

He JM, Chen FQ (2018) Dynamical analysis of a new fractional-order Rabinovich system and its fractional matrix projective synchronization. Chin J Phys 56:2627–2637

He JM, Chen FQ (2017) A new fractional order hyperchaotic Rabinovich system and its dynamical behaviors. Int J Non-Linear Mech 95:73–81

Jajarmi A, Hajipour M, Mohammadzadeh E, Baleanu D (2018) A new approach for the nonlinear fractional optimal control problems with external persistent disturbances. J Frankl I 355:3938–3967

Huang CD, Cai LM, Cao JD (2018) Linear control for synchronization of a fractional-order time-delayed chaotic financial system. Chaos Soliton Fract 113:326–332

Yang XJ, Machado JAT (2017) A new fractional operator of variable order: application in the description of anomalous diffusion. Phys A 481:276–283

Kiani-B A, Fallahi K, Pariz N, Leung H (2009) A chaotic secure communication scheme using fractional chaotic systems based on an extended fractional Kalman filter. Commun Nonlinear Sci Numer Simul 14:863–879

Jajarmi A, Baleanu D (2018) A new fractional analysis on the interaction of HIV with CD4+ T-cells. Chaos Soliton Fract 113:221–229

Chen LP, Liu C, Wu RC, He YG, Chai Y (2016) Finite-time stability criteria for a class of fractional-order neural networks with delay. Neural Comput Appl 27:549–556

Bao HB, Park JH, Cao JD (2015) Adaptive synchronization of fractional-order memristor-based neural networks with time delay. Nonlinear Dyn 82:1343–1354

Huang X, Fan YJ, Jia J, Wang Z, Li YX (2017) Quasi-synchronisation of fractional-order memristor-based neural networks with parameter mismatches. IET Control Theory A 11:2317–2327

Wang F, Yang YQ, Xu XY, Li L (2017) Global asymptotic stability of impulsive fractional-order BAM neural networks with time delay. Neural Comput Appl 28:345–352

Wang LM, Song QK, Liu YR, Zhao ZJ, Alsaadi FE (2017) Finite-time stability analysis of fractional-order complex-valued memristor-based neural networks with both leakage and time-varying delays. Neurocomputing 245:86–101

He JM, Chen FQ, Bi QS (2019) Quasi-matrix and quasi-inverse-matrix projective synchronization for delayed and disturbed fractional order neural network. Complexity 2019:4823709

Rajivganthi C, Rihan FA, Lakshmanan S, Muthukumar P (2018) Finite-time stability analysis for fractional-order Cohen-Grossberg BAM neural networks with time delays. Neural Comput Appl 29:1309–1320

Yu J, Hu C, Jiang H (2012) α-stability and α-synchronization for fractional-order neural networks. Neural Netw 35:82–87

Meyer-Bäse A, Ohl F, Scheich H (1996) Singular perturbation analysis of competitive neural networks with different time scales. Neural Comput 8:1731–1742

Meyer-Bäse A, Pilyugin SS, Chen Y (2003) Global exponential stability of competitive neural networks with different time scales. IEEE Trans Neural Netw 14:716–719

Meyer-Bäse A, Roberts R, Thümmler V (2010) Local uniform stability of competitive neural networks with different time-scales under vanishing perturbations. Neurocomputing 73:770–775

Liu XM, Yang CY, Zhou LN (2018) Global asymptotic stability analysis of two-time-scale competitive neural networks with time-varying delays. Neurocomputing 273:357–366

Balasundaram K, Raja R, Pratap A, Chandrasekaran S (2019) Impulsive effects on competitive neural networks with mixed delays: existence and exponential stability analysis. Math Comput Simulat 155:290–302

Xu DS, Tan MC (2018) Multistability of delayed complex-valued competitive neural networks with discontinuous non-monotonic piecewise nonlinear activation functions. Commun Nonlinear Sci Numer Simul 62:352–377

Nie XB, Cao JD, Fei SM (2019) Multistability and instability of competitive neural networks with non-monotonic piecewise linear activation functions. Nonlinear Anal-Real 45:799–821

Gan QT, Hu RX, Liang YH (2012) Adaptive synchronization for stochastic competitive neural networks with mixed time-varying delays. Commun Nonlinear Sci Numer Simul 17:3708–3718

Yang W, Wang YW, Shen YJ, Pan LQ (2017) Cluster synchronization of coupled delayed competitive neural networks with two time scales. Nonlinear Dyn 90:2767–2782

Gong SQ, Yang SF, Guo ZY, Huang TW (2019) Global exponential synchronization of memristive competitive neural networks with time-varying delay via nonlinear control. Neural Process Lett 18:103–119

Duan L, Fang XW, Yi XJ, Fu YJ (2018) Finite-time synchronization of delayed competitive neural networks with discontinuous neuron activations. Int J Mach Learn Cyber 9:1649–1661

Liu PP, Nie XB, Liang JL, Cao JD (2018) Multiple Mittag-Leffler stability of fractional-order competitive neural networks with Gaussian activation functions. Neural Netw 108:452–465

Pratap A, Raja R, Cao JD, Rajchakit G, Fardoun HM (2019) Stability and synchronization criteria for fractional order competitive neural networks with time delays: an asymptotic expansion of Mittag Leffler function. J Frankl I 356:2212–2239

Zhang H, Ye ML, Cao JD, Alsaedi A (2018) Synchronization control of Riemann-Liouville fractional competitive network systems with time-varying delay and different time scales. Int J Control Autom 16:1404–1414

Pratap A, Raja R, Cao JD, Rajchakit G, Alsaadi FE (2018) Further synchronization in finite time analysis for time-varying delayed fractional order memristive competitive neural networks with leakage delay. Neurocomputing 317:110–126

Wu XJ, Wang H, Lu HT (2012) Modified generalized projective synchronization of a new fractional-order hyperchaotic system and its application to secure communication. Nonlinear Anal Real World Appl 13:1441–1450

Chee CY, Xu D (2005) Secure digital communication using controlled projective synchronisation of chaos. Chaos Soliton Fract 23:1063–1070

Muthukumar P, Balasubramaniam P, Ratnavelu K (2015) Fast projective synchronization of fractional order chaotic and reverse chaotic systems with its application to an affine cipher using date of birth (DOB). Nonlinear Dyn 80:1883–1897

Zhang WW, Cao JD, Wu RC, Alsaedi A, Alsaadi FE (2018) Projective synchronization of fractional-order delayed neural networks based on the comparison principle. Adv Differ Equ 2018:73

Xu Q, Xu XH, Zhuang SX, Xiao JX, Song CH, Che C (2018) New complex projective synchronization strategies for drive-response networks with fractional complex-variable dynamics. Appl Math Comput 338:552–566

Yang S, Yu J, Hu C, Jiang HJ (2018) Quasi-projective synchronization of fractional-order complex-valued recurrent neural networks. Neural Netw 104:104–113

Gu YJ, Yu YG, Wang H (2019) Projective synchronization for fractional-order memristor-based neural networks with time delays. Neural Comput Appl 31:6039–6054

Liu SX, Yu YG, Zhang S (2019) Robust synchronization of memristor-based fractional-order Hopfield neural networks with parameter uncertainties. Neural Comput Appl 31:3533–3542

Wu HQ, Wang LF, Niu PF, Wang Y (2017) Global projective synchronization in finite time of nonidentical fractional-order neural networks based on sliding mode control strategy. Neurocomputing 235:264–273

Zhang ZQ, Ren L (2019) New sufficient conditions on global asymptotic synchronization of inertial delayed neural networks by using integrating inequality techniques. Nonlinear Dyn 95:905–917

Zhang ZQ, Cao JD (2018) Periodic solutions for complex-valued neural networks of neutral type by combining graph theory with coincidence degree theory. Adv Differ Equ 2018:261

Zhang ZQ, Cao JD (2019) Novel finite-time synchronization criteria for inertial neural networks with time delays via integral inequality method. IEEE Trans Neural Netw Learn Syst 30:1476–1485

Zhang ZQ, Zheng T, Yu SH (2019) Finite-time anti-synchronization of neural networks with time-varying delays via inequality skills. Neurocomputing 356:60–68

Zhang ZQ, Li AL, Yu SH (2018) Finite-time synchronization for delayed complex-valued neural networks via integrating inequality method. Neurocomputing 318:248–260

Acknowledgments

This work is supported by the National Natural Science Foundation of China (11872201, 11572148 and 11632008).

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Cite this article

He, J., Chen, F., Lei, T. et al. Global adaptive matrix-projective synchronization of delayed fractional-order competitive neural network with different time scales. Neural Comput & Applic 32, 12813–12826 (2020). https://doi.org/10.1007/s00521-020-04728-7

DOI: https://doi.org/10.1007/s00521-020-04728-7