Abstract

In this paper, an algorithm for extracting color disease spot images based on Otsu and watershed is proposed to overcome the over-segmentation problem of the traditional watershed algorithm. The proposed algorithm converts the color space of the color spot image and calculates the gradient of the components that are not affected by reflected light in the new color space. Then, the final gradient image is obtained from the gradient image reconstructed by open and close operations with structural elements of different sizes. The label is extracted from the final gradient image by the Otsu algorithm, and the H-minima transform is then used to modify the labeled image. The watershed algorithm is applied to the gradient image modified with the label. Finally, the disease spot is extracted. The experimental results show that the proposed approach obtains accurate and continuous target contours and meets the requirements of human visual characteristics. Compared with other similar algorithms, the proposed algorithm effectively suppresses the impact of reflected light, improves the extraction results, better preserves the information of the disease spot image, and improves robustness and applicability.

1 Introduction

In recent years, the development of high technologies such as artificial intelligence and image pattern recognition has promoted the extensive practice of the “fine farming” technology system. Within this system, research on the rapid acquisition of field information lags far behind the development of the other supporting technologies and has become an important problem that many institutions around the world are trying to solve. Rapid collection of field information mainly covers soil information, the depth of the soil tillage layer, crop disease information, and the distribution of crop seedlings. Therefore, research on automatic disease diagnosis will provide an important theoretical basis for automatic crop management and has far-reaching guiding significance.

Image segmentation of crop diseases directly affects the effect of subsequent image processing. Therefore, the role of image segmentation is very important. There are many classical methods for image segmentation, such as segmentation based on threshold, edge, histogram, region, clustering algorithm analysis, and wavelet transform.

The watershed image segmentation algorithm has a unique ability to locate region edges and extract closed contours. However, the traditional watershed algorithm is sensitive to noise and prone to serious over-segmentation (Otsu 1979; Raja et al. 2014; Satapathy et al. 2018; Romero-Zaliz and Reinoso-Gordo 2018). Many scholars have proposed improved watershed algorithms based on their own research needs and have achieved good experimental results. Other scholars have combined the watershed algorithm with other methods to solve practical problems encountered in image segmentation. The main objective of the initial segmentation is to suppress the influence of noise and fine texture while preserving the important contours, reasonably reducing the number of regions, and avoiding region merging or reducing its difficulty and complexity. This is the fundamental way to overcome the shortcomings of the traditional watershed algorithm.

Zhang et al. (2016) used morphological filtering to remove discrete cell points and holes in the nucleus and then applied the watershed algorithm to the segmentation of overlapping cells. The results showed that the algorithm could extract the ideal cellular tissue boundaries. Verdú-Monedero et al. (2010) combined anisotropic filtering and morphological filtering with the watershed algorithm to detect olive trees automatically in remote sensing images. The experimental results showed that the algorithm could solve the over-segmentation problem of the watershed algorithm. Wang et al. (2017) acquired underwater animal images with the camera of a detecting robot and applied the watershed algorithm for image segmentation, so that the robot could discern underwater creatures, which reduced workload and improved work efficiency. In order to solve the over-segmentation problem in image preprocessing, Shanmugavadivu and Jeevaraj (2011) first used a median filter to eliminate part of the noise and then performed opening and closing operations on the gradient image of the original image, which removed the noise while preserving the important contours. Cuiyun et al. (2014) optimized the distance function of the k-means algorithm to overcome over-segmentation. This method can effectively segment and denoise an image that contains a lot of noise. Gonzalez et al. (2013) first used the wavelet transform to generate multi-resolution images and then used a label-based watershed segmentation algorithm to segment the lowest resolution images and obtain the initial segmentation area. Then, they obtained the watershed segmentation results of the high-resolution images by using regional labeling and the inverse wavelet transform. In this way, the over-segmentation problem of the watershed transform was solved well. Yan et al. (2014) combined the watershed algorithm with region merging, applied it to the segmentation of iris images, and merged the regions in the over-segmented parts of the segmented image. The experimental results showed that the method could reduce over-segmentation in iris segmentation and obtain accurate and closed iris edges. A watershed segmentation algorithm based on ridge detection and rapid region merging was proposed (Chen and Chen 2014). This algorithm reconstructed the discontinuous ridges using the ridge history information, which preserved the image segmentation information after the opening and closing operations, and completed the pseudo-blob marking based on Bayes’ rule. Wei et al. (2016) constructed a second-order tensor from the gradient of each image and took the difference between its two eigenvalues as the equivalent squared gradient norm. The watershed algorithm was applied to this norm to obtain the desired region segmentation. Several activity measures of a region were used to construct various fusion rules, and several performance metrics were computed to evaluate the performance. Razavitoosi and Samani (2016) developed methodologies to prioritize the watersheds by considering different development strategies in environmental, social, and economic sectors. Wang et al. (2014) presented a new algorithm for the segmentation of wear particles by combining the watershed algorithm with an improved ant colony clustering algorithm. The experimental results demonstrated the possibility of achieving accurate segmentation of wear particles, including large abnormal wear particles and deposited chains.

A novel watershed algorithm based on the concepts of connected component labeling and chain code has been proposed, which generates the final label map in just four scans of a preprocessed binary image (Gu et al. 2017). The evaluation results showed that the algorithm decreased the average running time by more than 39% without loss of accuracy. Zhang and Cheng (2010) proposed a segmentation approach that combines the watershed algorithm with graph theory. The algorithm reconstructed the gradient before the watershed segmentation and introduced a floating-point active image based on the reconstruction as the reference image of the watershed transform. The false contours caused by over-segmentation were effectively excluded and the overall segmentation quality was improved significantly, making the approach suitable for medical image segmentation. Jiji (2016) presented a hippocampus segmentation method based on a watershed bottom-hat filtering algorithm and morphological operations. The extracted features were used as criteria to categorize the image features into two classes. The segmentation results on multiple sets of hippocampus images showed that the method can be applied to the segmentation of complex structures such as the hippocampus. At present, labeling and region merging are widely used to improve the above-mentioned four types of watershed algorithms. They have achieved good results in eliminating over-segmentation and handling noise sensitivity in image processing and have been applied in some practical systems.

In this paper, an algorithm for extracting color disease spot images based on Otsu and watershed is proposed to overcome the over-segmentation problem of the traditional watershed algorithm. The proposed algorithm converts the color space of the color spot image and calculates the gradient of the components that are not affected by reflected light in the new color space. Then, the final gradient image is obtained from the gradient image reconstructed by open and close operations with structural elements of different sizes. The label is extracted from the final gradient image by the Otsu algorithm, and the H-minima transform is then used to modify the labeled image. The watershed algorithm is applied to the gradient image modified with the label. Finally, the disease spot is extracted.

2 Methodology

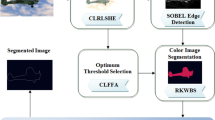

2.1 Description of the algorithm

First, the improved algorithm converts the color space of the color spot image, which overcomes the loss of part of the information of the color disease spot image in the watershed algorithm. Second, morphological open and close reconstruction is used to reconstruct the image, which reduces or eliminates the contour location shift caused by detail and noise interference. Finally, the Otsu threshold method is used to reduce the watershed over-segmentation caused by excessive local minima. Figure 1 shows the workflow of the proposed algorithm, and the steps are described in detail as follows:

Step 1 The color disease spot image is converted to a new color space to eliminate the influence of light reflection on the image. Then, the color components are extracted that are unaffected by the reflected light.

Step 2 First, the gradients of the color components that are not affected by the reflected light are calculated, and then, the gradient values are sorted in ascending order, so that lower gradients come earlier in the sorted array.

Step 3 The maximum gradient of the gradient values is selected as the final gradient to construct a gradient image.

Step 4 The morphological technique of opening and closing operations is used to reconstruct the gradient image. The Otsu threshold processing method is used to extract the label of the target of interest from the reconstructed image. Finally, the reconstructed image is modified by labeling.

Step 5 The watershed algorithm is applied to the label-modified gradient image to complete the image segmentation.

Step 6 The disease spot image is analyzed and the completed segmented image is divided into target and background classes. Then, the extraction of disease spot image is completed.

2.2 The transformation of color space from color disease spot image

Most watershed segmentation algorithms for color spot images first convert the color image into a gray image, and part of the image information is often lost in the conversion. The commonly used color models include the RGB model, the HSI model, the \( {\text{YCbCr}} \) model, and the CMYK model (Qiang et al. 2017). The affinity propagation clustering is adopted to merge the regions segmented by the watershed transform, using the color moments computed on each local region to obtain the final segmentation results. Experiments are conducted on publicly available datasets to demonstrate the adaptability and robustness of the proposed algorithm compared with the relative state-of-the-art methods. The proposed method can solve the over-segmentation problem well and obtain accurate results. Ding et al. (2017) realized the leaf segmentation of tomato canopy multispectral images based on complex background extraction, gradient map calculation, wavelet transform, marker selection, and watershed segmentation. The results of the wavelet transform watershed segmentation and the mathematical morphology watershed segmentation were superimposed, and it was found that the average segmentation error rate of tomato canopy leaves was 21% for complex backgrounds and different light intensities, which provided some technical support for the detection of tomato leaf nutrient content. Ma et al. (2017) presented a novel image processing method using color space and region growing for segmenting images of greenhouse vegetable foliar disease spots captured under real field conditions. Such a method can suppress over-segmentation to a certain extent.

The specific color space is as follows:

where O3 is the component affected by the reflected light and {α, β, γ} is the parameter of the light source. Supposing that a known source of light is given, {α, β, γ} represents the reflection ratio of the color surface, which is usually set to 1 in the experiment. There are three components in the color space: {\( O_{1} , O_{2} , O_{3} \)}, and their relationship is as follows:

In Eq. (4), \( O_{3x} \) is the spatial differential of the \( O_{3} \) component and \( O_{x} \) represents the component affected by the reflected light. The components \( O_{1} \) and \( O_{2} \) are not affected by the reflected light. When processing a color disease spot image, the image is first converted from RGB to the new color space to obtain the \( O_{1} \) and \( O_{2} \) components. Then, the gradient of each of the two components is calculated and the maximum value is selected for the current gradient image.
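As an illustration of this conversion, the following Python sketch assumes the widely used opponent color space with the light source parameters {α, β, γ} all set to 1. The function name `rgb_to_opponent` and the normalization constants are assumptions made for this sketch and are not taken from the equations of this paper. Under this assumption, adding the same offset to R, G, and B (a rough model of white reflected light) changes only \( O_{3} \).

```python
import numpy as np


def rgb_to_opponent(rgb):
    """Convert an H x W x 3 RGB array to opponent components (O1, O2, O3).

    Assumed form (standard opponent color space, alpha = beta = gamma = 1):
        O1 = (R - G) / sqrt(2)
        O2 = (R + G - 2B) / sqrt(6)
        O3 = (R + G + B) / sqrt(3)
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    o1 = (r - g) / np.sqrt(2.0)
    o2 = (r + g - 2.0 * b) / np.sqrt(6.0)
    o3 = (r + g + b) / np.sqrt(3.0)
    return o1, o2, o3


# Usage: an equal offset on all three channels leaves O1 and O2 unchanged.
image = np.random.default_rng(0).random((4, 5, 3))
o1, o2, o3 = rgb_to_opponent(image)
s1, s2, s3 = rgb_to_opponent(image + 0.25)
print(np.allclose(o1, s1), np.allclose(o2, s2), np.allclose(o3, s3))
```

Only \( O_{1} \) and \( O_{2} \) would then be passed on to the gradient calculation of Sect. 2.3.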

2.3 Calculation of gradient

The watershed segmentation algorithm is based on mathematical morphology and topographic topology theory. Its objective is to determine the waterline by finding the minima in the image area and then using the immersion method to find the waterline corresponding to each minimum. The waterline described here is the basic outline of the object of interest in the image, that is, the dividing line at which the intensity changes abruptly. The gradient image enhances the contrast, highlights the obvious changes between light and shade, and better reflects the trend of gray-level change in the image. In this paper, a non-flat disk-shaped structural element is used, which brings the waterline on the gradient image closer to the target contour than in the original watershed segmentation algorithm. The expansion (dilation) and the corrosion (erosion) of the image \( f \left( {x, y} \right) \) can be combined to obtain the morphological gradient \( g \left( {x, y} \right) \) of the image, which is defined as:

In Eq. (5), \( b\left( {x,y} \right) \) represents the non-flat structural elements and \( \oplus \) and \( { \ominus } \) are the expansion operator of mathematical morphology and the corrosion operator with mathematical morphology, respectively. The expansion coarsens the area of an image, while corrosion refines it. The difference between the expansion and the corrosion emphasizes the boundary between the regions.

The expansion operation expands a region outward from its periphery, and its function is to fill the holes in the image that are smaller than the non-flat structural element b. When the image \( f \left( {x, y} \right) \) is expanded by the non-flat structural element b, it is defined as:

The expansion operation enlarges the areas that are brighter than the background and shrinks the areas that are darker than the background. The essence of the corrosion operation is to shrink a region inward from its periphery and remove the redundant parts on the edge. When the image \( f \left( {x, y} \right) \) is corroded by the non-flat structural element b, it is defined as:

The corrosion operation is the opposite of the expansion operation. It extends the dark areas in the image and shrinks the areas that are brighter than the background. As mentioned in Sect. 2.2, this paper calculates the morphological gradient of the \( O_{1} \) and \( O_{2} \) components in the new color space of the color disease spot image. Since the two components are not affected by the reflected light, they avoid the pseudo-minima generated by the light. The disk-shaped non-flat structural element is selected here because it is isotropic and therefore weakens the dependence of the gradient on the edge direction. According to the above definition, the gradient of each of the two components is calculated, and the following operation is carried out.

where \( g_{{O_{1} }} \) and \( g_{{O_{2} }} \) represent the morphological gradients of the \( O_{1} \) and \( O_{2} \) components, respectively. The gradient is calculated with a flat disk-shaped structural element. A small radius is selected to avoid the deviation of contour location caused by thick edges. \( g_{\rm{max} } \) is the gradient image obtained by comparing the two gradients and selecting the larger one, and it is used as the gradient image of the color disease spot image. Calculating the gradient of the color disease spot image strengthens and isolates the noise in the original image, which provides a good basis for the subsequent noise reduction.
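The gradient step can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the implementation used in the experiments: it uses a flat disk-shaped structural element for simplicity, pads the borders by edge replication, and takes the pixel-wise maximum of the two component gradients. The helper names (`disk`, `dilate`, `erode`, `morphological_gradient`, `max_gradient`) are hypothetical; dilation and erosion correspond to the expansion and corrosion operations in the text.

```python
import numpy as np


def disk(radius):
    """Boolean disk-shaped footprint of the given radius."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius


def _neighborhood_stack(image, footprint):
    """Stack the shifted copies of `image` selected by `footprint`."""
    r = footprint.shape[0] // 2
    padded = np.pad(image, r, mode="edge")  # replicate border pixels
    h, w = image.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy, dx in np.argwhere(footprint)])


def dilate(image, footprint):
    """Flat grayscale dilation: neighborhood maximum."""
    return _neighborhood_stack(image, footprint).max(axis=0)


def erode(image, footprint):
    """Flat grayscale erosion: neighborhood minimum."""
    return _neighborhood_stack(image, footprint).min(axis=0)


def morphological_gradient(image, radius=1):
    """Dilation minus erosion with a flat disk of the given radius."""
    image = np.asarray(image, dtype=np.float64)
    fp = disk(radius)
    return dilate(image, fp) - erode(image, fp)


def max_gradient(o1, o2, radius=1):
    """Pixel-wise maximum of the gradients of the two components."""
    return np.maximum(morphological_gradient(o1, radius),
                      morphological_gradient(o2, radius))


# Usage: a vertical step edge gives a response only next to the edge.
step = np.zeros((6, 6))
step[:, 3:] = 1.0
print(morphological_gradient(step, radius=1)[0])
```

With radius 1 the response is two pixels wide; a larger radius would thicken the edge, which is the contour deviation that the small radius is meant to avoid.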

2.4 Label extraction based on open and close image reconstruction

2.4.1 Reconstruction of morphology

The morphological gradient map of the color disease spot image is obtained as described above. Comparing it with the original image shows that noise and gray-level irregularities are still present. If the watershed transform is applied directly to this result, serious over-segmentation occurs. Therefore, it is essential to perform open and close reconstruction before applying the watershed segmentation algorithm. The open and close reconstruction reduces or eliminates the noise and fine details that produce excessive local extrema and thereby suppresses the over-segmentation they cause. The significant contours of the object of interest are restored during reconstruction, and the shape information of the main target is maintained. Morphological reconstruction involves two images and one structural element: one image is the labeled image, which contains the starting point of the transformation, and the other is the template image, which constrains the transformation. The structural element defines the connectivity. The core of morphological reconstruction is geodesic expansion and geodesic corrosion. Let \( g_{\rm{max} } \) and l denote the labeled and the template images, respectively; both are grayscale images of the same size, with \( g_{\rm{max} } \le l \). The geodesic expansion \( D_{l}^{\left( n \right)} \) of size n of \( g_{\rm{max} } \) with respect to l is defined as:

The morphological reconstruction by expansion of the template image l from the labeled image \( g_{\rm{max} } \) is defined as the geodesic expansion of \( g_{\rm{max} } \) with respect to l, iterated until stability is reached: \( R_{l}^{D} \left( {g_{\rm{max} } } \right) = D_{l}^{\left( k \right)} \left( {g_{\rm{max} } } \right) \), where k is such that \( D_{l}^{\left( k \right)} \left( {g_{\rm{max} } } \right) = D_{l}^{{\left( {k + 1} \right)}} \left( {g_{\rm{max} } } \right) \).

The definition of geodesic corrosion \( E_{l}^{\left( n \right)} \) of \( g_{\rm{max} } \) with respect to l is:

Similar to the geodesic expansion, the geodesic corrosion of \( g_{\rm{max} } \) with respect to l is iterated until stability is reached: that is, \( R_{l}^{E} \left( {g_{\rm{max} } } \right) = E_{l}^{\left( k \right)} \left( {g_{\rm{max} } } \right) \), where k is such that \( E_{l}^{\left( k \right)} \left( {g_{\rm{max} } } \right) = E_{l}^{{\left( {k + 1} \right)}} \left( {g_{\rm{max} } } \right) \).

According to the basic principle of geodesic expansion and geodesic corrosion, the open reconstruction first corrodes the input image and uses the result as the labeled image. The open reconstruction of size n of an image \( g_{\rm{max} } \) is defined as follows: \( g_{\rm{max} } \) is first corroded by size n, and the result is then reconstructed by expansion with respect to \( g_{\rm{max} } \). The operation eliminates the texture details and the bright noise that are smaller than the structural element. The open reconstruction is:

where \( \left( {g_{\rm{max} } { \ominus }nb} \right) \) represents the \( n{\text{th}} \) corrosion of b to \( g_{\rm{max} } \).

Similarly, the close reconstruction of size n of an image \( g_{\rm{max} } \) is defined as follows: \( g_{\rm{max} } \) is first expanded by size n, and the result is then reconstructed by corrosion with respect to \( g_{\rm{max} } \). The operation eliminates the texture details and the dark noise that are smaller than the structural element and better restores the edge of the target. The close reconstruction is:

where \( \left( {g_{\rm{max} } \oplus nb} \right) \) represents the \( n{\text{th}} \) expansion of b to \( g_{\rm{max} } \). The definition of the reconstruction operation \( OC_{R}^{\left( n \right)} \left( {g_{\rm{max} } } \right) \) of morphological opening and closing is:

The operation first uses the open reconstruction for the gradient image, followed by the close reconstruction. The combination of opening and closing operations not only corrects the maximum and the minimum values of the regions in the gradient graphs and eliminates the influence of irregular interference, but also accurately locates the edges. It resolves the over-segmentation problem caused by the excessive local minimum.
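The reconstruction operations described in this section can be sketched in Python as follows. The sketch rests on several assumptions that are not stated in the text: the elementary structural element b is a flat 3 × 3 square (8-connectivity), "size n" means n successive elementary corrosions or expansions, and pixels outside the image are ignored. All function names are hypothetical; `dilate` and `erode` correspond to expansion and corrosion.

```python
import numpy as np


def _neighbors(image, pad_value):
    """Stack the 3x3 neighborhood of every pixel (border padded)."""
    image = np.asarray(image, dtype=np.float64)
    padded = np.pad(image, 1, mode="constant", constant_values=pad_value)
    h, w = image.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(3) for dx in range(3)])


def dilate(image):
    """Elementary flat dilation; -inf padding ignores outside pixels."""
    return _neighbors(image, -np.inf).max(axis=0)


def erode(image):
    """Elementary flat erosion; +inf padding ignores outside pixels."""
    return _neighbors(image, np.inf).min(axis=0)


def reconstruct_by_dilation(marker, mask):
    """Iterate the geodesic dilation min(dilate(marker), mask) to stability."""
    current = np.minimum(marker, mask)
    while True:
        following = np.minimum(dilate(current), mask)
        if np.array_equal(following, current):
            return current
        current = following


def reconstruct_by_erosion(marker, mask):
    """Iterate the geodesic erosion max(erode(marker), mask) to stability."""
    current = np.maximum(marker, mask)
    while True:
        following = np.maximum(erode(current), mask)
        if np.array_equal(following, current):
            return current
        current = following


def open_by_reconstruction(image, n=1):
    """Erode n times, then reconstruct by dilation under the input image."""
    image = np.asarray(image, dtype=np.float64)
    marker = image
    for _ in range(n):
        marker = erode(marker)
    return reconstruct_by_dilation(marker, image)


def close_by_reconstruction(image, n=1):
    """Dilate n times, then reconstruct by erosion above the input image."""
    image = np.asarray(image, dtype=np.float64)
    marker = image
    for _ in range(n):
        marker = dilate(marker)
    return reconstruct_by_erosion(marker, image)


def open_close_reconstruction(image, n=1):
    """Open reconstruction followed by close reconstruction."""
    return close_by_reconstruction(open_by_reconstruction(image, n), n)
```

In this sketch, an isolated bright pixel is removed by the open reconstruction while a bright block larger than the structural element is restored with its original shape, which is the contour-preserving behavior described above.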

2.4.2 The extraction of mark

The reconstructed gradient image removes most of the noise. However, some of the minimum points in the image are still not suppressed even though they are not related to the target, which results in numerous meaningless regions in the segmentation results. In order to solve this problem, label extraction can be used. In short, before the watershed segmentation, it labels the minima of the target of interest in the reconstructed gradient image and shields the other redundant minima. This method reduces over-segmentation to a certain extent. Therefore, this paper uses an adaptive extended minimum transform (H-minima) technique based on morphology. The method can effectively extract the labels in combination with prior knowledge.

The basic principle of the H-minima technique in mathematical morphology is to eliminate the local minima whose catchment basin depth is lower than the given threshold H.
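For reference, the H-minima transform is commonly written in the morphology literature as a reconstruction by corrosion (erosion) of the image raised by H. The following is a sketch in the notation of Sect. 2.4.1, in which the subscript of \( R^{E} \) denotes the template image; it is given under that assumption and is not necessarily the exact form used in this paper. The name \( \mathrm{RMIN} \) for the regional-minimum operator is likewise an assumption.

```latex
% H-minima transform of the reconstructed gradient image g_rec:
% reconstruction by erosion of (g_rec + H) with g_rec as the template.
\[
  \mathrm{HMIN}_{H}\left( g_{\text{rec}} \right)
    = R_{g_{\text{rec}}}^{E}\left( g_{\text{rec}} + H \right)
\]
% Extended minima: the regional minima that survive the transform,
% returned as a binary image (1 on a minimum, 0 elsewhere).
\[
  \mathrm{EMIN}_{H}\left( g_{\text{rec}} \right)
    = \mathrm{RMIN}\left( \mathrm{HMIN}_{H}\left( g_{\text{rec}} \right) \right)
\]
```

Under this definition, every minimum whose depth is less than H is filled in, so only the deeper basins remain available as labels.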

In this paper, the gradient reconstruction image \( g_{\text{rec}} \left( {x,y} \right) \) obtained from Sect. 2.4.1 is transformed by the H-minima technique. The binary labeled image \( g_{n} \left( {x,y} \right) \) is obtained by setting the threshold H as follows:

The selection of the parameter H in the traditional H-minima transform is a trade-off between shielding the noise and extracting the weak edges. A small H cannot shield strong texture noise, while weak edges are extracted poorly when H is too large. The parameter H in the traditional H-minima technique is mostly set manually, so the segmentation results of different images can differ greatly under the same parameter. Therefore, the threshold in this paper is obtained by using the maximum interclass variance algorithm (Otsu).

The Otsu algorithm uses a threshold to divide the gray histogram into two parts, the target region and the background region. The threshold that maximizes the variance between the two classes, or equivalently minimizes the intraclass variance, is taken as the best threshold. The algorithm is simple and effective. In most cases, it obtains good segmentation results, has a wide application range, and is suitable for real-time processing.

Let \( \left\{ {0,1,2, \ldots L - 1} \right\} \) denote the L distinct gray levels of a digital image \( g_{n} \left( {x,y} \right) \) with n pixels, and let \( n_{i} \) denote the number of pixels with gray level \( i \).

The probability of a pixel with gray level \( i \) is \( p_{i} = n_{i} /n \). Thus, \( p_{i} \ge 0 \), \( \sum\nolimits_{i = 0}^{L - 1} {p_{i} = 1} \). When the image is segmented, the threshold k divides the pixels of the input image into two classes, \( C_{1} \) and \( C_{2} \). The target class \( C_{1} \) and the background class \( C_{2} \) are composed of all pixels within the ranges [0, k] and [k + 1, L − 1], respectively.

The probability of pixel being divided into \( C_{1} \) is \( p_{1} \left( k \right) = \sum\nolimits_{i = 0}^{k} {p_{i} } \).

Similarly, the probability of a pixel being assigned to \( C_{2} \) is \( p_{2} \left( k \right) = \sum\nolimits_{i = k + 1}^{L - 1} {p_{i} } = 1 - p_{1} \left( k \right) \).

The average gray values of the pixels allocated to \( C_{1} \) and \( C_{2} \) are \( a_{1} \left( k \right) = \frac{1}{{p_{1} \left( k \right)}}\sum\nolimits_{i = 0}^{k} {ip_{i} } \) and \( a_{2} \left( k \right) = \frac{1}{{p_{2} \left( k \right)}}\sum\nolimits_{i = k + 1}^{L - 1} {ip_{i} } \), respectively.

The cumulative mean value of gray level k is \( a\left( k \right) = \sum\nolimits_{i = 0}^{k} {ip_{i} } \).

The average gray value of the whole image is \( a_{\text{G}} = \sum\nolimits_{i = 0}^{L - 1} {ip_{i} } \).

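The interclass variance is \( \sigma_{k}^{2} = p_{1} \left( k \right)\left( {a_{1} \left( k \right) - a_{\text{G}} } \right)^{2} + p_{2} \left( k \right)\left( {a_{2} \left( k \right) - a_{\text{G}} } \right)^{2} = \frac{{\left( {a_{\text{G}} p_{1} \left( k \right) - a\left( k \right)} \right)^{2} }}{{p_{1} \left( k \right)\left( {1 - p_{1} \left( k \right)} \right)}} \).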

The best threshold \( k^{*} \) is the value of k that maximizes the variance \( \sigma_{k}^{2} \): \( \sigma_{{k^{*} }}^{2} = \mathop {\rm{max} }\nolimits_{0 \le k \le L - 1} \sigma_{k}^{2} \). If the maximum is not unique, \( k^{*} \) is obtained by averaging the values of k at which the maximum is attained.

For the input image \( g_{n} \left( {x,y} \right) \), the labeled image is \( g_{\rm{max} } = \left\{ {\begin{array}{*{20}l} 1 & {g_{n} > k^{*} } \\ 0 & {g_{n} \le k^{*} } \\ \end{array} } \right. \)
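The threshold selection above can be sketched in a few lines of Python. This is a minimal illustration, assuming an image with integer gray levels in [0, L − 1] and assuming that ties between maximizing values of k are resolved by averaging them; the function name `otsu_threshold` and the parameter `levels` are hypothetical.

```python
import numpy as np


def otsu_threshold(image, levels=256):
    """Return k* that maximizes the interclass variance sigma_k^2.

    `image` must hold non-negative integer gray levels below `levels`.
    If several k attain the maximum, their mean is returned.
    """
    hist = np.bincount(np.asarray(image).ravel(), minlength=levels)
    p = hist.astype(np.float64) / hist.sum()   # p_i = n_i / n
    i = np.arange(levels)
    p1 = np.cumsum(p)                           # p_1(k)
    a = np.cumsum(i * p)                        # cumulative mean a(k)
    a_g = a[-1]                                 # global mean a_G
    denom = p1 * (1.0 - p1)
    sigma2 = np.zeros(levels)
    valid = denom > 1e-12                       # skip empty classes
    sigma2[valid] = (a_g * p1[valid] - a[valid]) ** 2 / denom[valid]
    best = np.flatnonzero(np.isclose(sigma2, sigma2.max()))
    return float(best.mean())


# Usage: binarize an image with two well-separated gray levels.
image = np.array([[10, 10, 200, 200],
                  [10, 200, 10, 200]], dtype=np.uint8)
k_star = otsu_threshold(image)
label = (image > k_star).astype(np.uint8)  # 1 above k*, 0 otherwise
print(k_star)
print(label)
```

The closed form used for `sigma2` needs only the cumulative sums \( p_{1}(k) \) and \( a(k) \), so all L candidate thresholds are evaluated in a single pass over the histogram.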

In this paper, the threshold \( k^{*} \) obtained by the Otsu algorithm is used to extract the effective label from the gradient reconstruction image \( g_{n} \left( {x,y} \right) \). This forces the locations of the minima to be calibrated, prevents meaningless minima from appearing, and avoids the arbitrariness of setting the threshold manually. It not only improves the robustness, but also yields segmentation results that are closer to the target contour.

The maximum radius of the structural element used in the algorithm is 3, and the morphological opening and closing operations are carried out at the various scales. The union of the labeled images extracted as in Sect. 2.4.2 at the various scales is used as the final labeled image. The original gradient image is modified with the labeled image so that local minima appear only at the labeled locations. Finally, the watershed transform is applied to the modified gradient image to achieve the final image segmentation.

2.5 Segmentation of watershed and extraction of disease spot

The labeled image \( g_{\rm{max} } \left( {x,y} \right) \) obtained from Sect. 2.4.2 is considered as the local minima of the gradient image \( g_{n} \left( {x,y} \right) \) of the original color disease spot image. In this way, the edge information of the image can be preserved to the maximum, and numerous unrelated local minimum values can be suppressed. The algorithm uses the watershed segmentation on the modified gradient image \( g_{\text{mode}} \left( {x,y} \right) \) to obtain the ideal experimental result \( g_{\text{last}} \left( {x,y} \right) \) as:

In Eq. (16), \( M() \) represents the calibration operation of morphological minima.

In Eq. (17), \( W() \) represents the transformation of watershed.

3 Experimental results and analysis

3.1 Experimental results

Figure 2 shows the effects of the extraction algorithm of color disease spot.

The test images are corn disease spot images of 200 × 200 pixels, which include the common leaf blight, Cochliobolus heterostrophus, and gray speck disease. The experimental results show that the extraction algorithm effectively identifies the disease spot location and segments it. The proposed algorithm produces few misjudged points and retains the color, edge, and texture features of the original disease images.

3.2 Comparison and analysis

Figure 3 shows the comparison of the proposed algorithm with the one-dimensional Otsu threshold segmentation algorithm and the EM clustering segmentation algorithm. The experimental results show that both the Otsu and the EM algorithms misjudge points during segmentation, and non-disease spot positions are mistaken for disease spots and extracted. At the same time, some disease spot locations are still identified well. The proposed algorithm determines the disease spot location effectively and with less error. It also extracts the textured edge area in good agreement with the lesion in the original image.

The G-MRF (Lai 2010) and TSRG (Li 2010) algorithms are disease spot segmentation methods designed for corn disease images. Figure 4 shows the experimental comparison among the proposed, the G-MRF, and the TSRG algorithms. Figure 5 shows the experimental comparison among the proposed, the watershed, and the Otsu algorithms. The experimental results show that the G-MRF algorithm can retain the disease spot appearance and texture. However, it is easily affected by other scattered points, resulting in a small number of misjudged points. Although the TSRG algorithm can avoid the influence of scattered points outside the disease spots, the extracted disease spot area does not retain the original appearance of the spot well. The proposed algorithm performs well in retaining the texture and edge characteristics of the original disease spot and achieves high-quality regional extraction. Moreover, it can avoid the influence of scattered points to some extent. In order to evaluate the segmentation results quantitatively, the number of segmented regions, the segmentation accuracy F (O’Callaghan 2005), and the regional consistency U (Yujin 1996) are used.

The rate of detection is \( P = \left( {Det \cap Gt} \right)/Det \), the rate of correction is \( R = \left( {Det \cap Gt} \right)/Gt \), and the precision is \( F = PR/\left( {\alpha R + \left( {1 - \alpha } \right)P} \right) \), where \( Gt \) represents the number of target pixels in the manually segmented image, \( Det \) represents the number of target pixels in the image segmented by the algorithm, \( Det \cap Gt \) represents the number of pixels that are accurately detected by the algorithm, and \( \alpha = 0.5 \). The regional mean of the image is \( \mu_{i} = \mathop \sum \nolimits_{{\left( {x,y} \right) \in R_{i} }} I\left( {x,y} \right)/A_{i} \), where \( i = 1\;{\text{or}}\;i = 2 \), \( R_{i} \) is the target or background region, and \( A_{i} \) is the number of pixels in the region. The variance of the region is \( \sigma_{i}^{2} = \mathop \sum \nolimits_{{\left( {x,y} \right) \in R_{i} }} \left( {I\left( {x,y} \right) - \mu_{i} } \right)^{2} \), and the consistency of the region can be expressed as \( U = 1 - \left( {\sigma_{1}^{2} + \sigma_{2}^{2} } \right)/c \), where c is the sum of the pixels of the image. Table 1 shows that the proposed algorithm describes the segmentation results with a more reasonable number of regions and has higher segmentation accuracy than the watershed algorithm with morphological gradient reconstruction and label extraction and the watershed algorithm with improved gradient and adaptive label extraction. In terms of the regional consistency index, the results of the proposed algorithm are better than those of the other two algorithms, which is basically consistent with human vision.
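The evaluation indexes can be computed from binary masks as in the following Python sketch. It assumes that the set sizes are pixel counts of boolean target masks, that both ratios use the intersection of the detected and manually segmented targets, and that the normalization constant c is the total number of pixels in the image; the function names are hypothetical. The sketch does not guard against empty masks, for which the ratios are undefined.

```python
import numpy as np


def segmentation_scores(det, gt, alpha=0.5):
    """Return (P, R, F) for a detected mask and a manual mask."""
    det = np.asarray(det, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    hit = np.logical_and(det, gt).sum()   # |Det intersect Gt|
    p = hit / det.sum()                   # rate of detection
    r = hit / gt.sum()                    # rate of correction
    f = p * r / (alpha * r + (1.0 - alpha) * p)
    return p, r, f


def region_consistency(image, target_mask):
    """U = 1 - (sigma_1^2 + sigma_2^2) / c, with c assumed to be the pixel count."""
    image = np.asarray(image, dtype=np.float64)
    target_mask = np.asarray(target_mask, dtype=bool)
    total = 0.0
    for region in (target_mask, ~target_mask):
        values = image[region]
        if values.size:
            # Sum of squared deviations from the regional mean
            total += ((values - values.mean()) ** 2).sum()
    return 1.0 - total / image.size


# Usage on a tiny example
det = np.array([[1, 1, 0, 0]])
gt = np.array([[0, 1, 1, 0]])
print(segmentation_scores(det, gt))
print(region_consistency(np.array([[1.0, 1.0, 5.0, 5.0]]),
                         np.array([[0, 0, 1, 1]])))
```

With \( \alpha = 0.5 \), F reduces to the harmonic mean of P and R, so it is high only when both rates are high.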

To assess the reliability of the proposed algorithm, 100 color disease spot images were selected from the image library for simulation tests. The statistical results for 20 of these images on the three evaluation indexes (number of regions, regional consistency, and segmentation accuracy) are shown in Figs. 6, 7, and 8, respectively. The figures show that the proposed algorithm produces fewer regions and achieves higher regional consistency and segmentation accuracy than the other two algorithms. Therefore, the proposed algorithm gives better segmentation results than the watershed algorithm based on morphological gradient reconstruction and label extraction.

4 Conclusions

In this paper, an extraction approach for color disease spot images based on the Otsu and watershed algorithms is proposed. Firstly, the proposed algorithm converts the RGB image into a new color space, separating the components that are affected by reflected light from those that are unaffected, in order to facilitate the subsequent operations. Secondly, the final gradient image is obtained by reconstructing the gradient image through opening and closing operations with structural elements of different sizes. Then, the Otsu algorithm is used to extract the labeled image from the final gradient image, and the labeled image is modified by the H-minima transform.

Finally, the watershed transform is performed on the modified labeled image, and the disease spot is extracted. The experimental results show that the proposed algorithm effectively suppresses the effect of light reflection, improves the extraction of the disease spot, and preserves the integrity of the disease spot image better than the classical methods. However, the proposed algorithm still has limitations in disease spot extraction, which will be investigated in future work.
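The Otsu step summarised above can be illustrated with a minimal sketch of the classical threshold selection rule (Otsu 1979), which picks the grey level that maximises the between-class variance of the histogram. This is only a generic version on a flat list of 8-bit values. The function name is hypothetical, and the way the authors apply the threshold to the reconstructed gradient image, together with the H-minima and watershed stages, is not reproduced here.

```python
from typing import Sequence


def otsu_threshold(pixels: Sequence[int], levels: int = 256) -> int:
    """Return the level t that maximises the between-class variance.

    Pixels with value <= t form one class and the remaining pixels the other.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the lower class
    sum0 = 0  # sum of grey levels in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue  # lower class still empty
        w1 = total - w0
        if w1 == 0:
            break     # upper class empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance up to the constant factor 1 / total**2.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```

Given a clearly bimodal input such as `[10] * 50 + [12] * 50 + [200] * 40 + [210] * 60`, the returned threshold falls between the two clusters, so comparing each value with it separates the dark pixels from the bright ones.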

References

Chen Y, Chen J (2014) A watershed segmentation algorithm based on ridge detection and rapid region merging. In: IEEE international conference on signal processing, communications and computing, pp 420–424

Cuiyun L, Caiming Z, Shanshan G (2014) A method based on geodesic distance for image segmentation and denoising. Res J Appl Sci Eng Technol 7(9):1837–1841

Ding Y, Zhang J, Suk LW, Minzan LI (2017) Segmentation of tomato leaves from canopy images by combination of wavelet transform and watershed algorithm. Trans Chin Soc Agric Mach 48(9):32–37

Gonzalez MA, Meschino GJ, Ballarin VL (2013) Solving the over segmentation problem in applications of watershed transform. J Biomed Graph Comput 3(3):29–40

Gu Q, Chen J, Aoyama T, Ishii I (2017) An efficient watershed algorithm for preprocessed binary image. In: IEEE international conference on information and automation, pp 1781–1786

Jiji GW (2016) Analysis of hippocampus in multiple sclerosis-associated depression using image processing. Int J Biomed Eng Technol 20(4):369

Lai J (2010) Research on intelligent diagnosis of corn disease based on disease image. Doctoral dissertation, Shihezi University

Li J (2010) Research and implementation of intelligent spot image processing algorithm on corn leaf. Doctoral dissertation, Beijing University of Posts and Telecommunications

Ma J, Du K, Zhang L, Zheng F, Sun Z (2017) A segmentation method for greenhouse vegetable foliar disease spots images using color information and region growing. Comput Electron Agric 142(142):110–117

O'Callaghan RJ, Bull DR (2005) Combined morphological-spectral unsupervised image segmentation. IEEE Trans Image Process 14(1):49–62

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66

Qiang C, Liu YQ, Jian C, Hai-Sheng LI, Jun-Ping DU (2017) A watershed image segmentation algorithm based on self-adaptive marking and interregional affinity propagation clustering. Acta Electronica Sinica 45(8):1911–1918

Raja NSM, Rajinikanth V, Latha K (2014) Otsu based optimal multilevel image thresholding using firefly algorithm. Model Simul Eng 2014:1–17

Razavitoosi SL, Samani JMV (2016) Evaluating water management strategies in watersheds by new hybrid fuzzy analytical network process (fanp) methods. J Hydrol 534:364–376

Romero-Zaliz R, Reinoso-Gordo JF (2018) An updated review on watershed algorithms. In: Soft computing for sustainability science, pp 235–258

Satapathy SC, Sri MRN, Rajinikanth V, Ashour AS, Dey N (2018) Multi-level image thresholding using Otsu and chaotic bat algorithm. Neural Comput Appl 29(12):1285–1307

Shanmugavadivu P, Jeevaraj PSE (2011) Modified partial differential equations based adaptive two-stage median filter for images corrupted with high density fixed-value impulse noise. Commun Comput Inf Sci 204:376–383

Verdú-Monedero R, Angulo J, Serra J (2010) Anisotropic morphological filters with spatially-variant structuring elements based on image-dependent gradient fields. IEEE Trans Image Process 20(1):200–212

Wang J, Zhang L, Lu F, Wang X (2014) The segmentation of wear particles in ferrograph images based on an improved ant colony algorithm. Wear 311(1–2):123–129

Wang L, Wang S, Deng Y (2017) Under water animals detecting robot based on watershed algorithm. In: International conference on mechatronics engineering and information technology

Wei T, Gao Q, Ma N, Li N, Wang J, Lei P et al (2016) Feature-level image fusion through consistent region segmentation and dual-tree complex wavelet transform. J Imaging Sci Technol 60:20502-1–20502-11

Yan F, Tian Y, Wu H, Zhou Y (2014) Iris segmentation using watershed and region merging. In: IEEE conference on industrial electronics and applications. IEEE, pp 835–840

Yujin Z (1996) Classification and comparison of image segmentation evaluation techniques. Chin J Image Graph 1(2):151–158

Zhang Y, Cheng X (2010) Medical image segmentation based on watershed and graph theory. In: International congress on image and signal processing, vol 3. IEEE, pp 1419–1422

Zhang J, Hu Z, Han G, He X (2016) Segmentation of overlapping cells in cervical smears based on spatial relationship and overlapping translucency light transmission model. Pattern Recognit 60(C):286–295

Acknowledgements

The work is supported in part by Science and Technology Project of Guangdong Province under Grant 2017A010101037, by the National Natural Science Foundation of China with the Grant No. 61773296, and by the Guangdong Academy of Science with No. 2019GDASYL-0103077.

Ethics declarations

Conflict of interest

The authors declared that they have no conflicts of interest to this work. We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

Communicated by V. Loia.

Cite this article

Xiong, L., Zhang, D., Li, K. et al. The extraction algorithm of color disease spot image based on Otsu and watershed. Soft Comput 24, 7253–7263 (2020). https://doi.org/10.1007/s00500-019-04339-y
