Abstract

Background

Flexible endoscopy is an integral part of surgical care, yet exposure to endoscopic procedures varies greatly across surgical training programs. The Society of American Gastrointestinal and Endoscopic Surgeons has developed the Fundamentals of Endoscopic Surgery (FES) program to teach and assess the fundamental knowledge and skills required to practice flexible endoscopy of the gastrointestinal tract. This report describes the validity evidence gathered during development of the FES cognitive examination.

Methods

Core areas in the practice of gastrointestinal endoscopy were identified through facilitated expert focus groups to establish validity evidence for the test content, and test items then were developed for these content areas. Participants at various levels of training and experience were prospectively enrolled for beta testing, and two versions of the FES cognitive test then were assembled based on the beta testing data. The Angoff and contrasting-groups methods were used to determine the passing score. Validity evidence was established by correlating experience level with examination score.

Results

A total of 220 test items were developed in accordance with the defined test blueprint and assembled into two versions of 120 questions each, which were randomly assigned to 363 participants. The correlation between test score and training level was high (r = 0.69), and similar results were noted for contrasting groups based on endoscopic rotation and endoscopic procedural experience. Items then were selected for two final test forms of 75 items each, and a passing score was established.

Conclusions

The FES cognitive examination is the first test with validity evidence to assess the basic knowledge needed to perform flexible endoscopy. Combined with the hands-on skills examination, this assessment tool is a key component for FES certification.


The surgical management of disease has evolved from a long tradition of open surgical procedures to a vast array of minimal access techniques. The advantages of laparoscopic techniques include improved outcomes and reduced morbidity for the treatment of several disease processes. Training in laparoscopic surgery currently is an integral part of education in general surgery.

Flexible endoscopy is an important tool in managing surgical patients. Surgeons often use flexible endoscopy to locate and treat pathology, establish enteral access, and confirm the adequacy of operative procedures. Surgeon-led endoscopic innovation has produced procedures and techniques that have been adopted by surgeons and gastroenterologists alike, including percutaneous endoscopic gastrostomy and endoscopic retrograde cholangiopancreatography (ERCP) [3, 4]. Unlike training for laparoscopy, the training of surgeons to perform flexible endoscopy is variable, and no standardized outcome measures exist for assessing cognitive knowledge and technical skills.

The Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) has been at the forefront of surgical education in minimal access techniques. SAGES has successfully developed and provided validity evidence for the Fundamentals of Laparoscopic Surgery (FLS), which serves as a critical component of surgical education worldwide [5]. In concert with the American College of Surgeons, the American Board of Surgery has adopted successful completion of FLS as a requirement for board certification in general surgery.

To address the need for consistency in endoscopic training and to measure fundamental knowledge and skills, SAGES formed a task force to create the Fundamentals of Endoscopic Surgery (FES). The mission of the FES task force was to provide an examination of the cognitive and hands-on skills required to perform basic flexible endoscopy. In this report, we describe the development of the FES cognitive examination and the validity evidence for use of the examination results.

Materials and methods

Test development

The FES cognitive examination, which measures the knowledge and judgment underlying safe, basic flexible gastrointestinal endoscopy, was developed by SAGES in consultation with Kryterion Inc. (Phoenix, AZ, USA), a company with extensive experience in developing high-stakes examinations. The cognitive examination is one of three requirements for obtaining FES certification; the other two are possession of a valid physician’s license to practice medicine and successful completion of the hands-on skills component of the FES examination.

Successful achievement of FES certification alone is not sufficient grounds for the independent practice of flexible endoscopy. Specifically, the candidate also must gain appropriate clinical experience in a proctored training environment. Advanced procedures such as ERCP, endoscopic ultrasound, and deep enteroscopy are not part of the FES program.

The core content areas in the practice of gastrointestinal endoscopy were identified by facilitated expert focus groups through a collaborative and iterative process involving practicing endoscopists from surgery and gastroenterology. Individuals who pass the cognitive examination are considered to have the knowledge and clinical judgment necessary to practice basic endoscopy of the gastrointestinal tract, including upper and lower endoscopy. In addition, they are expected to have the cognitive knowledge to use devices for tissue sampling, hemostasis, tissue ablation and removal, dilation, and enteral access. This process resulted in a detailed test blueprint.

A test item-writing workshop was undertaken with experts in flexible endoscopy to develop multiple-choice questions according to the test blueprint. These experts represented a subset of the FES task force and were identified as high-volume clinicians in the field of flexible endoscopy. The test items were rigorously reviewed by the FES task force and Kryterion for psychometric properties and grammar editing. A congruency and accuracy review then was performed followed by a final edit for spelling, punctuation, and style. A pool of 220 items was created for beta testing.

Beta testing

Participants at various levels of training and experience were prospectively recruited for beta testing. In all, 393 participants were recruited from 34 North American institutions and 1 participant each from Italy, Turkey, Saudi Arabia, and Mexico. Institutional review board (or equivalent) approval was obtained at each participating facility. To ensure a wide range of experience levels for beta testing, recruitment included postgraduate year 1 (PGY-1) through PGY-5 residents in general surgery, surgical and gastroenterology fellows, attending surgeons, and attending gastroenterologists.

The pool of 220 items was used to assemble two forms of 120 items each, so some items appeared on both forms. Both forms were stratified by the basic content areas and then were randomly assigned to beta test participants. All examinations were administered online via Webassessor (Kryterion, Phoenix, AZ, USA) at secure, high-stakes testing locations.

To establish validity evidence based on internal structure, an in-depth statistical analysis was undertaken. Statistics presented for each item included p values (the proportion of examinees answering the item correctly), point-biserial correlations, ability-by-option analysis, completion time, and item-option statistics. Items exhibiting unusual statistical performance were reviewed in detail by the FES task force, which made a final determination to retain, delete, rework, or retire each such item from the item bank. The item analysis and item selection results then were used to create two comparable final test forms (Forms A and B) of 75 items each according to the test blueprint.
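The two item statistics named above can be illustrated with a minimal Python sketch. It assumes items are scored 0/1 and uses the corrected point-biserial correlation (the item score versus the raw score on the remaining items); the paper does not state which variant was reported, and the function names and response matrix are hypothetical.

```python
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)
    sx, sy = pstdev(x), pstdev(y)
    if sx == 0 or sy == 0:
        return float("nan")  # undefined when either variable is constant
    return cov / (sx * sy)


def item_analysis(responses):
    """responses[e][i] is 1 if examinee e answered item i correctly, else 0.

    For each item, returns the p value (proportion of examinees answering
    correctly) and a corrected point-biserial correlation: the Pearson
    correlation between the 0/1 item score and the examinee's raw score on
    the remaining items.
    """
    totals = [sum(row) for row in responses]  # raw score = number correct
    stats = []
    for i in range(len(responses[0])):
        item = [row[i] for row in responses]
        rest = [t - x for t, x in zip(totals, item)]  # drop the item itself
        stats.append({"item": i, "p": mean(item), "r_pb": pearson(item, rest)})
    return stats


# Hypothetical 5-examinee x 4-item response matrix, for illustration only.
example = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
for row in item_analysis(example):
    print(row)
```

Items with a very high or very low p value, or with a near-zero or negative point-biserial correlation, are typically the ones flagged for the kind of detailed task-force review described above.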

To establish validity evidence based on relationships to external variables, data were gathered at beta testing on those variables expected to relate to knowledge of endoscopy. These variables included current level of training, participation in a formal endoscopic rotation, and number of upper and lower endoscopic cases managed. These groupings formed the basis of the contrasting-groups analysis.

The raw exam score was defined as the number of questions a participant answered correctly from the 75-item test. Correlations between these variables and mean test scores were examined to ascertain whether they were related in the expected manner. Tables of mean scores across various groupings of these variables also were inspected.

The mean raw score also was compared across experience levels (PGY-1 to PGY-5, fellow, attending surgeon), and the correlation between experience level and score was computed. One-way analysis of variance (ANOVA) was performed to test whether mean raw test scores differed significantly across experience levels, and Tukey post hoc comparisons were made to identify specific between-group differences. A p value lower than 0.05 was considered significant.
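A minimal sketch of the one-way ANOVA F statistic and its effect size (eta squared, the proportion of score variance explained by experience level), written in plain Python with hypothetical scores and a hypothetical function name. It computes only the F ratio; in practice the p value and the Tukey post hoc comparisons would come from a statistics package, for example scipy.stats.f_oneway and, in recent SciPy versions, scipy.stats.tukey_hsd.

```python
from statistics import mean


def one_way_anova(groups):
    """One-way ANOVA on raw scores grouped by experience level.

    groups maps a group label (e.g. "PGY-1") to a list of raw scores.
    Returns (F, eta_squared), where eta_squared = SS_between / SS_total is
    the proportion of score variance explained by group membership.
    """
    means = {label: mean(scores) for label, scores in groups.items()}
    all_scores = [x for scores in groups.values() for x in scores]
    grand = mean(all_scores)
    ss_between = sum(len(s) * (means[g] - grand) ** 2 for g, s in groups.items())
    ss_within = sum((x - means[g]) ** 2 for g, s in groups.items() for x in s)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, eta_squared


# Hypothetical raw scores (out of 75) for three experience levels.
scores_by_level = {
    "PGY-1": [40, 44, 42],
    "PGY-3": [48, 50, 52],
    "Attending": [58, 60, 62],
}
f_stat, eta_sq = one_way_anova(scores_by_level)
print(f"F = {f_stat:.1f}, eta^2 = {eta_sq:.3f}")  # F = 61.0, eta^2 = 0.953
```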

Standard-setting: determination of the passing score

Both content- and examinee-based standard-setting methods were used to determine a passing score for the final 75-item test. A modified Angoff method was used in a special meeting with a panel of 11 subject matter experts. At this meeting, the test definition and blueprint were reviewed, and a discussion was led by a facilitator to identify what a “minimally qualified” or “just acceptable” candidate might possess in terms of the knowledge, skills, and abilities noted in the test blueprint.

After the discussion, the panelists were asked to judge independently, for each question, whether a “minimally qualified” candidate would answer it correctly. The results of the first round were discussed and discrepancies among the panelists examined, after which a second and final round of item ratings was carried out and summarized.
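The yes/no ratings described above translate into a cut score by simple counting. The following minimal sketch assumes that each panelist's implied cut score is the number of items judged answerable by a minimally qualified candidate, averaged across panelists and reported with its standard error; the function name and ratings are hypothetical, and the FES panel itself comprised 11 experts rating the 75-item test.

```python
import math
from statistics import mean, stdev


def yes_no_angoff(ratings):
    """Yes/No (modified) Angoff cut score from one round of panel ratings.

    ratings[j][i] is 1 if panelist j judged that a minimally qualified
    candidate would answer item i correctly, else 0. Each panelist's implied
    cut score is the number of items marked 1; the recommended cut score is
    the panel mean, reported with its standard error.
    """
    per_panelist = [sum(r) for r in ratings]
    cut = mean(per_panelist)
    se = stdev(per_panelist) / math.sqrt(len(per_panelist))
    return cut, se, per_panelist


# Hypothetical second-round ratings: 3 panelists x 5 items.
ratings = [
    [1, 1, 1, 0, 0],
    [1, 1, 1, 1, 0],
    [1, 1, 0, 0, 0],
]
cut, se, per_panelist = yes_no_angoff(ratings)
print(cut, round(se, 3), per_panelist)
```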

Contrasting-groups methodology also was used to help determine an appropriate passing score. Analysis from beta testing showed that three demographic variables were significantly correlated with the scores of the cognitive examination: (1) current level of training, (2) participation in a formal endoscopic rotation, and (3) number of upper and lower endoscopic cases performed. For each variable, the contrasting groups were as follows: those who had training at a PGY-4 level or above versus those who had training at a PGY-3 level or below, those who had a formal endoscopic rotation versus those who had no rotation, and those who had performed more than 50 endoscopic cases (upper endoscopy or colonoscopy) versus those who had performed 50 or fewer cases. Pearson product-moment correlations between contrasting-group membership and examination score were calculated for each of these variables.
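Because each contrasting-group variable is dichotomous, its Pearson product-moment correlation with examination score is a point-biserial correlation, which depends directly on the difference between the two group means. The minimal sketch below, using hypothetical data and function names, checks that the general Pearson formula and the closed-form point-biserial expression agree.

```python
import math
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson product-moment correlation (population moments)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)
    return cov / (pstdev(x) * pstdev(y))


def point_biserial(group, scores):
    """Closed form for a 0/1 grouping: r = (M1 - M0) / s * sqrt(p * q),
    where M1 and M0 are the group mean scores, s is the population SD of
    all scores, and p and q are the proportions in groups 1 and 0."""
    hi = [s for g, s in zip(group, scores) if g == 1]
    lo = [s for g, s in zip(group, scores) if g == 0]
    p = len(hi) / len(scores)
    return (mean(hi) - mean(lo)) / pstdev(scores) * math.sqrt(p * (1 - p))


# Hypothetical data: 1 = PGY-4 or above, 0 = PGY-3 or below.
senior = [0, 0, 0, 1, 1, 1]
raw = [40, 48, 45, 55, 62, 58]
print(round(pearson(senior, raw), 3), round(point_biserial(senior, raw), 3))
```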

For each standard-setting method, a range of acceptable passing scores was determined, and the consequences (predicted failing and passing rates) were established, together with the sensitivity and specificity of each candidate passing score. In this context, sensitivity was the percentage of examinees expected to pass (based on their training, rotation, or cases performed) who in fact passed at the selected passing score. The false-positive rate, given by 1 minus the specificity, was the percentage of examinees not expected to pass who nevertheless passed at the selected passing score. The final passing score was a compromise among the scores supported by the various methods, with somewhat more emphasis placed on reducing false-positives.
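Sensitivity and the false-positive rate for a candidate passing score can be computed directly from beta-test scores and contrasting-group labels. This minimal sketch assumes that an examinee passes when the raw score is at or above the cut; the scores, labels, and function name are hypothetical.

```python
def classification_rates(scores, expected_pass, cut):
    """Sensitivity and false-positive rate (1 - specificity) at a cut score.

    expected_pass[k] is True if examinee k belongs to the more experienced
    contrasting group (expected to pass). An examinee is assumed to pass
    when their raw score is at or above the cut.
    """
    passed = [s >= cut for s in scores]
    n_expected = sum(expected_pass)
    n_not_expected = len(scores) - n_expected
    true_pos = sum(p and e for p, e in zip(passed, expected_pass))
    false_pos = sum(p and not e for p, e in zip(passed, expected_pass))
    return true_pos / n_expected, false_pos / n_not_expected


# Hypothetical raw scores; True = e.g. more than 50 endoscopic cases.
scores = [38, 42, 47, 51, 49, 55, 58, 63]
expected = [False, False, False, False, True, True, True, True]
for cut in (48, 50, 52):
    sens, fpr = classification_rates(scores, expected, cut)
    print(f"cut={cut}: sensitivity={sens:.2f}, false-positive rate={fpr:.2f}")
```

In this toy example, cuts of 48, 50, and 52 give sensitivities of 1.00, 0.75, and 0.75 with false-positive rates of 0.25, 0.25, and 0.00. Raising the cut from 50 to 52 removes the single false-positive without lowering sensitivity, which is the kind of trade-off weighed when more emphasis is placed on reducing false-positives.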

Results

Five core content areas were identified for the FES cognitive exam, namely, endoscope technology and equipment, patient preparation, sedation, general gastrointestinal endoscopy, and endoscopic therapies. The detailed subjects within these content areas are listed in Table 1. The expectations for the candidate taking the FES cognitive examination are summarized in Table 2.

For beta testing, 363 candidates were prospectively enrolled and randomly assigned to one form of the FES cognitive examination (120 items on each form): 195 took Form 1 and 168 took Form 2. Based on the test blueprint, the core content area distributions were identical for the two forms. From the item analysis of the 220 items (i.e., test questions), 140 were selected for inclusion in two final forms, labeled Form A and Form B, of 75 items each. The content of the two forms was identically balanced, and a 13 % overlap of items between the forms allowed them to be statistically equated.
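The 13 % figure can be checked with a short calculation, assuming the 140 selected items are exactly the distinct items appearing across Forms A and B:

$$|A \cap B| = |A| + |B| - |A \cup B| = 75 + 75 - 140 = 10, \qquad \frac{|A \cap B|}{|A|} = \frac{10}{75} \approx 13.3\,\%$$

Under the analogous assumption that all 220 pooled items were used in beta testing, the two 120-item beta forms shared $2 \times 120 - 220 = 20$ items, and the $195 + 168 = 363$ administrations match the reported number of beta candidates.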

To establish validity evidence based on relationships to other variables, the examination scores then were related to variables describing the beta test-takers’ level of experience. The cognitive examination scores correlated positively with training level (PGY-4 or higher vs PGY-3 or lower), participation in a formal endoscopic rotation, and number of endoscopic cases performed (>50 vs ≤50 cases), with respective correlations of 0.67, 0.48, and 0.71.

Table 3 shows the mean raw cognitive examination scores for these contrasting groups. Higher levels of training were strongly correlated with higher raw scores (r = 0.69; Table 4, Fig. 1). In general, test-takers with higher levels of training, formal endoscopic rotation experience, and more endoscopic case experience had higher examination scores than those in the less experienced groups, providing validity evidence for the examination.

Analysis of variance showed that mean raw scores on the FES cognitive examination differed significantly by participant experience level (p < 0.05). The effect size (η²) indicated that 50.46 % of the variance in examination scores was explained by experience level.

The results of the post hoc analysis are detailed in Table 5. The test-takers in each experience level group had higher examination scores than the test-takers in the lower experience level group or groups. Based on these data, those with more experience are more likely to earn higher scores on the FES cognitive examination.

Each method for determining a passing score yielded a recommended range of acceptable scores. The range recommended by the Angoff method was somewhat higher (more demanding) than the ranges from the contrasting-groups analyses but still within the standard error of the panelists’ recommendations. The final passing score was selected by evaluating the sensitivity and specificity data for each method and weighting the false-positive error (1 minus the specificity) more heavily than the false-negative error, because candidates are allowed to retake the examination.

Given the projected passing score and the beta test data, the percentage of participants passing in each group of interest is shown in the final columns of Tables 3 and 4. For the contrasting groups in Table 3, these percentages represent the sensitivity (true-positives) and 1 minus the specificity (false-positives) for the final raw cut score selected for the cognitive examination.

Discussion

This report describes the development of the cognitive component of the FES examination and the validity evidence for the use of its results. The test was developed by experts in the field using empiric data and psychometric principles to establish validity evidence for the test content. The cognitive assessment is intended to be used in concert with the hands-on skills evaluation for FES certification. FES certification alone is not sufficient for the independent practice of gastrointestinal endoscopy in clinical settings; proceduralists also must gain appropriate clinical experience in a proctored training environment and abide by local credentialing policies. As an aid to credentialing, FES certification provides decision-making bodies with a rigorous and empirically validated tool for measuring basic knowledge and skills.

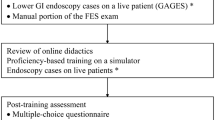

Most assessments of gastrointestinal endoscopy have focused on the technical component of procedural performance, and an increasing number of tools have been developed to this end. The Global Assessment of Gastrointestinal Endoscopic Skills (GAGES) was developed to evaluate technical skills in flexible endoscopy and has demonstrated significant validity evidence [8]. This tool, however, relies on a supervising proceduralist to evaluate a novice’s performance in an uncontrolled clinical environment, which limits the standardization of the assessment.

The American Society for Gastrointestinal Endoscopy (ASGE) has developed a robust procedure-based core curriculum covering many aspects of basic and advanced endoscopy [1, 2, 7]. This effort represents a crucial step toward ensuring that endoscopic procedures are performed with a baseline level of expertise. However, neither GAGES nor the ASGE core curriculum provides empirically developed tools to assess the cognitive knowledge needed for gastrointestinal endoscopy.

The Mayo Colonoscopy Skills Assessment Tool developed by Sedlack [6] is a tool with evidence for validity that includes a cognitive evaluation component. However, this tool, like GAGES, relies on assessment by a supervising proceduralist in the clinical environment and not on direct independent knowledge assessment of the endoscopist in a controlled environment. In addition, this tool is more focused on procedural performance than on knowledge and clinical judgment.

Debate continues regarding how to define competency and proficiency in endoscopy. Most agree that two components are needed: the cognitive knowledge required to provide care and the technical skill required for quality procedural performance. The goal of this study was to develop a tool, supported by validity evidence, that assesses the cognitive knowledge required to perform basic gastrointestinal endoscopy. This tool was designed with a goal similar to that of FLS for laparoscopy [5]. Adequate validity evidence for the test content has been established through the identification of core areas (Table 1) by an expert group of endoscopists.

Equally important, however, was the identification of validity evidence based on relationship to other variables using prospectively obtained empiric data. To our knowledge, this is the first time such rigorous methods have been used for the sole purpose of creating an examination to assess the knowledge needed to perform basic gastrointestinal endoscopy. Higher mean raw scores correlated well with higher PGY levels of training and endoscopic procedural experience. Having undergone a formal endoscopic rotation alone also correlated with better performance on the examination, although to a lesser degree. This is understandable considering that no standards exist for the duration, content, or breadth of endoscopic training rotations within and across disciplines, except for gastroenterology fellows.

Several limitations are recognized in the development of the FES cognitive examination. Surgeons with extensive experience in endoscopy and surgery were more highly represented than gastroenterologists in creating this program and defining the content areas, so it could be argued that the FES examination is biased toward surgeons. However, the cognitive knowledge and decision-making issues are similar for the common disease entities faced daily by surgeons and gastroenterologists alike. The FES task force involved gastroenterology colleagues in the development of FES, and 6 % of the beta test-takers were either practicing gastroenterologists or gastroenterology fellows.

Several concerted efforts by the ASGE, other gastroenterological societies, and some surgical societies aim to standardize endoscopic training. The critical difference between the FES cognitive examination and other efforts to date is that FES is the first program to offer an empirically validated, objective assessment of the knowledge required to perform basic gastrointestinal endoscopy. Building on the multidisciplinary success of FLS, FES is designed to transcend specialty barriers and can be applied to all physicians interested in performing gastrointestinal endoscopy regardless of discipline. The examination will be updated and kept current as knowledge and techniques change over time. To this end, the FES task force will review content and create and establish validity evidence for new test items on an ongoing basis.

In summary, with the FES cognitive examination, SAGES has developed a comprehensive tool to assess the basic knowledge needed to perform gastrointestinal endoscopy. Extensive validity evidence has been established with the goal of dissemination regardless of specialty. This assessment tool is designed as a key component for obtaining certification in FES.

References

ASGE Training Committee, Adler DG, Dua KS, DiMaio CJ, Lee LS, Bakis G, Coyle WJ, DeGregorio B, Hunt GC, McHenry L Jr, Pais SA, Rajan E, Sedlack RE, Shami VM, Faulx AL (2012) Endoluminal stent placement core curriculum. Gastrointest Endosc 76:719–724

ASGE Training Committee, DiMaio CJ, Mishra G, McHenry L, Adler DG, Coyle WJ, Dua K, DeGregorio B, Enestvedt BK, Lee LS, Mullady DK, Pais SA, Rajan E, Sedlack RE, Tierney WM, Faulx AL (2012) EUS core curriculum. Gastrointest Endosc 76:476–481

Gauderer MW, Ponsky JL, Izant RJ Jr (1980) Gastrostomy without laparotomy: a percutaneous endoscopic technique. J Pediatr Surg 15:872–875

McCune WS, Shorb PE, Moscovitz H (1968) Endoscopic cannulation of the ampulla of Vater: a preliminary report. Ann Surg 167:752–756

Peters JH, Fried GM, Swanstrom LL, Soper NJ, Sillin LF, Schirmer B, Hoffman K, SAGES FLS Committee (2004) Development and validation of a comprehensive program of education and assessment of the basic fundamentals of laparoscopic surgery. Surgery 135:21–27

Sedlack RE (2010) The Mayo colonoscopy skills assessment tool: validation of a unique instrument to assess colonoscopy skills in trainees. Gastrointest Endosc 72:1125–1133, 1133.e1–3

ASGE Training Committee, Sedlack RE, Shami VM, Adler DG, Coyle WJ, DeGregorio B, Dua KS, DiMaio CJ, Lee LS, McHenry L Jr, Pais SA, Rajan E, Faulx AL (2012) Colonoscopy core curriculum. Gastrointest Endosc 76:482–490

Vassiliou MC, Kaneva PA, Poulose BK, Dunkin BJ, Marks JM, Sadik R, Sroka G, Anvari M, Thaler K, Adrales GL, Hazey JW, Lightdale JR, Velanovich V, Swanstrom LL, Mellinger JD, Fried GM (2010) Global Assessment of Gastrointestinal Endoscopic Skills (GAGES): a valid measurement tool for technical skills in flexible endoscopy. Surg Endosc 24:1834–1841

Acknowledgments

We extend a heartfelt thanks to Carla Bryant (SAGES), Jessica Mischna (SAGES), Kaaren Hoffman, PhD (University of Southern California), and Patricia Young, MA (Kryterion, Inc.) for their extensive work and support in developing the Fundamentals of Endoscopic Surgery (FES).

Disclosures

Benjamin K. Poulose has received research support from Karl Storz Endoscopy and W.L. Gore. Melina C. Vassiliou has received research support from Covidien. Brian J. Dunkin has received honoraria from Covidien and Boston Scientific. Robert D. Fanelli has ownership interest in New Wave Surgical and has received royalties from Cook Surgical. Jose M. Martinez has received honoraria from Lifecell, Olympus, Boston Scientific, and Covidien. Jeffrey W. Hazey has ownership interest in Endoretics and has received research support from Stryker and Boston Scientific. Conor P. Delaney has received consulting fees from Tranzyme, licensing fees from Ethicon, and honoraria from Covidien, and has IP rights to Socrates Analytics. Gerald M. Fried has received research support from Covidien and has a son who works for CAE Healthcare. Jeffrey M. Marks has received honoraria from Olympus, Apollo Endosurgery, and W.L. Gore and consulting fees from GI Supply. None of these potential conflicts pertain to the content of this manuscript. John D. Mellinger, Lelan Sillin, Vic Velanovich, and James R. Korndorffer Jr have no conflicts of interest or financial ties to disclose.


Additional information

This study was conducted on behalf of the Society of American Gastrointestinal and Endoscopic Surgeons Fundamentals of Endoscopic Surgery Committee.


Cite this article

Poulose, B.K., Vassiliou, M.C., Dunkin, B.J. et al. Fundamentals of Endoscopic Surgery cognitive examination: development and validity evidence. Surg Endosc 28, 631–638 (2014). https://doi.org/10.1007/s00464-013-3220-0
