Abstract

Background

Surgical residents often use a laparoscopic camera in minimally invasive surgery for the first time in the operating room (OR) with no previous education or experience. Computer-based simulator training is increasingly used in residency programs. However, no randomized controlled study has compared the effect of simulator-based versus the traditional OR-based training of camera navigation skills.

Methods

This prospective randomized controlled study included 24 pregraduation medical students without any experience in camera navigation or simulators. After a baseline camera navigation test in the OR, participants were randomized to six structured simulator-based training sessions in the skills lab (SL group) or to the traditional training in the OR navigating the camera during six laparoscopic interventions (OR group). After training, the camera test was repeated. Videos of all tests (including of 14 experts) were rated by five blinded, independent experts according to a structured protocol.

Results

The groups were well randomized and comparable. Both training groups significantly improved their camera navigation skills with regard to time to completion of the camera test (SL P = 0.049; OR P = 0.02) and correct organ visualization (P = 0.04; P = 0.03). Horizon alignment improved without reaching statistical significance (P = 0.20; P = 0.09). Although the actual time spent on camera navigation training did not differ significantly between the groups (217 vs. 272 min, P = 0.20), the SL group spent significantly less overall time in the skills lab than the OR group spent in the operating room (302 vs. 1002 min, P < 0.01).

Conclusion

This is the first prospective randomized controlled study to indicate that skills acquired in simulator-based camera navigation training transfer to the OR, using traditional hands-on training as the control. In addition, simulator camera navigation training for laparoscopic surgery is as effective as traditional teaching but more time efficient.


The emergence of laparoscopic surgery and growing economic pressure in the medical field demand the establishment of new, more efficient educational training standards for residents to acquire surgical skills [1–3]. The first contact with laparoscopic surgery that students or residents have is the role of the “camera-man” in the operating room. Current training curricula make novices start using a camera in laparoscopic surgery mostly without any previous education or experience.

Virtual reality (VR) simulators are currently being evaluated for inclusion in surgical training curricula [4–6], mainly because skills learned on a simulator can be transferred to the operating room (OR) [7–11]. While most of this research focuses on the training of surgical operative skills in a simulation-based environment, only one study investigated the effect of simulator-based training on camera performance [12], showing a benefit of VR camera training versus no training in a porcine model. Adequate use of a 30° angled laparoscopic camera incorporates a whole set of skills such as anatomical orientation, horizon alignment, and scope orientation [13]. The absence of these skills may be associated with a decrease in general surgical performance, an increase in operating time [1, 14] and surgeon frustration. In addition, studies [12, 13] showing a transfer of manipulative skills learned on a simulator to the OR used a “nontraining” group as controls. It is obvious that a trained group performs better than a nontrained group. Therefore, the real value of simulator-based training and its transfer to the OR, especially in camera navigation, is not yet proven.

The present prospective randomized controlled study aimed to determine whether focused VR simulator-based laparoscopic camera training of novices could improve camera performance in an actual clinical situation in the same manner as does traditional training in the OR.

Material and methods

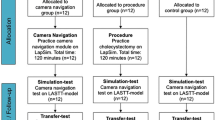

Thirty-two consecutive medical students on a surgical clerkship at the Department of Visceral and Transplantation Surgery of the University Hospital of Zurich, Switzerland, were enrolled in the study. Any previous active experience in laparoscopic camera handling in the OR and/or use of a VR simulator, as assessed by a questionnaire, was an exclusion criterion (n = 2 students). The study flowchart is shown in Fig. 1. The study was approved by the IRB of the University Hospital of Zurich and was registered on ClinicalTrials.gov (identifier NCT01092013).

All eligible participants (n = 30 students) completed the validated visuospatial “Stumpf-Fay cube perspective” test [15] and were given an identical 60-min introduction on the technical functionality and the correct handling of an angled laparoscopic camera to create an equal level of knowledge.

Pretraining test

Students were then sent to the OR to perform a baseline camera skills assessment test (pretraining test) involving a standardized set of navigational tasks they would have to accomplish at the beginning of an actual operation. All patients were placed in a supine position. Participants were positioned on the patient’s right side and were given the 30° angled laparoscope, already introduced through the trocar. They had to center each of the following positions/organs, hold it for 5 s, and maintain the correct horizontal alignment during camera movement: (1) left abdominal wall, (2) ascending colon, (3) right lobe of the liver, (4) sigmoid colon, (5) cecum, (6) pelvis, (7) trocar entry site in the upper left quadrant (simulated by a finger pressing externally), and (8) descending colon. Maximum duration of this test was set at 5 min. No additional trocars had to be placed and no manipulation of tissue occurred. No patient had had previous abdominal surgery. This assessment was videotaped. Fourteen camera tests performed by laparoscopic experts (>100 laparoscopic operations each) were also taped to check whether the test could distinguish between experts and novices.

Randomization and training

The students were randomized by sealed, opaque envelopes into two groups to obtain camera training for the next 3 weeks in either the skills lab (SL group) or the OR (OR group). Subjects in the SL group trained twice a week for 1 h for 3 weeks (total of six training hours) using two Xitact IHP™ instrument haptic ports as interfaces for the laparoscopic instruments and a third unidirectional electromechanical interface, the Xitact ITP™ instrument tracking port, for the camera navigation (Mentice AB, Gothenburg, Sweden) with the LAP Mentor™ (Simbionix USA, Cleveland, OH) software. As a second simulator, a haptic ProMIS™ surgical hybrid simulator (Haptica Ltd., Dublin, Ireland) was used. They had to follow a standardized protocol, performing 40 min of camera navigation-specific tasks on the different simulators (25 min on the basic task modules of the Lap Mentor camera manipulation and 15 min on the laparoscope orientation Core modules on the ProMIS) and 20 min of training on noncamera or camera-specific simulator exercises of free choice. After every two sessions, progress was monitored by an expert and corrections for improvement were made as necessary. Total time spent in the skills lab and actual time using the different individual exercise modules were documented by the participant immediately after each training session using standard forms.

Participants randomized to the OR group assisted at six laparoscopic surgeries (including hemicolectomy, rectum resection, gastric bypass, and cholecystectomy), navigating the camera at the surgeon’s direction. They were trained the traditional way by immediate hands-on practice in the OR. Total time spent in the OR and actual time navigating the camera were documented by the participant immediately after each operation using standard forms.

Post-training test

After both groups were trained for 3 weeks, the same “camera assessment” test in the OR (post-training test) and the validated visuospatial test were repeated.

Analysis of videotapes

All videotapes (of participants and of experts) were independently reviewed by five blinded observers, all experienced surgical attending physicians. For each of the eight steps (see above), performance of (a) horizon alignment and (b) centered, steady organ visualization was graded using a predefined rating scale (4 points: achieved goal >75% of time, 3 points: >50%, 2 points: >25%, or 1 point: ≤25%). Each of the two scores therefore ranged from 8 (minimum) to 32 (maximum). Correct orientation of the angled scope (30° optic) for step 7 was also assessed (yes or no). Time to complete the test was noted, and time spent for lens cleaning or technical problems was subtracted.
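The rating scale described above can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, and the sketch assumes that the share of time a goal was achieved is available as a number, whereas in the study the raters judged it from the videotapes.

```python
from typing import Sequence


def points_for_fraction(fraction: float) -> int:
    """Map the share of time a goal was achieved (0.0 to 1.0) to 1-4 points."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must lie between 0 and 1")
    if fraction > 0.75:
        return 4
    if fraction > 0.50:
        return 3
    if fraction > 0.25:
        return 2
    return 1  # goal achieved 25% of the time or less


def criterion_score(fractions: Sequence[float]) -> int:
    """Sum the points over the eight test steps for one criterion.

    The same function applies to horizon alignment and to organ
    visualization; each criterion is scored separately.
    """
    if len(fractions) != 8:
        raise ValueError("the camera test has exactly eight steps")
    return sum(points_for_fraction(f) for f in fractions)


# Hypothetical example: a participant who meets the goal fully in six
# steps and about half of the time in the remaining two.
example = [1.0, 1.0, 0.5, 1.0, 1.0, 0.6, 1.0, 1.0]
print(criterion_score(example))
```

With one to four points per step, the lowest possible total per criterion is 8 and the highest is 32.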

Hypothesis

We hypothesized that both groups would show improvement in the same camera skills as assessed by the videotaped camera test, but that the OR group would be significantly less efficient regarding the proportion of actual camera navigating time over the overall time spent in the respective training facility (OR vs. skills lab).

Statistical analysis

A sample size of 12 participants in each group was calculated to provide 80% power to detect a difference in means of 100 min (assuming a mean of 300 ± 15 min of camera training for the SL group and a mean of 400 ± 115 min for the OR group) using a two-group Satterthwaite t-test with a two-sided significance level of 0.05.
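The sample size statement can be checked approximately with the Python standard library. The sketch below computes the standard error and the Satterthwaite degrees of freedom from the planning values, finds the two-sided critical t value numerically, and then uses a normal approximation to the noncentral t distribution for the power. The approximation and all function names are assumptions made for illustration; the software and exact method used for the original calculation are not stated in the text.

```python
import math
from statistics import NormalDist


def welch_se_and_df(sd1, n1, sd2, n2):
    """Standard error of the mean difference and Satterthwaite df."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    se = math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return se, df


def t_pdf(t, df):
    """Density of Student's t distribution."""
    log_norm = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    return (math.exp(log_norm) / math.sqrt(df * math.pi)
            * (1 + t * t / df) ** (-(df + 1) / 2))


def t_cdf(x, df, steps=2000):
    """CDF for x >= 0, using Simpson's rule on [0, x] (steps must be even)."""
    h = x / steps
    total = t_pdf(0.0, df) + t_pdf(x, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 + total * h / 3


def t_quantile(p, df):
    """Quantile for p > 0.5, found by bisection on the CDF."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def approx_power(delta, sd1, n1, sd2, n2, alpha=0.05):
    """Approximate two-sided power; the far rejection tail is ignored."""
    se, df = welch_se_and_df(sd1, n1, sd2, n2)
    t_crit = t_quantile(1 - alpha / 2, df)
    return NormalDist().cdf(delta / se - t_crit)


# Planning values from the text: 300 +/- 15 min (SL) vs. 400 +/- 115 min (OR).
se, df = welch_se_and_df(15, 12, 115, 12)
power = approx_power(100, 15, 12, 115, 12)
print(f"SE = {se:.1f} min, df = {df:.1f}, approximate power = {power:.2f}")
```

Under these assumptions the approximation lands close to the stated 80%, which suggests that the planning values and the sample size of 12 per group are mutually consistent.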

Statistical analysis was performed using standard software SPSS v16 for Windows (SPSS, Inc., Chicago, IL). To compare continuous variables between the two groups, the Mann–Whitney U test was used. Categorical variables were compared using the χ2 test or, when appropriate, Fisher’s exact test. A P value less than 0.05 was considered to indicate statistical significance. An interrater reliability analysis calculating the single-measure intraclass correlation was performed to determine consistency among raters of the videotapes.
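For readers unfamiliar with the single-measure intraclass correlation, the following Python sketch shows one common variant, the two-way consistency form often written as ICC(3,1). Which ICC model was selected in SPSS is not stated in the text, so the choice of this form is an assumption, and the ratings in the example are hypothetical values made up for illustration, not study data.

```python
def icc_consistency_single(ratings):
    """ICC(3,1): two-way model, consistency, single measure.

    ratings: one row per rated video, one column per rater.
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete table with >= 2 rows and >= 2 raters")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA decomposition without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    denominator = ms_rows + (k - 1) * ms_error
    if denominator == 0:
        raise ValueError("ICC is undefined when all ratings are identical")
    return (ms_rows - ms_error) / denominator


# Hypothetical organ visualization scores: four videos, five raters.
hypothetical_scores = [
    [30, 31, 29, 32, 30],
    [22, 25, 21, 24, 23],
    [27, 26, 25, 29, 27],
    [18, 21, 19, 22, 18],
]
print(round(icc_consistency_single(hypothetical_scores), 2))
```

In the consistency form, a rater who is systematically stricter or more lenient than the others does not lower the coefficient; only disagreement about the relative ranking of the videos does.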

Results

Thirty medical students fulfilled the inclusion criteria and were randomized. Six trainees dropped out during the study because they failed to acquire enough training time (n = 2) or to attend the two necessary camera assessment tests (n = 4). Therefore, 24 trainees completed the study.

Baseline demographics and pretraining test results

No difference in baseline characteristics of the 24 participants was noted (Table 1). The pretraining camera assessment tests did not reveal any group difference (Table 1). The 14 experts performed significantly better in the camera assessment test than the 24 participants, demonstrating that the test can distinguish between novices and experts (construct validity): organ visualization score (30.9 ± 1.2 vs. 23.7 ± 4.2, P < 0.001), horizon alignment score (29.2 ± 1.5 vs. 21.4 ± 4.1, P < 0.001), time to completion (69 ± 12 s vs. 171 ± 65 s, P < 0.001), and percentage of correct camera rotation (93% vs. 100%, P < 0.001). The interrater reliability among the five independent experts grading all camera tests, expressed as the single-measure intraclass correlation, was 0.68 for organ visualization and 0.66 for horizon alignment.

Training was performed according to the study protocols and was efficient

The analysis of the training protocols showed that participants in the SL group adhered to the standardized 40-min protocol. They spent their remaining 20 min of free choice mainly performing manipulative task exercises, divided equally between the two simulators. The time actually spent training on camera navigation was comparable in both groups. However, participants in the OR group spent significantly more overall time in the OR to do so (Table 2).
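As a rough illustration of this comparison, the share of facility time actually spent on camera navigation can be worked out from the group mean times reported in the Abstract (217 of 302 min for the SL group, 272 of 1002 min for the OR group). This is a sketch only: the function name is hypothetical, and dividing one group mean by another is an assumption that may differ from a proportion computed per participant.

```python
def navigation_share(camera_min: float, total_min: float) -> float:
    """Proportion of facility time spent actually navigating the camera."""
    if total_min <= 0 or not 0 <= camera_min <= total_min:
        raise ValueError("need 0 <= camera_min <= total_min and total_min > 0")
    return camera_min / total_min


# Group mean times in minutes, as reported in the Abstract.
sl_share = navigation_share(217, 302)    # skills lab group
or_share = navigation_share(272, 1002)   # operating room group

print(f"SL group: {sl_share:.0%} of skills lab time on camera navigation")
print(f"OR group: {or_share:.0%} of OR time on camera navigation")
```

On these figures, roughly 72% of the time in the skills lab was spent on camera navigation, compared with about 27% of the time in the OR.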

Post-training test results

The post-training test results of the SL group and the OR group are compared in Table 2, the pretraining and post-training test results for each group are compared in Table 3, and the difference in progress between the two groups is listed in Table 4. Both groups showed significant progress in the organ visualization score and a significant decrease in mean time to complete the test. Improvements in horizon alignment and scope rotation handling were not significant. There was no significant difference in progress between the groups for any of the parameters.

Discussion

To our knowledge this is the first prospective randomized study indicating that simulator-based camera navigation training can be transferred to the OR using the traditional hands-on training in the OR as control rather than a “nontraining” control group as used in other simulator studies [16–18]. Our study showed that traditional teaching in the OR still achieved its goal of improved and correct camera navigation but it was not as time efficient as VR simulator-based training. Camera handling generally represents the first step in today’s laparoscopic training for residents and is considered the basis for the acquisition of other laparoscopic skills. So far, current training standards make novices start using a camera in laparoscopic surgery mostly without any previous education or experience in the matter [19, 20]. As shown in this study, simulator-based camera navigation training is effective and highly time efficient and therefore should be integrated early into the training curriculum.

Surgical residency programs worldwide have been trying to integrate simulator-based training into their standard education [4, 5, 20]. However, integrating such skills labs into a standard surgical training curriculum is a demanding task [21]. Residents’ time constraints, the need for additional infrastructure including human resources (teachers), and the ambiguity about what such a curriculum should look like in detail [4, 22] are just a few of many important factors. It has been reported that unsupervised use of a surgical skills lab leads to poor participation, with students failing to accumulate significant training time [5, 22]. Thus, training should be mandatory, follow a dedicated schedule, and be at least partially supervised; this has been shown to increase the attendance rate substantially [22]. In this study we used a simple and short curriculum of 1 h twice a week for 3 weeks. The first 40 min of every hour included standardized exercises on simulators followed by 20 min of free exercises. After every two sessions, progress was monitored by a teacher and corrections were made as necessary. This combination of structured, controlled training with free time to discover the simulators (the so-called “fun” part) appeared to be highly motivating: 10 of the 12 participants in the SL group returned to the simulator lab after the end of the study and 8 of the 12 in the OR group asked for further training on simulators. Trainees with little or no laparoscopic experience seem to profit most from simulator-based training [3], which indicates the importance of early incorporation into a training curriculum [6, 20]. This is supported by our finding that novices improved their camera navigation skills after completing a simple and short simulator-based training curriculum.

The main difficulty in learning laparoscopic camera handling and surgery is psychomotor and perceptual in nature [23]. The underlying spatial visualization abilities may vary significantly within the general population [24] and among residents [25]. More than ten different simulators with camera navigation training functionality are available [26], but only a small number of devices have been properly validated [10]. In this study, we used two validated computer-based simulators with haptic and nonhaptic peripheral instrument ports. Although this study was not designed to determine whether a single device or the combination of devices was responsible for developing relevant camera navigation skills, participants’ feedback suggests that a combination of different training tools is highly appreciated and increases compliance as well as motivation.

Although in this study accuracy of organ visualization, a measure of orientation in the abdomen, improved significantly after training in both groups, correct horizon alignment was unchanged after training in the SL group and improved, but not significantly, in the OR group. One could hypothesize that in simulated environments, participants can orient themselves using straight lines that indicate the correct horizon level but do not exist in a real abdomen, and thus trainees were in an unfamiliar situation during the camera test. It has been stated that experienced laparoscopic surgeons might feel unfamiliar, possibly be at a disadvantage, and learn less when training in a conceptual visual environment because of the absence of anatomical landmarks [13, 27]. As a consequence, one could argue for more camera training modules that use VR simulator software in an anatomical, not abstract, environment. However, training in the SL group was significantly more time efficient. Therefore, residents should be sent more often to simulator-based training, but not exclusively to simulations, as hands-on training is as effective and important. It is well known that an increase in operating time due to training in the OR is very expensive. Although our study was not designed to measure the effect of simulator training on overall operating time, students in the SL group achieved skills equivalent to those trained in the OR and were able to complete the camera test considerably faster, thus potentially reducing the operating time by handling the camera more efficiently. However, this needs to be proven in specially designed trials.

There are some limitations to this study. Participants in this study were students and not surgical residents, and the results might not reflect those of doctors with a particular interest in surgery. Recent studies found a correlation between visual-spatial ability test scores and manual skills performed in surgery and on a VR simulator among novices [28], but failed to show such an association in experienced surgeons [29–32]. However, most simulator studies choose students as trainees mainly because of their availability, their lack of any experience in surgery, and residents’ time constraints [12, 33, 34]. A second limitation was that the exact camera training time was not predefined in either group and hands-on training in the OR was not standardized. However, “learning by doing” most likely represents the current practice of teaching in many hospitals all over the world. Standardized, controlled, simulator-based training will become the new gold standard because it is effective and more efficient, as shown in this study, and provides objective feedback.

In summary, this is the first prospective randomized controlled study indicating that simulator-based training of camera navigation can be transferred to the OR using the traditional hands-on training in the OR as control. Correct camera navigation is the first step in laparoscopic surgery and training using simulators should be integrated in all training curricula.

References

Williams JR, Matthews MC, Hassan M (2007) Cost differences between academic and nonacademic hospitals: a case study of surgical procedures. Hosp Top 85:3–10

Bridges M, Diamond DL (1999) The financial impact of teaching surgical residents in the operating room. Am J Surg 177:28–32

Scott DJ, Bergen PC, Rege RV, Laycock R, Tesfay ST, Valentine RJ, Euhus DM, Jeyarajah DR, Thompson WM, Jones DB (2000) Laparoscopic training on bench models: better and more cost effective than operating room experience? J Am Coll Surg 191:272–283

Gerdes B, Hassan I, Maschuw K, Schlosser K, Bartholomäus J, Neubert T, Schwedhelm B, Petrikowski-Schneider I, Wissner W, Schönert M, Rothmund M (2006) Instituting a surgical skills lab at a training hospital. Chirurg 77:1033–1039

Chang L, Petros J, Hess DT, Rotondi C, Babineau TJ (2007) Integrating simulation into a surgical residency program: is voluntary participation effective? Surg Endosc 21:418–421

Panait L, Bell RL, Roberts KE, Duffy AJ (2008) Designing and validating a customized virtual reality-based laparoscopic skills curriculum. J Surg Educ 65:413–417

Ahlberg G, Heikkinen T, Iselius L, Leijonmarck CE, Rutqvist J, Arvidsson D (2002) Does training in a virtual reality simulator improve surgical performance? Surg Endosc 16:126–129

Cosman PH, Hugh TJ, Shearer CJ, Merrett ND, Biankin AV, Cartmill JA (2007) Skills acquired on virtual reality laparoscopic simulators transfer into the operating room in a blinded, randomised, controlled trial. Stud Health Technol Inform 125:76–81

Grantcharov TP, Kristiansen VB, Bendix J, Bardram L, Rosenberg J, Funch-Jensen P (2004) Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg 91:146–150

Hamilton EC, Scott DJ, Fleming JB, Rege RV, Laycock R, Bergen PC, Tesfay ST, Jones DB (2002) Comparison of video trainer and virtual reality training systems on acquisition of laparoscopic skills. Surg Endosc 16:406–411

Seymour NE, Gallagher AG, Roman SA, O’Brien MK, Bansal VK, Andersen DK, Satava RM (2002) Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg 236:458–463; discussion 463–464

Ganai S, Donroe JA, St Louis MR, Lewis GM, Seymour NE (2007) Virtual-reality training improves angled telescope skills in novice laparoscopists. Am J Surg 193:260–265

Buzink SN, Botden SM, Heemskerk J, Goossens RH, de Ridder H, Jakimowicz JJ (2009) Camera navigation and tissue manipulation; are these laparoscopic skills related? Surg Endosc 23:750–757

Babineau TJ, Becker J, Gibbons G, Sentovich S, Hess D, Robertson S, Stone M (2004) The “cost” of operative training for surgical residents. Arch Surg 139:366–369; discussion 369–370

Hassan I, Gerdes B, Koller M, Dick B, Hellwig D, Rothmund M, Zielke A (2007) Spatial perception predicts laparoscopic skills on virtual reality laparoscopy simulator. Childs Nerv Syst 23:685–689

Dunkin B, Adrales GL, Apelgren K, Mellinger JD (2007) Surgical simulation: a current review. Surg Endosc 21:357–366

Seymour NE (2008) VR to OR: a review of the evidence that virtual reality simulation improves operating room performance. World J Surg 32:182–188

Gurusamy KS, Aggarwal R, Palanivelu L, Davidson BR (2009) Virtual reality training for surgical trainees in laparoscopic surgery. Cochrane Database Syst Rev 1:CD006575

Gallagher AG, Ritter EM, Champion H, Higgins G, Fried MP, Moses G, Smith CD, Satava RM (2005) Virtual reality simulation for the operating room: proficiency-based training as a paradigm shift in surgical skills training. Ann Surg 241:364–372

Hassan I, Osei-Agymang T, Radu D, Gerdes B, Rothmund M, Fernández ED (2008) Simulation of laparoscopic surgery-four years’ experience at the Department of Surgery of the University Hospital Marburg. Wien Klin Wochenschr 120:70–76

Haluck RS, Satava RM, Fried G, Lake C, Ritter EM, Sachdeva AK, Seymour NE, Terry ML, Wilks D (2007) Establishing a simulation center for surgical skills: what to do and how to do it. Surg Endosc 21:1223–1232

Stefanidis D, Acker CE, Swiderski D, Heniford BT, Greene FL (2008) Challenges during the implementation of a laparoscopic skills curriculum in a busy general surgery residency program. J Surg Educ 65:4–7

Jordan JA, Gallagher AG, McGuigan J, McClure N (2001) Virtual reality training leads to faster adaptation to the novel psychomotor restrictions encountered by laparoscopic surgeons. Surg Endosc 15:1080–1084

Ozer DJ (1987) Personality, intelligence, and spatial visualization: correlates of mental rotations test performance. J Pers Soc Psychol 53:129–134

Langlois J, Wells GA, Lecourtois M, Bergeron G, Yetisir E, Martin M (2009) Spatial abilities in an elective course of applied anatomy after a problem-based learning curriculum. Anat Sci Educ 2:107–112

Basdogan C, Sedef M, Harders M, Wesarg S (2007) VR-based simulators for training in minimally invasive surgery. IEEE Comput Graph Appl 27:54–66

Buzink SN, Christie LS, Goossens RH, de Ridder H, Jakimowicz JJ (2010) Influence of anatomic landmarks in the virtual environment on simulated angled laparoscope navigation. Surg Endosc 24(12):2993–3001

Hassan I, Maschuw K, Rothmund M, Koller M, Gerdes B (2006) Novices in surgery are the target group of a virtual reality training laboratory. Eur Surg Res 38:109–113

Keehner MM, Tendick F, Meng MV, Anwar HP, Hegarty M, Stoller ML, Duh QY (2004) Spatial ability, experience, and skill in laparoscopic surgery. Am J Surg 188:71–75

Wanzel KR, Hamstra SJ, Caminiti MF, Anastakis DJ, Grober ED, Reznick RK (2003) Visual-spatial ability correlates with efficiency of hand motion and successful surgical performance. Surgery 134:750–757

Gallagher AG, Cowie R, Crothers I, Jordan-Black JA, Satava RM (2003) PicSOr: an objective test of perceptual skill that predicts laparoscopic technical skill in three initial studies of laparoscopic performance. Surg Endosc 17:1468–1471

McClusky DA III, Ritter EM, Lederman AB, Gallagher AG, Smith CD (2005) Correlation between perceptual, visuo-spatial, and psychomotor aptitude to duration of training required to reach performance goals on the MIST-VR surgical simulator. Am Surg 71:13–20; discussion 20–21

Korndorffer JR Jr, Hayes DJ, Dunne JB, Sierra R, Touchard CL, Markert RJ, Scott DJ (2005) Development and transferability of a cost-effective laparoscopic camera navigation simulator. Surg Endosc 19:161–167

Hogle NJ, Briggs WM, Fowler DL (2007) Documenting a learning curve and test-retest reliability of two tasks on a virtual reality training simulator in laparoscopic surgery. J Surg Educ 64:424–430

Acknowledgment

This study was supported by a Swiss National Science Foundation grant to D. Hahnloser and R. Rosenthal (grant No. 3200B0-120722/1) (ClinicalTrials.gov identifier: NCT01092013).

Disclosure

Florian M. Franzeck, R. Rosenthal, Markus K. Muller, Antonio Nocito, Frauke Wittich, Christine Maurus, Daniel Dindo, Pierre-Alain Clavien, and D. Hahnloser have no conflicts of interest or financial ties to disclose.


Cite this article

Franzeck, F.M., Rosenthal, R., Muller, M.K. et al. Prospective randomized controlled trial of simulator-based versus traditional in-surgery laparoscopic camera navigation training. Surg Endosc 26, 235–241 (2012). https://doi.org/10.1007/s00464-011-1860-5
