Abstract

Following up on previous work of Cathabard et al. (in: Proceedings of foundations of genetic algorithms (FOGA’11), ACM, 2011) we analyze variants of the (1 + 1) evolutionary algorithm (EA) for problems with unknown solution length. For their setting, in which the solution length is sampled from a geometric distribution, we provide mutation rates that yield for both benchmark functions OneMax and LeadingOnes an expected optimization time that is of the same order as that of the (1 + 1) EA knowing the solution length. More than this, we show that almost the same run times can be achieved even if no a priori information on the solution length is available. We also regard the situation in which neither the number nor the positions of the bits with an influence on the fitness function are known. Solving an open problem from Cathabard et al. we show that, for arbitrary \(s\in {\mathbb {N}}\), such OneMax and LeadingOnes instances can be solved, simultaneously for all \(n\in {\mathbb {N}}\), in expected time \(O(n (\log (n))^2 \log \log (n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\) and \(O(n^2 \log (n) \log \log (n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\), respectively; that is, in almost the same time as if n and the relevant bit positions were known. For the LeadingOnes case, we prove lower bounds of same asymptotic order of magnitude apart from the \((\log ^{(s)}(n))^{\varepsilon }\) factor. Aiming at closing this arbitrarily small remaining gap, we realize that there is no asymptotically best performance for this problem. For any algorithm solving, for all n, all instances of size n in expected time at most T(n), there is an algorithm doing the same in time \(T'(n)\) with \(T'=o(T)\). For OneMax we show results of similar flavor.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Evolutionary algorithms (EAs) form a class of black-box optimizers, that—thanks to their high flexibility and their ability to produce high-quality solutions for a broad range of problems—are today well-established problem solvers in industrial and academic applications, where they are often used as subroutines for particularly difficult parts of the optimization problem, as well as for several pre- or post-processing steps of state-of-the-art optimization methods. The predominant part of research on EAs focuses on engineering and empirical work. This research is complemented by the theory of evolutionary computation (EC), which aims at providing mathematically founded statements about the performance of general-purpose problem solvers like EAs and similar randomized search heuristics, with one of its main objectives being to provide rigorous insights into the working principles of such optimization techniques. This way, theory of EC has helped to debunk common misconceptions about the performance of EAs and has served as a source of inspiration to design more efficient EAs.

One of the key performance measure in EC is expected run time (also referred to as optimization time), i.e., the expected number of function evaluations that are needed until an optimal solution is evaluated for the first time. Run time analysis is hence one of the core topics in the theory of EC. Thanks to significant methodological advances in the last 20 years, run time analysis for static problem settings is today quite well developed [3, 23, 28]. However, a topic that has received much less attention in the theory of EA literature is the performance of EAs in uncertain environments. Uncertainty can come in many guises, for example with respect to function evaluations, the variation operators, or the dynamics of the fitness function. Understanding how evolutionary search algorithms can tackle such uncertain environments is an emerging research topic; see [4] for a survey on examples in combinatorial optimization, but also [25] for a survey also discussing different sources of uncertainty.

Following up on a line of research initiated in [6], we study what EAs can achieve in the presence of uncertainty with respect to the solution length. More precisely, we regard how well an EA can optimize small problems that are hidden in larger representations. Our aim is to design algorithms which, on each such small problem, have a performance comparable to the one that could be obtained with similar algorithms which know the length and the location of the small problem in the representation. Quite surprisingly, we are able to show that already some variants of the simplest evolutionary algorithm, the \((1 + 1)~\text{ EA }\) (cf. Algorithm 1 for the pseudocode of this classic EA), can be very efficient for such problems.

To model uncertainty with respect to the solution length, we assume that we have a large search space \(\{0,1\}^N\) consisting of all bit strings of length N. The objective function f that we want to optimize, however, depends only on a small subset of the bit positions; that is, there is a set \(I \subseteq [1{\ldots }N] := \{1, \ldots , N\}\) such that \(f(x) = f(x_{|I})\) for all \(x \in \{0,1\}^N\), where we write \(x_{|I}\) to denote the bit string composed of only those positions i with \(i \in I\). We call \(n := |I|\) the relevant size of our optimization problem. We regard two models of uncertainty.

-

1.

Initial segment uncertainty model: We assume that I is an initial segment of \([1{\ldots }N]\), that is, that \(I = [1{\ldots }n]\) for some \(n \le N\). This model was proposed and investigated in [6]; it is motivated there by applications in the design of testing sequences for finite state machines [27].

-

2.

Unrestricted uncertainty model: In this model, we allow I to be an arbitrary subset of \([1{\ldots }N]\) having cardinality \(n = |I| \le N\). Since position-dependent mutation rates cannot reasonably cope with such problems, it was posed as open problem in [6] whether there are evolutionary algorithms that can deal with this stronger type of uncertainty.

1.1 Previous Work

Cathabard et al. [6], were the first to consider, from a theoretical point of view, evolutionary algorithms in environments with unknown solution lengths. Cathabard et al. regard the initial segment uncertainty model and assume that the solution length is sampled from a fixed and known distribution D with finite support. More precisely, they assume that the solution length n is sampled from a truncated version of the geometric distribution, in which the probability mass for values greater than some threshold N is shifted to the event that \(n=N\). In this situation, the algorithm designer has access to both the upper bound N for the solution length and the success probability q of the distribution.

Cathabard et al. analyze a variant of the \((1 + 1)~\text{ EA }\) in which each bit is flipped with probability 1 / N and they also study a variant with position-dependent bit-flip probabilities. In the latter, the i-th bit is flipped independently of all other bits with probability \(p_i = 1/(i+1)\). For any fixed choice of vector of bit flip probabilities \(\vec {p}\), we denote the corresponding algorithm with \((1 + 1)~\text{ EA }_{\vec {p}}\).

Catahabard et al. consider the classic benchmark problems OneMax defined by \(\textsc {Om}:\{0,1\}^n \rightarrow {\mathbb {R}}, x \mapsto \sum _{i=1}^n{x_i}\) and LeadingOnes defined by \(\textsc {Lo}:\{0,1\}^n \rightarrow {\mathbb {R}}, x \mapsto \max \left\{ i\in \{0,1,\ldots ,n\} \mid \forall j \le i: x_j=1\right\} \). They show that, for their two choices of \(\vec {p}\), the \((1 + 1)~\text{ EA }_{\vec {p}}\) has an expected run time which is polynomial in N and n, where the expectation is taken with respect to the solution length and the random decisions of the algorithm. An overview of the precise bounds obtained in [6] is given in Table 1.

1.2 Our Results

We extend the work of Cathabard et al. in several ways. In a first step (Sect. 3) we show that the regarded mutation probabilities are sub-optimal. Making use of the light upper tail of the (truncated) geometric distribution, we design bit flip probabilities that yield significantly smaller expected run times (for both the OneMax and the LeadingOnes function). For LeadingOnes, we complement this finding by a lower bound that proves that no mutation probabilities can yield a performance that is better by more than a constant factor than our suggested ones. Table 1 summarizes our findings and compares them to the results from [6].

While in the setting of Cathabard et al. we are in the convenient situation that we have full knowledge of the distribution D from which the solution length is sampled, one may wonder if this knowledge is necessary. We therefore study in Sect. 4 what can be done in the initial segment uncertainty model without any prior knowledge about the solution length. In this situation we require that the algorithm designer chooses bit flip probabilities \((p_i)_{i\in {\mathbb {N}}}\) such that, regardless of the solution length n, the expected performance of the \((1 + 1)~\text{ EA }_{\vec {p}}\) using bit flip probabilities \(\vec {p}= (p_1, \ldots , p_n)\) is as small as possible. It is not obvious that this can be done in polynomial time. In fact, for both algorithms studied by Cathabard et al. as well as for any uniform choice (\(p_i=p\) for all \(i \in {\mathbb {N}}\)) of the bit flip probabilities, the expected run time on this problem is exponential in n if N is exponential in n (cf. Theorems 13 and 14).

We show (Theorems 15 and 16) that not only this problem can be tackled with position-dependent bit flip probabilities, but, quite surprisingly, we shall also see that this can be done in a way that yields almost optimal run times. Indeed, for LeadingOnes our results are only a \((\log (n))^{1+\varepsilon }\) factor worse than the best possible \({\varTheta }(n^2)\) run time bound. This factor can be made even smaller. The precise runtime bounds are \(O(n (\log (n))^2 \log ^{(2)}(n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\) and \(O(n^2 \log (n) \log ^{(2)}(n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\), for OneMax and LeadingOnes, respectively, where s is an arbitrary positive integer and \(\log ^{(j)}\) denotes the j-fold iterated logarithm (cf. Equation (6)).

In Sect. 5 we provide a second way to deal with unknown solution lengths. We introduce an alternative variant of the \((1 + 1)~\text{ EA }\) in which the bit flip probability, which is uniformly and independently applied to each bit position, is chosen according to some (fixed) distribution at the beginning of each iteration. For suitably chosen distributions Q, the expected run times of the respective \((1 + 1)~\text{ EA }_{Q}\) on OneMax and LeadingOnes are of the same asymptotic order as those of the previously suggested solution with position-dependent bit flip probabilities \((p_i)_{i\in {\mathbb {N}}}\). In particular, for LeadingOnes the expected run times are, simultaneously for all possible solution lengths n, almost of the same order as the expected run time of a best possible \((1 + 1)~\text{ EA }\) knowing the solution length in advance.

This second approach has an advantage over the position-dependent bit flip probabilities in that it effectively ignores bits that do not contribute anything to the fitness function (irrelevant bits). Unlike all previously suggested solutions, it can therefore deal with the unrestricted uncertainty model. These EAs therefore provide a positive answer to the above-mentioned question posed by Cathabard et al. [6, Sect. 6] concerning the ability of EAs do deal with unrestricted uncertainty.

Summarizing the above, we see that the EA variants—with either position-dependent or random mutation rates in the initial segment uncertainty model and using random mutation rates in the unrestricted uncertainty model—are only by a factor of \(O(\log (n) \log ^{(2)}(n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\) slower than the performance of the \((1 + 1)~\text{ EA }\) on LeadingOnes when the relevant bits are known to the algorithms. Since the unrestricted model contains the initial segment model as a very small subcase (one OneMax and LeadingOnes instance of size n instead of \(\left( {\begin{array}{c}N\\ n\end{array}}\right) \ge (N/n)^n\)OneMax and \(n! \left( {\begin{array}{c}N\\ n\end{array}}\right) \)LeadingOnes instances)Footnote 1, these results raise the question if one can obtain a superior performance from using position-dependent mutation rates in the much more restricted initial segment uncertainty model. We address this question in Sects. 6 and 7, where we investigate how good the above-described results are.

For LeadingOnes, our lower bounds show that our run time results from Sects. 4 and 5 are very tight (e.g., up to an \(O((\log ^{(s)}(n))^{\varepsilon })\) factor for arbitrary \(s \in {\mathbb {N}}\)). This in particular implies that for the LeadingOnes problem, not much can be gained by using position-dependent mutation rates in the heavily restricted initial segment model. Much stronger than that, we show that the \((1 + 1)~\text{ EA }\) with any mutation operator that treats zeros and ones symmetrically, that is, that uses an arbitrary distribution on the subsets of \([1{\ldots }N]\), selects a random subset according to this distribution, and then flips exactly the bits in this set, cannot obtain a better performance in either the initial segment or the unrestricted model than the \((1 + 1)~\text{ EA }\) with position-dependent rates (in the initial segment model) or the \((1 + 1)~\text{ EA }\) with random mutation rates (in either model). This shows in a very strong sense that the two uncertainty models are equally difficult when optimizing LeadingOnes.

We said above that we have very good lower bounds. We would have liked to claim that we have matching upper and lower performance bounds. We cannot do so, as we observe a curiosity of this optimization under uncertainty problem: There is no asymptotically best performance. More precisely, for any function \(T : {\mathbb {N}}\rightarrow {\mathbb {N}}\) such that there is an algorithm solving LeadingOnes instances with unknown length n in expected time at most T(n), there is a function \(T' : {\mathbb {N}}\rightarrow {\mathbb {N}}\) such that \(T' = o(T)\) and there is an algorithm solving LeadingOnes instances with unknown length n in expected time at most \(T'(n)\).

For the case of OneMax we get an analogous result for the unrestricted uncertainty model: any choice of distribution Q over bit flip probabilities can be improved with a distribution \(Q'\) so that using bit flip probabilities chosen from \(Q'\) lead to an asymptotically better run time than using those chosen from Q. For the case of the initial segment uncertainty model it continues to be open whether there is an optimal run time, or whether there are lower bounds similar to those for LeadingOnes.

Our run time results are summarized in Tables 1 and 2 . This work builds on results that we have previously announced in [11, 13].

2 Problem Setting and Algorithms

We use this section to fix notation (Sect. 2.1), to make precise the models of uncertainty (Sect. 2.2), and to define the EA variants capable of coping with uncertainty (Sect. 2.3).

2.1 Basic Notation, Summability, OneMax, and LeadingOnes

We use the following standard notation. We write \({\mathbb {N}}\) to denote the positive integers. We write \([a{\ldots }b]\) to denote the set of integers no less than a and no larger than b. We abbreviate \([n]:=[1{\ldots }n]\).

A sequence\(\vec {p}\) is a mapping \(\vec {p}:{\mathbb {N}}\rightarrow {\mathbb {R}}\), which equivalently can be written as \(\vec {p}=(p_n)_{n \in {\mathbb {N}}}\). We alternate between these two notations. A sequence \(\vec {p}=(p_n)_{n \in {\mathbb {N}}}\) is said to be monotonically decreasing if, for all \(n \in {\mathbb {N}}\), it holds that \(p_n \ge p_{n+1}\). It is summable if the sequence \((S_n)_{n \in {\mathbb {N}}}\) of partial sums of absolute values \(S_n:=\sum _{i=1}^n{|p_i|}\) converges.

We are mostly interested in the asymptotic behavior of our algorithms. We therefore use a lot Landau’s big- and small-Oh notation. For convenience, we write \(\vec {p}=o(\vec {q})\) instead of \(p(n) = o(q(n))\) whenever the variable n is clear from the context.

We regard in this work the two classic benchmark problems \(\textsc {OneMax} \) and \(\textsc {LeadingOnes} \), which, for given n, assign to each bit string \(x \in \{0,1\}^n\) the number of ones in x and the number of initial ones before the first zero entry, respectively; that is,

We omit the subscript n when it is clear from the context.

OneMax and LeadingOnes functions are the two best-studied problems in the theory of evolutionary computation literature. How the \((1 + 1)~\text{ EA }\), \((1 + \lambda )~\text{ EA }\), \((\mu + 1)~\text{ EA }\), and \((\mu + \lambda )~\text{ EA }\) optimize these functions is relatively well understood [1, 18, 20, 24, 30] (with the exception of the runtime of the \((\mu + \lambda )~\text{ EA }\) on LeadingOnes). For the performance of the \((1 + 1)~\text{ EA }\), not only the asymptotic order of magnitude is known [20], namely \({\varTheta }(n \log n)\) for OneMax and \({\varTheta }(n^2)\) for LeadingOnes, but even more precise results. Ending a series of works [14, 15, 20, 29] on the expected runtime E[T] of the \((1 + 1)~\text{ EA }\) on OneMax, the very precise estimate of \(E[T] = en\ln (n) + c_1 n + \frac{1}{2} e \ln (n) + c_2 + O((\log n)/n)\) for concrete constants \(c_1, c_2\) was proven in [22]. For the expected runtime of the \((1 + 1)~\text{ EA }\) on LeadingOnes, the exact value of \(E[T] = \frac{1}{2} n^2 ((1-\frac{1}{n})^{-n+1} - 1 + \frac{1}{n})\) was determined, independently, in [5, 29].

We regard in this work OneMax and LeadingOnes functions of unknown solution length. If a distribution D is known from which the solution length is sampled we consider the expected run time of our algorithms on \(\textsc {OneMax} _D\) and \(\textsc {LeadingOnes} _D\), respectively, which are the problems \(\textsc {Om} _n\) respectively \(\textsc {Lo} _n\) with random solution length \(n \sim D\). Note here that the expectation is taken both with respect to the random solution length and with respect to the random samples of the algorithm.

2.2 Models of Uncertainty

The focus of our work is on how evolutionary algorithms optimize objective functions that only depend on a small subset of the decision variables. Hence we assume that there is a large search space \(\{0,1\}^N\), but our objective function f can be written as \(f(x) = {\tilde{f}}(x_{|I})\) for some subset \(I \subset [1{\ldots }N]\) with \(n := |I| \ll N\) and some function \({\tilde{f}} : \{0,1\}^I \rightarrow {\mathbb {R}}\).

It turns out that the dimension N of the large search space is not very relevant for our results. For this reason we assume that the large search space is in fact \(\{0,1\}^{{\mathbb {N}}}\), that is, the set of all infinite binary sequences. Since the complexity measure we regard is the number of fitness evaluations (and not the number of elementary operations as in classic algorithmics), this expansion of the search space has no influence on our run time statements.

We then regard two models of uncertainty about the location of the relevant part of the problem instance. To remain consistent with previous works, we call both a model of unknown solution length despite the fact that in the second model also the relevant bit positions are unknown.

Initial segment uncertainty: We assume that the set I of relevant bits is an initial segment \([1{\ldots }n]\) of the outer space \(\{0,1\}^{{\mathbb {N}}}\). The number n is not known to the algorithm. When regarding LeadingOnes as fitness function f, we assume that f respects the usual order of the bit-positions, that is, \(f(x) = \textsc {Lo} _n(x) = \max \{i \in [0{\ldots }n] \mid \forall j \le i: x_j=1\}\).

Unrestricted uncertainty: We assume that the set I of relevant bits is any subset of \({\mathbb {N}}\) of cardinality \(|I| = n\). Neither n nor I is known to the algorithm. When regarding the LeadingOnes fitness function, we do not assume that it respects the natural order of the bits. Hence the problem instance is described by I and a bijective mapping \(\sigma : [1{\ldots }n] \rightarrow I\) such that \(f(x) = \textsc {Lo} _{n,I,\sigma }(x) = \max \{i \in [0{\ldots }n] \mid \forall j \le i: x_{\sigma (j)}=1\}\).

2.3 Evolutionary Algorithms for Dealing With Unknown Solution Lengths

We now present two ways how evolutionary algorithms can deal with unknown solution length scenarios. Both are based on modifying the mutation operator. Hence, in principle, our ideas can be used in conjunction with any evolutionary algorithm in which mutation is used with significant probability. Nevertheless, to keep things simple and to not obscure the main ideas, we restrict ourselves to the \((1 + 1)~\text{ EA }\), the simplest of all EAs.

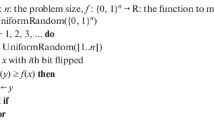

The classic \((1 + 1)~\text{ EA }\), whose pseudocode is given in Algorithm 1, starts by sampling an initial search point from \(\{0,1\}^n\) uniformly at random. It then proceeds in rounds, each of which consists of a mutation and a selection step. Throughout the whole optimization process the \((1 + 1)~\text{ EA }\) maintains a population size of one, and the individual in this population is always a best-so-far solution. In the mutation step of the \((1 + 1)~\text{ EA }\), the current-best solution x is mutated by flipping every bit with probability 1 / n, independently of all other bits. The fitness of the resulting search point y is evaluated and in the selection step the parent x is replaced by its offspring y if and only if the fitness of y is at least as good as the one of x. Since we consider maximization problems here, this is the case if \(f(y) \ge f(x)\). As usual in the theory of evolutionary algorithms, we are interested in expected run times, i.e., the expected number of rounds it takes until the \((1 + 1)~\text{ EA }\) evaluates for the first time a solution of maximal fitness. We therefore do not specify a termination criterion.

The first idea to enable the \((1 + 1)~\text{ EA }\) to cope with uncertainty with respect to the solution length is to use position-dependent mutation rates [6]. This idea makes sense only for the initial segment uncertainty model. For a given sequence \(\vec {p}: {\mathbb {N}}\rightarrow [0,1]\), the \((1 + 1)~\text{ EA }_{\vec {p}}\) (cf. Algorithm 2) is the standard \((1 + 1)~\text{ EA }\), except that in the mutation step the offspring is generated from flipping the bit in position i with position-dependent probability \(p_i\), independently of all other bits.

It is not difficult to see that the \((1 + 1)~\text{ EA }_{\vec {p}}\) indeed generalizes the standard \((1 + 1)~\text{ EA }\). In fact, we obtain the \((1 + 1)~\text{ EA }\) from the \((1 + 1)~\text{ EA }_{\vec {p}}\) if we set \(p_i=1/n\) for all \(i \in [n]:=\{1, \ldots , n\}\). We call such mutation vectors with \(p_i=p_j\) for all i, juniform mutation rates.

We note that in [6] position-dependent mutation rates were referred to as non-uniform. In the context of our work, however, position-dependent is more precise, since we also consider EA variants that are non-uniform with respect to time.

The second idea to overcome the challenges of an unknown solution length scenario, in particular in the unrestricted uncertainty model, is to use a random mutation rate sampled independently in each iteration from a suitable distribution. More precisely, let Q be a probability distribution over [0, 1]. For the ease of presentation, we restrict ourselves to discrete distributions. Then the \((1 + 1)~\text{ EA }_{Q}\) (see Algorithm 3 for the pseudocode) is again the classic \((1 + 1)~\text{ EA }\) with the sole exception that in each iteration t a mutation rate \(p_t\) is sampled from Q and then the offspring is generated from the parent individual by flipping each bit independently with probability \(p_t\), that is, by performing standard bit mutation with mutation rate \(p_t\). Again we see that this algorithm generalizes the classic \((1 + 1)~\text{ EA }\), which we obtain for choosing Q to be 1 / n deterministically.

We will mostly work with distributions Q that have all their probability mass on the values \(\{1/i \mid i \in {\mathbb {N}}\}\). These are most conveniently described via a probability distribution \((p_n)_{n \in {\mathbb {N}}}\) on \({\mathbb {N}}\), that is, via a sequence \(\vec {p}\) in [0, 1] such that \(\sum _{n=1}^\infty p_n = 1\), and then taking \(\Pr _{Q(\vec {p})}(1/i) = p_i\). For convenience, we shall allow arbitrary summable sequences \(\vec {p}\) in [0, 1] and then set \(\Pr _{Q(\vec {p})}(1/i) = p_i/\sum _{n \in {\mathbb {N}}}{p_n}\).

For reasons of completeness, we remark that there are a few other theoretical works using random mutation strengths, however with different targets and with different distributions. For multi-valued representations, more precisely, when the search space is \([0{\ldots }r-1]^n\), [7, 12] show that a harmonic mutation strength often gives good results. Very roughly speaking, this means that a variable value \(k \in [0{\ldots }r-1]\) is changed to either \(k+\delta \) or \(k-\delta \) with probability proportional to \(1/\delta \), and hence with probability \({\varTheta }(\log (r)/\delta )\). In [19], it was shown that standard-bit mutation with a random mutation rate chosen according to a power-law distribution can greatly speed-up the optimization of non-unimodal functions. We note that the choice of the distribution generally is important. We could not obtain the performance shown in this work with either a harmonic or a power-law mutation strength.

3 Initial Segment Uncertainty Model with Random Solution Length

We first consider the setting introduced by Cathabard et al. [6]. After a short presentation of the model in Sect. 3.1, a general lower bound for LeadingOnes (Sect. 3.2), and the results of [6] in Sect. 3.3, we show that the bounds in [6] can be improved by using different (uniform) mutation rates (Sect. 3.4).

3.1 The Model

Cathabard et al. [6] consider the following model. The algorithm designer knows the distribution D from which the unknown solution length for the initial segment uncertainty model is drawn; only distributions with finite support are considered, so the algorithm designer knows an upper bound N on the actual solution length n. He also knows the class of functions from which the optimization problem is taken (for example OneMax or LeadingOnes).

Based on this knowledge, the algorithm designer chooses a vector \((p_1,\ldots , p_N)\) of bit flip probabilities indicating with which probability a bit is flipped in each round. As discussed in the previous section, we will also regard a slightly more general model in which the distributions over \({\mathbb {N}}\) may possibly have infinite support; the algorithm designer then chooses an infinite sequence of bit flip probabilities \(\vec {p}= (p_i)_{i \in {\mathbb {N}}}\). After this choice of bit flip probabilities, the actual solution length n is sampled from the given distribution D. Then the \((1 + 1)~\text{ EA }_{\vec {p}}\) (Algorithm 2) is run with mutation probabilities \(\vec {p}=(p_1, \ldots , p_n)\) on the given problem with the given problem length. We use \(\textsc {OneMax} _D\) and \(\textsc {LeadingOnes} _D\), respectively, to denote the problem of optimizing OneMax and LeadingOnes, respectively, in this initial segment uncertainty model with random length drawn from D.

Cathabard et al. [6] consider as distribution D the following truncated geometric distribution, based on a geometric distribution where the probability mass for values greater than n are moved to n.

Definition 1

([6]) The truncated geometric distribution\(\mathrm {TruncGeo}(N,q)\) with truncation parameter N and success probability \(q \in (0,1/N]\) satisfies, for all \(n \in {\mathbb {N}}\), that the probability of \(\mathrm {TruncGeo}(N,q)=n\) is

Note that the truncated geometric distribution recovers the geometric distribution \(\mathrm {Geo}(q)\) for \(N = \infty \).

It is well known, respectively can be found in [6, Proposition 1], that for \(X=\mathrm {Geo}(q)\) and \(Y=\mathrm {TruncGeo}(N,q)\) with \(q \ge 1 / N\)

Note that we trivially have \({{\mathrm{E}}}[Y] \le {{\mathrm{E}}}[X]\).

3.2 A General Lower Bound for LeadingOnes

What is a good lower bound for the expected run time of any\((1 + 1)~\text{ EA }_{\vec {p}}\) on OneMax or LeadingOnes when the length is sampled from some given distribution D on \({\mathbb {N}}\)? If the algorithm designer knew the true length n before he has to decide upon the mutation probabilities \((p_1, \ldots , p_n)\), then the optimal bit flip probability for this solution length could be chosen. We show that for LeadingOnes, if the true length n is known, any setting of the bit-flip probabilities leads to an expected run time of \({\varOmega }(n^2)\) regardless of the choice of \(\vec {p}\).

Lemma 2

For any fixed solution length n and any vector \(\vec {p}=(p_i)_{i \in {\mathbb {N}}}\) of mutation probabilities, the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _n\) is \({\varOmega }(n^2)\).

Proof

Via arguments analogous to the ones in [5, Sect. 3.3] (see [8, Sect. 2.3] for a formal proof) it is easy to see that the expected run time E[T] of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _n\) is

Using this bound one can easily show that we can assume without loss of generality that the mutation probabilities are monotonically increasing, i.e., \(p_i \le p_{i+1}\) holds for all \(i \in [n]\). Indeed, if \(p_k > p_{k+1}\) for some \(k \in [n]\), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) is larger than that of the \((1 + 1)~\text{ EA }_{\vec {q}}\) with \(\vec {q}=(q_1, \ldots , q_n)\), \(q_k=p_{k+1}\), \(q_{k+1}=p_k\), and \(q_i=p_i\) for \(i \notin \{k,k+1\}\).

Let \(k:=\lfloor n/2 \rfloor \). We have

which gives

This shows that the overall expected optimization time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _n\) is \({\varOmega }(n\exp (p_k k)/p_k)\). For all possible choices of \(p_k\) this expression is \({\varOmega }(n^2)\): for \(p_k = O(1/n)\) we have \(n/p_k = {\varOmega }(n^2)\) and \(\exp (p_k k) = {\varTheta }(1)\), while for larger \(p_k\) we see that \(\exp (p_k k)/p_k\) is a growing function. \(\square \)

Using these lower bounds for fixed solution lengths, Jensen’s Inequality and the convexity of \(n \mapsto n^2\), we get the following general lower bound.

Theorem 3

Let D be any distribution on \({\mathbb {N}}\) with a finite expectation of m. Then for any sequence \(\vec {p}\) the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _D\) is \({\varOmega }(m^2)\). This bound also applies to the setting in which the algorithm designer can choose the mutation probabilities \(\vec {p}=(p_1, \ldots , p_n)\)after the solution length \(n~\sim ~D\) has been drawn.

Using Eq. (1), we get the following corollary.

Corollary 4

Let \(N \in {\mathbb {N}}\) and \(q \ge 1/N\). Let \(D= \mathrm {TruncGeo}(N,q)\) or \(D=\mathrm {Geo}(q)\). For any sequence \(\vec {p}\) the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _D\) is \({\varOmega }(q^{-2})\). This bound also applies to the setting in which the algorithm designer can choose the mutation probabilities \(\vec {p}=(p_1, \ldots , p_n)\)after the solution length \(n~\sim ~D\) has been drawn.

We conjecture that analoguous results hold for OneMax. More precisely, we conjecture that for any fixed solution length n and any vector \(\vec {p}\) of mutation rates the expected optimization time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) is \({\varOmega }(n \log n)\). With this result, Theorems 3 and Corollary 4 would extend to OneMax, with \({\varOmega }(m^2)\) and \({\varOmega }(q^{-2})\) replaced by \({\varOmega }(m \log m)\) and \({\varOmega }(q^{-1} \log q^{-1})\), respectively.

3.3 Known Upper Bounds

Cathabard et al. [6] analyze the run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) with uniform mutation probabilities \(\vec {p}=(1/N)_{i \in {\mathbb {N}}}\) and of the \((1 + 1)~\text{ EA }_i\), which is the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}=(p_i)_{i \in {\mathbb {N}}}:=(1/(i+1))_{i \in {\mathbb {N}}}\).

For OneMax they obtain the following results.

Theorem 5

(Results for OneMax from [6]) Let \(N \in {\mathbb {N}}\), \(\varepsilon \in (0,1)\), and \(q=N^{-\varepsilon }\). For \(D = \mathrm {TruncGeo}(N,q)\) the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _{D}\) is \({\varTheta }(N \log q^{-1})\) when \(\vec {p}=(1/N)_{i \in {\mathbb {N}}}\), while the expected run time of the \((1 + 1)~\text{ EA }_i\) on \(\textsc {OneMax} _{D}\) is \(O(q^{-2} \log N)\).

This result shows that the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}=(1/N)_{i \in {\mathbb {N}}}\) outperforms the \((1 + 1)~\text{ EA }_i\) for \(q < 1/\sqrt{N}\), while the latter algorithm is preferable for larger q. As we shall see in the following section one should not conclude from this result that position-dependent bit flip probabilities are the better choice for this problem.

Remark

By using a slightly more careful analysis than presented in [6], the bound for the \((1 + 1)~\text{ EA }_i\) on \(\textsc {OneMax} _{D}\) can be improved to \(O(q^{-2} \log q^{-1})\). In fact, an analysis similar to the one in Sect. 3.4, that is disregarding outcomes that are much larger than the expectation, will give that result. It can also be shown that the requirement \(q=N^{-\varepsilon }\) is not needed as the \(O(q^{-2} \log q^{-1})\) bound holds for all \(q>1/N\). It also holds for the (non-truncated) geometric distribution \(D=\mathrm {Geo}(q)\).

For LeadingOnes, Cathabard et al. show the following results.

Theorem 6

(Results for LeadingOnes from [6]) For N, \(\varepsilon \), q, and D as in Theorem 5, the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}=(1/N)_{i \in {\mathbb {N}}}\) on \(\textsc {LeadingOnes} _{D}\) is \({\varTheta }(N q^{-1})\), while the expected run time of the \((1 + 1)~\text{ EA }_i\) on \(\textsc {LeadingOnes} _{D}\) is \({\varTheta }(q^{-3})\).

Thus also for \(\textsc {LeadingOnes} \) the \((1 + 1)~\text{ EA }_i\) performs better than the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}=(1/N)_{i \in {\mathbb {N}}}\) when \(q>1/\sqrt{N}\) while the uniform \((1 + 1)~\text{ EA }_{\vec {p}}\) should be preferred for smaller q.

Remark

As in the \(\textsc {OneMax} \) case, the \({\varTheta }(q^{-3})\) bound for the \((1 + 1)~\text{ EA }_i\) holds more generally for all geometric distributions \(\mathrm {Geo}(q)\) with parameter \(q>1/N\).

Theorem 6 shows that on \(\textsc {LeadingOnes} _D\) the \((1 + 1)~\text{ EA }_i\) loses a factor of 1 / q with respect to the lower bound given by Corollary 4. This will be improved in the following section.

3.4 Optimal Upper Bounds With Uniform Mutation Probabilities

We show that, for D being the (truncated or non-truncated) geometric distribution, there exist bit flip probabilities \(\vec {p}=(p_i)_{i \in {\mathbb {N}}}\) such that the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _D\) and \(\textsc {LeadingOnes} _D\) is significantly lower than those of the two algorithms studied by Cathabard et al. For LeadingOnes the expected run time of our algorithm matches the lower bound given in Corollary 4 and is thus optimal in asymptotic terms.

In both cases, i.e., both for \(\textsc {OneMax} _D\) (Theorem 7) and for \(\textsc {LeadingOnes} _D\) (Theorem 10), the mutation rates yielding the improvement over the results in [6] are uniform. Our results therefore imply that for LeadingOnes, unlike conjectured in [6], one cannot gain more than constant factors from using position-dependent mutation probabilities.

The key observation determining our choice of the mutation probability is the fact that the (truncated) geometric distribution has a light upper tail, so that it is preferable to choose the mutation probability in a way that minimizes the expected optimization time of the \((1 + 1)~\text{ EA }\) when faced with a solution size of average lengths. Hence, if we know the parameters of the distribution, we can choose the mutation probability such that it is (almost) reciprocal in each position to the expected length of the solution (which we stated in Equation (1) to be proportional to \(q^{-1}\)). Thus, in the setting of [6], i.e., for the truncated geometric distribution with parameters N and q, we set \(p_i:=q/2\) for all \(i \in {\mathbb {N}}\). Our approach naturally also works for the (non-truncated) geometric distribution \(\mathrm {Geo}(q)\), which also has a light upper tail.

We remark without proof that similar results hold for distributions that are strongly concentrated around the mean, e.g., binomial distributions, and also strongly concentrated unbounded distributions, such as Poisson distributions.

Theorem 7

For \(N \in {\mathbb {N}}\) let \(1/N \le q=q(N)<1/2\). Let \(\vec {p}:=(q/2)_{i \in {\mathbb {N}}}\). For \(D= \mathrm {Geo}(q)\) and \(D= \mathrm {TruncGeo}(N,q)\), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _{D}\) is \({\varTheta }(q^{-1} \log q^{-1})\).

We note that the lower bound is immediate. With constant probability, the random instance has length \({\varOmega }(q^{-1})\). Since our \((1 + 1)~\text{ EA }_{\vec {p}}\) flips bits independently with probability q / 2, the classic coupon collector type argument (see Lemma 10 in [20]) gives that with probability \({\varOmega }(1)\) after \(c q^{-1} \ln (q^{-1})\) iterations, where c is a suitable constant, there is a bit position which was initially zero and which was not flipped in any mutation operation. Hence in this case, which shows up with constant probability, the run time is more than \(c q^{-1} \ln (q^{-1})\). This immediately shows that the expected run time is \({\varOmega }(q^{-1} \ln (q^{-1}))\).

For the proof of the upper bound we will use the following upper bound from [29] for the expected run time of the \((1 + 1)~\text{ EA }\) on OneMax. A similar upper bound can be found in [31, Theorem 4.1].

Lemma 8

([29, Theorem 8]) For a fixed length n and a uniform mutation vector \(\vec {p}=(p)_{i \in {\mathbb {N}}}\) with \(0<p<1\), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _n\) is at most \((\ln (n) + 1)/(p(1-p)^{n})\).

Proof (of Theorem 7)

We first consider \(D = \mathrm {TruncGeo}(N,q)\). We do not worry about constant factors in this analysis and thus bound some expressions generously.

Using Lemma 8 we can bound the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _{D}\) from above by

To bound the last summand in this expression, we first observe that (using the binomial theorem), for all positive n,

This shows that the last summand in (2) is at most

which is \(O(q^{-1} \log q^{-1})\). This can be seen as follows. For \(q \ge 2 \ln \ln (N)/N\) it holds (using the inequality \(1-q \le \exp (-q)\)) that \((1-q)^{N/2-1} \le \exp (-qN/2) \le 1/\ln (N)\) and thus \(2 (1-q)^{N/2-1} (\ln (N)+1)/q = O(1/q)\), while for \(1/N \le q \le 2 \ln \ln (N)/N\) we have (for some suitably chosen constant C)

Using again (3) we bound the first part of the sum (2) by

To show that this expression is \(O(q^{-1} \log q^{-1})\) we split the sum into blocks of length \(k:=\lceil 1/q \rceil \) and use again the inequality \(1-q \le \exp (-q)\). This shows that the last expression is at most

where the last equality can be best seen by first considering that \(\sum _{j=0}^{\lceil N/k \rceil -1}\exp (-\tfrac{1}{k}(\tfrac{jk}{2}-1)) (\ln (k)+1) = {\varTheta }(\log k)\), while \(\sum _{j=0}^{\lceil N/k \rceil -1}{ \exp (-\tfrac{1}{k}(\tfrac{jk}{2}-1)) (\ln (j+1))}=O(1)\). Summarizing the computations above we see that (2) is of order at most \(q^{-1} \log q^{-1}\).

For \(D= \mathrm {Geo}(q)\) the computations are almost identical. By Lemma 8 and (3) the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _D\) is at most

which can be seen in a similar way as above by splitting the sum into blocks of size \(k:=\lceil 1/q \rceil \) and using \(1-q \le \exp (-q)\). \(\square \)

It is interesting to note that the expected run time increases to between \({\varOmega }(N)\) and \(O(N \log N)\) when the mutation probability is chosen to be \(\vec {p}=(q)_{i \in {\mathbb {N}}}\). This can easily be seen as follows. For the upper bound we use Lemma 8 (ignoring the “+1” terms which are easily seen to play an insignificant role) to obtain that the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}=(q)_{i \in {\mathbb {N}}}\) on \(\textsc {OneMax} _{\mathrm {TruncGeo}(N,q)}\) is at most

We can derive a strong lower bound of \({\varOmega }(N \log N)\) in the case of \(2^{-N/3} \le q \le 1/N\) from the following one for static solution lengths. See also [31, Sect. 6] for very strong lower bounds.

Lemma 9

([29, Theorem 9]) For a fixed length n and a uniform mutation vector \(\vec {p}=(p)_{i \in {\mathbb {N}}}\), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _n\) is at least \((\ln (n)-\ln \ln n-3)/(p(1-p)^{n})\) for \(2^{-n/3} \le p \le 1/n\) and at least \((\ln (1/(p^2n))-\ln \ln n-3)/(p(1-p)^{n})\) for \(1/n \le p \le 1/(\sqrt{n}\log n)\).

Thus, the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}=(q)_{i \in {\mathbb {N}}}\) and \(2^{-N/3} \le q \le 1/N\) on \(\textsc {OneMax} _{\mathrm {TruncGeo}(N,q)}\) is at least \(\sum _{n=1}^{N-1} q(1-q)^{n-1} \frac{ (\ln (n)-\ln \ln n-3)}{q (1-q)^{n}} \ge \sum _{n=1}^{N-1}{ \frac{1}{2} \frac{\ln n}{1-q}} = \frac{1}{2}\frac{\ln ((N-1)!)}{1-q} = {\varOmega }(N \log N). \) Similarly we can get a lower bound of \({\varOmega }(N)\) in case of \(1/N \le q \le 1/(\sqrt{N}\log N)\) by using the lower bound of \(1/(q (1-q)^{n})\) for any fixed solution length n.

We now turn our attention to the LeadingOnes problems, where a similar approach as above yields the following result.

Theorem 10

Let \(N \in {\mathbb {N}}\) and \(1/N \le q \le 1/2\). Let \(\vec {p}:=(q/2)_{i \in {\mathbb {N}}}\). For \(D = \mathrm {TruncGeo}(N,q)\) and \(D = \mathrm {Geo}(q)\), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _{D}\) is \({\varTheta }(q^{-2})\).

We will derive this result from the following lemma, which was independently proven in [5, Theorem 3], [29, Corollary 2], and in a slightly weaker form in [26, Theorem 1.2].

Lemma 11

([5, 29], and [26]) For a fixed length n and a mutation vector \(\vec {p}=(p)_{i \in {\mathbb {N}}}\) with \(0<p<1/2\), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _n\) is exactly \(\left( 1/(2p^2)\right) \left( (1-p)^{-n+1}-(1-p)\right) \).

Proof (of Theorem 10)

We first consider the case that the solution length is sampled from the truncated geometric distribution \(\mathrm {TruncGeo}(N,q)\). By Lemma 11 the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _{D}\) is

where A is the summand that accounts for the event that the solution length is N, i.e.,

To bound expression (4), we use inequality (3), which for \(n=1\) provides \((1-q)^{1/2} < 1-q/2\). Using this inequality (in the first and the last step in the computations below), and ignoring the “\(-(1-q/2)\)” term in (4), we can bound the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _{D}\) from above by

Similar computations, again using that \((1-q)^{1/2} < 1-q/2\) show that for \(D = \mathrm {Geo}(q)\) the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _D\) is bounded from above by

\(\square \)

Just as for \(\textsc {OneMax} _{D}\) (with \(D = \mathrm {TruncGeo}(N,q)\)) we see that also on \(\textsc {LeadingOnes} _{D}\) the expected run time increases (in this case to \({\varTheta }(N/q)\)) when the mutation probability is chosen to be \(\vec {p}=(q)_{i \in {\mathbb {N}}}\). By Lemma 11 this run time equals

where A is the summand that accounts for the event that the solution length is N, i.e.,

4 Initial Segment Uncertainty Model with Arbitrary Solution Length

In the setting described in Sect. 3 it is assumed that the algorithm designer has quite a good knowledge about the solution length. Not only does he know an upper bound of N, but he may also crucially exploit its distribution. Indeed, we make quite heavy use in Theorems 7 and 10 of the fact that the (truncated) geometric distribution has a light upper tail. Since situations may exist in which one cannot rely on so much information, we regard in this section a more general setting in which no prior information is given about the possible solution length n. That is, we regard a setting in which the solution length can be an arbitrary positive integer. In this setting neither do we have any upper bounds on n nor any information about its distribution.

As before, our task is to decide upon on a sequence \((p_i)_{i \in {\mathbb {N}}}\) of mutation probabilities \(0 \le p_i \le 1\). An adversary may then choose the solution length n and we run the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}=(p_1, \ldots , p_n)\). In practical applications, this can be implemented with a (possibly generous) upper bound on the problem size.

We first show that uniform fixed bit flip probabilities necessarily lead to exponential run times (see Sect. 4.1). We then show two ways out of this problem. In Sect. 4.2 we consider position-dependent bit flip probabilities and in Sect. 5 we show that we can have an efficient algorithm with uniform bit flip probabilities if we choose the bit flip probability randomly in each iteration.

4.1 Non-suitability of Uniform Bit Flip Probabilities

It seems quite intuitive that, if nothing is known about the solution length, there is not much we can achieve with uniform bit flip probabilities. In fact, for any fixed mutation probability \(p \in [0,1]\), we just need to choose a large enough solution length n to see that the \((1 + 1)~\text{ EA }_{\vec {p}}\) with uniform mutation probability p is very inefficient.

More precisely, using the following statement (which is a simplified version of [31, Theorem 6.5]), we get the lower bound regarding optimizing OneMax with uniform bit flip probabilities stated in Theorem 13.

Theorem 12

(from [31]) Let \(0<\varepsilon <1\) be a constant. Let \(p = O(n^{-2/3-\varepsilon })\) and \(\vec {p}:=(p)_{i \in {\mathbb {N}}}\). On any linear function, the expected optimization time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) is bounded from below by

Theorem 13

Let \(p \in [0,1]\) be a constant and \(\vec {p}:=(p)_{i \in {\mathbb {N}}}\). Then there exists a positive integer \(n_0 \in {\mathbb {N}}\) such that, for all \(n \ge n_0\), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _n\) is \(2^{{\varOmega }(n)}\).

It is quite intuitive that for large p the expected optimization time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}=(p)_{i \in {\mathbb {N}}}\) is very large also for small problem sizes, as in this case typically too many bits are flipped in each iteration. This has been made precise by Witt, who showed that for p, n with \(p={\varOmega }(n^{\varepsilon -1})\), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) is \(2^{{\varOmega }(n^\varepsilon )}\) with probability at least \(1-2^{-{\varOmega }(n^\varepsilon )}\) [31, Theorem 6.3].

For LeadingOnes we get a similar lower bound from Lemma 11.

Theorem 14

Let \(p \in (0,1/2)\) be a constant and \(\vec {p}:=(p)_{i \in {\mathbb {N}}}\). Then the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _n\) is \(2^{{\varOmega }(n)}\).

Proof

From Lemma 11 we have that the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) is, for n large enough,

\(\square \)

4.2 Initial Segment Uncertainty Model with Position-Dependent Bit Flip Probabilities

As discussed, one way to achieve efficient optimization with unknown solution length is by using position-dependent mutation rates, that is, different bit positions have different probabilities associated for being flipped during a mutation operation. We shall make use of summable sequences\(\vec {p}\) to define these bit-flip probabilities. For the convenience of the reader, some basic facts and definitions of summable sequences are summarized in Section A in the “Appendix”.

The advantage of using summable sequences is that the probability of flipping only one single bit is always constant, regardless of the total number of bits considered, i.e., regardless of the problem length n. This is in contrast to the sequence \((1/(i+1))_{i \in {\mathbb {N}}}\) considered in [6], which is not summable, and which has a chance of \((1/2)\prod _{i=2}^{n}(1-1/(i+1))=1/n\) of flipping only the first bit and a chance of \((1/n)\prod _{i=1}^{n-1}(1-1/(i+1))=1/n^2\) of flipping only the nth bit. For this reason the \((1 + 1)~\text{ EA }_i\) is very inefficient for the setting in which the solution length can be arbitrary.

Using the following two theorems for OneMax and LeadingOnes, respectively, we will be able to show in Corollary 17 that not knowing the solution length n does not harm the expected run time more than by a factor of order \(\log (n) \log ^{(2)}(n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon }\) with respect to the best known (and, in case of LeadingOnes according to Lemma 2 optimal) bound when the problem length is known a priori.

We start with the theorem regarding OneMax.

Theorem 15

Let \(\vec {p}=(p_i)_{i \in {\mathbb {N}}}\) be a monotonically decreasing summable sequence with \({\varSigma }:=\sum _{i=1}^{\infty }{p_i}<1\). Then the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _n\) is at most \(\log n/(p_n(1-{\varSigma }))= O(\log n/p_n)\).

Proof

We make use of the multiplicative drift theorem [17, Theorem 3] and show that for every n and every search point x with \(n-k\) ones, the probability to create in one iteration of the \((1 + 1)~\text{ EA }_{\vec {p}}\) with \(\vec {p}\) a search point y with \(\textsc {OneMax} _n(y)>\textsc {OneMax} _n(x)\) is at least of order \(k/p_n\). This can in fact be seen quite easily by observing that the probability to increase the OneMax-value of x by exactly one is at least

where the first (elementary) estimate is known as Weierstrass product inequality, see, e.g., [9]. From this an upper bound of \(\log n/(p_n (1-{\varSigma }))\) for the run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) follows immediately from the multiplicative drift theorem. \(\square \)

Next we consider LeadingOnes. The proof follows along similar lines as the one for OneMax and uses a fitness level argument instead of multiplicative drift (using additive drift would also be possible). The following statement will be generalized in Lemma 27 but suffices for the considerations made in this section.

Theorem 16

Let \(\vec {p}=(p_i)_{i \in {\mathbb {N}}}\) be a monotonically decreasing summable sequence with \({\varSigma }:=\sum _{i=1}^{\infty }{p_i}<1\). The expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _n\) is at most \(n/(p_n(1-{\varSigma })) = O(n/p_n)\).

Proof

Let \(n,k\in {\mathbb {N}}\) with \(k < n\) and let \(x \in \{0,1\}^n\) with \(\textsc {Lo} (x)=k-1\). The probability to get in one iteration of the \((1 + 1)~\text{ EA }_{\vec {p}}\) a search point y with \(\textsc {Lo} (y)>\textsc {Lo} (x)\) is at least

By a simple fitness level argument (see, e.g., the work by Sudholt [29] for background and examples of this method), the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {LeadingOnes} _n\) is thus at most \(n/(p_n(1-{\varSigma }))\). \(\square \)

It is well known that, for every constant \(\varepsilon >0\), the sequence \((1/(i (\log i)^{1+\varepsilon }))_{i \in {\mathbb {N}}}\) is summable (this can be proven via Cauchy’s condensation test). It is also monotonically decreasing in i. Theorems 15 and 16 therefore imply that the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) on \(\textsc {OneMax} _n\) is \(O(n (\log n)^{2+\varepsilon })\) and \(O(n^2 (\log n)^{1+\varepsilon })\) for \(\textsc {LeadingOnes} _n\) when \(\vec {p}=(p_i)_{i \in {\mathbb {N}}}:=(1/(2S i (\log i)^{1+\varepsilon }))_{i \in {\mathbb {N}}}\) with \(S:=\sum _{i=1}^{\infty }{1/(i (\log i)^{1+\varepsilon })}\).

This bound can be improved by regarding the following sequences of iterated logarithms. For every \(b>1\), \(\varepsilon \ge 0\), \(r>0\), and all positive integers s, set

where, for each \(b>1\), \(j \in {\mathbb {N}}_{\ge 2}\), and \(r>0\), we set

with \(\log _b^{(1)}(r):=\log _b(r)\) if \(r\ge b\) and \(\log _b^{(1)}(r):=1\) otherwise. When, in the following, the subscript b indicating the base of the logarithm is omitted, it can be assumed to be equal to two.

It is furthermore well known that, for every \(\varepsilon >0\) and every \(s \ge 1\), the sequence \((p_2^{s,\varepsilon }(n))_{n \in {\mathbb {N}}}\) is summable, cf. [21, page 48]. Together with Theorems 15 and 16 , this gives the following bounds.

Corollary 17

Let \(s \in {\mathbb {N}}\) and \(\varepsilon >0\). Set \(\vec {p}:=(p^{s,\varepsilon }_{i})_{i \in {\mathbb {N}}}\). Then the expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) is

-

\(O(n (\log (n))^2 \log ^{(2)}(n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\) on \(\textsc {OneMax} _n\), and

-

\(O(n^2 \log (n) \log ^{(2)}(n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\) for \(\textsc {LeadingOnes} _n\).

A natural question arising from the construction in (5) is the summability of those sequences in which the iterated logarithms in the denominator are not interrupted; that is, of the sequences \((p_b^{\infty }(n))_{n \in {\mathbb {N}}}\) with elements

It is known in the mathematics literature (cf. also Theorem 43 in the “Appendix”) that these sequences \((p_b^{\infty }(n))_{n \in {\mathbb {N}}}\) are summable if and only if the base b of the logarithm satisfies \(b<e:=\exp (1)\). This yields the following bounds.

Corollary 18

Let \(b<e\) and \(\vec {p}:=(p_b^{\infty }(n))_{n \in {\mathbb {N}}}\). The expected run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\) is

-

\(O(n (\log _b(n))^2 \log ^{(2)}_b(n)\ldots )\) on \(\textsc {OneMax} _n\), and

-

\(O(n^2 \log _b(n) \log ^{(2)}_b(n)\ldots )\) on \(\textsc {LeadingOnes} _n\).

5 Unrestricted Uncertainty Model

In the conclusions of [6] the authors ask the following: how can we optimize efficiently when an upper bound N on the problem length is known, but only n bits at unknown positions are relevant for the fitness? It is not difficult to see that our previous solutions with position-dependent bit flip probabilities will not be able to assign appropriate bit flip probabilities to the relevant bit positions. However, any uniform choice of bit flip probabilities will effectively ignore irrelevant bit positions. In this section we therefore consider the \((1 + 1)~\text{ EA }_{Q}\), described as Algorithm 3 in Sect. 2.3, a variation of the \((1 + 1)~\text{ EA }\) where the bit flip probability p is chosen randomly from a distribution Q on (0, 1) in each iteration (the distribution Q does not change over time). This mutation probability is then applied independently to each bit, i.e., each bit of the current best solution is independently flipped with probability p.

To make the problem more explicit, we are asked to find a distribution Q on [0, 1] such that the \((1 + 1)~\text{ EA }_{Q}\) efficiently optimizes for any \(n \in {\mathbb {N}}\) and any pairwise different \(b_1, \ldots , b_n \in {\mathbb {N}}\) the functions

In Theorems 19 and 20 we show that such a distribution Q exists. That is, there is a distribution Q such that the corresponding \((1 + 1)~\text{ EA }_{Q}\) efficiently optimizes any \(\textsc {OneMax} _{b_1, \ldots , b_n}\) and any \(\textsc {LeadingOnes} _{b_1, \ldots , b_n}\) function, regardless of the number of relevant bits and regardless of their positions.

We start with our main result regarding OneMax.

Theorem 19

Let \((p_i)_{i \in {\mathbb {N}}} \in (0,1)^{\mathbb {N}}\) be a monotonically decreasing summable sequence. Set \({\varSigma }:=\sum _{j=1}^{\infty }{p_j}\). Let Q be the distribution which assigns the mutation probability 1 / i a probability of \(p_i/{\varSigma }\).

For any pairwise different positive integers \(b_1, \ldots , b_n\) the expected run time of the \((1 + 1)~\text{ EA }_{Q}\) on \(\textsc {OneMax} _{b_1, \ldots , b_n}\) is \(O\left( \log (n) / p_{2n}\right) \).

Proof

Let \(n \in {\mathbb {N}}\) and \(b_1, \ldots , b_n\) be pairwise different positive integers.

The probability to sample a mutation probability between 1 / (2n) and 1 / n is

We disregard all iterations in which we do not sample a mutation probability between 1 / (2n) and n (they can only be beneficial). Thus, on average, we consider at least one iteration out of \(1/(np_{2n})\).

Assuming that x is a search point with \(n-\ell \) ones (in the relevant positions) and that the sampled bit flip probability p satisfies \(1/(2n) \le p \le 1/n\), the probability of gaining one of the remaining \(\ell \) ones in a relevant position and not flipping any other relevant bit is

Thus, we have an expected progress in each iteration of at least

Therefore, by the multiplicative drift theorem [17, Theorem 3], we need in expectation \(O(\log (n) / p_{2n})\) iterations to optimize function \(\textsc {OneMax} _{b_1, \ldots , b_n}\). \(\square \)

For \(\textsc {LeadingOnes} \) we obtain the following.

Theorem 20

Let \((p_i)_{i \in {\mathbb {N}}}\) and Q as in Theorem 19. For any pairwise different \(b_1, \ldots , b_n \in {\mathbb {N}}\) the expected run time of the \((1 + 1)~\text{ EA }_{Q}\) on \(\textsc {LeadingOnes} _{b_1, \ldots , b_n}\) is \(O\left( n / p_{2n}\right) \).

Proof

Let \(n \in {\mathbb {N}}\) and \(b_1, \ldots , b_n\) be pairwise different positive integers.

This proof follows along similar lines as the one for OneMax. We have again that the probability to have a bit flip probability between 1 / (2n) and 1 / n in an iteration is at least \(np_{2n}\).

Let x be a search point with \(\textsc {LeadingOnes} _{b_1, \ldots , b_n}(x) = \ell \). Given a mutation probability p between 1 / (2n) and 1 / n, the probability to create in one iteration of the \((1 + 1)~\text{ EA }_{Q}\) a search point y of fitness greater than \(\ell \) is at least

Thus, we have an expected progress in each iteration of at least

Therefore, by the fitness level method (see again [29] for a discussion of this method), we need in expectation \(O(n / p_{2n})\) iterations to optimize \(\textsc {LeadingOnes} _{b_1, \ldots , b_n}\). \(\square \)

Similarly to Corollaries 17 and 18 we obtain the following bounds.

Corollary 21

Let \(n \in {\mathbb {N}}\) and \(b_1, \ldots , b_n\) be pairwise different positive integers.

For \(s \in {\mathbb {N}}\), \(\varepsilon >0\), \(\vec {p}:=(p^{s,\varepsilon }_{i})_{i \in {\mathbb {N}}}\), and \(Q = Q(\vec {p})\) the expected run time of the \((1 + 1)~\text{ EA }_{Q}\) is

-

\(O(n (\log (n))^2 \log ^{(2)}(n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\) for \(\textsc {OneMax} _{b_1, \ldots , b_n}\), and

-

\(O(n^2 \log (n) \log ^{(2)}(n) \ldots \log ^{(s-1)}(n) (\log ^{(s)}(n))^{1+\varepsilon })\) for \(\textsc {LeadingOnes} _{b_1, \ldots , b_n}\).

For \(b<e\), \(\vec {p}=(p_b^{\infty }(n))_{n \in {\mathbb {N}}}\), and \(Q=Q(\vec {p})\) the expected run time of the \((1 + 1)~\text{ EA }_{Q}\) is

-

\(O(2n (\log _b(2n))^2 \log ^{(2)}_b(2n)\ldots )\) for \(\textsc {OneMax} _{b_1, \ldots , b_n}\), and

-

\(O(2n^2 \log _b(2n) \log ^{(2)}_b(2n)\ldots )\) for \(\textsc {LeadingOnes} _{b_1, \ldots , b_n}\).

6 Lower Bounds for LeadingOnes in Both Uncertainty Models

In this section, we prove very sharp lower bounds for the optimization of the LeadingOnes function in the two uncertainty models via the two algorithm classes \((1 + 1)~\text{ EA }_{\vec {p}}\) and \((1 + 1)~\text{ EA }_{Q}\). Our first result and the central step towards proving the lower bounds is proposing a fairly general class of algorithms that extends both previous algorithm classes (Sect. 6.1). We then show that all three lead to the same run time profiles for the initial segment model (Theorem 26). Since the \((1 + 1)~\text{ EA }_{Q}\) algorithm class gives the same performance on instances defined on any subset of the bit positions, this general result connects the two uncertainty models and shows that none of our algorithms can have a better performance in the weaker initial segment model.

Given that all algorithm classes are equally powerful, we then concentrate in Sect. 6.2 on the performance of the \((1 + 1)~\text{ EA }_{\vec {p}}\) in the initial segment uncertainty model. In Theorem 34 we show that, for all \(s \in {\mathbb {N}}\), \({\varOmega }(n^2 \log (n) \log ^{(2)}(n) \ldots \log ^{(s)}(n))\) is a lower bound for the expected run time of the LeadingOnes instance of length n. By carefully constructing for each sequence \(\vec {p}\) a sequence \(\vec {q}\) such that the asymptotic performance of \((1 + 1)~\text{ EA }_{\vec {q}}\) is strictly better than the one of \((1 + 1)~\text{ EA }_{\vec {p}}\), we also show that there is no best asymptotic performance on the initial segment LeadingOnes problem in the \((1 + 1)~\text{ EA }_{\vec {p}}\) algorithms class (and likewise in the \((1 + 1)~\text{ EA }_{Q}\) class), cf. Sect. 6.2.2.

6.1 Four Algorithm Classes with Equal Power in the Initial Segment Uncertainty Model

To conveniently prove lower bounds for the two algorithm classes \((1 + 1)~\text{ EA }_{\vec {p}}\) and \((1 + 1)~\text{ EA }_{Q}\), we define the following algorithm class which generalizes both. Consequently, a lower bound for the new algorithm class immediately is a lower bound for both previously regarded ones.

Arbitrary Mutation on Infinite Bit Strings: The \((1 + 1)~\mathbf EA _{{\mathbb {P}}}\). In simple words, we consider all algorithms that can be defined as follows. Let \({\mathbb {P}}\) be a probability measure on the set \({\varOmega } = \{0,1\}^{{\mathbb {N}}}\) of infinite bit strings. The \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\), when run on the finite subset \(I \subset {\mathbb {N}}\) of bit positions, is the classic \((1 + 1)~\text{ EA }\) except that the mutation operator (informally speaking) samples a random element X from \({\varOmega }\) and then flips exactly those bits \(i \in I\) for which \(X_i = 1\).

To make this formally correct, we need a mild excursion into probability theory. For all \(n \in {\mathbb {N}}\) and \(a_1, \ldots , a_n \in \{0,1\}\), we call the set

a cylinder in \({\varOmega }\). Let \({\mathcal {A}}\) be the \(\sigma \)-algebra generated by all cylinders. The precise structure of \({\mathcal {A}}\) is not overly important in the remainder except that it contains the following sets C(y).

For a finite set \(I \subset {\mathbb {N}}\) denote by \(\{0,1\}^I\) the set of all finite binary sequences with indices in I, formally, all \(y : I \rightarrow \{0,1\}\). For \(y \in \{0,1\}^I\), let

Then \({\mathcal {A}}\) contains all such sets C(y).

Formally speaking, \(\{C(y) \mid y \in \{0,1\}^I\}\) generates a sub-\(\sigma \)-algebra of \({\mathcal {A}}\), which is isomorphic to \(\{0,1\}^I\). Consequently, any probability measure \({\mathbb {P}}\) on the measurable space \(({\varOmega },{\mathcal {A}})\) gives rise to a probability measure \({\mathbb {P}}_I\) on \(\{0,1\}^I\) (with the power set as \(\sigma \)-algebra) defined by

for all \(y \in \{0,1\}^I\).

Building on these considerations, we can define the mutation operator of the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) when running on a finite set \(I \subset {\mathbb {N}}\) of bit positions as follows. The offspring stemming from a parent \(x \in \{0,1\}^I\) is \(x \oplus y\), where \(y \in \{0,1\}^I\) is chosen randomly from the distribution \({\mathbb {P}}_I\). Here \(\oplus \) denotes the bit-wise exclusive-or. In other words, we flip those bits i of x for which \(y_i\) is one.

Next we argue that the \((1 + 1)~\text{ EA }_{\vec {p}}\) and \((1 + 1)~\text{ EA }_{Q}\) classes are subclasses of the class of all algorithms \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\).

-

Let \(\vec {p}= (p_i)_{i \in {\mathbb {N}}}\) be any sequence in [0, 1]. Then the \((1 + 1)~\text{ EA }_{\vec {p}}\), when run on a finite bit set I, performs mutation by flipping each bit \(i \in I\) independently with probability \(p_i\). When defining a probability measure \({\mathbb {P}}\) on \({\varOmega }\) by setting

$$\begin{aligned} {\mathbb {P}}[C(a_1,\ldots ,a_n)] = \prod _{i \in [n]; a_i = 1} p_i \prod _{i \in [n]; a_i = 0} (1-p_i) \end{aligned}$$for all cylinders, then the \((1 + 1)~\text{ EA }_{\vec {p}}\) and the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) are the same algorithm.

-

Let Q be a discrete distribution on [0, 1]. Then the \((1 + 1)~\text{ EA }_{Q}\), when run on a finite bit set I, performs mutation by first sampling a number q from Q and then flipping each bit \(i \in I\) independently with probability q. When defining a probability measure \({\mathbb {P}}\) on \({\varOmega }\) by setting

$$\begin{aligned} {\mathbb {P}}[C(a_1,\ldots ,a_n)] = \sum _{q} Q(q) \prod _{i \in [n]; a_i = 1} q \prod _{i \in [n]; a_i = 0} (1-q), \end{aligned}$$then the \((1 + 1)~\text{ EA }_{Q}\) and the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) are the same algorithm.

For this reason, any lower bound for the best performance achievable with an algorithm from the class \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) immediately carries over to the subclasses \((1 + 1)~\text{ EA }_{\vec {p}}\) and \((1 + 1)~\text{ EA }_{Q}\). Now that we regard the same class \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) of algorithms for the two uncertainty models of an unknown initial segment [n] and an unknown set I of relevant bits, it is clear that a lower bound for the first case carries over to the second. Since our lower bound for the first case will essentially match our upper bounds for both models (as they are equal), it suffices in the following to regard the model of an unknown initial segment \(I = [n]\) of bits on which the LeadingOnes function to be optimized is defined.

The next step towards the solution of our problem is the following surprising result that for any algorithm \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) there is a randomized local search algorithm (flipping a single randomly chosen bit as mutation operation) that has exactly the same performance on all initial segments (Lemma 22).

Generalized Randomized Local Search on Infinite Bit Strings:\(\mathbf{RLS }_{\vec {p}}\). Let \(p_1, p_2, \ldots \) be non-negative numbers with \(\sum _{n \in {\mathbb {N}}} p_n \le 1\). Then the algorithm \(\hbox {RLS}_{\vec {p}}\) is a special case of the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) that

-

flips exactly the ith bit with probability \(p_i\)

-

and flips no bit with probability \(1 - \sum _{n \in {\mathbb {N}}} p_n\).

More formally, \(\hbox {RLS}_{\vec {p}}\) equals \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) for the measure \({\mathbb {P}}\) defined by \({\mathbb {P}}[C(a_1,\ldots ,a_n)]:=\)

Lemma 22

Let \({\mathbb {P}}\) be any probability measure on \({\varOmega } = \{0,1\}^{{\mathbb {N}}}\). Then there is a sequence of non-negative numbers \(\vec {p}= (p_1, p_2, \ldots )\) with \(\sum _{n \in {\mathbb {N}}} p_n \le 1\) such that, for LeadingOnes, the randomized local search algorithm \(\hbox {RLS}_{\vec {p}}\) and \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) when run on any initial segment of bits have exactly the same run time distribution.

To prove this lemma, we need the auxiliary result that in any run of an \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) algorithm at any time t the random individual \(X^{(t)}\) is identically distributed within each fitness level. This elementary observation has been used previously in [5, 16, 29] to analyze the optimization of fixed length LeadingOnes instances.

Lemma 23

Consider a run of an \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) algorithm on the LeadingOnes function defined on the initial segment [n]. Denote by \(X^{(0)}\) the random initial individual and by \(X^{(t)}\), \(t \in {\mathbb {N}}\), the random individual forming the one-element population of the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) after the t-th iteration. Then, for any two search points \(x, y \in \{0,1\}^n\) with \(\textsc {Lo} (x) = \textsc {Lo} (y)\), we have \(\Pr [X^{(t)} = x] = \Pr [X^{(t)} = y]\).

Proof

We proceed by induction over time. For \(t=0\), the claim is obvious since \(X^{(0)}\) is simply a bit string chosen uniformly at random from \(\{0,1\}^n\), so we even have \(\Pr [X^{(t)} = x] = \Pr [X^{(t)} = y]\) for all \(x, y \in \{0,1\}^n\).

Assume the claim to hold for some \(t \ge 0\). Since the distribution of \(X^{(t+1)}\) is a convex combination of the distributions of \(X^{(t+1)}\) conditional on the events \(\textsc {Lo} (X^{(t)})=i\), \(i \in [0{\ldots }n]\), it suffices to show the claim conditioning on \(\textsc {Lo} (X^{(t)})=i\) for some \(i \in [0{\ldots }n]\) (by the law of total probability). So let us assume that \(X^{(t)} = i\) for some \(i \in [0{\ldots }n]\). Since the claim is trivial for \(i = n\), let us assume \(i < n\). Then, with probability one, the first i bits of \(X^{(t)}\) are one and \(X^{(t)}_{i+1} = 0\). For all \(j > i+1\), the bits \(X^{(t)}_j\) are independently and uniformly distributed in \(\{0,1\}\) by our induction hypothesis.

Let \(Y \in \{0,1\}^n\) be the random mutation mask used in the \((t+1)\)-st iteration, that is, \(Y_j = 1\) if and only if the mutation step in this iteration flipped the j-th bit. We again condition on each possible outcome \(y \in \{0,1\}^n\) of Y and prove the claim in this case. Hence let \(y \in \{0,1\}^n\) and condition on \(Y^{(t)} = y\). We need to show that \(X^{(t+1)}\) in this situation is uniformly distributed within each fitness level. We consider three cases.

Case 1: If y is such that \(y_j = 1\) for some \(j \le i\), then the mutation offspring has a lower fitness than \(X^{(t)}\) and is not accepted. Hence \(X^{(t)}\) and \(X^{(t+1)}\) are identically distributed and the claim is obvious.

Case 2: If y is such that \(y_j = 0\) for all \(j \le i+1\), then the mutation offspring has fitness equal to \(\textsc {Lo} (X^{(t)})\) and is accepted. The distribution of this \(X^{(t+1)}\) is identical to the one of \(X^{(t)}\), since the first \(i+1\) bits are not changed and the last \(n-(i+1)\) bits are obtained from an exclusive-or of the last \(n-(i+1)\) bits of \(X^{(t)}\), which are uniformly distributed in \(\{0,1\}^{n-(i+1)}\), and a fixed bit string, namely the last \(n-(i+1)\) bits of y. Since such an exclusive-or is a bijection of \(\{0,1\}^{n-(i+1)}\), this part of \(X^{(t+1)}\) remains uniformly distributed in \(\{0,1\}^{n-(i+1)}\).

Case 3: We consider finally the case that \(y_{i+1} = 1\) and \(y_j = 0\) for all \(j \le i\). In this case, the mutation offspring and hence also \(X^{(t+1)}\) have a fitness larger than i. With the same argument as in the previous case, we see that the last \(n-(i+1)\) bits remain independently and uniformly distributed. Consequently, when conditioning on the fitness of \(X^{(t+1)}\) being some \(j \ge i+1\), the bits with index larger than \(j+1\) remain independently and uniformly distributed. This again shows the claim. \(\square \)

Proof (of Lemma 22)

For \(n \in {\mathbb {N}}\), let \(C_n := C(a_1,\ldots ,a_n)\) with \(a_1 = \ldots = a_{n-1} = 0\) and \(a_n = 1\). Let \(p_n := {\mathbb {P}}[C_n]\). Since the sets \((C_n)_{n \in {\mathbb {N}}}\) are disjoint, we have \(\sum _{n \in {\mathbb {N}}} p_n \le 1\). We show that the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) and \(\hbox {RLS}_{\vec {p}}\) have an identical optimization behavior.

Let \(n \in {\mathbb {N}}\) and consider the optimization of LeadingOnes on the initial segment [n] both via the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) and \(\hbox {RLS}_{\vec {p}}\). By Lemma 23, both algorithms at any time t have the property that the parent individual \(X^{(t)}\) is uniformly distributed within each fitness level. Consequently, the distribution of \(X^{(t)}\) is fully described by the fitness level densities \((\Pr [\textsc {Lo} (X^{(t)}) = i])_{i \in [0{\ldots }n]}\). To show that \(X^{(t)}\) has the same distribution for the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) and \(\hbox {RLS}_{\vec {p}}\), it therefore suffices to show that both algorithms have the same fitness level densities.

We do so by induction. There is nothing to show for \(t = 0\), since the initial individual is chosen uniformly at random in both algorithms. Assume that at some time \(t \ge 0\) both algorithms have the same fitness level densities. We show that this is also true at time \(t+1\). Since \(\Pr [\textsc {Lo} (X^{(t+1)}) = i] = \sum _{j=0}^n \Pr [\textsc {Lo} (X^{(t+1)}) = i \mid \textsc {Lo} (X^{(t)}) = j] \Pr [\textsc {Lo} (X^{(t)}) = j]\) and since the \(\Pr [\textsc {Lo} (X^{(t)}) = j]\) are identical for both algorithms, it suffices to show that both algorithms have identical \(\Pr [\textsc {Lo} (X^{(t+1)}) = i \mid \textsc {Lo} (X^{(t)}) = j]\) for all \(i, j \in [0{\ldots }n]\).

Let \(j \in [0{\ldots }n]\). Since there is nothing to show for \(j=n\), let us assume \(j < n\). For the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\), the probability to leave the j-th fitness level is the probability to flip the \((j+1)\)-st bit and to not flip bits \(1, {\ldots }, j\). By definition of the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\), this is exactly \({\mathbb {P}}[C_{j+1}]\). The second algorithm \(\hbox {RLS}_{\vec {p}}\), obviously, leaves the j-th fitness level if and only if the \((j+1)\)-st bit is the single bit that is flipped, which by definition happens with probability \(p_{j+1} = {\mathbb {P}}[C_{j+1}]\). For both algorithms, conditional on that the j-th fitness level being left, the bits with index larger than \(j+1\) are independently and uniformly distributed. Consequently, the number of additional fitness levels gained in addition to the \((j+1)\)-st one is also equally distributed. \(\square \)

We note in the following lemma that, for LeadingOnes, the class of algorithms \((1 + 1)~\text{ EA }_{\vec {p}}\) regarded in [11] is equal in power to the class of algorithms \(\hbox {RLS}_{\vec {p}}\). Together with Lemma 22, this shows that all three classes of algorithms are equally powerful, and in particular, that the subclass \((1 + 1)~\text{ EA }_{\vec {p}}\) regarded in [11] was of maximal power.

Lemma 24

For any sequence of non-negative numbers \(\vec {p}= (p_1, p_2, \ldots )\) with \(\sum _{n \in {\mathbb {N}}} p_n \le 1\) there is a sequence \(\vec {q}= (q_1, q_2, \ldots )\) of numbers in [0, 1] such that \(\hbox {RLS}_{\vec {p}}\) and \((1 + 1)~\text{ EA }_{\vec {q}}\) for each initial segment of bits have the same run time distribution on LeadingOnes.

Proof

Let us assume that we have \(p_n < 1\) for all \(n \in {\mathbb {N}}\), as otherwise trivially \(\vec {q}:= \vec {p}\) suffices. Let \(q_1 := p_1\) and recursively \(q_n := p_n / \prod _{j=1}^{n-1} (1 - q_j)\). Using induction, it is not difficult to see that \(q_n=p_n/(1-\sum _{j<n}{p_j})\). Furthermore, since \(1-\sum _{j<n}{p_j} \le p_n\), this also shows that \(q_n \le 1\). As discussed earlier, this \((1 + 1)~\text{ EA }_{\vec {q}}\) is a special case of the \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\) with \({\mathbb {P}}[C(a_1,\ldots ,a_n)] = \prod _{i \in [n]; a_i = 1} q_i \prod _{i \in [n]; a_i = 0} (1-q_i)\). In particular, for \(a_1 = \ldots = a_{n-1} = 0\) and \(a_n=1\), we have \({\mathbb {P}}[C(a_1,\ldots ,a_n)] = q_n \prod _{i=1}^{n-1} (1-q_i)\), which by construction equals \(p_n\). Consequently, if we redo the construction of the proof of Lemma 22 with this \((1 + 1)~\text{ EA }_{{\mathbb {P}}}\), we obtain our original \(\hbox {RLS}_{\vec {p}}\) algorithm. Consequently, the \((1 + 1)~\text{ EA }_{\vec {q}}\) just constructed has an identical performance with \(\hbox {RLS}_{\vec {p}}\). \(\square \)

An advantage of the class \(\hbox {RLS}_{\vec {p}}\) is that the expected run times are easy to compute. The following result will be used in Lemma 27 to bound the run time of the \((1 + 1)~\text{ EA }_{\vec {p}}\). The proof is inspired by the analysis of the expected run time of the \((1 + 1)~\text{ EA }\) on LeadingOnes in [5], however, we are not aware of a previous analysis of the run time of RLS on LeadingOnes. We note that, while this work was under review, a general analysis of (1 + 1)-type algorithms on LeadingOnes via stochastic domination was proposed in [8], which implies our result. Since this proof is very different from ours, and to keep our work self-contained, we still present our original proof.

Lemma 25

Let \(\vec {p}= (p_1, p_2, \ldots )\) be a sequence of non-negative numbers with \(\sum _{n \in {\mathbb {N}}} p_n \le 1\). Then the expected run time of \(\hbox {RLS}_{\vec {p}}\) optimizing the LeadingOnes function on the initial segment [n] is \(\tfrac{1}{2} \sum _{i=1}^n \tfrac{1}{p_i}\), where \(\frac{1}{0} := \infty \).

Proof

Let \(n \in {\mathbb {N}}\). Denote by E the expected run time of \(\hbox {RLS}_{\vec {p}}\) on LeadingOnes in [n]. If there is an \(i \in [n]\) with \(p_i = 0\), then with positive probability \(\hbox {RLS}_{\vec {p}}\) never finds the optimum, hence \(E = \infty \) as claimed. So let us assume that all \(p_i\) are positive.