Abstract

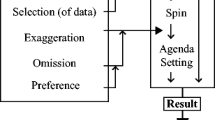

In the first part of this review, I defined the media filter and how it can operate to frame and blame the forecaster for losses incurred during an environmental disaster. In this second part, I explore the meaning and role of uncertainty when a forecast, and its basis, is communicated through the response and decision-making chain to the newspaper, especially during a rapidly evolving natural disaster which has far-reaching business, political, and societal impacts. Within the media-based communication system, there remains a fundamental disconnect between stakeholders in the definition of uncertainty and the interpretation of the delivered forecast. The definition and use of uncertainty differ especially between scientific, media, business, and political stakeholders. This is a serious problem for the scientific community when delivering forecasts to the public through the press. As reviewed in Part 1, the media filter can result in a negative frame, which itself is a result of bias, slant, spin, and agenda setting introduced during passage of the forecast and its uncertainty through the media filter. The result is invariably one of anger and fury, which causes loss of credibility and blaming of the forecaster. Generation of a negative frame can be aided by opacity of the decision-making process that the forecast is used to support. The impact of the forecast will be determined during passage through the decision-making chain where the precautionary principle and cost-benefit analysis, for example, will likely be applied. Choice of forecast delivery format, vehicle of communication, syntax of delivery, and lack of follow-up measures can further contribute to causing the forecast and its role to be misrepresented. Follow-up measures to negative frames may include appropriately worded press releases and conferences that target forecast misrepresentation or misinterpretation in an attempt to swing the slant back in favor of the forecaster.
Review of meteorological, public health, media studies, social science, and psychology literature opens up a vast and interesting library that is not obvious to the volcanologist at first glance. It shows that forecasts and their uncertainty can be phrased, delivered, and followed up in a manner that reduces the chance of message distortion. The mass-media delivery vehicle requires careful tracking because the potential for forecast distortion can result in a frame that the scientific response is “absurd”, “confused”, “shambolic”, or “dysfunctional.” This can help set up a “frightened”, “frustrated”, “angry”, even “furious” reaction to the forecast and forecaster.

Introduction

Uncertainty and error are central to any scientific measurement, model-based projection or forecast (Taylor 1997; Grabe 2005). Because quantification of uncertainty is part of making any measurement, there are accepted standards for error assessment in measurement results (e.g., Taylor and Kuyatt 1994; JCGM 2008; Rougier 2013; Rougier and Beven 2013). Although the forecaster will strive to use the most complete and up-to-date measurements and models available to make the best possible forecast, error is inherent in any measurement or data set, and uncertainty will always be present in the final product. Statement of uncertainty is thus an unavoidable but essential, accepted, and standardized method of communication when passing measurements, model-based simulations, and forecasts between actors in the scientific community (e.g., Hall 1950; Gong and Forrest 2014), as well as upwards through the disaster-response chain to policy and decision-makers. Thus, as Brannigan (2011) states “one of the most important fundamental policy decisions is the treatment of uncertainty.” But, what happens when forecasts and uncertainty are communicated beyond the scientific and policy making community to be filtered by the media? According to Norton et al. (2006),

uncertainties may be framed by the presentation, sources, and social construction of information—this being a social science perspective—as well as the degrees and perceived quality of information available—this being a Bayesian, physical science perspective.

Eldridge and Reilly (2003) argued that the media now pay more attention to uncertainty and can either generate concern about particular threats or offer reassurance. Schwitzer (2011) backed this perspective up, arguing that (health care) news coverage can educate and inform, but also confuse. The ideal scientific treatment of uncertainty will also be balanced by the business perspective. This was stressed by Eoyang and Holladay (2013) who commented that the challenge in the business world is to work ethically and responsibly in circumstances where outcomes are unknowable.

Disconnects in the definition, interpretation, and use of uncertainty between scientific, media, business, political, and public stakeholders are thus bound to occur. These potential disconnects are explored here, mostly from the social science perspective and using four examples of newspaper responses to an environmental disaster. These are:

1. The 2010 eruption of Eyjafjallajökull (Iceland);
2. The passage of the storm “Dirk” through Brittany (France) in December 2013;
3. The “great storm” of 16–17 October 1987 that impacted SE England (UK);
4. The St. Jude’s day storm of 28 October 2013 that, again, impacted SE England.

Recourse to meteorological events, as well as the meteorological and health literature, is essential. This is because the meteorologist and health practitioner have long and extensive experience of delivering forecasts (in verbal, written, and graphic format) to the public. As regards the weather forecast, Stewart (1997) pointed out that “studies of the value of forecasts themselves necessarily consider the decisions made by users of the forecast.” Analyses of forecasts are then either descriptive, focusing on how the users actually decide (e.g., Johnson and Holt 1997; Wilks 1997), or prescriptive, focusing on how the users should decide (e.g., Davis and Nnaji 1982; Sonka et al. 1988). This study is prescriptive.

The volcanological perspective

There is a significant literature in volcanology on the importance of probabilistic forecasts and their communication. Indeed, this review complements that of Doyle et al. (2014) by adding the complications posed by passing uncertainty and forecast through the decision-making and communication chain to the newspaper.

Probabilism is defined (see Footnote 1) as a theory that certainty is impossible in the sciences and that probability has to suffice when governing belief and action. Thus, probabilism and uncertainty are intimately linked. As Sparks (2003) pointed out,

Due to intrinsic uncertainties and the complexity of non-linear systems, precise prediction is usually not achievable. Forecasts of eruptions and hazards need to be expressed in probabilistic terms that take account of uncertainties.

In volcanology, many systems have been developed to aid in probabilistic risk assessments for volcanic eruption scenarios and for input into eruption forecast models (e.g., Gómez-Fernández 2000; Pareschi et al. 2000; Newhall and Hoblitt 2002; Marzocchi et al. 2004, 2008; Behncke et al. 2005; Felpeto et al. 2007; De la Cruz-Reyna and Tilling 2008; Jenkins et al. 2012a; Marzocchi and Bebbington 2012; Bebbington 2013; Garcia-Aristizabal et al. 2013; Gunn et al. 2014; Sobradelo et al. 2014). Long-term forecasts are mainly used for risk management and planning (Bebbington 2013), and tend to be based on historical and deposit data. Now-casting, however, needs to be applied during an ongoing event, in real-time, and relies heavily on live data feeds from operational geophysical arrays, satellite data, and observers in the field. This allows up-to-the-minute input into forecast models. All steps in this flow through the data-model-forecast system have their uncertainties, which inevitably stack up so that certainty in the final product is utterly impossible.

Hazards tend to scale risk numerically in terms of a percentage and/or by using colors. Words used are typically “high”, “medium”, and “low”, with colors being red, orange, yellow, green and/or white. In this context, lava flow models have been used to produce probabilistic maps for lava flow invasion of the city of Goma on the flanks of Nyiragongo (Chirico et al. 2009), the town of Zafferana on Etna (Bisson et al. 2009), as well as for all sectors of Etna (Forgione et al. 1989; Favalli et al. 2009), Vesuvius (Lirer and Vitelli 1998), Lanzarote (Felpeto et al. 2001), and Mount Cameroon (Bonne et al. 2008). Probabilistic risk mapping and assessments have also been completed for explosive events at Vesuvius (Carta et al. 1981; Cioni et al. 2003; Pesaresi et al. 2008; Sandri et al. 2009; Gurioli et al. 2010), Campi Flegrei (Marzocchi et al. 2010), Teide-Pico Viejo (Sobradelo and Martí 2010), and Cotopaxi (Biass et al. 2012), as well as vent opening in the Auckland volcanic field (Lindsay et al. 2010) and Campi Flegrei (Selva et al. 2012). In addition, probability-based assessments have been made for evacuation vulnerability in Campi Flegrei (Alberico et al. 2012), socio-economic vulnerability for eruptions in Valles Caldera (Alcorn et al. 2013), potential damage caused by a possible future eruption at the proposed radioactive waste repository of Yucca Mountain (Ho et al. 2006), hazard to aviation operations during ash-emitting events at Vesuvius (Folch and Sulpizio 2010; Sulpizio et al. 2012), and ash fall hazard in the Asia-Pacific region (Jenkins et al. 2012b). For the Auckland volcanic field, Sandri et al. (2012) combined probability-based hazard assessments with cost-benefit analysis to produce maps that assessed the probability of base surge impact and evacuation time.

There is also a growing literature on how such volcanic hazard information can be communicated, and trusted, on a local scale (e.g., Haynes et al. 2007, 2008). However, Alemanno (2011a) argued that,

The raison d’être of risk communication within the broader framework of risk regulation lies in the assumption that scientific results as well as risk management options cannot always be easily converted into simple guidelines and advice that non-scientists, like the public or media, can easily understand or follow. This seems especially true at a time when we learn about crises via new media tools such as Twitter, Facebook and YouTube. Moreover, with public opinion having become more skeptical about the neutrality and effectiveness of science, there is a growing call for transparency, especially in times of emergencies.

Pidgeon and Fischhoff (2011) backed this view up, arguing that “communication failure makes future success less likely, by eroding both the public’s trust in the experts, who seem not to know their needs, and the experts’ trust in the public, which seems unable to understand the issues.” Pidgeon and Fischhoff (2011) concluded by arguing that a new model of science communication was needed. Also, there may be government-based campaigns to erode trust in science through a process which can be summarized as “abusing” science (Wright and Dunlap 2010), as well as lobbying (Eldridge and Reilly 2003). It is not surprising, then, that surveys by Davis et al. (2005) showed that confidence among the population in government officials’ levels of preparedness and ability to provide accurate information about an impending eruption was not high.

However, the decision as to whether and how to respond to an environmental disaster is not the responsibility of the forecaster. In the decision-making process, the forecaster is at the base of the triangle that tips out with government bodies, these being those agencies responsible for defining, implementing, and enforcing environmental hazard response policy (Fig. 1). For a volcanic disaster, information-flow protocols have been laid out in many documents (e.g., Tilling 1989; Heliker 1992; Bertolaso et al. 2009). Within this scheme, forecasters are charged with providing the best possible information to those bodies higher up in the decision-making chain so that the best-informed decision can be made on the basis of the best scientific information available (Bonfils et al. 2012). Application of this scheme involves not just the media filter (see Part 1 of this review), but also application of cost-benefit analysis and the precautionary principle by policy and decision-makers. Worse, although “we can make a good deal of progress in understanding why, and when, people fail to respond sensibly to worst-case scenarios, when probabilities cannot be assigned to the worst-case scenario, the analysis is harder” (Sunstein 2007). As Sunstein (2007) adds, “suppose that officials or scientists have no idea about a terrible outcome, or that they are able to specify only a wide range.”

Fig. 1 Volcanic crisis response and decision-making pyramid as based on the original scheme of Tilling (1989) and adapted to cover the response to a volcanic event that impacts air space. I have divided the main stakeholders into three groups (science, governmental, and others, as divided by the dashed lines). The main role of each group is given in the yellow boxes. Forecasting and government bodies were those active during the 2010 Eyjafjallajökull eruption as taken from Alemanno (2011c) and Macrae (2011). I have added a second pyramid of influence to the original scheme of Tilling (1989), which enters from the right and contains the “other” stakeholder group.

Cases and sources

With the exception of the Great Storm of 1987 for which I refer to a 1988 special issue of the journal Weather devoted to the storm, the UK press sources that I use for the Eyjafjallajökull and UK weather events are The Times, The Daily Telegraph, and The Sun, as well as The Daily Mail, The Daily Mirror, and The Independent. I have already reviewed these sources in Part 1 of this review. Thus, following a brief review of each event, I detail the new French press sources used here.

The 2010 eruption of Eyjafjallajökull

Beginning on 14 April 2010, an explosive eruption of Eyjafjallajökull volcano (Iceland) fed an ash cloud that drifted into transatlantic and European air routes, prompting closure of the same air space during 15–20 April (Gudmundsson et al. 2010). The impact, especially on airline operations, has been well documented (e.g., Alemanno 2011b), as have methods used to measure, model, and track the ash cloud (e.g., Kristiansen et al. 2012; Newman et al. 2012; Turnbull et al. 2012; Woodhouse et al. 2013). The impact of the event on industries and individuals led to extensive press coverage in the European and US press (Harris et al. 2012). Reports appeared in The Times on nine consecutive days beginning on 15 April, and on eight consecutive days beginning on 16 April in The Sun. Coverage in these two UK newspapers alone amounted to 6 m² of paper space or 7500 cm² of newspaper coverage per day.
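As a quick consistency check on the two coverage figures quoted above, the following sketch converts the total area to square centimeters and divides it by the per-day figure. It assumes (this is not stated in the text) that the per-day value is simply the total divided by a number of days. The function and variable names are illustrative only.

```python
# Illustrative consistency check. Names are hypothetical; the two input
# values (6 m^2 total, 7500 cm^2 per day) are the figures quoted in the text.
CM2_PER_M2 = 100 * 100  # 1 m^2 = 10,000 cm^2


def implied_days(total_m2: float, per_day_cm2: float) -> float:
    """Return the number of days implied by a total area and a daily average."""
    return total_m2 * CM2_PER_M2 / per_day_cm2


days = implied_days(total_m2=6.0, per_day_cm2=7500.0)
print(days)  # 8.0
```

Under that assumption, the two figures are mutually consistent for an eight-day averaging window, which matches the eight consecutive days of coverage in The Sun.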

Dirk and the flooding of Morlaix

The storm named “Dirk” crossed the French region of Brittany during 23–24 December 2013. Between 60 and 80 mm of rain fell on land already saturated by water, winds reached speeds of 100 km/h and around 18,500 households lost electricity (Ouest France, 26 December 2013, p. 3). Flooding was widespread, especially in western Brittany where the towns of Quimperlé, Quimper, and Châteaulin were flooded (Violette 2014). The town of Morlaix was particularly hard hit, where the “rising water surprised everyone” as the river that flows through the town center rapidly rose and overflowed to flood roads, houses, and shops to a depth of more than 1 m (Ouest France 2014).

The “great storm” of 16–17 October 1987

The “great storm” of 16–17 October 1987 was one of Britain’s most severe windstorm events since 1703 (Lamb 1988); the 1703 storm being argued by Daniel Defoe to have been “the most violent tempest the world ever saw” (Clow 1988). The 1987 event thus became the “so-called hurricane” or the “great gale” (Stirling 1997), with peak wind-gust speeds of up to 325 km/h (Templeman et al. 1988). As a result, winds blew down around 15 million trees (Quine 1988) and caused extensive property damage (e.g., Lawes 1988), with 18 fatalities being recorded in Britain (Met. Office 2013). Total damage was assessed at 1.4 billion British pounds by RMS (2007).

The St. Jude’s Day storm of 28 October 2013

On 28 October 2013 a storm, named St. Jude, swept across southern England bringing winds of up to 160 km/h. Although details in newspaper reports were contradictory, initial losses were reported as:

- 5 dead, power cuts hit 500,000 (The Sun, 29 October 2013, p. 4);
- 4 dead, “thousands” without power (The Independent, 29 October 2013, p. 2);
- 5 killed, 500,000 “families” left without power (Daily Mail, 29 October 2013, p. 1);
- 5 dead, 600,000 homes without power (The Times, 29 October 2013, p. 1);
- “100 mph hurricane force winds claim 6 lives” (Daily Express, 29 October 2013, p. 1).

The storm caused five deaths. It also felled thousands of trees, left hundreds of thousands of homes without power, blew down walls, scaffolding and cranes, and disrupted the railway network.

French press sources

For storm Dirk, I use four French newspapers: Ouest France, La Montagne, Le Figaro, and Le Monde. All newspapers published between 23 December 2013 and 9 January 2014 were examined. Ouest France, specifically the Morlaix edition, was selected as the primary target newspaper due to the location of Morlaix in one of the worst hit zones. Based in Rennes (Brittany), Ouest France was founded in 1944 following the collapse of the controlled press of the Second World War (Martin 2002). Ouest France currently has a circulation of 768,226, being the most read regional newspaper in France as of 2009 (Corroy and Roche 2010). La Montagne was selected as a control. Founded in 1919 in Clermont Ferrand (Martin 2002) it is, geographically, the most central newspaper in France and its circulation of 190,268 makes it the eighth most popular regional newspaper in France (Corroy and Roche 2010). Le Figaro and Le Monde were selected as being two of the main national “haut de gamme” (high standing) daily newspapers in France (Charon 2013).

As of 2006, there were 254 regional newspapers in France, with a total readership of 2,010,240 (from data in Béguier 2006). As of 2008–2009, the distribution of Le Figaro and Le Monde was 315,656 and 294,324, respectively (Corroy and Roche 2010). Where impacted populations are widely dispersed, and in the existence of a press system composed of multiple regional newspapers, Besley and Burgess (2002) argue that regional presses will have a greater incentive to cover local issues. They will also have a greater influence on the catchment populations, writing in the language, dialect or style of the reader, thus being accessible to (and preferred by) the local readership.

Forecast delivery by the newspaper during Eyjafjallajökull

During the Eyjafjallajökull eruption, forecasts, risks, and hazards, as well as uncertainty on projections, were well communicated by newspapers. For example, maps of (and projections for) cloud extent appeared in both The Times and The Sun on 16 April 2010, the model-based forecast for future cloud location being termed a “prediction” by The Times. The cloud extent was filled with a dark gray tone (The Times) or red color (The Sun), with The Times adding “when it erupts it produces a grey ash that has a high fluoride content.” The nature of the hazard was also well stated. For example, on 16 April, The Times published a double page spread illustrating ash impacts on aviation operations, including a correctly annotated schematic of an aircraft engine ingesting ash. On the same day, a report spread across pages 4 to 5 of The Sun stated that ash “can wreck jet engines, choke ventilation systems and sand-blast windscreens.”

Uncertainty was clearly stated. On 21 April, The Times, in a page 5 analysis entitled “flying into the unknown”, pointed out that “all weather models are based on probabilities rather than fact.” The article added that the model used by the UK Meteorological Office (hereafter the Met. Office) was called “Name” (Nuclear Accident Model), which had been developed out of the need to model dispersion of nuclear fallout after the 1986 Chernobyl disaster. The Times described how Name treated the volcanic cloud in the same way as Chernobyl’s radioactive cloud, using “an estimate of the volume of ash injected into the atmosphere” to produce “a best estimate for where ash will be found.” The report went on to state that “our knowledge of the nature of the plume and of atmospheric conditions being imperfect, the model will inevitably be unable to predict the position of the plume to the nearest inch.”

However, in spite of these statements, uncertainty was used to mean “cautious”, even “overcautious”; or “health and safety gone mad” (see Part 1 of this review). Words such as “absurd”, “chaos”, “confusing”, “crisis”, “havoc”, “mad”, “mayhem”, “pandemonium”, and “shambles” appeared in dictionaries created from all reports in The Times and The Sun during the event, with the volcano even being described as “mighty” or a “monster” (Harris et al. 2012). These are strong, evocative words that carry more weight than “uncertain” and “forecasting”.

An aircraft encounter during the Eyjafjallajökull eruption

On 22 April, a Sun Exclusive spread across pages 10 and 11 detailed a probable aircraft ash encounter that caused a commercial flight to abort due to loss of an engine-bleed after a “strong smell of ash” was encountered at 16,000 ft. The report gave the flight path information and the pilot communication transcript, with an expert statement commenting that it was “a very uncommon fault,” and that “for it to happen as the plane flew through the ash cloud is a worry.” The source added that, if it was really a minor technical fault, the pilot would not have taken the long detour over the sea, “he would simply have turned around.” The airline involved, however, claimed that the incident was due to a minor technical fault with the air-conditioning system. The report concluded with the line that “meanwhile travel firms claimed that Britain’s response to the ash crisis was a shambles,” with the UK Transport Secretary being quoted as admitting “it’s fair to say we’ve been too cautious.” These final lines appear to align with the slant apparent in The Times on 20 April that accused the Met. Office of “only making a weather report.” However, this aircraft encounter actually seems to have validated the Met. Office forecast for that day. Advisory maps issued by the Met. Office at 00:00 GMT on 22 April placed ash over the Manchester area, at flight level SFC/FL200 (that is, between sea level and 20,000 ft, i.e., up to 6000 m) for most of the day (see http://www.metoffice.gov.uk/aviation/vaac/data/VAG_1271892412.png). This apparently successful forecast was, though, not mentioned in the report.
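The flight-level conversion quoted above can be checked with a short sketch. It assumes the usual aviation convention that a flight level is expressed in hundreds of feet, uses the definition 1 ft = 0.3048 m, and ignores the distinction between pressure altitude and true altitude. The function names are illustrative only.

```python
# Illustrative conversion sketch. Names are hypothetical; it assumes a flight
# level is given in hundreds of feet and ignores pressure-altitude effects.
FEET_PER_FLIGHT_LEVEL = 100
METERS_PER_FOOT = 0.3048  # definition of the international foot


def flight_level_to_feet(flight_level: int) -> int:
    """Convert a flight level (e.g., 200 for FL200) to feet."""
    return flight_level * FEET_PER_FLIGHT_LEVEL


def flight_level_to_meters(flight_level: int) -> float:
    """Convert a flight level to meters."""
    return flight_level_to_feet(flight_level) * METERS_PER_FOOT


print(flight_level_to_feet(200))           # 20000
print(round(flight_level_to_meters(200)))  # 6096
```

On these assumptions, FL200 corresponds to 20,000 ft, or roughly 6100 m, which the text rounds to 6000 m.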

Framing of the response during the Eyjafjallajökull eruption

Forecast, uncertainty, and their use were framed in a way that implied the response agencies were in some way incompetent or, at best, confused; even somehow responsible for the crisis. For example, on 25 April an article appeared on page 19 of the Mail on Sunday under the banner headline “A natural disaster, but a man-made catastrophe.” Likewise, “Air crisis shambles” was the front page headline in The Times on 22 April. Irrespective of the content of the stories that followed, the messages transmitted by such eye-catching and evocative titles are not positive toward those deemed responsible for the “catastrophe” or “shambles”. The subsequent distortion was summed up in the key words found in a page 5 report of the Daily Mirror on 22 April 2010. These were: “cautious”, “caved in”, “shambles”, “muddle”, “confusion”, “irritated”, and “furious.” The same report, entitled “We made an ash of flight ban”, contained the following quote regarding the Government response,

They underestimated the severity of the consequences of the decision.

These sentiments are borne out by the results of the Google Trends analysis of Burgess (2011), who found 29 blame or responsibility stories in his search, including:

- “airlines look for blame”;
- “Met. Office got it wrong”;
- “airline fury”;
- “pandemic of panic”;
- “Met. Office photos didn’t exist”;
- “restrictions unnecessary”;
- “our reaction a shambles.”

The effect of this frame was soon reflected in letters written to various newspapers. For example, on 20 April, a letter written by a “pilot with 15 years of experience” appeared in The Sun on page 19. The letter argued that tens of thousands of planes were likely to have flown through ash during the “last 50 years” and that, although thick ash was a huge risk, “thin ash had not proven to be a serious risk.” The writer argued that volcanic ash had not yet claimed a life due to the skill of crews. The writer would have “kept planes flying and gladly flown in them”, and demanded:

better facts, proper science and solid risk analysis.

The letter finished by claiming that, if the issue of risk is taken to its ultimate conclusion, then “placing 300 passengers in a metal tube 30 000 ft above ground would not happen.” Such sentiments even entered the scientific literature (see Appendix). The question is: why and how does such framing occur, and what can we do about it?

The uncertainty slant during the Eyjafjallajökull eruption

During the Eyjafjallajökull eruption, uncertainty was turned from a necessary consideration of error, or incomplete constraint of a scientific problem, into evidence that the responsible agencies were “too cautious” (The Times, 20 April, p. 3). This was exaggerated by the fact that the same agencies were dealing with apparently simple questions that would have been perceived as easy to answer, such as (The Times, 20 April, p. 3),

- “where is the ash cloud?”,
- “when will the eruption end?”,
- “when will flights resume?”, or (Daily Mirror, 22 April, p. 5):
- “why did it take 6 days (to reopen airspace)?”

The problem was exacerbated by the readership being faced with regular images of impressive ash plumes rising above Eyjafjallajökull and widespread use of evocative words such as “black”, “gigantic”, and “menacing” to describe the cloud (Harris et al. 2012). As a result, statements to the effect that the ash often seemed “not too bad” but, because it was caught in a high-pressure system, it was constantly “swirling around” (The Times, 22 April, p. 71) were likely difficult for the readership to comprehend. This simply did not match what they were seeing in the skies above them and on the front pages in front of them. Readers were instead familiar with the problems and loss faced by those viewed as “stranded”, this being the top word used by both The Sun and The Times with a total word count of 139 (Harris et al. 2012). To use the words published in the press examined here, they were stranded by an invisible but “black”, “menacing”, “swirling” mass.

Newspaper reporting of storm Dirk and flooding of Morlaix

Météo France has a four-color warning system for severe weather and floods running from green (i.e., no warning in place), through yellow and orange, to red. Orange means “remain very vigilant” (Météo France 2011). On 23 December 2013, an article appeared on page 3 of Ouest France giving an orange weather warning and stating that strong winds, heavy rain, high waves and littoral flooding were likely. Although such a warning advises “caution … … … above all, next to the sea”, no inland flood warning was printed. During the night of 23–24 December, the Breton town of Morlaix was flooded up to a depth of 1.4 m, with flooding beginning around 03:30 am (all times are local, GMT+1) on 24 December (Ouest France, 26 December, p. 6). Due to confidence in the forecast, many businesses and households had not implemented flood protection measures that would otherwise have been installed (Le Figaro, 27 December, p. 8). As a result, on 25 December, La Montagne published a back page report entitled “Torrential flood surprises inhabitants of Morlaix in the heart of the night.” In the report, it was stated that the flood warning at 02:00 am on 24 December was still green. Thus, the report continued, the area had been alerted to the storm, but not to the possibility of flooding. Flooding was widespread across Brittany during the night of 23–24 December, but Ouest France (26 December 2013) reported that the town of Quimperlé was still at level yellow on the evening of 23 December, and only on the morning of 24 December was the level increased to orange, by which time the situation was “already at level red.” The alert was also “late” in Quimper. All of the problems were argued to result from the fact that the weather forecast was “too optimistic” in regard to rainfall (Ouest France, 26 December, p. 6).

On 27 December, Le Figaro ran a report entitled, “DIRK: state services called into question”. Placed on page 8, it stated that the Breton population was “angry” after being left without information during the storm. The report went on to point out that the flood warning map had been “erroneous.” On the same day, Ouest France carried a page 6 report with a similar title, “Floods: the alert system called into question.” The report pointed out that both Météo France and the regional flood monitoring agency (Vigicrue) had kept the warning level at green through 06:00 am on 24 December. The Minister for the Interior was quoted as saying that it was “necessary to work on better prevention measures” and to “review the alert system for floods.” These sentiments were echoed in Le Monde where it was claimed that the state services had committed an “error of appreciation” (Le Monde, 28 December, p. 10).

These are words, phrases, reactions, and expectations not too dissimilar to those printed during the Eyjafjallajökull eruption. In regard to expectations, there appears to be a belief that a failsafe warning can be provided in plenty of time, all of the time; and that if there is no warning, or a poor forecast occurs, then those who are part of the response system become responsible for the event and all losses incurred (see Part 1). This unquestioning faith in the certainty of the warning parallels the 1997 Grand Forks flood disaster (USA), when complete confidence in the ability of flood protection measures was “transferred into certainty in the National Weather Service forecast” (Morss 2010). In the case of Morlaix, uncertainty on the forecast was not given. The result, though, was the same as for the Eyjafjallajökull event: forecasters (in this case Météo France) working with the natural phenomenon were viewed as “dysfunctional” (Ouest France, 27 December, pp. 7 and 9).

Michael Fish and the “great storm” of 1987

In many ways, the Morlaix example mimics the famous “Michael Fish case” of 1987. On 15 October 1987, Fish (a well-known British Broadcasting Corporation (BBC) weatherman) stated, during the BBC 1 lunchtime (12:55 GMT) weather forecast, that (http://www.youtube.com/watch?v=uqs1YXfdtGE):

earlier on today apparently a woman rang the BBC and said she’d heard there was a hurricane on the way. Well, if you are watching; don’t worry, there isn’t … … …

The next day, the deepest depression to hit the UK in at least 150 years swept across southern Britain (Burt and Mansfield 1988). Due to the forecast miscommunication and the resulting storm impact, on 17 October 1987 The Daily Mirror ran a front page headline:

Fury at weathermen as 17 people die, WHY DIDN’T THEY WARN US?

The report began with the line “What’s the point of having weathermen if they can’t even warn us a hurricane is on the way?” Subsequently, Houghton (1988) pointed out that warnings based on forecast models were given to the police, fire service, rail network, and airports (Morris and Gadd 1988). However, Houghton (1988) also wrote that,

by Sunday, the papers, still looking for a scapegoat to blame for all the damage, were looking for stories which concentrated on the personalities involved.

As a result, a well-attended press conference was held at the London weather center in which the reality of the forecasts, uncertainty, and how unusual the storm had been were pointed out. Subsequently, the “whole tone of the press” became “more favorable” (Houghton 1988).

Newspaper response to forecasts during St. Jude

In the case of St. Jude, a correct forecast was widely applauded, with The Sun printing (29 October 2013, p. 4) “The Met Office got this one right.” The response, which included a blanket train cancelation, was clearly necessary. More than 200 trees were removed from railway lines, around 40 of which had been blocked, with staff shortages meaning there was insufficient manpower to clear the lines quickly (The Daily Express, 29 October 2013, p. 2–3). South West Trains thus stated (The Times, 29 October 2013, p. 6), “If we had gone ahead with normal services, people would have been stuck on trains, and we would have (had) trains and crews stranded all over the place.” The chief executive of the rail customer watchdog added,

It’s too early to tell if the industry made the right call when cancelling so many services, but the fact that major incidents have been avoided is good news.

We even had the headline (The Times, 29 October 2013, p. 7), “Advance warnings kept storm bill down to estimated £1.5 bn.” However, spread across pages 6 and 7 of the Daily Mail we still have the banner headline, “Fury of the stranded commuters.” In this report, we find (Daily Mail, 29 October 2013, p. 6):

Millions suffer as trains and roads are hit by the storm

… … … Last night angry commuters said they had not been given enough warning about the cancellations. Forums and message boards were flooded with comments, with some people complaining that rail companies had been giving confusing and unreliable advice about the services they were running. Others accused rail bosses of overreacting by cancelling rail services in sunny parts of the country.

Note again the expectation that a precise “warning” will be made well in advance of the event. The report also contained several quotes from passengers including, “Sitting at the station in sunny Leicester and pretty much every train has been cancelled due to severe weather;” and “Opposite of the British Bulldog spirit. Flights on. Buses on, but trains all cancelled on Southern Railways lines. Overcautious!”

This example raises the problem that, during the event, responding actors may be too busy with their role in the chain of response to construct and deliver information to those impacted by the event. Unfortunately, the result is again a newspaper frame of “confusion” and “unreliability” for the responding group. As a result, however good the forecast and response, we still see claims of “over-caution” and “overreaction”, which in turn feed “anger”, “fury”, and “accusation.”

What is the popular press response?

In the cases of storm Dirk and Michael Fish, “anger” or “fury” was the immediate reaction to the two forecasts and the impact of the ensuing event. Such a reaction may be expected because incorrect forecasts for both events were delivered with an air of certainty. In contrast, forecasts made during the Eyjafjallajökull eruption were delivered with a degree of uncertainty. However, in the word dictionaries created for Eyjafjallajökull by Harris et al. (2012) from all reports appearing in The Times and The Sun we find the words “anger” or “angry” appearing 23 times, with “fury” and “furious” appearing 14 times: 37 occurrences in total, or between three and four “angry” or “furious” responses per day over the 10-day study period. Such an “anger emotion family” has been found to be the reaction associated with an event whose outcome is judged as unfair or unjust in terms of the impacted stakeholder (Mikula et al. 1998). Unfortunately, it is an easy emotion to generate during widespread loss (see Part 1 of this review), and is a natural response among groups whose goals are blocked by an external force. The anger emotion family will thus not necessarily be triggered by reading about “anger” or “fury” in the newspapers, but, for those not involved in the event, generation of anger may be exactly what the press wants. As Curran (2010) argues, a tabloid-driven dynamic began in the 1970s, and prevailed throughout the 2000s, to make readers “angry”, “indignant”, or “cross”. This strategy was designed to win, and keep, readers (Curran 2010).

Uncertainty: filtering and communication

One problem lies in expectation. That is, we need to ask what each stakeholder expects from the forecast in regard to the risks each faces. Nelkin (2003) lays the problem out nicely,

People perceive risks through different ‘frames’ that reflect their values, world views, and concepts of social order. These frames can influence definitions of risk, allocations of responsibility and blame, evaluations of scientific evidence and ideas about appropriate decision-making authority. Is risk to be defined as a technical matter to be resolved by measuring the extent of harm? A bureaucratic issue of appropriate regulatory mechanisms and jurisdictions? An economic question of allocating costs and benefits? A political issue involving consumer choice and control? A moral issue involving questions of social responsibility, religious values, equity and rights?

A similar disconnect was found by Jardine and Hrude (1997) who suggested that terminologies used by risk practitioners have different technical and colloquial meanings that result in mixed “messages.” For example, risk may mean danger, venture or opportunity colloquially; but hazard, probability or consequence technically, and chance or uncertainty for the insurance business. Consequently, a risk forecast will be interpreted and used differently by each stakeholder. The result is what Jardine and Hrude (1997) termed “unnecessary confusion”.

Thus, we need to understand the language or syntax of forecast and uncertainty, and then the meaning of that language as used by each stakeholder. There will be several stakeholders involved in the crisis or emergency, including scientists, forecasters, hazard managers, responsible government agencies, politicians, businesses, media, and the public. All will be interacting with each other and each will have their own expectations. This complex interaction will further influence the perception and application of uncertainty, potentially corrupting its use for political or business gain (Cornell and Jackson 2013). However, to begin to understand the communication of uncertainty during a crisis, we first need to understand the role of the forecast in the decision-making process. During emergencies, forecast and uncertainty will pass through, and be modified by, filters applied during the decision-making process, especially application of cost-benefit analysis and precaution.

Filtering the decision I: role of cost-benefit analysis

Cost-benefit analysis (CBA) allows regulators to “tally up the benefits of regulations and its costs, and choose the approach that maximizes the net benefits,” so that regulators should “proceed if the benefits exceed the costs, but not otherwise” (Sunstein 2005). Sunstein (2005) continues, “if poor people stand to gain from regulatory protection, such protection might be worthwhile even if rich people stand to lose somewhat more”, with CBA providing a clearer sense of the “stakes” involved in enforcing regulation. In terms of such a CBA-based approach, Arrow and Fisher (1974) pointed out that, “any discussion of public policy in the face of uncertainty must come to grips with the problem of determining an appropriate attitude toward risk on the part of the policy maker.” In their opinion, the expected benefits of an irreversible decision should be “adjusted to reflect the loss of options it entails” (Arrow and Fisher 1974). Arrow et al. (1996) followed up by arguing that CBA has “a potentially important role to play in helping inform regulatory decision-making”, while recognizing that “it should not be the sole basis for such decision-making.”

CBA is a classic approach applied in economics, as originally proposed by Dupuit (1844). Arrow et al. (1996) argued that the role of CBA is to compare favorable and unfavorable effects of policies, and that it should be required for all major regulatory decisions. Their conclusion was that, “CBA analysis can play an important role in legislative and regulatory policy debates on protecting and improving health, safety, and the natural environment.” CBA has since been endorsed by the Commission of the European Communities, which stated that, “the protection of health takes precedence over economic considerations” (European Community 2000). In this sense, CBA is proposed not on the basis of economic efficiency, but to assist in accounting for and thinking about risks (Mandel and Gathii 2006). While Woo (2008), for example, assessed the ability of CBA to set probabilistic criteria for evacuation decisions during volcanic crises, Marzocchi and Woo (2007) explored the potential of CBA in assessing the costs versus proposed mitigation measures and levels of “acceptable risk” during a volcanic eruption. Marzocchi and Woo (2009) concluded that their approach “enabled volcanologists to apply all of their scientific knowledge and observational information to assist authorities in quantifying the positive and negative risk implications of any decision.” Sunstein (2005) thus supported CBA for its ability to “produce useful information” and “increase the coherence of programs that would otherwise be a product of some combination of fear, neglect, and interest group power.”

However, for Mandel and Gathii (2006), consideration of future benefits and costs raises a temporal quagmire. How, for example, do we treat deaths? One way is to apply the willingness to pay (WTP) framework, which estimates the value of statistical life (VOSL). This can be derived by taking into account individuals’ own WTP for a reduction in the risk of death (Covey 2001). Within this architecture, if an ash cloud encounter caused an Airbus A320-200 to go down at maximum capacity, we could lose 180 passengers plus four crew members. If we use a VOSL value of US$ 200,000, as used by Arrow et al. (1996), then this amounts to a WTP of US$ 37 million for one incident. If we use the VOSL value used by the US Environmental Protection Agency of US$ 6.1 million (Sunstein 2005), then this increases to US$ 1.1 billion. These estimates compare with the US$ 693 million cost estimated by Čavka and Čokorilo (2012) for catastrophic loss of an Airbus A320. The Čavka and Čokorilo (2012) estimate also includes costs of loss of aircraft, delay and closure, staff investment, baggage and increased insurance, as well as search and rescue, site clear-up, investigation costs, and loss of investment income. Brownbill (1984) estimated that the total cost of aircraft accidents in Australia in 1980 was approximately US$ 27 million. These values compare with the US$ 2.4 billion loss to the airline industry due to the airspace closures forced by Eyjafjallajökull’s eruption during April and May 2010, plus a US$ 4.1 billion loss in market value (Ragnao et al. 2011). We can add to this the financial losses experienced by passengers, which were likely between US$ 0.3 and 8 billion (Harris et al. 2012). Then there is the US$ 640 million per day of economic losses due to reduced productivity because of stranded workforce (Harris et al. 2012), which over the 6 days of airspace closure amounts to US$ 3.8 billion.

Such financial losses by airlines were covered at length on a daily basis in the newspapers, as were human interest stories of individual personal financial loss (Harris et al. 2012). However, the cost of a single airliner loss was not. The question is thus, was the role of CBA appropriately communicated during the events reviewed here?
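The arithmetic behind the WTP and productivity figures quoted above can be checked in a few lines. The following is an illustrative sketch only: the input values are those cited in the text, while the function and variable names are my own and do not come from any of the cited studies.

```python
# Worked check of the willingness-to-pay (WTP) figures quoted in the text.
# Input values are taken from the text; all names are illustrative.

PASSENGERS = 180                   # Airbus A320-200 at maximum capacity
CREW = 4
VOSL_ARROW = 200_000               # US$, value used by Arrow et al. (1996)
VOSL_EPA = 6_100_000               # US$, US EPA value cited by Sunstein (2005)
DAILY_PRODUCTIVITY_LOSS = 640e6    # US$ per day (Harris et al. 2012)
CLOSURE_DAYS = 6


def wtp_for_incident(vosl, lives):
    """Return the WTP (US$) implied by a VOSL for a given number of lives."""
    return vosl * lives


lives_at_risk = PASSENGERS + CREW
wtp_arrow = wtp_for_incident(VOSL_ARROW, lives_at_risk)
wtp_epa = wtp_for_incident(VOSL_EPA, lives_at_risk)
productivity_loss = DAILY_PRODUCTIVITY_LOSS * CLOSURE_DAYS

print(f"Lives at risk: {lives_at_risk}")
print(f"WTP (Arrow et al. VOSL): US$ {wtp_arrow / 1e6:.1f} million")
print(f"WTP (EPA VOSL): US$ {wtp_epa / 1e9:.2f} billion")
print(f"Productivity loss over closure: US$ {productivity_loss / 1e9:.2f} billion")
```

Rounded, these reproduce the US$ 37 million, US$ 1.1 billion, and US$ 3.8 billion values given in the text. The roughly thirty-fold spread between the two WTP values comes entirely from the choice of VOSL.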

Arrow et al. (1996) suggested that “benefits and costs of proposed policies should be quantified wherever possible” and that “best estimates should be presented along with a description of the uncertainty.” They added that favorable and unfavorable effects of policies must be considered. However, during Eyjafjallajökull and Dirk, costs were covered by the press in terms of financial loss to businesses and individuals. Only for St. Jude were benefits covered. One of the few statements of benefit I could find during Eyjafjallajökull was in a letter that appeared in The Sun on 21 April 2010 (p. 47) in which the writer argued that people should not complain because if a plane went down “they’d all be dead,” so for once we should applaud the Government for “doing their job right”. A communication blueprint that ensures that the costs and benefits of the action are clearly stated, ideally in numeric terms, thus seems a logical action during an environmental disaster.

Filtering the decision II: the precautionary principle

The precautionary principle (PP) has a long history in influencing policy and decision-making in the UK and Europe, having entered the language of environmental policy in Britain in the mid-1980s (Haigh 1994). Sunstein (2005) opens his book with the following definition of PP:

All over the world, there is increasing interest in a simple idea for the regulation of risk: In case of doubt, follow the Precautionary Principle. Avoid steps that will create a risk of harm. Until safety is established, be cautious … … … In a catchphrase: Better safe than sorry.

Thus Sachs (2011) argues that PP “can provide a valuable framework for preventing harm to human health and the environment.” As such, PP can be used in many domains including business, health, and hazard (e.g., Raffensperger and Jackson 1999; Faunce et al. 2008). It requires any precautionary action to be “cost effective” and is applied to risks where there is a “lack of full scientific certainty” (Marchant et al. 2013). However, there is much ambiguity over the definition of PP, there being dozens of different definitions and differences in the understanding of the intended purpose and status of PP (Marchant et al. 2013). Adams (2002) concurs that PP is “vague and ill-defined”, but suggests that there are six main ingredients to its application; where PP should be applied if:

1. A causal link to effects is unclear;
2. Scientific evidence does not yet exist;
3. There is no scientific evidence;
4. Cost is a factor;
5. The scale of the threat is a factor;
6. There is a diversity of situations to be accounted for.

Adams (2002) adds that “the unifying factor is that the handling of inconclusive knowledge, i.e. uncertainty, is central to PP.” Peel (2005) adds that, at the heart of PP is a concern whether uncertain scientific knowledge can be used to “describe comprehensively, and predict accurately, threats to human health and the environment.” Van den Belt (2003) and Ricci et al. (2003) attempt to clarify PP in the context of dealing with environmental hazards by taking the text from the Rio Declaration on environment and development of 1992 (Article 15):

The precautionary approach shall be widely applied by states according to their capabilities. Where there are threats of serious or irreversible damage, lack of full scientific certainty shall not be used as a reason for postponing cost-effective measures to prevent environmental degradation (http://www.unep.org/Documents.multilingual/Default.asp?DocumentID=78&ArticleID=1163).

Andorno (2004) went on to argue that,

The greatest merit of the precautionary principle is that it has succeeded to reflect the current public concern about the need to favor the protection of the public health and the environment over short term commercial interests.

Judging what is an “acceptable” level of threat to society is an eminently political responsibility (Graham and Hsia 2002). As a result, in 2000, the Commission of the European Communities issued a communication to clarify its approach in using PP and to establish guidelines for its application (European Community 2000). The document, applauded by Foster et al. (2000) because it stated how science rests in the decision-making process, argued that PP should be applied if preliminary objective scientific evaluation indicates reasonable grounds for concern over potentially dangerous effects on human health. Relevantly, the document stated,

The precautionary principle, which is essentially used by decision-makers in the management of risk, should not be confused with the element of caution that scientists apply in their assessment of scientific data;

but,

Recourse to the precautionary principle presupposes that potentially dangerous effects deriving from a phenomenon, product or process have been identified, and that scientific evaluation does not allow the risk to be determined with sufficient certainty.

The European Community (2000) thus argued that,

when there are reasonable grounds for concern that potential hazards may affect the environment or human, animal and plant health, and when at the same time the available data preclude a detailed risk evaluation, the precautionary principle has been politically accepted as a risk management strategy.

Similar sentiments have been laid out by, for example, the Intergovernmental Panel on Climate Change (Pachauri et al. 2000), the UK Government (ILGRA 2002) and UNESCO (COMSET 2005). As modified from circulars from European Community (2000) and Foster et al. (2000), the PP guidelines reduce to:

1. Proportionality: measures must not be disproportionate to the desired level of protection, but cannot aim at zero risk;
2. Nondiscrimination: comparable situations cannot be treated differently and different situations cannot be treated in the same way;
3. Consistency: measures should be comparable in nature and scope with measures already taken in equivalent areas in which all the scientific data are available;
4. CBA: examination of the benefits or cost of action, or lack of action, should include a cost-benefit analysis;
5. Scientific developments: initial assessments must be viewed as provisional in nature, pending availability of more reliable data, instrument deployment, analysis, interpretation, and reporting so as to obtain a more complete and updated assessment.

Clearly, within this approach, the scientist and forecaster have no responsibility for making any decision. Their role is purely supportive and advisory in the decision-making process. As Andorno (2004) writes:

Although the precautionary principle operates in the context of scientific uncertainty, it should be applied only when, on the basis of the best scientific advice available, there is good reason to believe that harmful effects might occur to public health or to the environment.

Within this framework, Gollier et al. (2000) argued that greater levels of uncertainty should induce the decision-maker to favor more conservative measures today, but to then reconsider options in the future. Thus, decisions made even in the recent past should not influence the current response.

PP is not without its detractors (Sandin et al. 2002). Sunstein (2002) argued that the “problem with PP is not that it leads in the wrong direction, but that, if taken for all it is worth, it leads in no direction at all.” Van den Belt (2003) also argued that, because the “slightest indication that a particular product or activity might possibly produce some harm to human health or the environment will suffice to invoke the principle”, the principle reduces to an “absurdity”. Mandel and Gathii (2006) pointed out that forms of PP range from relatively “weak” constructions (e.g., a lack of decisive evidence of harm should not be grounds for “refusing to regulate”) to “strong” (e.g., action should be taken to correct a problem as soon as there is evidence that harm may occur). Sunstein (2005) concluded that both forms are useless. While weak forms simply state a truism, namely that governments cannot require absolute certainty that harm will occur, the strong form prohibits all actions and so is totally paralyzing. Hahn and Sunstein (2005) added to these sentiments, writing, “taken seriously, it can be paralyzing, providing no direction at all.” At the same time, Andorno (2004) pointed out that, “the line between a reasonable precaution and an excessive precaution is very thin and allows a wide margin of appreciation to decision makers.”

Ricci et al. (2003) added a question regarding legal, scientific, and probabilistic implications of updating past information when the state of information increases “because a failure to update can result in regretting past choices.” Goldstein and Carruth (2004) went further, arguing that PP can inherently restrict obtaining and using science so that, if we are to maximize the value of PP, “it is crucial that its impact does not adversely affect the potent preventive role of science and technology.” Thus, Cameron (2006) listed the seven most frequent criticisms of PP as being:

1. Excessive discretion;
2. Reversal of the burden of proof;
3. Distortion of regulatory priorities;
4. Stifling of technological innovation and paralysis of development;
5. Costs of precautionary measures, while discounting the benefits;
6. Misuse as a protectionist barrier;
7. Perverse consequences from precautionary measures.

To sum up, PP causes governments to err on the side of caution in decision-making, especially when uncertainties are large (Goldstein and Carruth 2004). Chakraborty (2011) argued that during the Eyjafjallajökull eruption “the Civil Aviation Authority applied a zero-tolerance policy in regards to aircraft operations through volcanic ash.” Indeed, PP has emerged as one of the main regulatory tools of European Union environmental and health policy with important ramifications for member state policies (Löfstedt 2002; Wiener and Rogers 2002; Balzano and Sheppard 2002).

CBA and PP: operational constraints

CBA and PP are the frameworks and constraints within which scientists and forecasters have to operate and communicate during times of crisis. There is an immense amount of uncertainty on any forecast, so that PP—being uncertainty driven—will always be applied. This explains the widespread use of the words “cautious”, “overcautious”, and “too cautious” in the word dictionaries created for The Times and The Sun during Eyjafjallajökull’s eruption by Harris et al. (2012), in which the word “cautious” appears at least 20 times. In contrast, the word “uncertainty” appears just once (in The Sun dictionary). Even the report of an aircraft ash encounter in The Sun, as cited above, ended with the quote “it’s fair to say we’ve been too cautious.” The conclusion of Viens (2011) here seems appropriate,

given the level and extent of normative uncertainty during times of emergency, risk regulation should devote more attention to the question: what one ought to do when one does not know what to do?

Because there will always be uncertainty, more flexible approaches in forecast provision and communication of the risks involved with the associated hazard have been advocated. In terms of uncertainty and application of PP, Funtowicz and Ravetz (1990) set up the problem by stating that “the traditional assumption of the certainty of all quantitative information” needs to be recognized as “unrealistic and counterproductive.” They argued that the problem originates from an inappropriate conception and meaning of numbers in relation to the natural and social worlds, where “an uncertain quantity” can be conceived as an “incorrect fact”. However, care needs to be taken because data expressed as a string of digits presents a spurious appearance of accuracy (Funtowicz and Ravetz 1990). Ruckelshaus (1984) added that risk calculations must be expressed as distributions of estimates or ranges of probability. But, results also need to be put into perspective, and provision of “magic numbers” that can be “manipulated without regard to what they really mean” (Ruckelshaus 1984) needs to be avoided. This is a well-recognized problem, where the “red book” produced by the Committee on the Institutional Means for Assessment of Risks to Public Health (CIMARPH 1983) stated that “when scientific uncertainty is encountered in the risk assessment process, inferential bridges are needed to allow the process to continue.”
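The point made by Funtowicz and Ravetz (1990) and Ruckelshaus (1984), that a long string of digits suggests an accuracy the estimate does not have, can be illustrated with a short sketch. It assumes one common reporting convention (keep one significant digit of the uncertainty and round the estimate to match), which is my choice for illustration and is not prescribed by either source. The numbers are hypothetical.

```python
import math


def round_to_uncertainty(value, uncertainty):
    """Round an estimate so it carries no more digits than its uncertainty supports.

    The uncertainty is kept to one significant digit and the estimate is
    rounded to the same decimal place (an assumed reporting convention).
    """
    if uncertainty <= 0:
        raise ValueError("uncertainty must be positive")
    # Decimal position of the leading digit of the uncertainty.
    decimals = -math.floor(math.log10(uncertainty))
    return round(value, decimals), round(uncertainty, decimals)


def as_range(value, uncertainty):
    """Return the (low, high) range implied by an estimate and its uncertainty."""
    v, u = round_to_uncertainty(value, uncertainty)
    return v - u, v + u


# Hypothetical numbers, chosen only to show the effect.
raw_estimate = 1234.5678
raw_uncertainty = 40.0
low, high = as_range(raw_estimate, raw_uncertainty)
print(f"Raw string of digits: {raw_estimate}")
print(f"Reported range: {low:.0f} to {high:.0f}")
```

Reporting the range rather than the raw value removes the spurious digits, although it does not by itself put the result into perspective, which Ruckelshaus (1984) also asks for.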

Meaning and language of uncertainty during emergencies

To the scientist, uncertainty on a measurement stems from many components, which Taylor and Kuyatt (1994) group into “those which (can be) evaluated by statistical methods” and “those which (can be) evaluated by other means”, so as to cause random and systematic errors. They go on to state that “the nature of an uncertainty component is conditioned by the use made of the corresponding quantity” and by “how the quantity appears in the mathematical process that describes the measurement process.” Thus, in science, uncertainty is a quantitative error statement which may be expressed using statistical assessments of variation in a measurement (e.g., Grabe 2001). Consequently, the measurement, result, projection, or forecast can be expressed in terms of (Grabe 2005):

estimator ± uncertainty

This defines “the result of a measurement” which is “required to localize the true value” of the quantity being measured (Grabe 2005). In support of this definition, Taylor (1997) writes, “error analysis is the study and evaluation of uncertainty in measurement. Experience has shown that no measurement, however carefully made, can be completely free of uncertainties.” However, such simple definitions of uncertainty can only apply to a single measurement made in isolation and then interpreted by the scientist who made it. Uncertainty on model results and forecasts supplied to decision-making chains is more complex (Fig. 2).
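For the simple, single-quantity case, a result stated as an estimator with its uncertainty can be sketched as follows. This is a minimal illustration of the statistically evaluated component only, using the sample mean as the estimator and the standard deviation of the mean as its uncertainty. Components “evaluated by other means” (systematic effects) are not included, and the readings are hypothetical.

```python
import math
import statistics


def estimator_and_uncertainty(readings):
    """Return (mean, standard uncertainty of the mean) for repeated readings.

    Only the statistically evaluated (random) component is covered here;
    systematic components would have to be assessed separately.
    """
    n = len(readings)
    if n < 2:
        raise ValueError("at least two readings are needed")
    mean = statistics.mean(readings)
    # Sample standard deviation divided by sqrt(n) gives the
    # standard deviation of the mean.
    std_uncertainty = statistics.stdev(readings) / math.sqrt(n)
    return mean, std_uncertainty


# Hypothetical repeated readings of the same quantity (arbitrary units).
readings = [10.1, 9.9, 10.0, 10.2, 9.8]
mean, u = estimator_and_uncertainty(readings)
print(f"Result: {mean:.2f} ± {u:.2f}")
```

The contrast with a forecast is that here every contribution to the uncertainty sits within one measurement made by one person, whereas a forecast inherits uncertainty from each stage of the chain that produced it.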

Fig. 2 The uncertainty cascade: an attempt to define the processing chain between event occurrence and forecast delivery. While the top row gives the objects of the chain, the second lists some of the uncertainties impinging on each object [following Wynne (1992) and Spiegelhalter and Riesch (2011)]. An external (to the official response chain) feedback into the uncertainty process is indeterminacy. This may be introduced by the press and other actors impacted by the forecast. There will also be those involved in the uncertainty trough, these being alienated parties (Shackley and Wynne 1995). These two effects may not always apply, so are linked with dashed lines. At the bottom, I qualitatively assess the integrated uncertainty on the forecast in terms of multiplication of the uncertainty objects defined in the top row, these being: (i) unpredictability of the event (UCO 1); (ii) model input limits (UCO 2a), and the result of feeding unreliable results from one model to the next (UCO 2b); (iii) inadequacy in knowledge and ability to make basic measurements (UCO 3); and (iv) the impact of unexpected events (UCO 4). The latter effect may not always come into play, so is depicted as a top row detour. The press and industrial or business influences (UCO A), as well as maverick and rival scientific influences (UCO B), may also contribute to the uncertainty of the forecast. The result is a complex and hard-to-quantify uncertainty value on the forecast.

Definition and application of uncertainty during the decision-making process

Walker et al. (2003) defined uncertainty as “any deviation from the unachievable ideal of completely deterministic knowledge of the relevant system.” Moss and Schneider (2000) argued,

The term ‘uncertainty’ can range in implication from a lack of absolute sureness to such vagueness as to preclude anything more than informed guesses or speculation. Sometimes uncertainty results from a lack of information, and on other occasions it is caused by disagreement about what is known or even knowable. Some categories of uncertainty are amenable to quantification, while other kinds cannot be sensibly expressed in terms of probabilities.

In terms of the decision-making process for a population at risk, the European Community (2000) is more specific, stating:

Scientific uncertainty results usually from five characteristics of the scientific method: (i) the variable chosen, (ii) the measurements made, (iii) the samples drawn, (iv) the models used and (v) the causal relationship employed.

The same document also points out that scientific uncertainty may also arise from controversy. Uncertainty may thus relate to both qualitative and quantitative elements of the analysis. Within this system, Wynne (1992) defined four different kinds of uncertainty, which increase in scale as we move down the list:

1. RISK: where we know the odds;
2. UNCERTAINTY: where we do not know the odds, but may know the main parameters which can be used to reduce uncertainty;
3. IGNORANCE: complete ignorance, where we just “don’t know what we don’t know”; and
4. INDETERMINACY: causal chains and open networks that produce results and feedbacks that cannot be predicted.

In terms of forecasting, Shubik (1954) made the pertinent point that “the more and the better are one’s data on the past, the more chance one has of picking a good law for predicting the future.” Following Shubik (1954), we may add that the amount of information regarding the future state of factors influencing event progression will decrease as the time period separating the forecast from the event lengthens, meaning that ignorance, and hence also uncertainty, will increase with forecast time period. Epstein (1980) makes this point well, stating that “typically a decision must be made in period 1 subject to uncertainty about the environment that will prevail in period 2.” At the start of period 2, the state of the environment becomes known. Epstein (1980) argues that such a logical temporal progression is impossible in a situation where n > 1 decisions have to be made simultaneously during an event, when those decisions actually need to be made sequentially, subject to improving information. The same problem will be true for events for which we have little past experience. Stirling (2007) sums up well, pointing out that, due to ignorance, “neither probabilities nor outcomes can be fully characterized”, especially for events that are new and have no precedent. This was very much the case for Eyjafjallajökull.

In terms of indeterminacy, actors in the chain may intercede in an attempt to change the forecast or its basis. Shubik (1954) provides another good example,

stock market prediction published in the newspaper may influence many people to change their intended actions and thus help to make the forecast a reality.

Indeterminacy may also result from the choice of words used to present uncertainty, subjective judgments and scientific disagreement in public (Moss and Schneider 2000), plus detrimental comments, and actions, of impacted stakeholders. This then reduces confidence in the forecast, thereby introducing a form of “qualitative uncertainty.” An example of such an instance can be found during the Eyjafjallajökull eruption when a report in The Times on 20 April 2010 cited the International Air Transport Association as criticizing a “reliance” on “theoretical modeling”. There were many other such comments from airline industry stakeholders which contributed to qualitative indeterminacy.

These multiple and complex components of uncertainty involved when communicating modeling and forecasting results during environmental disasters will all be overlain on each other. The range of uncertainty will span from small, if just risk is involved, to large, if we have complete ignorance and high degrees of indeterminacy (Wynne 1992; Funtowicz and Ravetz 1990). Forecasting involves all of the uncertainty types listed above. Thus, uncertainty on a cloud forecast, whether it be volcanic or meteorological, is, by definition, as large as it possibly can be. Spiegelhalter and Riesch (2011) add to the problem. They identified five objects on which there will be uncertainty when conducting a model-based risk analysis:

1. The event, which is essentially unpredictable;

2. Parameters within models, which suffer from limitations in terms of input information, availability of real-time data and ability to physically parameterize the natural process;

3. Alternative model structures, that may reveal limitations in the formalized, accepted or mandated knowledge, or which may provide contradictory or conflicting information;

4. Model inadequacy due to known limitations in understanding of the modeled system, counter-lobbies (i.e., other ideas and approaches), and other sources of indeterminacy;

5. Effects of model inadequacy due to unspecified sources, ignorance of anomalies and unexpected events, and other unknown limitations to our knowledge when modeling a highly dynamic and chaotic natural system.

There is also the issue of ambiguity whereby a claim or forecast cannot be definitively resolved or proved. When there is ambiguity, Stirling (2007) argues that reduction to a single “sound scientific” picture is neither rigorous nor rational. During the Eyjafjallajökull eruption this was a particularly pressing problem, which was set against airline industry claims that there was no ash where it had been predicted to be. For example, on 19 April 2010, The Times led with a dominant page 1 report. Occupying 78% of the front page and entitled “Brown under pressure to get Britain flying”, the report was accompanied by a picture whose caption read “A test flight carrying the BA chief executive, Willie Walsh, leaves Heathrow yesterday.” The report also stated that test flights had been carried out and no damage was reported, so that airline authorities were calling for restrictions to be lifted.

Within the uncertainty system, there will be cascading uncertainty (e.g., Pappenberger et al. 2005). In such a cascade, uncertainties will multiply rapidly as we move through the chain from measurement, through data processing to modeling, to forecast production and delivery (Fig. 2). The complex uncertainty cascade associated with the resulting forecast will be almost impossible to describe in a clear and succinct way. Some of these uncertainties may also not be easy to quantify. Take, for example, the problem of the “uncertainty trough” (e.g., Shackley and Wynne 1995). This is the situation where perceived uncertainty is high among those directly involved in knowledge production, low among users and managers, and then high again among those alienated from the source research program or institute. Lobbying by “alienated” parties may result in further uncertainty in the official forecast. A good example of this problem can be found in The Independent on 20 April 2010, in which the following line was printed,

The main criticism is that European watchdogs are using computer models of theoretical volcanic output and local wind speeds to estimate affected area, and then banning all flights.

In other words, a qualitative criticism of the forecast process added uncertainty to the result. Such indeterminacy will then feed back to reduce confidence in the forecasting process. Within the framework of Fig. 2, such qualitative uncertainty appraisals cannot be quantified, and are not helpful from the scientific perspective.
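For the quantifiable part of the cascade only, the way uncertainty accumulates from stage to stage can be illustrated with a minimal sketch. It assumes, purely for illustration, that each stage contributes an independent relative uncertainty to a multiplicative chain, so that the contributions combine in quadrature to first order. The stage names and values are hypothetical and are not taken from Fig. 2 or from any of the studies cited here; the qualitative uncertainty discussed above has no place in such a calculation.

```python
import math


def combined_relative_uncertainty(stage_uncertainties):
    """First-order combined relative uncertainty for independent
    stages in a multiplicative chain (root sum of squares)."""
    return math.sqrt(sum(u ** 2 for u in stage_uncertainties))


def cumulative_cascade(stages):
    """Return (stage name, cumulative relative uncertainty) pairs,
    one per stage, in the order the stages are traversed."""
    seen = []
    running = []
    for name, uncertainty in stages:
        seen.append(uncertainty)
        running.append((name, combined_relative_uncertainty(seen)))
    return running


# Hypothetical relative uncertainties (fractions), for illustration only.
stages = [
    ("measurement", 0.10),
    ("data processing", 0.05),
    ("modeling", 0.30),
    ("forecast delivery", 0.20),
]

for name, total in cumulative_cascade(stages):
    print(f"after {name}: {total:.2f}")
```

Under these assumptions the cumulative value can only grow or stay level as stages are added, and it is dominated by the largest single contribution. Correlated stages, or stages whose uncertainty cannot be expressed as a number, would require a different treatment.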

Donovan et al. (2012a) and Stirling (2007) collapsed these ideas into diagrams that charted the ways in which different types of knowledge regarding risk, ambiguity and ignorance can be combined in an attempt to at least understand the complex interplay of the uncertainty components that feed into the newspaper-published forecast. I have attempted to combine and build on these frameworks in Fig. 3. During a volcanic crisis, there will be many sources of uncertainty to add to the cascade, including “instrument error, model error, choice of models, processing error, interpretative error, population behavior, unknown unknowns and language issues” (Donovan et al. 2012a). Uncertainty thus not only results from measurement error but also from ignorance, especially if there is no past experience to go on. Uncertainty is then multiplied by subjective judgments, model choice and parameterization, data collection limits, lack of validation opportunities, publication of results from rival models, presentation format of the forecast itself and randomness of the event, as well as criticism, public debate and argument. The end product that arrives in the newspaper is a highly complex derivation of all preceding steps (Fig. 3). This is the product to which the readership is subjected.

Fig. 3 Attempt to place the heritage of, and complexity behind, newspaper-published event forecasts within the information-flow frameworks of Stirling (2007) and Donovan et al. (2012a). The basis of the flow is a square whose corners are defined by the four main components of uncertainty that impinge on the forecast. These uncertainty sources become less problematic toward the top left-hand corner of the uncertainty square. While thin lines link the components of the scientific preparation of a forecast, thick lines link the uncertainty chain. Unfortunately, the popular perception resulting from viewing the forecast as presented by the newspaper finds itself toward the bottom right corner of this scheme. That is, close to the ignorance component of uncertainty and thus in the most problematic corner of the scheme.

These complexities make clear communication of the forecast and its uncertainty to, and through, the newspaper a complicated issue. As Kasperson and Palmlund (1989) pointed out, “the simple fact of the matter is that we know relatively little about how best to communicate complex risk issues.” Risk communication is, itself, a “highly uncertain activity with high visibility and political stakes” (Kasperson and Palmlund 1989). Communication thus requires carefully constructed syntax. Information contained in a report appearing on page 5 of The Times on 21 April 2010, entitled “Flying into the unknown”, was the best blueprint for such a statement that I could find. The article considered the model used by the Met. Office to help forecast the cloud, stating that the model used was called ‘Name’ (Nuclear Accident Model). The report described how estimates of the ash volume being ejected into the atmosphere were fed into the model to be coupled with wind speed and structure forecasts to give the best estimate of ash location. The report pointed out that all weather models were based on probabilities rather than “fact” and added that imperfect knowledge of the plume nature and atmospheric conditions meant that predictions inevitably would not be “to the nearest inch”, but that physical measurements suggested that the Met. Office predictions were “pretty close.” However, the same report was careful to point out that airlines thought that scientists made “overcautious interpretations based on probabilistic models and very limited empirical evidence.” This actually seems to be an instance of a recognized component of the uncertainty cascade being used as evidence against the forecast. In such situations, we need more statements that clarify the forecasting process and uncertainty problem, even if we cannot avoid statements from other stakeholders that frame uncertainty in a negative way.

Uncertainty in the business world

In the business world, uncertainty is defined in a similar, but less quantitative, way. BusinessDictionary.com defines uncertainty as,

a situation where the current state of knowledge is such that (1) the order or nature of things is unknown, (2) the consequences, extent, or magnitude of circumstances, conditions, or events is unpredictable, and (3) credible probabilities to possible outcomes cannot be assigned. (http://www.businessdictionary.com/definition/uncertainty.html, downloaded 01/02/2014 20:56:22)

BusinessDictionary.com adds that, “too much uncertainty is undesirable; (but) manageable uncertainty provides the freedom to make creative decisions.” As a result, in business, uncertainty can be used to the advantage of certain business interests to frame arguments in favor of self-interest. For an individualist market system, Douglas and Wildavsky (1982) wrote that, in terms of an entrepreneur seeking to “optimize at the margins of all his transactions”, the behavior that works best in this environment “does not ignore or regret uncertainties; on the contrary, uncertainties are opportunities.” This business definition of uncertainty thus means that scientific statements of uncertainty can be converted into creative statements to the advantage of the corporate strategist. This appears to have been the case with the “no-risk” argument constructed by the airlines in the press during the Eyjafjallajökull eruption. One of many statements printed in support of this premise was a widely cited statement from one airline actor that read (The Independent, 20 April 2010, pp. 42–43),

The analysis we have done so far, alongside that from other airlines’ trial flights, provides fresh evidence that the current blanket restrictions on airspace are unnecessary. We believe airlines are best positioned to assess all available information and determine what, if any, risk exists to aircraft, crew and passengers.

This is consistent with Zehr’s (1999) warning that uncertainty can be managed by a spokesperson to achieve a specific goal. Using a series of case studies from environmental debates, Zehr (1999) argued that,

if non-scientists fail to become aware of how uncertainty works, they open themselves to manipulation by scientists and other groups and organizations that use science (and uncertainty) to their own benefit.

Although Stocking (1999) found that the newspaper typically gives equal weight to scientists and nonscientists when handling scientific issues involving uncertainty, in the case of Eyjafjallajökull far more weight was given to nonscientists (see Part 1 of this review). Such a factor enhances corporate use of uncertainty to frame a situation to their advantage. This runs into the problem presented by Kasper (1980) who argued that disparity between objective (real) and subjective (imaginary) risks creates difficulties for decision-makers and regulators due to:

1. Potential presentation by government, industry and technical experts that certain estimates are valid, to result in,

2. Erosion of trust between scientific experts and the public, which is complicated by,

3. The process of setting priorities by governmental and corporate actors, to result in,

4. A challenge for the decision-makers to explain uncertainties about the effects of their actions.

Such issues seem to have helped fuel the framing of a “crisis” situation during the Eyjafjallajökull eruption. In Part 1 of this review, I used the word “crisis” 25 times. However, Macrae (2011) argued that, during the Eyjafjallajökull eruption, “one of the most amazing aspects of this crisis was that there was a crisis at all” because “there was already regulation in place with an emphasis on safety throughout the aviation industry.” Macrae (2011) went on to argue that airlines “recognized that the first carrier to send up an airliner that then crashed would go the way of Pan Am after the Lockerbie bombing: the market would kill the company as passengers shifted to “safer” airlines.” The problem was, “the image of catastrophic engine failure that captured the imaginations in the first two days soon faded as millions of lesser disasters and conveniences surfaced,” these being the stories of suffering among the stranded (see Part 1 of this review). Thus, the Eyjafjallajökull event “was a remarkable instance of the possibility of a severe loss being set against the certainty of multiple lesser losses, a risk equation that is always difficult to manage” (Macrae 2011). As argued in Part 1, individual blame logic, as commonly applied in business, can then result in the forecasts and their uncertainty being blamed for all losses associated with the event.

Uncertainty: the popular view

For the colloquial meaning of uncertainty, the problem is well stated by Gigerenzer (2002). The second chapter of his book, “The illusion of certainty”, opens with the statement (p. 9),

The creation of certainty seems to be a fundamental tendency of human minds. The perception of simple visual objects reflects this tendency. At an unconscious level, our perceptual systems automatically transform uncertainty into certainty.

Peel (2005) adds:

For a generation growing up with television programs like ‘CSI: Crime Scene Investigation’, ‘scientific certainty’ may well seem an achievable reality, rather than an elusive fiction. Claims of scientific ‘proof’ in the media suggest that knowledge about a particular phenomenon is indisputable and universally accepted by scientists.

That the uncertainty versus certainty problem is commonly transformed into a black and white decision—unknown versus known; “yes” or “no”—is borne out in various online definitions of uncertainty. For example, the British English Dictionary and Thesaurus defines uncertainty as (http://dictionary.cambridge.org/dictionary/british/uncertainty, downloaded 01/02/2014 21:02:43),

a situation in which something is not known, or something that is not known or certain: Nothing is ever decided, and … … … uncertainty is very bad for staff morale.

The last part of this definition is of extreme concern. Such a meaning is implicit in the following line published during the second air space closure due to continued activity at Eyjafjallajökull in May 2010 (The Daily Telegraph, 10 May 2010, p. 14):

Thousands of travellers are facing uncertainty after another cloud of volcanic ash crippled services to parts of Europe and America over the weekend