Abstract

Temperate C3-grasslands are of high agricultural and ecological importance in Central Europe. Plant growth and consequently grassland yields depend strongly on water supply during the growing season, which is projected to change in the future. We therefore investigated the effect of summer drought on the water uptake of an intensively managed lowland and an extensively managed sub-alpine grassland in Switzerland. Summer drought was simulated by using transparent shelters. Standing above- and belowground biomass was sampled during three growing seasons. Soil and plant xylem waters were analyzed for oxygen (and hydrogen) stable isotope ratios, and the depths of plant water uptake were estimated by two different approaches: (1) a linear interpolation method and (2) a Bayesian calibrated mixing model. Relative to the control, aboveground biomass was reduced under drought conditions. In contrast to our expectations, lowland grassland plants subjected to summer drought were more likely (43–68 %) to rely on water in the topsoil (0–10 cm), whereas control plants relied less on the topsoil (4–37 %) and shifted to deeper soil layers (20–35 cm) during the drought period (29–48 %). Sub-alpine grassland plants did not differ significantly in uptake depth between drought and control plots during the drought period. Both approaches yielded similar results and showed that the drought treatment in the two grasslands did not induce a shift to deeper uptake depths, but rather continued or shifted water uptake to even shallower soil depths. These findings illustrate the importance of shallow soil depths for plant performance under drought conditions.

Introduction

Grasslands are pan-global agroecosystems which occur under arid, semi-arid and humid conditions and cover about 40 % of the total global land area (without Greenland and Antarctica; White et al. 2000). Most of the temperate C3-grasslands of Central Europe, especially the highly productive ones, occur in regions with a relatively high annual precipitation (>800 mm year−1) and strongly depend on sufficient water in the spring/early summer when aboveground biomass production reaches its maximum (Hopkins 2000). However, the frequency of drought periods is predicted to increase in Europe, including Switzerland, during the next decades (Christensen et al. 2007; Fischer and Schär 2010; Frei et al. 2006; Schär et al. 2004). Regional models project a decrease in summer (June, July, August) precipitation by 21–28 % for Switzerland up to 2085 (Fischer et al. 2012; CH2011 2011), with potentially severe consequences for the productivity and species composition of grassland systems.

The ecological impact of summer drought on grasslands has been tested in several precipitation manipulation experiments. However, the majority of these field studies were conducted in C4-grass dominated prairies of North America, which are already drought-driven ecosystems (e.g. Axelrod 1985; Fay et al. 2003; Frank 2007; Harper et al. 2005; Knapp et al. 2001, 2002; Nippert et al. 2007; Suttle et al. 2007; Tilman and Haddi 1992), while fewer studies have focused on C3-grasslands which are common in Europe (e.g. Gilgen and Buchmann 2009; Grime et al. 2000; Hoekstra et al. 2014; Kahmen et al. 2005; Van Ruijven and Berendse 2010; Vogel et al. 2012). In general, these studies demonstrated that under drought conditions, root growth was reduced less than shoot growth and that relatively more carbon was allocated to belowground organs (Davies and Bacon 2003; Marschner 1995; Palta and Gregory 1997). These findings are in agreement with fundamental predictions of plant ecology that assume roots grow towards deeper soil layers to improve a plant’s access to water and to avoid drought-induced low soil moisture in the top soil (Garwood and Sinclair 1979; Troughton 1957). It is, however, still unclear whether these changes in root density and distribution also lead to water uptake from deeper soil layers. In fact, several studies have shown that the presence of roots does not necessarily correlate with water uptake (Dawson and Ehleringer 1991; Kulmatiski and Beard 2013). As a consequence, the question of whether drought induces a shift to deeper water sources in grasslands remains unresolved.

The aims of this study were to identify the most important water source—in terms of soil depth—relied upon by the grassland community during drought and to determine whether drought induces a shift to deeper soil layers for water uptake. As Central European C3-grasslands vary strongly in composition and thus management with altitude (Ellenberg 2009), these grasslands might also show different responses to drought, which is predicted to differ in frequency and magnitude with altitude. Therefore, we selected two different grassland sites for our experiments, a Swiss lowland and a sub-alpine grassland (both purely C3), where we established transparent rainout shelters to simulate severe summer drought events during three consecutive growing seasons. To determine the depth of plant water uptake we used the natural abundance of stable oxygen and hydrogen isotopes (δ18O and δ2H, respectively) in soil water profiles and related these to δ18O and δ2H values of plant xylem water. We also assessed aboveground productivity and the vertical distribution of root biomass (in the last year of our experiment) to relate our stable isotope-derived data for water uptake depth to drought-induced changes in belowground carbon allocation. Overall, our study was guided by the hypothesis that plants subjected to drought explore deeper soil layers for water uptake to compensate for the low soil moisture availability in the uppermost soil layers.

Material and methods

Research sites and experimental setup

This study was performed at two grasslands in Switzerland: Chamau (CHA) and Alp Weissenstein (AWS). CHA represents an intensively managed grassland with up to six cuts per year and frequent farm manure inputs and is located in the Swiss lowlands at 393 m a.s.l. (47°12′37″N, 8°24′38″E). AWS is a sub-alpine grassland, characterized by extensive grazing, and is located at 1,978 m a.s.l. (46°34′60″N, 9°47′26″E). The soils of both sites can be classified as cambisols. CHA is located on a postglacial gravel plain, while AWS is located on a glacial moraine (Bearth et al. 1987; Roth 2006). Both sites show no or only minimal inclination. More details on the sites are given in Table 1 and in Gilgen and Buchmann (2009) and Zeeman et al. (2010).

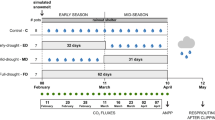

To test the effects of summer drought on plant water uptake, we established replicated 5.5 × 5.5-m control and drought plots at both sites. Plots were arranged in a randomized block design, where one block consisted of a control plot and a drought plot each. At CHA, we used seven blocks in 2009 (which had been established in 2006). In the 2009/2010 winter, we established six new blocks for 2010 and 2011. At AWS, we used five permanently established blocks from 2009 to 2011. The drought plots were covered with a 3 × 3.5-m transparent rainout shelter for 6–12 weeks in late spring/early summer (for exact dates see Table 1). The duration of the shelter period was set to create extreme drought conditions during early summer by reducing the mean annual precipitation by approximately 30 %. This level of reduced precipitation was slightly more severe than the projected 20 % decrease in precipitation for June to August for Northern Switzerland (reference period 1980–2009; CH2011 2011; Fischer et al. 2012; Table 1). The tunnel-shaped shelters were about 2.1 m high at the vertex and had two opposing open sides, oriented in the main wind direction, to minimize possible warming (Kahmen et al. 2005). The differences in air temperatures at a height of 160 cm under the shelters compared to outside were −0.03 °C at CHA and +0.1 °C at AWS (Gilgen and Buchmann 2009). However, photosynthetically active radiation was reduced at CHA and AWS by about 20 and 26 %, respectively.

All soil and plant samples were collected from a 2 × 1-m inner core area in the center of each plot which was surrounded by a buffering zone to prevent lateral water flow or precipitation inputs. Samples were collected during the growing season of each year on all of the control and treatment plots at the following time-points: (1) before the shelters were installed, i.e. during the pre-treatment period (pre-tmt period); (2) during the drought treatment (tmt period); (3) after the shelters had been removed, i.e. during the recovery (post-treatment) period (post-tmt period).

Soil moisture

Volumetric soil water content was measured continuously during all growing seasons using an EC-5 soil moisture sensor probe (Decagon Devices, Inc., Pullman, WA) at three soil depths (5, 15 and 30 cm) in at least two blocks of each control and treatment plots. Data were logged every 10 min with a CR10X data logger (Campbell Scientific Inc., Logan, UT). Gravimetric soil water content was also determined at AWS in September 2010. Briefly, soil samples were taken with a soil auger down to 40 cm and separated into 10-cm layers. These separated soil samples were put immediately upon collection into sealed plastic bags, weighed in the laboratory directly after arriving there from the field, oven-dried at 105 °C for 72 h and weighed again. Gravimetric water content was calculated as the difference between the soil fresh and dry weights (FW and DW, respectively), divided by soil DW.
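The gravimetric calculation described above can be written out as a short sketch. The function name and the example weights are hypothetical and serve only to illustrate the formula (FW − DW) / DW; they are not measured values from this study.

```python
def gravimetric_water_content(fresh_weight_g, dry_weight_g):
    """Return gravimetric water content (g water per g dry soil).

    Implements (FW - DW) / DW, where FW is the soil fresh weight and
    DW the oven-dry weight, both in the same mass unit.
    """
    if dry_weight_g <= 0:
        raise ValueError("dry weight must be positive")
    if fresh_weight_g < dry_weight_g:
        raise ValueError("fresh weight cannot be below dry weight")
    return (fresh_weight_g - dry_weight_g) / dry_weight_g


# Hypothetical sample: 125 g of fresh soil that weighs 100 g after drying.
example_gwc = gravimetric_water_content(125.0, 100.0)
print(example_gwc)  # 0.25, i.e. 25 % water relative to dry soil mass
```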

Community aboveground biomass and species composition

Aboveground biomass at the community level was harvested each time when the farmers managing the sites mowed the respective grasslands. CHA was mowed four times in 2009 (20 May, 1 July, 6 August, 2 September) and 2010 (8 May, 1 July, 25 August, 23 September) and five times in 2011 (22 April, 26 May, 15 July, 30 August, 13 October). AWS was mowed only once a year in early autumn (15 September 2010, 28 August 2011). Biomass samples were clipped using two randomly distributed frames (20 × 50 cm) and then pooled for each plot. According to the farmers’ standard practice, the cutting height was approximately 7 cm above ground. The harvested biomass was dried at 60 °C for at least 96 h and then weighed. Species composition was determined according to the Braun–Blanquet method during the pre-tmt period in the last year of the experiment (2011).

Belowground biomass

Belowground biomass in each plot (CHA: n = 6 blocks; AWS: n = 5 blocks) was sampled at soil depths ranging from 0 to 30 cm seven times throughout 2011 at CHA and six times at AWS, using a 5.5-cm diameter Eijkelkamp soil auger (Eijkelkamp Agrisearch Equipment, Giesbeek, The Netherlands). Each soil core was separated into three layers according to soil depth, i.e. 0–5, 5–15 and 15–30 cm, respectively. Samples were packed into polyethylene bags, stored immediately in a cool box, brought to the laboratory and stored in a 4 °C cool room prior to being subjected to analysis. We also sampled belowground biomass at a soil depth of 0–15 cm at high temporal resolution (n = 3, about weekly) at CHA during and after the tmt period in 2011. To make the 0–15-cm sample of belowground biomass comparable with the more stratified sampling of the soil samples, we pooled the data from soil samples collected at 0–5-cm and 5–15-cm soil depths for further temporal analysis. The cores were washed over a 1-mm mesh as quickly as possible after collection (typically within 24 h after sampling) to separate the roots, following which the roots were dried at 60 °C for at least 72 h. The DW of each sample was then determined.

Plant and soil water samples for the stable isotope analyses

The stable isotope composition of a plant’s water source was determined in the root crown, i.e. the transition zone between the root and shoot, which we collected (depending on the size of the root crowns) from two to ten individuals per species. For non-woody, herbaceous plants, the root crown has been shown to best reflect the isotopic signal of a plant’s water source (Barnard et al. 2006; Durand et al. 2007). The water sources of the most abundant species were studied: at CHA, these were Phleum pratense, Lolium multiflorum, Poa pratensis, Taraxacum officinale, Trifolium repens, Rumex obtusifolius; at AWS, these were Trisetum flavescens, Phleum rhaeticum, Carum carvi, Achillea millefolium, Rumex alpestris, Taraxacum officinale and Trifolium pratense. The root crown samples collected were cleaned of remaining soil particles with soft tissue and immediately inserted into a gas-tight 10-ml exetainer, stored in a cool box for transportation and kept frozen at −18 °C until further analysis.

To determine the stable isotopic composition of the soil water, we collected soil cores from the main rooting zone (core diameter 2 cm, soil depth 0–30 cm) from all drought and control plots concomitantly with taking root crown samples. Three replicate cores per treatment/sampling time were taken in 2009 and four replicate cores per treatment/sampling time in 2010 and 2011. In 2009 and 2010, the soil cores were separated into three 10-cm soil depth layers; in 2011, they were separated at a higher spatial resolution (soil depth layers: 0–2, 4–6, 9–11, 14–16, 19–21, 29–31 cm, with an additional sample deeper than 40 cm [CHA pre-tmt and tmt = 47.5 cm; CHA post-tmt and AWS tmt = 42.5 cm; see Electronic Supplementary Material (ESM) 1]). However, due to the fragility of dry soil, this stratification had to be adjusted for the post-tmt period at CHA and the tmt and post-tmt periods at AWS: soil samples were taken at soil depths of 0–5, 5–10, 20–25 and 30–35 cm, respectively. After separation into layers, soil samples were transferred to gas-tight 10-ml exetainers and stored at −18 °C until further analysis. Water from all root crown and soil samples was extracted by cryogenic vacuum distillation, following the method described in Ehleringer and Osmond (1989).

Stable isotope analyses

The δ18O and δ2H (only in 2011) of extracted water samples were analyzed with a high-temperature conversion/elemental analyzer coupled with a DeltaplusXP isotope ratio mass spectrometer via a ConFlo III interface (Thermo-Finnigan, Bremen, Germany) using the methods described by Werner et al. (1999). All δ18O and δ2H values are expressed relative to the Vienna Standard Mean Ocean Water (VSMOW) in per mil (‰):

$$\delta = \left(\frac{R_{\text{sample}}}{R_{\text{VSMOW}}} - 1\right) \times 1000$$

where R is the 18O/16O or 2H/1H ratio of the sample or the VSMOW, respectively. The long-term precision of the laboratory’s quality control standard for δ18O and δ2H in water was 0.08 and 0.36 ‰, respectively.
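As a brief illustration of the delta notation, the sketch below converts between an isotope ratio and its δ value in per mil, using the standard definition δ = (R_sample / R_standard − 1) × 1000. The function names are hypothetical, and the VSMOW ratio is a commonly cited approximate reference value assumed here for illustration; it is not taken from this study.

```python
# Commonly cited approximate 18O/16O ratio of VSMOW (assumed for illustration).
R_VSMOW_18O = 2005.2e-6


def delta_permil(r_sample, r_standard):
    """Return the delta value (per mil) of a sample ratio relative to a standard."""
    return (r_sample / r_standard - 1.0) * 1000.0


def ratio_from_delta(delta, r_standard):
    """Invert the delta notation: return the isotope ratio for a delta value."""
    return r_standard * (1.0 + delta / 1000.0)


# Hypothetical sample whose 18O/16O ratio is 1 % below the standard:
# its delta value is close to -10 per mil.
sample_ratio = 0.99 * R_VSMOW_18O
print(round(delta_permil(sample_ratio, R_VSMOW_18O), 6))
```

Negative δ values therefore indicate water that is depleted in the heavy isotope relative to VSMOW, which is the typical case for precipitation-fed soil water.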

Calculation of plant water uptake depth

We used two different approaches to estimate the depth of plant water uptake, both based on stable isotope analyses of soil and plant waters: (1) a linear interpolation (LI) method and (2) a Bayesian calibrated mixing model (SIAR).

The LI method

The LI approach is a single isotopologue approach (in our case only δ18O) based on the assumption that the depth of plant water uptake can be estimated by simply comparing the δ18O values of xylem water with the δ18O values of soil water along the soil profile. Thus, the water uptake depth is defined as the soil depth where δ18Oxylem = δ18Osoil, neglecting potential mixing of several water sources for total plant water uptake. While this assumption is clearly an oversimplification, it allows the estimation of single, clearly defined water uptake depths that can be used in subsequent statistical analyses. We used the δ18O values of soil water, measured along the entire soil profile (down to a soil depth of 50 cm in 2011), but stratified the soil profile into different layers (see above) to establish smoothed (i.e. steady instead of stratified) soil water δ18O profiles of treatment and control plots for each sampling time by linearly interpolating between the averages of all replicates for a given plot, treatment and sampling time (most soil water profiles were unimodal, with the most positive values at shallow depth). By doing so, we were able to account for the high spatial variability of soil water δ18O. To compare these soil water δ18O profiles to the respective δ18O in plant water, we averaged the δ18O values of all sampled plant species from a given plot, treatment and sampling time, obtaining one source water δ18O value at the community level. This plot-wise evaluation accounted for differences in root crown volumes across species as well as for presence/absence of individual plant species over the entire growing season. Our pooling approach was supported by the absence of significant interactions between treatment and species (CHA, P = 0.7849; AWS, P = 0.9821), tested using a linear mixed-effect model (LMM), which indicated that species-specific responses to drought were only of minor importance. 
On average (over all treatments, years and experimental periods), the mean variation (mean of standard deviations) of δ18O across all species present in any given plot [mean ± standard deviation (SD): CHA, 2.41 ± 6.1 ‰; AWS, 2.95 ± 0.51 ‰] was comparable to the mean variation of δ18O between the replicates within a single species across all corresponding plots (CHA, 2.36 ± 0.28 ‰; AWS, 2.64 ± 1.59 ‰). Some plant water samples showed a root crown water δ18O value that was higher than the highest soil water δ18O value, likely because the uppermost part of the topsoil had a higher δ18O value than the top soil layer we analyzed. In these cases, we assigned the δ18O of plant water to the soil depth that resulted in the smallest difference in δ18O between plant water and soil water.
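The LI procedure can be illustrated with a minimal sketch: the mean soil water δ18O values are joined by straight lines along depth, and the uptake depth is the depth at which this profile equals the community-level xylem δ18O. The function name and the numbers are hypothetical. Two choices here are assumptions and are not stated in the text: when the profile crosses the xylem value more than once, the shallowest crossing is returned, and when there is no crossing, the depth of the sampled layer with the closest δ18O is used (following the smallest-difference rule described above).

```python
def uptake_depth_li(depths_cm, soil_delta, xylem_delta):
    """Estimate water uptake depth (cm) by linear interpolation.

    depths_cm   -- sampled soil depths, ordered from shallow to deep
    soil_delta  -- mean soil water delta-18O at each depth (per mil)
    xylem_delta -- community-level xylem (root crown) delta-18O (per mil)
    """
    if len(depths_cm) != len(soil_delta) or len(depths_cm) < 2:
        raise ValueError("need at least two matching depth/delta pairs")
    profile = list(zip(depths_cm, soil_delta))
    # Walk through adjacent layers from shallow to deep.
    for (z0, d0), (z1, d1) in zip(profile, profile[1:]):
        if min(d0, d1) <= xylem_delta <= max(d0, d1):
            if d0 == d1:
                return z0  # flat segment: report its upper boundary
            fraction = (xylem_delta - d0) / (d1 - d0)
            return z0 + fraction * (z1 - z0)
    # No crossing: fall back to the sampled depth with the closest value.
    nearest_depth, _ = min(profile, key=lambda pair: abs(pair[1] - xylem_delta))
    return nearest_depth


# Hypothetical profile that becomes more negative with depth.
depths = [5.0, 15.0, 25.0]
soil = [-4.0, -8.0, -10.0]
print(uptake_depth_li(depths, soil, -6.0))  # 10.0 (midway between 5 and 15 cm)
print(uptake_depth_li(depths, soil, -3.0))  # 5.0 (xylem above the highest soil value)
```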

Bayesian calibrated mixing model

To overcome the limitation of the LI approach mentioned above, we also estimated plant water uptake depth using SIAR (siar.package, version 4.1.2), an R-based program that allows solving mixing models for stable isotope data within a Bayesian framework (Parnell et al. 2010). The Bayesian model is based on a Gaussian likelihood method for a mixture with a Dirichlet-distributed prior distribution of the mean. For our study, projections of SIAR were based on the measured isotope data of three sources (i.e. soil layers) and the root crown δ18O values and are represented as a probability density function. The model parameters were set as follows: trophic enrichment factor = 0; iterations = 500,000; number of initial iterations to discard, burnin = 50,000; concentration dependence, concdep = 0. SIAR calculates the probability density distributions of the relative contributions of different soil water sources (in our case soil layers) to plant water. To interpret the SIAR output (probability density functions) we focused on the contributions to plant water uptake with the highest density (mode value). It is important to mention that probability density functions of SIAR describe continuous probability distributions, which represent the mean of several thousand calculations (posterior sample proportions). Therefore, if the input data (in our case isotope data) are less distinct, the proportions of the different sources (in our case three soil layers) with the highest density (mode) can add up to >100 %.
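To make the idea of a Bayesian mixing model concrete, the following sketch combines a flat Dirichlet prior on the source proportions with a Gaussian likelihood for the plant water values. It is not SIAR and does not reproduce its likelihood or its MCMC sampler: it uses simple importance sampling from the prior, reports posterior means rather than modes, and handles a single isotope. The function name, the residual standard deviation and all input values are hypothetical.

```python
import math
import random


def posterior_mean_proportions(source_means, source_sds, plant_values,
                               residual_sd=0.5, n_draws=20000, seed=1):
    """Posterior mean contribution of each source (importance sampling).

    Prior: flat Dirichlet over the source proportions.
    Likelihood: each plant value is Gaussian around the proportion-weighted
    source mean, with variance from the sources plus a residual term.
    """
    rng = random.Random(seed)
    k = len(source_means)
    draws, log_likes = [], []
    for _ in range(n_draws):
        # A flat Dirichlet draw is a set of normalised Gamma(1, 1) variates.
        gammas = [rng.gammavariate(1.0, 1.0) for _ in range(k)]
        total = sum(gammas)
        p = [g / total for g in gammas]
        mix_mean = sum(pi * m for pi, m in zip(p, source_means))
        mix_var = sum((pi * sd) ** 2 for pi, sd in zip(p, source_sds)) + residual_sd ** 2
        log_like = sum(-0.5 * math.log(2.0 * math.pi * mix_var)
                       - (x - mix_mean) ** 2 / (2.0 * mix_var)
                       for x in plant_values)
        draws.append(p)
        log_likes.append(log_like)
    # Subtract the maximum log-likelihood before exponentiating (avoids underflow).
    max_ll = max(log_likes)
    weights = [math.exp(ll - max_ll) for ll in log_likes]
    weight_sum = sum(weights)
    return [sum(w * p[i] for w, p in zip(weights, draws)) / weight_sum
            for i in range(k)]


# Hypothetical soil layers (top, intermediate, deep) and xylem values close
# to the top-layer signature.
layer_means = [-4.0, -8.0, -10.0]
layer_sds = [0.5, 0.5, 0.5]
xylem = [-4.5, -4.2, -4.8]
proportions = posterior_mean_proportions(layer_means, layer_sds, xylem)
print([round(p, 2) for p in proportions])  # the top layer dominates
```

Because these posterior means are averaged over complete draws, they always sum to 1. Mode values read separately from each source's marginal density carry no such constraint, which is why they can add up to more than 100 %.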

For CHA in the years 2009 and 2010, the input source data for SIAR (soil layers) included δ18O data of soil water as follows: top = soil depth 0–10 cm, intermediate = 10–20 cm and deep = 20–30 cm. Due to the higher vertical resolution of the soil sampling in 2011, soil water input data were: top = soil depth 0–2 cm, intermediate = 14–16 cm and deep = 29–31 cm for the pre-tmt and tmt periods; top = 0–5 cm soil depth, intermediate = 20–25 cm and deep = 30–35 cm for the post-tmt period. For AWS in 2010, the input source data for SIAR included δ18O data of soil water as follows: top = 0–10 cm soil depth, intermediate = 10–20 cm, deep = 20–30 cm. Due to the higher vertical resolution of the soil sampling in 2011, the layers were: top = soil depth 0–2 cm, intermediate = 14–16 cm and deep = 29–31 cm for the pre-tmt period; top = 0–5 cm, intermediate = 20–25 cm and deep = 30–35 cm for the tmt and post-tmt. To increase model performance in this Bayesian approach, we used data for both water isotopes, δ18O and δ2H, at both sites in 2011.

Statistical analyses

For statistical analyses we mainly applied linear mixed-effects models (LMM) with “plot” as the random effect, comparable to “repeated measures” (Pinheiro et al. 2012). To test treatment effects on both aboveground biomass and the depth of plant water uptake, we calculated an LMM for each site, with treatment (drought vs. control), experimental period (i.e. pre-tmt period, tmt period and post-tmt period) and year (CHA: 2009–2011, AWS: 2010 and 2011) as fixed effects (main factors) and “plot” as the random effect. To test the effects of drought treatments on belowground biomass, we calculated an LMM for each site, with treatment, experimental period and soil depth (0–15 and 15–30 cm) as fixed effects and “plot” as the random effect. In order to analyze the effects of these factors on plant water uptake depth for the three experimental periods separately, we calculated an additional LMM for each experimental period at the two sites, with treatment and year as fixed effects and “plot” as random effect. Significant differences between the treatments of a single harvest of aboveground biomass were tested by a one-way analysis of variance (ANOVA). To test for significant differences between the two treatments of a single sampling, we used an ANOVA with a subsequent post hoc test (Tukey) and multiple comparisons. All statistical analyses were performed using R version 2.14.2 (2012).

Results

Effect of shelters on soil moisture and the isotopic composition of soil water

Due to the inter-annual variation in precipitation and the different treatment durations, the amount of excluded rain per year ranged between 21 and 39 % (Table 1). Volumetric soil water content (SWC) at both sites was reduced by the drought treatment during the tmt periods in all 3 years (Fig. 1). Even during 2011, a year with an exceptional spring drought, soil moisture was significantly reduced in the drought plots. Effects of the drought treatment were most pronounced in the uppermost soil layer (depth 5 cm). At CHA, the moisture at a soil depth of 5 cm dropped on average (2009–2011) from 26.6 % [±5.1 % (SD); control] to 13.4 % (±4.5 %; drought) due to the drought treatment; at AWS, it decreased at the same depth from 26.8 % (±1.1 %) to <10 % (Fig. 1). At AWS, SWC during the treatment period in 2010 was very likely to be below the measurement range of the sensors (no soil cracks were observed); thus, no reliable data were available later into the tmt period. The moisture reduction could also be seen at a soil depth of 30 cm where moisture dropped on average from 36.8 % (±2.5 %; control) to 32.8 % (±4.0 %; drought) at CHA and from 26.8 % (±6.1 %; control) to 4.8 % (±4.0 %; drought) at AWS.

Volumetric soil water content (SWC) in soil samples collected at soil depths of 5, 15 and 30 cm at Chamau (CHA) in 2009–2011 and at Alp Weissenstein (AWS) in 2010 and 2011. Control plots (n = 2) received natural precipitation, whereas drought plots (n = 2) were covered with transparent shelters to exclude rain for 8–10 weeks per year (shaded gray area)

Additional gravimetric soil water measurements in September 2010 (post-tmt period) confirmed the sensor measurements and the significant drying-out of the soil during the drought treatment, even down to a soil depth of 40 cm (data not shown; control vs. drought, P = 0.009). After removal of the shelters, i.e. in the post-tmt periods, SWC in the drought plots recovered to levels observed in the control plots within 2–3 weeks in both sites (Fig. 1).

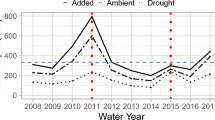

Community aboveground biomass

At both sites, the drought treatment significantly reduced aboveground biomass (Fig. 2; Table 2), without changing species composition (interaction tmt:species, P = 0.3534; see ESM 1 and 2). At CHA, aboveground biomass decreased in response to drought by 22.3 % [±1 % (SD)] when averaged across all sampling dates in all 3 years (Table 2, factor tmt, P < 0.001). This effect did not differ among years (Table 2, interaction tmt:year, P = 0.916), but it did show a significant interaction with the experimental period (interaction tmt:period, P = 0.017; factor period, P < 0.001). At AWS, aboveground biomass was reduced by drought by 42 % (±35 %; Table 2, factor tmt, P = 0.056) when averaged over all 3 years. However, there was a significant difference between the years (factor year; P < 0.001), and when analyzed for each year separately, the drought effect was absent in 2010 at AWS, a relatively wet and cool year (Table 1).

Aboveground biomass at the intensely managed grassland site CHA (2009: n = 7; 2010 and 2011: n = 6) and the extensively managed sub-alpine site AWS (n = 5). Shaded gray area: period when shelters were installed to exclude rain from drought plots. Significant differences between the treatments for a single harvest were tested with a one-way analysis of variance (ANOVA). Significance: *0.05 ≥ P > 0.01; **0.01 ≥ P > 0.001; ***P ≤ 0.001. Significant differences between the treatments for overall community aboveground biomass (2009–2011) are given in Table 2. Data are presented as the mean ± standard error (SE)

Belowground biomass

At both sites, belowground biomass showed a pronounced depth profile (Table 3, factor depth, P < 0.001). At CHA, root biomass ranged between 200 and 600 g DW m−2 in the top soil layer (0–15 cm) compared to 5–30 g DW m−2 in deeper soil layers (15–30 cm; Fig. 3). Root biomass at CHA significantly increased throughout the growing season (Table 3, factor period, P = 0.031) for both treatments (Table 3, interaction tmt:period, P = 0.945). Under drought conditions, there was a trend of increased root biomass in the top soil layer which became only significant in the period immediately after the shelters were removed (P < 0.05; Fig. 3). This trend towards more root biomass in the top soil layer (depth 0–15 cm) was mainly driven by an increase of root biomass in the top 5 cm of soil where the bulk of the roots was present (see ESM 3).

Belowground biomass in soil samples collected at soil depths of 0–15 cm and 15–30 cm at the intensely managed grassland site CHA (n = 3 samples; if 15–30-cm soil samples present, n = 6) and the extensively managed sub-alpine site AWS (n = 5 samples) in 2011. Shaded gray area: period when the shelters were installed to exclude the rain on the drought plots. The asterisk indicates a significant difference between the two treatments at P ≤ 0.05, as tested by an F test referring to the LMM (Table 3).

Root biomass at a soil depth of 0–15 cm was higher at AWS than at CHA, with root biomass ranging between 400 and 1,200 g DW m−2 (Fig. 3). In contrast to CHA, the changes in root biomass at AWS between the periods were less clear (Table 3, factor period, P = 0.053), and no effect of drought was observed (Table 3, factor tmt, P = 0.884). Comparable to CHA, the bulk of root biomass at AWS was also located in the top 5 cm of soil, where plants from the drought plots showed the tendency of increasing root biomass (factor depth, P < 0.001; ESM 3).

Plant water uptake depth

Estimates based on LI

At the CHA control plots, the depth of plant water uptake changed significantly among the experimental periods (Figs. 4 and 5, see ESM 4 and 5). The drought treatment also significantly affected the plant water uptake depth (Table 4, factor treatment, P = 0.017). Over time, the depth of water uptake increased during the treatment period and declined in the post-tmt period (Table 4, factor period, P < 0.001; interaction tmt:period, P < 0.001). During the treatment period, plants from the drought plots utilized water from upper soil layers [soil depth (mean ± SD) 6 ± 7 cm], while plants from the control plots relied on relatively deeper soil layers for their soil water uptake (17 ± 3 cm). This effect was present in all 3 years of our experiment (ESM 6, tmt:factor treatment, P < 0.001), but the water uptake depth of the control plants within the treatment periods differed among the years (Fig. 4; ESM 6, interaction tmt:year, P = 0.009). During the pre-tmt and post-tmt periods, there was no significant difference in water uptake depth between the control and drought plots (ESM 6, pre-tmt:factor treatment, P = 0.895; post-tmt:factor treatment, P = 0.878).

Water uptake depth (presented as a box-whisker plot) at the two grassland sites CHA and AWS as calculated by linear interpolation over all plots (replicates, CHA: n = 4–6; AWS: n = 3–4). The growing season was divided into three experimental periods: the pre-treatment period (pre-tmt), i.e. before the shelters were installed; the treatment period (tmt), when the shelters were present; the post-treatment period (post-tmt) when the shelters were removed (recovery period). Water uptake depths were calculated separately for these three experimental periods for each year (CHA: 2009–2011; AWS: 2010 and 2011) and the two treatments. Statistical F test (LMM) output across all periods and years as well as per period and year is given in Table 4 and ESM 6, respectively

Mean soil depth of plant water uptake over all years (CHA: 2009–2011; AWS: 2010 and 2011) and per experimental period (pre-tmt, tmt, post-tmt period) for the two sites (CHA and AWS) as calculated by the linear interpolation method (LI). Data are mean values of pooled replicates (CHA: n = 15–16; AWS: n = 7–9), with the error bars representing the inter-annual variability (SE; n = 3). Statistical output based on a linear mixed-effects model is given in Table 4 and ESM 6

At AWS, the depth of plant water uptake changed significantly across the experimental periods (Table 4, factor period, P < 0.001), with lower depths during the treatment periods (Fig. 5) that were independent of the treatment (Table 4, factor treatment, P = 0.321). Thus, drought-affected plants utilized water from the same soil layer as control plants.

Estimates based on SIAR

In order to estimate the probabilities (densities) of different soil layers contributing to plant water uptake and to test the accuracy of the LI approach, we used the Bayesian calibrated SIAR mixing model. At CHA in 2009 and 2010, the highest probabilities (mode values) of a soil layer contributing to plant water uptake during the pre-tmt period were found for the top layer, with mode values of between 70 and 90 % independent of the treatment (Fig. 6; Table 5; excluding the very dry spring of 2011). Contributions of intermediate and deeper soil layers to total plant water uptake were less likely, being only between 2 and 6 %. During the pre-tmt period in 2011, water uptake by control plants was rather equally distributed along the soil profile, with most likely the top, intermediate and deep layers contributing 26, 39 and 36 % of water, respectively (Table 5). For the plants on the drought plots (pre-tmt period 2011), the top layer showed the highest probability of contributing to plant water uptake (50 %) compared to the lower depths (intermediate, deep: 3, 10 %), similar to values obtained during the 2 previous years. During the treatment periods (2009–2011), plants growing at the drought and control plots at CHA clearly used different soil layers for water uptake. For plants at drought-affected plots, the largest fraction of water was very likely contributed by the top soil layer, with values of between 43 and 68 % (Table 5), while the contributions of the intermediate and deep soil layers were less likely (3–36 %). In contrast, for plants at the control plots, the largest water contributions consistently and most likely originated from the deep layer (29–48 %), while the top and intermediate layers contributed relatively less (top: 4–37 %; intermediate: 4–36 %). 
After the removal of the shelters, i.e., during the post-tmt periods, the likelihoods that plants took up most water from the top layer were highest (30–93 %), while the contributions of the two other layers (intermediate, deep) were less likely (1–43 %), independent of the treatment. Thus, results based on the SIAR approach supported our results obtained by the simple LI approach.
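The logic of a Bayesian mixing model can be sketched in simplified form. The example below is not SIAR: it assumes a single isotope (δ18O), a flat Dirichlet prior on the three layer proportions, a Gaussian likelihood with a fixed residual standard deviation, and it approximates the posterior by weighting prior draws with their likelihood instead of running a full sampler. All function names and values are hypothetical, and the sketch reports posterior means, whereas the results above refer to mode values.

```python
import math
import random


def sample_flat_dirichlet(rng, k):
    """Draw k proportions uniformly from the simplex (flat Dirichlet prior)."""
    draws = [rng.gammavariate(1.0, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def posterior_mean_contributions(source_means, xylem_values, sigma,
                                 n_draws=20000, seed=1):
    """Approximate the posterior mean contribution of each source.

    Prior draws of the proportions are weighted by the Gaussian likelihood
    of the observed xylem values given the resulting mixture signature.
    """
    rng = random.Random(seed)
    k = len(source_means)
    weighted = [0.0] * k
    weight_sum = 0.0
    for _ in range(n_draws):
        p = sample_flat_dirichlet(rng, k)
        mix = sum(pi * mu for pi, mu in zip(p, source_means))
        loglik = sum(-0.5 * ((x - mix) / sigma) ** 2 for x in xylem_values)
        w = math.exp(loglik)
        weight_sum += w
        for i in range(k):
            weighted[i] += w * p[i]
    return [v / weight_sum for v in weighted]


# Hypothetical d18O values (per mil): top, intermediate and deep soil water
layers = [-4.0, -8.0, -10.0]
xylem = [-4.5, -5.0, -4.8]
means = posterior_mean_contributions(layers, xylem, sigma=0.5)
for name, m in zip(["top", "intermediate", "deep"], means):
    print(f"{name}: {m:.2f}")
```

With one isotope and three sources the proportions are not uniquely determined, so the posterior remains broad. This is why results from such models are best read as probability distributions rather than as single values.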

Fig. 6 Probability density distributions for the contribution of three different soil layers (top, intermediate, deep) to plant water uptake at CHA for 3 different years (2009–2011), 3 experimental periods within 1 year (pre-tmt, tmt, post-tmt periods) and 2 different treatments (control vs. drought). The density distribution was calculated with a Bayesian calibrated mixing model (SIAR) based on the measured water isotope data of soils and plants (see ESM 5)

In contrast to CHA, the contributions of the three soil layers to total plant water uptake at AWS were more variable over time (Fig. 7). During the pre-tmt periods in both years, all soil layers at the control plots showed similar likelihoods (Table 5) and contributed rather equally to total plant water uptake (between 34 and 40 %). For plants at the drought plots, the two deepest layers showed higher probabilities (between 38 and 40 %) to contribute to total plant water uptake than the top layer (28 %) in 2010, while in 2011, the deep soil layer most likely contributed the most to total water uptake of plants from the drought plots (62 %) compared to the top and intermediate soil layers (5–6 %). During the treatment periods in 2010 and 2011, it was most likely that the top layer contributed <33 % to total water uptake, while the intermediate and deep layers most likely contributed between 32 and 55 %, independent of the treatment. The post-tmt periods were characterized by large inter-annual differences in water uptake depth between the two treatments (Fig. 7). In 2010, the top layer most likely contributed 90 % to the total plant water uptake at the drought plots, while the contributions of the intermediate and deep layers were only between 1 and 3 %. For plants at the control plots, all three layers contributed between 35 and 39 % to total plant water uptake (Table 5). In the post-tmt period 2011, the top layer most likely contributed 35 % to plant water uptake at the drought plots, which falls within the same range as the contribution of the other two layers (intermediate: 36 %; deep: 30 %). However, the top layer at the control plots dominated the most likely contributions to total plant water uptake (with 66 %), while the two other layers only showed probabilities of 22 % (intermediate) and 7 % (deep). Again, these results confirmed our findings based on LI (except for the plants at the drought plots during pre-tmt period 2011).

Fig. 7 Probability density distributions for the contribution of three different soil layers (top, intermediate, deep) to plant water uptake at AWS for 2 different years (2010–2011), 3 experimental periods within 1 year (pre-tmt, tmt, post-tmt periods) and 2 different treatments (control vs. drought). The density distribution was calculated with a Bayesian calibrated mixing model (SIAR) based on the measured water isotope data of soils and plants (see ESM 5)

Discussion

Drought response of grassland biomass production

During the treatment period, SWC dropped at all three soil depths (Fig. 1), which is in accordance with soil water potential data from an associated study (Bollig and Feller 2014; Gilgen and Buchmann 2009). In response to the simulated drought conditions, community aboveground biomass production in both grasslands decreased (Fig. 2). These findings confirm similar results from previous studies, where not only community productivity, but also plant gas exchange and plant water relations clearly showed reduced activities in response to drought (Bollinger et al. 1991; Bollig and Feller 2014; Fay et al. 2003; Gilgen and Buchmann 2009; Hopkins 1978; Signarbieux and Feller 2012). In contrast, reports on belowground biomass production have been less clear, showing small effects or even increased root biomass under drought conditions as plants invest more resources belowground to counteract water and nutrient limitations (e.g. Davies and Bacon 2003; Kahmen et al. 2005; Marschner 1995; Palta and Gregory 1997). These findings are consistent with those of our study since we did not find a significant treatment effect on overall root biomass (Fig. 3). However, we are aware that our belowground biomass data should be interpreted with caution, even though both treatments are affected similarly. The relatively small core diameter (5.5 cm; necessary due to our multiannual experimental setup) could lead to an underestimation of root biomass. Furthermore, fine roots, which can account for up to approximately 80 % of the total belowground biomass in grasslands, might have been lost during root washing, and we did not distinguish between living and dead roots (Fisk et al. 1998; Ping et al. 2010; Pucheta et al. 2004).

In general, grasses are known to be rather shallow rooted, i.e. to allocate their belowground biomass preferentially in the topsoil (<30 cm), particularly in arid regions (Jackson et al. 1996; Schenk and Jackson 2002). This allocation strategy is most likely related to the life form and morphology of grasses but also to the higher concentration of nutrients in the topsoil than in soil at greater depths (Evans 1978; Hopkins 2000; Hu and Schmidhalter 2005). In a savannah ecosystem, February and Higgins (2010) reported a negative correlation between root distribution of grasses and soil moisture, but a positive correlation between root distribution and soil nitrogen concentrations, indicating the dominant control of nutrient availability rather than soil moisture on root distribution. Moreover, in their study of a C4-grass (Andropogon gerardii)-dominated tallgrass prairie, Nippert et al. (2012) showed that conductive tissues of roots, and thus hydraulic conductivity, decreased exponentially with depth in the soil profile. These findings imply that shallow roots may transport a significantly higher amount of water than deeper roots. In temperate zones as well, the allocation of more root biomass to shallow rather than deeper soil depths might serve two strategies, namely, rapid access to water input through precipitation after drought events and the facilitation of nutrient uptake if water is available.

Water uptake during drought periods

At the lowland site (CHA), the depth of plant water uptake was significantly affected by drought. While plants under control conditions shifted their water uptake to deeper soil layers in summer, drought-affected plants showed no shift and utilized predominantly water from the top 10 cm of the soil (Figs. 4, 5, 6). This unexpected behavior was highly consistent across all 3 years of the study and also across the two different approaches used to estimate plant water uptake depths (LI and SIAR). Our data on belowground biomass production also support these findings as they showed no increase of belowground biomass in deeper soil layers but rather a trend towards more root biomass in the top 5 cm of the soil (Fig. 3, ESM 3). Evaporative enrichment in 18O can be excluded as an explanation since this would have affected sites similarly. However, in contrast to the lowland site, plant water uptake depths at the sub-alpine site (AWS) were not affected by drought during the treatment periods (Figs. 4, 5, 7). Here, plant water uptake depths differed significantly only in the post-tmt periods, although this pattern was not consistent between years. Also, belowground biomass only tended to increase about 1 month after shelter removal (2011; Fig. 3), when isotope sampling had already stopped.

The results of our study in C3-grasslands are in agreement with a number of recently published studies, mainly in C4-systems, reporting shallow rooting patterns and water uptake of grassland species affected by drought. For example, Nippert and Knapp (2007) showed that C4-grasses had a greater dependency on water from the top 30 cm of the soil than on water from “deep soil” (characterized by the isotopic composition of winter precipitation, assumed to recharge and drive the isotopic composition of groundwater). This dependency on shallow soil layers continued even during prolonged dry periods, which is in accordance with the results we show for C3-grasslands here. A recently published tracer (2H) study also showed that grasses of a mesic savanna continued to extract water from the top 5 cm of soil depth even when water became scarce (Kulmatiski and Beard 2013). Similar results are available for intensively managed C3-grassland, for which Hoekstra et al. (2014) report predominantly shallow plant water uptake under drought conditions for (4-species) mixtures, but not for the respective monocultures. Asbjornsen et al. (2007) reported on two C4-species, Zea mays and the prairie species Andropogon gerardii, which obtained a high proportion (45 and 36 %, respectively) of their water from the top 20 cm of the soil profile after an extended dry period. Similarly, using the natural abundance of stable isotopes as markers, Eggemeyer et al. (2009) showed that C4-grass species in a semi-arid grassland increased water uptake from depths below 0.5 m only to a minimal extent under drought conditions, preferentially taking up most of their water from the upper soil profile (0.05–0.5 m). Syers et al. (1984) showed the greatest phosphorus uptake of perennial ryegrass and white clover (both C3) in surface soil (2.5 cm), especially under non-irrigated conditions.
Studying the carbon allocation of soybean (C3), Benjamin and Nielsen (2006) showed that water deficits did not affect plant root distribution, but that approximately 97 % of the total soybean roots occurred in the top 23 cm of the soil, irrespective of sampling time or water regime. In summary, our data from temperate C3-grasslands (in Switzerland) agree well with previously published work on C4-grasslands, which have evolved under more arid climates, and suggest that grassland species rely on water from shallow soil layers rather than use or shift to deeper layers under drought conditions.

Thus, the question arises why grassland species use predominantly shallow soil layers for water uptake, even though it might be the driest soil layer and even though water is available in deeper horizons. Grasses and many grassland species possess traits allowing them to survive even extreme habitat disturbances. These traits include the opportunistic and superior acquisition of resources, such as high assimilation and water exchange rates, well-protected meristems close to or in the ground and the option of dormancy if conditions are less favorable (Craine et al. 2012; Gibson 2009; Grime et al. 2000; Grubb 1977). Recent research has highlighted the fact that physiological traits for drought tolerance in grass species are widespread among different climate regimes and different taxa, suggesting that native species and native species diversity have promoted drought resilience (Craine et al. 2012). It has also been shown that the physiological tolerance of grasses to dry environments (with high vapor pressure deficits and thus high potential evaporation rates) is strongly linked to high hydraulic conductivity rates of leaf and root tissues, independent of C3 or C4-pathways (Ocheltree et al. 2014). Olmsted (1941) already found in a pot experiment that drought induced the growth of new basal roots at a shallow soil depth. Such young and shallow root systems, which are common in plants adapted to drought and arid conditions, typically possess a higher hydraulic conductivity and allow the use of water from small rainfall events only infiltrating into the top soil (Hunt and Nobel 1987; Nobel 2002; Nobel and Alm 1993; Tyree 2003; Ward 2009).
That plants at our sites were still able to extract water at a relatively low SWC, and thus at soil water potentials of −0.18 MPa (at 10 cm depth on drought plots during tmt; <−0.025 MPa on control plots; Bollig and Feller 2014), is not only supported by our isotope measurements, but also by the leaf water potential and gas exchange measurements of an associated study (Signarbieux and Feller 2012). Thus, physiological acclimation as well as evolutionary adaptations of the life-form “grasses” and grassland species seem to favor the exploitation of shallow soil layers even under drought, increasing the ecological fitness of grasses under varying environmental conditions.

Conclusion

Based on our findings in temperate C3-grasslands in Switzerland, there is indeed evidence that belowground biomass distribution and its dynamic changes in response to the environment correlate with plant water uptake depths. Contrary to our original hypothesis, grassland species, both grasses and herbs, generally rely on rather shallow soil layers (soil depth 0–30 cm) for water uptake and do not grow roots that extend into deeper soil layers under drought conditions to access soil moisture in the lower soil profile. On the contrary, such grassland species continue or extend water uptake to even shallower soil depths (0–10 cm). This behavior has not yet been studied well in humid temperate C3-grasslands, but if confirmed, vegetation models used for climate impact studies need to take this behavior into account.

References

Asbjornsen H, Mora G, Helmers MJ (2007) Variation in water uptake dynamics among contrasting agricultural and native plant communities in the Midwestern US. Agric Ecosyst Environ 121:343–356

Axelrod DI (1985) Rise of the grassland biome, central North America. Bot Rev 51:163–201

Barnard RL, de Bello F, Gilgen AK, Buchmann N (2006) The δ18O of root crown water best reflects source water δ18O in different types of herbaceous species. Rapid Commun Mass Spectrom 20:3799–3802

Bearth P, Heierli H, Roesli F (1987) Blatt 1237 Albulapass (Geologischer Atlas der Schweiz 1 : 25,000, Atlasblatt 81). Schweizerische Geologische Kommission und von der Landeshydrologie und –geologie. Bundesamt für Landestopographie, Wabern

Benjamin JG, Nielsen DC (2006) Water deficit effects on root distribution of soybean, field pea and chickpea. Field Crop Res 97:248–253

Bollig C, Feller U (2014) Impacts of drought stress on water relations and carbon assimilation in grassland species at different altitudes. Agric Ecosyst Environ 188:212–220

Bollinger EK, Harper SJ, Barrett GW (1991) Effects of seasonal drought on old-field plant communities. Am Midl Nat 125:114–125

CH2011 (2011) Swiss Climate Change Scenarios CH2011. C2SM, MeteoSwiss, ETH, NCCR Climate, OcCC, Zurich, Switzerland

Christensen JH, Hewitson B, Busuioc A, Chen A, Gao X, Held R, Jones R, Kolli RK, Kwon WK, Laprise R (2007) Regional climate projections. In: Solomon S et al (eds) Climate change 2007: the physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. University Press Cambridge, Cambridge, pp 847–940

Craine JM, Ocheltree TW, Nippert JB, Towne EG, Skibbe AM, Kembel SW, Fargione JE (2012) Global diversity of drought tolerance and grassland climate-change resilience. Nat Clim Ch 3:63–67

Davies W, Bacon M (2003) Adaptation of roots to drought. In: de Kroon H, Visser EJW (eds) Root ecology. Ecological studies, vol 168. Springer, Berlin, pp 173–192

Dawson TE, Ehleringer JR (1991) Streamside trees that do not use stream water. Nature 350:335–337

Durand JL, Bariac T, Ghesquiere M, Biorn P, Richard P, Humphreys M, Zwierzykovski Z (2007) Ranking of the depth of water extraction by individual grass plants, using natural 18O isotope abundance. Environ Exp Bot 60:137–144

Eggemeyer KD, Awada T, Harvey FE, Wedin DA, Zhou X, Zanner CW (2009) Seasonal changes in depth of water uptake for encroaching trees Juniperus virginiana and Pinus ponderosa and two dominant C4 grasses in a semiarid grassland. Tree Physiol 29:157–169

Ehleringer JR, Osmond CB (1989) Stable isotopes. In: Pearcy RW, Ehleringer JR, Mooney HA, Rundel P (eds) Plant physiological ecology: field methods and instrumentation. Chapman and Hall Ltd, Heidelberg, pp 300–381

Ellenberg H (2009) Vegetation ecology of central Europe. Cambridge University Press, Cambridge

Evans PS (1978) Plant root distribution and water use patterns of some pasture and crop species. N Z J Agric Res 21:261–265

Fay PA, Carlisle JD, Knapp AK, Blair JM, Collins SL (2003) Productivity responses to altered rainfall patterns in a C4-dominated grassland. Oecologia 137:245–251

February EC, Higgins SI (2010) The distribution of tree and grass roots in savannas in relation to soil nitrogen and water. S Afr J Bot 76:517–523

Fischer EM, Schär C (2010) Consistent geographical patterns of changes in high-impact European heatwaves. Nat Geosci 3:398–403

Fischer A, Weigel AP, Buser CM, Knutti R, Künsch HR, Liniger MA, Schär C, Appenzeller C (2012) Climate change projections for Switzerland based on a Bayesian multi-model approach. Int J Climatol 32:2348–2371

Fisk MC, Schmidt SK, Seastedt TR (1998) Topographic patterns of above-and belowground production and nitrogen cycling in alpine tundra. Ecology 79:2253–2266

Frank D (2007) Drought effects on above- and belowground production of a grazed temperate grassland ecosystem. Oecologia 152:131–139

Frei C, Schöll R, Fukutome S, Schmidli J, Vidale PL (2006) Future change of precipitation extremes in Europe: intercomparison of scenarios from regional climate models. J Geophys Res 111:D06105

Garwood EA, Sinclair J (1979) Use of water by six grass species. 2. Root distribution and use of soil water. J Agric Sci 93:25–35

Gibson DJ (2009) Grasses and grassland ecology. Oxford University Press, Oxford

Gilgen AK, Buchmann N (2009) Response of temperate grasslands at different altitudes to simulated summer drought differed but scaled with annual precipitation. Biogeosciences 6:2525–2539

Grime JP, Brown VK, Thompson K, Masters GJ, Hiller SH, Clarke IP, Askew AP, Corker D, Kielty JP (2000) The response of two contrasting limestone grasslands to simulated climate change. Science 289:762–765

Grubb PJ (1977) The maintenance of species-richness in plant communities: the importance of the regeneration niche. Biol Rev 52:107–145

Harper CW, Blair JM, Fay PA, Knapp AK, Carlisle JD (2005) Increased rainfall variability and reduced rainfall amount decreases soil CO2 flux in a grassland ecosystem. Glob Ch Biol 11:322–334

Hoekstra NJ, Finn JA, Lüscher A (2014) The effect of drought and interspecific interactions on the depth of water uptake in deep- and shallow-rooting grassland species as determined by δ18O natural abundance. Biogeosci Discuss 11:4151–4186

Hopkins B (1978) The effects of the 1976 drought on chalk grassland in Sussex, England. Biol Conserv 14:1–12

Hopkins A (2000) Grass: its production and utilization. Blackwell Science, Oxford

Hu Y, Schmidhalter U (2005) Drought and salinity: a comparison of their effects on mineral nutrition of plants. J Plant Nutr 168:541–549

Hunt ER, Nobel PS (1987) Allometric root/shoot relationships and predicted water uptake for desert succulents. Ann Bot 59:571–577

Jackson RB, Canadell J, Ehleringer JR, Mooney HA, Sala OE, Schulze ED (1996) A global analysis of root distributions for terrestrial biomes. Oecologia 108:389–411

Kahmen A, Perner J, Buchmann N (2005) Diversity-dependent productivity in semi-natural grasslands following climate perturbations. Funct Ecol 19:594–601

Knapp AK, Briggs J, Koelliker J (2001) Frequency and extent of water limitation to primary production in a mesic temperate grassland. Ecosystems 4:19–28

Knapp AK, Fay PA, Blair JM, Collins SL, Smith MD (2002) Rainfall variability, carbon cycling, and plant species diversity in a mesic grassland. Science 298:2202–2205

Kulmatiski A, Beard K (2013) Root niche partitioning among grasses, saplings, and trees measured using a tracer technique. Oecologia 171:25–37

Marschner H (1995) Mineral nutrition of higher plants, 2nd edn. Academic Press, London

Nippert JB, Knapp AK (2007) Linking water uptake with rooting patterns in grassland species. Oecologia 153:261–272

Nippert JB, Fay PA, Knapp AK (2007) Photosynthetic traits in C3 and C4 grassland species in mesocosm and field environments. Environ Exp Bot 60:412–420

Nippert JB, Wieme RA, Ocheltree TW, Craine JM (2012) Root characteristics of C4 grasses limit reliance on deep soil water in tallgrass prairie. Plant Soil 355:385–394

Nobel P (2002) Ecophysiology of roots of desert plants, with special emphasis on agaves and cacti. In: Waisel Y, Eshel A, Kafkafi U (eds) Plant roots: the hidden half. CRC Press, New York, pp 961–973

Nobel P, Alm D (1993) Root orientation vs water uptake simulated for monocotyledonous and dicotyledonous desert succulents by a root-segment model. Funct Ecol 7(5):600–609

Ocheltree T, Nippert J, Prasad P (2014) Stomatal responses to changes in vapor pressure deficit reflect tissue-specific differences in hydraulic conductance. Plant Cell Environ 37:132–139

Olmsted CE (1941) Growth and development in range grasses. I. Early development of Bouteloua curtipendula in relation to water supply. Bot Gaz 102:499–519

Palta JA, Gregory PJ (1997) Drought affects the fluxes of carbon to roots and soil in 13C pulse-labelled plants of wheat. Soil Biol Biochem 29:1395–1403

Parnell AC, Inger R, Bearhop S, Jackson AL (2010) Source partitioning using stable isotopes: coping with too much variation. PLoS One 5:e9672

Ping X, Zhou G, Zhuang Q, Wang Y, Zuo W, Shi G, Lin X, Wang Y (2010) Effects of sample size and position from monolith to core methods on the estimation of total root biomass in a temperate grassland ecosystem in Inner Mongolia. Geoderma 155:262–268

Pinheiro J, Bates D, DebRoy S, Sarkar D, R Development Core Team (2012) nlme: linear and nonlinear mixed effects models. R package version 3.1-103. R Foundation for Statistical Computing, Vienna

Pucheta E, Bonamici I, Cabido M, Díaz S (2004) Below-ground biomass and productivity of a grazed site and a neighbouring ungrazed exclosure in a grassland in central Argentina. Aust Ecol 29:201–208

R Development Core Team (2012) R: a language and environment for statistical computing, R version 2.14.2 R Foundation for Statistical Computing, Vienna. URL: http://www.R-project.org/

Roth K (2006) Bodenkartierung und GIS-basierte Kohlenstoffinventur von Graslandböden: Untersuchungen an den ETH-Forschungsstationen Chamau und Früebüel (ZG, Schweiz). PhD thesis. University of Zurich, Zurich

Schär C, Vidale PL, Lüthi D, Frei C, Häberli C, Liniger MA, Appenzeller C (2004) The role of increasing temperature variability in European summer heatwaves. Nature 427:332–336

Schenk HJ, Jackson RB (2002) Rooting depths, lateral root spreads and below-ground/above-ground allometries of plants in water-limited ecosystems. J Ecol 90:480–494

Signarbieux C, Feller U (2012) Effects of an extended drought period on physiological properties of grassland species in the field. J Plant Res 125:251–261

Suttle K, Thomsen MA, Power ME (2007) Species interactions reverse grassland responses to changing climate. Science 315:640–642

Syers JK, Ryden JC, Garwood EA (1984) Assessment of root activity of perennial ryegrass and white clover measured using 32phosphorus as influenced by method of isotope placement, irrigation and method of defoliation. J Sci Food Agric 35:959–969

Tilman D, Haddi A (1992) Drought and biodiversity in grasslands. Oecologia 89:257–264

Troughton A (1957) The underground organs of herbage grasses. Bulletin No. 44. Commonwealth Bureau of Pastures and Field Crops, Hurley

Tyree M (2003) Hydraulic properties of roots. In: de Kroon H, Visser EJW (eds) Root ecology. Ecological studies, vol 168. Springer, Berlin, pp 125–150

Van Ruijven J, Berendse F (2010) Diversity enhances community recovery, but not resistance, after drought. J Ecol 98:81–86

Vogel A, Scherer-Lorenzen M, Weigelt A (2012) Grassland resistance and resilience after drought depends on management intensity and species richness. PLoS One 7:e36992

Ward D (2009) The biology of deserts. Oxford University Press, Oxford

Werner RA, Bruch BA, Brand WA (1999) ConFlo III: an interface for high precision δ13C and δ15N analysis with an extended dynamic range. Rapid Commun Mass Spectrom 13:1237–1241

White RP, Murray S, Rohweder M, Prince SD, Thompson KM (2000) Grassland ecosystems. World Resources Institute, Washington DC

Zeeman MJ, Hiller R, Gilgen AK, Michna P, Plüss P, Buchmann N, Eugster W (2010) Management and climate impacts on net CO2 fluxes and carbon budgets of three grasslands along an elevational gradient in Switzerland. Agric For Meteorol 150:519–530

Acknowledgments

We would like to thank Roland Werner and Annika Ackermann for the stable isotope analyses, Peter Plüss and Thomas Baur for help with data logger setup and fieldwork, Werner Eugster for providing meteorological data and Albin Hammerle for the introduction in Bayesian statistics. Andrew Parnell is acknowledged for help with SIAR, Matthias Suter for supporting the statistical analysis and Samuel Schmid for help in the vegetation analysis. We are grateful to all of those who assisted during fieldwork, especially the staff at the ETH research stations. We would like to thank Josef Nösberger for fruitful discussions and Johanna Hänger for help with water extractions and root washing. This study was financed by NCCR Climate (subproject “Plant/Soil”).


Additional information

Communicated by Susanne Schwinning.


Cite this article

Prechsl, U.E., Burri, S., Gilgen, A.K. et al. No shift to a deeper water uptake depth in response to summer drought of two lowland and sub-alpine C3-grasslands in Switzerland. Oecologia 177, 97–111 (2015). https://doi.org/10.1007/s00442-014-3092-6
