Abstract

A recent meta-analysis of meta-analyses by Møller and Jennions (2002, Oecologia 132:492–500) suggested that ecologists using statistical models explain only between 2.5% and 5.42% of the variability in ecological studies. Although we agree that much of the variability in ecological systems is not explained, we disagree with the approach and general conclusions of Møller and Jennions. As an alternative perspective, we explored the question: "How much ecological variation in relationships is not explained?" We did this by examining published studies in five journals representative of the many sub-disciplines of ecology. We quantified the proportion of variance not explained by statistical models as the residual (random) error relative to the total variation in the data set. Our results indicate that statistical models explain roughly half of the variation in variables of interest, vastly different from the 2.5%–5.42% reported by Møller and Jennions. This difference resulted largely from a different level of analysis: we considered the original study to be the appropriate level for quantifying variability, whereas Møller and Jennions combined studies at different temporal and spatial scales and attempted to find universal single-factor relationships between ecological variables across study organisms or locations. Therefore, we believe that Møller and Jennions actually measured the universality of single-factor effects across multiple ecological systems, not the amount of variability in ecological studies explained by ecologists. This study, combined with that of Møller and Jennions, illustrates the importance of applying statistical models appropriately to assess ecological relationships.

Introduction

A recent analysis by Møller and Jennions (2002) suggested that ecologists using statistical models explain little of the variability in ecological studies. They paint a bleak picture, suggesting that the mean amount of variance in a measured trait explained by the main factor of interest is between 2.5% and 5.42%. This conclusion was based on a meta-analysis of 43 published meta-analyses in physiology, ecology and evolutionary biology. The authors also suggest that sample sizes greater than 100 are necessary to detect significant effects with 80% power.

Ecologists have the challenging task of explaining and predicting the complex natural world. Ideally, ecologists would like to explain 100% of the variability in a system. However, random processes, inherent complexity, limited measurement accuracy, individual behavior, genetic variability, evolutionary history and the interactions among these factors complicate this explanatory process (Mangel et al. 2001; Møller and Jennions 2002). Nevertheless, ecologists often employ statistical models in an attempt to quantify relationships between the most important dependent and independent variables when examining natural phenomena (Hilborn and Mangel 1997). Many of these models rely on variance-partitioning techniques to quantify the portion of variance in dependent variables that is explained by independent variables. Other unmeasured independent variables and the factors listed above are lumped into residual error, or unexplained variance. These statistical models are largely non-mechanistic; they quantify whether one variable changes in concert with other variables (Mangel et al. 2001).

Although we agree that there is considerable variability in ecological systems, we disagree with the approach that Møller and Jennions (2002) used to draw their conclusion and believe that their research findings are misdirected. By performing a meta-analysis of meta-analyses, they (1) combine studies at different temporal and spatial scales (Osenberg et al. 1999); (2) cannot account for changes in the relationship between ecological variables in different study organisms or locations (Arnqvist and Wooster 1995); and (3) suggest large sample sizes for ecological studies even though their calculation of sample size is based on correlations twice removed from the original studies. We argue that the strength of relationships in ecological studies is better assessed using the original statistical models, as is done in a meta-analysis, than with a meta-analysis of meta-analyses, and we hypothesize that a much greater portion of variability is explained than Møller and Jennions (2002) proposed.

Instead of asking "How much variation can be explained by ecologists?" as Møller and Jennions (2002) did, we posed the question: "How much variation in relationships between variables is not explained?" This approach allowed us to examine the amount of variation not explained by statistical models relative to the total amount of variation in the data set. In effect, we assessed the importance of random processes, inherent complexity, measurement accuracy, individual behavior and evolutionary history relative to the relationships between measured variables in a given study. Furthermore, we hypothesized that the variability explained by statistical models would be greatest for experimental studies in controlled environments (e.g. greenhouse, laboratory or growth chamber) and least for observational field studies, because randomness and noise are often lower under controlled laboratory conditions.

Materials and methods

We collected data from studies published in 2001 in the journals Behavioral Ecology and Sociobiology, Ecology, Ecosystems, Evolution and Oecologia. We chose these five journals to represent the many sub-disciplines of ecology (Table 1) and assumed that the power of the statistical analyses published in these journals in 2001 would be similar to that over a broader range of years and publications. All factors included in a statistical analysis were retained regardless of their significance, because their inclusion reflected the investigator's attempt to examine the "important" factors in a given system.

In statistical models, the proportion of variability (η²) in a dependent variable attributed to an effect can be expressed as the ratio of the sum of squares of that effect (SS_effect) to the total sum of squares (SS_total) (Pearson 1911; Fisher 1928). We modified η² so that, instead of the proportion of variability attributed to an effect, it quantifies the proportion of variability not explained by experimental factors (i.e. residual or experimental error):

$$\eta^2 = \frac{SS_{\mathrm{error}}}{SS_{\mathrm{total}}}$$

Thus, the percentage of variability explained by factors is 100×(1−η²). Using this statistic limited the data we could include to linear regressions, fixed-effect ANOVAs and fixed-effect ANCOVAs (see Tabachnick and Fidell 1983). We were also restricted to studies that published enough information to obtain the degrees of freedom and the error and total sums of squares, or r² values. Because adding more factors to a model explains more of the variance regardless of their significance, we calculated the weighted mean of η² using total degrees of freedom as the weighting factor.
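The η² calculation and the degrees-of-freedom-weighted mean described above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the `ErrorTerm` record, the function names and the example numbers are hypothetical, and the sketch assumes that for a simple linear regression η² is taken as 1 − r², which equals SS_error/SS_total for an ordinary least-squares fit with an intercept.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ErrorTerm:
    """One residual-error term from a published model (hypothetical layout)."""
    df_total: int
    ss_error: Optional[float] = None
    ss_total: Optional[float] = None
    r_squared: Optional[float] = None


def eta_squared_unexplained(term: ErrorTerm) -> float:
    """Proportion of variance NOT explained: SS_error / SS_total, else 1 - r^2."""
    if term.ss_error is not None and term.ss_total is not None:
        return term.ss_error / term.ss_total
    if term.r_squared is not None:
        return 1.0 - term.r_squared
    raise ValueError("need SS_error and SS_total, or r^2")


def percent_explained(eta2: float) -> float:
    """Percentage of variability explained by the model's factors."""
    return 100.0 * (1.0 - eta2)


def weighted_mean_eta2(terms: list[ErrorTerm]) -> float:
    """Mean eta^2 weighted by each model's total degrees of freedom."""
    total_weight = sum(t.df_total for t in terms)
    return sum(t.df_total * eta_squared_unexplained(t) for t in terms) / total_weight


# Illustrative numbers only (not taken from the surveyed studies).
terms = [
    ErrorTerm(df_total=19, ss_error=30.0, ss_total=60.0),  # ANOVA-type term
    ErrorTerm(df_total=39, r_squared=0.7),                 # regression term
]
print(percent_explained(weighted_mean_eta2(terms)))
```

Weighting by total degrees of freedom gives models fitted to more observations a larger influence on the mean.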

We found a total of 1,137 error terms in 181 published studies that met our criteria (see Appendix). Of these, 166 came from controlled experimental studies, 376 from field experimental studies, 67 from controlled observational studies and 528 from field observational studies. Multiple η² values from the same study were treated as non-independent. To account for this non-independence, we randomly chose one analysis per study, calculated η² for each study and then the weighted mean η² across all 181 studies; this was repeated 1,000 times. Means and 95% confidence intervals (CI) were obtained from the 1,000 iterations of this randomization procedure. Means of η² with non-overlapping confidence intervals were considered significantly different.
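One way to implement the randomization procedure above is sketched below. The data layout (a list of studies, each a list of `(eta2, df_total)` pairs), the fixed random seed, the example values and the use of percentile intervals for the 95% CI are all assumptions made for illustration; the text does not specify how the interval was extracted from the 1,000 iterations.

```python
import random
import statistics


def resample_weighted_eta2(studies, n_iter=1000, seed=1):
    """Randomization estimate of the df-weighted mean eta^2.

    studies: list of studies; each study is a list of (eta2, df_total)
    tuples, one per analysis reported in that study (hypothetical layout).
    Each iteration draws one analysis per study at random, so no study
    contributes more than one non-independent value, and computes the mean
    eta^2 across studies weighted by total degrees of freedom.
    Returns the mean over iterations and a 95% percentile interval.
    """
    rng = random.Random(seed)
    means = []
    for _ in range(n_iter):
        picks = [rng.choice(analyses) for analyses in studies]
        total_df = sum(df for _, df in picks)
        means.append(sum(eta2 * df for eta2, df in picks) / total_df)
    # 39 cut points at 2.5%, 5%, ..., 97.5%; keep the outermost pair.
    cuts = statistics.quantiles(means, n=40)
    return statistics.fmean(means), (cuts[0], cuts[-1])


# Invented example: three studies reporting two, one and three analyses.
example = [
    [(0.62, 24), (0.48, 24)],
    [(0.35, 60)],
    [(0.55, 15), (0.70, 15), (0.40, 15)],
]
mean_eta2, (lower, upper) = resample_weighted_eta2(example)
print(f"mean eta^2 = {mean_eta2:.3f}, 95% interval = ({lower:.3f}, {upper:.3f})")
```

Two group means whose intervals computed this way do not overlap would then be treated as significantly different, following the criterion stated above.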

Results and discussion

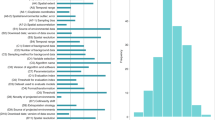

The proportion of variability not explained by experimental factors (η²), estimated from all ecological studies combined (Table 1), had a 95% confidence interval of 45%–63%. Thus, ecologists using statistical models are explaining roughly half of the variability in dependent variables in their studies. When we further divided these studies into experimental and observational studies, mean unexplained variance was similar (Table 2). Controlled studies and experimental studies had the smallest unexplained variance, roughly 47%, but none of the comparisons were statistically significant (Table 2). Consistent with our hypothesis, although not statistically significant, controlled studies with an experimental design had the lowest unexplained variance (43%) compared with experimental field studies and with controlled or field observational studies (Fig. 1). Observational field studies, with little or no control over factors influencing variance, still explained on average 51% of the variation in response variables.

Fig. 1 Percentage of variance attributed to error in ecological studies. Data were collected from papers containing simple fixed-effect ANOVAs, ANCOVAs and simple linear regressions. Studies were categorized into controlled environments (solid bars, e.g. greenhouse, growth chamber) or field environments (hatched bars) and further divided into experimental (colorless bars) or observational (gray bars) studies.

Our results show that ecological studies evaluated with statistical models can account for considerably more variation than Møller and Jennions (2002) suggest. This is in large part due to a fundamental difference between how Møller and Jennions (2002) viewed ecological relationships and the approach we present here. Their meta-analysis of meta-analyses looked at linear responses to single factors (e.g. elevated CO2, mating success, sexual selection) across a wide range of studies that may have come from entirely different systems. Such an approach fails to recognize that non-linear complexity and threshold responses are ubiquitous across ecological systems (Romme et al. 1998; Maurer 1999), and that statistical models are generally specific to a location and system (Mangel et al. 2001). We believe that what Møller and Jennions (2002) measured was how universal the relationships between variables are across multiple ecological systems, not how much variability in these relationships can be explained by ecologists. For example, a meta-analysis of the effects of elevated CO2 on woody plants (Curtis and Wang 1998) was included in Møller and Jennions' analysis. In that meta-analysis, CO2 effects were examined across numerous plant types, from coniferous forests to tropical trees and from arid systems to more mesic deciduous forests. Combining all of these studies into one meta-analysis in effect introduces a large degree of variability into the data, with little more than the hope of obtaining the correct sign (positive or negative) of the relationship between CO2 and plant responses, rather than an explanation of variability. In addition, Møller and Jennions (2002) introduce another level of variability by combining numerous meta-analyses that span several spatial scales (Osenberg et al. 1999), from the individual (Thornhill and Møller 1998) to the ecosystem (Brett and Goldman 1996), and temporal scales ranging from generation times (Järvinen 1991) to single field seasons (Xiong and Nilsson 1999).
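The argument that pooling systems measures the universality of a relationship rather than explanatory power can be illustrated with a small simulation. The sketch below is hypothetical and uses no data from either study: each simulated "study" has a strong linear relationship, but slopes differ in sign and magnitude among studies and intercepts vary. The slopes, noise level, intercept range, sample sizes and seed are arbitrary choices.

```python
import random


def r_squared(xs, ys):
    """Squared Pearson correlation (single-factor r^2) of two sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)


def simulate(slopes, n_per_study=200, noise_sd=0.5, seed=42):
    """Return (mean within-study r^2, pooled r^2) for hypothetical studies.

    Each study has its own slope and a random intercept (a system-specific
    baseline); x is standard normal and residual noise has sd noise_sd.
    """
    rng = random.Random(seed)
    within = []
    pooled_x, pooled_y = [], []
    for slope in slopes:
        intercept = rng.uniform(-3.0, 3.0)
        xs = [rng.gauss(0.0, 1.0) for _ in range(n_per_study)]
        ys = [intercept + slope * x + rng.gauss(0.0, noise_sd) for x in xs]
        within.append(r_squared(xs, ys))
        pooled_x.extend(xs)
        pooled_y.extend(ys)
    return sum(within) / len(within), r_squared(pooled_x, pooled_y)


# Strong relationships whose direction differs among systems.
mean_within, pooled = simulate([2.0, -2.0, 1.5, -1.5, 1.0, -1.0])
print(f"mean within-study r^2: {mean_within:.2f}")
print(f"pooled single-factor r^2: {pooled:.3f}")
```

Within each simulated study the factor explains most of the variance, yet the pooled single-factor r² is close to zero because the slopes cancel. Giving all slopes the same sign raises the pooled r², which is the sense in which a pooled estimate reflects how consistent an effect is across systems.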

We also feel that, in their retrospective power analysis, Møller and Jennions (2002) greatly overestimate the sample sizes (122–396) necessary for adequate statistical power to detect significant effects. Because they performed a meta-analysis of meta-analyses, their suggested sample sizes should reflect the number of meta-analyses needed to obtain overall significance of effects, not the number of samples necessary in a single study. Sample sizes in the studies we sampled ranged widely, from a minimum of five replicates to more than 4,000, with a median of 25. Although many of these studies had sample sizes as large as those suggested by Møller and Jennions (2002), many others had much smaller sample sizes and still had adequate statistical power. This is an important consideration because the allocation of limited resources to replication is critical when designing ecological studies.
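To show how the sample size needed for 80% power depends on effect size, the sketch below uses the standard Fisher z approximation for a two-sided test of a correlation (α = 0.05). This is not necessarily the procedure Møller and Jennions (2002) used in their retrospective power analysis. The inputs 0.025 and 0.0542 are the bounds of variance explained quoted above; 0.5 is included only as a rough contrast with the "roughly half" found here, although our η² values come from multi-factor models and are not strictly comparable to a single correlation.

```python
import math
from statistics import NormalDist


def n_for_correlation(r_squared: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size for a two-sided test of a correlation.

    Fisher z approximation:
        n = ((z_{1 - alpha/2} + z_{power}) / atanh(r)) ** 2 + 3
    where r = sqrt(r_squared). Rounded up to the next whole sample.
    """
    r = math.sqrt(r_squared)
    std_normal = NormalDist()  # mean 0, sd 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / math.atanh(r)) ** 2 + 3)


for r2 in (0.025, 0.0542, 0.5):
    print(f"r^2 = {r2}: n ~ {n_for_correlation(r2)}")
```

With these inputs the approximation gives about 312, 143 and 14 samples respectively: the required replication falls steeply as the proportion of variance explained rises.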

In conclusion, we feel that ecologists using statistical models are explaining much more of the variation in relationships between important variables in ecological studies than suggested by Møller and Jennions (2002), and that they are currently using adequate sample sizes. Our results may also suffer from some of the same limitations as meta-analyses, such as publication bias (Cooper and Hedges 1993) and combining studies across spatial and temporal scales (Gurevitch et al. 2001). Nevertheless, the explained variance in the individual studies we examined is vastly different from the 2.5%–5.42% reported by Møller and Jennions (2002). Our analysis, combined with that of Møller and Jennions (2002), suggests that the scope of inference for single-factor effects is small in scale and that extrapolating single-factor effects across vastly different systems may be difficult. This conclusion, however, does not imply that ecologists are inherently limited in their ability to quantify interactions occurring in ecological systems; we believe such a view would be potentially dangerous given the importance of ecological science in public policy and decision-making. Our conclusion also highlights the need for more mechanistic studies that do not rely on correlative analyses, which can be subject to spurious relationships, in conjunction with more advanced predictive modeling that clarifies those mechanisms and elucidates important ecological patterns and processes (Hilborn and Mangel 1997). While we feel meta-analyses are important syntheses, they should be confined to assessing the consistency of single-factor effects across systems or environmental conditions and should not be used to quantify the portion of variability explained (Gurevitch et al. 1992; Osenberg and Mittelbach 1989).
Additionally, the conflicting results of our study and that of Møller and Jennions (2002) illustrate how the complex nature of ecological systems makes it difficult to find emergent properties or fundamental ecological laws from independent studies (Brown 1999).

References

Arnqvist G, Wooster D (1995) Meta-analysis: synthesizing research findings in ecology and evolution. Trends Ecol Evol 10:236–240

Brett MT, Goldman CR (1996) A meta-analysis of the freshwater trophic cascade. Proc Natl Acad Sci USA 93:7723–7726

Brown JH (1999) Macroecology: progress and prospect. Oikos 87:3–14

Cooper HM, Hedges LV (1993) Handbook of research synthesis. Russell Sage Foundation, New York

Curtis PS, Wang X (1998) A meta-analysis of elevated CO2 effects on woody plant mass, form, and physiology. Oecologia 113:299–313

Fisher RA (1928) The general sampling distribution of the multiple correlation coefficient. Proc R Soc A 121:654–673

Gurevitch J, Morrow LL, Wallace A, Walsh JS (1992) A meta-analysis of competition in field experiments. Am Nat 140:539–572

Gurevitch J, Curtis PS, Jones MH (2001) Meta-analysis in ecology. Adv Ecol Res 32:199–247

Hilborn R, Mangel M (1997) The ecological detective, confronting models with data. Princeton University Press, Princeton, N.J.

Järvinen A (1991) A meta-analytic study of the effects of female age on laying-date and clutch-size in the Great Tit (Parus major) and the Pied Flycatcher (Ficedula hypoleuca). Ibis 133:62–67

Mangel M, Fiksen O, Giske J (2001) Theoretical and statistical models in natural resource management and research. In: Shenk TM, Franklin AB (eds) Modeling in natural resource management. Development, interpretation, and application. Island, Washington, D.C., pp 57–72

Maurer BA (1999) Untangling ecological complexity: the macroscopic perspective. University of Chicago Press, Chicago

Møller AP, Jennions MD (2002) How much variance can be explained by ecologists and evolutionary biologists? Oecologia 132:492–500

Osenberg CW, Mittelbach GG (1989) The effects of body size on the predator-prey interaction between pumpkinseed sunfish and gastropods. Ecol Monogr 59:405–432

Osenberg CW, Sarnelle O, Cooper SD, Holt RD (1999) Resolving ecological questions through meta-analysis: goals, metrics, and models. Ecology 80:1105–1117

Pearson K (1911) On a correction to be made to the correlation ratio η. Biometrika 8:254–256

Romme WH, Everham EH, Frelich LE, Moritz MA, Sparks RE (1998) Are large, infrequent disturbances qualitatively different from small, frequent disturbances? Ecosystems 1:524–534

Tabachnick BG, Fidell LS (1983) Using multivariate statistics. Harper and Row, New York

Thornhill R, Møller AP (1998) The relative importance of size and asymmetry in sexual selection. Behav Ecol 9:546–551

Xiong S, Nilsson C (1999) The effect of plant litter on vegetation: a meta-analysis. J Ecol 87:984–994

Acknowledgements

The authors thank Susan Durham for discussions on statistical methods and Martyn Caldwell, Marc Mangel and one anonymous referee for improving the manuscript. This work was funded by the National Science Foundation (DEB-9807097), United States Department of Agriculture CSRS/NRICG 98-35100-6107, and the Utah Agricultural Experiment Station.

Appendix

Data sources used for η² analyses

-

Aizen MA (2001) Flower sex ratio, pollinator abundance, and the seasonal pollination dynamics of a protandrous plant. Ecology 82:127–144 (PE)

-

Angilletta MJ (2001) Thermal and physiological constraints on energy assimilation in a widespread lizard (Sceloporus undulatus). Ecology 82:3044–3056 (EE)

-

Arnal C, Cote IM, Morand S (2001) Why clean and be cleaned? The importance of client ectoparasites and mucus in a marine cleaning symbiosis. Behav Ecol Sociobiol 51:1–7 (BE)

-

Ashton KG (2001) Body size variation among mainland populations of the western rattlesnake (Crotalus viridis). Evolution 55:2523–2533 (EV)

-

Bach CE (2001a) Long-term effects of insect herbivory on responses by Salix cordata to sand accretion. Ecology 82:397–409 (PE)

-

Bach CE (2001b) Long-term effects of insect herbivory and sand accretion on plant succession on sand dunes. Ecology 82:1401–1416 (CE)

-

Baldi R, Albon SD, Elston DA (2001) Guanacos and sheep: evidence for continuing competition in arid Patagonia. Oecologia 129:561–570 (PE)

-

Bannister P, Strong GL (2001) Carbon and nitrogen isotope ratios, nitrogen content and heterotrophy in New Zealand mistletoes. Oecologia 126:10–20 (EP)

-

Barata C, Baird DJ, Soares AMVM (2001) Phenotypic plasticity in Daphnia magna Straus: variable maturation instar as an adaptive response to predation pressure. Oecologia 129:220–227 (EV, PE)

-

Benkman CW, Holiman WC, Smith JW (2001) The influence of a competitor on the geographic mosaic of coevolution between crossbills and lodgepole pine. Evolution 55:282–294 (EV)

-

Berglund O, Larsson P, Ewald G, Okla L (2001) The effect of lake trophy on lipid content and PCB concentrations in planktonic food webs. Ecology 82:1078–1088 (CE)

-

Billick I, Wiernasz DC, Cole BJ (2001) Recruitment in the harvester ant, Pogonomyrmex occidentalis: effects of experimental removal. Oecologia 129:228–233 (PE)

-

Bird RB, Smith EA, Bird DW (2001) The hunting handicap: costly signaling in human foraging strategies. Behav Ecol Sociobiol 50:9–19 (BE)

-

Boone MD, Bridges CM, Rothermel BB (2001) Growth and development of larval green frogs (Rana clamitans) exposed to multiple doses of an insecticide. Oecologia 129:518–524 (PE)

-

Bosch M, Waser NM (2001) Experimental manipulation of plant density and its effect on pollination and reproduction of two confamilial montane herbs. Oecologia 126:76–83 (PE)

-

Bot ANM, Rehner SA, Boomsma JJ (2001) Partial incompatibility between ants and symbiotic fungi in two sympatric species of Acromyrmex leaf-cutting ants. Evolution 55:1980–1991 (EV)

-

Boulinier T, Nichols JD, Hines JE, Sauer JR, Flather CH, Pollock KH (2001) Forest fragmentation and bird community dynamics: inference at regional scales. Ecology 82:1159–1169 (CE)

-

Brown BJ, Mitchell RJ (2001) Competition for pollination: effects of pollen of an invasive plant on seed set of a native congener. Oecologia 129:43–49 (PE)

-

Budden AE, Wright J (2001) Falling on deaf ears: the adaptive significance of begging in the absence of a parent. Behav Ecol Sociobiol 49:474–481 (BE)

-

Burger J, Gochfeld M (2001) Smooth-billed ani (Crotophaga ani) predation on butterflies in Mato Grosso, Brazil: risk decreases with increased group size. Behav Ecol Sociobiol 49:482–492 (BE)

-

Camill P, Lynch JA, Clark JS, Adams JB, Jordan B (2001) Changes in biomass, aboveground net primary production, and peat accumulation following permafrost thaw in the boreal peatlands of Manitoba, Canada. Ecosystems 4:461–478 (EE)

-

Campbell DR, Waser NM (2001) Genotype-by-environment interaction and the fitness of plant hybrids in the wild. Evolution 55:669–676 (EV)

-

Christe P, de Lope F, González G, Saino N, Møller AP (2001) The influence of environmental conditions on immune responses, morphology and recapture probability of nestling house martins (Delichon urbica). Oecologia 126:333–338 (EP)

-

Colfer RG, Rosenheim JA (2001) Predation on immature parasitoids and its impact on aphid suppression. Oecologia 126:292–304 (CE)

-

Coltman DW, Pilkington J, Kruuk LEB, Wilson K, Pemberton JM (2001) Positive genetic correlation between parasite resistance and body size in a free-living ungulate population. Evolution 55:2116–2125 (EV)

-

Cook WM, Holt RD, Yao J (2001) Spatial variability in oviposition damage by periodical cicadas in a fragmented landscape. Oecologia 127:51–61 (PE)

-

Craig JK, Foote CJ (2001) Countergradient variation and secondary sexual color: phenotypic convergence promotes genetic divergence in carotenoid use between sympatric anadromous and nonanadromous morphs of sockeye salmon (Oncorhynchus nerka). Evolution 55:380–391 (EV)

-

Crocker DE, Williams JD, Costa DP, Le Boeuf BJ (2001) Maternal traits and reproductive effort in northern elephant seals. Ecology 82:3541–3555 (EV, PE)

-

Cruz A, Moreno JM (2001) Seasonal course of total non-structural carbohydrates in the lignotuberous Mediterranean-type shrub Erica australis. Oecologia 128:343–350 (EP)

-

Dahlgaard J, Hasson E, Loeschcke V (2001) Behavioral differentiation in oviposition activity in Drosophila buzzatii from highland and lowland populations in Argentina: plasticity or thermal adaptation? Evolution 55:738–747 (EV)

-

Dahlhoff EP, Buckley BA, Menge BA (2001) Physiology of the rocky intertidal predator Nucella ostrina along an environmental stress gradient. Ecology 82:2816–2829 (EP)

-

Dedryver CA, Hullé M, Le Gallic JF, Caillaud MC, Simon JC (2001) Coexistence in space and time of sexual and asexual populations of the cereal aphid Sitobion avenae. Oecologia 128:379–388 (PE)

-

DeHeer CJ, Backus VL (2001) Sociogenetic responses to ecological variation in the ant Myrmica punctiventris are context dependent. Behav Ecol Sociobiol 49:375–386 (BE)

-

De Valpine P, Harte J (2001) Plant responses to experimental warming in a montane meadow. Ecology 82:637–648 (CE)

-

Dierschke V, Delingat J (2001) Stopover behavior and departure decision of northern wheatears, Oenanthe oenanthe, facing different onward non-stop flight distances. Behav Ecol Sociobiol 50:535–545 (BE)

-

Di Giusto B, Anstett MC, Dounias E, McKey DB (2001) Variation in the effectiveness of biotic defence: the case of an opportunistic ant-plant protection mutualism. Oecologia 129:367–375 (CE)

-

Duffy JE, Macdonald KS, Rhode JM, Parker JD (2001) Grazer diversity, functional redundancy, and productivity in seagrass beds: an experimental test. Ecology 82:2417–2434 (CE)

-

Dukes JS (2001) Biodiversity and invasibility in grassland microcosms. Oecologia 126:563–568 (EE)

-

Duncan FD, Dickman CR (2001) Respiratory patterns and metabolism in tenebrionid and carabid beetles from the Simpson Desert, Australia. Oecologia 129:509–517 (EP)

-

Durand LZ, Goldstein G (2001) Photosynthesis, photoinhibition, and nitrogen use efficiency in native and invasive tree ferns in Hawaii. Oecologia 126:345–354 (EP)

-

Ekblad A, Högberg P (2001) Natural abundance of 13C in CO2 respired from forest soils reveals speed of link between tree photosynthesis and root respiration. Oecologia 127:305–308 (EE, EP)

-

Eklov P, VanKooten T (2001) Facilitation among piscivorous predators: effects of prey habitat use. Ecology 82:2486–2494 (CE)

-

Epstein HE, Burke IC, Mosier AR (2001) Plant effects on nitrogen retention in shortgrass steppe 2 years after 15N addition. Oecologia 128:422–430 (EE)

-

Erickson H, Keller M, Davidson EA (2001) Nitrogen oxide fluxes and nitrogen cycling during postagricultural succession and forest fertilization in the humid tropics. Ecosystems 4:67–84 (EE)

-

Euler F von, Svensson S (2001) Taxonomic distinctness and species richness as measures of functional structure in bird assemblages. Oecologia 129:304–311 (CE)

-

Fargallo JA, De Leon A, Potti J (2001) Nest-maintenance effort and health status in chinstrap penguins, Pygoscelis antarctica: the functional significance of stone-provisioning behaviour. Behav Ecol Sociobiol 50:141–150 (BE)

-

Fenster CB, Dudash MR (2001) Spatiotemporal variation in the role of hummingbirds as pollinators of Silene virginica. Ecology 82:844–851 (PE)

-

Finlay JC (2001) Stable-carbon-isotope ratios of river biota: implications for energy flow in lotic food webs. Ecology 82:1052–1064 (CE)

-

Forbes S, Glassey B, Thornton S, Earle L (2001) The secondary adjustment of clutch size in red-winged blackbirds (Agelaius phoeniceus). Behav Ecol Sociobiol 50:37–44 (BE)

-

Forsman JT, Monkkonen M, Hukkanen M (2001) Effects of predation on community assembly and spatial dispersion of breeding forest birds. Ecology 82:232–244 (CE)

-

Fox CW, Czesak ME, Fox RW (2001) Consequences of plant resistance for herbivore survivorship, growth, and selection on egg size. Ecology 82:2790–2804 (EV)

-

Fraser AM, Axén AH, Pierce NE (2001) Assessing the quality of different ant species as partners of a myrmecophilous butterfly. Oecologia 129:452–460 (CE)

-

Freeman-Gallant CR, O'Connor KD, Breuer ME (2001) Sexual selection and the geography of Plasmodium infection in Savannah sparrows (Passerculus sandwichensis). Oecologia 127:517–521 (PE, EV)

-

Fritz RS, Hochwender CG, Lewkiewicz DA, Bothwell S, Orians CM (2001) Seedling herbivory by slugs in a willow hybrid system: developmental changes in damage, chemical defense, and plant performance. Oecologia 129:87–97 (CE)

-

Galloway LF (2001) Parental environmental effects on life history in the herbaceous plant Campanula americana. Ecology 82:2781–2789 (EV)

-

Gido KB, Matthews WJ (2001) Ecosystem effects of water column minnows in experimental streams. Oecologia 126:247–253 (EE)

-

Gillespie TR, Chapman CA (2001) Determinants of group size in the red colobus monkey (Procolobus badius): an evaluation of the generality of the ecological-constraints model. Behav Ecol Sociobiol 50:329–338 (BE)

-

Gliwicz ZM, Slusarczyk A, Slusarczyk M (2001) Life history synchronization in a long-lifespan single-cohort Daphnia population in a fishless alpine lake. Oecologia 128:368–378 (PE)

-

Graham JH, McArthur ED, Freeman DC (2001) Narrow hybrid zone between two subspecies of big sagebrush (Artemisia tridentata: Asteraceae). XII. Galls on sagebrush in a reciprocal transplant garden. Oecologia 126:239–246 (CE)

-

Granberg G, Sundh I, Svensson BH, Nilsson M (2001) Effects of temperature, and nitrogen and sulfur deposition, on methane emission from a boreal mire. Ecology 82:1982–1998 (EE)

-

Green M (2001) Is wind drift in migrating barnacle and brent geese, Branta leucopsis and Branta bernicla, adaptive or non-adaptive? Behav Ecol Sociobiol 50:45–54 (BE)

-

Grim T, Honza M (2001) Does supernormal stimulus influence parental behaviour of the cuckoo's host? Behav Ecol Sociobiol 49:322–329 (BE)

-

Gross EM, Johnson RL, Hairston Jr NG (2001) Experimental evidence for changes in submersed macrophyte species composition caused by the herbivore Acentria ephemerella (Lepidoptera). Oecologia 127:105–114 (CE)

-

Gunness MA, Clark RG, Weatherhead PJ (2001) Counterintuitive parental investment by female dabbling ducks in response to variable habitat quality. Ecology 82:1151–1158 (PE)

-

Harding EK, Stevens E (2001) Using stable isotopes to assess seasonal patterns of avian predation across a terrestrial-marine landscape. Oecologia 129:436–444 (CE)

-

Hassall M, Riddington R, Helden A (2001) Foraging behaviour of brent geese, Branta b. bernicla, on grasslands: effects of sward length and nitrogen content. Oecologia 127:97–104 (BE)

-

Hättenschwiler S (2001) Tree seedling growth in natural deep shade: functional traits related to interspecific variation in response to elevated CO2. Oecologia 129:31–42 (EP)

-

Hauxwell J, Cebrian J, Furlong C, Valiela I (2001) Macroalgal canopies contribute to eelgrass (Zostera marina) decline in temperate estuarine ecosystems. Ecology 82:1007–1022 (CE)

-

He JX, Stewart DJ (2001) Age and size at first reproduction of fishes: predictive models based only on growth trajectories. Ecology 82:784–791 (EP)

-

Hedrick P, Fredrickson R, Ellegren H (2001) Evaluation of δ2, a microsatellite measure of inbreeding and outbreeding, in wolves with a known pedigree. Evolution 55:1256–1260 (EV)

-

Heil M, Hilpert A, Fiala B, Linsenmair KE (2001) Nutrient availability and indirect (biotic) defence in a Malaysian ant-plant. Oecologia 126:404–408 (EP)

-

Hikosaka K, Hirose T (2001) Nitrogen uptake and use by competing individuals in a Xanthium canadense stand. Oecologia 126:174–181 (EP)

-

Hill WR, Mulholland PJ, Marzolf ER (2001) Stream ecosystem responses to forest leaf emergence in spring. Ecology 82:2306–2319 (EE)

-

Hoffmann AA, Hallas R, Sinclair C, Mitrovski P (2001) Levels of variation in stress resistance in Drosophila among strains, local populations, and geographic regions: patterns for desiccation, starvation, cold resistance, and associated traits. Evolution 55:1621–1630 (EV)

-

Hoffmann AA, Hallas R, Sinclair C, Partridge L (2001) Rapid loss of stress resistance in Drosophila melanogaster under adaptation to laboratory culture. Evolution 55:436–438 (EV)

-

Hosken DJ, Jones KE, Chipperfield K, Dixson A (2001) Is the bat os penis sexually selected? Behav Ecol Sociobiol 50:450–460 (BE)

-

Hovel KA, Lipcius RN (2001) Habitat fragmentation in a seagrass landscape: Patch size and complexity control blue crab survival. Ecology 82:1814–1829 (PE)

-

Howard JJ (2001) Costs of trail construction and maintenance in the leaf-cutting ant Atta columbica. Behav Ecol Sociobiol 49:348–356 (BE)

-

Howard TG, Goldberg DE (2001) Competitive response hierarchies for germination, growth, and survival and their influence on abundance. Ecology 82:979–990 (CE)

-

Hu FS, Finney BP, Brubaker LB (2001) Effects of holocene Alnus expansion on aquatic productivity, nitrogen cycling, and soil development in southwestern Alaska. Ecosystems 4:358–368 (EE)

-

Jackson CR, Churchill PF, Roden EE (2001) Successional changes in bacterial assemblage structure during epilithic biofilm development. Ecology 82:555–566 (CE)

-

Jenkins SG, Partridge ST, Stephenson TR, Farley SD, Robbins CT (2001) Nitrogen and carbon isotope fractionation between mothers, neonates, and nursing offspring. Oecologia 129:336–341 (EP)

-

Jones M, Mandelik Y, Dayan T (2001) Coexistence of temporally partitioned spiny mice: Roles of habitat structure and foraging behavior. Ecology 82:2164–2176 (BE, CE)

-

Keeling CI, Nelson DE (2001) Changes in the intramolecular stable carbon isotope ratios with age of the European cave bear (Ursus spelaeus). Oecologia 127:495–500 (EP, PE)

-

Kim J, Thorp RW (2001) Maternal investment and size-number trade-off in a bee, Megachile apicalis, in seasonal environments. Oecologia 126:451–456 (BE, PE)

-

Klukowski M, Nelson CE (2001) Ectoparasite loads in free-ranging northern fence lizards, Sceloporus undulatus hyacinthinus: effects of testosterone and sex. Behav Ecol Sociobiol 49:289–295 (BE)

-

Knapp AK, Briggs JM, Koelliker JK (2001) Frequency and extent of water limitation to primary production in a mesic temperate grassland. Ecosystems 4:19–28 (EE)

-

Kramer MG, Hansen AJ, Taper ML, Kissinger EJ (2001) Abiotic controls on long-term windthrow disturbance and temperate rain forest dynamics in southeast Alaska. Ecology 82:2749–2768 (CE)

-

Kramer MG, Templeton AR (2001) Life-history changes that accompany the transition from sexual to parthenogenetic reproduction in Drosophila mercatorum. Evolution 55:748–761 (EV)

-

Langellotto GA, Denno RF (2001) Benefits of dispersal in patchy environments: Mate location by males of a wing-dimorphic insect. Ecology 82:1870–1878 (BE)

-

Lara C, Ornelas JF (2001) Preferential nectar robbing of flowers with long corollas: experimental studies of two hummingbird species visiting three plant species. Oecologia 128:263–273 (CE)

-

Leiss KA, Müller-Schärer H (2001) Performance of reciprocally sown populations of Senecio vulgaris from ruderal and agricultural habitats. Oecologia 128:210–216 (PE)

-

Lewis DB (2001) Trade-offs between growth and survival: responses of freshwater snails to predacious crayfish. Ecology 82:758–765 (BE, CE)

-

Lill JT, Marquis RJ (2001) The effects of leaf quality on herbivore performance and attack from natural enemies. Oecologia 126:418–428 (CE)

-

Lord LA, Lee TD (2001) Interactions of local and regional processes: species richness in tussock sedge communities. Ecology 82:313–318 (CE)

-

MacNally R, Brown GW (2001) Reptiles and habitat fragmentation in the box-ironbark forests of central Victoria, Australia: predictions, compositional change and faunal nestedness. Oecologia 128:116–125 (CE)

-

Manson RH, Ostfeld RS, Canham CD (2001) Long-term effects of rodent herbivores on tree invasion dynamics along forest-field edges. Ecology 82:3320–3329 (CE)

-

Maron JL, Harrison S, Greaves M (2001) Origin of an insect outbreak: escape in space or time from natural enemies? Oecologia 126:595–602 (CE)

-

Marr DL, Brock MT, Pellmyr O (2001) Coexistence of mutualists and antagonists: exploring the impact of cheaters on the yucca—yucca moth mutualism. Oecologia 128:454–463 (CE)

-

Marsh DM (2001) Behavioral and demographic responses of túngara frogs to variation in pond density. Ecology 82:1283–1292 (BE, PE)

-

Martin PR, Martin TE (2001) Behavioral interactions between coexisting species: Song playback experiments with wood warblers. Ecology 82:207–218 (BE)

-

Matthews JW, Clay K (2001) Influence of fungal endophyte infection on plant-soil feedback and community interactions. Ecology 82:500–509 (CE)

-

Melcher PJ, Goldstein G, Meinzer FC, Yount DE, Jones TJ, Holbrook NM, Huang CX (2001) Water relations of coastal and estuarine Rhizophora mangle: xylem pressure potential and dynamics of embolism formation and repair. Oecologia 126:182–192 (EP)

-

Michimae H, Wakahara M (2001) Factors which affect the occurrence of cannibalism and the broad-headed "cannibal" morph in larvae of the salamander Hynobius retardatus. Behav Ecol Sociobiol 50:339–345 (BE)

-

Milchunas DG, Lauenroth WK (2001) Belowground primary production by carbon isotope decay and long-term root biomass dynamics. Ecosystems 4:139–150 (EE)

-

Minchinton TE, Dalby-Ball M (2001) Frugivory by insects on mangrove propagules: effects on the early life history of Avicennia marina. Oecologia 129:243–252 (CE)

-

Mulder CPH, Keall SN (2001) Burrowing seabirds and reptiles: impacts on seeds, seedlings and soils in an island forest in New Zealand. Oecologia 127:350–360 (CE)

-

Munday PL (2001) Fitness consequences of habitat use and competition among coral-dwelling fishes. Oecologia 128:585–593 (EV, CE)

-

Mysterud A, Pérez-Barbería FJ, Gordon IJ (2001) The effect of season, sex and feeding style on home range area versus body mass scaling in temperate ruminants. Oecologia 127:30–39 (EP)

-

Negovetic S, Jokela J (2001) Life-history variation, phenotypic plasticity, and subpopulation structure in a freshwater snail. Ecology 82:2805–2815 (EV)

-

Negovetic S, Anholt BR, Semlitsch RD, Reyer HU (2001) Specific responses of sexual and hybridogenetic European waterfrog tadpoles to temperature. Ecology 82:766–774 (EP)

-

Niinemets Ü (2001) Global-scale climatic controls of leaf dry mass per area, density, and thickness in trees and shrubs. Ecology 82:453–469 (EP)

-

Nilsson J, Råberg L (2001) The resting metabolic cost of egg laying and nestling feeding in great tits. Oecologia 128:187–192 (EP)

-

Nordin A, Högberg P, Näsholm T (2001) Soil nitrogen form and plant nitrogen uptake along a boreal forest productivity gradient. Oecologia 129:125–132 (EE)

-

Oren R, Pataki DE (2001) Transpiration in response to variation in microclimate and soil moisture in southeastern deciduous forests. Oecologia 127:549–559 (EE)

-

Oren R, Sperry JS, Ewers BE, Pataki DE, Phillips N, Megonigal JP (2001) Sensitivity of mean canopy stomatal conductance to vapor pressure deficit in a flooded Taxodium distichum L. forest: hydraulic and non-hydraulic effects. Oecologia 126:21–29 (EP)

-

Oren U, Benayahu Y, Lubinevsky H, Loya Y (2001) Colony integration during regeneration in the stony coral Favia favus. Ecology 82:802–813 (CE)

-

Parker BR, Schindler DW, Donald DB, Anderson RS (2001) The effects of stocking and removal of a nonnative salmonid on the plankton of an alpine lake. Ecosystems 4:334–345 (EE)

-

Parris MJ (2001) High larval performance of leopard frog hybrids: effects of environment-dependent selection. Ecology 82:3001–3009 (EV)

-

Peinetti HR, Menezes RSC, Coughenour MB (2001) Changes induced by elk browsing in the aboveground biomass production and distribution of willow (Salix monticola Bebb): their relationships with plant water, carbon, and nitrogen dynamics. Oecologia 127:334–342 (EP)

-

Pennings SC, Moore DJ (2001) Zonation of shrubs in western Atlantic salt marshes. Oecologia 126:587–594 (CE)

-

Plath K, Boersma M (2001) Mineral limitation of zooplankton: stoichiometric constraints and optimal foraging. Ecology 82:1260–1269 (PE)

-

Polak M, Starmer WT (2001) The quantitative genetics of fluctuating asymmetry. Evolution 55:498–511 (EV)

-

Pryke SR, Andersson S, Lawes MJ (2001) Sexual selection of multiple handicaps in the red-collared widowbird: female choice of tail length but not carotenoid display. Evolution 55:1452–1463 (EV)

-

Puettmann KJ, Saunders MR (2001) Patterns of growth compensation in eastern white pine (Pinus strobus L.): the influence of herbivory intensity and competitive environments. Oecologia 129:376–384 (CE)

-

Reich PB, Bakken P, Carlson D, Frelich LE, Friedman SK, Grigal DF (2001) Influence of logging, fire, and forest type on biodiversity and productivity in southern boreal forests. Ecology 82:2731–2748 (EE)

-

Rendon MA, Garrido A, Ramirez JM, Rendon-Martos M, Amat JA (2001) Despotic establishment of breeding colonies of greater flamingos, Phoenicopterus ruber, in southern Spain. Behav Ecol Sociobiol 50:55–60 (BE)

-

Roda A, Nyrop J, English-Loeb G, Dicke M (2001) Leaf pubescence and two-spotted spider mite webbing influence phytoseiid behavior and population density. Oecologia 129:551–560 (PE)

-

Rode KD, Robbins CT, Shipley LA (2001) Constraints on herbivory by grizzly bears. Oecologia 128:62–71 (CE)

-

Roff DA, DeRose MA (2001) The evolution of trade-offs: effects of inbreeding on fecundity relationships in the cricket Gryllus firmus. Evolution 55:111–121 (EV)

-

Rogers DW, Chase R (2001) Dart receipt promotes sperm storage in the garden snail Helix aspersa. Behav Ecol Sociobiol 50:122–127 (BE)

-

Rowcliffe JM, Watkinson AR, Sutherland WJ, Vickery JA (2001) The depletion of algal beds by geese: a predictive model and test. Oecologia 127:361–371 (CE)

-

Sala A, Carey EV, Callaway RM (2001) Dwarf mistletoe affects whole-tree water relations of Douglas fir and western larch primarily through changes in leaf to sapwood ratios. Oecologia 126:42–52 (EP)

-

Schindler DE, Knapp RA, Leavitt PR (2001) Alteration of nutrient cycles and algal production resulting from fish introductions into mountain lakes. Ecosystems 4:308–321 (EE)

-

Schmalhofer VR (2001) Tritrophic interactions in a pollination system: impacts of species composition and size of flower patches on the hunting success of a flower-dwelling spider. Oecologia 129:292–303 (CE)

-

Schultz CB, Crone EE (2001) Edge-mediated dispersal behavior in a prairie butterfly. Ecology 82:1879–1892 (PE)

-

Schuur EAG (2001) The effect of water on decomposition dynamics in mesic to wet Hawaiian montane forests. Ecosystems 4:259–273 (EE)

-

Schuur EAG, Chadwick OA, Matson PA (2001) Carbon cycling and soil carbon storage in mesic to wet Hawaiian montane forests. Ecology 82:3182–3196 (EE)

-

Searcy SP, Sponaugle S (2001) Selective mortality during the larval-juvenile transition in two coral reef fishes. Ecology 82:2452–2470 (PE)

-

Seitz RD, Lipcius RN, Hines AH, Eggleston DB (2001) Density-dependent predation, habitat variation, and the persistence of marine bivalve prey. Ecology 82:2435–2451 (CE)

-

Shaver GR, Bret-Harte SM, Jones MH, Johnstone J, Gough L, Laundre J, Chapin FS (2001) Species composition interacts with fertilizer to control long-term change in tundra productivity. Ecology 82:3163–3181 (CE)

-

Shima JS (2001) Recruitment of a coral reef fish: roles of settlement, habitat, and postsettlement losses. Ecology 82:2190–2199 (PE)

-

Silliman BR, Zieman JC (2001) Top-down control of Spartina alterniflora production by periwinkle grazing in a Virginia salt marsh. Ecology 82:2830–2845 (CE)

-

Singer MS, Stireman JO III (2001) How foraging tactics determine host-plant use by a polyphagous caterpillar. Oecologia 129:98–105 (BE)

-

Slocum MG (2001) How tree species differ as recruitment foci in a tropical pasture. Ecology 82:2547–2559 (CE)

-

Slusarczyk M (2001) Food threshold for diapause in Daphnia under the threat of fish predation. Ecology 82:1089–1096 (CE)

-

Smith F (2001) Historical regulation of local species richness across a geographic region. Ecology 82:792–801 (EE)

-

Sparks JP, Campbell GS, Black RA (2001) Water content, hydraulic conductivity, and ice formation in winter stems of Pinus contorta: a TDR case study. Oecologia 127:468–475 (EP)

-

Steenbeek R, van Schaik CP (2001) Competition and group size in Thomas's langurs (Presbytis thomasi): the folivore paradox revisited. Behav Ecol Sociobiol 49:100–110 (BE)

-

Steiner CF (2001) The effects of prey heterogeneity and consumer identity on the limitation of trophic-level biomass. Ecology 82:2495–2506 (CE)

-

Stepien CA, Rosenblatt RH, Bargmeyer BA (2001) Phylogeography of the spotted sand bass Paralabrax maculatofasciatus: divergence of Gulf of California and Pacific coast populations. Evolution 55:1852–1862 (EV)

-

Strohm E, Laurien-Kehnen C, Bordon S (2001) Escape from parasitism: spatial and temporal strategies of a sphecid wasp against a specialised cuckoo wasp. Oecologia 129:50–57 (PE)

-

Sultan SE (2001) Phenotypic plasticity for fitness components in Polygonum species of contrasting ecological breadth. Ecology 82:328–343 (EV)

-

Svensson E, Sinervo B, Comendant T (2001) Condition, genotype-by-environment interaction, and correlational selection in lizard life-history morphs. Evolution 55:2053–2069 (EV)

-

Syms C, Jones GP (2001) Soft corals exert no direct effects on coral reef fish assemblages. Oecologia 127:560–571 (CE)

-

Taylor GM (2001) The evolution of armament strength: evidence for a constraint on the biting performance of claws of durophagous decapods. Evolution 55:550–560 (EV)

-

Taylor RC, Trexler JC, Loftus WF (2001) Separating the effects of intra- and interspecific age-structured interactions in an experimental fish assemblage. Oecologia 127:143–152 (CE)

-

Toonen RJ, Pawlik JR (2001) Foundations of gregariousness: a dispersal polymorphism among the planktonic larvae of a marine invertebrate. Evolution 55:2439–2454 (EV)

-

Totland Ø (2001) Environment-dependent pollen limitation and selection on floral traits in an alpine species. Ecology 82:2233–2244 (EV)

-

Tsutsui ND, Case TJ (2001) Population genetics and colony structure of the Argentine ant (Linepithema humile) in its native and introduced ranges. Evolution 55:976–985 (EV)

-

Turgeon J, Bernatchez L (2001) Clinal variation at microsatellite loci reveals historical secondary intergradation between glacial races of Coregonus artedi (Teleostei: Coregoninae). Evolution 55:2274–2286 (EV)

-

Utelli AB, Roy BA (2001) Causes and consequences of floral damage in Aconitum lycoctonum at high and low elevations in Switzerland. Oecologia 127:266–273 (PE)

-

Vadeboncoeur Y, Lodge DM, Carpenter SR (2001) Whole-lake fertilization effects on distribution of primary production between benthic and pelagic habitats. Ecology 82:1065–1077 (CE, EE)

-

Valenzuela N (2001) Constant, shift, and natural temperature effects on sex determination in Podocnemis expansa. Ecology 82:3010–3024 (EP)

-

Van Kleunen M, Fischer M (2001) Adaptive evolution of plastic foraging responses in a clonal plant. Ecology 82:3309–3319 (EV)

-

Voigt CC, von Helversen O, Michener R, Kunz TH (2001) The economics of harem maintenance in the sac-winged bat, Saccopteryx bilineata (Emballonuridae). Behav Ecol Sociobiol 50:31–36 (BE)

-

Wacker A, von Elert E (2001) Polyunsaturated fatty acids: Evidence for non-substitutable biochemical resources in Daphnia galeata. Ecology 82:2507–2520 (EP)

-

Wagner WE Jr, Kelley RJ, Tucker KR, Harper CJ (2001) Females receive a life-span benefit from male ejaculates in a field cricket. Evolution 55:994–1001 (EV)

-

Walls SC, Williams MG (2001) The effect of community composition on persistence of prey with their predators in an assemblage of pond-breeding amphibians. Oecologia 128:134–141 (CE)

-

Warren CR, McGrath JF, Adams MA (2001) Water availability and carbon isotope discrimination in conifers. Oecologia 127:476–486 (EP)

-

Westover KM, Bever JD (2001) Mechanisms of plant species coexistence: Roles of rhizosphere bacteria and root fungal pathogens. Ecology 82:3285–3294 (CE)

-

Wiens JJ, Slingluff JL (2001) How lizards turn into snakes: a phylogenetic analysis of body-form evolution in anguid lizards. Evolution 55:2303–2318 (EV)

-

Wiernasz DC, Sater AK, Abell AJ, Cole BJ (2001) Male size, sperm transfer, and colony fitness in the western harvester ant, Pogonomyrmex occidentalis. Evolution 55:324–329 (EV)

-

Williams JW, Shuman BN, Webb T (2001) Dissimilarity analyses of late-Quaternary vegetation and climate in eastern North America. Ecology 82:3346–3362 (EE)

-

Wimberly MC, Spies TA (2001) Influences of environment and disturbance on forest patterns in coastal Oregon watersheds. Ecology 82:1443–1459 (CE)

-

Wolfenbarger LL, Wilkinson GS (2001) Sex-linked expression of a sexually selected trait in the stalk-eyed fly, Cyrtodiopsis dalmanni. Evolution 55:103–110 (EV)

-

Worm B, Lotze HK, Sommer U (2001) Algal propagule banks modify competition, consumer and resource control on Baltic rocky shores. Oecologia 128:281–293 (CE)

-

Xenopoulos MA, Schindler DW (2001) The environmental control of near-surface thermoclines in boreal lakes. Ecosystems 4:699–707 (EE)

-

Yoshida T, Gurung TB, Kagami M, Urabe J (2001) Contrasting effects of a cladoceran (Daphnia galeata) and a calanoid copepod (Eodiaptomus japonicus) on algal and microbial plankton in a Japanese lake, Lake Biwa. Oecologia 129:602–610 (EE)

-

Zhao FJ, Spiro B, McGrath SP (2001) Trends in ¹³C/¹²C ratios and C isotope discrimination of wheat since 1845. Oecologia 128:336–342 (EP)

-

Zotz G, Thomas V, Hartung W (2001) Ecophysiological consequences of differences in plant size: abscisic acid relationships in the epiphytic orchid Dimerandra emarginata. Oecologia 129:179–185 (EP)

Cite this article

Peek, M.S., Leffler, A.J., Flint, S.D. et al. How much variance is explained by ecologists? Additional perspectives. Oecologia 137, 161–170 (2003). https://doi.org/10.1007/s00442-003-1328-y

DOI: https://doi.org/10.1007/s00442-003-1328-y