Abstract

The Fredrickson-Andersen 2-spin facilitated model on \({{\mathbb {Z}}} ^d\) (FA-2f) is a paradigmatic interacting particle system with kinetic constraints (KCM) featuring dynamical facilitation, an important mechanism in condensed matter physics. In FA-2f a site may change its state only if at least two of its nearest neighbours are empty. Although the process is reversible w.r.t. a product Bernoulli measure, it is not attractive and features degenerate jump rates and anomalous divergence of characteristic time scales as the density q of empty sites tends to 0. A natural random variable encoding the above features is \(\tau _0\), the first time at which the origin becomes empty for the stationary process. Our main result is the sharp threshold

$$\tau _0=\exp \Big (\frac{d\cdot \lambda (d,2)+o(1)}{q^{1/(d-1)}}\Big )\quad \text {w.h.p.},$$

with \(\lambda (d,2)\) the sharp threshold constant for 2-neighbour bootstrap percolation on \({{\mathbb {Z}}} ^d\), the monotone deterministic automaton counterpart of FA-2f. This is the first sharp result for a critical KCM and it compares with Holroyd’s 2003 result on bootstrap percolation and its subsequent improvements. It also settles various controversies accumulated in the physics literature over the last four decades. Furthermore, our novel techniques enable completing the recent ambitious program on the universality phenomenon for critical KCM and establishing sharp thresholds for other two-dimensional KCM.


1 Introduction

Fredrickson–Andersen j-spin facilitated models (FA-jf) are a class of interacting particle systems that were introduced by physicists in the 1980s [14] to model the liquid/glass transition, a major and still largely open problem in condensed matter physics [2, 7]. Later on, several models with different update rules were introduced, and this larger class has been dubbed kinetically constrained models (KCM) (see e.g. [16] and references therein). The key feature of KCM is that an update at a given vertex x can occur only if a suitable neighbourhood of x contains only holes, the facilitating vertices. The presence of this dynamical constraint gives rise to a mechanism dubbed dynamical facilitation [39] in condensed matter physics: motion on smaller scales begets motion on larger scales. Extensive numerical simulations indicate that KCM can indeed display a remarkable glassy behaviour, featuring in particular an anomalous divergence of characteristic time scales. A good representative of a random variable whose law encodes this behaviour is \(\tau _0\), the first time the origin becomes a hole (or infected, in the jargon of the sequel). In the last forty years physicists have put forward several different conjectures on the scaling of \(\tau _0\) for FA-jf models as the equilibrium density of the holes goes to zero. However, to date a clear-cut answer on the form of this scaling has proved elusive, due to the very slow dynamics and the large finite-size effects intrinsic to glassy dynamics.

From the mathematical point of view, the study of FA-jf and KCM in general poses very challenging problems. This is largely due to the fact that these models do not feature an attractive dynamics (in the sense of [30, Chapter III]), and therefore many of the powerful tools developed to study attractive stochastic spin dynamics, e.g. monotone coupling or censoring, cannot be used. A central issue has therefore been to develop novel mathematical tools to determine the long-time behaviour of the stationary process and, more specifically, to find the scaling of the associated infection time of the origin, \(\tau _0\) in the sequel, as the density q of the empty (facilitating) sites shrinks to zero.

With this motivation, an ambitious program was recently initiated in [33] to determine as accurately as possible the divergence of the infection time for the stationary process, as \(q\rightarrow 0\) for the FA-jf models in any dimension and for general KCM in two dimensions. This program mirrors in some aspects the analogous program for general \({\mathcal {U}}\)-bootstrap percolation cellular automata (\({\mathcal {U}}\)-BP) launched by [10] and carried out in [4, 9] and for j-neighbour bootstrap percolation [5, 18, 27, 28]. Indeed \({\mathcal {U}}\)-BP models and j-neighbour bootstrap percolation can be viewed as the monotone deterministic counterparts of generic KCM and FA-jf models respectively. Despite the above analogy, the lack of monotonicity for KCM induces a much more complex behaviour and richer universality classes than BP [22,23,24,25, 31, 32].

In spite of several important advances [12, 24, 25, 31,32,33], sharp estimates of the divergence of \(\tau _0\) for stationary KCM remained a major open problem. Solving it requires discovering the optimal infection/healing mechanism to reach the origin and crafting the mathematical tools to turn knowledge of this mechanism into tight upper and lower bounds for \(\tau _0\) for the stationary process. In this paper we solve this problem for the first time (see Sect. 1.5 for an account of our most prominent innovations) by establishing the sharp scaling for FA-2f models in any dimension (Theorem 1.3). In doing so, we also settle various unresolved controversies in the physics literature (see Sect. 1.4 for a detailed account).

Our novel approach not only leads to deeper results, but also extends in breadth. Indeed, it opens the way for accomplishing the final step [22] to complete the program of [33] for establishing KCM universality.

1.1 Bootstrap percolation background

Let us start by recalling some background on j-neighbour bootstrap percolation. Let \(\varOmega =\{0,1\}^{{{\mathbb {Z}}} ^d}\) and call a site \(x\in {{\mathbb {Z}}} ^d\) infected (or empty) for \(\omega \in \varOmega \) if \(\omega _x=0\) and healthy (or filled) otherwise. For fixed \(0<q<1\), we denote by \(\mu _q\) the product Bernoulli probability measure with parameter q under which each site is infected with probability q. When confusion does not arise, we write \(\mu =\mu _q\). Given two integers \(1\leqslant j\leqslant d\), the j-neighbour BP model (j-BP for short) on the d-dimensional lattice \({{\mathbb {Z}}} ^d\) is the monotone cellular automaton on \(\varOmega \) evolving as follows. Let \(A_0\subset {{\mathbb {Z}}} ^d\) be the set of initially infected sites distributed according to \(\mu \). Then for any integer time \(t\geqslant 0\) we recursively define

$$A_{t+1}=A_t\cup \big \{x\in {{\mathbb {Z}}} ^d:\ |N_x\cap A_t|\geqslant j\big \},$$

where \(N_x\) denotes the set of neighbours of x in the usual graph structure of \({{\mathbb {Z}}} ^d\). In other words, a site becomes infected forever as soon as its constraint becomes satisfied, namely as soon as it has at least j already infected neighbours.
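The recursion can be run directly on a small example. The following is a minimal sketch only. It assumes \(d=2\) and a finite box \(\{0,\dots ,n-1\}^2\) instead of \({{\mathbb {Z}}} ^d\), so that the loop terminates, and sites outside the box count as healthy. The names `neighbours` and `bp_infection_times` are hypothetical helpers introduced here. The function returns, for each eventually infected site, the first integer time t at which it belongs to \(A_t\).

```python
def neighbours(site, n):
    """Nearest neighbours of `site` inside the box {0, ..., n-1}^2."""
    x, y = site
    for a, b in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
        if 0 <= a < n and 0 <= b < n:
            yield (a, b)


def bp_infection_times(initial, n, j=2):
    """j-BP on the finite box {0,...,n-1}^2 (sites outside count as healthy).

    Returns a dict mapping each eventually infected site to the first
    integer time t such that the site belongs to A_t.
    """
    times = {site: 0 for site in initial}
    t = 0
    while True:
        t += 1
        # Synchronous rule: A_t is built from A_{t-1} (the keys of `times`),
        # because the set comprehension is evaluated before `times` changes.
        newly = {
            (x, y)
            for x in range(n)
            for y in range(n)
            if (x, y) not in times
            and sum(nb in times for nb in neighbours((x, y), n)) >= j
        }
        if not newly:
            return times  # A_t stopped growing: this is the final span
        for site in newly:
            times[site] = t
```

For a chosen origin `o`, the finite-box analogue of \({\tau _0^{\mathrm {BP}}}\) is `times.get(o)`, where `None` means that `o` is never infected. With `j=1` the times are graph distances to the initial infection, in line with the remark on \(j=1\) below.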

Remark 1.1

The j-BP is clearly monotone in the initial set of infections, i.e. \(A_t\subset A'_t\) for all \(t\geqslant 0\) if \(A_0\subset A'_0\). Such monotonicity will, however, be missing in the KCM models analysed in this work.

A key quantity for bootstrap percolation is the infection time of the origin, defined as \({\tau _0^{\mathrm {BP}}} =\inf \{t\geqslant 0:0\in A_t\}\). For j=1, trivially, \({\tau _0^{\mathrm {BP}}} \) scales as the distance from the origin to the nearest infected site and is thus w.h.p. of order \(q^{-1/d}\). For \(j\geqslant 2\), the typical value of \({\tau _0^{\mathrm {BP}}} \) w.r.t. \(\mu _q\) has been investigated in a series of works, starting with the seminal paper of Aizenman and Lebowitz [1] and Holroyd’s breakthrough [28] determining a sharp threshold for \(d=j=2\). We refer to [34] for an account of the field and only recall the more recent results that include second order corrections to the sharp threshold. Here and throughout the paper, when using asymptotic notation we refer to \(q\rightarrow 0\).Footnote 1 For 2-BP in \(d=2\), w.h.p. it holds [18, 27] that

$${\tau _0^{\mathrm {BP}}} =\exp \Big (\frac{\pi ^2}{18q}-\frac{\varTheta (1)}{\sqrt{q}}\Big ). \tag{1.1}$$


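For j-BP for all \(d\geqslant j\geqslant 2\), w.h.p. it holds [5, 42] that

$${\tau _0^{\mathrm {BP}}} =\exp ^{j-1}\Big (\frac{\lambda (d,j)+o(1)}{q^{1/(d-j+1)}}\Big ),$$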

where \(\exp ^k\) denotes the exponential iterated k times and \(\lambda (d,j)\) are the positive constants defined explicitly in [6, (1–3)]. We recall that \(\lambda (2,2)=\pi ^2/18\) [28, Proposition 5] and we refer the interested reader to [6, Table 1 and Proposition 4] for other values of d, j.

We are now ready to introduce the Fredrickson-Andersen model, a natural stochastic counterpart of j-BP and the main focus of this work.

1.2 The Fredrickson–Andersen model and main result

For integers \(1\leqslant j\leqslant d\) the Fredrickson–Andersen j-spin facilitated model (FA-jf) is the interacting particle system on \(\varOmega =\{0,1\}^{{{\mathbb {Z}}} ^d}\) constructed as follows. Each site is endowed with an independent Poisson clock with rate 1. At each clock ring the state of the site is updated to an independent Bernoulli random variable with parameter \(1-q\) subject to the crucial constraint that if the site has fewer than j infected (nearest) neighbours currently, then the update is rejected. We refer to updates occurring at sites with at least j infected neighbours at the time of the update as legal.
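The update rule can be simulated with the usual superposition trick: \(n^2\) independent rate-1 clocks are equivalent to a single clock of rate \(n^2\) that picks a uniformly random site at each ring. The following is a minimal sketch under stated assumptions. The lattice is replaced by an \(n\times n\) torus with \(n\geqslant 3\), the initial configuration is sampled from \(\mu _q\), and `fa_jf_origin_time` and `OFFSETS` are hypothetical names introduced here.

```python
import random

# Nearest-neighbour offsets on Z^2.
OFFSETS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def fa_jf_origin_time(n, q, j=2, t_max=1_000.0, seed=None):
    """Hypothetical helper: simulate FA-jf on the n x n torus started from mu_q.

    Returns the first time site (0, 0) is infected (state 0), or None if
    this does not happen before t_max. The torus (n >= 3) stands in for Z^2.
    """
    rng = random.Random(seed)
    # 0 = infected/empty, 1 = healthy/filled; each site infected w.p. q.
    omega = [[0 if rng.random() < q else 1 for _ in range(n)] for _ in range(n)]
    if omega[0][0] == 0:
        return 0.0
    t = 0.0
    while True:
        # n*n independent rate-1 clocks = one rate-n^2 clock at a uniform site.
        t += rng.expovariate(n * n)
        if t >= t_max:
            return None
        x, y = rng.randrange(n), rng.randrange(n)
        infected_nbrs = sum(
            omega[(x + dx) % n][(y + dy) % n] == 0 for dx, dy in OFFSETS
        )
        if infected_nbrs < j:
            continue  # illegal update: the constraint rejects it
        # Legal update: resample the site from Bernoulli(1 - q).
        omega[x][y] = 0 if rng.random() < q else 1
        if (x, y) == (0, 0) and omega[x][y] == 0:
            return t
```

For \(j=2\) and small q the origin can stay healthy for a very long time, so this sketch is only practical for small tori and moderate q. It is meant to make the rule concrete, not to probe the asymptotics studied in this paper.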

Remark 1.2

Contrary to the j-BP model, the FA-jf process is clearly non-monotone because of the possible recovery of infected sites with at least j infected neighbours. This feature is one of the major obstacles in the analysis of the process.

It is standard to show (see [30]) that the FA-jf process is well defined and reversible w.r.t. \(\mu _q\). When the initial distribution at time \(t=0\) is a measure \(\nu \), the law and expectation of the process on the Skorokhod space \(D([0,\infty ) , \varOmega )\) will be denoted by \({{\mathbb {P}}} _{\nu }\) and \({{\mathbb {E}}} _{\nu }\) respectively. Writing \(\omega (t)\) for the configuration at time t, as for j-BP let

$$\tau _0=\inf \{t\geqslant 0:\ \omega _0(t)=0\}$$

be the first time the origin becomes infected. Our main goal is to quantify precisely \({{\mathbb {E}}} _{\mu _q}[\tau _0]\), the average of \(\tau _0\) w.r.t. the stationary process as \(q\rightarrow 0\). In order to keep the setting simple and the results more transparent, we will focus on the FA-2f model. Other models, including FA-jf for all values of \(3\leqslant j\leqslant d\), are discussed in Sect. 1.3. Recall the constants \(\lambda (d,2)\) from (1.2), (1.3), so that \(\lambda (2,2)=\pi ^2/18\).

Theorem 1.3

As \(q\rightarrow 0\) the stationary FA-2f model on \({{\mathbb {Z}}} ^d\) satisfies:

if \(d=2\), and

if \(d\geqslant 3\). Moreover, (1.4–1.7) also hold for \(\tau _0\) w.h.p.

In particular, recalling (1.2), (1.3), we have the following.

Corollary 1.4

W.h.p. \(\tau _0=({\tau _0^{\mathrm {BP}}} )^{d+o(1)}\).
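Assume the leading-order form \(\tau _0=\exp ((d\lambda (d,2)+o(1))/q^{1/(d-1)})\) w.h.p., which is the sharp threshold stated in the abstract, together with \({\tau _0^{\mathrm {BP}}} =\exp ((\lambda (d,2)+o(1))/q^{1/(d-1)})\), the \(j=2\) case of the j-BP asymptotics recalled in Sect. 1.1. Under these assumptions the corollary reduces to a ratio of exponents, using \(\lambda (d,2)>0\):

```latex
\frac{\log \tau_0}{\log \tau_0^{\mathrm{BP}}}
  = \frac{\big(d\lambda(d,2)+o(1)\big)\,q^{-1/(d-1)}}{\big(\lambda(d,2)+o(1)\big)\,q^{-1/(d-1)}}
  = d+o(1),
\qquad \text{e.g. for } d=2:\quad \frac{\pi^2/(9q)}{\pi^2/(18q)} = 2 .
```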

The above are the first results that establish the sharp asymptotics of \(\log {{\mathbb {E}}} _{\mu _q}[\tau _0]\) within the whole class of “critical” KCM.

Remark 1.5

We will not provide an explicit proof of the case \(d\geqslant 3\) as it does not require any additional effort with respect to the case \(d=2\). The only significant difference is that the lower bound from (1.1) is not available in higher dimensions, leading to a corresponding weakening of the lower bound (1.6) as compared to (1.4).

Remark 1.6

Despite the resemblance, our results are by no means a corollary of their 2-BP counterpart (1.1). While the lower bounds (1.4) and (1.6) do indeed follow rather easily from (1.1) and (1.2) together with an improvement of the “automatic” lower bound from [12, Theorem 6.9], the proof of (1.5) and (1.7) is much more involved. In particular, it requires guessing an efficient infection/healing mechanism to infect the origin, which has no counterpart in the monotone j-BP dynamics (see Sect. 1.5).

1.3 Extensions

1.3.1 FA-jf with \(j\ne 2\)

For the sake of completeness, let us briefly discuss the FA-jf model with other values of j. The case \(j=1\) is the simplest to analyse and behaves very differently: relaxation is dominated by the motion of single infected sites and time scales diverge as \(1/q^{\varTheta (1)}\) (see [12, 38] for the values of the exponent). For \(d\geqslant j\geqslant 4\) we believe that minor modifications of the treatment of [33] along the lines provided by [6] should be sufficient to prove that \({{\mathbb {E}}} _{\mu _q}[\tau _0]\) scales as \({\tau _0^{\mathrm {BP}}} \) (see (1.2), (1.3)). The only remaining case, \(d\geqslant j=3\), should require some more work, still following the approach of [33]. Let us emphasise that it should be possible to treat all \(d\geqslant j\geqslant 3\), using the techniques of the present paper. However, the much faster divergence of the scaling involved should allow the less refined technique of [33] to work, as there is a much larger margin for error, making those results easier. We leave the above considerations to future work.

1.3.2 More general update rules: \({\mathcal {U}}\)-KCM

The full power of the method developed in the present work is required to treat two-dimensional \({\mathcal {U}}\)-KCM, a very general class of interacting particle systems with kinetic constraints on \({{\mathbb {Z}}} ^2\). These models and their bootstrap percolation counterpart, \({\mathcal {U}}\)-BP, are defined similarly to FA-jf and j-BP but with arbitrary local monotone constraints (or update rules) \({\mathcal {U}}\) [12, 33]. There exist several very symmetric constraints, including the so-called modified 2-BP, requiring two non-opposite neighbours to be infected, for which the exact asymptotics of \(\log {\tau _0^{\mathrm {BP}}} \), and sometimes even the higher order corrections, are known [11]. Our methods should adapt to this setting to yield equally sharp results for \({{\mathbb {E}}} _{\mu _q}[\tau _0]\) of the corresponding \({\mathcal {U}}\)-KCM. In this general setting the outcome would again be of the form \({{\mathbb {E}}} _{\mu _q}[\tau _0]\simeq ({\tau _0^{\mathrm {BP}}} )^{2}\) as for FA-2f.

We warn the reader that the exponent 2 in two dimensions relating \({{\mathbb {E}}} _{\mu _q}[\tau _0]\) to \({\tau _0^{\mathrm {BP}}} \) is not general [23, 24] and only applies to ‘isotropic’ models [22]. Nevertheless, developing the approach of the present work further, in [22] \(\log {{\mathbb {E}}} _{\mu _q}[\tau _0]\) is determined up to a constant factor for all so-called “critical” KCM in two dimensions, matching the lower bounds established in [23] and establishing a richer KCM analogue of the BP universality result of [9].

1.4 Settling a controversy in the physics literature

Soon after the FA-jf models were introduced, some conjectures in the physics literature predicted the divergence of \({{\mathbb {E}}} _{\mu _q}[\tau _0]\) at a positive critical density \(q_c\) [14, 15, 17]. These conjectures were subsequently ruled out in [12], the first rigorous analysis of FA-jf. After [12] and prior to the present work, the best known bounds on the infection time were

The lower bounds follow from the general lower bound [33, Lemma 4.3] \({\mathbb {E}}_{\mu _q}[\tau _0]=\varOmega (\text {median of } {\tau _0^{\mathrm {BP}}} )\) together with the j-BP lower bounds (see Sect. 1.1), while the upper bounds were recently obtained by the second and third authors in [33]. As such, the above results do not settle a controversy between several conjectures that were put forward in the physics literature.

The first quantitative prediction for the scaling of \({{\mathbb {E}}} _{\mu _q}[\tau _0]\) appeared in [35] where, based on numerical simulations, a faster than exponential divergence in 1/q was conjectured for FA-2f in \(d=2\). For the latter, the first to claim an exponential scaling \(\exp (\varTheta (1)/q)\) was Reiter [36]. He argued that the infection process of the origin is dominated by the motion of macro-defects, i.e. rare regions having probability \(\exp (-\varTheta (1)/q)\) and size poly(1/q) that move at an exponentially small rate \(\exp (-\varTheta (1)/q)\). Later Biroli, Fisher and the last author [41] considerably refined the above picture. They argued that macro-defects should coincide with the critical droplets of 2-BP having probability \(\exp (-\pi ^2/(9 q))\) and that the time scale of the relaxation process inside a macro-defect should be \(\exp (c/\sqrt{q})\), i.e. sub-dominant with respect to the inverse of their density, in sharp contrast with the prediction of [36]. Based on this and on the idea that macro-defects move diffusively, the relaxation time scale of FA-2f in \(d=2\) was conjectured to diverge as \(\exp (\pi ^2/(9q))\) [41, Section 6.3]. A different prediction was later made in [40], implying a scaling of the form \(\exp (2\pi ^2/(9q))\). Concerning the behaviour of FA-2f in higher dimensions, in [41] the relaxation time was predicted to diverge as \((\tau _0^{\mathrm{BP}})^d\), though this prediction was less precise than in the two-dimensional case, since the sharp results for 2-BP in dimension \(d>2\) of [5] had not yet been established.

Theorem 1.3 settles the above controversy by confirming the scaling prediction of [36, 41] and by disproving those of [35, 40]. Moreover, our result on the characteristic time scale of the relaxation process inside a macro-defect (see Proposition 4.7) agrees with the prediction of [41] and disproves the one of [36].

1.5 Behind Theorem 1.3: high-level ideas

The main intuition behind Theorem 1.3 is that for \(q\ll 1\) the relaxation to equilibrium of the stationary FA-2f process is dominated by the slow motion of patches of infection, dubbed mobile droplets (or just droplets), whose probability of occurrence is very small, roughly \(\exp (-\pi ^2/(9q))\). In analogy with the critical droplets of bootstrap percolation (see [28]), mobile droplets have a linear size which grows polynomially in 1/q (with some arbitrariness), i.e. they live on a much smaller scale than the metastable length scale \(e^{\varTheta (1/q^{{1/(d-1)}})}\) arising in the 2-BP model. A key requirement dictating the choice of the scale of mobile droplets is that, w.h.p., the typical infection environment around a droplet allows it to move in any direction under the FA-2f dynamics. Within this scenario, the main contribution to the infection time of the origin for the stationary FA-2f process should come from the time it takes for a droplet to reach the origin.

In order to translate the above intuition into a mathematically rigorous proof, one is faced with two different fundamental problems:

(1) a precise, yet workable, definition of mobile droplets;

(2) an efficient model for their “effective” random evolution.

In [25, 32, 33] mobile droplets (dubbed “super-good” regions there) have been defined rather rigidly as fully infected regions of suitable shape and size, and their motion has been modelled as a generalised FA-1f process on \({{\mathbb {Z}}} ^2\) [32, Section 3.1]. In the latter process mobile droplets are freely created or destroyed with the correct heat-bath equilibrium rates, but only at locations which are adjacent to an already existing droplet. The main outcome of these papers has been (upper) bounds on the infection time of the origin of the form \(\tau _0\leqslant 1/\rho _{\mathrm {D}}^{\log \log (1/\rho _{\mathrm {D}})^{O(1)}}\) w.h.p., where \(\rho _{\mathrm {D}}\) is the density of mobile droplets.

While rather powerful and robust, the solution proposed in [25, 32, 33] to (1) and (2) above has no chance of capturing the exact asymptotics of the infection time, because of the rigidity in the definition of the mobile droplets and of the chosen model for their effective dynamics. Indeed, a mobile droplet should be allowed to deform itself and move to a nearby position like an amoeba, by rearranging its infection using the FA-2f moves. This “amoeba motion” between nearby locations should occur on a time scale much smaller than the global time scale necessary to bring a droplet from far away to the origin. In particular, it should not require first creating a new droplet from the initial one and only later destroying the original one (the main mechanism of the droplet dynamics under the generalised FA-1f process).

With this in mind we offer a new solution to (1) and (2) above, which indeed leads to the exact asymptotics of the infection time. Concerning (1), our treatment in Sect. 4 consists of two steps. We first propose a sophisticated multiscale definition of mobile droplets which, in particular, introduces a crucial degree of softness in their microscopic infection configuration (Footnote 2). The second and much more technically involved step is developing the tools necessary to analyse the FA-2f dynamics inside a mobile droplet. In particular, we prove two key features (see Propositions 4.6 and 4.7 for the case \(d=2\)):

(1.a) to the leading order the probability \(\rho _{\mathrm {D}}\) of mobile droplets satisfies

$$\begin{aligned} \rho _{\mathrm {D}}\geqslant \exp {\Big (- \frac{d\lambda (d,2)}{q^{1/(d-1)}}-\frac{O(\log ^2(1/q))}{q^{1/(2d-2)}}\Big )}, \end{aligned}$$

(1.b) the “amoeba motion” of mobile droplets between nearby locations occurs on a time scale \(\exp (O(\log (1/q)^3)/{q^{1/(2d-2)}})\), which is sub-leading w.r.t. the main time scale of the problem and only manifests in the second term of (1.5).

Property (1.a) follows rather easily from well known facts from bootstrap percolation theory, while proving property (1.b), one of the most innovative steps of the paper, requires a substantial amount of new ideas.

While properties (1.a) and (1.b) above are essential, they are not sufficient on their own to solve problem (2) above. In Sect. 5 we model the random evolution of mobile droplets (admittedly only at the level of a Poincaré inequality, which, however, suffices for our purposes) as a symmetric simple exclusion process with two additional crucial add-ons: a coalescence part (when two mobile droplets meet they are allowed to merge) and a branching part (a single droplet can create a new one nearby, as in the generalised FA-1f process). This model, which we call g-CBSEP, was studied for the purpose of its present application in the preparatory work [26]. Finally, the fact that g-CBSEP relaxes on a time scale proportional to the inverse density of mobile droplets, modulo logarithmic corrections (see Proposition 5.2), yields the scaling of Theorem 1.3. We emphasise that modelling the large-scale motion of droplets by g-CBSEP instead of a generalised FA-1f process is an absolute novelty, also with respect to the physics literature.
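The three ingredients (exclusion, coalescence, branching) can be seen in a toy simulation. The rule below is our reading of the basic, non-generalised CBSEP and is an assumption of this sketch: g-CBSEP in [26] is more general, and its exact rates are not reproduced here. Each edge of a cycle carries a rate-1 clock. When the clock rings and at least one endpoint holds a droplet, the pair is resampled from \(\mathrm{Bernoulli}(p)^{\otimes 2}\) conditioned on not being empty. The function name `cbsep_on_cycle` and the parameter p are hypothetical.

```python
import random


def cbsep_on_cycle(n, p, t_max, seed=None):
    """Toy CBSEP on the cycle Z/nZ (n >= 3); eta[x] = 1 marks a droplet.

    Each edge {x, x+1} carries a rate-1 clock. When it rings and at least one
    endpoint holds a droplet, the pair is resampled from Bernoulli(p)^2
    conditioned on not being (0, 0). This produces exclusion moves
    (10 <-> 01), coalescence (11 -> 10 or 01) and branching (10 -> 11).
    """
    rng = random.Random(seed)
    eta = [1 if rng.random() < p else 0 for _ in range(n)]
    if not any(eta):
        eta[0] = 1  # start with at least one droplet
    counts = [sum(eta)]
    pair_weight = {(1, 0): p * (1 - p), (0, 1): (1 - p) * p, (1, 1): p * p}
    pairs = list(pair_weight)
    t = 0.0
    while True:
        t += rng.expovariate(n)  # n edges, each with a rate-1 clock
        if t >= t_max:
            return eta, counts
        x = rng.randrange(n)
        y = (x + 1) % n
        if eta[x] == 0 and eta[y] == 0:
            continue  # constraint: no droplet on this edge, nothing happens
        new_pair = rng.choices(pairs, weights=[pair_weight[s] for s in pairs])[0]
        eta[x], eta[y] = new_pair
        counts.append(sum(eta))
```

The conditioning on a non-empty pair means the last droplet can never disappear, so the number of droplets stays at least 1. This mirrors the way mobile droplets are only created next to existing ones.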

2 Proof of Theorem 1.3: lower bound

In this section we establish the lower bounds (1.4) and (1.6) of Theorem 1.3. Our proof actually provides a general procedure for lower bounding \({{\mathbb {E}}} _{\mu _q}[\tau _0]\) via bootstrap percolation. This approach improves upon the previous general result [33, Lemma 4.3], which lower bounds \({{\mathbb {E}}} _{\mu _q}[\tau _0]\) in terms of the infection time of the corresponding bootstrap percolation model.

Before spelling the details out, let us explain the proof idea. In BP it is known that the origin typically gets infected by a rare “critical droplet” of size roughly 1/q which can be infected only using internal infections. This droplet, initially at distance \(\approx (\text {density of critical droplets})^{-1/d}\) from the origin, grows linearly until hitting the origin. Hence \({\tau _0^{\mathrm {BP}}} \approx (\text {density of critical droplets})^{-1/d}\). On the contrary, the leading behaviour of \(\tau _0\) is governed by the inverse probability of a critical droplet, because one needs to wait for a critical droplet to reach the origin under the FA-2f dynamics. Thus, we expect \(\tau _0\approx ({\tau _0^{\mathrm {BP}}} )^{d}\).
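Let \(\rho _c\) be a shorthand, introduced here, for the density of critical droplets. The heuristic above then amounts to the following bookkeeping:

```latex
\begin{aligned}
\tau_0^{\mathrm{BP}} &\approx \rho_c^{-1/d}
  &&\text{(distance to the nearest critical droplet, covered at linear speed)},\\
\tau_0 &\approx \rho_c^{-1} = \big(\rho_c^{-1/d}\big)^{d} \approx \big(\tau_0^{\mathrm{BP}}\big)^{d}
  &&\text{(waiting for a droplet to reach the origin under FA-2f)}.
\end{aligned}
```

For \(d=2\), inserting \(\rho _c\approx \exp (-\pi ^2/(9q))\) gives \({\tau _0^{\mathrm {BP}}} \approx \exp (\pi ^2/(18q))\), which is consistent with \(\lambda (2,2)=\pi ^2/18\).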

In order to turn this idea into a proof we need a little notation. We call any cuboid of \({{\mathbb {Z}}} ^d\) with faces perpendicular to the lattice directions simply cuboid. For a cuboid \(R\subset {{\mathbb {Z}}} ^d\) and \(\eta \in \varOmega _{{{\mathbb {Z}}} ^d}\) we denote by \([\eta ]_R\) the set of sites \(x\in R\) which can become infected by legal updates (recall Sect. 1.2) only using infections in R. Equivalently, \([\eta ]_R\) can be viewed as the set of sites eventually infected by 2-BP with initial condition the set \(\{x\in R:\eta _x=0\}\). Note that \([\eta ]_R\) is a union of disjoint cuboids. For \(x,y\in R\) we write \(\{x\overset{R}{\longleftrightarrow }y\}\) for the event that \([\eta ]_R\) contains a cuboid containing x and y.
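For small rectangles, \([\eta ]_R\) and the event \(\{x\overset{R}{\longleftrightarrow }y\}\) can be computed directly. The following is a minimal sketch for \(d=2\) only, with configurations given by their sets of infected sites. Infections outside R are ignored, as in the definition. It relies on the standard fact that distinct cuboids of a 2-BP span are not nearest neighbours, so the cuboids of \([\eta ]_R\) are exactly its nearest-neighbour connected components. The names `span_in_rectangle` and `connected_in` are hypothetical.

```python
from collections import deque

OFFSETS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def span_in_rectangle(infected, rect):
    """[eta]_R for a rectangle R = [x0, x1] x [y0, y1] in Z^2.

    Only infections inside R are used (sites outside R count as healthy),
    and 2-BP is run inside R until no new site can be infected.
    """
    (x0, x1), (y0, y1) = rect
    closed = {(x, y) for (x, y) in infected if x0 <= x <= x1 and y0 <= y <= y1}
    changed = True
    while changed:
        changed = False
        for x in range(x0, x1 + 1):
            for y in range(y0, y1 + 1):
                if (x, y) in closed:
                    continue
                count = sum((x + dx, y + dy) in closed for dx, dy in OFFSETS)
                if count >= 2:
                    closed.add((x, y))
                    changed = True
    return closed


def connected_in(x, y, infected, rect):
    """Event {x <-R-> y}: x and y lie in the same cuboid of [eta]_R."""
    span = span_in_rectangle(infected, rect)
    if x not in span:
        return False
    # Breadth-first search from x over the span finds the cuboid containing x.
    seen, queue = {x}, deque([x])
    while queue:
        a, b = queue.popleft()
        for dx, dy in OFFSETS:
            nb = (a + dx, b + dy)
            if nb in span and nb not in seen:
                seen.add(nb)
                queue.append(nb)
    return y in seen
```

For example, two diagonal infections at \((0,0)\) and \((1,1)\) span a \(2\times 2\) square, while adding an isolated infection at \((3,3)\) does not connect it to that square.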

The next proposition essentially states that the infection time is at least the inverse density of critical droplets.

Proposition 2.1

Let \(V=[-\ell ,\ell ]^d\) with \(\ell =\ell (q)\) be such that

and let

Then

and \(\tau _0\geqslant q/(\rho |V|^2)\) w.h.p.

Proof

Let \((\eta (t))_{t\geqslant 0}\) denote the stationary KCM on \({{\mathbb {Z}}} ^d\), let \({\mathcal {I}}=\{\omega :0\in [\omega ]_V\}\) and let \(\tau =\inf \{t\geqslant 0:\eta (t)\in {\mathcal {I}}\}\). Given a configuration \(\eta \in \varOmega \), we say that the origin is infectable inside V iff \(\eta \in {\mathcal {I}}\). The key observation here is that, by construction, \(\tau _0\geqslant \tau \), since an infected origin is trivially infectable inside V.

Suppose that at time \(t=0\) the origin is not infectable inside V or, equivalently, that \(\tau >0\). Then we claim that at time \(\tau >0\) there exists a site x at the boundary of V such that \(\eta (\tau )\in \{x\overset{V}{\longleftrightarrow }0\}.\) In other words, at time \(\tau \) a suitable very unlikely infection has appeared in V. To prove the claim assume \(\tau >0\) and consider the site \(x\in {{\mathbb {Z}}} ^d\) which is updated at time \(\tau \). Necessarily \(x\in V\) and \(d(x,V^c)=1\), since otherwise \([\eta ^x(\tau )]_V=[\eta (\tau )]_V\), where \(\eta ^x(\tau )\) is the configuration equal to \(\eta (\tau )\) except at site x. Furthermore, by definition of \(\tau \), \(\eta (\tau )\in {\mathcal {I}}\) and \(\eta ^x(\tau )\not \in {\mathcal {I}}\). But this implies \(\eta (\tau )\in \{x\overset{V}{\longleftrightarrow }0\}\), since otherwise a change of the state at x could not change the infectability of the origin inside V.

Recall now the rate one Poisson clocks discussed at the beginning of Sect. 1.2 and let \(N_V(s)\) denote the random number of clock rings (legal or not) at sites in V up to time s. Let also \(\eta ^{(j)}\) denote the configuration right after the j-th clock ring. By the above we have

Yet, conditionally on the clock rings, \(\eta ^{(j)}\) is distributed according to \(\mu _q\) for the stationary FA-2f process (see e.g. [23, Claim 3.11]). Hence, recalling (2.2), we get

Using \({{\mathbb {E}}} (N_V(s))=s|V|\), (2.3) gives

where \({{\mathbb {E}}} \) denotes the average w.r.t. \(N_V(s)\) and we used (2.1) to get \({{\mathbb {P}}} _{\mu _q}(\tau =0)=\mu _q({\mathcal {I}})=o(1)\). In conclusion, for all \(\varepsilon >0\) we have

which concludes the proof by Markov’s inequality. \(\square \)

We can now easily deduce the lower bounds of Theorem 1.3 from Proposition 2.1 and the following bootstrap percolation results.

Theorem 2.2

(Eq. (5.11) of [1]). For any \(d\geqslant 2\) there exists \(c=c(d)>0\) such that (2.1) holds for any \(\ell \leqslant \exp (cq^{-1/(d-1)})\).

Theorem 2.3

(Theorem 6.1, Lemma 3.9 and Eq. (4) of [27]). Let \(d=2\) and \(\ell = \frac{1}{4q'}\log (1/q')\), where \(q'=-\log (1-q)\). Fix a cuboid (i.e. rectangle) \(R\subset {{\mathbb {Z}}} ^2\) with side lengths a, b such that \(1\leqslant a\leqslant b\leqslant 2\ell \) and \(b\geqslant \ell \). Then

Theorem 2.4

(Theorem 17 of [5]). Let \(d\geqslant 2\) and \(\varepsilon >0\). Let \(C_0\) be sufficiently large depending on d and \(\varepsilon \). Then for any q small enough, any \(C>C_0\) not depending on q and any cuboid R with longest edge of length \(\ell =C/q^{1/(d-1)}\) we have

Proof of the lower bounds (1.4) and (1.6) in Theorem 1.3

Fix d and \(\ell \) as in Theorem 2.3. Theorem 2.2 implies (2.1). Then Theorem 2.3 and a union bound over all possible cuboids \(R\subset V=[-\ell ,\ell ]^2\) containing both 0 and some x with \(d(x,V^c)=1\) give \(\rho \leqslant \exp (-\frac{\pi ^2}{9q}+\frac{O(1)}{\sqrt{q}})\). Thus, (1.4) follows from Proposition 2.1.
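The polynomial factors are harmless. With \(\ell =\frac{1}{4q'}\log (1/q')\) and \(q'=-\log (1-q)\sim q\), the volume \(|V|=(2\ell +1)^2\) is \(q^{-O(1)}\), and \(\log (1/q)=o(q^{-1/2})\). Combining the bound on \(\rho \) with \(\tau _0\geqslant q/(\rho |V|^2)\) w.h.p. gives the following. This is a sketch of the arithmetic only and does not replace the displayed statement of Proposition 2.1.

```latex
\frac{q}{\rho\,|V|^{2}}
 \geqslant q\,(2\ell+1)^{-4}\exp\Big(\frac{\pi^{2}}{9q}-\frac{O(1)}{\sqrt{q}}\Big)
 = \exp\Big(\frac{\pi^{2}}{9q}-\frac{O(1)}{\sqrt{q}}-O(\log(1/q))\Big)
 = \exp\Big(\frac{\pi^{2}}{9q}-\frac{O(1)}{\sqrt{q}}\Big).
```

Since the bound on \(\tau _0\) holds w.h.p., \({{\mathbb {E}}} _{\mu _q}[\tau _0]\geqslant (1-o(1))\,q/(\rho |V|^2)\). The factor \(1-o(1)\) is absorbed into the \(O(1)/\sqrt{q}\) term.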

Fix d and \(\ell \) as in Theorem 2.4. Theorem 2.2 implies (2.1). The upper bound on \(\rho \) leading to (1.6) follows from Theorem 2.4 together with a union bound as above, so we may conclude by Proposition 2.1. \(\square \)

3 Constrained Poincaré inequalities

In this section we state and prove various Poincaré inequalities for the auxiliary chains that will be instrumental in Sect. 4 (see Lemmas 4.9 and 4.10).

3.1 Notation

Given \(\varLambda \subset {{\mathbb {Z}}} ^2\) and \(\omega \in \varOmega \), we write \(\omega _{\varLambda }\in \varOmega _{\varLambda }:=\{0,1\}^\varLambda \) for the restriction of \(\omega \) to \(\varLambda \) and we denote by \(\mu _\varLambda \) the marginal of \(\mu \) on \(\varOmega _\varLambda \). The configuration (in \(\varOmega \) or \(\varOmega _\varLambda \)) identically equal to one is denoted by \({\mathbf {1}}\). Given disjoint \(\varLambda _1,\varLambda _2\subset {{\mathbb {Z}}} ^2\), \(\omega ^{(1)}\in \varOmega _{\varLambda _1}\) and \(\omega ^{(2)}\in \varOmega _{\varLambda _2}\), we write \(\omega ^{(1)}\cdot \omega ^{(2)}\in \varOmega _{\varLambda _1\cup \varLambda _2}\) for the configuration equal to \(\omega ^{(1)}\) in \(\varLambda _1\) and to \(\omega ^{(2)}\) in \(\varLambda _2\). For \(f:\varOmega \rightarrow {\mathbb {R}}\) we will denote by \(\mu (f)\) its expectation w.r.t. \(\mu \) and by \(\mu _{\varLambda }(f)\) and \({\text {Var}}_\varLambda (f)\) the mean and variance w.r.t. \(\mu _\varLambda \), given \(\omega _{{{\mathbb {Z}}} ^2\setminus \varLambda }\).

For the sake of completeness, we recall the classic definitions of Dirichlet form, Poincaré inequality and relaxation time. Given a measure \(\nu \) and a Markov process with generator \({\mathcal {L}}\) reversible w.r.t. \(\nu \), the corresponding Dirichlet form \({\mathcal {D}}:{{\,\mathrm{Dom}\,}}({\mathcal {L}})\rightarrow {\mathbb {R}}\) is defined as

$${\mathcal {D}}(f)=-\nu \big (f\,{\mathcal {L}}f\big ).$$

For the FA-2f model, the definition of Sect. 1.2 yields the following Dirichlet form

$${\mathcal {D}}(f)=\sum _{x\in {{\mathbb {Z}}} ^2}\mu \big (c_x{\text {Var}}_x(f)\big ),$$

with \(c_x\) the indicator function of the event “the constraint at x is satisfied”, namely for \(x\in {{\mathbb {Z}}} ^2\) and \(\eta \in \varOmega \) we set

$$c_x(\eta )={\mathbb {1}}_{\{|\{y\sim x:\ \eta _y=0\}|\geqslant 2\}},$$

where \(y\sim x\) if x, y are nearest neighbours.
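On a tiny box the FA-2f Dirichlet form \({\mathcal {D}}(f)=\sum _x\mu (c_x{\text {Var}}_x(f))\) can be evaluated exactly by enumerating configurations. For a single Bernoulli site, \({\text {Var}}_x(f)=q(1-q)\big (f(\omega ^{x,1})-f(\omega ^{x,0})\big )^2\). This quantity does not depend on \(\omega _x\), so averaging it against \(\mu \) over all configurations is legitimate. The following is a minimal sketch. It assumes a finite box \(\{0,\dots ,n-1\}^2\) whose exterior is frozen to an all-infected or all-healthy boundary condition, and the name `fa2f_dirichlet_form` is hypothetical.

```python
from itertools import product

OFFSETS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def fa2f_dirichlet_form(f, n, q, boundary_infected=True):
    """Exact D(f) = sum_x mu(c_x Var_x(f)) for FA-2f on the n x n box.

    Configurations are tuples over the sites of the box (row-major order),
    with 0 = infected. Sites outside the box are frozen to a boundary
    condition (all infected or all healthy), an assumption of this sketch.
    """
    sites = [(x, y) for x in range(n) for y in range(n)]
    index = {s: i for i, s in enumerate(sites)}
    outside = 0 if boundary_infected else 1

    def c(omega, s):
        # Constraint c_x: at least two infected nearest neighbours.
        x, y = s
        states = (
            omega[index[nb]] if nb in index else outside
            for nb in ((x + dx, y + dy) for dx, dy in OFFSETS)
        )
        return sum(v == 0 for v in states) >= 2

    total = 0.0
    for omega in product((0, 1), repeat=len(sites)):
        weight = 1.0
        for v in omega:
            weight *= q if v == 0 else 1 - q  # product Bernoulli measure mu
        for s in sites:
            if not c(omega, s):
                continue
            i = index[s]
            up = omega[:i] + (1,) + omega[i + 1:]
            down = omega[:i] + (0,) + omega[i + 1:]
            # Var_x(f) for a single Bernoulli site.
            total += weight * q * (1 - q) * (f(up) - f(down)) ** 2
    return total
```

For the function \(f={\mathbb {1}}\{\text {corner }(0,0)\text { infected}\}\) on a \(2\times 2\) box, only the corner contributes. With an infected boundary its constraint always holds, giving \(q(1-q)\). With a healthy boundary it needs both inner neighbours infected, giving \(q^2\cdot q(1-q)\).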

We say that a Poincaré inequality with constant C is satisfied by the Dirichlet form if for any function \(f\in {{\,\mathrm{Dom}\,}}({\mathcal {L}})\) it holds

$${\text {Var}}_\nu (f)\leqslant C\,{\mathcal {D}}(f).$$

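Finally, the relaxation time is defined as the best constant in the Poincaré inequality, namely

$$T_{\mathrm {rel}}=\inf \big \{C\geqslant 0:\ {\text {Var}}_\nu (f)\leqslant C\,{\mathcal {D}}(f)\ \text {for all } f\in {{\,\mathrm{Dom}\,}}({\mathcal {L}})\big \}.$$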

A finite relaxation time implies that the reversible measure is mixing for the semigroup \(P_t:=e^{t{\mathcal {L}}}\) with exponentially decaying correlations (see e.g. [37]), namely for all \(f\in L^2(\nu )\) it holds

$${\text {Var}}_\nu (P_tf)\leqslant e^{-2t/T_{\mathrm {rel}}}{\text {Var}}_\nu (f).$$

3.2 FA-1f-type Poincaré inequalities

Fix \(\varLambda \subset {\mathbb {Z}}^2\) a connected set and let \(\varOmega ^+_{\varLambda }=\varOmega _\varLambda \setminus {\mathbf {1}}\). Given \(x\in \varLambda \) let \(N^\varLambda _x\) be the set of neighbours of x in \(\varLambda \) and let \({\mathcal {N}}_x^\varLambda \) be the event that \(N_x^\varLambda \) contains at least one infection. For any \(z\in \varLambda \) consider the two Dirichlet forms

Remark 3.1

The alert reader will recognise the above expressions as the Dirichlet forms of the FA-1f process on \(\varOmega ^+_\varLambda \) or on \(\varOmega _\varLambda \) with the site z unconstrained.

Our first tool is a Poincaré inequality for these Dirichlet forms.

Proposition 3.2

Let \(\varLambda \) be a connected subset of \({\mathbb {Z}}^2\) and let \(z\in \varLambda \) be an arbitrary site. Then:

(1) for any \(f:\varOmega ^+_\varLambda \rightarrow {{\mathbb {R}}} \),

$$\begin{aligned} {\text {Var}}_{\varLambda }(f | \varOmega ^+_{\varLambda }) \leqslant \frac{1}{q^{O(1)}}{\mathcal {D}}_\varLambda ^{\mathrm {FA-1f}}(f); \end{aligned}$$(3.7)

(2) for any \(f:\varOmega _\varLambda \rightarrow {{\mathbb {R}}} \),

$$\begin{aligned} {\text {Var}}_{\varLambda }(f) \leqslant \frac{1}{q^{O(1)}}{\mathcal {D}}_\varLambda ^{\mathrm {FA-1f},z}(f), \end{aligned}$$(3.8)

where the constants in the O(1) do not depend on z or \(\varLambda \).

Proof

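Inequality (3.7) is proved in [8, Theorem 6.1]. In order to prove (3.8), consider the auxiliary Dirichlet form

$$\begin{aligned} {\mathcal {D}}_{\mathrm {aux}}(f)=\mu _\varLambda ({\text {Var}}_z(f))+\mu _\varLambda (\varOmega ^+_\varLambda )\,{\text {Var}}_\varLambda (f | \varOmega ^+_\varLambda ). \end{aligned}$$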

The corresponding ergodic, continuous-time Markov chain on \(\varOmega _\varLambda \), reversible w.r.t. \(\mu _\varLambda \), updates the state of z at rate 1 and, if \(\omega \in \varOmega ^+_\varLambda \), resamples the entire configuration from \(\mu _\varLambda (\cdot | \varOmega ^+_\varLambda )\). Observe that two copies of this chain performing the same updates simultaneously couple as soon as the state of z is updated to 0 (so that both copies lie in \(\varOmega ^+_\varLambda \)) and the entire configuration is then resampled, giving the same configuration in \(\varOmega _\varLambda ^+\) for both copies. The first of these two updates occurs at rate q, so after time 1/q there is probability \(\varOmega (1)\) that this sequence of two consecutive updates has been performed. Thus, by [29, Corollary 5.3 and Theorem 12.4], the relaxation time of this chain is O(1/q).

Hence,

where the second inequality follows from (3.7). We may then conclude by observing that \(\mu _\varLambda ({\text {Var}}_z(f))+\mu _\varLambda (\varOmega ^+_\varLambda ){\mathcal {D}}_\varLambda ^{\mathrm {FA-1f}}(f)\leqslant 2{\mathcal {D}}_\varLambda ^{\mathrm {FA-1f},z}(f)\). \(\square \)
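
The interpretation in Remark 3.1 can be made concrete by computing the relaxation time of FA-1f with one unconstrained site on a very small set. The following is a minimal sketch, assuming NumPy; \(\varLambda \) is taken to be a path of L sites and z its left endpoint purely for illustration, and the function name is hypothetical. Two exact sanity checks are available: for L = 2 the chain is a two-variable chain of the type considered in Proposition 3.3 below, with spectral gap \(1-\sqrt{1-q}\); for \(L\geqslant 2\), the test function depending only on the far endpoint gives \({\text {Var}}/{\mathcal {D}}=1/q\), hence a relaxation time at least 1/q.

```python
import itertools

import numpy as np


def fa1f_relaxation_time(L, q, z=0):
    """Relaxation time of FA-1f on the path {0, ..., L-1} (a connected subset of Z^2)
    with site z unconstrained. As in the text, 0 = infected and each site is infected
    with probability q under the product measure."""
    states = list(itertools.product((0, 1), repeat=L))
    index = {s: i for i, s in enumerate(states)}
    law = {0: q, 1: 1 - q}
    pi = np.array([np.prod([law[v] for v in s]) for s in states])
    Q = np.zeros((len(states), len(states)))
    for s in states:
        i = index[s]
        for x in range(L):
            neighbours = [y for y in (x - 1, x + 1) if 0 <= y < L]
            # constraint: x == z, or at least one neighbour of x in the path is infected
            if x == z or any(s[y] == 0 for y in neighbours):
                t = list(s)
                t[x] = 1 - s[x]
                # resampling at rate 1 is the same as flipping at rate law(new value)
                Q[i, index[tuple(t)]] += law[t[x]]
        Q[i, i] = -Q[i].sum()
    d = np.sqrt(pi)
    S = d[:, None] * Q / d[None, :]
    eig = np.linalg.eigvalsh((S + S.T) / 2)
    return 1.0 / -eig[-2]


for q in (0.4, 0.2, 0.1):
    print(q, fa1f_relaxation_time(4, q))
```

One expects the printed values to grow as q decreases, in line with the \(q^{-O(1)}\) bound in (3.8); on such tiny sets this is only a qualitative illustration.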

Our second tool is a general constrained Poincaré inequality for two independent random variables.

Proposition 3.3

(See [25, Lemma 3.10]). Let \(X_1,X_2\) be two independent random variables taking values in two finite sets \({{\mathbb {X}}} _1,{{\mathbb {X}}} _2\) respectively. Let also \({\mathcal {H}}\subset {{\mathbb {X}}} _{1}\) with \({{\mathbb {P}}} (X_1\in {\mathcal {H}})>0\). Then for any \(f:{{\mathbb {X}}} _1\times {{\mathbb {X}}} _2\rightarrow {{\mathbb {R}}} \) it holds

with \({\text {Var}}_i(f)= {\text {Var}}(f(X_1,X_2) | X_i)\).

Roughly speaking, this states that the chain that updates \(X_1\) at rate 1, and updates \(X_2\) at rate 1 only if \(X_1\in {\mathcal {H}}\), has relaxation time of order \(1/{{\mathbb {P}}} (X_1\in {\mathcal {H}})\).
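
For this two-variable chain the relaxation time can actually be computed exactly: restricting the generator to functions of the form \(g(X_1)h(X_2)\) with h centred shows that the spectral gap equals \(1-\sqrt{1-{{\mathbb {P}}} (X_1\in {\mathcal {H}})}\), so that the relaxation time multiplied by \({{\mathbb {P}}} (X_1\in {\mathcal {H}})\) lies in [1, 2]. The following minimal NumPy sketch checks this numerically; the laws, the set \({\mathcal {H}}\) and the function name are illustrative assumptions.

```python
import itertools
import math

import numpy as np


def two_block_relaxation_time(p1, p2, H):
    """Relaxation time of the chain on X1 x X2 (product law p1 x p2) that resamples
    X1 at rate 1 and resamples X2 at rate 1 only while X1 lies in H."""
    states = list(itertools.product(range(len(p1)), range(len(p2))))
    index = {s: i for i, s in enumerate(states)}
    pi = np.array([p1[a] * p2[b] for a, b in states])
    Q = np.zeros((len(states), len(states)))
    for a, b in states:
        i = index[(a, b)]
        for a2, w in enumerate(p1):  # unconstrained resampling of X1
            Q[i, index[(a2, b)]] += w
        if a in H:  # constrained resampling of X2
            for b2, w in enumerate(p2):
                Q[i, index[(a, b2)]] += w
        Q[i, i] -= Q[i].sum()  # generator rows sum to zero
    d = np.sqrt(pi)
    S = d[:, None] * Q / d[None, :]
    eig = np.linalg.eigvalsh((S + S.T) / 2)
    return 1.0 / -eig[-2]


p1, p2, H = [0.5, 0.3, 0.2], [0.3, 0.7], {2}
print(two_block_relaxation_time(p1, p2, H), 1 / (1 - math.sqrt(1 - 0.2)))
```

Note that the answer does not depend on the law of \(X_2\), only on \({{\mathbb {P}}} (X_1\in {\mathcal {H}})\).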

3.3 Constrained block chains

In this section we define two auxiliary constrained reversible Markov chains and give an upper bound for the corresponding Poincaré constants (Propositions 3.5 and 3.7).

Let \((\varOmega _i,\pi _i)_{i=1}^3\) be finite probability spaces and let \((\varOmega ,\pi )\) denote the associated product space. For \(\omega \in \varOmega \) we write \(\omega _i\in \varOmega _i\) for its \(i^{\mathrm{th}}\) coordinate and we assume for simplicity that \(\pi _i(\omega _i)>0\) for each \(\omega _i\). Fix \(\mathcal A_3\subset \varOmega _3\) and for each \(\omega _3\in {\mathcal {A}}_3\) consider an event \({\mathcal {B}}_{1,2}^{\omega _3}\subset \varOmega _1\times \varOmega _2\). Analogously, fix \({\mathcal {A}}_1\subset \varOmega _1\) and for each \(\omega _1\in {\mathcal {A}}_1\) consider an event \(\mathcal B_{2,3}^{\omega _1}\subset \varOmega _2\times \varOmega _3\). We then set

$$\begin{aligned} {\mathcal {H}}:=\big \{\omega \in \varOmega :\ \omega _3\in {\mathcal {A}}_3,\ (\omega _1,\omega _2)\in {\mathcal {B}}_{1,2}^{\omega _3}\big \},\qquad {\mathcal {K}}:=\big \{\omega \in \varOmega :\ \omega _1\in {\mathcal {A}}_1,\ (\omega _2,\omega _3)\in {\mathcal {B}}_{2,3}^{\omega _1}\big \} \end{aligned}$$

and let for any \(f:{\mathcal {H}}\cup {\mathcal {K}}\rightarrow {{\mathbb {R}}} \)

Observation 3.4

It is easy to check that \({\mathcal {D}}_{\mathrm {aux}}^{(1)}(f)\) is the Dirichlet form of the continuous-time Markov chain on \({\mathcal {H}}\cup {\mathcal {K}}\) in which, if \(\omega \in {\mathcal {H}}\), the pair \((\omega _1,\omega _2)\) is resampled at rate one from \(\pi _1\otimes \pi _2(\cdot | {\mathcal {B}}_{1,2}^{\omega _3})\) and, if \(\omega \in {\mathcal {K}}\), the pair \((\omega _2,\omega _3)\) is resampled at rate one from \(\pi _2\otimes \pi _3(\cdot | {\mathcal {B}}_{2,3}^{\omega _1})\). This chain is reversible w.r.t. \(\pi (\cdot | {\mathcal {H}}\cup {\mathcal {K}})\) and, unlike the constraints of a KCM, its constraints depend on the variables being updated.

Proposition 3.5

There exists a universal constant c such that the following holds. Suppose that there exist two events \({\mathcal {F}}_{1,2}\), \({\mathcal {F}}_{2,3}\) such that

and let

Then, for all \(f:{\mathcal {H}}\cup {\mathcal {K}}\rightarrow {{\mathbb {R}}} \),

Proof

Consider the Markov chain \((\omega (t))_{t\geqslant 0}\) determined by the Dirichlet form \({\mathcal {D}}_{\mathrm {aux}}^{(1)}\) as described in Observation 3.4. Given two arbitrary initial conditions \(\omega (0)\) and \(\omega '(0)\), we will construct a coupling of the two chains such that with probability \(\varOmega (1)\) we have \(\omega (t)=\omega '(t)\) for all \(t>T_{\mathrm {aux}}^{(1)}\). Standard arguments (see for example [29, Theorem 12.4 and Corollary 5.3]) then prove that for this chain it holds

and the conclusion of the proposition follows. To construct our coupling, we use the following representation of the Markov chain. We are given two independent Poisson clocks with rate one, and the chain transitions occur only at the clock rings. Suppose that the first clock rings. If the current configuration \(\omega \) does not belong to \({\mathcal {H}}\), the ring is ignored. Otherwise, a Bernoulli variable \(\xi \) with probability of success \(\pi ({\mathcal {F}}_{1,2} | {\mathcal {B}}_{1,2}^{\omega _3})\) is sampled. If \(\xi =1\), then the pair \((\omega _1,\omega _2)\) is resampled w.r.t. the measure \(\pi (\cdot | {\mathcal {F}}_{1,2},{\mathcal {B}}_{1,2}^{\omega _3})\), while if \(\xi =0\), then \((\omega _1,\omega _2)\) is resampled w.r.t. the measure \(\pi (\cdot | {\mathcal {F}}^c_{1,2},{\mathcal {B}}_{1,2}^{\omega _3})\). In doing so, the pair \((\omega _1,\omega _2)\) is resampled w.r.t. \(\pi (\cdot | {\mathcal {B}}_{1,2}^{\omega _3})\), as required. The case in which the second clock rings is analogous, with \({\mathcal {H}}\), \((\omega _1,\omega _2)\), \({\mathcal {F}}_{1,2}\) and \({\mathcal {B}}_{1,2}^{\omega _3}\) replaced by \({\mathcal {K}}\), \((\omega _2,\omega _3)\), \({\mathcal {F}}_{2,3}\) and \({\mathcal {B}}_{2,3}^{\omega _1}\) respectively.
It is important to notice that \(\pi (\cdot | {\mathcal {F}}_{1,2},{\mathcal {B}}_{1,2}^{\omega _3})=\pi (\cdot | {\mathcal {F}}_{1,2})\) for all \(\omega _3\in {\mathcal {A}}_3\), as, by assumption, \({\mathcal {F}}_{1,2}\subset \bigcap _{\omega _3\in {\mathcal {A}}_3}{\mathcal {B}}_{1,2}^{\omega _3}\). Similarly, \(\pi (\cdot | {\mathcal {F}}_{2,3},{\mathcal {B}}_{2,3}^{\omega _1})=\pi (\cdot | {\mathcal {F}}_{2,3})\) for all \(\omega _1\in {\mathcal {A}}_1\).

In our coupling both chains use the same clocks. Suppose that the first clock rings and that the current pair of configurations is \((\omega ,\omega ')\). Assume also that at least one of them, say \(\omega \), is in \({\mathcal {H}}\) (otherwise, both remain unchanged). In order to construct the coupling update we proceed as follows.

- If \(\omega '\notin {\mathcal {H}}\), then \(\omega \) is updated as described above, while \(\omega '\) is left unchanged.
- If \(\omega '\in {\mathcal {H}}\), we first maximally couple the two Bernoulli variables \(\xi ,\xi '\) corresponding to \(\omega ,\omega '\) respectively. Then:
  - if \(\xi =\xi '=1\), we update both \((\omega _1,\omega _2)\) and \((\omega '_1,\omega '_2)\) to the same pair \((\eta _1,\eta _2)\in {\mathcal {F}}_{1,2}\), chosen with probability \(\pi ((\eta _1,\eta _2) | {\mathcal {F}}_{1,2})\);
  - otherwise, we resample \((\omega _1,\omega _2)\) and \((\omega '_1,\omega '_2)\) independently from their respective laws given \(\xi ,\xi '\).

The case in which the ring comes from the second clock is analogous. The coupling is then the Markov chain on \(\varOmega \times \varOmega \) with the transition rates described above. Suppose now that there are three consecutive rings occurring at times \(t_1<t_2<t_3\) such that:

- the first and last rings come from the first clock, while the second ring comes from the second clock, and
- the Bernoulli variables sampled (if any) at times \(t_1\), \(t_2\) and \(t_3\) all take the value one.

Then we claim that at time \(t_3\) the two copies are coupled.

To prove the claim, we begin by observing that after the first update at \(t_1\) both copies of the coupled chain belong to \({\mathcal {K}}\). Here we use (3.9). Indeed, if the first update is successful for \(\omega \) (i.e. \(\omega \in {\mathcal {H}}\)), then the updated configuration belongs to \({\mathcal {F}}_{1,2}\times \{\omega _3\}\subset {\mathcal {K}}\), because of our assumption \(\xi =1\). If, on the contrary, the first update is ignored (i.e. \(\omega \not \in {\mathcal {H}}\)), then, since \(\omega \in {\mathcal {H}}\cup {\mathcal {K}}\), we have \(\omega \in {\mathcal {K}}\setminus {\mathcal {H}}\) before and after the update. The same applies to \(\omega '\).

Next, using again the assumption on the Bernoulli variables together with the previous observation, we get that after the second ring the two current configurations agree on the second and third coordinates. Moreover, both copies belong to \({\mathcal {H}}\) thanks to (3.10). Finally, at the third ring, using once more the assumption on the Bernoulli variables, both copies are updated to the same first and second coordinates; since they already agreed on the third one, they coincide.

In order to conclude the proof of the proposition it is enough to observe that for any given time interval \(\varDelta \) of length one the probability that there exist \(t_1<t_2<t_3\) in \(\varDelta \) satisfying the requirements of the claim is bounded from below by

for some constant \(c>0\). \(\square \)
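
The clock part of this last step only requires that, within a unit time interval, the rings contain the consecutive pattern "first clock, second clock, first clock" with probability bounded away from zero. The following pure-Python sketch computes that probability exactly (the superposition of the two clocks is a Poisson process of rate 2 whose rings carry independent uniform labels, and a small pattern-matching automaton tracks the labels) and cross-checks it by simulation. The function names are illustrative, and the Bernoulli successes, whose probabilities also enter the constant c, are deliberately not modelled.

```python
import math
import random


def prob_given_n_rings(n, pattern, n_clocks):
    """P(n i.i.d. uniform labels in {1..n_clocks} contain `pattern` as consecutive labels)."""
    m = len(pattern)

    def step(k, label):
        # length of the longest prefix of `pattern` that is a suffix of pattern[:k] + (label,)
        seq = tuple(pattern[:k]) + (label,)
        for j in range(min(m, len(seq)), -1, -1):
            if seq[len(seq) - j:] == tuple(pattern[:j]):
                return j
        return 0

    dist = [1.0] + [0.0] * m  # dist[k] = P(current matched prefix has length k)
    for _ in range(n):
        new = [0.0] * (m + 1)
        new[m] = dist[m]  # once the pattern has appeared it stays found
        for k in range(m):
            for label in range(1, n_clocks + 1):
                new[step(k, label)] += dist[k] / n_clocks
        dist = new
    return dist[m]


def prob_in_unit_interval(pattern=(1, 2, 1), n_clocks=2, rate=1.0, n_max=80):
    """Exact probability that the rings in [0, 1] contain `pattern` consecutively."""
    total = n_clocks * rate  # superposition of independent Poisson clocks
    return sum(
        math.exp(-total) * total ** n / math.factorial(n)
        * prob_given_n_rings(n, pattern, n_clocks)
        for n in range(n_max + 1)
    )


def simulate(pattern=(1, 2, 1), n_clocks=2, rate=1.0, trials=50_000, seed=0):
    """Monte Carlo estimate of the same probability."""
    rng = random.Random(seed)
    m, hits = len(pattern), 0
    for _ in range(trials):
        labels, t = [], rng.expovariate(n_clocks * rate)
        while t < 1.0:
            labels.append(rng.randint(1, n_clocks))
            t += rng.expovariate(n_clocks * rate)
        if any(tuple(labels[i:i + m]) == tuple(pattern) for i in range(len(labels))):
            hits += 1
    return hits / trials


print(prob_in_unit_interval(), simulate())
```

Both numbers should be about 0.065. The same functions with `pattern=(1, 2, 3, 4)` and `n_clocks=4` give the probability of the four consecutive rings used in the proof of Proposition 3.7.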

In the same setting, consider two other events \({\mathcal {C}}_{1,2}\subset \varOmega _1\times \varOmega _2\), \({\mathcal {C}}_{2,3}\subset \varOmega _2\times \varOmega _3\) and let

The Dirichlet form of our second Markov chain on \({\mathcal {M}}\cup {\mathcal {N}}\) is then

Observation 3.6

Similarly to the first case, the continuous-time chain defined by (3.11) is reversible w.r.t. \(\pi (\cdot | {\mathcal {M}}\cup {\mathcal {N}})\) and it can be described as follows. If \(\omega \in {\mathcal {M}}\), then at rate one \((\omega _1,\omega _2)\) is resampled w.r.t. \(\pi _1\otimes \pi _2(\cdot | {\mathcal {C}}_{1,2})\) and, independently at rate one, \(\omega _3\) is resampled w.r.t. \(\pi _3(\cdot | {\mathcal {A}}_3)\). Similarly, and independently of the previous updates, if \(\omega \in {\mathcal {N}}\), then at rate one \((\omega _2,\omega _3)\) is resampled w.r.t. \(\pi _2\otimes \pi _3(\cdot | {\mathcal {C}}_{2,3})\) and, independently at rate one, \(\omega _1\) is resampled w.r.t. \(\pi _1(\cdot | {\mathcal {A}}_1)\).

Proposition 3.7

There exists a universal constant c such that the following holds. Suppose that there exist an event \({\hat{{\mathcal {C}}}}_{1,2}\subset {\mathcal {C}}_{1,2}\) and a collection \(({\mathcal {A}}_3^{\omega _1,\omega _2})_{(\omega _1,\omega _2)\in {\hat{{\mathcal {C}}}}_{1,2}}\) of subsets of \({\mathcal {A}}_3\) such that

and let

Then, for all \(f:{\mathcal {M}}\cup {\mathcal {N}}\rightarrow {{\mathbb {R}}} \),

Proof

We proceed as in the proof of Proposition 3.5, with the following representation of the Markov chain. We are given four independent Poisson clocks of rate one, and each clock comes equipped with a collection of i.i.d. random variables. The four independent collections, the i-th one being attached to the i-th clock, are

where the laws of the collections are \(\pi _1\otimes \pi _2(\cdot | {\mathcal {C}}_{1,2})\), \(\pi _3(\cdot | {\mathcal {A}}_3)\), \(\pi _2\otimes \pi _3(\cdot | {\mathcal {C}}_{2,3})\) and \(\pi _1(\cdot | {\mathcal {A}}_1)\) respectively.

At each ring of the first and second clocks the configuration is updated with the variables from the corresponding collection iff \(\omega \in {\mathcal {M}}\). Similarly for the third and fourth clocks with \({\mathcal {N}}\). In order to couple different initial conditions, we use the same collections of clock rings and update configurations.

Suppose now that there are four consecutive rings \(t_1<t_2<t_3<t_4\), coming from the first, second, third and fourth clocks in that order, such that:

- at \(t_1\) the proposed update \((\eta _1,\eta _2)\) of the first two coordinates belongs to \({\hat{{\mathcal {C}}}}_{1,2}\), and
- at \(t_2\) the proposed update \(\eta _3\) of the third coordinate belongs to \({\mathcal {A}}_3^{\eta _1,\eta _2}\).

We then claim that after \(t_4\) all initial conditions \(\omega \) are coupled. To prove this, we first observe that after the second ring each chain belongs to \({\mathcal {N}}\). Indeed, if \(\omega \not \in {\mathcal {M}}\), then, since \(\omega \in {\mathcal {M}}\cup {\mathcal {N}}\), the first two proposed updates are ignored and \(\omega \in {\mathcal {N}}\setminus {\mathcal {M}}\) is left unchanged. If, on the contrary, \(\omega \in {\mathcal {M}}\), then both updates are successful and the configuration is updated to \((\eta _1,\eta _2,\eta _3)\in {\hat{{\mathcal {C}}}}_{1,2}\times {\mathcal {A}}^{\eta _1,\eta _2}_3\subset {\mathcal {M}}\cap {\mathcal {N}}\) by (3.12).

Since after \(t_2\) the state of the chain is necessarily in \({\mathcal {N}}\), the third and fourth updates to states \((\eta '_2,\eta '_3)\) and \(\eta '_1\) respectively are both successful and thus any initial condition leads to the state \((\eta '_1,\eta '_2,\eta '_3)\) after \(t_4\), which proves the claim. The proof is then completed as in Proposition 3.5. \(\square \)

4 Mobile droplets

This section, which represents the core of the paper, is split into two parts:

- the definition of mobile droplets together with the choice of the mesoscopic critical length scale \(L_{\mathrm {D}}\) characterising their linear size;
- the analysis of two key properties of mobile droplets, namely:
  - their equilibrium probability \(\rho _{\mathrm {D}}\);
  - the relaxation time of FA-2f in a box of linear size \(\varTheta (L_{\mathrm {D}})\) conditionally on the presence of a mobile droplet.

Mobile droplets are defined as boxes of suitable linear size in which the configuration of infections is super-good (see Definition 4.5). In turn, the super-good event (see Sect. 4.2) is constructed recursively via a multi-scale procedure on a sequence of exponentially increasing length scales \((\ell _n)_{n=1}^N\) (see Definition 4.2). While clearly inspired by the classical procedure used in bootstrap percolation [28], our construction has an important novelty: the freedom that we allow for the position of the super-good core of scale \(\ell _n\) inside the super-good region of scale \(\ell _{n+1}\). The final scale \(\ell _N\) corresponds to the critical scale \(L_{\mathrm {D}}\) mentioned above, and a convenient choice is \(L_{\mathrm {D}}\sim q^{-17/2}\) (see (4.4)). There is nothing special about the exponent 17/2: any sufficiently large exponent would leave our results unchanged. In fact, the choice of \(L_{\mathrm {D}}\) is dictated only by two requirements: that w.h.p. there are no \(L_{\mathrm {D}}\) consecutive healthy lattice sites within distance \(\exp (\log ^{O(1)}(1/q)/q)\) of the origin, and that \(L_{\mathrm {D}}= e^{o(1/q)}\). Finally, similarly to their bootstrap percolation counterparts, the probability \(\rho _{\mathrm {D}}\) of mobile droplets crucially satisfies \(\rho _{\mathrm {D}}\simeq ({\tau _0^{\mathrm {BP}}} )^{-2}\) (see Proposition 4.6) and, more generally, for FA-2f in dimension d it satisfies \(\rho _{\mathrm {D}}\simeq ({\tau _0^{\mathrm {BP}}} )^{-d}\).
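
To see why a power of 1/q suffices for the first requirement, here is a back-of-the-envelope union bound, assuming for illustration that \(L_{\mathrm {D}}=q^{-17/2}\) exactly and writing \(r=\exp (\log ^{C}(1/q)/q)\) for some fixed \(C>0\) in place of the unspecified \(O(1)\) exponent. There are at most \(2(2r+1)^2\) horizontal or vertical runs of \(L_{\mathrm {D}}\) consecutive sites starting within distance r of the origin, and each is fully healthy with probability \((1-q)^{L_{\mathrm {D}}}\leqslant e^{-qL_{\mathrm {D}}}=e^{-q^{-15/2}}\), so

$$\begin{aligned} \mu _q\big (\exists \text { a healthy run of length } L_{\mathrm {D}} \text { within distance } r\big )\leqslant 2(2r+1)^2e^{-q^{-15/2}}=\exp \Big (O\big (\log ^{C}(1/q)/q\big )-q^{-15/2}\Big )\xrightarrow [q\rightarrow 0]{}0. \end{aligned}$$

Moreover \(L_{\mathrm {D}}=e^{\frac{17}{2}\log (1/q)}=e^{o(1/q)}\). For this requirement alone, the same computation works for any \(L_{\mathrm {D}}=q^{-a}\) with \(a>2\), since then \(qL_{\mathrm {D}}=q^{1-a}\) dominates \(\log ^{C}(1/q)/q\).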

The extra degree of freedom in the construction of the super-good event provides a much more flexible structure that can be moved around using the FA-2f moves without going through the bottleneck corresponding to the creation of a brand new additional droplet nearby. The main consequence of this feature (see Proposition 4.7) is that the relaxation time of the FA-2f dynamics in a box of side \(L_{\mathrm {D}}\) conditioned on being super-good is sub-leading w.r.t. \(\rho _{\mathrm {D}}^{-1}\) as \(q\rightarrow 0\) and it contributes only to the second order term in Theorem 1.3.

4.1 Notation

For any integer n, we write [n] for the set \(\{1,\dots ,n\}\). We denote by \(\mathbf {e}_1, \mathbf {e}_2\) the standard basis of \({{\mathbb {Z}}} ^2\), and write d(x, y) for the Euclidean distance between \(x,y\in {{\mathbb {Z}}} ^{{2}}\). Given a set \(\varLambda \subset {{\mathbb {Z}}} ^2\), we set \(\partial \varLambda :=\{y\in {{\mathbb {Z}}} ^{2}\setminus \varLambda ,d(y,\varLambda )=1\}\). Given two positive integers a, b, we write \(R(a,b)\subset {\mathbb {Z}}^2\) for the rectangle \([a]\times [b]\) and we refer to a, b as the width and height of R respectively. We also write \(\partial _r R\) (\(\partial _l R\)) for the column \(\{a+1\}\times [b]\) (the column \(\{0\}\times [b]\)), and \(\partial _u R\) (\(\partial _d R\)) for the row \([a]\times \{b+1\}\) (the row \([a]\times \{0\}\)). Similarly for any rectangle of the form \(R+x,x\in {{\mathbb {Z}}} ^2\).

Given \(\varLambda \subset {{\mathbb {Z}}} ^2\) and \(\omega \in \varOmega \), we write \(\omega _{\varLambda }\in \varOmega _{\varLambda }:=\{0,1\}^\varLambda \) for the restriction of \(\omega \) to \(\varLambda \). The configuration (in \(\varOmega \) or \(\varOmega _\varLambda \)) identically equal to one is denoted by \({\mathbf {1}}\). Given disjoint \(\varLambda _1,\varLambda _2\subset {{\mathbb {Z}}} ^2\), \(\omega ^{(1)}\in \varOmega _{\varLambda _1}\) and \(\omega ^{(2)}\in \varOmega _{\varLambda _2}\), we write \(\omega ^{(1)}\cdot \omega ^{(2)}\in \varOmega _{\varLambda _1\cup \varLambda _2}\) for the configuration equal to \(\omega ^{(1)}\) in \(\varLambda _1\) and to \(\omega ^{(2)}\) in \(\varLambda _2\). We write \(\mu _\varLambda \) for the marginal of \(\mu _q\) on \(\varOmega _\varLambda \) and \({\text {Var}}_\varLambda (f)\) for the variance of f w.r.t. \(\mu _\varLambda \), given the variables \((\omega _x)_{x\notin \varLambda }\).

4.2 Super-good event and mobile droplets

As anticipated, mobile droplets will be square regions of a certain side length in which the infection configuration satisfies a specific condition dubbed super-good. The latter in turn requires the definition of a key event for rectangles, \(\omega \)-traversability (see also [28]), together with a sequence of exponentially increasing length scales.

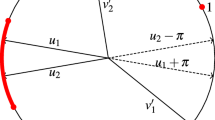

Fig. 1. Black circles denote infected sites. The boundary condition \(\omega \) in the figure is fully infected on \(\partial _r R\) and fully healthy elsewhere. The rectangle R is \(\omega \)-right-traversable (i.e. \({\mathcal {T}}_\rightarrow ^\omega (R)\) occurs), but it is neither \(\omega \)-up-traversable nor \(\omega \)-left-traversable. It is also down-traversable (\({\mathcal {T}}_\downarrow (R)\) occurs) but not traversable in any other direction.

Definition 4.1

(\(\omega \)-Traversability). Fix a rectangle \(R=R(a_1,a_2)+x\) together with \(\eta \in \varOmega _R\) and a boundary configuration \(\omega \in \varOmega _{\partial R} \). We say that R is \(\omega \)-right-traversable for \(\eta \) if each pair of adjacent columns of \(R\cup \partial _r R\) contains at least one infection in \(\eta \cdot \omega \) (see Fig. 1). We denote this event by \({{\mathcal {T}}}^\omega _{\rightarrow }(R)\subset \varOmega _R\).

We say that R is right-traversable for \(\eta \) if it is \({\mathbf {1}}\)-right-traversable or, equivalently, if it is \(\omega \)-right-traversable for all \(\omega \). We denote this event by \({\mathcal {T}}_{\rightarrow }(R)\equiv {\mathcal {T}}_\rightarrow ^{\mathbf{1}}(R)\subset \varOmega _R\).

Up/left/down-traversability and \(\omega \)-up/left/down-traversability are defined identically up to rotating \(\eta \) and \(\omega \) appropriately (see Fig. 1).

In figures we depict traversability by solid arrows and \(\omega \)-traversability by dashed arrows (see Fig. 1). Notice that right-traversability requires that the rightmost column contains an infection. Similarly for the other directions.
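
Under \(\mu _q\) with the fully healthy boundary condition \({\mathbf {1}}\), the columns of \(R(a,b)\) are independent and each contains an infection with probability \(p=1-(1-q)^b\); by Definition 4.1, \({\mathcal {T}}_\rightarrow (R(a,b))\) then says that column a is infected and no two adjacent columns are both free of infections. The following pure-Python sketch (function names are illustrative) computes \(\mu _q({\mathcal {T}}_\rightarrow (R(a,b)))\) with a two-state recursion and cross-checks it against a brute-force implementation of the definition, which also accepts a boundary condition \(\omega \). As in the text, infected sites are those in state 0.

```python
import itertools


def is_right_traversable(infected, a, b, omega=frozenset()):
    """Definition 4.1 for R(a, b) = [a] x [b]: every pair of adjacent columns of
    R together with the boundary column a + 1 contains an infection of eta.omega.
    `infected` lists the infected sites of eta, `omega` those of the boundary."""
    def column_infected(c):
        source = infected if c <= a else omega
        return any((c, y) in source for y in range(1, b + 1))
    return all(column_infected(c) or column_infected(c + 1) for c in range(1, a + 1))


def prob_right_traversable(a, b, q):
    """mu_q(T_->(R(a, b))) with fully healthy boundary, via a two-state recursion."""
    p = 1 - (1 - q) ** b  # a column contains at least one infection
    last_inf, last_healthy = p, 1 - p  # after scanning column 1
    for _ in range(a - 1):
        # a column without infections is only allowed right after an infected one
        last_inf, last_healthy = (last_inf + last_healthy) * p, last_inf * (1 - p)
    return last_inf  # the boundary column is healthy, so column a must be infected


def brute_force_prob(a, b, q):
    sites = [(x, y) for x in range(1, a + 1) for y in range(1, b + 1)]
    total = 0.0
    for bits in itertools.product((0, 1), repeat=len(sites)):  # 0 = infected
        infected = {s for s, v in zip(sites, bits) if v == 0}
        if is_right_traversable(infected, a, b):
            total += q ** len(infected) * (1 - q) ** (len(sites) - len(infected))
    return total


print(prob_right_traversable(3, 2, 0.3), brute_force_prob(3, 2, 0.3))
```

Passing a nontrivial `omega`, e.g. an infected site on the boundary column, can turn `False` into `True`, mirroring the difference between \({\mathcal {T}}_\rightarrow \) and \({\mathcal {T}}^\omega _\rightarrow \) illustrated in Fig. 1.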

Definition 4.2

(Length scales and nested rectangles). For every integer n we set (see Footnote 3)

and

(see Fig. 2). We say that a rectangle R is of class n if there exist \(w,z\in {{\mathbb {Z}}} ^2\) such that \(\varLambda ^{(n-1)}+w\subsetneq R\subset \varLambda ^{(n)}+z\). We refer to single sites as rectangles of class 0.

Note that \((\varLambda ^{(2m)})_{m\geqslant 0}\) is a sequence of squares, while \((\varLambda ^{(2m+1)})_{m\geqslant 0}\) is a sequence of rectangles elongated horizontally and \(\varLambda ^{(n_1)}\subset \varLambda ^{(n_2)}\) if \(n_1<n_2\). Moreover, for \(n=2m> 0\), a rectangle of class n is a rectangle of width \(\ell _m\) and height \(a_2\in (\ell _{m-1},\ell _m]\) and for \(n=2m+1\) it is a rectangle of height \(\ell _m\) and width \(a_1\in (\ell _m,\ell _{m+1}]\).

We are now ready to introduce the key notion of the \(\omega \)-super-good event on different scales. This event is defined recursively on n and it has a hierarchical structure. Roughly speaking, a rectangle R of the form \(R=\varLambda ^{(n)}+x, x\in {{\mathbb {Z}}} ^2\), is \(\omega \)-super-good if it contains a \({\mathbf {1}}\)-super-good rectangle \(R'\) of the form \(R'=\varLambda ^{(n-1)}+x'\) called the core and outside the core it satisfies certain \(\omega \)-traversability conditions (see Fig. 2).

Fig. 2. An example of a super-good configuration in the square \(\varLambda ^{(6)}\). The black square, of the form \(\varLambda ^{(2)}+x\), is completely infected and it is a super-good core for the rectangle of the form \(\varLambda ^{(3)}+x\) formed by it together with the two hatched rectangles. This rectangle of the form \(\varLambda ^{(3)}+x\) is also super-good because of the right/left-traversability of the hatched parts (arrows), and it is a super-good core for the square containing it, and so on.

Definition 4.3

(\(\omega \)-Super-good rectangles). Let us fix an integer \(n\geqslant 0\), a rectangle \(R=R(a_1,a_2)+x\) of class n and \(\omega \in \varOmega _{\partial R}\). We say that R is \(\omega \)-super-good for \(\eta \in \varOmega _{R}\) and denote the corresponding event by \({\mathcal {S}}{\mathcal {G}}^\omega (R)\) if the following occurs in \(\eta \cdot \omega \).

- \(n=0\). In this case R consists of a single site and \({\mathcal {S}}{\mathcal {G}}^\omega (R)\) is the event that this site is infected.
- \(n=2m\). For any \(s\in [0,\ell _{m}-\ell _{m-1}]\) write \(R=C_s\cup (\varLambda ^{(n-1)}+ x+s\mathbf {e}_2)\cup D_s\), where \(C_s\) (\(D_s\)) is the part of R below (above) \(\varLambda ^{(n-1)}+x +s\mathbf {e}_2\). With this notation we set

  $$\begin{aligned} {\mathcal {S}}{\mathcal {G}}^\omega _s(R):={\mathcal {T}}^{\omega }_{\downarrow }(C_s)\cap {\mathcal {S}}{\mathcal {G}}^\mathbf{1}(\varLambda ^{(n-1)}+x+s\mathbf {e}_2) \cap {\mathcal {T}}^{\omega }_{\uparrow }(D_s) \end{aligned}$$

  and let \({\mathcal {S}}{\mathcal {G}}^\omega (R)=\bigcup _{ s\in [0,\ell _{m}-\ell _{m-1}]}{\mathcal {S}}{\mathcal {G}}^\omega _s(R)\).
- \(n=2m+1\). In this case \({\mathcal {S}}{\mathcal {G}}^\omega (R)\) requires that there is a core in R of the form \(\varLambda ^{(n-1)}+ x+s\mathbf {e}_1, s\in [0,\ell _{m+1}-\ell _{m}]\), which is \({\mathbf {1}}\)-super-good, and the two remaining rectangles forming R to the left and to the right of the core are \(\omega \)-left-traversable and \(\omega \)-right-traversable respectively.

We will say that R is super-good if it is \(\mathbf{1}\)-super-good and denote the corresponding event by \({\mathcal {S}}{\mathcal {G}}(R)\).
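
Definition 4.3 translates directly into a recursive check. The following pure-Python sketch (function names illustrative) works under stated assumptions: since the displays defining \(\ell _n\) and \(\varLambda ^{(n)}\) are not reproduced here, it assumes \(\varLambda ^{(2m)}=R(\ell _m,\ell _m)\) and \(\varLambda ^{(2m+1)}=R(\ell _{m+1},\ell _m)\) with \(\ell _0=1\), consistently with the description of rectangles of class n, and lets the user supply small toy scales; it also adopts the convention that an empty rectangle is traversable. As in the text, infected sites are those in state 0; sites of R are read from `infected` and boundary sites from `omega`, an empty `omega` standing for \(\omega ={\mathbf {1}}\).

```python
def dims(n, ell):
    """(width, height) of Lambda^(n), ASSUMING Lambda^(2m) = R(ell_m, ell_m),
    Lambda^(2m+1) = R(ell_{m+1}, ell_m) and ell[0] = 1."""
    return ell[(n + 1) // 2], ell[n // 2]


def traversable(infected, x, y, w, h, direction, omega):
    """omega-traversability (Definition 4.1) of R(w, h) + (x, y) in `direction`."""
    if w == 0 or h == 0:
        return True  # ASSUMED convention: an empty rectangle is traversable
    if direction in ("left", "right"):
        lines = [[(c, r) for r in range(y + 1, y + h + 1)] for c in range(x + 1, x + w + 1)]
        extra = x + w + 1 if direction == "right" else x
        boundary = [(extra, r) for r in range(y + 1, y + h + 1)]
    else:
        lines = [[(c, r) for c in range(x + 1, x + w + 1)] for r in range(y + 1, y + h + 1)]
        extra = y + h + 1 if direction == "up" else y
        boundary = [(c, extra) for c in range(x + 1, x + w + 1)]
    hit = [any(s in infected for s in line) for line in lines]
    hit_boundary = any(s in omega for s in boundary)
    # the boundary line sits after the last line (right/up) or before the first (left/down)
    hit = hit + [hit_boundary] if direction in ("right", "up") else [hit_boundary] + hit
    return all(u or v for u, v in zip(hit, hit[1:]))


def super_good(infected, n, x, y, ell, omega=frozenset()):
    """Event SG^omega(Lambda^(n) + (x, y)) of Definition 4.3."""
    if n == 0:
        return (x + 1, y + 1) in infected
    w, h = dims(n, ell)
    cw, ch = dims(n - 1, ell)  # the core is a translate of Lambda^(n-1)
    if n % 2 == 0:  # core slides vertically: C_s below it, D_s above it
        return any(
            traversable(infected, x, y, w, s, "down", omega)
            and super_good(infected, n - 1, x, y + s, ell)
            and traversable(infected, x, y + s + ch, w, h - s - ch, "up", omega)
            for s in range(h - ch + 1)
        )
    return any(  # n odd: core slides horizontally
        traversable(infected, x, y, s, h, "left", omega)
        and super_good(infected, n - 1, x + s, y, ell)
        and traversable(infected, x + s + cw, y, w - s - cw, h, "right", omega)
        for s in range(w - cw + 1)
    )


ell = [1, 2, 4]  # toy scales, NOT the scales of Definition 4.2
eta = {(1, 1), (2, 1), (1, 2), (3, 3), (4, 4)}
print(super_good(eta, 4, 0, 0, ell))
```

The cores are always checked with the default (healthy) boundary, which is what makes the event monotone in \(\omega \) as noted below.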

Note that \({\mathcal {S}}{\mathcal {G}}^\omega (R)\) is monotone in the boundary condition in the sense that if R is super-good then R is \(\omega \)-super-good for all \(\omega \in \varOmega _{\partial R}\). In order to make notation more concise, whenever a \({\mathcal {S}}{\mathcal {G}}\) event appears in an average or a variance with respect to a rectangle R, we leave out the argument R of the \({\mathcal {S}}{\mathcal {G}}\) event, unless confusion arises. For example, \(\mu _R({\mathcal {S}}{\mathcal {G}})\) will stand for \(\mu _R({\mathcal {S}}{\mathcal {G}}(R))\).

Remark 4.4

(Irreducibility of the FA-2f chain in \({\mathcal {S}}{\mathcal {G}}^\omega (R)\)) It is not difficult to verify that for all \(\eta \in {\mathcal {S}}{\mathcal {G}}^\omega (R)\), there exists a sequence of legal updates that transforms \(\eta \) into the fully infected configuration. Since the FA-2f dynamics is reversible, the above property implies that the FA-2f chain in R restricted to \({\mathcal {S}}{\mathcal {G}}^\omega (R)\) is irreducible.

Now let

and observe that

Definition 4.5

(Mobile droplets). Given \(\omega \in \varOmega \), a mobile droplet for \(\omega \) is any square R of the form \(R=\varLambda ^{(2N)}+x\) for which \(\omega _R\in {\mathcal {S}}{\mathcal {G}}(R)\). We set \(\rho _{\mathrm {D}}=\mu _{\varLambda ^{(2N)}}({\mathcal {S}}{\mathcal {G}})\) to be the probability of a mobile droplet.

The first key property of mobile droplets we will need is the following.

Proposition 4.6

(Probability of mobile droplets). For all \(n\leqslant 2N\),

In particular, this lower bound holds for \(\rho _{\mathrm {D}}\).

The proof of Proposition 4.6 follows from standard 2-BP techniques and it is deferred to “Appendix A”. The second property of mobile droplets requires a bit of preparation.

For \(\varLambda \subset {\mathbb {Z}}^2\), \(\omega \in \varOmega _{{{\mathbb {Z}}} ^2\setminus \varLambda }\), \(\eta \in \varOmega \) and \(x\in \varLambda \) we denote by

with \(c_x\) defined in (3.3), so that \(c_x^{\varLambda ,\omega }\) encodes the constraint at x in \(\varLambda \) with boundary condition \(\omega \). Given a rectangle R of class n and \(\omega \in \varOmega _{{\mathbb {Z}}^2\setminus R}\), let \(\gamma ^\omega (R)\) be the smallest constant \(C\geqslant 1\) (see Footnote 4) such that the Poincaré inequality (recall Sect. 3.1)

holds for every \(f:\varOmega _R\rightarrow {{\mathbb {R}}} \). In the sequel we will sometimes refer to \(\gamma ^\omega (R)\) as the relaxation time of \({\mathcal {S}}{\mathcal {G}}^\omega (R)\). The fact that FA-2f restricted to \({\mathcal {S}}{\mathcal {G}}^\omega (R)\) is irreducible (see Remark 4.4) implies that \(\gamma ^\omega (R)\) is finite. However, proving a good upper bound on \(\gamma ^\omega (R)\) is quite hard.

Proposition 4.7

(Relaxation time of mobile droplets). For all \(n\leqslant 2N\)

In particular, recalling (4.3), on the final scale this yields

Remark 4.8

We stress an important difference in the definition of \(\gamma ^\omega (\varLambda ^{(n)})\) w.r.t. a similar definition in [25, (12)]. Indeed, in (4.5) the conditioning w.r.t. the super-good event \({\mathcal {S}}{\mathcal {G}}^\omega (R)\) appears in the l.h.s. and in the r.h.s. of the inequality, while in [25, (12)] the conditioning was absent in the r.h.s. Keeping the conditioning also in the r.h.s. is a delicate and important point if one wants to get a Poincaré constant which is sub-leading w.r.t. \(\rho _{\mathrm {D}}^{-1}\). Theorem 4.6 of [25] in the context of FA-2f would give a Poincaré constant bounded from above by \(\exp (\log (1/q)^3/q)\), much bigger than \(\rho _{\mathrm {D}}^{-1}\).

4.3 Proof of Proposition 4.7

The proof of the constrained Poincaré inequality of Proposition 4.7 is unfortunately rather long and technical but the main idea and technical ingredients can be explained as follows.

Given the recursive definition of the super-good event \({\mathcal {S}}{\mathcal {G}}^\omega (\varLambda ^{(n)})\) it is quite natural to try to bound from above its relaxation time in progressively larger and larger volumes. A high-level “dynamical intuition” here goes as follows. After every time interval of length \(\varTheta (\gamma ^{{\mathbf {1}}}(\varLambda ^{(n-1)}))\) the core of \(\varLambda ^{(n)}\), namely a super-good translate of \(\varLambda ^{(n-1)}\) inside \(\varLambda ^{(n)}\), will equilibrate under the FA-2f dynamics. Therefore, the relaxation time of \({\mathcal {S}}{\mathcal {G}}(\varLambda ^{(n)})\) should be at most \(T^{(n)}_{\mathrm{eff}}\times \gamma ^{{\mathbf {1}}}(\varLambda ^{(n-1)})\), where \(T^{(n)}_{\mathrm{eff}}\) is the time that it takes for the core to equilibrate its position inside \(\varLambda ^{(n)}\), assuming that at each time the infections inside it are at equilibrium. The main step necessary to transform this rather vague idea into a proof is as follows.

In order to analyse the characteristic time scale of the effective dynamics of a core, we need to improve and expand a well-established mathematical technique for KCM to relate the relaxation times of two \(\omega \)-super-good regions on different scales. Such a technique introduces various types of auxiliary constrained block chains and a large part of our argument is devoted to proving good bounds on their relaxation times (see Sect. 3). The main application of this technique to our concrete problem is summarised in Lemmas 4.9 and 4.10 below which easily imply Proposition 4.7. Let

The two key steps connecting the relaxation times of super-good rectangles of increasing length scale are as follows.

Lemma 4.9

(From \(\ell _{\lfloor n/2\rfloor }+1\) to \(\ell _{\lfloor n/2\rfloor +1}\)) For all \(0\leqslant n\leqslant 2N-1\)

Lemma 4.10

(From \(\ell _{\lfloor n/2\rfloor }\) to \(\ell _{\lfloor n/2\rfloor }+1\)) For all \(0\leqslant n\leqslant 2N-1\)

Proof of Proposition 4.7

Lemmas 4.9 and 4.10 combined imply that

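Thus, Proposition 4.7 follows by induction on n. The base case is \(\gamma ^\omega (\varLambda ^{(0)})=1\) for all \(\omega \in \varOmega _{{{\mathbb {Z}}} ^2\setminus \varLambda ^{(0)}}\): indeed, on \({\mathcal {S}}{\mathcal {G}}^\omega (\varLambda ^{(0)})\) the single site of \(\varLambda ^{(0)}\) is infected, so the l.h.s. of (4.5) is zero. \(\square \)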

Before proving Lemma 4.9 formally, let us provide an informal description of the argument. We seek to apply a bisection technique (see [12, 21]) proceeding by a further induction. At each step of this bisection, we divide by two the difference of the widths (or heights) between our current rectangle (initially \(\varLambda ^{(n+1)}\)) and \(\varLambda ^{(n,+)}\). In order to prove a recursive bound on the relaxation times \(\gamma ^\omega \) of the intermediate rectangles of class \(n+1\) arising in the process, we rely on Proposition 3.5 as follows.

We want to prove a Poincaré inequality on a larger rectangle, given such an inequality on a smaller one. We cover the larger rectangle with two overlapping copies of the smaller one. We then use the relaxation within the first copy to move the core of shape \(\varLambda ^{(n)}\), which witnesses that this copy is super-good, to the intersection of the two translates. This makes the second copy super-good and allows us to resample it as well, thanks to the lower-scale Poincaré inequality. Thus, the events \({\mathcal {F}}_{1,2}\) and \({\mathcal {F}}_{2,3}\) in Proposition 3.5 roughly correspond to finding the core in the aforementioned overlap region (see Fig. 3).

Proof of Lemma 4.9

Given \(0\leqslant n\leqslant 2N-1\), let \(K_n\) be the smallest integer \(K\geqslant 0\) such that \(\lceil (2/3)^{K} (\ell _{\lfloor n/2\rfloor +1}-\ell _{\lfloor n/2\rfloor })\rceil =1\) (if \(K_n=0\), there is nothing to prove, since \(\varLambda ^{(n,+)}=\varLambda ^{(n+1)}\)). Equations (4.1) and (4.3) give \(\max _{n\leqslant 2N-1}K_n \leqslant O(\log (1/q))\). Consider the (exponentially increasing) sequence

Fig. 3. The partition of \(R^{(k+1)}\) into the rectangles \(V_1,V_2,V_3\). Here we illustrate the event \({\mathcal {F}}_{1,2}\cap {\mathcal {A}}_3\). The grey region \(\varLambda ^{(n)}+s_k\mathbf {e}_1\) at the left boundary of \(V_2\) is \({\mathcal {S}}{\mathcal {G}}\), and the dashed arrows in \(V_1\) and \(V_3\) indicate their \(\omega \)-traversability. The solid arrow in \(V_2\setminus (\varLambda ^{(n)}+s_k\mathbf {e}_1)\) instead indicates the \({\mathbf {1}}\)-traversability of \(V_2\setminus (\varLambda ^{(n)}+s_k\mathbf {e}_1)\). Clearly the entire configuration belongs to the events \({\mathcal {H}}\) and \({\mathcal {K}}\) defined in (4.11), (4.12). Indeed, the two (\(\omega \)-)right-traversability events together imply the \(\omega \)-right-traversability of \((V_2\cup V_3)\setminus (\varLambda ^{(n)}+s_k\mathbf {e}_1)\).

and let \(s_k=d_{k+1}-d_{k}\) for \(k\leqslant K_n-1\). Next consider the collection \((R^{(k)})_{k=0}^{K_n}\) of rectangles of class \(n+1\) interpolating between \(\varLambda ^{(n,+)}\) and \(\varLambda ^{(n+1)}\) defined by

By construction, \(R^{(k)}\subset R^{(k+1)}\), \(R^{(0)}=\varLambda ^{(n,+)}\) and \(R^{(K_n)}=\varLambda ^{(n+1)}\). Finally, recall the events \({\mathcal {S}}{\mathcal {G}}^\omega (R)\) and \({\mathcal {S}}{\mathcal {G}}_s^\omega (R)\) constructed in Definition 4.3 for any rectangle R of class \(n+1\leqslant 2N\) and let

where \(\max _\omega \) is over all \(\omega \in \varOmega _{\partial R^{(k)}}\). In Corollary A.3 we prove that

uniformly over all rectangles R of class \(n+1\leqslant 2N\), all possible values of the offset s and all choices of the boundary configurations \(\omega ,\omega '\in \varOmega _{\partial R}\). As a consequence

With the above notation the key inequality for proving Lemma 4.9 is

for some universal constant \(C>0\). Recalling that \(R^{(0)}=\varLambda ^{(n,+)}\) and \(R^{(K_n)}=\varLambda ^{(n+1)}\), from (4.9) it follows that

which in turn implies Lemma 4.9 by (4.8) and \(K_n \leqslant O(\log (1/q))\).
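The integer \(K_n\) is a discrete logarithm in base 3/2 of \(D=\ell _{\lfloor n/2\rfloor +1}-\ell _{\lfloor n/2\rfloor }\): \(K_n\approx \log _{3/2} D\), so the bound \(K_n\leqslant O(\log (1/q))\) amounts to D being at most polynomial in 1/q. The following Python sketch (the function name `smallest_K` is ours, for illustration only) computes \(K_n\) with exact integer arithmetic.

```python
def smallest_K(D: int) -> int:
    """Smallest integer K >= 0 with ceil((2/3)**K * D) == 1, for an integer D >= 1.

    Since (2/3)**K * D > 0, its ceiling equals 1 exactly when
    (2/3)**K * D <= 1, i.e. when 2**K * D <= 3**K. Comparing integers
    avoids floating-point rounding.
    """
    if D < 1:
        raise ValueError("D must be a positive integer")
    K = 0
    while 2**K * D > 3**K:
        K += 1
    return K


# K grows like log(D) / log(3/2), i.e. logarithmically in D.
for D in (1, 2, 10, 100, 10**6):
    print(D, smallest_K(D))
```

For instance, `smallest_K(100)` returns 12, since \((3/2)^{11}<100\leqslant (3/2)^{12}\).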

The proof of (4.9), which for simplicity we detail only in the even case \(n=2m\), relies on the Poincaré inequality for a suitably chosen auxiliary block chain, proved in Proposition 3.5.

In order to exploit that proposition we partition \(R^{(k+1)}\) into three disjoint rectangles \(V_1\), \(V_2\), \(V_3\) as follows (see Fig. 3):

Then, given a boundary configuration \(\omega \in \varOmega _{\partial R^{(k+1)}}\), let

where \(\eta _i:=\eta _{V_i}\). In words, \({\mathcal {H}}\) requires that \(V_3\) is \(\omega \)-right-traversable and that \(R^{(k)}=V_1\cup V_2\) is \(\omega \cdot \eta _{R^{(k+1)}\setminus R^{(k)}}\)-super-good, and similarly for \({\mathcal {K}}\). Notice that \({\mathcal {H}}\cup {\mathcal {K}}={\mathcal {S}}{\mathcal {G}}^\omega (R^{(k+1)})\). Indeed, the width of \(V_2\) is \(\ell _m+2d_{k}-d_{k+1}\geqslant \ell _m\), and therefore any configuration in \({\mathcal {S}}{\mathcal {G}}^\omega (R^{(k+1)})\) necessarily contains a super-good core in either \(V_1\cup V_2\) or \(V_2\cup V_3\).
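Since \(V_2=(V_1\cup V_2)\cap (V_2\cup V_3)=R^{(k)}\cap (R^{(k)}+s_k\mathbf {e}_1)\), its width is that of \(R^{(k)}\) minus \(s_k=d_{k+1}-d_k\), and the condition \(\ell _m+2d_k-d_{k+1}\geqslant \ell _m\) is equivalent to \(d_{k+1}\leqslant 2d_k\). The Python sketch below checks this by brute force under a hypothetical choice, made purely for illustration: \(d_k=\lceil (2/3)^{K_n-k}D\rceil \) with \(D=\ell _{m+1}-\ell _m\), and \(R^{(k)}\) of width \(\ell _m+d_k\). This choice gives \(d_0=1\) and \(d_{K_n}=D\); the function names are ours.

```python
def interpolating_sequence(D: int) -> list[int]:
    """Hypothetical d_0, ..., d_K with d_k = ceil((2/3)**(K - k) * D), for D >= 1.

    K is the smallest integer K >= 0 with ceil((2/3)**K * D) == 1.
    The form of d_k is an assumption made for illustration only.
    """
    K = 0
    while 2**K * D > 3**K:
        K += 1
    # Exact integer ceiling of D * 2**j / 3**j, with j = K - k.
    return [-(-D * 2**(K - k) // 3**(K - k)) for k in range(K + 1)]


def v2_width_excess(d: list[int], k: int) -> int:
    """(width of V_2) - l_m = 2*d_k - d_{k+1}, assuming R^(k) has width l_m + d_k."""
    return 2 * d[k] - d[k + 1]


d = interpolating_sequence(10)
print(d)  # [1, 2, 2, 3, 5, 7, 10]
print(all(v2_width_excess(d, k) >= 0 for k in range(len(d) - 1)))  # True
```

The ceiling is the reason for using the factor 2/3 rather than 1/2 in this sketch: with ratio 3/2 between consecutive \(d_k\), rounding up never pushes \(d_{k+1}\) above \(2d_k\).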

We next introduce two additional events (see Fig. 3)

In words, \({\mathcal {F}}_{1,2}\) (\({\mathcal {F}}_{2,3}\)) consists of super-good configurations in \(V_1\cup V_2\) (\(V_2\cup V_3\)) with a super-good core of type \(\varLambda ^{(n)}\) inside \(V_2\) in the leftmost possible position. Monotonicity in the boundary condition easily implies that

and similarly for \({\mathcal {F}}_{2,3}\) (see Fig. 3).

We can now apply Proposition 3.5 with parameters \(\varOmega _i=\varOmega _{V_i}\) for \(i\in \{1,2,3\}\), \({\mathcal {A}}_1={\mathcal {T}}^\omega _\leftarrow (V_1)\), \({\mathcal {A}}_3={\mathcal {T}}^\omega _\rightarrow (V_3)\), \({\mathcal {B}}_{1,2}^{\eta _3}={\mathcal {S}}{\mathcal {G}}^{\eta _3\cdot \omega }(V_1\cup V_2)\), \({\mathcal {B}}_{2,3}^{\eta _1}={\mathcal {S}}{\mathcal {G}}^{\eta _1\cdot \omega }(V_2\cup V_3)\) and \({\mathcal {F}}_{1,2},{\mathcal {F}}_{2,3}\) from (4.13). We claim that

Indeed, the second equality follows from (4.13) together with the fact that \(V_1\cup V_2= R^{(k)}\) and \(V_2\cup V_3=R^{(k)}+s_k\mathbf {e}_1\), while the inequality follows from (4.7). For the inequality it suffices to use monotonicity in the boundary condition for the first term and to observe that \({\mathcal {S}}{\mathcal {G}}^{\eta _1\cdot \omega }_0(s_k\mathbf {e}_1+R^{(k)})\) does not depend on \(\eta _1\) for the second one. Thus, Proposition 3.5 yields

for some universal constant \(c>0\).

In order to conclude the proof of (4.9) we are left with the analysis of the average w.r.t. \(\mu _{R^{(k+1)}}(\cdot | {\mathcal {H}}\cup {\mathcal {K}})\) in the r.h.s. of (4.14). Recalling (4.5) and (4.11), for any \(\eta _3\in {\mathcal {T}}^\omega _{\rightarrow }(V_3)\) we get

An analogous inequality holds for \({\text {Var}}(f | {\mathcal {K}},\eta _1)\) when \(\eta _1\in {\mathcal {T}}^\omega _{\leftarrow }(V_1)\). Finally, we observe that for any \(x\in R^{(k)}\)

since \({\mathbb {1}} _{\mathcal {H}}={\mathbb {1}} _{{\mathcal {A}}_3}{\mathbb {1}} _{{\mathcal {S}}{\mathcal {G}}^{\eta _3\cdot \omega }(R^{(k)})}\leqslant {\mathbb {1}} _{{\mathcal {S}}{\mathcal {G}}^\omega (R^{(k+1)})}\) by (4.11) and \(\mu _{R^{(k+1)}}=\mu _{R^{(k)}}\otimes \mu _{V_3}\). A similar relation holds for \({\mathcal {K}}\). Inserting (4.15) and (4.16) into (4.14), we get

which proves (4.9) in view of (4.5). \(\square \)

The proof of Lemma 4.10 is similar to that of Lemma 4.9, but in this case we use Proposition 3.7 instead of Proposition 3.5. The same proof does not apply because the intersection of two distinct copies of \(\varLambda ^{(n)}\) is never large enough to contain another copy of \(\varLambda ^{(n)}\). Therefore, we are forced to look inside the \(\varLambda ^{(n)}\) core in order to shrink it by one line (see Fig. 4). Namely, we position the core of type \(\varLambda ^{(n-2)}\) in the middle region, which plays the role of \(V_2\) in the previous proof. We then require events stronger than traversability on \(V_1\) and \(V_3\) in order to fit the structure in \(V_2\) (see Fig. 4).

Fig. 4. The partition of \(\varLambda ^{(n,+)}\) into the rectangle \(V_2\) and the two columns \(V_1\) and \(V_3\). Here we illustrate the event \(\overline{{\mathcal {S}}{\mathcal {G}}}(V_2)\): the grey region is a super-good rectangle of the type \(\varLambda ^{(n-2)}\), while the patterned rectangles are \({\mathbf {1}}\)-traversable in the arrow directions. If there is at least one infection in \(I_3\), then the rectangle \(V_2\cup V_3\) is super-good. Similarly, an infection in \(I_1\) suffices to make \(V_1\cup V_2\) super-good.

Proof of Lemma 4.10

Once again, we provide the details only in the case \(n=2m\). Let us start with the case \(m=0\). Firstly, \(\gamma ^\omega (\varLambda ^{(0)})=1\) for all \(\omega \) by the definition (4.5), as \({\text {Var}}_{\varLambda ^{(0)}}(f | {\mathcal {S}}{\mathcal {G}}^\omega )=0\) for all f and \(\omega \). Moreover, \({\mathcal {S}}{\mathcal {G}}^\omega (\varLambda ^{(0,+)})\subset \varOmega _{\varLambda ^{(0,+)}}\) has 1, 2 or 3 elements (depending on \(\omega \)). If this set has a single element, \(\gamma ^\omega (\varLambda ^{(0,+)})=1\) as for \(\varLambda ^{(0)}\) and we are done. Otherwise, we are dealing with an irreducible reversible Markov process on 2 or 3 states with transition rates bounded from below by q, so \(\max _{\omega }\gamma ^{\omega }(\varLambda ^{(0,+)})=q^{-O(1)}\).
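The last step uses only that a small irreducible reversible chain with rates bounded below by q relaxes in a time polynomial in 1/q. As an illustration, the Python sketch below computes the relaxation time (inverse spectral gap) of a hypothetical toy chain on three states; it is not the constrained chain of the proof. Two sites are updated by heat-bath w.r.t. Bernoulli(q) (a site gets infected at rate q and heals at rate \(1-q\)), the state space is restricted to configurations with at least one infection, and all constraints are assumed to allow every move shown. For this toy chain the nonzero eigenvalues of the generator are \(-q\) and \(-(2-q)\), so the relaxation time is exactly 1/q.

```python
import math


def generator(q: float) -> list[list[float]]:
    """Generator of a hypothetical 3-state toy chain (not the chain of the proof).

    States: 0 = (a infected, b healthy), 1 = (a healthy, b infected),
    2 = (both infected). Heat-bath rates w.r.t. Bernoulli(q): a site gets
    infected at rate q and heals at rate 1 - q; all moves are assumed allowed.
    """
    return [
        [-q, 0.0, q],                  # 0 -> 2: b gets infected
        [0.0, -q, q],                  # 1 -> 2: a gets infected
        [1 - q, 1 - q, -2 * (1 - q)],  # 2 -> 0 or 2 -> 1: one site heals
    ]


def relaxation_time_3(Q: list[list[float]]) -> float:
    """Inverse spectral gap of an irreducible reversible 3-state generator Q.

    Since 0 is an eigenvalue, the characteristic polynomial factors as
    lambda * (lambda**2 - tr * lambda + e2). Reversibility makes the other
    two roots real and (by irreducibility) negative; the gap is minus the
    root closest to 0.
    """
    tr = Q[0][0] + Q[1][1] + Q[2][2]
    # Sum of the principal 2x2 minors of Q.
    e2 = (Q[0][0] * Q[1][1] - Q[0][1] * Q[1][0]
          + Q[0][0] * Q[2][2] - Q[0][2] * Q[2][0]
          + Q[1][1] * Q[2][2] - Q[1][2] * Q[2][1])
    disc = max(tr * tr - 4 * e2, 0.0)  # clip tiny negative rounding errors
    # Root closest to 0, computed as e2 / (other root) to avoid cancellation.
    closest_root = 2 * e2 / (tr - math.sqrt(disc))
    return -1.0 / closest_root


for q in (0.1, 0.01, 0.001):
    print(q, relaxation_time_3(generator(q)))  # approximately 1/q
```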

Let \(m\geqslant 1\). We begin by writing \(\varLambda ^{(n,+)}=R(\ell _m+1,\ell _m)=V_1\cup V_2\cup V_3\), where \(V_1\) denotes the leftmost column, \(V_3\) the rightmost column and \(V_2\) all the remaining columns (see Fig. 4). By construction \(V_1\cup V_2\) and \(V_2\cup V_3\) are translates of \(\varLambda ^{(n)}\). Then, for any given \(\omega \in \varOmega _{\partial \varLambda ^{(n,+)}}\), we introduce the events

and observe that \({\mathcal {S}}{\mathcal {G}}^\omega (\varLambda ^{(n,+)})={\mathcal {M}}\cup {\mathcal {N}}\), since the only possible values of the offset s in Definition 4.3 in our case are 0 and 1. In order to use Proposition 3.7 we need some further events. The first one is the event \(\overline{{\mathcal {S}}{\mathcal {G}}}(V_2)\), which is best explained by Fig. 4. It corresponds to requiring that inside the rectangle \(V_2=R(\ell _m-1,\ell _m)+\mathbf {e}_1\) there exists a \({\mathbf {1}}\)-super-good square \(R(\ell _{m-1},\ell _{m-1})+x\) and that the remaining rectangles in \(V_2\setminus (R(\ell _{m-1},\ell _{m-1})+x)\), which sandwich \(R(\ell _{m-1},\ell _{m-1})+x\), are \({\mathbf {1}}\)-traversable. The formal definition is as follows.

Definition 4.11

(Shrunken super-good). Let \(R=R(\ell _m-1,\ell _m)=V_2-\mathbf {e}_1\). We say that \({\overline{{\mathcal {S}}{\mathcal {G}}}}(R)\) occurs if there exist integers \(0\leqslant s_1\leqslant \ell _m-\ell _{m-1}-1\) and \(0\leqslant s_2\leqslant \ell _m-\ell _{m-1}\) such that the intersection of the following events, denoted \({\overline{{\mathcal {S}}{\mathcal {G}}}}_{s_1,s_2}(R)\) in the sequel, occurs (see Fig. 4)

The event \({\overline{{\mathcal {S}}{\mathcal {G}}}}(V_2)\) is defined by translation of \({\overline{{\mathcal {S}}{\mathcal {G}}}}(R)\). Then for any \(\eta _{2}\in {\overline{{\mathcal {S}}{\mathcal {G}}}}(V_2)\), the segments \(I_1\) and \(I_3\) are given by

where \(s_2(\eta _2)\) is an arbitrary but fixed choice of \(s_2\) such that \(\eta _2\in {\overline{{\mathcal {S}}{\mathcal {G}}}}_{s_1,s_2}(R)\) for some \(s_1\).
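The ranges of \(s_1\) and \(s_2\) in Definition 4.11 are exactly the shifts for which the square \(R(\ell _{m-1},\ell _{m-1})\) fits inside \(R=R(\ell _m-1,\ell _m)\), which has width \(\ell _m-1\) and height \(\ell _m\). The Python sketch below enumerates them, assuming that \(s_1\) is the horizontal and \(s_2\) the vertical offset of the core; the function name `core_offsets` is hypothetical.

```python
def core_offsets(l_m: int, l_prev: int) -> list[tuple[int, int]]:
    """Offsets (s_1, s_2) allowed in Definition 4.11, for 1 <= l_prev < l_m.

    Assumption: R = R(l_m - 1, l_m) has width l_m - 1 and height l_m, and the
    core square of side l_prev is shifted by s_1 columns and s_2 rows.
    """
    offsets = [(s1, s2)
               for s1 in range(l_m - l_prev)        # 0 <= s_1 <= l_m - l_prev - 1
               for s2 in range(l_m - l_prev + 1)]   # 0 <= s_2 <= l_m - l_prev
    width, height = l_m - 1, l_m
    # Every admissible shift keeps the core inside R ...
    assert all(s1 + l_prev <= width and s2 + l_prev <= height for s1, s2 in offsets)
    # ... while one more step in either direction would push it out of R.
    assert (l_m - l_prev) + l_prev > width and (l_m - l_prev + 1) + l_prev > height
    return offsets


print(len(core_offsets(10, 4)))  # 6 * 7 = 42
```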

As before, let \(\eta _i:=\eta _{V_i}\). Recalling Definition 4.3 and Figs. 2 and 4, it is not hard to check that

since \(I_1\) extends the horizontal traversability, while the vertical one and the core of type \(\varLambda ^{(n-2)}\) are witnessed by \({\overline{{\mathcal {S}}{\mathcal {G}}}}(V_2)\). For \(\eta \in {\hat{{\mathcal {C}}}}_{1,2}\) we set

By (4.17) and its analogue for \(I_3\) we have

We can finally apply Proposition 3.7 with parameters \(\varOmega _i=\varOmega _{V_i}\) for \(i\in \{1,2,3\}\), \({\mathcal {C}}_{1,2}={\mathcal {S}}{\mathcal {G}}(V_1\cup V_2)\), \({\mathcal {C}}_{2,3}={\mathcal {S}}{\mathcal {G}}(V_2\cup V_3)\), \({\mathcal {A}}_1={\mathcal {T}}^\omega _{\leftarrow }(V_1)\), \({\mathcal {A}}_3={\mathcal {T}}^\omega _{\rightarrow }(V_3)\) and \({\hat{{\mathcal {C}}}}_{1,2}\) and \({\mathcal {A}}_3^{\eta _1\cdot \eta _2}\) as above. Set

Then Proposition 3.7 gives that for some \(c>0\) we have

By (4.18), \(\min _{\eta \in {\hat{{\mathcal {C}}}}_{1,2}}\mu _{\varLambda ^{(n,+)}}({\mathcal {A}}_3^{\eta _1\cdot \eta _2})\geqslant q\). Furthermore, in Lemma A.4 we will establish that \(\mu _{V_1\cup V_2}({\hat{{\mathcal {C}}}}_{1,2} | {\mathcal {C}}_{1,2})\geqslant q^{O(1)}\). Combining these observations with (4.19), we get

We now turn to examine the four averages w.r.t. \(\mu _{\varLambda ^{(n,+)}}(\cdot | {\mathcal {M}}\cup {\mathcal {N}})\) appearing in the r.h.s. of (4.20). Recall that \({\mathcal {M}}\cup {\mathcal {N}}={\mathcal {S}}{\mathcal {G}}^\omega (\varLambda ^{(n,+)} )\). Proceeding as for the r.h.s. of (4.14), we obtain that

Indeed, the only difference is that \({\mathcal {C}}_{1,2}={\mathcal {S}}{\mathcal {G}}(\varLambda ^{(n)})\), so that we recover a \({{\mathbf {1}}} \) boundary condition, and we use that \(c_x^{\varLambda ^{(n)},{{\mathbf {1}}} }\leqslant c_x^{\varLambda ^{(n,+)},{{\mathbf {1}}} }\) and similarly for \(V_2\cup V_3\) instead of \(\varLambda ^{(n)}\). We will now explain how to upper bound the third average in (4.20),

the fourth one being similar. We need to distinguish two cases, according to whether the boundary condition \(\omega \) has an infection on the column \(V_3+\mathbf {e}_1\) or not.

Assume \(\omega _{V_3+\mathbf {e}_1}={\mathbf {1}}\). In this case \({\mathcal {A}}_3={\mathcal {T}}_{\rightarrow }(V_3)=\varOmega _{V_3}\setminus \{{\mathbf {1}}\}\) and Proposition 3.2(1) gives that

with \({\tilde{c}}_x(\eta )=1\) if x has at least one infected neighbour inside \(V_3\) and \({\tilde{c}}_x(\eta )=0\) otherwise. For \(x\in V_3\) let

Recall that \({\mathcal {S}}{\mathcal {G}}^\omega (\varLambda ^{(n,+)})={\mathcal {M}}\cup {\mathcal {N}}\supset {\mathcal {M}}={\mathcal {S}}{\mathcal {G}}(\varLambda ^{(n)})\cap {\mathcal {T}}_{\rightarrow }(V_3)\) and \(\mu _{\varLambda ^{(n,+)}}=\mu _{\varLambda ^{(n)}}\otimes \mu _{V_3}\). Then we have

where the inequality uses \({\mathcal {M}}\subset {\mathcal {S}}{\mathcal {G}}^\omega (\varLambda ^{(n,+)})\), the fact that \({\tilde{c}}_x=1\) implies \({\mathcal {T}}_\rightarrow (V_3)\), and \(\mu ({\tilde{c}}_x | {\mathcal {T}}_\rightarrow (V_3))\geqslant q\) (here we use that \(V_3\) is not a singleton, which follows from \(m\geqslant 1\)). Then, by the law of total variance, we get

Next, we use Proposition 3.3 with parameters \({{\mathbb {P}}} =\mu _{\{x\}\cup \varLambda ^{(n)}}(\cdot | {\mathcal {S}}{\mathcal {G}}(\varLambda ^{(n)}))\), \(X_1=\eta _{\varLambda ^{(n)}}\), \(X_2=\eta _{x}\), \({\mathcal {H}}=\{\eta \in \varOmega _{\varLambda ^{(n)}}:\eta _{x-\mathbf {e}_1}=1\}\), in order to write

Recalling (4.5), we get