Abstract

The angular and supramarginal gyri (AG and SMG) together constitute the inferior parietal lobule (IPL) and have been associated with cognitive functions that support reading. How those functions are distributed across the AG and SMG is a matter of debate, the resolution of which is hampered by inconsistencies across stereotactic atlases provided by the major brain image analysis software packages. Schematic results from automated meta-analyses suggest primarily semantic (word meaning) processing in the left AG, with more spatial overlap among phonological (auditory word form), orthographic (visual word form), and semantic processing in the left SMG. To systematically test for correspondence between patterns of neural activation and phonological, orthographic, and semantic representations, we re-analyze a functional magnetic resonance imaging data set of participants reading aloud 465 words. Using representational similarity analysis, we test the hypothesis that within cytoarchitecture-defined subregions of the IPL, phonological representations are primarily associated with the SMG, while semantic representations are primarily associated with the AG. To the extent that orthographic representations can be de-correlated from phonological representations, they will be associated with cortex peripheral to the IPL, such as the intraparietal sulcus. Results largely confirmed these hypotheses, with some nuanced exceptions, which we discuss in terms of neurally inspired computational cognitive models of reading that learn mappings among distributed representations for orthography, phonology, and semantics. De-correlating constituent representations making up complex cognitive processes, such as reading, by careful selection of stimuli, representational formats, and analysis techniques, are promising approaches for bringing additional clarity to brain structure–function relationships.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The angular gyrus (AG) consists of higher order association cortex and along with the supramarginal gyrus (SMG) constitutes the inferior parietal lobule (IPL). Numerous distinct functions have been attributed to the AG, including language, mathematics, memory, attention, social cognition, and domain-general effects of task difficulty (Geng and Vossel 2013; Humphreys and Tibon 2022; Kuhnke et al. 2022; Lin et al. 2018, 2020; Mattheiss et al. 2018; Seghier 2013; Wang et al. 2017; Zhang et al. 2022). Even within the relatively well-defined task of single-word reading (also referred to as word naming or decoding), multiple functions have been identified in this area. For example, a meta-analysis with strict inclusion and exclusion criteria for studies using words as stimuli showed the left AG to be among several brain regions strongly related to processing word meanings (semantics; Binder et al. 2009). However, reading involves deriving from visual input not only semantics, but also the spoken word form (phonology) as well as processing the visual word form itself (orthography). Like semantics, there is also evidence that phonology and orthography are processed by multiple subregions of the left IPL (Cattinelli et al. 2013; Pugh et al. 2010; Taylor et al. 2013). However, as we will explore in the next section, the functional segregation of these anatomical subregions has been equivocal at times, which may be due to variations in task and stimuli as well as variations in definitions of anatomical boundaries and nomenclature in a field still struggling to find standards in analytic procedures.

It is critical to note that much of the vast literature on semantic, phonological, and orthographic processing in the IPL relies on univariate approaches to cortical activation. This approach separately analyzes each parcel of brain (usually a voxel, or a set of voxels collected into an ROI) and compares overall means between conditions. Here, we use representational similarity analysis (RSA; Kriegeskorte et al. 2008) and partial correlations among the representations to test for neural correspondence with all three of these written word representations in the same data set, with the goal of determining whether the spatial distribution of these representations separates along known boundaries between the AG and SMG within the IPL. RSA is a multivariate approach that analyzes differences in patterns across parcels of brain being analyzed. While univariate and multivariate approaches often converge, they are known to diverge in cases, where the overall means between conditions may be similar, but the values constituting those means are distributed differently between conditions (Cox et al. 2015). While the RSA approach is used here to test for activation patterns that correspond with stimulus similarity spaces defined in terms of semantics, phonology, or orthography, our hypotheses were based on the substantial prior relevant literature detailed in the following sections.

Challenges to identifying structure–function correspondence in reading

There are at least two issues contributing to unresolved questions about the distribution of function in the AG and SMG—one relating to neural structure that is relevant across cognitive domains, the other relating to function and hence specific to reading. On the neural side, the IPL, as noted above, consists of the SMG and AG, roughly corresponding to Brodmann areas 40 and 39, and von Economo areas PF and PG. While there is ample evidence for these areas being anatomically and functionally distinct (Caspers et al. 2006; Nelson et al. 2010), their borders are not always clear on gross surface anatomy. Adding to the confusion is that many users of major brain imaging analysis software packages rely on atlas-registered approximate segmentations for automated labeling, and the exact borders of those labels do not entirely agree across atlases (Devlin and Poldrack 2007). Further complicating matters is that several major stereotactic atlases label a separate area as the IPL that is spatially distinct from the SMG and AG. This can be seen in Fig. 1A from the electronic version of the Talairach and Tournoux atlas (Lancaster et al. 2000), Fig. 1B from the macro labels provided in the Eickhoff-Zilles Anatomy Toolbox (Eickhoff et al. 2005), and Fig. 1C from the Automated Anatomical Labeling atlas (Tzourio-Mazoyer et al. 2002). The curiosity of labeling an area of IPL as separate from its SMG and AG constituents is not found in other major brain atlases, such as the electronic Harvard–Oxford atlas (Fig. 1D; Desikan et al. 2006) or in standard hard copy atlases (Afifi and Bergman 1998; Damasio 2005; Haines 2000).Footnote 1 Here we use the current cytoarchitecture-based definitions (as shown in Fig. 1E), where PF generally corresponds to SMG, and PG corresponds to AG (Caspers et al. 2008). These cytoarchitectonic maps have the advantage of quantifying (rather than ignoring) known inter-individual differences by providing maximum probability maps, as used in Fig. 1E.

Left inferior parietal lobule as defined in four electronic atlases. A Talairach and Tournoux, B Eickhoff–Zilles macro-labels, C automated anatomical labelling atlas, D and the Harvard–Oxford atlas, and E the cytoarchitectonic atlas of Caspers et al. (2008)

A second issue contributing to unresolved questions about the spatial distribution of distinct reading-related functions is that alphabetic writing systems are designed to have correlations between orthography and phonology. Although the English writing system is an outlier in that its correspondences between orthography and phonology are less systematic than other alphabetic systems (Venezky 1970), it is still the case that some 80% of English words have systematic orthography–phonology mappings (Ziegler et al. 2014). This correlation between letter patterns and speech sounds makes it difficult to decisively attribute neural activation to variance in one compared to the other. While this is true generally, it is also relevant to the combination of orthographic and phonological effects that have been reported in the IPL (Bolger et al. 2008). In addition, while the mapping of those representations to semantics is nearly arbitrary (there is nothing in the string dog compared to, say, canis, that would make one inherently refer more than the other to a favorite type of pet), there is ample evidence to suggest that reading some word forms recruits semantics more than others, as a consequence of an integrated and dynamic cognitive system for reading (Binder et al. 2005; Graves et al. 2010; Sabsevitz et al. 2005; Strain et al. 1995; Woollams et al. 2007). Therefore, specific, targeted effort is required to disentangle effects of the orthographic, phonological, and semantic sub-components of reading. Some sense of the consistent neural processing related to these components can be obtained through meta-analysis of existing functional neuroimaging studies, either through careful, systematic selection of studies related to one or another of the components, or less formally through automated meta-analysis (the use of which we illustrate below in Fig. 2). We are aware of no previous study, however, that has specifically examined the spatial distribution of representations related to orthography, phonology, and semantics in the same study, either throughout the whole cortex or by comparing areas within the IPL.

Semantic processing in the IPL

Meta-analyses aggregating data primarily from univariate fMRI studies of word-based (lexical) semantics have found evidence for a role of the angular gyrus in processing semantics (Binder et al. 2009; Cattinelli et al. 2013). Notably, the angular gyrus was highlighted alongside other areas forming the putative default mode networkFootnote 2 (Binder et al. 1999; Humphreys and Lambon Ralph 2015). Subsequent studies using multivariate approaches to focus on the neural areas corresponding to distributed semantic features have also reported correspondences with the angular gyrus (Handjaras et al. 2017), alongside other areas of the default mode network (Fernandino et al. 2016, 2022; Huth et al. 2016; Mattheiss et al. 2018).

As fMRI studies, such as these are largely correlational, it is also instructive to consider findings from other modalities by which more causal inferences may be derived. Historically, the role of the IPL in reading was documented as early as the nineteenth century with Déjerine (1891), who reported that a patient suffering from “alexia with agraphia” had a lesion in the left AG on post-mortem investigation (Catani and Ffytche 2005). These findings were subsequently supported, extended, and refined by the work of Geschwind (1965) to include a more general role for the AG as higher order association cortex capable of supporting multi-modal conceptual or semantic processing (Price 2000). Additional evidence for this comes from studies of transcortical sensory aphasia. This condition is characterized by relatively selective semantic deficits without phonological impairment and is often associated with damage to the cortex and white matter underlying the left posterior middle temporal gyrus (pMTG) and AG (Alexander 2003; Damasio 1992). Overall there appears to be considerable evidence for the AG as a critical node in the network of brain areas associated with semantic processing.

Phonological processing in the IPL

While the evidence just summarized may seem to clearly associate the AG with semantics, the AG has also been associated in some studies with other language and reading processes, such as phonology. For example, phonological skills in reading have been reported to be correlated with differences in local neural metabolism in the left AG (Bruno et al. 2013). Effects of reading pseudohomophones (e.g., one spelled as wun) have also been interpreted as recruiting orthography–phonology mapping, with effects reported in both the SMG and AG (Borowsky et al. 2006). Aggregating across studies, a meta-analysis of fMRI studies examining the cognitive components of reading also found orthography–phonology mapping to be associated with the left “inferior parietal cortex” (Taylor et al. 2013). The authors did not specify which parts of the IPL they were referring to, but visual inspection of their figure (numbered 8) suggests they were referring to part of the area highlighted here in green in Fig. 1. That is, they appear to be referring to an area as IPL that includes neither the SMG nor AG. A different meta-analysis zoomed in on the IPL to examine the spatial distribution of multiple functions that have prominently been associated with it, including semantic and phonological processing (Humphreys and Lambon Ralph 2015). When separating out tasks that included a decision component, such as lexical decision or rhyme judgment, tasks that instead focused on phonological access such as reading or naming found consistent evidence for phonological processing in the SMG rather than AG. Their finding is in agreement with other meta-analyses that found effects of phonology in SMG rather than AG when testing across the whole brain (Tan et al. 2005; Vigneau et al. 2006). Direct comparison of phonological (rhyme judgment) to semantic judgment tasks as functional localizers has shown activation of SMG for phonology and AG for semantics at the level of both the group and individual participants (Yen et al. 2019). Thus, it seems that while considering a broad array of studies and the IPL in general can lead to ambiguities in spatial interpretation, applying more selective criteria for study inclusion and focusing on anatomical distinctions within the IPL leads to results that more clearly implicate the SMG rather than the AG in phonological processing.

The functional neuroimaging studies just described were focused on neurotypical participants. Clinical conditions such as dyslexia and aphasia can also offer insight into the neural regions critically involved in phonology. Dyslexia is a reading impairment thought to be primarily related to difficulties either with mapping orthography to phonology (Rayner et al. 2001), or with phonological processing itself (Snowling 1998). Meta-analyses comparing participants with developmental dyslexia to those without it showed reduced activations during reading for the dyslexia group in left SMG and vOT (Maisog et al. 2008; Richlan et al. 2009; Vandermosten et al. 2016), consistent with a role for the left SMG in processing phonology or orthography–phonology mapping. In terms of more focal neurological differences, conduction aphasia is characterized by difficulty with auditory verbal repetition, phonological working memory, and phonological deficits, such as phonemic paraphasias. While classically associated with damage to the arcuate fasciculus, more contemporary studies have shown conduction aphasia to be associated with damage to the cortex and white matter underlying the left posterior superior temporal gyrus (pSTG) and SMG (Alexander 2003; Buchsbaum et al. 2011; Damasio and Damasio 1980). Impaired judgment of phonology in terms of word rhymes, but not semantics, has also been associated with damage to the left pSTG and SMG but not pMTG or AG (Pillay et al. 2014). Together these studies point to a relatively selective role for the SMG in phonology and the AG in semantics. However, typical reading is interactive, leaving open the question of how these areas support reading in an intact (rather than damaged or perturbed) system, where processing and activation of representations may be dynamically distributed, depending on the properties of the words being read.

Orthographic processing in the IPL

Somewhat less clear is the role of the IPL in representing orthography. Whereas the extant literature on orthography focuses on regions largely outside the IPL in the vOTC thought to house the visual word form area (Dehaene and Cohen 2011; McCandliss et al. 2003), findings dating back to Dejerine have suggested a role of the IPL in orthographic word forms. More recent findings suggest that orthographically sensitive sub-regions within the vOTC form a posterior visual analysis system projecting to the IPS and a more anterior system in mid-vOTC projecting to the AG (Lerma-Usabiaga et al. 2018) or the SMG (Seghier and Price 2013). Moreover, evidence from multiple imaging modalities points to the influence of orthography even in tasks involving auditory words in SMG (Pattamadilok et al. 2010) and AG (Booth et al. 2004). The inconsistent findings related to orthography in the IPL may be due to the extent to which the orthographic stimuli or the tasks involved are related to phonology. To the degree that orthographic and phonological representations can be distinguished, we hypothesize that orthographic processing may be found primarily outside of the IPL proper, including in dorsal areas of the IPS that are known to process spatial information (Cona and Scarpazza 2019; Sack 2009) and attentional information (Duncan 2010; Vossel et al. 2014). With regard to written language, the IPS has been associated with orthographic processing for tasks involving reading degraded written words (Cohen et al. 2008) and spelling (Purcell et al. 2011).

Current study

This study aims to provide a roadmap for clarifying neural distributions of cognitive functions using stimuli and features selected to minimize multicollinearity among representations, along with partial correlations for revealing how those representations account for variance in neural activation across different cortical areas. We first use automated tools for meta-analyses to gain a general sense of how phonological, orthographic, and semantic processing appear to be neurally distributed based on an aggregate of previous studies. Critically, we then use RSA to test for cortical regions, where activation patterns correlate with these cognitive representations. Use of RSA, in contrast to the numerous univariate studies described above, allows us to take multiple representations into account in the same multivariate study. This approach is similar to Fischer-Baum et al. (2017), except here we initially focus on subregions of the IPL to test our specific hypotheses, then broaden our scope to test how patterns in the IPL fit within the context of whole-cortex RSA results. What emerges is a more specific picture than has previously been offered of how phonological, orthographic, and semantic patterns are distributed in the IPL during the complex cognitive process of reading.

Methods

ROI definitions

Areas PF and PG from Caspers et al. (2008) were used as approximations of the SMG and AG. Specifically, the SMG was approximated by combining PF proper with sub-areas PFm, PFt, and PFcm (black in Fig. 1E, with sub-parts shown in supplementary Fig. S1). The AG was approximated by combining PGa and PGp (white in Fig. 1E, with sub-parts shown in Fig. S1). To test for selectivity of left hemisphere patterns, while also holding size of the ROIs constant, we tested mirror images of these ROIs in the right hemisphere as well. These ROIs were based on a map of maximum probabilities, as described by Eickhoff et al. (2005) and distributed with AFNI in the file, “TT_caez_mpm_22 + tlrc”. The defined areas of maximum probability were used as provided, with no threshold required.

Informal meta-analyses

We performed an informal meta-analysis using the Neurosynth tool to gain an overall sense of how results from previous studies of orthographic, phonological, and semantic processing are distributed both in the IPL and throughout the cortex. Neurosynth aggregates results across studies, enabling rapid meta-analyses based on search terms to summarize the relevant scientific literature.

Neurosynth is based on activation coordinates derived from published studies (Yarkoni et al. 2011). The coordinates are extracted using an automated parser that only considers hyper-text markup language (HTML) versions of articles from journal websites. The developers include a specific caveat that because of lack of precision in the parser, and due to variation in how activations are converted from Talairach to MNI space, detailed, spatially specific structure–function inferences should not be drawn from Neurosynth results. For this reason, we consider the Neurosynth results reported here to be an informal meta-analysis, included only to give a rough estimate of what the extant literature may say in general about the spatial distribution of semantic, phonological, and orthographic processing.

We performed an association test for the terms: “semantic” (1031 studies returned), “phonological” (377 studies), and “orthographic” (132). In the case of orthographic and phonological, those were the only matching terms. We chose “semantic” because that term matched an order of magnitude more studies than did alternatives, such as “semantic memory” (123 studies), “semantically” (122), or “semantics” (84). Maps from Neurosynth are automatically thresholded using a False Discovery Rate (Benjamini and Hochberg 1995) of 0.01.

Experimental data set

The current RSA analyses were performed on fMRI data from a study of single-word reading aloud that has been published previously (Graves et al. 2010). Relevant methodological details are summarized here.

Participants

A group of 18 healthy, typical readers with a mean age of 23.2 years (SD: 3.4), all right-handed speakers of English as a first language, gave written informed consent to participate in the study. Participants met inclusion criteria for being able to safely undergo fMRI scanning, including not being claustrophobic, pregnant, or having other contraindicated medical conditions. The participants reported having no history of neurological or psychiatric diagnosis, and no history of learning disability.

Task and stimuli

The task participants were asked to perform was to simply read individual words out loud during fMRI scanning. Stimulus presentation followed a rapid event-related design, which relative to blocked designs has been shown to minimize MRI data quality concerns from overt speech production (Grabowski et al. 2006; Mehta et al. 2006; Soltysik and Hyde 2006).

Stimuli were 465 monosyllabic English words, all of which had a noun lemma that was more frequent than any other part of speech the word might have according to the CELEX psycholinguistic database (Baayen et al. 1995). The words were selected to be de-correlated across six psycholinguistic variables related to word form (length-in-letters, bigram frequency, biphone frequency, spelling-sound consistency) and meaning (word frequency, imageability).

Data collection

Details of data collection have been documented previously (Graves et al. 2010). Briefly, MRI data were collected using a 3 T GE Excite scanner with an 8-channel array head coil. High-resolution anatomical scans were acquired using a spoiled-gradient echo sequence consisting of 134 contiguous axial slices (0.938 × 0.938 × 1.000 mm). Functional time series scans were acquired using a T2*-weighted echo-planar imaging sequence with the following acquisition parameters: TR = 2 s, TE = 25 ms, field of view = 192 mm, acquisition matrix: 64 × 64 pixels for in-plane voxel dimensions of 3.0 × 3.0 mm, and a slice thickness of 2.5 mm with a 0.5 mm gap. Thirty-two interleaved axial slices were acquired, and each of the five functional runs consisted of 240 whole-brain image volumes.

During continuous acquisition, participants read words aloud into a scanner-compatible microphone connected to signal processing equipment outside the scanner that performed spectral filtering of scanner noise from speech. This enabled scoring of the content of speech for correct compared to incorrect responses (e.g., mispronunciations, partial repetitions, or occasional failures to respond) and calculation of response times as the time from stimulus display onset to speech onset for correct responses.

Predicted representation matrices

The relationships among the word stimuli were characterized in terms of relative distances based on semantic, phonological, and orthographic representations. For semantic representations, we chose according to three criteria: (1) distributed representations, (2) capturing associative meanings between words, (3) that correlate reliably with behavioral and neural measures from tasks that depend on word meanings. The Global Vectors for Word Representation (GloVe) model of word embeddings fit all three criteria (Pennington et al. 2014; Pereira et al. 2016, 2018). Specifically, the GloVe representations consisted of 300-unit vectors for each word. Pre-trained word vectors were used based on the text contained in Wikipedia as of 2014. Each unit in the vector could take positive or negative decimal values. While the individual units themselves are not interpretable, the vectors are interpretable. For example, the Euclidean distance between the vectors for frog and toad is closer than the distance between frog and lizard. The vectors are also arithmetically meaningful, such that the difference between the vectors for man and woman is proportional to the difference between king and queen. Essentially the GloVe vectors represent contextual associations (where dog would be closer to leash than mouse), rather than, for example, taxonomic relations (where dog would be closer to mouse than leash) (Mirman et al. 2017).

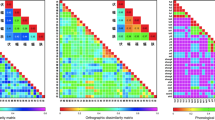

In contrast to correlation distances between semantic representation vectors for each word pair, orthographic and phonological distances were defined in terms of string edit distance. The orthographic measure was Levenshtein edit distance (Yarkoni et al. 2008). This was defined as the minimum number of one-letter additions, substitutions, or deletions needed to turn one string into another, as implemented in the Phonological CorpusTools software (Hall et al. 2019). Phonological distances were defined similar to orthographic distances, except that phoneme transcriptions of the words were used instead of letters, and each phoneme was weighted by its phonetic features (manner and place of articulation). Including phonetic features when calculating phonological edit distance (Allen and Becker 2015) allowed us to distinguish between orthographic and phonological distances in a way that resulted in a significant but somewhat modest correlation between the two measures (Spearman rho = 0.437, p < 0.0001). The phonological and semantic patterns across the stimulus set were also significantly correlated, but of minimal magnitude (rho = −0.025, p < 0.0001), while there was no significant correlation between the semantic and orthographic patterns (rho = −0.005, p > 0.1).

Representational similarity analyses

FMRI data pre-processing

The AFNI software suite was used for pre-processing the fMRI data (Cox 1996; Cox and Hyde 1997). This included skull stripping the anatomical and functional data, slice-timing and motion correcting the functional data, for which the first six images were discarded to avoid initial saturation effects. The high spatial resolution anatomical scan for each participant was then co-registered to the motion corrected time series data (Saad et al. 2009). Voxelwise single-trial effects were estimated in native space using least-squares-sum multiple regression (Mumford et al. 2012), as implemented in the AFNI program 3dLSS. The non-linear transform between the aligned anatomical image and the Colin brain in Talairach atlas space (Lancaster et al. 2000) was calculated and applied to move the data into group space for RSA analyses.

Spatial smoothing using a 6 mm full-width half-maximum kernel was applied after RSA maps were calculated for individual subjects, before combining them for group-level inference (as in Staples and Graves 2020). We accounted for the level of smoothing during mapwise cluster correction for multiple comparisons (Cox et al. 2017). Note that applying smoothing has been shown to not have deleterious effects on multivariate pattern analyses, of which RSA is an instance (de Beeck 2010). Indeed, smoothing may even slightly enhance the ability to detect valid effects (Hendriks et al. 2017).

ROI partial correlation analyses

We use representational similarity analysis (RSA) to characterize the relationship between the brain and the derived representations of semantics, orthography, and phonology. RSA allows the direct comparison of representations from different modalities by transforming them into a stimulus–stimulus pairwise similarity space (Kriegeskorte et al. 2008). For the semantic, phonological, and orthographic representations, representational dissimilarity matrices (RDMs) were generated by finding the pairwise correlation distance (1—Pearson’s r) for all stimulus vectors. The ROI analyses were performed in terms of partial correlations, where the influence of two of the three representations were partialled out from the main representation of interest. The neural representation for an area of cortex was defined as the vector of beta weights within the ROI. Beta values were z-score normalized across stimuli within each voxel. The neural and psycholinguistic-based RDMs were then compared using Spearman’s rho, and the resultant value was assigned to the ROI. Significance of the correlations was evaluated using a two-tailed, one-sample bootstrap test.

Whole-cortex searchlight RSA

To test for the possibility of functional heterogeneity within the ROIs, and to gain a larger picture of how the relevant representations are distributed across the cortex, we performed a whole-cortex RSA searchlight analysis using the PyMVPA package (Hanke et al. 2009) in Python 2.7.17. The neural representation for each area of cortex in this analysis was defined as the vector of beta weights within a 3-voxel radius sphere. An RDM was then constructed by finding the pairwise correlation distance between the beta weight vectors for each stimulus. The neural and psycholinguistic-based RDMs were then compared using Spearman’s rho, and the resultant value was assigned to the center voxel. This sphere was moved over the whole cortex, such that each gray matter voxel served as its center exactly once. The resulting correlation coefficient maps for each subject were then smoothed using a 6 mm full-width half-maximum kernel and entered into a 1-sample t test, before being Fisher z-transformed and thresholded at a voxel-level p < 0.005, with a cluster extent 234.9 mm3 for a mapwise correction to p < 0.05.

In addition to the bivariate RSA just described, we also performed partial correlation RSA as was done with the ROI analyses, but here implemented with a searchlight using the CoSMoVMPA package (Oosterhof et al. 2016) for Matlab (R2021b version 9.11.0.1837725). Partial correlation RSA determines the correspondence between the neural RDM and one of the psycholinguistic-based RDMs, having statistically controlled for the effect of the other psycholinguistic-based RDMs. We did this separately for the semantic, phonological, and orthographic representations, partialling out the other two psycholinguistic representation RDMs that were not the focus of the analysis in each case. The process was identical to the RSA method outlined above, other than the inclusion of the nuisance RDMs.

Previous univariate analyses

While the current study is focused on multivariate pattern analysis (specifically, RSA) of the fMRI data, for comparison we provide maps of the previous univariate results with the current ROIs overlaid onto the same cortical surfaces as the RSA results (supplementary Figs. 2–5). The basic contrast of all word reading trials (with the small number of production error trials modeled separately) compared to baseline (fixation) is provided to illustrate the overall set of brain areas, where the mean signal intensity is activated for reading aloud. Additional univariate results are from the following parametric analyses: word frequency, defined as log-transformed occurrences per million from the CELEX lexical database (Baayen et al. 1995), word imageability, defined as mean subjective human ratings of the degree to which each word calls to mind a sensory impression, and bigram frequency, defined as the mean log-frequency of each word with the same number of letters as the target word that shares the same two-letter (bigram) combination in the same relative position. For additional details see Graves et al. (2010).

Results

Informal meta-analyses

To gain an overall sense of how previous functional neuroimaging results related to semantic, phonological, and orthographic processing are distributed throughout the cortex, and particularly with respect to SMG and AG divisions of the IPL, we performed informal meta-analyses using Neurosynth (Yarkoni et al. 2011). Figure 2 shows results from studies of semantic, phonological, and orthographic processing, where results from these cognitive domains partially overlap, occurring largely within association cortex rather than primary somato-sensory cortex. Within the IPL, the left SMG showed primarily phonology-associated activations, while the left AG showed primarily semantics-associated activations. In the right hemisphere versions of the ROIs, results only appeared for the AG in terms of semantics. That phonological activation appeared in the left but not right SMG is consistent with a great deal of literature on left-hemisphere lateralization for phonology, including from multiple systematic meta-analyses (Jobard et al. 2003; Tan et al. 2005; Vigneau et al. 2006).

As Neurosynth returns whole-brain results, we thought it would be instructive to view results in the IPL alongside those whole-brain results. In contrast to the relative separation of phonology and semantics-related activations in the IPL, semantic, phonological, and orthographic activations spatially overlapped in left-sided areas including the inferior frontal junction (IFJ, an area centered on the intersection of the precentral and inferior frontal sulci), IPS, supplementary motor area (SMA), posterior superior temporal gyrus (pSTG), and vOTC. With the exception of pSTG, these areas have been consistently associated with what has been termed the multiple demand network, because it responds to tasks across multiple modalities (Duncan 2010; Fedorenko et al. 2013). As in the IPL, whole-brain results were generally left-lateralized, as expected for the language-related search terms used here.

ROI partial correlation analyses

Our primary hypothesis-driven results were in terms of planned ROI analyses (Fig. 3). Specifically, we considered the left hemisphere ROIs for the AG (white in Fig. 1E) and the SMG (black in Fig. 1E). In the left and right AG, only semantics was significantly associated with cortical activation patterns. However, semantics only differed significantly from the other conditions in the right AG. The patterns of cortical activation in left SMG were significantly associated with both semantic and phonological representations, while none of the tested representations were significantly associated with the right SMG.

RSA partial correlation results for a priori ROIs in the left and right angular gyrus (AG) and supramarginal gyrus (SMG). Stars without bars indicate conditions that are significant relative to zero, whereas stars over bars are differences between conditions. *p < 0.05, **p < 0.01, ***p < 0.005, ****p < 0.0001

Searchlight RSA analyses

Focusing first on searchlight results within the IPL, Fig. 4 shows a more detailed view of how the different bivariate (Fig. 4A) and partialled (Fig. 4B) RSA results are distributed with respect to the cytoarchitecture-defined boundaries of the SMG and AG. Without attempting to partial out the effects of each factor on the others within the IPL, semantic representations are localized to the AG and are not found in the SMG (Fig. 4A). Phonological representations are largely found in the SMG. Phonological effects also extend into the anterior sector of the AG (generally corresponding to PGa, see sub-area overlays in Fig. S1), including a small area of overlap with orthographic representations. Other than that area of overlap, orthographic representations lie almost entirely outside the IPL, including within adjacent areas of the pMTG, IPS, and superior parietal lobule (SPL). After partialling out the effects of each set of representations from the others, the representations that remained in the IPL were almost exclusively semantic (Fig. 4B), spanning PGa and PGp sub-areas of the AG (Fig. S1) and posterior–superior SMG (sub-area PFm). The one exception was a couple of small patches of cortex in the anterior SMG (primarily PFt) associated with phonological representations.

Expanding out from the IPL, we also consider the overall patterns of searchlight results at the whole-cortex level. Results from the separate bivariate RSA analyses of semantic, phonological, and orthographic representations with cortical patterns are shown in Fig. 5A. Beyond the left IPL, semantics-related representations were found to a lesser spatial extent in the left pMTG and the PCC region. When stimuli were characterized in terms of phonological similarity, corresponding neural representations were found in the IFJ, inferior frontal gyrus (IFG), anterior insula, anterior temporal lobe (ATL), superior and middle temporal gyri, IPS, and lateral and medial occipital lobe. These results were bilateral, but more spatially extensive on the left. Orthographic representations were found in left IFG extending into anterior middle frontal gyrus (aMFG), precentral gyrus, IPS, pSTG, along with lateral and medial occipital cortices. Phonological and orthographic representations overlapped in the left IFJ and ATL, along with lateral and ventral visual cortex.

Same searchlight results as Fig. 4, but zoomed out to show whole-cortex for A RSA results from separate bivariate comparisons of semantic, phonological, and orthographic representations with cortical representations. B RSA searchlight results from partial correlations with cortical representations, where the other two representations are partialled out from the representation of interest (e.g., semantic representations with orthographic and phonological representations partialled out)

Partialling out orthographic and phonological representations in the RSA searchlight analyses revealed more spatially extensive cortical correspondences with semantics beyond the IPL in left posterior MFG, STG, and lateral occipital cortex (Fig. 5B). Bilateral cortical representations corresponding to semantics were found in the IPS, along with right-sided results in medial occipital cortex and PCC. Results for phonological representations were largely in left insula, STG, and MTG, along with bilateral results in IFG pars opercularis, somato-motor and somato-sensory cortices, middle fusiform gyri, and ATL (partly overlapping with orthography on the left). Orthographic representations corresponded with cortical patterns in left IFG extending into aMFG, precentral gyrus, posterior inferior temporal gyrus, and IPS. Bilateral orthographic representations were found in lateral and ventral occipital cortices. As expected, partial correlation analyses revealed much less spatial overlap among representations compared to analyses, where the effects were not partialled out from each other.

For ease of comparison with the current RSA results, previously published univariate results from this data set (Graves et al. 2010) have been re-mapped to the current cortical surface with the SMG and AG contour overlays included (Supplementary Figs. 2–5). Note that the contrast of word reading relative to baseline (fixation) reveals activation for words in numerous areas often reported for this contrast (Fiez and Petersen 1998; Turkeltaub et al. 2002), including in this case the medial anterior temporal lobes (Fig. S2). Parametric results for relevant psycholinguistic factors are provided for word frequency to test for general lexical/word-level effects (Monsell et al. 1989; Seidenberg and McClelland 1989), imageability as a test for sensory aspects of semantics (Paivio 1991; Plaut and Shallice 1993), and bigram frequency as a test for effects of sub-lexical orthographic familiarity (Hauk et al. 2008). For the previous parametric results relevant to the current ROIs, greater activation for higher frequency words was found in bilateral AG, spreading to a small extent into posterior SMG. The opposite pattern, greater activation for lower frequency words was found in a small area of anterior SMG (Fig. S3). Similar to activation for higher frequency words, higher imageability words also showed activation in bilateral AG, with a slight spreading into right SMG (Fig. S4). Reading words of lower bigram frequency resulted in activation left SMG (Fig. S5).

Discussion

In this study we sought to determine the spatial distribution of semantic, phonological, and orthographic representations for reading within the IPL. In doing so our aim was to resolve longstanding questions about whether semantics is represented within the AG as distinct from phonology in the SMG, and whether orthography is processed within or adjacent to the IPL. Results from exploratory meta-analyses suggested at least a partial segregation within the IPL for semantics in AG and phonology in SMG. We were able to test these patterns directly using stimuli and representations that minimized multi-colinearity between orthography, phonology, and semantics, along with partial correlation approaches to RSA that allowed for statistical control over additional variables to focus on the variable of interest. Neuroanatomically (Fig. 1), we used cytoarchitectonic maps of PF (corresponding to SMG) and PG (corresponding to AG) for spatial localization, thereby avoiding the confusion of potential overlap with areas labeled IPL in some software-based atlases that lie outside the boundaries of the SMG and AG, even extending dorsally to the IPS and into the SPL.

The hypothesis-driven nature of this study is borne out in the ROI analyses (Fig. 3). As expected based on the automated meta-analysis (Fig. 2), as well as a previous systematic meta-analysis of largely univariate studies of semantic processing (Binder et al. 2009), neural correspondence with semantic representations was found in the AG ROI bilaterally. In the meta-analysis by Binder et al. (2009), semantic activation peaks were found to cluster in both left and right AG, although the effects were of greater magnitude and more spatially extensive on the left. No obvious left–right differences are found for semantics in our ROI analyses. However, we also examined the more fine-grained spatial distribution of results throughout the cortex with searchlight analyses. Figure 5B shows the partial correlation searchlight results, corresponding to the ROI partial correlations. Qualitatively, the RSA results for semantics appear more spatially extensive in the left than right AG. As our approach averaged neural activity across voxels within the ROI, it is likely that such averaging obscured the wider distribution of semantics that was revealed by the searchlight approach.

For phonology, as expected the distance-based patterns corresponded with neural patterns in the left but not right SMG. This result from the ROI analysis (Fig. 3) also corresponds with the partial correlation searchlight analysis (Fig. 5B). Minimal phonology-related searchlight results are seen in the right SMG, while zooming in on the left IPL (Fig. 4B) clearly shows phonology results within the left SMG. We note, however, that phonology results also extend more into the AG when the analysis lacks the statistical control of partial correlations. Therefore, in addition to the structure labeling ambiguities shown in Fig. 1, a lack of control over other relevant sources of variance might also be contributing to the lack of clarity for where exactly within the IPL phonological effects are most likely to be found.

One especially likely source of covariance with phonology comes from orthography. As languages with alphabetic writing systems such as English have a strong correspondence between orthography and phonology (Venezky 1970), examining the neural basis of these two types of representations separately presents a challenge. Although we used string edit distance for deriving both orthographic and phonological distances among our word stimuli, we critically included phonetic features corresponding to place and manner of articulation for each transcribed phoneme when calculating the phonological edit distance (Allen and Becker 2015; Hall et al. 2019). This enabled separate specification of orthographic and phonological relationships among our stimuli with only a moderate correlation between them.

Bivariate correlation RSA (Figs. 4A and 5A) revealed some overlap between orthography and phonology in the left AG. The spatially restricted nature of that overlap is presumably due at least in part to the only modest correlation between orthography and phonology in the stimulus representations. However, when the variance due to orthography, phonology, and semantics are statistically controlled relative to each other using partial correlations, no overlap between orthography and phonology is found within the IPL. Instead, neural correspondence with orthography is found within the IPS and SPL, largely in agreement with previous meta-analysis of orthographic processing (Purcell et al. 2011).

The whole-cortex RSA searchlight results largely agreed with the meta-analysis results in the IPL, but differed markedly in other areas, notably in the ATL. The ATL has been associated with semantic processing in multiple systematic meta-analyses (Binder et al. 2009; Visser et al. 2010a, b; Wang et al. 2010). One possibility for the lack of association between the ATL and semantics in the current study has to do with the potential for reduced signal surrounding air–bone interfaces that occur near the ATLs (Visser et al. 2010a, b). The current RSA results do, however, show associations between neural activity in the ATL and phonology when using partial correlations, and overlap between phonology and orthography when using simple bivariate correlations. It seems unlikely that semantic associations would be attenuated in the ATL when phonological and orthographic associations were not. In addition, the initial report on this data set included a univariate contrast of word reading compared to baseline (re-mapped here in Fig. S2). That contrast showed bilateral activation for words in the medial ATL, further pointing to the presence of detectable signal in that area.

A more likely explanation for lack of semantics-related findings in the ATL may relate to the particulars of the single-word reading aloud task and the hub-and-spoke model of neural semantics (Lambon Ralph et al. 2017; Patterson et al. 2007). Models of reading that use artificial neural networks (ANN) to simulate single word reading suggest that reading involves recruiting semantics to differing degrees depending on the nature of the words (Plaut et al. 1996). However, the minimum necessary to accomplish reading aloud is through mappings between orthography and phonology (Harm and Seidenberg 1999; Seidenberg and McClelland 1989). One possibility then is that the current task elicited correlations between neural activity and semantic representations or features for the words, but those features did not require the kind of integration that the hub-and-spoke model proposes to occur in the ATL. Indeed, Hoffman et al. (2015) showed that the lateral ATL only activates for semantics in reading aloud for spelling-sound inconsistent words that the ANN model predicts to particularly rely on semantics. While the current study included some words with low spelling-sound consistency, we manipulated consistency in a continuous rather than discrete fashion, raising the possibility that there were not sufficient numbers of inconsistent words to elicit semantic activation in the ATL.

Another pattern that occurred outside the IPL is that activity related to phonology was more widespread than activity related to semantics. This finding may be due to much of the variance explained in the bivariate analysis (Fig. 5A) reflecting correlations most related to the orthography–phonology transform involved in reading. In previous work (Staples and Graves 2020) we re-implemented an ANN model of reading that mapped orthographic inputs to a “hidden” intermediate layer of learned representations, which then mapped to phonological outputs, as in Plaut et al. (1996). To answer the question of how similar the current phonological representations are to the modeled orthography–phonology mappings, we constructed a representational dissimilarity matrix of the type used in the current study to define stimulus relationships in terms of phonology, but instead based it on representations from the hidden layer of the implemented ANN model. The two matrices were correlated at r = 0.43. This suggests that the current phonological representations are indeed related to the orthography–phonology transformation necessary for reading aloud, which may explain why bivariate correlations with phonology are found throughout the neural areas typically associated with reading. By contrast, results of the semantic RSA analysis were not as widespread. This may be related to the reasons outlined above for why neural associations with semantics were not found in the ATL. That is, semantic associations may come online when automatically processing word meanings, but semantics is only critical for reading aloud a smaller subset of spelling-sound inconsistent words, such as correctly pronouncing sew to rhyme with low instead of new (Woollams et al. 2007).

The more widespread results for phonology may also relate to the nature of the phonological representations themselves. Here, we have defined phonological relationships between words as consisting of both the identities of the sequence of phonemes used to say the word aloud, and the features associated with the production of each phoneme. Phonetic features include place (labial, dental, velar, etc.) and manner (stop, nasal, fricative, etc.) of articulation. One possibility is that the identity of phoneme sequences for words is related to function in the left SMG, while articulatory information in the features is related to function in more anterior areas, such as the IFG and insula. This distinction is consistent with previous studies showing difficulties with phoneme sequencing in cases of Wernicke and conduction aphasia associated with damage to the SMG, but relatively preserved articulation (Binder 2015; Buchsbaum et al. 2011; Damasio and Damasio 1980). Conversely, difficulties with articulation but relatively preserved retrieval of phoneme sequences occur in cases of apraxia of speech, which has been associated with damage to the IFG and anterior insula (Dronkers 1996; Hillis et al. 2004). That both these aspects of phonology are contained in the phonological representations used here may account for the presence of phonological results in both SMG and more anterior areas, such as IFG and anterior insula.

Comparing the RSA results to the informal meta-analysis, we note that the RSA results generally appear more focal (with the exception of phonology, likely for reasons noted above). That could be due either to the RSA results being from a single study or because the representation-based approach is more selective in examining particular sets of representations rather than activation contrasts, where specificity depends on assumptions about how the conditions that make up the contrast should be matched. Another likely possibility is the heterogeneity of task conditions that are included in meta-analyses across numerous studies. Together these multiple potential sources of differences between the RSA and meta-analyses make it difficult to interpret divergent results. Convergent results that occur despite the multiple sources of differences, however, are particularly compelling. This is especially true for results within the IPL focus of the current study, where semantic processing is shown within the left AG (along with phonology and orthography in the bivariate analysis), while phonological processing is shown primarily within the left SMG (though only for the bivariate analysis, where it was also found in left AG), lending partial support to the current hypotheses.

Departures from a simple dichotomy between semantics and phonology in the AG and SMG

The fact that semantics is significantly associated with activity patterns in both the left AG and SMG only partially fits with our hypothesis. This pattern is found in both the ROI and searchlight partial correlation analyses. The alternate finding that semantics is significant only in the AG for the bivariate correlation analysis is in closer agreement with the informal meta-analysis, where semantic results in the IPL were largely restricted to the left AG. While further work will be needed to definitively resolve the source of this divergence, we note that the semantic result in the SMG was primarily in sub-area PFm (sub-parcellation outlines in Fig. S1). The distinction between PFm and PG sub-areas noted in Caspers et al. (2008) also obtains in the Glasser et al. (2016) atlas, based on a combination of structural, functional, and connectional data. In terms of what relevant features these areas may have in common, beyond being associated with semantics in our study, both PG and PFm have been reliably associated with the putative default mode network (Briggs et al. 2018). Notably, the same study also associated PFm with the ventral attention network, as well as having reliable connections with the superior longitudinal fasciculus (SLF; Briggs et al. 2018). The SLF is considered to be synonymous with the dorsal part of the arcuate fasciculus (Porto de Oliveira et al. 2021), and PG is known to be connected to the arcuate through its posterior branch (Thiebaut de Schotten et al. 2014). Phonology was also associated with activity in PFt, anterior to PFm in the SMG. One speculative possibility is that during language tasks such as reading the more posterior PG activates bilaterally during retrieval of semantic information, while the more anterior PFm shows left-lateralized co-activation of semantics with phonology in PFt to populate a full lexical representation containing semantic and phonological information for production.

More generally, we note that while starting with the Caspers et al. (2008) parcellation is useful for grounding the ROIs in cytoarchitectual neuroanatomy, follow-up comparisons with multi-modal atlases are also useful. Comparing the spatial location of our results with parcellations from atlases such as Glasser et al. (2016) and Briggs et al. (2018) that also incorporate functional and tractographic information appears to be a helpful way to inform interpretations that may ultimately lead to data-guided hypotheses for future studies.

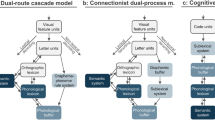

Theoretical implications

In this study we attempted to bring a greater degree of accuracy and precision to the mapping of reading-related function onto structure. We chose RSA because of its focus on representation spaces. This raises the larger questions of (1) what is a representation, and (2) what does it mean for a representation to be “stored”? Large-scale systems neuroscience frameworks extending Geschwind’s conception of dysconnection syndromes (Geschwind 1965) into the current era of cognitive neuroscience (Damasio and Damasio 1994; Damasio 1989; Mesulam 1998) may be helpful in this context, particularly when considered in light of the recent successes in finding useful convergence between ANNs and neural responses (Devereux et al. 2018; Kriegeskorte 2015; Staples and Graves 2020). Neural systems frameworks suggest a hierarchy of representations, where features of a concept are stored in distributed fashion across multiple primary and secondary sensory-motor cortices. During concept retrieval, the relevant features are bound together by temporally coincident re-activation. These re-activated patterns are similar to the experiential pattern that occurred during initial perceptual event, as coordinated by convergence zones in higher order association cortex (Damasio 1989). Elementary features such as lines, curves, and edges, in the case of a visual concept, are thought to be stored in early visual cortex. Combining these features into combinations to represent a table leg or surface is thought to occur in secondary visual cortex. Ultimately recall of the whole concept of a table, perhaps along with experiential associations with the concept such as impressions from previous uses of tables, are directed by higher order association cortices, such as those in the AG, MTG, and ATL, with the exact balance among them presumably depending on the task being performed.

A hierarchy not identical to the neural systems framework just described but analogous to it is implemented in ANNs, particularly those with a “deep” architecture containing multiple hidden layers. Ultimately, both types of systems involve initial extraction of elementary features, which are combined into successively higher order features, as guided by the relevant output or task at hand. What we have done with RSA is select a particular level of features thought to be relevant to a particular type of representation, such as semantics, defined a set of relationships among the stimuli in terms of those representations, and tested for areas showing patterns of activity that correlate with those hypothesized relationships. This approach should reveal, where in the neural hierarchy, those relationships (defined in terms of semantics, phonology, or orthography) are represented. Therefore, the areas shown in the current results to be associated with semantics, phonology, or orthography are not interpreted as containing complete, unitary storage of those representations. Rather, we interpret them as units (groups of neurons) that are active when sending and receiving information that combines at a particular level, which corresponds to the level of representations being probed in our RSA analyses.

Representations are often contrasted with more general effects of attention, working memory, difficulty, or time-on-task (Fedorenko et al. 2013; Graves et al. 2017; Taylor et al. 2014). While ANNs can be built that do or do not contain attention mechanisms, it is an open question as to whether or how attention influences the kinds of representations tested for here in RSA. Indeed, there is evidence to suggest that attention sharpens neural representations (Ester et al. 2016; Rothlein et al. 2018), and such an interpretation is not incompatible with our results. This may be particularly relevant for orthography. As expected, neural patterns associated with orthography were found along the ventral visual stream. Such effects were also found in the IPL-adjacent IPS. This pattern of both ventral temporal and IPS findings is similar to those from a meta-analysis of written word production (Purcell et al. 2011), a task that draws particular attention to the orthographic aspect of words. In light of our conception of distributed representations as described above, we suggest that the association of orthographic patterns with activity in the IPS is part of the overall neural representation of orthography that also includes patterns in the ventral visual stream, where IPS activity may reflect an attention or working memory component of the overall representation.

More generally, the goal of the field of cognitive neuroscience is to determine how the brain implements cognitive processes. A major component of that research involves testing the degree to which those processes or representations are localized to particular brain areas, presumably as nodes in larger networks. For progress to be made, we must at least agree on the neural locations to which we are referring. Unfortunately, and somewhat to our surprise, there appears to be no single gold standard for location and nomenclature, even within the relatively circumscribed area of the IPL. Landmark-based atlases would seem to be promising candidates for a gold standard (Devlin and Poldrack 2007), but even they show some disagreement over whether the entire lateral parietal lobe ventral to the IPS consists of the IPL, divided into the SMG and AG. In light of this inconsistency across sources, we suggest using data-based cytoarchitectonic maps of the relevant human brain areas wherever they are available. Like the other atlases shown in Fig. 1A–D, the Caspers et al. (2008) cytoarchitecture-based segmentation is also available in convenient electronic format through major brain image analysis software packages.

Overall, our results lend new clarity to the spatial organization of reading-related cognitive representations within the left IPL, with semantics represented in the AG, phonology in the SMG, and orthography represented in more dorsal parietal regions. This additional precision was achieved through careful selection of word stimuli and representational formats to aid in using partial correlation RSA for localizing orthographic, phonological, and semantic representations. On the neural side, cytoarchitecture-based segmentations were used to distinguish PF/SMG from PG/AG in a way that largely agreed with landmark-based atlases. We propose this approach as a roadmap for achieving additional cognitive and neural precision in future cognitive neuroscience investigations.

Data availability

The data sets analyzed for the current study are not publicly available due them containing information that could compromise research participant privacy/consent but are available from the corresponding author on reasonable request.

Notes

We note that the Duvernoy (1999) atlas labels an area in the parietal lobe that is anterior to the SMG and posterior to the postcentral gyrus as the parietal operculum, or “inferior parietal gyrus”. However, it is clear that this area does not correspond to that shown in green in Fig. 1A–C because the Duvernoy labeled area does not extend into the superior parietal lobule.

We initially refer to the default mode network as putative because the interpretation of this network is a matter of ongoing debate (Smallwood et al. 2021). Still unresolved is whether it supports, for example, semantic processing (Binder et al. 2009; Binder et al. 1999), monitoring of internal states (Whitfield-Gabrieli et al. 2011), or a more general state of rest for balancing metabolic demands (Raichle 2015).

References

Afifi AK, Bergman RA (1998) Functional neuroanatomy. McGraw-Hill, New York

Alexander MP (2003) Aphasia: clinical and anatomic issues. In: Feinberg TE, Farah MJ (eds) Behavioral neurology and neuropsychology, 2nd edn. McGraw-Hill, New York, pp 147–164

Allen B, Becker M (2015) Learning alternations from surface forms with sublexical phonology. Unpublished manuscript, University of British Columbia and Stony Brook University. Available as lingbuzz/002503

Baayen RH, Piepenbrock R, Gulikers L (1995) The CELEX lexical database, 2.5 edn. Linguistic Data Consortium, University of Pennsylvania

Benjamini Y, Hochberg Y (1995) Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc B 57(1):289–3000

Binder JR (2015) The Wernicke area: modern evidence and a reinterpretation. Neurology 85(24):2170–2175

Binder JR, Frost JA, Hammeke TA, Bellgowan PSF, Rao SM, Cox RW (1999) Conceptual processing during the conscious resting state: a functional MRI study. J Cogn Neurosci 11(1):80–93

Binder JR, Medler DA, Desai R, Conant LL, Liebenthal E (2005) Some neurophysiological constraints on models of word naming. Neuroimage 27:677–693

Binder JR, Desai RH, Graves WW, Conant LL (2009) Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex 19:2767–2796. https://doi.org/10.1093/cercor/bhp055

Bolger DJ, Hornickel J, Cone NE, Burman DD, Booth JR (2008) Neural correlates of orthographic and phonological consistency effects in children. Hum Brain Mapp 29:1416–1429

Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TB, Mesulam MM (2004) Development of brain mechanisms for processing orthographic and phonologic representations. J Cogn Neurosci 16(7):1234–1249

Borowsky R, Cummine J, Owen WJ, Friesen CK, Shih F, Sarty GE (2006) FMRI of ventral and dorsal processing streams in basic reading processes: insular sensitivity to phonology. Brain Topogr 18(4):233–239

Briggs RG, Conner AK, Baker CM, Burks JD, Glenn CA, Sali G et al (2018) A connectomic atlas of the human cerebrum—chapter 18: the connectional anatomy of human brain networks. Oper Neurosurg 15(suppl_1):S470–S480

Bruno JL, Lu Z-L, Manis FR (2013) Phonological processing is uniquely associated with neuro-metabolic concentration. Neuroimage 67:175–181

Buchsbaum BR, Baldo J, Okada K, Berman KF, Dronkers N, D’Esposito M, Hickok G (2011) Conduction aphasia, sensory-motor integration, and phonological short-term memory—an aggregate analysis of lesion and fMRI data. Brain Lang 119:119–128

Caspers S, Geyer S, Schleicher A, Mohlberg H, Amunts K, Zilles K (2006) The human inferior parietal cortex: cytoarchitectonic parcellation and interindividual variability. Neuroimage 33(2):430–448

Caspers S, Eickhoff SB, Geyer S, Scheperjans F, Mohlberg H, Zilles K, Amunts K (2008) The human inferior parietal lobule in stereotaxic space. Brain Struct Funct 212(6):481–495

Catani M, Ffytche DH (2005) The rises and falls of disconnection syndromes. Brain 128(10):2224–2239

Cattinelli I, Borghese NA, Gallucci M, Paulesu E (2013) Reading the reading brain: a new meta-analysis of functional imaging data on reading. J Neurolinguist 26(1):214–238

Cohen L, Dehaene S, Vinckier F, Jobert A, Montavont A (2008) Reading normal and degraded words: contribution of the dorsal and ventral visual pathways. Neuroimage 40(1):353–366

Cona G, Scarpazza C (2019) Where is the “where” in the brain? A meta-analysis of neuroimaging studies on spatial cognition. Hum Brain Mapp 40(6):1867–1886

Cox RW (1996) AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173

Cox RW, Hyde JS (1997) Software tools for analysis and visualization of fMRI data. NMR Biomed 10(4–5):171–178

Cox CR, Seidenberg MS, Rogers TT (2015) Connecting functional brain imaging and parallel distributed processing. Lang Cogn Neurosci 30(4):380–394

Cox RW, Chen G, Glen DR, Reynolds RC, Taylor PA (2017) FMRI clustering in AFNI: false-positive rates redux. Brain Connect 7(3):152–171. https://doi.org/10.1089/brain.2016.0475

Damasio AR (1989) Time-locked multiregional retroactivation: a systems-level proposal for the neural substrates of recall and recognition. Cognition 33(1–2):25–62

Damasio AR (1992) Aphasia. N Engl J Med 326:531–539

Damasio H (2005) Human brain anatomy in computerized images, 2nd edn. Oxford University Press, New York

Damasio H, Damasio AR (1980) The anatomical basis of conduction aphasia. Brain 103:337–350

Damasio A, Damasio H (1994) Cortical systems for retrieval of concrete knowledge: the convergence zone framework. In: Koch C, Davis J (eds) Large-scale neuronal theories of the brain. The MIT Press, Cambridge, MA, pp 61–74

de Beeck HPO (2010) Against hyperacuity in brain reading: spatial smoothing does not hurt multivariate fMRI analyses? Neuroimage 49(3):1943–1948

Dehaene S, Cohen L (2011) The unique role of the visual word form area in reading. Trends Cogn Sci 15(6):254–262

Déjerine J (1891) Sur un cas de cécité verbale avec agraphie suivi d’autopsie. Mém Soc Biol 3:197–201

Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D et al (2006) An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 31(3):968–980

Devereux BJ, Clarke A, Tyler LK (2018) Integrated deep visual and semantic attractor neural networks predict fMRI pattern-information along the ventral object processing pathway. Sci Rep 8(1):1–12

Devlin JT, Poldrack RA (2007) In praise of tedious anatomy. Neuroimage 37:1033–1041

Dronkers NF (1996) A new brain region for coordinating speech articulation. Nature 384(6605):159–161

Duncan J (2010) The multiple-demand (MD) system of the primate brain: mental programs for intelligent behaviour. Trends Cogn Sci 14(4):172–179

Duvernoy HM (1999) The human brain: surface, three-dimensional sectional anatomy with MRI, and blood supply. Springer Science & Business Media, Berlin

Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K (2005) A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage 25(4):1325–1335

Ester EF, Sutterer DW, Serences JT, Awh E (2016) Feature-selective attentional modulations in human frontoparietal cortex. J Neurosci 36(31):8188–8199

Fedorenko E, Duncan J, Kanwisher N (2013) Broad domain generality in focal regions of frontal and parietal cortex. Proc Natl Acad Sci USA 110(41):16616–16621

Fernandino L, Humphries CJ, Conant LL, Seidenberg MS, Binder JR (2016) Heteromodal cortical areas encode sensory-motor features of word meaning. J Neurosci 36(38):9763–9769

Fernandino L, Tong J-Q, Conant LL, Humphries CJ, Binder JR (2022) Decoding the information structure underlying the neural representation of concepts. Proc Natl Acad Sci 119(6):e2108091119

Fiez JA, Petersen SE (1998) Neuroimaging studies of word reading. Proc Natl Acad Sci USA 95:914–921

Fischer-Baum S, Bruggemann D, Gallego IF, Li DSP, Tamez ER (2017) Decoding levels of representation in reading: a representational similarity approach. Cortex 90:88–102

Geng JJ, Vossel S (2013) Re-evaluating the role of TPJ in attentional control: contextual updating? Neurosci Biobehav Rev 37(10):2608–2620

Geschwind N (1965) Disconnexion syndromes in animals and man. Brain 88:237–294

Glasser MF, Coalson TS, Robinson EC, Hacker CD, Harwell J, Yacoub E et al (2016) A multi-modal parcellation of human cerebral cortex. Nature 536(7615):171–178

Grabowski TJ, Bauer MD, Foreman D, Mehta S, Eaton BL, Graves WW et al (2006) Adaptive pacing of visual stimulation for fMRI studies involving overt speech. Neuroimage 29:1023–1030

Graves WW, Desai R, Humphries C, Seidenberg MS, Binder JR (2010) Neural systems for reading aloud: a multiparametric approach. Cereb Cortex 20:1799–1815. https://doi.org/10.1093/cercor/bhp245

Graves WW, Boukrina O, Mattheiss SR, Alexander EJ, Baillet S (2017) Reversing the standard neural signature of the word–nonword distinction. J Cogn Neurosci 29(1):79–94

Haines DH (2000) Neuroanatomy: an atlas of structures, sections, and systems, 5th edn. Lippincott Williams & Wilkins, Philadelphia

Hall KC, Mackie JS, Lo RY-H (2019) Phonological CorpusTools: software for doing phonological analysis on transcribed corpora. Int J Corpus Linguist 24(4):522–535

Handjaras G, Leo A, Cecchetti L, Papale P, Lenci A, Marotta G et al (2017) Modality-independent encoding of individual concepts in the left parietal cortex. Neuropsychologia 105:39–49

Hanke M, Halchenko YO, Sederberg PB, Hanson SJ, Haxby JV, Pollmann S (2009) PyMVPA: a python toolbox for multivariate pattern analysis of fMRI data. Neuroinformatics 7:37–53

Harm MW, Seidenberg MS (1999) Phonology, reading acquisition, and dyslexia: insights from connectionist models. Psychol Rev 106(3):491–528

Hauk O, Davis MH, Pulvermüller F (2008) Modulation of brain activity by multiple lexical and word form variables in visual word recognition: a parametric fMRI study. Neuroimage 42:1185–1195

Hendriks MH, Daniels N, Pegado F, Op de Beeck HP (2017) The effect of spatial smoothing on representational similarity in a simple motor paradigm. Front Neurol 8:222

Hillis AE, Work M, Barker PB, Jacobs MA, Breese EL, Maurer K (2004) Re-examining the brain regions crucial for orchestrating speech articulation. Brain 127(7):1479–1487

Hoffman P, Lambon Ralph MA, Woollams AM (2015) Triangulation of the neurocomputational architecture underpinning reading aloud. Proc Natl Acad Sci USA 112(28):E3719–E3728. https://doi.org/10.1073/pnas.1502032112

Humphreys GF, Lambon Ralph MA (2015) Fusion and fission of cognitive functions in the human parietal cortex. Cereb Cortex 25(10):3547–3560. https://doi.org/10.1093/cercor/bhu198

Humphreys GF, Tibon R (2022) Dual-axes of functional organisation across lateral parietal cortex: the angular gyrus forms part of a multi-modal buffering system. Brain Struct Funct. https://doi.org/10.1007/s00429-022-02510-0

Huth AG, de Heer WA, Griffiths TL, Theunissen FE, Gallant JL (2016) Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 532:453–532

Jobard G, Crivello F, Tzourio-Mazoyer N (2003) Evaluation of the dual route theory of reading: a metanalysis of 35 neuroimaging studies. Neuroimage 20:693–712

Kriegeskorte N (2015) Deep neural networks: a new framework for modeling biological vision and brain information processing. Annu Rev vis Sci 1:417–446

Kriegeskorte N, Mur M, Bandettini P (2008) Representational similarity analysis—connecting the branches of systems neuroscience. Front Syst Neurosci. https://doi.org/10.3389/neuro.06.004.2008

Kuhnke P, Chapman CA, Cheung VK, Turker S, Graessner A, Martin S et al (2022) The role of the angular gyrus in semantic cognition: a synthesis of five functional neuroimaging studies. Brain Struct Funct. https://doi.org/10.1007/s00429-022-02493-y

Lambon Ralph MA, Jefferies E, Patterson K, Rogers TT (2017) The neural and computational bases of semantic cognition. Nat Rev Neurosci 18(1):42–55

Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L et al (2000) Automated Talairach atlas labels for functional brain mapping. Hum Brain Mapp 10:120–131

Lerma-Usabiaga G, Carreiras M, Paz-Alonso PM (2018) Converging evidence for functional and structural segregation within the left ventral occipitotemporal cortex in reading. Proc Natl Acad Sci 115(42):E9981–E9990

Lin N, Wang X, Xu Y, Wang X, Hua H, Zhao Y, Li X (2018) Fine subdivisions of the semantic network supporting social and sensory–motor semantic processing. Cereb Cortex 28(8):2699–2710. https://doi.org/10.1093/cercor/bhx148

Lin N, Xu Y, Yang H, Zhang G, Zhang M, Wang S et al (2020) Dissociating the neural correlates of the sociality and plausibility effects in simple conceptual combination. Brain Struct Funct 225(3):995–1008

Maisog JM, Einbinder ER, Flowers DL, Turkeltaub PE, Eden GF (2008) A meta-analysis of functional neuroimaging studies of dyslexia. Ann N Y Acad Sci 1145:237–259

Mattheiss SR, Levinson H, Graves WW (2018) Duality of function: activation for meaningless nonwords and semantic codes in the same brain areas. Cereb Cortex 28(7):2516–2524. https://doi.org/10.1093/cercor/bhy053

McCandliss BD, Cohen L, Dehaene S (2003) The visual word form area: expertise for reading in the fusiform gyrus. Trends Cogn Sci 7(7):293–299

Mehta S, Grabowski TJ, Razavi M, Eaton B, Bolinger L (2006) Analysis of speech-related variance in rapid event-related fMRI using a time-aware acquisition system. Neuroimage 29:1278–1293

Mesulam M-M (1998) From sensation to cognition. Brain 121:1013–1052

Mirman D, Landrigan J-F, Britt AE (2017) Taxonomic and thematic semantic systems. Psychol Bull 143(5):499

Monsell S, Doyle MC, Haggard PN (1989) Effects of frequency on visual word recognition tasks: where are they? J Exp Psychol Gen 118(1):43–71

Mumford JA, Turner BO, Ashby FG, Poldrack RA (2012) Deconvolving BOLD activation in event-related designs for multivoxel pattern classification analyses. Neuroimage 59:2636–2643

Nelson SM, Cohen AL, Power JD, Wig GS, Miezin FM, Wheeler ME et al (2010) A parcellation scheme for human left lateral parietal cortex. Neuron 67:156–170

Oosterhof NN, Connolly AC, Haxby JV (2016) CoSMoMVPA: multi-modal multivariate pattern analysis of neuroimaging data in Matlab/GNU Octave. Front Neuroinform 10:27

Paivio A (1991) Dual coding theory: retrospect and current status. Can J Psychol 45(3):255–287

Pattamadilok C, Knierim IN, Duncan KJK, Devlin JT (2010) How does learning to read affect speech perception? J Neurosci 30(25):8435–8444

Patterson K, Nestor PJ, Rogers TT (2007) Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci 8:976–987

Pennington J, Socher R, Manning CD (2014) Glove: global vectors for word representation. Paper presented at the proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP)

Pereira F, Gershman S, Ritter S, Botvinick M (2016) A comparative evaluation of off-the-shelf distributed semantic representations for modelling behavioural data. Cogn Neuropsychol 33(3–4):175–190

Pereira F, Lou B, Pritchett B, Ritter S, Gershman SJ, Kanwisher N et al (2018) Toward a universal decoder of linguistic meaning from brain activation. Nat Commun 9(1):1–13

Pillay SB, Stengel BC, Humphries C, Book DS, Binder JR (2014) Cerebral localization of impaired phonological retrieval during rhyme judgment. Ann Neurol 76:738–746

Plaut DC, Shallice T (1993) Deep dyslexia: a case study of connectionist neuropsychology. Cogn Neuropsychol 10(5):377–500

Plaut DC, McClelland JL, Seidenberg MS, Patterson K (1996) Understanding normal and impaired word reading: Computational principles in quasi-regular domains. Psychol Rev 103(1):56–115

Porto de Oliveira JVM, Raquelo-Menegassio AF, Maldonado IL (2021) What’s your name again? A review of the superior longitudinal and arcuate fasciculus evolving nomenclature. Clin Anat 34(7):1101–1110

Price CJ (2000) The anatomy of language: contributions from functional neuroimaging. J Anat 197:335–359

Pugh KR, Frost SJ, Sandak R, Landi N, Moore D, Della Porta G, Mencl W (2010) Mapping the word reading circuitry in skilled and disabled readers. In: Cornelissen P, Hansen P, Kringelbach M, Pugh K (eds) The neural basis of reading. Oxford University Press, Oxford, pp 281–305

Purcell JJ, Turkeltaub PE, Eden GF, Rapp B (2011) Examining the central and peripheral processes of written word production through meta-analysis. Front Psychol 2:239

Raichle ME (2015) The brain’s default mode network. Annu Rev Neurosci 38:433–447

Rayner K, Foorman BR, Perfetti CA, Pesetsky D, Seidenberg MS (2001) How psychological science informs the teaching of reading. Psychol Sci Public Interest 2(2):31–74

Richlan F, Kronbichler M, Wimmer H (2009) Functional abnormalities in the dyslexic brain: a quantitative meta-analysis of neuroimaging studies. Hum Brain Mapp 30(10):3299–3308