Abstract

Processing of emotions has been an enduring topic of interest in neuroimaging research, but studies have mostly used facial emotional stimuli. The aim of this study was to determine neural networks involved in emotion processing using scenic emotional visual stimuli. One hundred and twenty photographs from the International Affective Picture System (IAPS), including ecological scenes of disgust, fear, happiness, and sadness, were presented to 40 healthy participants while they underwent functional magnetic resonance imaging (fMRI). Afterwards they evaluated the emotional content of the pictures in an offline task. The occipito-temporal cortex and the amygdala–hippocampal complex showed a non-specific emotion-related activation, which was more marked in response to negative emotions than to happiness. The temporo-parietal cortex and the ventral anterior cingulate gyrus showed deactivation, with the former being marked for all emotions except fear and the latter being most marked for disgust. The fusiform gyrus showed activation in response to disgust and deactivation in response to happiness or sadness. Brain regions involved in processing of scenic emotion therefore resemble those reported for facial expressions of emotion in that they respond to a range of different emotions, although there appears to be specificity in the intensity and direction of the response.

Introduction

Processing of emotional stimuli has been a topic of considerable interest in functional imaging research. For example, a recent meta-analysis of functional magnetic resonance imaging (fMRI) studies of brain responses to facial emotions found 377 studies carried out between 1990 and 2008 (Fusar-Poli et al. 2009). An important finding of this meta-analysis was that different emotions (e.g., happiness or fear) appear to be associated with activation of the same brain regions (e.g., the amygdala and the fusiform gyrus), although probably at different intensities. Several further studies have also supported the involvement of a common emotional network involving limbic and visual regions in the processing of emotional faces in a relatively non-specific way (Surguladze et al. 2012; Winston et al. 2003; Tettamanti et al. 2012).

It should be noted, however, that the strategy used in the above studies amalgamates brain responses associated with general emotional processing with those associated with processing of faces. A brain system is known to exist which deals specifically with facial information, including that related to both emotional and identity recognition (Ellis and Young 1988; Calder and Young 2005), and so it is possible that the responses seen in studies using facial emotions might partly reflect processing of other kinds of facial information and/or might not capture responses to other kinds of emotional stimuli. Other factors that need to be considered here are that, at the behavioral level, faces have been found to elicit emotional responses even when they are emotionally neutral (Lee et al. 2008), and that the use of facial stimuli may present hidden sources of bias in illness groups: individuals with autism, for example, tend to avoid looking at other people’s eyes, and this has been shown to affect the results of fMRI studies employing emotional faces (Dalton et al. 2005).

Only a relatively small number of functional imaging studies have employed non-facial stimuli to investigate emotional processing. Hariri et al. (2003) used threatening and fearful scenes derived from the International Affective Picture System (IAPS) (Lang et al. 1997) and found that perception of fearful stimuli was associated with activation of the amygdala. Britton et al. (2006) compared the brain responses elicited by IAPS photographs depicting a range of emotions (happiness, sadness, anger, fear) to those elicited by faces showing corresponding emotions. They found that emotion-induced activations were similar for the facial and IAPS stimuli (e.g., amygdala and visual cortex), but the former activated a broader network than the latter. Both these studies’ findings have to be regarded as preliminary, however, because their sample sizes were small (n = 11 and n = 12, respectively).

The aim of this study was to establish the neural networks involved in general emotion processing, using scenic stimuli selected to elicit a range of different emotions. To this end, 40 healthy subjects were scanned while they were presented with emotional and neutral visual stimuli from the IAPS. We hypothesized that brain regions activated by the task would be similar to those involved in the processing of emotional faces, but with differences in those brain areas known to be involved in face processing such as the fusiform gyrus (Kanwisher et al. 1997). We further hypothesized that the brain responses to different emotions would share a common network, as found in studies using facial emotion tasks, although we also anticipated some emotion-specific effects.

Materials and methods

Participants

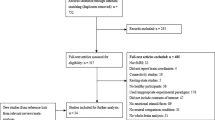

Fifty-one healthy adults were recruited from non-medical staff working in the hospital, their relatives and acquaintances, plus independent sources in the community. All participants were right-handed, had normal or corrected-to-normal vision, and did not report a personal or family history of mental illness and/or treatment with psychotropic medication. Seven fMRI datasets had to be discarded due to excessive head motion (n = 4) or technical artifacts (n = 3). In addition, as described below, four participants were excluded because of probable lack of attention to the photographs as detected in a post-scan task. Therefore, the final sample was composed of 40 healthy adults. Their age range was 19–59 (mean ± SD: 38 ± 11 years), 70 % were female, and their IQ (as estimated from four subtests of the WAIS III) was 112 ± 10.

Online task

The stimuli consisted of 80 photographs depicting ecological scenes selected to elicit emotional responses (20 of disgust, 20 of fear, 20 of happiness, and 20 of sadness). These were selected from the IAPS database on the basis that they had achieved the highest agreement in type of emotion, intensity of emotion, and valence (i.e., positive or negative) in a preliminary offline study of 21 different healthy volunteers. The final set of images used and their IAPS numbers are listed in the “Appendix”. There were also 40 photographs showing non-emotional material; these were also selected from the IAPS database.

Photographs that showed people’s faces were not excluded a priori from the selection process. This would have been impractical in the cases of happiness and sadness, where facial expression and body language are key indicators of the interpretation of the emotional context of a scene. Therefore, among the 20 scenes showing happiness there were 6 showing this facial expression, plus 1 not showing emotion; in the case of sadness there were 9 faces showing sadness or distress and 6 which did not show emotion. The remaining scenes did not include emotional faces, but in the case of fear some photographs showed neutral or masked faces. In all cases, faces were small and facial expressions were part of a complex scene whose interpretation depended on other factors as well.

Participants were instructed simply to look at the photographs. Presentation was divided into 24 blocks, each composed of 5 photographs of the same emotion type. In order to avoid carry-over effects from the emotional content of previous blocks, each block was followed by a ‘washout’ in which three simple symbols such as ampersands were presented. Each photograph or symbol was presented for 4 s, so that each block lasted for 20 s, plus 12 s of washout. Emotional blocks were presented in a counterbalanced order to avoid sequence effects. For example, photographs of happiness were presented once after photographs of sadness, once after photographs of fear, once after photographs of disgust and once after neutral photographs.

fMRI data acquisition

Functional MRI scanning was performed on a 1.5-T GE Signa scanner (General Electric Medical Systems, Milwaukee, WI, USA) at Sant Joan de Déu Hospital in Barcelona. A gradient-echo echo-planar imaging (EPI) sequence depicting the blood-oxygenation-level-dependent (BOLD) contrast was acquired with the following parameters: 394 volumes with the first 10 discarded to avoid T1 saturation effects, 16 axial slices (thickness 7 mm, gap 0.7 mm), matrix size 64 × 64, voxel resolution 3.0 × 3.0 × 7.7 mm³, TE 40 ms, TR 2,000 ms, flip angle 70°. Visual inspection of the raw images led to the detection of technical artifacts in three of the datasets, which were therefore excluded.
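The acquisition parameters can be cross-checked against the block design of the online task. The following is a minimal sketch of that arithmetic; the constant and function names are illustrative, and it assumes that the discarded volumes preceded the task and that no extra lead-in or lead-out period was scanned, neither of which is stated in the text.

```python
# Design constants as reported in the text; names are illustrative only.
N_BLOCKS = 24            # blocks of photographs
PHOTOS_PER_BLOCK = 5     # photographs per block
WASHOUT_SYMBOLS = 3      # simple symbols shown after each block
STIMULUS_S = 4           # seconds per photograph or symbol
TR_S = 2.0               # repetition time in seconds (TR 2,000 ms)
VOLUMES_ACQUIRED = 394
VOLUMES_DISCARDED = 10


def task_duration_s():
    """Total task time: every block is followed by its washout."""
    block_s = PHOTOS_PER_BLOCK * STIMULUS_S      # 20 s
    washout_s = WASHOUT_SYMBOLS * STIMULUS_S     # 12 s
    return N_BLOCKS * (block_s + washout_s)


def volumes_needed():
    """Number of volumes required to cover the whole task at the given TR."""
    return task_duration_s() / TR_S


def volumes_retained():
    """Volumes left after discarding the initial ones."""
    return VOLUMES_ACQUIRED - VOLUMES_DISCARDED


if __name__ == "__main__":
    print(task_duration_s(), volumes_needed(), volumes_retained())
```

Under these assumptions the 384 retained volumes cover exactly the 768 s of task.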

Offline task

After the scanning session, the participants were presented with the same 120 photographs, plus 41 new photographs (19 emotional, 22 non-emotional). They were instructed to state whether they had previously seen the photograph in the scanner or not, to evaluate the intensity and valence of the emotion evoked by dragging a bar using the computer mouse, and to specify the type of emotion shown in the photograph. Participants’ answers were re-coded into: (a) percentage of photographs correctly classified as previously presented or new; (b) mean emotional valence-signed intensity of the online photographs (from highly negative to highly positive); (c) mean absolute emotional intensity of the online photographs (from neutral to high); and (d) percentage of online photographs correctly classified according to the emotional type. As stated earlier, four participants correctly classified fewer than 50 % of the photographs as previously presented or new; they were considered not to have attended to the photographs and were excluded from the rest of the study.
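The re-coding into measures (a) to (d) and the 50 % attention criterion could be expressed along the following lines. This is a sketch only: the `Trial` record, its field names, and the function names are hypothetical, and the rating is assumed to be a single signed value between −1 and +1, as suggested by the scale reported in the Results.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    # Hypothetical record of one offline-task photograph.
    was_online: bool      # photograph had been shown in the scanner
    judged_online: bool   # participant said it had been shown
    rating: float         # signed intensity, assumed to run from -1 to +1
    true_emotion: str     # intended emotion type of the photograph
    chosen_emotion: str   # emotion type chosen by the participant


def recode(trials):
    """Return measures (a)-(d) for one participant."""
    online = [t for t in trials if t.was_online]
    n_all, n_online = len(trials), len(online)
    return {
        # (a) old/new recognition over all photographs
        "recognition_pct": 100.0 * sum(t.judged_online == t.was_online for t in trials) / n_all,
        # (b) valence-signed intensity of the online photographs
        "signed_intensity": sum(t.rating for t in online) / n_online,
        # (c) absolute intensity of the online photographs
        "absolute_intensity": sum(abs(t.rating) for t in online) / n_online,
        # (d) emotion-type classification of the online photographs
        "emotion_pct": 100.0 * sum(t.chosen_emotion == t.true_emotion for t in online) / n_online,
    }


def attended(measures, threshold_pct=50.0):
    """Attention criterion: at least 50 % correct old/new classification."""
    return measures["recognition_pct"] >= threshold_pct


if __name__ == "__main__":
    demo = [
        Trial(True, True, 0.6, "happiness", "happiness"),
        Trial(True, False, -0.4, "fear", "disgust"),
        Trial(False, False, 0.0, "neutral", "neutral"),
    ]
    print(recode(demo))
```

In practice measures (b) to (d) would also be broken down by emotion type, which only requires filtering `online` by `true_emotion` before averaging.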

Analysis

The following standard preprocessing and first level processing steps were conducted with fMRI Expert Analysis Tool (FEAT), part of the FMRIB Software Library (FSL) tools (Smith et al. 2004): (a) motion correction; (b) non-brain removal; (c) isotropic 5 mm full width at half maximum (FWHM) Gaussian smoothing; (d) grand-mean intensity normalization of the entire 4D dataset by a single multiplicative factor; (e) high-pass temporal filtering; (f) time-series statistical analysis of the differences between each emotion and the neutral blocks with local autocorrelation correction; and (g) registration to the MNI standard space. To minimize unwanted movement-related effects, four participants presenting an estimated maximum absolute movement >4.0 mm or an average absolute movement >0.3 were excluded from the study.
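Two of the numerical settings above lend themselves to a short illustration: the conversion of the smoothing kernel's FWHM into the standard deviation of the Gaussian, and the movement exclusion rule. The sketch below is not the FSL implementation; the function names are hypothetical, and the average-movement threshold is assumed to be in millimetres because the text gives no unit for it.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Standard deviation of a Gaussian kernel with the given FWHM."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def exceeds_motion_limits(abs_displacement_mm, max_limit=4.0, mean_limit=0.3):
    """Apply the exclusion rule to one participant.

    abs_displacement_mm: estimated absolute displacement per volume.
    mean_limit is ASSUMED to be in mm; the text states only ">0.3".
    """
    peak = max(abs_displacement_mm)
    average = sum(abs_displacement_mm) / len(abs_displacement_mm)
    return peak > max_limit or average > mean_limit


if __name__ == "__main__":
    print(round(fwhm_to_sigma(5.0), 3))               # sigma of the 5 mm kernel
    print(exceeds_motion_limits([0.1, 0.2, 0.15]))    # a still participant
    print(exceeds_motion_limits([0.1, 4.5, 0.1]))     # a single large movement
```

A 5 mm FWHM kernel corresponds to a standard deviation of about 2.12 mm.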

Group analyses included a group mean activation map per emotional contrast (e.g., fearful vs. neutral images), as well as a repeated measures analysis of variance (RM-ANOVA) of the four emotions. It should be noted that the separate mean analyses localize those brain regions activated by one or more emotions, while the RM-ANOVA analysis localizes those brain regions where different emotions are associated with different responses. All analyses were fitted as mixed-effect models (Beckmann et al. 2003) and cluster-thresholded to p < 0.05 corrected for multiple comparisons.
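To make the logic of the RM-ANOVA concrete, the sketch below computes a textbook one-way repeated-measures F statistic for a single value per participant and condition. It is an illustration under simplifying assumptions, not the voxelwise mixed-effects model fitted in FSL, and the function names are hypothetical. With 40 participants and four emotions, the degrees of freedom are 3 and 117, as in the behavioural statistic reported in the Results.

```python
def rm_anova_df(n_subjects, n_conditions):
    """Degrees of freedom for the condition effect and the error term."""
    return n_conditions - 1, (n_subjects - 1) * (n_conditions - 1)


def rm_anova_f(data):
    """One-way repeated-measures F statistic.

    data: list of rows, one per participant, each with one value per condition.
    Returns (F, df_condition, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Between-participant variance is removed from the error term.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond, df_error = rm_anova_df(n, k)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


if __name__ == "__main__":
    toy = [[1, 2, 3], [2, 3, 5], [3, 5, 6]]  # made-up values, 3 participants x 3 conditions
    print(rm_anova_f(toy))
    print(rm_anova_df(40, 4))
```

Removing the between-participant sum of squares from the error term is what distinguishes this test from an ordinary one-way ANOVA.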

Grouping of regions by their emotional response

In order to better comprehend the commonality and specificity of the brain responses to the different emotions, brain regions that showed a similar brain response to a given emotion were grouped together. For instance, all brain regions that displayed marked activations in response to a given emotion but no substantial response to another emotion would be grouped together.

First, a set of empirical brain regions was obtained from the intersection of the spatial clusters of activation obtained in the different mean and RM-ANOVA analyses. Second, the response of each of these regions to each emotion was coded as “activation”, “deactivation”, or “non-response”. Finally, these codes were entered into a hierarchical clustering analysis with complete linkage (i.e., the similarity of two groups of regions was computed as the similarity of their most dissimilar regions). This analysis yielded a dendrogram (i.e., a tree diagram of the regions according to the similarity of their emotional response, see Fig. 1), from which the groups of regions could be derived.

Fig. 1 Dendrogram of the hierarchical clustering analysis. Tree diagram showing the grouping of the selected brain regions according to the similarity of their response to the different emotions. Regions were obtained from the intersection of the spatial clusters of activation obtained in the different mean and repeated measures analysis of variance (RM-ANOVA) analyses.
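The grouping procedure can be sketched as a small agglomerative clustering with complete linkage. The example below is illustrative only: the region labels and response codes are toy values rather than the study's data, the function names are hypothetical, and the distance between two regions is assumed to be the number of emotions on which their codes differ, since the text does not specify the dissimilarity measure.

```python
from itertools import combinations

# Response codes per emotion (disgust, fear, happiness, sadness):
# +1 activation, -1 deactivation, 0 non-response. TOY VALUES, not study data.
TOY_CODES = {
    "A": (1, 1, 0, 1),
    "B": (1, 1, 1, 1),
    "C": (-1, 0, -1, -1),
    "D": (-1, -1, -1, -1),
    "E": (1, 0, -1, -1),
}


def code_distance(a, b):
    """Assumed dissimilarity: number of emotions with a different code."""
    return sum(x != y for x, y in zip(a, b))


def complete_linkage(codes, n_groups):
    """Merge regions until n_groups remain.

    Complete linkage: the distance between two groups is the distance
    between their most dissimilar members.
    """
    clusters = [[name] for name in codes]

    def group_distance(g1, g2):
        return max(code_distance(codes[a], codes[b]) for a in g1 for b in g2)

    while len(clusters) > n_groups:
        # Pick the closest pair of groups (first one found in case of ties).
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda pair: group_distance(clusters[pair[0]], clusters[pair[1]]),
        )
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]  # j > i, so index i is unaffected
    return clusters


if __name__ == "__main__":
    print(complete_linkage(TOY_CODES, 3))
    print(complete_linkage(TOY_CODES, 2))
```

Recording the distance at which each merge occurs would give the heights needed to draw a dendrogram, and cutting the tree at a chosen height yields the groups of regions.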

Results

Offline task

The participants recognized whether the pictures had been presented during the scanning session at a level of 76 % correct (range 50–97 %; emotional pictures = 77 %, neutral pictures = 75 %). The subjects also identified the four emotions at a high rate (88 %, range 75–99 %; disgust = 78 %, fear = 83 %, happiness = 86 %, sadness = 91 %, neutral = 94 %). Mean subjective emotional valence-signed intensity, rated from −1 to +1, was positive in the photographs of happiness (+0.59), negative in the photographs of disgust, fear or sadness (−0.55, −0.56 and −0.54, respectively), and close to zero in neutral photographs (+0.04). There were no significant differences in absolute subjective emotional intensity between the four emotions (RM-ANOVA F(3,117) = 1.9, P = 0.138).

Brain responses to the emotional photographs

As shown in Table 1 and Fig. 2, the brain responses to each of the four types of emotional pictures generally involved strong and bilateral changes in the visual areas (occipital and fusiform cortices extending to adjacent temporal regions and the cerebellum), the limbic system (amygdala, hippocampus and anterior cingulate cortex), the thalamus and parts of the prefrontal and temporo-parietal cortices.

Fig. 2 Maps of the brain response to the different emotional scenes. Red to yellow colors indicate regions with statistically significant activation (i.e., emotion > neutral); blue to cyan colors indicate regions with statistically significant deactivation (i.e., emotion < neutral); purple to pink colors indicate regions with statistically significant differences between the emotions.

Specifically, responses to disgusting pictures were characterized by activations in the occipito-temporal cortex and the amygdala-hippocampal complex (extending to thalamus, insula, inferior frontal cortex and tectum), while there was deactivation of the ventral anterior cingulate and temporo-parietal cortices. Pictures of fear and sadness were also associated with activations in the occipito-temporal cortex and the amygdala-hippocampal complex. However, deactivation associated with sad pictures was mainly limited to the temporo-parietal cortex, and fearful pictures were not accompanied by any substantial deactivation. Finally, responses to happy pictures consisted mainly of deactivations in the temporo-parietal and dorsal frontal cortices.

RM-ANOVA between the four emotions revealed statistically significant differences in nearly all these areas (Fig. 2). These differences were particularly marked in the occipito-temporal cortex including the fusiform gyrus—which showed activation in response to photographs of disgust, but deactivation in response to photographs eliciting happiness or sadness.

Grouping of regions by their emotional response

Four groups of regions could be defined according to their response to the different emotional photographs (Fig. 3). The first group included regions that responded with a pattern of activation: mainly the bilateral occipito-temporal cortex, amygdala and thalamus. Interestingly, the response in these areas was more intense for negative emotions than for happiness. The other three groups of regions were composed of regions that responded with deactivation patterns. The temporo-parietal cortex, for example, showed marked deactivation in all emotions except fear. The ventral anterior cingulate gyrus, conversely, showed a mild deactivation that was more marked in photographs of disgust. The bilateral fusiform gyrus constituted a special group in which deactivation was observed in photographs of happiness or sadness, but activation in photographs of disgust.

Fig. 3 Patterns of brain response to the different emotional scenes. Effect size of the blood-oxygenation-level-dependent (BOLD) response to the different emotions in the selected brain regions. Regions were obtained from the intersection of the spatial clusters of activation obtained in the different mean and repeated measures analysis of variance (RM-ANOVA) analyses. Note that this plot is based on the voxels where the responses achieved statistical significance and may thus overestimate the true effect sizes.

Discussion

Brain processing of emotional stimuli has been heavily investigated using functional imaging, but studies to date have mostly used emotional faces, which may mix the response to emotion with the processing of other facial information. In this study, scenic photographs selected to ecologically elicit different emotions were presented to a sample of 40 healthy volunteers while fMRI data were acquired. We found a set of brain responses in regions broadly similar to those observed in studies using facial emotion tasks, as well as additional changes that, as far as we know, have not been reported using facial stimuli. There was some evidence to support the concept of a common set of brain regions involved in processing of emotional stimuli.

In agreement with studies using facial emotional stimuli (Fusar-Poli et al. 2009), this study found that limbic and extrastriate visual areas (i.e., amygdala and occipito-temporal cortex) showed activation in response to emotional scenes. The amygdala is the structure traditionally most associated with emotional processing (Breiter et al. 1996; Adolphs et al. 1995), and is considered to represent an important hub in the organization of emotional responses to perceptual stimuli. It receives information from all sensory modalities, including the primary visual cortex and the visual-related inferior temporal cortex (Emery et al. 2000), whilst its larger efferent projections connect to hypothalamic, midbrain, and brainstem nuclei that control psycho-physiological and behavioral emotional responses (Davis and Whalen 2001), as well as several levels of the ventral visual processing stream (Emery et al. 2000). At the behavioral level, the involvement of the amygdala in emotional reactions has been well documented in animals (Knapska et al. 2006).

It should be noted, however, that no significant amygdala response to happy scenic stimuli was detected, in contrast to studies using happy faces (Fusar-Poli et al. 2009). Amygdala activation has also been proposed to be modulated by the saliency of stimuli (Whalen 2007), which may include specific aspects of faces such as the area of sclera visible around the iris (Radua et al. 2010; Whalen et al. 2004) or specific aspects of scenes such as sharp object contours (Bar and Neta 2007). One possible explanation, therefore, could be that the lack of amygdala activation to happy scenes simply reflected a lower salience of these images than the scenes portraying other emotions.

We also found that disgust-related activation of the amygdala–hippocampal complex extended to the insular cortex. This finding is in agreement with previous studies reporting that the insula responds selectively to facial expressions of disgust and disgust-inducing pictures (Wright et al. 2004; Fusar-Poli et al. 2009).

The fusiform gyrus showed activation in response to disgust but deactivation in response to happiness or sadness. The fusiform gyrus has been found to be involved in facial emotion processing (Fusar-Poli et al. 2009; Wang et al. 2005). Interestingly, the fusiform (de-)activation found in our study spatially overlapped the face-selective and the emotion-related activation described in the meta-analysis of the brain response to facial stimuli by Fusar-Poli et al. (2009). Specifically, their (MNI-converted) meta-analytic peaks associated with perception of neutral faces lay within the areas of activation we found in response to scenes eliciting disgust, fear or sadness. Also, Fusar-Poli et al.’s (2009) meta-analytic peaks associated with perception of emotional (vs. neutral) faces lay within the areas of activation in response to disgust or fear scenes, or within the areas of deactivation in response to sadness or happiness scenes.

In the present study, the fusiform gyrus had a clear emotion-specific pattern, in that it was activated in response to disgust but deactivated in response to happiness or sadness. However, this emotional specificity should be taken with some caution as it contrasts with previous studies using emotional faces, which have reported this region to show activation not only in response to expressions of disgust or fear but also to expressions of happiness or sadness. A potential explanation for this discrepancy may be related to the recent distinction between the lateral face-selective and the medial scene-selective parts of the fusiform gyrus (Nasr et al. 2011). It is plausible that the activation found in our study mainly involves the scene-selective areas. However, several of the happy and sad scenes included faces and so their associated responses may have been displaced to the face-selective areas, ultimately involving a lower activity of the scene-selective areas.

Finally, this study also found evidence of deactivations. First, the temporo-parietal cortex showed deactivation when emotional photographs were presented, with this response being only mild with photographs of fear. Second, the ventral anterior cingulate/medial frontal cortex (extending to adjacent orbito-frontal areas) showed a mild deactivation that was more noticeable while viewing photographs of disgust. Similar deactivations have occasionally been reported by studies using facial stimuli (Rahko et al. 2010), although this low frequency may be related to the fact that most of the studies to date have been focused on fear and only a few have reported deactivations.

It is tempting to speculate that these deactivations reflect changes in activity in the so-called default mode network, a series of interconnected brain regions, including the medial frontal cortex, the posterior cingulate cortex, and parts of the parietal and temporal cortex, which are active at rest but which deactivate during performance of a wide range of attention-demanding tasks (Buckner et al. 2008; Gusnard and Raichle 2001). The fact that the pattern did not involve the whole of the network could be due to the fact that the task under study (viewing emotional scenes) was not highly attention-demanding, and it was compared with viewing other scenes of equivalent complexity rather than a baseline condition of visualization of simple symbols or a fixation cross. Nevertheless, it seems unlikely that all the deactivations we observed could be explained in this way: for example, the fusiform gyrus showed changes with several emotions, although here there was a pattern of activation in response to disgust but deactivation in response to happiness or sadness.

This study has some limitations. First, while IAPS photographs may induce a more general emotional response than that elicited by facial expressions of emotion, some of the findings could still be specific to the processing of visual stimuli. Brain processing of emotional music, for example, has been reported to involve limbic and auditory (rather than visual) areas (Mitterschiffthaler et al. 2007). Second, it should be noted that IAPS was developed to evaluate valence and arousal, rather than the response to specific emotions. A new set of both facial and scenic emotional images, developed to evaluate the response to specific emotions from facial and non-facial stimuli, would probably further clarify the common and specific components of the brain response. Third, in the offline task participants were only allowed to choose between four emotions (and neutral), and so if they experienced other emotional reactions to these photographs, they would have been forced to define them as one of these states. However, only photographs for which there had been a good consensus and high inter-rater agreement in the preliminary offline study were selected, thus minimizing this possibility. Fourth, as noted in the “Methods” section, this study did not aim to completely exclude faces from the emotion-provoking photographs in relation to happy and sad scenes, where in some cases facial expression and body language provide necessary emotional context. We therefore cannot completely discount the possibility that the presence of faces may have influenced the brain responses produced, especially in the fusiform gyrus. Indeed, it must be noted that human individuals have been reported to quickly saccade to the faces embedded in a natural scene (Crouzet and Thorpe 2011; Crouzet et al. 2010), and face-selective responses have been reported even if the faces are defined contextually (Cox et al. 2004), as was the case in two fear photographs.

To sum up, this study investigated brain responses to scenic photographs that were selected to elicit specific emotions. Regions showing activation in comparison to neutral photographs appeared to partially overlap with those reported in studies employing emotional faces, particularly in the amygdala and occipito-temporal cortex. However, this study was also able to find a new emotion-specific response pattern in the fusiform gyrus, as well as deactivation patterns in the temporo-parietal and anterior cingulate cortex. The approach used here thus has the potential to complement and add to findings from studies using faces to elicit emotional responses, ultimately offering a better understanding of the brain substrates of emotion processing abnormalities. It may also prove to be useful in the study of disorders such as autism, where observed emotional responses in standard facial paradigms may be affected by the known tendency to avoid looking at other people’s eyes (Dalton et al. 2005).

References

Adolphs R, Tranel D, Damasio H, Damasio AR (1995) Fear and the human amygdala. J Neurosci 15(9):5879–5891

Bar M, Neta M (2007) Visual elements of subjective preference modulate amygdala activation. Neuropsychol 45:2191–2200

Beckmann C, Jenkinson M, Smith SM (2003) General multi-level linear modelling for group analysis in FMRI. Neuroimage 20:1052–1063

Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR (1996) Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17(5):875–887

Britton JC, Taylor SF, Sudheimer KD, Liberzon I (2006) Facial expressions and complex IAPS pictures: common and differential networks. Neuroimage 31(2):906–919

Buckner RL, Andrews-Hanna JR, Schacter DL (2008) The brain’s default network: anatomy, function, and relevance to disease. Ann N Y Acad Sci 1124:1–38. doi:10.1196/annals.1440.011

Calder AJ, Young AW (2005) Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci 6(8):641–651. doi:10.1038/nrn1724

Cox D, Meyers E, Sinha P (2004) Contextually evoked object-specific responses in human visual cortex. Science 304(5667):115–117. doi:10.1126/science.1093110

Crouzet SM, Thorpe SJ (2011) Low-level cues and ultra-fast face detection. Front Psychol 2:342. doi:10.3389/fpsyg.2011.00342

Crouzet SM, Kirchner H, Thorpe SJ (2010) Fast saccades toward faces: face detection in just 100 ms. J Vis 10(4):16.1–17. doi:10.1167/10.4.16

Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, Alexander AL, Davidson RJ (2005) Gaze fixation and the neural circuitry of face processing in autism. Nat Neurosci 8(4):519–526

Davis M, Whalen PJ (2001) The amygdala: vigilance and emotion. Mol Psychiatry 6(1):13–34

Ellis AW, Young AW (1988) Human cognitive neuropsychology. Erlbaum, Hove

Emery NJ, Amaral DG, Lane RD, Nadel L (2000) The role of the amygdala in primate social cognition. Cognitive neuroscience of emotion. Oxford University Press, New York, pp 156–191

Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, Perez J, McGuire P, Politi P (2009) Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34(6):418–432

Gusnard DA, Raichle ME (2001) Searching for a baseline: functional imaging and the resting human brain. Nat Rev Neurosci 2(10):685–694. doi:10.1038/35094500

Hariri AR, Mattay VS, Tessitore A, Fera F, Weinberger DR (2003) Neocortical modulation of the amygdala response to fearful stimuli. Biol Psychiatry 53(6):494–501. pii: S0006322302017869

Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17(11):4302–4311

Knapska E, Nikolaev E, Boguszewski P, Walasek G, Blaszczyk J, Kaczmarek L, Werka T (2006) Between-subject transfer of emotional information evokes specific pattern of amygdala activation. Proc Natl Acad Sci USA 103(10):3858–3862. doi:10.1073/pnas.0511302103

Lang PJ, Bradley MM, Cuthbert BN (eds) (1997) International Affective Picture System (IAPS): technical manual and affective ratings. NIMH Center for the Study of Emotion and Attention. University of Florida, Gainesville

Lee E, Kang JI, Park IH, Kim JJ, An SK (2008) Is a neutral face really evaluated as being emotionally neutral? Psychiatry Res 157(1–3):77–85

Mitterschiffthaler MT, Fu CH, Dalton JA, Andrew CM, Williams SC (2007) A functional MRI study of happy and sad affective states induced by classical music. Hum Brain Mapp 28(11):1150–1162. doi:10.1002/hbm.20337

Nasr S, Liu N, Devaney KJ, Yue X, Rajimehr R, Ungerleider LG, Tootell RB (2011) Scene-selective cortical regions in human and nonhuman primates. J Neurosci 31(39):13771–13785. doi:10.1523/JNEUROSCI.2792-11.2011

Radua J, Phillips ML, Russell T, Lawrence N, Marshall N, Kalidindi S, El-Hage W, McDonald C, Giampietro V, Brammer MJ, David AS, Surguladze SA (2010) Neural response to specific components of fearful faces in healthy and schizophrenic adults. Neuroimage 49(1):939–946

Rahko J, Paakki JJ, Starck T, Nikkinen J, Remes J, Hurtig T, Kuusikko-Gauffin S, Mattila ML, Jussila K, Jansson-Verkasalo E, Katsyri J, Sams M, Pauls D, Ebeling H, Moilanen I, Tervonen O, Kiviniemi V (2010) Functional mapping of dynamic happy and fearful facial expression processing in adolescents. Brain Imaging Behav 4(2):164–176. doi:10.1007/s11682-010-9096-x

Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM (2004) Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage 23(Suppl 1):S208–S219. doi:10.1016/j.neuroimage.2004.07.051

Surguladze SA, Radua J, El-Hage W, Gohier B, Sato J, Kronhaus D, Proitsi P, Powel J, Phillips ML (2012) Interaction of catechol O-methyltransferase and serotonin transporter genes modulates effective connectivity in a facial emotion processing circuitry. Transl Psychiatry 2:e70

Tettamanti M, Rognoni E, Cafiero R, Costa T, Galati D, Perani D (2012) Distinct pathways of neural coupling for different basic emotions. Neuroimage 59(2):1804–1817. doi:10.1016/j.neuroimage.2011.08.018

Wang L, McCarthy G, Song AW, Labar KS (2005) Amygdala activation to sad pictures during high-field (4 tesla) functional magnetic resonance imaging. Emotion 5(1):12–22. doi:10.1037/1528-3542.5.1.12

Whalen PJ (2007) The uncertainty of it all. Trends Cogn Sci 11:499–500

Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, Johnstone T (2004) Human amygdala responsivity to masked fearful eye whites. Science 306(5704):2061

Winston JS, O’Doherty J, Dolan RJ (2003) Common and distinct neural responses during direct and incidental processing of multiple facial emotions. Neuroimage 20(1):84–97 pii: S1053811903003033

Wright P, He G, Shapira NA, Goodman WK, Liu Y (2004) Disgust and the insula: fMRI responses to pictures of mutilation and contamination. NeuroReport 15(15):2347–2351. pii: 00001756-200410250-00009

Acknowledgments

This work was supported by the Centro de Investigación Biomédica en Red de Salud Mental (CIBERSAM), the Catalonian Government (2009SGR211 to the Research Unit of Benito Menni) and the Instituto de Salud Carlos III (Río Hortega research contract to Dr. Radua (CM11/00024); Miguel Servet research contracts to Drs. Salvador (CP07/00048) and Pomarol-Clotet (CP10/00596); Intensification grant to Dr. Sarró (10/231); and Research Projects to Drs. Pomarol-Clotet (PI10/01058) and Salvador (PI05/1874)).

Conflict of interest

The authors declare no conflict of interest in relation to the present manuscript.

Appendix

Pictures of the International Affective Picture System (IAPS) used in the fMRI task:

Disgust: 1111, 1280, 1945, 2720, 7359, 7361, 7380, 9008, 9140, 9290, 9300, 9301, 9320, 9330, 9342, 9373, 9500, 9570, 9571 and 9830.

Fear: 1052, 1300, 1301, 1303, 1525, 1726, 1932, 2811, 5940, 6211, 6230, 6244, 6260, 6300, 6370, 6510, 6825, 7640, 9600 and 9620.

Happiness: 1340, 1441, 1463, 1811, 2154, 2216, 2332, 2345, 2391, 5201, 5551, 5600, 5760, 5811, 5831, 5833, 8170, 8190, 8496 and 8540.

Sadness: 2053, 2141, 2205, 2312, 2455, 2520, 2590, 2700, 2703, 2718, 2750, 2799, 3220, 3230, 3300, 3350, 9220, 9415, 9421 and 9435.

Neutral scenes: 2383, 2393, 2575, 5471, 5520, 5534, 5535, 6150, 7002, 7004, 7009, 7010, 7025, 7035, 7036, 7037, 7041, 7050, 7059, 7080, 7100, 7130, 7140, 7161, 7175, 7186, 7205, 7211, 7217, 7224, 7233, 7235, 7491, 7495, 7500, 7503, 7510, 7595, 7950 and 2745.1.

Cite this article

Radua, J., Sarró, S., Vigo, T. et al. Common and specific brain responses to scenic emotional stimuli. Brain Struct Funct 219, 1463–1472 (2014). https://doi.org/10.1007/s00429-013-0580-0

DOI: https://doi.org/10.1007/s00429-013-0580-0