Abstract

The use of signs as a major means of communication affects other functions such as spatial processing. Intriguingly, this is true even for functions which are less obviously linked to language processing. Speakers using signs outperform non-signers in face recognition tasks, potentially as a result of a lifelong focus on the mouth region for speechreading. Against this background, we hypothesized that the processing of emotional faces is altered in persons using mostly signs for communication (henceforth called deaf signers). Whereas the mouth region is more crucial for recognizing happiness, the eye region matters more for recognizing anger. Using morphed faces, we created facial composites in which either the upper or lower half of an emotional face was kept neutral while the other half varied in intensity of the expressed emotion, being either happy or angry. As expected, deaf signers were more accurate at recognizing happy faces than non-signers. The reverse effect was found for angry faces. These differences between groups were most pronounced for facial expressions of low intensities. We conclude that the lifelong focus on the mouth region in deaf signers leads to more sensitive processing of happy faces, especially when expressions are relatively subtle.

Introduction

Deaf persons using sign language have been extensively studied to explore whether language is an innate ability, whether there are universals that can be found in all languages and whether the underlying network of sign language is similar to that of spoken languages (for an introduction see Emmorey, 2001). Because signers use three-dimensional space as a means to transport semantic content, grammar and phonology, differences between signers and non-signers in spatial processing have been described in both linguistic and non-linguistic contexts (Emmorey & Tversky, 2002). As an example of a non-linguistic task, signers outperform speakers of spoken languages in mental spatial transformations (Emmorey, Kosslyn, & Bellugi, 1993). The presence of language effects in a non-linguistic domain was even taken as support for linguistic relativity (Whorf, 2012), in the sense that interpretations of signed or spoken sentences depend on language-related factors and constraints (Dobel, Enriquez-Geppert, Hummert, Zwitserlood, & Bolte, 2011).

While the strong relation between spatial processing and language is well documented (e.g. Levinson, 2003), it may be surprising that signers display heightened expertise in a domain that appears unrelated to language, e.g. non-linguistic aspects of face processing. A series of studies demonstrated that signers perform better than speakers of spoken languages in face recognition tasks (Bellugi et al., 1990; Bettger, 1992; Bettger, Emmorey, McCullough, & Bellugi, 1997). This is even the case in deaf signing children aged 6–9 years (Bellugi et al., 1990; Bettger et al., 1997) when performing a matching task with unfamiliar faces (Benton, 1983). In contrast, deaf children not using sign language do not show an advantage in face recognition (Parasnis, Samar, Bettger, & Sathe, 1996) and thus, it appears that it is not deafness per se that produces the effect, but the expertise with and use of sign language, at least in children. The use of sign language per se, independent of hearing status, might change subordinate levels of face processing, as evidenced by a speed–accuracy trade-off for face recognition in deaf and hearing signers (Stoll et al., 2017). Exploring face recognition in more detail, McCullough and Emmorey (1997) suggest that the underlying mechanism is enhanced processing of local facial features that are used in sign language, i.e. the eyes and, most importantly, the mouth region which is used for speechreading. By contrast, more global, “Gestalt”-like processing of faces is not altered in signers (McCullough & Emmorey, 1997), nor is the amount of holistic/configural face processing (de Heering, Aljuhanay, Rossion, & Pascalis, 2012).

A common misconception about using signs for communication is that signers rely exclusively on manual signs. The face is a particularly communicative part of the human body, conveying information not only about a person's emotion or identity, but also dynamic cues to speech content aiding speech comprehension (Campbell, Brooks, Haan, & Roberts, 1996). While most aspects of face perception are characterized as holistic rather than feature-based (Maurer, Le Grand, & Mondloch, 2002), speechreading may be a possible exception: the mouth region is crucial, and there is little or no direct contribution from the upper half of the face (Marassa & Lansing, 1995), although more subtle effects of holistic processing on speechreading may exist (Schweinberger & Soukup, 1998). In most sign languages, the eye region is used, e.g. to signal turn-taking as in American Sign Language (ASL). Interestingly, beginning signers fixate more on the mouth region of signers, possibly to perceive information from speechreading, while native signers fixate on or near the eyes (Emmorey, Thompson, & Colvin, 2008). Even though everybody reads lips (Rosenblum, 2008), persons with severe hearing loss outperform hearing persons in speechreading even if they do not use official sign languages (Bernstein, Tucker, & Demorest, 2000). The success of speechreading depends on various cognitive factors such as working memory functions (Andersson, Lyxell, Ronnberg, & Spens, 2001). Deaf persons using signs as a major means of communication, i.e. as their native language or as sign-supported speech (SSS), look more at the mouth region than hearing participants. Hearing persons, however, inspect upper and lower areas of faces equally. It thus appears that persons using signs for communication employ mostly peripheral vision to perceive signs (Mastrantuono, Saldana, & Rodriguez-Ortiz, 2017).

A similar conclusion was reached by He, Xu and Tanaka (2016), who reported that deaf signers show a smaller inversion effect in the mouth region than hearing non-signers. The authors concluded that deaf signing participants had enhanced peripheral field attention. Using event-related potentials, Mitchell, Letourneau and Maslin (2013) also demonstrated that deaf signers display increased attention to the lower part of faces, even in the absence of gaze shifts. The authors assume that a lifelong tendency to fixate the lower part of faces leads to increased saliency of this region even before overt attention shifts start. When fixation patterns are measured, deaf users of ASL fixate the bottom half of faces more than the upper half (Letourneau & Mitchell, 2011).

Altered processing of faces not only affects person recognition, but was extended to emotional faces displaying, e.g. anger and disgust (McCullough & Emmorey, 2009) even though earlier studies suggested that there is no advantage, or even a disadvantage for signers in the comprehension of nonverbal expression of emotions (Schiff, 1973; Weisel, 1985). McCullough and Emmorey (2009) compared categorical perception for affective facial expressions and linguistic facial expressions. Continua of morphed images of faces going in eleven steps from anger to disgust (affective facial expression) and from Wh-questions to yes–no questions (linguistic facial expression) served as stimuli. The results demonstrated that categorical perception in faces is not limited to affective stimuli, but also extends to linguistic facial stimuli in hearing non-signers. Importantly, while signers displayed categorical perception in both tasks, categorical perception of affective facial stimuli was only seen when preceded by linguistic stimuli. Thus, exposure to linguistic stimuli affects categorical perception of affective facial expressions.

Taken together, the use of signs for communication has an impact on several aspects of face perception. It remains unclear whether signers classify emotional expressions differently than hearing non-signers.

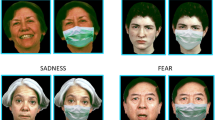

In the current study, we ask if the perception of emotional facial expressions is altered in deaf persons using signs for communication, i.e. speakers of DGS (German sign language) and/or persons using sign supported speech (SSS), all henceforth called deaf signers. We use morphed versions of happy and angry faces going from a neutral expression (0% morphing) to a maximum of expression (100% morphing), thereby varying the intensity of expression. Participants were asked to classify the faces as happy or angry. Importantly, we used original stimuli, but also created composite faces in which either the top or bottom half of the face was neutral (for an example see Fig. 1) while the other half expressed the respective emotional content in various degrees. Expressions of happiness and anger were chosen, because, firstly, both are well recognized compared to other emotional expressions (Ekman & Friesen, 1971, 1986; Gosselin & Kirouac, 1995; Izard, 1994; Tracy & Robins, 2008; Vassallo, Cooper, & Douglas, 2009). Secondly, for the perception of happiness, the mouth region constitutes a crucial part of the face (e.g. Beaudry, Roy-Charland, Perron, Cormier, & Tapp, 2014), whereas the eyes/eyebrows are more important for the recognition of anger (Calvo & Nummenmaa, 2008) even though these latter effects are inconsistent (for eye tracking results and an overview see Beaudry et al., 2014).

Based on the presented literature, we predict that deaf persons using signs outperform hearing persons in the recognition of happy expressions. The assumed underlying mechanism is enhanced processing of the mouth region due to a lifelong focus on this region. Focusing on the mouth region should come with costs for processing of information in the eye region, which should result in impaired processing of angry faces in deaf signers compared to hearing non-signers (but see Emmorey et al., 2008 for evidence of stronger processing of the eye region in native signers compared to beginners). We assume that the predicted effects are already visible when the emotions are not completely expressed (less than 100% morphing).

Methods

Participants

Twenty individuals with hearing loss contributed data to this study (mean age, 51.8 years ± SD 14.5; 11 women). They all used signing as a major means of communication. Thirteen were congenitally deaf and seven had an onset of deafness within the first 3 years of life. All had used signs as a means of communication since the age of three or earlier. None of them indicated problems in communicating with signs, and the experimenter (the second author, B.N.-K., who was raised bilingually with spoken and signed German) did not perceive any problems in communication. All twenty individuals reported comprehending sign supported speech at a native speaker level. None of them learned the Deutsche Gebärdensprache (German sign language, DGS) as a first language, because DGS was only later introduced as an official language in Germany (since 2002). Fourteen individuals were also speakers of DGS, ten of whom mainly used sign supported speech. The remaining six used speech-accompanying gestures only. The experimenter used sign supported speech and switched to DGS when necessary, e.g. under time pressure (DGS uses fewer words than speech-accompanying gestures). One further participant was excluded from data analysis due to premature abortion of the experiment. Participants were recruited via self-help groups in the area of Leipzig, social networks and personal acquaintances.

The group of signers was compared to a group of N = 20 hearing non-signers (mean age, 48.9 years ± SD 15.4; 8 women). None of them had any experience with sign language or SSS. All participants from both groups had at least 10 years of schooling.

Participants were tested individually, either in their homes or in the laboratories of our departments.

Materials

Stimuli were faces of four men and four women from the FEEST database (Young, Perrett, Calder, Sprengelmeyer, & Ekman, 2002). Each individual displayed the emotional expressions “happy” and “angry”, or a neutral expression. Based on these original faces we created four different morphing levels (ML: 25%, 50%, 75%, 100%) for “happy” and “angry”. The neutral faces and face morphs served to generate additional stimuli of composite faces, in which the upper (or lower) halves of the neutral faces were combined with the lower (or upper) halves of the emotional faces. This was performed using Photoshop CC 2017 by cutting and recombining the pictures horizontally at the horizontal nose level. This resulted in three stimulus type conditions, i.e. original (O), eye region emotional–mouth region neutral (EEmo) and eye region neutral–mouth region emotional (MEmo). Thus, there were 192 stimuli (8 individuals × 2 expressions × 3 conditions × 4 MLs), see Fig. 2 for examples.

Fig. 2 Top panel: example of an original stimulus set in four morphing levels (stimulus type O). Middle panel: example of a composite face: eye region neutral–mouth region emotional (stimulus type MEmo). Lower panel: example of a composite face: eye region emotional–mouth region neutral (stimulus type EEmo)
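The factorial structure of the stimulus set described above can be sketched in a few lines of Python. This is an illustrative enumeration only, not the procedure used to create the images; the identity labels (`m1` to `f4`), the dictionary keys and the function names are hypothetical and were introduced for this sketch.

```python
from itertools import product

# Hypothetical labels for the four male and four female FEEST identities.
IDENTITIES = [f"{sex}{i}" for sex in ("m", "f") for i in range(1, 5)]
EMOTIONS = ("happy", "angry")
STIMULUS_TYPES = ("O", "MEmo", "EEmo")
MORPH_LEVELS = (25, 50, 75, 100)  # morphing level (ML) in percent


def build_stimulus_set():
    """Enumerate every identity x emotion x stimulus type x ML combination."""
    return [
        {"identity": ident, "emotion": emo, "stimulus_type": stype, "morph_level": ml}
        for ident, emo, stype, ml in product(
            IDENTITIES, EMOTIONS, STIMULUS_TYPES, MORPH_LEVELS
        )
    ]


def emotional_regions(stimulus_type):
    """Return the face halves that carry the emotional expression."""
    return {
        "O": ("eyes", "mouth"),  # original face, both halves emotional
        "MEmo": ("mouth",),      # eye region neutral, mouth region emotional
        "EEmo": ("eyes",),       # eye region emotional, mouth region neutral
    }[stimulus_type]


stimuli = build_stimulus_set()
print(len(stimuli))  # 192
```

Crossing the four factors in this way reproduces the count of 8 × 2 × 3 × 4 = 192 stimuli.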

Procedure

The experiment was programmed using E-Prime 2.0 (Psychology Software Tools) and was run on a Lenovo ThinkPad Z61m laptop. Each trial began with a fixation cross displayed for 1000 ms, placed on the nose tip of the faces in the center of the screen. The pictures were then presented for 200 ms and were replaced by a question mark at the position where the fixation cross had been placed. Each trial was terminated by a button press from the participant indicating whether the face was categorized as “happy” or “angry”. The button press initiated a blank screen for 1000 ms. Participants entered their responses using “d” or “l” on a German keyboard layout. The allocation of facial expression to key was balanced across participants. The 192 faces were repeated three times and distributed across six blocks to enable five short breaks for participants. Before the actual experiment, participants performed 16 training trials with morphed pictures from the FEEST database which were not used in the experiment, and which all appeared in the original database version, i.e. the participants did not encounter the actual composite face conditions until the experiment started. Before the experiment, each participant filled out a consent form informing them about anonymity, the voluntariness of participation and how their data would be handled. The experimenter was a bilingual speaker of German and German sign language. Hearing participants were interviewed about their experience with sign languages and deaf individuals. Deaf persons completed a questionnaire about the nature of their deafness and their use of sign language.
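The trial structure implied by this procedure (192 faces × 3 repetitions, distributed across six blocks) can be illustrated with a short Python sketch. The experiment itself was run in E-Prime; the fully random trial order, the equal block sizes and the function name `build_blocks` are assumptions made for this sketch and are not taken from the original procedure.

```python
import random

N_STIMULI = 192     # number of distinct faces
N_REPETITIONS = 3   # each face is shown three times
N_BLOCKS = 6        # six blocks, separated by five short breaks


def build_blocks(n_stimuli=N_STIMULI, n_repetitions=N_REPETITIONS,
                 n_blocks=N_BLOCKS, seed=0):
    """Shuffle all trials and cut them into equally sized blocks.

    Assumption: trial order is fully random and blocks have equal length.
    Stimuli are represented by their index (0 .. n_stimuli - 1).
    """
    trials = [stim for stim in range(n_stimuli) for _ in range(n_repetitions)]
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    rng.shuffle(trials)
    block_size = len(trials) // n_blocks
    return [trials[i * block_size:(i + 1) * block_size] for i in range(n_blocks)]


blocks = build_blocks()
print(sum(len(block) for block in blocks))  # 576 trials in total
print(len(blocks[0]))                       # 96 trials per block
```

Under these assumptions, each participant completes 576 trials, i.e. 96 trials per block.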

The whole session lasted between 20 and 45 min in non-signers and about 30–60 min in signers.

The experiment conforms to the ethical principles of the declaration of Helsinki. The study was approved by the ethics committee of the medical faculty of the University of Jena (5498-04/18).

Data analysis

We analyzed accuracy, i.e. whether happy expressions were classified as “happy” and angry expressions as “angry”. To rule out speed–accuracy trade-offs, we also analyzed latencies for correct responses. Responses longer than 6000 ms following stimulus onset were excluded from individual averages (0.22%). There were no responses faster than 200 ms.
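The scoring rules described above can be summarized in a minimal Python sketch. The trial format (dictionaries with the keys `emotion`, `response` and `rt_ms`) and the function name `score_trials` are hypothetical, and the sketch assumes that the 6000 ms cutoff is applied before both accuracy and latency are averaged, which the text does not state explicitly.

```python
from statistics import mean

MAX_LATENCY_MS = 6000  # responses slower than this are excluded


def score_trials(trials):
    """Return accuracy and mean latency of correct responses for one condition.

    Each trial is a dict with the hypothetical keys 'emotion' (displayed
    expression), 'response' (chosen category) and 'rt_ms' (latency from
    stimulus onset in milliseconds).
    """
    valid = [t for t in trials if t["rt_ms"] <= MAX_LATENCY_MS]
    correct = [t for t in valid if t["response"] == t["emotion"]]
    accuracy = len(correct) / len(valid) if valid else float("nan")
    mean_rt = mean(t["rt_ms"] for t in correct) if correct else float("nan")
    return {"accuracy": accuracy, "mean_rt_correct_ms": mean_rt}


# Made-up example trials, for illustration only (not data from the study).
example = [
    {"emotion": "happy", "response": "happy", "rt_ms": 650},
    {"emotion": "happy", "response": "angry", "rt_ms": 720},
    {"emotion": "angry", "response": "angry", "rt_ms": 800},
    {"emotion": "angry", "response": "angry", "rt_ms": 7000},  # excluded
]
result = score_trials(example)
```

In the analysis, such averages would be computed per participant and per combination of EMOTION, INTENSITY and STIMULUS TYPE.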

Statistical analyses were performed using IBM SPSS Statistics 24. For accuracies, we first performed an ANOVA with repeated measures on the factors EMOTION (happy, angry), INTENSITY (ML 25%, 50%, 75%, 100%) and STIMULUS TYPE (O, MEmo, EEmo), and with the between-subject factor GROUP (signers, non-signers). To pursue significant interactions, follow-up ANOVAs including fewer factors or fewer levels within a factor, or two-sided t tests, were performed where appropriate. In case of violation of the sphericity assumption, Huynh–Feldt corrections were performed (Huynh & Feldt, 1976). For latencies, the same analyses were performed based on individual averages of correct responses separated by conditions. In line with our hypotheses, we report only the main effects of the four factors and interactions involving GROUP and EMOTION.

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Results

Accuracies

Faces with angry expressions were classified more accurately (angry: M = 0.81, SEM = 0.017) than faces with happy expressions (happy: M = 0.73, SEM = 0.014; main effect of EMOTION: F(1, 38) = 8.990, p = 0.005, η2p = 0.191). Increasing the intensity of emotional expression led to higher recognition rates (main effect of INTENSITY: F(3, 114) = 286.257, p < 0.001, η2p = 0.883). This effect was best explained as a linear effect (F = 479.079; p < 0.001; see Note 1), indicating a linear increase of accuracy with increasing intensity. There was also a main effect of STIMULUS TYPE (F(2, 76) = 256.769, p < 0.001, η2p = 0.871) with higher accuracy for the original version (O: M = 0.87, SEM = 0.01) than for the composite faces (MEmo: M = 0.80, SEM = 0.01; EEmo: M = 0.64, SEM = 0.01). There was no main effect of GROUP (F(1, 38) < 1). Most importantly, we found the predicted two-way interaction EMOTION × GROUP (F(1, 38) = 11.158; p = 0.002; η2p = 0.227) and two three-way interactions, i.e. GROUP × EMOTION × STIMULUS TYPE (F(2, 76) = 5.950, p = 0.008, η2p = 0.135) and GROUP × EMOTION × INTENSITY (F(3, 114) = 6.887, p = 0.004, η2p = 0.153).
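For readers who wish to cross-check the reported effect sizes, partial eta squared can be recovered from an F value and its degrees of freedom using the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies this identity to four of the values reported above; the function name `partial_eta_squared` is introduced here for illustration, and the computation is independent of the SPSS analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported partial eta squared)
REPORTED = [
    ("EMOTION", 8.990, 1, 38, 0.191),
    ("INTENSITY", 286.257, 3, 114, 0.883),
    ("STIMULUS TYPE", 256.769, 2, 76, 0.871),
    ("EMOTION x GROUP", 11.158, 1, 38, 0.227),
]

for label, f_value, df1, df2, reported in REPORTED:
    recomputed = partial_eta_squared(f_value, df1, df2)
    # Agreement within rounding to three decimals.
    agrees = abs(recomputed - reported) < 0.0005
    print(f"{label}: recomputed {recomputed:.3f}, reported {reported:.3f}, agrees: {agrees}")
```

The recomputed values agree with the reported ones to three decimal places.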

To explore the origin of the interactions, we performed two additional ANOVAs, one for each emotional expression.

Angry faces

Non-signers recognized angry faces better than signers at all intensity levels (ML 25–75%: all p < 0.05), except for ML 100% (p = 0.19). This was reflected in a two-way interaction of GROUP and INTENSITY (F(3, 114) = 5.290, p = 0.010, η2p = 0.122), see Fig. 3.

Happy faces

We found two two-way interactions, i.e. GROUP × INTENSITY (F(3, 114) = 4.401, p = 0.014, η2p = 0.104) and GROUP × STIMULUS TYPE (F(2, 76) = 6.194, p = 0.014, η2p = 0.140), see Fig. 3 (top panel).

The interaction of GROUP with INTENSITY arose because signers classified happy faces more accurately than hearing non-signers at all intensity levels except ML 100% (ML 25–75%: all p < 0.05; ML 100%: p > 0.05).

Regarding the interaction of GROUP with STIMULUS TYPE, signers performed better than non-signers in the condition with happy eyes and neutral mouths (EEmo; t(38) = 2.994; p = 0.005). Note that the signers were close to chance level, whereas the non-signers tended to classify this stimulus type as angry. The two other comparisons did not reach significance (p > 0.07).

Latencies

We observed a main effect of INTENSITY (F(3, 114) = 27.806, p < 0.001, η2p = 0.423) reflecting shorter latencies with increasing intensity (linear contrast: F = 37.466, p < 0.001; see Note 2). The main effect of STIMULUS TYPE (F(2, 76) = 47.081, p < 0.001, η2p = 0.553) indicated the shortest latency for the original version (M = 713 ms; SEM = 24 ms), followed by the MEmo composite faces (M = 763 ms; SEM = 29 ms) and the EEmo composite faces (M = 827 ms; SEM = 34 ms). Pairwise comparisons confirmed significant differences between all conditions (−8.427 ≤ t(39) ≤ −3.451, p < 0.004, t tests Bonferroni-corrected). Note that higher accuracies generally go along with shorter latencies and vice versa, a pattern which does not suggest a speed–accuracy trade-off. The interaction of EMOTION × GROUP (F(1, 38) = 4.348, p = 0.044, η2p = 0.103) was further qualified by an interaction of EMOTION × GROUP × INTENSITY (F(3, 114) = 2.702, p = 0.049, η2p = 0.066). To follow up on this three-way interaction, we performed two additional ANOVAs, one for each emotional expression, see Fig. 3 (lower panel).

Angry faces

An interaction of GROUP × INTENSITY (F(3, 114) = 3.513, p = 0.018, η2p = 0.085) reflected an effect of INTENSITY for deaf signers (F(3, 57) = 9.460, p < 0.001, η2p = 0.332), i.e. decreasing latencies with higher intensities. This effect was not found for the hearing non-signers (F(3, 57) = 2.476, p = 0.071, η2p = 0.115).

Happy faces

There were no effects involving the factor GROUP (see Note 3).

Discussion

Based on the emphasis on the mouth region for speechreading, we hypothesized that deaf signers should be more accurate than non-signers at recognizing happy faces, i.e. an emotional expression for which the mouth region is crucial. Regarding angry faces, for which the eye region is more important, there should be a difference between groups, but earlier studies argued for both directions. We used composite faces in which the upper or lower part was kept neutral while the other part varied in intensity of emotional expression. The results support our hypothesis for happy faces, with higher performance in deaf signers. For angry faces, they performed worse than non-signers. These effects appeared especially when the emotional expression was only subtle.

Our results add to the long-standing observation that the constant use of signs for communication alters functions not directly related to language. Face perception and the processing of emotional faces are examples. In contrast to hearing persons, deaf signers exhibit a right visual field advantage during emotion judgment in faces that was also observed for, e.g. famous faces (Letourneau & Mitchell, 2013). The lifelong focus on hands as a means of communication also elicited a left-lateralized N170 for handshapes due to their linguistic meaning (Mitchell, 2017). As an example of changes in face perception, signers outperform non-signers in face recognition. McCullough and Emmorey (1997) suggested that the underlying mechanism is enhanced processing of featural information caused by focusing on these features during communication. Our data support this hypothesis, suggesting an emphasis on the mouth region in signers, with less focus on the eye region. This significant interaction of group and stimulus type is particularly prominent for facial expressions of low intensity, i.e. when the emotional information is only subtle (see Fig. 3). At first sight, the condition with happy eyes and a neutral mouth (EEmo) appears remarkable. While non-signers classify faces in this condition as angry, deaf signers are at chance level. The reason for this response pattern in non-signers is likely grounded in the impression that smiling eyes combined with a neutral mouth might convey suspicion or disbelief, rather than genuine happiness (see Fig. 2b, Stimulus Type EEmo). Hearing participants may thus have chosen “angry” more often than “happy”. However, this does not explain why deaf signers perceived these faces as “neutral” on average, and therefore as relatively happier than non-signers did.

In principle, this could be the result of deaf signers selectively relying on the eye region more often than non-signers, such that deaf signers had the impression of a happy face more often than non-signers. Alternatively, deaf signers may have relied solely on the information in the neutral mouth region, in the sense that their processing mode was more feature-based. In that case, deaf signers would have chosen the response “neutral”, had this option been available. While it is difficult to decide between these alternatives in the absence of eye-tracking data in our study (but see Letourneau & Mitchell, 2011), we favor the second, “neutral mouth” view for three reasons. First, holistic face processing was suggested to be similar for deaf signers and hearing non-signers (de Heering et al., 2012; McCullough & Emmorey, 1997), such that both groups should be equally able to process faces as a whole, i.e. including (ambiguous) information from the upper and lower half. Nevertheless, deaf signers did not behave like non-signers and did not preferentially choose the “angry” response. Second, although signers may preferentially process or attend to information in the visual periphery (Chen, He, Chen, Jin, & Mo, 2010; Chen, Zhang, & Zhou, 2006; Dye, Baril, & Bavelier, 2007; Hauthal, Neumann, & Schweinberger, 2012; Lore & Song, 1991; Neville & Lawson, 1987a, b; Proksch & Bavelier, 2002; Sladen, Tharpe, Ashmead, Grantham, & Chun, 2005), this does not account for the overall “neutral” responses in signers, because holistic processing would evoke the impression of disbelief and suspicion as in the non-signers. Third, while signers and non-signers are equally able to spot alterations in the eye region of unfamiliar faces, signers outperform non-signers when the mouth region is altered (McCullough & Emmorey, 1997).

This suggests that face perception in deaf signers is most likely characterized by a preferential processing of the mouth region and/or enhanced sensitivity to mouth changes due to substantial perceptual expertise with lip-reading. The notion of preferential processing implies that emotion perception in signers is largely driven by a selective (feature-based) processing of the mouth region, i.e. enhanced sensitivity for this region. In models of face processing, a face is represented within a multi-dimensional space in the center of which a prototype or average exemplar face is located (Valentine, 1991). According to such models, the template prototype or exemplar face in signers would have a more detailed mouth representation due to ample perceptual experience and relevance. It is an intriguing hypothesis that expertise in a specific language changes processing mechanisms that, at first sight, do not appear to be directly related to language.

How quickly does the use of signs for communication come to influence the processing of emotional faces? While there are currently no studies that monitor this development over time, there is some evidence that persons who formally learn ASL (with between 10 months and 5 years of experience) outperform non-signers in recognizing expressions from video clips (Goldstein & Feldman, 1996). This was most pronounced for disgust and anger, but not for happiness, sadness and fear–surprise. The authors assume that there was a ceiling effect for happiness in their study. Regarding the negative emotions, Goldstein and Feldman (1996) do not offer an explanation, but assume that the “nature or content of ASL somehow differentially affects decoding of specific emotions. Perhaps the signs involving the communication of particular emotions vary in certain ways that make signers more attuned to some emotional displays than others” (p. 119). We agree with this assumption and suggest that future studies compare static and dynamic stimuli displaying various emotional expressions.

Future research should also address what is driving the effects reported by us and others. As outlined in the introduction, speechreading is quite common and not restricted to deaf signers. As it also has been found in deaf non-signers (Bernstein et al., 2000), the present data alone do not allow us to determine whether the effects depend on sign usage for communication, or on deafness per se. Of relevance, a recent study (Sidera, Amado, & Martinez, 2017) explored facial emotion recognition (matching emotion labels to drawings of emotional faces) in deaf children using hearing aids. Their capacity to recognize emotions was delayed for some emotions (“fear”, “surprise”, “disgust”), but not for “happiness”, “anger” and “sadness”. The ability to recognize emotions also was related to linguistic skills, but not to the degree of deafness (Dyck & Denver, 2003; Ludlow, Heaton, Rosset, Hills, & Deruelle, 2010), which could argue for sign usage as the likely factor underlying the present effects. Nevertheless, the degree to which changes in emotion recognition are influenced by sign usage or by deafness per se clearly warrants further investigation, particularly in adults. In a similar vein, the registration of eye gaze patterns might provide fruitful insights in contrasting deaf signers using a sign language, deaf signers using SSS and hearing signers. Although we have no indication in our deaf group that individuals using solely SSS differ from those using SSS and German sign language, it is possible that the gaze pattern while inspecting faces differs within individuals depending on the way of communication, using either DGS/ASL or SSS.

Similar to the issue of how the linguistic background or mode of communication influences perceptual and gaze patterns, there is also the issue of culture. While, as reported, a whole body of evidence exists that deaf signers attend to and fixate on the mouth region or bottom part of the face more than the upper part, one study showed the inverse effect: Watanabe, Matsuda, Nishioka, & Namatame (2011) reported more and longer fixations on the eyes than the nose in Japanese deaf signers compared to hearing controls. The authors attribute this discrepancy with the literature to cultural factors, because in many Asian cultures it is regarded as rude to look directly into the eyes. As such, while our study confirms earlier studies on deaf signers using sign languages such as ASL, it will be fascinating to follow this up in different cultures exhibiting various gaze patterns for social communication.

Taken together, the perception of faces and, as shown here, of faces with emotional expressions is altered in deaf signers. The study by Goldstein and Feldman (1996) suggests that this does not only happen in childhood, but also later and in a relatively short amount of time. Remarkably, learning to communicate via signs does not only affect perceptual processes, but also those responsible for the expression of emotions. Persons who learn ASL are more adept at posing facial emotional expressions than non-signers (Goldstein, Sexton, & Feldman, 2000). It will be a fascinating endeavor for future research to find out how fast the perceptual system adapts to the requirements of language and how the interplay of perception and expression develops.

Notes

There was also a smaller but significant quadratic trend (F = 115.144; p < 0.001) and cubic trend (F = 4.398; p = 0.043).

There was also a smaller but significant quadratic trend (F = 13.506; p = 0.001).

We performed two additional ANOVAs on accuracies and latencies, comparing two sub-groups of deaf signers, i.e. those using DGS and SSS (N = 14) with those using SSS only (N = 6). For accuracies, the only significant effect involving the factor GROUP was an interaction of STIMULUS TYPE × INTENSITY × GROUP (F(6, 108) = 2.579, p = 0.026, η2p = 0.125). Running the ANOVA for each group separately revealed a significant interaction of STIMULUS TYPE × INTENSITY in both groups (F(6, 78) = 3.617, p = 0.007, η2p = 0.218 for the signers using DGS and SSS; F(6, 30) = 10.154, p < 0.001, η2p = 0.670 for the signers using SSS only). With increasing intensity, both groups displayed the effect of STIMULUS TYPE with the highest accuracy for O stimuli, followed by MEmo and EEmo. However, in the group using DGS and SSS the effect of STIMULUS TYPE appeared already at the lowest morphing intensity (F(2, 26) = 9.560, p = 0.001, η2p = 0.424), while this was not the case in participants using SSS only (F(2, 10) = 1.258, p = 0.326, η2p = 0.201). For latencies, there were no effects involving GROUP.

References

Andersson, U., Lyxell, B., Ronnberg, J., & Spens, K. E. (2001). Cognitive correlates of visual speech understanding in hearing-impaired individuals. Journal of Deaf Studies and Deaf Education, 6(2), 103–116. https://doi.org/10.1093/deafed/6.2.103.

Beaudry, O., Roy-Charland, A., Perron, M., Cormier, I., & Tapp, R. (2014). Featural processing in recognition of emotional facial expressions. Cognition and Emotion, 28(3), 416–432. https://doi.org/10.1080/02699931.2013.833500.

Bellugi, U., O’Grady, L., Lillo-Martin, D., Hynes, M. G., van Hoek, K., & Corina, D. (1990). Enhancement of spatial cognition in deaf children. In V. Volterra & C. J. Erting (Eds.), From gesture to language in hearing and deaf children (pp. 278–298). Berlin: Springer.

Benton, A. L. (1983). Facial recognition: Stimulus and multiple choice pictures; Contributions to neuropsychological assessment. Oxford: Oxford University Press.

Bernstein, L. E., Tucker, P. E., & Demorest, M. E. (2000). Speech perception without hearing. Perception and Psychophysics,62(2), 233–252. https://doi.org/10.3758/bf03205546.

Bettger, J. (1992). The effects of experience on spatial cognition: Deafness and knowledge of ASL. Unpublished dissertation. Urbana-Champaign: University of Illinois.

Bettger, J. G., Emmorey, K., McCullough, S. H., & Bellugi, U. (1997). Enhanced facial discrimination: Effects of experience with American sign language. Journal of Deaf Studies and Deaf Education, 2(4), 223–233. https://doi.org/10.1093/oxfordjournals.deafed.a014328.

Calvo, M. G., & Nummenmaa, L. (2008). Detection of emotional faces: salient physical features guide effective visual search. Journal of Experimental Psychology: General, 137(3), 471–494. https://doi.org/10.1037/a0012771.

Campbell, R., Brooks, B., Haan, E. D., & Roberts, T. (1996). Dissociating face processing skills: Decisions about lip read speech, expression, and identity. The Quarterly Journal of Experimental Psychology A, 49(2), 295–314. https://doi.org/10.1080/027249896392649.

Chen, Q., He, G., Chen, K., Jin, Z., & Mo, L. (2010). Altered spatial distribution of visual attention in near and far space after early deafness. Neuropsychologia, 48(9), 2693–2698. https://doi.org/10.1016/j.neuropsychologia.2010.05.016.

Chen, Q., Zhang, M., & Zhou, X. (2006). Effects of spatial distribution of attention during inhibition of return (IOR) on flanker interference in hearing and congenitally deaf people. Brain Research, 1109(1), 117–127. https://doi.org/10.1016/j.brainres.2006.06.043.

de Heering, A., Aljuhanay, A., Rossion, B., & Pascalis, O. (2012). Early deafness increases the face inversion effect but does not modulate the composite face effect. Frontiers in Psychology, 3, 124. https://doi.org/10.3389/fpsyg.2012.00124.

Dobel, C., Enriquez-Geppert, S., Hummert, M., Zwitserlood, P., & Bolte, J. (2011). Conceptual representation of actions in sign language. Journal of Deaf Studies and Deaf Education, 16(3), 392–400. https://doi.org/10.1093/deafed/enq070.

Dyck, M. J., & Denver, E. (2003). Can the emotion recognition ability of deaf children be enhanced? A pilot study. Journal of Deaf Studies and Deaf Education, 8(3), 348–356. https://doi.org/10.1093/deafed/eng019.

Dye, M. W., Baril, D. E., & Bavelier, D. (2007). Which aspects of visual attention are changed by deafness? The case of the Attentional Network Test. Neuropsychologia, 45(8), 1801–1811. https://doi.org/10.1016/j.neuropsychologia.2006.12.019.

Ekman, P., & Friesen, W. V. (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17(2), 124–129. https://doi.org/10.1037/h0030377.

Ekman, P., & Friesen, W. V. (1986). A new pan-cultural facial expression of emotion. Motivation and Emotion, 10(2), 159–168.

Emmorey, K. (2001). Language, cognition, and the brain: Insights from sign language research. London: Psychology Press.

Emmorey, K., Kosslyn, S. M., & Bellugi, U. (1993). Visual imagery and visual-spatial language: Enhanced imagery abilities in deaf and hearing ASL signers. Cognition, 46(2), 139–181.

Emmorey, K., Thompson, R., & Colvin, R. (2008). Eye gaze during comprehension of American Sign Language by native and beginning signers. Journal of Deaf Studies and Deaf Education, 14(2), 237–243.

Emmorey, K., & Tversky, B. (2002). Spatial perspective choice in ASL. Sign Language and Linguistics, 5(1), 3–26. https://doi.org/10.1075/sll.5.1.03emm.

Goldstein, N. E., & Feldman, R. S. (1996). Knowledge of American sign language and the ability of hearing individuals to decode facial expressions of emotion. Journal of Nonverbal Behavior, 20(2), 111–122. https://doi.org/10.1007/bf02253072.

Goldstein, N. E., Sexton, J., & Feldman, R. S. (2000). Encoding of facial expressions of emotion and knowledge of American sign language. Journal of Applied Social Psychology, 30(1), 67–76. https://doi.org/10.1111/j.1559-1816.2000.tb02305.x.

Gosselin, P., & Kirouac, G. (1995). Le décodage de prototypes émotionnels faciaux. Canadian Journal of Experimental Psychology/Revue canadienne de psychologie expérimentale, 49(3), 313–329. https://doi.org/10.1037/1196-1961.49.3.313.

Hauthal, N., Neumann, M. F., & Schweinberger, S. R. (2012). Attentional spread in deaf and hearing participants: face and object distractor processing under perceptual load. Attention, Perception and Psychophysics, 74(6), 1312–1320. https://doi.org/10.3758/s13414-012-0320-1.

He, H., Xu, B., & Tanaka, J. (2016). Investigating the face inversion effect in a deaf population using the Dimensions Tasks. Visual Cognition, 24(3), 201–211.

Huynh, H., & Feldt, L. S. (1976). Estimation of the Box correction for degrees of freedom from sample data in randomized block and split-plot designs. Journal of Educational Statistics, 1(1), 69–82.

Izard, C. E. (1994). Innate and universal facial expressions: Evidence from developmental and cross-cultural research. Psychological Bulletin, 115(2), 288–299. https://doi.org/10.1037/0033-2909.115.2.288.

Letourneau, S. M., & Mitchell, T. V. (2011). Gaze patterns during identity and emotion judgments in hearing adults and deaf users of American Sign Language. Perception, 40(5), 563–575. https://doi.org/10.1068/p6858.

Letourneau, S. M., & Mitchell, T. V. (2013). Visual field bias in hearing and deaf adults during judgments of facial expression and identity. Frontiers in Psychology, 4, 319. https://doi.org/10.3389/fpsyg.2013.00319.

Levinson, S. C. (2003). Space in language and cognition: Explorations in cognitive diversity. Cambridge: Cambridge University Press.

Lore, W. H., & Song, S. (1991). Central and peripheral visual processing in hearing and nonhearing individuals. Bulletin of the Psychonomic Society, 29(5), 437–440.

Ludlow, A., Heaton, P., Rosset, D., Hills, P., & Deruelle, C. (2010). Emotion recognition in children with profound and severe deafness: Do they have a deficit in perceptual processing? Journal of Clinical and Experimental Neuropsychology, 32(9), 923–928. https://doi.org/10.1080/13803391003596447.

Marassa, L. K., & Lansing, C. R. (1995). Visual word recognition in two facial motion conditions: Full-face versus lips-plus-mandible. Journal of Speech Language and Hearing Research, 38(6), 1387–1394. https://doi.org/10.1044/jshr.3806.1387.

Mastrantuono, E., Saldana, D., & Rodriguez-Ortiz, I. R. (2017). An eye tracking study on the perception and comprehension of unimodal and bimodal linguistic inputs by deaf adolescents. Frontiers in Psychology, 8, 1044. https://doi.org/10.3389/fpsyg.2017.01044.

Maurer, D., Le Grand, R., & Mondloch, C. J. (2002). The many faces of configural processing. Trends in Cognitive Sciences, 6(6), 255–260.

McCullough, S., & Emmorey, K. (1997). Face processing by deaf ASL signers: Evidence for expertise in distinguishing local features. Journal of Deaf Studies and Deaf Education, 2(4), 212–222. https://doi.org/10.1093/oxfordjournals.deafed.a014327.

McCullough, S., & Emmorey, K. (2009). Categorical perception of affective and linguistic facial expressions. Cognition, 110(2), 208–221. https://doi.org/10.1016/j.cognition.2008.11.007.

Mitchell, T. V. (2017). Category selectivity of the N170 and the role of expertise in deaf signers. Hearing Research, 343, 150–161.

Mitchell, T. V., Letourneau, S. M., & Maslin, M. C. (2013). Behavioral and neural evidence of increased attention to the bottom half of the face in deaf signers. Restorative Neurology and Neuroscience, 31(2), 125–139. https://doi.org/10.3233/rnn-120233.

Neville, H. J., & Lawson, D. (1987a). Attention to central and peripheral visual space in a movement detection task: An event-related potential and behavioral study. I. Normal hearing adults. Brain Research, 405(2), 253–267.

Neville, H. J., & Lawson, D. (1987b). Attention to central and peripheral visual space in a movement detection task: an event-related potential and behavioral study. II. Congenitally deaf adults. Brain Research, 405(2), 268–283.

Parasnis, I., Samar, V. J., Bettger, J. G., & Sathe, K. (1996). Does deafness lead to enhancement of visual spatial cognition in children? Negative evidence from deaf nonsigners. The Journal of Deaf Studies and Deaf Education, 1(2), 145–152.

Proksch, J., & Bavelier, D. (2002). Changes in the spatial distribution of visual attention after early deafness. Journal of Cognitive Neuroscience, 14(5), 687–701. https://doi.org/10.1162/08989290260138591.

Rosenblum, L. D. (2008). Speech perception as a multimodal phenomenon. Current Directions in Psychological Science, 17(6), 405–409. https://doi.org/10.1111/j.1467-8721.2008.00615.x.

Schiff, W. (1973). Social-event perception and stimulus pooling in deaf and hearing observers. The American Journal of Psychology, 86(1), 61–78. https://doi.org/10.2307/1421848.

Schweinberger, S. R., & Soukup, G. R. (1998). Asymmetric relationships among perceptions of facial identity, emotion, and facial speech. Journal of Experimental Psychology: Human Perception and Performance, 24(6), 1748–1765. https://doi.org/10.1037/0096-1523.24.6.1748.

Sidera, F., Amado, A., & Martinez, L. (2017). Influences on Facial Emotion Recognition in Deaf Children. Journal of Deaf Studies and Deaf Education, 22(2), 164–177. https://doi.org/10.1093/deafed/enw072.

Sladen, D. P., Tharpe, A. M., Ashmead, D. H., Grantham, D. W., & Chun, M. M. (2005). Visual attention in deaf and normal hearing adults. Journal of Speech Language and Hearing Research, 48(6), 1529–1537. https://doi.org/10.1044/1092-4388(2005/106).

Stoll, C., Palluel-Germain, R., Caldara, R., Lao, J., Dye, M. W., Aptel, F., & Pascalis, O. (2017). Face recognition is shaped by the use of sign language. The Journal of Deaf Studies and Deaf Education, 23(1), 62–70.

Tracy, J. L., & Robins, R. W. (2008). The automaticity of emotion recognition. Emotion, 8(1), 81–95. https://doi.org/10.1037/1528-3542.8.1.81.

Valentine, T. (1991). A unified account of the effects of distinctiveness, inversion, and race in face recognition. The Quarterly Journal of Experimental Psychology Section A, 43(2), 161–204.

Vassallo, S., Cooper, S. L., & Douglas, J. M. (2009). Visual scanning in the recognition of facial affect: is there an observer sex difference? Journal of Vision, 9(3), 1–10. https://doi.org/10.1167/9.3.11.

Watanabe, K., Matsuda, T., Nishioka, T., & Namatame, M. (2011). Eye gaze during observation of static faces in deaf people. PLoS One, 6(2), e16919.

Weisel, A. (1985). Deafness and perception of nonverbal expression of emotion. Perceptual and Motor Skills, 61(2), 515–522. https://doi.org/10.2466/pms.1985.61.2.515.

Whorf, B. L. (2012). Language, thought, and reality. In J. B. Carroll, S. C. Levinson, & P. Lee (Eds.), Selected writings of Benjamin Lee Whorf (2nd ed.). Cambridge, Massachusetts: MIT Press.

Young, A., Perrett, D., Calder, A., Sprengelmeyer, R., & Ekman, P. (2002). Facial expressions of emotion: Stimuli and tests (FEEST). Bury St. Edmunds: Thames Valley Test Company.

Acknowledgements

We want to cordially thank both the deaf and hearing participants for their willingness to take part in our study. We are grateful to Christine Wulf for technical assistance.

Author information

Contributions

CD and RZ designed the study, analyzed the data, and wrote the paper. BNK designed the study and collected and analyzed the data. SRS and OGL wrote the paper.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

About this article

Cite this article

Dobel, C., Nestler-Collatz, B., Guntinas-Lichius, O. et al. Deaf signers outperform hearing non-signers in recognizing happy facial expressions. Psychological Research 84, 1485–1494 (2020). https://doi.org/10.1007/s00426-019-01160-y

DOI: https://doi.org/10.1007/s00426-019-01160-y