Abstract

The present study investigated modality-specific differences in the processing of temporal information in the subsecond range. For this purpose, participants performed auditory and visual versions of a rhythm perception task and three different duration discrimination tasks to allow for a direct, systematic comparison across both sensory modalities. Our findings clearly indicate higher temporal sensitivity in the auditory than in the visual domain, irrespective of the type of timing task. To further evaluate whether there is evidence for a common modality-independent timing mechanism or for multiple modality-specific mechanisms, we used structural equation modeling to test three different theoretical models. Neither a single modality-independent timing mechanism nor two independent modality-specific timing mechanisms fitted the empirical data. Rather, the data are well described by a hierarchical model with modality-specific visual and auditory temporal processing at the first level and a modality-independent processing system at the second level of the hierarchy.



Introduction

Research on modality-specific differences in temporal processing suggests better temporal resolution for the auditory than for the visual sensory system. Higher temporal sensitivity of the auditory modality has been established for different elementary temporal experiences in the millisecond range, such as simultaneity/successiveness, perceived duration, and duration discrimination (for concise reviews see Fraisse, 1985; Pöppel, 1978; Rammsayer, 1992; van Wassenhove, 2009).

Investigations of simultaneity/successiveness are concerned with the size of the temporal interval between two events that is required for them to be perceived as two separate events (successiveness) rather than fused as one event (simultaneity; Fraisse, 1985; Rammsayer, 1992). Work on simultaneity and successiveness reveals much lower fusion thresholds for the auditory than for the visual modality (cf., Li, Huang, Wu, Qi, & Schneider, 2009; Rammsayer, 1989, 1994; Rammsayer & Brandler, 2002).

The concept of perceived duration refers to the subjectively perceived duration of a stimulus interval, independently of its objective duration. Numerous studies have consistently demonstrated effects of sensory modality on perceived duration, indicating that auditory stimuli are perceived as longer than visual stimuli of the same physical duration (Ortega, Lopez, & Church, 2009; Penney, 2003; Penney, Gibbon, & Meck, 2000; Penney, & Tourret, 2005; Ulrich, Nitschke, & Rammsayer, 2006; Walker, & Scott, 1981; Wearden, Edwards, Fakhri, & Percival, 1998).

Unlike perceived duration, duration discrimination refers to the ability to discriminate the smallest possible differences in duration between two stimulus intervals. Temporal sensitivity, as reflected by performance on duration discrimination, appears to rely, at least partially, on mechanisms different from those underlying perceived duration (Grondin, & Rammsayer, 2003; Rammsayer, 2010). In contrast to the large number of studies on modality-specific effects on perceived duration, systematic investigations of the effects of sensory modality on duration discrimination are extremely scarce. The available data suggest better discrimination of auditorily than of visually presented intervals (Grondin, 1993; Grondin, Meilleur-Wells, Ouellette, & Macar, 1998; Ulrich et al., 2006), indicating higher temporal sensitivity in the auditory than in the visual sensory mode.

Two types of stimulus intervals are commonly used in duration discrimination tasks: filled intervals and empty intervals. In filled intervals, the onset and offset of a continuous signal serve as markers, whereas an empty interval is a silent duration marked by an onset and an offset signal, with no stimulus presented during the interval itself. Experimental evidence suggests that performance in duration discrimination is affected by stimulus type: filled auditory intervals (continuous tones) were discriminated more accurately than empty intervals (with onset and offset marked by clicks) at a 50-ms base duration (Rammsayer, 2010; Rammsayer, & Lima, 1991). No such performance differences were found for longer intervals.

Rhythm perception represents another elementary temporal experience. It refers to the subjective grouping of objectively separate events (Demany, McKenzie, & Vurpillot, 1977) or to discrimination processes in serial temporal patterns (ten Hoopen et al., 1995). In a typical rhythm perception task, participants are presented with a click pattern devoid of any pitch, timbre, or dynamic variations to avoid possible confounding influences on perceived rhythm, and their task is to detect a deviation from regular, periodic click-to-click intervals. To the best of our knowledge, only a few studies have directly compared performance on auditory and visual rhythm perception. While Collier and Logan (2000) reported reliably better performance in the auditory than in the visual modality, the results of a more recent study (Jokiniemi, Raisamo, Lylykangas, & Surakka, 2008) pointed in the same direction but failed to reach statistical significance. Additional indirect evidence for better rhythm perception in the auditory than in the visual modality comes from two studies using a stop-reaction time task with auditory and visual stimuli (Penney, 2004; Rousseau, & Rousseau, 1996).

The main goal of the present study was to further elucidate modality-specific differences in rhythm perception and duration discrimination in the range of milliseconds. For this purpose, a rhythm perception task and three different duration discrimination tasks (discrimination of filled intervals, discrimination of empty intervals, and temporal generalization) were employed in the present experiment. Identical versions of each of the four tasks were presented in the auditory and in the visual modality to allow for a direct, systematic comparison of temporal sensitivity across both sensory modalities.

Performance on duration discrimination may be influenced by various factors such as type of interval and the psychophysical procedure applied. Inconsistent findings as a function of type of interval prompted Craig (1973) to put forward the idea of different timing mechanisms required for the processing of filled and empty intervals, although he did not specify these mechanisms. Proceeding from these considerations, both filled and empty intervals were used in the present study to investigate potential differences in duration discrimination with auditory and visual stimuli.

There is converging evidence that the psychophysical procedure used to quantify performance on duration discrimination may also influence the results. One of the most common tasks in time psychophysics is temporal discrimination based on the reminder task paradigm (cf., Lapid, Ulrich, & Rammsayer, 2008; Macmillan, & Creelman, 2005). In this type of task, the standard interval is always presented first, followed by the comparison interval, and the participant indicates whether the first or the second interval appeared longer. Another type of duration discrimination task is the temporal generalization task. Unlike temporal discrimination, temporal generalization relies not only on genuine timing processes but also on additional long-term memory processes (McCormack, Brown, Maylor, Richardson, & Darby, 2002). This is because, in this type of task, participants are instructed to memorize a reference duration during a preexposure phase and are then required to judge whether or not each duration presented during the test phase is the same as the reference duration. There is some evidence that performance on temporal generalization is better in the auditory than in the visual modality (Klapproth, 2002). Furthermore, visual judgments were shown to be more variable than auditory ones (Wearden et al., 1998). To further elucidate modality-specific differences in temporal generalization, an auditory and a visual temporal generalization task were included in the present experiment.

Besides a direct comparison of performance on the four psychophysical timing tasks as a function of sensory modality, another goal of the present study was to determine whether auditory and visual processing of temporal information rely on a common, modality-independent timing mechanism or on two modality-specific timing mechanisms. Duration discrimination is often explained by the general assumption of a hypothetical internal clock based on neural counting (Allan, & Kristofferson, 1974; Creelman, 1962; Rammsayer, & Ulrich, 2001; Treisman, 1963). The main components of such an internal clock are a pacemaker and an accumulator. The pacemaker emits pulses, and the accumulator records the number of pulses emitted during a physical time interval. Thus, the number of pulses counted during a given interval is the internal temporal representation of that interval. The higher the clock rate, the finer the temporal resolution of the internal clock, which corresponds to higher temporal sensitivity as indicated by better performance on duration discrimination and rhythm perception. Some neurophysiological evidence supports the notion of such a common timing mechanism underlying visual and auditory temporal processing. In a recent fMRI study, Shih, Kuo, Yeh, Tzen, and Hsieh (2009) identified the supplementary motor area and the basal ganglia as common neural substrates involved in the temporal processing of both auditory and visual intervals in the subsecond range.
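
The counting principle can be illustrated with a small simulation. The sketch below is an illustration under stated assumptions, not a model fitted in the present study: it assumes a pacemaker whose pulses form a Poisson process, an accumulator that counts pulses without error, and hypothetical clock rates of 0.2 and 1.0 pulses per ms. The 100-ms standard and 120-ms comparison are likewise chosen only for illustration, and the interval with more counted pulses is judged longer.

```python
import random


def count_pulses(duration_ms, rate_per_ms, rng):
    """Count pulses of a hypothetical Poisson pacemaker within one interval.

    Inter-pulse times are exponential with mean 1 / rate_per_ms, so the
    count is Poisson-distributed with mean rate_per_ms * duration_ms.
    """
    n = 0
    t = rng.expovariate(rate_per_ms)
    while t <= duration_ms:
        n += 1
        t += rng.expovariate(rate_per_ms)
    return n


def proportion_correct(standard_ms, comparison_ms, rate_per_ms,
                       n_trials=2000, seed=1):
    """Simulated proportion of trials on which the longer comparison is chosen.

    The accumulator judges the interval with more pulses as longer;
    equal counts are resolved by guessing (scored as 0.5).
    """
    rng = random.Random(seed)
    score = 0.0
    for _ in range(n_trials):
        s = count_pulses(standard_ms, rate_per_ms, rng)
        c = count_pulses(comparison_ms, rate_per_ms, rng)
        if c > s:
            score += 1.0
        elif c == s:
            score += 0.5
    return score / n_trials


# Hypothetical clock rates: a slower and a faster pacemaker.
slow = proportion_correct(100, 120, rate_per_ms=0.2)
fast = proportion_correct(100, 120, rate_per_ms=1.0)
print(f"slow clock: {slow:.3f}, fast clock: {fast:.3f}")
```

Because a Poisson count has a standard deviation equal to the square root of its mean, its relative variability shrinks as the clock rate increases, so the faster hypothetical pacemaker discriminates the two intervals more reliably.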

Other psychophysiological and psychophysical findings, however, seriously challenge the notion of a common modality-independent timing mechanism and instead suggest two distinct modality-specific timing mechanisms. For example, an electrophysiological study (Chen, Huang, Luo, Peng, & Liu, 2010) revealed differences between auditory and visual duration-dependent mismatch negativity under attended and unattended conditions. Based on this observation, the authors concluded that auditory temporal information is processed automatically, whereas processing of visual temporal information draws on additional attentional resources. Furthermore, Lapid, Ulrich, and Rammsayer (2009) examined whether perceptual learning transfers from the auditory to the visual modality, that is, whether training on an auditory duration discrimination task facilitates the discrimination of visual durations. No such cross-modal training effect was found. These latter findings favor the idea of two distinct modality-specific mechanisms for processing auditorily and visually presented temporal intervals rather than a general, modality-independent timing mechanism.

In view of the few existing and rather ambiguous data, a major goal of the present study was to investigate the relation between auditory and visual temporal information processing using structural equation modeling (SEM). Even if differences in mean performance on auditory and visual timing tasks were confirmed, such differences alone would not show whether the same or different timing mechanisms are involved; additional analyses of variances and covariances by means of SEM can address this question. To this end, three basic models of auditory and visual temporal information processing were tested.

If it is supposed that the same mechanism underlies visual and auditory temporal information processing, one latent variable, referring to a general, modality-independent timing mechanism, should be able to explain the pattern of correlations between the eight timing tasks. This assumption is expressed in Model 1 (Fig. 1a).

Proceeding from the above-mentioned findings that support the idea of two distinct, modality-specific timing mechanisms, Model 2 (see Fig. 1b) assumes that two independent latent variables account better for the pattern of correlations between the visual and auditory timing tasks than a general, modality-independent timing mechanism as suggested by Model 1. To test this hypothesis, we derived one latent variable, referred to as “visual temporal processing”, from the four visual timing tasks and another latent variable, referred to as “auditory temporal processing”, from the four auditory timing tasks. If these two latent variables reflect two largely independent timing mechanisms, the correlation between them is expected to be zero.

Finally, a third theoretical model endorses the notion of a hierarchical structure of modality-specific and modality-independent levels of information processing (Fig. 1c). This model assumes modality-specific visual and auditory processing of temporal information at a first level of processing, which, however, is controlled by a superordinate, modality-independent mechanism.
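
The three models differ mainly in what they imply for the correlation between an auditory and a visual task. The sketch below computes this model-implied correlation for one pair of tasks. All loadings (LOAD_AUDITORY_TASK, LOAD_VISUAL_TASK, gamma_a, gamma_v) are hypothetical standardized values, not estimates from the present data, and correlated residuals between tasks of the same type are ignored. Within a modality, each model implies the product of the two task loadings; the models are distinguished by how they treat correlations across modalities.

```python
# Hypothetical standardized loadings chosen only for illustration;
# they are not estimates from the present data.
LOAD_AUDITORY_TASK = 0.7
LOAD_VISUAL_TASK = 0.6


def implied_cross_modal_r(model, load_a, load_v, gamma_a=0.8, gamma_v=0.8):
    """Model-implied correlation between one auditory and one visual task.

    model 1: both tasks load on a single general timing factor.
    model 2: tasks load on uncorrelated auditory and visual factors.
    model 3: the modality factors load (gamma_a, gamma_v) on a second-order
             factor; all factor variances are standardized to 1.
    Correlated residuals (e.g., auditory with visual TG) are ignored here.
    """
    if model == 1:
        return load_a * load_v
    if model == 2:
        return 0.0
    if model == 3:
        return load_a * gamma_a * gamma_v * load_v
    raise ValueError("model must be 1, 2, or 3")


for m in (1, 2, 3):
    r = implied_cross_modal_r(m, LOAD_AUDITORY_TASK, LOAD_VISUAL_TASK)
    print(f"Model {m}: implied cross-modal r = {r:.3f}")
```

Under these illustrative values, Model 1 forces cross-modal correlations to be as large as a single factor predicts, Model 2 forces them to zero, and Model 3 lets them take intermediate values scaled by the second-order loadings.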

Method

Participants

Participants were 60 male and 54 female volunteers ranging in age from 18 to 30 years (M = 22.9 years, SD = 3.3 years). All participants had normal hearing and normal or corrected-to-normal vision. The study was approved by the local ethics committee.

Psychophysical timing tasks

Apparatus and stimuli

For stimulus presentation and response recording on all tasks, E-Prime Version 2.0 experimental software (Psychology Software Tools Inc., Pittsburgh, PA, USA) was used. Auditory stimuli were white-noise bursts presented binaurally through headphones (Sony CD 450) at an average intensity of 67 dB. Visual stimuli were generated by a red LED (diameter .38°, viewing distance 60 cm, luminance 68 cd/m²) positioned at the participant's eye level. The intensity of the LED was clearly above threshold but not dazzling.

Auditory and visual temporal discrimination of filled intervals (DDF)

The temporal discrimination task comprised two blocks, one block of visual and one block of auditory stimuli. Each block consisted of 64 trials, and each trial consisted of one standard interval and one comparison interval. The duration of the comparison interval varied according to an adaptive rule (Kaernbach, 1991) to estimate x.25 and x.75 of the individual psychometric function; that is, the two comparison intervals at which the response “longer” was given with a probability of .25 and .75, respectively.

For both the auditory and the visual task, the standard interval was 100 ms, and the initial durations of the comparison interval were 35 ms below and above the standard interval for x.25 and x.75, respectively. To estimate x.25, the duration of the comparison interval was increased by 5 ms on Trials 1–5 if the participant had judged the standard interval to be longer and decreased by 15 ms if the participant had judged the comparison interval to be longer. For Trials 6–32, the comparison interval was increased by 3 ms and decreased by 9 ms, respectively. The opposite step sizes were employed for x.75. In each experimental block, one series of 32 trials converging on x.75 and one series of 32 trials converging on x.25 were presented. Within each series, the order of presentation was fixed, with the standard interval always presented first. Trials from both series were randomly interleaved within a block.
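
The step sizes follow the weighted up-down logic of Kaernbach (1991): a series is stationary where the expected upward and downward movements cancel, i.e., where P("longer") × down step = (1 − P("longer")) × up step, so that P("longer") = up/(up + down). Steps of +5/−15 and +3/−9 therefore target .25, and the reversed steps target .75. The simulation below is a sketch under assumptions: it uses a hypothetical observer whose probability of judging the comparison longer is a cumulative Gaussian centered on the standard (SD of 15 ms, chosen only for illustration), and it treats the two interleaved series as independent.

```python
import random
from statistics import NormalDist, mean

STANDARD_MS = 100.0
SIGMA_MS = 15.0  # hypothetical observer noise, for illustration only
OBSERVER = NormalDist(STANDARD_MS, SIGMA_MS)


def run_series(target, rng, n_trials=32):
    """Simulate one weighted up-down series and return the presented comparisons.

    target=0.25: +5 ms after "standard longer", -15 ms after "comparison longer"
    (+3/-9 from trial 6 on); target=0.75 uses the opposite step sizes.
    """
    comparison = STANDARD_MS - 35 if target == 0.25 else STANDARD_MS + 35
    presented = []
    for trial in range(1, n_trials + 1):
        presented.append(comparison)
        small, large = (5, 15) if trial <= 5 else (3, 9)
        up, down = (small, large) if target == 0.25 else (large, small)
        # Hypothetical observer: P("comparison longer") is a cumulative Gaussian.
        if rng.random() < OBSERVER.cdf(comparison):
            comparison -= down
        else:
            comparison += up
    return presented


def equilibrium(up, down):
    """P("comparison longer") at which expected up and down movements cancel."""
    return up / (up + down)


rng = random.Random(7)
# Average the last 20 presented comparisons over many simulated series.
est_25 = mean(mean(run_series(0.25, rng)[-20:]) for _ in range(500))
est_75 = mean(mean(run_series(0.75, rng)[-20:]) for _ in range(500))
print(f"X.25 ~ {est_25:.1f} ms, X.75 ~ {est_75:.1f} ms")
```

For this hypothetical observer, the true quartiles lie near 89.9 and 110.1 ms, and the simulated estimates fall close to them.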

Each participant was seated at a table with a keyboard and a computer monitor. To initiate a trial, the participant pressed the space bar; stimulus presentation began 900 ms later. The two intervals were separated by an interstimulus interval of 900 ms. The participant’s task was to decide which of the two intervals was longer and to indicate this decision by pressing one of two designated keys on the computer keyboard, labeled “First interval longer” and “Second interval longer”. The instructions emphasized accuracy; there was no requirement to respond quickly. After each response, visual feedback (“+” for correct, “−” for incorrect) was displayed on the computer screen for 1,500 ms. The next trial started 900 ms after the feedback.

As a measure of performance, the mean difference between standard and comparison intervals was computed over the last 20 trials of each series. This yielded estimates of the 25 and 75% difference thresholds relative to the 100-ms standard interval for the auditory and the visual task, respectively. In a second step, half the interquartile range [(75% threshold value − 25% threshold value)/2], which represents the difference limen (DL; Luce, & Galanter, 1963), was determined for both temporal discrimination tasks. With this psychophysical measure, smaller values indicate better performance on duration discrimination.
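
The computation of the difference limen can be written out as follows. The sketch assumes that each series is stored as the list of comparison durations presented on its 32 trials; the function name and the two example series are hypothetical, not participant data.

```python
from statistics import mean


def difference_limen(series_25, series_75, standard_ms=100.0, last_n=20):
    """Half the interquartile range of the psychometric function.

    Each series holds the comparison durations (ms) presented in one
    adaptive series; each threshold is the mean deviation of the last
    `last_n` comparisons from the standard.
    """
    t25 = mean(c - standard_ms for c in series_25[-last_n:])
    t75 = mean(c - standard_ms for c in series_75[-last_n:])
    return (t75 - t25) / 2.0


# Hypothetical series: 12 converging trials, then 20 trials hovering
# around 89 ms (x.25 series) and 113 ms (x.75 series).
series_25 = [65.0] * 12 + [88.0, 90.0] * 10
series_75 = [135.0] * 12 + [112.0, 114.0] * 10
print(difference_limen(series_25, series_75))  # 12.0
```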

Auditory and visual temporal discrimination of empty intervals (DDE)

With this type of task, the intervals to be compared were empty intervals marked by an onset and an offset signal. For the auditory and the visual task, the intervals were bounded by 3-ms white-noise bursts and 3-ms light flashes, respectively. All other parameters of this task were the same as in the temporal discrimination task with filled intervals.

Auditory and visual temporal generalization (TG)

In addition to the temporal discrimination tasks, an auditory and a visual temporal generalization task were used with a standard duration of 100 ms. For visual temporal generalization, the nonstandard stimulus durations were 55, 70, 85, 115, 130, and 145 ms, whereas for auditory temporal generalization, they were 67, 78, 89, 111, 122, and 133 ms. These nonstandard durations, which lie closer to the standard, were chosen for the auditory task because a pilot study had shown that the auditory task would be too easy with the larger differences used in the visual task.

With both generalization tasks, participants were required to identify the standard stimulus among the six nonstandard stimuli. In the first part of the experiment, the learning phase, participants were instructed to memorize the standard stimulus duration. For this purpose, the standard interval was presented five times. Then participants were asked to start the test. Each generalization task consisted of eight blocks. Within each block, the standard duration was presented twice, while each of the six nonstandard intervals was presented once. All duration stimuli were presented in randomized order.

On each test trial, one duration stimulus was presented. Participants decided whether or not the presented stimulus had the same duration as the standard stimulus presented during the learning phase. Immediately after presentation of a stimulus, the participant responded by pressing one of two designated response keys labeled “Standard” and “Non-Standard”. Each response was followed by visual feedback. As a quantitative measure of performance on temporal generalization, an individual index of response dispersion (McCormack, Brown, Maylor, Darby, & Green, 1999) was determined. For this purpose, the proportion of “Standard” responses to the standard duration was divided by the sum of the proportions of “Standard” responses to all seven durations. This measure approaches 1.0 if all “Standard” responses are given to the standard duration and none to the nonstandard durations.
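
The index of response dispersion described above can be computed from response counts as sketched below. The response counts are hypothetical and serve only as an example; the presentation frequencies follow from the design (eight blocks, each containing the standard twice and each nonstandard once, i.e., 16 and 8 presentations). The function name and data layout are illustrative assumptions.

```python
# Visual-task durations from the text; 100 ms is the standard.
DURATIONS_MS = (55, 70, 85, 100, 115, 130, 145)
STANDARD_MS = 100


def dispersion_index(standard_counts, presentations, standard=STANDARD_MS):
    """Index of response dispersion, following the description in the text.

    standard_counts[d]: number of "Standard" responses to duration d
    presentations[d]:   number of presentations of duration d
    Returns P("Standard" | standard) divided by the sum of
    P("Standard" | d) over all durations d.
    """
    proportions = {d: standard_counts.get(d, 0) / n for d, n in presentations.items()}
    total = sum(proportions.values())
    if total == 0:
        return float("nan")  # undefined: no "Standard" responses at all
    return proportions[standard] / total


# 8 blocks: the standard twice and each nonstandard once per block.
presentations = {d: (16 if d == STANDARD_MS else 8) for d in DURATIONS_MS}
# Hypothetical response counts for one participant (not study data).
counts = {55: 0, 70: 1, 85: 4, 100: 12, 115: 3, 130: 1, 145: 0}
print(dispersion_index(counts, presentations))  # 0.4
```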

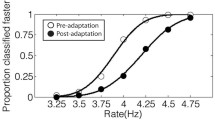

Auditory and visual rhythm perception (RP)

Apparatus and stimuli were the same as in the previous tasks. For the auditory rhythm perception task, the stimuli were 3-ms white-noise bursts presented binaurally through headphones, while 3-ms light flashes were used in the visual task. Participants were presented with rhythmic patterns, each consisting of a sequence of six noise bursts (auditory task) or six flashes (visual task) marking five beat-to-beat intervals. Four of these intervals had a constant duration of 150 ms, while one interval was variable (150 ms + x). The initial value of x was 20 ms. The value of x changed from trial to trial depending on the participant’s previous response according to the weighted up-down procedure (Kaernbach, 1991), which converged on a hit probability of .75: a correct response decreased x by 4 ms, and an incorrect response made the task easier by increasing x by 12 ms. For each task, a total of 64 experimental trials were grouped into two independent series of 32 trials each. In Series 1, the third beat-to-beat interval was the deviant interval, while in Series 2, the fourth beat-to-beat interval was the deviant. Trials from both series were presented in random order.
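
The stimulus structure and the adaptive rule of the rhythm task can be sketched as follows. Treating the 150-ms intervals as onset-to-onset times between the 3-ms markers is an assumption made for illustration, and the function names are hypothetical. Because every pattern contained a deviant interval, a hit here means responding “Non-regular”.

```python
def rhythm_onsets(x_ms, deviant_position, base_ms=150.0, n_intervals=5):
    """Marker onset times (ms) for one pattern of six markers and five intervals.

    Intervals are treated as onset-to-onset times (an assumption); the
    interval at `deviant_position` (1-based) is lengthened by x_ms.
    """
    intervals = [base_ms] * n_intervals
    intervals[deviant_position - 1] += x_ms
    onsets = [0.0]
    for interval in intervals:
        onsets.append(onsets[-1] + interval)
    return onsets


def update_x(x_ms, hit, step_down=4.0, step_up=12.0):
    """Weighted up-down rule: harder after a hit, easier after a miss."""
    return x_ms - step_down if hit else x_ms + step_up


print(rhythm_onsets(20.0, 3))  # Series 1: [0.0, 150.0, 300.0, 470.0, 620.0, 770.0]
print(rhythm_onsets(20.0, 4))  # Series 2: [0.0, 150.0, 300.0, 450.0, 620.0, 770.0]
# Expected drift is zero when P(hit) * 4 = (1 - P(hit)) * 12, i.e., P(hit) = .75.
print(12.0 / (12.0 + 4.0))  # 0.75
```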

The participant’s task was to decide whether the presented rhythmic pattern was perceived as “regular” (i.e., all beat-to-beat intervals appeared to be of the same duration) or “irregular” (i.e., one beat-to-beat interval was perceived as deviant). Participants indicated their decision by pressing one of two designated response keys (either “Regular” or “Non-regular”). No feedback was given, as there were no perfectly isochronous (“regular”) patterns presented. As a psychophysical indicator of performance on auditory and visual rhythm perception, the 75% threshold for detection of irregularity was determined. Individual threshold estimates represented the mean threshold value across Series 1 and 2.

Time course of the experiment

Auditory and visual timing tasks were presented blockwise within one session. Order of blocks was counterbalanced across participants. Order of tasks within blocks was balanced across participants. Testing took approximately 45 min.

Data analysis

To investigate modality-specific differences in temporal sensitivity within each timing task, paired t tests were performed. In a next step, we examined which of the three models defined above provided the best description of the empirical data. For this purpose, the model-implied covariance matrix was compared with the empirically observed covariance matrix using SEM. The fit between the two matrices can be judged in terms of several fit indices. The most important index is the χ² value, which denotes the degree of discrepancy between the two matrices. A statistically nonsignificant χ² value indicates that the empirically observed covariance matrix does not deviate significantly from the model-implied matrix. To judge which of the three theoretical models describes the empirical data best, the χ² values of the three models were compared. If the χ² value of one model is significantly smaller than that of another, nested model, as assessed by a χ² difference test, the model with the smaller χ² value describes the empirical data more adequately.
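
The χ² difference test can be sketched as follows. Under the null hypothesis that the more restricted of two nested models holds, the difference between their χ² values is approximately χ²-distributed, with degrees of freedom equal to the difference in df. The sketch covers only the 1-df case, for which the upper-tail probability has the closed form erfc(√(x/2)), so no statistics library is needed; the inputs are the χ² values reported for Models 1 to 3 under Results, and the function names are illustrative.

```python
from math import erfc, sqrt


def chi2_sf_df1(x):
    """Upper-tail probability of a chi-square variable with 1 df."""
    return erfc(sqrt(x / 2.0))


def chi2_difference_test(chi2_restricted, df_restricted, chi2_general, df_general):
    """Delta-chi-square test for two nested models (1-df case only)."""
    delta = chi2_restricted - chi2_general
    ddf = df_restricted - df_general
    if ddf != 1:
        raise ValueError("this sketch only covers a 1-df difference")
    return delta, chi2_sf_df1(delta)


# Reported fit: Model 1 chi2(18) = 41.34, Model 2 chi2(18) = 49.66, Model 3 chi2(17) = 24.84
for label, chi2_r in (("Model 1 vs 3", 41.34), ("Model 2 vs 3", 49.66)):
    delta, p = chi2_difference_test(chi2_r, 18, 24.84, 17)
    print(f"{label}: delta chi2(1) = {delta:.2f}, p = {p:.2g}")
```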

The disadvantage of the χ² value, however, is its dependence on sample size (e.g., Kline, 1998; Schermelleh-Engel, Moosbrugger, & Müller, 2003). It is therefore common practice to consider further fit indices (for an overview, see Schermelleh-Engel et al., 2003). The comparative fit index (CFI) is less affected by sample size and indicates whether the estimated model fits better than a null model, i.e., a model in which all observed variables are assumed to be uncorrelated (cf., Kline, 1998). The CFI ranges from 0 to 1, with higher values indicating a greater improvement of the estimated model over the null model; a value of .95 or higher is considered to indicate acceptable fit (cf., Kline, 1998; Schermelleh-Engel et al., 2003). The root mean square error of approximation (RMSEA) compares the estimated model with a perfectly fitting model, so smaller RMSEA values indicate a smaller difference between the two. RMSEA values below .05 suggest a close fit, and values between .05 and .08 are still considered acceptable (Browne, & Cudeck, 1993; Schermelleh-Engel et al., 2003). Furthermore, the 90% confidence interval (CI) of the RMSEA should include 0, indicating that the population RMSEA is compatible with a perfect model fit (Schermelleh-Engel et al., 2003).
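
The RMSEA point estimate can be computed from a model's χ² value, its degrees of freedom, and the sample size. The sketch below uses the common Steiger-Lind form with N − 1 in the denominator (software conventions differ slightly, e.g., N versus N − 1) and N = 114 from the Participants section; with the χ² values reported under Results, it reproduces the reported RMSEA values after rounding. The CFI function is included only to make the definition concrete: it requires the null model's χ², which is not reported here, so it is not applied to the present data.

```python
from math import sqrt

N = 114  # 60 male + 54 female participants


def rmsea(chi2, df, n):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model, df_model, chi2_null, df_null):
    """Comparative fit index relative to a null (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_null = max(chi2_null - df_null, d_model)
    return 1.0 if d_null == 0 else 1.0 - d_model / d_null


for name, chi2, df in (("Model 1", 41.34, 18), ("Model 2", 49.66, 18), ("Model 3", 24.84, 17)):
    print(f"{name}: RMSEA = {rmsea(chi2, df, N):.2f}")
```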

A theoretical model should be as parsimonious as possible. Building models for more complex phenomena, however, requires additional parameters at the expense of parsimony. At the same time, a more complex model generally yields a smaller χ² value (i.e., a better fit) than a less complex model. To account for model complexity, the Akaike information criterion (AIC) penalizes the χ² value according to the complexity of the model: the χ² value is reduced less for a more complex model and more strongly for a less complex model. As a consequence, the AIC of a less complex model can be smaller than that of a more complex model even though its χ² value is necessarily larger. Therefore, when two or more models are compared, the model with the lower AIC is the better fitting one (Schermelleh-Engel et al., 2003). Finally, the standardized root mean square residual (SRMR) is an index based on covariance residuals, that is, the remaining differences between the empirically observed and the model-implied covariances (Kline, 1998). Lower SRMR values indicate smaller covariance residuals, and SRMR values ≤ .10 are usually considered acceptable (Kline, 1998).
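
The AIC values reported for the three models under Results are consistent with the χ²-based form AIC = χ² − 2df (e.g., 24.84 − 2 × 17 = −9.16 for Model 3). This form is inferred from the reported numbers rather than stated in the text. Software such as Mplus usually reports a likelihood-based AIC instead; for models fitted to the same covariance matrix, the two versions differ only by a constant, so the ranking of models is the same.

```python
def aic_from_chi2(chi2, df):
    """Chi-square-based AIC: the chi-square value minus twice its df.

    Models with fewer df (more free parameters) receive a smaller reduction.
    """
    return chi2 - 2 * df


reported = {"Model 1": (41.34, 18), "Model 2": (49.66, 18), "Model 3": (24.84, 17)}
aic = {name: round(aic_from_chi2(c, d), 2) for name, (c, d) in reported.items()}
print(aic)  # {'Model 1': 5.34, 'Model 2': 13.66, 'Model 3': -9.16}
print(min(aic, key=aic.get))  # Model 3
```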

SEM analyses were carried out with the statistical modeling program Mplus (Muthén, & Muthén, 2009). To estimate the model parameters on the basis of the empirical data, a maximum-likelihood procedure was used.

Results

Descriptive statistics of temporal sensitivity measures for all auditory and visual timing tasks are listed in Table 1. Paired t tests revealed significant differences between modalities for all timing tasks (see Table 1). These results indicate significantly higher temporal sensitivity in the auditory than in the visual sensory mode for all four types of timing tasks.

It is important to note that the index of response dispersion obtained from the temporal generalization task is positively related to performance, i.e., better performance is indicated by higher values of response dispersion. All other psychophysical measures based on threshold estimates are negatively associated with timing performance. To enhance clarity of data presentation, for the following analyses algebraic signs of performance measures based on threshold estimates were reversed, so that higher positive values consistently indicate better performance for all temporal tasks. Correlational analysis yielded statistically significant positive correlations between performance scores of most temporal tasks irrespective of sensory modality (see Table 2). This indicates a positive manifold (cf., Carroll, 1993) and, thus, may suggest a common modality-independent timing mechanism.

To further evaluate whether the positive manifold is due to one common modality-independent timing mechanism or to multiple modality-dependent mechanisms, the three theoretical models outlined above were tested using SEM. Because the same type of task shared a substantial portion of variance across modalities, we allowed the residuals of visual and auditory performance on the TG task, and likewise on the RP task, to correlate in all three models.

With the first model, we tested the assumption of one general, modality-independent timing mechanism underlying both auditory and visual temporal information processing. This model yielded a rather poor fit, as indicated by the significant χ² value [χ²(18) = 41.34; p = .001]. Most of the other fit indices also failed to support this model (CFI = .88; RMSEA = .11; 90% CI of RMSEA ranging from .06 to .15). Only the SRMR (.07) suggested sufficiently small covariance residuals.

The second model assumed two independent, modality-specific timing mechanisms, one for the processing of auditory and another for the processing of visual temporal information. Because the modality factors in Model 2 were assumed to be independent, the correlation between them was fixed at zero. SEM also revealed a poor fit of this model to the empirical data [χ²(18) = 49.66; p < .001; CFI = .83; RMSEA = .12; 90% CI of RMSEA ranging from .08 to .17; SRMR = .14].

Finally, with the third model, we tested the notion of a hierarchical structure with two modality-specific mechanisms for the processing of temporal information at the first-order level and a more general, modality-independent mechanism at the second-order level. For this purpose, we derived a second-order factor from the initial two-factor solution. When a second-order factor is derived from only two first-order factors, one factor loading must be fixed at 1 so that the factor loadings can be estimated unambiguously (e.g., Kline, 1998). Therefore, the loading of the visual modality factor on the second-order factor was fixed at 1. The empirical data fitted this model quite well [χ²(17) = 24.84; p = .10; CFI = .96; RMSEA = .06; 90% CI of RMSEA ranging from .00 to .11; SRMR = .06], and all factor loadings were significant at p < .001 except for auditory TG (p = .004). The residual correlations between visual and auditory RP (r = .54; p < .001) and between visual and auditory TG (r = .30; p = .001) were both significant. The modality-specific factors showed substantial loadings on the modality-independent general timing factor (p < .001; see Fig. 2). Model 3 fitted significantly better than Model 1 [Δχ²(1) = 16.50; p < .001] and Model 2 [Δχ²(1) = 24.82; p < .001]. Furthermore, the AIC for Model 3 (−9.16) was lower than that for Model 1 (5.34) and Model 2 (13.66). Thus, although Model 3 is more complex than Models 1 and 2, its better fit cannot be attributed to its higher complexity. That Model 3 described the empirical data most adequately suggests modality-specific processing of temporal information at an initial level, controlled by a superordinate, modality-independent processing system. It should be noted that Model 3 is statistically equivalent to a model with two intercorrelated first-order modality-specific factors. In this latter model, the correlation between the visual and the auditory timing mechanism is r = .64 (p < .001), which equals the product of the standardized loadings of the two first-order factors on the second-order factor.
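
The equivalence noted above can be checked with a little arithmetic. With only two first-order factors, the data carry information about one quantity, the correlation between the modality factors, which in a standardized solution equals the product of the two second-order loadings. The loading pairs below are hypothetical (the estimated loadings are shown in Fig. 2 and are not reproduced here); each pair implies r = .64, which illustrates why one loading has to be constrained before the loadings can be estimated unambiguously.

```python
def factor_correlation(gamma_v, gamma_a):
    """Correlation between the visual and auditory first-order factors implied
    by a single second-order factor with standardized loadings gamma_v and
    gamma_a (all factor variances fixed at 1)."""
    return gamma_v * gamma_a


R_REPORTED = 0.64  # correlation between the modality factors reported above

# Different hypothetical loading pairs that imply the same correlation.
pairs = [(0.8, 0.8), (0.9, R_REPORTED / 0.9), (0.7, R_REPORTED / 0.7)]
for gv, ga in pairs:
    print(f"gamma_v = {gv:.3f}, gamma_a = {ga:.3f} -> r = {factor_correlation(gv, ga):.3f}")
```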

Structural equation model with two modality-specific mechanisms for the processing of temporal information and a second-order, modality-independent processing system. DDF, duration discrimination with filled intervals; DDE, duration discrimination with empty intervals; TG, temporal generalization; RP, rhythm perception; a, auditory; v, visual.

Discussion

The main goal of the present study was to investigate differences and relations between modality-specific temporal sensitivity as measured by a rhythm perception and three different duration discrimination tasks in the subsecond range. For this purpose, participants performed an auditory and a visual version of each of the four timing tasks to allow for a direct, systematic comparison across both sensory modalities. Our findings clearly indicate higher temporal sensitivity in the auditory than in the visual domain irrespective of type of timing task. This outcome confirms previous reports of superior auditory compared to visual temporal sensitivity obtained with duration discrimination (Grondin, 1993; Grondin et al., 1998; Ulrich et al., 2006) and rhythm perception tasks (Collier, & Logan, 2000; Penney, 2004; Rousseau, & Rousseau, 1996).

Higher temporal sensitivity in the auditory compared to the visual modality can be explained from different perspectives. First, higher auditory temporal sensitivity may be due to faster and more accurate processing of auditory than of visual information. There is considerable evidence that reaction time (RT) is faster to auditory than to visual stimuli (e.g., Brebner, & Welford, 1980; Goldstone, 1968; Woodworth, & Schlosberg, 1954). Because the primary visual cortex is located in the occipital lobe whereas the primary auditory cortex is located in the temporal lobe (e.g., Pinel, 2006), visual information travels a longer path from its receptors to the primary sensory cortex than auditory information. Thus, auditory information reaches its central processing stage faster than visual information does (Brebner, & Welford, 1980). A longer path also increases the potential for signal variability and for interruptions during signal processing (cf., Levine, 2001), as reflected by larger variability in visual than in auditory RT (Ulrich, & Stapf, 1984). These factors may result in a lower signal-to-noise ratio and less efficient processing of visual compared to auditory information. Electrophysiological studies provide further evidence on this issue. Investigations of the N1, an early negative component of the event-related potential (ERP) that reflects the analysis of physical stimulus properties (e.g., Stöhr, Dichgans, Buettner, & Hess, 2005), identified latency differences between the visually and the auditorily elicited N1. The earliest subcomponent of the visual N1 peaks 100–150 ms after stimulus presentation at anterior electrode sites, whereas the earliest auditory N1 subcomponent, originating from the auditory cortex, peaks approximately 75 ms after stimulus presentation at frontal–central sites (cf., Luck, 2005). Thus, the earliest auditory ERP components peak earlier than their visual counterparts, which also supports the notion of faster auditory information processing.

Second, our finding of more efficient processing of auditory than of visual temporal information is also in line with investigations of simultaneity versus successiveness and of temporal-order judgments (TOJs). The former concern the length of the temporal interval between two events that is required for them to be perceived as two separate events (successiveness) rather than fused into one event (simultaneity; for concise reviews see Fraisse, 1985; Rammsayer, 1992). The threshold for the perception of simultaneity has repeatedly been shown to be reliably lower in the auditory than in the visual modality (cf., Exner, 1875; Li et al., 2009; Rammsayer, 1989, 1994; Rammsayer & Brandler, 2002). TOJ tasks concern the size of the temporal interval between two events (stimuli) that is required to determine accurately which event occurred first (Fraisse, 1985; Rammsayer, 1992; Ulrich, 1987). Performance on TOJ tasks is much better when both stimuli are presented in the auditory than in the visual modality (Kanabus, Szelag, Rojek, & Pöppel, 2002). Moreover, lower TOJ thresholds are obtained when an auditory stimulus is followed by a visual one than when a visual stimulus is followed by an auditory one (Hirsh & Fraisse, 1964). These findings provide additional converging evidence for faster and more efficient processing of auditory than of visual temporal information.

Finally, modality-dependent differences in temporal sensitivity can also be explained within the framework of psychophysical models of timing and time perception. Performance on duration discrimination tasks is often explained by assuming a neural counting mechanism (e.g., Creelman, 1962; Rammsayer & Ulrich, 2001): a neural pacemaker generates pulses, and the number of pulses accumulated during a physical time interval constitutes the internal (subjective) representation of this interval. The higher the pulse rate, the better the temporal resolution of the timing mechanism. Thus, the neural basis of better timing performance with auditory than with visual stimuli can be envisioned as a higher neural firing rate for auditory temporal stimuli (Grondin, 2001; Wearden et al., 1998). This higher pacemaker rate yields finer temporal resolution and, thus, less uncertainty about interval duration for auditory than for visual intervals.
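The counting argument can be made concrete with a small simulation. The sketch below assumes a Poisson pacemaker (exponentially distributed inter-pulse times) and a two-alternative decision rule that picks the interval with more accumulated pulses; the function names and the pacemaker rates in the example are hypothetical illustrations, not parameters estimated in this study. Under the Poisson assumption, the count for an interval of duration T at rate λ has mean λT and standard deviation √(λT), so its coefficient of variation, 1/√(λT), shrinks as the rate increases.

```python
import math
import random


def count_pulses(duration_s: float, rate_hz: float, rng: random.Random) -> int:
    """Number of pulses a Poisson pacemaker emits during an interval.

    Inter-pulse times are exponential, so the count is Poisson distributed
    with mean rate_hz * duration_s (assumed pacemaker model).
    """
    n = 0
    t = rng.expovariate(rate_hz)
    while t <= duration_s:
        n += 1
        t += rng.expovariate(rate_hz)
    return n


def count_cv(duration_s: float, rate_hz: float) -> float:
    """Analytic coefficient of variation of a Poisson count: 1 / sqrt(rate * T)."""
    return 1.0 / math.sqrt(rate_hz * duration_s)


def proportion_correct(standard_s: float, comparison_s: float, rate_hz: float,
                       n_trials: int = 5000, seed: int = 1) -> float:
    """Simulated duration discrimination with a counting decision rule.

    The longer (comparison) interval is judged correctly when it accumulated
    more pulses; ties are resolved by guessing and count as 0.5 correct.
    """
    rng = random.Random(seed)
    correct = 0.0
    for _ in range(n_trials):
        n_std = count_pulses(standard_s, rate_hz, rng)
        n_cmp = count_pulses(comparison_s, rate_hz, rng)
        if n_cmp > n_std:
            correct += 1.0
        elif n_cmp == n_std:
            correct += 0.5
    return correct / n_trials


if __name__ == "__main__":
    # Hypothetical rates chosen only to contrast a slower and a faster pacemaker.
    for rate in (200.0, 1000.0):
        print(f"rate={rate:6.0f} Hz  CV(100 ms)={count_cv(0.1, rate):.3f}  "
              f"P(correct, 100 vs 115 ms)={proportion_correct(0.100, 0.115, rate):.3f}")
```

With these assumed rates, the faster pacemaker gives a CV of 0.100 for a 100-ms interval versus about 0.224 for the slower one, together with a higher simulated proportion correct; the counting account attributes the auditory advantage to this kind of rate difference.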

According to the process model of timing described by Church (1984) and Gibbon and Church (1984), the internal clock is composed of a pacemaker, a switch, and an accumulator. The pacemaker generates pulses that are gated into the accumulator by the switch. Within this theoretical framework, the less variable internal representation of auditory intervals is explained by less variable opening and closing latencies of the switch for auditory than for visual stimuli (Penney & Tourret, 2005; Rousseau & Rousseau, 1996). This interpretation is reminiscent of an explanation of modality-dependent timing differences derived from the onset–offset latency model (Allan & Kristofferson, 1974; Allan, Kristofferson, & Wiens, 1971). This account proceeds from the general assumption that timing variability is caused by variation in the times at which the internal representation of a given duration begins and ends. The former reflects variability of the perceptual onset latency, the latter variability of the perceptual offset latency. Given the faster and less variable neural transmission of auditory compared to visual information, less variable onset and offset latencies are plausible for auditory than for visual time intervals. This results in less timing variability and, thus, a more veridical internal representation of the physical interval to be timed. All these considerations are consistent with our finding of superior temporal sensitivity in the auditory compared to the visual sensory modality.
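The variance argument of the onset–offset account can be illustrated numerically. The sketch assumes that the internal duration equals the physical duration plus the offset latency minus the onset latency, with independent, normally distributed latencies; the latency means and standard deviations in the example, and the labels "auditory" and "visual" attached to them, are hypothetical values rather than measurements. Under independence, the variance of the internal duration is the sum of the onset and offset latency variances, so smaller latency variability translates directly into a less variable internal representation.

```python
import math
import random
import statistics


def simulate_internal_durations(physical_ms, mean_onset_ms, sd_onset_ms,
                                mean_offset_ms, sd_offset_ms,
                                n=20000, seed=7):
    """Internal duration = physical + offset latency - onset latency.

    Latencies are assumed Gaussian and mutually independent (illustrative assumption).
    """
    rng = random.Random(seed)
    return [physical_ms
            + rng.gauss(mean_offset_ms, sd_offset_ms)
            - rng.gauss(mean_onset_ms, sd_onset_ms)
            for _ in range(n)]


def predicted_sd(sd_onset_ms, sd_offset_ms):
    """SD of the internal duration when onset and offset latencies are independent."""
    return math.sqrt(sd_onset_ms ** 2 + sd_offset_ms ** 2)


if __name__ == "__main__":
    # Hypothetical latency parameters in ms: (mean onset, SD onset, mean offset, SD offset).
    settings = {"auditory (assumed)": (10.0, 4.0, 12.0, 5.0),
                "visual (assumed)": (40.0, 12.0, 45.0, 15.0)}
    for label, (m_on, sd_on, m_off, sd_off) in settings.items():
        sample = simulate_internal_durations(100.0, m_on, sd_on, m_off, sd_off)
        print(f"{label:20s} mean={statistics.fmean(sample):6.1f} ms  "
              f"SD={statistics.stdev(sample):5.2f} ms  "
              f"predicted SD={predicted_sd(sd_on, sd_off):5.2f} ms")
```

In this additive sketch, only latency variability matters for discrimination: a constant mean latency shifts both intervals of a comparison by the same amount and cancels out.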

Inspection of the correlation matrix (see Table 2) revealed substantial correlations between auditory and visual temporal sensitivity for each type of timing task. Of the 28 correlation coefficients shown in Table 2, 20 were statistically significant. This pattern of results indicates a positive manifold (cf., Carroll, 1993): high temporal sensitivity on one task in a given modality tends to go along with high temporal sensitivity on the other tasks, irrespective of sensory modality. There are several possible explanations for this correlational pattern. First, correlations between modalities within one type of task may have reached significance because of a substantial portion of shared task-specific variance. Shared task demands may also account for the strong association between DDF and DDE, since both tasks required the comparison of two intervals. Second, similar physical stimuli employed in different tasks could have contributed to the reliable correlations observed between two tasks. This might be true, for example, for RP and DDE (both tasks used series of brief clicks or light flashes as stimuli) and for DDF and TG (both used filled intervals). Finally, the positive manifold might also have occurred because of a common timing mechanism underlying aspects of temporal processing in both sensory modalities. Thus, even though there are modality-related differences in temporal sensitivity, performance on auditory and visual timing tasks is systematically correlated.

To further evaluate whether there is evidence for one common modality-independent timing mechanism or for multiple modality-specific mechanisms, we used SEM to test three different theoretical models. Model 1 assumed a general, modality-independent timing mechanism. Model 2 proposed two distinct modality-specific timing mechanisms, one for the processing of auditory and one for the processing of visual temporal information, which were assumed to operate independently of each other. Model 3 assumed a hierarchical structure of modality-specific and modality-independent levels of information processing.
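To make the contrast between the three models concrete, the sketch below computes the correlation matrix each model implies in a standardized solution, assuming four tasks per modality (indicators 0 to 3 auditory, 4 to 7 visual) and equal, purely hypothetical loadings of .7. For indicators i and j the implied correlation is λ_i′Φλ_j, where λ_i holds the loadings of indicator i and Φ is the factor correlation matrix. In Model 2 the two factors are uncorrelated, so every auditory-visual correlation is implied to be zero; in Model 3 the correlation between the first-order factors equals the product of their (here hypothetical) second-order loadings. Function and variable names are illustrative.

```python
from typing import List

Matrix = List[List[float]]


def implied_correlations(loadings: Matrix, phi: Matrix) -> Matrix:
    """Standardized model-implied correlations.

    Off-diagonal: r_ij = lambda_i' Phi lambda_j; diagonal fixed at 1.
    """
    p, k = len(loadings), len(phi)
    r = [[1.0] * p for _ in range(p)]
    for i in range(p):
        for j in range(p):
            if i != j:
                r[i][j] = sum(loadings[i][a] * phi[a][b] * loadings[j][b]
                              for a in range(k) for b in range(k))
    return r


LOADING = 0.7  # hypothetical standardized first-order loading
# Indicators 0-3: auditory tasks; 4-7: visual tasks (assumed ordering).
one_factor = [[LOADING] for _ in range(8)]
two_factor = [[LOADING, 0.0] for _ in range(4)] + [[0.0, LOADING] for _ in range(4)]

model1 = implied_correlations(one_factor, [[1.0]])
model2 = implied_correlations(two_factor, [[1.0, 0.0], [0.0, 1.0]])
gamma_a, gamma_v = 0.8, 0.8  # hypothetical second-order loadings
model3 = implied_correlations(two_factor, [[1.0, gamma_a * gamma_v],
                                           [gamma_a * gamma_v, 1.0]])

if __name__ == "__main__":
    for name, m in (("Model 1", model1), ("Model 2", model2), ("Model 3", model3)):
        print(f"{name}: within-auditory r = {m[0][1]:.3f}, "
              f"auditory-visual r = {m[0][4]:.3f}")
```

With these assumed values, Model 1 implies equal within- and cross-modality correlations (.49), Model 2 implies zero cross-modality correlations, and Model 3 implies cross-modality correlations of .49 × .64 ≈ .31. An observed matrix with substantial but somewhat weaker cross-modality than within-modality correlations is the kind of pattern that favors the hierarchical model over the other two.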

With the exception of the SRMR, the fit indices for Model 1 did not support the idea that a single modality-independent timing mechanism accounts for the empirically observed relationships among all types of timing tasks across both modalities. The SRMR indicated sufficiently small covariance residuals for this model. Nevertheless, as indicated by the other fit indices, Model 1 does not seem able to explain the structure of the observed correlational pattern satisfactorily.
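For readers less familiar with the SRMR, a minimal sketch of its usual definition for correlation matrices follows: the square root of the mean squared difference between observed and model-implied correlations over the p(p + 1)/2 non-redundant elements. Software packages differ in details (e.g., whether residuals are first computed on covariances and then standardized), so this sketch approximates, rather than reproduces, the value reported by Mplus; the matrices in the example are hypothetical. Because the SRMR averages residuals, it can remain small when a model misfits only some correlations, which is one reason it is interpreted alongside the other indices.

```python
import math
from typing import Sequence


def srmr(observed: Sequence[Sequence[float]],
         implied: Sequence[Sequence[float]]) -> float:
    """Root mean squared residual over the lower triangle (diagonal included)
    of two correlation matrices of equal size."""
    p = len(observed)
    squared = [(observed[i][j] - implied[i][j]) ** 2
               for i in range(p) for j in range(i + 1)]
    return math.sqrt(sum(squared) / (p * (p + 1) / 2))


if __name__ == "__main__":
    # Hypothetical 3 x 3 example: one implied correlation misses by .10,
    # all other elements are reproduced exactly.
    obs = [[1.0, 0.50, 0.40],
           [0.50, 1.0, 0.30],
           [0.40, 0.30, 1.0]]
    imp = [[1.0, 0.50, 0.30],
           [0.50, 1.0, 0.30],
           [0.30, 0.30, 1.0]]
    print(round(srmr(obs, imp), 4))  # sqrt(0.01 / 6), about 0.0408
```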

Model 2, with two independent modality-specific timing mechanisms, also failed to describe the empirical data appropriately, as indicated by all five fit indices computed.

Unlike the first two models, Model 3 fitted the data well. The nonsignificant χ² value revealed that the empirically observed matrix did not differ significantly from the model-implied matrix. The CFI indicated a good fit of Model 3 relative to a null (baseline) model. The RMSEA was sufficiently low to suggest that a perfectly fitting model would not fit significantly better. This was confirmed by the CI of the RMSEA, which included a true RMSEA value of zero, signifying the possibility of an exact fit of Model 3. Finally, the SRMR value of Model 3 was sufficiently low to indicate acceptably small covariance residuals. Unlike Model 1, which also yielded an acceptable SRMR value, Model 3 not only described the correlational pattern of the empirical data acceptably but was also able to explain its structure, as indicated by the other fit indices (i.e., χ² value, CFI, RMSEA, and CI of the RMSEA).
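The logic of the χ²-based indices described above can be summarized in a few lines. The sketch uses standard textbook formulas: RMSEA = √(max(χ² − df, 0) / (df(N − 1))) and CFI = 1 − max(χ²_M − df_M, 0) / max(χ²_M − df_M, χ²_B − df_B, 0), where M denotes the fitted model and B the baseline (independence) model. Some programs use N rather than N − 1 in the RMSEA, and the RMSEA confidence interval requires inverting a noncentral χ² distribution, which is omitted here. The numbers in the example are hypothetical, not the values obtained in this study.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of the RMSEA (N - 1 convention); 0 when chi2 <= df."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model: float, df_model: int,
        chi2_baseline: float, df_baseline: int) -> float:
    """Comparative fit index of a model relative to a baseline (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    denom = max(chi2_model - df_model, chi2_baseline - df_baseline, 0.0)
    # If neither model shows any misfit beyond its df, CFI is defined as 1.
    return 1.0 if denom == 0.0 else 1.0 - d_model / denom


if __name__ == "__main__":
    # Hypothetical values for 8 indicators (36 non-redundant moments).
    print("RMSEA:", round(rmsea(20.0, 19, 100), 3))
    print("CFI:", round(cfi(20.0, 19, 300.0, 28), 3))
```

A nonsignificant χ² with χ² close to or below its df drives the RMSEA point estimate toward zero and the CFI toward one, which is why these indices tend to agree for a well-fitting model.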

Clearly, Model 3 has more parameters to be estimated and is thus more complex than Models 1 and 2. Therefore, the AIC was computed to rule out that the better χ² value of Model 3 merely reflected its higher complexity (i.e., the larger number of specified parameters). Model 3 yielded the smallest AIC, indicating that it still described the empirical data better than Models 1 and 2 when complexity was taken into account. Furthermore, comparison of the χ² values of the three models revealed that Model 3 fitted the empirical data significantly better than Models 1 and 2. In conclusion, all fit indices favored Model 3.
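The two comparisons in this paragraph can be sketched as follows. The code assumes the χ²-based form AIC = χ² + 2q, where q is the number of free parameters. Mplus reports the likelihood-based AIC = −2 log L + 2q instead, but for models fitted to the same data the two differ only by a constant, so model rankings are unchanged. For nested models, the χ² difference is referred to a χ² distribution whose degrees of freedom equal the difference in model df. Here the upper-tail probability is computed with the standard series for the regularized incomplete gamma function, which keeps the example free of third-party dependencies. All numbers in the usage lines are hypothetical.

```python
import math


def aic_chi2(chi2: float, n_free_params: int) -> float:
    """Chi-square based AIC; lower values indicate a better fit-complexity trade-off."""
    return chi2 + 2 * n_free_params


def chi2_sf(x: float, df: float) -> float:
    """Upper-tail probability P(X > x) of a chi-square distribution.

    Uses the series expansion of the regularized lower incomplete gamma
    function P(df/2, x/2); adequate for the moderate values met in model comparisons.
    """
    if x <= 0.0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 0
    while term > total * 1e-15:
        n += 1
        term *= z / (a + n)
        total += term
    lower = math.exp(a * math.log(z) - z - math.lgamma(a)) * total
    return max(0.0, 1.0 - lower)


def chi2_difference_test(chi2_restricted, df_restricted, chi2_general, df_general):
    """Chi-square difference (likelihood-ratio) test for two nested models."""
    delta_chi2 = chi2_restricted - chi2_general
    delta_df = df_restricted - df_general
    return delta_chi2, delta_df, chi2_sf(delta_chi2, delta_df)


if __name__ == "__main__":
    # Hypothetical fits: a restricted model (df = 20, q = 16) nested in a
    # more general one (df = 19, q = 17); not the values of this study.
    print("AIC restricted:", aic_chi2(60.0, 16))
    print("AIC general:", aic_chi2(20.0, 19 - 2))
    d, ddf, p = chi2_difference_test(60.0, 20, 20.0, 19)
    print(f"delta chi2 = {d:.1f}, delta df = {ddf}, p = {p:.2e}")
```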

Model 3 suggests a hierarchical structure for the processing of temporal information in the subsecond range. It should be noted, however, that this model is statistically equivalent to a model with two intercorrelated modality-specific first-order factors. Nevertheless, the obtained correlation of r = .64 between the auditory and the visual factor may be indicative of a common mechanism underlying auditory and visual temporal information processing, as implied by the notion of a second-order factor. Thus, while auditory and visual temporal information is processed in distinct modes at a first level, this stage of modality-specific processing appears to be controlled by a superordinate, modality-independent processing system. Admittedly, this interpretation is limited to the present data, and further studies are needed to establish the generality of our findings.
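Why the hierarchical model and the correlated two-factor model cannot be distinguished statistically can be seen from the implied covariance of the first-order factors. The derivation below assumes standardized first-order factors F_A and F_V (variance 1), each regressed on a second-order factor G with loadings γ_A and γ_V, and disturbances ζ_A and ζ_V that are uncorrelated with G and with each other. The implied factor correlation is then simply the product of the two second-order loadings. With only two first-order factors, γ_A and γ_V are not separately identified, so the second-order part reproduces exactly one correlation. The equal-loadings constraint in the last line is a purely illustrative assumption.

```latex
\begin{aligned}
F_A &= \gamma_A G + \zeta_A, \qquad F_V = \gamma_V G + \zeta_V, \qquad \operatorname{Var}(G) = 1,\\
r_{AV} = \operatorname{Cov}(F_A, F_V) &= \gamma_A \gamma_V \operatorname{Var}(G) + \operatorname{Cov}(\zeta_A, \zeta_V) = \gamma_A \gamma_V,\\
\gamma_A = \gamma_V = \gamma \;&\Rightarrow\; \gamma = \sqrt{r_{AV}} = \sqrt{.64} = .80.
\end{aligned}
```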

To date, virtually no studies appear to have related the structure of temporal processing to its modality dependency. To our knowledge, there is only one study (Merchant, Zarco, & Prado, 2008) that suggests, in the broadest sense, a similar structure for the processing of time in the subsecond range. Merchant et al. (2008) used interval discrimination as well as motor timing tasks with single or multiple intervals presented in the auditory and the visual modality. On the basis of regression analyses, they proposed a model of partially overlapping timing mechanisms, in which overall task variability is composed of timing variability plus variability related to the interaction of timing and task properties. Accordingly, they proposed a largely distributed neural system for the processing of temporal information, specific components of which are activated depending on particular task properties. Unfortunately, Merchant et al. (2008) did not further elaborate this tentative model of overlapping timing mechanisms.

According to the present data, temporal information is processed in a modality-specific way at an initial stage that is influenced by a common superordinate, modality-independent component. This superordinate component can tentatively be interpreted as a broader, more general process that is not restricted to the processing of temporal information. Such a process may reflect a general functional principle of the central nervous system that modulates both modality-specific timing systems. The idea of a general functional principle was originally introduced to explain observed correlations between different psychological functions, such as the positive association between speed of information processing and psychometric intelligence (cf., Jensen, 2006; Neubauer & Fink, 2005; Rammsayer & Brandler, 2007). In the latter case, several neural quality characteristics of the central nervous system, such as neuronal oscillations (Jensen, 1982, 2006), neural pruning (Haier, 1993), myelination of neurons (Miller, 1994), or differences in neural plasticity (Garlick, 2002), have been proposed to account for this functional relationship. It is conceivable that an analogous neural functional principle exerts a modulating influence on the modality-specific timing mechanisms, so that both mechanisms share some common variance. Clearly, future research is needed to further elaborate this preliminary assumption.

In conclusion, the present study confirms higher temporal sensitivity for rhythm perception and duration discrimination in the range of milliseconds in the auditory compared to the visual sensory modality. Furthermore, our data provide empirical evidence for a hierarchical structure of modality-specific and modality-independent levels of temporal information processing. More specifically, the present data are well described by a hierarchical model with modality-specific visual and auditory temporal processing at a first level and a modality-independent processing system at a second level of the hierarchy.

References

Allan, L. G., & Kristofferson, A. B. (1974). Psychophysical theories of duration discrimination. Perception & Psychophysics, 16, 26–34.

Allan, L. G., Kristofferson, A. B., & Wiens, E. W. (1971). Duration discrimination of brief light flashes. Perception & Psychophysics, 9, 327–334.

Brebner, J. M. T., & Welford, A. T. (1980). Introduction: An historical background sketch. In A. T. Welford (Ed.), Reaction times. London: Academic Press.

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 136–162). Newbury Park, CA: Sage.

Carroll, J. B. (1993). Human cognitive abilities: A survey of factor-analytic studies. New York: Cambridge University Press.

Chen, Y. G., Huang, X. T., Luo, Y. M., Peng, C. H., & Liu, C. X. (2010). Differences in the neural basis of automatic auditory and visual time perception: ERP evidence from an across-modal delayed response oddball task. Brain Research, 1325, 100–111.

Church, R. M. (1984). Properties of the internal clock. In J. Gibbon & L. Allan (Eds.), Timing and time perception. New York: New York Academy of Sciences.

Collier, G. L., & Logan, G. (2000). Modality differences in short-term memory for rhythms. Memory & Cognition, 28, 529–538.

Craig, J. C. (1973). Constant error in perception of brief temporal intervals. Perception & Psychophysics, 13, 99–104.

Creelman, C. D. (1962). Human discrimination of auditory duration. Journal of the Acoustical Society of America, 34, 582–593.

Demany, L., McKenzie, B., & Vurpillot, E. (1977). Rhythm perception in early infancy. Nature, 266, 718–719.

Exner, S. (1875). Experimentelle Untersuchung der einfachsten psychischen Processe. Archiv für die gesammte Physiologie des Menschen und der Thiere, 11, 403–432.

Fraisse, P. (1985). Psychologie der Zeit. München: Ernst Reinhardt.

Garlick, D. (2002). Understanding the nature of the general factor of intelligence: The role of individual differences in neural plasticity as an explanatory mechanism. Psychological Review, 109, 116–136.

Gibbon, J., & Church, R. M. (1984). Sources of variance in an information processing theory of timing. In H. L. Roitblat, T. G. Bever, & H. S. Terrace (Eds.), Animal cognition (pp. 465–488). Hillsdale, NJ: Erlbaum.

Goldstone, S. (1968). Reaction time to onset and termination of lights and sounds. Perceptual and Motor Skills, 27, 1023–1029.

Grondin, S. (1993). Duration discrimination of empty and filled intervals marked by auditory and visual signals. Perception & Psychophysics, 54, 383–394.

Grondin, S. (2001). From physical time to the first and second moments of psychological time. Psychological Bulletin, 127, 22–44.

Grondin, S., Meilleur-Wells, G., Ouellette, C., & Macar, F. (1998). Sensory effects on judgments of short time-intervals. Psychological Research, 61, 261–268.

Grondin, S., & Rammsayer, T. (2003). Variable foreperiods and temporal discrimination. Quarterly Journal of Experimental Psychology, Section A: Human Experimental Psychology, 56, 731–765.

Haier, R. J. (1993). Cerebral glucose metabolism and intelligence. In P. A. Vernon (Ed.), Biological approaches to the study of human intelligence (pp. 317–332). Norwood, NJ: Ablex.

Hirsh, I. J., & Fraisse, P. (1964). Simultanéité et succession de stimuli hétérogènes. Année Psychologique, 64, 1–19.

Jensen, A. R. (1982). Reaction time and psychometric g. In H. J. Eysenck (Ed.), A model for intelligence (pp. 93–132). New York: Springer.

Jensen, A. R. (2006). Clocking the mind. Mental chronometry and individual differences. Amsterdam: Elsevier.

Jokiniemi, M., Raisamo, R., Lylykangas, J., & Surakka, V. (2008). Crossmodal rhythm perception. Haptic and Audio Interaction Design, 5270, 111–119.

Kaernbach, C. (1991). Simple adaptive testing with the weighted up-down method. Perception & Psychophysics, 49, 227–229.

Kanabus, M., Szelag, E., Rojek, E., & Pöppel, E. (2002). Temporal order judgement for auditory and visual stimuli. Acta Neurobiologiae Experimentalis, 62, 263–270.

Klapproth, F. (2002). The effect of study-test modalities on the remembrance of subjective duration from long-term memory. Behavioural Processes, 59, 37–46.

Kline, R. B. (1998). Principles and practice of structural equation modeling. New York: Guilford Press.

Lapid, E., Ulrich, R., & Rammsayer, T. (2008). On estimating the difference limen in duration discrimination tasks: A comparison of the 2AFC and the reminder task. Perception & Psychophysics, 70, 291–305.

Lapid, E., Ulrich, R., & Rammsayer, T. H. (2009). Perceptual learning in auditory temporal discrimination: No evidence for a cross-modal transfer to the visual modality. Psychonomic Bulletin & Review, 16, 382–389.

Levine, M. W. (2001). Principles of neural processing. In E. B. Goldstein (Ed.), Blackwell handbook of perception (pp. 24–52). Oxford: Blackwell.

Li, L., Huang, J., Wu, X. H., Qi, J. G., & Schneider, B. A. (2009). The effects of aging and interaural delay on the detection of a break in the interaural correlation between two sounds. Ear and Hearing, 30, 273–286.

Luce, R. D., & Galanter, E. (1963). Discrimination. In R. D. Luce, R. R. Bush, & E. Galanter (Eds.), Handbook of mathematical psychology (Vol. 1). New York: Wiley.

Luck, S. J. (2005). An introduction to the event-related potential technique. Cambridge: MIT Press.

Macmillan, N. A., & Creelman, C. D. (2005). Detection theory: A user’s guide. Mahwah, NJ: Erlbaum.

McCormack, T., Brown, G. D. A., Maylor, E. A., Darby, R. J., & Green, D. (1999). Developmental changes in time estimation: Comparing childhood and old age. Developmental Psychology, 35, 1143–1155.

McCormack, T., Brown, G. D. A., Maylor, E. A., Richardson, L. B. N., & Darby, R. J. (2002). Effects of aging on absolute identification of duration. Psychology and Aging, 17, 363–378.

Merchant, H., Zarco, W., & Prado, L. (2008). Do we have a common mechanism for measuring time in the hundreds of millisecond range? Evidence from multiple-interval timing tasks. Journal of Neurophysiology, 99, 939–949.

Miller, E. M. (1994). Intelligence and brain myelination: A hypothesis. Personality and Individual Differences, 17, 803–832.

Muthén, L. K., & Muthén, B. O. (2009). Mplus user’s guide. Los Angeles: Muthén & Muthén.

Neubauer, A. C., & Fink, A. (2005). Basic information processing and the psychophysiology of intelligence. In R. J. Sternberg & J. E. Pretz (Eds.), Cognition and intelligence. Identifying the mechanisms of the mind (pp. 68–87). Cambridge: Cambridge University Press.

Ortega, L., Lopez, F., & Church, R. M. (2009). Modality and intermittency effects on time estimation. Behavioural Processes, 81, 270–273.

Penney, T. B. (2003). Modality differences in interval timing: Attention, clock speed, and memory. In W. H. Meck (Ed.), Functional and neural mechanisms of internal timing (pp. 209–228). Boca Raton, FL: CRC Press.

Penney, T. B. (2004). Electrophysiological correlates of interval timing in the stop-reaction-time task. Cognitive Brain Research, 21, 234–249.

Penney, T. B., Gibbon, J., & Meck, W. H. (2000). Differential effects of auditory and visual signals on clock speed and temporal memory. Journal of Experimental Psychology: Human Perception and Performance, 26, 1770–1787.

Penney, T. B., & Tourret, S. (2005). Les effets de la modalité sensorielle sur la perception du temps. Psychologie Française, 50, 131–143.

Pinel, P. J. (2006). Biopsychology. Boston: Allyn and Bacon.

Pöppel, E. (1978). Time perception. In R. Held, H. W. Leibowitz, & H.-L. Teuber (Eds.), Handbook of sensory physiology (Vol. 8, pp. 713–729). Heidelberg: Springer.

Rammsayer, T. H. (1989). Dopaminergic and serotoninergic influence on duration discrimination and vigilance. Pharmacopsychiatry, 22, 39–43.

Rammsayer, T. H. (1992). Die Wahrnehmung kurzer Zeitdauern. Allgemeinpsychologische und psychobiologische Ergebnisse zur Zeitdauerdiskrimination im Millisekundenbereich. Münster: Waxmann.

Rammsayer, T. H. (1994). A cognitive-neuroscience approach for elucidation of mechanisms underlying temporal information processing. International Journal of Neuroscience, 77, 61–76.

Rammsayer, T. H. (2010). Differences in duration discrimination of filled and empty auditory intervals as a function of base duration. Attention, Perception, & Psychophysics, 72, 1591–1600.

Rammsayer, T. H., & Brandler, S. (2002). On the relationship between general fluid intelligence and psychophysical indicators of temporal resolution in the brain. Journal of Research in Personality, 36, 507–530.

Rammsayer, T. H., & Brandler, S. (2007). Performance on temporal information processing as an index of general intelligence. Intelligence, 35, 123–139.

Rammsayer, T. H., & Lima, S. D. (1991). Duration discrimination of filled and empty auditory intervals: Cognitive and perceptual factors. Perception & Psychophysics, 50, 565–574.

Rammsayer, T. H., & Ulrich, R. (2001). Counting models of temporal discrimination. Psychonomic Bulletin & Review, 8, 270–277.

Rousseau, L., & Rousseau, R. (1996). Stop-reaction time and the internal clock. Perception & Psychophysics, 58, 434–448.

Schermelleh-Engel, K., Moosbrugger, H., & Müller, H. (2003). Evaluating the fit of structural equation models: Tests of significance and descriptive goodness-of-fit measures. Methods of Psychological Research Online, 8, 23–74.

Shih, L. Y. L., Kuo, W. J., Yeh, T. C., Tzeng, O. J. L., & Hsieh, J. C. (2009). Common neural mechanisms for explicit timing in the sub-second range. NeuroReport, 20, 897–901.

Stöhr, M., Dichgans, J., Buettner, U. W., & Hess, C. W. (2005). Evozierte Potentiale. Heidelberg: Springer.

ten Hoopen, G., Hartsuiker, R., Sasaki, T., Nakajima, Y., Tanaka, M., & Tsumura, T. (1995). Auditory isochrony: Time shrinking and temporal patterns. Perception, 24, 577–593.

Treisman, M. (1963). Temporal discrimination and the indifference interval: Implications for a model of the internal clock. Psychological Monographs, 77, 1–31.

Ulrich, R. (1987). Threshold models of temporal-order judgments evaluated by a ternary response task. Perception & Psychophysics, 42, 224–239.

Ulrich, R., Nitschke, J., & Rammsayer, T. (2006). Crossmodal temporal discrimination: Assessing the predictions of a general pacemaker-counter model. Perception & Psychophysics, 68, 1140–1152.

Ulrich, R., & Stapf, K. H. (1984). A double-response paradigm to study stimulus intensity effects upon the motor system in simple reaction time experiments. Perception & Psychophysics, 36, 545–558.

van Wassenhove, V. (2009). Minding time in an amodal representational space. Philosophical Transactions of the Royal Society B: Biological Sciences, 364, 1815–1830.

Walker, J. T., & Scott, K. J. (1981). Auditory-visual conflicts in the perceived duration of lights, tones, and gaps. Journal of Experimental Psychology: Human Perception and Performance, 7, 1327–1339.

Wearden, J. H., Edwards, H., Fakhri, M., & Percival, A. (1998). Why “sounds are judged longer than lights”: Application of a model of the internal clock in humans. Quarterly Journal of Experimental Psychology, Section B: Comparative and Physiological Psychology, 51, 97–120.

Woodworth, R. S., & Schlosberg, H. (1954). Experimental psychology. New York: Holt, Rinehart and Winston.


Cite this article

Stauffer, C.C., Haldemann, J., Troche, S.J. et al. Auditory and visual temporal sensitivity: evidence for a hierarchical structure of modality-specific and modality-independent levels of temporal information processing. Psychological Research 76, 20–31 (2012). https://doi.org/10.1007/s00426-011-0333-8


DOI: https://doi.org/10.1007/s00426-011-0333-8