Abstract

Neurophysiological observations suggest that attending to a particular perceptual dimension, such as location or shape, engages dimension-related action, such as reaching and prehension networks. Here we reversed the perspective and hypothesized that activating action systems may prime the processing of stimuli defined on perceptual dimensions related to these actions. Subjects prepared for a reaching or grasping action and, before carrying it out, were presented with location- or size-defined stimulus events. As predicted, performance on the stimulus event varied with action preparation: planning a reaching action facilitated detecting deviants in location sequences whereas planning a grasping action facilitated detecting deviants in size sequences. These findings support the theory of event coding, which claims that perceptual codes and action plans share a common representational medium, which presumably involves the human premotor cortex.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

In recent years, the theoretical and methodological marriage of cognitive psychology and cognitive neurosciences has led to a re-evaluation of the role of action systems in information processing: task demands and action requirements have been found to affect visual perception (Craighero, Fadiga, Rizzolatti, & Umiltà, 1999; Hamilton, Joyce, Flanagan, Frith, & Wolpert, 2005; Müsseler & Hommel, 1997; Wohlschläger, 2000), the selection of visual objects (Hommel & Schneider, 2002; Lupiáñez, Ruz, Funes, & Milliken, 2005; Rizzolatti, Riggio, & Sheliga, 1994; Tipper, Howard, & Houghton, 1999), and memory (Genzano, Di Nocera, & Ferlazzo, 2001; Hommel & Knuf, 2000; Pickering, Gathercole, Hall, & Lloyd, 2001), suggesting a more dynamic interplay between action control and other cognitive systems than the standard unidirectional stage model of human information processing suggests (Ward, 2002). However, despite the increase of empirical evidence, the nature and functional meaning of these interactions are still a matter of debate. In this article, we suggest that action can affect perception in at least two ways: at a feature level, by biasing the perception of objects and events towards some but not other properties, and at a dimensional level, by biasing attention towards some but not other perceptual dimensions—thereby facilitating the processing of the features defined on these dimensions and thus supporting the selection of objects and events based on these features.

Interestingly, almost all studies looking into the impact of action on perception have focused on the feature level, that is, on whether particular actions prime or impair the perception of objects with particular, action-related features. For instance, Müsseler and Hommel (1997) demonstrated that planning to press a left- or right-hand key impairs the perception of a masked arrow pointing into the congruent direction. Likewise, Craighero et al. (1999) found that planning a grasping action is initiated more quickly if signaled by an object that has the same shape as the object to be grasped, and Wohlschläger (2000) reported that carrying out a manual rotation biases the perception of the direction in which an observed object rotates. These observations suggest that the representations of objects and of action plans overlap to a degree that is determined by the number of features shared between a given object and action (Hommel, Müsseler, Aschersleben, & Prinz, 2001; Prinz, 1990). If so, activating a particular feature code in the process of planning an action that includes that feature primes the processing of feature-overlapping perceptual objects. However, completing and maintaining an action plan involves the integration and binding of the codes that represent the features of the intended action (Hommel, 2004). This binding process “occupies" the codes in question and makes them less available for the representation of other events, such as the planning of other feature-overlapping actions (Stoet & Hommel, 1999) or feature-overlapping visual objects (Hommel & Müsseler, 2005; Milliken & Lupiáñez, 2005; Müsseler & Hommel, 1997; Oriet, Stevanovski, & Jolicoeur, 2005). Thus, action planning can prime or impair the perception of feature-overlapping events, depending on the planning stage (for a recent overview, see Hommel, 2004).

The present study was motivated by the idea that perception or, more precisely, visual attention might be affected by action planning not only in terms of feature-based interactions but also on a more general level. In particular, we claim that planning an action biases perceptual systems towards action-relevant feature dimensions—an “intentional-weighting" process (Hommel et al., 2001) that facilitates the detection, discrimination, and selection of stimulus events defined by features coded on these dimensions. Footnote 1. In other words, we aim at distinguishing a feature priming effect, defined as the enhanced processing of stimulus features that are shared by the action that is currently planned or carried out, from a dimensional priming effect, defined as the enhanced processing of any stimulus feature on perceptual dimensions that are related to the action that is currently planned or carried out. In the present study, we focus on the perceptual dimensions size and location, which we think are related to the actions of grasping and pointing, respectively.

The point of departure for our considerations was an observation of Schubotz and von Cramon (2001, 2002, 2003) and Schubotz, Friederici, and von Cramon (2000). Schubotz and colleagues had subjects monitor streams of visual or auditory events for deviants, that is, for stimuli violating the otherwise repetitive structure of the stream, while being fMRI scanned. Even though the task was purely visual, premotor areas were strongly activated: a fronto-parietal prehension network when subjects monitored for shape deviants, areas involved in manual reaching when they monitored for location deviants, and a network associated with tapping and uttering speech when monitoring for temporal deviants. Hence, activation was highest in areas that are known to be involved in actions that would profit most from information defined on the respective stimulus-feature dimension. This might point to an important integrative role of the human premotor cortex in the anticipation of perceptual events and the control of actions related to these events. More specifically, it may integrate actions and their expected consequences into a kind of habitual pragmatic body map (Schubotz & von Cramon, 2001, 2003), which may represent a (part of a) representational system for the “common coding" of perceptual events and action plans as claimed by the theory of event coding (TEC; Hommel et al., 2001; cf., Prinz, 1990).

Given Schubotz and colleagues’ observation that attending to particular perceptual dimensions engages dimension-related action systems, one may speculate that activating these action systems also supports the processing of stimuli defined on these dimensions. That is, preparing for a grasping action may prime the processing of shape information and preparing for a reaching action may prime the processing of location information. In the present study, we tested this idea by having subjects plan a grasping or reaching action and then presented them with either size- or location-defined stimulus events while maintaining the planned action for later execution. In particular, we had them monitor a predictable sequence of visual stimuli varying in size or location for a deviant, much like in the studies of Schubotz and colleagues. According to our considerations, preparing a grasping action was expected to facilitate detecting the deviant in size sequences, whilst preparing a reaching action should facilitate detecting the deviant in location sequences.

Experiment 1

Method

Participants

Twelve students of the University of Rome “La Sapienza” (11 female) aged 20–26 years were recruited to participate in a single session of about 45 min. All participants were right handed with normal or corrected-to-normal vision, and they were naïve as to the purpose of the experiment.

Apparatus and stimuli

Participants sat at a table (120×75) in a dimly lit room, facing a 21 in. monitor (Silicon Graphics 550, 800×600 pixel, 32 bit color), with a viewing distance of 60 cm. Stimulus presentation and data acquisition were controlled by a Silicon Graphics Double processor Workstation, interfaced with a 3dLabs Oxygen GVX420 video card.

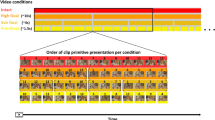

A white asterisk presented at the geometrical center of the screen served as fixation mark. The digits “1” and “2” served as visual cues and were displayed at the same location as the fixation point. They indicated the required action (grasping or reaching). A series of seven yellow circles on a black background served as stimuli for the visual discrimination task. On each trial, they were successively displayed at a rate of 600 ms without temporal gaps, on one of the two main diagonal (x/y) axes of the computer screen (at 100/525, 200/450, 300/375, 400/300, 500/225, 600/150, 700/75, and 100/75, 200/150, 300/225, 400/300, 500/375, 600/450, 700/525, pixels, respectively) starting either from the top or from the bottom of the screen. The size of the circles alternated from “small” (0.7 cm in diameter) to “large” (1.3 cm in diameter), or vice versa (Fig. 1).

Responses were given by releasing a microswitch with the right index finger. The microswitch was mounted on a 10×5.5×3 cm box placed at the center with respect to the subject’s midline, with a distance of 20 cm from the subject’s body and 40 cm from the computer screen. The grasping and reaching actions were made to a 2×2×2 cm white cube and a 5 mm in diameter white dot, respectively. The grasping action consisted in grabbing and lifting the cube with the thumb and the index finger of the right hand. The reaching action consisted in touching the dot with the right index finger-tip. Both the objects were arranged on a 30×23 cm black board placed in front of the subjects at a viewing distance of approximately 40 cm. The arrangement of the objects on the board was balanced between the subjects.

Design and Procedure

On each trial, the participants were instructed to plan an action, then to perform a visual discrimination task, and finally to perform the planned action on the objects placed in front of them. At the beginning of a trial, the fixation mark appeared; when ready, participants pressed the switch with their right index finger and kept it pressed until the response onset. After the switch had been depressed, the cue appeared for 3 s, indicating which action (grasping or reaching) was to be planned. While keeping the switch pressed, the participants were presented with the visual discrimination task consisting of the highly predictable sequences of seven pictures each (yellow circles). In each sequence, the circles were presented successively on one of the two main diagonal axes of the screen. In particular, the sequence was predictable with respect to the size and the spatial location of the stimuli. The circles were displayed at the same distance with regard to each other, and by alternating the size (small and large) of the circles. Importantly, the starting point of the sequence and the alternating small–large pattern of the visual stimuli were balanced across the trials, in order to prevent any predictable combination of the size stimulus and the spatial position on the screen.

Participants were required to attend to the sequential order of the presented visual stimuli. In 75% of the trials, the sequential order of the pictures was violated by presenting a deviant stimulus. Specifically, the size or the spatial location of the fourth, fifth, or sixth stimulus could be repeated, with consecutively presented circles having the same size (size violation) or consecutively presented circles appearing in the same spatial position (location violation). The deviant picture represented the target stimulus for the visual discrimination task.

If participants detected the target stimulus, they were instructed to release the switch and to perform the previously planned action on one of the two objects placed in front of them, according to the cue. In the remaining 25% of the trials, no violation occurred and, thus, no action had to be performed. The experimenter checked for the correctness of the response by classifying as incorrect the responses in which participants performed the wrong action.

Four (within-participants) conditions were created by combining the planned action (grasping vs. reaching) and the stimulus dimension of the deviant (location vs. size). The presentation of the stimulus dimension was blocked, and only one dimension (size or location) could be deviant on each block of trials, whereas the other dimension followed the sequence rule. This manipulation prevented participants from responding to a novel combination of the two stimulus dimensions rather than a rule violation. Note that the block-wise presentation of the deviant dimension cannot account for positive priming of the motor preparation on the processing of the action-related feature dimension. Each condition was replicated 96 times, amounting to six blocks of 64 trials (three blocks including size violations and three blocks including location violations), preceded by 20 practice trials. The cue-action mapping, the object arrangement on the board, and the order of the stimulus-dimension blocks were balanced between subjects. Mean reaction times (RTs, defined as time elapsed between the onset of the deviant and the release of the switch) computed for each experimental condition were used as the dependent variable for the data analysis.

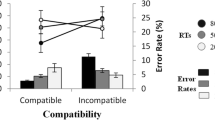

Results

Anticipations (RTs<100 ms), missing responses (RTs>1,000 ms), and wrong actions were considered as incorrect responses. As participants made few errors (less than 5%) no data analysis was performed on error rates. Mean RTs were analyzed by means of a two-way ANOVA with action (grasping vs. reaching) and stimulus dimension (size vs. location) as within-subjects factors. Significant effects were found for action, F(1,11)=10.03; P<0.01, and the two-way interaction, F(1,11)=15.01; P<0.01. A Duncan test showed that when participants prepared a grasping action they were faster in detecting size than location deviants, P<0.01, whilst preparing a reaching action tended to make detecting a location deviant faster than detecting a size deviant, P=0.09 (see Table 1).

Discussion

In line with our hypothesis, experiment 1 provides evidence for an intimate relationship between grasping and shape stimuli on the one hand and between reaching and location stimuli on the other. Clearly, combining “premotorically related" stimuli and actions produced better performance. Our preferred interpretation of this effect is that preparing an action primed the perception of related stimulus events, that is, of stimuli defined on an action-relevant feature dimension. However, note that our experimental design does not allow us to rule out an alternative interpretation in terms of stimulus–response priming. That is, the faster responses with the combinations of grasping and shape and of reaching and location may not reflect an impact of action planning on stimulus perception but, rather, be due to the faster initiation of the prepared action if signaled by a stimulus defined on a perceptual dimension compatible with the planned action. In other words, monitoring stimuli varying in size or spatial location could have biased the motor system by priming the initiation and/or execution of the grasping or reaching action, respectively. Experiment 2 was designed to control for this potential confound.

Experiment 2

Experiment 1 showed a beneficial effect of pairing premotorically related actions and stimulus dimensions. This may be due to either the priming of perceptual analysis by response preparation or to a kind of visuo-motor priming. In experiment 2, we attempted to decide between these two possibilities by disentangling the perceptual and the motor-execution part of the task. The general procedure was analogous to experiment 1 except that participants signaled the detection of the deviant by pressing a foot pedal and then waited for an auditory go signal to carry out the previously planned grasping or reaching movement. Accordingly, the manual actions were no longer signaled by size or location stimuli and, thus, could not differentially benefit from premotoric relatedness. In contrast, perceiving the size or location deviant could still be primed by having prepared and maintained a premotorically related action, so that we expected the same outcome as in experiment 1.

Method

Participants

Nineteen students of the University of Rome “La Sapienza” (14 female) aged 20–29 years participated in a single session of about 60 min. They fulfilled the same criteria as in experiment 1.

Apparatus and stimuli

Stimulus material and apparatus were as in experiment 1 except that a 1,000 Hz sinusoidal tone of 55 dB was used as go-signal for releasing the switch and executing the planned grasping or reaching action. The response to the deviant was given by pressing a foot pedal placed under the table on the right side of the subject. This device consisted of a push button switch mounted on a 18×12×3 cm box and covered by a 18×12 cm plastic board serving as pedal.

Design and procedure

The procedure was largely the same as in experiment 1. After the appearance of a fixation mark, participants depressed the switch and were cued to plan the reaching or grasping action. While keeping the switch depressed, they attended to the visual discrimination task and were instructed to press the foot pedal as soon as they detected the deviant. At the end of the sequence, a tone was presented for 600 ms, at the occurrence of which participants were to release the switch and perform the planned action irrespective of whether or not a deviant appeared in the sequence. Mean foot pedal RT (defined as time elapsed between deviant onset and the onset of the pedal press) and mean releasing RT (defined as time elapsed between tone onset and the release of the switch) were computed for the four experimental conditions. For the sake of comparability, only trials with deviants were considered for the analyses of both measures.

Results

ANOVAs were performed on the foot-pedal RTs and the manual releasing RTs, with action (grasping vs. reaching) and stimulus dimension (size vs. location) as within-subjects factors. The analysis of foot RTs showed a significant interaction between the two factors, F(1,18)=7.02; P<0.05. Duncan tests indicated that planning a reaching action made the processing of location faster than size processing, P<0.05, whereas the planning of a grasping action had the opposite effect, P<0.05 (see Table 2). The manual RTs showed a main effect of dimension, F(1,18)=6.607; P<0.05, with participants being slower in initiating actions when having monitored for a location rather than a size deviant (605 vs. 556 ms).

Anticipations (<5%) and incorrect pedal responses (error rate <5%) were infrequent and not further analyzed. However, given the relatively high occurrence of missing pedal responses (14.4%), these were analyzed as a function of action and stimulus dimension. A main effect of dimension, F(1,18)=8.99; P<0.001, indicated that monitoring for a spatial deviant produced more misses than monitoring for size (17.89 vs. 9.82%). Analyzing the rates of missing release responses (11%) failed to reveal any significant effect.

Discussion

The findings fully replicate the data pattern observed in experiment 1, that is, performance was better if the stimulus dimension in the deviant task was premotorically related to the planned and maintained manual action. Given that the set-up of experiment 2 excluded the visuo-motor priming of the response in the perceptual part of the task (i.e., the foot-pedal response), we are safe to assume that this outcome reflects the impact of action planning on dimension-specific perception. It is interesting to note that the sizes of the congruency effects were larger in experiment 1 than in experiment 2, at least numerically. One may speculate that this difference in size may reflect the extra contribution of visuo-motor priming in experiment 1, but in view of the rather large variability in the data we hesitate to draw strong conclusions from this observation without further, more direct experimental test.

General discussion

In the present study, we investigated whether planning an action affects the perceptual analysis of stimulus events. As pointed out in Introduction, we are not the first to demonstrate that action planning can affect perceptual processes (see Craighero et al., 1999; Müsseler & Hommel, 1997; Wenke, Gaschler, & Nattkemper, 2005; Wohlschläger, 2000; among others). Previous studies focused on what one may call “element-level compatibility”, using the terminology of Kornblum, Hasbroucq, and Osman (1990). That is, they looked into combinations of particular feature values of particular stimuli and responses, such as of the horizontal or vertical orientation of a grasp and the horizontal or vertical orientation of a stimulus object or of a left or right keypress and the left or right direction of an arrow. The outcomes of these studies show that the overlap of a stimulus and an action with respect to a particular feature value does impact performance, suggesting that the cognitive codes of these features overlap as well.

The present study goes beyond previous investigations by demonstrating an effect of “set-level compatibility” (Kornblum et al., 1990), in a wider sense at least. That is, we looked into combinations of particular types of actions with particular stimulus dimensions, disregarding the concrete parameters and feature values of the actions and stimuli in question. As predicted from our extension of the pragmatic body map approach of Schubotz and von Cramon (2001, 2003), we were able to show that preparing for grasping and reaching as such is sufficient to prime size and location information, respectively. This suggests that an intention to act sets up and configures visual attention in such a way that the processing of information about the most action-specific and action-relevant stimulus features is facilitated. Apparently, planning an action is accompanied by pretuning action-related feature maps, so that the access of information coded in these maps to action control is sped up.

This consideration is also consistent with a recent observation of Bekkering and Neggers (2002) and Hannus, Cornelissen, Lindemann, and Bekkering (2005), who had subjects search for visual targets among distractors. Targets could be defined in terms of orientation or color, and subjects were to either look and point at the target or look at and grasp the target. Interestingly, fewer saccades to objects with the wrong orientation were made in the grasping condition than in the reaching condition, whereas the number of saccades to an object with the wrong color was comparable in both conditions. According to the view we are proposing here, we would attribute this outcome pattern to a priming of orientation processing induced by the preparation for a grasp: primed orientation processing should facilitate discriminating a target from distractors, thereby increasing the chances of the target (and/or reducing chances of distractors) to attract saccades. However, Bekkering and colleagues explicitly rejected this possibility on the basis of two arguments (see Hannus et al., 2005).

First, they take Bekkering and Neggers’ (2002) failure to find action-induced effects with small display sizes (four elements) to demonstrate that easy tasks do not benefit from action-induced biases, which according to them argues against an account in terms of discrimination. It is difficult to judge exactly why small displays did not produce the effect in that particular study, but our present findings provide clear evidence that action-induced biases can show up in even extremely easy tasks in principle. Second, Hannus et al. (2005) found that decreasing the discriminability of colors eliminates the action-induced effect of orientation, which they take to demonstrate “that other factors besides motor-visual priming interact in the visual search processes”. However, even if this may be the case it is difficult to see why this would exclude the possibility that action preparation biases visual attention. Thus, we do not see strong evidence against the idea that preparing for a grasping or pointing action primes action-related perceptual dimensions. Moreover, we fail to see how Hannus et al.’s own account can explain our present findings. They argue that, in their experiments, the current action plan may either modify the target template “in favor of the behaviorally relevant visual feature” or “directly increase the activation of task relevant visual features”. Apparently, this explanation focuses on representations of particular features and thus aims at the feature level as defined in Introduction. This is certainly an option for the search task employed by Bekkering and colleagues, where subjects look for a known combination of two particular features, but it fails to account for biases in tasks where the particular target feature is not known in advance—such as employed in the present study.

As we mentioned above, there is growing agreement that interactions between action and perception depend on the activation of representations mediated by the lateral premotor cortex. Specifically, some classes of neurons in the premotor cortex of nonhuman primates have been shown to respond to both the performance and the mere observation of object-directed action (Murata et al., 1997; Rizzolatti & Fadiga, 1998). These neurons have been referred to as “canonical neurons” and they are considered to belong to a larger class of so-called “grasping neurons” (Rizzolatti et al., 1988). Similarly, premotor neurons in the monkey exhibit activity significantly tuned to both target location and arm use in reaching (Hoshi & Tanji, 2002). We suggest that the effects reported in the present experiments reflect a similar pattern of correspondence and representational overlap between grasping actions and object properties on the one hand and reaching actions and spatial properties on the other.

As mentioned in the Introduction, a series of fMRI studies have provided robust evidence for a representational correspondence between action and perception on the dimensional level, as proposed by the habitual pragmatic body map account (Schubotz & von Cramon, 2003). Endowed with very special neuronal properties outlined for the monkey data, and given its robust involvement in both motor and nonmotor sequential representations, the lateral premotor cortex has been suggested to subserve a more complex function than the representation of motor output patterns. More specifically, this cortical region has been claimed to house motor subroutines or action plans (Fadiga, Fogassi, Gallese, & Rizzolatti, 2000), which can be triggered either internally, by action planning, or externally, by appropriate stimulation. It is yet not clear how these action plans are represented. One account can be derived from the common coding framework (see below), which states that action plans are coded in terms of action effects, i.e., perceptual (or perceptually derived but supramodal) events. This model can be further elaborated along the lines of Byrne and Russon (1998), according to whom goal and sub-goals of an action recur in every effective action sequence, whereas the irrelevant details of precisely how each of these intermediate states is achieved will vary between occasions without affecting efficiency. As such, an action could be economically represented by one or several perceptual events. However, most actions will be constructed of several sub-goals and hence a sequence of perceptual events rather than a single perceptual event. The selection and linkage of precompiled motor subroutines stored in lateral premotor cortex is suggested to be realized by the medial premotor cortex or supplementary motor area (SMA, Elsner et al., 2002; Shima & Tanji, 2000). This area contains highly abstract sequence neurons which code the temporal order of action components independent of the movement type, i.e., engaged muscles or action goal. In that way, lateral and medial premotor areas together contribute to action representation and selection.

Based on the assumptions that (a) premotor action representations are not necessarily “motor" but may also be “sensory", “sensorimotor", or “supramodal", and (b) can be triggered either internally or externally (Fadiga et al., 2000), it could be hypothesized that sequential representations may be where premotor cortex comes into play, no matter whether they are biological or nonbiological (i.e., abstract) (Schubotz & von Cramon, 2004a). Direct experimental evidence for this view comes from a recent fMRI study, which tested the hypothesis that the premotor cortex is engaged in both action representation and in making predictions cued by nonbiological stimuli, as in the present task (Schubotz & von Cramon, 2004b). It was tested and confirmed that a common premotor region is activated by outcome-related tasks on action observation, action imagery, or abstract sequences.

Taken together, the empirical data and the functional–anatomical properties of the premotor cortex point to a bi-directional link between motor and perceptual representations, supporting the view that object perception and action planning are mediated by integrated sensorimotor structures. The existence and central role of such sensorimotor structures in human cognition has been claimed by the TEC, a comprehensive framework of the relationship between perception and action (Hommel et al., 2001). The general, ideomotorically inspired idea is that actions are represented in terms of their anticipated distal effects, just like other perceptual events, so that planning an action consists of little more than anticipating its intended perceptual effects (i.e., in activating the codes that represent those effects, which again spreads activation to the associated motoric structures, see Hommel, 1998, 2005). According to this logic, perceiving an event and planning an action are functionally equivalent to a large degree: both perceiving and action planning consists in activating perceptually derived codes that are associated with action programs. If so, it is plausible to assume that to-be-perceived events (“perceptions”) and to-be-generated events (“actions”) are coded and stored together in a common representational domain (Prinz, 1990, 1997).

With respect to perception, the processing of information has been shown to be biased towards task-relevant dimensions, such as color or shape (Bundesen, 1990; Wolfe, 1994). As a consequence, the features of an object that are defined on task-relevant (and therefore biased) dimensions will be more heavily weighted and, thus, more strongly represented (i.e., activate their representational codes more strongly) than other, task-irrelevant features (Müller, Reimann, & Krummenacher, 2003). If it is true that action plans and perceptual events are cognitively coded in the same fashion and even share a representational domain, it follows that dimensional weighting should not only apply to perceived events but to to-be-produced events (i.e., actions) as well (Hommel, 2004; Hommel et al., 2001). More concretely, making a particular dimension relevant for action should prime the corresponding dimension in perception (cf. Hommel, 2005b), so that action-related perceptual objects should be weighted accordingly. With regard to our present study, preparing for an action related to space should induce a stronger weighting of location-related perceptual features and preparing for an action related to shape should induce a stronger weighting of shape-related features. This is exactly what the present findings show, even though more systematic research is necessary to test whether our observations are generalizable to other perceptual dimensions and actions. In any case, our findings support the idea that perception and action are more intimately related than the standard processing-stage model of human cognition suggests.

Notes

One might wonder how our prediction that action planning facilitates perceptual processing fits with previous observations of action-induced blindness, that is, the finding that preparing a left- or right-hand action impairs the processing of a spatially compatible stimulus, such as a left- or right-pointing arrow (Müsseler & Hommel, 1997). The explanation is central for our present argument: We assume that planning an action binds the codes that represent this action’s features, which occupies these particular codes and makes them less available for other purposes like coding a perceptual event (the blindness effect; see Hommel & Müsseler, 2005). That is, occupying a code primes it and prevents it not from being further activated by other events, but it does prevent it (to some degree) from being bound with other features and to other representations. The new assumption we make here is that planning an action may not only activate the relevant feature codes but may also prime whole feature dimensions, such as size or shape in the case of grasping and location in the case of pointing. If true this would mean that any feature on the primed dimension would benefit from preparing a particular action. But please note that whether this benefit turns into a measurable behavioral advantage always depends on whether the particular feature is already bound to another event or not (Hommel, 2004)

References

Bekkering, H., & Neggers, S. F. W. (2002). Visual search is modulated by action intentions. Psychological Science, 13, 370–374.

Bundesen, C. (1990). A theory of visual attention. Psychological Review, 97, 523–547.

Byrne, R. W., & Russon, A. E. (1998). Learning by imitation: A hierarchical approach. Behavioral and Brain Sciences, 21, 667–684.

Craighero, L., Fadiga, L., Rizzolatti, G., & Umiltà, C. A. (1999). Action for perception: A motor-visual attentional effect. Journal of Experimental Psychology: Human Perception and Performance, 25, 1673–1692.

Elsner, B., Hommel, B., Mentschel, C., Drzezga, A., Prinz, W., Conrad, B. et al. (2002). Linking actions and their perceivable consequences in the human brain. Neuroimage, 17, 364–372.

Fadiga, L., Fogassi, L., Gallese, V., & Rizzolatti, G. (2000). Visuomotor neurons: Ambiguity of the discharge or ‘motor’ perception? International Journal of Psychophysiology, 35, 165–177.

Genzano, V. R., Di Nocera, F., & Ferlazzo, F. (2001). Upper/lower visual field asymmetry on a spatial relocation memory task. Neuroreport, 12, 1227–1230.

Hamilton, A., Joyce, D. W., Flanagan, R., Frith, C. D., & Wolpert, D. M. (2005). Kinematic cues in perceptual weight judgment and their origins in box lifting. Psychological Research, this volume.

Hannus, A., Cornelissen, F. W., Lindemann, O., & Bekkering, H. (2005). Selection-for-action in visual search. Acta Psychologica, 118, 171–191.

Hommel, B. (1998). Perceiving one’s own action—and what it leads to. In J. S. Jordan (Ed.), Systems theory and apriori aspects of perception (pp. 143–179). Amsterdam: North-Holland.

Hommel, B. (2004). Event files: Feature binding in and across perception and action. Trends in Cognitive Sciences, 8, 494–500.

Hommel, B. (2005a). How we do what we want: A neuro-cognitive perspective on human action planning. In R. J. Jorna, W. van Wezel, & A. Meystel (Eds.), Planning in intelligent systems: Aspects, motivations and methods. New York: Wiley. In press

Hommel, B. (2005b). Feature integration across perception and action: Event files affect response choice. Psychological Research, this volume.

Hommel, B., & Knuf, L. (2000). Action related determinants of spatial coding in perception and memory. In C. Freksa, W. Brauer, C. Habel, & K. F. Wender (Eds.), Spatial cognition II: Integrating abstract theories, empirical studies, formal methods, and practical applications (pp. 387–398). Berlin Heidelberg New York: Springer.

Hommel, B., & Müsseler, J. (2005). Action-feature integration blinds to feature-overlapping perceptual events: Evidence from manual and vocal actions. Quarterly Journal of Experimental Psychology (A). In press

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The theory of event coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24, 849–878.

Hommel, B., & Schneider, W. X. (2002). Visual attention and manual response selection: Distinct mechanisms operating on the same codes. Visual Cognition, 9, 392–420.

Hoshi, E., & Tanji, J. (2002). Contrasting neuronal activity in the dorsal and ventral premotor areas during preparation to reach. Journal of Neurophysiology, 87, 1123–1128.

Kornblum, S., Hasbroucq, T., & Osman, A. (1990). Dimensional overlap: Cognitive basis for stimulus–response compatibility—a model and taxonomy. Psychological Review, 97, 253–270.

Lupiáñez, J., Ruz, M., Funes, M. J., & Milliken, B. (2005). The manifestation of attentional capture: Facilitation or IOR depending on task demands. Psychological Research, this volume.

Milliken, B., & Lupiáñez, J. (2005). Repetition costs in word identification: Evaluating a stimulus–response integration account. Psychological Research, this volume.

Müller, H. J., Reimann, B., & Krummenacher, J. (2003). Visual search for singleton feature targets across dimensions: Stimulus- and expectancy-driven effects in dimensional weighting. Journal of Experimental Psychology: Human Perception and Performance, 29, 1021–1035.

Murata, A., Fadiga, L., Fogassi, L., Gallese, V., Raos, V., & Rizzolatti, G. (1997). Object representation in the ventral premotor cortex (area F5) of the monkey. Journal of Neurophysiology, 78, 2226–2230

Müsseler, J., & Hommel, B. (1997). Blindness to response-compatible stimuli. Journal of Experimental Psychology: Human Perception and Performance, 23, 861–872.

Oriet, C., Stevanovski, B., & Jolicoeur, P. (2005). Feature binding and episodic retrieval in blindness for congruent stimuli: Evidence from analyses of sequential congruency. Psychological Research, this volume.

Pickering, S. E., Gathercole, M., Hall, S. A., & Lloyd, S. A. (2001). Development of memory for pattern and path: Further evidence for the fractionation of visuo-spatial memory. Quarterly Journal of Experimental Psychology, 54A, 397–420.

Prinz, W. (1990). A common coding approach to perception and action. In O. Neumann, & W. Prinz (Eds.), Relationships between perception and action (pp. 167–201). Berlin Heidelberg New York: Springer.

Prinz, W. (1997). Perception and action planning. European Journal of Cognitive Psychology, 9, 129–154.

Rizzolatti, G., Camarda, R., Fogassi, L., Gentilucci, M., Luppino, G., & Matelli, M. (1988). Functional organization of inferior area 6 in the macaque monkey. II. Area F5 and the control of distal movements. Experimental Brain Research, 71, 491–507.

Rizzolatti, G., & Fadiga, L. (1998). Grasping objects and grasping action meanings: The dual role of monkey rostroventral premotor cortex (area F5). Novartis Foundation Symposion, 218, 81–95.

Rizzolatti, G., Riggio, L., & Sheliga, B. M. (1994). Space and selective attention. In C. A. Umiltà, & M. Moscovitch (Eds.), Attention and performance, XV. Conscious and nonconscious information processing (pp. 231–265), Cambridge: MIT Press.

Schubotz, R. I., & von Cramon, D. Y. (2001). Functional organization of the lateral premotor cortex: fMRI reveals different regions activated by anticipation of object properties, location and speed. Cognitive Brain Research, 11, 97–112.

Schubotz, R. I., & von Cramon, D. Y. (2002). Predicting perceptual events activates corresponding motor schemes in lateral premotor cortex: An fMRI study. Neuroimage, 15, 787–796.

Schubotz, R. I., & von Cramon, D. Y. (2003). Functional-anatomical concepts of human premotor cortex: Evidence from fMRI and PET studies. Neuroimage, 20, S120–S131.

Schubotz, R. I., & von Cramon, D. Y. (2004a). Brains have emulators with brains: Emulation economized. Behavioral and Brain Sciences, 27, 414–415.

Schubotz, R. I., & von Cramon, D. Y. (2004b). Sequences of abstract nonbiological stimuli share ventral premotor cortex with action observation and imagery. Journal of Neuroscience, 24, 5467–5474.

Schubotz, R. I., Friederici, A. D., & von Cramon, D. Y. (2000). Time perception and motor timing: A common cortical and subcortical basis revealed by fMRI. Neuroimage, 11, 1–12.

Shima, K., & Tanji, J. (2000). Neuronal activity in the supplementary and presupplementary motor areas for temporal organization of multiple movements. Journal of Neurophysiology, 84, 2148–2160.

Stoet, G., & Hommel, B. (1999). Action planning and the temporal binding of response codes. Journal of Experimental Psychology: Human Perception and Performance, 25, 1625–1640.

Tipper, S. P., Howard, L. A., & Houghton, G. (1999). Action-based mechanisms of attention. In G. W. Humphreys, J. Duncan, & A. Treisman (Eds.). Attention, space and action (pp. 231–247). Oxford: University Press.

Ward, R. (2002). Independence and integration of perception and action: An introduction. Visual Cognition, 9, 385–391.

Wenke, D., Gaschler, R., & Nattkemper, D. (2005). Instruction-induced feature binding. Psychological Research, this volume.

Wohlschläger, A. (2000). Visual motion priming by invisible actions. Vision Research, 40, 925–930.

Wolfe, J. M. (1994). Guided Search 2.0: A revised model of visual search. Psychonomic Bulletin and Review, 1, 202–238.

Acknowledgments

This research was supported by a grant of the Deutsche Forschungsgemeinschaft to BH (Priority Program on Executive Functions, HO 1430/8-2), and prepared during a sabbatical of SF at Leiden University.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Fagioli, S., Hommel, B. & Schubotz, R.I. Intentional control of attention: action planning primes action-related stimulus dimensions. Psychological Research 71, 22–29 (2007). https://doi.org/10.1007/s00426-005-0033-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00426-005-0033-3