Abstract

Recent studies have shown that the Madden-Julian Oscillation (MJO) is significantly modulated by the stratospheric Quasi-Biennial Oscillation (QBO). In general, the boreal winter MJO is more active during the easterly phase of the QBO (EQBO) than during the westerly phase (WQBO). Based on this finding, we examine the possible impacts of the QBO on MJO prediction skill in the operational models that participated in the WCRP/WWRP subseasonal-to-seasonal (S2S) prediction project. All models show a higher MJO prediction skill during EQBO winters than during WQBO winters. For a bivariate anomaly correlation coefficient threshold of 0.5, the MJO prediction skill during EQBO winters is enhanced by up to 10 days. This enhancement is insensitive to the initial MJO amplitude, indicating that the improved MJO prediction skill is not simply the result of a stronger MJO. Instead, the longer persistence of the MJO during EQBO winters likely raises the prediction limit and thereby the prediction skill.

1 Introduction

The Madden-Julian Oscillation (MJO, Madden and Julian 1971, 1972) is an equatorial convective system that tends to propagate eastward from the Indian Ocean to the western Pacific with a period of 30–90 days in the boreal winter (e.g., Zhang 2005). The MJO modulates surface weather and climate not only in its active region but also in remote places. For example, the MJO affects tropical cyclone activity and tracks in the western North Pacific (Keen 1982; Ferreira et al. 1996; Kim and Seo 2016). It also modulates extratropical cyclone activity in the North Pacific and North America (e.g., Grise et al. 2013). The surface air temperature and precipitation in East Asia and North America are also significantly modulated by the MJO teleconnection pattern (e.g., Jeong et al. 2005; L’Heureux and Higgins 2008; Lin and Brunet 2009; Seo et al. 2016; Seo and Lee 2017).

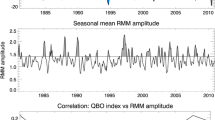

Although the MJO is characterized by intraseasonal variability, it undergoes a pronounced interannual variation in the boreal winter. The amplitude of the MJO, often defined by the real-time multivariate MJO (RMM) index (Wheeler and Hendon 2004), varies by up to a factor of three from one year to the next (e.g., Salby and Hendon 1994; Yoo and Son 2016). Son et al. (2017) showed that such a large interannual variability is closely related to the QBO rather than the El Niño-Southern Oscillation (ENSO). A series of studies have shown that the boreal winter MJO becomes more active when the QBO winds are easterly in the lower stratosphere (EQBO) than when the winds are westerly (WQBO; Liu et al. 2014; Yoo and Son 2016; Marshall et al. 2017; Nishimoto and Yoden 2017; Son et al. 2017). The MJO during EQBO winters also exhibits a slower propagation and longer period, and propagates farther into the western Pacific (Marshall et al. 2017; Nishimoto and Yoden 2017; Son et al. 2017; Hendon and Abhik 2018; Zhang and Zhang 2018). Likewise, the MJO-related teleconnections are significantly modulated by the QBO (Son et al. 2017; Wang et al. 2018).

The QBO–MJO link opens a new route for improving MJO prediction. By analyzing reforecasts of the subseasonal-to-seasonal (S2S) prediction model of the Bureau of Meteorology (BoM), Marshall et al. (2017) showed that boreal winter MJO is better predicted during EQBO winters. The MJO prediction skill during EQBO winters is enhanced by up to 8 days based on the bivariate correlation of 0.5 for RMM indices. This enhancement represents over 20% of the overall MJO prediction skill in this model.

The BoM model, however, does not resolve the stratosphere well. As such, the improved prediction skill during EQBO winters may not stem from a direct impact of the QBO on the MJO in the reforecasts. Marshall et al. (2017) indicated that the improved MJO prediction skill partly results from a stronger and more persistent MJO during EQBO winters. The different MJO structure between EQBO and WQBO winters is also suggested to contribute to the improved prediction skill, because the MJO convection is better organized during EQBO winters than during WQBO winters.

While this finding is promising, it is based on a single model. More importantly, the model used in Marshall et al. (2017) poorly resolves the stratosphere. It is questionable whether the improved MJO prediction skill during EQBO winters is robust across different models with varying configurations.

A primary goal of this study is to examine the robustness of the QBO modulation of MJO prediction skill in a range of operational forecast models. All available S2S models that are archived for the S2S prediction project (Vitart et al. 2017) are evaluated in this study. The multimodel analyses, which extend Marshall et al. (2017), provide additional insights into the QBO–MJO link in the operational models.

This paper is organized as follows. The S2S models, verification data, and methods are described in Sect. 2. After briefly evaluating the QBO prediction skill in Sect. 3, the QBO impact on MJO prediction skill is quantified by using various MJO evaluation metrics in Sect. 4. Potential causes of the different MJO prediction skills between EQBO and WQBO winters are also analyzed. A summary and discussion are given in Sect. 5.

2 Data and methods

2.1 Observations

As a reference, daily zonal wind data from the European Centre for Medium-Range Weather Forecasts (ECMWF) interim reanalysis (ERA-Interim; Dee et al. 2011) are used. These data are utilized to define the QBO phase and MJO index. The National Oceanic and Atmospheric Administration (NOAA) Outgoing Longwave Radiation (OLR) data (Liebmann and Smith 1996) are also used to describe tropical convective activity. Since NOAA OLR data are available only up to 2013 (as of February 2017), the maximum evaluation period is from 1981 to 2013. To match the 2.5° × 2.5° resolution of the NOAA OLR data, all datasets, including model output, are interpolated to a common horizontal resolution of 2.5° × 2.5°.

2.2 S2S models

The S2S datasets used in the present study are identical to those in Lim et al. (2018). Almost all reforecasts during boreal winter months are considered. As of February 2017, reforecasts are available in the S2S archive from BoM, China Meteorological Administration (CMA), Institute of Atmospheric Sciences and Climate of the National Research Council (CNR-ISAC), Météo-France/Centre National de Recherches Météorologiques (CNRM), Environment and Climate Change Canada (ECCC), ECMWF, Hydrometeorological Centre of Russia (HMCR), Japan Meteorological Agency (JMA), National Centers for Environmental Prediction (NCEP), and United Kingdom Met Office (UKMO) models (Table 1). As summarized in Table 1, each model has a different resolution. The reforecast frequency and length are also appreciably different among the models. Note that Table 1 is identical to Table 1 in Lim et al. (2018), except that MJO events initialized only in December–February (DJF) are considered. These three months are chosen because the QBO–MJO link is stronger in DJF than in the extended winter (Yoo and Son 2016). Because of the unavailability of OLR data since 2014, the reforecasts initialized in December 2013 are not examined.

All available reforecasts are used. Exceptions are the CMA and NCEP models, which are initialized every day. Due to a storage issue, the reforecasts of these two models are subsampled six times per month (initialized on the 1st, 6th, 11th, 16th, 21st, and 26th), similar to the reforecast frequency of the BoM model. Since each reforecast is integrated for at least 31 days, MJO activity in March is included in the MJO events initialized in February.
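The subsampling described above can be sketched as follows. This is only an illustration: the function name and the example date range are hypothetical, and only the six calendar days and the restriction to December–February come from the text.

```python
from datetime import date, timedelta

# Calendar days retained each month, as stated in the text.
SUBSAMPLE_DAYS = (1, 6, 11, 16, 21, 26)

def subsample_init_dates(start, end, months=(12, 1, 2)):
    """Return the daily initialization dates in [start, end] that fall on
    the retained calendar days of the selected months (DJF by default)."""
    kept = []
    current = start
    while current <= end:
        if current.month in months and current.day in SUBSAMPLE_DAYS:
            kept.append(current)
        current += timedelta(days=1)
    return kept

# Hypothetical example: one winter of daily initializations.
winter_dates = subsample_init_dates(date(2000, 12, 1), date(2001, 2, 28))
```

For one winter this leaves six initializations per month, which is comparable to the reforecast frequency of the BoM model mentioned above.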

Note that not all models in Table 1 resolve the stratosphere. Based on a model top at or above 1 hPa, only six models (i.e., CMA, CNRM, ECMWF, JMA, NCEP, and UKMO models) have a reasonable vertical resolution in the stratosphere. These six models are referred to as high-top models, while the other four models (i.e., BoM, CNR-ISAC, ECCC, and HMCR models) are referred to as low-top models (Table 2). However, even low-top models have a realistic initial condition in the stratosphere because all models are initialized with reanalysis data.

2.3 QBO index

The QBO index is defined by 50-hPa zonal-mean zonal wind anomalies over the tropics (10°S–10°N), following previous studies (e.g., Yoo and Son 2016; Marshall et al. 2017; Son et al. 2017). When the DJF QBO index is above 0.5 standard deviation (approximately 5 m s−1), the winter is defined as a WQBO winter. Similarly, an EQBO winter is defined when the index is less than −0.5 standard deviation. The selected QBO years are denoted by triangles in Fig. 1. For the analysis period from January 1981 to February 2013, a total of 9 and 15 years are defined as EQBO and WQBO winters, respectively.
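A minimal sketch of this classification is given below. It assumes one DJF-mean 50-hPa wind value per winter as input and takes the standard deviation over all supplied winters; the function name and input layout are hypothetical and are not taken from the original analysis code.

```python
import numpy as np

def classify_qbo_winters(u50_djf, threshold=0.5):
    """Label each winter as 'EQBO', 'WQBO', or 'neutral'.

    u50_djf: 1-D sequence of DJF-mean 50-hPa zonal-mean zonal wind
             (10S-10N average), one value per winter (assumed layout).
    """
    u50_djf = np.asarray(u50_djf, dtype=float)
    anomaly = u50_djf - u50_djf.mean()       # anomaly about the long-term mean
    index = anomaly / anomaly.std()          # index in standard-deviation units
    labels = np.full(index.shape, "neutral", dtype=object)
    labels[index > threshold] = "WQBO"       # westerly phase
    labels[index < -threshold] = "EQBO"      # easterly phase
    return index, labels
```

Winters whose index lies within ±0.5 standard deviation are left as neutral and do not enter either composite.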

Fig. 1 Time series of the U50 QBO index from ERA-Interim (gray shading) and one-month predictions of each model (colored lines). Blue and red triangles indicate EQBO and WQBO winters, respectively. The correlation coefficient between ERA-Interim and each model (same as the second column in Table 2) is indicated in parentheses

Each S2S model has a different number of QBO winters due to the different reforecast periods (third column in Table 1). For instance, the number of WQBO winters ranges from 5 years in the NCEP model to 15 years in the BoM model. Likewise, the number of EQBO winters ranges from 5 years in the NCEP model to 9 years in the BoM model. Due to this sampling issue, the detailed comparisons between EQBO and WQBO winters are primarily conducted using only seven models that have large samples (indicated by a superscript “a” in Table 1).

2.4 MJO index

Properties of the MJO, such as amplitude and phase, can be quantified by the RMM indices (Wheeler and Hendon 2004). The RMM indices are calculated in reforecasts and observations following previous studies (e.g., Gottschalck et al. 2010; Vitart 2017; Lim et al. 2018). Briefly, the RMM indices are derived from observed and forecasted OLR and zonal winds at 200 hPa (U200) and 850 hPa (U850), averaged over the deep tropics (15°S–15°N). The seasonal cycle is removed using the daily climatology for the observations and the lead-time dependent climatology for the reforecasts. The average over the previous 120 days is also removed to isolate intraseasonal variability, and each field is normalized by the square root of its area-mean variance. The first two empirical orthogonal functions (EOFs) are then obtained from a combined EOF analysis of the observed OLR, U200, and U850. The observed and predicted RMM indices are computed by projecting the normalized observed and reforecast fields onto these two observed EOFs.
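Two of the steps above, the removal of the previous 120-day mean and the projection onto the observed EOFs, can be sketched as follows. This is an illustrative sketch under stated assumptions: the 120-day window is taken to exclude the current day, the projected values are scaled by the standard deviation of the observed principal components (`pc_std`), and all function and argument names are hypothetical.

```python
import numpy as np

def remove_previous_mean(anom, window=120):
    """Subtract the mean of the preceding `window` days from each day.

    anom: daily anomalies with time as the first axis (seasonal cycle
          already removed). The first `window` days are returned as NaN
          because no full preceding window exists for them.
    """
    anom = np.asarray(anom, dtype=float)
    out = np.full_like(anom, np.nan)
    # csum[k] holds the sum of anom[0] .. anom[k-1]
    csum = np.concatenate([np.zeros_like(anom[:1]), np.cumsum(anom, axis=0)], axis=0)
    for t in range(window, anom.shape[0]):
        prev_mean = (csum[t] - csum[t - window]) / window   # days t-window .. t-1
        out[t] = anom[t] - prev_mean
    return out

def project_onto_eofs(combined, eofs, pc_std):
    """Project normalized combined fields onto the two observed EOFs.

    combined: (time, n_points) array of concatenated OLR, U850, U200.
    eofs:     (2, n_points) array of observed EOF patterns.
    pc_std:   length-2 standard deviations of the observed PCs (assumed scaling).
    """
    return (np.asarray(combined) @ np.asarray(eofs).T) / np.asarray(pc_std)
```

The same observed EOFs and scaling are applied to both observations and reforecasts, so that the two sets of indices are directly comparable.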

For each model, the RMM indices are averaged across all available ensemble members that are initialized on the same day. As described in Lim et al. (2018) and Table 1, the ensemble size varies substantially among the models, from 1 to 33. Since our goal is to examine the QBO-dependent MJO prediction skill, only the ensemble-mean MJO prediction skill is evaluated.

2.5 Evaluation metrics

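The MJO prediction skill is evaluated by computing the bivariate anomaly correlation coefficient (BCOR):

\[
\mathrm{BCOR}\left( \tau \right)=\frac{\sum\nolimits_{t=1}^{N} \left[ {O_1}\left( t \right){M_1}\left( {t,\tau } \right)+{O_2}\left( t \right){M_2}\left( {t,\tau } \right) \right]}{\sqrt{\sum\nolimits_{t=1}^{N} \left[ O_1^2\left( t \right)+O_2^2\left( t \right) \right]}\,\sqrt{\sum\nolimits_{t=1}^{N} \left[ M_1^2\left( {t,\tau } \right)+M_2^2\left( {t,\tau } \right) \right]}}
\]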

Here, \({O_1}\left( t \right)\) and \({O_2}\left( t \right)\) are the verification of RMM1 and RMM2 at time \(t\), and \({M_1}\left( {t,\tau } \right)\) and \({M_2}\left( {t,\tau } \right)\) are the respective ensemble-mean reforecasts for time \(t\) for a lead time of \(\tau\) days. \(N\) is the number of reforecasts. Following previous studies (e.g., Lin et al. 2008; Rashid et al. 2011; Lim et al. 2018), the MJO prediction is conventionally judged to be skillful when BCOR ≥ 0.5. The values of 0.6, 0.7, and 0.8 are also used for the sensitivity tests. In all analyses, only organized MJO events with initial amplitudes larger than 1.0 are considered.
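The BCOR computation and the associated skill measure can be sketched as below. The array layout (reforecasts along the first axis, lead days along the second) and the function names are assumptions made for illustration. The skill is taken here as the number of consecutive lead days, starting from day 1, on which BCOR stays at or above the threshold.

```python
import numpy as np

def bcor(o1, o2, m1, m2):
    """Bivariate anomaly correlation for each lead time.

    All inputs have shape (N, n_lead); entry [i, k] of the observations
    is the verification valid at lead k of reforecast i.
    """
    o1, o2, m1, m2 = (np.asarray(x, dtype=float) for x in (o1, o2, m1, m2))
    num = np.sum(o1 * m1 + o2 * m2, axis=0)
    den = np.sqrt(np.sum(o1**2 + o2**2, axis=0)) * np.sqrt(np.sum(m1**2 + m2**2, axis=0))
    return num / den

def skill_days(bcor_by_lead, threshold=0.5):
    """Number of consecutive lead days, from day 1, with BCOR >= threshold."""
    below = np.flatnonzero(np.asarray(bcor_by_lead) < threshold)
    return len(bcor_by_lead) if below.size == 0 else int(below[0])
```

With this definition, a BCOR curve of 0.9, 0.7, 0.4 on lead days 1 to 3 gives a skill of 2 days, even if BCOR recovers above the threshold at a later lead.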

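To understand the relative importance of MJO amplitude and phase errors, the mean-squared amplitude errors (\(\overline {{{\text{A}}{{\text{E}}^2}}}\)) and mean-squared phase errors (\(\overline {{{\text{P}}{{\text{E}}^2}}}\)), which are closely related to BCOR skills (Lim et al. 2018), are also computed. \(\overline {{{\text{A}}{{\text{E}}^2}}}\) and \(\overline {{{\text{P}}{{\text{E}}^2}}}\) are defined below:

\[
\overline {{{\text{A}}{{\text{E}}^2}}} \left( \tau \right)=\frac{1}{N}\sum\limits_{t=1}^{N} {\left[ {{A_M}\left( {t,\tau } \right) - {A_O}\left( t \right)} \right]^2},\qquad \overline {{{\text{P}}{{\text{E}}^2}}} \left( \tau \right)=\frac{1}{N}\sum\limits_{t=1}^{N} {\left[ {{\phi _M}\left( {t,\tau } \right) - {\phi _O}\left( t \right)} \right]^2}
\]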

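Here, \({A_O}\), \({A_M}\), \({\phi _O}\), and \({\phi _M}\) are the observed and forecasted MJO amplitudes and phases in the RMM space and are defined by:

\[
{A_O}\left( t \right)=\sqrt {O_1^2\left( t \right)+O_2^2\left( t \right)} ,\qquad {A_M}\left( {t,\tau } \right)=\sqrt {M_1^2\left( {t,\tau } \right)+M_2^2\left( {t,\tau } \right)}
\]

\[
{\phi _O}\left( t \right)={\tan ^{ - 1}}\left[ {\frac{{{O_2}\left( t \right)}}{{{O_1}\left( t \right)}}} \right],\qquad {\phi _M}\left( {t,\tau } \right)={\tan ^{ - 1}}\left[ {\frac{{{M_2}\left( {t,\tau } \right)}}{{{M_1}\left( {t,\tau } \right)}}} \right]
\]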
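The amplitude and phase errors can be evaluated along the following lines. This is a sketch under assumptions: the phase difference is computed from the cross and dot products of the observed and forecast RMM vectors so that it is wrapped into the range from −π to π, which may differ in detail from the original implementation, and the function names and the (N, n_lead) array layout are hypothetical.

```python
import numpy as np

def amplitude_and_phase(r1, r2):
    """MJO amplitude and phase angle (radians) in RMM space."""
    return np.hypot(r1, r2), np.arctan2(r2, r1)

def mean_squared_errors(o1, o2, m1, m2):
    """Mean-squared amplitude and phase errors for each lead time.

    Inputs have shape (N, n_lead). The phase error is the forecast phase
    minus the observed phase, wrapped to avoid jumps of 2*pi.
    """
    o1, o2, m1, m2 = (np.asarray(x, dtype=float) for x in (o1, o2, m1, m2))
    a_obs, _ = amplitude_and_phase(o1, o2)
    a_mod, _ = amplitude_and_phase(m1, m2)
    # arctan2(cross, dot) equals the wrapped angle from observation to forecast
    dphi = np.arctan2(o1 * m2 - o2 * m1, o1 * m1 + o2 * m2)
    ae2 = np.mean((a_mod - a_obs) ** 2, axis=0)
    pe2 = np.mean(dphi ** 2, axis=0)
    return ae2, pe2
```

Using the wrapped difference means that a forecast at 350° and an observation at 10° are treated as 20° apart rather than 340°.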

3 QBO prediction skill

The time series of the daily QBO indices from ERA-Interim and those from the reforecasts are illustrated in Fig. 1. Here, to smooth the time series, a 30-day average is applied. Long-term climatology is then removed. For instance, the observed or predicted QBO index on 1 January 1981 represents 50-hPa zonal-mean zonal wind anomalies averaged over 30 days from 1 to 30 January 1981.
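The forward 30-day average used for the QBO index can be sketched as follows, assuming a gap-free daily series; the function name is hypothetical, and the subsequent removal of the long-term climatology is not shown.

```python
import numpy as np

def forward_mean(daily, window=30):
    """Average of each day and the following window-1 days.

    Element i of the result is the mean of daily[i : i + window], so the
    value assigned to 1 January covers 1 to 30 January. The result is
    shorter than the input by window-1 days.
    """
    daily = np.asarray(daily, dtype=float)
    kernel = np.ones(window) / window
    return np.convolve(daily, kernel, mode="valid")
```

Because the window looks forward in time, the smoothed index on a given initialization date summarizes the first 30 days of the corresponding forecast.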

All S2S models show a realistic alternation of 50-hPa zonal wind anomalies between easterlies and westerlies (Fig. 1). With respect to ERA-Interim, the correlation coefficient (COR) is high and the root-mean-squared error (RMSE) is reasonably small (Table 2). Compared to the high-top models, the low-top models generally underestimate the QBO amplitude (Table 2). Among them, the BoM model exhibits the largest underestimation of the QBO amplitude in terms of the absolute value of the QBO index (Table 2). Its low vertical resolution (i.e., 17 levels with only 4 levels above 100 hPa) and low model top (i.e., 10 hPa) likely cause a rapid and significant reduction in stratospheric wind anomalies during the forecast (Table 1; Marshall et al. 2017). The HMCR model has the second lowest vertical resolution (28 levels with 7 levels above 100 hPa), followed by the CMA and ECCC models. The QBO amplitude of the HMCR model is somewhat larger than that of the CMA and ECCC models (Fig. 1), but its variation is less well correlated with the observations (Table 2). Even so, COR is still greater than 0.90. This good performance simply results from the fact that all models are initialized with reanalysis data.

The S2S models, except the BoM and HMCR models, can be largely divided into three groups according to QBO amplitude. The three European models (CNRM, ECMWF and UKMO models) show the largest QBO amplitude and closest agreement to ERA-Interim (RMSE < 2 m s−1 in Table 2), while the JMA and NCEP models show a moderate QBO amplitude (2 m s−1 < RMSE < 3 m s−1). The remaining three models (CMA, CNR-ISAC and ECCC models) show a relatively weak QBO amplitude (RMSE > 4 m s−1). The difference between the first two groups may not be physically meaningful because they used different initial conditions. The initial condition of the first group is ERA-Interim but that of the second group is either Japanese 55-year Reanalysis (JRA-55) or Climate Forecast System Reanalysis. The CMA model in the third group used the NCEP-NCAR Reanalysis as an initial condition.

Although the CNR-ISAC and ECCC models use ERA-Interim as an initial condition, their QBO amplitudes are weaker than those in the other models. The underestimation of the QBO amplitude seems to be related to the relatively coarse vertical resolution of the models (Table 1). It is known that a fine vertical resolution (less than 1 km) is necessary to capture gravity wave breaking and the associated momentum deposition in the stratosphere that drive the QBO (Kim et al. 2013; Schmidt et al. 2013). Geller et al. (2016) also documented that sufficient vertical resolution is required for the downward propagation of the QBO because it affects the simulated wave-mean flow interaction.

The different initial conditions (seven models with ERA-Interim but three models with other reanalysis datasets) may introduce artificial intermodel differences when verifying these models against ERA-Interim. However, this does not affect the composite analyses. For instance, slightly different QBO amplitudes do not change the number of EQBO and WQBO winters in each model. Even for the MJO, its prediction skill evaluated against JRA-55 is comparable to that against ERA-Interim (Vitart 2017).

4 MJO prediction skill with QBO

The general prediction skill for the MJO using 10 S2S models has been previously evaluated for the boreal winter in Vitart (2017) and Lim et al. (2018). Figure 2a summarizes the BCOR skill of each model. This figure is almost identical to Fig. 1a in Lim et al. (2018) but only for DJF. The S2S models exhibit a significant intermodel spread in MJO prediction skill, ranging from 13 to 35 days (see the dotted line). Here, the MJO prediction skill is evaluated with BCOR = 0.5 unless otherwise specified. This large intermodel spread has partly been explained by model mean biases (Gonzalez and Jiang 2017; Lim et al. 2018). Lim et al. (2018) showed that the models with smaller biases in the horizontal moisture gradient and cloud-longwave radiation feedback over the Maritime Continent produce a higher MJO prediction skill.

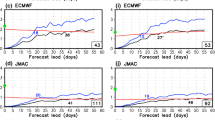

Figure 2b, c present BCORs for EQBO and WQBO winters, respectively. The decrease in BCORs over the first 2 weeks of the forecast is more abrupt during WQBO winters than during EQBO winters. This result is particularly true for the CMA, CNR-ISAC, CNRM, ECCC, and NCEP models. Overall MJO prediction skills range from 17 to 36 days during EQBO winters, but only from 10 to 28 days during WQBO winters. Here we note that the results for the BoM model are very similar, but not identical, to those presented in Marshall et al. (2017). The slight difference is likely caused by (1) inclusion of weak MJO events in Marshall et al. (2017), (2) different reference data for verification, (3) different analysis periods, and (4) different definitions of QBO phase.

This MJO prediction skill is concisely summarized in Fig. 3. On average, the MJO prediction skill is 21.2 ± 7.2 days. This skill increases to 23.6 ± 6.4 days during EQBO winters but decreases to 17.9 ± 6.2 days during WQBO winters. The EQBO–WQBO difference is 6.0 ± 3.2 days on average. A similar difference is also found when a BCOR threshold of 0.6 (i.e., 5.2 ± 2.4 days; see medium shading in Fig. 3), 0.7 (i.e., 4.3 ± 2.1 days; see medium-dark shading), or 0.8 (i.e., 2.4 ± 1.6 days; see dark shading) is used. To test the robustness, the same analyses are repeated using the real-time OLR-based MJO indices (Kiladis et al. 2014). Essentially the same results are obtained (not shown). All models show a higher MJO prediction skill during EQBO winters than during WQBO winters.

Fig. 3 BCOR skills during ALL (black), EQBO (blue) and WQBO winters (red). The number of reforecasts used in each category is denoted in white at the bottom of each bar. Light, medium, medium-dark, and dark bars denote the prediction skills based on a BCOR of 0.5, 0.6, 0.7 and 0.8, respectively. The yellow double (single) asterisks indicate that 95% (90%) confidence intervals of BCOR skill during EQBO winters are well separated from those during WQBO winters. A bootstrap method is used to determine the confidence interval

These results suggest that the S2S models have a higher MJO prediction skill during EQBO winters than during WQBO winters regardless of the choice of BCOR thresholds and MJO indices. The EQBO–WQBO MJO skill difference, however, varies considerably from model to model. The CNRM model, for instance, shows a 10-day difference, whereas the NCEP and UKMO models show only a 1-day difference. To evaluate the significance of these skill differences, a bootstrap significance test is conducted. Specifically, the confidence intervals of MJO prediction skills are computed from 10,000 bootstrap samples for EQBO and WQBO winters (e.g., Vitart 2017). When the two confidence intervals do not overlap, the skill difference is determined to be statistically significant (Lin and Brunet 2011; Vitart 2017). Six models (i.e., BoM, CMA, CNR-ISAC, ECMWF, JMA, and NCEP models) show statistically significant EQBO–WQBO skill differences at the 90% confidence level for varying BCOR thresholds. However, only three models show significant differences at the 95% confidence level, presumably due to small sample sizes.
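The bootstrap test described above can be sketched as follows. This is a minimal illustration, not the original implementation: it assumes that individual reforecasts are resampled with replacement, that a percentile interval is used, and that the skill is the number of consecutive lead days with BCOR at or above the threshold. All function names are hypothetical.

```python
import numpy as np

def bcor(o1, o2, m1, m2):
    """Bivariate correlation per lead time; inputs have shape (N, n_lead)."""
    num = np.sum(o1 * m1 + o2 * m2, axis=0)
    den = np.sqrt(np.sum(o1**2 + o2**2, axis=0) * np.sum(m1**2 + m2**2, axis=0))
    return num / den

def skill_days(bcor_by_lead, threshold=0.5):
    """Number of consecutive lead days, from day 1, with BCOR >= threshold."""
    below = np.flatnonzero(np.asarray(bcor_by_lead) < threshold)
    return len(bcor_by_lead) if below.size == 0 else int(below[0])

def bootstrap_skill_ci(o1, o2, m1, m2, n_boot=10000, level=0.95, seed=0):
    """Percentile confidence interval of the skill from resampled reforecasts."""
    rng = np.random.default_rng(seed)
    n = o1.shape[0]
    skills = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)      # resample reforecasts with replacement
        skills[b] = skill_days(bcor(o1[idx], o2[idx], m1[idx], m2[idx]))
    alpha = (1.0 - level) / 2.0
    low, high = np.quantile(skills, [alpha, 1.0 - alpha])
    return float(low), float(high)

def intervals_separated(ci_a, ci_b):
    """True when two (low, high) intervals do not overlap."""
    return ci_a[0] > ci_b[1] or ci_b[0] > ci_a[1]
```

In use, `bootstrap_skill_ci` would be called once for the EQBO reforecasts and once for the WQBO reforecasts, and the skill difference would be regarded as significant when `intervals_separated` returns `True` for the two intervals.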

This result, however, is still physically meaningful. When the same analyses are repeated with respect to the two ENSO phases, no systematic differences are obtained (not shown). Five models show an enhanced MJO prediction skill during El Niño winters, but the other five show the opposite result. More importantly, none of the 10 S2S models exhibit statistically significant MJO prediction skill differences between El Niño and La Niña winters, even at the 90% confidence level. This finding indicates that the QBO–MJO prediction skill relationship, shown in Figs. 2 and 3, is unlikely to occur by chance.

Figures 2 and 3 also reveal that a higher QBO prediction skill does not necessarily translate to a larger EQBO–WQBO MJO skill difference. For example, the ECMWF model, which produces one of the best depictions of the QBO and has the best MJO prediction skill, shows an 8-day difference in MJO prediction skill. On the other hand, the BoM model, which produces the lowest QBO prediction skill of all the models, shows a 7-day difference in MJO prediction skill. The same is also true for the CMA model. This result suggests that the QBO-related MJO prediction skill change may not be strongly sensitive to the model physics and dynamics in the stratosphere. Marshall et al. (2017) argued that the behavior of the MJO itself is more important than the mean state in the stratosphere.

To better understand the prediction errors during the two QBO phases, the mean-squared amplitude errors (\(\overline {{{\text{A}}{{\text{E}}^2}}}\)) and the mean-squared phase errors (\(\overline {{{\text{P}}{{\text{E}}^2}}}\)) are further examined. Lim et al. (2018) showed that both amplitude and phase errors are highly correlated with BCOR skills. Figure 4 presents \(\overline {{{\text{A}}{{\text{E}}^2}}}\) and \(\overline {{{\text{P}}{{\text{E}}^2}}}\) of all models and multimodel mean values in the week two forecasts, when the EQBO–WQBO MJO skill difference rapidly increases (Fig. 2). Here, the week two forecast is defined by averaging the value over forecast days 8–14, following Lim et al. (2018). Note that \(\overline {{{\text{A}}{{\text{E}}^2}}}\) is normalized by the observed MJO amplitude because the amplitude is larger during EQBO winters.

Both \(\overline {{{\text{A}}{{\text{E}}^2}}}\) and \(\overline {{{\text{P}}{{\text{E}}^2}}}\) are smaller during EQBO winters. Except for the ECMWF and HMCR models, \(\overline {{{\text{A}}{{\text{E}}^2}}}\) range from 0.10 to 0.23 in EQBO winters but from 0.17 to 0.30 in WQBO winters. Likewise, \(\overline {{{\text{P}}{{\text{E}}^2}}}\) range from \(3\pi /16\) to \(4\pi /16\) in EQBO winters, whereas they range from \(4\pi /16\) to \(6\pi /16\) in WQBO winters. This result indicates that both MJO amplitude and phase errors contribute to the MJO prediction skill differences between EQBO and WQBO winters.

Next, we examine the relative roles of circulation and convection anomalies in MJO prediction errors (Fig. 5). Specifically, the pattern correlations of OLR, U850 and U200 anomalies are computed over the Indo-Pacific warm pool region (60°E–180°E, 15°S–15°N) at the 2-week forecast and then averaged over all reforecasts. To focus on the intraseasonal variability, the previous 120-day averaged observation is removed from each variable before computing the pattern correlation. We found that the OLR correlations are typically smaller than the circulation correlations. The OLR pattern correlations range from 0.20 to 0.40, but the U200 and U850 pattern correlations range from 0.30 to 0.60.
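A pattern correlation of this kind can be computed as in the sketch below. It assumes a centered correlation (the domain mean is removed from both fields) with optional grid-point weights; whether the original analysis used centered or uncentered correlations, or area weighting, is not stated in the text, and the function name is hypothetical.

```python
import numpy as np

def pattern_correlation(forecast, observed, weights=None):
    """Centered spatial correlation between two fields on the same grid.

    forecast, observed: arrays of identical shape, e.g. (lat, lon) anomalies
                        over 60E-180E, 15S-15N.
    weights:            optional array of the same shape (e.g. cos(latitude));
                        equal weights are used when omitted.
    """
    a = np.asarray(forecast, dtype=float).ravel()
    b = np.asarray(observed, dtype=float).ravel()
    w = np.ones_like(a) if weights is None else np.asarray(weights, dtype=float).ravel()
    w = w / w.sum()
    da = a - np.sum(w * a)                   # remove weighted domain mean
    db = b - np.sum(w * b)
    return float(np.sum(w * da * db) / np.sqrt(np.sum(w * da**2) * np.sum(w * db**2)))
```

The value for each reforecast would then be averaged over all reforecasts in a given category, as described above.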

However, there is a noticeable difference between the OLR and U850/U200 pattern correlations. While OLR pattern correlations do not differ much between EQBO and WQBO winters (red and blue circles in Fig. 5a), U850/U200 pattern correlations are reasonably well separated (Fig. 5b, c). This result indicates that an enhanced MJO prediction skill during EQBO winters is more closely related to a better prediction of zonal circulation than convection. It is known that MJO convection rapidly weakens within 10 days of model integration (e.g., Kim et al. 2014; Xiang et al. 2015; Kim 2017; see also Sect. 4.3). Although convection is weak, the associated circulations can be maintained for a while, resulting in a high MJO prediction skill. This behavior is reflected in the RMM index which is more weighted to zonal circulation than to convection (Straub 2013; Kiladis et al. 2014; Kim et al. 2014; Kim 2017).

4.1 Sensitivity to the initial MJO amplitude

One of the key factors that may determine an enhanced MJO prediction skill is MJO amplitude. The MJO is typically stronger than normal during EQBO winters (Yoo and Son 2016; Nishimoto and Yoden 2017; Son et al. 2017), and a strong and well-organized MJO event can be predicted better than a weak MJO event (e.g., Rashid et al. 2011; Kim et al. 2014; Lim et al. 2018). Figure 6 shows that MJO events with amplitudes larger than 1.5 are common during EQBO winters but not during WQBO winters. In terms of frequency, the most frequently occurring MJO events (13%) have an amplitude of 1.9 during EQBO winters. In contrast, during WQBO winters, the most frequently occurring MJO events (15%) have an amplitude of 1.1.

Fig. 6 Probability distribution function of initial MJO amplitude during ALL (black), EQBO (blue), and WQBO winters (red). The value shown is the ratio of the number of events in each bin (at bin intervals of 0.2) to the total number of events in each category. Seven individual models that have a sufficient number of reforecasts (Table 1) are denoted with light colored lines, and their multimodel mean values are denoted by dark colored lines. The bins in which EQBO–WQBO differences are statistically significant at the 95% confidence level are marked with blue and red asterisks. A Student’s t test is used for the significance test
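The binned frequencies shown in this figure can be obtained as in the following sketch. The bin width of 0.2 and the normalization by the total number of events follow the caption; the lower bound of 1.0 reflects the amplitude threshold for organized events, while the upper bound of 4.0 and the function name are assumptions made for illustration.

```python
import numpy as np

def amplitude_pdf(amplitudes, bin_width=0.2, a_min=1.0, a_max=4.0):
    """Fraction of events in each initial-amplitude bin.

    Events outside [a_min, a_max] are not counted in any bin but still
    contribute to the total used for normalization.
    """
    amplitudes = np.asarray(amplitudes, dtype=float)
    n_bins = int(round((a_max - a_min) / bin_width))
    edges = np.linspace(a_min, a_max, n_bins + 1)
    counts, _ = np.histogram(amplitudes, bins=edges)
    return edges, counts / amplitudes.size
```

Applying the function separately to the EQBO and WQBO events of each model gives the two curves that are compared bin by bin.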

Marshall et al. (2017) tested the above conjecture using the BoM model and found that the QBO–MJO prediction skill relationship is not simply determined by the initial MJO amplitude. They showed that the MJO prediction skill during EQBO winters is higher than that during WQBO winters even when MJO events with a comparable initial amplitude are considered. Their analyses are extended in Fig. 7 for seven S2S models that have more than 50 MJO events in each QBO phase (indicated by a superscript “a” in Table 1). Regardless of the initial MJO amplitude, most models show a higher MJO prediction skill in EQBO winters than in WQBO winters. The only exception is strong MJO events in the CNR-ISAC model (i.e., 1.9–2.5 and 2.0–2.6 bins of initial amplitude). This result clearly indicates that a higher MJO prediction skill is not simply due to a stronger initial MJO amplitude.

Fig. 7 Differences in the MJO prediction skills for BCOR = 0.5 between EQBO and WQBO winters for each MJO amplitude (bin width is 0.6). As in Fig. 6, only seven models that have a sufficient number of reforecasts are considered here

4.2 Sensitivity to the initial MJO phase

The sensitivity of the MJO prediction skill to the initial MJO phase is also tested and shown in Fig. 8. The positive EQBO–WQBO MJO skill differences appear in most phases and in most models. All seven models exhibit a higher MJO prediction skill during EQBO winters than during WQBO winters when initialized in MJO phases 4–5 and 6–7. The enhanced skills for phases 4–5 and 6–7 are relatively large in the high-top models (i.e., CMA, ECMWF, and JMA models) compared to the low-top models (e.g., CNR-ISAC, ECCC, and HMCR models).

Fig. 8 Same as Fig. 7 but for each MJO phase

A systematic skill difference, however, does not appear during MJO development and decaying phases (i.e., MJO phases 2–3 and 8–1 in Fig. 8). For example, the BoM and ECMWF models, which are the two best models in terms of the MJO prediction skill, show either no difference or a deficit in prediction skill during EQBO winters compared to WQBO winters when initialized in MJO phase 8–1. A similar result is found for the BoM, CNR-ISAC and HMCR models for MJO phase 2–3. This result may suggest that the QBO–MJO link is better captured when the model is initialized with well-organized MJO circulations. Note that a less systematic QBO–MJO prediction skill relationship in MJO phases 8–1 and 2–3 is not related to MJO amplitude. The initial MJO amplitudes in these MJO phases are robustly stronger during EQBO winters than during WQBO winters (not shown).

4.3 Limiting factors of MJO prediction skill

What is the cause of different MJO prediction skills between the two QBO phases? We speculate that the difference may partly result from the varying persistence of MJO. If the observed MJO is maintained for only 2 weeks, the theoretical limit of the MJO prediction skill would be just 2 weeks. After 2 weeks, unorganized or random perturbations in the observation, which are not necessarily associated with MJO, would have a small correlation with the predicted MJO anomalies. The fact that MJO is less organized and less persistent during WQBO winters (Son et al. 2017; Nishimoto and Yoden 2017; Hendon and Abhik 2018; Zhang and Zhang 2018) then implies that the theoretical limit of MJO prediction is lower in WQBO winters than in EQBO winters.

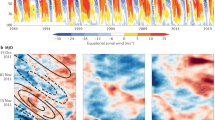

Figure 9 presents U850 and OLR anomalies for MJO phase 4–5 in the observations (contour) and at forecast day 1 from the ECMWF model (shading). The model anomalies that are statistically significant at the 95% confidence level, based on a Student’s t test, are dotted. As in Fig. 5, the previous 120-day averaged observation is subtracted from the anomalies to obtain the MJO-related subseasonal circulation patterns. MJO convection, with negative OLR anomalies over the Maritime Continent and positive OLR anomalies over the central Pacific, is well organized during EQBO winters (Fig. 9c). Consistent with this finding, lower-level westerlies over the Indian Ocean and easterlies over the Pacific Ocean are well defined (Fig. 9a). A similar circulation pattern appears during WQBO winters (Fig. 9b). However, lower-level westerlies over the Indian Ocean and the easterlies over the central Pacific exhibit a large asymmetry. The resulting low-level convergence is weak and spatially broad.

Fig. 9 (Top) U850 and (bottom) OLR composite anomalies for MJO phase 4–5 during (left) EQBO and (right) WQBO winters at forecast day 1 from the ECMWF model. The anomalies from reforecasts are shaded, and those from the observations are contoured. Model anomalies, which are statistically significant at the 95% confidence level, are dotted in gray. A Student’s t test is used for the significance test. The contour intervals of U850 and OLR anomalies are 1 m s−1 and 6 W m−2, respectively. The sample size is denoted in the top-left corner

Figure 10a, b present the longitude-time evolution of OLR and U850 anomalies beginning from MJO phase 4–5. All variables are averaged over 15°S–5°N and a 5-day moving average is applied. It is evident that initial circulation and convection anomalies are strong and well organized during EQBO winters (Fig. 10a; see also Fig. 9). More importantly, the MJO persists for a long duration. In particular, statistically significant U850 anomalies are observed for up to 4 weeks, propagating all the way to the date line (see dotted values in Fig. 10a). However, during WQBO winters, significant U850 anomalies are observed for only 2 weeks (Fig. 10b). After 2 weeks, no organized convection or circulation anomalies are observed. This result implies that the theoretical limit of MJO prediction skill would be approximately 4 weeks in EQBO winters but only approximately 2 weeks in WQBO winters.

Fig. 10 Longitude-time evolution of (top) NOAA OLR and ERA-Interim U850 anomalies and (bottom) ECMWF OLR and U850 anomalies averaged over 15°S–5°N for MJO phase 4–5 during (left) EQBO and (right) WQBO winters. The shading interval of the OLR anomalies is 3 W m−2, and the contour interval of the U850 anomalies is 0.5 m s−1. U850 anomalies, which are statistically significant at the 95% confidence level, are dotted in gray. A Student’s t test is used for the significance test. The sample size is denoted in the top-left corner, and the MJO prediction skill for BCOR = 0.5 is indicated in parentheses
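The longitude-time sections used here involve a latitude average over 15°S–5°N followed by a 5-day moving average. A minimal sketch is given below; it assumes an unweighted latitude mean and a centered moving window that drops the incomplete end points, neither of which is specified in the text, and the function name and array layout are hypothetical.

```python
import numpy as np

def hovmoller(field, lats, lat_south=-15.0, lat_north=5.0, window=5):
    """Latitude-band mean followed by a moving average in time.

    field: array of shape (time, lat, lon) holding daily anomalies.
    lats:  1-D array of latitudes (degrees north) matching the lat axis.
    Returns an array of shape (time - window + 1, lon).
    """
    field = np.asarray(field, dtype=float)
    lats = np.asarray(lats, dtype=float)
    in_band = (lats >= lat_south) & (lats <= lat_north)
    band_mean = field[:, in_band, :].mean(axis=1)            # (time, lon)
    kernel = np.ones(window) / window
    # moving average along the time axis, keeping only full windows
    return np.apply_along_axis(
        lambda series: np.convolve(series, kernel, mode="valid"), 0, band_mean
    )
```

The composite for each QBO phase would then be the mean of these sections over all reforecasts initialized in MJO phase 4–5.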

Figure 10c, d are the same as Fig. 10a, b but for the ECMWF forecast. During EQBO winters, the model predicts both U850 (contour) and OLR anomalies (shading) remarkably well (compare Fig. 10a, c), although the overall amplitude and eastward propagation speed are somewhat underestimated (Fig. 4). The model, however, exaggerates MJO propagation during WQBO winters (Fig. 10d), failing to reproduce the breakdown of MJO within 2 weeks. Instead, the predicted MJO, although weak, continues to propagate eastward, as observed in EQBO winters. This result indicates that the relatively low MJO prediction skill in WQBO winters is caused by an early breakdown of MJO that is not well predicted by the model.

The MJO evolutions are further examined for the BoM, CMA, and JMA models (Fig. 11). These models show large differences in MJO prediction skill for MJO phase 4–5 (Fig. 8). In all models, U850 anomalies are well maintained for approximately 30 days during EQBO winters (Fig. 11a, c, e). In the BoM model, which is the second-best model in terms of BCOR skill, not only U850 but also OLR anomalies are well captured for up to 4 weeks.

Same as Fig. 10c, d but for the (top) BoM, (middle) CMA, and (bottom) JMA models

These three models, however, predict somewhat different MJOs during WQBO winters. Unlike the ECMWF model, the BoM model shows a spatiotemporal structure similar to the observations, with weakened eastward-propagating lower-level wind and convection anomalies (Fig. 11b). However, the model still exaggerates MJO propagation. Although the observed MJO becomes disorganized within 2 weeks (Fig. 10b), the predicted MJO is maintained for up to 3 weeks. The CMA and JMA models also successfully capture the MJO anomalies in the first week. These anomalies are rapidly disorganized in the CMA model in the second week (Fig. 11d). In contrast, those in the JMA model are maintained for almost 4 weeks over the Indian Ocean without eastward propagation. These diverse MJO predictions, which are particularly evident when the MJO becomes disorganized in the observations, are responsible for the relatively low MJO prediction skill during WQBO winters.

5 Summary and discussion

This study examines the impact of the QBO on wintertime MJO prediction skill in the S2S models. It is found that all models show a higher MJO prediction skill during EQBO winters than during WQBO winters by 1–10 days, confirming the result of Marshall et al. (2017). Although the enhanced MJO prediction skill might be simply caused by stronger MJOs during EQBO winters than during WQBO winters, the overall result does not change when only MJO events with similar initial amplitudes are examined. Instead, the difference in prediction skill is partly associated with the QBO-dependent persistence of the MJO (Marshall et al. 2017). The MJOs in WQBO winters are often rapidly disorganized within a few weeks. This breakdown of MJO is not well captured by the S2S models, reducing the theoretical limit of MJO prediction. However, it is unclear why the observed MJO is less persistent during WQBO winters.
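For readers who want to reproduce a skill estimate of this kind, the bivariate anomaly correlation coefficient (BCOR) and the corresponding lead-time threshold can be sketched as below. The BCOR expression follows the commonly used definition for the two RMM indices (e.g., Lin et al. 2008; Rashid et al. 2011). The array layout, the function names, and the convention that skill is the last lead day before BCOR first falls below the threshold are assumptions of this sketch, not details taken from the present study.

```python
import numpy as np


def bivariate_correlation(obs, fcst):
    """BCOR as a function of lead time.

    obs, fcst : arrays of shape (n_forecasts, n_leads, 2) holding the
                observed and predicted (RMM1, RMM2) pairs
                (hypothetical layout chosen for this sketch).
    Returns an array of shape (n_leads,).
    """
    # Numerator: sum over forecasts of the dot product of the RMM vectors.
    num = np.sum(obs[..., 0] * fcst[..., 0] + obs[..., 1] * fcst[..., 1], axis=0)
    # Denominator: product of the root summed squared amplitudes.
    obs_norm = np.sqrt(np.sum(obs[..., 0] ** 2 + obs[..., 1] ** 2, axis=0))
    fcst_norm = np.sqrt(np.sum(fcst[..., 0] ** 2 + fcst[..., 1] ** 2, axis=0))
    return num / (obs_norm * fcst_norm)


def skill_days(bcor_by_lead, threshold=0.5):
    """Last lead day (1-based) before BCOR first drops below the threshold.

    Assumes bcor_by_lead[0] corresponds to forecast day 1. If BCOR never
    drops below the threshold, the full forecast length is returned.
    """
    below = np.asarray(bcor_by_lead) < threshold
    if not below.any():
        return below.size
    # Index of the first sub-threshold day equals the count of days before it.
    return int(np.argmax(below))
```

Applying `skill_days` to the BCOR curves computed separately from EQBO and WQBO reforecasts, and differencing the two values, gives an EQBO–WQBO skill difference in days of the type summarized above.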

Marshall et al. (2017) reported that not only the prediction skill but also the potential predictability increases during EQBO winters in the BoM model. The potential predictability, which is determined by the method described in Kim et al. (2014), indeed increases during EQBO winters in most S2S models (not shown). However, a higher predictability does not necessarily translate to a higher prediction skill. The EQBO–WQBO difference in the potential predictability is not correlated with that in the prediction skill. The correlation between the two differences across nine models with at least three ensemble members is only 0.01 for the 0.7 threshold value. This result implies that the EQBO–WQBO MJO prediction skill difference is not simply controlled by the potential predictability.

The EQBO–WQBO MJO prediction skill difference could also be influenced by model deficiencies. Although all models show systematically higher MJO prediction skills during EQBO winters than during WQBO winters, the EQBO–WQBO differences vary widely among the models (Fig. 3). Such an intermodel difference could be associated with model deficiencies. Recent work shows that errors in model mean states, related to mean moisture distribution and longwave cloud-radiation feedback, influence MJO prediction skill (Kim 2017; Lim et al. 2018). This finding suggests that if the model biases are influenced by the QBO, the MJO prediction skill would also be influenced accordingly. However, due to the small sample sizes, it is hard to examine the difference in model biases between the two QBO phases.

The QBO–MJO link in both observations and S2S models, shown in the present study, suggests that stratospheric processes are critical for better understanding and predicting the boreal winter MJO. Considering that the MJO teleconnection is modulated by the QBO (Son et al. 2017; Wang et al. 2018), this result offers an opportunity to improve the prediction skill of the MJO-related midlatitude circulations. Preliminary results already show that MJO-related atmospheric river forecasts significantly vary with the QBO (Baggett et al. 2017).

References

Baggett CF, Barnes EA, Maloney ED, Mundhenk BD (2017) Advancing atmospheric river forecasts into subseasonal-to-seasonal time scales. Geophys Res Lett 44:7528–7536. https://doi.org/10.1002/2017GL074434

Dee DP, Uppala SM, Simmons AJ et al (2011) The ERA-Interim reanalysis: configuration and performance of the data assimilation system. Q J R Meteorol Soc 137:553–597. https://doi.org/10.1002/qj.828

Ferreira RN, Schubert WH, Hack JJ (1996) Dynamical aspects of twin tropical cyclones associated with the Madden-Julian oscillation. J Atmos Sci 53:929–945. https://doi.org/10.1175/1520-0469(1996)053%3C0929:DAOTTC%3E2.0.CO;2

Geller MA, Zhou T, Shindell D et al (2016) Modeling the QBO-Improvements resulting from higher model vertical resolution. J Adv Model Earth Syst 8:1092–1105. https://doi.org/10.1002/2016MS000699

Gonzalez AO, Jiang X (2017) Winter mean lower tropospheric moisture over the Maritime Continent as a climate model diagnostic metric for the propagation of the Madden-Julian oscillation. Geophys Res Lett 44:2588–2596. https://doi.org/10.1002/2016GL072430

Gottschalck J, Wheeler M, Weickmann K et al (2010) A framework for assessing operational Madden-Julian Oscillation forecasts: a CLIVAR MJO working group project. Bull Am Meteorol Soc 91:1247–1258. https://doi.org/10.1175/2010BAMS2816.1

Grise KM, Son S-W, Gyakum JR (2013) Intraseasonal and interannual variability in North American storm tracks and its relationship to equatorial Pacific variability. Mon Weather Rev 141:3610–3625. https://doi.org/10.1175/MWR-D-12-00322.1

Hendon HH, Abhik S (2018) Differences in vertical structure of the Madden-Julian Oscillation associated with the quasi-biennial oscillation. Geophys Res Lett 45:4419–4428. https://doi.org/10.1029/2018GL077207

Jeong J-H, Ho C-H, Kim B-M, Kwon W-T (2005) Influence of the Madden-Julian Oscillation on wintertime surface air temperature and cold surges in east Asia. J Geophys Res Atmos 110:D11104. https://doi.org/10.1029/2004JD005408

Keen RA (1982) The role of cross-equatorial tropical cyclone pairs in the Southern Oscillation. Mon Weather Rev 110:1405–1416. https://doi.org/10.1175/1520-0493(1982)110%3C1405:TROCET%3E2.0.CO;2

Kiladis GN, Dias J, Straub KH, Wheeler MC, Tulich SN, Kikuchi K, Weickmann KM, Ventrice MJ (2014) A comparison of OLR and circulation-based indices for tracking the MJO. Mon Weather Rev 142:1697–1715. https://doi.org/10.1175/MWR-D-13-00301.1

Kim H-M (2017) The impact of the mean moisture bias on the key physics of MJO propagation in the ECMWF reforecast. J Geophys Res Atmos 122:7772–7784. https://doi.org/10.1002/2017JD027005

Kim H-K, Seo K-H (2016) Cluster analysis of tropical cyclone tracks over the western North Pacific using a Self-Organizing Map. J Clim 29:3731–3751. https://doi.org/10.1175/JCLI-D-15-0380.1

Kim J, Grise KM, Son S-W (2013) Thermal characteristics of the cold-point tropopause region in CMIP5 models. J Geophys Res Atmos 118:8827–8841. https://doi.org/10.1002/jgrd.50649

Kim H-M, Webster PJ, Toma VE, Kim D (2014) Predictability and prediction skill of the MJO in two operational forecasting systems. J Clim 27:5364–5378. https://doi.org/10.1175/JCLI-D-13-00480.1

L’Heureux ML, Higgins RW (2008) Boreal winter links between the Madden-Julian Oscillation and the Arctic Oscillation. J Clim 21:3040–3050. https://doi.org/10.1175/2007JCLI1955.1

Liebmann B, Smith CA (1996) Description of a complete (interpolated) outgoing longwave radiation dataset. Bull Am Meteorol Soc 77:1275–1277

Lim Y, Son S-W, Kim D (2018) MJO prediction skill of the Subseasonal-to-Seasonal Prediction models. J Clim 31:4075–4094. https://doi.org/10.1175/JCLI-D-17-0545.1

Lin H, Brunet G (2009) The influence of the Madden-Julian Oscillation on Canadian winter-time surface air temperature. Mon Weather Rev 137:2250–2262. https://doi.org/10.1175/2009MWR2831.1

Lin H, Brunet G (2011) Impact of the North Atlantic Oscillation on the forecast skill of the Madden-Julian Oscillation. Geophys Res Lett 38:L02802. https://doi.org/10.1029/2010GL046131

Lin H, Brunet G, Derome J (2008) Forecast skill of the Madden-Julian Oscillation in two Canadian atmospheric models. Mon Weather Rev 136:4130–4149. https://doi.org/10.1175/2008MWR2459.1

Liu C, Tian B, Li K-F, Manney GL, Livesey NJ, Yung YL, Waliser DE (2014) Northern Hemisphere mid-winter vortex-displacement and vortex-split stratospheric sudden warmings: influence of the Madden-Julian Oscillation and Quasi-Biennial Oscillation. J Geophys Res Atmos 119:12599–12620. https://doi.org/10.1002/2014JD021876

Madden RA, Julian PR (1971) Detection of a 40–50 Day Oscillation in the Zonal wind in the Tropical Pacific. J Atmos Sci 28:702–708. https://doi.org/10.1175/1520-0469(1971)028%3C0702:DOADOI%3E2.0.CO;2

Madden RA, Julian PR (1972) Description of global-scale circulation cells in the tropics with a 40–50 day period. J Atmos Sci 29:1109–1123. https://doi.org/10.1175/1520-0469(1972)029%3C1109:DOGSCC%3E2.0.CO;2

Marshall AG, Hendon HH, Son S-W, Lim Y (2017) Impact of the quasi-biennial oscillation on predictability of the Madden-Julian oscillation. Clim Dyn 49:1365–1377. https://doi.org/10.1007/s00382-016-3392-0

Nishimoto E, Yoden S (2017) Influence of the Stratospheric Quasi-Biennial Oscillation on the Madden–Julian Oscillation during Austral Summer. J Atmos Sci 74:1105–1125. https://doi.org/10.1175/JAS-D-16-0205.1

Rashid HA, Hendon HH, Wheeler MC, Alves O (2011) Prediction of the Madden–Julian Oscillation with the POAMA dynamical prediction system. Clim Dyn 36:649–661. https://doi.org/10.1007/s00382-010-0754-x

Salby ML, Hendon HH (1994) Intraseasonal behavior of clouds, temperature, and motion in the Tropics. J Atmos Sci 51:2207–2224. https://doi.org/10.1175/1520-0469(1994)051%3C2207:IBOCTA%3E2.0.CO;2

Schmidt H, Rast S, Bunzel F et al (2013) Response of the middle atmosphere to anthropogenic and natural forcings in the CMIP5 simulations with the Max Planck Institute Earth system model. J Adv Model Earth Syst 5:98–116. https://doi.org/10.1002/jame.20014

Seo K-H, Lee H-J (2017) Mechanisms for a PNA-Like Teleconnection Pattern in Response to the MJO. J Atmos Sci 74:1767–1781. https://doi.org/10.1175/JAS-D-16-0343.1

Seo K-H, Lee H-J, Frierson DMW (2016) Unraveling the teleconnection mechanisms that induce wintertime temperature anomalies over the Northern Hemisphere continents in response to the MJO. J Atmos Sci 73:3557–3571. https://doi.org/10.1175/JAS-D-16-0036.1

Son S-W, Lim Y, Yoo C, Hendon HH, Kim J (2017) Stratospheric control of the Madden–Julian Oscillation. J Clim 30:1909–1922. https://doi.org/10.1175/JCLI-D-16-0620.1

Straub KH (2013) MJO initiation in the real-time multivariate MJO index. J Clim 26:1130–1151. https://doi.org/10.1175/JCLI-D-12-00074.1

Vitart F (2017) Madden-Julian Oscillation prediction and teleconnections in the S2S database. Q J R Meteorol Soc 143:2210–2220. https://doi.org/10.1002/qj.3079

Vitart F, Ardilouze C, Bonet A et al (2017) The Sub-seasonal to Seasonal (S2S) prediction project database. Bull Am Meteorol Soc 98:163–173. https://doi.org/10.1175/BAMS-D-16-0017.1

Wang J, Kim H-M, Chang EKM, Son S-W (2018) Modulation of the MJO and North Pacific Storm Track relationship by the QBO. J Geophys Res Atmos 123:3976–3992. https://doi.org/10.1029/2017JD027977

Wheeler MC, Hendon HH (2004) An all-season real-time multivariate MJO index: development of an index for monitoring and prediction. Mon Weather Rev 132:1917–1932. https://doi.org/10.1175/1520-0493(2004)132%3C1917:AARMMI%3E2.0.CO;2

Xiang B, Zhao M, Jiang X, Lin S-J, Li T, Fu X, Vecchi G (2015) The 3–4-week MJO prediction skill in a GFDL coupled model. J Clim 28:5351–5364. https://doi.org/10.1175/JCLI-D-15-0102.1

Yoo C, Son S-W (2016) Modulation of the boreal wintertime Madden-Julian Oscillation by the stratospheric quasi-biennial oscillation. Geophys Res Lett 43:1392–1398. https://doi.org/10.1002/2016GL067762

Zhang C (2005) Madden-Julian Oscillation. Rev Geophys 43:RG2003. https://doi.org/10.1029/2004RG000158

Zhang C, Zhang B (2018) QBO-MJO connection. J Geophys Res Atmos 123:2957–2967. https://doi.org/10.1002/2017JD028171

Acknowledgments

This work is supported by the Korea Meteorological Institute under Grant KMI 2018-01011. We thank the operational centers for supplying their model output through the S2S database. We also appreciate the three anonymous reviewers for their constructive comments.

Cite this article

Lim, Y., Son, SW., Marshall, A.G. et al. Influence of the QBO on MJO prediction skill in the subseasonal-to-seasonal prediction models. Clim Dyn 53, 1681–1695 (2019). https://doi.org/10.1007/s00382-019-04719-y
