Abstract

Evaluating the response of climate to greenhouse gas forcing is a major objective of the climate community, and the use of large ensemble of simulations is considered as a significant step toward that goal. The present paper thus discusses a new methodology based on neural network to mix ensemble of climate model simulations. Our analysis consists of one simulation of seven Atmosphere–Ocean Global Climate Models, which participated in the IPCC Project and provided at least one simulation for the twentieth century (20c3m) and one simulation for each of three SRES scenarios: A2, A1B and B1. Our statistical method based on neural networks and Bayesian statistics computes a transfer function between models and observations. Such a transfer function was then used to project future conditions and to derive what we would call the optimal ensemble combination for twenty-first century climate change projections. Our approach is therefore based on one statement and one hypothesis. The statement is that an optimal ensemble projection should be built by giving larger weights to models, which have more skill in representing present climate conditions. The hypothesis is that our method based on neural network is actually weighting the models that way. While the statement is actually an open question, which answer may vary according to the region or climate signal under study, our results demonstrate that the neural network approach indeed allows to weighting models according to their skills. As such, our method is an improvement of existing Bayesian methods developed to mix ensembles of simulations. However, the general low skill of climate models in simulating precipitation mean climatology implies that the final projection maps (whatever the method used to compute them) may significantly change in the future as models improve. Therefore, the projection results for late twenty-first century conditions are presented as possible projections based on the “state-of-the-art” of present climate modeling. First, various criteria were computed making it possible to evaluate the models’ skills in simulating late twentieth century precipitation over continental areas as well as their divergence in projecting climate change conditions. Despite the relatively poor skill of most of the climate models in simulating present-day large scale precipitation patterns, we identified two types of models: the climate models with moderate-to-normal (i.e., close to observations) precipitation amplitudes over the Amazonian basin; and the climate models with a low precipitation in that region and too high a precipitation on the equatorial Pacific coast. Under SRES A2 greenhouse gas forcing, the neural network simulates an increase in precipitation over the La Plata basin coherent with the mean model ensemble projection. Over the Amazonian basin, a decrease in precipitation is projected. However, the models strongly diverge, and the neural network was found to give more weight to models, which better simulate present-day climate conditions. In the southern tip of the continent, the models poorly simulate present-day climate. However, they display a fairly good convergence when simulating climate change response with a weak increase south of 45°S and a decrease in Chile between 30 and 45°S. Other scenarios (A1B and B1) strongly resemble the SRES A2 trends but with weaker amplitudes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In a companion paper (Boulanger et al. 2006), we presented a statistical method based on neural network and Bayesian statistics aiming at optimally combining model simulations involved in the Intergovernmental Panel on Climate Change (IPCC) Project. We discussed the projection of future annual mean and seasonal cycle temperature conditions during four 25-year periods (2001–2005, 2026–2050, 2051–2075, 2076–2100) and for the three scenarios A1B, A2 and B1. Our objective is to contribute to one of the major challenges of the scientific climate community: how to take advantage of the large ensembles of multi-model simulations provided by IPCC in the Fourth Assessment Report. Our work mainly focuses on South America as a contribution to the CLARIS European Project (http://www.claris-eu.org).

In Boulanger et al. (2006), the neural network parameters of the two-layer perceptron are optimized with Bayesian statistics. The main advantage provided by this method is that, instead of defining prior distributions dependent on the nature of the data and the choice of an expert, here the choice of prior distributions depends on the neural network architecture. Moreover the use of Bayesian methods to optimize the neural network architecture avoids the over fitting problem and allows computing hyperparameters at each IPCC model entry neuron. Such hyperparameters (also called model weight indices, MWIs) are indicators of the contribution of each model to the IPCC model combination, and can, by analogy, be considered as an optimal linear combination of the climate models in condition under which the models do have some skill in simulating the studied variable.

We demonstrated in the case of temperature that both the neural network and linear projections had some skill to represent twentieth century observations. Moreover, we found that the neural network, when used as an extrapolator for twenty-first century temperature change, was underestimating systematically the raw model projections, and that the multiplicative factor between the neural network projection and the linear ensemble projection had a confidence level between 0 and 1. Similarly to Tebaldi et al. (2005), we demonstrated that such a confidence level actually resulted from a combination of two criteria: the bias criterion (differences between the linear combination and observations) and the divergence (IPCC inter-model variance). Before applying the same method to another field such as precipitation, one should naturally consider whether the same kind of results should be expected. In fact, many differences between temperature and precipitation should be considered.

All climate models simulate fairly well the large-scale temperature patterns and amplitudes, while very few have skill in simulating precipitation patterns and amplitudes. For this reason, the linear combination of climate models based on the MWIs is unlikely to reproduce observations as well for precipitation as for temperature. Moreover, the model low skill in simulating large-scale precipitation patterns is a strong caveat in any climate change projection, and should be kept in mind when interpreting precipitation change projection.

All climate models simulate a large increase of temperature with a relatively high coherence between the models. As a consequence, the future temperature values are out of the range of observations, and so of the neural network training data set. That is why it was relatively easy in Boulanger et al. (2006) to “linearize” the network projection and interpret its results as the product of a linear combination of climate models using a confidence level. As we will show, the simulation of precipitation strongly diverges from one model to another making more difficult to evaluate a linear MWI based projection.

Because of the above, it is likely that the linear combination will have no significant skill for precipitation, and that the network will not be “linearizable”. Thus, we will analyze the network projections considering criteria different from those introduced in Boulanger et al. (2006).

Before presenting the method and results, it is important to note that our method is actually based on a statement and an hypothesis. The statement is that the weight given to a model when computing the mix of their twenty-first climate conditions should depend on its skill in representing present climate conditions. The hypothesis is that our method based on neural network is actually weighting the models that way. While the statement is actually an open question, which answer may vary according to the region or climate signal under study, our results will demonstrate that the neural network approach indeed allows to weighting models according to their skills. As such, our method is an improvement of existing Bayesian methods developed to mix ensembles of simulations (e.g., Tebaldi et al. 2005). However, the general low skill of climate models in simulating precipitation mean climatology implies that the final projection maps (whatever the method used to compute them) may significantly change in the future as models improve. Therefore, the projection results for late twenty-first century conditions are presented as possible projections based on the “state-of-the-art” of present climate modeling, but the reader should be cautious that the statistical optimization of the ensemble mix does not mean that the projection maps are more likely to be representative of the climate changes to be observed in the future.

Data, models and scenarios used in the present study are described in Sect. 2. In Sect. 3, we discuss the method. In Sect. 4, we present the calibration on twentieth century observations. In Sect. 5, climate change projections are analyzed for precipitation mean state and seasonal cycle for the three scenarios A2, A1B and B1. In Sect. 6, we conclude and discuss the results summarizing the regional impacts of mean state and seasonal cycle changes.

2 Data, models and scenarios

2.1 Data

The CRU TS 2.0 dataset comprises 1,200 monthly grids of observed climate and covers the global land surface at 0.5° resolution. There are five climatic variables available: cloud cover, DTR, precipitation, temperature and vapor pressure. The precipitation data set is the CRU TS 2.0 dataset, which comprises 1,200 monthly grids of observed climate (from 1901 to 2002) and covering the global land surface at 0.5° resolution. The authors have already used a previous version (New et al. 2000) of this dataset (Boulanger et al. 2005), and showed that at least for precipitation the comparison to satellite-based rainfall in South America was relatively good. Considering that we are mainly interested by large-scale patterns, the data are interpolated onto a 2.5° × 2.5° grid.

2.2 Models

The seven Atmosphere–Ocean Global Climate Models (AOGCMs) also analyzed in Boulanger et al. (2006) are listed in Table 1. All the model outputs are interpolated over the 2.5° × 2.5° grid defined for the observations. Some models have finer resolutions, others have coarser resolutions, but overall the 2.5° resolution grid is a good compromise, which does not affect the large-scale patterns, and which allows a reasonable level of regional description.

2.3 Scenarios

A detailed description of the IPCC SRES (Special Report on Emissions Scenarios) scenarios is given in Boulanger et al. (2006).

3 Method

The reader is referred to Boulanger et al. (2006) for a full discussion on the methodology. Briefly, the problem we want to solve is to compare spatial maps of a multi-model set to observations. Therefore, we use a two-layer perceptron (MLP) defined by:

-

In the input layer: one neuron for the longitude position, one neuron for the latitude position and as many additional neurons as models (in our case 7).

-

In the output layer: one neuron for observations.

-

In the hidden layer: a number of neurons to optimize.

Moreover, the method computes hyperparameters (Boulanger et al. 2006; Nabney and Netlab 2002), which allow computing MWI values comprised between 0 and 1. The MWI values could, by analogy, be compared to a linear weight applied to each model when combining them linearly. Whether such a linear combination has any skill in representing observations depends on the models used to compute it. For precipitation, we found these MWI values do not add much to the discussion.

As explained in Boulanger et al. (2006), we optimized the MLP for different architectures (number of neurons in the hidden layer). For each architecture (from 1 to 15 neurons), we selected two “best” networks based either on a Bayesian [best evidence value; see Boulanger et al. (2006)’s appendix] or classical criterion (minimum error when comparing the network projection to observations over a test period, 1951–1975, different from the training period). Figure 1 displays the training and test errors based on the mean annual precipitation fields. Our sensitivity results are similar whether we study the seasonal precipitation fields or the mean annual field. In the case displayed in Fig. 1, both the training and test errors decrease from 1 to 5 neurons and then remain relatively stable thanks to the MLP parameter optimization evidence procedure, which avoids the over fitting of the MLP to the data. As our results (twenty-first century precipitation projections) were not found to be sensitive at 15 neurons or more, only the architectures between 3 and 15 neurons selected by the Bayesian or classical approach are taken into account in the present study.

Sensitivity of the evidence (upper panel), training error variance (middle panel, in °C2) and test error variance (lower panel, in °C2) to the number of neurons in the hidden layer. The training error variance is computed over the training period (1976–2000). The test error variance is computed over the 1951–1975 period. Solid lines represent the values for the networks selected using the classical approach. The dashed lines represent the values for the networks selected using the Bayesian approach

4 Calibration to twentieth century observations

4.1 Precipitation mean state

4.1.1 Model–data comparison

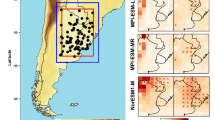

Figure 2 compares the annual mean observations to the seven models. It should be noted here that CRU data are considered as reference for the sake of describing the methodology. Further analysis with more IPCC models should also take into account the uncertainty on the “observed” datasets used to evaluate the twentieth century model skills. The models strongly differ from each other and from observations. However, among these seven models, we can identify two types. The first-type model (CNRM, MPI, UKM, NCAR) displays a Northwest–Southeast precipitation axis over the Amazonian basin characteristics of the South American Monsoon (Zhou and Lau 1998) comparing relatively well to observations. The second-type models (IPSL, GFDL, MIROC) simulate a much weaker precipitation in that region, but very strong precipitations along the equatorial Pacific coasts. Whether such model types can be generalized will require further analysis of all the models available in the IPCC database. Such an analysis goes beyond the scope of the present study.

Over the La Plata basin, most of the models underestimate the precipitation and do not simulate the precipitation amplitude from 15 to 35°S along the Atlantic coast. However, south of 35°S, the models do not reproduce the very weak annual precipitation amplitude and they overestimate precipitation on both the Chilean and Argentinean sides of the southern tip.

Summarizing the characteristics of the seven-model ensemble (Fig. 3), the model mean bias relative to observations is characterized by weaker precipitation in northern South America (Colombia, Venezuela, Guyana, Brazil) and over the La Plata basin, and by stronger precipitation in Nordeste (Brazil) and along the Pacific coast and the southern tip south of 35–40°S. The median is very similar to the mean suggesting the extremes do not strongly affect the mean value. The minimum difference to observations displays negative values almost everywhere except along the Chilean coasts and the southern tip. The existence of positive minimum difference values is indicative of the strong bias of the models in those regions. The maximum difference displays negative values in northern South America and in the La Plata basin in agreement with the common bias of the models and positive values near Bolivia and in Nordeste. Overall, the divergence between the models shows strong values in Nordeste, Bolivia, the Pacific coast and the southern tip of the continent. To conclude, each region of South America either presents a strong bias or a strong divergence, both representative of the overall low skill of the ensemble in representing precipitation patterns and amplitudes.

Indices computed on the annual mean precipitation of each model scaled by the annual mean observed precipitation: mean bias (difference between the models mean and observations); median (median of the difference); minimum difference (minimum value of the differences between each of the seven model ensemble and observations at each point of the grid); maximum difference (maximum value of the differences between each of the seven model ensemble and observations at each point of the grid); divergence (standard deviation of the model–observations differences at each grid point). All contours are every 0.1 U. Values larger or smaller than 1 are not plotted

4.1.2 Precipitation model index

In order to quantify, which models better represent observations, we computed the square of the model–observation difference averaged over the entire continent (Fig. 4a), north of 35°S (Fig. 4b) and south of 35°S (Fig. 4c). Such a separation makes it possible to identify skills in the tropical region vs. the southern tip of the continent. All the results are scaled by the square of the observations averaged over the same regions. As discussed previously, the amplitudes of the model errors are larger in the southern region than in the northern. Overall, the models, which agree better with observations and in the two regions, are CNRM, MPI, UKMO and NCAR (although MIROC displays a better agreement in the Tropics). Interestingly, these are also the models, which better simulate the South American Monsoon System (Fig. 2).

4.2 Precipitation seasonal cycle

4.2.1 Model–data comparisons

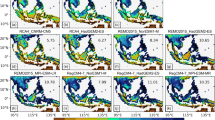

The departure of the four seasons (December–February, DJF; March–May, MAM; June–August, JJA; September–November, SON) from the mean state are displayed in Figs. 5, 6, 7 and 8 for observations and the seven models.

Same as Fig. 2 but for the DJF season. Contours are every 1 mm/day

Same as Fig. 5 but for the MAM season

Same as Fig. 5 but for the JJA season

Same as Fig. 5 but for the SON season

In austral summer (DJF; Fig. 5), observations are characterized by negative anomalies (deficit in precipitation) over the northernmost part of South America, and heavier precipitation along a west–east band around 10°S from the Pacific coast to 50°W, where we can observe the northwest–southeast axis typical of the South American Monsoon. Models such as CNRM, MPI or UKMO do simulate too zonal an axis of heavy precipitation. MIROC and NCAR simulate very large precipitation over Nordeste. Despite models with very big amplitudes in the tropical southeast region, the model mean bias (not shown) to observations is characterized by a deficit in precipitation over most of the Amazonian basin as well as along the Andes on their eastern side from 15 to 35°S, and by too strong precipitation along the Pacific coast and in Nordeste. The divergence between the models is strong over most of the tropics, and weakens south of 30°S.

In austral fall (MAM; Fig. 6), heavy precipitations are observed over northeastern equatorial South America with a relatively zonal band separating northern equatorial region and southern South America. Most of the models represent this large-scale pattern, although they often overestimate the negative anomalies near 15°S. Moreover, most of the models simulate the heavy precipitation at the Atlantic coast south of the equator, when it is observed slightly north or at the equator in the observations. The divergence between the models along the zonal precipitation axis is strong (not shown).

In austral winter (JJA; Fig. 7), the patterns are relatively symmetric respective to austral summer conditions both in observations and in models.

Finally, in austral spring (SON; Fig. 8), the observed and simulated patterns are not anti-symmetric to the MAM patterns, except in the equatorial Atlantic coastal regions. The models have difficulties in representing the patterns during that season, except UKMO and MIROC, which capture most of the patterns and their amplitudes.

4.2.2 Precipitation model index

As previously, we computed the model errors to observations, but we present only the continentally-averaged index (Fig. 9). In agreement with the previous model–data comparison, the models, which agree better with data during the four seasons are the MPI, UKMO and MIROC models.

Same as Fig. 4 but for the four seasons and only for the entire continent

4.3 Neural network validation

Figure 10 compares the neural network (MLP hereafter) projection to observations during the training period (1976–2000) and the test period (1951–1975). First, the MLP projection during the training period displays less biases than the linear ensemble mean (Fig. 3) or any model in particular (not shown), confirming that the MLP is able to build a transfer function based on all the models to correct most of their biases. The MLP projection during the test period displays basically the same patterns and amplitudes as during the training period leading to large differences with observations. Indeed, precipitation amplitudes have been observed to change before and after the 1970s. Actually, no model simulates such changes (Fig. 11) explaining why the MLP does not simulate them either. We can also observe that the MLP ensemble variance (computed on 26-member ensemble) is relatively weak suggesting good coherence between all the network projections. It is interesting to note that the four-season average represents the same patterns as the annual mean projection, although the differences to observations are slightly weaker (not shown).

Upper panels (from left to right): Annual mean observations (1976–2002 mean; contours are every 1 mm/day); neural network projection of the seven models annual mean precipitation (contours are every 1 mm/day); differences between the neural network projection and observations (contours are every 0.4 mm/day); ensemble variance (computed with all the neural network projections based on 3 to 15 neurons in the hidden layer; contours are every 0.1 mm/day). Lower panels (from left to right): differences between (1976–2000) and (1951–1975) averaged observed precipitation (contours are every 0.4 mm/day); same for the neural network projection (contours are every 0.4 mm/day); differences between the first two panels (contours are every 0.4 mm/day); ensemble variance (computed with all the neural network projections of the 1951–1975 period; contours are every 0.1 mm/day)

Same as Fig. 2 but for the differences of both observed and simulated precipitation averaged between the 1976–2001 and 1951–1975 periods (contours are every 0.4 mm/day)

When comparing observations and MLP fit for each season (Fig. 12), it also appears that the MLPs are relatively good in correcting the model biases in order to recover the large scale observed patterns. However, the ensemble error is larger especially in austral winter in the equatorial band when precipitation is the largest.

Observation, neural network projection, difference between the two and neural network ensemble variance for each seasonal mean. From top to bottom: December–January–February (DJF), March–April–May (MAM), June–July–August (JJA) and September–October–November (SON). Contours are every 1 mm/day for both observations and neural network projection, 0.4 mm/day for the difference and 0.1 mm/day for the ensemble variance

5 Twenty-first century projection

5.1 Precipitation mean state

The MLP optimized in the previous section is used as a function transfer to combine simulated twenty-first century climate change conditions.

5.1.1 Model–projection comparison

In a first step, we compared the mean precipitation projection given by the method when mixing the seven model outputs for the three scenarios A2, A1B and B1 and for the four 25-year periods (2001–2005, 2026–2050, 2051–2076 and 2076–2100). For the sake of simplicity, we will only consider such a comparison for scenario A2 during 2076–2100 (Figs. 13, 14).

Indices computed on 2076–2100 SRES A2 annual mean precipitation change simulated by the seven models (relative to 1976–2000 simulated conditions): mean (mean projection of the ensemble; contours are every 0.5 mm/day); median of the ensemble (contours are every 0.5 mm/day); minimum value of the ensemble (contours are every 0.5 mm/day); maximum value of the ensemble contours are every 0.5 mm/day); divergence (standard deviation of the ensemble differences contours are every 0.2 mm/day)

First, the ensemble of models simulate an increase of precipitation on the equatorial Pacific coasts (Figs. 13, 14). The ensemble of models simulate a decrease of precipitation over Colombia and Venezuela with a potential extension to the Amazons mouth (Figs. 13, 14). However, the models strongly disagree on the evolution of precipitation over the Amazon forest. While the minimum displays a strong decrease (due to the UKMO mode, Fig. 14), the maximum difference is positive and the divergence is large. Finally, most of the models simulate a positive increase of precipitation over the La Plata basin along the Atlantic coast from southern Brazil to 35°S (positive mean and low divergence value). Moreover, in the southern tip of the continent, the models display a relatively good agreement projecting an increase south of 50°S and a decrease in precipitation between 30 and 50°S over Chile.

Interestingly, the MLP projection (Fig. 13) is in good agreement with the mean changes described in Fig. 14. The major disagreement between the two methods, and therefore the potential contribution of the neural network to the climate change projection, is over the Amazon basin where the MLP projection displays a strong decrease of precipitation. When comparing the MLP pattern to each of the model patterns (Fig. 13), it is clear that this result is strongly influenced by the UKMO response to greenhouse gases. It is worth noting that the UKMO model is also one of the models, which best-simulated present-day climate conditions. This result confirms the fact that the MLP weights the models according to their skill in simulating present-day climate. Whether a model with skill in simulating observations will have skill in simulating climate change under greenhouse gas forcing is an open question. Anyway, due to the satisfactory comparison between the MLP projection and the model mean projection (which weights all models the same), we will only focus in the following on the MLP projection considering that the MLP is actually skilful in projecting climate model precipitation projections.

5.1.2 Twenty-first century projection

Figure 15 displays the twenty-first century SRES A2 projected mean precipitation changes for the four 25-year periods (2001–2025, 2026–2050, 2051–2076 and 2076–2100). It clearly appears that the patterns displayed during each 25-year period are very similar and that they mainly differ in their amplitudes. As has already been pointed out the ensemble variance is relatively weak and therefore not shown. The major results show that:

-

The positive trend already observed during the last 30 years over the La Plata basin (Boulanger et al. 2005) could continue and expand during the twenty-first century.

-

The negative trend observed in Chile would also continue and be amplified by the end of the century.

-

The extreme southern tip of the continent could experience a weak increase in precipitation.

-

The equatorial Pacific coastal regions could experience a strong increase in precipitation in the second half of the century.

-

The equatorial Atlantic coastal regions could see a strong decrease in precipitation during the second half of the century. Whether the opposite signals on both sides of the equatorial continent are connected should deserve further analysis.

-

Finally the Amazon basin could experience a negative trend during the century. As pointed out previously, all these results projected by the MLP are consistent with the mean projection changes. Only the evolution in the Amazon basin differs due to the stronger weight given by the MLP to the UKMO model.

The same projection for the other scenarios A1B and B1 for the last 25-year period is displayed in Fig. 16. We find the method to be relatively consistent as the patterns are very similar to the ones projected for scenario A2. The figures only differ in amplitude. SRES A1B amplitude pattern is intermediate between the 2051–2075 and 2076–2100 SRES A2 patterns. SRES B1 amplitude pattern is intermediate between the 2026–2050 and 2050–2076 SRES A2 patterns.

5.2 Precipitation seasonal cycle

For sake of clarity and brevity, we do not present here the comparison between the ensemble and the evolution of each model precipitation patterns for each 25-year period and each of the four seasons. We concentrate rather on the description of the late twenty-first century projection under SRES A2 greenhouse gas forcing.

Figure 17 displays late twenty-first century SRES A2 projection for each season analyzing the changes in the departures from the annual mean field. Some striking large-scale patterns can be observed:

-

1.

First, in DJF, the largest increase (0.5 mm/day) is observed north of the equator, and the largest decrease (−0.5 mm/day) is observed in the area of influence of the South American Monsoon and of the low-level jet (along the Andes between 15 and 35°S).

-

2.

In MAM, major patterns of precipitation increase are observed along the equatorial Atlantic coast and east of the Andes between 15 and 40°S in the semi-arid region where a strong positive precipitation jump in that season has been observed during the last 30 years (Minetti and Vargas 1997, 1999; Boulanger et al. 2006). The largest decrease is observed at the equator on the Atlantic side between 20 and 30°S.

-

3.

In JJA, precipitation increases strongly on the equatorial Pacific coast and weakly near the equator over the eastern Amazon basin. A strong decrease is observed near the equator and 60°W in a region of strong southern gradient. This value may thus only result in a latitudinal change in the position of strong precipitation, located north of the equator at this season.

-

4.

Finally in SON, the patterns are characterized by a decrease of precipitation over most of the Amazon basin, the northern South America and the equatorial Pacific coastal region. Nordeste and the Brazilian Atlantic coasts could experience positive anomalies.

As pointed out earlier the large-scale patterns for changes in seasonal anomalies under SRES A1B and SRES B1 are weaker and quite similar to SRES A2 (not shown).

6 Conclusion and discussion

The present study aims at projecting South American climate change conditions during the twenty-first century for three different economic scenarios (A2, A1B and B1). In a companion paper, we analyzed the projection of temperature mean state and seasonal cycle demonstrating how neural networks optimized by Bayesian methods provided useful information. Here, we analyze annual mean and seasonal cycle of precipitation. Many differences exist between temperature and precipitation. First, climate models represent fairly well the general spatial patterns of temperature, while they fail in representing properly precipitation. Such a contrast impedes combining linearly the climate models as we did for temperature in Boulanger et al. (2006). Second, climate models are relatively coherent in their twenty-first century projections simulating a strong warming of the entire continent with larger amplitudes in the Tropics than in the southern regions. Such future values are beyond the range of the dataset used for training the neural network making its extrapolation skill questionable.

Climate models, however, display strong divergence and bias in modeling present-day precipitation conditions. Moreover, they do not simulate similar patterns of precipitation changes during twenty-first century. Despite these differences with the temperature analysis (Boulanger et al. 2006), results on twentieth and twenty-first century climate simulated by the models converged on the following items:

Our set of climate models can be roughly divided into two model-types. The first-type models simulate fairly well the heavy precipitation characteristics of the South American Monsoon System (SAMS). The second-type models simulated poorly the patterns and/or amplitude of the SAMS and overestimated the precipitation along the Pacific equatorial coasts suggesting a potential relationship between these two biases.

Under SRES A2 greenhouse gas forcing, the models converged fairly well in projecting weaker precipitation in northern South America and in southern Chile, stronger precipitation along the Pacific equatorial coasts and in the La Plata basin. The only divergence found (which is also a difference between the model ensemble mean change and the neural network projection) is about precipitation change over the Amazon basin. While the neural network gives more weight to the UKMO model and projects a decrease in precipitation, the mean projection (all models are weighted the same) has a near-zero value. The analysis of a larger set of models will be required.

We found that the three scenarios (A2, A1B and B1) display similar patterns and differ only in amplitude confirming results obtained by Ruosteenoja et al. (2003) and Boulanger et al. (2006) for temperature. However, SRES A1B differ from SRES A2 mainly in the late twenty-first century reaching more or less 90% amplitude respective to SRES A2. SRES B1, however, diverges from the other two scenarios as soon as 2025 (not shown). In late twenty-first century, SRES B1 displays amplitude about half the ones of SRES A2.

In the northern part of South America, anomalous seasonal precipitation increases in summer and decreases in winter. During austral summer, the South American Monsoon would be weaker. Nordeste in Brazil would receive less precipitation in austral summer, but more precipitation in winter and spring. Other scenarios (A1B and B1) strongly resemble the SRES A2 trends but with smaller amplitudes as previously stated.

Before concluding, it is important to highlight that climate models present significant errors in simulating the patterns and amplitudes of present-day climate conditions. Therefore, despite the convergence in future changes shown by various models, the projection maps presented here must be taken with caution. There is no doubt that the improvements in particular of land-surface models will contribute to a significant increase in model skills in simulating observations and the major climate processes at work in South America.

To conclude, our objective was to demonstrate that the use of a neural network optimized by a Bayesian method allowed to mix ensemble of climate simulations weighting each model according to its skill in simulating twentieth century climate conditions. Therefore, our method is a contribution and an improvement of existing Bayesian methods developed to mix ensembles of simulations (e.g., Tebaldi et al. 2005). However, it must be highlighted that the general low skill of climate models in simulating precipitation mean climatology implies that the final projection maps (whatever the method used to compute them) may significantly change in the future as models improve. Therefore, the projection results for late twenty-first century conditions are suggestive of possible projections based on the “state-of-the-art” of present climate modeling. Finally, a major hypothesis of our method as well as many other statistical methods used to project model ensembles is that the weight given to each model when projecting twenty-first century changes depends on the model skill in simulating the twentieth century conditions. We believe this hypothesis is actually a field of new investigation as the physics of response to greenhouse gas forcing is likely to be different from the physics of present-day climate natural variability.

References

Boulanger J-P, Leloup J, Penalba O, Rusticucci M, Lafon F, Vargas W (2005) Low-frequency modes of observed precipitation variability over the La Plata basin. Clim Dyn 24:393–413

Boulanger J-P, Martinez F, Segura EC (2006) Projection of future climate change conditions using IPCC simulations, neural networks and Bayesian statistics. Part 1: Temperature mean state and seasonal cycle in South America. Clim Dyn 27:233–259

Collins WD, Bitz CM, Blackmon ML, Bonan GB, Bretherton CS, Carton JA, Chang P, Doney SC, Hack JJ, Henderson TB, Kiehl JT, Large WG, McKenna DS, Santer BD, Smith RD (2006) The Community Climate System Model: CCSM3. J Clim 19:2122–2143

Delworth TL et al (2006) GFDL’s CM2 global coupled climate models. Part 1: Formulation and simulation characteristics. J Clim 19(5):643–674

Gnanadesikan A et al (2006) GFDL’s CM2 global coupled climate models. Part 2: The baseline ocean simulation. J Clim 19(5):675–697

Gordon C, Cooper C, Senior CA, Banks HT, Gregory JM, Johns TC, Mitchell JFB, Wood RA (2000) The simulation of SST, sea ice extents and ocean heat transports in a version of the Hadley Centre coupled model without flux adjustments. Clim Dyn 16:147–168

Haak H et al (2003) Formation and propagation of great salinity anomalies. Geophys Res Lett 30:1473. DOI 10.1029/2003GL17065

Johns TC, Carnell RE, Crossley JF, Gregory JM, Mitchell JFB, Senior CA, Tett SFB, Wood RA (1997) The second Hadley Centre coupled Ocean–Atmosphere GCM: model description, spinup and validation. Clim Dyn 13:103–134

Marsland S et al (2003) The Max–Planck-Institute global ocean/sea ice model with orthogonal curvelinear coordinates. Ocean Model 5:91–127

Minetti JL, Vargas WM (1997) Trends and jumps in the annual precipitation in South America, south of 15°S. Atmósfera 11:205–221

Minetti JL, Vargas WM (1999) Pressure behaviour of the subtropical Atlantic anticyclone and its influenced region over South America. Aust Met Mag 48:69–77

Nabney I, Netlab T (2002) Algorithms for pattern recognition. Advances in pattern recognition. Springer, Berlin Heidelberg New York, p 420

New MG, Hulme M, Jones PD (2000) Representing twentieth-century space–time climate variability. Part II: Development of 1901–1996 monthly grids of terrestrial surface climate. J Clim 13:2217–2238

Roeckner E et al (2003) The atmospheric general circulation model ECHAM5. Report No. 349OM

Ruosteenoja K, Carter TR, Jylhä K, Tuomenvirta H (2003) Future climate in world regions: an intercomparison of model-based projections for the new IPCC emissions scenarios. The Finnish Environment 644, Finnish Environment Institute, p 83

Salas-Mélia D, Chauvin F, Déqué M, Douville H, Gueremy JF, Marquet P, Planton S, Royer JF, Tyteca S (2004) XXth century warming simulated by ARPEGE-Climat-OPA coupled system

Stouffer R et al (2006) GFDL’s CM2 global coupled climate models. Part 4: Idealized climate response. J Clim 19(5):723–740

Tebaldi C, Smith RL, Nychka D, Mearns LO (2005) Quantifying uncertainty in projections of regional climate change: a Bayesian approach to the analysis of multimodel ensembles. J Clim 8:1524–1540

Wittenberg AT et al (2006) GFDL’s CM2 global coupled climate models. Part 3: Tropical Pacific climate and ENSO. J Clim 19(5):698–722

Zhou J, Lau L-M (1998) Does a monsoon climate exist over South America? 11:1020–1040

Acknowledgments

We wish to thank the Institut de Recherche pour le Développement (IRD), the Institut Pierre-Simon Laplace (IPSL), the Centre National de la Recherche Scientifique (CNRS; Programme ATIP-2002) for their financial support crucial for the development of the authors’ collaboration. We are also grateful to the European Commission for funding the CLARIS Project (Project 001454) in whose framework the present study was undertaken. We are grateful to the University of Buenos Aires and the “Department of Atmosphere and Ocean Sciences” for welcoming Jean-Philippe Boulanger. We thank Tim Mitchell and David Viner for providing the CRU TS2.0 datasets. Finally, we wish to thank the European project CLARIS (http://www.claris-eu.org) for facilitating the access to the IPCC simulation outputs. We acknowledge the international modeling groups for providing their data for analysis, the Program for Climate Model Diagnosis and Intercomparison (PCMDI) for collecting and archiving the model data, the JSC/CLIVAR Working Group on Coupled Modelling (WGCM) and their Coupled Model Intercomparison Project (CMIP) and Climate Simulation Panel for organizing the model data analysis activity, and the IPCC WG1 TSU for technical support. The IPCC Data Archive at Lawrence Livermore National Laboratory is supported by the Office of Science, U.S. Department of Energy. Special thanks are addressed to Alfredo Rolla for his strong support in downloading all the IPCC model outputs.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Boulanger, JP., Martinez, F. & Segura, E.C. Projection of future climate change conditions using IPCC simulations, neural networks and Bayesian statistics. Part 2: Precipitation mean state and seasonal cycle in South America. Clim Dyn 28, 255–271 (2007). https://doi.org/10.1007/s00382-006-0182-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00382-006-0182-0