Abstract

This paper presents an efficient method for overexposure correction that uses a haze removal model and an image fusion technique, drawing on ideas from high dynamic range (HDR) imaging. Assuming that an overexposed (OE) image can be modeled as a normally exposed image overlaid with a layer of asymmetrical colorful haze, the information submerged in OE regions is enhanced by an improved haze removal model based on the dark channel prior. The enhanced result has better visibility in OE regions, at the cost of some color distortion. Using an image fusion technique based on weighted least squares filtering and global contrast-based saliency, the texture recovered in OE regions is then used to correct the overexposure. The advantages of the selected image fusion technique are validated in the paper, and the proposed method is compared experimentally with conventional methods. Both subjective visual quality and quantitative indicators show that the method effectively corrects overexposure without introducing pseudo-information or oversaturation.


1 Introduction

Overexposure (OE) refers to the loss of detail in highlight regions of an image, typically caused by an incorrect camera exposure setting. Moreover, when the scene has a higher dynamic range (HDR) than the sensor can capture, it is difficult to choose a proper exposure setting at all. The dynamic range of a camera sensor is limited by its material and size, whereas the dynamic range of some high-contrast scenes can be millions of times larger. In such scenes, whatever exposure setting the photographer chooses, information is lost in either the highlight or the lowlight regions.

Although the naked eye cannot distinguish the detail, information about the real scene remains as long as the pixels are not completely saturated. In general, a photograph is expected to present as much information about the real scene as possible, except where a particular artistic effect is intended. Hence, it is important to restore the image so that the information submerged in overexposed regions becomes visible.

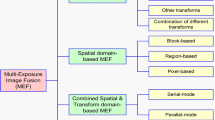

Many approaches to restoring overexposed images have been proposed over the past decades. Some hardware designs [1, 2] can capture HDR directly; however, these devices are usually expensive. In addition, some HDR methods use multi-exposure fusion to improve visibility, but they are limited by image registration. Furthermore, none of these approaches can process images that have already been captured. On the other hand, single-image solutions [3,4,5] have been proposed, which enhance a degraded image with different parameters or algorithms to obtain high-contrast sub-images for both highlights and shadows and then fuse them into an HDR image. In other words, these single-image solutions perform multi-exposure in software.

Furthermore, overexposure correction is similar to, but different from, conventional image contrast enhancement. The major difference is that the former focuses on saturation correction, while the latter centers on lightness adjustment. Moreover, the former tends to correct overexposed regions whether or not they are completely overexposed, whereas the latter generally leaves completely overexposed pixels unchanged.

Accordingly, numerous methods dedicated to overexposure [6,7,8,9,10,11,12] have been proposed. Some of them [7, 9,10,11] estimate the color in overexposed regions from their normally exposed neighborhoods, and others [6, 12] estimate the missing information from cross-channel information. These methods cannot guarantee the authenticity of the added information when pixels in overexposed regions are only weakly related to their neighborhoods. Another problem is that such estimation algorithms struggle with regions whose original color is white.

To address these problems, we propose a single-image solution that corrects overexposure using a haze removal model and an image fusion technique; it is an HDR method that takes the particular characteristics of overexposure into account. First, a new overexposure model is presented in which an OE image is modeled as a normally exposed image overlaid with a layer of asymmetrical colorful haze. To ensure that the restored information is reliable, our method uses this model both to enhance the submerged information and to detect the overexposed regions. It then performs image fusion while preserving as much detail as possible to obtain the restored image. A set of efficient techniques, including fast global image smoothing (FGS) [13] and global contrast-based saliency, is combined with a fusion strategy designed for the proposed overexposure model.

2 Related work

The problem of overexposure correction has been approached from several perspectives. Some hardware designs have been proposed. Aggarwal and Ahuja [1] proposed a camera with a split aperture that simultaneously captures multiple images of the same scene at different exposures. Tumblin et al. [2] designed a camera that measures static gradients to capture an HDR image; this differential design can correct its own saturated sensors. However, such equipment requirements limit their practical applicability. Another mainstream approach is multi-exposure fusion. Durand and Dorsey [3] employed a fast bilateral filter in a multi-scale scheme to obtain HDR images. Hasinoff et al. [4] used a mixed integer programming model to find the optimal captures for multi-exposure fusion. These approaches have restrictive inputs because the scene must be static. On the other hand, single-image enhancement methods have also been proposed. Jobson et al. [5] improved image visibility with a multi-scale retinex model. Kim et al. [14] proposed a fast, optimized dehazing algorithm to enhance image contrast; beyond hazy images, it also works well for enhancing the contrast of OE images. However, these methods have little effect when the image is heavily overexposed.

In addition, some approaches estimate the color in overexposed regions from their normally exposed neighborhoods. Masood et al. [6] used the ratio between two channels to propagate color information. Guo et al. [7] computed an overexposure likelihood for the image and corrected the color of each pixel via neighborhood propagation, taking into account the confidence of its original color. Both methods have a critical defect when the true color is white and cannot ensure authenticity; moreover, they do nothing to amplify the submerged details. Lee et al. [8] proposed an algorithm that corrects lightness and chrominance separately. Because of the Gaussian function used in its enhancement, the restored information in overexposed regions appears blurred. Hou et al. [9] performed overexposure correction using a wavelet tight frame-based approach and image inpainting. Yoon et al. [10] reconstructed the texture of overexposed areas with a patch-based region filling method. Abebe et al. [12] recovered lost details in overexposed areas by exploiting the correlation between channels in RGB images. The methods of Hou et al. [9], Yoon et al. [10], and Abebe et al. [12] all risk filling overexposed areas with fake details.

Haze removal methods aim to restore images with poor contrast and corrupted color, and a number of algorithms have been proposed. Tan [15] maximized the contrast of a hazy image, an optimization that usually produces halo artifacts. Fattal [16] proposed a haze removal method based on the scene albedo; it depends on the statistics of the hazy image and is not robust. He et al. [17] used the dark channel prior to estimate the transmission map. Owing to its practicality and good performance, this method is widely used for haze removal, and several adapted and improved algorithms have been proposed [18].

In image fusion, one of the most important techniques is image decomposition, in which selecting an efficient smoothing filter is the key step. These filters fall into two groups. The first group consists of local filters, such as the bilateral filter [19] and its variants. He et al. [20] proposed the guided filter, whose complexity is linear in the image size. Gastal and Oliveira [21] presented an edge-preserving filter based on a domain transform. Images smoothed by local filters may exhibit halo artifacts. The other group is based on global optimization. Shen et al. [22] proposed an edge-preserving image decomposition algorithm using L1 fidelity with an L0 gradient. Farbman et al. [23] proposed a weighted least squares (WLS) method. Although global optimization methods overcome the limitations of local filters, they are computationally more expensive, and several improvements of WLS have been presented to tackle this problem [13, 24].

3 Haze removal models

The atmospheric scattering model, which is widely used to describe hazy images, is defined as follows:

\[ I(x) = J(x)\,t(x) + A_\mathrm{h}\bigl(1 - t(x)\bigr) \qquad (1) \]

where I refers to the observed intensity, J represents the scene radiance, \(A_\mathrm{h} \) is the global atmospheric light, and t stands for the medium transmission.

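Assuming that the transmission is constant within a local patch, normalizing Eq. (1) by \(A_\mathrm{h}^\mathrm{c}\) and applying the minimum operation over the patch and the color channels rewrites it as:

\[ \min_{c}\Bigl(\min_{y\in\varOmega(x)}\frac{I^\mathrm{c}(y)}{A_\mathrm{h}^\mathrm{c}}\Bigr) = \tilde{t}(x)\,\min_{c}\Bigl(\min_{y\in\varOmega(x)}\frac{J^\mathrm{c}(y)}{A_\mathrm{h}^\mathrm{c}}\Bigr) + 1 - \tilde{t}(x) \qquad (2) \]

where \(J^\mathrm{c}\) and \(I^\mathrm{c}\) are channel c of J and I, \(A_\mathrm{h}^\mathrm{c}\) is channel c of \(A_\mathrm{h}\), \(\varOmega \left( x \right) \) stands for a local patch centered at position x, and \(\tilde{t} \) represents the estimated transmission.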

The dark channel prior, proposed by He et al. [17], is a statistical observation about outdoor haze-free images: in most non-sky patches of a haze-free image, some pixels have very low intensity in at least one color channel. For an arbitrary image J, its dark channel \(J^\mathrm{dark}\) is defined as:

\[ J^\mathrm{dark}(x) = \min_{c\in\{r,g,b\}}\Bigl(\min_{y\in\varOmega(x)} J^\mathrm{c}(y)\Bigr) \qquad (3) \]

According to the dark channel prior, the dark channel of a haze-free image tends to zero:

\[ J^\mathrm{dark}(x) \rightarrow 0 \qquad (4) \]

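Utilizing the dark channel prior, \(\tilde{t} \) is estimated as:

\[ \tilde{t}(x) = 1 - \omega\,\min_{c}\Bigl(\min_{y\in\varOmega(x)}\frac{I^\mathrm{c}(y)}{A_\mathrm{h}^\mathrm{c}}\Bigr) \qquad (5) \]

where \(\omega \) (\(0<\omega \le 1\)) is a constant that retains a small amount of haze for a more natural appearance. A smoothing filter is then applied to refine the transmission.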

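Hence, the haze removal result is obtained as:

\[ J(x) = \frac{I(x) - A_\mathrm{h}}{\max\bigl(t(x),\, t_0\bigr)} + A_\mathrm{h} \qquad (6) \]

where t(x) is the refined transmission and \(t_0 \) is a lower bound on the transmission, typically 0.1, that prevents noise from being amplified where t(x) is close to zero.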
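To make Eqs. (2)–(6) concrete, the following plain-Python sketch runs the dark-channel pipeline on a tiny RGB image. It is a minimal illustration under our own assumptions, not the authors' MATLAB implementation: the image is a nested list of RGB tuples in [0, 1], the patch \(\varOmega(x)\) is a square of radius r, the atmospheric light is given, the smoothing-filter refinement of the transmission is omitted, and the output is clipped to [0, 1].

```python
# Minimal dark-channel-prior haze removal (after He et al. [17]).
# img[row][col] = (r, g, b) in [0, 1]; A = per-channel atmospheric light.
# Assumption: no transmission refinement; the patch is (2r+1) x (2r+1).

def dark_channel(img, A, r):
    """Min over channels and over the local patch of I^c(y) / A^c."""
    h, w = len(img), len(img[0])
    # per-pixel minimum over the normalized channels
    pix_min = [[min(img[i][j][c] / A[c] for c in range(3)) for j in range(w)]
               for i in range(h)]
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # local minimum filter over the patch, clamped at the borders
            out[i][j] = min(pix_min[y][x]
                            for y in range(max(0, i - r), min(h, i + r + 1))
                            for x in range(max(0, j - r), min(w, j + r + 1)))
    return out


def estimate_transmission(img, A, r, omega=0.95):
    """Eq. (5): t(x) = 1 - omega * dark channel of I / A."""
    return [[1.0 - omega * d for d in row] for row in dark_channel(img, A, r)]


def recover(img, t, A, t0=0.1):
    """Eq. (6): J = (I - A) / max(t, t0) + A, clipped to [0, 1]."""
    return [[tuple(min(1.0, max(0.0, (px[c] - A[c]) / max(tt, t0) + A[c]))
                   for c in range(3))
             for px, tt in zip(row, trow)]
            for row, trow in zip(img, t)]
```

Bright, nearly achromatic pixels have a large dark channel and therefore a low transmission; this is the property that lets highlights be treated as haze.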

4 Our method

4.1 Information enhancement

The main technical challenge in information enhancement is to enhance the submerged information in overexposed areas efficiently, without amplifying noise or introducing color distortion. Conventional contrast enhancement methods process each channel separately. In saturation correction, however, adjusting the relative contributions of the three channels matters more.

Our overexposure model builds on the haze removal model. In general, overexposed regions in a scene are intense light sources or highly reflective surfaces. Such scenes can be regarded as normally exposed scenes with intense illumination superimposed on certain parts. If the illuminated parts are treated as a layer of asymmetrical haze, the haze image model can be adopted to handle the overexposed image, as shown in Fig. 1. Hence, our overexposure model is:

\[ I(x) = J(x)\,t(x) + A_\mathrm{OE}\bigl(1 - t(x)\bigr) \]

where J refers to the radiance of the normally exposed scene without intense light sources, I represents the observed overexposed intensity, t stands for the medium transmission, and \(A_\mathrm{OE} \) is the intensity of the highlight sources, which are regarded as asymmetrical haze.

In an overexposed region, the problem is caused by highlight sources such as intense light sources or highly reflective surfaces. Unlike the haze in the original model, these highlight sources are not only light sources but also colored illumination, and under colored illumination the transmission differs between channels. For example, in an image with red illumination, the transmission of the red channel should be lower than that of the other two channels. Therefore, the original model's use of a single transmission for all three channels must be corrected. In the light absorption model, the transmission of channel c, \(t^\mathrm{c}\), is defined as:

\[ t^\mathrm{c}(x) = e^{-\alpha^\mathrm{c} d(x)} \]

where \(\alpha ^\mathrm{c}\) refers to the absorption rate of channel c and d denotes the distance from the light source.

Denoting by \(\bar{t} \) the average transmission obtained from the original haze removal model with Eq. (5), the transmission of each channel c should be corrected using the other two channels.
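A short derivation shows why a power of \(\bar{t}\) is a natural form for such a correction. It is our own illustration, not an equation from the paper: assume the absorption model \(t^\mathrm{c} = e^{-\alpha^\mathrm{c} d}\) and let \(\bar{t} = e^{-\bar{\alpha} d}\), where \(\bar{\alpha}\) is the mean absorption rate of the three channels (so \(\bar{t}\) is the geometric mean of the three channel transmissions). Then

\[ t^\mathrm{c} = e^{-\alpha^\mathrm{c} d} = \left(e^{-\bar{\alpha} d}\right)^{\alpha^\mathrm{c}/\bar{\alpha}} = \bar{t}^{\,\alpha^\mathrm{c}/\bar{\alpha}} \]

Because \(0 \le \bar{t} \le 1\), a channel absorbed more strongly than average (\(\alpha^\mathrm{c} > \bar{\alpha}\)) receives a lower transmission, equal absorption rates leave \(\bar{t}\) unchanged, and every \(t^\mathrm{c}\) stays in \([0,1]\).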

The correction function should obey the following rules:

1. A channel with a higher value has lower transmission, and a channel with a lower value has higher transmission.
2. The transmission lies in the range \(\left[ {0,1} \right] \).
3. Pixels with large differences between channels should be corrected strongly, while pixels whose channels have similar values should change only slightly.
4. The adjustment range should be relative to \(\bar{t} \).
5. The correction should be similar in form to Eq. (9).
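For intuition only, the function below is one hypothetical correction that satisfies rules 1–4; it is our own illustration and not the correction designed in this paper. It raises \(\bar{t}\) to a channel-dependent exponent, the ratio of the channel value to the pixel's mean value, so brighter-than-average channels receive lower transmission. The `gain` parameter is likewise hypothetical.

```python
# HYPOTHETICAL per-channel transmission correction illustrating rules 1-4.
# Not the paper's correction function.

def correct_transmission(t_bar, pixel, gain=1.0):
    """Return (t_r, t_g, t_b) for one pixel.

    t_bar : average transmission in [0, 1] (e.g. from the dark channel)
    pixel : (r, g, b) intensities in [0, 1]
    gain  : hypothetical strength; 0 disables the correction
    """
    mean = sum(pixel) / 3.0
    if mean == 0.0:
        # black pixel: no channel differences, keep the average transmission
        return (t_bar, t_bar, t_bar)
    # exponent > 1 for brighter-than-average channels -> lower transmission
    # (rule 1); exponent == 1 when all channels are equal (rule 3); a power
    # of t_bar in [0, 1] with a non-negative exponent stays in [0, 1] (rule 2)
    return tuple(t_bar ** ((v / mean) ** gain) for v in pixel)
```

For example, with \(\bar{t} = 0.5\) and a reddish pixel (0.9, 0.5, 0.4), the red channel gets the lowest transmission and the blue channel the highest, while a gray pixel keeps 0.5 in all channels.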

Considering the aforementioned items, a simple correction is designed as:

In form, the proposed transmission correction acts to some extent as a white-balance step. To validate its performance, the corrected transmission is compared with the original one of the haze removal model in Fig. 2. Our results using the corrected transmission show better contrast in colorful light-curtain regions than those using the original haze removal model.

In other words, the dark channel prior assumes that natural scenes have high saturation. In fact, highlight regions in the real world have low saturation and do not follow the dark channel prior. Hence, overexposed regions enhanced via the dark channel prior exhibit a certain degree of color distortion. Despite this drawback, the texture enhanced by the haze removal model has more pronounced edges and better visibility than that produced by other pixel-wise enhancements. For comparison, an overexposed image is enhanced with gamma correction, retinex enhancement, HE [25], CLAHE [26], and the proposed haze removal model, as shown in Fig. 3. As mentioned in the Introduction, traditional HDR methods emphasize the enhancement of lightness contrast rather than the correction of saturation, whereas the key to correcting overexposure is adjusting the proportions of the three channels. Because they ignore the constraints among the three channels, traditional HDR methods yield either little information enhancement (e.g., gamma correction) or unexpected stains (e.g., CLAHE [26]). Hence, the haze removal algorithm is used as the information enhancement method, and its result is regarded as the Detail Map, whose high-frequency information is used to restore the overexposed regions.

Moreover, the transmission obtained during haze removal reflects the degree of overexposure of each pixel: low transmission values in certain regions indicate heavy asymmetrical haze, i.e., overexposure. Accordingly, a map M is defined to weight the degree of overexposure:

Compared with other measures of the degree of overexposure, such as those of Guo et al. [7] or Yoon et al. [27], our definition has several advantages. First, it is more robust to outliers because of the regional minimization in Eq. (3) and the smoothing in transmission refinement. Second, it is consistent with the information enhancement step and thus avoids visible splicing traces in image fusion. Third, it depends only weakly on thresholds, so it performs well across a variety of image contents.

4.2 Image fusion technique

Besides information enhancement, image fusion is the other key technique. Its challenge is to efficiently produce a result with smooth transitions and maximal information preservation. Fast global image smoothing based on weighted least squares (FGS) [13] is selected for the multi-scale decomposition. The key property of a smoothing filter is its ability to reduce noise while preserving edges. FGS [13] performs well in this respect, and its complexity is linear in the image size.

In image smoothing, given an input image f and a guidance image g, the desired output u is obtained by minimizing the following weighted least squares (WLS) energy:

\[ E(u) = \sum_{p}\Bigl(\bigl(u(p) - f(p)\bigr)^2 + \lambda\sum_{q\in N(p)} w_{p,q}(g)\bigl(u(p) - u(q)\bigr)^2\Bigr) \]

where \(N\left( p \right) \) represents the set of neighbors (typically 4) of a pixel p, and \(w_{p,q} \) is the smoothness weight, generally defined as \(w_{p,q} =\mathrm{exp}\left( {-\Vert g\left( p \right) -g\left( q \right) \Vert /\sigma } \right) \). \(\lambda \) is the penalty parameter that controls the degree of smoothing. FGS [13] solves this optimization approximately and iteratively by splitting it into separable 1D problems along the image rows and columns.
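The core of FGS [13] is a 1D WLS solve: restricted to a single row or column, the energy above leads to a tridiagonal linear system that can be solved in linear time. The sketch below implements one such 1D solve with the Thomas algorithm in plain Python. It counts each neighbor pair once (so \(\lambda\) absorbs the factor of two from summing over both neighbors) and omits FGS's alternation between rows and columns and its per-iteration schedule for \(\lambda\).

```python
import math


def wls_1d(f, g, lam, sigma):
    """Minimize sum_i (u_i - f_i)^2 + lam * sum_i w_i (u_i - u_{i+1})^2.

    f     : input signal (list of floats)
    g     : guidance signal of the same length
    w_i   = exp(-|g_i - g_{i+1}| / sigma)
    Solves the tridiagonal system (I + lam * L_w) u = f in O(n).
    """
    n = len(f)
    w = [math.exp(-abs(g[i] - g[i + 1]) / sigma) for i in range(n - 1)]
    a = [0.0] + [-lam * wi for wi in w]   # sub-diagonal: couples u_i with u_{i-1}
    c = [-lam * wi for wi in w] + [0.0]   # super-diagonal: couples u_i with u_{i+1}
    b = [1.0 - a[i] - c[i] for i in range(n)]  # diagonal: 1 + lam*(w_{i-1} + w_i)
    # Thomas algorithm, forward elimination
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = c[0] / b[0], f[0] / b[0]
    for i in range(1, n):
        denom = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / denom
        dp[i] = (f[i] - a[i] * dp[i - 1]) / denom
    # back substitution
    u = [0.0] * n
    u[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        u[i] = dp[i] - cp[i] * u[i + 1]
    return u
```

With the guidance equal to the input, a sharp step has a near-zero weight across the edge, so both sides are smoothed independently and the edge survives; with a flat guidance, alternating noise is strongly averaged out.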

Figure 4 shows the results of various smoothing filters, including the weighted least squares method (WLS) [23], the domain transform recursive edge-preserving filter (RF) [21], the smoothing technique using L0 gradients and L1 fidelity (L0L1) [22], WLS with ADMM [24], and FGS [13]. RF [21] is a local filter, and the others are global optimization filters. The input image has approximately \(650\times 450\) pixels. Table 1 details the comparison. FGS [13] achieves the best smoothing quality at an acceptable time cost. The local filter RF [21] has difficulty preserving edges as the degree of smoothing increases. WLS [23] preserves edges best but is quite slow. L0L1 [22] is both slower and worse than WLS. WLS with ADMM [24] converges faster than FGS [13], but the advantage is marginal when FGS [13] runs only three iterations, and its image quality is unsatisfactory in the first few iterations. For these reasons, FGS [13] is chosen.

Fig. 4 Smoothing results. (a) The input. (b) The result from the weighted least squares method [23]. (c) The result from the fast global image smoothing filter [13]. (d) The result from WLS with ADMM [24]. (e) The result from the domain transform recursive edge-preserving filter [21]. (f) The result from the smoothing technique using L0 gradients and L1 fidelity [22].

Setting \(g=f\), the input image f is decomposed into various scales with different \(\lambda _0,\lambda _1,\ldots \lambda _{n-1} ,(\lambda _0<\lambda _1<\cdots <\lambda _{n-1} )\) in FGS [13]:
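A decomposition of this kind can be sketched as follows. The assumptions are ours: each scale smooths the input f itself with increasing strength, consecutive differences form the detail layers, the most-smoothed signal is the low-frequency base layer, and a simple 1D box filter (`box_smooth`, hypothetical, not FGS) stands in for the smoother so that the example stays short. With three values of \(\lambda\), as in Sect. 4.3, this yields four layers that sum back to the input.

```python
# Sketch: multi-scale decomposition by smoothing the input at increasing strengths.
# box_smooth is a HYPOTHETICAL stand-in for FGS [13], used only to keep the
# example short; any edge-preserving smoother could be passed instead.

def box_smooth(signal, lam):
    """Stand-in smoother: 1D mean filter whose radius grows with lam (at least 1)."""
    r = max(1, int(lam) // 50)
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - r), min(n, i + r + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def decompose(f, lambdas, smooth):
    """Return [base, detail_1, ..., detail_n] for increasing lambdas.

    u_0 = f and u_k = smooth(f, lambdas[k-1]); detail_k = u_{k-1} - u_k,
    and base = u_n is the most-smoothed (low-frequency) layer.
    The layers telescope, so their sum reproduces f.
    """
    smoothed = [list(f)] + [smooth(f, lam) for lam in lambdas]
    details = [[a - b for a, b in zip(smoothed[k - 1], smoothed[k])]
               for k in range(1, len(smoothed))]
    return [smoothed[-1]] + details
```

Because the layers telescope, any fusion that modifies only the detail layers leaves the base layer, and hence the overall brightness, under explicit control.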

After decomposition, a simple but effective saliency measure is needed to quantify the richness of information in each layer; we choose global contrast-based salient region detection [25]. First, the histogram vector of the input image L is obtained as \(H=\left( {h_0,h_1,\ldots ,h_{HN-1} } \right) \), where HN is the number of quantization levels of L. The saliency value of a pixel \(I_\mathrm{p} \) is defined as:
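A minimal sketch of histogram-based global contrast saliency follows. It assumes the grayscale form, in which a pixel's saliency is the sum, over all quantized levels j, of the frequency \(h_j\) times the distance between the pixel's level and j, so rare values that contrast with the rest of the layer score high. Quantizing each layer by min-max scaling into HN levels, and normalizing the result to [0, 1], are our own choices.

```python
def histogram_contrast_saliency(layer, levels=256):
    """Saliency per pixel: sum_j h_j * |q(p) - j| over quantized levels j.

    layer  : flat list of values (e.g. one decomposed layer; may be negative)
    levels : HN, the number of quantization levels
    Returns saliencies normalized to [0, 1].
    """
    n = len(layer)
    lo, hi = min(layer), max(layer)
    if hi == lo:
        return [0.0] * n  # a flat layer has no contrast anywhere
    # min-max quantization to integer levels 0 .. levels-1 (our assumption)
    q = [round((v - lo) / (hi - lo) * (levels - 1)) for v in layer]
    # normalized histogram h_j
    hist = [0.0] * levels
    for k in q:
        hist[k] += 1.0 / n
    # saliency of each level: frequency-weighted distance to all occupied levels
    sal_level = [sum(h * abs(level - j) for j, h in enumerate(hist) if h > 0.0)
                 for level in range(levels)]
    sal = [sal_level[k] for k in q]
    top = max(sal)
    return [s / top for s in sal] if top > 0 else sal
```

Computing the contrast once per histogram level rather than once per pixel pair is what keeps this measure cheap: the cost depends on HN, not on the square of the number of pixels.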

As mentioned above, the original OE image and the Detail Map are decomposed into layers \(L_0^\mathrm{OE}, L_1^\mathrm{OE},\ldots ,L_n^\mathrm{OE} \) and \(L_0^\mathrm{DM}, L_1^\mathrm{DM},\ldots ,L_n^\mathrm{DM} \), respectively. The saliency maps of the layers of the original OE image are \(S_0^\mathrm{OE}, S_1^\mathrm{OE},\ldots ,S_n^\mathrm{OE} \), and those of the Detail Map are \(S_0^\mathrm{DM}, S_1^\mathrm{DM},\ldots ,S_n^\mathrm{DM} \). With these in place, the image fusion follows two principles:

1. In the low-frequency layer, the original OE image should play the leading role.
2. In the other layers, the original OE image should play the leading role in normally exposed areas, and the Detail Map should play the leading role in overexposed areas.

Hence, the fused layers \(\tilde{L} _i \) are:

where

\(\alpha \) and \(\beta \) are two constant parameters. \(\alpha \) controls the balance between information enhancement in the overexposed regions and color distortion in the other regions: as \(\alpha \) increases, both the degree of correction and the potential color distortion decrease. \(\beta \) controls the smoothness of the enhanced texture: as \(\beta \) increases, the transitional regions become smoother.
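As a rough illustration of the two fusion principles, the hypothetical rule below takes the low-frequency layer from the OE image and, in every other layer, blends the OE and Detail Map layers with a per-pixel overexposure weight m in [0, 1]. It is not the paper's fusion rule: it ignores the saliency maps and the parameters \(\alpha\) and \(\beta\), and it assumes the restored result is the sum of the fused layers.

```python
# HYPOTHETICAL fusion rule illustrating principles 1 and 2 only; it is not
# the paper's rule (no saliency terms, no alpha/beta parameters).

def fuse_layers(oe_layers, dm_layers, m):
    """Fuse two layer stacks [base, detail_1, ...] given as flat lists.

    m[p] in [0, 1] is a per-pixel overexposure weight (1 = overexposed).
    """
    # principle 1: the low-frequency layer comes from the OE image
    fused = [list(oe_layers[0])]
    # principle 2: detail layers follow the OE image where m is small and
    # the Detail Map where m is large
    for oe, dm in zip(oe_layers[1:], dm_layers[1:]):
        fused.append([(1.0 - w) * a + w * b for a, b, w in zip(oe, dm, m)])
    # assumed reconstruction: sum of the fused layers at each pixel
    return [sum(values) for values in zip(*fused)]
```

A smooth weight map, rather than a binary mask, is what avoids visible seams between overexposed and normally exposed regions in a rule of this kind.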

Finally, the restored result is denoted as:

4.3 Algorithm details and parameters

In the image enhancement step, the adjustable parameters are \(A, t_0,\omega \). Unlike in traditional haze removal, our main goal is to enhance the submerged information in the overexposed regions. Hence, \(\omega \) is set to 0.95, accepting a small, tolerable color distortion. Generally, a channel of a 24-bit bitmap is considered overexposed when its value reaches 235. Therefore, for normalized input we set \(A=0.9216\) and \( t_0 =0.0784\), i.e., 235/255 and 20/255, respectively. For an input of \(W_J \times H_J \) pixels, the patch size of the local minimum filter in Eq. (5) is set to \(\left( {\frac{W_J }{200},\frac{H_J }{200}} \right) \). In addition, FGS [13] rather than the guided filter is used as the smoothing filter for transmission refinement, because of its better edge-preserving ability.
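The parameter arithmetic above can be checked with a small helper. It is a sketch: the function name is hypothetical, rounding the patch size to the nearest integer (at least 1) is our assumption, and computing \(t_0\) as \(1 - A\) merely reproduces the stated value 20/255.

```python
def enhancement_params(width, height, threshold_8bit=235):
    """Hypothetical helper reproducing the enhancement settings for normalized input."""
    A = threshold_8bit / 255.0   # 235/255, about 0.9216
    t0 = 1.0 - A                 # 20/255, about 0.0784 (matches the stated value)
    omega = 0.95                 # keeps a little haze, tolerating slight color distortion
    # patch size (W/200, H/200); rounding to an integer >= 1 is our assumption
    patch = (max(1, round(width / 200)), max(1, round(height / 200)))
    return A, t0, omega, patch
```

For a \(1000 \times 1000\) input this gives a \(5 \times 5\) patch, so the patch grows with the image and the dark channel covers a similar fraction of the scene regardless of resolution.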

In the image fusion step, three values of \(\lambda \) (\(\lambda _0 =10,\lambda _1 =100,\lambda _2 =200\)) are used to decompose each image into four layers, whose saliencies are then computed, and \(\sigma \) is set to 0.02. More layers can improve the result, but at a higher time cost. Generally, \(\alpha \in \left[ {0,1} \right] \) and \(\beta \in \left[ {0.2,0.8} \right] \) in Eq. (18). A higher \(\alpha \) leads to less color distortion but weaker information enhancement in overexposed regions. Conversely, a lower \(\alpha \) gives better visibility in the overexposed regions, but in extreme cases the normally exposed regions may appear oversaturated. A higher \(\beta \) produces a smoother transition between overexposed and normally exposed regions, at the cost of correspondingly weaker enhancement in the overexposed regions.

5 Experimental results

We took photographs with a Canon EOS 40D digital single-lens reflex camera. Processing a \(1000 \times 1000\)-pixel image took about 15 s in MATLAB on a 3.30 GHz Intel(R) Core(TM) i5-4590 CPU. To evaluate the performance of the proposed overexposure correction method, our results are compared with those of [5,6,7, 14], obtained with the code provided on the authors' homepages. The results are shown in Fig. 5, where notable regions in each series of images are marked with red boxes.

Compared with typical color estimation methods such as Guo et al. [7] and Masood et al. [6], our method better corrects overexposed regions that are only weakly related to their neighbors. In these two noise-sensitive methods, the restored pixels in overexposed regions depend strongly on their neighborhoods.

Moreover, these color estimation methods have a theoretical weakness in handling completely overexposed pixels, which the proposed method leaves unchanged; preserving the original color is more reliable than filling in fake color. In addition, the color estimation methods do little to enhance the submerged information.

Compared with traditional HDR methods such as Jobson et al. [5], our method corrects not only the lightness but also the chrominance (e.g., the left side of Image Slogan). Because HDR methods generally process each channel separately, their overexposure correction barely increases saturation, and the result looks gray.

Compared with other methods based on the haze removal model, such as Kim et al. [14], our method produces less color distortion thanks to the transmission correction (e.g., the cola in Image Vendor). Owing to image fusion, the results of the proposed method retain the maximum detail in both overexposed and normally exposed regions (e.g., the right side of Image Stela) and have natural transition boundaries (e.g., the lamp in Image Slogan).

Unlike other low-level vision problems, such as deblurring or denoising, which have generally accepted degradation models, overexposure is difficult to simulate. A correction method built on the same model used to synthesize an overexposed input would have an inherent advantage in such an assessment of image quality. Hence, two no-reference quantitative indicators are selected to assess the correction results. LogAME, proposed by Panetta et al. [28], is a contrast measure developed by incorporating a nonlinear framework into the Michelson contrast law [29]. GMG (gray mean gradient) is another index that measures the enhanced information. In the assessment, only overexposed pixels are considered, so the two indexes are modified as follows:

where

\(I_{\max }^\mathrm{c} \left( {x,y} \right) \) and \(I_{\min }^\mathrm{c} \left( {x,y} \right) \) are the maximum and minimum in the local patch centered at position \(\left( {x,y} \right) \), and \(dI_x^\mathrm{c} \) and \(dI_y^\mathrm{c} \) are the vertical and horizontal gradients, respectively. Because M defined by Eq. (11) is tailored to the proposed method, a generic overexposure mask \(M_e \) is used instead to avoid bias in its favor. SH is the overexposure threshold, set to 0.9216 for normalized images; under \(M_e \), a pixel is considered overexposed if any of its channels is overexposed. \(N_{\max } \) is the maximum value of the image, set to 1 for normalized images. Further experiments are shown in Fig. 6 for a broader comparison. Table 2 lists the logAME values of six groups of images, and Table 3 lists the GMG values. The quantitative indicators show that our method enhances more information than the other methods in most cases.
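The generic mask \(M_e\) and a gradient measure restricted to it can be sketched as below. We assume the common definition of GMG as the mean of \(\sqrt{(dI_x^2 + dI_y^2)/2}\) with forward differences, evaluated per channel over pixels where \(M_e = 1\); the paper's exact normalization may differ, and the LogAME variant is not covered by this sketch.

```python
import math


def overexposure_mask(img, sh=0.9216):
    """M_e: 1 if any channel of the pixel reaches the threshold SH, else 0."""
    return [[1 if max(px) >= sh else 0 for px in row] for row in img]


def masked_gmg(channel, mask):
    """Gray mean gradient of one channel, averaged over masked pixels only.

    channel : 2D list of values for one color channel
    mask    : 2D list of 0/1 with the same shape (e.g. from overexposure_mask)
    Uses forward differences, so the last row and column are skipped.
    """
    h, w = len(channel), len(channel[0])
    total, count = 0.0, 0
    for i in range(h - 1):
        for j in range(w - 1):
            if mask[i][j]:
                dx = channel[i][j + 1] - channel[i][j]
                dy = channel[i + 1][j] - channel[i][j]
                total += math.sqrt((dx * dx + dy * dy) / 2.0)
                count += 1
    return total / count if count else 0.0
```

Restricting the average to \(M_e\) keeps well-exposed texture elsewhere in the image from inflating the score, so the index reflects only what was recovered in the overexposed regions.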

However, assessing contrast enhancement alone is far from adequate, because chrominance correction is also important in overexposure correction. Ideally, the saturation in overexposed regions is increased moderately and the hue is adjusted where necessary; inappropriate changes in saturation and hue cause color distortion. Yet it is difficult to determine what kind of change is appropriate. For instance, Fig. 7a shows the map of absolute hue differences between Fig. 5 Image Stela (a) and Fig. 5 Image Stela (d), and Fig. 7b shows the map between Fig. 5 Image Stela (a) and Fig. 5 Image Stela (f). The hue difference is calculated according to CIEDE2000 [30], for pixels in overexposed regions only.
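The per-pixel hue difference can be computed with the CIEDE2000 formulas given by Sharma et al. [30]. The sketch below returns the metric hue difference \(\Delta H'\) for two CIELAB colors; we assume this is the quantity mapped in Fig. 7, that both images have already been converted to CIELAB, and that the map stores \(|\Delta H'|\) for overexposed pixels only.

```python
import math


def ciede2000_delta_H(lab1, lab2):
    """CIEDE2000 hue difference Delta H' between two CIELAB colors (L, a, b)."""
    (_, a1, b1), (_, a2, b2) = lab1, lab2
    # chroma-dependent rescaling of the a* axis (the G factor)
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar ** 7 / (c_bar ** 7 + 25.0 ** 7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)

    def hue(ap, b):
        # hue angle h' in degrees in [0, 360); 0 for achromatic colors
        if ap == 0.0 and b == 0.0:
            return 0.0
        return math.degrees(math.atan2(b, ap)) % 360.0

    if c1p * c2p == 0.0:
        dh = 0.0  # hue is undefined for a neutral color
    else:
        dh = hue(a2p, b2) - hue(a1p, b1)
        # wrap the angle difference into [-180, 180]
        if dh > 180.0:
            dh -= 360.0
        elif dh < -180.0:
            dh += 360.0
    return 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(dh / 2.0))
```

Weighting the angular difference by the chroma term means that hue shifts of nearly neutral pixels contribute little, which suits highlights that are close to white.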

In comparison, the result of Kim et al. [14] involves a heavier hue adjustment than ours. Moreover, Fig. 5 Image Stela (d) shows correspondingly more color distortion than Fig. 5 Image Stela (f), even though its contrast enhancement indexes are higher. In conclusion, contrast enhancement and chrominance adjustment should be assessed jointly when evaluating overexposure correction.

The time cost of our method is determined by the number of decomposition layers N. For color images, FGS [13] is invoked \(6N+1\) times, which accounts for most of the time cost. With \(N=3\), our method is faster than color estimation methods such as Guo et al. [7] and Masood et al. [6], but slower than traditional HDR methods such as HE [25] and CLAHE [26], as well as simple haze removal methods such as Kim et al. [14].

6 Conclusions

In this paper, we have proposed a novel overexposure correction method that uses a haze removal model and an image fusion technique. Drawing on the dehazing model, we have proposed an overexposure model in which an OE image is modeled as a normally exposed image overlaid with a layer of asymmetrical colorful haze; this model guides the enhancement of the submerged information in overexposed regions, where a haze removal method is adopted to obtain the Detail Map. For colorful overexposed areas, a transmission correction is proposed to reduce color distortion. The Detail Map and the original image are fused using fast global image smoothing based on weighted least squares and global contrast-based saliency, and a new fusion strategy tailored to overexposure is formulated to improve the visual quality of the results.

Our method performs well in both overexposure correction and visual quality improvement. It has been compared with conventional methods to validate its performance, and both subjective visual assessment and quantitative indicators show that the submerged information in overexposed regions is efficiently enhanced. In chrominance adjustment, owing to the proposed overexposure model, the results of our method are insensitive to noise and appear natural, without pseudo-information or oversaturation.

However, our method has no effect on completely overexposed pixels, as shown in Fig. 8; image inpainting may be more suitable in this case. Furthermore, if the patch radius used to compute the dark channel in Eq. (5) is chosen inappropriately for an input with complex texture, halos appear around high-contrast edges, as shown in Fig. 9; an edge-preserving local minimum filter may address this. To accelerate the proposed method, down-sampling can be applied in the image fusion stage.

References

[1] Aggarwal, M., Ahuja, N.: Split aperture imaging for high dynamic range. In: Proceedings of the Eighth IEEE International Conference on Computer Vision (ICCV 2001), pp. 10–17. IEEE (2001)

[2] Tumblin, J., Agrawal, A., Raskar, R.: Why I want a gradient camera. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), pp. 103–110. IEEE (2005)

[3] Durand, F., Dorsey, J.: Fast bilateral filtering for the display of high-dynamic-range images. In: ACM Transactions on Graphics (TOG), vol. 3, pp. 257–266. ACM (2002)

[4] Hasinoff, S.W., Durand, F., Freeman, W.T.: Noise-optimal capture for high dynamic range photography. In: 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 553–560. IEEE (2010)

[5] Jobson, D.J., Rahman, Z., Woodell, G.A.: A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 6(7), 965–976 (1997)

[6] Masood, S.Z., Zhu, J., Tappen, M.F.: Automatic correction of saturated regions in photographs using cross-channel correlation. In: Computer Graphics Forum 2009, vol. 7, pp. 1861–1869. Wiley Online Library (2009)

[7] Guo, D., Cheng, Y., Zhuo, S., Sim, T.: Correcting over-exposure in photographs. In: 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 515–521. IEEE (2010)

[8] Lee, D.-H., Yoon, Y.-J., Kang, S., Ko, S.-J.: Correction of the overexposed region in digital color image. IEEE Trans. Consum. Electron. 60(2), 173–178 (2014)

[9] Hou, L., Ji, H., Shen, Z.: Recovering over-/underexposed regions in photographs. SIAM J. Imag. Sci. 6(4), 2213–2235 (2013)

[10] Yoon, Y.-J., Lee, D.-H., Kang, S.-J., Park, W.-J., Ko, S.-J.: Patch-based over-exposure correction in image. In: The 18th IEEE International Symposium on Consumer Electronics (ISCE 2014), pp. 1–3. IEEE (2014)

[11] Arora, S., Hanmandlu, M., Gupta, G., Singh, L.: Enhancement of overexposed color images. In: 2015 3rd International Conference on Information and Communication Technology (ICoICT), pp. 207–211. IEEE (2015)

[12] Abebe, M.A., Booth, A., Kervec, J., Pouli, T., Larabi, M.-C.: Towards an automatic correction of over-exposure in photographs: application to tone-mapping. Comput. Vis. Image Underst. (2017). https://doi.org/10.1016/j.cviu.2017.05.011

[13] Min, D., Choi, S., Lu, J., Ham, B., Sohn, K., Do, M.N.: Fast global image smoothing based on weighted least squares. IEEE Trans. Image Process. 23(12), 5638–5653 (2014)

[14] Kim, J.-H., Jang, W.-D., Sim, J.-Y., Kim, C.-S.: Optimized contrast enhancement for real-time image and video dehazing. J. Vis. Commun. Image Represent. 24(3), 410–425 (2013)

[15] Tan, R.T.: Visibility in bad weather from a single image. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2008), pp. 1–8. IEEE (2008)

[16] Fattal, R.: Single image dehazing. ACM Trans. Graph. (TOG) 27(3), 72 (2008)

[17] He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2011)

[18] Park, D., Han, D.K., Ko, H.: Single image haze removal with WLS-based edge-preserving smoothing filter. In: 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2469–2473. IEEE (2013)

[19] Tomasi, C., Manduchi, R.: Bilateral filtering for gray and color images. In: Sixth International Conference on Computer Vision, pp. 839–846. IEEE (1998)

[20] He, K., Sun, J., Tang, X.: Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 35(6), 1397–1409 (2013)

[21] Gastal, E.S., Oliveira, M.M.: Domain transform for edge-aware image and video processing. In: ACM Transactions on Graphics (TOG), vol. 4, p. 69. ACM (2011)

[22] Shen, C.T., Chang, F.J., Hung, Y.P., Pei, S.C.: Edge-preserving image decomposition using L1 fidelity with L0 gradient. In: SIGGRAPH Asia 2012 Technical Briefs, pp. 1–4 (2012)

[23] Farbman, Z., Fattal, R., Lischinski, D., Szeliski, R.: Edge-preserving decompositions for multi-scale tone and detail manipulation. In: ACM Transactions on Graphics (TOG), vol. 3, p. 67. ACM (2008)

[24] Kim, Y., Min, D., Ham, B., Sohn, K.: Fast domain decomposition for global image smoothing. IEEE Trans. Image Process. 26, 4079–4091 (2017)

[25] Chen, S.-D., Ramli, A.R.: Contrast enhancement using recursive mean-separate histogram equalization for scalable brightness preservation. IEEE Trans. Consum. Electron. 49(4), 1301–1309 (2003)

[26] Zuiderveld, K.: Contrast limited adaptive histogram equalization. In: Graphics Gems IV, pp. 474–485. Academic Press Professional, Inc., Cambridge (1994)

[27] Yoon, Y.-J., Byun, K.-Y., Lee, D.-H., Jung, S.-W., Ko, S.-J.: A new human perception-based over-exposure detection method for color images. Sensors 14(9), 17159–17173 (2014)

[28] Panetta, K.A., Wharton, E.J., Agaian, S.S.: Human visual system-based image enhancement and logarithmic contrast measure. IEEE Trans. Syst. Man Cybern., Part B (Cybern.) 38(1), 174–188 (2008)

[29] Michelson, A.A.: Studies in Optics. Courier Corporation, North Chelmsford (1995)

[30] Sharma, G., Wu, W., Dalal, E.N.: The CIEDE2000 color-difference formula: implementation notes, supplementary test data, and mathematical observations. Color Res. Appl. 30(1), 21–30 (2005)


Cite this article

Yang, C., Feng, H., Xu, Z. et al. Correction of overexposure utilizing haze removal model and image fusion technique. Vis Comput 35, 695–705 (2019). https://doi.org/10.1007/s00371-018-1504-z


DOI: https://doi.org/10.1007/s00371-018-1504-z