Abstract

Diffusion plays a significant role in the complex pattern formation that occurs in biological and chemical reactions. In this work, the authors study the effect of diffusion in a coupled reaction-diffusion system, the Gray-Scott model, using the cubic B-spline quasi-interpolation (CBSQI) method, and capture various forms of the resulting patterns. The Kronecker product is used for the first time with the CBSQI method for 2D problems. The linear stability of the reaction-diffusion system and the stability of the proposed method are both studied. Four test problems are considered to check the accuracy and efficiency of the method, and stable patterns are obtained.


1 Introduction

Turing patterns [1] play a significant role in characterizing biological and chemical reactions. Mathematical modeling of biological and chemical phenomena is an important tool for studying the variety of patterns formed by chemical species. The reaction-diffusion system describes the interaction of two chemical species V and W. Let the variables v and w denote the concentrations of these chemicals, respectively. The two chemicals react with each other and diffuse through the medium, so the concentrations of V and W change at every position as time passes. In this model, two reactions take place simultaneously, with different rates, throughout the medium

where \({\mathbb {P}}\) is the inert product. For simplicity, it is assumed that the reverse reactions do not take place.
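For reference, the two reactions are presumably the standard Gray-Scott scheme. The display below is a reconstruction in this section's notation, inferred from the reaction terms \(-vw^2\) and \(+vw^2\) of the model, and should be read as an assumption:

\[
V + 2W \rightarrow 3W, \qquad W \rightarrow {\mathbb {P}}.
\]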

The complete behaviour of the system is described by the following model [2,3,4]

where \(d_1\) and \(d_2\) represent the diffusion coefficients of the concentrations v and w, and \(\nabla ^2\) represents the Laplace operator. The first term of the first equation, involving \(\nabla ^2 v\), is the diffusion term. If the concentration of V is lower in the neighboring areas, then \(\nabla ^2 v\) is negative and v decreases; if the concentration is higher in the neighboring areas, then v increases. The second term, \(-vw^2\), is the reaction rate. It is clear from the equations that the reaction increases w at the same rate at which it decreases v. There is no constraint on the reaction terms, but the relative strength of the remaining terms can be balanced by the constants \(d_1, d_2,\gamma\) and \(\kappa\). The third term, \(\gamma (1-v)\), is called the replenishment term. Since the reaction consumes v to generate w, v would eventually be used up unless it is refilled. The replenishment term makes v grow in proportion to the difference between its current level and 1, so even in the absence of the other terms, v can reach at most the value 1. The main difference between the two equations lies in the third term: \(\gamma (1-v)\) in the first and \(-(\gamma +\kappa )w\) in the second. The term \(-(\gamma +\kappa )w\) is called the diminishing term because, in its absence, w would increase drastically. Further, w leaves the system at a rate linked to the rate at which new v is supplied.
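For reference, a form of model (1) consistent with the three terms described above, and with the reaction functions \(\phi _1\) and \(\phi _2\) used in the implementation section, can be sketched as follows. It is reconstructed from the surrounding text, not copied from the original display:

\[
\frac{\partial v}{\partial t} = d_1 \nabla ^2 v - v w^2 + \gamma (1-v), \qquad
\frac{\partial w}{\partial t} = d_2 \nabla ^2 w + v w^2 - (\gamma +\kappa ) w .
\]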

In this work, the authors attempt to capture a variety of patterns for different values of the parameters \(d_1, d_2, \gamma\) and \(\kappa\) in the nonlinear time-dependent coupled reaction-diffusion model (1) in one and two dimensions, with the following initial and boundary conditions

For 1-D problems

For 2-D problems

Pattern formation in an epidemic model has recently been studied in [5]. The amplitude equation for the reversible Sel’kov model has been studied in [6]. A specific reaction-diffusion model, the chlorite-iodide-malonic acid (CIMA) reaction, was used by Lee and Cho [7]. Some rigorous biological models and their pattern formation are presented in [8]. Cross-diffusion phenomena, in which the concentration of one chemical species affects the other species, have been studied in [9,10,11]. Othmer and Scriven [12] presented the dynamical instability and the associated Turing patterns in cellular networks. For random network systems, Turing patterns have been studied in [13,14,15], where the models are also compared with the classical models. The stability of nonlinear dynamical models has been studied in [16, 17]. Problems related to stochastic control have also attracted researchers [18,19,20]. Hale et al. [21] discussed a 1-D Gray-Scott system and captured its nontrivial stationary Turing patterns. Kolokolnikov et al. [22] presented solutions of the 2-D Gray-Scott system and discussed the instability of equilibrium stripes in two different ways. Zheng et al. [23] studied the pattern formation of a reaction-diffusion immune system and discussed its controllability with respect to the different patterns. Sayama [3] studied many complex structures and analyzed their patterns and stability. Mittal and Rohila [4] proposed a differential quadrature scheme to solve some reaction-diffusion models. Jiwari et al. [2] captured the Turing pattern formation of nonlinear coupled reaction-diffusion systems. Solutions of the 2-D Gray-Scott model and their non-uniform Turing patterns were studied by Castelli [24]. McGough and Riley [25] studied complex Turing patterns in the Gray-Scott model. Yadav and Jiwari [26] solved the Brusselator model by the finite element method and studied its pattern formation. Applications of artificial and biological immune systems, such as homeostasis, adaptability and immunity, are discussed in [27,28,29,30].

To begin with, we present some of the most relevant works that are fundamental to the proposed study. Sablonnière [31, 32] pioneered a discrete univariate B-spline quasi-interpolation technique to approximate a function and its first-order derivative. The claims were verified by approximating the first-order derivative of a certain class of functions with the proposed method, which also outperformed the earlier finite difference approximations of derivatives. The author observed convergence of order \(O(h^4)\) when approximating the first-order derivative of a certain class of functions using cubic spline interpolation. Based on this fundamental work, the research community has since proposed efficient numerical schemes for solving partial differential equations. Zhu and Kang [33, 34] applied the technique to solve hyperbolic conservation laws. Kumar and Baskar [35] proposed quadratic and cubic B-spline techniques, developing higher-order numerical schemes for solving 1-D Sobolev type equations.

From the aforementioned works, we infer that most of the work on numerically solving these PDEs has been limited to 1-D space; apart from Mittal et al. [36], who solve a 2-D advection-diffusion problem, these techniques have scarcely been applied in higher dimensions. In the proposed work, we put forth a numerical scheme based on CBSQI for solving 1-D and 2-D reaction-diffusion equations. The 2-D partial derivatives are approximated using the Kronecker product and the 1-D coefficient matrices of the derivatives. We also discuss the linear stability of the given system. For a nonlinear dynamical system, linear stability analysis does not give complete information about the asymptotic behaviour at large. For many applications, however, it is very important, especially where the main interest is how the system sustains its state at or around its equilibrium point.

2 Linear stability investigation of reaction-diffusion system

It is well known that the linear stability analysis of continuous field models (without reaction terms) yields a strong analytical condition under which a spatial system loses the stability of its homogeneous equilibrium state and spontaneously forms a non-homogeneous spatial pattern. Here, the homogeneous equilibrium state is considered along a line or curve that covers the considered domain.

However, for the reaction-diffusion system, such a stability condition cannot be found analytically. We therefore use the Jacobian matrix and draw conclusions by analyzing its eigenvalues.

Now, consider the following standard reaction-diffusion system to investigate the linear stability:

At the equilibrium state, \(v_{{i{\text {eq}}}}\) no longer depends on space or time, so \((v_{{1{\text {eq}}}}, v_{{2{\text {eq}}}})\) is a solution of the following equations:

Now, we perturb the original state variables about the equilibrium state as follows:

Using these replacements, the dynamical equations can be rewritten as

These equations can be summarized in a single vector form about \(\varDelta V\)

where the vector \(\mathfrak {R}\) represents the reaction terms and d denotes the diagonal matrix whose ith diagonal entry is \(d_i\). All the terms except the reaction term have already been simplified, so the remaining task is to linearize the reaction term. For this purpose, the Jacobian matrix is introduced. If there is no spatial operator in the reaction terms, then these terms are local. We now use the idea of linear stability, i.e., we rewrite the dynamics by adding a small perturbation to the equilibrium state. The vector function \(\mathfrak {R}(V_{{{\text {eq}}}}+\sin (\omega x+\psi )\varDelta V)\) can therefore be linearly approximated as follows:

From Eqs. (8)–(9), \(\mathfrak {R}(V_{{{\text {eq}}}})\) becomes zero. Therefore, Eq. (10) can be written as

where J denotes the Jacobian matrix of the reaction terms and \(\omega\) represents the spatial frequency of the perturbation. Hence, the stability of the system can be determined by evaluating the eigenvalues of the matrix \((J-d\, \omega ^2)\) at the homogeneous equilibrium state.

This simple result for linear stability can be obtained because of the clear separation of diffusion and reaction terms in reaction-diffusion systems.

We can now apply the above result to the following system:

Using the discussed result, we get

It is a well-known result that a \(2\times 2\) matrix is stable if its trace is negative and its determinant is positive. Therefore, the homogeneous equilibrium state of this system is stable if the following two inequalities hold for all real values of \(\omega\):

For simplification, we write \(\text {det}({\mathbb {A}})\) and \(\text {Tr}({\mathbb {A}})\) for the determinant and trace of the matrix \({\mathbb {A}}=\begin{pmatrix} \alpha _1 &{}\beta _1 \\ \alpha _2 &{}\beta _2 \end{pmatrix}\); Eqs. (15)–(16) can then be rewritten as:

Let us assume that the model is stable without diffusion terms, i.e., \(\text {det}({\mathbb {A}})>0\) and \(\text {Tr}({\mathbb {A}})<0\). We now look for the conditions under which introducing the diffusion terms destabilizes the system.

The left-hand side of the second inequality is never negative, so the second inequality always holds when \(\text {Tr}({\mathbb {A}})\) is negative. The first inequality, however, can be violated if the following polynomial takes a positive value for some \(z>0\).

or

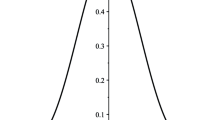

There are two ways in which the above polynomial can take a positive value for some \(z>0\), as shown in Fig. 1.

Case-1 If the peak of g(z) lies on the positive side of z, i.e., \((\alpha _1d_2+\beta _2 d_1)>0\) (Fig. 1a), then the condition is that the peak should rise above the z-axis

Case-2 If the peak of g(z) lies on the negative side of z, i.e., \((\alpha _1d_2+\beta _2 d_1)<0\) (Fig. 1b), then the condition is that the intercept of g(z) should be positive, i.e.,

which is not true for the originally stable non-spatial model. Therefore, Case-1 is the only way in which diffusion can destabilize the model; otherwise the model remains stable. The Case-1 condition can be simplified further as

As an example, let us apply the above results to an actual Turing model. Let \((\alpha _1,\beta _1,\alpha _2,\beta _2)=(1,-1,2,-1.5)\) and \((d_1,d_2)=(10^{-4}, 6\times 10^{-4})\); with these parameters, \(\text {det}({\mathbb {A}})=0.5>0\) and \(\text {Tr}({\mathbb {A}})=-0.5<0\), so the system is stable without diffusion terms. However,

inequality (23) holds.
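The check in this example can be reproduced with a short script. The sketch below assumes that the simplified Case-1 condition reads \(\alpha _1d_2+\beta _2 d_1>2\sqrt{d_1d_2\,\text {det}({\mathbb {A}})}\), which follows from requiring the peak of g(z) to lie above the z-axis; the function name `turing_unstable` is hypothetical.

```python
import math


def turing_unstable(a1, b1, a2, b2, d1, d2):
    """Return True if diffusion destabilizes an otherwise stable equilibrium.

    Assumes the reaction Jacobian A = [[a1, b1], [a2, b2]] and the Case-1
    condition a1*d2 + b2*d1 > 2*sqrt(d1*d2*det(A)).
    """
    det_a = a1 * b2 - b1 * a2
    tr_a = a1 + b2
    # The analysis starts from a model that is stable without diffusion.
    if not (det_a > 0 and tr_a < 0):
        return False
    return a1 * d2 + b2 * d1 > 2.0 * math.sqrt(d1 * d2 * det_a)


# Parameters of the example in the text.
print(turing_unstable(1.0, -1.0, 2.0, -1.5, 1e-4, 6e-4))
```

With equal diffusion coefficients the left-hand side becomes negative for these reaction parameters, so the same function reports no instability; the difference between \(d_1\) and \(d_2\) is what drives the pattern.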

It is clear that without the diffusion terms the system has only one equilibrium point \((v_{{{\text {eq}}}},w_{{{\text {eq}}}})=(h_1,k_1)\). There are many choices of the parameters \(\alpha _{1}\), \(\beta _{1}\), \(\alpha _{2}\) and \(\beta _{2}\) for which this equilibrium point remains stable. The most interesting fact about Turing’s model is that introducing the diffusion terms and a spatial dimension may destabilize this stable equilibrium point, after which the system spontaneously produces a non-homogeneous pattern. This is called Turing instability.

Some shortcut results are available that help to predict Turing pattern formation in the considered model. Continuing the above discussion, we can evaluate the eigenvalues of the matrix \((J-d\, \omega ^2)\).

To find the dominant eigenfunction \(\sin (\omega x+\psi )\), we select the value of \(\omega\) that gives the largest positive real part of the eigenvalue \(\lambda\), as this mode produces the most visible patterns.
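The selection of the dominant mode can be illustrated numerically. The following is a minimal sketch, assuming NumPy and a simple scan over a user-chosen range of \(\omega\); the function name `dominant_mode`, the scan range and its resolution are illustrative choices, and the Jacobian and diffusion coefficients are those of the example above.

```python
import numpy as np


def dominant_mode(jac, diff, omegas):
    """Scan spatial frequencies and return (omega, growth rate) for the mode
    with the largest real part among the eigenvalues of J - d*omega^2."""
    jac = np.asarray(jac, dtype=float)
    dmat = np.diag(diff)
    growth = np.array([
        np.linalg.eigvals(jac - dmat * om**2).real.max() for om in omegas
    ])
    k = int(np.argmax(growth))
    return omegas[k], growth[k]


J = [[1.0, -1.0], [2.0, -1.5]]   # reaction Jacobian of the example
d = [1e-4, 6e-4]                 # diffusion coefficients (d1, d2)
omegas = np.linspace(0.0, 150.0, 3001)
omega_star, lam_star = dominant_mode(J, d, omegas)
print(omega_star, lam_star)
```

A positive growth rate at `omega_star` indicates that the homogeneous state is unstable to a perturbation of that spatial frequency, whereas the growth rate at \(\omega =0\) stays negative for these parameters.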

3 B-spline quasi-interpolants

In the CBSQI method, the approximation is written as a linear combination of cubic B-spline functions over the considered space domain. Let \(X_n=\{x_i=\alpha +ih:i=0,1,\ldots ,n\}\) be the uniform partition of the interval \([\alpha , \beta ]\), where \(h=(\beta -\alpha )/n\). Let the set \(\{B_i^d: i=1,2,\ldots ,n+d\}\) form a basis for the space of splines of degree d, which can be derived from the de Boor-Cox formula [37]. Since the support of a B-spline \(B_i^d\) lies within the interval \([x_{i-d-1}, x_i]\), we need to add \(d+1\) knots at each endpoint of the interval, i.e., to the left of \(\alpha\) and to the right of \(\beta\). This extension is performed as follows

For a function w, the B-spline quasi-interpolant (BSQI) of degree d can be defined as [32]

where \(\mu _i\) are the coefficients to be determined, which rely on the two forms of the local support property of B-splines: (1) the B-spline \(B_i^d\) is non-zero only within the interval \([x_{i-d-1}, x_i]\), and (2) only \(d+1\) B-splines in \(B^d(X_n)\) are non-zero on \([x_{\eta }, x_{\eta +1}]\). In addition, the quasi-interpolant \(Q_dw\) is required to be exact on \({\mathbb {P}}_n^d\), i.e., \(Q_dw=w\) for all \(w\in {\mathbb {P}}_n^d\), where \({\mathbb {P}}_n^d\) is the space of polynomials of degree at most d. This construction is the discrete quasi-interpolant presented by Sablonnière [38]. The derivatives of a function are approximated by the derivatives of the corresponding B-spline quasi-interpolant. Flexibility and simplicity are the main advantages of BSQI.

3.1 CBSQI method

For a function w, the cubic B-spline quasi-interpolant is obtained from (28) by taking d=3 and is given by

where all nodes are taken to be the same as the knots, i.e., \(\xi _i=x_i\) \((i=0,1,\ldots ,n)\), and the coefficients \(\mu _i(w)\), \(i=1,2,\ldots ,n+3\), are computed in terms of the values \(w_i\) as follows

and the related B-spline basis functions are as follows

The derivatives of \(Q_3w\) are computed as follows.

and

where \((B_i^3)'\) and \((B_i^3)''\) can be obtained from (31).

and

The above expression can be written in the form of a matrix as

where \({\mathfrak {D}}^{(1)}\) represents the coefficient matrix of order \((n+1)\times (n+1)\), which is obtained from Eqs. (34)–(35), and \(w=(w_0,w_1,\ldots ,w_n)^T\).

For the second derivative, we have

and

Similarly, the above expression can be written in the form of a matrix as

where \({\mathfrak {D}}^{(2)}\) represents the coefficient matrix of order \((n+1)\times (n+1)\), which is obtained from Eqs. (37)–(38).
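To make the matrix form concrete, the sketch below assembles a second-derivative matrix of order \((n+1)\times (n+1)\) on a uniform grid. The stencils are assumptions, since Eqs. (37)–(38) are not reproduced here: they are the ones commonly reported for cubic B-spline quasi-interpolation, namely \((-1,10,-18,10,-1)/(6h^2)\) at interior nodes, \((1,-2,1)/h^2\) at the nodes next to the boundary, and the one-sided \((2,-5,4,-1)/h^2\) at the boundary nodes. The function name `cbsqi_d2` is hypothetical.

```python
import numpy as np


def cbsqi_d2(n, h):
    """Second-derivative matrix on a uniform grid with n+1 nodes and step h.

    Assumed stencils (not taken from the text):
      interior nodes 2..n-2 : (-1, 10, -18, 10, -1) / (6 h^2)
      nodes 1 and n-1       : (1, -2, 1) / h^2
      nodes 0 and n         : one-sided (2, -5, 4, -1) / h^2
    """
    if n < 4:
        raise ValueError("need n >= 4 for the five-point interior stencil")
    D = np.zeros((n + 1, n + 1))
    D[0, :4] = [2.0, -5.0, 4.0, -1.0]
    D[1, :3] = [1.0, -2.0, 1.0]
    for i in range(2, n - 1):
        D[i, i - 2:i + 3] = np.array([-1.0, 10.0, -18.0, 10.0, -1.0]) / 6.0
    D[n - 1, n - 2:] = [1.0, -2.0, 1.0]
    D[n, n - 3:] = [-1.0, 4.0, -5.0, 2.0]
    return D / h**2


# Check on w(x) = x^3, whose second derivative is 6x.
n = 20
x = np.linspace(0.0, 1.0, n + 1)
D2 = cbsqi_d2(n, x[1] - x[0])
err = np.max(np.abs(D2 @ x**3 - 6.0 * x))
print(err)
```

All three stencils differentiate cubic polynomials exactly, which is why the test function \(x^3\) is reproduced up to rounding error.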

4 Implementation of the method

Now, we apply the CBSQI method to the 1-D model and discretize the time derivative in the usual finite difference way

where \({\mathfrak {D}}^{(2)}\) is the \((n+1)\times (n+1)\) matrix given in (39), and \(\phi _1(v_i^m,w_i^m)=-v^m_i(w^m_i)^2+\gamma (1-v^m_i)\) and \(\phi _2(v_i^m,w_i^m)=v^m_i(w^m_i)^2-(\gamma +\kappa )w_i^m\), \(i=0,1,\ldots ,n\), form column vectors. The dependent variables \({\varvec{v}}^m=(v_0^m,v_1^m,\ldots ,v_{n}^m)^T\) and \({\varvec{w}}^m=(w_0^m,w_1^m,\ldots ,w_{n}^m)^T\) are also column vectors. For \(m=0\), the vectors \({\varvec{v}}^0\) and \({\varvec{w}}^0\) are obtained from the initial conditions, and the solution of (1) at time level \(m+1\) can be calculated from the explicit scheme (40) once the solution at time level \(m\) is known.
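One explicit time step of this kind can be sketched as follows. The code assumes a forward Euler update \({\varvec{v}}^{m+1}={\varvec{v}}^m+\varDelta t\,[d_1{\mathfrak {D}}^{(2)}{\varvec{v}}^m+\phi _1]\) and the analogous update for \({\varvec{w}}\). The function name, the time step `dt` and the stand-in second-difference matrix are hypothetical; in practice the CBSQI matrix \({\mathfrak {D}}^{(2)}\) would be passed instead.

```python
import numpy as np


def gray_scott_euler_step(v, w, D2, d1, d2, gamma, kappa, dt):
    """One explicit (forward Euler) step of the semi-discrete 1-D model."""
    phi1 = -v * w**2 + gamma * (1.0 - v)        # reaction term for v
    phi2 = v * w**2 - (gamma + kappa) * w       # reaction term for w
    v_new = v + dt * (d1 * (D2 @ v) + phi1)
    w_new = w + dt * (d2 * (D2 @ w) + phi2)
    return v_new, w_new


# Stand-in second-difference matrix (hypothetical, not the CBSQI matrix).
n, h = 50, 2.0
D2 = (np.diag(np.ones(n), -1) - 2.0 * np.eye(n + 1)
      + np.diag(np.ones(n), 1)) / h**2
D2[0, :] = 0.0    # crude boundary treatment: no diffusion update at the ends
D2[-1, :] = 0.0

# The homogeneous state (v, w) = (1, 0) should be left unchanged by a step.
v0 = np.ones(n + 1)
w0 = np.zeros(n + 1)
v1, w1 = gray_scott_euler_step(v0, w0, D2, 1.0, 0.01, 0.02, 0.066, 0.1)
```

Starting from the trivial state \((v,w)=(1,0)\) is a convenient sanity check, because both reaction terms and the discrete diffusion term vanish there.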

Now, we extend the proposed method to two-dimensional problems, for which we introduce the Kronecker product [39].

For the domain \(\varOmega =[\alpha ,\beta ]\times [\gamma ,\delta ]\), let the uniform mesh be \(\{(x_i,y_j): x_i=\alpha +ih_x:i=0,1,\ldots ,n,\; y_j=\gamma +jh_y:j=0,1,\ldots ,m\},\) where \(h_x=(\beta -\alpha )/n\) and \(h_y=(\delta -\gamma )/m\). Before further discussion of the proposed method, we recall an important tool.

Kronecker product: The Kronecker product approach is currently very popular for solving higher-dimensional PDEs. For any matrices \(R=[\alpha _{ij}]\in {\mathbb {F}}^{p\times q}\) and \(S=[\beta _{ij}]\in {\mathbb {F}}^{r\times s}\), their Kronecker product is defined as

where \({\mathbb {F}}\) is a field (\({\mathbb {R}}\) or \({\mathbb {C}}\)). Some essential properties and results are presented by Zhang and Ding [40].
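In block form, the standard definition of the Kronecker product of \(R\) and \(S\) gives the \(pr\times qs\) matrix

\[
R\otimes S=\begin{pmatrix} \alpha _{11}S & \cdots & \alpha _{1q}S \\ \vdots & \ddots & \vdots \\ \alpha _{p1}S & \cdots & \alpha _{pq}S \end{pmatrix}\in {\mathbb {F}}^{pr\times qs}.
\]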

The approximations of the first and second-order derivatives are given in Eqs. (36) and (39). We use these 1-D differentiation matrices and Kronecker products to approximate the 2-D partial derivatives [36]. The first-order derivatives of w in 2-D with respect to x and y are approximated by \(({\mathfrak {D}}_x^{(1)}\otimes I_y)\) and \((I_x\otimes {\mathfrak {D}}_y^{(1)})\), respectively, where \({\mathfrak {D}}_x^{(1)}\) and \({\mathfrak {D}}_y^{(1)}\) represent the 1-D first-order differentiation matrices in the x and y directions. The second-order derivatives are defined similarly.

So, after applying the above scheme to the system, i.e., Eq. (1), we get
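The Kronecker construction can be sketched with NumPy's `np.kron`. The example below is illustrative only: it uses a stand-in 1-D second-derivative matrix (`second_diff`, hypothetical and not the CBSQI matrix), keeps all \((n+1)(m+1)\) grid nodes instead of only the interior ones, and assumes the 2-D array is flattened row by row with the x index varying slowest.

```python
import numpy as np


def second_diff(n, h):
    """Stand-in 1-D second-derivative matrix (hypothetical): central
    differences inside, one-sided (2, -5, 4, -1) rows at the two ends."""
    D = np.zeros((n + 1, n + 1))
    for i in range(1, n):
        D[i, i - 1:i + 2] = [1.0, -2.0, 1.0]
    D[0, :4] = [2.0, -5.0, 4.0, -1.0]
    D[n, n - 3:] = [-1.0, 4.0, -5.0, 2.0]
    return D / h**2


n, m = 8, 10
x = np.linspace(0.0, 1.0, n + 1)
y = np.linspace(0.0, 2.0, m + 1)

# D_x (x) I_y acts along x, I_x (x) D_y acts along y.
Dxx = np.kron(second_diff(n, x[1] - x[0]), np.eye(m + 1))
Dyy = np.kron(np.eye(n + 1), second_diff(m, y[1] - y[0]))

# Test function w = x^2 + 3 y^2 with Laplacian 2 + 6 = 8.
X, Y = np.meshgrid(x, y, indexing="ij")
W = X**2 + 3.0 * Y**2
lap = ((Dxx + Dyy) @ W.ravel()).reshape(n + 1, m + 1)
print(lap.min(), lap.max())
```

The ordering of the factors in `np.kron` must match the flattening order of the unknowns; with the column-by-column ordering the two factors would be swapped.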

where \(({\mathfrak {D}}_x^{(2)}\otimes I_y)\) and \((I_x\otimes {\mathfrak {D}}_y^{(2)})\) are matrices of order \((n-1)(m-1)\times (n-1)(m-1)\), and \((\hat{b_1}\otimes \hat{I_y})\) and \((\hat{b_2}\otimes \hat{I_y})\) are \((n-1)(m-1)\times 1\) column vectors containing boundary values, where \(\hat{I_y}\) denotes the column vector with all entries equal to one. The dependent variables \(v(x_i,y_j,t)\) and \(w(x_i,y_j,t)\) are arranged as \((n-1)(m-1)\times 1\) column vectors. All the terms are defined as follows:

where

where \(v'(x_0), v'(x_n), w'(x_0)\) and \(w'(x_n)\) represent the boundary derivatives of the variables v and w, respectively. The above matrices are obtained by using the approximations from Eq. (39) and the Neumann boundary conditions. For the Neumann boundary conditions, we derive the following results:

Neumann boundary conditions: If Neumann boundary conditions are given, then from Taylor’s expansion we have

On multiplying (43) by 4 and subtracting (44), we get

Similarly,

Similarly, we can derive the expression for \(\dfrac{\partial w}{\partial y}(x,y)\)
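The elimination step described above can be sketched as follows, assuming that (43) and (44) are the Taylor expansions of \(w\) at \(x+h\) and \(x+2h\); the step size \(h\) and the forward direction are assumptions of this sketch, and \(w\), \(w_x\), \(w_{xx}\) are evaluated at \((x,y)\):

\[
\begin{aligned}
w(x+h,y) &= w + h\,w_x + \tfrac{h^2}{2}\,w_{xx} + O(h^3),\\
w(x+2h,y) &= w + 2h\,w_x + 2h^2\,w_{xx} + O(h^3),\\
4\,w(x+h,y) - w(x+2h,y) &= 3\,w + 2h\,w_x + O(h^3),\\
\frac{\partial w}{\partial x}(x,y) &= \frac{-3\,w(x,y) + 4\,w(x+h,y) - w(x+2h,y)}{2h} + O(h^2).
\end{aligned}
\]

The \(w_{xx}\) terms cancel in the third line, which is what makes the one-sided formula second-order accurate. The expression in the y direction follows by exchanging the roles of x and y.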

Using the initial and boundary conditions, system (41)–(42) can be written as

where \({\mathbb {M}}\) and \({\mathbb {N}}\) are the combined matrices containing the boundary values and the coefficient matrices. The system (47) can be rewritten as a set of ODEs in time, i.e., for each \(i=1,2,\ldots ,n-1\), \(j=1,2,\ldots ,m-1\), we have

where \({\mathbb {W}}=[v\; w]^T\) and \({\mathbb {G}}\) denotes a differential operator. The system of ODEs (48) is solved by the strong stability preserving Runge–Kutta time-stepping scheme (SSP-RK-43), because of its numerous advantages. The final solution is obtained by applying the following algorithm.
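A four-stage, third-order SSP Runge–Kutta step can be sketched as below. The stage coefficients are those of the widely used SSP-RK(4,3) scheme and are assumed here to match the scheme referred to as SSP-RK-43; the function names and the scalar test problem are illustrative.

```python
import numpy as np


def ssp_rk43_step(G, W, dt):
    """One step of the four-stage, third-order SSP Runge-Kutta scheme
    for the autonomous system dW/dt = G(W)."""
    W1 = W + 0.5 * dt * G(W)
    W2 = W1 + 0.5 * dt * G(W1)
    W3 = (2.0 / 3.0) * W + (1.0 / 3.0) * W2 + (dt / 6.0) * G(W2)
    return W3 + 0.5 * dt * G(W3)


def integrate(G, W0, dt, steps):
    """Advance W0 by `steps` steps of size dt."""
    W = np.array(W0, dtype=float)
    for _ in range(steps):
        W = ssp_rk43_step(G, W, dt)
    return W


# Check on dW/dt = -W, whose exact solution at t = 1 is exp(-1).
W_end = integrate(lambda W: -W, [1.0], 0.01, 100)
err = abs(W_end[0] - np.exp(-1.0))
print(err)
```

For the reaction-diffusion system, `G` would return the discretized right-hand side of (48) for the stacked vector \({\mathbb {W}}\).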

5 Stability of CBSQI scheme

For the stability analysis of the presented scheme, we have the following results:

Definition

Consider the autonomous system of ordinary differential equations of the form

i.e., the variable t does not appear explicitly in Eq. (49). Then \(x_0\) is called a critical point of system (49) if \(g(x_0)=0\).

Theorem 5.1

Let \(\dfrac{dV}{dt}={\mathbb {M}}V+G(V)\) be a non-linear system and \(\dfrac{dV}{dt}={\mathbb {M}}V\) be the corresponding linear system. Let (0, 0) be a simple critical point of the non-linear system and

According to the Lyapunov theory, if the critical point (0, 0) of the linear system is asymptotically stable, then it is also asymptotically stable for the original non-linear system.

Proof

The complete proof and other details are available in [41]. Now, consider the Gray-Scott model

The critical point of the above system is \((v,w)=(1,0)\), obtained by setting \({\text {d}}v/{\text {d}}t = {\text {d}}w/{\text {d}}t = 0\) with no flux. To simplify the system, we use the following transformation:

Now, the above system (52) is discretized by the CBSQI method and then written as follows

where \({\mathbb {M}}= \left[ {\begin{array}{cc} A_1 &{} 0\\ 0 &{} A_2 \end{array}}\right] ,\)

and

where the matrix \({\mathfrak {D}}_x^{(2)}\) is taken from Eq. (39).

By Theorem 5.1, the stability or instability of the non-linear system is determined by that of the corresponding linear system. For this purpose, the following condition must be verified.

Since \(-g_{ij}(\tau )=h_{ij}(\tau ), \;\forall i=0,1,\ldots ,n,\, j=0,1,\ldots ,m\), it suffices to prove it for \(g_{ij}\). Using the non-linear term of Eq. (53) in (54), we have

Therefore, for each \(\epsilon >0\) there exists a \(\delta\) with \(\delta ^2=\epsilon\) such that

Hence

Therefore, we conclude that the non-linear system (53) is stable or unstable according to whether the corresponding linear system is stable or unstable.

Numerous methods are available in the literature to study the stability of a linear system. Here, we use the simple matrix method, i.e., we calculate the eigenvalues of the linear system. The system is stable if the real parts of its eigenvalues are non-positive and asymptotically stable if they are negative. In the numerical examples, we have computed the eigenvalues of the matrix \({\mathbb {M}}\) and found their real parts to be non-positive, as shown in Figs. 3 and 6. Hence, the proposed algorithm is stable. \(\square\)

6 Numerical experiments

To check the performance of the proposed scheme, we apply it to some test problems and discuss the obtained results in detail.

Problem 1

We consider the 1-D Gray-Scott model

The domain of consideration for the 1-D model is \(x\in [-50, 50]\). The values of the parameters are taken as \(d_1=1.0, d_2=0.01, \gamma = 0.02\) and \(\kappa =0.066\). For both chemical components v and w, the initial and boundary conditions are as follows

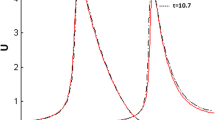

The patterns formed by the Gray-Scott model are found in nature, for example on leopards, zebras, fish and butterfly wings. We clearly observe that the Turing pattern grows over time. In Fig. 2, the Turing pulse splits at time \(t=500\), and new pulses form as time increases. We determined the eigenvalues of the matrix corresponding to Problem 1; Fig. 3 shows the region of eigenvalues.

Fig. 3 Eigenvalues for Problem 1 with parameters \(d_1=8\times 10^{-5}, d_2=4\times 10^{-5}, \gamma = 0.024\) and \(\kappa =0.06\)

Problem 2

We consider the 2-D Gray-Scott model (1)

The domain of consideration is \((x,y)\in [0, 2.5]\times [0, 2.5]\). The values of the parameters are taken as \(d_1=8\times 10^{-5}, d_2=4\times 10^{-5}, \gamma = 0.024\) and \(\kappa =0.06\). For both chemical components v and w, zero Neumann conditions are imposed at the boundaries. The initial conditions are as follows

Initially, only four spots are present for component w in the center of the considered domain. As time increases, these spots divide into eight spots at \(t=500\); see Fig. 4. Thus, the Turing pattern grows over time.

Problem 3

Consider the 2-D reaction-diffusion model in the following form

The domain of consideration is \((x,y)\in [0, 1]\times [0, 1]\). The values of the parameters are taken as \(d_1=0.05, d_2=1, a=0.1305, b=0.7695\) and \(\kappa =100\). For both chemical components v and w, zero Neumann conditions are imposed at the boundaries. The initial conditions are as follows

Initially, the chemical shows a small Gaussian perturbation over the equilibrium state (\(v\equiv 0.90, w\equiv 0.95\)). Because of diffusion and reaction, this initial perturbation grows and then splits. In Fig. 5, the contour plot of component v is shown at time levels \(t=0.5,1.0\) and 2.0. We determined the eigenvalues of the matrix corresponding to Problem 3; Fig. 6 shows the region of eigenvalues.

Fig. 6 Eigenvalues for Problem 3 with parameters \(d_1=4\times 10^{-5}, d_2=2\times 10^{-5}, \gamma = 0.024\) and \(\kappa =0.06\)

Problem 4

We consider the 2-D Gray-Scott model (1).

The domain of consideration is \(\varOmega = [0, 2.5]\times [0, 2.5]\). For both chemical components v and w, zero Neumann boundary conditions are imposed. The initial conditions are taken as follows:

where \(\delta\) is a small square chosen at the center of the considered domain. For different values of the parameters \(\gamma\) and \(\kappa\), we get different patterns. In all cases, component w is initially present only in a small square at the center of the considered domain. As time increases, it splits more and more in a symmetrical fashion. We thus obtain very beautiful patterns, which are found in many biological and chemical species.

Case-1. The parameters are taken as \(d_1=4\times 10^{-5}, d_2=2\times 10^{-5}, \gamma = 0.037\) and \(\kappa =0.06\). The resulting patterns are presented in Fig. 7.

Fig. 7 Contour plots of pattern formation at different time levels for case-1 in Problem 4

Case-2. The parameters are taken as \(d_1=4\times 10^{-5}, d_2=2\times 10^{-5}, \gamma = 0.03\) and \(\kappa =0.062\). The resulting patterns are presented in Fig. 8.

Fig. 8 Contour plots of pattern formation at different time levels for case-2 in Problem 4

Case-3. The parameters are taken as \(d_1=4\times 10^{-5}, d_2=2\times 10^{-5}, \gamma = 0.04\) and \(\kappa =0.06\). The resulting patterns are presented in Fig. 9.

Fig. 9 Contour plots of pattern formation at different time levels for case-3 in Problem 4

Case-4. The parameters are taken as \(d_1=4\times 10^{-5}, d_2=2\times 10^{-5}, \gamma = 0.025\) and \(\kappa =0.06\). The resulting patterns are presented in Fig. 10.

Fig. 10 Contour plots of pattern formation at different time levels for case-4 in Problem 4

7 Conclusions

In this work, the authors proposed a numerical algorithm based on CBSQI to simulate 1-D and 2-D Gray-Scott reaction-diffusion models. In the proposed algorithm, 2-D problems are solved numerically using a combination of the Kronecker product and 1-D differentiation matrices. The scheme is validated against four benchmark problems. The main outcomes of the work are as follows:

1. To the best of the authors’ knowledge, this is the first application of CBSQI to the 2-D reaction-diffusion equation. The major highlights of this method are its better accuracy, its efficacy in solving these equations, and its ease of implementation.

2. The linear stability of the system as well as the stability of the proposed method is discussed.

3. The obtained Turing patterns of the Gray-Scott model are very similar to those available in the literature [2,3,4, 42].

4. The proposed algorithm is capable of simulating the models up to a large time, t = 15,000. However, for comparison purposes, results are reported for t = 10,000.

5. The proposed algorithm can be extended to higher-dimensional problems with some modifications.

References

Turing AM (1952) The chemical basis of morphogenesis. Philos Trans R Soc Lond Ser B 237(641):37–72

Jiwari R, Singh S, Kumar Aj (2017) Numerical simulation to capture the pattern formation of coupled reaction-diffusion models. Chaos Solitons Fractals 103:422–439

Sayama H (2015) Introduction to the modeling and analysis of complex systems. Open SUNY Textbooks, New York

Mittal RC, Rohila R (2016) Numerical simulation of reaction-diffusion systems by modified cubic b-spline differential quadrature method. Chaos Solitons Fractals 92:9–19

Liu H, Wang W (2010) The amplitude equations of an epidemic model. Sci Technol Eng 10(8):1929–1933

Dutt AK (2012) Amplitude equation for a diffusion-reaction system: the reversible Sel’kov model. AIP Adv 2(4):042125

Lee I-H, Cho U-I (2000) Pattern formations with Turing and Hopf oscillating pattern in a discrete reaction-diffusion system. Bull Korean Chem Soc 21(12):1213–1216

Maini PK, Woolley TE, Baker RE, Gaffney EA, Lee SS (2012) Turing’s model for biological pattern formation and the robustness problem. Interface Focus 2(4):487–496

Vanag VK, Epstein IR (2009) Cross-diffusion and pattern formation in reaction-diffusion systems. Phys Chem Chem Phys 11(6):897–912

Fanelli D, Cianci C, Di Patti F (2013) Turing instabilities in reaction-diffusion systems with cross diffusion. Eur Phys J B 86(4):142

Shi J, Xie Z, Little K (2011) Cross-diffusion induced instability and stability in reaction-diffusion systems. J Appl Anal Comput 1(1):95–119

Othmer HG, Scriven LE (1971) Instability and dynamic pattern in cellular networks. J Theor Biol 32(3):507–537

Nakao H, Mikhailov AS (2008) Turing patterns on networks. arXiv preprint arXiv:0807.1230

Nakao H, Mikhailov AS (2010) Turing patterns in network-organized activator-inhibitor systems. Nat Phys 6(7):544

Hata S, Nakao H, Mikhailov AS (2012) Global feedback control of Turing patterns in network-organized activator-inhibitor systems. EPL (Europhysics Letters) 98(6):64004

Udwadia FE, Koganti PB (2015) Optimal stable control for nonlinear dynamical systems: an analytical dynamics based approach. Nonlinear Dyn 82(1–2):547–562

Skandari MHN (2015) On the stability of a class of nonlinear control systems. Nonlinear Dyn 80(3):1245–1256

Saldi N, Yüksel S, Linder T (2016) Near optimality of quantized policies in stochastic control under weak continuity conditions. J Math Anal Appl 435(1):321–337

Liu K, Fridman E, Johansson KH (2015) Networked control with stochastic scheduling. IEEE Trans Autom Control 60(11):3071–3076

Cohen D, Nigmatullin R, Kenneth O, Jelezko F, Khodas M, Retzker A (2019) Nano-NMR based flow meter. arXiv preprint arXiv:1903.02348

Hale JK, Peletier LA, Troy WC (2000) Exact homoclinic and heteroclinic solutions of the Gray-Scott model for autocatalysis. SIAM J Appl Math 61(1):102–130

Kolokolnikov T, Ward MJ, Wei J (2006) Zigzag and breakup instabilities of stripes and rings in the two-dimensional Gray-Scott model. Stud Appl Math 116(1):35–95

Zheng Q, Shen J, Wang Z (2018) Pattern dynamics of the reaction-diffusion immune system. PLoS One 13(1):e0190176

Castelli R (2017) Rigorous computation of non-uniform patterns for the 2-dimensional Gray-Scott reaction-diffusion equation. Acta Appl Math 151(1):27–52

McGough JS, Riley K (2004) Pattern formation in the Gray-Scott model. Nonlinear Anal Real World Appl 5(1):105–121

Yadav OP, Jiwari R (2019) A finite element approach to capture Turing patterns of autocatalytic Brusselator model. J Math Chem 57(3):769–789

Jordehi AR (2015) A chaotic artificial immune system optimisation algorithm for solving global continuous optimisation problems. Neural Comput Appl 26(4):827–833

Matzinger P (1994) Tolerance, danger, and the extended family. Annu Rev Immunol 12(1):991–1045

McCoy DF, Devarajan V (1997) Artificial immune systems and aerial image segmentation. In: 1997 IEEE international conference on systems, man, and cybernetics. Computational cybernetics and simulation, volume 1, pp 867–872. IEEE

Kuo RJ, Chiang NJ, Chen Z-Y (2014) Integration of artificial immune system and k-means algorithm for customer clustering. Appl Artif Intell 28(6):577–596

Sablonnière P (2005) Univariate spline quasi-interpolants and applications to numerical analysis. Rend Semin Mat Univ Politec Torino 63(3):211–222

Sablonnière P (2007) A quadrature formula associated with a univariate spline quasi interpolant. BIT 47(4):825–837

Zhu C-G, Kang W-S (2010) Applying cubic B-spline quasi-interpolation to solve hyperbolic conservation laws. UPB Sci Bull Ser D 72(4):49–58

Zhu C-G, Kang W-S (2010) Numerical solution of Burgers–Fisher equation by cubic B-spline quasi-interpolation. Appl Math Comput 216(9):2679–2686

Kumar R, Baskar S (2016) B-spline quasi-interpolation based numerical methods for some Sobolev type equations. J Comput Appl Math 292:41–66

Mittal RC, Kumar S, Jiwari R (2020) A cubic B-spline quasi interpolation method for solving two-dimensional unsteady advection diffusion equations. Int J Numer Methods Heat Fluid Flow 30(9):4281–4306

Schumaker LL (2007) Spline functions: basic theory. Cambridge Mathematical Library, 3rd edn. Cambridge University Press, Cambridge

Sablonnière P (2004) Quadratic spline quasi-interpolants on bounded domains of \({\mathbb{R}}^d, d=1,2,3\). Splines, radial basis functions and applications. Rend Semin Mat Univ Politec Torino 61(3):229–246

Trefethen LN (2000) Spectral methods in MATLAB, volume 10. SIAM

Zhang H, Ding F (2013) On the Kronecker products and their applications. J Appl Math. https://doi.org/10.1155/2013/296185

Simmons GF (2016) Differential equations with applications and historical notes. CRC Press, Boca Raton

Hundsdorfer W, Verwer JG (2013) Numerical solution of time-dependent advection-diffusion-reaction equations, vol 33. Springer Science & Business Media, New York

Acknowledgements

The author Sudhir Kumar would like to thank the Council of Scientific and Industrial Research (CSIR), Government of India (File no: 09/143(0889)/2017-EMR-I).


Cite this article

Mittal, R.C., Kumar, S. & Jiwari, R. A cubic B-spline quasi-interpolation algorithm to capture the pattern formation of coupled reaction-diffusion models. Engineering with Computers 38 (Suppl 2), 1375–1391 (2022). https://doi.org/10.1007/s00366-020-01278-3
