Abstract

Animals emit visual signals that involve simultaneous, sequential movements of appendages that unfold with varying dynamics in time and space. Algorithms have been recently reported (e.g. Peters et al. in Anim Behav 64:131–146, 2002) that enable quantitative characterization of movements as optical flow patterns. For decades, acoustical signals have been rendered by techniques that decompose sound into amplitude, time, and spectral components. Using an optic-flow algorithm we examined visual courtship behaviours of jumping spiders and depict their complex visual signals as “speed waveform”, “speed surface”, and “speed waterfall” plots analogous to acoustic waveforms, spectrograms, and waterfall plots, respectively. In addition, these “speed profiles” are compatible with analytical techniques developed for auditory analysis. Using examples from the jumping spider Habronattus pugillis we show that we can statistically differentiate displays of different “sky island” populations supporting previous work on diversification. We also examined visual displays from the jumping spider Habronattus dossenus and show that distinct seismic components of vibratory displays are produced concurrently with statistically distinct motion signals. Given that dynamic visual signals are common, from insects to birds to mammals, we propose that optical-flow algorithms and the analyses described here will be useful for many researchers.


Introduction

Studying animal behaviour often means analysing movements in time and space. While techniques are readily available for analysing static visual patterns and ornaments (Endler 1990), this is less true for dynamic sequences of visual signals (motion displays) (but see Zanker and Zeil 2001).

An extensive literature exists on the study of motion as it pertains to neural processing, navigation and the extraction of motion information from visual scenes (reviewed in Barron et al. 1994; Zanker and Zeil 2001). In neurobiology in particular, techniques have been motivated by the need to accurately describe biologically relevant features of motion as an animal moves through its environment to identify coding strategies in the processing of visual information (Zanker 1996; Zeil and Zanker 1997; Zanker and Zeil 2001; Eckert and Zeil 2001; Tammero and Dickinson 2002). While these studies have been integral to an examination of visual processing, such techniques have limited application in studies of behavioural ecology and communication as they do not provide simple, intuitive depictions of motion for quantification and comparison. Another extensive body of literature on the analysis of motion exists in the study of biomechanics, particularly in the kinematics of limb motion (Tammero and Dickinson 2002; Jindrich and Full 2002; Nauen and Lauder 2002; Vogel 2003; Fry et al. 2003; Alexander 2003; Hedrick et al. 2004). Such techniques could, in principle, provide extensive information on motion signals but these computationally intensive approaches may not efficiently capture aspects of visual motion signals that are most relevant in the context of communication signals. In addition, both techniques present the experimenter with large data sets and it is often desirable to reduce the data in order to glean relevant information.

In particular, Peters et al. (2002; see also Peters and Evans 2003a, b) have provided a significant advance in the analysis of motion signals in communication. They described powerful techniques for the analysis of visual motion as optical flow patterns in an attempt to demonstrate that signals are conspicuous against background motion noise (Peters et al. 2002; Peters and Evans 2003a, b). Here we build upon these pioneering techniques, using the previous algorithms to develop another depiction of visual signals, which we then use to analyse courtship displays of jumping spiders from the genus Habronattus. In addition, we show that optical flow approaches are suitable for quantification and classification by methods equivalent to those used in audio analysis (Cortopassi and Bradbury 2000).

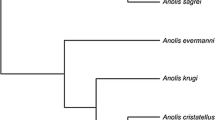

Male Habronattus court females by performing an elaborate sequence of temporally complex motions of multiple colourful body parts and appendages (Peckham and Peckham 1889, 1890; Crane 1949; Forster 1982b; Jackson 1982; Maddison and McMahon 2000; Elias et al. 2003). Habronattus has recently been used as a model to study species diversification (Masta 2000; Maddison and McMahon 2000; Masta and Maddison 2002; Maddison and Hedin 2003; Hebets and Maddison 2005) and multicomponent signalling (Maddison and Stratton 1988; Elias et al. 2003, 2004, 2005). In these studies there has been an implicit assumption that qualitative differences in dynamic visual courtship displays can reliably distinguish among species (Richman 1982), populations (Maddison 1996; Maddison and McMahon 2000; Masta and Maddison 2002; Maddison and Hedin 2003; Hebets and Maddison 2005), and seismic signalling components (Elias et al. 2003, 2004, 2005). It has yet to be determined, however, whether such qualitative differences can stand up to rigorous statistical comparisons (Walker 1974; Higgins and Waugaman 2004). To test hypotheses on signal evolution and function it is crucial to understand the signals in question. Thus it is necessary to test whether qualitative signal categories are consistently different.

Our method reduces the dimensionality of visual motion signals by integrating over spatial dimensions to derive patterns of motion speed as a function of time. This method may not be adequate for some classes of signal (e.g. which differ solely in position or direction of motion components). Our results demonstrate, however, that for many signals this technique allows objective quantitative comparisons of complex visual motion signals. This will potentially provide a wide range of useful behavioural measures to a variety of disciplines from systematics and behavioural ecology to neurobiology and psychology.

Methods

Spiders

Male and female H. pugillis and H. dossenus were field collected from different mountain ranges in Arizona (Atascosa—H. dossenus and H. pugillis; Santa Catalina—H. pugillis; Santa Rita—H. pugillis; Galiuro—H. pugillis). Animals were housed individually and kept in the lab on a 12:12 light:dark cycle. Once a week, spiders were fed fruit flies (Drosophila melanogaster) and juvenile crickets (Acheta domesticus).

Recording procedures

Recording procedures were similar to a previous study (Elias et al. 2003). We anaesthetized female jumping spiders with CO2 and tethered them to a wire from the dorsum of the cephalothorax with low melting point wax. We held females in place with a micromanipulator on a substrate of stretched nylon fabric (25×30 cm). This allowed us to videotape male courtship from a predictable position, as males approach and court females in their line of sight. Males were dropped 15 cm from the female and allowed to court freely. Females were awake during courtship recordings. Recordings commenced when males approached females. For H. pugillis, we used standard video taping of courtship behaviour (30 fps, Navitar Zoom 7000 lens, Panasonic GP-KR222, Sony DVCAM DSR-20 digital VCR) and then digitized the footage to computer using Adobe Premiere (San Jose, CA, USA) with a Cinepak codec. Video files were stored as *.avi files. For H. dossenus, we used digital high-speed video (500 fps, RedLake Motionscope PCI 1000, San Diego, CA, USA) acquired using Midas software (Xcitex, Cambridge, MA, USA). We selected suitable video clips of courtship behaviour based on camera steadiness and length of behavioural displays (<350 frames). For the H. pugillis analysis, courtship segments from several individuals were used (Santa Catalina, N=5; Galiuro, N=8; Santa Rita, N=6; Atascosa, N=4). The camera was positioned approximately 30° from a zero azimuth position (“head-on”) (azimuthal range 10°–70°). For the H. dossenus analysis, different signal components from different individuals (N=5) were analysed. The camera was positioned approximately 90° from a zero azimuth position (azimuthal range 75°–95°). It was difficult to predict precisely the final courtship position of the animals since males sometimes did not court the female “head-on”, so we included a wide range of camera angles in the analysis. All further analysis was conducted using Matlab (The Mathworks, Natick, MA, USA).

Motion analysis

The mathematical methods used for motion analysis are explained in the next few paragraphs. Full Matlab programs for each analysis step are available at http://www.nbb.cornell.edu/neurobio/land/PROJECTS/MotionDamian/

Cropping/intensity normalization

Video sequences were shot at either 30 (H. pugillis) or 500 fps (H. dossenus). High-speed sequences (500 fps) were reduced to 250 fps for analysis and the intensity of each frame normalized because the high-speed camera automatic gain control tended to oscillate slightly. Normalization ($P_N$) was achieved by the following equation:

where $P_N$ is the normalized pixel intensity, $P_O$ is the original individual pixel intensity, $P_{Avg}$ is the mean pixel value for the whole video sequence, and $P_{FAvg}$ is the mean pixel intensity value in the individual frame. Frames were cropped so that the animal was completely within and spanned nearly the entirety (>75%) of the frame.
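As a minimal sketch of this normalization step, the Python fragment below rescales every frame so that its mean intensity matches the mean of the whole sequence. The multiplicative form $P_N = P_O \cdot P_{Avg} / P_{FAvg}$ is an assumption chosen because a gain error is multiplicative; an additive offset ($P_O + P_{Avg} - P_{FAvg}$) would also be consistent with the symbol definitions above. The function name `normalize_frames` and the nested-list data layout are hypothetical.

```python
def normalize_frames(frames):
    """Rescale each frame so its mean matches the mean of the whole sequence.

    frames: list of frames, each a list of rows of pixel intensities.
    ASSUMED form (not confirmed by the text): P_N = P_O * P_Avg / P_FAvg.
    """
    # P_FAvg: mean intensity of each individual frame.
    frame_means = [
        sum(sum(row) for row in frame) / sum(len(row) for row in frame)
        for frame in frames
    ]
    # P_Avg: mean over the whole sequence (all frames share one size).
    sequence_mean = sum(frame_means) / len(frame_means)
    normalized = []
    for frame, frame_mean in zip(frames, frame_means):
        # Leave an all-black frame unchanged rather than divide by zero.
        scale = sequence_mean / frame_mean if frame_mean else 1.0
        normalized.append([[p * scale for p in row] for row in frame])
    return normalized


# Two 2x2 frames of the same scene; the second is 20% brighter (gain drift).
clip = [[[10.0, 20.0], [30.0, 40.0]], [[12.0, 24.0], [36.0, 48.0]]]
fixed = normalize_frames(clip)
```

After normalization the two frames in this toy clip become identical, which is the behaviour one would want if the only difference between them were camera gain.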

Optical flow calculation

The details of this algorithm are published elsewhere (Barron et al. 1994; Zeil and Zanker 1997; Peters et al. 2002). Briefly, we used a simple gradient optical flow scheme to estimate motion. If a 2-dimensional (2D) video scene includes edges, intensity gradients, or textures, motion in the video scene (as an object sweeps past a given pixel location) can be represented as changing intensity at that pixel. Intensity changes can thus be used to summarize motion from video segments. Such motion calculations are widely used in robotics and machine vision to analyse video sequences (e.g. http://www.ifi.unizh.ch/groups/ailab/projects/sahabot/).

Our video data were converted into an N×M×T matrix where N is the number of pixels in the horizontal direction, M is the number of pixels in the vertical direction, and T is the number of video frames. The 3D matrix was smoothed with a 5×5×5 Gaussian convolution kernel with a standard deviation of one pixel (Barron et al. 1994). Derivatives in all three directions were computed using a second-order (centred, 3-point) algorithm. This motion estimate is based on the assumption that pixel intensities only change from frame-to-frame because of motion of objects passing by the pixels. The local speed estimate ($v_g$) was calculated as follows:

$$v_g = -\frac{\partial I/\partial t}{|\nabla I|}\,\frac{\nabla I}{|\nabla I|}$$

where $v_g$ is the local object velocity estimate in the direction of the spatial intensity gradient, $I$ is an array of intensities of pixels, $t$ is the frame number, $\nabla I$ is the spatial intensity gradient, and $|\cdot|$ is the magnitude operator (Barron et al. 1994). The local speed estimate is defined as the magnitude of $v_g$.
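The gradient scheme can be illustrated with a short Python sketch. It uses the standard normal-flow relation from the gradient constraint (Barron et al. 1994), in which the speed along the intensity gradient is $|\partial I/\partial t| / |\nabla I|$, together with centred 3-point differences. The Gaussian smoothing step is omitted for brevity. The function name `local_speed` and the `min_gradient` cutoff for textureless pixels are hypothetical additions, not taken from the Matlab programs.

```python
import math

def local_speed(video, min_gradient=1e-6):
    """Gradient-based speed estimate |dI/dt| / |grad I| at interior pixels.

    video[t][y][x] holds intensities. Returns a dict mapping (t, y, x) to
    speed in pixels per frame. Pixels whose spatial gradient magnitude is
    below min_gradient (a hypothetical cutoff) are skipped.
    """
    speeds = {}
    for t in range(1, len(video) - 1):
        for y in range(1, len(video[t]) - 1):
            for x in range(1, len(video[t][y]) - 1):
                # Centred 3-point differences in x, y and t.
                ix = (video[t][y][x + 1] - video[t][y][x - 1]) / 2.0
                iy = (video[t][y + 1][x] - video[t][y - 1][x]) / 2.0
                it = (video[t + 1][y][x] - video[t - 1][y][x]) / 2.0
                grad = math.hypot(ix, iy)
                if grad >= min_gradient:
                    speeds[(t, y, x)] = abs(it) / grad
    return speeds


# A linear intensity ramp sliding right by 2 pixels per frame.
ramp = [[[3.0 * (x - 2 * t) for x in range(6)] for _ in range(4)]
        for t in range(5)]
speeds = local_speed(ramp)
```

For this synthetic ramp every interior pixel yields a speed of 2 pixels per frame, matching the imposed shift. On real footage the estimate is only meaningful where edges, gradients, or texture are present.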

Speed profile plots

The speed waveform is a simple average of the local speed estimates (v g) for objects over all pixels in the frame (Peters and Evans 2003a). We also defined a speed surface (analogous to a spectrogram). The speed surface is a 2D plot with frame number on the x-axis, pixel speed bins on the y-axis, and the colour in each bin related to the log of the number of pixels moving at that speed. In other words, at each frame, we plotted a histogram of the number of pixels showing movement at a particular speed range. Both of these plots represented the complete speed profiles of each video clip. We also constructed a speed “waterfall” plot which represents the speed surface as a 3-dimensional (3D) plot, with the z-axis showing the log of the number of pixels associated with a speed bin in any given frame.
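The two basic speed profiles can be sketched in Python as follows. This is an illustration only: the bin width, the number of bins, the clamping of fast pixels into the top bin, and the `+1` inside the logarithm (to keep empty bins finite) are assumptions, and the function names are hypothetical.

```python
import math

def speed_waveform(speed_frames):
    """Mean local speed per frame (one value per frame)."""
    return [sum(frame) / len(frame) for frame in speed_frames]

def speed_surface(speed_frames, bin_width, n_bins):
    """Per-frame histogram of pixel speeds, stored as log10(count + 1).

    Returns surface[frame][bin]. Speeds at or above the top edge are
    placed in the last bin. The +1 offset (an assumption) keeps empty
    bins finite.
    """
    surface = []
    for frame in speed_frames:
        counts = [0] * n_bins
        for s in frame:
            counts[min(int(s / bin_width), n_bins - 1)] += 1
        surface.append([math.log10(c + 1) for c in counts])
    return surface


# Each inner list holds the local speeds of all pixels in one frame.
frames = [[0.0, 0.0, 0.5, 0.5], [0.5, 1.5, 1.5, 2.5]]
waveform = speed_waveform(frames)
surface = speed_surface(frames, bin_width=1.0, n_bins=3)
```

Plotting `surface` as an image with frame number on the x-axis gives the speed surface. Rendering the same array as a 3D height map gives the waterfall view.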

Maximum cross-correlation of 1D and 2D signals

Similarity between speed profiles was computed by normalized cross-correlation of pairs of sample plots (with periodic wrapping of samples). Waveforms being compared were padded with the mean of the sequence, so that the shorter one became the same length as the longer one. Both speed waveforms and speed surfaces were analysed, using a 1-dimensional (1D) correlation for the speed waveforms and a 2D correlation (with shifts only along time) for the speed surfaces. For the next stage of the analysis, we used a measure of dissimilarity (1.0 minus the maximum correlation) as a distance measure to construct a matrix of distances between all pairs of signals.
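For the 1D case, the comparison can be sketched in Python as below. The sketch pads the shorter waveform with its own mean, evaluates the correlation at every circular shift, and converts the maximum into a dissimilarity. Subtracting the mean before correlating is an assumption about what "normalized" means here, and all function names are hypothetical.

```python
import math

def pad_with_mean(signal, length):
    """Extend a sequence to `length` samples using its own mean."""
    mean = sum(signal) / len(signal)
    return list(signal) + [mean] * (length - len(signal))

def max_circular_correlation(a, b):
    """Largest normalized correlation over all circular shifts of b."""
    n = max(len(a), len(b))
    a, b = pad_with_mean(a, n), pad_with_mean(b, n)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    a = [v - mean_a for v in a]  # mean removal is an assumption
    b = [v - mean_b for v in b]
    norm = math.sqrt(sum(v * v for v in a) * sum(v * v for v in b))
    if norm == 0.0:
        return 0.0  # a flat signal has no defined correlation
    return max(
        sum(a[i] * b[(i + shift) % n] for i in range(n)) / norm
        for shift in range(n)
    )

def dissimilarity(a, b):
    """Distance measure: 1.0 minus the maximum correlation."""
    return 1.0 - max_circular_correlation(a, b)


pulse = [0.0, 1.0, 4.0, 1.0, 0.0, 0.0]
shifted = [0.0, 0.0, 0.0, 1.0, 4.0, 1.0]  # same pulse, two frames later
```

Because the maximum is taken over all shifts, `dissimilarity(pulse, shifted)` is effectively zero: two displays that differ only in when the clip starts are treated as the same signal.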

Multidimensional scaling (MDS)

The distance (dissimilarity) matrix was used as input for an MDS analysis (Cox and Cox 2001). MDS provides an unbiased, low dimensional representation of the structure within a distance matrix. A good fit will preserve the rank order of distances between data points and give a low value of stress, a measure of the distance distortion. MDS analysis normally starts with a 1D fit and increases the dimensionality until the stress levels plateau at a low value. A Matlab subroutine was used (Steyvers, M., http://www.psiexp.ss.uci.edu/research/software.htm) to perform the MDS. We used an information theoretic analysis on the entropy of clustering (Victor and Purpura 1997) on both the 1D and 2D correlations and determined that more information was contained in the 1D correlation (data not shown); hence, all further analyses were performed on the 1D correlation matrices. We fitted our data from one to five dimensions. Most of the stress reduction ($S_1$) occurred at either two or three dimensions (H. pugillis: 1st dimension, $S_1$=0.38, R=0.68; 2nd dimension, $S_1$=0.23, R=0.78; 3rd dimension, $S_1$=0.15, R=0.84; 4th dimension, $S_1$=0.12, R=0.88; 5th dimension, $S_1$=0.09, R=0.89; H. dossenus: 1st dimension, $S_1$=0.32, R=0.69; 2nd dimension, $S_1$=0.19, R=0.81; 3rd dimension, $S_1$=0.12, R=0.88; 4th dimension, $S_1$=0.09, R=0.92; 5th dimension, $S_1$=0.07, R=0.93); hence all further analysis was performed on 3D fits. Plots of the various signals in MDS-space showed strong clustering. The axes on the MDS analysis reflect the structure of the data; we therefore performed a one-way ANOVA along different dimensions to calculate statistical significance of the clustering. A Tukey post hoc test was then applied to compare different populations (H. pugillis) and signal components (H. dossenus). All statistical analyses were performed using Matlab.
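The ANOVA step applied along a single MDS dimension can be illustrated with a small Python sketch of the textbook one-way F statistic. The coordinates below are made-up numbers, not data from this study, and the function name is hypothetical. The MDS fit itself and the Tukey post hoc comparison are not reproduced here.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of sample groups."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups
    )
    # Variation of the samples around their own group mean.
    ss_within = sum(
        sum((v - sum(g) / len(g)) ** 2 for v in g) for g in groups
    )
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up dimension-1 coordinates for three hypothetical groups.
coords = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [6.0, 7.0, 8.0]]
f_stat, df_b, df_w = one_way_anova_f(coords)
```

A large F value indicates that the groups are separated along that axis by more than their internal scatter. The associated P value would come from the F distribution with the returned degrees of freedom.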

Results

Motion algorithm calibration

In order to test the performance of the motion algorithm against a predictable and controllable set of motion signals, we simulated rotation of a rectangular bar against a uniform background using Matlab. Texture was added to the bar in the form of four nonparallel stripes (Fig. 1a). We programmed the bar to pivot around one end using sinusoidal motion. We then systematically varied the width (w) and length (l) of the bar, as well as the frequency (F) and amplitude (A) of the motion (Fig. 1a).

Fig. 1 Simulated movements. a A bar of fixed length (l), width (w) and starting angle (θ) was simulated and rotated sinusoidally at a fixed peak-to-peak amplitude of A and frequency (F). The frequency (b), amplitude (c), and bar length (d) were then systematically changed. The time-course of the corresponding stimulus parameters are shown in panel i. Panels ii–iv show the resulting analysis of the simulated movement and step changes: 2D “speed waveform” plots (ii), 3D “speed surface” plots (iii) and 3D “speed waterfall plots” (iv); and summary of simulated motion amplitude as different parameters are changed (v)
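The driving motion of the simulated bar can be written down compactly. The Python sketch below gives the bar angle for sinusoidal rotation with peak-to-peak amplitude A and frequency F, the position of the free end, and the resulting peak linear speed of the tip (l·π·A·F). This describes the physical stimulus only; the square and cube relationships discussed in the text concern the average computed by the optical flow analysis over pixels, which this sketch does not model. Function names are hypothetical.

```python
import math

def bar_angle(t, start_angle, amplitude, frequency):
    """Bar angle (radians) at time t for sinusoidal rotation.

    amplitude is peak-to-peak, so the swing is +/- amplitude / 2.
    """
    return start_angle + 0.5 * amplitude * math.sin(2 * math.pi * frequency * t)

def tip_position(t, length, start_angle, amplitude, frequency):
    """(x, y) of the free end of a bar pivoting about the origin."""
    angle = bar_angle(t, start_angle, amplitude, frequency)
    return length * math.cos(angle), length * math.sin(angle)

def peak_tip_speed(length, amplitude, frequency):
    """Maximum linear speed of the bar tip: l * pi * A * F."""
    return length * math.pi * amplitude * frequency
```

A step change in F, A, or l, as in panels b to d, amounts to switching one argument partway through the sequence of frame times.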

The three examples in Fig. 1 show the effect of a step-change in frequency (F), amplitude (A) and bar length (l), respectively (Fig. 1b–d). In each case, the analysis depicts the temporal structure of the simulated movement very well (Fig. 1). As frequency, amplitude, or bar length increase, the computed average speed increases predictably (see below). The speed waveform and surface plots show that more pixels “move” at higher speeds after the step increase. This detailed shape is depicted particularly well in the surface plot.

The amplitude of the average motion of the simulated bar is related to the amplitude of the input wave and its frequency by a square law [Fig. 1b(iv), c(iv)]. Detailed examination of the image sequence suggests that at higher speeds, the motion in a video clip “skips pixels” between frames; hence this square law results from the product of the speed measured at each pixel (which is linear) and the greater number of pixels averaged into the motion at higher speeds. The amplitude of the average motion of the simulated bar is related to bar length by a cube law [Fig. 1d(iv)]. This results from the aforementioned square law multiplied by another linear factor, i.e. the number of pixels covered by the edge of the bar. Both bar width and texture change average motion amplitude only weakly (data not shown). This small change in average motion can be attributed to the increase in the total length of edge contours.

We also modelled amplitude (AM) and frequency (FM) modulated movement by animating a rotating bar at a fixed carrier frequency and either AM or FM modulating the carrier. The carrier and modulating frequencies are clearly discernible for both AM (Fig. 2a) and FM (Fig. 2b) movements in all the speed profiles. AM and FM movements are also easily distinguishable from one another. Both simple (sinusoidal) and complex (modulated) motions are thus faithfully and accurately reflected in the analysis.

Fig. 2 Simulated AM and FM movements. The sinusoidal movement of a bar was simulated with either amplitude (AM) (a) or frequency (FM) (b) modulation. (i) Modulated sine wave used to move the simulated bar. (ii) 2D “speed waveform” plots. (iii) 3D “speed surface” plots. (iv) 3D “speed waterfall plots”. AM and FM motion is easily distinguishable in the analysis
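The modulated driving signals can be sketched in Python using the standard definitions of amplitude and frequency modulation. The parameter names (`depth`, `deviation_hz`) and the sinusoidal shape of the modulator are assumptions; the text does not state the values or waveforms used.

```python
import math

def am_angle(t, carrier_hz, mod_hz, amplitude, depth):
    """Amplitude-modulated bar angle: the swing size varies at mod_hz."""
    envelope = 1.0 + depth * math.sin(2 * math.pi * mod_hz * t)
    return 0.5 * amplitude * envelope * math.sin(2 * math.pi * carrier_hz * t)

def fm_angle(t, carrier_hz, mod_hz, amplitude, deviation_hz):
    """Frequency-modulated bar angle: the swing rate varies at mod_hz."""
    # Phase is the time integral of the instantaneous frequency
    # carrier_hz + deviation_hz * cos(2*pi*mod_hz*t).
    phase = (2 * math.pi * carrier_hz * t
             + (deviation_hz / mod_hz) * math.sin(2 * math.pi * mod_hz * t))
    return 0.5 * amplitude * math.sin(phase)
```

With `depth` or `deviation_hz` set to zero, both functions reduce to the plain sinusoidal rotation, which makes it easy to check a simulation against the unmodulated case.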

Habronattus pugillis populations

H. pugillis is found in the Sonoran desert and local populations on different mountain ranges (“sky islands”) have different ornaments, morphologies, and courtship displays (Masta 2000; Maddison and McMahon 2000; Masta and Maddison 2002). Courtship displays from four different populations of H. pugillis are plotted in Fig. 3. Several repeating patterns are apparent, especially in Galiuro (Fig. 3a), Atascosa (Fig. 3c), and Santa Catalina (Fig. 3d) populations. By comparing videos of courtship behaviour with their corresponding speed profiles, we verified that features in the speed profiles corresponded to qualitatively identifiable components of the motion display. For example, in the Atascosa speed profiles (Fig. 3c), high amplitude “pulses” (e.g. frames 130–150) correspond to single leg flicks and lower amplitude “pulses” (e.g. frames 150–200) correspond to pedipalp and abdominal movements. Speed profiles also reveal more subtle features of motion displays. For example, animals from the Santa Rita mountains make circular movements with their pedipalps during courtship (Maddison and McMahon 2000). It is evident from the speed surface (Fig. 3b, frames 0–200) that this behaviour does not occur in a smooth motion, but rather as a sequence of brief punctuated, jerky movements (Fig. 3b).

Fig. 3 Different populations of Habronattus pugillis. Representative examples from the Galiuro (a), Santa Rita (b), Atascosa (c), and Santa Catalina (d) mountain ranges are shown. Top panel (i) shows an example of a single video frame at the resolution used in the analysis. Second panel (ii) shows the 2D “speed waveform” plots. Third panel (iii) shows the 3D “speed surface” plots. Fourth panel (iv) shows the 3D “speed waterfall plots”. Frame rate is 30 fps

Different populations of H. pugillis vary in behavioural and morphological characters (Maddison and McMahon 2000). We evaluated two populations that include unique movement display characters [Galiuro: First leg wavy circle; Santa Rita: Palp motion (circling)] and two that have similar courtship display characters [Atascosa and Santa Catalina: Late-display leg flick (single)] (Maddison and McMahon 2000). Santa Catalina spiders include the rare Body shake motion character, but this was not analysed (Maddison and McMahon 2000). Using MDS, all four groups can be discriminated from each other by the speed profiles (Fig. 4). Clustering was strong for all population classes. In order to evaluate the significance of each cluster we performed a one-way ANOVA on dimension 1 ($F_{3,19}=25.61$, $P=6.9\times10^{-7}$) and saw that the Santa Catalina population was significantly different from the Galiuro (P<0.001) and Santa Rita (P<0.05) populations but not from the Atascosa population (P>0.05) [Fig. 4a(ii)]. Also, the Galiuro population was significantly different from the Santa Rita (P<0.01) and Atascosa (P<0.001) populations and the Santa Rita population was significantly different from the Atascosa population (P<0.05) [Fig. 4a(ii)]. Performing the same analysis on dimension 3 ($F_{3,19}=3.68$, $P=0.0303$), the Santa Catalina and Atascosa populations are significantly different (P<0.05) [Fig. 4b(ii)]. No other differences can be observed along dimension 3 (P>0.05, Fig. 4b). Hence, all population classes are statistically distinguishable in at least one of the dimensions used in the analysis.

Fig. 4 Multidimensional scaling analysis of different H. pugillis populations. Similarity of the courtship displays from the Galiuro, Santa Rita, Atascosa, and Santa Catalina mountain ranges was computed by circular cross-correlation and then input into an MDS procedure. Dimension 1 versus dimension 2 [a(i)] and dimension 1 versus dimension 3 [b(i)] are plotted. MDS showed strong clustering by population location. A one-way ANOVA with Tukey’s post hoc and Bonferroni corrections on the different populations along dimension 1 [a(ii)] or dimension 3 [b(ii)] indicated that population clusters detected by MDS were significantly different from one another (P<0.05)

Habronattus dossenus signals

Courtship displays from five different individuals were selected and the visual component of different seismic signals recorded (scrape, N=5; thump, N=10; buzz, N=5) for each individual spider (Elias et al. 2003). Two classes of thumps, distinguishable by their seismic component, were selected for each individual but were not distinguishable based on their speed profiles, hence they were combined into one class (Elias et al. 2003). First we plotted the speed profiles for each of the signal classes (Fig. 5). Speed surfaces capture relatively subtle details of movements. For example, during individual scrape signals the forelegs first come down followed by abdominal movement upward (Elias et al. 2003) (Fig. 5a). Individual scrapes produce a rocking motion that can be observed clearly in the speed surface as a characteristic double peak (e.g. 1–3 in Fig. 5a). Furthermore, individual abdominal oscillations are resolved in the buzz speed surface (Fig. 5c).

Fig. 5 Different signals of Habronattus dossenus. Representative examples of scrape (a), thump (b), and buzz (c) signals are shown. Top panel (i) shows an example of a single video frame at the resolution used in the analysis. Second panel (ii) illustrates body positions with numbers (1–4) illustrating movements of the forelegs and abdomen. Third panel (iii) shows the 2D “speed waveform” plots. Fourth panel (iv) shows the 3D “speed surface” plots. Fifth panel (v) shows the 3D “speed waterfall plots”. Panels iii–v are shown in the same time scale, with numbers (1–4) corresponding to the body movements illustrated in panel ii. Frame rate is 250 fps (reduced from 500 fps)

To test whether this technique could distinguish among the three qualitative signal classes, we applied the same analysis described above. Clustering was strong for all signal classes. A one-way ANOVA on dimension 1 ($F_{2,18}=20.66$, $P=2.2\times10^{-5}$) showed that scrapes are significantly different from thumps (P<0.001) and buzzes (P<0.05) (Fig. 6) and thumps are significantly different from buzzes (P<0.05) (Fig. 6). Hence, all signal classes are statistically distinguishable in the full analysis.

Fig. 6 Multidimensional scaling analysis of H. dossenus signals. Similarity of scrape, thump, and buzz courtship signals was computed by circular cross-correlation and then input into an MDS procedure. Plot of dimension 1 versus dimension 2 (a). MDS showed strong clustering by signal category. A one-way ANOVA with Tukey’s post hoc and Bonferroni corrections on the different signals along dimension 1 (b) indicated that signal classes detected by MDS were significantly different from one another (P<0.05)

Spatial information

Our analysis technique extracts temporal patterns and integrates over spatial dimensions. For comparison, we derived summaries of spatial patterns of image motion, integrated over time, for representative signals (Fig. 7). Although we did not carry out quantitative analyses on spatial data, some general features are apparent. There are clear differences between the signal examples in the location of motion within the video frame (Fig. 7, left panels), although some features are obscured in displays where leg flick motions are superimposed on the movement of the entire animal (Fig. 7b). Summaries of the motion orientation are more difficult to interpret (Fig. 7, centre panels), most likely because many of the movements are cyclical (rotation or back-and-forth movement). Speed isoform plots (Fig. 7, right panels) are 3D plots that show the location of motion within the video frame with time on the z-axis. These plots parallel the speed surface plots used in the analyses above, but whereas speed surface plots depict the distribution of motion speed (amplitude) as a function of time, speed isoform plots depict the location of image motion as a function of time. These could, in principle, be analysed similarly to the speed profiles above.

Fig. 7 Further optic flow parameters for courtship displays of different populations of Habronattus pugillis. Representative examples (same examples as in Fig. 3) from the Galiuro (a) and Santa Catalina (b) mountain ranges are shown. First column shows speed spatial distributions throughout the video integrated through time. Second column shows speed orientation spatial distribution throughout the video integrated through time. Third column shows speed distribution isoform surfaces throughout the video with time on the z-axis and speed distribution on the x- and y-axes. Timing patterns are difficult to observe in speed and orientation distribution space. Frame rate is 30 fps
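A time-integrated spatial summary of the kind shown in the first column can be sketched in Python as a per-pixel average of local speed across frames. Using the mean rather than the sum is an assumption, as is the nested-list layout; the function name is hypothetical.

```python
def time_integrated_speed(speed_video):
    """Mean speed at each pixel across all frames.

    speed_video[t][y][x] holds local speed estimates; the result is a
    single 2D map indexed as result[y][x].
    """
    n_frames = len(speed_video)
    height, width = len(speed_video[0]), len(speed_video[0][0])
    return [
        [sum(frame[y][x] for frame in speed_video) / n_frames
         for x in range(width)]
        for y in range(height)
    ]


# Two frames of a 2x2 speed field: motion is confined to the top row.
speed_clip = [[[1.0, 3.0], [0.0, 0.0]], [[3.0, 1.0], [0.0, 0.0]]]
speed_map = time_integrated_speed(speed_clip)
```

Such a map shows where motion occurred but discards when it occurred, which is the complement of the speed profiles used in the main analysis.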

Discussion

Jumping spiders communicate using a complex repertoire of visual ornaments and dynamic visual (motion) signals (Jackson 1982; Forster 1982b). Here we use optical flow techniques for the depiction and quantification of motion signals and use the technique as the basis of a statistical analysis to assess motion signals in jumping spiders.

Quantitative analysis of courtship signals

“Sky island” populations

We examined variation in the courtship displays of different “sky island” populations of H. pugillis (Maddison and McMahon 2000; Masta 2000; Masta and Maddison 2002). By calculating the differences between the speed profiles of displays from different populations, we were able to show that courtship displays were different between all of the populations studied. We could easily distinguish between populations with unique display elements [Galiuro—First leg wavy circle, Santa Rita—Palp motion (circling)] (Maddison and McMahon 2000). Importantly, we could also discriminate the Santa Catalina and Atascosa populations that had qualitatively similar late stage visual displays (Late-display leg flick) (Fig. 4b). Maddison and McMahon (2000) in their initial descriptions and analysis of courtship coded this display as being the same between spiders from the Santa Catalina and Atascosa Mountains. Masta and Maddison (2002) demonstrated that fixation rates between neutral (mitochondrial genes) and male phenotypic traits (morphological and behavioural characters) were different and used this as evidence to suggest that sexual selection was driving diversification in H. pugillis. This study not only supports those previous studies, but also suggests that male courtship phenotypes are fixed to an even greater extent than previously demonstrated.

Multicomponent signals

Elias et al. (2003) showed that males in the jumping spider H. dossenus produced at least three different seismic signals all coordinated with motion signals. Given the strict coordination of seismic and motion signals, the authors suggested that the signal components in different modalities are functionally linked (Elias et al. 2003). If emergent properties of the multimodal signal (seismic and visual) are important, one would predict that unique seismic components would have unique motion components. Similar predictions can be made if unique motion displays serve to focus attention on corresponding seismic components (Hasson 1991). Distinct motion signals would function to prevent habituation and ensure attention to seismic components (Hall and Channel 1985; Dill and Heisenberg 1995; Post and von der Emde 1999; Busse et al. 2005). We measured different motion signals and found that distinct seismic signals occurred with specific motion signals suggesting inter-signal interactions either to focus attention or to construct integrative signals (Partan and Marler 1999, 2005; Hebets and Papaj 2005). While this is not a conclusive test on whether there exist inter-signal interactions, it is suggestive that selection has worked on the integrated multicomponent, multimodal signal.

Overall implications and limitations

In general, there are many potential applications of this technique for measuring motion signals. Any aspect of the repeated motion patterns can be measured (e.g. intervals between patterns, duration of patterns, maximum and minimum motion of patterns) for use in subsequent analysis, and multiple aspects of the speed profiles can be treated simultaneously in multivariate analyses.
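As one illustration of such measurements, the Python sketch below extracts pulse onsets, pulse durations, and inter-pulse intervals from a speed waveform by simple thresholding. The threshold approach, the function names, and the example waveform are all hypothetical; other detection schemes would serve equally well.

```python
def pulse_onsets(waveform, threshold):
    """Frame indices where the waveform rises above threshold."""
    return [
        i for i in range(1, len(waveform))
        if waveform[i] > threshold >= waveform[i - 1]
    ]

def pulse_durations(waveform, threshold):
    """Length in frames of each run of samples above threshold."""
    durations, run = [], 0
    for value in waveform:
        if value > threshold:
            run += 1
        elif run:
            durations.append(run)
            run = 0
    if run:  # waveform ended while still above threshold
        durations.append(run)
    return durations

def inter_pulse_intervals(waveform, threshold, fps):
    """Seconds between successive pulse onsets."""
    onsets = pulse_onsets(waveform, threshold)
    return [(b - a) / fps for a, b in zip(onsets, onsets[1:])]


# Made-up speed waveform sampled at 30 fps with three pulses.
wave = [0.1, 0.1, 0.9, 1.2, 0.2, 0.1, 0.1, 0.1, 1.0,
        0.3, 0.1, 0.1, 0.1, 0.1, 1.1, 0.9, 0.8, 0.1]
```

Measures like these could then enter a multivariate analysis alongside, or instead of, the correlation-based distances.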

Rigorous classification techniques are desirable in many disciplines particularly in studies of animal communication. For example, at the level of entire courtship displays, this could be used to identify motion parameters as characters for phylogenetic analyses. At the level of individual signals, this is potentially useful in evaluating natural variation in signals (Ryan and Rand 2003) and as a way to measure signal complexity (e.g. how many categories of visual signals can be objectively discriminated). These techniques could also be valuable in comparative studies. For example, closely related species that signal in different visual environments could be compared to investigate the effect of the visual environment on the design of motion displays (Endler 1991, 1992; Peters et al. 2002; Peters and Evans 2003a, b).

This method of constructing speed profiles severely reduces the information present in the original videotapes. Optic flow analyses reduce video data to essentially five dimensions (speed, speed spatial distribution, orientation, orientation spatial distribution, and time) (Zeil and Zanker 1997; Zanker and Zeil 2001; Peters et al. 2002). Some of these other motion parameters have been used in other systems (Peters et al. 2002; Peters and Evans 2003a, b). We chose to concentrate on speed and time since jumping spider displays can be very complex and often include the movement of various body parts (forelegs, third leg patella, pedipalps) superimposed upon the movement of the entire spider (Fig. 7). Any of these dimensions, however, can be plotted for jumping spider displays (Fig. 7). Speed and time parameters may be especially important in jumping spiders due to the structure of their visual system. Jumping spiders have two categories of eyes: primary eyes, which have a small field of view and are specialized for fine spatial resolution, and secondary eyes, which have a large field of view and are specialized for motion detection (Land 1969, 1985; Land and Nilsson 2002). Motion signals would most likely stimulate secondary eyes and therefore timing and not spatial location is likely to be important since secondary eyes integrate over a wide field of view (Forster 1982a, b; Land 1985). This hypothesis remains to be tested and it is possible that jumping spiders are reducing the visual field into timing information (input from the secondary eyes) and spatial information (input from the primary eyes) independently. In this scenario, our use of speed and timing parameters would match “data reductions” performed by secondary eyes. This underlines the importance of picking the correct data reduction strategy based on insights from sensory physiology and behaviour.
Combining both spatial and temporal analyses with an analysis of the primary and secondary eye fields of view could give insights into how information is channelled into the nervous system (Strausfeld et al. 1993). Complex motion signals in different communication systems may be specialized for different dimensions. By combining our technique with alternative analyses that focus on spatial rather than temporal motion patterns, it may be possible to develop a battery of analytical approaches to identify and analyse the salient parameters of a wide range of complex visual motion displays.
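
The reduction to speed and time described above can be illustrated with a minimal sketch. It assumes that an optic-flow algorithm has already produced horizontal and vertical flow components for every frame. The function name `speed_profiles`, the array layout, and the choice of per-frame mean and histogram are hypothetical choices made for illustration; they are not taken from the analysis code used in this study.

```python
import numpy as np

def speed_profiles(u, v, n_bins=8, max_speed=None):
    """Collapse a flow-field sequence into speed-versus-time summaries.

    u, v : arrays of shape (frames, height, width) holding the horizontal
           and vertical flow components (assumed units: pixels per frame).
    Returns (waveform, surface, edges):
      waveform : mean speed in each frame, shape (frames,)
      surface  : per-frame histogram of pixel speeds, shape (frames, n_bins)
      edges    : the speed-bin edges used for the histogram
    Spatial position and orientation are deliberately discarded here.
    """
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)

    # Speed is the magnitude of the flow vector at each pixel.
    speed = np.hypot(u, v)

    # One number per frame: a waveform-like trace of overall motion.
    waveform = speed.mean(axis=(1, 2))

    # Guard against an all-zero sequence, which would give degenerate bins.
    if max_speed is None:
        max_speed = speed.max()
    if max_speed <= 0:
        max_speed = 1.0
    edges = np.linspace(0.0, max_speed, n_bins + 1)

    # One histogram per frame: how many pixels move at each speed.
    surface = np.stack([np.histogram(frame, bins=edges)[0] for frame in speed])
    return waveform, surface, edges
```

Plotting `waveform` against frame number would give a trace loosely comparable to an acoustic waveform, and plotting `surface` as an image (time by speed bin) would give a display loosely comparable to a spectrogram. The exact definitions used for the figures in this paper may differ.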

Despite this data reduction, distinct signal categories can still be discriminated using average speed and time parameters. The sheer complexity of motion displays makes data reduction attractive, and we feel that this method reduces the data while constructing accurate, intuitive depictions of the structure and timing of motion displays in a way that may be biologically meaningful to the organism in question. While other parameters are no doubt important, we feel that using speed and time parameters allows one to easily observe repeating patterns in a way that is difficult in other parameter spaces (Fig. 7).
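
As one illustration of how speed-versus-time traces might be compared, the sketch below computes the peak of the normalized cross-correlation between two traces and turns it into a pairwise dissimilarity matrix of the kind that could be passed to a scaling or clustering method. This is a generic technique borrowed from acoustic analysis and is offered under stated assumptions: the function names `peak_xcorr` and `dissimilarity_matrix` are hypothetical, and the procedure is not necessarily the comparison used in this study.

```python
import numpy as np

def peak_xcorr(a, b):
    """Peak normalized cross-correlation between two 1-D traces.

    Both traces are mean-centred; the result is at most 1.0, and equals
    1.0 (up to rounding) when one trace is a scaled copy of the other.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0:
        return 0.0  # a flat trace carries no timing information
    # "full" mode slides one trace across the other at every lag.
    return float(np.correlate(a, b, mode="full").max() / denom)

def dissimilarity_matrix(traces):
    """Pairwise dissimilarity (1 - peak correlation) for a list of traces."""
    n = len(traces)
    d = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            d[i, j] = d[j, i] = 1.0 - peak_xcorr(traces[i], traces[j])
    return d
```

Because the peak is taken over all lags, two displays that differ only in when they start within the clip are scored as similar, which is usually the desired behaviour when clips are not aligned by hand.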

One potential limitation of this technique is the confounding effect of the number of edges on total motion. For example, if a moving appendage differed between two species solely in the number of stripes, then for equivalent leg movements our analysis would record a higher motion signal for the animal with more stripes. Such a difference in recorded motion signals due to ornaments could, however, reflect true differences in the signal perceived by the receiver. Neural processing of motion in animal brains is based largely on the movement of edges defined by luminance contrast rather than edges defined by chromatic contrast (Borst and Egelhaaf 1993). To the extent that chromatically defined edges contribute little to motion detection, the total motion of luminance edges approximates the total motion available to the receiver. Our algorithm therefore analyses motion in a biologically relevant way.
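
The edge-count effect can be made concrete with a toy example. The sketch below builds a one-dimensional luminance profile of a hypothetical striped appendage, shifts it by one pixel, and counts the pixels whose luminance changed. Counting changed pixels is only a crude stand-in for an optic-flow measure and is not the algorithm used in this study; the function names and the stripe layout are assumptions made for illustration.

```python
import numpy as np

def striped_leg(n_stripes, length=120):
    """1-D luminance profile: a bright leg (1.0) with n_stripes dark bands (0.0)."""
    profile = np.ones(length)
    # Split the leg into alternating bright and dark bands of equal width.
    period = length // (2 * n_stripes + 1)
    for k in range(n_stripes):
        start = (2 * k + 1) * period
        profile[start:start + period] = 0.0
    return profile

def changed_pixels(profile, shift=1):
    """Number of pixels whose luminance differs after moving the leg by `shift`."""
    moved = np.roll(profile, shift)
    return int(np.sum(profile != moved))

# Identical one-pixel movement, different ornamentation.
few = changed_pixels(striped_leg(2))
many = changed_pixels(striped_leg(4))
```

For the same displacement, the change count grows with the number of luminance edges (each stripe contributes two), which is the confound described above.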

By expanding the technique developed by Peters et al. (2002), we have developed a novel way to visualize motion data that is analogous to spectrogram representations of auditory data, and we have demonstrated statistical techniques for analysing motion data. This study demonstrates the utility of optic flow techniques for reducing and analysing motion in a variety of contexts (Zeil and Zanker 1997; Zanker and Zeil 2001; Peters et al. 2002; Peters and Evans 2003a, b).

References

Alexander RM (2003) Principles of animal locomotion. Princeton University Press, Princeton

Barron JL, Fleet DJ, Beauchemin SS (1994) Performance of optic flow techniques. IJCV 12:43–77

Borst A, Egelhaaf M (1993) Detecting visual motion: theory and models. Rev Oculomot Res 5:3–27

Busse L, Roberts KC, Crist RE, Weissman DH, Woldorff MG (2005) The spread of attention across modalities and space in a multisensory object. PNAS 102:18751–18756

Cortopassi KA, Bradbury JW (2000) The comparison of harmonically rich sounds using spectrographic cross-correlation and principal coordinates analysis. Bioacoustics 11:89–127

Cox TF, Cox MAA (2001) Multidimensional scaling. Chapman and Hall, Boca Raton

Crane J (1949) Comparative biology of salticid spiders at Rancho Grande, Venezuela. Part IV an analysis of display. Zoologica 34:159–214

Dill M, Heisenberg M (1995) Visual pattern memory without shape recognition. Philos Trans R Soc Lond Ser B Biol Sci 349:143–152

Eckert MP, Zeil J (2001) Towards an ecology of motion vision. In: Zanker JM, Zeil J (eds) Motion vision: computational, neural, and ecological constraints. Springer, Berlin Heidelberg New York, pp 333–369

Elias DO, Mason AC, Maddison WP, Hoy RR (2003) Seismic signals in a courting male jumping spider (Araneae: Salticidae). J Exp Biol 206:4029–4039

Elias DO, Mason AC, Hoy RR (2004) The effect of substrate on the efficacy of seismic courtship signal transmission in the jumping spider Habronattus dossenus (Araneae: Salticidae). J Exp Biol 207:4105–4110

Elias DO, Hebets EA, Hoy RR, Mason AC (2005) Seismic signals are crucial for male mating success in a visual specialist jumping spider (Araneae: Salticidae). Anim Behav 69:931–938

Endler JA (1990) On the measurement and classification of color in studies of animal color patterns. Biol J Linnean Soc 41:315–352

Endler JA (1991) Variation in the appearance of guppy color patterns to guppies and their predators under different visual conditions. Vision Res 31:587–608

Endler JA (1992) Signals, signal conditions, and the direction of evolution. Am Nat 139:S125–S153

Forster L (1982a) Vision and prey-catching strategies in jumping spiders. Am Sci 70:165–175

Forster L (1982b) Visual communication in jumping spiders (Salticidae). In: Witt PN, Rovner JS (eds) Spider communication: mechanisms and ecological significance. Princeton University Press, Princeton, pp 161–212

Fry SN, Sayaman R, Dickinson MH (2003) The aerodynamics of free-flight maneuvers in Drosophila. Science 300:495–498

Hall G, Channell S (1985) Differential effects of contextual change on latent inhibition and on the habituation of an orientating response. J Exp Psychol Anim Behav Process 11:470–481

Hasson O (1991) Sexual displays as amplifiers: practical examples with an emphasis on feather decorations. Behav Ecol 2:189–197

Hebets EA, Maddison WP (2005) Xenophilic mating preferences among populations of the jumping spider Habronattus pugillis Griswold. Behav Ecol 16:981–988

Hebets EA, Papaj DR (2005) Complex signal function: developing a framework of testable hypotheses. Behav Ecol Sociobiol 57:197–214

Hedrick TL, Usherwood JR, Biewener AA (2004) Wing inertia and whole-body acceleration: an analysis of instantaneous aerodynamic force production in cockatiels (Nymphicus hollandicus) flying across a range of speeds. J Exp Biol 207:1689–1702

Higgins LA, Waugaman RD (2004) Sexual selection and variation: a multivariate approach to species-specific calls and preferences. Anim Behav 68:1139–1153

Jackson RR (1982) The behavior of communicating in jumping spiders (Salticidae). In: Witt PN, Rovner JS (eds) Spider communication: mechanisms and ecological significance. Princeton University Press, Princeton, pp 213–247

Jindrich DL, Full RJ (2002) Dynamic stabilization of rapid hexapedal locomotion. J Exp Biol 205:2803–2823

Land MF (1969) Structure of retinae of principal eyes of jumping spiders (Salticidae: Dendryphantinae) in relation to visual optics. J Exp Biol 51:443–470

Land MF (1985) The morphology and optics of spider eyes. In: Barth FG (ed) Neurobiology of arachnids. Springer, Berlin Heidelberg New York, pp 53–78

Land MF, Nilsson DE (2002) Animal eyes. Oxford University Press, Oxford

Maddison WP (1996) Pelegrina franganillo and other jumping spiders formerly placed in the genus Metaphidippus (Araneae: Salticidae). Bull Mus Comp Zool Harvard Univ 154:215–368

Maddison W, Hedin M (2003) Phylogeny of Habronattus jumping spiders (Araneae: Salticidae), with consideration of genital and courtship evolution. Syst Entomol 28:1–21

Maddison W, McMahon M (2000) Divergence and reticulation among montane populations of a jumping spider (Habronattus pugillis Griswold). Syst Biol 49:400–421

Maddison WP, Stratton GE (1988) Sound production and associated morphology in male jumping spiders of the Habronattus agilis species group (Araneae, Salticidae). J Arachnol 16:199–211

Masta SE (2000) Phylogeography of the jumping spider Habronattus pugillis (Araneae: Salticidae): recent vicariance of sky island populations? Evolution 54:1699–1711

Masta SE, Maddison WP (2002) Sexual selection driving diversification in jumping spiders. PNAS 99:4442–4447

Nauen JC, Lauder GV (2002) Quantification of the wake of rainbow trout (Oncorhynchus mykiss) using three-dimensional stereoscopic digital particle image velocimetry. J Exp Biol 205:3271–3279

Partan SR, Marler P (1999) Communication goes multimodal. Science 283:1272–1273

Partan SR, Marler P (2005) Issues in the classification of multimodal communication signals. Am Nat 166:231–245

Peckham GW, Peckham EG (1889) Observations on sexual selection in spiders of the family Attidae. Occas Pap Wisconsin Nat Hist Soc 1:3–60

Peckham GW, Peckham EG (1890) Additional observations on sexual selection in spiders of the family Attidae, with some remarks on Mr. Wallace’s theory of sexual ornamentation. Occas Pap Wisconsin Nat Hist Soc 1:117–151

Peters RA, Evans CS (2003a) Design of the Jacky dragon visual display: signal and noise characteristics in a complex moving environment. J Comp Physiol A 189:447–459

Peters RA, Evans CS (2003b) Introductory tail-flick of the Jacky dragon visual display: signal efficacy depends upon duration. J Exp Biol 206:4293–4307

Peters RA, Clifford CWG, Evans CS (2002) Measuring the structure of dynamic visual signals. Anim Behav 64:131–146

Post N, von der Emde G (1999) The “novelty response” in an electric fish: response properties and habituation. Physiol Behav 68:115–128

Richman DB (1982) Epigamic display in jumping spiders (Araneae, Salticidae) and its use in systematics. J Arachnol 10:47–67

Ryan MJ, Rand AS (2003) Sexual selection in female perceptual space: how female tungara frogs perceive and respond to complex population variation in acoustic mating signals. Evolution 57:2608–2618

Strausfeld NJ, Weltzien P, Barth FG (1993) Two visual systems in one brain: neuropils serving the principal eyes of the spider Cupiennius salei. J Comp Neurol 328:63–72

Tammero LF, Dickinson MH (2002) The influence of visual landscape on the free flight behavior of the fruit fly Drosophila melanogaster. J Exp Biol 205:327–343

Victor JD, Purpura KP (1997) Metric-space analysis of spike trains: theory, algorithms and application. Netw Comp Neural 8:127–164

Vogel S (2003) Comparative biomechanics: life’s physical world. Princeton University Press, Princeton

Walker TJ (1974) Character displacement and acoustic insects. Am Zool 14:1137–1150

Zanker JM (1996) Looking at the output of two-dimensional motion detector arrays. IOVS 37:743

Zanker JM, Zeil J (2001) Motion vision: computational, neural, and ecological constraints. Springer, Berlin, Heidelberg, New York

Zeil J, Zanker JM (1997) A glimpse into crabworld. Vision Res 37:3417–3426

Acknowledgements

We would like to thank M.C.B. Andrade, C. Botero, C. Gilbert, J. Bradbury, B. Brennen, M.E. Arnegard, E.A. Hebets, W.P. Maddison, M. Lowder, Cornell’s Neuroethology Journal Club, an anonymous reviewer, and members of the Hoy lab for helpful comments, suggestions, and assistance. Spider illustrations were generously provided by Margy Nelson. Funding was provided by NIH and HHMI to RRH (N1DCR01 DC00103), NSERC to ACM (238882 241419), NIH to BRL, and a HHMI Pre-Doctoral Fellowship to DOE. These experiments complied with “Principles of animal care”, publication no. 86–23, revised 1985, of the National Institutes of Health, and also with the current laws of the countries (USA and Canada) in which the experiments were performed.

Additional information

Damian O. Elias and Bruce R. Land contributed equally

An erratum to this article can be found at http://dx.doi.org/10.1007/s00359-006-0128-3

Elias, D.O., Land, B.R., Mason, A.C. et al. Measuring and quantifying dynamic visual signals in jumping spiders. J Comp Physiol A 192, 785–797 (2006). https://doi.org/10.1007/s00359-006-0116-7

DOI: https://doi.org/10.1007/s00359-006-0116-7