Abstract

A novel technique to shrink elongated particles and suppress ghost particles in the particle reconstruction of tomographic particle image velocimetry is presented. This method, named intensity-enhanced multiplicative algebraic reconstruction technique (IntE-MART), utilizes an inverse diffusion function and an intensity suppressing factor to improve the quality of particle reconstruction and consequently the precision of velocimetry. A numerical assessment of vortex ring motion with and without image noise is performed to evaluate the new algorithm in terms of reconstruction, particle elongation and velocimetry. The simulation is performed at seven different seeding densities. The comparison of spatial filtering MART and IntE-MART on the probability density function of particle peak intensity suggests that one of the local minima of the distribution can be used to separate ghosts from actual particles. Thus, ghost removal based on IntE-MART is also introduced. To verify the application of IntE-MART, a real plate turbulent boundary layer experiment is performed. The result indicates that ghost reduction can increase the accuracy of the RMS of the velocity field.


1 Introduction

Tomographic particle image velocimetry (Tomo-PIV) proposed by Elsinga et al. (2006) has become a powerful experimental technique to measure a three-dimensional three-components (3D3C) velocity field. In a Tomo-PIV experiment, tracer particles are illuminated by a thick laser sheet. The particle images are recorded by cameras from different view angles. Consequently, three-dimensional (3D) intensity distribution of particles is reconstructed via the two-dimensional (2D) images according to the geometry relationship of projection. Based on the intensity field, volumetric cross-correlation analysis or particle tracking velocimetry (PTV) can be applied to obtain the displacement field.

To date, Tomo-PIV has been under development for about a decade and has been widely applied in numerous fields of fluid mechanics, as reviewed by Scarano (2013), Westerweel et al. (2013) and Gao et al. (2013). The critical step in achieving a highly accurate quantitative measurement with Tomo-PIV is the particle reconstruction. In order to reconstruct the physical measurement domain from a limited number of image planes, the geometric projection of particle scattering is mathematically transformed into a model of discrete integration along the line of sight (LOS), which turns particle reconstruction into the problem of solving a set of linear equations. In Tomo-PIV, the iterative algebraic reconstruction technique (iterative-ART) introduced by Herman and Lent (1976) is commonly used to solve these equations. Compared with analytical algorithms (Mersereau 1976; Zeng 2001), ART is better suited to a limited number of projections, makes the imaging system easier to model and is more time-efficient. However, because the number of cameras is limited, the equation system is underdetermined, which means that its solution depends on the physical constraints imposed within the framework of an optimization problem (Herman and Lent 1976). When Tomo-PIV was first introduced by Elsinga et al. (2006), the authors pointed out that the multiplicative algebraic reconstruction technique (MART), based on the maximum entropy criterion, is the most suitable approach for Tomo-PIV among the ART methods. This view was later confirmed by Michaelis et al. (2010).

Among all the factors affecting the precision of particle reconstruction, the dominant factors are configuration of the cameras, seeding density and mapping function (Novara et al. 2010; Michaelis et al. 2010; Worth et al. 2010; Atkinson et al. 2011; Silva et al. 2012; Discetti et al. 2013b). For the arrangement of cameras, the typical configuration utilized in experiments is a cross-like array (Schröder et al. 2008; Elsinga et al. 2010; Atkinson et al. 2011; Tokgoz et al. 2012) or a linear-like (Ghaemi and Scarano 2011) array with four or more cameras. Thomas et al. (2014) summarized five different camera configurations and the corresponding optimum settings. A Tomo-PIV system composed of 12 cameras was also used to study the accuracy of object reconstruction by Lynch and Scarano (2014). On the other hand, the mapping function between the measurement volume and the image planes can be calibrated by a polynomial function. Its error can be reduced to less than 0.1 pixels after self-calibration (Wieneke 2008). Thus, if the issues of camera configuration and calibration accuracy are ignored, the concentration of tracer particles in the flow field is the only critical factor affecting the quality of the reconstruction. A four-camera system is a common configuration under a moderate seeding density of 0.05 ppp (particle per pixel, Elsinga et al. (2006)). However, the measurement error goes up with increasing ppp due to the multiple possible particle positions caused by the massive intersections of LOS. Although Tomo-PIV has improved one order of seeding density compared with 3D PTV (Maas et al. 1993), the seeding density for acceptable accuracy of particle reconstruction is still lower than a planar PIV, which limits its spatial resolution of measurement. Among all available investigations, the maximum seeding density achieved in real experiment is 0.2 ppp by Novara and Scarano (2012) with the motion tracking enhancement (MTE) technique. 
It is very difficult to reconstruct the intensity distribution exactly when ppp is high. Petra and coworkers (Petra et al. 2008, 2009; Gesemann et al. 2010; Petra et al. 2013) tried to develop new methods by deriving other iterative mechanisms from an \(L_1\) norm constraint or constrained least-squares strategies. Ye et al. (2015) proposed a new dual-basis pursuit reconstruction technique, but experimental verification is needed for further assessment in 3D applications. Besides ppp, another closely related parameter for quantifying the seeding density is the image signal ratio \(N_s\), with a range of [0, 1], defined as the ratio of nonblack pixels in the active pixel set (Scarano 2013; Thomas et al. 2014). With \(N_s\), the contributions of the seeding density and the particle size are both considered.

For all particle reconstruction techniques, ghost particles are an unavoidable by-product that significantly affects the accuracy and spatial resolution of the velocity measurement. A ghost particle is a spurious particle-like intensity distribution generated at the intersection of LOS from each camera during the reconstruction (Elsinga and Tokgoz 2014). Elsinga et al. (2011) showed that ghost particles can smooth and reduce the velocity or velocity gradient over the volume thickness. However, some features of ghost particles are potentially helpful for improving the quality of reconstruction. For instance, the simulacrum matching-based reconstruction enhancement (SMRE) technique proposed by de Silva et al. (2013) utilizes the characteristic shape and size of actual particles to remove ghost particles from the reconstructed intensity field. The ‘Shake The Box’ approach (Schanz et al. 2013) uses the tracked particles to predict the particle distribution in the current time step. The predicted particle positions are refined by ‘Iterative Reconstruction of Volumetric Particle Distribution’ (IPR) proposed by Wieneke (2013) until the particles fit the images. Within this method, the ghost particle problem is nearly resolved by considering the found trajectories (Schanz et al. 2014). Recently, Elsinga and Tokgoz (2014) used the joint distribution of peak intensity and track length to completely separate the ghost particles from actual particles under certain conditions in a time-resolved Tomo-PIV measurement, for which high-speed cameras and lasers are required. The volume threshold method (Thomas et al. 2014) is an optimization technique that applies an intensity threshold linear in the image signal ratio \(N_s\) to eliminate ghost particles with weak intensity. The MTE-MART introduced by Novara et al. (2010) and the adaptive MLOS-SMART proposed by Atkinson et al. (2010) use a process similar to the MART iteration, with a feedback mechanism of particle pairing from the velocity field. In those two algorithms, the reconstructed particle fields of neighboring time steps are enhanced at the paired particles and attenuated at the unmatched particles (which are probably ghost particles), which can significantly improve the quality of reconstruction and the accuracy of velocimetry. However, their iterative update of the reconstructed volume and velocity costs much more time than a simple application of MART. Thus, for time-resolved image sequences, a highly efficient and accurate reconstruction based on MTE-MART was designed by Lynch and Scarano (2015). The new algorithm, named sequential MTE-MART (SMTE-MART), uses the previous object intensity field, propagated in time according to the velocity field, as the best initial guess for reconstructing the following volume. In addition, Discetti et al. (2013a) presented a novel low-cost experimental setup for Tomo-PIV to reduce the bias error introduced by the coherent motion of the ghost particles.

Besides the issue of the accuracy of particle reconstruction, another big concern of reconstruction algorithms is the time efficiency. Atkinson et al. (2013) analyzed a set of volumetric data formats to exploit the sparse nature of 3D particle volumes and to improve the efficiency of generating, storing and processing particle volumes in 3D3C PIV and PTV measurements. In order to accelerate the updating process of MART, some new efficient algorithms were introduced into Tomo-PIV, such as simultaneous multiplicative algebraic reconstruction technique (SMART) (Atkinson and Soria 2009) and Block iterative MART (Thomas et al. 2014). Champagnat et al. (2014) proposed a particle volume reconstruction SMART (PVR-SMART) to recover single-voxel particles, in which the volume is reconstructed in a sparse way comparing to MART-based reconstructions. In practice, only 5 iterations are normally utilized in MART to balance the cost of time and the precision of convergence. The efficiency of iterative algorithm is sensitive to the initial guess of the particle field. Worth and Nickels (2008) introduced an approach of multiplicative first guess (MFG) to initialize the volume intensity instead of applying a uniform field as the first guess by Elsinga et al. (2006). Atkinson et al. (2008) further simplified the first guess by a multiplied line-of-sight (MLOS) approach, which does not use weighting functions to guess the initial intensity field as MFG, resulting in a faster reconstruction. Discetti and Astarita (2012) proposed a multiresolution (MR) algorithm for tomographic reconstruction, which relies on the adoption of a coarser grid in the first step of reconstruction to obtain a fast and accurate first guess, which shows a good performance on dealing with high seeding density. The main idea of making a good initial guess is to use the sparsity of the intensity field, which has been practically noticed in many investigations.

Despite the efforts made to reduce ghost particles, achieve a high precision of reconstruction and improve the processing efficiency, the quality of reconstructed particles in Tomo-PIV can still be improved. As pointed out by Novara et al. (2010) and Discetti et al. (2013b), the main error sources for tomographic reconstruction can be classified as discretization error, viewing geometry error and ghost particles. Discetti et al. (2013b) proposed a spatial filtering MART (SF-MART) that uses an anisotropic filter to mitigate the discretization error. Although this method is not specifically designed to reduce viewing geometry error and ghost particles, it does provide an additional improvement on the issues of elongated particles and ghost particles. According to Discetti et al. (2013b), SF-MART can significantly improve the accuracy of the measured velocity field and velocity gradient tensor. Ghost particles have been widely investigated, whereas the elongation of particles, an inherent defect of the MART algorithm, has not yet drawn enough attention. For these reasons, the present work addresses the quality of reconstruction in terms of both elongation and ghost particles.

In the present work, a priori knowledge on elongation and ghosts is obtained from numerical simulations with SF-MART. The inverse diffusion function is applied to correct the shape of particles, and a histogram-based intensity reduction is utilized to reduce the intensity of ghosts. Combining these two new methods, a novel algorithm named intensity-enhanced MART (IntE-MART) is proposed to improve the accuracy of reconstruction. Then, the performance of IntE-MART is evaluated with and without image noise in a test case of vortex ring motion, which is the same as that of Elsinga et al. (2006). Subsequently, a ghost removal algorithm based on IntE-MART is designed to further optimize the reconstruction. Finally, this technique is applied to a real experiment on a plate turbulent boundary layer (TBL) for assessment. The mean and root-mean-square (RMS) velocity profiles are compared with the results of Laser Doppler Velocimetry (LDV).

2 Intensity-enhanced MART (IntE-MART)

2.1 Tomographic reconstruction

The reconstruction of the 3D particle distribution by optical tomography, an inverse procedure of imaging projection, is an advanced technique introduced into volumetric PIV by Elsinga et al. (2006). The procedure implies that the object intensity E(x, y, z), representing the 3D array of the particle distribution, should be solved from the recorded pixel intensity I(X, Y), where (x, y, z) denotes the coordinates of a cubic voxel in the measurement volume, while (X, Y) represents the coordinates of a pixel in the images. This system can be simplified as a set of linear equations under the hypothesis that the recorded pixel intensity is the result of spatially integrating the intensity along the corresponding LOS:

$$\sum_{j\in N_i} w^m_{i,j}\,E(x_j,y_j,z_j) = I^m(X_i,Y_i) \qquad (1)$$

The intensity array E(x, y, z) is the unknown in these equations. The system can be iteratively optimized, for instance by the MART algorithm (Elsinga et al. 2006). In this work, starting from a uniform initialization (all voxels are set to 1), the object intensity E(x, y, z) is updated by the MART technique as follows:

$$E(x_j,y_j,z_j)^{k+1} = E(x_j,y_j,z_j)^{k}\left(\frac{I^m(X_i,Y_i)}{{\tilde{I}^m(X_i,Y_i)}^k}\right)^{\mu w^m_{i,j}}, \qquad {\tilde{I}^m(X_i,Y_i)}^k=\sum_{j\in N_i} w^m_{i,j}\,E(x_j,y_j,z_j)^{k} \qquad (2)$$

Here the relaxation factor \(\mu\) is taken equal to 1 in the current work, while Thomas et al. (2014) optimized this value depending on the number of iterations k. \(N_i\) is the voxel set corresponding to the LOS of the ith pixel. The weighting coefficient \(w^m_{i,j}\) represents the projection contribution of the jth voxel to the ith pixel in the mth camera. \(w^m_{i,j}\) is computed as the intersection area of voxel and LOS. Different weighting functions and their influences to reconstruction accuracy were discussed by Thomas et al. (2014). \({\tilde{I}^m(X_i,Y_i)}^k\) is the integrated pixel intensity of the mth camera in kth iteration. The ratio \({I^m(X_i,Y_i)}/{\tilde{I}^m(X_i,Y_i)}^k\) can be considered as a residual between actual image and integrated image. The volume intensity array is updated corresponding to this ratio on each camera.
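A minimal sketch of this update on a toy problem may help make the roles of the ratio and the weights concrete. It assumes the standard MART form, in which each voxel on a line of sight is multiplied by (recorded intensity / reprojected intensity) raised to the power \(\mu w\). The dense weight table `W`, the 2 × 2 "volume" and the function name `mart_sweep` are illustrative assumptions and not part of the original work.

```python
def mart_sweep(E, W, I, mu=1.0):
    """Apply one MART sweep (every pixel visited once) and return the new volume.

    E : list of voxel intensities
    W : W[i][j] is the weight of voxel j on pixel i (hypothetical dense table)
    I : list of recorded pixel intensities
    """
    E = list(E)  # work on a copy
    for i, weights in enumerate(W):
        # Integrated (reprojected) intensity along the line of sight of pixel i
        projected = sum(w * e for w, e in zip(weights, E))
        if projected <= 0.0:
            continue  # nothing to rescale along an empty line of sight
        ratio = I[i] / projected
        for j, w in enumerate(weights):
            if w > 0.0:
                E[j] *= ratio ** (mu * w)
    return E


# Toy problem: a 2 x 2 "volume" seen by two orthogonal two-pixel cameras
# (row sums and column sums).
# Voxel order: [top-left, top-right, bottom-left, bottom-right].
W = [
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
]
E_true = [1.0, 0.0, 0.0, 2.0]
I = [sum(w * e for w, e in zip(row, E_true)) for row in W]

E = [1.0] * 4  # uniform initialization
for _ in range(5):
    E = mart_sweep(E, W, I)

reprojected = [sum(w * e for w, e in zip(row, E)) for row in W]
```

In this toy case the reprojection matches the recorded pixels, yet the two voxels that are empty in `E_true` keep a nonzero intensity. This is the underdetermined behaviour that produces ghost particles at LOS intersections.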

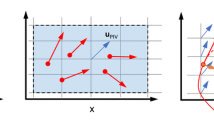

The current work focuses on two important error sources: particle elongation and ghost particles. To resolve subpixel displacements well in correlation or tracking, the ideal shape of a reconstructed 3D particle, like that of the particle image, is Gaussian (Discetti et al. 2013b). However, in Tomo-PIV, the shape of particles cannot be fully resolved from the limited view angles set by the camera configuration. Therefore, all particles, both ghosts and actual particles, are elongated along the depth direction of the measurement domain (denoted as the z-axis), which leads to a higher uncertainty and error of the velocity in the z direction (Elsinga et al. 2006; Novara et al. 2010). Generally speaking, the degree of elongation increases as the view angle of the cameras decreases. We use \(\beta\) to denote the total view angle between cameras following Scarano (2013), and \(d_\tau\) is the particle diameter according to Kim et al. (2013). Thus, the initial elongated diameter \(d_{\tau z}\) in the z direction can be estimated as \(d_{\tau z}\approx d_\tau /\tan (\beta /2)\) (Fig. 1). Note that the ratio \(d_{\tau z}/d_\tau\) gradually decreases during the MART iterations, while it always remains larger than 1 because of the particle elongation in MART. In order to describe the particle shape in an intuitive and simple way, a Gaussian fit is adopted for a single particle. Raffel (2007) gave a 2D Gaussian formula for planar particles, which can be easily extended to 3D as

$$E(x,y,z)=E_0\exp \left[ -\frac{(x-x_0)^2}{2\sigma _{\tau x}^2}-\frac{(y-y_0)^2}{2\sigma _{\tau y}^2}-\frac{(z-z_0)^2}{2\sigma _{\tau z}^2}\right] \qquad (3)$$

where \(E_0\) is the maximum intensity of a tracer particle centered at \((x_0,y_0,z_0)\). The particle diameter is 4 times the standard deviation \(\sigma\) of the Gaussian fit, which means that the elongated rate \(r_d\) in the z direction can be expressed through \(\sigma\) instead of the particle size, as

$$r_d=\frac{d_{\tau z}}{d_\tau }=\frac{\sigma _{\tau z}}{\sigma _\tau } \qquad (4)$$

Because the particle diameters in the x and y directions differ slightly, \(\sigma _\tau\) is taken as the mean of \(\sigma _{\tau x}\) and \(\sigma _{\tau y}\), \(\sigma _\tau =(\sigma _{\tau x}+ \sigma _{\tau y})/2\).
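The two quantities above can be illustrated with a short sketch. It estimates the standard deviations from intensity-weighted second moments of 1D profiles through a particle, which is an assumed stand-in for the Gaussian fit used in the text, and evaluates the geometric estimate \(1/\tan (\beta /2)\). The function names and the sampled test profiles are hypothetical.

```python
import math


def gaussian_profile(sigma, half_width=12):
    """Sample a unit-peak Gaussian on integer voxel positions."""
    return [math.exp(-(k * k) / (2.0 * sigma * sigma))
            for k in range(-half_width, half_width + 1)]


def moment_sigma(profile):
    """Standard deviation from the intensity-weighted second moment
    (an assumption; the text uses a Gaussian fit instead)."""
    total = sum(profile)
    centre = sum(k * v for k, v in enumerate(profile)) / total
    variance = sum((k - centre) ** 2 * v for k, v in enumerate(profile)) / total
    return math.sqrt(variance)


def elongation_rate(profile_x, profile_y, profile_z):
    """r_d = sigma_z / sigma_tau with sigma_tau the mean of sigma_x and sigma_y."""
    sigma_tau = 0.5 * (moment_sigma(profile_x) + moment_sigma(profile_y))
    return moment_sigma(profile_z) / sigma_tau


def initial_elongation(beta_deg):
    """Geometric estimate d_tau_z / d_tau = 1 / tan(beta / 2)."""
    return 1.0 / math.tan(math.radians(beta_deg) / 2.0)


# A particle with sigma = 0.75 voxel in x and y, stretched to 1.5 voxel in z
r_d = elongation_rate(gaussian_profile(0.75), gaussian_profile(0.75),
                      gaussian_profile(1.5))
r_geometric = initial_elongation(60.0)  # total view angle of 60 degrees
```

For the synthetic particle, `r_d` comes out close to 2, and a total view angle of 60 degrees gives a geometric ratio of about 1.73.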

Fig. 1 Sketch of particle elongation (Kim et al. 2013)

Besides the shape of particles, another important negative factor in particle reconstruction is the ghost particle, which was introduced in Sect. 1. Nonzero intensity exists at almost all intersections of LOS from each camera, and some of these intersections will generate ghost particles within the MART algorithm. Normally, the intensity of ghost particles is statistically lower than that of real particles in the reconstructed particle field, as shown by probability density function (PDF) analysis (Elsinga et al. 2006; Novara et al. 2010; Discetti et al. 2013b; Elsinga 2013; Elsinga and Tokgoz 2014). This means that particle intensity is a potential criterion to differentiate ghost particles from real particles. However, the number and intensity of ghost particles increase with increasing tracer concentration, which makes it difficult to distinguish actual particles from ghost particles at high ppp.

From previous analysis, the criterion of MART does not take advantage of the isotropic Gaussian distribution of a particle and its additional intensity information. Therefore, an intensity-enhanced MART designed in the current work introduces an intervention to redistribute the elongated intensity and ghost particles intensity. This method not only equalizes the dimensions of particles in different directions, but also enhances the intensity contrast ratio between actual particles and ghost particles. The results indicate that the errors can be reduced by this method.

2.2 Inverse diffusion to shrink elongated particles

Ignoring the physical causes, the particle elongation can be considered as the convolution of the original volume with an anisotropic Gaussian kernel. As pointed out by Perona and Malik (1990), convolution with Gaussian weight coefficients may equivalently be viewed as the solution of heat conduction or diffusion, which suggests that the voxel (or pixel) intensity can be characterized by the diffusion function. Therefore, the elongation of particles in the z direction can be suppressed if an inverse diffusion function is applied. Following this idea, a large blurred particle can be shrunk to a small particle. In the present work, a one-dimensional inverse diffusion function is adopted because particles are mainly elongated in the z direction. The function can be expressed as

$$\frac{\partial E}{\partial t}=-\Delta E \qquad (5)$$

where the intensity is denoted as E, and \(\Delta\) is the Laplace operator. The time t can be considered as a scalar parameter related to the iteration. Then, the function is discretized into the difference scheme

$$\tilde{E}(x,y,z)=E(x,y,z)-\delta \left[ E(x,y,z+1)-2E(x,y,z)+E(x,y,z-1)\right] \qquad (6)$$

\(\tilde{E}\) represents the intensity field processed by the inverse diffusion operation. \(\delta\) is a positive scalar that determines the strength of the inverse diffusion, which means that the sharpening of particles becomes stronger as \(\delta\) increases. However, it is worth noting that this discrete function is ill-posed when \(\delta\) exceeds a certain value. Empirically, it is suggested to set \(\delta\) to less than 1.
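As an illustration, the sketch below applies a single inverse diffusion correction to a 1D profile along z, assuming the usual second-order central difference for \(E_{zz}\), replicated values at the two ends of the profile, and clipping of negative results to zero. The helper names and the test profile are hypothetical.

```python
import math


def inverse_diffusion_z(profile, delta):
    """One inverse diffusion step along z:
    E~[k] = E[k] - delta * (E[k+1] - 2 E[k] + E[k-1]),
    with negative values replaced by zero."""
    n = len(profile)
    out = []
    for k in range(n):
        left = profile[k - 1] if k > 0 else profile[k]        # replicate edge
        right = profile[k + 1] if k < n - 1 else profile[k]   # replicate edge
        value = profile[k] - delta * (right - 2.0 * profile[k] + left)
        out.append(max(value, 0.0))
    return out


def moment_sigma(profile):
    """Standard deviation from the intensity-weighted second moment."""
    total = sum(profile)
    centre = sum(k * v for k, v in enumerate(profile)) / total
    variance = sum((k - centre) ** 2 * v for k, v in enumerate(profile)) / total
    return math.sqrt(variance)


# Elongated particle: Gaussian with sigma = 1.5 voxel, centred at index 12
original = [math.exp(-(k * k) / (2.0 * 1.5 ** 2)) for k in range(-12, 13)]
sharpened = inverse_diffusion_z(original, delta=0.5)

sigma_before = moment_sigma(original)
sigma_after = moment_sigma(sharpened)
```

The processed profile keeps its peak at the same voxel, is narrower than the input (`sigma_after` is smaller than `sigma_before`), and contains no negative intensity because of the clipping.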

Further investigations have been carried out to study the characteristics of the inverse diffusion function. For ease of explanation, a three-dimensional particle is simplified to a one-dimensional Gaussian distribution in the z direction as

$$E(z)=E_0\exp \left( -\frac{z^2}{2\sigma ^2}\right) \qquad (7)$$

The standard deviation \(\sigma\) represents the size of the particle. A large \(\sigma\) means a flat particle with a large diameter. The effect of the inverse diffusion operation for different \(\sigma\) is shown in Fig. 2. In this figure the black solid line, black dotted line and red solid line represent the original distribution of the particle (E), the negative of the second-order derivative (\(-\delta E_{zz}\)) and the processed distribution (\(\tilde{E}\)) after a single inverse diffusion correction, respectively. Both original distributions with different \(\sigma\) can be sharpened with different values of the inverse diffusion factor \(\delta\), which indicates that \(\delta\) should be determined by the elongated rate \(r_d\). Note that negative intensity may occur where the absolute value of z is larger than \(\sqrt{\sigma ^4/\delta +\sigma ^2} (\delta > 0)\), as highlighted by the blue solid lines in Fig. 2. The negative intensity only appears at the edge of particles and can be replaced with zero. Its effect on the shape of particles is negligible.
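The bound on z quoted above follows directly from the one-dimensional Gaussian profile. The short derivation below is a sketch that assumes a particle centered at \(z=0\) and uses the continuous second derivative in place of the finite difference.

```latex
\begin{aligned}
E(z) &= E_0 \exp\!\left(-\frac{z^2}{2\sigma^2}\right), \qquad
E_{zz} = E(z)\left(\frac{z^2}{\sigma^4}-\frac{1}{\sigma^2}\right),\\
\tilde{E}(z) &= E - \delta E_{zz}
 = E(z)\left(1+\frac{\delta}{\sigma^2}-\frac{\delta z^2}{\sigma^4}\right),\\
\tilde{E}(z) < 0 &\iff z^2 > \frac{\sigma^4}{\delta}+\sigma^2
 \iff |z| > \sqrt{\sigma^4/\delta+\sigma^2}.
\end{aligned}
```

For example, with \(\sigma =1.5\) and \(\delta =0.5\) the threshold is \(|z|\approx 3.5\) voxels, about \(2.3\sigma\), where the original intensity has already dropped to a few percent of its peak.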

The inverse diffusion factor \(\delta\) is the only parameter that needs an a priori estimation based on the elongated rate \(r_d\). The relationship between \(\delta\) and \(r_d\) is investigated via a 2D simulation of intensity diffusion. In total, 4000 isotropic Gaussian particles with standard deviation \(\sigma = 1\) are randomly generated in an image of \(1024\times 1024\,\hbox {pixel}^2\) located in the x–z plane. In order to find the relationship between the elongated rate \(r_d\) and \(\delta\), these particles are elongated only along the z direction by applying the diffusion function \(\tilde{E} = E+\delta \cdot E_{zz}\) once, with \(\delta\) varied from 0 to 1 in steps of 0.05. Then, the peaks of the particles are detected and fitted to calculate the elongated rate \(r_d\) based on Eq. 4. The result is given in Fig. 3a, in which the horizontal and vertical axes represent \(r_d\) and \(\delta\), respectively. As the trend of the red triangles shows, the particles become more elongated with increasing \(\delta\). The relationship can be approximated by a power function (black solid curve)

$$\delta =p_1\, r_d^{\,p_2}+p_3 \qquad (8)$$

where \(p_1=-1.40\), \(p_2=-1.97\), \(p_3=1.41\). Thus, the inverse diffusion factor \(\delta\) can be computed once the elongated rate \(r_d\) has been evaluated in each reconstruction step. Consequently, the inverse diffusion Eq. 6 is applied to correct the shape of the particles. Although this fitting curve is calculated from a test of particles with \(\sigma =1\), it also works well for other particle diameters over the iterations, as shown in Fig. 3b. Five different particle diameters with an identical initial elongated rate of 2 are tested, in which the factor \(\delta\) is determined by Eq. 8. The elongation rate of the different particles converges to about 1 within 10 iterations. Because \(\delta\) is bounded between 0 and 1, it can simply be set to 1 when \(r_d\) is larger than 1.8. The detailed steps will be given in Sect. 2.4.
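The mapping from \(r_d\) to \(\delta\) can be sketched as follows. The coefficients are those quoted above; the functional form \(p_1 r_d^{p_2}+p_3\) is an assumption about the fitted power function (it gives \(\delta \approx 0\) at \(r_d=1\) and \(\delta \approx 1\) at \(r_d=1.8\), in line with the text), and the lower clamp at zero for \(r_d<1\) is an added safeguard, not something stated in the source.

```python
P1, P2, P3 = -1.40, -1.97, 1.41  # fit coefficients quoted in the text


def inverse_diffusion_factor(r_d):
    """Estimate delta from the elongated rate r_d (r_d must be positive).

    Assumed form: delta = P1 * r_d**P2 + P3, set to 1 for r_d > 1.8 and
    clamped to [0, 1] otherwise (the lower clamp is an assumption).
    """
    if r_d > 1.8:
        return 1.0
    delta = P1 * r_d ** P2 + P3
    return min(max(delta, 0.0), 1.0)


delta_isotropic = inverse_diffusion_factor(1.0)   # nearly no correction
delta_moderate = inverse_diffusion_factor(1.4)
delta_strong = inverse_diffusion_factor(2.5)      # saturated at 1
```

An isotropic particle receives almost no sharpening, while strongly elongated particles receive the maximum correction allowed by the empirical limit on \(\delta\).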

Fig. 3 a The simulated variation in \(\delta\) as a function of \(r_d\) for \(\sigma =1\). The black solid curve is the power-function fit. b The evolution of \(r_d\) when the inverse diffusion function is applied to the particles. The different colors of the solid lines represent particle sizes of \(\sigma\) equal to 0.6, 0.8, 1.0, 1.2 and 1.4

2.3 Suppress the intensity of ghost particles

In Sect. 2.2, the inverse diffusion function was studied as a means to shrink the elongated particles. For the other error source, ghost particles, an effective algorithm is designed based on the fact that the intensity of ghost particles is normally lower than that of true particles. The idea is to reduce low intensities and enhance high intensities; it is first introduced without considering image noise. The reconstructed volume in each iterative step is multiplied by an intensity suppressing factor \(\alpha\), which is

where \(\alpha\) is relative to the intensity E and the seeding density ppp. On the other hand, to avoid the unnecessary disturbance to true particles, the effect range of \(\alpha\) should be bounded with the range of ghost particles. Therefore, the optimal suppressing factor \(\alpha\) should be negatively correlated with the probability density function \(g_{(E,ppp)}\) of ghost particles, which can be defined as

The suppression can be achieved by substituting Eq. 10 into Eq. 9.

In real measurements, the PDF of ghost particles is unknown. All of the PDFs in the present work are normalized by the total number of particles, not the number of ghosts. To obtain \(g_{(E,ppp)}\), numerical simulations are performed to examine the distribution of ghost particles using the SF-MART algorithm. Although the PDFs of particle peak intensity for actual and ghost particles in reconstructed objects have been given in many papers (Elsinga et al. 2006; Discetti et al. 2013b), no quantitative description of them has been provided. The simulation test in the current work is the same as that of Elsinga et al. (2006). A volume of \(35\times 35 \times 7\,\hbox {mm}^3\) is discretized into \(700\times 700 \times 140\,\hbox {voxel}^3\) with a voxel size of 0.05 mm. Four virtual cameras with \(700\times 700\, \hbox {pixel}^2\) are placed to the left, right, above and below with a viewing angle of \(30^{\circ }\). The ratio between voxel size and pixel size is 1. Gaussian particles with a diameter of 3 voxels (\(\sigma =0.75\)) are randomly distributed in the test volume with subvoxel accuracy, and the peak intensity is 255. The particle images of each camera are generated from the projection according to Eq. 1. The measurement volume is reconstructed from an initially uniform field in ten iterations. After each iteration except the last, the reconstructed volume is filtered by a \(3 \times 3 \times 3\) Gaussian kernel with standard deviation 0.5 (Discetti et al. 2013b).

The test simulated seven cases with seeding densities of [0.05, 0.075, 0.1, 0.125, 0.15, 0.175, 0.2], corresponding to \(N_s\) of [0.70, 0.83, 0.90, 0.94, 0.97, 0.98, 0.99]. \(N_s\) is 0.7 when ppp is equal to 0.05, which is the same as the result of Thomas et al. (2014). Intensity peaks were considered as actual particles if they were located within a 1 voxel radius of the correct positions. Other intensity peaks above 5 counts were considered as ghost particles. Taking \(ppp = 0.15\) as an example, Fig. 4a shows the PDF of all particles, including ghost and real particles. When the PDFs of ghosts (black bars) and actual particles (magenta bars) are plotted separately as in Fig. 4b, the number of ghost particles decreases rapidly with increasing peak intensity. There are 68,284 actual particles and 207,293 ghost particles in the reconstructed particle field, which reduces the reliability of the cross-correlation because of the low contrast ratio between true particles and ghost particles.

Fig. 4 a The PDF of all particles. b The PDFs of ghosts and actual particles, respectively. The distribution of ghost particles is fitted by an exponential function, plotted as the solid curve. The ppp is 0.15, and all of the PDFs are normalized by the total number of particles with a bin size of three counts

In order to quantitatively describe the distribution of ghost particles under different seeding densities, an exponential function is fitted to the distribution of ghosts; it matches the data better than alternatives such as Gaussian or polynomial functions. The fitting formula is expressed as

where \(E_r\) is the relative intensity normalized by the maximum of particle intensity (Thomas et al. 2014). Thus, the distribution of ghost particles can be approximated by an exponential function with parameters of \((A_g, B_g)\).

The fitting results for ghosts are plotted in Fig. 5a at ppp of 0.05, 0.10, 0.15 and 0.20. Different colors represent different values of ppp. The plots suggest a gradual variation in \(A_g\) and \(B_g\), which is clearly revealed in Fig. 5b. \(A_g\) decreases with seeding density, while \(B_g\) shows the opposite trend. This means that the influence of ghost particles gradually extends to the region of high intensity as ppp increases, but a considerable number of ghost particles still remain in low-intensity regions. So that the fit can be applied easily to particle reconstruction in real measurements at arbitrary ppp, a power function and a linear function are fitted to \(A_g\) and \(B_g\), respectively, defined as

$$A_g=a_1\, ppp^{\,a_2}+a_3, \qquad B_g=b_1\, ppp+b_2 \qquad (12)$$

In Fig. 5b, the blue solid line is the power fitting with coefficients of \({a_1}=4.17 \times 10^{-5}\), \({a_2} = -3\), \({a_3}=6.42 \times 10^{-2}\), and the red line is the linear fitting with coefficients of \({b_1}=1.82\times 10^{-1}\), \({b_2}=2.65\times 10^{-3}\). Using these two functions, it is very convenient to evaluate the coefficients \(A_g\) and \(B_g\) according to the seeding density ppp, and consequently to estimate the distribution of ghost particles. Although the parameters \(A_g\) and \(B_g\) can be affected by image noise, in our tests, the variation in \(A_g\) and \(B_g\) under low noise level is fairly small and the reconstruction quality is not sensitive to the variation in these two coefficients.

Fig. 5 a The exponential fit curves of the ghost distribution at different seeding densities. The x axis represents the relative intensity \(E_r\). The ppp is 0.05, 0.10, 0.15 and 0.20. b The variation in \(A_g\) and \(B_g\) with ppp. The blue (\(A_g\)) and red (\(B_g\)) solid lines are the fitting results using power and linear functions, respectively

Based on the numerical investigation, we believe that the distribution of ghost particles can be approximately estimated based on Eqs. 11 and 12, which are only dependent on ppp from the particle images. The strategy of suppressing the low intensities can be achieved by substituting Eqs. 10–12 into Eq. 9, which is easy to be introduced into the iteration of tomographic reconstruction.
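The suppression strategy can be sketched as below. The fit coefficients are those quoted in the text; everything else is an assumption made for illustration: the exponential model is taken as \(g=A_g\exp (-E_r/B_g)\), the power and linear fits as \(A_g=a_1\,ppp^{a_2}+a_3\) and \(B_g=b_1\,ppp+b_2\), and the suppressing factor as \(\alpha =1-g\), which is one simple choice that is negatively correlated with \(g\). The function names are hypothetical and the exact definitions in the original equations may differ.

```python
import math

A1, A2, A3 = 4.17e-5, -3.0, 6.42e-2   # power-fit coefficients for A_g
B1, B2 = 1.82e-1, 2.65e-3             # linear-fit coefficients for B_g


def ghost_fit_parameters(ppp):
    """Assumed forms: A_g = a1 * ppp**a2 + a3 and B_g = b1 * ppp + b2."""
    return A1 * ppp ** A2 + A3, B1 * ppp + B2


def ghost_pdf(e_r, ppp):
    """Assumed exponential model g = A_g * exp(-E_r / B_g)."""
    a_g, b_g = ghost_fit_parameters(ppp)
    return a_g * math.exp(-e_r / b_g)


def suppress(intensities, ppp, n_sub=1):
    """Multiply each intensity by alpha = 1 - g (assumed), n_sub times.

    E_r is the intensity normalized by the current maximum; alpha is
    clamped at zero so that intensities never become negative.
    """
    out = list(intensities)
    for _ in range(n_sub):
        e_max = max(out)
        if e_max <= 0.0:
            break
        out = [e * max(0.0, 1.0 - ghost_pdf(e / e_max, ppp)) for e in out]
    return out


# One weak (ghost-like) peak and one strong peak at ppp = 0.15
weak_after, strong_after = suppress([5.0, 255.0], ppp=0.15, n_sub=20)
```

Under these assumptions the weak peak loses more than half of its intensity after 20 subiterations, while the strong peak is left essentially unchanged, which increases the contrast between the two.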

2.4 Intensity-enhanced MART algorithm

Two optimized methods for particle elongation and ghost particles are, respectively, introduced in Sects. 2.2 and 2.3. The intensity-enhanced MART (IntE-MART) algorithm is a combination of these two methods. The flowchart of IntE-MART is given in Fig. 6 as follows:

(a) Initialize the measurement volume with a uniform value (or by the MFG, MR or MLOS methods) when the iteration index k is 1. Different initializations have little influence on the results because the intensity is redistributed in the IntE-MART iterations. In the current work, the initial intensity is set to a uniform value of 1.

(b) Perform a MART iteration.

(c) Smooth the intensity with an isotropic Gaussian kernel of \(3\times 3\times 3\) with a standard deviation of 0.5. Anisotropic filtering is not needed because the particles will be shrunk by the inverse diffusion equation in the next steps.

(d) Statistically calculate the elongation rate \(r_d\) and find the corresponding inverse diffusion factor \(\delta\) based on Eq. 8. Gaussian fitting (Eq. 3) is applied to the mean particle intensity to estimate the standard deviations in the x, y and z directions. The particles are detected as local intensity peaks in the measurement volume.

(e) Substitute \(\delta\) into the inverse diffusion equation (Eq. 6) to shrink the particles along the z direction. This operation is a global treatment performed on all the voxels in the intensity object.

(f) Update the intensity of each voxel by multiplying it by the intensity suppressing factor \(\alpha\), which is estimated from the seeding density ppp according to Sect. 2.3. This step can be repeated many times as a subiteration (the total number of subiterations is denoted by \(N_E\)) to enhance the intensity of actual particles.

(g) Repeat these processes from step b until \(k=N_M\), where \(N_M\) is the default iteration number. Gaussian smoothing and intensity enhancement are not applied in the last iteration, in which MART alone is needed to guarantee the conservation of intensity.

The new technique does not directly detect and eliminate ghost particles. It only redistributes the intensity from weak particles to strong particles, combined with a particle reshaping mechanism that reduces particle elongation. After these additional procedures, MART can efficiently converge to a higher quality of reconstruction.
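The order of operations in steps (a) to (g) can be sketched as a short control-flow skeleton. This is only an illustrative outline under stated assumptions: the callables `mart_step`, `smooth`, `estimate_delta`, `shrink_z` and `suppress` are hypothetical placeholders for the MART update, the Gaussian smoothing, Eq. 8, Eq. 6 and the suppressing factor of Sect. 2.3, none of which is implemented here.

```python
import numpy as np

def inte_mart(volume_shape, mart_step, smooth, estimate_delta, shrink_z,
              suppress, n_m=10, n_e=20):
    """Control-flow skeleton of steps (a)-(g); every callable is a hypothetical stand-in."""
    volume = np.ones(volume_shape)              # (a) uniform initial intensity of 1
    for k in range(1, n_m + 1):
        volume = mart_step(volume)              # (b) one MART iteration
        if k == n_m:
            break                               # (g) the last pass is MART only
        volume = smooth(volume)                 # (c) 3x3x3 Gaussian, sigma = 0.5
        delta = estimate_delta(volume)          # (d) elongation rate r_d -> delta
        volume = shrink_z(volume, delta)        # (e) inverse diffusion along z
        for _ in range(n_e):                    # (f) N_E enhancement subiterations
            volume = suppress(volume)
    return volume
```

With \(N_M = 10\) and \(N_E = 20\), this skeleton performs 10 MART updates, 9 smoothing and shrinking passes, and 180 suppression subiterations.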

3 Synthetic numerical assessment

From the introduction of the IntE-MART algorithm in Sect. 2, the only two parameters needed in practice are the elongation rate \(r_d\) and the seeding density ppp. In this section, the performance of IntE-MART is assessed by simulating the particle motion around a 3D vortex ring, following the test case of Elsinga et al. (2006).

The tested vortex ring, 10 mm in diameter, is located at the center of a 35 × 35 × 7 \(\mathrm{mm}^3\) (in x, y and z) measurement volume. The analytical expression of the displacement field d (in voxel units) is given by:

where R is the distance to the voxel-center ring and l is the width of the vortex. In the present work, the maximum displacement is 2.9 voxels for \(l = 2\) mm. Four cameras are placed to the left, right, above and below the vortex ring with a viewing angle of \(30^{\circ }\). Particles in the first exposure volume of PIV are randomly located at the grid nodes, while the positions of particles in the second exposure volume are shifted by a subvoxel displacement according to Eq. 13. Seven different seeding densities [0.05, 0.075, 0.1, 0.125, 0.15, 0.175, 0.2] are tested with IntE-MART and SF-MART. For both methods, the iteration number of MART is set to \(N_M = 10\) and the intensity is smoothed with a kernel of 3 \(\times\) 3 \(\times\) 3 with a standard deviation of 0.5. Figure 7 shows an example field of the exact solution (a), SF-MART (b) and IntE-MART (\(N_E=20\)) (c) in an \(x-z\) plane at \(ppp = 0.15\). The red circles mark the actual particles. IntE-MART clearly performs better than SF-MART in particle reconstruction in terms of both particle shape and ghosts. However, Fig. 7c also shows that some high-intensity ghost particles are enhanced in the object. A detailed analysis is given in the following subsections.
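As an illustration of the displacement magnitude, the sketch below assumes the functional form \(d = 8\,(R/l)\,e^{-R/l}\) from the vortex-ring test case of Elsinga et al. (2006). This form is not restated in this section and is therefore an assumption here, although its peak value of \(8/e \approx 2.9\) voxels at \(R = l\) agrees with the maximum displacement quoted above. The ring is also assumed to lie in the plane \(z = 0\) with its axis along z, and the function name and arguments are hypothetical.

```python
import numpy as np

def ring_displacement_magnitude(x, y, z, ring_radius=5.0, l=2.0, amplitude=8.0):
    """Displacement magnitude in voxels at points (x, y, z) given in mm.

    Assumed form: d = amplitude * (R / l) * exp(-R / l), where R is the
    distance to the circular core line of a ring lying in the plane z = 0.
    """
    rho = np.hypot(x, y)                  # radial distance from the ring axis
    R = np.hypot(rho - ring_radius, z)    # distance to the ring core line
    return amplitude * (R / l) * np.exp(-R / l)

# Peak displacement occurs at R = l, e.g. 2 mm outside the core in the ring plane.
d_max = ring_displacement_magnitude(7.0, 0.0, 0.0)
```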

In IntE-MART, the additional time-consuming step compared with the traditional MART algorithm is the calculation of the particle elongation \(r_d\). The cost is normally proportional to the number of particles in the measurement volume. In practice, the parameter can be calculated from the strong peaks only, or by scanning for particles on a coarser grid to save time. This paper adopts the latter approach, namely finding particle peaks with a scanning step of three voxels. The algorithm is further accelerated by taking advantage of sparsity (Atkinson and Soria 2009), which means that voxels whose intensities are lower than a threshold are permanently set to zero and are not updated any more. The threshold is set to 1 % of the mean intensity of the field; this value has not been optimized. The processing time of a single reconstruction volume for SF-MART and IntE-MART with \(N_E = 20\) at two seeding densities, \(ppp = 0.05\) and 0.15, was measured in MATLAB (Table 1). During the iterations, the intensity of many voxels gradually falls below the sparsity threshold and is set to zero. Additionally, the correction of particle elongation improves the shape of the reconstructed particles, which accelerates the convergence of MART. Thus, IntE-MART can save about 40 % of the time compared with SF-MART by taking advantage of the sparsity.
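The sparsity rule described above can be sketched as follows. This is a minimal illustration rather than the implementation used for Table 1: the function name `apply_sparsity` and the boolean `active` mask are hypothetical, and the mean is assumed to be taken over all voxels of the field.

```python
import numpy as np

def apply_sparsity(volume, active, fraction=0.01):
    """Zero voxels below fraction * mean intensity and retire them for good."""
    threshold = fraction * volume.mean()
    active = active & (volume >= threshold)   # a retired voxel never becomes active again
    return np.where(active, volume, 0.0), active

volume = np.array([100.0, 100.0, 100.0, 0.1])
active = np.ones(volume.shape, dtype=bool)
volume, active = apply_sparsity(volume, active)   # the 0.1 voxel is below 1 % of the mean
```

Later MART updates would then loop over `active` voxels only, which is where the time saving comes from.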

3.1 Improvement on particle elongation

To refine the shape of particles, the inverse diffusion equation is applied in step (e) of the reconstruction procedure in Sect. 2.4. The elongation rate \(r_d\) of SF-MART and IntE-MART (\(N_{E}=10\)) is shown as a function of the MART iteration number \(N_M\) at \(ppp = 0.15\) in Fig. 8a. After ten iterations, \(r_d\) decreases to a nearly constant value of about 1.4 for SF-MART (blue) and 1.1 for IntE-MART (red). With IntE-MART the particle shape converges rapidly, within six iterations, while the curve of SF-MART decreases gradually over the ten iterations. This suggests that the inverse diffusion equation can efficiently suppress the elongation in the z direction. The curve of SF-MART turns up at the last iteration because no smoothing is applied in the last step.

Furthermore, the final \(r_d\) of the reconstruction after 10 iterations is tested for different ppp, as shown in Fig. 8b. For SF-MART, the final \(r_d\) rises from 1.25 to 1.48 when ppp increases from 0.05 to 0.20 at a constant viewing angle of \(30^{\circ }\). In other words, for a constant viewing angle, the overlap of particle images can also lead to particle elongation. This indicates that careful image preprocessing is still needed to preserve the measurement accuracy (Atkinson et al. 2010). The image preprocessing adopted in our method is introduced in Sect. 4. For IntE-MART, the final \(r_d\) approaches a constant of about 1.1 at higher ppp. The \(r_d\) decreases with increasing ppp because the strength of the inverse diffusion at low ppp is slightly weaker than at high ppp. Since the last procedure in the reconstruction is a pure MART iteration that guarantees intensity conservation, without intensity enhancement or inverse diffusion correction, the particles are slightly elongated to \(r_d = 1.1\) by MART.

3.2 Improvement on quality of reconstruction

The quality of reconstruction Q is defined as

$$Q = \frac{\sum_{x,y,z} E_\mathrm{rec}(x,y,z)\, E_\mathrm{true}(x,y,z)}{\sqrt{\sum_{x,y,z} E_\mathrm{rec}^2(x,y,z)\, \sum_{x,y,z} E_\mathrm{true}^2(x,y,z)}} \qquad (14)$$

which is the normalized correlation coefficient between the reconstructed intensity field \(E_\mathrm{rec}(x,y,z)\) and the artificially generated actual field \(E_\mathrm{true}(x,y,z)\). It is an essential parameter for estimating the precision of reconstruction. According to Elsinga et al. (2006), the reconstruction should be considered sufficiently accurate when Q is above 0.75.
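The normalized correlation coefficient described above can be evaluated in a few lines. The sketch assumes that both fields are sampled on the same voxel grid; the function name `reconstruction_quality` is hypothetical.

```python
import numpy as np

def reconstruction_quality(e_rec, e_true):
    """Normalized correlation between reconstructed and true intensity fields."""
    e_rec = np.asarray(e_rec, dtype=float)
    e_true = np.asarray(e_true, dtype=float)
    numerator = np.sum(e_rec * e_true)
    denominator = np.sqrt(np.sum(e_rec ** 2) * np.sum(e_true ** 2))
    return float(numerator / denominator) if denominator > 0 else 0.0
```

Because of the normalization, Q does not change under a uniform rescaling of the reconstructed intensity. It is lowered by intensity placed where the true field is zero (ghosts) and by intensity missing where it is non-zero.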

In the present work, the lens distortion effect is ignored, and the particle images are generated by spatially integrating the object intensity along the LOS. Although most of the ghosts are weakened by IntE-MART, some of them with strong intensity would be considered as potential true particles. The variation in Q over seven levels of ppp is shown in Fig. 9a. The blue curve with open symbols represents the result of SF-MART, while the red curves with solid symbols show the results of IntE-MART with \(N_E = 5\), 10 and 20, respectively. All the methods are iterated 10 times (\(N_M=10\)). The reconstruction quality of SF-MART decreases quickly with increasing ppp, which is similar to the result of Discetti et al. (2013b). Compared with SF-MART, IntE-MART with \(N_E = 20\) improves the quality factor by up to about 15 %: from 0.65 to 0.74 at a high seeding density of \(ppp = 0.2\) and from 0.78 to 0.90 at \(ppp = 0.15\). The reconstruction quality is also shown as a function of the iteration number \(N_M\). For instance, when ppp is at a high level of 0.15 (Fig. 9b), the curves of IntE-MART increase gradually and grow faster than that of SF-MART. To illustrate the effect of intensity enhancement, Fig. 9c provides the PDFs of true particles at different \(N_E\) for \(ppp=0.15\). All the PDFs are generated from the total number of actual particles with a bin size of 3 counts. The peak clearly shifts with increasing \(N_E\). This phenomenon can be ascribed to the redistribution of intensity by IntE-MART. A larger contrast ratio between true particles and ghost particles is very helpful for ghost particle removal and for reducing the bias error of velocity. Fig. 9c reflects the key idea of IntE-MART.

The effect of IntE-MART on reconstruction quality. a The variation in Q as a function of ppp. b The variation in Q as a function of \(N_M\) when ppp is 0.15. c The PDFs of the reconstructed true particles under different \(N_E\) for \(ppp=0.15\). All the PDFs are counted based on the total number of actual particles with a bin size of three counts

From Fig. 9, it seems that the reconstruction quality gradually increases with the number of enhancement subiterations \(N_E\). However, suppressing the ghosts with a larger \(N_E\) also leads to smaller particles, which means that a larger \(N_E\) may instead reduce the quality of reconstruction. This issue is addressed in Fig. 10, which presents the dependency of Q on \(N_E\) for different ppp. The figure is generated by scanning seven levels of ppp (0.050, 0.075, 0.100, 0.125, 0.15, 0.175, 0.200) combined with ten different \(N_E\) (0, 5, 10, 20, 30, 40, 50, 60, 70, 80) using IntE-MART with \(N_M = 10\) iterations. The plot is linearly interpolated and low-pass-filtered with \(3\times 3\) Gaussian weights to obtain smooth contours. When \(N_E\) is less than 10 (lower white line), Q increases quickly owing to the reduction in ghost particles, while the opposite effect of \(N_E\) on Q is observed when \(N_E\) exceeds 40 (upper white solid line) for all cases of ppp. The test suggests that an \(N_E\) in the range [10, 40] is an effective choice with high iteration efficiency.

3.3 Improvement on velocimetry

The particle displacement at different seeding densities was evaluated by means of the volume deformation iterative multigrid technique (VODIM) (Scarano 2002, 2013; Scarano and Poelma 2009). The final interrogation volume (IV) size is 32 \(\times\) 32 \(\times\) 32 \(\mathrm {voxel}^3\) with 75 % overlap, yielding a velocity field of 84 \(\times\) 84 \(\times\) 14 grid points. For each iteration of VODIM, the velocity field is validated by the normalized median test (Westerweel and Scarano 2005) and smoothed by a 3 \(\times\) 3 \(\times\) 3 Gaussian kernel with standard deviation 0.65, while the final velocity field is only validated, without smoothing. Figure 11a shows the velocity result calculated by IntE-MART with \(N_E=20\) when \(ppp=0.15\). The blue surface is the iso-vorticity surface at 0.13 voxels/voxel, and the color of the vectors represents the velocity magnitude.

The measurement error \(\mathbf {u}_p\) is estimated from the calculated velocity field \(\mathbf {U}\) and the theoretical velocity solution \(\tilde{\mathbf {U}}\), namely \(\mathbf {u}_p=\mathbf {U}-\tilde{\mathbf {U}}\). The error caused by cross-correlation, denoted by \(\mathbf {u}_c\), is also calculated from the exact synthetic particle field for comparison. Taking the u component as an example, the standard deviation of the measurement error \(e^{u}\) can be expressed as

where \(n = 84\times 84 \times 14\) is the total number of grid points indexed by i. The standard deviation of the other components can be evaluated in the same way as in Eq. 15. The \(e^u\) (upper subplot) and \(e^w\) (lower subplot) are plotted in Fig. 11b. The result of v is not shown because it is similar to the result of u. In the figure, the blue solid curve and the red solid curve represent the error of SF-MART and IntE-MART, respectively. The black dash-dot line is the error of the ground truth \(\mathbf {u}_c\) for comparison. Generally speaking, the performance of IntE-MART is superior to that of SF-MART. First, the error of w is reduced almost to the ground truth when ppp is lower than 0.075, owing to the particle shape correction by inverse diffusion. Second, although the error increases with seeding density, the error of IntE-MART is lower than that of SF-MART and decreases with increasing \(N_E\). At high ppp in particular, the effect of IntE-MART becomes more apparent owing to the reduction in the intensity of ghosts. However, the error of IntE-MART is still higher than the ground truth at high ppp, because IntE-MART is not fully efficient in removing high-intensity ghosts. Additionally, for IntE-MART with \(N_E= 20\), 90 % of the deduced velocity vectors have an absolute error less than 0.031 pixel in u and less than 0.055 pixel in w at a ppp of 0.15, while these two values are 0.063 and 0.093 for SF-MART, respectively. In short, IntE-MART can improve the accuracy of velocimetry through elongation correction and ghost suppression.
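The two error statistics used above, the standard deviation of the error and the bound satisfied by 90 % of the vectors, can be sketched as below. The exact normalization is an assumption: the sketch uses the population standard deviation (division by n, mean error removed), and the function names are hypothetical.

```python
import numpy as np

def error_std(u_measured, u_exact):
    """Standard deviation of the error over all grid points (population form, assumed)."""
    err = np.asarray(u_measured, dtype=float) - np.asarray(u_exact, dtype=float)
    return float(np.sqrt(np.mean((err - err.mean()) ** 2)))

def abs_error_bound(u_measured, u_exact, fraction=0.90):
    """Absolute error below which the given fraction of vectors fall."""
    err = np.abs(np.asarray(u_measured, dtype=float) - np.asarray(u_exact, dtype=float))
    return float(np.percentile(err, 100.0 * fraction))
```

Both functions accept arrays of any shape, so a full 84 \(\times\) 84 \(\times\) 14 component field can be passed directly.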

3.4 The effect of image noise on IntE-MART

As discussed in the previous sections, the performance of IntE-MART is superior to that of SF-MART over a wide range of seeding densities under perfect imaging conditions. However, image noise can influence the accuracy of reconstruction according to Atkinson et al. (2010) and Schanz et al. (2014). In order to evaluate the effects of image noise, Gaussian white noise with different variance \(\sigma\) is added to every pixel of the synthetic images. The variance \(\sigma\) is set as a percentage of the standard deviation of the synthetic images. Eight levels of image noise varying from 5 to 40 % are tested at a single seeding density of \(ppp=0.15\) in the present work. The effects of image noise on particle elongation, reconstruction quality and velocity accuracy are shown in Fig. 12.
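A minimal sketch of this noise model is given below. It interprets \(\sigma\) as the standard deviation of zero-mean Gaussian noise, equal to the stated percentage of the standard deviation of the image. That interpretation, the function name and the absence of clipping to the sensor range are all assumptions.

```python
import numpy as np

def add_gaussian_noise(image, level, rng=None):
    """Add zero-mean Gaussian noise with sigma = level * std(image) to every pixel."""
    rng = np.random.default_rng() if rng is None else rng
    image = np.asarray(image, dtype=float)
    sigma = level * image.std()
    return image + rng.normal(0.0, sigma, size=image.shape)

# The eight noise levels tested in this section, from 5 % to 40 %.
noise_levels = [0.05 * k for k in range(1, 9)]
```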

The particle elongation rate of IntE-MART is about 1.1, which is much smaller than that of SF-MART when the image noise level is low. This is consistent with the result of Fig. 8b. However, more and more noise peaks are detected as particles at high noise levels, and the \(r_d\) of these noise peaks (normally the size of a single voxel) is generally very close to 1, so \(r_d\) gradually decreases with increasing image noise. Therefore, an \(r_d\) close to 1 at a high noise level does not indicate an improvement in either SF-MART or IntE-MART. In Fig. 12b, owing to the effect of noise, the reconstruction quality of IntE-MART falls faster than that of SF-MART. The quality of IntE-MART becomes worse than that of SF-MART when the noise level exceeds 25 %. However, the velocity error is less sensitive to image noise than the reconstruction quality because of the filtering effect of cross-correlation. All the errors in Fig. 12c increase with increasing noise level, while IntE-MART preserves a higher accuracy than SF-MART, and the velocity accuracy increases with the number of intensity-enhancement subiterations \(N_E\) when the noise level is less than 35 %.

3.5 Ghost removal in IntE-MART

The signal-to-noise ratio of the intensity field is improved by suppressing the low-intensity particles with an a priori suppressing factor \(\alpha\) in IntE-MART. It is found that the distribution of peak intensity after the intensity enhancement has new characteristics. To identify individual particles, all particles, including actual particles and ghost particles, are detected as peaks with an intensity larger than 5 counts over a local 3 \(\times\) 3 \(\times\) 3 subvolume, which is the same as the method introduced in Sect. 2.3. Figure 13a gives the PDF of all the detected particles when ppp is 0.15 and \(N_E = 20\) after 10 IntE-MART iterations. Note that the reconstructed particles have different intensities, although all original synthetic particles have a peak intensity of 255 counts. The PDF clearly shows two local minima, which are labeled A and B. To further investigate the characteristics of true particles and ghost particles after the intensity enhancement, Fig. 13b gives the PDFs of the two types of particles individually. Minimum A reflects the typical behavior that ghost particles from the MART algorithm are normally weaker than the true particles. This behavior cannot be noticed in the overall PDF of SF-MART shown in Fig. 4, but it is enhanced by the IntE-MART process. When the low-intensity particles are suppressed, the intensity of ghost particles is transferred to the true particles. Therefore, a clear minimum A appears in the PDF of Fig. 13a. Ghost particles with moderate intensity are iteratively enhanced as well, but not as strongly as the true particles because of the conservation constraint in the MART iteration. This is the mechanism behind minimum B.

It is believed that minimum A can be used to discriminate between ghost particles and real particles, as clearly revealed in Fig. 13b. Almost all the particles with an intensity lower than that indicated by A are ghosts. Thus, the ultimate goal of ghost removal may be achieved by removing the particles below this separation intensity. According to Elsinga and Tokgoz (2014), the particles are removed from the volume by setting the reconstructed intensity to zero within a region of 5 \(\times\) 5 \(\times\) 5 \(\mathrm {voxel}^3\) centered at the peak location. However, this introduces many artificial particles into the volume because of the sharp edges. Thus, in the present work, two passes of ghost removal are adopted to remove the particles lower than the local minimum A, which is 100 counts in Fig. 13. The padding size in the first pass is 5 voxels, the same as in Elsinga and Tokgoz (2014). Subsequently, the remaining particles lower than 100 counts are detected again and padded with a size of 3 voxels. Finally, the remaining intensity field is smoothed by a Gaussian weight function (\(3 \times 3 \times 3\) kernel size with standard deviation 0.5). No additional MART is performed after ghost removal.
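The peak detection and the two removal passes can be sketched as follows. This is an illustrative outline under stated assumptions: peaks are taken as voxels that exceed 5 counts and are not smaller than any of their 26 neighbors (so plateau voxels all count as peaks), the function names are hypothetical, and the final Gaussian smoothing is left out.

```python
import numpy as np

def find_peaks(volume, min_intensity=5.0):
    """Indices of voxels above min_intensity that are maxima of their 3x3x3 neighborhood."""
    volume = np.asarray(volume, dtype=float)
    padded = np.pad(volume, 1, mode="constant", constant_values=-np.inf)
    nx, ny, nz = volume.shape
    is_peak = volume > min_intensity
    for dx in range(3):
        for dy in range(3):
            for dz in range(3):
                if (dx, dy, dz) != (1, 1, 1):   # skip the voxel itself
                    is_peak &= volume >= padded[dx:dx + nx, dy:dy + ny, dz:dz + nz]
    return np.argwhere(is_peak)

def remove_weak_peaks(volume, threshold, size):
    """Zero a size^3 cube centered on every detected peak weaker than threshold."""
    out = volume.copy()
    h = size // 2
    for i, j, k in find_peaks(volume):
        if volume[i, j, k] < threshold:
            out[max(i - h, 0):i + h + 1,
                max(j - h, 0):j + h + 1,
                max(k - h, 0):k + h + 1] = 0.0
    return out

def two_pass_ghost_removal(volume, threshold=100.0):
    """First pass with 5-voxel padding, second pass with 3-voxel padding."""
    volume = np.asarray(volume, dtype=float)
    volume = remove_weak_peaks(volume, threshold, size=5)
    return remove_weak_peaks(volume, threshold, size=3)
```

The second pass targets the weak peaks that the first pass creates at the sharp edges of the zeroed cubes.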

In order to quantify the effect of ghost removal, the reconstruction quality Q, the number of ghost particles and the error of the u component at \(ppp = 0.15\) are given in Table 2. The effect of image noise on ghost removal is also investigated for the optimal case (IntE-MART with \(N_E=20\)) in this table. Two methods, SF-MART and IntE-MART, both with 10 iterations, are compared. For SF-MART, the ghost removal cannot be applied because there is no clear separation point in the PDF of peak intensity, as shown in Fig. 4a, whereas such a point is clearly visible in the PDF of IntE-MART (Fig. 13). The detailed analysis is given in the following paragraphs.

First, the cases without image noise are analyzed. For IntE-MART without ghost removal, the number of true particles is very stable (around 68,000, close to the real particle number of 73,500) and the number of ghosts clearly decreases with increasing \(N_E\). For IntE-MART with ghost removal, the number of ghost particles is much less than the number of actual particles, while Q does not change. In particular, for \(N_E = 20\) without noise, only about 0.9 % ghost particles remain after the ghost removal. However, the ghost removal may also remove some actual particles, which can increase the measurement error in the velocity field. For instance, the error of u after ghost removal is 0.033 pixels in the case of \(N_E=20\), which is slightly greater than the error of 0.03 pixels without ghost removal.

Second, when image noise is considered, the number of actual particles is still stable and decreases only slightly with increasing noise level. The number of ghost particles, however, can reach 201,870 when the noise level is 20 %. The increasing number of ghost particles leads to a lower reconstruction quality as well as a worse velocity accuracy. When the noise is increased to a high level, the ghost removal based on IntE-MART cannot be applied because the separation point A is fully contaminated by noise particles. Therefore, the ghosts are eliminated only for noise levels of 5 and 10 %. After ghost removal, the number of ghosts clearly decreases, but a number of actual particles are also removed from the reconstructed volume. Because the number of eliminated actual particles increases with increasing noise level, the error of velocity after ghost removal becomes larger and also increases with the noise level.

In practice, it is very hard to keep the peak intensity of particles constant in measurements. To verify the effect of IntE-MART when the particle intensity varies, a simulation with a random distribution of peak intensity between 1 and 255 counts has been performed at the same seeding density of 0.15. Figure 14 shows the final peak intensity distribution after 10 iterations of IntE-MART with \(N_E = 20\). Ideally, true particles should have a uniform distribution between 1 and 255 counts. Owing to the suppression applied by IntE-MART, a two-minimum pattern of the PDF is also observed in Fig. 14, similar to Fig. 13. The difference is that true particles with small intensity are eliminated as weak ghosts. True particles with low intensity contribute only a little to the cross-correlation coefficient when deducing the velocity. For this reason, low-intensity actual particles are allowed to be removed together with ghost particles when the aim is to eliminate most ghost particles in the reconstructed intensity field (Elsinga and Tokgoz 2014). According to this principle, the local minimum A can also be considered as a critical point to discriminate between actual particles and ghost particles. In this test, the case of IntE-MART with \(N_E = 20\) yields the minimum A at around 57.5 counts, as shown in Fig. 14. Coincidentally, the ratio between the threshold value and the maximal artificial peak value is 0.22, which is slightly larger than the ratio of 0.17 simulated by Elsinga and Tokgoz (2014), whose intensity threshold was 20 counts against a maximal artificial peak value of 120 counts.

The quality, the numbers of actual and ghost particles and the measurement error for random particle intensity are provided in Table 3. For IntE-MART without ghost removal, the result is similar to Table 2 except that the number of actual particles is lower than in the simulation based on the constant peak value. The image noise clearly reduces the number of actual particles and increases the number of ghosts. For instance, the number of actual particles decreases from 63,002 to 54,469, while the number of ghosts increases from 29,191 to 177,421 when the noise level rises to 20 % with \(N_E=20\), which leads to a higher measurement error. The ghost removal based on the histogram of particle intensity can only be applied to two cases (image noise of 0 and 5 % for \(N_E=20\)) because the distribution of particle intensity is sensitive to the image noise. Although the ghosts are clearly eliminated, more real particles are also removed as the noise level increases. The reduction in actual particles decreases the quality of cross-correlation.

From the previous analysis, the ghost filtering technique based on IntE-MART is effective only at limited noise levels. This method can also filter out some actual particles because intensity is the only separation criterion, which can reduce the accuracy of velocimetry. Although the ghost removal cannot improve the measurement accuracy for the simulated vortex ring motion, the effect of IntE-MART and ghost removal on the velocity profile of a TBL in a real experiment is considered further in Sect. 4.

4 Turbulent boundary layer experiment

The applicability of IntE-MART to a real experiment was verified by a TBL experiment conducted in a water tunnel at Beihang University, China. The flow was generated over a flat acrylic glass plate which was vertically mounted in the tunnel. The dimensions of this plate are 7 \(\times\) 1 \(\mathrm {m^2}\), and an elliptic leading edge was employed. The width of the test section of the water tunnel is 1 \(\mathrm {m}\), and the plate was placed at 0.6 \(\mathrm {m}\) from the side wall of the tunnel. The depth of water was 0.6 \(\mathrm {m}\). Thus, this experiment was performed in a \(0.6 \times 0.6\,\hbox {m}^2\) test section. The measurement volume was located 6720 mm from the leading edge and centered in the spanwise direction, with its major dimensions parallel to the wall (see Fig. 15). The TBL flow was tripped by a spanwise tripwire of 3 mm diameter attached 100 mm downstream of the leading edge. A double-pulse laser with a wavelength of 532 nm was used to illuminate the volume. The laser beam was expanded to a 16-mm-thick laser sheet located 5.5 mm away from the plate. Four 12-bit \(2456 \times 2058\,\hbox {pixel}^2\) IMPERX B2520 CCD cameras were placed laterally along the side wall, satisfying the Scheimpflug condition. The view angle of the cameras is about \(26^{\circ }\). Four \(f = 45\) mm Nikon camera lenses were used with apertures of \(f_\# = 8\) and 11 for forward and backward scattering, respectively. The configuration is shown in Fig. 15. The flow was seeded with hollow glass particles with a mean diameter of 20 \(\upmu \hbox {m}\) and a density of \(1.05 \cdot 10^{-3}\,\hbox {g}\cdot \hbox {mm}^{-3}\). The cameras were calibrated using a polynomial mapping function (Soloff et al. 1997). The calibration plane was translated along the wall-normal direction from 5.5 to 21.5 mm in steps of 2 mm. The initial mapping function was corrected by the self-calibration method (Wieneke 2008), which reduced the error to 0.1 pixels.

Before the Tomo-PIV experiment, the velocity profile of the TBL was calibrated by LDV measurement. The Musker profile method introduced by Kendall and Koochesfahani (2008) was applied to fit the LDV data and estimate the skin friction velocity \(u_\tau\). The parameters of the TBL are given in Table 4. \(\delta\) is the thickness of the turbulent boundary layer and \(y^*\) represents the wall unit (inner length scale). The kinematic viscosity coefficient \(\nu\) was estimated according to the experimental temperature of 18 \(^{\circ }\)C. The Reynolds numbers based on momentum thickness (\(Re_\theta\)) and skin friction velocity (\(Re_\tau\)) are 5180 and 1770, respectively. The turbulence level of the free-stream velocity was 0.97 % when the free-stream velocity \(U_\infty\) was about 0.413 \(\mathrm {m/s}\).

For the Tomo-PIV experiment, the laser was pulsed at a frequency of 4 Hz, with a time interval \(\Delta t = 1.2\) ms. Each camera captured 240 images in about 30 s, which generated 120 velocity fields. The ppp is about 0.06 after preprocessing to remove the noise. In this work, four preprocessing steps were applied to the raw images:

(a) Subtract the background. This step uses the historical minimum value over the image set.

(b) Subtract the sliding median value over 32 \(\times\) 32 pixels in each image. The median value is more effective than the minimum, especially at low seeding density.

(c) Normalize the particle intensity by dividing by the local standard deviation over \(32\times 32\) pixels (Sage 2011).

(d) Adjust the intensity to the original grayscale, and apply a Gaussian filter with a \(3 \times 3\) kernel and standard deviation 0.5.
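The four preprocessing steps can be sketched with NumPy as below. Several details are assumptions made only for illustration: negative values after the median subtraction are clipped to zero, "original grayscale" is read as rescaling each image back to its peak level before normalization, borders are reflected, and all function names are hypothetical. The sliding-window statistics are written for clarity rather than speed or memory, so the sketch suits small images only.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def windows(img, size):
    """All size x size neighborhoods of img, using reflected borders."""
    lo = size // 2
    hi = size - 1 - lo
    padded = np.pad(img, ((lo, hi), (lo, hi)), mode="reflect")
    return sliding_window_view(padded, (size, size))

def gaussian3x3(img, sigma=0.5):
    """Separable 3 x 3 Gaussian filter with reflected borders."""
    w = np.exp(-0.5 * (np.arange(-1, 2) / sigma) ** 2)
    w /= w.sum()
    p = np.pad(img, 1, mode="reflect")
    tmp = w[0] * p[:-2, :] + w[1] * p[1:-1, :] + w[2] * p[2:, :]
    return w[0] * tmp[:, :-2] + w[1] * tmp[:, 1:-1] + w[2] * tmp[:, 2:]

def preprocess(images, window=32):
    """Apply steps (a)-(d) to a stack of images with shape (n_images, H, W)."""
    images = np.asarray(images, dtype=float)
    background = images.min(axis=0)                  # (a) historical minimum
    result = []
    for img in images:
        img = img - background
        img = img - np.median(windows(img, window), axis=(-2, -1))   # (b)
        img = np.clip(img, 0.0, None)                # assumption: clip negatives
        peak = img.max()
        local_std = windows(img, window).std(axis=(-2, -1))
        img = np.divide(img, local_std, out=np.zeros_like(img),
                        where=local_std > 0)         # (c) local normalization
        if img.max() > 0:
            img = img * (peak / img.max())           # (d) assumed grayscale rescaling
        result.append(gaussian3x3(img, 0.5))         # (d) 3 x 3 Gaussian filter
    return np.stack(result)
```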

In order to determine the thickness of the intensity profile of laser sheet, larger volumes with size of 60 \(\times\) 21 \(\times\) 40 \(\mathrm {mm^3}\) in x, y and z directions (streamwise, wall-normal and spanwise directions) were reconstructed by SF-MART and IntE-MART (\(N_M = 6\), \(N_E = 10\)). There are 382 voxels in the direction of thickness. As shown in Fig. 16, the mean intensity profile of 240 IntE-MART reconstructed volumes presents two clear drops at the edges highlighted by two red lines, which indicates a successful reconstruction. Therefore, the volume between two intensity edges (4.6 and 20 mm along y direction) was utilized for further velocity deduction.

The PDFs of particle peak intensity are given in Fig. 17, in which the blue and red curves represent the results of SF-MART and IntE-MART, respectively. SF-MART yields fewer high-intensity particles than IntE-MART, and low-intensity particles dominate its PDF. For IntE-MART, a local minimum at around 455 counts clearly exists in Fig. 17, which is consistent with the numerical test in Sect. 3.5 and could potentially be used as a separation point for ghost removal.

The comparison of distribution of particles on real TBL experiment. Blue the PDF of all particles of SF-MART with \(N_M=6\). Red the PDF of all particles of IntE-MART with \(N_M=6\) and \(N_E=10\). The bin size is 50 counts. The detail of the local minimum in black box is enlarged in the small figure

Reconstructed particle fields were evaluated in the cases with and without ghost removal. The velocity fields were deduced via VODIM method at final IV of 48 \(\times\) 48 \(\times\) 48 \(\mathrm {voxel^3}\) with 50 % overlap. The final velocity fields were validated by normalized median test (Westerweel and Scarano 2005). With the magnification of 55 \(\upmu \hbox {m}/\hbox {voxel}\), the final IV corresponds to a spatial resolution about \(2.64 \times 2.64 \times 2.64\,\hbox {mm}^3\) (\(38.8 \times 38.8 \times 38.8\) wall units). Thus, a velocity field over a grid of \(44\times 10 \times 29\) with a spacing of 1.32 mm (19.4 wall units) in all directions was obtained. The velocity volume is in the wall-normal region of \(93< y^+ < 267\).
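The resolution figures quoted above follow from simple arithmetic, which the short check below reproduces. The grid-count rule (a vector only where a full interrogation volume fits inside the reconstructed volume) is an assumption, as is taking the volume extent as 60 \(\times\) 15.4 \(\times\) 40 mm, i.e. the region between y = 4.6 and 20 mm.

```python
voxel_mm = 0.055          # magnification: 55 micrometers per voxel
iv_voxels = 48            # final interrogation volume edge length
overlap = 0.5             # 50 % overlap

iv_mm = iv_voxels * voxel_mm                  # 2.64 mm
spacing_mm = iv_mm * (1.0 - overlap)          # 1.32 mm
wall_unit_mm = iv_mm / 38.8                   # implied by the quoted 38.8 wall units
spacing_wall_units = spacing_mm / wall_unit_mm

def grid_points(extent_mm):
    """Number of vectors along one axis if every IV must fit inside the volume."""
    return int((extent_mm - iv_mm) // spacing_mm) + 1

grid = tuple(grid_points(e) for e in (60.0, 20.0 - 4.6, 40.0))
print(round(iv_mm, 2), round(spacing_mm, 2), round(spacing_wall_units, 1), grid)
```

Under these assumptions the check gives a 2.64 mm interrogation volume, a 1.32 mm (19.4 wall units) vector spacing and a 44 \(\times\) 10 \(\times\) 29 grid, matching the values in the text.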

The mean velocity profile (a) and fluctuating velocity profile (b) at \(Re_\tau =1770\) over the volume depth from \(y^+=93\) to \(y^+=267\). In (b), the solid purple line represents the log-linear fitting of the LDV data. In both panels, the right y axis is the particle displacement in voxel units. In the logarithmic law, \(\kappa\) and B are 0.41 and 5.0, respectively

Figure 18 shows the mean velocity profile and the fluctuating velocity profile obtained from SF-MART and from IntE-MART with and without ghost removal. For comparison, the LDV data and the theoretical profile of the logarithmic law (\(\kappa = 0.41\) and \(B = 5.0\)) are also provided in the figure. In Fig. 18a, the LDV data show good agreement with the theoretical profile, while a bias error (in the slope of the profile) is present in all of the Tomo-PIV data, as also reported by Atkinson et al. (2011) and Gao (2011). The bias error is about 0.06 pixels at \(y^+=248\). Two potential reasons are considered. First, the particle displacement variation over the thickness is not large enough to minimize the effect of the coherent ghost particles (Elsinga et al. 2011). This kind of ghost always has high intensity and contributes negatively to the cross-correlation. Second, IntE-MART and ghost removal only eliminate the particles with very low intensity, which is consistent with the simulation test in Fig. 11. It is probable that some high-intensity ghost particles are enhanced and not eliminated by the present method, while some real particles with low intensity are weakened and eliminated because of the variable particle intensity and image noise in the real experimental environment. The differences observed among the three reconstruction treatments are negligible.

The effect on the RMS is also investigated in Fig. 18b. The solid purple curve is the log-linear fitting result of the LDV data, and the dash-dot curve is its 5 % underestimation. According to Saikrishnan et al. (2006), the turbulent intensity of a PIV-measured turbulent boundary layer is \(\sim\)95 % when the spatial resolution is \(\sim\)38.8 wall units at \(y^+=110\). The curves of the three different techniques suggest an underestimation of the RMS of velocity due to the filtering effect of PIV correlation. With the resolution of the current experiment, the RMS profile is expected to be close to 95 % of the turbulence intensity of the LDV data (the purple dash-dot curve). No difference is observed between IntE-MART with and without ghost removal, while SF-MART has a higher RMS at the lower wall-normal positions (where the intensity is high, as shown in Fig. 16). It is believed that SF-MART is more affected by ghost particles, which can increase the velocity variation.

5 Conclusions

The most critical problems in Tomo-PIV reconstruction are particle elongation and ghost particles. The elongation along the thickness direction can be characterized by a one-dimensional diffusion function, and the distribution of ghost particles can be described quantitatively by an exponential function. Taking advantage of these two points, IntE-MART for Tomo-PIV is proposed in this work. The new method not only shrinks elongated particles through an inverse diffusion function, but also suppresses the intensity of weak particles (normally ghosts). Numerical simulations with and without noise are performed to test the performance of IntE-MART. The results indicate that IntE-MART substantially improves the quality of reconstruction and velocimetry when the noise level is less than 25 %. Additionally, the method can accelerate the reconstruction by increasing the sparsity of the intensity field.

Furthermore, this paper presents a systematic comparison of the peak-intensity distributions of the original SF-MART and IntE-MART. The difference between the distributions reveals a potential separation point that can be used to discriminate ghost particles from actual particles. Without image noise, the simulated results at \(ppp = 0.15\) indicate that only 0.9 % of ghosts are inseparable for the test case with constant peak value and only 1.5 % for the case with random peak value. With image noise, ghost removal based on IntE-MART can still be used at low noise levels. However, it can also filter out a number of actual particles, which may reduce the measurement accuracy.
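The idea of a separation point can be illustrated with a minimal sketch. It assumes that the separation point is taken as the deepest valley of a smoothed histogram of particle peak intensities; the function name, the bin count, the moving-average smoothing and the synthetic intensities (exponentially distributed ghosts, normally distributed actual particles) are all assumptions made for illustration and do not reproduce the procedure or the data of this work.

```python
import random
from itertools import accumulate


def separation_threshold(peaks, bins=64, smooth=5):
    """Return the intensity at the deepest valley of the peak-intensity
    histogram, or None if the histogram has no interior valley.

    `smooth` is the (odd) width of a moving average, a hypothetical
    choice used only to damp bin-to-bin noise.
    """
    lo, hi = min(peaks), max(peaks)
    if hi == lo:
        return None
    width = (hi - lo) / bins
    counts = [0] * bins
    for p in peaks:
        counts[min(int((p - lo) / width), bins - 1)] += 1

    # Moving average, treating bins outside the histogram as empty.
    half = smooth // 2
    smoothed = [sum(counts[max(0, i - half):i + half + 1]) / smooth
                for i in range(bins)]

    # Depth of each bin below the lower of the highest bins on either side.
    left_max = list(accumulate(smoothed, max))
    right_max = list(accumulate(reversed(smoothed), max))[::-1]
    depth = [min(l, r) - s for l, r, s in zip(left_max, right_max, smoothed)]

    deepest = max(range(bins), key=depth.__getitem__)
    if depth[deepest] <= 0:
        return None  # unimodal histogram: no separation point
    return lo + (deepest + 0.5) * width


# Synthetic example: weak ghosts and bright actual particles.
random.seed(0)
ghosts = [random.expovariate(1 / 0.05) for _ in range(2000)]
actual = [random.gauss(1.0, 0.1) for _ in range(3000)]
threshold = separation_threshold(ghosts + actual)
kept = [p for p in ghosts + actual if p > threshold]
```

Particles with peak intensity below the threshold are discarded as ghosts. As noted above, actual particles whose intensity falls below the separation point would be removed as well, so such a threshold is only useful when the two populations are well separated.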

The application of IntE-MART to a real TBL experiment shows no significant difference in the mean velocity profile between SF-MART and IntE-MART with or without ghost removal, because the seeding density ppp is low. However, the RMS profile of IntE-MART is more accurate because of the reduction in low-intensity particles. As discussed in Sect. 4, the bias error of the velocity profile is probably caused by the remaining high-intensity ghost particles.

References

Atkinson C, Soria J (2009) An efficient simultaneous reconstruction technique for tomographic particle image velocimetry. Exp Fluids 47(4):553–568

Atkinson C, Dillon-Gibbons CJ, Herpin S, Soria J (2008) Reconstruction techniques for tomographic PIV (Tomo-PIV) of a turbulent boundary layer. In: 14th International symposium on applications of laser techniques to fluid mechanics. Lisbon, Portugal

Atkinson C, Buchmann N, Stanislas M, Soria J (2010) Adaptive MLOS-SMART improved accuracy tomographic PIV. In: 15th International symposium on applications of laser techniques to fluid mechanics

Atkinson C, Coudert S, Foucaut JM, Stanislas M, Soria J (2011) The accuracy of tomographic particle image velocimetry for measurements of a turbulent boundary layer. Exp Fluids 50(4):1031–1056

Atkinson C, Buchmann NA, Soria J (2013) Computationally efficient storage of 3D particle intensity and position data for use in 3D PIV and 3D PTV. Meas Sci Technol 24(11):115303

Champagnat F, Cornic P, Cheminet A, Leclaire B, Le Besnerais G, Plyer A (2014) Tomographic PIV: particles versus blobs. Meas Sci Technol 25(8):084002

de Silva CM, Baidya R, Khashehchi M, Marusic I (2012) Assessment of tomographic PIV in wall-bounded turbulence using direct numerical simulation data. Exp Fluids 52(2):425–440

de Silva CM, Baidya R, Marusic I (2013) Enhancing Tomo-PIV reconstruction quality by reducing ghost particles. Meas Sci Technol 24(2):024010

Discetti S, Astarita T (2012) A fast multi-resolution approach to tomographic PIV. Exp Fluids 52(3):765–777

Discetti S, Ianiro A, Astarita T, Cardone G (2013) On a novel low cost high accuracy experimental setup for tomographic particle image velocimetry. Meas Sci Technol 24(7):075302

Discetti S, Natale A, Astarita T (2013b) Spatial filtering improved tomographic PIV. Exp Fluids 54(4):1–13

Elsinga GE (2013) Complete removal of ghost particles in Tomographic-PIV. In: 10th international symposium on particle image velocimetry-PIV13

Elsinga GE, Tokgoz S (2014) Ghost hunting-an assessment of ghost particle detection and removal methods for tomographic-PIV. Meas Sci Technol 25(8):084004

Elsinga GE, Scarano F, Wieneke B, van Oudheusden BW (2006) Tomographic particle image velocimetry. Exp Fluids 41:933–947

Elsinga GE, Adrian RJ, van Oudheusden BW, Scarano F (2010) Three-dimensional vortex organization in a high-Reynolds-number supersonic turbulent boundary layer. J Fluid Mech 644:35–60

Elsinga GE, Westerweel J, Scarano F, Novara M (2011) On the velocity of ghost particles and the bias errors in tomographic-PIV. Exp Fluids 50(4):825–838

Gao Q (2011) Evolution of eddies and packets in turbulent boundary layers. PhD thesis, University of Minnesota, Minneapolis

Gao Q, Wang HP, Shen GX (2013) Review on development of volumetric particle image velocimetry. Chin Sci Bull 58(36):4541–4556

Gesemann S, Schanz D, Schröder A, Petra S, Schnörr C (2010) Recasting tomo-PIV reconstruction as constrained and L1-regularized nonlinear least squares problem. In: 15th Int symp on applications of laser techniques to fluid mechanics

Ghaemi S, Scarano F (2011) Counter-hairpin vortices in the turbulent wake of a sharp trailing edge. J Fluid Mech 689:317–356

Herman GT, Lent A (1976) Iterative reconstruction algorithms. Comput Biol Med 6(4):273–294

Kendall A, Koochesfahani M (2008) A method for estimating wall friction in turbulent wall-bounded flows. Exp Fluids 44(5):773–780

Kim H, Westerweel J, Elsinga GE (2013) Comparison of Tomo-PIV and 3D-PTV for microfluidic flows. Meas Sci Technol 24(2):024007

Lynch KP, Scarano F (2014) Experimental determination of tomographic PIV accuracy by a 12-camera system. Meas Sci Technol 25(8):084003

Lynch KP, Scarano F (2015) An efficient and accurate approach to MTE-MART for time-resolved tomographic PIV. Exp Fluids 56(3):1–16

Maas HG, Gruen A, Papantoniou D (1993) Particle tracking velocimetry in three-dimensional flows. Exp Fluids 15:133–146

Mersereau RM (1976) Direct Fourier transform techniques in 3-D image reconstruction. Comput Biol Med 6(4):247

Michaelis D, Novara M, Scarano F, Wieneke B (2010) Comparison of volume reconstruction techniques at different particle densities. In: 15th Int symp on applications of laser techniques to fluid mechanics

Novara M, Scarano F (2012) Performances of motion tracking enhanced Tomo-PIV on turbulent shear flows. Exp Fluids 52(4):1027–1041

Novara M, Batenburg KJ, Scarano F (2010) Motion tracking-enhanced MART for tomographic PIV. Meas Sci Technol 21(3):035401

Perona P, Malik J (1990) Scale-space and edge detection using anisotropic diffusion. IEEE Trans Pattern Anal Mach Intell 12(7):629–639

Petra S, Schröder A, Wieneke B, Schnörr C (2008) On sparsity maximization in tomographic particle image reconstruction. Lecture Notes in Computer Science, vol 5096. Springer, Berlin Heidelberg, Book section 30:294–303

Petra S, Schröder A, Schnörr C (2009) 3D Tomography from few projections in experimental fluid dynamics, notes on numerical fluid mechanics and multidisciplinary design, vol 106. Springer, Berlin Heidelberg, Book section 7:63–72

Petra S, Schnörr C, Becker F, Lenzen F (2013) B-SMART: Bregman-based first-order algorithms for non-negative compressed sensing problems. Lecture Notes in Computer Science, vol 7893. Springer, Berlin Heidelberg, Book section 10:110–124

Raffel M (2007) Particle image velocimetry: a practical guide, 2nd edn. Springer, Berlin

Sage D (2011) Local normalization [online: http://bigwww.epfl.ch/sage/soft/localnormalization/]

Saikrishnan N, Marusic I, Longmire E (2006) Assessment of dual plane PIV measurements in wall turbulence using DNS data. Exp Fluids 41(2):265–278

Scarano F (2002) Iterative image deformation methods in PIV. Meas Sci Technol 13(1):R1–R19

Scarano F (2013) Tomographic PIV: principles and practice. Meas Sci Technol 24(1):012001

Scarano F, Poelma C (2009) Three-dimensional vorticity patterns of cylinder wakes. Exp Fluids 47(1):69–83

Schanz D, Schröder A, Gesemann S, Michaelis D, Wieneke B (2013) ‘Shake The Box’: A highly efficient and accurate tomographic particle tracking velocimetry (TOMO-PTV) method using prediction of particle positions. In: 10th International symposium on particle image velocimetry

Schanz D, Schröder A, Gesemann S (2014) ‘Shake The Box’: a 4D PTV algorithm: accurate and ghostless reconstruction of Lagrangian tracks in densely seeded flows. In: 17th International symposium on applications of laser techniques to fluid mechanics

Schröder A, Geisler R, Elsinga GE, Scarano F, Dierksheide U (2008) Investigation of a turbulent spot and a tripped turbulent boundary layer flow using time-resolved tomographic PIV. Exp Fluids 44:305–316

Soloff SM, Adrian RJ, Liu ZC (1997) Distortion compensation for generalized stereoscopic particle image velocimetry. Meas Sci Technol 8:1441–1454

Thomas L, Tremblais B, David L (2014) Optimization of the volume reconstruction for classical Tomo-PIV algorithms (MART, BIMART and SMART): synthetic and experimental studies. Meas Sci Technol 25(3):035303

Tokgoz S, Elsinga GE, Delfos R, Westerweel J (2012) Spatial resolution and dissipation rate estimation in Taylor–Couette flow for tomographic PIV. Exp Fluids 53(3):561–583

Westerweel J, Scarano F (2005) Universal outlier detection for PIV data. Exp Fluids 39(6):1096–1100

Westerweel J, Elsinga GE, Adrian RJ (2013) Particle image velocimetry for complex and turbulent flows. Annu Rev Fluid Mech 45(1):409–436

Wieneke B (2008) Volume self-calibration for 3D particle image velocimetry. Exp Fluids 45:549–556

Wieneke B (2013) Iterative reconstruction of volumetric particle distribution. Meas Sci Technol 24(2):024008

Worth NA, Nickels TB (2008) Acceleration of Tomo-PIV by estimating the initial volume intensity distribution. Exp Fluids 45(5):847–856

Worth NA, Nickels TB, Swaminathan N (2010) A tomographic PIV resolution study based on homogeneous isotropic turbulence DNS data. Exp Fluids 49(3):637–656

Ye ZJ, Gao Q, Wang HP, Wei RJ, Wang JJ (2015) Dual-basis reconstruction techniques for tomographic PIV. Sci China Technol Sci 58(9):1–8

Zeng GL (2001) Image reconstruction—a tutorial. Comput Med Imaging Graph 25(2):97–103

Acknowledgments

This work is supported by the National Natural Science Foundation of China (11472030, 11327202, 11490552).

Cite this article

Wang, H., Gao, Q., Wei, R. et al. Intensity-enhanced MART for tomographic PIV. Exp Fluids 57, 87 (2016). https://doi.org/10.1007/s00348-016-2176-y

DOI: https://doi.org/10.1007/s00348-016-2176-y