Abstract

Purpose

The aim of the current narrative review was to summarize the available evidence in the literature on artificial intelligence (AI) methods that have been applied during robotic surgery.

Methods

A narrative review of the literature was performed on the MEDLINE/PubMed and Scopus databases on the topics of artificial intelligence, autonomous surgery, machine learning, robotic surgery, and surgical navigation, focusing on articles published between January 2015 and June 2019. All available evidence was analyzed and summarized herein after an interactive peer-review process of the panel.

Literature review

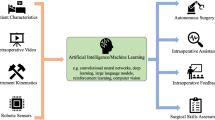

The preliminary results of the implementation of AI in the clinical setting are encouraging. By providing a readout of the full telemetry and a sophisticated viewing console, robot-assisted surgery can be used to study and refine the application of AI in surgical practice. Machine learning approaches strengthen the feedback regarding surgical skills acquisition, efficiency of the surgical process, surgical guidance and prediction of postoperative outcomes. Tension sensors on the robotic arms and the integration of augmented reality methods can help enhance the surgical experience and monitor organ movements.

Conclusions

The use of AI in robotic surgery is expected to have a significant impact on future surgical training as well as enhance the surgical experience during a procedure. Both aim to realize precision surgery and thus to increase the quality of the surgical care. Implementation of AI in master–slave robotic surgery may allow for the careful, step-by-step consideration of autonomous robotic surgery.

Introduction

Minimally invasive robotic surgery is increasingly used during major uro-oncological interventions [1, 2]. Its implementation strengthened the concept of “precision surgery” [3], which represents the next step in the transition towards the most effective treatment with minimal invasiveness for the patient [4]. Current robotic surgery makes use of technologically advanced platforms that adhere to the “master–slave” principle, wherein the surgeon, seated behind a sophisticated console, remotely controls the telemetry of the robot. The resulting synergism between man and machine provides the ability to accurately monitor the robot’s every move and to use this information to refine the feedback to the surgeon by applying artificial intelligence (AI).

Increasing surgical accuracy via robotics comes with increased technical complexity. This means that achieving surgical proficiency requires the development of more advanced training models [5, 6]. In this context, modern, automated and objective approaches for assisting this learning process, such as AI, could play an important role. Conversely, the same technologies may aid proficient surgeons by enhancing their interpretation of the surgical field (e.g., enhancing surgical guidance via augmented reality) [3].

AI systems entail computers that are trained to solve problems by mimicking human cognition. Machine learning (ML) and deep learning (DL) models are subfields of artificial intelligence that allow the computer to make predictions based on underlying data patterns. Evidence suggests that the interaction between medical professionals and ML algorithms improves the decision-making process by decreasing the error rate [7]. For instance, an ML approach using diagnostic and therapeutic information was able to improve lung cancer staging compared to a method based solely on clinical guidelines (accuracy of 93% for ML vs. 72% for the clinical approach) [8]. By allowing for the processing of multiple data sources and clinically valuable feedback, ML has shown higher predictive accuracy than classic statistical methods [9]. Understandably, an AI-induced reduction of the surgical error rate would increase the surgeon’s performance [10]. By actively stimulating the implementation of AI, it may be possible in the short term to reshape the surgical workflow in terms of precision, efficiency, and a personalized approach for each specific patient profile.

The aim of the current narrative review was to summarize the available literature on AI methods used in robotic surgery, both during the training process and applied in clinical care.

Methods

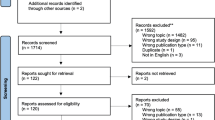

A literature search of the MEDLINE/PubMed and Scopus databases was performed using the following keywords: artificial intelligence, autonomous surgery, machine learning, robotic surgery, and surgical navigation. All relevant English-language original and review studies published between January 2015 and June 2019 were analyzed by three authors (IA, EM, PD) and summarized after an interactive peer-review process of the panel.

Literature review

Although ML algorithms have been successfully implemented in a large number of domains (e.g., economics, engineering, telecommunication, and medical diagnosis), their use in the surgical field is still scarce [11]. Moreover, only a few studies have specifically focused on applying ML in robotic surgery [12,13,14,15,16,17,18]. These analyses reported promising results in terms of developing an objective and automated tool for skills assessment, increasing the efficiency of operating room use and predicting surgical outcomes (Table 1).

AI and surgical skill assessment

The growing public concern regarding surgeon proficiency [12], as well as the widespread implementation of robotic surgery, has led to an increased need for adequate structured training models and objective tools for evaluating clinical competence. Several methods were developed to improve the traditional training modality, as the novel surgical techniques require adapted training for both technical and non-technical skills [19]. Combined use of dry-lab, wet-lab, mentored training, and clinical practice has proven to be effective [20, 21]. These modular training methods allow the acquisition of adequate robotic surgical skills under the supervision of expert surgeons. Despite the crucial importance of assessing trainees’ performances and the presence of structured programs for trainees [6, 22,23,24], subjective assessment, lack of consistency and limited interobserver reproducibility represent important biases [25,26,27]. Therefore, there is a pressing need to develop objective metrics that provide an unbiased evaluation [28].

Using ML algorithms that integrate motion analysis, energy, and force usage, it is possible to obtain an automated, accurate and quantitative skills assessment [12,13,14]. The key step in ML-based skills assessment is to extract and quantify meaningful features of the surgical gestures that can be representative of surgeon dexterity. These tools may be used to provide feedback during the surgeon’s learning curve as well as to ensure periodic surgeon re-evaluation and credentialing [29].

The first experience with ML methods to evaluate robotic surgery skill level was reported by Fard et al. [12] (Table 1). The authors recorded metrics of eight robotic surgeons with varying levels of experience (novices and experts) while each of them performed surgical tasks such as suturing and knot-tying five times. Kinematic data of the robotic arms were extracted and quantified using the following global-movement metrics: task completion time, path length, depth perception, speed, motion smoothness, curvature, turning angle, and tortuosity. Based on these data, the authors demonstrated that the automated ML algorithm differentiated between a novice and an expert surgeon with 90% accuracy just a few seconds after task completion. However, given the small number of participants, future studies are needed to validate this model on a larger cohort.
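To make the global-movement metrics more concrete, the following minimal Python sketch computes three of them (task completion time, path length, and mean speed) from a sampled instrument-tip trajectory. It is an illustration only, not the implementation used by Fard et al.: the function names, the fixed sampling interval `dt`, and the toy trajectory are assumptions introduced here.

```python
import math

def path_length(points):
    """Total distance travelled by the instrument tip (same unit as the input)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def global_metrics(points, dt):
    """Return completion time, path length and mean speed for one trial.

    points: list of (x, y, z) tip positions sampled every dt seconds
    (a uniform sampling interval is an assumption of this sketch).
    """
    if len(points) < 2:
        raise ValueError("need at least two samples")
    duration = dt * (len(points) - 1)
    length = path_length(points)
    return {
        "completion_time": duration,
        "path_length": length,
        "mean_speed": length / duration,
    }

# Hypothetical toy trajectory sampled at 2 Hz: a 5-unit move, then a 1-unit move.
trial = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 1.0)]
metrics = global_metrics(trial, dt=0.5)
print(metrics)
```

In a skills-assessment pipeline, a feature vector of this kind would be computed per trial and passed to a classifier; the remaining metrics (smoothness, curvature, tortuosity) would be added in the same way.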

Similarly, Wang et al. [13] (Table 1) developed a DL model based on an artificial neural network, which represents a more complex ML approach. This model was inspired by the inter-neuronal connections in biological nervous systems, combining different ML algorithms to process data and learn from input information. They considered pattern recognition to be the most important application of DL algorithms. As such, their model was used to recognize motion characteristics during robotic surgery training. Eight surgeons were assessed while performing five trials of different tasks, such as suturing, knot-tying and needle passing. The artificial neural network model showed an accuracy of >90% for robotic surgical skills assessment within 1–3 s. This feedback was provided without the need for gesture segmentation or observation of the entire trial.
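One way to obtain feedback from a few seconds of data, without gesture segmentation, is to cut the kinematic stream into short fixed-length windows and classify each window separately. The sketch below shows only that windowing step, under assumed values; the function name, sampling rate, window length, and step are hypothetical and are not taken from Wang et al.

```python
def sliding_windows(samples, rate_hz, window_s, step_s):
    """Split a time series into overlapping fixed-length windows.

    samples: sequence of kinematic samples (any per-sample representation)
    rate_hz: sampling rate in Hz
    window_s, step_s: window length and step in seconds
    """
    size = int(round(window_s * rate_hz))
    step = int(round(step_s * rate_hz))
    if size <= 0 or step <= 0:
        raise ValueError("window and step must cover at least one sample")
    # Only complete windows are returned; a trailing partial window is dropped.
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]

# Hypothetical stream: 10 samples at 2 Hz, 2 s windows advanced by 1 s.
stream = list(range(10))
windows = sliding_windows(stream, rate_hz=2, window_s=2, step_s=1)
print(len(windows), windows[-1])
```

Each window would then be fed to the trained network, so a skill estimate is available as soon as the first window is complete.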

Ershad et al. [14] (Table 1; Fig. 1) proposed an alternative ML skills assessment, namely the analysis of the surgeon’s “movement style”. The rationale of this approach stems from the fact that an expert surgeon may execute maneuvers with ease and efficacy, in a calm, relaxed, and coordinated fashion compared to a novice surgeon. They obtained kinematic data from 14 surgeons with different levels of experience in robotic surgery, each of whom performed two tasks (ring and rail and suture) three times. Movement tracking was realized by applying three-dimensional electromagnetic tracking of the surgeon’s shoulder, wrist and hand positions during virtual simulator training [14]. Training videos were categorized by crowdsourcing using a number of adjectives that describe the style of behavior. This was subsequently used to train a classifier model. The authors reported a 68.5% increase in skill level classification accuracy compared to raw kinematic data acquisition. One potential advantage of this approach is that it eliminates the need for surgical expertise when interpreting surgical skill level, since it takes into account qualitative motion features (e.g., smooth, calm, and coordinated) that are surgeon-dependent, rather than task-dependent.

AI to overcome the lack of haptic feedback

Although robotic surgery routinely implements 3D visualization of the surgical field and improves the surgeon’s dexterity during procedures, the dissection movements, applied force and assessment of tissue responses are based only on visual cues. The lack of haptic feedback might impair the surgical outcomes through excessive force applied to fragile structures, disruption of anatomical planes and suture breakage. For instance, excessive traction applied on the neuro-vascular bundles during robot-assisted radical prostatectomy (RARP) leads to neuropraxia and a delay in the recovery of sexual function [3]. On the other hand, application of insufficient force during knot-tying can lead to suture failure. In this regard, Dai et al. [16] (Table 1) developed a suture breakage warning system that uses sensors mounted on the robotic instruments and provides vibrotactile feedback when the tension is approaching the suture’s failure point. The authors showed that the use of the haptic feedback system significantly reduced suture breakage by 59%, resulted in 3.8% less knot slippage and yielded higher consistency in repeated tasks among novice surgeons. Furthermore, by practicing with the tactile feedback system, the surgeons showed lower rates of suture breakage (17% for the first 4 knots vs. 2% for the following 6 knots, p < 0.05) [16]. Conversely, this was not observed when surgeons practiced without haptic feedback, where a high rate of suture breakage was reported (20%) [16].
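The principle of warning when the tension approaches the suture’s failure point can be sketched as a simple threshold check on the sensor readings. This is a hedged illustration, not the system of Dai et al.: the function names, the 80% warning margin, and the tension values in newtons are assumptions chosen for the example.

```python
def should_warn(tension_n, failure_tension_n, margin=0.8):
    """True when the measured tension reaches a set fraction of the failure load.

    margin is a hypothetical warning fraction (0.8 = warn at 80% of failure).
    """
    if failure_tension_n <= 0:
        raise ValueError("failure tension must be positive")
    return tension_n >= margin * failure_tension_n

def first_warning_index(samples, failure_tension_n, margin=0.8):
    """Index of the first sample that would trigger feedback, or None."""
    for i, tension in enumerate(samples):
        if should_warn(tension, failure_tension_n, margin):
            return i
    return None

# Hypothetical tension trace (N) for a suture assumed to fail at 10 N.
trace = [1.0, 4.0, 7.5, 8.5, 9.5]
idx = first_warning_index(trace, failure_tension_n=10.0)
print(idx)  # 3: the first reading at or above 8 N
```

In a real device the trigger would drive the vibrotactile actuator, and the failure load would depend on the suture material and size.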

AI and its impact on surgical logistics

Although there is a significant number of robotic systems available worldwide, healthcare systems consider the costs of surgery to be high [2]. Various strategies have been discussed to reduce costs, such as minimizing the instrumentation [30, 31], increasing the case-load or starting multi-specialty robotic programs [32]. All these strategies remain at surgeon or hospital discretion. One of the most important factors affecting surgical costs is the operative time (OT) [33]. A more accurate prediction of OT could help improve logistics, something that is already routinely applied in industry [17, 33].

Zhao et al. [17] (Table 1) showed that an ML approach can also significantly refine the time estimation of robotic procedures. The authors developed a model to predict case duration for robotic surgery including 28 factors related to the patient (e.g., age, obesity, malignancy, tumor location, and comorbidities), to the procedure type, as well as to the robotic system model and the expertise of the table-side assistant (e.g., resident, fellow, assistant, and attending). A total of 424 robotic urological, gynecological, and general surgery procedures were analyzed and used to construct the ML model. The application of this predictive model led to an increase of 16.8% in the accuracy of OT prediction, showing that its implementation may improve daily operative scheduling, with a significant impact on the utilization of operating room resources.

Furthermore, the automatic assessment of surgical skills and kinematic data obtained from the surgeon was shown to have a predictive role for surgical outcomes [34, 35], as hypothesized by Goldenberg et al. [36] for RARP. Hung et al. [15] (Table 1) performed an analysis of automated performance metrics derived from the data of the “dVLogger” (a recording device attached to the robotic system that captures both video and movement data, Intuitive Surgical) of 78 full-length RARP procedures. The analysis included 25 features regarding overall/dominant and non-dominant instrument kinematic data (e.g., travel time, path length, movement, and velocity) and system events (e.g., frequency of clutch use, camera movement, third arm, and energy use), with the purpose of evaluating their accuracy for the prediction of surgical outcomes. An ML model was able to predict length of stay based on automated surgical performance metrics with an accuracy of 87.2%. Furthermore, predicted patient outcomes such as OT and Foley catheter duration were significantly correlated with the actual outcomes (r = 0.73, p < 0.001 for OT; r = 0.45, p < 0.001 for Foley catheter duration). The most important predictor among surgical performance metrics was camera manipulation (e.g., idle time, adjustment frequency and position adjustment), which may act as an indicator of robotic surgical expertise. This study represents a step towards the optimization of logistics, as it may lead, for example, to a cost-effective individualized catheter removal time [37].
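The r values quoted above are correlation coefficients between predicted and observed outcomes. Assuming they are Pearson coefficients (the text does not state the type), the calculation can be sketched as follows; the function name and the example values are hypothetical and do not come from the study.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical predicted vs. observed operative times (minutes).
predicted = [120.0, 150.0, 180.0, 210.0]
observed = [130.0, 140.0, 200.0, 205.0]
r = pearson_r(predicted, observed)
print(round(r, 2))
```

A value near 1 means the predictions rank and scale with the observed outcomes; it does not by itself show that the absolute errors are small.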

AI and prediction of postoperative outcomes

In a recent review, Chen et al. [38] showed that several studies support the superiority of AI systems trained with clinical, pathologic, imaging, and genomic data over D’Amico risk stratification for the prediction of treatment outcome, representing a step towards individualized patient care. Apart from patient-related factors, however, there are also surgeon-related factors that can impact the postoperative outcome of patients. Hung et al. [18] (Table 1) assessed the role of automated performance metrics and DL models for the prediction of urinary continence (UC) in a group of 100 patients who underwent RARP. Urinary continence was defined as the use of no pads during the 2-year follow-up. Combining kinematic data with clinical patient features yielded the highest accuracy in predicting UC recovery at a median of 4 months after RARP (concordance index 59.9%, vs. 57.7% for automated performance metrics alone and 56.2% for clinical features alone). Furthermore, the automated performance metrics measured during apical dissection and vesicourethral anastomosis ranked highest in this dataset, suggesting that surgical technique is more important than patient features for achieving early UC, as previously reported by Goldenberg et al. [36]. In their report, Hung et al. [18] showed that patients operated on by surgeons with more efficient automated performance metrics had a 10.8% and 9.1% higher continence rate at 3 months and 6 months, respectively, compared to patients operated on by surgeons with less efficient metrics.
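The concordance index used to compare these models measures how often a model ranks a pair of patients in the correct order; 50% corresponds to chance and 100% to perfect ranking. The sketch below implements a simplified version of Harrell's C-index for time-to-event data. It is an assumption-laden illustration rather than the authors' code: the function name and the data are hypothetical, the "event" stands for continence recovery, a higher score means earlier predicted recovery, and pairs with tied times are ignored.

```python
def concordance_index(times, events, scores):
    """Simplified Harrell's C-index.

    times:  follow-up time per patient (e.g., months to recovery or censoring)
    events: 1 if recovery was observed, 0 if the patient was censored
    scores: model output, higher = earlier predicted recovery
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # A pair is comparable when patient i recovered before time j.
            if events[i] and times[i] < times[j]:
                comparable += 1
                if scores[i] > scores[j]:
                    concordant += 1.0
                elif scores[i] == scores[j]:
                    concordant += 0.5  # tied predictions count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable

# Hypothetical cohort of four patients; the last one is censored at 12 months.
times = [2, 4, 6, 12]
events = [1, 1, 1, 0]
scores = [0.9, 0.4, 0.6, 0.1]
c = concordance_index(times, events, scores)
print(round(c, 3))  # 0.833: five of six comparable pairs are ranked correctly
```

Read this way, the reported values of 56.2% to 59.9% indicate ranking performance that is only modestly better than chance, which is worth keeping in mind when interpreting the differences between models.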

AI and surgical guidance

Apart from the applications in surgical skills assessment and prediction of outcomes, AI systems can help already proficient surgeons by improving the visualization of the intraoperative anatomy using augmented reality [39]. When an augmented reality overlay, such as a 3D reconstruction of the surgical target, is accurately positioned over the patient anatomy, it enhances the interpretation of the surgical field [40]. Although the preliminary advantages of these systems have been proven, their application in real cases is still experimental due to the lack of registration accuracy in a real-life setting [41, 42]. In this context, ML-based automated registration models can be used to adapt to organ movements, but more advances need to be made in video-based recognition of the surgical field. Furthermore, the automatic identification of the robotic instruments against the background of the visual field can avoid their occlusion by the image overlay and enhance surgical guidance [43]. When ML can compensate for the movement of the organs during surgery and can provide tissue and instrument recognition, a more accurate connection can be made between preoperative 3D imaging data sets and the patient. This will also support the implementation of “GPS-like” surgical navigation strategies [44].

Future perspective: AI for development of autonomous robotic surgery

Recent developments in AI systems and ML models have paved the way for the next generation of surgical robots that can learn and autonomously perform different tasks under human supervision [45]. Autonomous robots have been very successful in industry, but there is still much to be done before such systems can handle the complexity of soft tissues and unforeseen events. Potential applications of autonomous robotic surgery could be procedures that require precise dissection in restricted spaces, thus preventing iatrogenic surgical injuries. For example, procedures in otorhinolaryngology [46], ophthalmology [47], gynaecology [48], orthopaedics [49] and, ideally, in urology can be enhanced by these robots.

The first use of autonomous robots for performing surgical tasks in humans was described on cadavers [50]. Cadaver models are ideal for robot training, as they allow the use of different contrast agents or tracers to guide autonomous dissection without having the issue of toxicity. In real-time surgery, these tracers can help the identification of individual variation of organs and blood vessels, leading to the tailoring of the surgical technique. Furthermore, the ML process can be enhanced by the use of sensors to measure the tool-tissue interaction, potentially leading to tissue recognition [51].

Before autonomous robotic surgery enters clinical practice, performance standards for evaluating it have to be defined. The most important issues to be assessed are adaptation to unforeseen events, accuracy of surgical gestures and repeatability [50]. In this regard, the automation of surgical gestures can overcome the inherent differences between surgeons and can lead to more consistent and predictable results.

Still, there are some ethical and safety issues that arise regarding autonomous robotic surgery. First, a surgical robot cannot be used without patient consent. Second, it is important to assess in what situations the robot has been trained and what it has learned during the training process, to prevent inappropriate information storage that could impair patient safety. Also, the ability to adapt to unforeseen situations or complications is one of the main challenges facing autonomous surgery, as robotic systems will usually choose a random solution to a situation they have not been trained in [50]. As such, the use of more diverse training models can improve the performance of autonomous surgical robots in various situations.

Conclusion

Robotic surgery represents a good setting to refine the application of AI in surgical practice. The preliminary results of its clinical implementation are encouraging. Machine learning approaches make it possible to assess and clinically interpret large volumes of data, providing important feedback regarding surgical skills acquisition, efficiency of surgical procedure planning and prediction of postoperative outcomes. However, standardization of data collection and interpretation, as well as validation on a larger number of subjects, are needed to ensure widespread implementation.

The use of AI in robotic surgery could change surgical training, target definition and surgical guidance, surgical logistics, and outcomes, and may eventually result in the standardization and consideration of autonomous surgery. To allow this, a multidisciplinary collaboration between surgeons, engineers and software developers is mandatory. Ultimately, AI should help advance precision surgery and improve both functional and disease-related outcomes without compromising patient safety.

References

Bachman AG, Parker AA, Shaw MD et al (2017) Minimally invasive versus open approach for cystectomy: trends in the utilization and demographic or clinical predictors using the National Cancer Database. Urology 103:99–105

Mazzone E, Mistretta FA, Knipper S et al (2019) Contemporary North-American assessment of robot-assisted surgery rates and total hospital charges for major surgical uro-oncological procedures. J Endourol 33(6):438–447

Autorino R, Porpiglia F, Dasgupta P et al (2017) Precision surgery and genitourinary cancers. Eur J Surg Oncol 43(5):893–908

Veronesi U, Stafyla V, Luini A, Veronesi P (2012) Breast cancer: from “maximum tolerable” to “minimum effective” treatment. Front Oncol 2:125

Gallagher AG (2018) Proficiency-based progression simulation training for more than an interesting educational experience. J Musculoskelet Surg Res 2:139–141

Satava RM, Stefanidis D, Levy JS et al (2019) Proving the effectiveness of the fundamentals of robotic surgery (FRS) skills curriculum: a single-blinded, multispecialty, multi-institutional randomized control trial. Ann Surg. https://doi.org/10.1097/SLA.0000000000003220

Wang D, Khosla A, Gargeya R et al (2016) Deep learning for identifying metastatic breast cancer. arXiv preprint arXiv:1606.05718

Bergquist S, Brooks G, Keating N et al (2017) Classifying lung cancer severity with ensemble machine learning in health care claims data. Proc Mach Learn Res 68:25–38

Hashimoto DA, Rosman G, Rus D, Meireles OR (2018) Artificial intelligence in surgery: promises and perils. Ann Surg 268(1):70–76

Shouval R, Hadanny A, Shlomo N et al (2017) Machine learning for prediction of 30-day mortality after ST elevation myocardial infraction: an acute coronary syndrome Israeli survey data mining study. Int J Cardiol 246:7–13

Kassahun Y, Yu B, Tibebu AT et al (2016) Surgical robotics beyond enhanced dexterity instrumentation: a survey of machine learning techniques and their role in intelligent and autonomous surgical actions. Int J Comput Assist Radiol Surg 11(4):553–568

Fard MJ, Ameri S, Darin Ellis R et al (2018) Automated robot-assisted surgical skill evaluation: predictive analytics approach. Int J Med Robot 14(1):e1850

Wang Z, Majewicz Fey A (2018) Deep learning with convolutional neural network for objective skill evaluation in robot-assisted surgery. Int J Comput Assist Radiol Surg 13(12):1959–1970

Ershad M, Rege R, Majewicz Fey A (2019) Automatic and near real-time stylistic behavior assessment in robotic surgery. Int J Comput Assist Radiol Surg 14(4):635–643

Hung AJ, Chen J, Che Z et al (2018) Utilizing machine learning and automated performance metrics to evaluate robot-assisted radical prostatectomy performance and predict outcomes. J Endourol 32(5):438–444

Dai Y, Abiri A, Pensa J et al (2019) Biaxial sensing suture breakage warning system for robotic surgery. Biomed Microdevices 21(1):10

Zhao B, Waterman RS, Urman RD, Gabriel RA (2019) A machine learning approach to predicting case duration for robot-assisted surgery. J Med Syst 43(2):32

Hung AJ, Chen J, Ghodoussipour S et al (2019) A deep-learning model using automated performance metrics and clinical features to predict urinary continence recovery after robot-assisted radical prostatectomy. BJU Int. https://doi.org/10.1111/bju.14735

Collins JW, Dell'Oglio P, Hung AJ, Brook NR (2018) The importance of technical and non-technical skills in robotic surgery training. Eur Urol Focus 4(5):674–676

Lovegrove CE, Elhage O, Khan MS et al (2017) Training modalities in robot-assisted urologic surgery: a systematic review. Eur Urol Focus 3(1):102–116

Mazzone E, Dell’Oglio P, Mottrie A (2019) Outcomes report of the first ERUS robotic urology curriculum-trained surgeon in Turkey: the importance of structured and validated training programs for global outcomes improvement. Turk J Urol 45(3):189–190

Mottrie A, Novara G, van der Poel H et al (2016) The European Association of Urology robotic training curriculum: an update. Eur Urol Focus 2(1):105–108

Larcher A, De Naeyer G, Turri F et al (2019) The ERUS curriculum for robot-assisted partial nephrectomy: structure definition and pilot clinical validation. Eur Urol 75(6):1023–1031

Dell’Oglio P, Turri F, Larcher A et al (2019) Definition of a structured training curriculum for robot-assisted radical cystectomy: a Delphi-consensus study led by the ERUS Educational Board. Eur Urol Suppl 18(1):e1116–e1119

Chen J, Cheng N, Cacciamani G et al (2019) Objective assessment of robotic surgical technical skill: a systematic review. J Urol 201(3):461–469

Schout BMA, Hendrikx AJM, Scheele F, Bemelmans BLH, Scherpbier AJJA (2010) Validation and implementation of surgical simulators: a critical review of present, past, and future. Surg Endosc 24(3):536–546

Goldenberg MG, Lee JY, Kwong JCC, Grantcharov TP, Costello A (2018) Implementing assessments of robot-assisted technical skill in urological education: a systematic review and synthesis of the validity evidence. BJU Int 122(3):501–519

Ganni S, Botden SMBI, Chmarra M, Goossens RHM, Jakimowicz JJ (2018) A software-based tool for video motion tracking in the surgical skills assessment landscape. Surg Endosc 32(6):2994–2999

Hung AJ, Chen J, Gill IS (2018) Automated performance metrics and machine learning algorithms to measure surgeon performance and anticipate clinical outcomes in robotic surgery. JAMA Surg 153(8):770–771

Delto JC, Wayne G, Yanes R, Nieder AM, Bhandari A (2015) Reducing robotic prostatectomy costs by minimizing instrumentation. J Endourol 29(5):556–560

Ramirez D, Ganesan V, Nelson RJ, Haber GP (2016) Reducing costs for robotic radical prostatectomy: three-instrument technique. Urology 95:213–215

Basto M, Sathianathen N, Te Marvelde L et al (2016) Patterns-of-care and health economic analysis of robot-assisted radical prostatectomy in the Australian public health system. BJU Int 117(6):930–939

Pandit JJ, Carey A (2006) Estimating the duration of common elective operations: implications for operating list management. Anaesthesia 61:768–776

Birkmeyer J, Finks J, O'Reilly A et al (2013) Surgical skill and complication rates after bariatric surgery. N Engl J Med 369:1434–1442

Beulens AJW, Brinkman WM, Van der Poel HG et al (2019) Linking surgical skills to postoperative outcomes: a Delphi study on the robot-assisted radical prostatectomy. J Robot Surg. https://doi.org/10.1007/s11701-018-00916-9

Goldenberg MG, Goldenberg L, Grantcharov TP (2017) Surgeon performance predicts early continence after robot-assisted radical prostatectomy. J Endourol 31(9):858–863

Atug F, Sanli O, Duru AD (2018) Editorial comment on: utilizing machine learning and automated performance metrics to evaluate robot-assisted radical prostatectomy performance and predict outcomes by Hung et al. J Endourol 32(5):445

Chen J, Remulla D, Nguyen JH et al (2019) Current status of artificial intelligence applications in urology and their potential to influence clinical practice. BJU Int. https://doi.org/10.1111/bju.14852

Navaratnam A, Abdul-Muhsin H, Humphreys M (2018) Updates in urologic robot assisted surgery. F1000Res. https://doi.org/10.12688/f1000research.15480.1

Kong SH, Haouchine N, Soares R et al (2017) Robust augmented reality registration method for localization of solid organs’ tumors using CT-derived virtual biomechanical model and fluorescent fiducials. Surg Endosc 31(7):2863–2871

Bertolo R, Hung A, Porpiglia F et al (2019) Systematic review of augmented reality in urological interventions: the evidences of an impact on surgical outcomes are yet to come. World J Urol. https://doi.org/10.1007/s00345-019-02711-z

van Oosterom MN, van der Poel HG, Navab N, van de Velde CJ, van Leeuwen FW (2018) Computer-assisted surgery: virtual-and augmented-reality displays for navigation during urological interventions. Curr Opin Urol 28(2):205–213

Pakhomov D, Premachandran V, Allan M, Azizian M, Navab N (2017) Deep residual learning for instrument segmentation in robotic surgery. arXiv preprint arXiv:1703.08580

Zhao Y, Guo S, Wang Y et al (2019) A CNN-based prototype method of unstructured surgical state perception and navigation for an endovascular surgery robot. Med Biol Eng Comput. https://doi.org/10.1007/s11517-019-02002-0

O’Sullivan S, Nevejans N, Allen C et al (2019) Legal, regulatory, and ethical frameworks for development of standards in artificial intelligence (AI) and autonomous robotic surgery. Int J Med Robot 15(1):e1968

Fichera L, Dillon NP, Zhang D et al (2017) Through the eustachian tube and beyond: new miniature robotic endoscope to see into the middle ear. IEEE Robot Autom Lett 2(3):1488–1494

Yang S, MacLachlan RA, Martel JN, Lobes LA Jr, Riviere CN (2016) Comparative evaluation of handheld robot-aided intraocular laser surgery. IEEE Trans Robot 32(1):246–251

Fornalik H, Fornalik N, Kincy T (2015) Advanced robotics: removal of a 25 cm pelvic mass. J Minim Invasive Gynecol 22(6S):S154

Tsai TY, Dimitriou D, Li JS, Kwon YM (2016) Does haptic robot-assisted total hip arthroplasty better restore native acetabular and femoral anatomy? Int J Med Robot 12(2):288–295

O’Sullivan S, Leonard S, Holzinger A et al (2019) Anatomy 101 for AI-driven robotics: explanatory, ethical and legal frameworks for development of cadaveric skills training standards in autonomous robotic surgery/autopsy. Int J Med e2020

Chen CH, Suehn T, Illanes A et al (2018) Proximally placed signal acquisition sensoric for robotic tissue tool interactions. Curr Dir Biomed Eng 4(1):67–70

Acknowledgements

This research was conducted with the support of the European Urological Scholarship Programme and an NWO TTW VICI grant (TTW BTG 16141).

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

Protocol/project development: IA, EM, PDO, and AM. Data collection or management: IA, EM, and PDO. Data analysis: IA, EM, and PDO. Manuscript writing: IA, EM, and PDO. Manuscript editing: FWBL, GN, MNO, SB, TB, SOS, PJL, AB, NC, FDH, PS, HDP, and AM.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Andras, I., Mazzone, E., van Leeuwen, F.W.B. et al. Artificial intelligence and robotics: a combination that is changing the operating room. World J Urol 38, 2359–2366 (2020). https://doi.org/10.1007/s00345-019-03037-6
