Abstract

The formation of singularities in finite time in nonlocal Burgers’ equations with a time-fractional derivative is studied in detail. The occurrence of finite-time singularities is proved, the underlying mechanism is revealed, and precise estimates on the blowup time are provided. The use of the present equation to model a problem arising in the job market is also analyzed.


1 Introduction

The study of the occurrence of singularities in nonlinear evolution problems is a source of intriguing questions deeply related to mathematical and physical issues. The basic example of a PDE evolution leading to shock formation is given by the so-called Burgers’ equation [actually introduced by Airy (1845)], which represents a simple model for studying the interaction between nonlinear and dissipative phenomena. Moreover, this equation exhibits the basic nonlinear mechanism shared by the more involved nonlinearities inherent to the Euler and Navier–Stokes equations (Kiselev and Šverák 2014). Exploring a possible scenario for singularity formation in nonlocal evolution problems, and continuing from a different perspective a line of research pursued in Coclite et al. (2019), here we investigate the effect of a nonlocal-in-time modification of Burgers’ equation on the creation of singularities.

Besides their interest from the purely mathematical point of view, nonlocal operators in the time variable find concrete applications in many emerging fields of research, for instance, anomalous transport problems (see Li and Wang 2003), heat flow through ramified media (see Arkhincheev and Baskin 1991) and the theory of viscoelastic fluids [see Section 10.2 in Podlubny (1999) and the references therein]. We will also present here a concrete model from job market analysis which naturally leads to a fractional Burgers’ equation. See also Chapter 1 in Carbotti et al. (2019) for several explicit motivations for fractional derivative problems.

Focusing on the case of inviscid fluid mechanics, we recall that for the classical Burgers’ equation explicit examples show the possible formation of singularities in finite time, see Bressan (2000). In particular, an initial datum with unit slope leads to a singularity at unit time.

The goal of this paper is to study whether a similar phenomenon persists in nonlocal Burgers’ equations with a time-fractional derivative. That is, we investigate how a memory effect in the equation affects the singularity formation.

Our main results are the following:

The memory effect does not prevent singularity formation.

For initial data with unit slope, the blowup time can be explicitly estimated from above, uniformly with respect to the memory effect (namely, it is not possible to slow down the singularity formation indefinitely using memory effects alone).

Explicit lower bounds on the blowup time are also available.

The precise mathematical setting in which we work is the following. First of all, to describe memory effects, we use the left Caputo derivative of order \(\alpha \in (0,1)\) with initial time \(t_0\) for \(t\in (t_0,+\infty )\), defined by

\[
{}^C\!D^\alpha_{t_0,+}u(t):=\frac{1}{\Gamma(1-\alpha)}\int_{t_0}^{t}\frac{\dot u(\tau)}{(t-\tau)^{\alpha}}\,d\tau, \tag{1.1}
\]

where \(\Gamma \) is the Euler Gamma function.
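As a rough numerical illustration of (1.1), which is not part of the arguments of this paper, the Caputo derivative with \(t_0=0\) can be approximated by the classical L1 scheme: u is replaced by its piecewise-linear interpolant on a uniform grid, and the kernel \((t-\tau)^{-\alpha}\) is integrated exactly on each cell. The function name `caputo_l1` is our own.

```python
import math
from typing import Callable


def caputo_l1(u: Callable[[float], float], t: float, alpha: float, n: int) -> float:
    """L1 approximation of the left Caputo derivative (1.1), t0 = 0, at time t > 0."""
    h = t / n
    total = 0.0
    for j in range(n):
        # slope of the piecewise-linear interpolant on the cell [j h, (j + 1) h]
        slope = (u((j + 1) * h) - u(j * h)) / h
        # (1 - alpha) times the exact integral of (t - tau)^(-alpha) over that cell;
        # integer offsets avoid a tiny negative base at the endpoint tau = t
        weight = h ** (1 - alpha) * ((n - j) ** (1 - alpha) - (n - j - 1) ** (1 - alpha))
        total += slope * weight
    # Gamma(1 - alpha) * (1 - alpha) = Gamma(2 - alpha)
    return total / math.gamma(2 - alpha)


alpha = 0.5
print(caputo_l1(lambda s: s, 1.0, alpha, 1000), 1 / math.gamma(2 - alpha))
print(caputo_l1(lambda s: s * s, 1.0, alpha, 1000), 2 / math.gamma(3 - alpha))
```

For \(u(t)=t\) the weights telescope, so the scheme reproduces \({}^C\!D^\alpha_{0,+}t=t^{1-\alpha}/\Gamma(2-\alpha)\) up to rounding; for smooth nonlinear u, such as \(u(t)=t^2\), the L1 scheme is accurate to order \(h^{2-\alpha}\).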

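In this framework, we consider the time-fractional Burgers’ equation driven by the left Caputo derivative, given by

\[
{}^C\!D^\alpha_{0,+}u(x,t)+u(x,t)\,\partial_x u(x,t)=0,\qquad x\in\mathbb{R},\ t>0. \tag{1.2}
\]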

In the recent literature, various fractional versions of the classical Burgers’ equation have been considered from different perspectives, see, e.g., Miškinis (2002), Inc (2008), Harris and Garra (2013), Wu and Baleanu (2013), Esen and Tasbozan (2016), Saad et al. (2017), Yokuş and Kaya (2017) and the references therein. (In this paper, we also propose a simple motivation for Eq. (1.2) in Sect. 5.) When \(\alpha =1\), Eq. (1.2) reduces to the classical inviscid Burgers’ equation

\[
\partial_t u+u\,\partial_x u=0. \tag{1.3}
\]

Remark 1.1

We notice that examples of solutions to the classical Burgers’ equation exhibiting instantaneous and spontaneous formation of singularities also work in the present case. The aim of our study thus lies in understanding, through quantitative estimates, how the finite-time creation of singularities is affected by the presence of a fractional-in-time derivative.

We prove that the time-fractional Burgers’ equation driven by the left Caputo derivative may develop singularities in finite time, according to the following result:

Theorem 1.2

There exist a time \(T_\star >0\), a function \(u_0\in C^\infty ({\mathbb {R}})\), a solution \(u:{\mathbb {R}}\times [0,T_\star )\rightarrow {\mathbb {R}}\) of the time-fractional Burgers’ equation in (1.2) and a sequence \(t_n\nearrow T_\star \) as \(n\rightarrow +\infty \) such that

Remark 1.3

The function u in Theorem 1.2 will be constructed (see Note 1) using the method of separation of variables, by taking

\[
u(x,t):=-x\,v(t), \tag{1.4}
\]

where v is the solution of the time-fractional equation

\[
\begin{cases}
{}^C\!D^\alpha_{0,+}v(t)=v^2(t), & t>0,\\
v(0)=1.
\end{cases} \tag{1.5}
\]

Interestingly, this strategy is compatible with the classical case \(\alpha =1\). Indeed, for \(\alpha =1\), (1.5) boils down to

\[
\begin{cases}
\dot v(t)=v^2(t), & t>0,\\
v(0)=1,
\end{cases} \tag{1.6}
\]

and it can be checked directly that (1.6) possesses the explicit singular solution

\[
v(t)=\frac{1}{1-t}. \tag{1.7}
\]

When \(\alpha =1\), the function in (1.7) can be used, by separation of variables, to construct a singular solution of the classical Burgers’ equation (1.3), by considering the function

\[
u(x,t):=-\frac{x}{1-t}. \tag{1.8}
\]

Indeed, the function in (1.8) solves (1.3) and diverges at time 1. In this sense, both the fractional and the classical cases allow the construction of singular solutions by multiplying a singular solution in t by \(-x\) (the singular solution in t solving Eq. (1.6) in the classical case and Eq. (1.5) in the fractional case).

We also point out that in the classical case the blowup time \(T_\star \) of (1.8) is exactly 1: In this sense, we will see that our fractional construction in Theorem 1.2 recovers the classical case in the limit \(\alpha \nearrow 1\) also in terms of blowup time, as will be discussed in Remarks 1.5 and 1.7.
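The blowup mechanism behind (1.8) can be seen through characteristics: for (1.3) the solution is constant along the straight lines \(x(t)=x_0+u_0(x_0)\,t\), and with \(u_0(x)=-x\) all of them meet at \(x=0\) when \(t=1\). The Python sketch below (helper names are our own) checks this numerically and evaluates a finite-difference residual of (1.3) for the function (1.8).

```python
def u_classical(x: float, t: float) -> float:
    """Explicit singular solution (1.8) of the inviscid Burgers' equation (1.3), for t < 1."""
    return -x / (1.0 - t)


def characteristic(x0: float, t: float) -> float:
    """Characteristic of (1.3) issued from x0 when u0(x) = -x.

    u is constant along characteristics, so they are straight lines
    x(t) = x0 + u0(x0) t = x0 (1 - t).
    """
    return x0 * (1.0 - t)


def burgers_residual(x: float, t: float, h: float = 1e-6) -> float:
    """Central-difference approximation of u_t + u u_x for the function (1.8)."""
    u_t = (u_classical(x, t + h) - u_classical(x, t - h)) / (2.0 * h)
    u_x = (u_classical(x + h, t) - u_classical(x - h, t)) / (2.0 * h)
    return u_t + u_classical(x, t) * u_x


# Every characteristic reaches x = 0 at t = 1, where the slope -1/(1 - t) blows up.
for x0 in (-2.0, -1.0, 0.5, 3.0):
    print(x0, characteristic(x0, 0.9), characteristic(x0, 1.0))
```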

We also observe that it is possible to give an explicit upper bound on the blowup time for the fractional solution (1.4) in Theorem 1.2, as detailed in the following result:

Theorem 1.4

If \(T_\star \) is the blowup time found in Theorem 1.2, we have that

\[
T_\star\leqslant\big(\Gamma(2-\alpha)\big)^{-1/\alpha}. \tag{1.9}
\]

In particular, for all \(\alpha \in (0,1)\),

\[
T_\star\leqslant e^{1-\gamma}, \tag{1.10}
\]

where \(\gamma \) is the Euler–Mascheroni constant.

Remark 1.5

One can compare the general estimate in (1.10), valid for all \(\alpha \in (0,1)\), with the blowup time for the classical solution in (1.8), in which \(T_\star =1\). Indeed, we point out that the right-hand side of (1.9) approaches 1 as \(\alpha \nearrow 1\). Hence, in view of Remark 1.3, we have that the bound in (1.9) is optimal when \(\alpha \nearrow 1\).
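The bound (1.9) can be compared with a crude numerical approximation of the blowup time of the solution of (1.5). The Python sketch below is our own and is not taken from the paper: it discretizes the equivalent Volterra form \(v(t)=1+\frac{1}{\Gamma(\alpha)}\int_0^t (t-\tau)^{\alpha-1}v^2(\tau)\,d\tau\) with an explicit product-rectangle rule and uses the arbitrary threshold \(v>10^6\) as a proxy for divergence. Since \(v^2\) is frozen at the left end of each cell, the scheme tends to underestimate v, and hence to slightly overestimate the blowup time.

```python
import math


def blowup_time(alpha: float, h: float = 1e-3, t_max: float = 5.0,
                threshold: float = 1e6) -> float:
    """Rough estimate of the blowup time of (1.5), for 0 < alpha <= 1.

    Product-rectangle rule for v(t) = 1 + (1/Gamma(alpha)) int_0^t (t - s)^(alpha - 1) v(s)^2 ds:
    v^2 is frozen at the left end of each cell and the kernel is integrated exactly.
    Returns the first grid time at which v exceeds `threshold`, or math.inf.
    """
    n_max = int(round(t_max / h))
    scale = h ** alpha / math.gamma(alpha + 1)
    # weights[k]: kernel integral over the cell lying k cells before the current time
    weights = [scale * ((k + 1) ** alpha - k ** alpha) for k in range(n_max)]
    f_vals = [1.0]  # f_vals[j] = v(t_j)^2, starting from v(0) = 1
    for n in range(1, n_max + 1):
        v_n = 1.0 + sum(weights[n - 1 - j] * f_vals[j] for j in range(n))
        if v_n > threshold:
            return n * h
        f_vals.append(v_n * v_n)
    return math.inf


def upper_bound(alpha: float) -> float:
    """Right-hand side of (1.9): Gamma(2 - alpha)^(-1/alpha)."""
    return math.gamma(2 - alpha) ** (-1.0 / alpha)


for a in (0.5, 0.9, 1.0):
    print(a, blowup_time(a), upper_bound(a))
```

For \(\alpha=1\) the scheme reduces to the explicit Euler method for (1.6), whose numerical blowup time is close to the exact value 1.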

It is also possible to obtain a lower bound on the blowup time involving the right-hand side in (1.9), up to a remainder which is arbitrarily small as \(\alpha \nearrow 1\). Indeed, we have the following result:

Theorem 1.6

If \(T_\star \) is the blowup time found in Theorem 1.2, we have that for any \(\delta >0\) there exists \(c_\delta >0\) such that

Remark 1.7

We observe that the right-hand side of (1.11) approaches \(1/(1+\delta )\) as \(\alpha \nearrow 1\), which, for small \(\delta \), recovers the unit blowup time of the classical solution in (1.8).

The strategy to prove Theorems 1.4 and 1.6 relies on the construction of appropriate barriers and a comparison result: To this end, a careful choice of structural parameters is needed (and, of course, this choice plays a crucial role in the bounds of the blowup times detected in this note).

Remark 1.8

Of course, the blowup time estimates in Theorems 1.4 and 1.6 are specific to the singular solution in (1.4), and other singular solutions have, in general, different blowup times. As a matter of fact, by scaling, if u is a solution of (1.2), then so is \(u^{(\lambda )}(x,t):=u(\lambda ^\alpha x,\lambda t)\), for all \(\lambda >0\), with initial datum \(u^{(\lambda )}_0(x):=u_0(\lambda ^\alpha x)\). In particular, if u is the function in (1.4) and \(T_\star \) is its blowup time, then the blowup time of \(u^{(\lambda )}\) is \(T_\star /\lambda \). That is, when \(\lambda \in (1,+\infty )\), the slope of the initial datum increases and accordingly the blowup time becomes smaller. This is why, in (1.4), we normalize the slope of the initial datum to have unit absolute value.
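The scaling in Remark 1.8 rests on the homogeneity of the Caputo derivative, \({}^C\!D^\alpha_{0,+}[u(\lambda\,\cdot)](t)=\lambda^\alpha\,({}^C\!D^\alpha_{0,+}u)(\lambda t)\), while the dilation \(x\mapsto\lambda^\alpha x\) produces the same factor \(\lambda^\alpha\) in \(u\,\partial_x u\). A minimal Python check of the first identity on power functions \(t^k\), using the standard formula \({}^C\!D^\alpha_{0,+}t^k=\frac{\Gamma(k+1)}{\Gamma(k+1-\alpha)}\,t^{k-\alpha}\) for \(k>0\), is sketched below; it is a sanity check, not a proof, and the function names are our own.

```python
import math
from typing import Tuple


def caputo_power(k: float, alpha: float, t: float) -> float:
    """Exact left Caputo derivative (t0 = 0) of t -> t^k, valid for k > 0 and t > 0."""
    return math.gamma(k + 1) / math.gamma(k + 1 - alpha) * t ** (k - alpha)


def scaling_sides(k: float, alpha: float, lam: float, t: float) -> Tuple[float, float]:
    """Both sides of D^alpha[u(lam .)](t) = lam^alpha (D^alpha u)(lam t) for u(t) = t^k."""
    lhs = lam ** k * caputo_power(k, alpha, t)  # u(lam t) = lam^k t^k
    rhs = lam ** alpha * caputo_power(k, alpha, lam * t)
    return lhs, rhs


print(scaling_sides(2.0, 0.5, 3.0, 0.7))
```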

Remark 1.9

It is interesting to observe the specific effect of the Caputo derivative on the solutions in simple and explicit examples. From our perspective, though the Caputo derivative is commonly viewed as modeling a “memory” effect, the system does distinguish between a short-term memory effect, which enhances the role of the forcing terms, and a long-term memory effect, which is more inclined to recall past configurations.

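To understand our point of view on this phenomenon, one can consider, for \(\alpha \in (0,1)\), the solution \(u=u(t)\) of the linear equation

\[
\begin{cases}
{}^C\!D^\alpha_{0,+}u(t)=\displaystyle\sum_{i=1}^{N}\delta_{p_i}(t), & t>0,\\
u(0)=0,
\end{cases} \tag{1.12}
\]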

where \(0<p_1<\dots <p_N\) and \(\delta _p\) is the Dirac delta at the point \(p\in {\mathbb {R}}\).

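When \(\alpha =1\), Eq. (1.12) reduces to the ordinary differential equation with impulsive forcing term given by

\[
\begin{cases}
\dot u(t)=\displaystyle\sum_{i=1}^{N}\delta_{p_i}(t), & t>0,\\
u(0)=0.
\end{cases} \tag{1.13}
\]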

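Up to negligible sets, the solution of (1.13) is the step function

\[
u(t)=\sum_{i=1}^{N}\chi_{(p_i,+\infty)}(t), \tag{1.14}
\]

where \(\chi _A\) denotes the characteristic function of the set A.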

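On the other hand, Eq. (1.12) is a Volterra-type problem whose explicit solution is given by

\[
u(t)=\frac{1}{\Gamma(\alpha)}\sum_{i=1}^{N}\frac{\chi_{(p_i,+\infty)}(t)}{(t-p_i)^{1-\alpha}}. \tag{1.15}
\]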

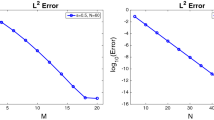

Notice that the solution in (1.15) recovers (1.14) as \(\alpha \nearrow 1\). Nevertheless, the sharp geometric difference between the solutions in (1.14) and (1.15) is apparent (see Fig. 1).

Indeed, while the classical solutions experience a unit jump at the times where the impulses take place, the structure of the fractional solutions exhibits a more complicated, and “less monotone,” behavior. More specifically, on the one hand, for fractional solutions, the short-term memory effect of each impulse is to create a singularity toward infinity, and in this sense its impact on the solution is much stronger than in the classical case. On the other hand, the solution in (1.15) approaches zero outside the times in which the impulses occur, thus tending to recover the initial datum in view of a long-term memory effect.

Fig. 1 Plot of the solutions in (1.14) and (1.15) with the following parameters: \(N=4\), \(p_1=1\), \(p_2=2\), \(p_3=3\) and \(p_4=4\). The different plots correspond to the cases \(\alpha =\frac{1}{10}\), \(\alpha =\frac{1}{4}\), \(\alpha =\frac{1}{2}\), \(\alpha =\frac{3}{4}\), \(\alpha =\frac{7}{8}\), \(\alpha =\frac{9}{10}\), \(\alpha =\frac{99}{100}\) and \(\alpha =1\).
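The curves in Fig. 1 can be reproduced directly from (1.14) and (1.15). The Python sketch below (function names are our own; plotting is left out) evaluates both formulas with the parameters of Fig. 1, showing the spikes right after each impulse, the decay away from the impulses, and the recovery of the step function as \(\alpha\nearrow 1\).

```python
import math
from typing import Sequence


def step_solution(t: float, impulses: Sequence[float]) -> float:
    """Formula (1.14): number of impulse times p_i lying before t."""
    return float(sum(1 for p in impulses if t > p))


def fractional_solution(t: float, impulses: Sequence[float], alpha: float) -> float:
    """Formula (1.15): sum over p_i < t of (t - p_i)^(alpha - 1), divided by Gamma(alpha)."""
    return sum((t - p) ** (alpha - 1) for p in impulses if t > p) / math.gamma(alpha)


P = (1.0, 2.0, 3.0, 4.0)  # impulse times used in Fig. 1
times = (0.5, 1.5, 2.5, 4.5, 10.0)
for alpha in (0.1, 0.5, 0.99):
    print(alpha, [round(fractional_solution(t, P, alpha), 3) for t in times])
print([step_solution(t, P) for t in times])
```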

It is interesting to recall that monotonicity, comparison principles and blowup analysis for fractional equations have also been considered in the two works by Feng et al. (2018). See also Li and Liu (2018) for compactness criteria, Allen et al. (2016, 2017) for time-fractional equations of porous medium type and Dipierro et al. (2020) for the analysis of the fundamental solutions of time-fractional equations.

The paper is organized as follows: Sects. 2, 3 and 4 are devoted to the proofs of Theorems 1.2, 1.4 and 1.6, respectively. In Sect. 5, we propose a job market motivation for Eq. (1.2).

2 Proof of Theorem 1.2

The proof of Theorem 1.2 relies on a separation of variables method (as will be apparent from the definition of the solution u in (2.14) at the end of this proof). To make this method work, one needs a careful analysis of the solutions of time-fractional equations, which we now discuss in detail. Fixing \(M\in {\mathbb {N}}\cap [ 4,+\infty )\), for any \(r\in {\mathbb {R}}\) we define \(f_M(r):=\min \{ r^2,M^2\}\). We let \(v_M\) be the solution of the Cauchy problem

\[
\begin{cases}
{}^C\!D^\alpha_{0,+}v_M(t)=f_M\big(v_M(t)\big), & t>0,\\
v_M(0)=1.
\end{cases} \tag{2.1}
\]

The existence and uniqueness of the solution \(v_M\), which is continuous up to \(t=0\), is guaranteed by Theorem 2 on page 304 of Kilbas and Marzan (2004). In addition, by Theorem 1 on page 300 of Kilbas and Marzan (2004), we know that this solution can be represented in integral form by the relation

\[
v_M(t)=1+\frac{1}{\Gamma(\alpha)}\int_0^t\frac{f_M\big(v_M(\tau)\big)}{(t-\tau)^{1-\alpha}}\,d\tau. \tag{2.2}
\]

In particular, since \(f_M\geqslant 0\), we have that \(v_M\geqslant 1\). Also, by continuity at \(t=0\), there exists \(\delta >0\) such that

We claim that

Indeed, if \(t\in (0,\delta )\) and \(M\geqslant 4\), we have that

thanks to (2.3), and therefore \({}^C \! D^\alpha _{0,+} v_4(t) = f_M(v_4(t))\) for all \(t\in (0,\delta )\). Then, the uniqueness of the solution of the Cauchy problem in (2.1) gives (2.4), as desired.

Furthermore, we observe that if \(M_2\geqslant M_1\), then \(f_{M_2}\geqslant f_{M_1}\), and hence

Consequently, by the Comparison Principle (see Note 2) in Theorem 4.10 on page 2894 in Li and Liu (2018), we conclude that \(v_{M_2}\geqslant v_{M_1}\). Therefore, for every \(t\geqslant 0\), we can define

By (2.4), we know that

and hence we can consider the largest \(T_\star \in (0,+\infty )\cup \{+\infty \}\) such that

By (2.6), we have that \(T_\star \geqslant \delta \). We claim that

To prove this, we let \(T_0\in (0,T_\star )\) and we exploit (2.7) to see that

and hence, for every \(t\in (0,T_0)\) and every \(M\geqslant M_0\),

This gives that \({}^C \! D^\alpha _{0,+} v_{M_0}(t)=f_{M_0}(v_{M_0}(t)) =f_M(v_{M_0}(t))\) for all \(t\in (0,T_0)\) and \(M\geqslant M_0\), and therefore, by the uniqueness of the solution of the Cauchy problem in (2.1), we find that \(v_M=v_{M_0}\) in \((0,T_0)\). This and (2.5) give that

As a consequence, recalling (2.2), we obtain that, for all \(t\in (0,T_0)\), the function v satisfies the integral relation

and thus, by Theorem 1 in Kilbas and Marzan (2004), we obtain (2.8), as desired.
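The truncation argument can be mimicked numerically. The sketch below is our own and discretizes the integral form (2.2) with an explicit product-rectangle rule (the paper works only with exact solutions). It illustrates three facts used above: truncated solutions with different \(M\geqslant 4\) coincide as long as they stay below 4, since then \(f_M(v)=v^2\); they are ordered in M; and each \(v_M\) stays below \(1+M^2t^\alpha/\Gamma(1+\alpha)\) because \(f_M\leqslant M^2\), so the truncated problems cannot blow up.

```python
import math
from typing import List


def solve_truncated(alpha: float, M: float, h: float, n_steps: int) -> List[float]:
    """Explicit product-rectangle sketch of (2.2) with f_M(r) = min(r^2, M^2).

    Returns approximations of v_M at the grid times t_n = n h, n = 0, ..., n_steps.
    """
    scale = h ** alpha / math.gamma(alpha + 1)
    # exact integrals of (t_n - s)^(alpha - 1) / Gamma(alpha) over the cells before t_n
    weights = [scale * ((k + 1) ** alpha - k ** alpha) for k in range(n_steps)]
    values = [1.0]  # v_M(0) = 1
    for n in range(1, n_steps + 1):
        acc = sum(weights[n - 1 - j] * min(values[j] ** 2, M * M) for j in range(n))
        values.append(1.0 + acc)
    return values


h, n = 1e-3, 400
v4 = solve_truncated(0.5, 4, h, n)
v8 = solve_truncated(0.5, 8, h, n)
print(v4[10] == v8[10], v4[-1], v8[-1])
```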

Now we claim that

To this end, we argue by contradiction and assume that \(T_\star =+\infty \). We let \(\lambda \geqslant 2\), \(T>0\) (which will be taken as large as we wish in what follows), and

We know [see Lemmata 1 and 2 in Furati and Kirane (2008)] that

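We also recall the left Riemann–Liouville derivative of order \(\alpha \in (0,1)\) with initial time \(t_0\) for \(t\in (t_0,+\infty )\), given by

\[
{}^{RL}\!D^\alpha_{t_0,+}u(t):=\frac{1}{\Gamma(1-\alpha)}\,\frac{d}{dt}\int_{t_0}^{t}\frac{u(\tau)}{(t-\tau)^{\alpha}}\,d\tau,
\]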

and we point out that

This and (2.8) give that

where \(w(t):=v(t)-1\).

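It is also useful to consider the right Riemann–Liouville derivative of order \(\alpha \in (0,1)\) with final time \(t_0\) for \(t\in (-\infty ,t_0)\), given by

\[
{}^{RL}\!D^\alpha_{t_0,-}u(t):=-\frac{1}{\Gamma(1-\alpha)}\,\frac{d}{dt}\int_{t}^{t_0}\frac{u(\tau)}{(\tau-t)^{\alpha}}\,d\tau.
\]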

Integrating by parts [see Corollary 2 on page 46 of Samko et al. (1993), or formula (15) in Kirane and Malik (2010)], and recalling (2.11), we obtain that

From this and (2.10), we find that

for some \(C_1>0\) independent of T.

Furthermore,

thanks to (2.10), for some \(C_2>0\) independent of T. As a consequence, recalling (2.12), we conclude that

Therefore, recalling that \(v\geqslant 1\) in view of (2.5),

and accordingly

which is a contradiction, thus completing the proof of (2.9).

Then, from (2.7) and (2.9), we obtain that

Hence, we consider a sequence \(t_n\nearrow T_\star \) such that

and we define

For every \(t\in (0,T_\star )\), we have that

thanks to (2.8), and also \(u(x,0)=-x\,v(0)=-x\). These observations and (2.13) prove Theorem 1.2.

3 Proof of Theorem 1.4

We set

\[
b=b(\alpha):=\big(\Gamma(2-\alpha)\big)^{-1/\alpha}. \tag{3.1}
\]

This choice of b makes a suitable barrier satisfy a convenient inequality, with a precise determination of the coefficients involved, allowing us to use an appropriate comparison result, as will be apparent in formula (3.2). This strategy will lead to an upper bound on the blowup time, thus proving the desired claim in (1.9). From this, we will obtain the uniform bound in (1.10) by detecting suitable monotonicity properties of the map \(\alpha \mapsto b(\alpha )\) in light of polygamma functions. The technical details are as follows.

For any \(t\in (0,b)\), let also

\[
w(t):=\frac{b}{b-t}.
\]

Notice that \(w(0)=1\). Moreover, for any \(t\in (0,b)\) and any \(\tau \in (0,t)\), we have that

\[
\dot w(\tau)=\frac{b}{(b-\tau)^2}\leqslant\frac{b}{(b-t)^2}.
\]

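Consequently, by (1.1), for all \(t\in (0,b)\),

\[
\begin{aligned}
{}^C\!D^\alpha_{0,+}w(t)&\leqslant\frac{b}{\Gamma(1-\alpha)\,(b-t)^2}\int_0^t\frac{d\tau}{(t-\tau)^{\alpha}}=\frac{b\,t^{1-\alpha}}{\Gamma(2-\alpha)\,(b-t)^2}\\
&\leqslant\frac{b^{2-\alpha}}{\Gamma(2-\alpha)\,(b-t)^2}=\frac{b^2}{(b-t)^2}=w^2(t).
\end{aligned} \tag{3.2}
\]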

Therefore, using the Comparison Principle in Theorem 4.10 on page 2894 in Li and Liu (2018), if v is as in (2.8), we find that \(v\geqslant w\) in their common domain of definition. This, (2.14), and the fact that w diverges at \(t=b\) yield that

\[
T_\star\leqslant b, \tag{3.3}
\]

which, together with (3.1), establishes (1.9), as desired.
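Inequality (3.2) can be spot-checked numerically. The sketch below is our own; it assumes \(b=\big(\Gamma(2-\alpha)\big)^{-1/\alpha}\) and the barrier \(w(t)=b/(b-t)\), evaluates the Caputo derivative of w by the midpoint rule after the substitution \(s=(t-\tau)^{1-\alpha}\) (which removes the weak singularity of the kernel), and compares it with \(w^2\) at a few times in \((0,b)\).

```python
import math


def caputo_barrier(t: float, b: float, alpha: float, n: int = 4000) -> float:
    """Caputo derivative (1.1), t0 = 0, of w(tau) = b / (b - tau), for 0 < t < b.

    With s = (t - tau)^(1 - alpha) one has (t - tau)^(-alpha) d tau = ds / (1 - alpha),
    so the integrand becomes smooth and the midpoint rule can be used.
    """
    upper = t ** (1 - alpha)
    ds = upper / n
    total = 0.0
    for i in range(n):
        s = (i + 0.5) * ds
        tau = t - s ** (1.0 / (1 - alpha))
        total += b / (b - tau) ** 2  # w'(tau)
    return total * ds / ((1 - alpha) * math.gamma(1 - alpha))


for alpha in (0.3, 0.5, 0.8):
    b = math.gamma(2 - alpha) ** (-1.0 / alpha)
    worst = max(caputo_barrier(k * b / 10, b, alpha) / (b / (b - k * b / 10)) ** 2
                for k in range(1, 10))
    print(alpha, worst)  # (3.2) predicts a ratio below 1
```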

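Now we prove (1.10). For this, we first show that the map \((0,1)\ni \alpha \mapsto b(\alpha )\) that was introduced in (3.1) is monotone. To this end, we recall the polygamma functions for \(\tau \in (1,2)\) and \(n\in {\mathbb {N}}\) with their integral representations, namely

\[
\psi^{(0)}(\tau):=\frac{d}{d\tau}\ln\Gamma(\tau),\qquad \psi^{(n)}(\tau):=\frac{d^n}{d\tau^n}\psi^{(0)}(\tau)=(-1)^{n+1}\int_0^{+\infty}\frac{s^n\,e^{-\tau s}}{1-e^{-s}}\,ds\quad (n\geqslant 1).
\]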

We observe, in particular, that, for all \(\tau \in (1,2)\),

\[
\psi^{(1)}(\tau)=\int_0^{+\infty}\frac{s\,e^{-\tau s}}{1-e^{-s}}\,ds>0. \tag{3.4}
\]

Let also, for all \(\tau \in (1,2)\),

\[
g(\tau):=(2-\tau)\,\psi^{(0)}(\tau)+\ln\Gamma(\tau).
\]

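We see that

\[
g'(\tau)=-\psi^{(0)}(\tau)+(2-\tau)\,\psi^{(1)}(\tau)+\psi^{(0)}(\tau)=(2-\tau)\,\psi^{(1)}(\tau)>0
\]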

thanks to (3.4) and therefore, for all \(\tau \in (1,2)\),

\[
g(\tau)<\lim_{\sigma\nearrow 2}g(\sigma)=\ln\Gamma(2)=0. \tag{3.5}
\]

Now we define

\[
\lambda(\tau):=\frac{\ln\Gamma(\tau)}{2-\tau},\qquad \tau\in(1,2).
\]

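We have that, for all \(\tau \in (1,2)\),

\[
\lambda'(\tau)=\frac{(2-\tau)\,\psi^{(0)}(\tau)+\ln\Gamma(\tau)}{(2-\tau)^2}=\frac{g(\tau)}{(2-\tau)^2}<0,
\]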

due to (3.5).

Therefore, the function \((1,2)\ni \tau \mapsto \lambda (\tau )\) is decreasing, and hence so is the function \((1,2)\ni \tau \mapsto e^{\lambda (\tau )}=:\Lambda (\tau )\). Hence, using the substitution \(\tau :=2-\alpha \), with \(\alpha \in (0,1)\), we deduce that the following function is increasing:

\[
(0,1)\ni\alpha\mapsto\Lambda(2-\alpha)=\big(\Gamma(2-\alpha)\big)^{1/\alpha}=\frac{1}{b(\alpha)},
\]

thanks to (3.1).

Consequently, the function \((0,1)\ni \alpha \mapsto b(\alpha )\) is decreasing; hence, its supremum over \((0,1)\) is its limit as \(\alpha \searrow 0\). This and (3.3) give that

\[
T_\star\leqslant\lim_{\alpha\searrow 0}b(\alpha).
\]

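Furthermore, using L’Hôpital’s Rule,

\[
\lim_{\alpha\searrow 0}\ln b(\alpha)=-\lim_{\alpha\searrow 0}\frac{\ln\Gamma(2-\alpha)}{\alpha}=\lim_{\alpha\searrow 0}\psi^{(0)}(2-\alpha)=\psi^{(0)}(2)=1-\gamma,
\]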

where \(\gamma \) is the Euler–Mascheroni constant, and therefore

\[
T_\star\leqslant e^{1-\gamma},
\]

which is (1.10).

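The two facts used at the end of this proof, namely that \(\alpha\mapsto b(\alpha)\) is decreasing and that \(b(\alpha)\to e^{1-\gamma}\approx 1.526\) as \(\alpha\searrow 0\) (while \(b(\alpha)\to 1\) as \(\alpha\nearrow 1\)), are easy to check numerically. The Python sketch below assumes \(b(\alpha)=\big(\Gamma(2-\alpha)\big)^{-1/\alpha}\) as in (3.1) and computes it through `math.lgamma`, which keeps good accuracy when \(\ln\Gamma(2-\alpha)\) and \(\alpha\) are both small; the function name is our own.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant (not provided by math)


def barrier_parameter(alpha: float) -> float:
    """The parameter (3.1), Gamma(2 - alpha)^(-1/alpha), computed through log-gamma."""
    return math.exp(-math.lgamma(2.0 - alpha) / alpha)


grid = [k / 100 for k in range(1, 100)]
values = [barrier_parameter(a) for a in grid]
print(all(x > y for x, y in zip(values, values[1:])))  # decreasing in alpha
print(barrier_parameter(1e-6), math.exp(1 - EULER_GAMMA))  # behaviour as alpha -> 0
print(barrier_parameter(1 - 1e-9))  # behaviour as alpha -> 1
```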
4 Proof of Theorem 1.6

To establish Theorem 1.6, we will introduce a suitable barrier [defined in formula (4.6)] that we exploit in combination with a comparison result to obtain lower bounds on the blowup time and prove the desired claim in (1.11). The construction of this auxiliary barrier relies on a careful choice of some parameters that need to be chosen in an algebraically convenient way [for instance, to satisfy the inequality in formula (4.8)]. The computational details of this proof are as follows.

Let \(\delta >0\) be as in the statement of Theorem 1.6, and

We define

Let also

In light of (4.2), we remark that

Recalling (4.1), we can also define

and then (4.3) becomes

which coincides with the right-hand side of (1.11).

Therefore, to complete the proof of Theorem 1.6, it is enough to show that

\[
T_\star\geqslant T. \tag{4.5}
\]

To this end, for all \(t\in (0,T)\), we define

Notice that \(z(0)=1\). Moreover, for all \(t\in (0,T)\) and \(\tau \in (0,t)\) we have that

Hence, since

we see from (4.7) that

and therefore

As a consequence, we conclude that

Accordingly, by (1.1), for all \(t\in (0,T)\),

Consequently, using the fact that z is increasing,

Then, recalling (2.8) and exploiting the Comparison Principle in Theorem 4.10 on page 2894 in Li and Liu (2018), we obtain that \(v\leqslant z\) in their common domain of definition. In particular, this gives (4.5), and so the proof of Theorem 1.6 is complete.

5 A Motivation for (1.2) from the Job Market

In this section, we give a simple, but concrete, motivation for the time-fractional Burgers’ equation in (1.2) by modeling an idealized job market from a few basic principles. The discussion that we present here is a modification of classical models proposed for fluid dynamics and for traffic flow on a highway.

We fix parameters \(\delta \), \(\varepsilon >0\), and we use the real line to describe the positions available in a company that workers can decide to join. More specifically, the working levels in the company are denoted by \(x\in \varepsilon {\mathbb {Z}}\), and the higher the value of x, the higher and more appealing the position (e.g., \(x=\varepsilon \) corresponds to Lieutenant, \(x=2\varepsilon \) to Major, \(x=3\varepsilon \) to Brigadier, \(x=4\varepsilon \) to General, etc.).

We suppose that the main motivation for a worker to join the company by taking the position \(x\in \varepsilon {\mathbb {Z}}\) at time \(t\in \delta {\mathbb {N}}\) is provided by the possibility of career progression toward the next level. If we denote by \(\rho \) the number of people employed in a given position at a given time, and by v the velocity of career progression relative to a given position at a given time, the “group velocity” of career progression for a given position at a given time is given by the product \(p:=\rho v\).

We suppose that a potential worker who is considering entering the company at the level \(x\in \varepsilon {\mathbb {Z}}\) will look at the value of p for her or his prospective position and compare it with the value of p relative to the subsequent level \(x+\varepsilon \); in this model, this comparison constitutes the main drive for the worker to join the company. At time \(t\in \delta {\mathbb {N}}\), this driving force is therefore quantified by

for a normalizing constant \({\hat{c}}>0\). Then, we assume that the potential worker bases her or his decision not only on the driving force at the present time, but also on the past history of the company. Past events will be weighted by a kernel \({{\mathcal {K}}}\), to make the information coming from remote times less important than that relative to the contemporary situation. For concreteness, we suppose that the information coming from the time \(t-\tau \), with \(t=\delta N\), \(N\in {\mathbb {N}}\), and \(\tau \in \{\delta ,2\delta ,\dots , \delta N\}\), is weighted by the kernel

If all the potential workers argue in this way, the number of workers at time \(t=\delta N\) in the working position \(x\in \varepsilon {\mathbb {Z}}\) of the company is given by the initial number of workers, incremented by the effect of the drive function in the history of the company, according to the memory effect that we have described, that is

for some normalizing constant \(c>0\). Hence, exploiting (5.2),

Using the Riemann sum approximation of an integral, for small \(\delta \) we can substitute the summation in the right-hand side of (5.3) with an integral, and, with this asymptotic procedure, we replace (5.3) with

Then, we define \(\alpha :=1-\beta \in (0,1)\) and, up to a timescale, we choose \(c:=1/\Gamma (\alpha )\). In this way, we can write (5.4) as

or, equivalently [see, e.g., Theorem 1 on page 300 of Kilbas and Marzan (2004)],

Thus, recalling (5.1) (and using the normalization \({\hat{c}}:=1/\varepsilon \)),

and then, in the approximation of \(\varepsilon \) small,

Now we make the ansatz that the career velocity is mainly influenced by the number of people in a given position, namely that this velocity is proportional to the “vacancies” in a given working level. If \(\rho _{\max }\in (0,+\infty )\) is the maximal number of workers that the market allows in any given position, we therefore assume that

\[
v(x,t)={\tilde{c}}\,\big(\rho_{\max}-\rho(x,t)\big), \tag{5.6}
\]

for a normalizing constant \({\tilde{c}}>0\). Of course, in more complicated models, one can allow \(\rho _{\max }\) and \({\tilde{c}}\) to vary in space and time, but we will take them to be constant to address the simplest possible case, and in fact, for simplicity, up to scalings, we take \(\rho _{\max }=1\) and \({\tilde{c}}=1\).

Then, plugging (5.6) into (5.5), we obtain

Now we perform the substitution

and we thereby conclude that

which corresponds to (1.2).
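The passage from (5.3) to (5.4) replaces a discrete memory sum by an integral. The sketch below is only an illustration: it assumes, as suggested by the later choice \(\alpha:=1-\beta\), a power-law kernel \({\mathcal K}(\tau)=\tau^{-\beta}\) with \(\beta\in(0,1)\), and a constant unit drive; the function names are our own. Because this kernel is decreasing, the right-endpoint Riemann sum stays below the integral and converges to it as \(\delta\to 0\), despite the singularity of the kernel at \(\tau=0\).

```python
def memory_sum(t: float, delta: float, beta: float) -> float:
    """delta * sum_{j=1}^{N} K(j delta), with t = N delta, for the assumed
    kernel K(tau) = tau^(-beta) and a constant unit drive (hypothetical)."""
    n = int(round(t / delta))
    return delta * sum((j * delta) ** (-beta) for j in range(1, n + 1))


def memory_integral(t: float, beta: float) -> float:
    """Limit of memory_sum as delta -> 0: the integral of tau^(-beta) over (0, t)."""
    return t ** (1 - beta) / (1 - beta)


for delta in (1e-2, 1e-3, 1e-4):
    print(delta, memory_sum(1.0, delta, 0.5), memory_integral(1.0, 0.5))
```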

We remark that in the model above, one can also interpret \(\rho \in {\mathbb {R}}\) when it takes negative values, e.g., as a position vacancy. As a matter of fact, since the driving force of Eq. (5.7) can be written as \((1-2\rho )\,\partial _x\rho \), we observe that such a drive becomes “stronger” for negative values of \(\rho \) (that is, vacancies in the job market tend to increase the number of filled positions).

It is also interesting to interpret the result in Theorem 1.2 in light of the motivation discussed here, recalling the setting in (5.8). Indeed, the value 1/2 for the working force \(\rho \) plays a special role in our framework, since it corresponds not only to the average between the null working force and the maximal one allowed by the market, but also, and most importantly, to the critical point of the concave function \(\rho (1-\rho )\), whose derivative in x is the driving force of Eq. (5.7).

In this spirit, recalling (1.4), we have that the solution found in Theorem 1.2 takes the form

for a function v which diverges in finite time. The expression in (5.9) says that the role corresponding to the job position \(x=0\) has, at the initial time, exactly the critical working force \(\rho =1/2\). Given the linear structure in x of the solution in (5.9), the job position corresponding to \(x=0\) will maintain its critical value \(\rho =1/2\) for all times, while higher-level job roles will experience a dramatic loss in the number of available positions (and, correspondingly, lower-level job roles a dramatic increase). Though it is of course unrealistic that the job market really attains an (either positive or negative) infinite value in finite time, and the model presented in Eq. (5.7) must necessarily “break” for too large values of \(\rho \) (which, in practice, cannot exceed the total working population), we think that solutions such as (5.9) may represent a concrete case in which the market would in principle allow arbitrarily high-level job positions, but in practice (almost) all the workers end up obtaining a position level below a certain threshold (in this case normalized to \(x=0\)), which constitutes a “de facto” optimal role allowed by the evolution of special preexisting conditions.

Notes

1. The notion of solution in Theorem 1.2 is such that, for all \(x\in {\mathbb {R}}\), the map \([0,T_\star )\ni t\mapsto u(x,t)\) is continuous and belongs to \(C^{0,\alpha }((0,T_\star ))\), the latter being the space defined, e.g., in formula (3.1) of Kilbas and Marzan (2004). In particular, the Caputo derivative of u is well defined for all \(t\in (0,T_\star )\). Furthermore, for all \(t\in (0,T_\star )\), the map \({\mathbb {R}}\ni x\mapsto u(x,t)\) is smooth, so that the classical derivative in space is well defined too.

2. We observe that we cannot use here the Comparison Principle in Lemma 2.6 and Remark 2.1 on pages 219–220 in Vergara and Zacher (2015), since the monotonicity of the nonlinearity goes in the opposite direction.

References

Airy, G.B.: Tides and Waves: Extracted from the Encyclopaedia Metropolitana, vol. 5. William Clowes and Sons, London (1845)

Allen, M., Caffarelli, L., Vasseur, A.: A parabolic problem with a fractional time derivative. Arch. Ration. Mech. Anal. 221(2), 603–630 (2016). https://doi.org/10.1007/s00205-016-0969-z

Allen, M., Caffarelli, L., Vasseur, A.: Porous medium flow with both a fractional potential pressure and fractional time derivative. Chin. Ann. Math. Ser. B 38(1), 45–82 (2017)

Arkhincheev, V.E., Baskin, É.M.: Anomalous diffusion and drift in a comb model of percolation clusters. J. Exp. Theor. Phys. 73, 161–165 (1991)

Bressan, A.: Hyperbolic Systems of Conservation Laws. The One-Dimensional Cauchy Problem. Oxford Lecture Series in Mathematics and its Applications, vol. 20. Oxford University Press, Oxford (2000)

Carbotti, A., Dipierro, S., Valdinoci, E.: Local Density of Solutions to Fractional Equations. De Gruyter Studies in Mathematics, vol. 74. De Gruyter, Berlin (2019)

Coclite, G.M., Dipierro, S., Maddalena, F., Valdinoci, E.: Wellposedness of a nonlinear peridynamic model. Nonlinearity 32(1), 1–21 (2019). https://doi.org/10.1088/1361-6544/aae71b

Dipierro, S., Pellacci, B., Valdinoci, E., Verzini, G.: Time-fractional equations with reaction terms: fundamental solutions and asymptotics. Discrete Contin. Dyn. Syst. (2020). https://arxiv.org/pdf/1903.11939.pdf

Esen, A., Tasbozan, O.: Numerical solution of time fractional Burgers equation by cubic B-spline finite elements. Mediterr. J. Math. 13(3), 1325–1337 (2016). https://doi.org/10.1007/s00009-015-0555-x

Feng, Y., Li, L., Liu, J.-G., Xu, X.: A note on one-dimensional time fractional ODEs. Appl. Math. Lett. 83, 87–94 (2018). https://doi.org/10.1016/j.aml.2018.03.015

Feng, Y., Li, L., Liu, J.-G., Xu, X.: Continuous and discrete one dimensional autonomous fractional ODEs. Discrete Contin. Dyn. Syst. Ser. B 23(8), 3109–3135 (2018). https://doi.org/10.3934/dcdsb.2017210

Furati, K.M., Kirane, M.: Necessary conditions for the existence of global solutions to systems of fractional differential equations. Fract. Calc. Appl. Anal. 11(3), 281–298 (2008)

Harris, P.A., Garra, R.: Analytic solution of nonlinear fractional Burgers-type equation by invariant subspace method. Nonlinear Stud. 20(4), 471–481 (2013)

Inc, M.: The approximate and exact solutions of the space- and time-fractional Burgers equations with initial conditions by variational iteration method. J. Math. Anal. Appl. 345(1), 476–484 (2008). https://doi.org/10.1016/j.jmaa.2008.04.007

Kilbas, A.A., Marzan, S.A.: Cauchy problem for differential equation with Caputo derivative. Fract. Calc. Appl. Anal. 7(3), 297–321 (2004)

Kirane, M., Malik, S.A.: The profile of blowing-up solutions to a nonlinear system of fractional differential equations. Nonlinear Anal. 73(12), 3723–3736 (2010). https://doi.org/10.1016/j.na.2010.06.088

Kiselev, A., Šverák, V.: Small scale creation for solutions of the incompressible two-dimensional Euler equation. Ann. Math. (2) 180(3), 1205–1220 (2014). https://doi.org/10.4007/annals.2014.180.3.9

Li, L., Liu, J.-G.: Some compactness criteria for weak solutions of time fractional PDEs. SIAM J. Math. Anal. 50(4), 3963–3995 (2018). https://doi.org/10.1137/17M1145549

Li, L., Liu, J.-G.: A generalized definition of Caputo derivatives and its application to fractional ODEs. SIAM J. Math. Anal. 50(3), 2867–2900 (2018). https://doi.org/10.1137/17M1160318

Li, B., Wang, J.: Anomalous heat conduction and anomalous diffusion in one-dimensional systems. Phys. Rev. Lett. 91, 044301 (2003). https://doi.org/10.1103/PhysRevLett.91.044301

Miškinis, P.: Some properties of fractional Burgers equation. Math. Model. Anal. 7(1), 151–158 (2002). https://doi.org/10.1080/13926292.2002.9637187

Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications. Mathematics in Science and Engineering, vol. 198. Academic Press, San Diego (1999)

Saad, K.M., Al-Sharif, E.H.F.: Analytical study for time and time-space fractional Burgers’ equation. Adv. Differ. Equ. (2017). https://doi.org/10.1186/s13662-017-1358-0

Samko, S.G., Kilbas, A.A., Marichev, O.I.: Fractional Integrals and Derivatives: Theory and Applications. Edited and with a foreword by S.M. Nikol’skiĭ. Translated from the 1987 Russian original. Revised by the authors. Gordon and Breach Science Publishers, Yverdon (1993). https://books.google.fr/books?id=SO3FQgAACAAJ

Vergara, V., Zacher, R.: Optimal decay estimates for time-fractional and other nonlocal subdiffusion equations via energy methods. SIAM J. Math. Anal. 47(1), 210–239 (2015). https://doi.org/10.1137/130941900

Wu, G.-C., Baleanu, D.: Variational iteration method for the Burgers’ flow with fractional derivatives-new Lagrange multipliers. Appl. Math. Model. 37(9), 6183–6190 (2013). https://doi.org/10.1016/j.apm.2012.12.018

Yokuş, A., Kaya, D.: Numerical and exact solutions for time fractional Burgers’ equation. J. Nonlinear Sci. Appl. 10(7), 3419–3428 (2017). https://doi.org/10.22436/jnsa.010.07.06


Additional information

Communicated by Dr. Alain Goriely.


The authors are members of the Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni (GNAMPA) of the Istituto Nazionale di Alta Matematica (INdAM). SD and EV are supported by the Australian Research Council Discovery Project grant “Nonlocal Equations at Work” (NEW). SV is supported by the DECRA Project “Partial differential equations, free boundaries and applications”.


Cite this article

Coclite, G.M., Dipierro, S., Maddalena, F. et al. Singularity Formation in Fractional Burgers’ Equations. J Nonlinear Sci 30, 1285–1305 (2020). https://doi.org/10.1007/s00332-020-09608-x
