Abstract

Objectives

Artificial intelligence (AI) has the potential to impact clinical practice and healthcare delivery. AI is of particular significance in radiology due to its use in automatic analysis of image characteristics. This scoping review examines stakeholder perspectives on AI use in radiology, including the benefits, risks, and challenges of its integration.

Methods

A search was conducted from 1960 to November 2019 in EMBASE, PubMed/MEDLINE, Web of Science, Cochrane Library, CINAHL, and grey literature. Publications reflecting stakeholder attitudes toward AI were included with no restrictions.

Results

Commentaries (n = 32), surveys (n = 13), presentation abstracts (n = 8), narrative reviews (n = 8), and a social media study (n = 1) were included from 62 eligible publications. These represent the views of radiologists, surgeons, medical students, patients, computer scientists, and the general public. Seven themes were identified (predicted impact, potential replacement, trust in AI, knowledge of AI, education, economic considerations, and medicolegal implications). Stakeholders anticipate a significant impact on radiology, though replacement of radiologists is unlikely in the near future. Knowledge of AI is limited for non-computer scientists and further education is desired. Many expressed the need for collaboration between radiologists and AI specialists to successfully improve patient care.

Conclusions

Stakeholders generally believe that AI can improve the practice of radiology and consider the replacement of radiologists unlikely. Most stakeholders identified the need for education and training on AI, as well as collaborative efforts to improve AI implementation. Further research is needed to gain perspectives from non-Western countries and non-radiologist stakeholders, and to address economic considerations and medicolegal implications.

Key Points

- Stakeholders generally expressed that AI alone cannot be used to replace radiologists. The scope of practice is expected to shift with AI use affecting areas from image interpretation to patient care.

- Patients and the general public do not know how to address potential errors made by AI systems while radiologists believe that they should be “in-the-loop” in terms of responsibility. Ethical accountability strategies must be developed across governance levels.

- Students, residents, and radiologists believe that there is a lack of AI education during medical school and residency. The radiology community should work with IT specialists to ensure that AI technology benefits their work and centres patients.

Introduction

Artificial intelligence (AI) has seen increased public attention and industrial application in recent years [1,2,3,4,5,6,7]. Its ability to analyse complex data makes it particularly suited to automated interpretation in diagnostic imaging. Several collaborative programmes between medical institutions and data companies exist to establish reliable AI-based diagnostic algorithms [8,9,10].

Reception of such programmes has been mixed. While most believe that AI enhances the accuracy, efficiency, and accessibility of medical imaging, the role of the radiologist in this future remains uncertain [11, 12]. Attitudes of physicians vary, ranging from envisioning an AI-dominated practice to optimism about a broadened scope of practice [13, 14].

AI refers to systems designed to execute tasks that traditionally require a human agent [15, 16]. Machine learning (ML) refers to computer algorithms applied in AI that are capable of automatic learning and data extrapolation [17,18,19]. ML can incorporate different learning algorithms, of which artificial neural networks (ANN) and deep learning (DL) are the best known. ANN are collections of artificial neurons that analyse inputs and assign suitable weights to predict an outcome [18,19,20,21]. DL uses multi-level ANN for nonlinear data processing [22]. Experts predict that the coming decades will see DL enter mainstream medical imaging [23, 24]. Although these definitions are understood by AI researchers, how clinicians or patients understand these terms and their implications remains unclear.
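To make the ANN and DL definitions above concrete, the following is a minimal sketch of a forward pass through a small multi-layer network. It is illustrative only: the weights, biases, and input features are hand-picked hypothetical values, the sigmoid activation is an assumption, and no training step is shown. It does not represent any system discussed in the included publications.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of inputs passed through a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weight_rows, biases):
    """A layer is a collection of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def forward(inputs, layers):
    """Stacking layers gives the multi-level, nonlinear processing described for DL."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer(activations, weight_rows, biases)
    return activations


# Hypothetical network: 3 input features -> 2 hidden neurons -> 1 output neuron.
# In practice the weights would be learned from labelled training images.
network = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer
    ([[1.2, -0.7]], [0.05]),                              # output layer
]

features = [0.9, 0.1, 0.4]  # hypothetical image-derived features
prediction = forward(features, network)[0]
print(f"predicted probability: {prediction:.3f}")
```

The output is a value between 0 and 1 that could be read as the predicted probability of a finding; changing the weights changes the prediction, which is what learning adjusts.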

There is an increasing body of publications regarding attitudes toward the impact of AI in diagnostic imaging. A comprehensive review of stakeholders’ perspectives has yet to be performed. We undertook a scoping review to systematically search the literature, identify relevant stakeholders, and categorise their views on the use of AI in radiology.

Methods

Study design

The protocol was registered (DOI: 10.17605/OSF.IO/AXDPE). Based on the methodology outlined by Arksey and O’Malley (2005) and Levac et al (2010), this scoping review consisted of six stages [25, 26].

Stage 1: Formulating the research question

Following a preliminary exploration of published literature, the following research questions were identified:

- To what extent is AI expected to influence radiology practice?

- What are stakeholders’ views on the use of AI in radiology?

- What challenges and advantages arise with AI use in radiology?

Stage 2: Identifying relevant studies

Database selection

Publications were identified from EMBASE, PubMed/MEDLINE, Web of Science, Cochrane Library, and Cumulative Index to Nursing and Allied Health Literature (CINAHL). Grey literature was searched using the Canadian Agency for Drugs and Technologies in Health (CADTH) Grey Literature Checklist, OpenGrey, and Google Scholar [27].

Search strategy

The search strategy was drafted in consultation with a research librarian and covered the period from 1960, when “artificial intelligence” first appeared in the literature, to November 2019 (Supplement S1).

Eligibility criteria

Published studies and grey literature of any design, language, region, and timeframe including commentaries, abstracts, and reviews were eligible.

Stage 3: Study selection

Two levels of screening were conducted: (1) title and abstract review, and (2) full-text review. For level one screening, two reviewers (C.E., L.Y.) independently screened titles and abstracts for full-text review based on the inclusion criteria. In level two screening, full-text studies underwent independent review, including reference list searches. Relevant studies were included if perspectives on AI use in radiology were described. The PRISMA flow diagram tracking progress is shown in Figure 1 [28].

Stage 4: Charting the data

Data from all included articles were independently extracted using a standardised form. Characteristics charted included bibliographical information (i.e. authors, titles, dates, and journals), objective, study type, participant demographic, AI definitions, and attitudes toward AI.

Stages 5 and 6: Collection, summary, and consultation

The PRISMA-ScR guided the collection, interpretation, and communication of results [28]. Following the recommendations by Levac et al (2010), thematic analysis consisted of (1) analysing the data, (2) reporting results, and (3) applying meaning to the results [25]. A spreadsheet was generated with article characteristics and conclusions. Extracted text was grouped by stakeholder, issues discussed, and expressed views. Themes were subsequently identified. Following the initial groupings by C.E. and L.Y., methodological experts (R.A., P.S.) and radiologists (D.K., N.S.) reviewed the data to provide additional input and confirm interpretations. Several iterations were undertaken to ensure accuracy and consistency.
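The grouping step described above (extracted text grouped by stakeholder, issue, and expressed view) can be sketched as follows. This is an assumed illustration, not the authors' actual spreadsheet: the field names, the helper functions `group_views` and `coverage_gaps`, and the four example rows are hypothetical, with the rows paraphrased from findings reported later in this review.

```python
from collections import defaultdict

# Hypothetical charting rows; field names are assumptions for illustration.
rows = [
    {"ref": 31, "stakeholder": "medical students", "theme": "knowledge of AI",
     "view": "overestimated their competency in AI"},
    {"ref": 57, "stakeholder": "radiologists", "theme": "economic considerations",
     "view": "higher costs without higher productivity"},
    {"ref": 58, "stakeholder": "patients", "theme": "predicted impact",
     "view": "AI will positively affect efficiency"},
    {"ref": 54, "stakeholder": "general public", "theme": "predicted impact",
     "view": "no negative impacts anticipated"},
]


def group_views(rows):
    """Group extracted views by theme, then by stakeholder."""
    grouped = defaultdict(lambda: defaultdict(list))
    for row in rows:
        grouped[row["theme"]][row["stakeholder"]].append((row["ref"], row["view"]))
    return grouped


def coverage_gaps(grouped, stakeholders):
    """List, per theme, the stakeholder groups with no extracted view."""
    return {
        theme: sorted(set(stakeholders) - set(by_stakeholder))
        for theme, by_stakeholder in grouped.items()
    }


grouped = group_views(rows)
gaps = coverage_gaps(
    grouped, ["radiologists", "medical students", "patients", "general public"]
)
for theme, missing in gaps.items():
    print(f"{theme}: no views from {', '.join(missing)}")
```

Keeping the reference number alongside each view makes it possible to trace a theme back to its sources, and the gap listing mirrors the way unrepresented stakeholder groups are reported under each theme.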

Results

Characteristics of all included publications

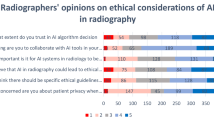

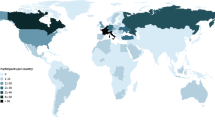

Sixty-two publications were included from the 3282 screened (Figure 1). These represented radiologists (n = 52, 7 surveys), medical students (n = 7, 4 surveys), the general public (n = 4, 1 survey), patients (n = 3, 2 surveys), computer scientists (n = 3, 1 survey), and surgeons (n = 1, 1 survey); many studies assessed more than one stakeholder group. Table 1 shows characteristics for surveys and Table S3 (online supplement) shows characteristics of non-surveys. Most publications were commentaries and editorials (n = 39) and a minority (n = 13) were surveys. The majority of publications (n = 50) represented North American and European views (Figure 2). The majority (81%) of eligible publications were dated after 2018, indicating a surge of interest in AI (Figure 4) (Table 2).
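As a quick check on the proportions above, the short sketch below recomputes them from the counts stated in this paragraph. The variable names and the `pct` helper are illustrative assumptions; only the counts come from the text.

```python
# Counts taken from the paragraph above.
included, screened = 62, 3282
western = 50

# Publications per stakeholder group; a publication can cover several groups.
stakeholder_counts = {
    "radiologists": 52,
    "medical students": 7,
    "general public": 4,
    "patients": 3,
    "computer scientists": 3,
    "surgeons": 1,
}


def pct(part, whole):
    """Percentage of `whole` represented by `part`, rounded to one decimal."""
    return round(100 * part / whole, 1)


inclusion_rate = pct(included, screened)        # share of screened records included
western_share = pct(western, included)          # share with North American/European views
group_mentions = sum(stakeholder_counts.values())

print(f"included: {inclusion_rate}% of screened records")
print(f"North American or European views: {western_share}% of included publications")
print(f"stakeholder group mentions: {group_mentions} across {included} publications")
```

The stakeholder counts sum to more than the number of included publications, which is consistent with the statement that many studies assessed more than one stakeholder group.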

Definition of AI in all included publications

A working definition of AI was found in 52% (n = 33) of studies. These definitions fell into one or more of three broad categories, defining AI (1) by its sub-concepts, ML, DL, and ANN [14,15,16, 29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51]; (2) with a technical description of its mechanisms [14,15,16, 33, 35, 37, 38, 40,41,42,43,44,45,46,47, 49,50,51,52,53,54,55,56,57,58]; and (3) via its current and future applications [30,31,32, 34, 39, 59]. Figure 3 is a weighted word map showing the dominant vocabulary used. A greater percentage of commentaries, narrative reviews, and social media studies explicitly defined AI compared to surveys and presentation abstracts.

Generally, AI was understood as the use of pattern-identifying computational algorithms extrapolating from training sets to make predictions, i.e. machines performing problem-solving tasks typically delegated to humans [14, 24, 33, 35, 37, 38, 40, 42, 49, 50, 52, 54,55,56, 58]. Commonly cited applications in radiology included quantitative feature extraction, computer-aided classification and detection, image reconstruction, segmentation, and natural language processing [30, 32, 34, 52, 59].

Analysis of themes expressing views on AI in radiology

Following content analysis, seven themes were identified: (1) predicted impact of AI on radiology, (2) potential for radiologist replacement, (3) trust in AI, (4) knowledge of AI, (5) education on AI, (6) economic considerations, and (7) medicolegal implications. Results were consistent across publications (online Tables S4 and S5) [81,82,83,84,85,86,87,88,89,90]. Because the included surveys have larger sample sizes, we have summarised them separately (Tables 3 and 4) and prioritise them in our results. Findings from non-survey studies are explicitly identified. Themes captured in surveys are shown in Table 2.

(1) Predicted impact of AI on radiology

While this theme did not include surgeons’ views, all remaining stakeholders—radiologists, medical students, patients, computer scientists, and the general public—were generally optimistic. Radiologists and residents consistently expressed that AI will have a significant, positive impact in daily practice [29, 59,60,61]. Most believe that practical changes will occur in the next 10–20 years [30, 60, 61]. The majority would still choose this specialty if revisiting the choice [29, 30, 59, 62], citing interests in advancing technology [29, 62]. Senior radiologists have greater confidence in the future of the specialty than trainees [59]. Radiologists emphasise that avoiding AI is not feasible, and most medical students agree that radiologists should embrace AI and work with the industry; this is reflected in both surveys [31, 72] and commentaries [39, 63]. Computer scientists had a higher estimate of AI’s impact on radiology than radiologists, with half predicting dramatic changes in the next 5–10 years [30]. Medical students believe that the impact of AI will be largely positive [31, 72]. Positions with procedural training are seen as having greater job security compared to diagnostic radiology, as AI is expected to automate image interpretation [62, 73]. Patients believe that AI will positively affect efficiency [58], and no negative impacts were anticipated by the general public in a search of Twitter opinions [54].

(2) Potential for radiologist replacement and (3) Trust

None of the stakeholder groups foresees total replacement of radiologists, and none trusts AI to make decisions independently. Radiologists and medical students expect AI to act as a “co-pilot” [30,31,32, 60]. Surgeons also expressed skepticism that AI can make clinical decisions alone, and are ambivalent about the endangerment of diagnostic radiology [62]. Radiologists do not expect their diagnostic roles to be replaced due to AI’s lack of general intelligence and human traits [29, 30, 59, 62]; they do expect that clinicians who embrace AI will replace those who do not. They anticipate that AI will shift their focus from repetitive tasks to activities involving research, teaching, and patient interaction [32]. Some radiologists expressed that the use of AI opens the possibility for other specialties to assume radiological tasks, and they anticipate a fall in job demand [32, 60, 62]. Medical students anticipate a similar reduction in radiologists needed, but most believe that such “turf losses” are unlikely [31]. Medical students also expressed both worry about replacement and excitement about the use of AI in radiology [62, 72, 73]. Although uncertain about changes to workload, most radiologists are optimistic about job satisfaction and salary, a sentiment echoed by computer scientists [30, 32, 60]. Computer scientists did not predict replacement of radiologists in the next 5 years, and few predicted obsolescence in the next 10–20 years [30]. Notably, views presented at an international symposium indicated that computer scientists who worked in medical imaging are more skeptical about AI replacing radiologists [75].

Similarly, patients lack trust in machine diagnoses, prefer personal interactions, and anticipate a lack of emotional support from AI [72]. Given equal ability, patients prefer human physicians [72]. However, if computers can perform better and more holistic assessments to predict future diseases, patients prefer AI [72]. An editorial suggests that the public is generally uncomfortable with technology without a human in command [68]. The majority surveyed felt that technology could not entirely replace radiologists [76], a view consistent with perspectives expressed in commentary [54].

(4) Knowledge of AI and (5) Education

Radiologists, medical students, and patients in surveys expressed a lack of knowledge on AI. Although most radiologists are aware of the prominence of AI in radiology, they report limited knowledge and training [29, 30, 32, 59]. They expressed interest in ongoing research and felt that AI should be taught in medical training [29, 30, 59, 60]. Radiologists pointed to their role in AI development for medical imaging, especially in task definition, providing labelled images, and developing applications [32]. Residents are especially enthusiastic to learn about technological advancement [29, 32]. Education can increase interest, as tech-savvy respondents are more likely to find AI and ML exciting for radiology [29].

Medical students surveyed overestimated their competency in AI [31]. Only half were aware that AI is a major topic in radiology and a third had basic knowledge [72]. Most agreed that there is a need for training in AI during medical school [31, 72]. Increased year level, exposure to AI, and obtaining knowledge from literature and radiologists decreased pessimism toward field prospects [31].

Although patients believe AI to be a useful checking tool, they report having limited knowledge and express uncertainty about how AI will affect workflow [74].

As expected, computer scientists have the most knowledge of and exposure to AI [30]. Although this survey did not discuss education [30], one commentary emphasises collaboration between radiologists and AI experts to ensure the clinical relevance of AI technologies [68].

There were no surgeon or public views on knowledge or education in the included articles.

(6) Economic considerations

While none of the surveys evaluated radiologists’ views on economic implications, this theme was explored in commentaries with mixed opinions [35, 57, 64,65,66]. Some radiologists cited an increase in costs associated with computer-aided detection systems without an increase in productivity [57]. Conversely, others suggested that AI may reduce burnout and cost while increasing care quality [35, 64]. Another urged that although AI may be more cost-effective, progress must be driven by patient impact instead of financial considerations [55]. A commentary anticipates that hospitals, especially in publicly funded systems, may hesitate to invest in technology lacking rigorous testing, and may lack the network infrastructure to run these programmes [50].

Surgeons’, medical students’, patients’, and computer scientists’ views on economic considerations were not addressed in any of the included publications.

(7) Medicolegal implications

Both surveys [32, 62] and commentaries indicated that regulation, accountability, and ethical issues present barriers to AI implementation [14,15,16, 38, 40, 41, 44, 46, 47, 50, 55, 57, 65, 67,68,69,70,71]. Most radiologists surveyed believed they would assume responsibility for medical errors made by AI [32, 60]. Included non-survey articles echo similar sentiments [37, 44, 64, 70, 71]. Commentaries emphasised that time is needed to set up regulatory bodies [36, 40]. They suggest that radiologists should help develop assessment processes for AI tools based on evidence, and advocate for patients’ consent, privacy, and data security [14,15,16, 41, 46, 55, 66].

Patients surveyed suggest that it is difficult to address computer errors and assign accountability [58, 74]. Similarly, a social media analysis indicated that legal and regulatory concerns present a challenge to AI implementation, although these issues are not frequently discussed [54].

Surgeons’, medical students’, and computer scientists’ views on medicolegal implications were not addressed in the included publications.

Discussion

This scoping review is the first step in summarising views on AI in medical imaging. Seven themes were identified from the included articles, representing views from six stakeholders. Radiologists’ views were predominantly represented. Inconsistencies existed within the half of the articles that provided definitions of AI. This may pose difficulties in future comparisons and syntheses. Overall, stakeholders do not trust AI in making independent diagnoses and do not believe that radiologists can be completely replaced. The general public and patients dislike AI due to a lack of “human touch”. However, patients would accept its use if it can provide more insight than a human clinician.

Instead of replacement, stakeholders expect AI to function as a “co-pilot” in reducing error and repetitive tasks. Nevertheless, a decrease in the demand for diagnostic radiologists is anticipated. Radiologists’ responsibilities are expected to shift from image interpretation to patient communication, policy development, and innovation. This is an important consideration for medical students when making residency choices. Radiologists, medical students, and patients indicated a need for education in the clinical use of AI.

There is opportunity for interdisciplinary collaboration between radiologists and AI experts to design technologies that advance the Quadruple Aim: patient outcome, cost-effectiveness, patient experience, and provider experience. The medical community must also work with legislative bodies to ensure that changes are driven by patient outcomes rather than economic considerations [55].

Economic considerations and medicolegal implications were not well addressed. No surveys consulted stakeholders in terms of economic considerations despite coverage in commentaries, indicating a need to outline this emerging technology’s financial angle. A recent systematic review similarly found a need for economic analyses of AI implementation in healthcare [77]. The values and resources of health systems may constitute an additional consideration. Although the general public and patients do not know how to address potential errors made by computer systems, radiologists believe that they should be “in-the-loop” in terms of responsibility; ethical accountability strategies must be developed across governance levels. In comparison with existing literature, several commentaries discussed ways to restrict data transmission and protect patient privacy, and suggested review boards to prevent information compromise [64, 78, 79]. In addition to ethical and medicolegal barriers, adoption may be slow if radiology does not identify a need for automatic image interpretation. The European Society of Radiology and Canadian Association of Radiologists have written ethical statements on the subject, indicating the beginning of much-needed higher level regulation [15, 80]. These findings must be accounted for in large-scale decision-making.

Biases and limitations

AI is under investigation in multiple areas of radiology, such as pre- and post-imaging workflow. Since this review focused on AI use in image interpretation, radiologists’ perception of other applications fell outside our scope. Given the methodology of scoping reviews, risk of bias assessment was not performed, and would have been applicable only to the survey studies. Most of the publications represent Western radiologists’ perspectives (Figure 2). This indicates a gap in knowledge from non-Western countries and other stakeholders (e.g. government, insurance providers, radiation technicians, radiographers, or radiation technologists) [91, 92]. Due to an exponential rise in scientific publications on AI (Figure 4), there are undoubtedly new publications on views of stakeholders considered in this study as well as others that were not represented. As AI may increase accessibility of radiological diagnoses in low- and middle-income countries, it is important to include global perspectives in future study and adapt such technologies to different international healthcare contexts. There were limited publications capturing the views of surgeons (n = 1), computer scientists (n = 3), patients (n = 3), and the general public (n = 4). Radiologists’ views were more frequently published or evaluated in formal surveys, likely because of their proximity to these emerging technologies. However, it is important to incorporate other stakeholders’ views into the design of such systems. As patient information will be used in the development of AI technology and patient care will undergo significant changes, patient perspectives need to be prioritised.

Future directions

AI is innovative and highly applicable to radiology; barriers to entry and drivers of adoption must be considered. This scoping review can encourage a comprehensive plan in adapting current training and practice. There is a need for stakeholders to incorporate the growing body of evidence around AI in radiology in order to guide development, education, regulation, and deployment. Given that AI is currently an intervention of great interest in health contexts, it is beneficial to regularly update reviews capturing perspectives. More formal qualitative studies can further explore elements that facilitate or prevent AI implementation. The present scoping review serves as a first step toward future research and synthesis of such information.

Conclusion

The views of radiologists, medical students, patients, the general public, and computer scientists suggest that replacement of radiologists by AI is considered unlikely; most acknowledge its potential and remain optimistic. Stakeholders identified a need for education and training on AI, and specific efforts are needed to improve its practical integration. Further research is needed to gain perspectives from non-Western countries and non-radiologist stakeholders, and to address economic considerations and medicolegal implications.

Abbreviations

- AI: Artificial intelligence

- ANN: Artificial neural networks

- CADTH: Canadian Agency for Drugs and Technologies in Health

- CINAHL: Cumulative Index to Nursing and Allied Health Literature

- DL: Deep learning

- ML: Machine learning

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

References

Klumpp M (2018) Automation and artificial intelligence in business logistics systems: human reactions and collaboration requirements. Int J Log Res Appl 21:224–242

Elizalde-Ramírez F, Nigenda RS, Martínez-Salazar IA, Ríos-Solís YÁ (2019) Travel plans in public transit networks using artificial intelligence planning models. Appl Artif Intell 33:440–461

Alarie B, Niblett A, Yoon AH (2018) How artificial intelligence will affect the practice of law. Univ Tor Law J 68:106–124

Nguyen H, Bui X-N (2019) Predicting blast-induced air overpressure: a robust artificial intelligence system based on artificial neural networks and random forest. Nat Resour Res 28:893–907

Rodríguez F, Fleetwood A, Galarza A, Fontán L (2018) Predicting solar energy generation through artificial neural networks using weather forecasts for microgrid control. Renew Energy 126:855–864

Krittanawong C (2018) The rise of artificial intelligence and the uncertain future for physicians. Eur J Intern Med 48:e13–e14

Ramesh A, Kambhampati C, Monson JR, Drew P (2004) Artificial intelligence in medicine. Ann R Coll Surg Engl 86:334

Ardila D, Kiraly AP, Bharadwaj S et al (2019) End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat Med 25:954

Lorenzetti L (2016) Here’s how IBM Watson Health is transforming the health care industry. Fortune (April 5)

Bloch-Budzier S (2016) NHS using Google technology to treat patients. BBC News 22

Conant EF, Toledano AY, Periaswamy S et al (2019) Improving accuracy and efficiency with concurrent use of artificial intelligence for digital breast tomosynthesis. Radiology: AI 1:e180096

Gottumukkala RV, Le TQ, Duszak R Jr, Prabhakar AM (2018) Radiologists are actually well positioned to innovate in patient experience. Curr Probl Diagn Radiol 47:206–208

Obermeyer Z, Emanuel EJ (2016) Predicting the future—big data, machine learning, and clinical medicine. N Engl J Med 375:1216

Kruskal JB, Berkowitz S, Geis JR, Kim W, Nagy P, Dreyer K (2017) Big data and machine learning—strategies for driving this bus: a summary of the 2016 intersociety summer conference. J Am Coll Radiol 14:811–817

Tang A, Tam R, Cadrin-Chênevert A et al (2018) Canadian Association of Radiologists white paper on artificial intelligence in radiology. Can Assoc Radiol J 69:120–135

Pesapane F, Codari M, Sardanelli F (2018) Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp 2:35

Faggella D (2020) What is Machine Learning. Available via https://emerj.com/ai-glossary-terms/what-is-machine-learning/

Wiemken TL, Kelley RR (2020) Machine learning in epidemiology and health outcomes research. Annu Rev Public Health 41:21–36

Alpaydin E (2014) Introduction to machine learning, 3 edn

Lisboa PJ, Taktak AF (2006) The use of artificial neural networks in decision support in cancer: a systematic review. Neural Netw 19:408–415

Sherriff A, Ott J, Team AS (2004) Artificial neural networks as statistical tools in epidemiological studies: analysis of risk factors for early infant wheeze. Paediatr Perinat Epidemiol 18:456–463

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521:436–444

Ching T, Himmelstein DS, Beaulieu-Jones BK et al (2018) Opportunities and obstacles for deep learning in biology and medicine. J R Soc Interface 15

Suzuki K (2017) Overview of deep learning in medical imaging. Radiol Phys Technol 10:257–273

Levac D, Colquhoun H, O’Brien KK (2010) Scoping studies: advancing the methodology. Implement Sci 5:69

Arksey H, O’Malley L (2005) Scoping studies: towards a methodological framework. Int J Soc Res Methodol 8:19–32

Canadian Agency for Drugs and Technologies in Health (2013) Grey Matters: a practical search tool for evidence-based medicine. CADTH, Ottawa. Available via https://www.cadth.ca/resources/finding-evidence/grey-matters

Tricco AC, Lillie E, Zarin W et al (2018) PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med 169:467–473

Ooi SKG, Makmur A, Soon AYQ et al (2019) Attitudes toward artificial intelligence in radiology with learner needs assessment within radiology residency programmes: a national multi-programme survey. Singap Med J:04

Eltorai AEM, Bratt AK, Guo HH (2019) Thoracic radiologists’ versus computer scientists’ perspectives on the future of artificial intelligence in radiology. J Thorac Imaging 35:255–259

Gong B, Nugent JP, Guest W et al (2019) Influence of artificial intelligence on Canadian medical students’ preference for radiology specialty: a national survey study. Acad Radiol 26:566–577

European Society of Radiology (ESR) (2019) Impact of artificial intelligence on radiology: a EuroAIM survey among members of the European Society of Radiology. Insights Imaging 10:105

Aminololama-Shakeri S, Lopez JE (2019) The doctor-patient relationship with artificial intelligence. AJR Am J Roentgenol 212:308–310

Shalaby SM, El-Badawy M, Hanafy A (2019) A white paper on artificial intelligence in radiology, getting over the hype. Clin Radiol 74 (Supplement 2):e11

Aerts HJWL (2018) Data science in radiology: a path forward. Clin Cancer Res 24:532–534

Beregi JP, Zins M, Masson JP et al (2018) Radiology and artificial intelligence: an opportunity for our specialty. Diagn Interv Imaging 99:677–678

Hirschmann A, Cyriac J, Stieltjes B, Kober T, Richiardi J, Omoumi P (2019) Artificial intelligence in musculoskeletal imaging: review of current literature, challenges, and trends. Semin Musculoskelet Radiol 23:304–311

Moore MM, Slonimsky E, Long AD, Sze RW, Iyer RS (2019) Machine learning concepts, concerns and opportunities for a pediatric radiologist. Pediatr Radiol 49:509–516

Nguyen GK, Shetty AS (2018) Artificial intelligence and machine learning: opportunities for radiologists in training. J Am Coll Radiol 15:1320–1321

Chan S, Siegel EL (2019) Will machine learning end the viability of radiology as a thriving medical specialty? Br J Radiol 92(1094). https://doi.org/10.1259/bjr.20180416

Yi PH, Hui FK, Ting DSW (2018) Artificial intelligence and radiology: collaboration is key. J Am Coll Radiol 15:781–783

Syed AB, Zoga AC (2018) Artificial intelligence in radiology: current technology and future directions. Semin Musculoskelet Radiol 22:540–545

Giger ML (2018) Machine learning in medical imaging. J Am Coll Radiol Part B 15:512–520

Nawrocki T, Maldjian PD, Slasky SE, Contractor SG (2018) Artificial intelligence and radiology: have rumors of the radiologist’s demise been greatly exaggerated? Acad Radiol 25:967–972

Dreyer KJ, Geis JR (2017) When machines think: radiology’s next frontier. Radiology 285:713–718

Kohli M, Prevedello LM, Filice RW, Geis JR (2017) Implementing machine learning in radiology practice and research. AJR Am J Roentgenol 208:754–760

Chockley K, Emanuel E (2016) The end of radiology? Three threats to the future practice of radiology. J Am Coll Radiol Part PA 13:1415–1420

European Society of Radiology (ESR) (2019) What the radiologist should know about artificial intelligence - an ESR white paper. Insights Imaging 10:44

Langs G, Rohrich S, Hofmanninger J et al (2018) Machine learning: from radiomics to discovery and routine. Radiologe 58:1–6

Wong SH, Al-Hasani H, Alam Z, Alam A (2019) Artificial intelligence in radiology: how will we be affected? Eur Radiol 29:141–143

Brotchie P (2019) Machine learning in radiology. J Med Imaging Radiat Oncol 63:25–26

Kocak B, Durmaz ES, Ates E, Kilickesmez O (2019) Radiomics with artificial intelligence: a practical guide for beginners. Diagn Interv Radiol 25:485–495

Haan M, Ongena YP, Hommes S, Kwee TC, Yakar D (2019) A qualitative study to understand patient perspective on the use of artificial intelligence in radiology. J Am Coll Radiol 16:1416–1419

Goldberg JE, Rosenkrantz AB (2019) Artificial intelligence and radiology: a social media perspective. Curr Probl Diagn Radiol 48:308–311

Jalal S, Nicolaou S, Parker W (2019) Artificial intelligence, radiology, and the way forward. Can Assoc Radiol J 70:10–12

Hainc N, Federau C, Stieltjes B, Blatow M, Bink A, Stippich C (2017) The bright, artificial intelligence-augmented future of neuroimaging reading. Front Neurol 8 (SEP). https://doi.org/10.3389/fneur.2017.00489

Blum A, Zins M (2017) Radiology: is its future bright? Diagn Interv Imaging 98:369–371

Haan M, Ongena YP, Hommes S, Kwee TC, Yakar D (2019) A qualitative study to understand patient perspective on the use of artificial intelligence in radiology. J Am Coll Radiol 16:1416–1419

Collado-Mesa F, Alvarez E, Arheart K (2018) The role of artificial intelligence in diagnostic radiology: a survey at a single radiology residency training program. J Am Coll Radiol 15:1753–1757

Waymel Q, Badr S, Demondion X, Cotten A, Jacques T (2019) Impact of the rise of artificial intelligence in radiology: what do radiologists think? Diagn Interv Imaging 100:327–336

Koh DM (2019) Attitudes and perception of artificial intelligence and machine learning in oncological imaging. Cancer Imaging Conference: 19th Meeting and Annual of the International Cancer Imaging Society Italy 19. https://doi.org/10.3389/frai.2020.578983

van Hoek J, Huber A, Leichtle A et al (2019) A survey on the future of radiology among radiologists, medical students and surgeons: students and surgeons tend to be more skeptical about artificial intelligence and radiologists may fear that other disciplines take over. Eur J Radiol 121. https://doi.org/10.1016/j.ejrad.2019.108742

Tajmir SH, Alkasab TK (2018) Toward augmented radiologists: changes in radiology education in the era of machine learning and artificial intelligence. Acad Radiol 25:747–750

Liew C (2018) The future of radiology augmented with artificial intelligence: a strategy for success. Eur J Radiol 102:152–156

Mazurowski MA (2019) Artificial intelligence may cause a significant disruption to the radiology workforce. J Am Coll Radiol 16:1077–1082

Gallix B, Chong J (2019) Artificial intelligence in radiology: who’s afraid of the big bad wolf? Eur Radiol 29:1637–1639

Massat MB (2018) A promising future for AI in breast cancer screening. Appl Radiol 47:22–25

Kim W (2019) Imaging informatics. Fear, hype, hope, and reality: how AI is entering the health care system. Radiology Today 20:6–7

Thrall JH, Li X, Li Q et al (2018) Artificial intelligence and machine learning in radiology: opportunities, challenges, pitfalls, and criteria for success. J Am Coll Radiol 15:504–508

Recht M, Bryan RN (2017) Artificial intelligence: threat or boon to radiologists? J Am Coll Radiol 14:1476–1480

Conway S (2017) The Radiologisaurus: why THEY want YOU to become a dinosaur. Appl Radiol 46:30

Pinto dos Santos D, Giese D, Brodehl S et al (2019) Medical students’ attitude towards artificial intelligence: a multicentre survey. Eur Radiol 29:1640–1646

Dbouk S, Auloge P, Cazzato RL et al (2019) Awareness and knowledge of interventional radiology by medical students in one of the largest medical schools in France. Cardiovasc Interv Radiol 42(3 Supplement):S284

Ongena YP, Haan M, Yakar D, Kwee TC (2019) Patients’ views on the implementation of artificial intelligence in radiology: development and validation of a standardized questionnaire. Eur Radiol. https://doi.org/10.1007/s00330-019-06486-0

Yamada K (2018) The future of radiology? Asian perspectives. Neuroradiology 60 (1 Supplement 1):93–94

Rosenkrantz AB, Hawkins CM (2017) Use of Twitter polls to determine public opinion regarding content presented at a major national specialty society meeting. J Am Coll Radiol 14:177–182

Wolff J, Pauling J, Keck A, Baumbach J (2020) The economic impact of artificial intelligence in health care: systematic review. J Med Internet Res 22(2):e16866

Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJ (2018) Artificial intelligence in radiology. Nat Rev Cancer 18:500

Saba L, Biswas M, Kuppili V et al (2019) The present and future of deep learning in radiology. Eur J Radiol 114:14–24

Geis JR, Brady AP, Wu CC et al (2019) Ethics of artificial intelligence in radiology: summary of the joint European and North American multisociety statement. Can Assoc Radiol J 70:329–334

Kobayashi Y, Ishibashi M, Kobayashi H (2019) How will “democratization of artificial intelligence” change the future of radiologists? Jpn J Radiol 37:9–14

Reiner BI (2014) A crisis in confidence: a combined challenge and opportunity for medical imaging providers. J Am Coll Radiol 11:107–108

Strickland N (2018) What can radiologists realistically expect from artificial intelligence? J Med Imaging Radiat Oncol 62:56–83

Pesapane F (2019) How scientific mobility can help current and future radiology research: a radiology trainee’s perspective. Insights Imaging 10:85

Bratt A (2019) Why radiologists have nothing to fear from deep learning. J Am Coll Radiol 16:1190–1192

O’Regan D (2017) The power of “Big Data”: a digital revolution in clinical radiology? Cardiovasc Interv Radiol 39:778–781

Schier R (2018) Artificial intelligence and the practice of radiology: an alternative view. J Am Coll Radiol 15:1004–1007

Tang L (2018) Radiological evaluation of advanced gastric cancer: from image to big data radiomics. Chin J Gastrointest Surg 21:1106

Burdorf B (2019) A medical student’s outlook on radiology in light of artificial intelligence. J Am Coll Radiol 16:1514–1515

Purohit K (2019) Growing interest in radiology despite AI fears. Acad Radiol 26:e75

Odle T (2020) The AI era: the role of medical imaging and radiation therapy professionals. Radiol Technol 91:391–400

Woznitza N (2020) Artificial intelligence and the radiographer/radiological technologist profession: a joint statement of the International Society of Radiographers and Radiological Technologists and the European Federation of Radiographer Societies. Radiography 26:93–95

Funding

The authors state that this work has not received any funding.

Ethics declarations

Guarantor

The scientific guarantor of this publication is Pasqualina (Lina) Santaguida.

Conflict of Interest

The authors declare no competing interests.

Statistics and Biometry

No complex statistical methods were necessary for this paper.

Informed Consent

Written informed consent was not required for this study because this study is based on a review of publicly available data and does not involve human or animal subjects.

Ethical Approval

Institutional Review Board approval was not required because this study is based on a review of publicly available data and does not involve human or animal subjects.

Methodology

• scoping review

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Ling Yang and Ioana Cezara Ene are co-first authors.

Supplementary information

ESM 1

(DOCX 244 kb)

About this article

Cite this article

Yang, L., Ene, I.C., Arabi Belaghi, R. et al. Stakeholders’ perspectives on the future of artificial intelligence in radiology: a scoping review. Eur Radiol 32, 1477–1495 (2022). https://doi.org/10.1007/s00330-021-08214-z

DOI: https://doi.org/10.1007/s00330-021-08214-z