Abstract

Background

Simulation-based mastery training may improve clinical performance. The aim of this study was to determine the effect of simulation-based mastery training on clinical performance in abdominal diagnostic ultrasound for radiology residents.

Method

This study was a multicenter randomized controlled trial registered at clinicaltrials.gov (identifier: NCT02921867) and reported using the Consolidated Standards of Reporting Trials (CONSORT) statement. Twenty radiology residents from 10 different hospitals were included in the study. Participants were randomized into two groups: (1) simulator-based training until passing a validated test scored by a blinded reviewer or (2) no intervention prior to standard clinical ultrasound training on patients. All scans performed during the first 6 weeks of clinical ultrasound training were scored. The primary outcome was performance scores assessed using Objective Structured Assessment of Ultrasound Skills (OSAUS). An exponential learning curve was fitted for the OSAUS score for the two groups using non-linear regression with random variation. Confidence intervals were calculated based on the variation between individual learning curves.

Results

After randomization, eleven residents completed the simulation intervention and nine received standard clinical training. The simulation group participants attended two to seven training sessions, spending between 6 and 17 h on simulation-based training. The performance score was significantly higher for the simulation group than for the non-simulation group during the first 29 scans, and the scores of the two groups reached approximately the same level after 49 and 77 scans, respectively.

Conclusion

We showed improved performance in diagnostic ultrasound scanning on patients after simulation-based mastery learning for radiology residents.

Trial registration

NCT02921867

Key Points

• Improvement in scanning performance on patients is seen after simulation-based mastery learning in diagnostic abdominal ultrasound.

• Simulation-based mastery learning can prevent patients from bearing the burden of the initial steep part of trainees’ learning curve.

Introduction

Ultrasound examination often provides important diagnostic information about a patient, but skilled examiners are crucial to ensure diagnostic benefit. The skills needed are complex and include hand-to-eye coordination, image optimization, and image interpretation [1]. The current training requirement for physicians in abdominal ultrasound is a predetermined number of scans within a specific timeframe [2, 3]. This approach is potentially problematic because trainees learn at different rates and the number of scans does not correlate well with skill level, such that a certain number of performed scans does not guarantee competence [4].

Due to these concerns, competency-based medical education has emerged as a means of providing continued assessments to establish competence and allowing the time used by the individual trainee to be variable [5]. However, the education of future competent doctors is under pressure due to the challenges of modern medicine, including centralized patient care, limited working hours, supervisor shortage, time pressure, and an increased focus on patient safety [6]. The best solution for these challenges may be new educational methods, such as simulation-based mastery learning, where trainees are trained on simulators until they reach a predefined skill level [7, 8]. This provides a safe training space, where the duration of training varies with individual training needs so that all trainees reach the same skill and knowledge levels. Simulation-based mastery training could provide a standardized approach for improving the clinical performance of trainees in abdominal ultrasound before scanning patients in the clinical setting. A recent national general needs assessment prioritized procedures suitable for simulation-based training in radiology, and ultrasound-guided biopsies, ultrasound-guided needle punctures, and basic abdominal ultrasound topped the list [9]. However, the effect of this training remains unclear, and to date, there are no randomized controlled trials evaluating the transfer of skills from simulation-based education to clinical performance [10].

The aim of this study was to determine the effect of simulation-based mastery training on clinical performance in abdominal diagnostic ultrasound for radiology residents.

Methods

Study design

This study was approved by The Danish Ethical Committee with an exemption letter (protocol H-16023858). It was a multicenter randomized controlled trial registered at clinicaltrials.gov (identifier: NCT02921867) and reported according to the Consolidated Standards of Reporting Trials (CONSORT) statement [11].

Participants

A power calculation was performed based on a transfer study on point-of-care ultrasound using Objective Structured Assessment of Ultrasound Skills (OSAUS), with mean outcomes of 27.4 for the intervention and 18.0 for the non-simulation group, and a standard deviation of 7.7 [12, 13]. A total of 22 participants were needed to get a power of 0.8 at the 5% significance level.
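The sample size stated above can be approximately reproduced with the standard normal-approximation formula for comparing two means. The following is a minimal sketch and not necessarily the calculation the authors performed; the function name `n_per_group` and the assumptions of a two-sided test with equal group sizes and a common standard deviation are ours.

```python
import math
from statistics import NormalDist


def n_per_group(mean_a, mean_b, sd, alpha=0.05, power=0.8):
    """Participants needed per group (normal approximation, two-sided test).

    Assumes two equally sized groups with a common standard deviation.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    delta = abs(mean_a - mean_b)                   # expected difference in means
    n = 2 * ((z_alpha + z_beta) * sd / delta) ** 2
    return math.ceil(n)


# Values reported in the text: means 27.4 and 18.0, standard deviation 7.7.
per_group = n_per_group(27.4, 18.0, 7.7)
total = 2 * per_group
```

Under these assumptions, the reported values give 11 participants per group, which agrees with the total of 22 stated in the text.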

All introductory radiology residents in Southern Zealand and capital regions of Denmark were contacted by email. Participants were enrolled from November 1, 2016, until the end of February 2018, prior to beginning their clinical ultrasound training. Inclusion criteria were fluency in Danish or English and employment as a resident within the first year of radiology training. Participants were excluded if they had more than 1 week of formalized ultrasound training or if they had already started (or completed) clinical ultrasound training. Included residents were randomized by one author (EAB) to the two study groups: (1) training on a virtual-reality simulator until passing a validated test or (2) standard clinical training on patients. Randomization was done using www.random.org in a randomized block design with pairs matched by hospital, to account for differences in hospital size and patient demographics. Enrollment with assigned intervention was performed by one author (MØ). All residents participated voluntarily and gave written informed consent. No compensation was given.
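A randomized block design with hospital-matched pairs, as described above, can be sketched as follows. This is an illustration only: the study used www.random.org, whereas this sketch substitutes Python's pseudo-random generator, and the function name `randomize_pairs` and the resident identifiers are hypothetical.

```python
import random


def randomize_pairs(pairs, seed=None):
    """Allocate one resident of each hospital-matched pair to each arm."""
    rng = random.Random(seed)  # stand-in for the external randomization service
    allocation = {}
    for first, second in pairs:
        arms = ["simulation", "non-simulation"]
        rng.shuffle(arms)  # random order of the two arms within the block
        allocation[first] = arms[0]
        allocation[second] = arms[1]
    return allocation


# Hypothetical identifiers: two residents from each of two hospitals.
pairs = [("hospital_A_1", "hospital_A_2"), ("hospital_B_1", "hospital_B_2")]
allocation = randomize_pairs(pairs, seed=1)
```

Each block of two contributes exactly one resident to each arm, so differences between hospitals are balanced across the groups by design.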

Intervention

Simulation training was conducted in a standardized setting at Copenhagen Academy for Medical Education and Simulation, located at Copenhagen University Hospital, Rigshospitalet [14]. The simulation-based training program was developed by the author group on two identical simulators (Schallware station 64; version 10013) provided by the research fund from the Department of Radiology, Rigshospitalet. Two authors (KRN, MØ) viewed 150 abdominal simulator cases and selected 49 cases with 69 different pathological findings, which cover the knowledge recommended for level 1 ultrasound by the European Federation of Societies for Ultrasound in Medicine and Biology (EFSUMB) [2]. The cases were presented in nine modules (Table 1).

All study participants in the intervention arm were given an identical introduction to the simulator by one author (MØ), including directions to freely switch between the three different learning strategies available on the simulator:

1. Question/answer (Q&A) mode: Participants are asked to mark an anatomic or pathologic finding. Each case has 5–35 different assignments, e.g., “mark left kidney cortex.” Setting the marker results in a notification of “correct or incorrect answer” and a color marker showing the correct location. Additional information is given when relevant, e.g., for veins in the liver “…notice the walls of the portal veins are visible, whereas the walls of the hepatic veins are not.”

2. Region of interest (RoI) mode: A list of all RoIs in the case is displayed and a color marker for each RoI is available for activation.

3. Finding mode: This setting offers a full list of pathologic findings in the case with still frame images of the pathologic findings displayed opposite the “live” case.

A simulator assistant (medical student or first-year radiology resident (MØ)) with expert knowledge of the simulator was present during training. Participants practiced in all modules on the simulator until they felt they mastered the cases in module 9 and were ready for the final test. The test was developed for assessing ultrasound competence and had solid evidence of validity, with a predefined pass/fail standard of 14 points established in an earlier study with 60 participants [15]. All tests were scored by a blinded reviewer (KRN).

Comparison group

The non-simulation group followed the standard approach and did not receive any training prior to the clinical ultrasound training.

Outcome

For both the intervention and non-intervention study groups, all abdominal ultrasound scans performed during the first 6 weeks of clinical training were scored in a paperback log carried by the residents. The log was organized with one page for each procedure, including date, examination subject (e.g., suspected gallstone), presence of supervision, marking if supervisor or resident scored the scan, time used by resident, time used by supervisor, and the OSAUS scorecard (Table 2). The scorecard had five lines, where a score was written on each line corresponding to a 1–5 rating on a Likert scale for each of the five OSAUS items: knowledge of equipment, image optimization, systematic examination, image interpretation, and documentation [13]. Thus, all scans were given a total score between five and 25 points.

Statistics

Descriptive analysis of resident characteristics was performed for the simulation and non-simulation groups by means of frequency distributions (number and %) and mean, standard deviation, median and range (minimum, maximum). If one or more OSAUS item scores were missing, the mean score was calculated and re-scaled to 5–25.
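The handling of missing item scores described above could be implemented as in the following sketch. It is illustrative only: `osaus_total` is a hypothetical helper, and representing a missing item as `None` is an assumption.

```python
def osaus_total(item_scores):
    """Total OSAUS score on the 5-25 scale from five item ratings (1-5).

    Missing items are passed as None; the mean of the available items is
    multiplied by five so that the result stays on the 5-25 scale.
    """
    if len(item_scores) != 5:
        raise ValueError("OSAUS has exactly five items")
    scored = [s for s in item_scores if s is not None]
    if not scored:
        raise ValueError("at least one item score is required")
    if any(not 1 <= s <= 5 for s in scored):
        raise ValueError("item scores must lie between 1 and 5")
    return 5 * sum(scored) / len(scored)


complete = osaus_total([3, 4, 4, 5, 4])     # all five items scored
partial = osaus_total([3, 4, None, 5, 4])   # one item missing, re-scaled
```

With all five items present, the function returns the plain sum of the item scores; with one item missing, the mean of the remaining four is scaled up to the same range.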

An exponential learning curve was fitted for the simulation and non-simulation groups, respectively, using the model

$$Y_n = Y_0 + Y_1\left(1 - e^{-n/\alpha}\right)$$

where n denotes the scan number, Yn the mean OSAUS score at scan number n, Y0 and Y0 + Y1 the initial and asymptotic OSAUS scores, respectively, and α is a constant coefficient. The OSAUS score reaches an approximately constant level at scan number 3α. The exponential learning curve was estimated for each group using non-linear regression with random variation between residents in parameters α, Y0, and Y1 (i.e., random slope model).
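To illustrate why 3α marks the plateau, the sketch below evaluates a learning curve of the form Yn = Y0 + Y1(1 − exp(−n/α)), a form we assume here because it is consistent with the parameter definitions above. The parameter values are hypothetical and chosen for illustration; they are not the estimates from this study.

```python
import math


def learning_curve(n, y0, y1, alpha):
    """Mean OSAUS score at scan number n under the assumed exponential model."""
    return y0 + y1 * (1.0 - math.exp(-n / alpha))


def fraction_of_gain(n, alpha):
    """Share of the total improvement Y1 achieved by scan number n."""
    return 1.0 - math.exp(-n / alpha)


# Hypothetical parameters for illustration only (not study estimates).
y0, y1, alpha = 10.0, 8.0, 16.0
plateau_scan = 3 * alpha
share_at_plateau = fraction_of_gain(plateau_scan, alpha)  # equals 1 - exp(-3)
score_at_plateau = learning_curve(plateau_scan, y0, y1, alpha)
```

At scan number 3α, the curve has covered 1 − exp(−3), or roughly 95%, of the distance between the initial and the asymptotic score, regardless of the values of Y0 and Y1.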

To determine the number of scans where there is no difference between the simulation and the non-simulation groups, 83.4% confidence intervals were calculated based on the variation between individual learning curves for the residents within each group. Overlap between the 83.4% confidence intervals is an indication of the number of scans where there is no significant difference between the two groups at a 5% significance level [16].
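The choice of 83.4% can be motivated with a short calculation. The sketch below assumes two independent estimates with equal standard errors, which is a simplification we introduce for illustration; the function name `ci_level_for_overlap_test` is hypothetical.

```python
from statistics import NormalDist


def ci_level_for_overlap_test(alpha=0.05):
    """Confidence level at which 'intervals just touch' matches a two-sided test.

    Assumes two independent estimates with equal standard error (SE).
    The difference has SE * sqrt(2), so the test rejects when the
    difference exceeds z_test * sqrt(2) * SE. Two intervals of half-width
    z_ci * SE stop overlapping when the difference exceeds 2 * z_ci * SE.
    Equating the two thresholds gives z_ci = z_test / sqrt(2).
    """
    z_test = NormalDist().inv_cdf(1 - alpha / 2)
    z_ci = z_test / 2 ** 0.5
    return 2 * NormalDist().cdf(z_ci) - 1


level = ci_level_for_overlap_test(0.05)
```

Under these assumptions the level is approximately 0.834, which matches the 83.4% intervals used here. Comparing two conventional 95% intervals for overlap would correspond to a considerably stricter test than the nominal 5% level.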

Differences in OSAUS scores between the two groups were further evaluated using an analysis of variance. The correlation between repeated measurements for the same resident was modeled using an autoregressive correlation structure of first order. Scanning number was included as a fixed effect. The interaction between group and number of scans was tested. The analysis was performed for scan numbers 1–20, 11–30, 21–40, and 41–60.
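A first-order autoregressive correlation structure implies that the correlation between two scans by the same resident decays with the number of scans separating them. The sketch below builds such a correlation matrix; the function name `ar1_correlation` and the value of ρ are hypothetical and serve only as illustration (the analysis itself was run in SAS).

```python
def ar1_correlation(n_scans, rho):
    """Correlation matrix with entries rho ** |i - j| for scans i and j."""
    return [[rho ** abs(i - j) for j in range(n_scans)] for i in range(n_scans)]


# Hypothetical correlation of 0.5 between consecutive scans.
matrix = ar1_correlation(4, 0.5)
```

With ρ = 0.5, consecutive scans correlate at 0.5, scans two apart at 0.25, and scans three apart at 0.125, so that scans close together in a resident's sequence are assumed to be more alike than scans far apart.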

The association between the simulation-based test score and OSAUS at first scan was evaluated using a linear regression. Differences in supervision time between the two groups were further evaluated using an analysis of variance. The correlation between repeated measurements for a single resident was modeled using an autoregressive correlation structure of first order. Scanning number was included as a continuous explanatory variable. Differences in the number of scans performed between the two groups were examined using a t test. A 5% significance level was applied and Statistical Analysis System version 9.4 (SAS Institute Inc.) was used for analysis.

Results

Participants

Thirty-three of the 58 eligible physicians were excluded before randomization due to completed ultrasound training (n = 15), already started ultrasound training (n = 12), no reply on first or second email (n = 3), maternity leave (n = 1), department declined to participate (n = 1), or clinical ultrasound training scheduled after study ended (n = 1). The originally intended trial period of 1 year was extended by 4 months due to recruitment needs, and the study ended with 20 of the intended 22 residents for data analysis, due to the fact that five of the 25 enrolled participants did not complete the study (Fig. 1).

Simulation training

The simulation group participants used between 6 and 17 h of simulation-based training, in two to seven training sessions (Table 3). All participants reached mastery level with their first attempt at the simulation-based test, except for one participant who trained 11 h before failing the first test and passed after three additional hours of training. Simulation-based training was completed on average 5.3 days before the start of clinical ultrasound training, with a range of 1 to 18 days.

Primary outcome

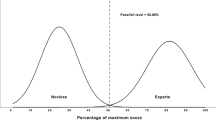

The fitted learning curves showed a significant difference in the initial clinical performance score between the simulation and the non-simulation groups (Fig. 2). The performance score was significantly higher for the simulation group for the first 29 scans, and the mean scores plateaued for the simulation and the non-simulation groups after 49 and 77 scans, respectively (Fig. 2). There was a significant difference in OSAUS scores between the simulation group and the non-simulation group until scan number 30 (scans 1–20, p < 0.001; scans 11–30, p = 0.040; scans 21–40, p = 0.15; scans 41–60, p = 0.55). There was no significant interaction between group and number of performed scans.

Fig. 2 Learning curve of mean OSAUS scores for the simulation group (black line) with confidence intervals (black dotted lines) and the non-simulation group (gray line) with confidence intervals (gray dotted lines). The differences in the scores are significant until scan 29 (vertical line), and the two curves reach a plateau at 49 and 77 scans, respectively (vertical dotted lines).

Secondary outcome

The association between simulation-based test score and the clinical start level performance score was not statistically significant (p = 0.35). There was no significant difference in supervisor time between the two groups (p = 0.65). The mean number of scans performed was 84.6 (SD = 34.2) in the simulation group and 83.9 (SD = 23.9) in the non-simulation group (p = 0.96). The feasibility of the simulation-based mastery learning program was good, and only one participant randomized for simulation dropped out and only one department declined to participate, due to excessive workload.

Discussion

This study showed a statistically significant improvement in scanning performance on patients after simulation-based mastery learning in diagnostic abdominal ultrasound for radiology residents. The improvement was seen for the first 77 scans, with statistically significant differences for the first 29 scans.

The goal of medical training is the acquisition of clinical skills, and therefore, this study focused on an assessment of competence in the clinical environment as the primary outcome. The targeted skill level should be identified, such that it is clear if clinical competence is affected by the training [17]. Kirkpatrick provides a four-level model to evaluate this [18]: level 1, reaction (asking participants about their subjective opinions of training efficacy); level 2, learning (competence affected in the educational setting, e.g., measuring whether simulation-based training improves performance on the simulator); level 3, behavior (competence affected in the clinical setting; measuring transfer of skills); and level 4, results (clinical outcomes affected). This study showed an effect at level 3, while most existing educational research in simulation-based abdominal ultrasound has focused on level 1. Additionally, most studies have used outdated research methods, such as a pretest/post-test setup, assessment tools which lack validity evidence, or historical data as the basis for comparison [10, 19]. Randomized controlled trials in other medical areas have demonstrated an effect of simulation-based training on level 3 or 4, e.g., in laparoscopy, endoscopic ultrasound, and transvaginal ultrasound, where simulator training led to improved patient care and reduced need for supervision, as well as reduced need for re-scanning [20–22]. To the best of our knowledge, this is the first randomized controlled trial assessing simulation-based abdominal ultrasound training with a clinical performance outcome. Increased performance level of ultrasound trainees could optimize the use of supervision and improve patient safety, while providing trainees with a uniform understanding of pathology and ensuring a minimum skill level.

The learning of motor skills is a never-ending process for which Fitts and Posner provide a three-stage learning model corresponding to the novice, intermediate, and advanced learner [23]: (1) the cognitive stage, which features a step-by-step approach to the task with many significant errors; (2) the associative stage, during which skills are refined and consistency emerges with fewer, smaller errors; and finally, (3) the autonomous stage, where decisions are not conscious and performance is very consistent. The rate of learning is not uniform in these three stages, and a negatively accelerated learning curve, as apparent in this study, is typical for motor skills, where performance improves more rapidly at the beginning [24]. Simulation-based mastery training moves the initial steep part of the learning curve, characterized by inferior performance, away from the clinical setting and ensures trainees are already transitioning to the associative learning stage, with fewer and less significant errors, before scanning on patients.

Clinical ultrasound training can vary significantly, as it is dependent on the characteristics of individual departments, such as patient demographics and supervisor availability [25]. The simulation-based mastery learning approach, which was used as the intervention in this study, ensures that all ultrasound trainees acquire a minimum skill level and have a fundamental understanding of pathology before scanning patients [20]. Other simulator studies have used a training intervention which is based on a certain number of repetitions or a set amount of time, e.g., in colonoscopy where three randomized controlled training studies assessed 8, 10, and 16 h of simulation-based training, respectively [26–28]. As neither time nor the number of procedures corresponds to a certain skill level, this approach does not ensure that all trainees reach the same or even a minimum skill level [4]. The simulation-based mastery training approach also allows for integration of other well-established learning strategies, such as timely feedback, testing effect, and deliberate practice [29–31].

The tests used for assessing training and clinical skills in this study have solid validity evidence, as it is critical that the measured performance scores reflect actual skill levels [10, 13, 32]. The OSAUS score used for clinical assessment was developed for point-of-care ultrasound and may miss some of the educational effects of more detailed diagnostic ultrasound examination, primarily in item 2 (image optimization) and item 4 (image interpretation) (Table 2). We found a significant difference for the first 29 scans, and scores reached approximately the same plateau after 77 scans, which resembles the effect found in other studies, e.g., 20–50 laparoscopies in gynecology and 80 colonoscopies in gastroenterology [20, 27]. A more precise assessment tool could possibly have lowered the variability in scores and would, thereby, have narrowed the confidence intervals of our findings (Fig. 2).

Limitations

It is inherently difficult to blind participants to an educational intervention, and this represents an important limitation in educational research. We blinded the clinical raters to the randomization process, but residents differed significantly with respect to their presence in the department due to the time needed for off-site training for the simulation group. Furthermore, our findings showed a higher performance level for the simulation group in the first part of clinical training. These factors may have compromised our efforts to blind the supervisors who scored the scans.

The number of participants is a well-known limitation in educational research; a systematic review found a median sample size of 25 participants [33]. In this study with only 20 participants, sample size is partially responsible for the broad confidence intervals for the performance scores. With a small sample size, each participant’s data have a substantial influence on the results, and the measured effect should be interpreted with caution.

Conclusion

We have demonstrated improved performance in diagnostic ultrasound scanning on patients after simulation-based mastery learning for radiology residents. The simulation-based mastery learning program was feasible and its implementation could accelerate the initial steep part of trainees’ learning curve.

Abbreviations

- CONSORT: Consolidated Standards of Reporting Trials

- EFSUMB: European Federation of Societies for Ultrasound in Medicine and Biology

- OSAUS: Objective Structured Assessment of Ultrasound Skills

References

1. Nicholls D, Sweet L, Hyett J (2014) Psychomotor skills in medical ultrasound imaging: an analysis of the core skill set. J Ultrasound Med 33:1349–1352

2. Education and Practical Standards Committee, European Federation of Societies for Ultrasound in Medicine and Biology (2006) Minimum training recommendations for the practice of medical ultrasound. Ultraschall Med 27:79–105

3. American Institute of Ultrasound in Medicine (2008) AIUM practice guideline for the performance of an ultrasound examination of the abdomen and/or retroperitoneum. J Ultrasound Med 27:319–326

4. Barsuk JH, Cohen ER, Feinglass J, McGaghie WC, Wayne DB (2017) Residents’ procedural experience does not ensure competence: a research synthesis. J Grad Med Educ 9:201–208

5. Reznick RK, MacRae H (2006) Teaching surgical skills--changes in the wind. N Engl J Med 21:2664–2669

6. Garg M, Drolet BC, Tammaro D, Fischer SA (2014) Resident duty hours: a survey of internal medicine program directors. J Gen Intern Med 10:1349–1354

7. Cook DA, Brydges R, Zendejas B, Hamstra SJ, Hatala R (2013) Mastery learning for health professionals using technology-enhanced simulation: a systematic review and meta-analysis. Acad Med 88:1178–1186

8. McGaghie WC (2015) Mastery learning: it is time for medical education to join the 21st century. Acad Med 90:1438–1441

9. Nayahangan LJ, Nielsen KR, Albrecht-Beste E et al (2018) Determining procedures for simulation-based training in radiology: a nationwide needs assessment. Eur Radiol 28:2319–2327

10. Østergaard ML, Ewertsen C, Konge L, Albrecht-Beste E, Bachmann Nielsen M (2016) Simulation-based abdominal ultrasound training - a systematic review. Ultraschall Med 37:253–261

11. Schulz KF, Altman DG, Moher D (2010) CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ 340:c332

12. Todsen T, Jensen ML, Tolsgaard MG et al (2016) Transfer from point-of-care ultrasonography training to diagnostic performance on patients-a randomized controlled trial. Am J Surg 211:40–45

13. Todsen T, Tolsgaard MG, Olsen BH et al (2015) Reliable and valid assessment of point-of-care ultrasonography. Ann Surg 261:309–315

14. Konge L, Ringsted C, Bjerrum F et al (2015) The simulation centre at Rigshospitalet, Copenhagen, Denmark. J Surg Educ 72:362–365

15. Østergaard ML, Nielsen KR, Albrecht-Beste E, Konge L, Nielsen MB (2018) Development of a reliable simulation-based test for diagnostic abdominal ultrasound with a pass/fail standard usable for mastery learning. Eur Radiol 28:51–57

16. Knol MJ, Pestman WR, Grobbee DE (2011) The (mis)use of overlap of confidence intervals to assess effect modification. Eur J Epidemiol 26:253–254

17. Bransford JD, Schwartz DL (1999) Chapter 3: Rethinking transfer: a simple proposal with multiple implications. Rev Res Educ 24:61–100

18. Kirkpatrick D (1950) The Kirkpatrick Model. Available from: https://www.kirkpatrickpartners.com/Our-Philosophy/The-Kirkpatrick-Model. Accessed 13 Oct 2017

19. Orr KE, Hamilton SC, Clarke R et al (2018) The integration of transabdominal ultrasound simulators into an ultrasound curriculum. Ultrasound 8:1742271X18762251

20. Larsen CR, Soerensen JL, Grantcharov TP et al (2009) Effect of virtual reality training on laparoscopic surgery: randomised controlled trial. BMJ 338:b1802

21. Konge L, Clementsen PF, Ringsted C, Minddal V, Larsen KR, Annema JT (2015) Simulator training for endobronchial ultrasound: a randomised controlled trial. Eur Respir J 46:1140–1149

22. Tolsgaard MG, Ringsted C, Rosthøj S et al (2017) The effects of simulation-based transvaginal ultrasound training on quality and efficiency of care: a multicenter single-blind randomized trial. Ann Surg 265:630–637

23. Fitts PM, Posner MI (1967) Human performance. Brooks/Cole Publishing Co., Belmont

24. Magill R, Anderson D (2014) Motor learning and control: concepts and applications, 10th edn. McGraw-Hill Education, Singapore

25. European Society of Radiology (2013) Organisation and practice of radiological ultrasound in Europe: a survey by the ESR Working Group on Ultrasound. Insights Imaging 29:401–407

26. Grover SC, Garg A, Scaffidi MA et al (2015) Impact of a simulation training curriculum on technical and nontechnical skills in colonoscopy: a randomized trial. Gastrointest Endosc 82:1072–1079

27. Cohen J, Cohen SA, Vora KC et al (2006) Multicenter, randomized, controlled trial of virtual-reality simulator training in acquisition of competency in colonoscopy. Gastrointest Endosc 64:361–368

28. Haycock A, Koch AD, Familiari P et al (2010) Training and transfer of colonoscopy skills: a multinational, randomized, blinded, controlled trial of simulator versus bedside training. Gastrointest Endosc 71:298–307

29. Strandbygaard J, Bjerrum F, Maagaard M et al (2013) Instructor feedback versus no instructor feedback on performance in a laparoscopic virtual reality simulator: a randomized trial. Ann Surg 257:839–844

30. Kromann CB, Jensen ML, Ringsted C (2009) The effect of testing on skills learning. Med Educ 43:21–27

31. Ericsson KA (2015) Acquisition and maintenance of medical expertise: a perspective from the expert-performance approach with deliberate practice. Acad Med 90:1471–1486

32. Downing SM, Yudkowsky R (2009) Assessment in health professions education. 1. Vol. Routledge, New York

33. Cook DA, Hatala R (2015) Got power? A systematic review of sample size adequacy in health professions education research. Adv Health Sci Educ Theory Pract 20:73–83

Acknowledgements

The authors would like to thank Kirsten Engel at Copenhagen Academy for Medical Education and Simulation for language revision and all participants and raters for their time.

Funding

The authors state that this work has not received any funding.

Ethics declarations

Guarantor

The scientific guarantor of this publication is Michael Bachmann Nielsen.

Conflict of interest

The authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article.

Statistics and biometry

One of the authors has significant statistical expertise.

Informed consent

Written informed consent was obtained from all subjects in this study.

Ethical approval

Institutional Review Board approval was obtained.

Methodology

• prospective

• randomized controlled trial

• multicenter study

About this article

Cite this article

Østergaard, M.L., Rue Nielsen, K., Albrecht-Beste, E. et al. Simulator training improves ultrasound scanning performance on patients: a randomized controlled trial. Eur Radiol 29, 3210–3218 (2019). https://doi.org/10.1007/s00330-018-5923-z

DOI: https://doi.org/10.1007/s00330-018-5923-z