Abstract

Years of ill-managed irrigation have triggered secondary soil salinization in the Khorezm region of Uzbekistan located in the Aral Sea basin. To assess the magnitude and dynamics of secondary soil salinization, to quantify improved management strategies and to derive updated irrigation standards, the soil water model Hydrus-1D was used. Water and soil salinity dynamics in three cotton fields with different soil textures were monitored and simulated for the years 2003 and 2005. Until now in Khorezm, overall soil salinity could only be controlled by pre-season salt leaching using high amounts of water. This water, however, may not be available anymore in the near future because of global climate change and shrinking fresh water resources. Simulations confirmed that the present leaching practice is barely effective. At two out of the three locations within a sandy loam field, leaching did not remove salts from the 2 m profile. Instead, salts were only shifted from the upper (0–0.8 m) to the lower (0.8–2 m) soil layer. Strong groundwater contribution to evapotranspiration triggered secondary (re)-salinization of the topsoil during the cropping season. As a consequence, salt amounts in the top 0.8 m of soil increased from 9 to 22 Mg ha−1 in the field with loamy texture, and from 4 to 12 Mg ha−1 in the field with sandy texture. Management strategy analyses revealed that reducing soil evaporation by a surface residue layer would notably decrease secondary soil salinization. Here, owing to the reduced capillary rise of groundwater, post-season salt contents of the three fields were reduced by between 12 and 19% when compared with residue-free conditions. Even more effective would be improving the efficiency of the drainage system so as to lower the groundwater table. 
This would require a revision of the current irrigation management schemes, but could, as simulations revealed, reduce the post-season salt content in the 2 m soil profile of the three fields by between 36 and 59% when compared with unaltered conditions. In total, the revised irrigation management would need no more water than is already foreseen by national irrigation recommendations. Increasing leaching and irrigation efficiency would help sustain the present cotton production levels while reducing future leaching demands.


Introduction

Soil salinity is a latent threat in irrigated agriculture. Worldwide almost 400 Mha of land are affected by salinization (Bot et al. 2000). Salinity on about 80% of these lands is of natural origin (primary salinization), whereas on the remaining 20% it is “man-made”, i.e., a result of secondary soil salinization under conditions of poor drainage in irrigation. Between 9 and 25% of irrigated areas in Tunisia, the United States, India, China and South Africa are salt-affected. In Pakistan, the share is estimated to even surpass 25% (CISEAU 2006). About 1–2% of the irrigated areas, mostly in arid and semi-arid regions, become unsuitable for agriculture each year (FAO 2002). In monetary terms this amounts to US$ 250 per ha annually, or about US$11 billion in total (CISEAU 2006).

Owing to the arid agro-climatic conditions, crop production in Uzbekistan fully relies on irrigation. At the end of the last century, the total irrigated land was estimated at 4.2 Mha (Abdullaev 2003). One of the most important irrigated crops in Uzbekistan is cotton (Gossypium hirsutum L.), which, given its long growing period, requires large amounts of water. However, water management at field level as observed in Khorezm, a region in the western part of Uzbekistan, is poor (Forkutsa et al. 2009). At the same time, water losses from the conveyance system are high, and consequently overall water withdrawal is extremely high: on average for the whole of Khorezm, between 17,000 and 30,000 m3 of water per hectare are extracted from the Amu Darya (Conrad 2006), which is one of the two tributaries to the Aral Sea.

Inadequate management of irrigation water has led to considerable salinization of the soils in this region. Ibrakhimov et al. (2007) reported that more than 50% of the soils in Khorezm were classified as moderately to highly saline according to a survey from the 1980s. Secondary soil salinization by capillary rise of shallow groundwater (and salt) into the rooting zone plays a major role, nullifying pre-season salt leaching efforts, entailing yield losses and seriously threatening economic growth and development, as in other regions (Grieve et al. 1986; Smets et al. 1997; Willis et al. 1997; Christen et al. 2001; Singh 2004; Murtaza et al. 2006). Within the Aral Sea basin, an estimated 20% of the cotton yield, corresponding to more than US$ 200 million per year, is lost because of salinization problems (CISEAU 2006). Nevertheless, little is known about the soil salinity dynamics of the irrigated areas of this basin.

The detailed analysis of the water balance of current and alternative irrigation management of cotton in Khorezm (Forkutsa et al. 2009) is supplemented by the present analyses on soil salinity dynamics. Water application, crop growth, soil water, groundwater and corresponding salt dynamics were studied on three fields with different soil textures in 2003 and 2005. Subsequently, the soil water model Hydrus-1D (Šimunek et al. 2005) was applied to (1) understand and analyze the effect of major as well as micro-scale differences in soil texture and (micro-basin) water management on soil salinity, (2) simulate soil salinity dynamics under cotton with the aim of quantifying leaching efficiency and secondary soil salinization, and (3) simulate improved management strategies and revise irrigation accordingly.

Materials and methods

A detailed description is provided in Forkutsa et al. (2009). Here only a brief overview is given with a focus on the aspects of soil and water salinity.

Location

Experimental data were collected in the Khorezm region located in western Uzbekistan, on the left bank of the Amu Darya River. This region has an extreme continental, semi-desert climate with hot and dry summers and cold winters. It receives around 100 mm of precipitation from October to May. Crop production of mostly cotton, wheat, and rice fully relies on irrigation water that is withdrawn from the Amu Darya.

Experimental data

In 2003, a jointly farmer–researcher-managed experiment was conducted on two cotton fields, one with a sandy loam and the second with a sandy soil. Both fields were located in the Khiva district of Khorezm (41°20′ 39′′N, 60°18′60′′E). All cultivation activities were decided upon by farmers. After rough tractor-leveling in mid-February, the sandy loam field was divided into micro-basins of approximately 30 × 30 m. Salt in the soils of both fields was leached by three applications of water in March–April, a common practice in Khorezm. Cotton was planted on April 25 after plowing and chiseling. Both fields were fertilized following local recommendations. The water and salt balance of cotton was studied in three micro-basins following a west–east transect along the slope on the sandy loam field (henceforth called locations 1–3), and in one single micro-basin on the sandy field. The transect study allowed the assessment of the significance of micro-level soil textural variability and in-field differences in water management with regard to water dynamics (parallel paper) and secondary soil salinization.

A second data set was established in 2005 on a researcher-managed fertilizer-response trial in Urgench district (41°60′N, 60°51′E) on a loamy soil. Tillage and leaching were similar to those of the sandy loam field. The cotton variety Khorezm-127 was planted on May 2.

Water application

Amounts of water applied for leaching (by flood irrigation) and irrigation (by furrow irrigation) were measured with Cipoletti (or trapezoidal) weirs. The electrical conductivity (ECw) of the applied water was measured once at the inlet of the field irrigation canals at the start of water application, since previous observations had shown that no notable change of water salinity occurred during one and the same day.

Water content and salinity of the soil

Soil genetic layers and soil physical properties (texture, bulk density) were determined before the start of the experiments. Soil water content was determined manually (gravimetrically) by soil sampling with an auger at 20, 50, 80 and 105 cm depth. The soil was sampled 1 day before and 2 days after irrigation at two to four locations, and additionally between two irrigation events at least once every week. The electrical conductivity, ECp, which is the EC of the 1:1 water–soil paste, of soil sub-samples was measured according to Chernishov and Shirokova (1999). ECp linearly correlates with the international standard EC value of the saturated soil extract, ECe (Rhoades et al. 1999), and the equation ECe = 3.5 ECp applies (R² = 0.877; Shirokova et al. 2000).

In addition, the total dissolved solids (TDS) in 124 soil samples, a subset of the above-mentioned samples, were determined. Using soil bulk density, TDS was expressed in units of milligrams of salt per cm3 of soil. To convert qualitative EC measurements into mass units, the dependency between TDS (mg salts cm−3 soil) and ECe (dS m−1) was analyzed (Fig. 1a) and a linear regression equation was established:

Assuming that only negligible amounts of salts were adsorbed at the soil exchange complex, the concentration (C, mg salts cm−3 water) of salts in the solution was calculated from TDS (mg salts cm−3 soil) and the water content (θ, cm3 water cm−3 soil):

Total dissolved solids and ECe do not depend on the actual in situ water content of the soil.
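The conversion chain described above, from paste conductivity to solute concentration, can be sketched as follows. The factor 3.5 is taken from the text. The slope and intercept of the TDS regression are left as arguments because their fitted values are not reproduced here, and all function names are illustrative assumptions rather than part of the original analysis.

```python
def ece_from_ecp(ecp):
    """Convert EC of the 1:1 water-soil paste (dS/m) to saturated-extract EC (dS/m)."""
    return 3.5 * ecp


def tds_from_ece(ece, slope, intercept=0.0):
    """Linear regression TDS = slope * ECe + intercept (mg salts per cm3 soil).

    slope and intercept must be supplied from the fitted regression (Fig. 1a);
    no values are assumed here.
    """
    return slope * ece + intercept


def solution_concentration(tds, theta):
    """Salt concentration of the soil solution (mg salts per cm3 water).

    Assumes that all salts are in solution (negligible adsorption),
    so that C = TDS / theta.
    """
    if theta <= 0:
        raise ValueError("volumetric water content must be positive")
    return tds / theta
```

Because TDS is expressed per unit soil volume, the same TDS yields a higher solution concentration in a drier soil, which is why the water content enters only in the last step.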

In 2005, in the experiment on the loamy field, soil water content at 20, 40, 60, 80 and 100 cm depth was measured in hourly intervals with capacitance probes (theta probe, Delta-T Devices, UK). As no more soil samples had to be taken for manual soil moisture determination, and given the focus of this experiment on crop growth and N dynamics (Kienzler 2009), ECe of the soil was measured less frequently than in 2003, altogether four times on February 4, June 1, June 30 and November 7.

Groundwater

The depth of the groundwater table was monitored by observation wells installed down to 2.5 m depth as perforated polyethylene pipes. Groundwater depth and the electrical conductivity (ECw) were measured at the sandy and sandy loam fields at the same time. TDS of 152 sub-samples was determined. Groundwater salinity of the loamy field was only measured three times throughout the season, on July 7, July 21 and August 29. The ECw (dS m−1) was regressed against total dissolved solids of groundwater and irrigation water (mg salts cm−3 water) yielding (Fig. 1b):

Meteorological data

In 2003, air temperature, relative humidity, incoming short-wave radiation, wind speed and direction at 2 m above ground and precipitation were recorded automatically every 30 min with a data-logger system (Micromec Multisens-Technetics 2000 logger equipped with Eijkelkamp and Kipp&Zonen sensors) 3 km away from the sandy loam field. Directly next to the loamy field, hourly data of air temperature, incoming short-wave radiation, wind speed, relative humidity and precipitation were recorded in 2005 at the same heights as specified above using an automatic weather station (WatchDog 900ET).

Hydrus-1D

Hydrus-1D (Šimunek et al. 2005) is a software tool that simulates water movement in the soil using the Richards equation in combination with a convection–dispersion equation for solute transport. Soil hydraulic properties were described by the modified Mualem-van-Genuchten model (Vogel and Císlerová 1988).

We assumed that salts in the soils of Khorezm do not notably interact with the soil matrix and thus did not simulate adsorption–desorption processes. For convection–dispersion, the molecular diffusion coefficient in free water was set to 5 cm2 day−1, and the longitudinal dispersivity to 15 cm (both within default ranges given by Šimunek, personal communication). The uptake of salts by cotton was also assumed to be negligible and therefore disabled in the simulation routine.
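For orientation, the two governing equations can be written in their common one-dimensional form for a non-reactive solute without plant uptake. The notation below is an assumption chosen for this summary (it is not taken from the original text): θ is the volumetric water content, h the pressure head, K(h) the unsaturated hydraulic conductivity, z the vertical coordinate (positive upward), S the root water uptake sink, C the solute concentration, q the Darcy flux and D the dispersion coefficient.

$$\frac{\partial \theta}{\partial t} = \frac{\partial}{\partial z}\left[K(h)\left(\frac{\partial h}{\partial z} + 1\right)\right] - S$$

$$\frac{\partial (\theta C)}{\partial t} = \frac{\partial}{\partial z}\left(\theta D \frac{\partial C}{\partial z}\right) - \frac{\partial (q C)}{\partial z}$$

In this form, the longitudinal dispersivity and the molecular diffusion coefficient given above enter through D, and the absence of adsorption and solute uptake terms reflects the assumptions stated in the text.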

The top boundary conditions were defined by evaporation, irrigation and precipitation. Potential evaporation and potential transpiration (root water uptake) were calculated according to the FAO-56 approach adjusted by observed leaf area index data (Allen et al. 1998). Measured groundwater depths were used to describe the bottom boundary of the soil profile. Analogously, measured ECw of irrigation water and groundwater, converted into concentrations using Eq. 3, were used to define the upper and lower solute transport boundary.

Root water uptake and water and salinity stress

For the determination of root water uptake (=transpiration), the method proposed by Feddes et al. (1978) and modified by van Genuchten (1987) to include multiplicative water and osmotic stress was applied. The inherent water stress reduction term was parameterized with the function proposed by Feddes et al. (1978) using the following values for h1 to h4: h1 = −10 hPa, h2 = −25 hPa, h3,high = −200 hPa, h3,low = −6,000 hPa and h4 = −14,000 hPa.
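A minimal sketch of the piecewise-linear Feddes reduction function with the parameter values listed above is given below. It assumes the usual trapezoidal shape (no uptake wetter than h1 or drier than h4, optimal uptake between h2 and h3). In the Feddes scheme h3 shifts between h3,high and h3,low with atmospheric demand; here that choice is simply left to the caller, which is a simplification. The function name is illustrative.

```python
def feddes_alpha(h, h1=-10.0, h2=-25.0, h3=-200.0, h4=-14000.0):
    """Water stress reduction factor (0..1) for a pressure head h in hPa.

    h3 defaults to the high-demand value (-200 hPa); pass h3=-6000.0
    to represent low atmospheric demand.
    """
    if h >= h1 or h <= h4:
        return 0.0                      # too wet (anoxic) or beyond wilting
    if h > h2:
        return (h1 - h) / (h1 - h2)     # linear rise from h1 to h2
    if h >= h3:
        return 1.0                      # optimal range, uptake unrestricted
    return (h - h4) / (h3 - h4)         # linear decline from h3 to h4
```

With these defaults, a pressure head of −100 hPa falls in the optimal range, whereas a pressure head halfway between h3 and h4 gives a factor of 0.5.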

The reduction in crop yields due to salinity stress is linearly related to the soil water electrical conductivity (Maas and Hoffman 1977). Cotton is a salt-tolerant crop. According to the “threshold-slope function” of Maas (1990), which is part of Hydrus-1D, cotton growth and yield are notably influenced only from an ECe of 7.7 dS m−1 upward. Below this threshold root water uptake occurs without reduction, and above it root water uptake declines at a rate of 5.2% per 1 dS m−1 increase in ECe (=EC slope).
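The threshold-slope relation can be illustrated with a short sketch that uses the cotton parameters given above (threshold 7.7 dS m−1, slope 5.2% per dS m−1). The function name is an assumption made for illustration; the clipping at zero is added here so that the factor stays within 0 to 1.

```python
def salinity_uptake_factor(ece, threshold=7.7, slope_pct=5.2):
    """Relative root water uptake (0..1) under salinity stress.

    ece        : EC of the saturated soil extract (dS/m)
    threshold  : ECe below which uptake is not reduced (dS/m)
    slope_pct  : uptake decline in percent per 1 dS/m above the threshold
    """
    reduction = slope_pct / 100.0 * max(0.0, ece - threshold)
    return max(0.0, 1.0 - reduction)
```

For an ECe of 9.5 dS m−1, for example, the factor is 1 − 0.052 × 1.8 ≈ 0.906, i.e. a reduction in uptake of slightly less than 10%.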

Management strategy analysis

Four management strategies were simulated:

1. improved scheduling of irrigation;

2. the introduction of a surface residue (mulch) layer to reduce soil evaporation;

3. the introduction of an improved groundwater drainage system;

4. a combination of the three previous management options, with the aim of laying out irrigation requirements for an improved production system that builds on improved drainage and a surface residue layer.

For the sake of simplicity, we assumed that the mulch layer as part of the second and fourth strategy could reduce potential soil evaporation by 50%. In the third strategy, we simulated an improved drainage system that could lower the groundwater table to at least 2 m below the surface [see Forkutsa et al. (2009) for more details]. In the fourth, integrative strategy, the amount and timing of leaching and irrigation were altered to prevent any major crop-water and -salinity stress, measured by the relative transpiration rate (Ta/Tp). Irrigation amount and timing were manipulated so that Ta/Tp did not fall below ~0.8 from May until the end of August. Additionally, for the sandy field it was assumed that crops could potentially grow as vigorously as observed at location 1 on the sandy loam soil. Consequently, Tp and maximum rooting depth for the sandy field were adjusted to equal the observations at this location. To highlight the effects of slight micro-scale variations in soil texture and initial soil salinity levels on the salt balance, the altered irrigation management of location 1 was transferred to locations 2 and 3 of the sandy loam field without further adjustments.

Statistical evaluation

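The statistical evaluation comprised regression analyses and analysis of variance (ANOVA). The goodness-of-fit of the simulation results was assessed by the root mean square error (RMSE) between observations and simulation results, which is:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(S_i - O_i\right)^2}$$

where O_i and S_i are the observed and simulated values, respectively, and n is the number of observations.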
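As a minimal sketch of this measure, the standard RMSE definition can be computed as follows. The function name is illustrative and the code is not taken from the study.

```python
import math


def rmse(observed, simulated):
    """Root mean square error between paired observed and simulated values."""
    if len(observed) != len(simulated) or len(observed) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(s - o) ** 2 for o, s in zip(observed, simulated)]
    return math.sqrt(sum(squared) / len(squared))
```

The result carries the unit of the compared variable, so an RMSE of soil salinity is expressed in dS m−1 and can be judged directly against the observed range.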

Results

Irrigation, groundwater and salinity

In total between 210 and 300 mm of water was applied during three leaching events of the three experimental fields. The sandy loam field was irrigated five times, and altogether locations 1, 2 and 3 received 407, 387 and 268 mm of water, respectively. The loamy field was irrigated six times, but with only 295 mm in total. The sandy field was not irrigated at all.

Groundwater dynamics of all three fields followed commonly observed seasonal trends, with shallow groundwater in spring during pre-season salt leaching, when losses from the mostly unlined canals are high. A second groundwater peak was observed in July–August, when water requirement for cotton irrigation is high, which also increases the accompanying system losses. Groundwater was shallowest in the sandy field (0.5–1.2 m below surface). The sandy loam and the loamy fields had deeper groundwater levels (1.0–1.8 m).

Groundwater salinity of the sandy field was significantly lower (P < 0.05; one-way ANOVA) during the observed period than that of the three locations of the sandy loam field (Fig. 2). From May until mid-July, groundwater salinity was significantly higher at location 3 of the sandy loam field than at locations 1 and 2.

Fig. 2. Groundwater salinity (dS m−1) of the sandy and sandy loam (SL) field (locations 1–3) in 2003, and the loamy field in 2005 (three measurements only; circles); intermittent periods linearly interpolated; squares indicate times of observation of groundwater at the sandy field; for the sandy loam field, leaching and irrigation dates are denoted by arrows at the bottom

Over the whole season, average groundwater salinity levels of the sandy field, the sandy loam field at locations 1, 2 and 3, and of the loamy field were 3.0, 4.3, 4.5, 5.6 and 2.6 dS m−1, respectively.

Irrigation water salinity ranged between 1.0 and 1.9 dS m−1, with slightly higher values at the beginning of the season during leaching, but in general with no notable trend over time or differences between fields.

Soil salinity dynamics

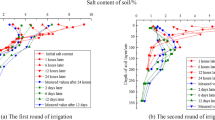

On the sandy loam field before leaching in February, soil salinity at 20 cm depth was highest at location 2 with an ECe equal to 15.6 dS m−1 (Fig. 3, left). Soil salinity levels at locations 1 and 3 at this time were 8.0 and 6.9 dS m−1, respectively (figures not shown). The first leaching (120 mm, March 4–5) lowered soil salinity at location 2 to 2.8 dS m−1 (March 11). The second leaching (78 mm, March 14–15) changed soil salinity only insignificantly, most noticeably at 50 cm depth. The third leaching (102 mm at locations 1 and 2, 60 mm at location 3, April 1) had no additional effect on soil salinity, as was seen from measurements at 20, 50, 80 and 105 cm depth at all three locations.

Soil salinity of the sandy field was <1 dS m−1 throughout the profile before leaching. The three leaching events had no impact on soil salinity (Fig. 3, right). This was also the case for the loamy field, where, on February 4, soil salinity was around or slightly lower than 2 dS m−1 throughout the profile (Fig. 4).

Re-salinization of the soil during the cropping season was observed on all fields. This was more pronounced in the topsoil at 20 cm depth, and at this depth it was in part temporarily reversed by irrigation. Soil salinity only slightly increased on the sandy field due to the fact that soil and groundwater salinity was low. Only at location 2 of the sandy loam soil did the salinity exceed 7.7 dS m−1 during some periods. Overall, soil salinity stress was still moderate with maximum ECe values of 9.5 dS m−1 measured on June 26. Assuming a decline of 5.2% water uptake per 1 dS m−1 increase above the threshold of 7.7 dS m−1 as proposed by Maas and Hoffman (1977), soil water uptake by the cotton plants should have decreased temporarily by <10%.

At the end of the cropping period, in mid-October, soil salinity at 20 cm depth had reached 3.0 dS m−1 on the sandy field and 2.9, 6.9 and 3.4 dS m−1 at locations 1, 2 and 3 of the sandy loam field, respectively. Soil salinity at 10–20 cm depth measured on November 7 on the loamy field was on average 2.9 dS m−1.

Evaluation of soil salinity simulations

In our simulations, we could well reproduce salt dynamics during the leaching period on the sandy loam field at all three locations. Also, the slight (sandy field) to moderate (sandy loam field) re-salinization of the topsoil, with irrigation events temporarily decreasing topsoil salinity (“irrigation-leaching”), could be simulated with sufficient accuracy. In part, the leaching effect of irrigation was over-predicted by the model, which might be related to small-scale heterogeneity in water application at micro-basin level. Also some of the short-term oscillations, notable down to 80 cm depth, were not captured by the model. Soil salinity of the loamy soil profile measured June 1 and 30, 2005, was also well matched by the simulations. The level of soil salinity at the end of the cropping season was slightly underestimated for location 2 and slightly overestimated for location 3 (20 cm depth only, figure not shown). The simulated salinity of the loamy soil on November 7 was comparable with the maximum observed values (Fig. 4).

Simulated soil salinity levels during the period of highest water demand (June–August) were always below the threshold of 7.7 dS m−1, and thus, in the simulations, water uptake was not reduced in response to salinity stress.

Overall, the RMSE between observed and simulated soil salinity of the sandy and sandy loamy soils was highest for the 20 cm observation point (RMSE between 1.0 and 3.2 dS m−1) and decreased with depth (Table 1).

Given the low number of observations over time, we calculated the RMSE for the loamy soil over the whole profile and distinguished observation dates. RMSE for the first three observation dates was extremely low, indicating that simulations reproduced real dynamics well. It was higher for the last observation date, but with 3.2 dS m−1 still at an acceptable level.

Salt balance

Simulation results allowed us to establish a detailed salt balance for the three soils. We distinguished between the top 0.8 m of soil, which is influenced by root water uptake and the layer below 0.8 m down to a maximum simulated depth of 2 m.
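How a layered salt stock in Mg ha−1 follows from salt contents per unit soil volume can be sketched as below. The unit conversion is exact (1 mg cm−2 equals 0.1 Mg ha−1). The function name, the layer discretization and the 80 cm default boundary argument are illustrative assumptions, and the sketch is not the procedure used inside Hydrus-1D.

```python
MG_HA_PER_MG_CM2 = 0.1  # 1 mg/cm2 * 1e8 cm2/ha = 1e8 mg/ha = 0.1 Mg/ha


def split_salt_stock(tds_by_layer, thickness_cm, boundary_cm=80.0):
    """Return (upper, lower) salt stocks in Mg/ha above and below boundary_cm.

    tds_by_layer : salt content of each layer, top to bottom (mg salts per cm3 soil)
    thickness_cm : thickness of each layer (cm)
    """
    upper = lower = 0.0
    top = 0.0
    for tds, dz in zip(tds_by_layer, thickness_cm):
        bottom = top + dz
        # Part of this layer that lies above the boundary depth
        above = max(0.0, min(bottom, boundary_cm) - top)
        upper += MG_HA_PER_MG_CM2 * tds * above
        lower += MG_HA_PER_MG_CM2 * tds * (dz - above)
        top = bottom
    return upper, lower
```

For a hypothetical profile with 1.0 mg cm−3 in the top 80 cm and 0.5 mg cm−3 in the 120 cm below, this gives 8 and 6 Mg ha−1, respectively.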

Before leaching in mid-February, 24 Mg ha−1 of salts were present in the upper 2 m of the soil of the loamy field and 9 Mg ha−1 2 m−1 in the sandy field (Table 2). Initial salt content differed strongly over short distance between locations 1 and 2, and 2 and 3 of the sandy loam field. It was 46 Mg ha−1 2 m−1 at location 1, 76 Mg ha−1 2 m−1 at location 2, and 45 Mg ha−1 2 m−1 at location 3.

Simulations revealed that at locations 1 and 3 of the sandy loam field, leaching did not effectively remove salts out of the 2 m profile but only shifted salts from 0–0.8 to 0.8–2 m depth (Fig. 5).

Consequently, considering the complete observation period, the amounts of salt in 0–2 m depth at locations 1 and 3 of the sandy loam field were quite balanced. Leaching seemed to have a more long-lasting effect at location 2 of the sandy loamy soil. Here, also a shift of salts from 0–0.8 to 0.8–2 m soil depths was observable, but comparing initial and post-season soil salinity, some of the salts were permanently removed.

In the sandy soil, which was not irrigated at all, salt content increased to 24 Mg ha−1 2 m−1 by the end of the season in response to a continuous upward movement of water (capillary rise). The sandy field thus still had the lowest overall salt content, but compared to the initial amount, salt content had almost tripled. The salt content of the loamy field also increased. According to the simulation results, it finally amounted to 38 Mg ha−1 2 m−1, or 30 Mg ha−1 2 m−1 if corrected for the slight overestimation in comparison to observed data towards the end of the simulation (compare Fig. 4).

Management strategy analysis

Rescheduling irrigation

The above-detailed observation and simulation results showed that the third leaching event did not further reduce soil salinity. Additionally, as explained in more detail in Forkutsa et al. (2009), the delay of the first irrigation (July 16) on the sandy loam field resulted in considerable water stress from June onwards.

Postponing and splitting the third leaching (60–102 mm) into two additional irrigation events in the simulation, namely on June 14 and on July 2, alleviated crop-water stress. However, the effect on soil salinity dynamics was limited and was only discernible until July 16. Rescheduling irrigation this way led to an increase in topsoil salinity at 20 cm depth. This increase occurred earlier and was slightly faster than under the observed, business-as-usual scheduling, because the upper soil profile was drier due to the skipped third leaching. Soil salinity did not, however, increase above 7.7 dS m−1, and thus crop-water uptake was not affected, despite somewhat higher topsoil salinity in May and June. Similarly, as more water was transpired and thus less water drained, the total amount of salt in the soil profile increased slightly.

Introducing a surface residue layer (reducing potential evaporation)

A residue layer was simulated by reducing potential evaporation by 50% in the simulations. This led to a considerable reduction in actual evaporation as compared to business-as-usual (=residue-free soil). Even though the effect of less evaporation on actual transpiration was limited, the effect of this measure on soil salinity dynamics was considerable. Owing to the reduced capillary rise of groundwater, post-season (October 4) salt contents of the three fields were reduced by between 12 and 19% as compared to a residue-free soil. Total salt content of location 2 of the sandy loam field dropped from originally 56 to 45 Mg ha−1 2 m−1 when a permanent residue layer was introduced (Table 2). The effect of this measure was even more pronounced when focusing on the upper 80 cm of soil, the part of the soil that matters most in terms of any potential crop salinity stress.

Improved groundwater drainage

Simulating a drainage system that would lower the groundwater to below 2 m soil depth while keeping current irrigation management resulted in a reduction in actual transpiration (see parallel publication), as groundwater could no longer sustain crop-water requirements. As this eliminated the process of secondary soil salinization, the salt content of the 2 m soil profile of the sandy loam and loamy fields was considerably reduced, by between 25 and 32% compared with unaltered conditions. The salt content of the sandy field was lowered by as much as 65%. Given that under current uncertainties in water supply farmers rely on groundwater to avoid crop failure, improving the drainage system without improving irrigation scheduling does not seem an advisable strategy. A severe reduction in crop growth and yield, if not a complete failure (sandy soil), would be the likely consequence.

Improved production systems

In the fourth management strategy analysis, we simulated an optimized irrigation and salt leaching management in a hypothetical agricultural production system where an improved drainage infrastructure (analysis 3) and a surface residue layer (analysis 2) to prevent excessive, unproductive soil evaporation have been implemented.

Simulations indicated that around 200 mm of water split over two events would be sufficient for a proper leaching of salts out of the sandy loam soil profile. This is shown for location 1 in Fig. 6. The drop of salt content in 0–0.8 m depth in response to leaching on March 5 and 15 is clearly visible. Subsequently, eight irrigations of between 60 mm (May) and 100 mm (August) each, totaling 580 mm of water, were adequate to avoid notable plant water stress and to keep soil salinity in the top 0.8 m of soil at low levels, i.e., to avoid secondary soil salinization.

Fig. 6. Simulated dynamics of total salt in the profile of the sandy loam field at location 1, distinguished according to the soil layers 0–0.8 and 0.8–2 m, from February to October under the assumption of an optimized production system with improved drainage, a surface residue layer and adapted irrigation and leaching management

Similarly, the loamy field required 230 mm of water for leaching and 650 mm for eight irrigations. The sandy field had to be irrigated much more frequently with less water applied at each event. This was necessary to economize irrigation water rather than to avoid soil salinization, as this soil with over 95% sand content had a low water holding capacity.

As compared to the business-as-usual conditions, soil salinity could be reduced significantly under optimal management within a single cropping season and without applying more water than is foreseen by Uzbek irrigation standards. At the end of the cotton cropping season on October 4, merely 18, 18–36 and 10 Mg ha−1 2 m−1 of salt remained in the loamy, sandy loam and sandy fields, respectively (Table 2). Thus, 36–59% less salt was found than if the system had been run in the traditional way. The long-term benefits of such a system improvement are obvious: by minimizing the upward movement of water and the re-salinization of soils, the leaching requirement in the coming year or for a subsequent winter-wheat crop would be reduced drastically, and leaching could eventually be replaced by a much lighter pre-season irrigation-leaching only.

Discussion

In general, HYDRUS-1D proved to be an efficient tool for an accurate estimation of water and salt dynamics under conditions of shallow saline groundwater, indicated by the low RMSE between observed and simulated soil salinity. Some short-term fluctuations could not be reproduced by the model. This could be attributed to the micro-scale heterogeneity in soil salinity. However, more data points (in space and time) would be needed to understand why soil salinity at 80 cm depth, for instance, oscillated between 3 and 8 dS m−1 within <2 weeks in August–September 2003, i.e. far more frequently than corresponding irrigation events would explain.

Simulations overestimated post-season (November 7, 2005) topsoil salinity and consequently salt content of the loamy field by approximately 8 Mg ha−1. Simulated groundwater upward movement and groundwater contribution to crop growth was high on the loamy field; a result that is backed by the fact that observed cotton yields under fully fertilized conditions reached their potential (see Sommer et al. 2008 for more details). Part of the gap between average observed and simulated soil salinity might be attributed to spatial soil heterogeneity. This is supported by the match of the simulations with the maximum observed soil salinity.

There are a few comparable studies which focus on cotton irrigation, soil and groundwater salinity management in an arid environment that rely on intensive field studies and simulation: Smets et al. (1997) used the SWAP-1993 model (van den Broek et al. 1994) to study the impact of irrigation practices on soil salinity and crop transpiration of cotton and wheat in the Punjab, Pakistan. Based on a scenario analysis, the authors concluded that to control salinity and optimize crop transpiration farmers should focus on irrigation frequency. The authors noted that when sandy soils were irrigated less frequently but with larger amounts of water, crop transpiration will be reduced, but salinity is kept at acceptable levels. This is in line with the irrigation-leaching practice observed on our sandy loam field, and to a lesser extent on the sandy field. Singh (2004) used the SWAP model to develop guidelines for irrigation planning in cotton-wheat rotations on sandy loamy and loamy sandy soils in northwest India. He concluded that saline groundwater (up to 14 dS m−1) and canal water (0.3–0.4 dS m−1) could be used for irrigation in an alternating fashion for both soils, if pre-planting irrigation was done with canal water. This option might also be beneficial under the conditions observed in Khorezm.

According to the Ministry of Agriculture and Water Management of Uzbekistan (MAWR 2004), the groundwater table in autumn 2004 was shallower than 1 m below the surface on 34% of the irrigated land in Khorezm, and ranged between 1 and 1.5 m on 59%. During that period, 55% of the irrigated lands were classified as slightly saline (2–4 dS m−1), 33% as medium saline (4–8 dS m−1) and 12% as highly saline (8–16 dS m−1). The percentage of saline soils in 2004 was comparable to the situation in 1990, when the share of slightly, medium and highly saline lands was 50, 33 and 10%, respectively. Thus, although the current study confirms the observation by Abdullaev (2003) that the Khorezm region is an area of continuous secondary salinization, overall soil salinity can be controlled by pre-season salt leaching when sufficient water resources are available. Our field data from 2003, however, also show that salinity came close to the threshold of 7.7 dS m−1 above which salinity increasingly affects plant growth and yield. In downstream areas, where less water is available, salinity can no longer be effectively controlled, and yield reduction or failure is widespread. Irrigated agriculture in Khorezm is thus vulnerable to any reduction in irrigation water, be it caused by the expected higher withdrawal upstream or in response to climate change and a reduction in precipitation in the water-catchment area of the Amu Darya.

Apart from the vulnerability aspect mentioned above, our data do not allow any conclusions to be drawn on the long-term sustainability of the system, assuming water continues to be as abundant as in the past. MAWR (2004) data suggest that soil salinity levels did not substantially increase or decrease over time, even though an increase seems to be taken for granted in popular accounts of the problem (e.g. Tavernise 2008).

The simulated benefits of a surface residue layer, a key component of conservation agriculture, suggest that too much water is currently lost by soil evaporation. If this amount were reduced, irrigation water could be saved and topsoil salinity diminished. A reduction in secondary soil salinization in turn means that less water is needed for pre-season salt leaching. However, the issue of maintaining crop residues in the field has to be resolved in order to avoid competition between uses: surface residue, livestock fodder or, in the case of cotton, firewood for cooking and heating.

According to Ochs and Smedema (1996), water applied for leaching of seriously saline land in the Aral Sea basin amounts to about 500–1,000 mm year−1. Ramazanov and Yakubov (1988) and Shirokova and Ramazanov (1989) showed that with an efficient drainage system these amounts can be reduced to about 200 mm year−1, which concurs with the simulation results for the sandy loam soil of this study. Starting in the 1970s, about 2 Mha of irrigated farmland in the Nile basin of Egypt have been provided with subsurface drainage systems (Ali et al. 2001). This example shows that large-scale investments of this kind have been implemented successfully in the past. It also shows that investing in an effective drainage system, which we consider highly desirable for Khorezm, “is an effective measure in controlling groundwater tables and salinity and a highly profitable investment for both the national economy and farmers” (Ali et al. 2001). However, the present analyses underline that additional measures need to be implemented in concert: improving the drainage system alone, without introducing improved water application strategies, may worsen the situation, as it bears the risk of crop failure if irrigation water delivery is uncertain (see also Forkutsa et al. 2009).
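To put the leaching depths quoted above into volumes per unit area, the following sketch converts them using the identity that 1 mm of water over 1 ha equals 10 m3. The depths (500–1,000 mm year−1 currently, about 200 mm year−1 with efficient drainage) come from the text; the function names are hypothetical and the calculation is plain arithmetic, not part of the Hydrus-1D simulations.

```python
"""Convert leaching depths to volumes and estimate potential savings (sketch)."""

# 1 mm of water over 1 ha (10,000 m2) corresponds to 10 m3.
M3_PER_HA_PER_MM = 10.0


def depth_to_volume(depth_mm: float) -> float:
    """Convert a water depth in mm to a volume in m3 per hectare."""
    return depth_mm * M3_PER_HA_PER_MM


def leaching_saving(current_mm: float, improved_mm: float) -> tuple[float, float]:
    """Return (saved volume in m3 per ha, saving in % of current amount)."""
    if current_mm <= 0:
        raise ValueError("current leaching depth must be positive")
    saved_mm = current_mm - improved_mm
    return depth_to_volume(saved_mm), 100.0 * saved_mm / current_mm


if __name__ == "__main__":
    # Lower and upper end of the range quoted from Ochs and Smedema (1996),
    # compared with the roughly 200 mm per year reported for efficient drainage.
    for current in (500.0, 1000.0):
        volume, percent = leaching_saving(current, 200.0)
        print(f"{current:.0f} mm -> 200 mm: saves {volume:.0f} m3/ha ({percent:.0f}%)")
```

On this basis, reducing leaching from 500–1,000 mm to about 200 mm per year would correspond to savings of 3,000–8,000 m3 per hectare, or 60–80% of the current amounts.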

Under the current circumstances, the right balance has to be struck between allowing subsurface irrigation and avoiding excessive secondary soil salinization. To some extent, this is a task related to the operation of the irrigation system at a higher level, within the limits of the irrigation infrastructure to deliver water to each individual field when needed. The Uzbek authorities responsible for water distribution and system capacities are in a position to strike this balance, and the present as well as further simulation studies can be used to support this decision-making.

Environmental concerns, such as the degradation of the fresh water supply downstream by more efficient salt leaching, have not been addressed in this study, but should nevertheless be part of an overall Aral Sea basin irrigation management strategy. If, for instance, a zero-drainage discharge policy were to be implemented, as has been introduced elsewhere in the world, measures would also be needed to stop drainage discharge into the Amu Darya. It seems advisable to consider such measures already at the onset of large-scale restructuring activities.

Conclusions

Salinity dynamics observed in the three fields could be simulated accurately with Hydrus-1D. The model proved a powerful tool for analyzing management options and for agro-ecosystem assessment.

Although the Khorezm region is characterized as an area with continuous secondary salinization, the overall soil salinity in the region seems to have been kept under control so far by pre-season salt leaching. However, disproportionately large water quantities are required for this because pre-season salt leaching is currently not very effective and its effects are not long-lasting. Salts are displaced within the soil profile rather than effectively washed out of the top 2 m of soil. Thus, improving the drainage system and minimizing seepage of water out of the irrigation canals should be a top priority in the region. However, improved irrigation and drainage systems need to be implemented in concert with reliable, timely irrigation management and good farming practices, such as surface residue retention. Isolated remedies, like solely improving the drainage system, bear the risk of doing more harm than good. Raising leaching and irrigation efficiency helps sustain the present cotton production levels and reduces future leaching demands.

References

Abdullaev U (2003) Republic of Uzbekistan. Land degradation assessment in dry lands (LADA). State Design and Research Institute (Uzgip), Tashkent

Ali AM, van Leeuwen HM, Koopmans RK (2001) Benefits of draining agricultural land in Egypt: results of five years’ monitoring of drainage effects and impacts. Water Res Dev 17:633–646

Allen RG, Pereira LS, Raes D, Smith M (1998) Crop evapotranspiration—guidelines for computing crop water requirements—FAO Irrigation and drainage paper 56. Food and Agriculture Organization of the United Nations, Rome

Bot AJ, Nachtergaele FO, Young A (2000) Land resource potential and constraints at regional and country levels. In: World soil resources reports (FAO), no. 90/FAO, Rome (Italy). Land and Water Development Division. p 114

Chernishov AK, Shirokova Y (1999) Express method of soil and water salinity evaluation in Uzbekistan (In Russian). Selskoe hozyaistvo Uzbekistana 5

Christen EW, Ayars JE, Hornbuckle JW (2001) Subsurface drainage design and management in irrigated areas of Australia. Irrig Sci 21:35–43

CISEAU (2006) Irrigation induced salinization. Background paper presented at the ‘Electronic conference on salinization: extent of salinization and strategies for salt-affected land prevention and rehabilitation’ from February 6 to March 6, 2006. www.dgroups.org/groups/fao/salinization-conf/docs/BackgroundPaper.doc. Last accessed Sept 2008

Conrad C (2006) Remote sensing based modeling and hydrological measurements for the assessment of agricultural water use in the Khorezm region (Uzbekistan), Ph.D. Thesis (in German). University of Wuerzburg, 205 p

FAO (2002) The salt of the earth: hazardous for food production. http://www.fao.org/worldfoodsummit/english/newsroom/focus/focus1.htm. Last accessed Sept 2008

Feddes RA, Kowalik PJ, Zaradny H (1978) Simulation of field water use and crop yield. Simulation monographs. Pudoc, Wageningen, p 189

Forkutsa I, Sommer R, Shirokova Y, Lamers JPA, Kienzler K, Vlek PLG (2009) Modeling irrigated cotton with shallow groundwater in the Aral Sea Basin of Uzbekistan: I. Water dynamics. Irrig Sci (Accepted)

Grieve AM, Dunford E, Marston D, Martin RE, Slavich P (1986) Effects of waterlogging and soil salinity on irrigated agriculture in the Murray Valley: a review. Aust J Exp Agric 26:761–777

Ibrakhimov M, Khamzina A, Forkutsa I, Paluasheva G, Lamers JPA, Tischbein B, Vlek PLG, Martius C (2007) Groundwater table and salinity: spatial and temporal distribution and influence on soil salinization in Khorezm region (Uzbekistan, Aral Sea Basin). Irrig Drain Syst 21:219–236

Kienzler K (2009) Improving N use efficiency and crop quality in the Khorezm region, Uzbekistan. PhD Thesis, Rheinische Friedrich-Wilhelms-Universität Bonn, p 230

Maas EV (1990) Crop salt tolerance. In: Tanji KK (ed) “Agricultural salinity assessment and management”, ASCE Manuals and Reports on Engineering practice, No. 71, NY

Maas EV, Hoffman GJ (1977) Crop salt tolerance—current assessment. J Irrig Drain Div ASCE 103:115–134

MAWR (2004) Annual report of Amu-Darya basin hydrogeologic melioration expedition, Khorezm region. Ministry of Melioration and Water Resources (MAWR), Tashkent

Murtaza G, Ghafoor A, Qadir M (2006) Irrigation and soil management strategies for using saline-sodic water in a cotton-wheat rotation. Agric Water Manage 81:98–114

Ochs WJ, Smedema LK (1996) Planning for drainage development in the Aral Sea basin. In: Bos MG (ed) The inter-relationship between irrigation, drainage and the environment in the Aral Sea Basin. Proceedings of the NATO advanced research workshop on drainage and development in arid zones. Kluwer, The Netherlands, p 28–29

Ramazanov AR, Yakubov HE (1988) Leaching and pre-planting irrigation. Mehnat, Tashkent

Rhoades JD, Chanduvi F, Lesch SM (1999) Soil salinity assessment: methods and interpretation of electrical conductivity measurements. FAO Irrigation and Drainage Paper 57, Food and Agriculture Organization of the United Nations, Rome, Italy

Shirokova YI, Ramazanov AR (1989) Development and implementation of ameliorative activities by zones, to increase land productivity to the potential level. SANIIRI Scientific report, Tashkent

Shirokova Y, Forkutsa I, Sharafutdinova N (2000) Use of electrical conductivity instead of soluble salts for soil salinity monitoring in Central Asia. Irrig Drain Syst 14:199–205

Šimunek J, van Genuchten MT, Šejna M (2005) The HYDRUS-1D software package for simulating the one-dimensional movement of water, heat, and multiple solutes in variably-saturated media. Version 3.0, HYDRUS Software Series 1, Department of Environmental Sciences, University of California Riverside, Riverside, CA, p 270

Singh R (2004) Simulations on direct and cyclic use of saline waters for sustaining cotton–wheat in a semi-arid area of north-west India. Agric Water Manage 66:153–162

Smets SMP, Kuper M, Van Dam JC, Feddes RA (1997) Salinization and crop transpiration of irrigated fields in Pakistan’s Punjab. Agric Water Manage 35:43–60

Sommer R, Kienzler K, Conrad C, Ibragimov N, Lamers J, Martius C, Vlek PLG (2008) Evaluation of CropSyst for simulating the potential yield of cotton in Uzbekistan. Agron Sustain Dev. doi:10.1051/agro:2008008

Tavernise S (2008) Old farming habits leave Uzbekistan a legacy of salt. New York Times, 15 June 2008. http://www.nytimes.com/2008/06/15/world/asia/15uzbek.html?_r=2&re&oref=slogin. Last accessed Feb 2009

Van den Broek JB, Van Dam JC, Elbers JA, Feddes RA, Huygen J, Kabat P, Wesseling JG (1994) SWAP 1993, input instructions manual. Report 45, Department of Water Resources, Wageningen Ag. University, The Netherlands

Van Genuchten MT (1987) A numerical model for water and solute movement in and below the root zone. Research Report 121. USDA-ARS, US Salinity Laboratory, Riverside

Vogel T, Císlerová M (1988) On the reliability of unsaturated hydraulic conductivity calculated from the moisture retention curve. Trans Porous Media 3:1–15

Willis TM, Black AS, Meyer WS (1997) Estimates of deep percolation beneath cotton in the Macquarie Valley. Irrig Sci 17:141–150

Additional information

Communicated by J. Ayars.

Cite this article

Forkutsa, I., Sommer, R., Shirokova, Y.I. et al. Modeling irrigated cotton with shallow groundwater in the Aral Sea Basin of Uzbekistan: II. Soil salinity dynamics. Irrig Sci 27, 319–330 (2009). https://doi.org/10.1007/s00271-009-0149-0

DOI: https://doi.org/10.1007/s00271-009-0149-0