Abstract

Background

Radiographer reporting has been studied for plain films and for ultrasonography, but not for paediatric brain CT in the emergency setting.

Objective

To study the accuracy of radiographer reporting in paediatric brain CT.

Materials and methods

We prospectively collected 100 paediatric brain CT examinations. Two radiographers, with 12 and 3 years’ experience, respectively, and no training in diagnostic film interpretation, read the films from hard copies using a prescribed tick sheet while blinded to the clinical history. Their results were compared with those of a consultant radiologist. Three categories were defined: abnormal scans, significant abnormalities and insignificant abnormalities.

Results

Both radiographers had an accuracy of 89.5% in classifying scans as normal or abnormal; for detecting an abnormal scan, radiographer 1 had a sensitivity of 87.8% and radiographer 2 a sensitivity of 96%. For detecting a significant abnormality, radiographer 1 had an accuracy of 75% and radiographer 2 an accuracy of 48.6%, with sensitivities of 61.6% and 52.9%, respectively. Results for detecting insignificant abnormalities were poorer.

Conclusions

Selected radiographers could play an effective screening role, but because they lack the sensitivity required to detect significant abnormalities, they could not act as the final diagnostician. We recommend that the study be repeated after both radiographers have received formal training in the interpretation of paediatric brain CT.


Introduction

Hospitals require radiology departments to support emergency departments with continuous high-quality radiology services [1]. Qualified radiologists cannot be on call continuously, and staff shortages, especially in paediatric radiology, restrict the continuity of these services. A significant burden is therefore placed on radiology residents/registrars, the medical staff responsible for this service after hours. Radiographer reporting has been studied in ultrasonography, where radiographers have been found to be highly accurate, with very little difference between them and consultant radiologists [2, 3]. For plain films, an in-depth analysis has found that radiographers have an accuracy rate that must be considered acceptable [4]. These studies imply that radiographers of this standard could ease the workload, especially in emergency departments, while maintaining a satisfactory level of diagnostic accuracy [4]. Berman et al. [5], comparing radiographers with casualty officers in 1985, found that the two groups had similar error rates. Renwick et al. [6] found that, when assessing plain films for triage, radiographers could offer useful advice to casualty officers.

Brealey et al. [7] published a systematic review of radiographer red dot or triage of accident and emergency radiographs in 2006. For the red dot studies, they found a pooled sensitivity (compared with a radiologist) of 0.88 and a specificity of 0.91 for all body areas. For the triage studies, sensitivity and specificity were 0.90 and 0.94, respectively, for the skeleton, and 0.78 and 0.91, respectively, for the chest and abdomen. This highlights the difference in accuracy when reporting different parts of the body.

Our question was: are these areas of radiographer reporting and triage the only ones that could be serviced by radiographers, or are relatively experienced radiographers capable of accurately reporting/reading CT scans of the paediatric brain?

Materials and methods

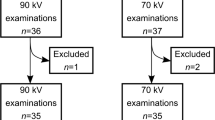

This was a prospective study carried out at a tertiary children’s hospital over a 1-month period. The age limit for referral to this institution is 13 years. The cases were selected according to a predefined scheme intended to yield a group representative of a wide spectrum of abnormalities. Within this scheme the scans were collected consecutively: the first 25 CT scans referred for trauma, the first 25 CT scans performed for reasons other than trauma without administration of a contrast agent, and the first 50 CT scans performed both before and after contrast enhancement, regardless of indication. This gave a total of 100 scans. All patients fell within the hospital’s normal referral age range.

The films were read by two senior radiographers with 12 and 3 years of experience, respectively, working in paediatric CT, but with no formal training in image interpretation. The films were read from hard copies by means of a prescribed tick sheet, on which the radiographer simply ticked every abnormality seen, or none at all (Fig. 1). The radiographers were blinded to the radiologist reports and the clinical history. Their results were compared with those of a consultant radiologist (the reference standard), who also used the tick sheet and was also blinded to the history. This was done because the study was designed to test the radiographers’ ability to read the scans, not to write complete reports or to look for features suggested by the history. It was also felt that knowledge of the history might bias the study in favour of the consultant radiologist. Reading took place during working hours, but none of the participants could consult colleagues while reporting.

After testing, the data were divided into three categories of interest. First, of the 95 scans available for analysis, we established how many were read as normal or abnormal, without regard to the type of abnormality, as per the first two options on the tick sheet (Fig. 1). We were interested in how accurately and sensitively abnormal scans were detected. Second, we defined a group of significant abnormalities: hydrocephalus, basal enhancement, abscess, brain swelling, surface blood and infarction. In our clinical practice these diagnoses were felt to herald a change in management in the emergency setting (i.e. definitive, specific management instituted overnight, particular to the findings on the scan). They were totalled and placed in a single category, significant abnormalities, and our analysis focused on this total. The remaining abnormalities were totalled and placed in the third category, insignificant abnormalities. A scan could have any number of abnormalities, significant and insignificant; i.e. the number of abnormalities was independent of the number of abnormal scans.
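The categorization just described can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the procedure used in the study: the label strings and the functions `tally_scan` and `tally_all` are hypothetical, a scan is assumed to be abnormal whenever at least one finding is ticked, and only the six significant findings are taken from the text.

```python
from collections import Counter

# The six findings defined in the text as "significant" (likely to change
# emergency management). Any other ticked finding counts as insignificant.
SIGNIFICANT = {
    "hydrocephalus",
    "basal enhancement",
    "abscess",
    "brain swelling",
    "surface blood",
    "infarction",
}


def tally_scan(ticked: list[str]) -> Counter:
    """Tally one scan's tick-sheet entries (hypothetical label strings).

    Assumption: a scan with no ticked findings is normal; otherwise it is
    abnormal. Each ticked finding is counted separately, so one scan may
    contribute several significant and insignificant abnormalities.
    """
    counts = Counter()
    counts["abnormal_scan"] = 1 if ticked else 0
    for finding in ticked:
        category = "significant" if finding in SIGNIFICANT else "insignificant"
        counts[category] += 1
    return counts


def tally_all(scans: list[list[str]]) -> Counter:
    """Sum the per-scan tallies over a whole reading session."""
    total = Counter()
    for ticked in scans:
        total.update(tally_scan(ticked))
    return total
```

For example, three hypothetical scans (one normal, one with hydrocephalus plus a calcification, and one with surface blood) tally to 2 abnormal scans, 2 significant and 1 insignificant abnormality, which illustrates that abnormalities are counted independently of abnormal scans.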

We determined three areas of comparison:

1. Detection of the total number of abnormal scans (irrespective of the degree of the abnormality).
2. Detection of the number of significant abnormalities with respect to the total number of abnormalities.
3. Detection of the number of insignificant abnormalities with respect to the total number of abnormalities.

Statistical analysis

In all categories we focused statistically on accuracy and sensitivity, because we were more interested in the radiographers’ ability to detect an abnormality, and particularly a significant abnormality, than in their ability to detect the absence of one. Specificity, positive predictive values (PPV) and negative predictive values (NPV) were also determined, and 95% confidence intervals were calculated (P = 0.5).
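The measures listed above can all be computed from a 2 × 2 table of reader calls against the reference standard. The sketch below rests on assumptions: the paper does not state how its 95% confidence intervals were derived, so the Wilson score interval used here is a swapped-in choice, and `TwoByTwo`, `wilson_ci` and `diagnostic_metrics` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    tp: int  # reader positive, reference positive
    fp: int  # reader positive, reference negative
    fn: int  # reader negative, reference positive
    tn: int  # reader negative, reference negative


def wilson_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (about 95% for z = 1.96)."""
    if n == 0:
        return (float("nan"), float("nan"))
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return (centre - half, centre + half)


def diagnostic_metrics(t: TwoByTwo) -> dict[str, tuple[float, tuple[float, float]]]:
    """Point estimate and Wilson 95% CI for each measure named in the text."""
    parts = {
        "accuracy": (t.tp + t.tn, t.tp + t.fp + t.fn + t.tn),
        "sensitivity": (t.tp, t.tp + t.fn),
        "specificity": (t.tn, t.tn + t.fp),
        "ppv": (t.tp, t.tp + t.fp),
        "npv": (t.tn, t.tn + t.fn),
    }
    results = {}
    for name, (k, n) in parts.items():
        estimate = k / n if n else float("nan")
        results[name] = (estimate, wilson_ci(k, n))
    return results
```

With the cell counts implied by the Results for radiographer 1's normal/abnormal readings (43 true positives, 4 false positives, 6 false negatives, 42 true negatives), `diagnostic_metrics` gives an accuracy of about 0.895, a sensitivity of about 0.878 and a specificity of about 0.913, matching the reported percentages.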

Results

Five of the scans were lost during the course of the study, leaving 95 for full analysis. The missing five scans could not be replaced because the study group was not complete at the end of the study. Patient ages ranged from newborn to 12 years 9 months (mean 4 years 3 months). There were 9 neonates and 30 infants.

The consultant (reference standard) found 49 of the 95 scans to be abnormal (Table 1). The total number of abnormalities found on all the abnormal scans was 72. Of these, 34 were felt to be significant and 38 insignificant.

Radiographer 1 recorded 47 abnormal scans and was correct in 43 cases (Table 2). Radiographer 1 identified 26 significant abnormalities (Table 3) and 19 insignificant abnormalities (Table 4), and was correct in 21 and 15 of the cases, respectively. For detecting an abnormal scan (Table 5) and a significant abnormality (Table 6), radiographer 1 had accuracies of 89.5% and 75%, sensitivities of 87.8% and 61.6%, and specificities of 91.3% and 86.8%, respectively.

Radiographer 2 recorded 55 abnormal scans and was correct in 47 cases (Table 2). Radiographer 2 identified 39 significant abnormalities (Table 3) and 45 insignificant abnormalities (Table 4), and was correct in 18 and 19 of the cases, respectively. For detecting an abnormal scan (Table 5) and a significant abnormality (Table 6), radiographer 2 had accuracies of 89.5% and 48.6%, sensitivities of 96% and 52.9%, and specificities of 82.6% and 44.7%, respectively.
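Each reader's 2 × 2 table can be rebuilt from the counts reported in the two preceding paragraphs together with the consultant's totals: reader calls confirmed by the consultant are true positives, the remaining calls are false positives, consultant positives the reader missed are false negatives, and the rest of the negative pool is true negatives. For the significant-abnormality category the sketch assumes, as the reported percentages imply, that the consultant's 34 significant and 38 insignificant abnormalities form the positive and negative pools. The helper names are hypothetical. The reconstruction reproduces the reported percentages to rounding, except that radiographer 1's sensitivity for significant abnormality comes out as 21/34 ≈ 61.8% rather than the 61.6% given in the text.

```python
def table_from_counts(reference_pos: int, reference_neg: int,
                      reader_pos: int, reader_correct: int) -> dict[str, int]:
    """Rebuild the four 2x2 cells from the counts given in the Results."""
    tp = reader_correct                 # calls confirmed by the consultant
    fp = reader_pos - reader_correct    # calls the consultant did not confirm
    fn = reference_pos - tp             # consultant positives the reader missed
    tn = reference_neg - fp             # remaining reference negatives
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}


def pct(num: int, den: int) -> float:
    """Percentage rounded to one decimal place, as in the text."""
    return round(100 * num / den, 1)


def summarise(cells: dict[str, int]) -> dict[str, float]:
    tp, fp, fn, tn = cells["tp"], cells["fp"], cells["fn"], cells["tn"]
    return {
        "accuracy": pct(tp + tn, tp + fp + fn + tn),
        "sensitivity": pct(tp, tp + fn),
        "specificity": pct(tn, tn + fp),
    }


# Consultant (reference standard) totals from the Results.
ABNORMAL_SCANS = (49, 95 - 49)  # abnormal vs normal scans
SIGNIFICANT = (34, 38)          # significant vs insignificant abnormalities

# (calls made, calls confirmed) for each reader, from the Results.
readers = {
    "radiographer 1": {"scans": (47, 43), "significant": (26, 21)},
    "radiographer 2": {"scans": (55, 47), "significant": (39, 18)},
}

for name, calls in readers.items():
    scans = summarise(table_from_counts(*ABNORMAL_SCANS, *calls["scans"]))
    sig = summarise(table_from_counts(*SIGNIFICANT, *calls["significant"]))
    print(name, "abnormal scan:", scans, "significant:", sig)
```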

Radiographer 1 was accurate at detecting abnormality alone, but considerably less accurate than the consultant radiologist at detecting significant abnormality. Radiographer 2 was also accurate at detecting abnormality alone (indeed with an even greater sensitivity than radiographer 1), but was inaccurate at detecting significant abnormality compared with the consultant radiologist. Both radiographers showed poor accuracy in reading insignificant abnormalities, although radiographer 1 showed a reasonable PPV and good specificity (Table 7).

Discussion

The question of whether experienced radiographers can interpret films is not new; the earliest use of the “red dot” appears to date back to 1985 [5]. Since then, the role of radiographers has expanded into areas that were traditionally the province of the radiologist, such as ultrasonography. Over the last few decades, in South Africa as in the rest of the world, the workload in all medical specialities has increased. Clinical radiology has been no exception, and this has led to delays in reporting, an increased burden on residents, and situations where no radiologist is on site outside normal working hours, even though modalities such as CT are in use.

Our question, therefore, was whether radiographers could read CT accurately enough to support their working either as triage personnel or even as “overnight reporters” until a consultant radiologist reviews the films the following day. Our study is probably best regarded as an indirect comparison between radiographers and junior radiological staff. Of interest to both groups is that, if radiographers develop reporting skills comparable to those of radiologists, they may be able to relieve radiologists’ workload, reduce the waiting time for reports, free radiologists for more specialized tasks, and improve the job satisfaction of both professions [4, 8].

The significance of this study in our country is that it may help bring CT to areas not previously served by this modality. In South Africa we are continuously hampered by a lack of resources, particularly in rural areas, and in some cases what is lacking is qualified personnel rather than funds or equipment. The implication of this study is that sophisticated equipment might be used in settings not previously considered feasible, i.e. geographical areas not staffed by qualified radiologists, provided the technology is operated by personnel with a satisfactory level of accuracy in interpreting the modality. This would require regional radiologist cover, with scans reviewed by a consultant radiologist, but the potential benefit to emergency patient management is considerable.

In the emergency out-of-hours setting decisions are made quickly and are usually based on the opinions of junior staff. In South Africa, reporting emergency room films and, indeed, the CT scans of paediatric patients, is done by registrars of varying seniority, sometimes only in their second year of training. Keeping in mind that all radiologists make errors in reporting, and that even consultant radiologists differ from neuroradiologists in their opinion on individual diagnoses in 2% of significant abnormalities, the question is whether radiographer reporting of CT brain scans would be of an acceptable standard [1].

That junior radiologists generally err more than consultants is beyond doubt, yet these are the doctors responsible for decisions that change patient management in the out-of-hours period. Studies examining the accuracy of registrars in reading head CT scans have shown mixed results. Some have demonstrated a low overall disagreement rate with the consultant radiologist, of 2% or less for significant abnormalities [9–11]. However, the disagreement rate is significantly influenced by the registrar’s experience and training; moreover, abnormal scans yield a far higher disagreement rate of 12.2% [9, 10]. One study showed that registrars made a fair number of errors (21.5%), 10% of which were significant, although that study did not evaluate head CT scans alone [12]. Worldwide, these doctors appear to be integral to reporting CT scans in the out-of-hours setting.

A systematic review of studies of radiographer plain film reporting in the emergency setting has shown that radiographers report plain radiographs of the skeleton with a high level of accuracy, but this level is not maintained when they triage plain films of the face, skull, soft tissues, chest and abdomen [6, 7]. This suggests that body area (and therefore, possibly, modality) is a factor in these accuracy studies. The effect may be offset by training, as selected radiographers who have had training have been found to be more accurate than radiographers with limited training in triage or flagging-type systems [7]. With training, hand-picked radiographers have been shown to become as sensitive (but not as specific) as radiologists in detecting fractures on skeletal radiographs from the emergency department [8]. As mentioned previously, radiographers have also been shown to fare as well as emergency room doctors in detecting abnormalities on plain films, detecting a substantial proportion of the abnormalities detected by the doctors [5]. Despite this, it has been argued that radiographers lack the training and skill sets required to interpret the relevance of radiological findings, and that their reporting in clinical practice will therefore always remain within very restricted bounds [13].

The underlying rationale is that selected experienced CT radiographers can recognize patterns of abnormality that they have observed over many years.

Normal versus abnormal

Both radiographers in our study correctly classified about 90% (89.5%) of the scans as normal or abnormal. The sensitivities were 87.8% and 95.9% for radiographers 1 and 2, respectively, whilst the specificities were 91.3% and 82.6%, respectively. In practice, this means that radiographer 1 would correctly have alerted the referring physician, or referred the scan via teleradiology for further analysis, in 88% of cases with an abnormality (significant or insignificant). Radiographer 2 fared even better and would have done the same in 96% of cases. Radiographer 1, in particular, would have unnecessarily alerted the clinician or sent through only a small number of scans; although the specificity of radiographer 2 was lower, it was still reasonable. This has promising implications for the radiographers’ ability to act in a screening role.

Significant abnormality

Radiographer 1 had an accuracy of 75% in identifying the significant abnormalities. The sensitivity of radiographer 1 in identifying a significant abnormality amongst the abnormal scans was only 61.6%. (The specificity for this test for radiographer 1 was higher, 86.8%, but we decided it was less important here in light of the low sensitivity.) This simply means that one in every four children would either not have had a significant abnormality diagnosed when there was in fact one, or would have had a diagnosis of significant abnormality made when there was none. Moreover, and more importantly, 38.4% of children with a significant abnormality on their scan would not have had it diagnosed. This is of great interest to us, as it is upon the finding of these abnormalities that the referring clinician would most likely base management and prognosis. It therefore seems clear that radiographer 1 could not be employed in a diagnostic role as this would result in too many delays in definitive management.
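The two rates quoted in this paragraph are the complements of radiographer 1’s accuracy and sensitivity for significant abnormalities. Written out, with FN and TP denoting false-negative and true-positive readings:

$$
1 - \text{accuracy} = 1 - 0.75 = 0.25 = \tfrac{1}{4},
\qquad
\frac{\mathrm{FN}}{\mathrm{TP} + \mathrm{FN}} = 1 - \text{sensitivity} = 1 - 0.616 = 0.384 .
$$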

Radiographer 2 had an accuracy of 48.6% in reading significant abnormalities and a sensitivity of 52.9%. This accuracy is considerably worse than that of radiographer 1. Thus, although radiographer 2’s accuracy in detecting abnormal scans would be sufficient for a screening role, radiographer 2, like radiographer 1, lacked the sensitivity needed to decide safely whether an abnormality was significant.

Insignificant abnormalities

Although tested in our study, and of diagnostic importance overall, these abnormalities were specifically chosen as those that would not result in a change in management in the short term, e.g. in the out-of-hours emergency setting. They could be reviewed during the next working day and the referring clinician informed. In any case, the accuracy and sensitivity of both radiographers for these abnormalities were low.

It has been shown that the reporting skills of radiographers improve after training [8], and that the difference between registrars’ diagnoses and those of consultants becomes smaller with increasing seniority [9]. Our study was performed without any formal training in the detection of abnormalities. Reporting was performed on a tick sheet with a limited range of responses, which may have been of some assistance to the readers. It is realistic to assume that a similar tick sheet could be used in practice, and that formal training in the detection and diagnosis of significant abnormalities would take place should such a reporting system be introduced.

Conclusion

Limited resources constantly affect the provision of high-quality health care in South Africa. In some instances there is a shortage of skilled personnel rather than of technology. Our study shows that a selected, experienced radiographer with acceptable accuracy and sensitivity for detecting abnormalities on paediatric brain CT could play an effective screening role in outlying/rural areas or in tertiary centres. Clearly this practice would require input from senior radiology staff to ensure quality control, protection against litigation and maintenance of skills. At present, however, radiographers cannot diagnose significant abnormalities accurately enough to be the final diagnostician and will therefore need to have all abnormal scans reviewed before a final comment is made. We propose that this study be repeated after each radiographer has received formal training in the detection of significant abnormalities. It would also be of interest to assess the accuracy of registrars of varying seniority who have had formal training in reading paediatric brain CT scans.

References

1. Erly WK, Ashdown BC, Lucio RW II et al (2003) Evaluation of emergency CT scans of the head: is there a community standard? AJR 180:1727–1730

2. Lo RH, Chan PP, Chan LP et al (2003) Routine abdominal and pelvic ultrasound examinations: an audit comparing radiographers and radiologists. Ann Acad Med Singapore 32:126–128

3. Leslie A, Lockyer H, Virjee JP (2001) Who should be performing routine abdominal ultrasound? A prospective double-blind study comparing the accuracy of radiologist and radiographer. Clin Radiol 56:166–167

4. Brealey S, Scally A, Hahn S et al (2005) Accuracy of radiographer plain film reporting in clinical practice: a meta-analysis. Clin Radiol 60:232–241

5. Berman L, de Lacey G, Twomey E et al (1985) Reducing errors in the accident department: a simple method using radiographers. Br Med J 290:421–422

6. Renwick IG, Butt WP, Steele B (1991) How well can radiographers triage X-ray films in the accident and emergency department? Br Med J 302:568–569

7. Brealey S, Scally A, Hahn S et al (2006) Accuracy of radiographers red dot or triage of accident and emergency radiographs in clinical practice: a systematic review. Clin Radiol 61:604–615

8. Loughran CF (1994) Reporting of fracture radiographs by radiographers: the impact of a training programme. Br J Radiol 67:945–950

9. Erly WK, Berger WG, Krupinski E et al (2002) Radiology resident evaluation in the emergency department. AJNR 23:103–107

10. Wysoki MG, Nassar CJ, Koenigsberg RA et al (1998) Head trauma: CT interpretation by radiology residents versus staff radiologists. Radiology 208:125–128

11. Lal NR, Murray UM, Eldevik OP et al (2000) Clinical consequences of misinterpretations of neuroradiologic CT scans by on-call radiology residents. AJNR 21:124–129

12. Hillier JC, Tattersall DJ, Gleeson FV (2004) Trainee reporting of computed tomography examinations: do they make mistakes and does it matter? Clin Radiol 59:159–162

13. Donovan T, Manning DJ (2006) Successful reporting by non-medical practitioners such as radiographers will always be task specific and limited in scope. Radiography 12:7–12


Cite this article

Brandt, A., Andronikou, S., Wieselthaler, N. et al. Accuracy of radiographer reporting of paediatric brain CT. Pediatr Radiol 37, 291–296 (2007). https://doi.org/10.1007/s00247-006-0401-1
