Abstract

We study a simple technique, originally presented by Herlihy (ACM Trans. Program. Lang. Syst. 15(5):745–770, 1993), for executing concurrently, in a wait-free manner, blocks of code that have been programmed for sequential execution and require significant synchronization in order to be performed in parallel. We first present an implementation of this technique, called Sim, which employs a collect object. We describe a simple implementation of a collect object from a single shared object that supports atomic Add (or XOR) in addition to read; this implementation has step complexity O(1). By plugging in to Sim this implementation, Sim exhibits constant step complexity as well. This allows us to derive lower bounds on the step complexity of implementations of several shared objects, like Add, XOR, collect, and snapshot objects, from LL/SC objects.

We then present a practical version of Sim, called PSim, which is implemented in a real shared-memory machine. From a theoretical perspective, PSim has worse step complexity than Sim, its theoretical analog; in practice though, we experimentally show that PSim is highly-efficient: it outperforms several state-of-the-art lock-based and lock-free synchronization techniques, and this given that it is wait-free, i.e. that it satisfies a stronger progress condition than all the algorithms that it outperforms. We have used PSim to get highly-efficient wait-free implementations of stacks and queues.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Coping with the difficulty of concurrent programming has become urgent due to the wide spread of multi-core machines. One way to do so is by designing efficient concurrent data structures; common structures, like stacks and queues, are the most widely used inter-thread communication mechanisms, and therefore they are major building blocks of parallel software. Additionally, adequate mechanisms are required to efficiently execute, in a concurrent environment, those parts of modern applications that require significant synchronization. Although the efficient parallelization of these parts is not an easy task, Amdhal’s law [3] implies that achieving this is necessary to avoid significant reductions on the speed-up that can be achieved.

In this paper, we study how to implement a highly-efficient wait-free universal construction. Wait-freedom [19] ensures that each thread should finish the execution of the code block it wants to execute within a finite number of its own steps independently of the speed or the failure of other threads.

The universal construction that we implement follows a simple idea presented by Herlihy in [20] which is the following. A thread p starts by recording the request that it wants to execute in a shared structure that it owns. This structure additionally contains a toggle bit. A set of toggle bits, one for each thread, are stored as part of the simulated state. Based on the values of the toggle bits, p finds out which other requests are active and serves them by executing their code on a local copy of the simulated state. Finally, p tries to change a shared reference, stored in an LL/SC object, to point to this local structure. An LL/SC object supports the atomic operations LL (which returns the current value of the object) and SC. By executing SC with parameter v, a thread p i attempts to set the value of v to the object; this update occurs only if no thread has changed the value of the object (by executing SC) since the execution of p i ’s latest LL on it. If this attempt is successful, TRUE is returned; otherwise, SC returns FALSE. Thread p may have to apply these steps twice to ensure that its request has been served. An array containing n response values is also stored as part of the simulated state. Once p ensures that its request has been served, it finds its response value in the LL/SC object.

We start by presenting a simplified version of this universal construction, called Sim, which allows us to derive some theoretical results. In Sim, the announcement of the requests and the discovery of the active requests by each thread have been abstracted using a collect object. A collect object consists of n components A 1,…,A n , one for each thread, where each component stores a value from some set and supports two operations update(v) and collect. When executed by thread p i , 1≤i≤n, update(v) stores the value v in A i ; collect returns a vector of n values, one for each component. We note that a collect object is not required to be atomic (see Sect. 2 for a description of the correctness condition that needs to be ensured by an implementation of a collect object). A snapshot object is an atomic version of a collect object.

We describe simple implementations of collect and snapshot objects using a single atomic Add (or XOR) object. An Add (XOR) object supports the operation Add (XOR) in addition to read; Add(O,x) adds some (positive or negative) value x to object O (XOR(O,x) computes O XOR v and stores it into O). These implementations exhibit constant step complexity (under the standard theoretical model of shared memory computation where even if the size of the Add object is large, an Add can be executed atomically as a single step). The step complexity of an (implemented) operation is the maximum number of steps performed by a thread in any execution to execute any instance of the operation. Using these simple implementations, one could get improved performance for several previously presented algorithms [2, 6, 23, 32].

By plugging in to Sim the implementation of collect discussed above, the step complexity of Sim becomes constant as well. Jayanti [24] has proved a lower bound of Ω(logn) on the step complexity of any oblivious universal construction using LL/SC objects; an oblivious universal construction does not exploit the semantics of the object being simulated. This lower bound holds even if the size of the base objects used by the universal construction is unbounded. One of the open problems mentioned in [24] is the following: “If shared-memory supports all of read , write , LL/SC , swap , CAS , move , Fetch&Add , would the Ω(logn) lower bound still hold?” Sim has constant step complexity and it uses a single Add (or XOR) object in addition to an LL/SC object, thus proving that the lower bound in [24] can be beaten if we use just a single Add (or XOR) object in addition to an LL/SC object. So, an Ω(logn) lower bound can be derived for the step complexity of any implementation of an Add, XOR, collect, or a snapshot object, from LL/SC objects.

Sim is an efficient wait-free implementation of the well-known combining technique [15, 17, 20, 29–31, 34]. Most of the previous implementations of this technique, including the algorithm presented in [29] (which we will call OyamaAlg from now on) and flat-combining [17], employ locks and therefore they are blocking (i.e. threads may have to wait for actions performed by other threads in order to make progress). Specifically, in those algorithms, a thread, called the combiner, holding a coarse-grained lock, serves, in addition to its own request, active requests announced by other threads while they are waiting by performing local spinning (and possibly periodical checking of the lock status).

We present a practical version of Sim, called PSim, which we have implemented and experimentally tested on a real shared memory machine. PSim uses an efficient implementation of the Add-based collect object. This allows a thread to read only the announcement records of those requests that are active improving upon the technique described in [20] where threads read all n such records. Moreover, in PSim each thread uses its own pool of structures to store the simulated state. In the recycling technique of [20], threads share the same pool which leads to a significantly higher number of cache misses. PSim validates not only whether the copied state is consistent (as does the validation mechanism in [20]), but also if the reference to the shared state has changed in the meantime. If the validation fails, PSim avoids performing unnecessary work. Moreover, PSim uses a simple backoff scheme to guarantee that a thread executing a request will help a large number of other active threads; in [20], the employed backoff scheme aims at reducing the contention in updating the LL/SC object.

We provide a detailed experimental analysis illustrating that PSim is highly-efficient in practice. Specifically, our experiments (Figs. 2–11) show that PSim outperforms several state-of-the-art synchronization techniques, both lock-based (like local spinning) and lock-free. Moreover, the performance of PSim is as good as that of the best-known implementations [17, 29] of the combining technique, and in some cases even better than them. More specifically, we experimentally compare PSim with OyamaAlg [29], flat-combining [17], CLH spin locks [11, 26], and a simple lock free technique. Our experiments (Fig. 2) show that PSim outperforms all these algorithms in several cases. It is worth pointing out that this is so given that PSim is wait-free whereas all other techniques ensure only weaker progress properties.

Herlihy [20] starts by presenting a lock-free version of the universal construction where each thread does not help requests initiated by other threads. It rather performs LL on the reference to the simulated state, copy the state locally and apply its SC; these actions are applied repeatedly until the SC succeeds. Experiments presented in [20] show that Herlihy’s wait-free implementation does not perform well in comparison to this lock-free version and a Test-And-Test-And-Set spin lock (with backoff). In contrast, PSim proves that the common belief that ensuring wait-freedom is too expensive to be practical is in many cases wrong.

We have used PSim to design new highly-efficient wait-free implementations of common concurrent data structures like queues and stacks. We experimentally establish that our stack implementation, called SimStack, outperforms most well-known previous shared stack algorithms, like the lock-free stack implementation of Treiber [33], the elimination back-off stack [18], a stack implementation based on a CLH spin lock [11, 26], and a linked stack implementation based on flat-combining [17]. Similarly, our queue implementation, called SimQueue significantly outperforms the following previous queue implementations: a lock-based algorithm [28] which uses two CLH locks [11, 26], the lock-free algorithm presented in [28], and the implementation using flat-combining provided by Hendler et al. [17].

We formally prove that our implementations are linearizable. Linearizability ensures that in any execution α of the implementation, each completed operation op (and possibly some of the uncompleted operations) on the simulated shared object executed in α appears to take effect, instantaneously, at some point, called the linearization point of op, in its execution interval.

P. Fatourou and N.D. Kallimanis have presented in [12] a family of wait-free, adaptive, universal constructions, called RedBlue. The first algorithm (F-RedBlue) has step complexity O(min{k,logn}), where k is the interval contention, i.e. the maximum number of threads that are active during the execution interval of any operation; the second (S-RedBlue) uses smaller objects than F-RedBlue and has step complexity O(k). Sim is much simpler than F-RedBlue and S-RedBlue, uses significantly less objects and has better step complexity. Two additional adaptive RedBlue universal constructions [12] (LS-RedBlue and BLS-RedBlue) coped with large objects (i.e. objects that need a large amount of storage to maintain their state). These algorithms combined some of the techniques described by Anderson and Moir [5] with the techniques of the RedBlue family to get the best of both worlds. Using Sim we can obtain simpler versions of these algorithms which would perform the same number of shared memory accesses as LS-RedBlue and BLS-RedBlue, but they would employ Ω(n) less LL/SC objects and would reduce the number of LL/SC instructions performed in any execution by a factor of Ω(logk) per operation.

A summary of known wait-free universal algorithms is presented in Table 1. Most of these algorithms, like that presented in [19], GroupUpdate [1], F-RedBlue [12], S-RedBlue [12], Sim, and PSim copy the state of the object locally and perform any updates on the local copy. In all these algorithms (other than PSim), we follow the common convention that the entire state is stored in a single object, and reading or writing this object can be done with a single shared memory access. In PSim, we count a cost of O(s) shared memory accesses to read the state of the object. The algorithms in [4], LS-RedBlue [12] and BLS-RedBlue [12] cope with large objects by assuming that the state of the object is stored in a sequence of consecutive blocks of size B each; each thread copies and updates locally only the blocks that are required in order to perform its operation (and the operations of the threads that it helps). IndividualUpdate copes with large objects by ensuring that threads agree on the updates they will perform on the data structure; then, they all apply the same operations directly on the shared data structure. Another universal construction, presented in [9], avoids making a local copy of the entire state of the object by combining ideas from [7] and [19]. The threads access directly the shared data structure by copying some of its words locally, performing updates on them, and writing back the updated versions of these words to the shared data structure. The algorithm uses O(n+s) registers, each of which stores sequence numbers that take unbounded values. The space required by all other algorithms is measured in terms of memory words. All algorithms presented in Table 1 are oblivious.

Based on their universal construction, Chuong et al. [9] built a wait-free implementation of a shared queue implemented as a semi-infinite array. This implementation allows a thread to perform an enqueue concurrently with a thread performing a dequeue. It also allows multiple threads to perform dequeue operations simultaneously if the queue is empty. Actions are taken to ensure that synchronization is achieved between enqueuers and dequeuers. Similarly, SimQueue allows the enqueuers to work concurrently with the dequeuers. This is achieved in a structural way by employing two instances of PSim. This technique is of general interest and may be applicable to several other universal constructions as well. SimQueue is much simpler than the shared queue implementation presented in [9], although it implements a linked version of the shared queue. We remark that in the lock free queue implementation and the two-locks implementation presented in [28], enqueuers and dequeuers also operate independently.

Recently (after the publication of the conference version of this paper), P. Fatourou and N.D. Kallimanis have published in [15] blocking implementations of the combining technique that outperform PSim. Since PSim is wait-free, it ensures a much stronger progress property than those implementations.

The rest of this paper is organized as follows. Section 2 presents the model. Sim and PSim are presented in Sects. 3 and 4, respectively. The experimental evaluation of PSim is presented in Sect. 5. Section 6 presents the stack and queue implementations that are based on PSim. Finally, Sect. 7 provides a discussion and mentions some open problems.

2 Model

We consider an asynchronous system of n threads, p 1,…,p n , each of which may fail by crashing. Threads communicate by accessing shared objects. Each shared object has a state and provides a set of operations to each thread to read and modify its state; these operations may be executed by the threads concurrently.

The most basic shared object is a read-write (r/w) register R which stores a value from some set and supports two operations: read(R) which returns the current value of R, and write(R,v) which writes the value v into R and returns an acknowledgment. An Add object O supports in addition to read(O), the atomic operation Add(O,v) which adds the (positive or negative) value v to the value of O. Similarly, a XOR object O supports in addition to read(O), the atomic operation XOR(O,v) which computes the value O XOR v and stores it into O. An LL/SC object R stores a value from some set and supports the operations LL (which returns the current value of R) and SC. By executing SC(R,v), a thread p i attempts to set the value of v to R; this update occurs only if no thread has changed the value of R (by executing SC) since the execution of p i ’s latest LL on R. If the update occurs, TRUE is returned and we say that the SC is successful; otherwise, the value of R does not change and FALSE is returned. In this case we say that the SC operation fails. An LL/VL/SC object supports the operation VL in addition to LL and SC; when it is executed by some thread p, VL returns TRUE if no thread has performed a successful SC on O since the execution of p’s latest LL on O and FALSE otherwise. A CAS object O supports in addition to read(O), the operation CAS(O,u,v) which stores v to O if the current value of O is equal to u and returns TRUE; otherwise the contents of O remain unchanged and FALSE is returned.

An active set is a shared object that identifies a set of threads participating in some computation; it supports the operations (1) join which is called by a thread to add itself to the set of participating threads, (2) leave to request removal from the set of participating threads, and (3) getSet which returns the set of the currently participating threads.

A collect object consists of n components A 1,…,A n , one for each thread, where each component stores a value from some set and supports two operations update(v) and collect. When executed by thread p i , 1≤i≤n, update(v) stores the value v in A i ; collect returns a vector of n values, one for each component. The vector returned by each collect Col must contain, for each component A i , the value written by the last update U on A i by p i that has finished its execution before the invocation of Col (or the initial value if such an update does not exist), given that p i has not started the execution of a new update U′ in the interval between the end of U and the end of Col. If p i has started the execution of a new update U′, then Col may return either the value of U′ or that of U for A i . Moreover, an instance of collect G which has started its execution after the response of some other instance of collect G′, must return, for each component A i , either the same value v as that returned by G or some value v′ that has been written into A i by an update U′ which has started its execution after the response of the update U that writes into A i the value v read by G. A similar correctness property must be ensured by any implementation of an active set.

A universal object simulates any other concurrent object. It supports an operation, called ApplyOp(Request req), which simulates the execution of any request (operation) req on the simulated state. ApplyOp returns a return value for req.

An implementation of a (high-level) object from base objects provides, for each operation of the simulated object and for each thread, an algorithm that uses the base objects to implement the operation. An implementation of a universal object is called oblivious if it does not exploit the semantics of the type of the simulated object.

A configuration C describes the system at some point in time; it is a vector containing one component for each thread storing its state and one component for each base object storing its value. At an initial configuration, the base objects contain initial values and each thread is in an initial state. A thread completes the execution of a step each time it executes an operation on a base object (i.e. a step consists of a single operation on a base object and possibly some local computation). An execution α is a sequence of steps executed by threads. A thread starts the execution of a (simulated) operation by invoking its algorithm and finishes it when it gets back a response; thus, for each instance of an operation that is executed in α, there is an invocation and a response. A thread is active at some configuration C, if it has executed the invocation of an instanceFootnote 1 op of an operation at C but it has not yet received the response for op; in this case, we also say that op is active at C. The execution interval of op is the part of the execution that starts with op’s invocation and ends with op’s response. An operation is completed in α, if there is a response for it in α.

An implementation is blocking if a thread may have to wait for some step taken by another thread. An implementation is wait-free if in each execution, each non-faulty thread finishes the execution of any instance of an operation within a finite number of its steps. Wait-freedom is the strongest non-blocking progress property since it guarantees that all non-faulty threads make progress in any execution. A weaker such property is lock-freedom, which ensures that in each execution, some thread makes progress, i.e. it finishes the execution of an instance of some operation within a finite number of steps.

The step complexity of an operation is the maximum number of steps that each thread performs to complete any instance of the operation in any execution. The step complexity of an implementation is the maximum between the step complexities of the operations of the simulated object. The interval contention of an instance of an operation in an execution α is the maximum number of threads that are active in the execution interval of this instance. The interval contention of an operation in an execution α is the maximum number of threads that are active in the execution interval of any instance of the operation in α. The total contention of an execution α is the total number of threads that have taken steps in α. If the step complexity of an implementation depends on the interval (or the total) contention of the simulated operations in each execution, and not on the total number of threads n, then the implementation is called adaptive. The space complexity of an implementation is determined by the number, the type, and the size of the base objects employed by the implementation.

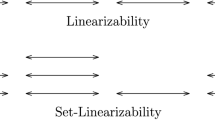

Let α be any execution. Linearizability [22] ensures that, for each instance op of any completed operation executed in α (and for some of the uncompleted operations), there is some point within its execution interval, called the linearization point, such that the response returned by op in α is the same as the response op would return if all operations in α were executing serially in the order determined by their linearization points. When this holds, we say that the response of op is consistent, and the order determined by the linearization points is a linearization order. An implementation is linearizable if all its executions are linearizable.

We remark that an implementation of a collect object does not have to be linearizable. A snapshot object is a linearizable version of a collect object (we then use the term scan instead of collect). A snapshot object is single-writer, if each component can be updated by a single thread, so it is only this thread that can apply update operations to the component. In multi-writer snapshots, each thread can execute update operations to any component.

3 The Sim Algorithm

In this section, we discuss the simple technique presented in [20], as implemented by Sim. Section 3.1 provides the pseudocode of Sim and its description. The correctness proof of Sim is presented in Sect. 3.2 (we remark that no formal arguments are presented in [20]). Section 3.3 focuses on efficient implementations of collect objects and active sets using Add. Section 3.4 discusses the space and step complexity of Sim. Finally, Sect. 3.5 describes lower bounds that are derived by Sim and by results presented in [24].

3.1 Description

Sim (Algorithm 1) uses an LL/SC object S and a collect object Col. The LL/SC object stores the simulated state st, a vector, called applied, of n bits with initial value 0, and an array rvals of n elements containing the return values. We remark that the size of S could be reduced to a single pointer using indirection (see Sect. 4 which describes how to build a practical version of Sim).

Each thread maintains a persistent local variable toggle i , initially 0, which it toggles each time it performs a new request. The collect object consists of n components, one for each thread. The ith component of Col stores the last request req i initiated by p i (or ⊥ if no such request exists) and a toggle bit toggle which stores the value contained in toggle i at the time that req i was initiated (or 0 if no such request exists). Whenever p i wants to perform some request req i , it first announces req i by updating component i of Col with the value 〈req i ,toggle i 〉 (line 1). It then toggles toggle i . Finally, p i executes a routine (line 3), called Attempt, to ensure that its request has been executed.

A request by p i is applied only if the toggle field of the ith component of Col differs from the ith bit of S.applied (lines 7, 9). In more detail, when p i wants to execute its first request req 1, it writes in the toggle field of the ith component of Col the value 1 (line 1). Each thread q that sees the value 1 in Col[i].toggle and 0 in S.applied[i], will apply req 1 on the copy of the simulated state that it works on. However, only one of them will succeed in updating S on line 12. This update changes S.applied[i] to 1 which identifies that req 1 has been applied. When p i initiates its second request, it changes the toggle field of the ith component of Col to 0, thus storing in it a different value than that of S.applied[i]; this indicates that a new request by p i has been announced.

When a thread p i executes Attempt, it first creates a copy of S (line 6) which contains the state of the simulated object. Then, it discovers which requests are currently active (line 7) by executing collect, and performs locally, one after the other, those of them that have not been applied yet (lines 8–11) by using its local copy ls of S. By doing so, it calculates a new state for the simulated object and a return value for each of the active requests (line 10); finally, Attempt attempts to write the value of ls into S by executing an SC (line 12). If, in the mean time, some other thread managed to replace S with its local copy of the simulated state, then p i ’s SC will not succeed and the actions it performed during the execution of the current loop of for (of line 8) will be discarded.

Recall that thread p i computes the return values for the requests that it attempts to perform and stores them in ls.rvals (line 10). We remark that ls.rvals contains return values for all active requests (and not only for those that p i attempts to perform) since all return values recorded in S are copied in ls by executing the LL of line 6.

The instance of Attempt executed by p i performs the above steps twice (lines 6–12) to ensure that its currently active request req i has been applied to the simulated object before the instance of SimApplyOp that is currently executed by p i responds. We remark that executing lines 6–12 just once is not enough. This is so since, if this was the case, there could be another request req j executed by some thread p j whose collect on line 7 occurred before the execution of the update with parameter 〈req i ,toggle i 〉 (line 1) and so it did not return req i for the ith component.Footnote 2 If the SC instruction, let it be SC 1, that was executed by req j on S was successful, it may have caused req i ’s SC on S to fail. In this case, p i would return without ensuring that its request has been served.

We finally explain why this problem is overcome if the instance of Attempt executed by p i performs lines 6–12 twice. Suppose process p i ’s first SC fails and let q be the process performing the next successful SC. Then, q’s LL occurs after p i ’s update completes, so q sees p i ’s request and performs it.

3.2 Proof of Correctness

In this section, we prove that Sim is linearizable. We start by introducing some useful notation. Fix any execution α of Sim. Assume that some thread p i , i∈{1,…,n}, executes m i >0 requests in α. For each j, 0<j<m i , let \(\mathit{req}_{j}^{i}\) be the argumentFootnote 3 of the jth invocation of SimApplyOp by p i and let \(\pi_{j}^{i}\) be the instance of Attempt executed by SimApplyOp when it is called with \(\mathit{req}_{j}^{i}\) as its argument. Let \(U_{j}^{i}\) be the last update executed by p i before \(\pi_{j}^{i}\) and let \(Q_{j}^{i}\) be the configuration just before the first step of \(U_{j}^{i}\); let \(Q_{0}^{i}\) be the initial configuration C 0 and let \(v_{j}^{i}\) be the value written by \(U_{j}^{i}\).

We first present the following lemma which is an immediate consequence of the pseudocode (lines 5, 6 and 12) and of the semantics of the LL/SC operation.

Lemma 1

Consider any j, 0<j≤m i . There are at least two successful SC instructions in the execution interval of \(\pi_{j}^{i}\).

The next lemma also follows from the pseudocode (lines 1 and 2). It states that \(U_{j}^{i}\) updates the ith component of Col with the value \(\langle \mathit{req}_{j}^{i}, j \mod 2 \rangle\) and this value does not change until the next update on the ith component starts its execution.

Lemma 2

For each j, 0<j≤m i , the following claims hold:

-

1.

\(v_{j}^{i} = \langle \mathit{req}_{j}^{i}, j \mod 2 \rangle\);

-

2.

no update occurs on the ith component of Col between the end of \(U_{j-1}^{i}\) (or C 0, if j=1) and \(Q_{j}^{i}\).

We next prove that, at the end of the execution of \(\pi_{j}^{i}\), it holds that S.applied[i] is equal to jmod2.

Lemma 3

Consider any j, 0<j≤m i . It holds that S.applied[i] is equal to jmod2 at the end of the execution of \(\pi_{j}^{i}\).

Proof

Assume, by way of contradiction, that S.applied[i]=1−(jmod2) at the end of \(\pi_{j}^{i}\). By Lemma 1, there are at least two successful SC instructions in the execution interval of \(\pi_{j}^{i}\). It follows that the last successful SC instruction that is executed in \(\pi_{j}^{i}\) writes 1−(jmod2) into S.applied[i]. Let SC x be this SC instruction, let LL x be the matching LL instruction of SC x , let p x be the thread that executes LL x and SC x , and let G x be the instance of collect executed by p x between LL x and SC x . Since the execution interval of \(\pi_{j}^{i}\) contains at least two successful SC instructions and SC x is the last one and it is successful, it follows that LL x follows the beginning of \(\pi_{j}^{i}\).

By the pseudocode (lines 1, 6 and 7), it follows that G x begins its execution after the end of the execution interval of \(U_{j}^{i}\). Thus, Lemma 2 implies that G x returns jmod2 for the toggle field of the ith component of Col. By the pseudocode (line 11), SC x writes the value jmod2 into S.applied[i] which is a contradiction. □

At C 0, S.applied[i] is equal to 0. If m i >0, Lemma 3 implies that at the end of \(\pi_{1}^{i}\), S.applied[i] is equal to 1. Let \(C_{1}^{i}\) be the first configuration between C 0 and the end of \(\pi_{1}^{i}\) at which S.applied[i] is equal to 1. Consider any integer 1<j≤m i . Lemma 3 implies that at the end of \(\pi_{j-1}^{i}\), S.applied[i] is equal to (j−1)mod2, whereas at the end of \(\pi_{j}^{i}\), S.applied[i] is equal to jmod2. Let \(C_{j}^{i}\) be the first configuration between the end of \(\pi_{j-1}^{i}\) and the end of \(\pi_{j}^{i}\) such that S.applied[i] is equal to jmod2; let \(C_{0}^{i} = C_{0}\). Obviously, \(C_{j}^{i}\) precedes the end of \(\pi_{j}^{i}\). Figure 1 illustrates the above notation.

By the definition of \(C_{j}^{i}\), it follows that just before \(C_{j}^{i}\) a successful SC on S is executed. Let \(\texttt {SC}_{j}^{i}\) be this SC instruction and let \(\texttt {LL}_{j}^{i}\) be its matching LL instruction. Denote by \(G_{j}^{i}\) the instance of collect that is executed between \(\texttt {LL}_{j}^{i}\) and \(\texttt {SC}_{j}^{i}\) by the same thread (line 7).

We continue to prove that \(G_{j}^{i}\) returns the value \(v_{j}^{i}\) written by \(U_{j}^{i}\) for thread p i . Moreover, we prove that \(\texttt {SC}_{j}^{i}\) is executed after \(Q_{j}^{i}\) (i.e. after the execution of the first step of \(U_{j}^{i}\)).

Lemma 4

Consider any j, 0<j≤m i . It holds that: (1) \(\texttt {SC}_{j}^{i}\) is executed after \(Q_{j}^{i}\), and (2) \(G_{j}^{i}\) returns \(v_{j}^{i}\) for the ith component.

Proof

Assume first that j=1. Then, \(\texttt {SC}_{1}^{i}\) writes 1 to S.applied[i]; the code (lines 7, 11 and 12) implies that, in this case, \(G_{1}^{i}\) returns the value 1 for the toggle field of the ith component of Col. However, since the initial value of this field is 0, and \(U_{1}^{i}\) is the only update that is executed on the ith component between C 0 and the end of \(\pi_{1}^{i}\), it follows that \(G_{1}^{i}\) returns the value written to the ith component by \(U_{1}^{i}\). Thus, the execution of \(G_{1}^{i}\) ends after the beginning of \(U_{1}^{i}\), i.e. after \(Q_{1}^{i}\), and \(G_{1}^{i}\) returns \(v_{1}^{i}\).

Consider now any j>1. Suppose first that \(G_{j}^{i}\) starts executing before the beginning of \(\pi_{j-1}^{i}\). By the pseudocode, it follows that \(\texttt {LL}_{j}^{i}\) is executed before \(G_{j}^{i}\) and, by its definition, \(\texttt {SC}_{j}^{i}\) is executed after the end of \(\pi_{j-1}^{i}\). By Lemma 1, at least two successful SC instructions are executed in the execution interval of \(\pi_{j-1}^{i}\). It follows that \(\texttt {SC}_{j}^{i}\) is not successful, which is a contradiction. Thus, \(G_{j}^{i}\) starts its execution after the beginning of \(\pi_{j-1}^{i}\).

We continue to prove that the value v returned by \(G_{j}^{i}\) for the toggle field of the ith component of Col is not equal to \(v_{j-1}^{i}.\mathit{toggle}\). By definition, \(\texttt {SC}_{j}^{i}\) writes jmod2 to S.applied[i]. Then, by the code (lines 7, 11 and 12), it follows that \(G_{j}^{i}\) returns the value v=jmod2 for the toggle field of the ith component. By Lemma 2, \(v_{j-1}^{i}.\mathit{toggle} = (j-1) \mod 2\). Thus, \(v \neq v_{j-1}^{i}\).

By the code (lines 1–4), no update other than \(U_{j}^{i}\) is executed on the ith component between the end of \(U_{j-1}^{i}\) and the end of \(\pi_{j}^{i}\). Since \(G_{j}^{i}\) starts after the beginning of \(\pi_{j-1}^{i}\) (and therefore after the end of \(U_{j-1}^{i}\)), ends before the end of \(\pi_{j}^{i}\), and returns a value not equal to \(v_{j-1}^{i}\), it follows that \(G_{j}^{i}\) must return the value \(v_{j}^{i}\) written by \(U_{j}^{i}\). Therefore, the execution of \(G_{j}^{i}\) ends after the beginning of the execution of \(U_{j}^{i}\), and the same is true for \(\texttt {SC}_{j}^{i}\) which is executed after \(G_{j}^{i}\). □

We next prove that the value of S.applied[i] remains the same between \(\texttt {SC}_{j-1}^{i}\) and \(\texttt {SC}_{j}^{i}\).

Lemma 5

Consider any j, 0<j≤m i . At each configuration C following \(C_{j-1}^{i}\) and preceding \(C_{j}^{i}\), it holds that S.applied[i]=(j−1)mod2.

Proof

By definition of \(C_{1}^{i}\), no successful SC writes the value 1 to S.applied[i] between C 0 and \(C_{1}^{i}\). Thus, the claim follows for j=1. So, assume that j>1. By definition of \(C_{j}^{i}\), no successful SC writes the value jmod2 to S.applied[i] between the end of \(\pi_{j-1}^{i}\) and \(C_{j}^{i}\). Assume, by way of contradiction, that there is some configuration between \(C_{j-1}^{i}\) and the end of \(\pi_{j-1}^{i}\) such that S.applied[i] is equal to jmod2. Let C x be the first of these configurations. Since only SC instructions change the value of S, there is a successful SC instruction, SC x , which occurs just before C x and writes the value jmod2 to S.applied[i]. Let LL x be the matching LL instruction to SC x , let π x be the instance of Attempt that executes SC x , and let G x be the instance of collect executed by p x between LL x and SC x .

We continue to prove that the value v returned by G x for the toggle field of the ith component of Col is not equal to \(v_{j-1}^{i}\). By definition of SC x , SC x writes the value jmod2 into S.applied[i]. Then, by the code (lines 7, 11 and 12), it follows that G x returns a value v=jmod2 for the toggle field of the ith component. By Lemma 2, \(v_{j-1}^{i}.\mathit{toggle} = (j-1) \mod 2\). Thus, \(v \neq v_{j-1}^{i}\).

Since SC x is successful, LL x must have occurred after \(C_{j-1}^{i}\). Since G x occurs between LL x and SC x , and \(U_{j-1}^{i}\) is executed before \(\pi_{j-1}^{i}\), G x occurs after the end of the execution of \(U_{j-1}^{i}\). Since no other update occurs on component i between the end \(U_{j-1}^{i}\) and the end of \(\pi_{j-1}^{i}\), it follows that G x returns \(v_{j-1}^{i}\) for the ith component, which contradicts our argument above that G x returns \(v \neq v_{j-1}^{i}\). □

We say that a request \(\mathit{req}_{j}^{i}\) is applied on the simulated state if there is some request req′ (that might be \(\mathit{req}_{j}^{i}\) or not) for which all the following conditions hold: (1) the last collect that is executed by req′ returns \(\langle \mathit{req}_{j}^{i}, \mathit{toggle} \rangle\) as the value of the ith component of Col, where toggle is a value different from the value returned by the last read on S.applied[i] (line 6) that is executed by the Attempt of req′ (so that line 10 is executed), and (2) the execution of the last SC of line 12 on S by req′ succeeds. When these conditions are satisfied, we sometimes also say that req′ applies \(\mathit{req}_{j}^{i}\).

We continue to prove that \(\mathit{req}_{j}^{i}\) is applied on the simulated object exactly once and this occurs just before \(C_{j}^{i}\).

Lemma 6

For each j, 0<j≤m i , \(\mathit{req}_{j}^{i}\) is applied exactly once.

Proof

By the pseudocode (lines 7, 8, 9, and 11) and by definition, it follows that when some request req initiated by p i is applied, there is some successful SC on S which toggles the value of S.applied[i]. Lemmas 3 and 5 imply that there should be at least one m>0 such that this SC is \(\texttt {SC}_{m}^{i}\). Since the requests initiated by p i are distinct, Lemmas 2 and 4 imply that \(\mathit{req}_{j}^{i}\) is applied if m=j. Thus, \(\mathit{req}_{j}^{i}\) is applied exactly once. □

We are now ready to assign linearization points. For each i∈{1,…,n} and 0<j≤m i , we place the linearization point of \(\mathit{req}_{j}^{i}\) at \(C_{j}^{i}\); ties are broken by the order imposed by threads’ identifiers.

Lemma 7

Each request \(\mathit{req}_{j}^{i}\), 0<j≤m i , is linearized within its execution interval.

Proof

Lemma 4 implies that \(\texttt {SC}_{j}^{i}\) follows \(Q_{j}^{i}\). By its definition, \(\texttt {SC}_{j}^{i}\) occurs before the end of \(\pi_{j}^{i}\). Thus, \(C_{j}^{i}\) is in the execution interval of \(\mathit{req}_{j}^{i}\). □

In order to prove consistency, we use the following notation. Denote by SC m , m>0, the mth successful SC instruction on S and let LL m be its matching LL. Obviously, between SC m and SC m+1, S is not modified.

Denote by α m , the prefix of α which ends at SC m and let C m be the first configuration following SC m . Let α 0 be the empty execution. Denote by L m the order defined by the linearization points (assigned as described above) of the requests in α m ; let L 0=λ, where λ is the empty sequence. We remark that S.st stores a copy of the simulated state at each point in time. Moreover, each thread applies requests on its local copy of the simulated state sequentially, one after the other. We say that S.st is consistent at C m if it equals the state resulting from executing the requests of α m sequentially in the order specified by L m , starting from \(\hat{s}\), the initial state of the simulated object.

Lemma 8

For each m≥0, (1) S.st is consistent at C m , and (2) L m is a linearization order for α m .

Proof

We prove the claims by induction on m.

Base case (m=0): The claims hold trivially. By the initialization of S, S.st contains \(\hat{s}\), which is the initial state of the simulated object, and α 0 is empty.

Induction hypothesis: Fix any m>0 and assume that the claims hold for m−1.

Induction step: We prove that the claim holds for m. By the induction hypothesis, it holds that: (1) S.st is consistent at C m−1, and (2) L m−1 is a linearization order for α m−1. Let req be the request that executes SC m . Assume that req applies j>0 requests on the simulated object. Denote by req 1,…,req j the sequence of these requests ordered in increasing order of the identifiers of the threads that initiate them.

Notice that req performs LL m after C m−1 since otherwise SC m would not be successful. By the induction hypothesis, S.st is consistent at C m−1. Thus, the local copy of S that is last stored by req in ls, represents a consistent state of the simulated object. Lemma 6 implies that req 1,…,req j are applied only once. This is realized when SC m is executed. Thus, none of these requests have been applied previously.

Given that the application of req 1,…,req j is simulated by the thread executing req sequentially, in the order mentioned above, starting from the state stored in ls, it is a straightforward induction to prove that (1) for each f, 0≤f≤j, a consistent response is calculated for req f , and the new state of the simulated object is calculated in a correct way in the local variable ls of the Attempt executed by req. Therefore, S.st is consistent after the execution of req’s successful SC. Notice that, by the way linearization points are assigned, L m =L m−1,req 1,…,req j . It follows that L m is a linearization order for α m . □

Theorem 1

Sim is a linearizable implementation of a universal object.

3.3 An Efficient Implementation of collect

We present an implementation of a collect object, called SimCollect, which uses a single Add object and has step complexity O(1). However, the size of the Add object it employs is large. In Sect. 4, we describe a practical version of SimCollect which has been used by PSim to achieve high performance and scalability.

Recall that a collect object consists of n components. Suppose that each of the components stores a value from some set D. Suppose that d is the number of bits that are needed for the representation of any value in D. SimCollect uses an Add object O of nd bits. O is partitioned into n chunks of d bits each, one for each thread. Thread p i owns the ith chunk of d bits, and stores there the value of the component that has been assigned to it. An update U with value v by p i first performs an Add to ensure that v is written into the ith chunk of O, and then stores a copy of v into a local variable v l ; this copy is maintained by p i to discover the appropriate value that should be added to the ith chunk of O during its next update (which will be the new value minus v l ). Whenever p i executes a collect, it simply reads the value stored in O and returns for each component the value stored in the corresponding chunk. It is apparent that the number of shared memory accesses performed by SimCollect is 1.

If the size b of an Add object is less than nd bits, then we can employ ⌈nd/b⌉ Add objects, O 1,…,O ⌈nd/b⌉. In this case, the value last written by p i is represented by the (i⋅dmodb)th chunk of O ⌈i⋅d/b⌉.Footnote 4 An update by p i adds an appropriate value to O ⌈i⋅d/b⌉, and collect reads every Add object once and returns the set of values written in the chunks. This version of the algorithm has step complexity 1 for update, and O(nd/b) for collect. Notice that this version is not linearizable (but linearizability is not necessary for implementations of collect objects). In case b≥nd, the implementation is linearizable; then, SimCollect can serve as a single-writer snapshot implementation.

We remark that the same techniques, as in SimCollect, can be used to get an implementation of an active set, called SimActSet, by using an Add object of n bits (one for each thread); this implementation has step complexity 1 if b<n, and ⌈n/b⌉ if b>n.

It is apparent that similar implementations of collect, snapshot and active set can be derived if a XOR object is used instead of an Add object.

3.4 Space and Step Complexity of Sim

The step complexity of Sim is O(sc), where sc is the step complexity of the implementation of the collect object it employs. If this implementation is SimCollect, Sim exhibits constant step complexity. In this case, it uses an Add object of nd bits and an LL/SC object of size O(n+s), where s is the size of the simulated state.

Theorem 2

By using SimCollect, the step complexity of Sim is O(1) and Sim uses one Add object of nd bits and one LL/SC object of size O(n+s).

3.5 Lower Bounds

Jayanti [24] has proved that any oblivious implementation of a universal object from LL/SC objects has step complexity Ω(logn). This lower bound holds even if the size of the LL/SC objects is unbounded. Sim is oblivious. So, the lower bound can be beaten if just one Add object (or a collect object) is used in addition to an LL/SC object. Thus, the following theorem holds:

Theorem 3

A lower bound of Ω(logn) holds on the step complexity of any implementation of (1) a single-writer snapshot object, (2) a collect object, (3) a XOR object, and (4) an Add object, from LL/SC objects.

4 From Theory to Practice

In this section, we discuss the techniques applied to Sim in order to port it to a real-world machine architecture, like x86_64. Applying these techniques leads to a practical version of Sim, called PSim. A simplified version of PSim is shown in Algorithm 2.

The information stored in structure StRec is now maintained using indirection; we employ recycling to reduce the space requirements. Each thread p i maintains a pool of two structures of type StRec. These pools are implemented by allocating an array Pool of type StRec which consists of n+1 rows of two elements each. Thread p i ’s pool is comprised by the ith row of Pool.Footnote 5 Variable S is now a pointer to one of the elements of Pool,Footnote 6 initially pointing to Pool[n+1][1] (where the (n+1)st row is used for initialization).

The collect object is implemented by a set of n single-writer read-write registers of type Request, called Announce, and a shared bit vector Toggles of n bits, one for each thread. A structure of type Request contains two fields, a pointer func which points to a function containing the code of the simulated operation, and an argument. Toggles is implemented using Add in a way similar to SimCollect. Specifically, when a thread p i initiates a new request, it toggles Toggles[i] by performing an atomic Add (lines 3–4). More specifically, Toggles is implemented as an integer (or as an array of ⌈n/b⌉ integers, if n is larger than the size b of an integer); to toggle bit i, p i atomically adds 2i or −2i to this integer (or 2imodb or −2imodb to Toggles[⌈i/b⌉], respectively). Initially, all bits of Toggles are equal to 0.

When p i wants to execute a request req, it announces it by writing req (and its parameters) in Announce[i] (line 2). Thread p i discovers the requests that other active threads want to perform by reading the appropriate entries of Announce (lines 14–16) based on the information read in Toggles and in the StRec record pointed to by S. This increases the step complexity of PSim but it decreases the size of the Add object which now stores n bits instead of nd bits that are used in SimCollect.

The VL instruction of line 11 guarantees that the copied state is consistent. A slow thread p j may read the state of the simulated object from some StRec record r while thread p i , which owns r, reuses this record. As a result, p j will read an inconsistent state. Notice that, in this case, if p j executed the SC instruction of line 18, it would fail. Still, p j would simulate locally the application of several requests, while executing lines 14–17, using an inconsistent state. Successful execution of the VL of line 11 guarantees that r has not yet been reused, so the state that was read is consistent. Additionally, the existence of the VL enhances the performance of PSim. Making a local copy of the state (line 10) is slow, since it usually causes one or more cache misses. In the mean time, another thread may have successfully updated the state of the simulated object. In this case, having p j executing lines 14–17 is useless and may cause cache misses due to the reads that are performed on the Announce array. The use of the VL ensures that this unnecessary overhead is avoided.

The majority of the commercially available shared memory machines support CAS rather than LL/SC. PSim simulates an LL on S with a read(S). An SC is simulated with a CAS on a timestamped version of S to avoid the ABA problemFootnote 7. Finally, VL is implemented by reading S and checking whether its timestamp has changed since the most recent previous LL executed by the same thread. In the real code S simply stores an index to Pool (and not a full 64 bit pointer), so there are enough bits (in our experiments 48) in a word to store the timestamp. In systems with more threads, we could use 128 bit words; we remark that x86_64 machines support 128 bit words.

The performance of PSim improves when a combining thread manages to help a large number of other threads while performing its request. For exploiting this property, we use an adaptive backoff scheme. A thread p i backoffs, after it has announced its request (line 5) and has indicated in Act that it is active. PSim does not use backoff for reducing the contention on accessing a shared CAS object, as it is usually the case in previous algorithms [20, 28]. It rather employs backoff in an effort to achieve a better combining degree. The backoff scheme of PSim is simple: it uses a single parameter which is a backoff upper bound (by default, the backoff lower bound is set to 1). During backoff, a thread executes t noop instructions (where t is initialized to 1). Each time a new request is initiated, t is re-calculated as follows. If the SC instruction (line 18) of this request succeeds, t is doubled (until it reaches its upper bound); otherwise t is halved until it reaches its lower bound. In Sect. 5, we discuss the impact of backoff in the performance of PSim.

The full source code of PSim is provided at http://code.google.com/p/sim-universal-construction/.

4.1 Proof of Correctness

The correctness proof of PSim is similar to that of Sim presented in Sect. 3.2. We follow the same notation as in Sect. 3.2 where we denote by \(U_{j}^{i}\) the jth Add executed by p i (line 3) and we consider the read of Toggles on line 13 of PSim as the analog of the collect executed on line 7 of Sim. Let \(v_{j}^{i}\) be equal to 1 if the argument of the jth Add by p i is positive and 0 otherwise. It is easy to see that, in this way, \(v_{j}^{i}\) plays the same role as \(v_{j}^{i}.\mathit{toggle}\) in the proof of Sim.

We focus on those parts of the proof of Sim that are different than those of the proof of PSim. We start with Lemma 1 whose proof is now simpler.

Lemma 9

Consider any j, 0<j≤m i . There are at least two successful SC instructions in the execution interval of \(\pi_{j}^{i}\).

Proof

We prove that during the execution of each iteration of the for loop of lines 8–17, at least one successful SC instruction is performed. If the iteration is completed on line 12 of the pseudocode, the VL instruction (line 11) returns 0. This implies that at least one successful SC instruction occurred between the LL of line 9 and the execution of VL on line 11. Suppose that the iteration executes the SC instruction on line 18. If this SC is successful, the claim follows. Otherwise, at least one successful SC instruction was performed between the execution of line 9 and line 18. □

We next present the analog of Lemma 2 of Sim. Its proof is a straightforward induction on j.

Lemma 10

For each j, 0<j≤m i , the following claims hold:

-

1.

\(v_{j}^{i} = j \mod 2\) (i.e. Toggles[i]=jmod2 after the execution of \(U_{j}^{i}\));

-

2.

no Add instruction executed between the end of \(U_{j-1}^{i}\) (or C 0, if j=1) and \(Q_{j}^{i}\) changes the ith bit of Toggles.

It is easy to prove a lemma similar to Lemma 3 for PSim. Its proof follows the same arguments as those in the proof of Lemma 3.

Lemma 11

Consider any j, 0<j≤m i . It holds that S→applied[i] is equal to jmod2 at the end of \(\pi_{j}^{i}\).

As in the proof of Sim, we let \(C_{1}^{i}\) denote the first configuration between C 0 and the end of \(\pi_{1}^{i}\) at which S→applied[i] is equal to 1, and we let \(C_{j}^{i}\) to be the first configuration between the end of \(\pi_{j-1}^{i}\) and the end of \(\pi_{j}^{i}\) such that S→applied[i] is equal to jmod2; let \(C_{0}^{i} = C_{0}\).

We continue to prove that no field of the structure pointed to by S may change its value as long as S points to it. Thus, S→applied[i] takes different values only by executing successful SC instructions on S. It follows that recycling does not cause any implication to the proof.

Lemma 12

Let SC 1 and SC 2 be two successful SC instructions on S such that no successful SC on S is executed between SC 1 and SC 2. Let v 1 be the value of the structure pointed to by S after SC 1. Then, the value of the structure pointed to by S is always v 1 between SC 1 and SC 2.

Proof

Let C 1 and C 2 be the configurations resulting from the application of SC 1 and SC 2, respectively. Let p i be the thread that executes SC 1. By the pseudocode (lines 10 and 18), it follows that S points to Pool[i][l], for some l∈{1,2} at C 1, so Pool[i][l]=v 1.

Assume, by way of contradiction, that there is a configuration C x between SC 1 and SC 2 at which Pool[i][l]≠v 1. By the pseudocode (lines 17 and 18), it follows that only p i can write to Pool[i][l]. Since p i ’s pool contains two structures and p i uses a different structure each time it performs a successful SC on S, it follows that p i can use Pool[i][l] again only if it performs a successful SC instruction between SC 1 and C x . However, this would contradict our assumption that no successful SC instruction is executed on S between SC 1 and SC 2. □

Lemma 12 implies that S→applied[i] takes different values only when successful SC instructions are executed on S. It follows that just before \(C_{j}^{i}\) a successful SC on S is executed. Let \(\texttt {SC}_{j}^{i}\) be this SC instruction and let \(\texttt {LL}_{j}^{i}\) be its matching LL instruction. Let \(r_{j}^{i}\) be the read of Toggles that is executed between \(\texttt {LL}_{j}^{i}\) and \(\texttt {SC}_{j}^{i}\) by the same thread (line 13) for the ith bit. The following two lemmas are the analogs of Lemmas 4 and 5 of Sim. Their proofs follow similar arguments as those of these lemmas.

Lemma 13

Consider any j, 0<j≤m i . It holds that \(r_{j}^{i}\) is executed after \(Q_{j}^{i}\) and reads jmod2 in Toggles[i].

Lemma 14

Consider any j, 0<j≤m i . At each configuration C following \(C_{j-1}^{i}\) and preceding \(C_{j}^{i}\), it holds that S→applied[i]=(j−1)mod2.

We say that a request \(\mathit{req}_{j}^{i}\) is applied if there is some request req′ (that might be \(\mathit{req}_{j}^{i}\) or some other request) for which all the following conditions hold: (1) the last read on Toggles that is executed by req′ returns a value for its ith bit which is different from the value returned by the last read on S.applied[i] (line 6) executed by the Attempt of req′, (2) the read on Announce[i] (line 16) by req′ returns \(\mathit{req}_{j}^{i}\), and (3) the execution of the SC of line 12 on S by req′ succeeds. If these conditions hold, we sometimes say that \(\mathit{req}_{j}^{i}\) is applied when the SC of line 12 on S by req′ is executed.

Following similar arguments as those in the proof of Lemma 6, we can prove that \(\mathit{req}_{j}^{i}\) is applied on the simulated object exactly once and this occurs just before \(C_{j}^{i}\).

Lemma 15

For each j, 0<j≤m i \(\mathit{req}_{j}^{i}\) is applied to the simulated object only once and this occurs just before \(C_{j}^{i}\).

Proof

By the pseudocode (lines 13, 15, and 17) and by definition, it follows that when some request req initiated by p i is applied, there is some successful SC on S which toggles the value of S.applied[i]. Lemmas 11 and 14 imply that there should be at least one integer m>0 such that this SC is \(\texttt {SC}_{m}^{i}\). We argue that \(\mathit{req}_{j}^{i}\) is applied when \(\texttt {SC}_{j}^{i}\) is executed. By Lemma 13, \(r_{j}^{i}\) is executed after \(Q_{j}^{i}\). By definition of \(C_{j}^{i}\) and by the pseudocode (lines 13 and 18), it follows that \(r_{j}^{i}\) is executed before the end of \(\pi_{j}^{i}\). By the pseudocode, it follows that the read of Announce[i] on line 16 by the instance of Attempt that executes \(\texttt {SC}_{j}^{i}\) occurs between \(Q_{j}^{i}\) and \(C_{j}^{i}\). Since \(\mathit{req}_{j}^{i}\) is active between \(Q_{j}^{i}\) and \(C_{j}^{i}\), this read returns \(\mathit{req}_{j}^{i}\). Thus, \(\mathit{req}_{j}^{i}\) is applied at least once when \(\texttt {SC}_{j}^{i}\) is executed. Since the requests initiated by p i are distinct, \(\mathit{req}_{j}^{i}\) is not applied any other time. □

We assign linearization points for PSim in the same way as we do for Sim. We can then argue, as in Sim, that PSim is linearizable. Thus, the following theorem holds for PSim.

Theorem 4

PSim is a linearizable implementation of a universal object.

4.2 Space and Step Complexity

PSim performs O(n+s) shared memory accesses. More specifically, the read of the StRec record which is performed on line 10, results in reading the array rvals of n return values, the bit vector applied which is stored in O(n/b) memory words, and the entire state of the object, i.e. s memory words. Moreover, the read of Toggles on line 13 requires O(n/b) additional shared memory accesses. The algorithm performs O(k) memory accesses to read the appropriate elements of Announce. Thus, the shared memory accesses performed by PSim is O(n+s). PSim uses a pool of O(n) records of type StRec, each of size O(n+s). The algorithm also employs a bit vector of size n and an array of n values. Thus, the space complexity of PSim is O(n 2+ns).

Theorem 5

PSim uses O(n/b) Add objects of size b bits each, one LL/SC object storing a single pointer, and O(n) read-write registers each of size O(n+s). The step complexity of PSim is O(n+s).

4.3 Making PSim Adaptive

In this section, we discuss how we could modify PSim in order to get an adaptive version of it in terms of both space and step complexity. The step complexity of PSim is determined based on the following: (1) Toggles is a vector of n bits, so a read on it (line 13) causes O(n/b) shared memory accesses, (2) each structure of type StRec contains the simulated state and a vector of n return values, so a read on it (line 10) causes O(n+s) shared memory accesses, and (3) Pool contains O(n) records. Below, we discuss how we can redesign each of these data structures to get an adaptive version of PSim in terms of both space and step complexity.

Herlihy, Luchangco and Moir present in [21, Algorithm 1], an adaptive implementation of a collect object. The step complexity of this implementation is O(k) for collect and O(1) for update, where k is the total contention. Its space complexity is O(k).

To avoid maintaining a vector of n bits, we can replace Toggles with the collect implementation of [21, Algorithm 1]. Whenever a thread p wants to perform a request, it calls update to write to its component the value of its bit and its request instead of recording its request on Announce (line 2) and executing an Add on Toggles to update the value of its assigned bit (line 3). The read of the bit vector performed on line 13 is replaced with a collect on the collect object. This collect returns the set of bits and the requests of all active threads.

Instead of storing an array of n return values, a set of records (one for each thread that has taken steps thus far) is maintained. Each of these records contain a return value, a thread identifier, and a toggle bit which is used to identify if a new request has been initiated by this thread. Whenever a thread executes a request for the first time, the set is updated with a record containing the thread’s id. The set can be trivially implemented as a linked list given that each thread works on its own copy of this list. By applying these techniques the size of structure StRec is reduced to O(k+s) and making a copy of it costs O(k+s).

By applying the two techniques discussed above, the step complexity of PSim becomes O(k+s). However, the space complexity is still a function of n since Pool still contains n+1 structures. Instead of allocating Pool statically at the beginning of the execution, each thread dynamically allocates its two records when it executes its first request. Instead of storing an array of n return values, each record stores a pointer which will point to the first element of the list of return values. Thus, the number of such structures that are allocated is 2k+1, each of size O(s). S is a pointer to one of the elements of Pool. When a thread makes a local copy of a structure pointed to by S, it should also make a local copy of the current list of return values which is pointed to by the appropriate field of S. Thus, the memory overhead for each thread is O(k+s). Therefore, by applying these techniques the space complexity becomes O(k(k+s)).

The collect implementation presented in [21, Algorithm 1] has the disadvantage that its step and space complexity is a function of the total contention since it cannot free the memory that is not used any more. The collect implementation presented in [21, Algorithm 2] could be used instead, the step and space complexity of which adapt to operation’s complexity. However, the last implementation uses primitives that are not supported by real machines. In case primitives are simulated using CAS instructions, the resulting algorithm would not satisfy the wait-freedom property.

5 Performance Evaluation

We run our experiments on a 32-core machine consisting of four AMD Opteron 6134 processors (Magny Cours). Each processor consists of two dies and each of them contains four processing cores and an L3 cache shared by its cores. Dies and thus processors are connected to each other with Hyper Transport Links creating a topology with an average diameter of 1.25 hops [10]. For the experiments presented here, we used the latest version of the code of PSim (version 1.2) [25]. All codes were compiled with gcc 4.3.4, and the Hoard memory allocator [8] was used to eliminate any bottlenecks in memory allocation. The operating system was Linux with kernel 2.6.18. Thread binding was used in order to get more reliable performance results; the i-th thread was bound to the i-th core of the machine. In this way, we exploited first multi-core, then multi-chip and then multi-socket configuration.

We first focus on a micro-benchmark which shows the performance advantages of PSim over well-known synchronization techniques. We have chosen to simulate a simple Fetch&Multiply operation as a case study; each algorithm has simulated the execution of 107 Fetch&Multiply requests for different values of n, where each thread initiated 107/n such requests. We measured the average throughput (i.e. the number of Fetch&Multiply simulated per second) that each algorithm has exhibited. Specifically, the horizontal axis of Figs. 2–11 represents the number of threads n, and the vertical axis represents the throughput (in millions of requests executed per second) that each synchronization technique has performed. For each value of n, the experiment has been performed 10 times and averages have been calculated. Between two Fetch&Multiply requests by the same thread, we have inserted a random number (up to 512) of dummy loop iterations in order to simulate a random work load large enough to avoid unrealistically low cache miss ratios and long runs; we remark that this load is not big enough to reduce contention. A similar technique is employed by Michael and Scott in [28] for the same reasons. The performance behavior of our algorithms for different values of random work (Fig. 6) will be discussed later.

We have performed this experiment to measure the performance of the following techniques: CLH spin locksFootnote 8 [11, 26], a simple lock-free algorithm with exponential back-off, flat-combining [16, 17], and OyamaAlg [29]. The simple lock-free algorithm uses a single CAS object O, and executes CAS on O repeatedly until it successfully stores the new value into it; the algorithm employs an exponential back-off scheme to reduce contention. We also implemented a version of PSim, called CAS-Sim, where Add is simulated in a lock-free way using a CAS object, as in the simple lock-free algorithm discussed above.

We carefully optimized these algorithms in our computing environment; for those that use backoff schemes, we performed a large number of experiments to select the best backoff parameters in each case. CLH spin locks and OyamaAlg have been evaluated for only up to 32 threads (so that each thread runs on a distinct core), since otherwise they result in poor performance. We used the flat-combining implementation provided by its inventors [16, 17] and we applied similar optimizations on its code as for that of PSim; we also carefully chose its parameters (i.e. polling level, number of combining rounds) to optimize its performance in our computing environment.

Figure 2 shows the results of our experiment. PSim has been proved to be up to 2.5 times faster than spin-locks, and up to 1.7 times faster than the simple lock-free algorithm. We remark that both PSim and flat-combining implement the combining technique, so we expect both of them to enjoy the performance benefits of this technique. However, flat-combining is blocking whereas PSim is wait-free. Since wait-freedom is expected to come with some overhead, our first goal was to design a wait-free implementation of the combining technique that performs as well as flat-combining (which is however blocking). Figure 2 shows that PSim achieves this goal and even performs slightly better than flat-combining; it exhibits up to 1.20 times better throughput than flat-combining for n=32. We remark that PSim achieves up to 1.38 times better performance than flat-combining in case the number of threads is greater than the number of processing cores (i.e. n=64 or n=96). Finally, PSim outperforms OyamaAlg by a factor of up to 1.9.

As illustrated in Fig. 2, when n>4, the simple lock-free algorithm causes a lot of contention and exhibits performance much worse than PSim or flat-combining. However, it behaves well for up to 4 threads since then all the communication occurs within the same die, which is much faster than achieving intra-communication between dies. As expected for queue-locks, the performance of CLH remains almost the same as n increases. For up to 96 threads, the performance of PSim and flat-combining is getting better as the number of n increases. This is so, since the average degree of combining that is achieved increases with the number of active requests in the system. We remark that this enhancement in performance is noticed even for values of n>32 where the processing cores are oversubscribed. In contrast, OyamaAlg achieves lower throughput. This is so since in OyamaAlg threads need to succeed on a CAS in order to have their requests announced; this causes a lot of contention and leads to a significant performance degradation. It is worth pointing out that PSim is at least 2 times faster than CAS-Sim which, not surprisingly, exhibits similar performance to OyamaAlg; in CAS-Sim, as in OyamaAlg, a thread repeatedly executes CAS to announce a request and therefore CAS-Sim faces a similar performance penalty for the announcement of the requests as OyamaAlg.

Figure 3 shows the average number of requests, called average combining degree, that are executed by the combiners in PSim and flat-combining. Specifically, to calculate the average combining degree of PSim, we add the number of requests that are applied each time a successful CAS on S is executed and we divide this sum by the total number of successful CAS instructions. As shown in Fig. 3, flat-combining achieves better combining degree in some cases. This is expected since no more than n requests (one per thread) can be applied in PSim, each time a CAS on S succeeds. In contrast, in flat-combining, a combiner may apply several requests of the same thread, since it may traverse the request list more than once. Because of this, it is reasonable to expect that flat-combining avoids moving cache lines between the processing cores which is good in terms of performance. However, the performance of flat-combining is not better than that of PSim, since the advantage in the achieved combining degree of flat-combining is counterbalanced by other performance factors that are discussed below. Moreover, as shown in Fig. 3, when the processing cores are lightly oversubscribed, the combining degree of PSim matches the combining degree of flat-combining. As shown in Fig. 2, this results in better performance for PSim.

Figure 4 shows the average number of failed CAS instructions executed per request. Notice that a large number of requests in PSim do not execute any unsuccessful CAS instructions; this is mainly due to the validation that is performed on line 11. So, the average number of unsuccessful CAS per request in PSim is very small. In contrast, this number is close to one (or larger) for all other algorithms. The large number of unsuccessful CAS instructions executed in flat-combining occur during the acquisition of the global lock. This results in a performance degradation. We remark that our efforts to overcome this problem by increasing the number of combining roundsFootnote 9 (which reduces the number of times the global lock is acquired) did not result in better performance. This was so because after the first few combing rounds, flat-combining spent a lot of time reading records of threads with no announced requests. To the contrary, PSim does not perform non-useful read operations.

Figure 5 shows the average number of atomic instructions (other than reads and writes) per request that each algorithm executes. For large values of n, PSim and flat-combining execute almost the same number of atomic instructions per request on average. For smaller values of n, flat-combining executes slightly less atomic instructions per request on average than PSim. However, all the atomic instructions executed in flat-combining are CAS instructions on a single shared variable that implements the global lock. In contrast, the atomic instructions that are executed by any request in PSim are not applied on the same base object (one of them is an Add and if there is any other it is a CAS on S). Moreover, the release of the global lock in flat-combining have not been taken into consideration in the diagram of Fig. 5 (since it is implemented with a write). However, these write instructions cause more contention on the global lock and additional cache misses.

It is worth pointing out that the failed CAS instructions may cause branch miss-predictions which are expensive since modern microprocessors usually have deep pipelines. Table 2 shows that flat-combining pays more (in cpu cycles) for stalls due to cache misses and branch miss-predictions.

In the experiment illustrated in Fig. 6, we study the behavior of the evaluated algorithms for different amounts of random work, i.e. for different numbers of dummy loop iterations inserted between the executions of two Fetch&Multiply by the same thread. We fix the number of threads to 32 and we perform the experiment for several different random work values (0–8192). Figure 6 shows that, for a wide range of values (64–2048), there are no big differences in the throughput exhibited by the evaluated algorithms. The reason for this is that for all these values the synchronization cost is the dominant performance factor. For small values of random work (0–64), the simple lock-free algorithm achieves unrealistically high throughput. The reason for this is that a thread can uninterruptedly perform thousands of Fetch&Multiply. This phenomenon is known as a long run; as discussed in previous work [28], such runs are unrealistic workloads. A similar behavior, but in smaller scale, is observed in flat-combining. In cases that the random work is too high (greater than 4096), the throughput of all algorithms degrades and the performance differences among them become minimal since the amount of random work becomes then the dominant performance factor.

Table 3 studies the throughput of PSim for different values of the backoff upper bound. The performed experiment is the same Fetch&Multiply experiment studied in Fig. 2, where n=32 and for a maximum workload of 512. The second row of Table 3 shows the throughput achieved by PSim for different values of the backoff upper bound (first row). Notice that the best throughput is achieved when the backoff upper bound is equal to 1000 dummy loop iterations. The third row shows by how much each of these values diverge from the optimal backoff upper bound value (of 1000) and line 4 shows the performance degradation in each case. Notice that the performance of PSim is very tolerant to overestimated values for the backoff upper bound. More specifically, even a value greater by 120 % than the optimal backoff upper bound causes a performance drop of just 12.5 %. However, PSim is less tolerant to smaller backoff values. Notice that a value for the backoff upper bound smaller by 40 % than the optimal causes a 22.7 % performance drop. Thus, if the amount of workload cannot be determined precisely, overestimated backoff upper bounds is the preferable choice.

Our next experiment, illustrated in Fig. 7, studies the performance of PSim and flat-combining (the best two algorithms in terms of performance) when n takes values larger than 96, i.e. when a large number of threads are active and the system is heavily oversubscribed. The active threads execute 107 Fetch&Multiply in total, as in the previous experiments. Figure 7 shows that both PSim and flat-combining scale well up to thousands of threads.

Figure 8 illustrates how PSim performs in cases where an application initiates a large number of threads from which only a small percentage are active, at any given point in time. Specifically, we consider systems where the total number of threads ranges from 128 to 1024 and only 10 % of them are active at each point in time. The active threads execute 107 Fetch&Multiply in total, as previously. We remark that this experiment is in favor of flat-combining: PSim requires to read all n bits of the Add object and copy locally n return values independently of how many of the threads are active, whereas in flat-combining inactive threads cause no overhead. Figure 8 shows there is indeed a small performance advantage (by a factor of 1.05) of flat-combining in this case. In order to discover the main overheads of PSim in this case, we have implemented an additional version of it where no return values are calculated. Figure 8 shows that the calculation of the return values is indeed an expensive part of the computation performed by PSim. This shows that the overhead of having each thread performing an Add per request does not cause any significant overhead even for large values of n. We remark that in the implementation of several shared objects some of the simulated operations do not have a return value. For instance, a push on a stack or an enqueue on a queue, etc., do not require the calculation of a return value. We remark that in cases where the percentage of active threads is larger than 10 %, PSim achieves much better performance than that shown in Fig. 8.

We next explore in more detail the performance characteristics for the Add instruction. We compare the performance of SimActSet (discussed in Sect. 3.3) to that of a simple lock-free active set implementation that uses CAS objects to store a set of n bits, one for each thread. Specifically, as in SimActSet, the algorithm uses n/b CAS objects. Whenever a thread wants to apply a join (leave), it repeatedly executes CAS on the appropriate object until it succeeds to change its bit to 1 (0, respectively). getSet simply reads the CAS objects. An exponential backoff scheme is used to increase the performance of the lock-free implementation.

We executed 107 join and leave requests, and 107 getSet requests in total; each thread executed 107/n join or leave requests and 107/n getSet requests. We measured the average throughput exhibited by each technique. To study the scalability of our technique, we consider systems where the total number of threads is large, i.e. it ranges from 128 to 1024. Again, a random number of (up to 512) dummy loop iterations are executed between the execution of two requests by the same thread. Figure 9 illustrates that SimActSet outperforms the active set based on CAS by a factor of up to 1.7. This is due to the fact that getSet causes a small number of cache misses in SimActSet, whereas the repeated execution of a CAS results in a larger number of cache misses.

Several modern shared memory machines (e.g. those that employ the x86_64 architecture) include atomic Add and CAS instructions on up to 64 bit words in their instruction set. In order to cope with more than 64 threads efficiently, we have implemented the multi-word bit vector of Add (and CAS, for the lock-free algorithm) by storing its words to the minimum possible number of cache lines. In this way, getSet causes a minimum number of cache misses. Notice that the size of a typical cache line is usually 64 bytes; thus, a single cache line can store one bit for each of up to 512 threads. So, getSet causes more than one cache miss only if the number of threads is more than 512.