Abstract

We manipulated the visual information available for grasping to examine what is visually guided when subjects get a precision grip on a common class of object (upright cylinders). In Experiment 1, objects (2 sizes) were placed at different eccentricities to vary the relative proximity of the thumb and finger contact positions to the participant’s (n = 6) body in the final grip orientations, with vision available either throughout the movement or only for movement programming. Thumb trajectories were straighter and less variable than finger paths, and the thumb normally made initial contact with the objects at a relatively invariant landing site, but these consistent thumb first-contacts were disrupted without visual guidance. Finger deviations were more affected by the object’s properties and increased when vision was unavailable after movement onset. In Experiment 2, participants (n = 12) grasped ‘glow-in-the-dark’ objects while wearing different luminous gloves in which the whole hand was visible or the thumb or the index finger was selectively occluded. Grip closure times were prolonged and thumb first-contacts disrupted when subjects could not see their thumb, whereas occluding the finger resulted in wider grips at contact because this digit remained distant from the object. Together, the results were consistent with visual feedback guiding the thumb in the period just prior to contacting the object, with the finger more involved in opening the grip and avoiding collision with the opposite contact surface. As people can overtly fixate only one object contact point at a time, we suggest that selecting one digit for online guidance represents an optimal strategy for initial grip placement. Other grasping tasks, in which the finger appears to be used for this purpose, are discussed.

Introduction

Binocular visual guidance of the opposable thumb and finger when precisely grasping objects is credited as one of the most crucial developments in human evolution and as central to our success as a species (Napier 1961; Sakata et al. 1997; Castiello 2005). The act is traditionally conceived as comprising two temporally coordinated components (Jeannerod 1981): transporting the hand—or more specifically, the wrist—towards the object’s location (reach component); and opening the thumb and finger to a maximal aperture near the object before closing them together in a ‘pincering’ action onto optimal contact points for grip stability (grasp component). According to this ‘two-visuomotor-channel’ (2VMC) model (Jeannerod 1981)—and variants of it (Hoff and Arbib 1993; Paulignan et al. 1997; Meulenbroek et al. 2001; Rosenbaum et al. 2001; Mon-Williams and Tresilian 2001)—visual computation of the position, size and geometry of the goal object is transformed into motor commands that cause the opposing digits to move relative to each other as a functional grasping unit to achieve this. There are several alternative accounts. Among these is the suggestion that grasping constitutes a ‘two-digit-pointing’ (2DP) manoeuvre (Smeets and Brenner 1999), whereby the underlying transformation involves visual selection of the best contact positions on the target, followed by motor outputs that direct relatively independent movements of the thumb- and finger-tips to their different object end-points.

A problem with many grasping models is that they are primarily based on analyses of the spatiotemporal characteristics of the maximal digit opening at grip preshaping, which are largely the outcome of movement programming. A key reason for this is that data concerning features of thumb and finger closure in establishing initial object contact—the period during which visual guidance contributes importantly to end-point grip accuracy (Servos and Goodale 1994; Watt and Bradshaw 2000; Melmoth and Grant 2006; Melmoth et al. 2007; Anderson and Bingham 2010)—remain rather limited (see Castiello 2005).

Our interest in this issue was initially aroused by a common, but unexpected, finding in our previous work, in which subjects were required to ‘quickly and accurately’ grasp cylindrical objects (a familiar occurrence in both daily life and laboratory settings): on the great majority of trials, getting a grip did not end in a simultaneous pincering of the target, but in a ‘first-contact’ by one digit while the grasp was still closing (see Fig. 1). This raised the question of whether it was the thumb or the finger that usually made first-contact, and it alerted us to another general problem associated with visually guiding a two-digit grasp compared with a single-digit point: we can fixate only one position in space at a time. Do we, then, select a single digit to guide to its end-point while closing the grip, or do we adopt some alternative strategy, such as switching fixation rapidly between the two contact sites or focussing on the target’s centre of mass so that both end-points fall in equivalent regions of parafoveal vision? Our aim in the present study was to shed light on these issues by examining how the two digits are guided during precision grasping.

Grip profile. A typical grasping movement obtained from a normal subject in a previous study (Melmoth and Grant 2006). The grip aperture remained stable for ~400 ms prior to movement onset, opened to a peak grip at ~875 ms, then closed on the object. The open circle represents the moment of first-contact by one of the digits (causing the object to be displaced by >1 mm in 3D space); the filled circle represents contact by the second digit almost 100 ms later, so that the grip size now matched the target’s diameter (48 mm) just before it was lifted. Note: the profile is of ‘raw’ data, uncorrected for digit thickness, so that the aperture of ~48 mm at the beginning represents a combination of the distances between the marker centroids on the thumb and finger and their contacts with the 30-mm-diameter start button.

Wing and Fraser (1983) were among the first to do this. They compared the trajectories of the wrist, thumb and index finger during reaches to midline-presented cylindrical targets executed by a patient skilled in the use of a prosthetic hand. They found that when using her natural hand, both digits initially moved together in parallel to the wrist-target axis but that, in the second half of the movement, while the thumb maintained a relatively straight path to its contact site, the finger moved out and in to open and close the grip. Interestingly, the subject’s behaviour was the same when using her artificial hand, even though its mechanism required subordination of the wrist, by marked abduction, in order to maintain the directness of the thumb trajectory. Wing and Fraser (1983) argued that the thumb is visually monitored in order to provide a ‘line of sight’ for online guidance of the wrist/hand in the transport end-phase. Haggard and Wing (1997) provided support for this idea, by directly comparing the envelopes of spatial variability in the lateral deviations of the wrist, thumb and finger, from their start to end positions, during forward hand transport to grasp a cylindrical dowel. They showed that average trial-by-trial standard deviations in the trajectory of the thumb always decreased more markedly than the wrist as the movement progressed. Since noise in the motor system tends to accumulate with movement duration, Haggard and Wing (1997) argued that the improving positional accuracy of the thumb was likely the result of an ‘active control’ process based on visual feedback related to this digit. They did not, however, report whether a marked reduction in late variability comparable to that of the thumb also occurred for the finger.

Subsequent work employing a wider range of table-top object locations, sizes and/or orientations has supported the generality of straighter thumb compared to finger transport (Galea et al. 2001) and the relative invariance of end-point thumb versus wrist positions (Paulignan et al. 1997). In this latter study, however, the spatial variability of both the thumb and finger trajectories reduced markedly towards the end of repeated movements to each target, with no significant difference between the two at the presumed moment of object contact, thereby implying that each digit is under similarly active guidance. This finding accords with most grasping models. Moreover, Smeets and Brenner (1999, 2002) have argued that the directness of the thumb’s trajectory results from the fact that its contact position is more visible and closer to the subject’s body than that of the finger, the path of this latter digit being obliged to deviate outwards around the edge of the opposite object surface. That is, the differing thumb and finger trajectories are more a consequence of the differing orientations of their contact positions, as explicitly predicted by the 2DP model. In an experiment in which, for these reasons, subjects were deliberately constrained to pincer-grip midline-presented circular discs at an angle parallel to their frontal plane (i.e. with each digit-tip equidistant from the body), Smeets and Brenner (2001) obtained evidence supporting further predictions of 2DP: that the maximal lateral deviations of the two digits should occur at independent times in the movement, with the digit having the earliest maximal deviation taking a wider and more variable path. However, it was the thumb that satisfied these conditions, casting further doubt (e.g. Paulignan et al. 1997) upon its special role in transport guidance.

Here, we began by re-examining the kinematics of more natural reach-to-precision grasp movements directed at cylindrical (household) objects, in which we combined analyses of the amplitude and variability of the digits’ trajectories during the second half of the movement with aspects of the timing and variability of their placements at object contact. Targets were of two diameters, placed at one of four locations: one (near) position along the subject’s midline, as in most previous studies, or three (far) locations, −10° across the midline and +10° and +30° ipsilateral to it. This latter arrangement exploited the fact that final grip orientations vary systematically with target eccentricity (Paulignan et al. 1997), being almost parallel to the mid-sagittal axis, with the thumb contact site much nearer the body, at the −10° position, but near perpendicular to it, with near-equidistant thumb and finger contact sites (cf. Smeets and Brenner 2001), at the most peripheral (+30°) location. In other words, we varied target size and the relative visibility and closeness of the contact surfaces from trial to trial, to determine whether these factors differentially affected the actions of the two digits.

We then undertook more direct evaluations of the thumb as visual guide (TAVG) hypothesis via two different approaches. First, we had the participants perform the same movements, but without visual feedback during their execution. This was a direct test of a caveat raised by Haggard and Wing (1997, p. 287) about their own conclusions regarding the special role of the thumb which, to paraphrase, was that ‘if purely visual, [its relative directness] might vanish if movements are made without visual guidance, [whereas] if they persist, [its] role in hand transport must be caused by factors other than its convenient visibility’. Second, we examined the effects of selectively occluding vision of either the thumb or the finger during the movement. This provided an opportunity to test whether compromising the visibility of each digit had different (TAVG) or equivalent (2VMC, 2DP) effects on the visual feedback phase of getting a precision grip.

Materials and methods

Subjects in the experiments (n = 18) were young right-handed adults (aged 18–35 years) with normal or corrected-to-normal vision, stereoacuity of 40 arcsec or better (Randot stereotest, Stereoptical Inc., Chicago, USA) and no history of neurological impairment. Informed consent was obtained for participation, and the study was conducted in accordance with the Declaration of Helsinki and with the approval of the Senate Ethics Committee of City University London.

Experiment 1: Getting a normal grip

Hand movement recordings

Initial data were collected from 6 subjects who precision grasped cylindrical household objects of similar height (100 mm) but different width, a ‘small’ (2.4 cm diameter) glue-stick and a ‘large’ (4.8 cm diameter) pill-bottle, placed at one ‘near’ (25 cm) or three ‘far’ (40 cm) locations, at −10° across and at +10° and +30° uncrossed with respect to their midline start position. Standardized methods were used to record their hand movements (Melmoth and Grant 2006; Melmoth et al. 2007). Subjects sat at a table (60 cm wide by 70 cm deep) on which the goal objects were placed. Between the thumb and index finger of their preferred hand, they gripped a 3-cm start button situated along their midline, 12 cm from the table edge. Passive, lightweight infrared reflective markers (7 mm diameter) were placed on the wrist and on the tips of the thumb (ulnar side of nail) and finger (radial side of nail) of this hand, and on top of the centre of each object. Instantaneous marker positions were recorded (at 60 Hz) using three infrared 3D motion-capture cameras (ProReflex, Qualisys AB, Sweden), with a spatial tracking error of <0.4 mm. Subjects wore PLATO liquid crystal goggles (Translucent Technologies Inc., Toronto, Canada), the lenses of which were opaque between trials but became suddenly transparent when activated in conjunction with hand movement recording onset. Participants were instructed to reach out “as naturally and accurately as possible” to pick up the object using a precision grip, place it to the right, and return to the start position. Subjects performed 4 repeats of the 8 (2 sizes × 4 locations) trial combinations in a single block, with the objects presented in the same pseudo-randomized order.

In the second part, subjects performed a further block of identical trials without visual guidance. All methods were the same, except that the PLATO goggles opened for 1 s so that the subjects could programme their upcoming movement, but then closed, this being the ‘go’ signal for them to execute the grasp in the absence of online visual feedback.
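As an illustration of the Experiment 1 design, the sketch below converts each target site into table coordinates and builds one block of 32 trials (2 sizes × 4 locations × 4 repeats) in a fixed pseudo-random order. It is a minimal Python sketch, not the software used in the study: it assumes that distances and eccentricities are measured from the start button with positive angles to the right, and the location labels and the seed value are hypothetical.

```python
import math
import random
from itertools import product

# Object diameters (mm) and target sites as given for Experiment 1.
# Assumption: distances/eccentricities are measured from the start button,
# with positive angles to the right (uncrossed for a right-handed reach).
SIZES_MM = {"small": 24.0, "large": 48.0}
LOCATIONS = {  # hypothetical label: (distance_mm, eccentricity_deg)
    "near_0": (250.0, 0.0),
    "far_-10": (400.0, -10.0),
    "far_+10": (400.0, 10.0),
    "far_+30": (400.0, 30.0),
}


def target_xy(distance_mm, ecc_deg):
    """Polar target position -> (x lateral, y forward) table coordinates in mm."""
    theta = math.radians(ecc_deg)
    return distance_mm * math.sin(theta), distance_mm * math.cos(theta)


def build_block(repeats=4, seed=1):
    """All size x location combinations, each repeated `repeats` times, shuffled
    with a fixed (hypothetical) seed so every subject receives the same order."""
    trials = list(product(SIZES_MM, LOCATIONS)) * repeats
    random.Random(seed).shuffle(trials)
    return trials
```

A fixed seed is simply one way of giving every subject the same pseudo-randomized sequence; the same list could serve for both the Full Vision and the No Vision blocks.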

Analysis of grasping data

Conventional dependent measures of the movement kinematics were determined for each trial. Those largely reflecting movement programming were: the reaction time (from the ‘go’ signal to movement onset, when the wrist marker first exceeded a velocity of 50 mm/s); the peak velocity of the reach and the time to peak velocity; and the peak grip aperture between the two digit-tips at grip pre-shaping and the time to peak grip. Measures of movement execution were: the overall movement duration (from onset to initial object contact, when the target was first displaced by ≥1 mm in 3D space); the grip closure time from peak grip to initial object contact; and the grip size and the velocity at contact (the latter determined from the wrist marker). Grip aperture data were corrected for differences in digit thickness between participants, as in our previous work (Melmoth and Grant 2006; Melmoth et al. 2007).
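The definitions above can be made concrete in a short sketch. It assumes NumPy, marker arrays in millimetres sampled at 60 Hz with the ‘go’ signal at a known frame (frame 0 by default), and a subject-specific digit-thickness correction supplied as a single hypothetical offset (`thickness_mm`). Velocities are estimated by central differences, which is our choice here and not necessarily the filtering used in the original analysis.

```python
import numpy as np

FS_HZ = 60.0  # capture rate reported in the text


def speed(pos_mm, fs=FS_HZ):
    """Tangential speed (mm/s) of an (n_frames, 3) marker trajectory."""
    return np.linalg.norm(np.gradient(pos_mm, 1.0 / fs, axis=0), axis=1)


def first_frame(mask, what):
    """Index of the first True element of a boolean array."""
    if not mask.any():
        raise ValueError(f"no frame satisfies the {what} criterion")
    return int(np.argmax(mask))


def kinematics(wrist, thumb, finger, obj, go_frame=0, fs=FS_HZ,
               onset_mm_s=50.0, contact_mm=1.0, thickness_mm=0.0):
    """Standard reach-to-grasp measures from (n_frames, 3) marker arrays in mm.
    thickness_mm is a hypothetical per-subject digit-thickness correction."""
    ms = 1000.0 / fs
    wrist_speed = speed(wrist, fs)
    onset = first_frame(wrist_speed > onset_mm_s, "onset")    # > 50 mm/s
    disp = np.linalg.norm(obj - obj[0], axis=1)
    contact = first_frame(disp >= contact_mm, "contact")      # >= 1 mm shift
    aperture = np.linalg.norm(thumb - finger, axis=1) - thickness_mm

    reach = slice(onset, contact + 1)
    pv_frame = onset + int(np.argmax(wrist_speed[reach]))
    pg_frame = onset + int(np.argmax(aperture[reach]))
    return {
        "reaction_time_ms": (onset - go_frame) * ms,
        "peak_velocity_mm_s": float(wrist_speed[pv_frame]),
        "time_to_peak_velocity_ms": (pv_frame - onset) * ms,
        "peak_grip_mm": float(aperture[pg_frame]),
        "time_to_peak_grip_ms": (pg_frame - onset) * ms,
        "movement_duration_ms": (contact - onset) * ms,
        "grip_closure_ms": (contact - pg_frame) * ms,
        "grip_at_contact_mm": float(aperture[contact]),
        "velocity_at_contact_mm_s": float(wrist_speed[contact]),
    }
```

In the No Vision condition, `go_frame` would be the frame at which the goggles closed, since that event served as the ‘go’ signal.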

Additional measures of the trajectories of each digit-tip were determined from their forward and lateral deviations parallel to the table surface, relative to a direct line from their positions on the start button to their initial contact points on the objects. From these, we calculated the amplitude of maximal lateral deviation (LatDevmax) of the thumb and the finger on each movement, with positive values representing rightward deviations. Taking the shortest Euclidean path (i.e. with zero deviation) in moving each digit from its start to end positions can be considered ‘ideal’ with respect to optimizing energetic costs (Soechting et al. 1995). More pragmatically, however, these measures were chosen because they directly correspond to, or closely resemble, those computed by others in previous work. We also determined the spatial variability of the lateral deviation of each digit in the second half of the movement, as a measure of its active online guidance during this period (cf. Wing and Fraser 1983). For this, the lateral deviations of the thumb and finger in the recording frames corresponding to 6 normalized time-points in their movements (50, 60, 70, 80 and 90 %, and contact minus one frame) were obtained for each subject on each trial-type, with the approach variability of each digit expressed as the standard deviation of these measures at each time-point.
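A minimal sketch of these trajectory measures follows, assuming each digit’s path is supplied as an (n, 2) NumPy array of table-plane x–y positions running from movement onset to the initial-contact frame. Normalized time-points are rounded to the nearest frame and the across-trial standard deviation uses ddof = 1; both are assumptions rather than details given in the text.

```python
import numpy as np

NORM_POINTS = (50, 60, 70, 80, 90)  # % of movement duration, from the text


def lateral_deviation(xy):
    """Signed perpendicular distance (mm) of each sample of an (n, 2) path from the
    straight line joining its first (onset) and last (contact) samples.
    Positive values lie to the right of the direction of travel."""
    xy = np.asarray(xy, float)
    start, end = xy[0], xy[-1]
    d = (end - start) / np.linalg.norm(end - start)  # unit direction of travel
    rel = xy - start
    return d[1] * rel[:, 0] - d[0] * rel[:, 1]


def lat_dev_max(xy):
    """Signed deviation of largest magnitude and its time as % of the movement."""
    dev = lateral_deviation(xy)
    k = int(np.argmax(np.abs(dev)))
    return float(dev[k]), 100.0 * k / (len(dev) - 1)


def approach_samples(xy):
    """Lateral deviation at 50-90 % of the movement and at contact minus one frame."""
    dev = lateral_deviation(xy)
    last = len(dev) - 1  # index of the initial-contact frame
    idx = [int(round(p / 100.0 * last)) for p in NORM_POINTS] + [last - 1]
    return dev[idx]


def approach_variability(trials):
    """Across-trial SD at each normalized time-point for repeats of one trial type."""
    samples = np.array([approach_samples(t) for t in trials])
    return samples.std(axis=0, ddof=1)
```

The second value returned by `lat_dev_max` gives the timing of the maximal deviation as a percentage of movement duration, the quantity compared with the time of peak grip aperture in the Results.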

The digit making first-contact with the object was determined as the marker that was closest to the centre of the object (in the x–y plane) in the recording frame that defined initial contact (≥1 mm target displacement). This criterion was chosen because it is well above the positional noise level of our recordings and because we have shown empirically that target movements of this magnitude reliably occur when the object is first touched. Because both digits rarely made simultaneous contact (i.e. in the same recording frame), the moment of second-digit-contact was determined from the subsequent frame in which the marker on this digit reached minimum velocity, indicating that it had stopped moving (see Fig. 1) and that the grip was about to be applied. The thumb and finger positions at these moments of contact were also determined independently from the x- and y-coordinates of the marker on each digit relative to the marker on the centre of the target (which served as the origin), with the x-axis representing the object’s width or frontal plane and the y-axis its depth. From these coordinates, we confirmed that grip orientations varied systematically with target eccentricity (Paulignan et al. 1997) by calculating the angle formed by the digits’ opposition axis on the object relative to the frontal plane, where an angle of 90° indicated that the digits were aligned exactly perpendicular to this plane and 0° that they were parallel to it. Positional variability in the initial contacts of each digit along each of the two axes was also calculated, as the average of their standard deviations by trial-type in each subject.
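The contact measures can be sketched in the same way, with positions expressed relative to the object-centre marker as described above. The ±1 mm band used to score a ‘simultaneous’ contact is taken from the Fig. 4 criterion. The `end` argument of `second_contact_frame` (for example, the frame at which the object is lifted) is a hypothetical bound, because the text does not say how far after first-contact the search for the second digit’s velocity minimum extends.

```python
import numpy as np


def first_contact_digit(thumb_xy, finger_xy, centre_xy=(0.0, 0.0), tie_mm=1.0):
    """At the initial-contact frame, treat the digit nearer the object's centre as
    the one that displaced it; distances within +/- tie_mm count as simultaneous."""
    centre = np.asarray(centre_xy, float)
    d_thumb = np.linalg.norm(np.asarray(thumb_xy, float) - centre)
    d_finger = np.linalg.norm(np.asarray(finger_xy, float) - centre)
    if abs(d_thumb - d_finger) <= tie_mm:
        return "simultaneous"
    return "thumb" if d_thumb < d_finger else "finger"


def second_contact_frame(other_digit_speed, first_contact, end):
    """Frame after first contact (and before the hypothetical bound `end`) at which
    the other digit's marker speed is lowest."""
    window = np.asarray(other_digit_speed, float)[first_contact + 1:end]
    return first_contact + 1 + int(np.argmin(window))


def grip_angle_deg(thumb_xy, finger_xy):
    """Angle of the thumb-finger opposition axis relative to the frontal (x) axis:
    90 deg = perpendicular to the frontal plane, 0 deg = parallel to it."""
    dx, dy = np.abs(np.asarray(finger_xy, float) - np.asarray(thumb_xy, float))
    return float(np.degrees(np.arctan2(dy, dx)))


def contact_variability(positions_xy):
    """SD (ddof=1) of one digit's x and y contact positions over repeats of a trial type."""
    return np.asarray(positions_xy, float).std(axis=0, ddof=1)
```

Averaging the output of `contact_variability` over trial types would then give the per-subject positional variability described above.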

In general, the median values obtained for each dependent measure on equivalent (object size × location) trials in each subject were analysed by Huynh–Feldt corrected repeated measures analysis of variance. Post hoc tests were conducted with the Bonferroni adjustment for multiple pair-wise comparisons to examine the origin of main effects, or with the unadjusted least significant difference (LSD) test where the Bonferroni-adjusted comparisons did not explain a main effect. The significance level was set at p < 0.05. Departures from these procedures are indicated in the text.
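For a single within-subject factor, the ANOVA and its sphericity corrections can be sketched as follows; the factorial designs used here (e.g. digit × size × location) extend the same logic to interaction terms, which this sketch does not cover. The Greenhouse–Geisser epsilon is computed from the double-centred covariance matrix of the per-subject medians, and the Huynh–Feldt epsilon from the standard single-group formula, capped at 1. Corrected p-values would then be read from an F distribution with both degrees of freedom multiplied by epsilon (for example with SciPy’s `scipy.stats.f.sf`).

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA on an (n_subjects, k_levels) array of
    per-subject medians. Returns F, df_effect, df_error, GG and HF epsilons."""
    data = np.asarray(data, float)
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * ((data.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((data.mean(axis=1) - grand) ** 2).sum()
    ss_total = ((data - grand) ** 2).sum()
    ss_err = ss_total - ss_cond - ss_subj          # condition x subject residual
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    F = (ss_cond / df_cond) / (ss_err / df_err)

    # Greenhouse-Geisser epsilon from the double-centred covariance matrix
    S = np.cov(data, rowvar=False)
    H = np.eye(k) - np.ones((k, k)) / k
    Sc = H @ S @ H
    eps_gg = np.trace(Sc) ** 2 / ((k - 1) * np.trace(Sc @ Sc))

    # Huynh-Feldt epsilon (single-group form), capped at 1
    eps_hf = (n * (k - 1) * eps_gg - 2) / ((k - 1) * (n - 1 - (k - 1) * eps_gg))
    eps_hf = min(1.0, eps_hf)
    return float(F), df_cond, df_err, float(eps_gg), float(eps_hf)
```

With only two levels the sphericity assumption is trivially met, so both epsilons equal 1 and F reduces to the square of the paired t statistic.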

Results

Figure 2 shows examples of the digit trajectories during reach-to-precision grasps of the small and large objects at the two locations (−10° crossed, +30° uncrossed) for which differences in relative visibility of their contact sites and closeness of the thumb were the most extreme. Grip orientations rotated on the objects from near perpendicular (~75°) to the frontal plane to almost parallel to it (~30°) on completion of the respective movements. Formal analysis (see Table 1; Full Vision) showed that this was a main effect [F (3,15) = 141, p < 0.001] of target location, with the mean angle between the two digits being significantly different between all 4 positions (p < 0.01, for each comparison) and always greater for the small versus large object [F (1,5) = 326, p < 0.001] at each position.

Digit trajectories. Frame-by-frame positions of the thumb- (grey circles) and finger-tip (black circles) during movements of a right-handed subject to precision grasp the (a) small and (b) large objects (open circles) at the −10° crossed (left) and +30° uncrossed (right) positions. The view is from above, showing the forward (y-axis) and lateral (x-axis) movement components with respect to the initial digit locations on the midline start button. Dotted lines: the direct path from the start position of each digit to its initial object contact position; continuous arrows: the points of maximal lateral deviation in the digit’s trajectory; white squares: the moments of peak grip aperture. Note the straighter path taken by the thumb and the occurrence of late outward deviations (unfilled arrows) in its trajectory.

There were main effects of both digit [F (1,5) = 116, p < 0.001] and object position [F (3,15) = 4.4, p = 0.02] on the maximal deviation of the thumb and finger trajectories. Most strikingly, the thumb always deviated less on its path to the object (Fig. 2; Table 2), with an overall mean LatDevmax (+31.3 mm ± 1.8 SD) around half that (+57.1 mm ± 5.0 SD) recorded for the finger. The smaller effect of position resulted from greater deviation of both digits for movements to the most peripheral (+30°) compared to the near midline and crossed (−10°) locations. This effect further showed a tendency [digit × location interaction, F (3,15) = 3.2, p = 0.066] to be greater for the finger than the thumb, despite the more equal visibility and closeness of its contact surface on the peripherally located objects.

There was also a significant digit × object size interaction [F (1,5) = 6.9, p = 0.047] which, again, was due to wider finger than thumb deviations. Counter-intuitively, this interaction arose because the finger LatDevmax was greater when subjects grasped the small (+61.0 mm ± 6.7 SD) than the large diameter (+54.1 mm ± 5.6 SD) object, despite the usual effect of object size on peak grip aperture [small 69.0 mm ± 9.3 SD versus large 82.9 mm ± 9.5 SD; F (1,5) = 116, p < 0.001]. Further analyses revealed the explanation for this (Fig. 2, Table 2). Note that the LatDevmax for both the thumb and finger was in a rightward direction (yielding values of positive sign) and typically occurred early in the second half of the movement, at respective means of 55.4 % (±1.4 % SD) and 56.3 % (±1.5 % SD) of movement duration, significantly earlier than the moment of peak grip aperture at 61.2 % (±3.3 % SD) of the movement (unpaired t tests, both p < 0.01).

Inspection of individual trajectories revealed that, at the moment of peak grip, the thumb was still moving inwards, deviating outwards in the direction opposite to the finger only later in its trajectory (e.g. Fig. 2), when the finger was moving in towards its contact site. Such late thumb path changes have, it transpires, been observed before when subjects grip cylindrical objects, although they were not specifically commented on despite the expectation of a simple curved trajectory (see Schlicht and Schrater 2007, Figs. 1 and 3). These findings lead to two conclusions. First, they suggest that formation of the peak grip does not result from the two digits moving in opposite directions relative to each other, but is determined mainly by the outward deviation of the finger. Second, the finger LatDevmax reflects the extent to which this digit affords spatial clearance to the lateral edge of the target, with the wider deviation in the approach to the smaller object (the less stable of the two and relatively easy to knock over) probably occurring to avoid colliding with it.

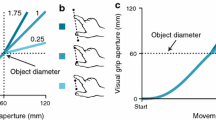

Approach variability. Average trial-by-trial standard deviations in the thumb (grey columns) and finger (black columns) trajectories at different points in the movements performed under a Full Vision and b No Vision conditions. Data are the averages across subjects in the variability of the lateral deviations of the thumb and finger, with end-1 representing the penultimate recording frame before each digit made contact with the object. Error bars, SEM

Despite the late direction reversal in the thumb’s path, its approach variability (average standard deviation) on repeated trials was significantly less than that of the finger [F (1,5) = 34.6, p = 0.002] (Table 2), and independent of object position or size. There was also a main effect of time [F (5,25) = 64.7, p < 0.001] on this parameter and a significant digit × time interaction [F (5,25) = 16.0, p < 0.001]. These effects (Fig. 3) occurred because the variability of both digits progressively reduced as they got closer to the target; whereas the thumb’s trajectory was much more consistent than the finger’s between 60 and 90 % of movement duration, the smaller difference present just before contact was not significant. Nonetheless, it was the thumb that made the great majority (81.2 %) of first-contacts with the objects (Fig. 4), with the tip of this digit much closer (by ≥10 mm) to the centre of the object on ~15 % of trials, a proportion similar to the combined total of finger first-contacts (10.4 %) and simultaneous pincer grips (8.4 %). Indeed, across all trials, the thumb contacted the objects ~40 ms before the finger. Average trial-by-trial standard deviations in thumb positioning at contact were also generally less than those of the finger (Table 1), with its reduced positional variability in the y-axis, or depth plane, of the objects almost achieving significance [F (1,5) = 5.2, p = 0.072].

The thumb as the digit of first-contact. Distribution of distances (in mm) across all trials (n = 192) by which the thumb (left side of histogram) or finger (right side of histogram) was closest to the centre of the target at the moment of initial object contact, defined as its displacement by ≥1 mm in 3D space (the closest digit was assumed to have caused this initial target displacement). Simultaneous ‘pincering’ by both digits (at 0 ± 1 mm, double arrow-head) occurred on a minority (~8 %) of trials

Since these findings were independent of final grip orientations, they appear most consistent with the TAVG. In support of this, while movements ending in finger first-contacts were equally distributed among the different trial combinations [χ2 = 10.4, df 7, p > 0.1], aspects of their kinematics (nonparametric, related-samples Wilcoxon signed rank test) differed from those in which the thumb was first to touch the object. Specifically, finger-first compared to thumb-first trials ended in higher velocity (115 mm/s ± 32 SD versus 82 mm/s ± 25 SD) and wider grips (57.1 mm ± 11.3 SD versus 44.8 mm ± 5.9 SD) at initial object contact (both p < 0.05), suggesting that they represented harder and less accurate ‘collisions’ with the object.
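The two nonparametric tests mentioned above can be sketched in plain Python. The chi-square function assumes equal expected counts across the 8 size × location combinations, which gives df = 7. The Wilcoxon function returns only the signed-rank sums W+ and W−, with average ranks for ties and zero differences dropped, and leaves the p-value to exact tables or a library routine such as `scipy.stats.wilcoxon`. Which units entered the Wilcoxon test (per-subject medians or individual trials) is not stated, so the paired inputs here are an assumption.

```python
def chi_square_uniform(counts):
    """Pearson chi-square statistic and df for observed counts tested against an
    equal expected count in every category."""
    total = sum(counts)
    expected = total / len(counts)
    chi2 = sum((o - expected) ** 2 / expected for o in counts)
    return chi2, len(counts) - 1


def wilcoxon_signed_rank(x, y):
    """Signed-rank sums (W+, W-) for paired samples x and y.
    Zero differences are dropped; tied |differences| receive their average rank."""
    d = [a - b for a, b in zip(x, y) if a != b]
    order = sorted(range(len(d)), key=lambda i: abs(d[i]))
    ranks = [0.0] * len(d)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of tied absolute differences
        while j + 1 < len(order) and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for m in range(i, j + 1):
            ranks[order[m]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    w_minus = sum(r for r, di in zip(ranks, d) if di < 0)
    return w_plus, w_minus
```

The smaller of W+ and W− is the usual test statistic for a two-sided comparison.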

Getting a grip without visual guidance

Comparisons of standard reach-to-grasp kinematics showed, as would be expected (e.g. Wing et al. 1986; Jakobson and Goodale 1991; Whitwell et al. 2008), that movements made without visual guidance were slower and less accurate than when vision was fully available (Table 3): movement durations and grip closure times were significantly prolonged, and subjects produced wider grasps at peak grip and at initial object contact. While mean final grip orientations (Table 1) were unaffected by view (i.e. Full versus No Vision), consistent with these end-postures being programmed in advance of movement onset (e.g. Rosenbaum et al. 2001), there were main effects of view for most other parameters of interest. More notably, given the Haggard and Wing (1997) caveat (see “Introduction”), the relevant view × digit interactions (Table 2) showed that neither the relative straightness nor the consistency of the in-flight thumb trajectory ‘vanished’ in the absence of online visual guidance (Fig. 3), whereas the average LatDevmax of the finger and its approach variability increased significantly or nearly so (by 22 and 36 %, respectively) compared with the Full Vision condition. By contrast, the lack of visual guidance mainly affected the thumb at the movement end-point, being associated with a major reduction in thumb first-contacts (to only 44 % of trials) compared with the Full Vision condition and marked (~3-fold) increases in its positional variability at contact in both object dimensions. These findings suggest that, in the No Vision condition, our subjects programmed a direct and invariant thumb path while they viewed the goal objects during movement preparation, incorporating a wider finger deviation designed to avoid colliding with them. They further suggest that, when vision is available, its main importance for active guidance of the thumb is in establishing accurate contact with the goal object.

Discussion

We examined previously unexplored aspects of the precision grasping of upright cylindrical objects, the positions of which were varied so as to systematically alter the relative visibility and closeness of the thumb and finger contact sites before (No Vision) as well as during (Full Vision) the movements. Getting a grip in the tasks examined clearly involved different actions of the thumb and finger. Three findings were of general significance, because they are contrary to the tenets of several contemporary grasping models and to casual assumptions routinely made in empirical studies of this behaviour. First, we found that the thumb and finger were usually moving in the same—not opposing—directions at the moment of peak grip aperture (see Fig. 2), indicating that this maximal opening of the digits is more an emergent property of their differing trajectories than a specific function of grip formation. Second, we show that the movements rarely ended in simultaneous pincering of the object by the opposing digits; the thumb usually made first-contact, with the finger arriving later (by ~40 ms, on average). In fact, consistent with this, a similar average delay in initial force production by the ‘leading’ and ‘following’ digits during the preloading phase of adult precision grip formation was demonstrated some time ago (Forssberg et al. 1991). Third, we show that when the same movements are made in the absence of vision, it was not each digit (or the ‘hand’) that opened wider to increase the safety margin (e.g. Wing et al. 1986; Jakobson and Goodale 1991; Whitwell et al. 2008), but mainly an increased deviation of the finger away from its side of the target.

Of more direct relevance to our main experimental questions, the thumb trajectory was always much straighter and less variable than the finger path. While these features of thumb transport were hardly affected by preventing its visual guidance, the reliability of its first-contact and initial positioning on the object was particularly disrupted in the No Vision condition, indicating that these resulted from a loss of ‘active control’ (e.g. Haggard and Wing 1997) when visual feedback was unavailable. There were even trends for the finger deviation to increase more than that of the thumb when participants grasped (1) the smaller diameter object, indicating that the finger movement was more influenced by this intrinsic object property, and (2) the most peripherally located (+30°) objects, for which relative differences in the visibility and proximity of the respective contact sites were least pronounced. We acknowledge, though, that our attempt to equalize the latter was not completely successful, since the thumb remained slightly nearer to the body in the final grip posture adopted by our subjects when grasping the peripherally located objects.

Experiment 2: Getting a grip without vision of the thumb or the finger

For this reason, we undertook a further perturbation experiment, in which we examined the consequences for precision grip formation of selectively removing online visual feedback from the thumb or from the finger. We reasoned that if the two digits are subject to differential active guidance, then selectively obscuring the thumb or the finger should have different effects on grasping performance, whereas if they are equally guided, the effects of the two perturbations should be equivalent. We tested this by requiring subjects to get a precision grip on luminous ‘glow-in-the-dark’ objects while wearing different cotton gloves impregnated with the same photo-luminescent paint. One glove was completely covered with paint so that the whole hand could be seen, whereas on two others either the thumb or the index finger was selectively blacked out.

The objects in this experiment were again two upright cylinders, of exactly the same dimensions (and similar weight) as those used in Experiment 1, but made of wood and painted so that they presented uniform surfaces to the subjects. The test objects were thus more akin to generic/neutral ‘dowels’ than to the ‘familiar’ household items, with possible functional or semantic associations, used in Experiment 1. However, before painting them, we carried out an extensive study (involving 10 participants) in which we compared binocular and monocular movements made to these two sets of objects, using a protocol and range of analyses similar to those of Melmoth and Grant (2006). Of the 24 kinematic and 11 ‘error’ parameters measured, not a single one showed a main effect of object category (unpublished data), consistent with evidence that, when the final aim is simply to lift the target or move it aside, the parameterization of grasping movements is determined by the object’s volumetric properties, not its functional identity (Kritikos et al. 2001; Valyear et al. 2011). Thus, the different objects used should not, in themselves, have influenced performance in the two parts of this study.

Materials and methods

Twelve right-handed subjects participated, each of whom completed 4 blocks of 24 trials. In these, they used a precision grip to grasp the two (2.4 and 4.8 cm diameter) objects covered in photo-luminescent acrylic paint (Glowtec, UK), placed at the same near-midline location and two just off-midline far locations as in Experiment 1, with 4 repetitions of each size × location combination. The first block was conducted under normal ‘Full Light’ laboratory conditions, in which vision of the whole painted gloved hand and of the objects was always available. This was followed by 3 blocks performed with the room lights dimmed while wearing the different gloves, that is, under the ‘Whole Hand’ (control), ‘No Thumb’ and ‘No Finger’ in-the-dark conditions. The order of these blocks was counterbalanced across subjects, who were necessarily aware in advance of the ‘view-of-hand’ condition under which they were about to perform. Spots of paint were applied to the table surface (at 10-cm intervals between the start and the 2 far locations) to add some pictorial cues (e.g. height-in-scene) to target position in the reduced light conditions, in which the luminance of the glove and target was generally between 0.5 and 1 cd/m². Other task procedures, and the methods of hand movement recording and data analysis, followed those of Experiment 1.
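The trial structure described above (2 object sizes × 3 locations × 4 repetitions = 24 trials per block; a Full Light block followed by 3 counterbalanced in-the-dark blocks) can be sketched as follows. The location labels, the full-counterbalancing scheme (cycling the 3! = 6 possible block orders, so that each is used by 2 of the 12 subjects) and the random shuffling of trials within a block are assumptions made for illustration, not a record of the schedule actually used.

```python
import itertools
import random
from collections import Counter

SIZES_CM = (2.4, 4.8)  # object diameters given in the text
LOCATIONS = ("near midline", "far crossed", "far uncrossed")  # hypothetical labels
REPEATS = 4
DARK_CONDITIONS = ("Whole Hand", "No Thumb", "No Finger")


def block_orders(n_subjects: int) -> list:
    """Block sequence per subject: Full Light first, then one of the 3! = 6
    orders of the in-the-dark blocks, cycled across subjects (assumed scheme)."""
    orders = list(itertools.permutations(DARK_CONDITIONS))
    return [("Full Light",) + orders[i % len(orders)] for i in range(n_subjects)]


def trial_list(rng: random.Random) -> list:
    """One block: every size x location combination repeated 4 times,
    in random order (within-block randomization is assumed)."""
    trials = [(size, loc) for size in SIZES_CM for loc in LOCATIONS] * REPEATS
    rng.shuffle(trials)
    return trials


schedule = block_orders(12)
print(set(Counter(s[1:] for s in schedule).values()))  # each dark-block order used equally often
print(len(trial_list(random.Random(1))))  # trials per block
```

With 12 subjects this scheme gives every in-the-dark order exactly twice, and each block contains 24 trials.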

The purpose of including the Full Light condition was to confirm that our subjects performed the task properly when allowed normal vision of their moving hand, the target and its immediate surroundings, and that removing this environmental information in the Whole Hand in-the-dark condition affected their performance only in the ways expected from similar work (Churchill et al. 2000). The mean kinematic data (see Table 4) indicated that these expectations were met: they replicated evidence that reducing the environmental context in the latter condition results in slower movements (e.g. reduced peak reaching velocity; longer movement durations) and in larger grip sizes at peak and at object contact, compared with normal performance in Full Light. The analyses presented here therefore focus on the main issue of possible differences between the 3 view-of-hand conditions in the dark. Finally, in response to a suggestion by a previous reviewer, the last 2 participants in the experiment completed a 5th trial block in which both the thumb and the index finger of the glove were blacked out. This ‘No Digit’ condition was intended to examine which, if any, of the effects on performance in the separate No Thumb and No Finger conditions might be additive. The data presented for this condition are restricted to the only parameter for which the effects appeared to be additive.

Results

As shown in Table 4, there were no main effects of the 3 view-of-hand conditions on early kinematic measures of the reach (peak velocity and its timing) or the grasp (peak grip aperture and its timing). Nor did view-of-hand affect the amplitude of the digits’ maximal trajectory deviations, trial-by-trial approach variability or the final grip orientations; as in our first experiment, the thumb always took a more direct and less variable route to the objects than the finger [both F (1,11) > 15, p ≤ 0.002]. Moreover, the LatMaxdev exhibited the same digit × location [F (2,22) = 4.8, p = 0.024] and digit × size [F (1,11) = 11.1, p = 0.007] interactions as before, owing to relatively wider deviation of the finger during movements to the uncrossed (+10°) position and to the smaller object. We conclude from this that participants programmed these movement parameters and outcomes in the same way in all three conditions, based on the identical views of the glow-in-the-dark objects available to them before they began to move. We further conclude that these parameters were unaffected by ‘anticipatory control’ that could have derived from the subjects’ advance knowledge that one of their digits would be visually occluded during the later feedback phase of the movement in some trial blocks.
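Approach variability of the kind analysed here compares a digit’s position across repeated trials at equivalent points in time. The following sketch assumes that each trial’s coordinate trace (along one axis) is time-normalized by linear interpolation, and takes the across-trial sample standard deviation at a chosen fraction of movement duration, such as the 90 % point; the function names and the interpolation scheme are assumptions for illustration.

```python
import statistics
from typing import List, Sequence


def value_at(samples: Sequence[float], fraction: float) -> float:
    """Linearly interpolate one coordinate of a trajectory at a given
    fraction (0 to 1) of movement duration."""
    pos = fraction * (len(samples) - 1)
    i = int(pos)
    if i >= len(samples) - 1:
        return float(samples[-1])
    w = pos - i
    return samples[i] * (1 - w) + samples[i + 1] * w


def approach_variability(trials: List[Sequence[float]], fraction: float) -> float:
    """Across-trial (sample) SD of a digit coordinate at a normalized time point."""
    return statistics.stdev([value_at(t, fraction) for t in trials])


# Made-up lateral positions (mm) of one digit on three trials
traces = [[0.0, 5.0, 8.0, 6.0, 2.0], [0.0, 6.0, 10.0, 7.0, 3.0], [0.0, 4.0, 9.0, 5.0, 1.0]]
print(round(approach_variability(traces, 0.9), 3))
```

Normalizing to a fraction of movement duration lets trials of different lengths be compared point by point; variability at late fractions reflects the online-guidance phase of the approach.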

Obscuring the thumb, however, significantly compromised components of the grasp during this online guidance phase. First, there was a 3-way view-of-hand × digit × time interaction [F (5,55) = 2.7, p = 0.02] on approach variability, owing to greater variability of the thumb than of the finger path at 90 % of movement duration and at the penultimate recording frame in the No Thumb condition. Second, participants selectively slowed down their final approach to the objects, producing prolonged grip closure times and more careful, low-velocity contacts with them (Table 4). Post hoc tests revealed that both effects were significant [p < 0.01] compared to the No Finger condition and were generally greater when subjects grasped the larger of the two objects without sight of their thumb (Fig. 5a), with this view-of-hand × size interaction reaching significance for the grip closure time [F (2,22) = 3.5, p = 0.047]. Finally, while the thumb (as in Experiment 1) always made the majority of first-contacts with the objects, there was a significant view-of-hand × digit interaction for this parameter [F (4,44) = 9.0, p = 0.002]. This occurred because, despite the slower approach, the proportion of thumb first-contacts was substantially reduced [p < 0.001] when vision of this digit was occluded (77.8 % ± 12.9 SD) compared to the Whole Hand condition (96.7 % ± 4.6 SD).
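Two of the measures above can be sketched directly. Grip closure time is taken here as the interval between peak grip aperture and initial object contact (as described in the General discussion), and the thumb first-contact rate as the proportion of trials in which the thumb touched the object strictly before the finger. The input format (per-frame apertures, a known contact frame, per-trial contact times) and the treatment of simultaneous contacts as not thumb-first are assumptions for illustration.

```python
from typing import Sequence


def grip_closure_time(aperture: Sequence[float], contact_frame: int, dt: float) -> float:
    """Time (s) from peak grip aperture to initial object contact.

    aperture: thumb-finger distance on each recorded frame (assumed input)
    contact_frame: index of the frame of first object contact (assumed known)
    dt: sampling interval in seconds
    """
    # Peak aperture is searched for only up to (and including) the contact frame
    peak_frame = max(range(contact_frame + 1), key=lambda i: aperture[i])
    return (contact_frame - peak_frame) * dt


def thumb_first_contact_rate(thumb_times: Sequence[float], finger_times: Sequence[float]) -> float:
    """Proportion of trials in which the thumb contacted the object strictly
    before the finger; simultaneous contacts count as not thumb-first (assumption)."""
    if len(thumb_times) != len(finger_times) or not thumb_times:
        raise ValueError("need matching, non-empty per-trial contact times")
    thumb_first = sum(t < f for t, f in zip(thumb_times, finger_times))
    return thumb_first / len(thumb_times)
```

Applied per trial and averaged per condition, these give the kind of grip closure times and first-contact percentages compared across the view-of-hand conditions.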

There was also a main view-of-hand effect on grip size at contact (Table 4), whereby preventing vision of the finger resulted in significantly wider grasps compared to the No Thumb condition [p = 0.046]. We interpret this effect as a relative loss of grip precision when the finger could not be seen, because it occurred regardless of object size (Fig. 5b), whereas any ‘improvement’ in accuracy resulting from slower grip closure when the thumb was occluded would be expected mainly for the large object. In support of this interpretation, there was a view-of-hand × digit interaction [F (1,11) = 5.5, p = 0.013] in positional variability at contact, which appeared (LSD test, p = 0.029) to be due to reduced consistency of thumb placement in the depth axis of the objects in the No Thumb (mean 6.6 mm ± 0.46) compared to the Whole Hand (mean 5.7 mm ± 0.89) condition. In either case, the effects of the two perturbations were clearly non-equivalent, with selective occlusion of the thumb causing most disruption to online control of the grasp.

Inspection of the limited data obtained in the ‘No Digit’ condition from the last 2 subjects revealed only one obvious additive effect: trial-by-trial positional variability of both initial thumb and finger placements increased by a factor of 1.33–1.5 compared with the separate No Thumb and No Finger trial blocks. This resembles a major consequence of removing all vision of the moving hand, relative to the Full Vision condition, in Experiment 1.
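The positional-variability measure and the ‘factor’ comparison in the preceding paragraph amount to a trial-by-trial standard deviation of initial contact positions along one axis, followed by a ratio between conditions. A minimal sketch, assuming depth-axis contact positions in mm; the function names are hypothetical and the numbers in the usage lines are invented purely for illustration, not taken from our data.

```python
import statistics
from typing import Mapping, Sequence


def placement_sd(depth_mm: Sequence[float]) -> float:
    """Trial-by-trial (sample) SD of initial digit contact positions along
    the object's depth axis, in mm."""
    return statistics.stdev(depth_mm)


def variability_factor(sd_mm: Mapping[str, float], condition: str, reference: str) -> float:
    """Factor by which positional variability in one condition exceeds
    that in a reference condition."""
    return sd_mm[condition] / sd_mm[reference]


# Invented values purely for illustration
print(placement_sd([10.0, 12.0, 14.0]))  # 2.0
print(variability_factor({"No Digit": 9.0, "No Thumb": 6.0}, "No Digit", "No Thumb"))  # 1.5
```

A factor of 1.5, as in this invented example, corresponds to the upper end of the range observed for the No Digit condition.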

General discussion

It is generally accepted that vision contributes to getting a precision grasp in three important ways: selecting the most suitable positions on the goal object for grip stability; helping to plan the optimal hand path so that collision with the object or any non-target obstacles is avoided; and guiding the thumb and finger to their pre-selected contact sites. How the underlying visuomotor transformations are implemented, however, remains a matter of widely differing opinion (e.g. see Smeets and Brenner 1999; commentaries by Various authors 1999). Some argue that the thumb and finger have essentially equivalent functions in grip formation and are visually guided either together (Jeannerod 1981; Hoff and Arbib 1993; Paulignan et al. 1997; Rosenbaum et al. 2001; Meulenbroek et al. 2001) or independently (Smeets and Brenner 1999, 2001, 2002), while others suggest that only the thumb is selected for active guidance (Wing and Fraser 1983; Haggard and Wing 1997; Galea et al. 2001). The data obtained from our two experiments are consistent with this latter suggestion.

It was notable that the relative directness and consistency of the thumb trajectories were little affected by removing all vision (Experiment 1) or by selectively occluding vision of this digit (Experiment 2) during the movement. This strongly suggests that the straight thumb path to the nearer side of the goal objects was programmed prior to movement onset. But the data further suggest that the relative visibility of this contact position was normally exploited to enhance online guidance of the grasp, since movements made without the benefit of any vision, or with the thumb occluded, resulted in selective increases in grip closure time and in positional variability of thumb placement, along with reductions in the proportion of first-contacts made by this digit.

We do not know where our participants were looking while performing the present tasks. However, we are currently combining gaze and hand movement recordings to monitor fixation patterns when subjects grasp a cylindrical target presented alone or with an obstacle nearby. Results obtained thus far indicate that, regardless of the target conditions, most subjects fixate the target’s centre of mass around the time of movement onset, but direct their gaze towards the ‘thumb side’ of the object in the later feedback period just before contact. As also inferred from our present experiments, anchoring gaze on or near the thumb landing site during grip closure could serve two purposes, both of which benefit from binocular compared with monocular vision (Servos and Goodale 1994; Watt and Bradshaw 2000; Melmoth and Grant 2006; Melmoth et al. 2007; Anderson and Bingham 2010): first, to guide the thumb to a ‘soft’ and accurate contact at this site (e.g. Johansson et al. 2001), itself a form of collision avoidance; and second, to provide feedback about the narrowing thumb-object depth for online movement corrections in preparation for grip application.

The programmed outward trajectory of the finger, by contrast, appears dedicated to avoiding collision with the other, non-fixated, edge of the object. This conceptualization is supported by evidence that our subjects increased their safety margin for collision avoidance by selectively widening their finger deviation prior to grasping the smaller and less stable object used here, regardless of whether vision was available during the movement. Also consistent with this idea, Schlicht and Schrater (2007) have shown that lateral deviation of the finger, together with grip opening, increases with the degree of visual uncertainty associated with reaching for cylindrical objects placed in increasingly peripheral vision. Our evidence further suggests that this digit was the less actively guided of the two. Taken together, this would imply that contacting the object with the finger before the thumb represents a failure of collision avoidance, and our finding that finger first-contacts were generally ‘hard’ and imprecise is indicative of an error of this kind (see Melmoth et al. 2007). It would also explain a key finding in our glow-in-the-dark experiment: subjects close both their digits more slowly (i.e. between peak grip and initial contact) when they cannot see their thumb, just as when visual feedback from the whole hand is unavailable (present results; Jakobson and Goodale 1991; Churchill et al. 2000; Watt and Bradshaw 2000; Schettino et al. 2003), but make initial thumb contact with a wider grip aperture when vision of the finger is selectively occluded, because they are then closing just this digit more cautiously.

We examined performance on a simple precision grasping and lifting task involving isolated cylindrical objects. An important question is whether our findings generalize to other types of precision grasping, or whether the digits have flexible roles that change with task conditions (Mon-Williams and McIntosh 2000). In this context, de Grave et al. (2008) examined the pattern of visual fixations made by subjects when precision grasping different shapes held by a Plexiglas frame at eye level, such that the goal positions of the thumb and finger were equally visible. Subjects grasped the objects with their index finger vertically oriented above the thumb (i.e. in grip pronation). The authors found that gaze was strongly biased towards the finger-landing site on the objects, even when this site was deliberately masked while the thumb-contact position remained in view, or when the thumb position was more difficult to contact because its surface area was very small. In subsequent work, Brouwer et al. (2009) further showed that the finger nearly always made first-contact with the shape, irrespective of its configuration, with the thumb subsequently (by ~80 ms, on average) contacting it from below. That is, the finger appeared to be the more active visual guide and showed the same specialization of role that we found was assigned to the thumb.

We suggest that the key difference between these studies and ours was that their subjects grasped the objects with the digits in pronated (see also Desanghere and Marotta 2011) rather than horizontal opposition, and we hypothesize that such end-postural constraints strongly influence which digit is selected as the online guide. Evidence that the finger also takes a straighter and less variable trajectory than the thumb to establish first-contact with the shapes in their task would support our hypothesis; this is not the current assumption of those authors (de Grave et al. 2008; Brouwer et al. 2009), and it has yet to be formally evaluated. Wider thumb deviations accompanied by finger first-contacts have, however, been reported to predominate in a task that required subjects to pincer-grip circular discs horizontally, along an opposition axis exactly orthogonal to their mid-sagittal plane (Smeets and Brenner 2001). As the authors noted, these unnatural conditions necessitated extreme (i.e. uncomfortable) wrist flexion in order to achieve the end-point grip postures demanded. Finger first-contacts also predominated in Schlicht and Schrater’s (2007) study of grasping in peripheral vision. In that case, however, the goal objects were by design never directly fixated and were supported by a cradling device, so there was no cost of collision.

In fact, some qualified support for our hypothesis is offered by Brouwer et al. (2009) who, in a second experiment, had subjects grasp a square or a triangle (with its apex directed to the left or right on different trials) with a horizontally oriented grip. When subjects grasped the former, symmetrical, target (i.e. the one most analogous to the cylinders in our experiments), gaze was directed principally to the thumb landing site; the finger side of the shapes attracted substantial fixations only in the task in which this digit had to be guided to the apex of the triangle, the site that was most difficult to contact. While further studies of fixation strategies associated with different grasping actions and orientations are clearly warranted, including those involving complex/asymmetric objects (Lederman and Wing 2003; Kleinholdermann et al. 2007), for the moment, self-observation testifies to the validity of the results presented here. We invite readers to precision grasp an object similar to those that we used, employing the index finger as the visual guide to first-contact: the unnatural feel and extra effort associated with such a movement confirm the advantage of primarily guiding the thumb under these task conditions.

References

Anderson J, Bingham GP (2010) A solution to the online guidance problem for targeted reaches: proportional rate control using relative disparity τ. Exp Brain Res 205:291–306

Brouwer A-M, Franz VH, Gegenfurtner KR (2009) Differences in fixations between grasping and viewing objects. J Vis 9:1–24

Castiello U (2005) The neuroscience of grasping. Nat Rev Neurosci 6:726–736

Churchill A, Hopkins B, Rönnqvist L, Vogt S (2000) Vision of the hand and environmental context in human prehension. Exp Brain Res 134:81–89

de Grave DDJ, Hesse C, Brouwer A-M, Franz VH (2008) Fixation locations when grasping partly occluded objects. J Vis 8:1–11

Desanghere L, Marotta JJ (2011) “Graspability” of objects affects gaze patterns during perception and action tasks. Exp Brain Res 212:177–187

Forssberg H, Eliasson AC, Kinoshita H, Johansson RS, Westling G (1991) Development of human precision grip I: basic coordination of force. Exp Brain Res 85:451–457

Galea MP, Castiello U, Dalwood N (2001) Thumb invariance during prehension movement: effects of object orientation. NeuroReport 12:2185–2187

Haggard P, Wing AM (1997) On the hand transport component of prehensile movements. J Mot Behav 29:282–287

Hoff B, Arbib MA (1993) Models of trajectory formation and temporal interaction of reach and grasp. J Mot Behav 25:175–192

Jakobson LS, Goodale MA (1991) Factors affecting higher-order movement planning: a kinematic analysis of human prehension. Exp Brain Res 86:199–208

Jeannerod M (1981) Intersegmental coordination during reaching at natural visual objects. In: Long J, Baddeley A (eds) Attention and performance IX. Erlbaum, New Jersey, pp 153–169

Johansson RS, Westling G, Bäckström A, Flanagan JR (2001) Eye-hand coordination in object manipulation. J Neurosci 21:6917–6932

Kleinholdermann U, Brenner E, Franz VH, Smeets JBJ (2007) Grasping trapezoidal objects. Exp Brain Res 180:415–420

Kritikos A, Dunai J, Castiello U (2001) Modulation of reach-to-grasp parameters: semantic category, volumetric properties and distractor interference? Exp Brain Res 138:54–61

Lederman SJ, Wing AM (2003) Perceptual judgement, grasp point selection and object symmetry. Exp Brain Res 152:156–165

Melmoth DR, Grant S (2006) Advantages of binocular vision for the control of reaching and grasping. Exp Brain Res 171:371–388

Melmoth DR, Storoni M, Todd G, Finlay AL, Grant S (2007) Dissociation between vergence and binocular disparity cues in the control of prehension. Exp Brain Res 183:283–298

Meulenbroek RGJ, Rosenbaum DA, Jansen C, Vaughan J, Vogt S (2001) Multijoint grasping movements. Simulated and observed effects of object location, object size, and initial aperture. Exp Brain Res 138:219–234

Mon-Williams M, McIntosh RD (2000) A test between two hypotheses and a possible third way for the control of prehension. Exp Brain Res 134:268–273

Mon-Williams M, Tresilian JR (2001) A simple rule of thumb for elegant prehension. Curr Biol 11:1058–1061

Napier JR (1961) Prehensibility and opposability in the hands of primates. Symp Zool Soc 5:115–132

Paulignan Y, Frak VG, Toni I, Jeannerod M (1997) Influence of object position and size on human prehension movements. Exp Brain Res 114:226–234

Rosenbaum DA, Meulenbroek RGJ, Vaughan J, Jansen C (2001) Posture-based motion planning: applications to grasping. Psychol Rev 108:709–734

Sakata H, Taira M, Kusunoki M, Murata A, Tanaka Y (1997) The parietal association cortex in depth perception and visual control of hand action. Trends Neurosci 20:350–356

Schettino LF, Adamovich SV, Poizner H (2003) Effects of object shape and visual feedback on hand configuration during grasping. Exp Brain Res 151:158–166

Schlicht EJ, Schrater PR (2007) Effects of visual uncertainty on grasping movements. Exp Brain Res 182:47–57

Servos P, Goodale MA (1994) Binocular vision and the on-line control of human prehension. Exp Brain Res 98:119–127

Smeets JBJ, Brenner E (1999) A new view on grasping. Mot Control 3:237–271

Smeets JBJ, Brenner E (2001) Independent movements of the digits in grasping. Exp Brain Res 139:92–100

Smeets JBJ, Brenner E (2002) Does a complex model help to understand grasping? Exp Brain Res 144:132–135

Soechting JF, Buneo CA, Hermann U, Flanders M (1995) Moving effortlessly in three dimensions: does Donders’ law apply to arm movement? J Neurosci 15:6271–6280

Valyear KF, Chapman CS, Gallivan JP, Marks RS, Culham JC (2011) To use or to move: goal-set modulates priming when grasping real tools. Exp Brain Res 212:125–142

Various authors (1999) Commentaries on a new view on grasping. Mot Control 3:272–315

Watt SJ, Bradshaw MF (2000) Binocular cues are important in controlling the grasp but not the reach in natural prehension movements. Neuropsychologia 38:1473–1481

Whitwell RL, Lambert LM, Goodale MA (2008) Grasping future events: explicit knowledge of the availability of visual feedback fails to reliably influence prehension. Exp Brain Res 188:603–611

Wing AM, Fraser C (1983) The contribution of the thumb to reaching movements. Q J Exp Psychol 35A:297–309

Wing AM, Turton A, Fraser C (1986) Grasp size and accuracy of approach in reaching. J Mot Behav 18:245–260

Acknowledgments

This study was supported by The Wellcome Trust (Grant 066282). We thank Dr Lore Thaler, Prof Mark Mon-Williams and an anonymous reviewer for helpful comments.


Cite this article

Melmoth, D.R., Grant, S. Getting a grip: different actions and visual guidance of the thumb and finger in precision grasping. Exp Brain Res 222, 265–276 (2012). https://doi.org/10.1007/s00221-012-3214-5
