Abstract

The brain integrates information from multiple sensory modalities and, through this process, generates a coherent and apparently seamless percept of the external world. Although multisensory integration typically binds information that is derived from the same event, when multisensory cues are somewhat discordant they can result in illusory percepts such as the “ventriloquism effect.” These biases in stimulus localization are generally accompanied by the perceptual unification of the two stimuli. In the current study, we sought to further elucidate the relationship between localization biases, perceptual unification and measures of a participant’s uncertainty in target localization (i.e., variability). Participants performed an auditory localization task in which they were also asked to report whether they perceived the auditory and visual stimuli to be perceptually unified. The auditory and visual stimuli were delivered at a variety of spatial (0°, 5°, 10°, 15°) and temporal (200, 500, 800 ms) disparities. Localization bias and reports of perceptual unity occurred even at the largest spatial (15°) and temporal (800 ms) disparities tested. Trial-by-trial comparison of these measures revealed a striking correlation: regardless of their disparity, whenever the auditory and visual stimuli were perceived as unified, they were localized at or very near the light. In contrast, when the stimuli were perceived as not unified, auditory localization was often biased away from the visual stimulus. Furthermore, localization variability was significantly lower when the stimuli were perceived as unified. Intriguingly, on non-unity trials such variability increased with decreasing disparity. Together, these results suggest strong and potentially mechanistic links between the multiple facets of multisensory integration that contribute to our perceptual Gestalt.



Introduction

In addition to processing information on a sense-by-sense basis, the brain is responsible for assembling the rich mélange of information from the various sensory modalities into a coherent and meaningful “view” of the world (Stein and Meredith 1993). This process is believed to be accomplished by the binding together of related cues from the different senses (e.g., the sight and sound of an ambulance) and the segregation of unrelated cues. In the most straightforward view of this process, it seems reasonable to predict that multisensory stimuli that arise from the same location and at the same point in time originate from a common event, whereas stimuli that are spatially and/or temporally disparate are the result of two separate events. However, a number of cross-modal illusions illustrate that this distinction is not absolute, in that multisensory cues that are somewhat discordant can give rise to a unitary perception (Bertelson and Aschersleben 1998; Bertelson and Radeau 1981; Choe et al. 1975; Hairston et al. 2003; Jack and Thurlow 1973; Lewald and Guski 2003; Lewald et al. 2001; Slutsky and Recanzone 2001; Thurlow and Jack 1973). Perhaps the most familiar of these is the “ventriloquism effect”, in which the movements of the dummy’s mouth alter the perceived location of the ventriloquist’s voice (Howard and Templeton 1966).

Not surprisingly, previous work has shown that such cross-modal localization bias depends on the spatial and temporal relationships between the stimuli, with bias declining as a function of increasing disparity in both realms (Bermant and Welch 1976; Bertelson and Aschersleben 1998; Bertelson and Radeau 1981; Choe et al. 1975; Hairston et al. 2003; Jack and Thurlow 1973; Lewald and Guski 2003; Lewald et al. 2001; Pick et al. 1969; Radeau and Bertelson 1987; Slutsky and Recanzone 2001; Thomas 1941; Thurlow and Jack 1973; Warren et al. 1981; Welch and Warren 1980). Despite the general agreement on this issue, the specifics, in terms of the degree of tolerated disparity and the amount of elicited bias, range widely, probably reflecting the substantial paradigmatic differences between these studies.

In addition to examining cross-modal localization bias, a number of these earlier studies have shown that such bias often occurs in conjunction with reports of perceptual unification, suggesting a possible link between these two components of multisensory integration. However, it has also been shown that localization bias can occur in the absence of perceptual unification (Bertelson and Radeau 1981), suggesting that the processes can be independent and may rely on two distinct neural circuits. In a recent study, we established that the degree of cross-modal localization bias is inversely related to a participant’s variability in target localization, a measure of ambiguity or uncertainty in the sensorimotor act (Hairston et al. 2003). Consequently, in the current study we sought to extend our own findings and those of Bertelson and Radeau (1981) by examining the relationship between cross-modal bias, perceptual unification and localization variability. Only once these relationships are established can greater insight be gained into their possible mechanistic commonalities and, ultimately, into how the perceptual and post-perceptual aspects of these multisensory processes can be disentangled.

Methods

Participants

All procedures were approved by the Institutional Review Board of Wake Forest University and were performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki. Five undergraduate students (four women; age range 18–20 years), naive to the task, participated in the experiment. Data from an additional 14 participants (eight women; age range 19–22 years), collected in conjunction with related research paradigms, were used to supplement the data in the current study for the examination of localization bias on “unity” and “non-unity” trials (see below). All participants gave their informed consent prior to inclusion in the study and reported normal hearing and either normal or corrected-to-normal vision.

Apparatus and stimuli

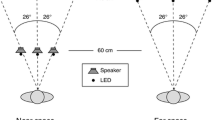

The apparatus (Fig. 1A) was housed in a dark, sound-attenuated room. It consisted of a series of light-emitting diodes (LEDs) and speakers mounted on a semicircular array with a radius of 91.4 cm. Two sets of LEDs were used: a set of accessory or “biasing” LEDs separated by 2.5° of visual angle and positioned at eye level, and a set of fixation LEDs separated by 10° and positioned 5 cm below the biasing LEDs. A series of miniature speakers (Panasonic 4D02CD) separated by 10° was also mounted on the array and served as the target stimuli. To mask extraneous noises from outside the room, free-field white noise (40 dB SPL A level, measured at the location of the head) was continuously presented by a white noise generator (model PWN1; GoldLine, West Redding, CT, USA) located above the participant’s head. Participants sat with their heads comfortably resting on an adjustable chin mount at the center of the semicircle. Immediately below and in front of them was a joystick-style yoke fitted with response buttons and a laser pointer.

Fig. 1 Experimental paradigm. A The apparatus. Participants used a yoke-mounted laser pointer to localize auditory stimuli contained within a perimetry apparatus. On most trials, a task-irrelevant visual stimulus was also presented (see “Apparatus and stimuli” section for details). Participants began a trial by orienting their sensory apparatus toward one of the fixation light-emitting diodes (LEDs). Target sounds were emitted from one of an array of speakers situated at 10° intervals along the perimeter. Task-irrelevant LEDs were separated by 2.5°, and could be presented at the same location (spatially-coincident trials) or at different locations (spatially-disparate trials) from the target sound. Participants rotated the yoke to localize the target. B Schematic of the trial procedure. Trials began with the onset of the fixation LED. Participants pushed a button to illuminate the laser pointer, and then moved the yoke to acquire the fixation target. The target sound was presented 750–1000 ms after acquisition of fixation, and, on most trials, was followed by the illumination of the irrelevant LED (either 200, 500 or 800 ms after the onset of the sound). The fixation LED was turned off 300 ms after the offset of the irrelevant LED, signaling participants to begin their localization judgment. Participants released the yoke button, turning off the laser pointer, when their localization was complete. Participants subsequently depressed a foot pedal to denote whether the stimuli were spatially aligned.

Stimuli consisted of a 50-ms broadband noise burst (63 dB SPL A level) from one of the speakers and a 50-ms illumination of one of the LEDs (0.032 lux). Target location was always referenced to the position of visual fixation, which varied randomly from trial to trial among locations 0°, ±10°, and ±20° from the center of the apparatus. At the onset of each trial, signaled by the illumination of a fixation LED, participants were instructed to reorient their eyes, head, and torso so that the fixation point was straight ahead. Relocation of the fixation position from trial to trial was done to prevent participants from learning the actual locations of the speakers, and to minimize the effect of any minor differences in stimulus characteristics. For example, while on one trial speaker A might be located 10° to the left of fixation, on the next trial (where participants are fixated at a new location) a different speaker (e.g., speaker C) might be located 10° to the left of this new fixation location.

Trial procedure

A schematic of the trial procedure is shown in Fig. 1B. Each trial began with the onset of the fixation light, to which participants aligned their entire sensory apparatus (eyes, head and body) so that it was directed straight ahead. Participants directed the laser pointer at the fixation target to signal acquisition of fixation. After either 750 or 1000 ms, a sound was presented either alone or in conjunction with the illumination of one of the accessory LEDs. The LED was illuminated 200, 500, or 800 ms after the onset of the target sound (temporal disparity). The accessory LED was illuminated after the target sound to eliminate its potential to prime or cue performance. Target sounds were presented at locations 0°, 10°, or 30° to the left or right of fixation, and the LED was presented at locations 0°, 5°, 10°, or 15° to the left or right of the sound (spatial disparity). The fixation light was extinguished 300 ms after the offset of the last stimulus. Participants indicated the perceived location of the sound by aiming the laser pointer at that location and then turning it off by releasing a button. In addition, participants subsequently depressed a foot pedal to indicate whether they had perceived the light and sound as coming from the same location (spatial unity). The inter-trial interval was 1000 ms. Participants were instructed that this was an auditory localization task, and that the sound was always the target; they were given no information about the light.
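The timing rules of a single trial can be summarized as an event timeline. The sketch below is a hypothetical illustration, not the laboratory's control software: it assumes times are counted in milliseconds from fixation acquisition and that temporal disparity is measured from sound onset, as stated in the Fig. 1 legend. Because the text gives the pre-sound delay as 750 or 1000 ms while the legend gives a 750–1000 ms range, the helper accepts any delay value.

```python
from dataclasses import dataclass
from typing import Optional

STIM_DURATION_MS = 50        # 50-ms noise burst and 50-ms LED flash
FIXATION_OFF_LAG_MS = 300    # fixation LED off 300 ms after the last stimulus offset
TEMPORAL_DISPARITIES_MS = (200, 500, 800)


@dataclass
class TrialTimeline:
    sound_on: int
    sound_off: int
    led_on: Optional[int]    # None on sound-alone trials
    led_off: Optional[int]
    fixation_off: int


def trial_timeline(pre_sound_delay_ms: int, soa_ms: Optional[int]) -> TrialTimeline:
    """Hypothetical helper: event times (ms) relative to fixation acquisition.

    pre_sound_delay_ms: delay from fixation acquisition to sound onset.
    soa_ms: LED onset minus sound onset (200, 500 or 800), or None if no LED.
    """
    if soa_ms is not None and soa_ms not in TEMPORAL_DISPARITIES_MS:
        raise ValueError(f"unsupported temporal disparity: {soa_ms} ms")
    sound_on = pre_sound_delay_ms
    sound_off = sound_on + STIM_DURATION_MS
    if soa_ms is None:
        led_on = led_off = None
        last_offset = sound_off
    else:
        led_on = sound_on + soa_ms
        led_off = led_on + STIM_DURATION_MS
        last_offset = led_off
    return TrialTimeline(sound_on, sound_off, led_on, led_off,
                         last_offset + FIXATION_OFF_LAG_MS)


print(trial_timeline(750, 200))
```

With a 750-ms delay and a 200-ms disparity this prints `TrialTimeline(sound_on=750, sound_off=800, led_on=950, led_off=1000, fixation_off=1300)`, i.e. the localization response can begin 1.3 s after fixation is acquired.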

Data analysis

Each stimulus combination of spatial disparity (n=7) and temporal disparity (n=3) was presented with equal probability 24 times at each of the five target locations, for a total of 2,520 trials, divided into eight 1-h sessions, with each session on a different day. Each participant’s data were compiled across all sessions and averaged across trials to compute an average localization score for each condition. Dependent variables examined were percentage bias, variance in localization, and reports of spatial unity. Percentage bias was calculated by subtracting the actual location of the sound from the average location to which participants pointed, dividing by the amount of actual spatial disparity between the light and the sound, and multiplying by 100. Because trends were similar for both sides of space, data from left and right locations were collapsed for statistical analysis.
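The percentage-bias measure and the trial arithmetic above can be written out directly. In this minimal sketch, azimuths are in degrees relative to fixation and the function and constant names are illustrative. Dividing by the signed light-minus-sound disparity makes bias positive when pointing shifts toward the light and negative when it shifts away, which is the sign convention behind the negative biases described in the Results. The per-session figure assumes the trials were split equally across sessions, which the text does not state.

```python
from statistics import mean


def percent_bias(pointed_deg, sound_deg, light_deg):
    """100 * (mean pointed location - sound location) / (light - sound).

    pointed_deg: localization responses (deg) for one condition.
    Gives 100 when responses sit at the light, 0 at the sound, and negative
    values when responses fall on the side of the sound opposite the light.
    """
    disparity = light_deg - sound_deg
    if disparity == 0:
        raise ValueError("bias is undefined at 0 deg spatial disparity")
    return (mean(pointed_deg) - sound_deg) * 100 / disparity


# Design arithmetic: 7 spatial x 3 temporal disparities x 24 repetitions x 5 targets
N_SPATIAL, N_TEMPORAL, N_REPS, N_TARGETS, N_SESSIONS = 7, 3, 24, 5, 8
TOTAL_TRIALS = N_SPATIAL * N_TEMPORAL * N_REPS * N_TARGETS   # 2,520 trials
TRIALS_PER_SESSION = TOTAL_TRIALS // N_SESSIONS              # 315 if sessions are equal
```

For example, `percent_bias([17, 18], 10, 20)` returns `75.0` (responses three quarters of the way to the light), and `percent_bias([12], 10, 5)` returns `-40.0` (a shift away from a light located to the left of the sound).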

Bias data were analyzed in two steps. First, percentage bias and the percentage of “unity” responses were analyzed via repeated-measures analysis of variance (ANOVA), with spatial and temporal disparity as factors. Second, bias was examined again using repeated-measures ANOVA with spatial disparity and reports of unity as factors, to assess differences in localization bias for unity relative to non-unity trials. Additionally, logistic regression was used to assess how well perceived unity/non-unity could be predicted from the amount of bias observed on each trial. To compare differences in variability associated with the judgment of unity, the standard deviation of the distribution of localization responses was calculated for each participant, and a two-factor repeated-measures ANOVA was used to compare the average of these deviation scores across the spatial disparity and unity/non-unity groupings. Tests of normality were performed using the Kolmogorov-Smirnov Z-statistic on a 20% random sample of all relevant localization responses, to limit the oversensitivity of the test to the large sample size. Tests of bimodality were performed using the dip statistic (Hartigan 1985; Hartigan and Hartigan 1985), a distribution-free test that computes the maximum difference (“dip”) between the empirical distribution function and the unimodal distribution function that minimizes this difference.
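The normality check on a 20% random subsample can be sketched with the Python standard library alone. This is an illustrative reimplementation, not the software used in the study: it compares the empirical distribution of the subsample with a normal distribution whose mean and SD are estimated from that same subsample, and reports the Kolmogorov-Smirnov Z (the square root of n times the maximum gap D) with its asymptotic p value. Because the parameters are estimated from the data, this uncorrected p value is conservative (the Lilliefors correction addresses that). The subsample fraction and seed are parameters of this sketch.

```python
import math
import random
from statistics import NormalDist, mean, stdev


def kolmogorov_p(z: float, terms: int = 100) -> float:
    """Asymptotic P(K > z) = 2 * sum_{j>=1} (-1)^(j-1) * exp(-2 j^2 z^2)."""
    if z < 0.3:
        return 1.0  # series converges slowly here; the true value is ~1
    s = sum((-1) ** (j - 1) * math.exp(-2 * j * j * z * z) for j in range(1, terms + 1))
    return min(1.0, max(0.0, 2 * s))


def ks_normality_z(values, sample_frac=0.2, seed=0):
    """One-sample K-S Z against a normal fitted to a random subsample of `values`."""
    rng = random.Random(seed)
    k = max(3, round(len(values) * sample_frac))
    sample = sorted(rng.sample(list(values), k))
    ref = NormalDist(mean(sample), stdev(sample))
    n = len(sample)
    d = 0.0
    for i, x in enumerate(sample):
        cdf = ref.cdf(x)
        # largest gap just after and just before each step of the empirical CDF
        d = max(d, (i + 1) / n - cdf, cdf - i / n)
    z = math.sqrt(n) * d
    return z, kolmogorov_p(z)
```

Applied to 100 localization errors split evenly between -10° and +10°, the 20-value subsample yields a Z well above 1.36 and p < 0.05, so normality is rejected, as it was for the non-unity errors at 0° disparity.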

Results

Localization bias

Consistent with previous reports, there was a significant main effect of spatial disparity on localization bias, with bias decreasing substantially with increasing cross-modal spatial disparity (F (2,8)=25.3, p<0.001). Nonetheless, the average amount of bias remained substantial (>20%) even for cross-modal spatial disparities as large as 15° (Table 1). Similarly, there was also a significant main effect of temporal delay, with mean localization bias decreasing with increasing cross-modal temporal disparity (F (2,8)=32.6, p<0.001). As for spatial disparity, there was still substantial (>25%) localization bias even at the largest tested temporal disparity (800 ms; Table 1). There was no significant interaction between spatial and temporal disparity (F (4,16)=1.6, p>0.05). Note that for this and all subsequent analyses, because there was no effect of fixation location, data have been collapsed across all fixation locations.
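The one-factor case of these repeated-measures analyses (for example, the effect of spatial disparity on bias, where five participants and three non-zero disparities give df = (2, 8)) can be sketched as a textbook partition of sums of squares. This is not the authors' statistics package: it applies no sphericity correction, does not compute the two-factor interaction terms, and leaves the p value to an F-distribution routine such as `scipy.stats.f.sf`.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data[s][c]: one score (e.g. mean % bias) per subject s and condition c.
    Returns (F, df_condition, df_error).
    """
    n = len(data)        # subjects
    k = len(data[0])     # conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # subject-by-condition residual serves as the error term
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error
```

For a 5 × 3 table (five participants, three disparities) the function returns df = (2, 8), matching the degrees of freedom reported for the spatial and temporal main effects above.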

Reports of spatial unity

Similar to the results seen for localization bias, the likelihood of participants reporting that the stimuli were spatially aligned (i.e., unity responses) was also found to be dependent upon the spatial and temporal relationships of the multisensory stimuli. The percentage of unity responses decreased significantly with increasing spatial disparity (F (3,12)=51.2, p<0.001). Nonetheless, unity was still reported on 44% of trials with the largest tested spatial disparity (15°; Table 1). Reports of unity also decreased significantly with increasing temporal disparity (F (2,8)=30.2, p<0.001). Again, participants reported unity on a substantial number of trials in which the stimuli were separated by the longest tested temporal disparity (800 ms; Table 1). For reports of spatial unity, there was no significant interaction between spatial and temporal disparity (F (6,24)=2.5, p>0.05).

Localization bias and perceived unity

Mean measures of localization bias and reports of spatial unity were highly correlated (Fig. 2). In fact, logistic regression analysis showed that localization bias was a reliable, significant predictor of whether perceptual unity would be reported (R 2=0.628, p<0.001), with an average correct prediction rate of 89.7%.

Fig. 2 Localization bias and reports of unity are well correlated. Plotted is the mean percentage of unity reports for each 1% unit of localization bias. Note that when reports of unity approach 100%, bias is very large. In contrast, when reports of unity are very low (i.e., near 0%), localization bias is either absent or negative

To further examine this relationship between localization bias and perceptual unification, responses were dichotomized on a trial-by-trial basis into those in which unity was reported and those in which it was not. This division revealed striking differences in the pattern of localization responses for these two types of trials (Fig. 3), a finding confirmed by the significant main effect of unity report in the ANOVA (F (1,4)=90.5, p<0.001). As is clearly evident from Fig. 3, when spatial unity was reported, localization bias was either complete (100%) or nearly complete. In contrast, when spatial non-unity was reported, localization bias was either absent or negative (<0%). This relationship between localization bias and perceptual unification held regardless of the actual degree of disparity between the stimuli. Furthermore, the significant disparity–unity interaction (F (2,8)=13.4, p<0.005) reflects an interesting pattern. In trials where unity was not perceived, the amount of bias depended on spatial disparity: no bias occurred at the largest tested spatial disparity (15°), whereas increasingly negative bias (i.e., localization judgments on the side of the target opposite to the light) was seen as spatial disparity decreased (i.e., more negative bias at 5° than at 10°) (F (2,8)=11.8, p<0.005). This effect was robust, in that the bias seen for both 5° (t (4)=3.2, p<0.05) and 10° (t (4)=3.0, p<0.05) disparities differed significantly from zero. In addition, negative bias accompanying non-unity judgments was very consistent across participants.
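Testing whether the non-unity bias differs from zero amounts to a one-sample t test on the participants' mean biases, with df = n - 1 (4 for five participants). A minimal sketch follows; the five input values are made up for illustration, and a p value would come from a t-distribution routine.

```python
import math
from statistics import mean, stdev


def one_sample_t(values, mu=0.0):
    """t statistic and degrees of freedom for H0: population mean == mu."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two participants")
    se = stdev(values) / math.sqrt(n)
    return (mean(values) - mu) / se, n - 1


# Hypothetical per-participant mean biases (%) on non-unity trials at one disparity
t, df = one_sample_t([-12.0, -30.5, -8.0, -21.0, -15.5])
```

Here the t statistic is negative because the hypothetical biases point away from the light; its magnitude is what is compared against the critical value for df = 4.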

Fig. 3 Localization bias differs dramatically on unity and non-unity trials. When participants reported that the two stimuli were spatially unified (gray lines, solid squares), bias was nearly complete at all tested spatial disparities. In contrast, when participants reported the stimuli as not unified (black line, open diamonds), bias was absent at the largest disparity (15°) and was increasingly negative at smaller disparities. Note that bias cannot be calculated for 0° spatial disparity. Error bars represent SEMs

To address concerns about the relatively small sample size in the present study, data involving identical stimulus conditions (spatial disparities, temporal disparities, etc.) were compiled for a total of 19 participants across four concurrent studies in our laboratory. These additional data were analyzed along the same lines as in the current study, examining localization performance and reports of spatial unity. The compiled data were remarkably consistent with one another and with the results of the present study. Thus, there was a highly significant relationship between localization bias and reports of spatial unity (F (1,19)=147.3, p<0.001), a significant interaction between reports of unity and spatial disparity (F (2,36)=14.2, p<0.001), and a significant increase in negative bias with decreasing disparity on non-unity trials (F (2,36)=8.7, p<0.001).

Localization variability

An analysis of participants’ variability in localization as a function of reporting unity or non-unity revealed an additional relationship. For all tested spatial disparities, localization variability (as assessed by the standard deviation of the response distribution) was significantly lower when participants reported that the stimuli were spatially unified (F (1,4)=27.0, p<0.01) (Fig. 4). There was also an interaction between reports of unity and spatial disparity (F (3,12)=18.8, p<0.001). Specifically, when unity was reported, variability increased with the disparity between signals (F (3,12)=17.8, p<0.001). Most notably, however, for non-unity judgments localization variability decreased with increasing disparity (F (3,12)=9.6, p<0.005), with the greatest variability seen when the stimuli were spatially coincident (i.e., 0° disparity). A more detailed analysis of the data from this spatially coincident condition revealed a distinct pattern of localization responses dependent upon the judgment of unity (Fig. 5). When the stimuli were reported as unified, localization responses clustered around the auditory target location (0° localization error) and were normally distributed (Kolmogorov-Smirnov Z, p>0.05). In contrast, when the stimuli were reported as non-unified, localization errors were more broadly distributed and failed the test for normality (Kolmogorov-Smirnov Z, p<0.05). Under these non-unity circumstances, the distribution of errors was bimodal (dip test, significant at the 99.9% level).
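The variability measure (the SD of each participant's localization errors within a disparity-by-unity cell, then averaged across participants) can be sketched as follows. The tuple layout of the trial records and the rule of skipping cells with fewer than two trials are assumptions made for illustration. A symmetric bimodal spread of errors, as on non-unity trials at 0°, inflates the SD without shifting the mean, which is why the shape of that distribution was examined separately with normality and dip tests.

```python
from collections import defaultdict
from statistics import mean, stdev


def variability_by_cell(trials):
    """Mean across participants of the per-participant SD of localization error.

    trials: iterable of (participant, disparity_deg, unity, error_deg) tuples.
    Returns {(disparity_deg, unity): mean SD}.
    """
    groups = defaultdict(list)
    for pid, disp, unity, err in trials:
        groups[(pid, disp, unity)].append(err)
    # SD per participant and cell; cells with fewer than 2 trials are skipped
    per_participant = {key: stdev(v) for key, v in groups.items() if len(v) >= 2}
    pooled = defaultdict(list)
    for (pid, disp, unity), sd in per_participant.items():
        pooled[(disp, unity)].append(sd)
    return {cell: mean(sds) for cell, sds in pooled.items()}
```

The returned dictionary holds one averaged SD per spatial disparity and unity judgment, the quantities entered into the two-factor repeated-measures ANOVA described in the Methods.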

Fig. 4 Localization variability as a function of spatial disparity. When participants reported that the two stimuli were spatially unified (gray line, solid squares), the variability in stimulus localization (as measured by the standard deviation) was relatively small and increased somewhat with increasing spatial disparity. A very different pattern was seen for stimuli reported as non-unified (black line, open diamonds). In these cases, variability was always significantly greater than in the unity cases. Surprisingly, this difference was most pronounced when the light and sound were spatially coincident (i.e., 0° disparity). Error bars represent SEMs

Fig. 5 Analysis of spatially-coincident visual-auditory stimuli (0° spatial disparity). When the sound and light were reported to be spatially unified, the pattern of localization errors was tightly clustered around 0° (gray bars). However, when the stimuli were perceived as not unified, the pattern of errors was bimodally distributed (black bars)

Discussion

Consistent with previous reports, in the current experiment target localization was strongly influenced by the spatial and temporal relationships of the multisensory stimuli. Somewhat surprising was the size of the spatial and temporal windows within which multisensory stimuli could bias localization performance and be perceived as coming from a unitary event. In fact, substantial effects were still seen with the largest spatial (15°) and temporal (800 ms) separations. Although these values seem large in comparison with some previous reports of cross-modal biases (e.g., Lewald and Guski 2003; Lewald et al. 2001; Slutsky and Recanzone 2001), it is important to note that other studies have reported substantial localization biases with similarly large disparities (e.g., Bermant and Welch 1976; Bertelson and Radeau 1981). The differences in the degree of tolerated disparity most likely reflect both contextual differences in the stimuli used and differences in the instruction set and methods used to assess the localization judgment. Along these lines, we have found that differences in the stimulus set can powerfully modulate the extent of cross-modal bias (unpublished observations).

The most compelling finding of the current study was the striking relationship between localization performance and judgments of spatial unity. Whenever participants reported unification, they also perceived the auditory target to shift completely to the location of the non-target visual stimulus (i.e., visual capture, or 100% bias). Conversely, when the multisensory stimuli were judged as not unified, there was either no localization bias or a “negative” bias, such that the auditory stimulus was perceived to be on the side of the target opposite to the light. This negative bias increased as the spatial disparity between the light and sound decreased. The presence of negative bias suggests that in addition to its ability to “attract” the localization of an auditory target toward a task-irrelevant visual cue, the visual system has the capacity to “repulse” the localization of an auditory target when the spatial disparity between the multisensory cues is sufficiently small, but they are not perceived as originating from the same event.

An additional intriguing finding was that even when the stimuli were not separated in space, if participants judged them to be perceptually distinct, the pattern of localization responses was bimodally distributed on either side of the target location. Such a distribution illustrates a strong interdependence between these factors and suggests that the perceptual division of the stimuli may drive the biasing of the localization judgment. Although the interpretation that a perceptual decision determines a sensorimotor action seems most plausible, one cannot rule out an alternative explanation. The presence of visual-auditory localization bias, coupled with the need to categorize the stimuli as unified or non-unified, may produce a strategy that only appears to reflect cross-modal perceptual fusion. In this scenario, localization bias could be driving a perceptual judgment. For example, when localization bias is strong, the participant may decide to use the category “unified” rather than the only other alternative, “non-unified”, because stimuli from the same location are more likely to be coming from the same event than stimuli from different locations. In this case, the reported categorization would be the same, whether or not the stimuli are actually perceived as stemming from the same event. It also is possible that the localization bias seen when participants judged the stimuli to be non-unified reflects a strategy to “resist” the biasing effects of the visual stimulus. This explanation is supported by the greater variability seen in non-unity trials.

Despite the fact that the neural substrates underlying the perceptual, post-perceptual and sensorimotor aspects of the current paradigm are unknown, data from animal (and human) studies are germane as they relate to the basic principles of multisensory integration. Although the spatial and temporal “windows” observed in the current study seem quite large by intuitive standards, individual multisensory neurons in both cortical and subcortical structures exhibit the capacity to integrate multisensory cues over similarly large disparities (Stein and Meredith 1993; Wallace et al. 1992, 1996, 1998). For example, multisensory neurons in these brain regions have large overlapping receptive fields subtending many tens of degrees of sensory space and within which multisensory stimuli have the capacity to interact to give rise to an integrated output. Similarly, these neurons have been shown to integrate multisensory stimuli over temporal intervals spanning many hundreds of milliseconds—delays similar to those used in the current study. Perhaps most intriguing in this regard is the relationship between stimulus effectiveness and multisensory integration, where it has been found that weakly effective stimuli give rise to the largest proportionate changes of response. In the current study, we suggest that localization variability is a reflection of target salience or effectiveness, with less variability being equated with greater stimulus salience. Mapping these relations onto unity distinctions and localization biases, we see that greater stimulus salience may be associated with both unity judgments and large values of bias (i.e., 100%). Whether such heightened salience drives these judgments and biases, or is a consequence of them, awaits future inquiry.

Taken together, these results emphasize a striking interrelationship between two very different aspects of multisensory integration—a sensorimotor component that drives target localization and a perceptual or cognitive component that judges the stimuli in terms of their relationship to a unitary event. These processes may be mechanistically dependent; that is, they are performed by interrelated neural circuits. Alternatively, as has been shown for a variety of species and brain structures, they may follow a conserved set of integrative principles that lend coherence to multisensory processes at different levels of information processing (Stein and Wallace 1996; Stein et al. 2002; Wallace and Stein 1996).

References

Bermant RI, Welch RB (1976) Effect of degree of separation of visual-auditory stimulus and eye position upon spatial interaction of vision and audition. Percept Mot Skills 42:487–493

Bertelson P, Aschersleben G (1998) Automatic visual bias of perceived auditory location. Psychon Bull Rev 5:482–489

Bertelson P, Radeau M (1981) Cross-modal bias and perceptual fusion with auditory-visual spatial discordance. Percept Psychophys 29:578–584

Choe CS, Welch RB, Gilford RM, Juola JF (1975) The “ventriloquist effect”: visual dominance or response bias? Percept Psychophys 18:55–60

Hairston WD, Wallace MT, Vaughan JW, Stein BE, Norris JL, Schirillo JA (2003) Visual localization ability influences cross-modal bias. J Cogn Neurosci 15:20–29

Hartigan PM (1985) Statistical algorithms: algorithm AS 217. Computation of the dip statistic to test for unimodality. Appl Stat 34:320–325

Hartigan JA, Hartigan PM (1985) The dip test of unimodality. Ann Stat 13:70–84

Howard IP, Templeton WB (1966) Human spatial orientation. Wiley, New York

Jack CE, Thurlow WR (1973) Effects of degree of visual association and angle of displacement on the “ventriloquism” effect. Percept Mot Skills 37:967–979

Lewald J, Guski R (2003) Cross-modal perceptual integration of spatially and temporally disparate auditory and visual stimuli. Cogn Brain Res 16:468–478

Lewald J, Ehrenstein WH, Guski R (2001) Spatio-temporal constraints for auditory-visual integration. Behav Brain Res 121:69–79

Pick HL, Warren DH, Hay JC (1969) Sensory conflict in judgements of spatial direction. Percept Psychophys 6:203–205

Radeau M, Bertelson P (1987) Auditory-visual interaction and the timing of inputs: Thomas (1941) revisited. Psychol Res 49:17–22

Slutsky DA, Recanzone GH (2001) Temporal and spatial dependency of the ventriloquism effect. Neuroreport 12:7–10

Stein BE, Meredith MA (1993) The merging of the senses. MIT Press, Cambridge, MA

Stein BE, Wallace MT (1996) Comparisons of cross-modality integration in midbrain and cortex. Prog Brain Res 112:289–299

Stein BE, Laurienti PJ, Wallace MT, Stanford TR (2002) Multisensory integration. In: Ramachandran V (ed) Encyclopedia of the human brain. Elsevier, Amsterdam, pp 227–241

Thomas G (1941) Experimental study of the influence of vision on sound localisation. J Exp Psychol 28:167–177

Thurlow WR, Jack CE (1973) Certain determinants of the “ventriloquism effect”. Percept Mot Skills 36:1171–1184

Wallace MT, Stein BE (1996) Sensory organization of the superior colliculus in cat and monkey. Prog Brain Res 112:301–311

Wallace MT, Meredith MA, Stein BE (1992) Integration of multiple sensory modalities in cat cortex. Exp Brain Res 91:484–488

Wallace MT, Wilkinson LK, Stein BE (1996) Representation and integration of multiple sensory inputs in primate superior colliculus. J Neurophysiol 76:1246–1266

Wallace MT, Meredith MA, Stein BE (1998) Multisensory integration in the superior colliculus of the alert cat. J Neurophysiol 80:1006–1010

Warren DH, Welch RB, McCarthy TJ (1981) The role of visual-auditory compellingness in the ventriloquism effect: implications for transitivity among the spatial senses. Percept Psychophys 30:557–564

Welch RB, Warren DH (1980) Immediate perceptual response to intersensory discrepancy. Psychol Bull 88:638–667

Acknowledgements

We thank Julie Edelson for editorial assistance. The study was supported in part by US National Institutes of Health grants MH63861, NS22543 and NS36916.


Cite this article

Wallace, M.T., Roberson, G.E., Hairston, W.D. et al. Unifying multisensory signals across time and space. Exp Brain Res 158, 252–258 (2004). https://doi.org/10.1007/s00221-004-1899-9


DOI: https://doi.org/10.1007/s00221-004-1899-9