Abstract

We propose a new algorithm for solving the semiclassical time-dependent Schrödinger equation. The algorithm is based on semiclassical wavepackets. The focus of the analysis is only on the time discretization: convergence is proved to be quadratic in the time step and linear in the semiclassical parameter \(\varepsilon \).


1 Introduction

We consider the semiclassical time-dependent Schrödinger equation

\[ i\varepsilon ^{2}\,\partial _{t}\psi = H\psi , \qquad (1) \]

where \(\psi =\psi (x,t)\) is the wave function that depends on the spatial variables \(x = (x_{1},\ldots ,x_{d})\in \mathbb R ^{d}\) and the time variable \(t\in \mathbb R \). The Hamiltonian

\[ H = -\frac{\varepsilon ^{4}}{2}\Delta _{x} + V(x) \]

involves the Laplace operator \(\Delta _{x}\) and a smooth real potential \(V\).

The main challenges in the numerical solution of (1) result from the dimension \(d\) often being large and the existence of several time- and spatial-scales governed by the small parameter \(\varepsilon \). In chemical applications, the dimension \(d\) is \(3N\), where \(N\) is the number of nuclei in the molecule being considered. (It is \(3N-3\) if the center of mass motion is removed.) One typically takes the mass of an electron to be 1 and the masses of the nuclei to be proportional to \(\varepsilon ^{-4}\). If a molecule has various different nuclei, one has some freedom concerning the precise value, but that value lies in an interval determined by the heaviest and lightest nuclei in the molecule. For instance, the \(\mathrm{H+H_{2} }\) reaction has \(\varepsilon \approx 0.1528\). \(\mathrm{CO_{2} }\) has \(\varepsilon \) between 0.0764 and 0.0821. The standard model for \(\mathrm{IBr }\) is one dimensional because both the center of mass and rotational motions have been separated. The relevant mass is then \(\frac{m_\mathrm{I } m_\mathrm{Br }}{m_\mathrm{I }+m_\mathrm{Br }}\), which yields \(\varepsilon \approx 0.0577\). In these physical problems, \(\varepsilon \) has a clear meaning as the fourth root of a mass ratio, but mathematically we can regard Eq. (1) as a whole range of models indexed by the parameter \(\varepsilon \). We recover full quantum dynamics when \(\varepsilon =1\) and classical mechanics in the limit \(\varepsilon \rightarrow 0\).
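The values of \(\varepsilon \) quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming the electron mass is 1 and \(\varepsilon \) is the fourth root of the electron-to-nucleus mass ratio; the atomic masses and the conversion factor are rounded reference values chosen for illustration, not data from this paper, so the results agree with the quoted numbers only to about three digits.

```python
# Electron masses per atomic mass unit (rounded reference value, an assumption).
ELECTRON_MASSES_PER_AMU = 1822.888


def epsilon_from_mass(mass_amu: float) -> float:
    """Return eps = (m_electron / m_nucleus)**(1/4) for a mass given in amu."""
    return (1.0 / (mass_amu * ELECTRON_MASSES_PER_AMU)) ** 0.25


def reduced_mass(m1: float, m2: float) -> float:
    """Reduced mass m1*m2/(m1+m2), as used for the one-dimensional IBr model."""
    return m1 * m2 / (m1 + m2)


# Approximate masses in amu (illustrative values, not taken from the paper).
M_H, M_C, M_O, M_I, M_BR = 1.00728, 12.000, 15.995, 126.904, 79.904

eps_h = epsilon_from_mass(M_H)      # scale for the H + H2 reaction
eps_c = epsilon_from_mass(M_C)      # upper end of the CO2 interval (lightest nucleus)
eps_o = epsilon_from_mass(M_O)      # lower end of the CO2 interval (heaviest nucleus)
eps_ibr = epsilon_from_mass(reduced_mass(M_I, M_BR))

print(eps_h, eps_c, eps_o, eps_ibr)
```

The heavier the nuclei, the smaller \(\varepsilon \) becomes, which is why the lightest and heaviest nuclei of a molecule bracket the admissible interval.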

Here, we ignore the difficulties that arise from the possibly large dimension \(d\) and focus on the challenges caused by a small \(\varepsilon \) in the time-integration schemes. The preferred numerical integration scheme in quantum dynamics is the split operator technique [6] which, unlike Chebyshev or Lanczos schemes, does not suffer from a time step restriction, such as \(\Delta t = O(\Delta x^{2})\) (see [12]). However, in the case of a semiclassical model (1), it is proved in [3] that the Lie–Trotter splitting requires a time-step of the order of \(\varepsilon ^{2}\), and the error is of order \(\Delta t/\varepsilon ^{2}\). For the Strang-splitting, convergence of order \((\Delta t)^{2}/\varepsilon ^{2}\) was observed in [2, 3] and proved in [4]. Our own numerical experiments with a fourth-order splitting in time, together with spectral discretizations in space show the same factor of \(1/\varepsilon ^{2}\).

The small parameter \(\varepsilon \) forces the choice of a small time-step (and for a Fourier based space discretization, a huge number of grid points) in order to have reliable results, even for a fourth-order scheme. Recent research has been done to control the error in such time-splitting spectral approximations [10]. We propose below a new splitting that is more appropriate for the semiclassical situation. We prove it has convergence of order \(\varepsilon (\Delta t)^{2}\), which improves instead of deteriorating when \(\varepsilon \) is reduced. While the submitted paper was in revision, the authors realized that new algorithms with this property are in development [1].

The new integration scheme is based on a spatial discretization via semiclassical wavepackets [7]. The wavepackets have already been useful in the time integration of the semiclassical time-dependent Schrödinger equation in many dimensions via a special Strang-splitting [5]. They are related to higher-order Gaussian beams which are known to allow computational meshes of size \(O(\varepsilon )\), and have several appealing properties, see e.g., [8, 9, 14]. A family of wavepackets forms an orthonormal basis of \(L^{2}\), which gives them several advantages. However, to the best of the authors' knowledge, the previously known algorithms for the time propagation of wavepackets, like those for Gaussian beams, also exhibit the \(1/\varepsilon ^{2}\)-dependence.

The main idea of the time-integration scheme in [5] was Strang-splitting between the kinetic and potential energy, together with the observation that the kinetic part and a quadratic part of the potential could be integrated exactly. This yielded a second splitting of the potential into a quadratic part and a remainder. The line of attack in [7] was slightly different: an approximate solution was built upon the integration of a system of ordinary differential equations for the parameters of the wavepackets. Then a second system of ordinary differential equations was used for determining the coefficients of the wavepackets. Our Algorithm 3 (called below semiclassical splitting) combines these two ideas. The main achievement is to have the factor \(\varepsilon \) instead of \(1/\varepsilon ^{2}\) in the error estimate. The order of convergence in time could be further improved via a higher-order splitting together with a Magnus integrator, but that is not the subject of this paper.

Let us start with a short introduction to semiclassical wavepackets. They are an example of a spectral basis consisting of functions that are defined on unbounded domains. For simplicity, we describe only the case of dimension \(d=1\), while the whole analysis can be carried out in general dimensions.

Given a set of parameters \(q,p,Q,P\), a family of semiclassical wavepackets \(\{\varphi _k^\varepsilon [q,p,Q,P], k=0,1,\ldots \}\) is an orthonormal basis of \(L^{2}(\mathbb R )\) that is constructed in [7] from the Gaussian

via a raising operator. In the notation used here, \(Q\) and \(P\) correspond to \(A\) and \(iB\) of [7], respectively. Note that \(Q\) and \(P\) must obey the compatibility condition \(\overline{Q}P-\overline{P}Q=2i\) (see [7]). Each state \(\varphi _k(x) = \varphi _k^\varepsilon [q,p,Q,P](x)\) is concentrated in position near \(q\) and in momentum near \(p\) with uncertainties \(\varepsilon |Q|\sqrt{k+\frac{1}{2}}\) and \(\varepsilon |P|\sqrt{k+\frac{1}{2}}\), respectively. The recurrence relation

holds for all values of \(x\). We gather together the parameters of the semiclassical wavepackets and write \(\Pi (t) = (q(t),p(t),Q(t),P(t))\), so that \(\varphi _k[\Pi ](x) = \varphi _k^\varepsilon [q,p,Q,P](x)\).
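To make the construction concrete, the wavepackets can be evaluated pointwise from the Gaussian and the recurrence relation, and their orthonormality can be checked numerically. The sketch below assumes the standard form of the Hagedorn construction, namely \(\varphi _0(x) = (\pi \varepsilon ^{2})^{-1/4} Q^{-1/2} \exp \bigl (\tfrac{i}{2\varepsilon ^{2}} P Q^{-1}(x-q)^{2} + \tfrac{i}{\varepsilon ^{2}} p(x-q)\bigr )\) and \(Q\sqrt{k+1}\,\varphi _{k+1} = \sqrt{2/\varepsilon ^{2}}\,(x-q)\varphi _k - \overline{Q}\sqrt{k}\,\varphi _{k-1}\); these formulas, the function names, and the test parameters are assumptions made for illustration. The inner products are approximated by a plain sum on a uniform grid.

```python
import cmath
import math


def wavepackets(x, K, eps, q, p, Q, P):
    """Values phi_0(x), ..., phi_{K-1}(x) of the assumed wavepacket family at one point x."""
    d = x - q
    phi0 = (math.pi * eps**2) ** -0.25 / cmath.sqrt(Q) * cmath.exp(
        1j / (2 * eps**2) * (P / Q) * d**2 + 1j / eps**2 * p * d
    )
    vals = [phi0]
    for k in range(K - 1):
        prev = vals[k - 1] if k > 0 else 0.0
        # Three-term recurrence: raise phi_k to phi_{k+1}.
        nxt = (math.sqrt(2.0) / eps * d * vals[k]
               - Q.conjugate() * math.sqrt(k) * prev) / (Q * math.sqrt(k + 1))
        vals.append(nxt)
    return vals


def gram_matrix(K, eps, q, p, Q, P, n=2001, width=12.0):
    """Approximate the inner products <phi_j, phi_k> by a sum on a uniform grid."""
    half = width * eps * abs(Q)          # the packets are negligible beyond this distance
    h = 2 * half / (n - 1)
    G = [[0j] * K for _ in range(K)]
    for i in range(n):
        v = wavepackets(q - half + i * h, K, eps, q, p, Q, P)
        for j in range(K):
            for k in range(K):
                G[j][k] += h * v[j].conjugate() * v[k]
    return G


# Hypothetical parameters; P0 is chosen so that conj(Q)P - conj(P)Q = 2i.
Q0 = 1 + 0.5j
P0 = 1j / Q0.conjugate()
G = gram_matrix(5, 0.1, 1.0, 0.5, Q0, P0)
```

Up to quadrature and rounding errors, `G` should be the identity matrix, reflecting the orthonormality of the family for any admissible parameter set.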

We assume we have an initial condition \(\psi (0)\) that is given as a linear combination of semiclassical wavepackets

If the initial condition is not given in terms of such wavepackets, but it is still well localized, then it can be approximated by such a finite sum. This introduces a discretization error, and the required projection is costly as long as no fast Hermite transform is available. However, typical initial values in chemical applications are in terms of eigenfunctions of harmonic oscillators (see [11, 13] for two examples among many). Hence, they are exactly such wavepackets or can be easily rewritten this way.

Note that we have an overall phase parameter \(S(t)\) that will enlarge the parameter set. Hence, we shall also write \(\varphi _{k}[\Pi ,S] = e^{iS/\varepsilon ^{2}} \varphi _{k}[\Pi ]\). Note that \(K\) can be taken as large as we wish, just by inserting more trivial coefficients \(c_{k}(0,\varepsilon ) = 0\). Theorem 2.10 in [7] establishes an upper bound for the semiclassical approximation: if the potential \(V\in C^{M+2}(\mathbb R )\) satisfies \(-C_{1}< V(x) < C_{2}e^{C_{3} x^{2}}\), there is an approximate solution \(v(t)\) of the semiclassical time-dependent Schrödinger equation (1) such that for any \(T>0\) we have:

From now on, \(\mathcal{C}\) will denote a generic constant, not depending on \(\varepsilon \) or any involved time-step. We also only consider potentials \(V\) that satisfy the above conditions. The approximate solution in Theorem 2.10 in [7] is defined as

with the parameters \(\Pi (t)\) and \(S(t)\) given by the solution to the following system of ordinary differential equations

The coefficients \(c_{k}(t,\varepsilon )\) obey a linear system of ordinary differential equations.

A similar result is valid in higher dimensions (see Theorem 3.6 of [7]). The dependence of \(\mathcal{C}\) on the end-time \(T\) is difficult to assess, and the system for the coefficients \(c_{k}(t,\varepsilon )\) is difficult to solve, so an alternative numerical scheme is necessary.

This approximation result motivates us to look for an algorithm that does not deteriorate as \(\varepsilon \rightarrow 0\). We note that our algorithm will separately handle the approximation for the parameters \(\Pi (t)\) and \(S(t)\) and the wave packet coefficients \(c_{k}(t,\varepsilon )\). This observation is essential for the construction of the algorithm.

In the next section we present several splittings, ending with our proposed Algorithm 3 (called below semiclassical splitting). Numerical simulations show the desired behavior in \(\varepsilon \) and time and emphasize the importance of the factor \(\varepsilon \) instead of \(1/\varepsilon ^{2}\). With the motivation of these numerical results we focus in the last section only on the analysis of the time-convergence.

2 Time-splittings

A starting point for an efficient time-integration is the scheme proposed in [5]: As in Strang-splitting, we decompose the Hamiltonian

\[ H = T + V(x) \]

into its kinetic part \(T = -\frac{\varepsilon ^{4}}{2}\Delta _{x}\) and its potential part \(V(x) = U(q(t),x) + W(q(t),x)\), where \(U(q(t),x)\) is the quadratic Taylor expansion of \(V(x)\) around \(q(t)\) and \(W(q(t),x)\) is the corresponding remainder:

\[ U(q,x) = V(q) + V^{\prime }(q)(x-q) + \frac{1}{2}V^{\prime \prime }(q)(x-q)^{2}, \qquad W(q,x) = V(x) - U(q,x). \]

In the context of the wavepackets, the propagation with \(U+W\) can be broken into two parts [5]. We call this algorithm the L-splitting for a time-step of length \(\Delta t\):

Algorithm 1

(L-splitting) \(H = \frac{1}{2} T + (U+W) +\frac{1}{2} T\)

1. Propagate the solution for time \(\frac{1}{2} \Delta t\), using only \(T\).
2. Propagate the solution for time \(\Delta t\), using only \(U\).
3. Propagate the solution for time \(\Delta t\), using only \(W\).
4. Propagate the solution for time \(\frac{1}{2} \Delta t\), using only \(T\).

As shown in [5], the steps 1, 2, and 4 reduce to simple updates of the numerically propagated parameters \(\tilde{\Pi }\) and \(\tilde{S}\) (starting, of course, from the given \(\Pi (0)\) and \(S(0)\)). Step 3 keeps the parameters \(\tilde{\Pi }\) and \(\tilde{S}\) fixed and evolves the set of coefficients via the system of ordinary differential equations

with a \(K\times K\) matrix \(F[\tilde{\Pi }(\frac{\Delta t}{2})]\) whose entries are

As mentioned in the Introduction, the global convergence of this algorithm (measured by the \(L^{2}\)-error) is observed to be \((\Delta t)^{2}/\varepsilon ^{2}\), which can also be proved as in [4].
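The parameter updates in steps 1, 2, and 4 of the L-splitting can be sketched as follows. This is an illustration under the assumption that the classical system for the parameters has the usual form \(\dot{q}=p\), \(\dot{p}=-V^{\prime }(q)\), \(\dot{Q}=P\), \(\dot{P}=-V^{\prime \prime }(q)Q\), \(\dot{S}=\frac{1}{2}p^{2}-V(q)\), so that the flows of \(T\) and \(U\) act on \((q,p,Q,P,S)\) by explicit formulas; the function names are hypothetical, and the coefficient update of step 3 is only marked by a comment.

```python
import math


def kinetic_step(state, tau):
    """Assumed exact flow of T on the parameters: free motion for time tau."""
    q, p, Q, P, S = state
    return (q + tau * p, p, Q + tau * P, P, S + tau * 0.5 * p * p)


def quadratic_step(state, tau, V, dV, d2V):
    """Assumed exact flow of the quadratic part U, expanded around the current q."""
    q, p, Q, P, S = state
    return (q, p - tau * dV(q), Q, P - tau * d2V(q) * Q, S - tau * V(q))


def l_splitting_parameters(state, dt, V, dV, d2V):
    """Steps 1, 2 and 4 of the L-splitting acting on (q, p, Q, P, S)."""
    state = kinetic_step(state, 0.5 * dt)
    state = quadratic_step(state, dt, V, dV, d2V)
    # Step 3 (propagation with W) would update the coefficients c_k here;
    # it leaves the parameters unchanged.
    return kinetic_step(state, 0.5 * dt)


def V(x):
    return 1.0 - math.cos(x)   # torsional potential of the benchmark problem


state = (1.0, 0.0, 1 + 0j, 1j, 0.0)          # q, p, Q, P, S
for _ in range(200):                          # 200 steps of length 0.01, end time 2
    state = l_splitting_parameters(state, 0.01, V, math.sin, math.cos)
q_end, p_end, Q_end, P_end, S_end = state
```

Each substep preserves the compatibility condition \(\overline{Q}P-\overline{P}Q=2i\) exactly, so the propagated parameters always define an admissible wavepacket family.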

A splitting of \(H=T+V\) that is of order 4 is the Y-splitting (see [15]). Denoting \(\theta =1/(2-2^{1/3})\), we have:

Algorithm 2

(Y-splitting \(H=T+V\))

1. Propagate the solution for time \(\theta \frac{1}{2}\Delta t\), using only \(T\).
2. Propagate the solution for time \(\theta \Delta t\), using only \(V\).
3. Propagate the solution for time \((1-\theta )\frac{1}{2}\Delta t\), using only \(T\).
4. Propagate the solution for time \((1-2\theta )\Delta t\), using only \(V\).
5. Propagate the solution for time \((1-\theta )\frac{1}{2}\Delta t\), using only \(T\).
6. Propagate the solution for time \(\theta \Delta t\), using only \(V\).
7. Propagate the solution for time \(\theta \frac{1}{2}\Delta t\), using only \(T\).

If we use this also for our decomposition \(H=T+U+W\), we get what we call the YL-splitting: each of the steps 2, 4, and 6 above is decomposed into a step for \(U\) and one for \(W\).
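The seven substeps of the Y-splitting can be tried out on the classical part of the problem. The sketch below applies them to \(\dot{q}=p\), \(\dot{p}=-V^{\prime }(q)\) for the torsional potential and estimates the convergence order from three step sizes; the step counts, the reference resolution, and all names are choices made for this illustration only.

```python
import math

THETA = 1.0 / (2.0 - 2.0 ** (1.0 / 3.0))
T_WEIGHTS = (THETA / 2, (1 - THETA) / 2, (1 - THETA) / 2, THETA / 2)
V_WEIGHTS = (THETA, 1 - 2 * THETA, THETA)


def y_step(q, p, dt, force):
    """One Y-splitting step for dq/dt = p, dp/dt = force(q)."""
    for i in range(3):
        q += T_WEIGHTS[i] * dt * p          # substeps 1, 3, 5: kinetic part
        p += V_WEIGHTS[i] * dt * force(q)   # substeps 2, 4, 6: potential part
    q += T_WEIGHTS[3] * dt * p              # substep 7: kinetic part
    return q, p


def integrate(q, p, t_end, n_steps, force):
    dt = t_end / n_steps
    for _ in range(n_steps):
        q, p = y_step(q, p, dt, force)
    return q, p


def force(q):
    return -math.sin(q)   # V(x) = 1 - cos(x)


# Reference solution on a much finer grid, then errors for three coarse grids.
q_ref, p_ref = integrate(1.0, 0.0, 2.0, 4096, force)
errors = []
for n in (16, 32, 64):
    q_n, p_n = integrate(1.0, 0.0, 2.0, n, force)
    errors.append(math.hypot(q_n - q_ref, p_n - p_ref))
observed_orders = [math.log2(errors[i] / errors[i + 1]) for i in range(2)]
```

The weights sum to one for both parts, and the condition \(2\theta ^{3}+(1-2\theta )^{3}=0\) cancels the third-order error term of the underlying symmetric scheme, which is what produces the fourth order. Note that the middle weight \(1-2\theta \) is negative.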

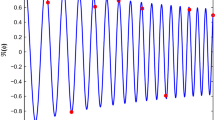

We observed convergence of order \((\Delta t)^{4}/\varepsilon ^{2}\) in all tests based on this YL-splitting. Since the expensive propagation of the coefficients must be done three times (in the steps 3, 6, and 9) during each time step, the computational time is much greater than that for the L-splitting.

We measure the error in the experiments via a numerical approximation of the \(L^{2}\)-norm based on \(2^{16}\) equispaced points in the space domain; in order to emphasize that this is not the exact \(L^{2}\)-norm of the error, we denote it by \(\Vert \cdot \Vert _{wf}\) (see Fig. 1). The benchmark problem used in all numerical results presented here is based on the torsional potential \(V(x)=1-\cos (x)\) with the initial value \(\varphi _{0}[1,0,1,i]\), which is propagated from the initial time \(t=0\) to the end time \(T=2\) using \(K=8\) wavepackets. Tests with different potentials (including \(V(x)=x^4\)) and various other initial values produced similar results.

Equation (4) was solved via a standard Padé approximation of the matrix exponential. We also used the Arnoldi method when computing with much larger \(K\), without observing any significant difference concerning the results in this paper. The components of \(F[\tilde{\Pi }(\frac{\Delta t}{2})]\) in (4) were computed via a very precise Gauss–Hermite quadrature which was adapted to the shape of the wavepackets. The computational setting is chosen such that the error due to the discretization in space has no significant effect. A larger number of basis functions does not make any difference until the end time \(T=2\), which is already a long integration time, due to the \(\varepsilon \)-time scale. Other end times \(T=4,6,8,10\) are possible (Footnote 1) but require the use of more basis functions. Doing this may be questionable for the semiclassical approach, since the Ehrenfest-time is proportional to \(\log (1/\varepsilon ^{2})\).

In each case, the reference solution used to estimate the error was the numerical solution computed with the time step \(\Delta t_0=2^{-13}\).

Remark 1

Since the errors grow like \(1/\varepsilon ^{2}\) as \(\varepsilon \rightarrow 0\), the solution based on traditional second or fourth order splittings (Fourier or wavepacket based) should not be used as a reference solution for the semiclassical splitting, unless one can afford the sufficiently small time-steps and the large number of Fourier points. E.g., in the case of the harmonic oscillator, the exact solution is represented by a few wavepackets independently of the value of \(\varepsilon \), while the sampling rate for the Fourier approximation has to be at least \(2/\varepsilon ^{2}\) per dimension. This means for the space domain \([-\pi ,\pi ]\) about \(2\times 10^{5}\) points when \(\varepsilon =10^{-2}\) and \(2\times 10^{7}\) points when \(\varepsilon =10^{-3}\). Larger space domains could be necessary in order to avoid artificial boundary conditions, and hence they would require the use of even more computational points. The large number of space points makes one time-step of the traditional Fourier based split operator method computationally intensive. Furthermore, the inherent time-step restriction \(\Delta t \ll \varepsilon \) forces one to use many time-steps, yielding a long computational time even for efficient algorithms. In the case of other potentials, the solution based on wavepackets is no longer exact. However, if the solution remains localized in space (and this can be proved in the semiclassical situation), then a few wavepackets are sufficient for a good approximation, making the new numerical method competitive, even though we do not have such a marvelous tool as the FFT. This observation is even more important if we envisage higher dimensional applications.

The critical idea for our new algorithm comes from the observation that for small \(\varepsilon \), the largest errors arise from the use of numerically computed parameters \(\Pi \) and \(S\), while the expensive part of the computation is finding the coefficients \(c\). On the other hand, \(\varepsilon \) is not present in the classical equations (3) for the parameters, but it appears only in the equation for the coefficients (4). Our algorithm combines the Strang-splitting for \(H=(T+U)+W\) and the Y-splitting for \(T+U\), i.e., it is a fourth order scheme for the approximation of the parameters \(\Pi \) and \(S\). We keep the expensive part of the computation (involving the remainder \(W\)) in the inner part of the Strang-splitting \(H = \frac{1}{2} (T+U) + W + \frac{1}{2} (T+U)\) with a large time step \(\Delta t\). We use a small time step \(\delta t\) for the cheap propagation of the parameters. This small time step is chosen so that the desired overall convergence rate \(\varepsilon (\Delta t)^{2}\) is achieved with a minimum value of \(\Delta t/\delta t=N=N(\varepsilon ,\Delta t)\) small time steps per large time step. We call the resulting propagation algorithm semiclassical-splitting.

Algorithm 3

(Semiclassical-splitting) Define \(N:=\mathtt {ceil}\left( 1+\frac{\sqrt{\Delta t}}{\varepsilon ^{3/4}}\right) \) and \(\delta t:=\frac{\Delta t}{N}\), which hence satisfies \(\delta t \le \min \{\varepsilon ^{3/4} \sqrt{\Delta t}, \Delta t \}\).

1. Propagate the solution for time \(\frac{1}{2} \Delta t\) via \(N\) steps of length \(\delta t\) using the Y-splitting for \(T+U\) (Algorithm 2).
2. Propagate the solution for time \(\Delta t\), using only \(W\), i.e., solve Eq. (4).
3. Propagate the solution for time \(\frac{1}{2} \Delta t\) via \(N\) steps of length \(\delta t\) using the Y-splitting for \(T+U\) (Algorithm 2).
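The choice of the inner step can be illustrated with a short computation. The sketch below evaluates \(N\) and \(\delta t\) as defined at the top of Algorithm 3 and checks the stated bound on a few sample values; the function name and the sample values of \(\Delta t\) and \(\varepsilon \) are chosen here for illustration.

```python
import math


def inner_steps(dt: float, eps: float):
    """Return (N, delta_t) with N = ceil(1 + sqrt(dt) / eps**(3/4)) and delta_t = dt / N."""
    n = math.ceil(1.0 + math.sqrt(dt) / eps ** 0.75)
    return n, dt / n


# Sample (dt, eps) pairs, chosen arbitrarily for the illustration.
samples = [(0.5, 0.1), (0.1, 0.1), (0.1, 0.01), (0.01, 0.001)]
results = [(dt, eps) + inner_steps(dt, eps) for dt, eps in samples]
for dt, eps, n, delta_t in results:
    print(f"dt={dt}, eps={eps}: N={n}, delta_t={delta_t:.3e}")
```

Since \(\delta t \le \varepsilon ^{3/4}\sqrt{\Delta t}\), one has \((\delta t)^{4}\le \varepsilon ^{3}(\Delta t)^{2}\), so a fourth-order error in the inner steps carries the small factor \(\varepsilon ^{3}\). The number of inner steps grows only like \(\varepsilon ^{-3/4}\) as \(\varepsilon \) decreases.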

Figures 2, 3, and 4 display the results of numerical experiments based on this semiclassical splitting. They confirm our theoretical considerations from Sect. 3, as long as the strong round off in \(e^{-i\alpha /\varepsilon ^{2}}-e^{-i(\alpha +\mathrm{eps})/\varepsilon ^{2}}\) (with \(\alpha \ne 0\) and eps = machine precision) in the measurement of the error can be avoided. The effect of this round off in the measurement of the error for \(\Delta t\) and \(\varepsilon \) simultaneously small is evident in Fig. 2; this effect is missing when measuring the error in the coefficients (see Fig. 3).

Fig. 4 Left: difference between the solution via the Fourier method on \(2^{16}\) grid points and the semiclassical-splitting with eight wavepackets; the \(1/\varepsilon ^{2}\) factor in the error of the Fourier solution dominates. Right: physical time in femtoseconds corresponding to the simulation parameter \(\varepsilon \)

The left part of Fig. 4 shows the \(L^{2}\)-norm of the difference between the solution via the Fourier method with \(2^{16}\) grid points and the semiclassical-splitting with eight wavepackets. The semiclassical-splitting gives such a good approximation of the exact solution for small \(\varepsilon \) that we see the \(\varepsilon ^{-2}\)-deterioration until the Fourier-based solution produces nonsense. In an intermediate range we see the \(\varepsilon \)-dependence of the error in the semiclassical splitting. We also see that for \(\varepsilon >\frac{1}{4}\), the solution based on eight wavepackets is also poor. The right part of Fig. 4 shows the physical end time in femtoseconds for different values of the simulation parameter \(\varepsilon \). Smaller \(\varepsilon \) means a later physical time at the same computational end time \(T\).

Finally, let us note that the convergence order can be improved to \(\varepsilon (\Delta t)^{4}\) by replacing the Y-splitting with a higher order one and using a Magnus integrator for the step 2 in Algorithm 3. In Fig. 5 we plotted the error at different end times (\(T=2\) and \(T=5\)) in the semiclassical-splitting with 8 (left) and 16 (right) wavepackets when the reference solution is computed via the Magnus method with 96 wavepackets: note that the \(\varepsilon \)-dependence of the approximation error dominates the second order error in time for large \(\varepsilon \) and that a longer end time needs more basis functions in order to reach the same accuracy.

Fig. 5 The error dependence of the coefficients on \(\varepsilon \) and \(\Delta t\) in the semiclassical-splitting at end time \(T=2\) (above) and \(T=5\) (below). Left: \(K=8\) basis functions. Right: \(K=16\) basis functions. The reference solution is computed via 96 wavepackets and a Magnus time-integrator of fourth order

All time-steps in the steps 1 and 3 of the semiclassical-splitting, as well as in the steps 1, 2 and 4 of the L-splitting, are very cheap, since they consist of simple updates of the parameters \(\Pi \) and \(S\). A higher order splitting for those is indeed more expensive, but its cost is negligible compared to the amount of work required for solving Eq. (4) via Padé approximation or even a Krylov method with a few steps. The internal parameter \(\delta t\) depends (mildly) on \(\varepsilon \), yielding more time-steps \(N\) in the steps 1 and 3 of the semiclassical-splitting, but this increase is still insignificant (for the same reason): the improvement in computing time when using large time-steps \(\Delta t\) is so important that the extra work in the approximation of the parameters \(\Pi \) and \(S\) is not perceptible. A comparative look at Figs. 1 and 2 reveals that one may use a larger time step \(\Delta t\) in the semiclassical algorithm than in the YL-splitting, while achieving the same error. The influences of the number of basis functions used and the detailed study of the method in many dimensions are the subjects of on-going and future investigations.

We now turn to the main topic of this paper, the analysis of the time-convergence of the semiclassical splitting.

3 Convergence results for the semiclassical-splitting algorithm

We first note that one cannot address the convergence of the proposed algorithms via the local error representation of exponential operator splitting methods as in Section 5 of [4]. This is because the parameters \(\varepsilon ^{2}\) and \(\Delta t\) enter the splitting in fundamentally different ways.

The overall error when using the semiclassical-splitting algorithm consists of an approximation error caused by using the representation with a finite number of moving wavepackets and a time-discretization error. Our main result is the following:

Theorem 1

Suppose the initial value is

and that \(K\ge K_1+3(N-1)\), where \(N=1,2,\) or \(3\). If the potential \(V\in C^{5}(\mathbb R )\) and its derivatives satisfy \(-C_{1}< V^{(s)}(x) < C_{2}e^{C_{3} x^{2}}\), for \(s=0,1,\ldots ,5\), then there are constants \(\mathcal{C}_{1}\) and \(\mathcal{C}_{2}\), such that

where \(\tilde{u}\) is constructed via the semiclassical splitting Algorithm 3. The constants \(\mathcal{C}_{1}\) and \(\mathcal{C}_{2}\) do not depend on \(\Delta t,\delta t\), or \(\varepsilon \), but depend on the potential \(V\) and its derivatives up to fifth order, on the \(\sup \)’s of \(|Q|,|\dot{Q}|,|\ddot{Q}|,|q|,|\dot{q}|,|\ddot{q}|\) from (3) on \([0,T]\), on the number \(K\) of wavepackets used in the approximation, and on the final integration time \(T\).

Remark

If \(V\in C^l(\mathbb R )\) with \(l>5\), then the theorem is true with a larger \(N\). More precisely, if \(K\ge K_1+3(N-1)\), the first term on the right hand side of (5) still has the form \(\mathcal{C}_{1}\varepsilon ^N\), but the restriction on \(N\) becomes \(N=1,2,\ldots ,l-2\), and \(\mathcal{C}_{1}\) depends also on \(V^{(s)}\) for \(s\le l\).

Let us briefly outline the several steps that make up the proof of this theorem. We introduce an approximation \(u\) to the solution of the time-dependent Schrödinger equation that is similar to (2). The parameters \(\Pi \) and \(S\) satisfy the system (3), and the coefficients \(c_{k}\) are given by the solution to the linear system of ordinary differential equations

where the \(K\times K\) matrix \(F[\Pi ]\) has entries

Then, we prove that the approximation error \(\Vert \psi (T) - u(T)\Vert \) is bounded by the Galerkin error, so that we can concentrate in the rest of the section only on \(\Vert u(T) - \tilde{u}(T)\Vert \), or rather on showing that the error in one time-step \(\Vert u(\Delta t) - \tilde{u}(\Delta t)\Vert \) is of order \(\varepsilon (\Delta t)^{3}\). We introduce an intermediate function \(u_{1}\) constructed via the parameters \(\Pi (t)\) from the exact solution of (3) and coefficients \(c^{1}\) from the linear system with constant matrix \(F^{1} = F[\Pi ^{1}]\), where \(\Pi ^{1} = \Pi (\frac{\Delta t}{2} )\). We then carefully investigate the quantities \( \Vert u(\Delta t)-u_{1}(\Delta t)\Vert \) and \(\Vert u_{1}(\Delta t)-\tilde{u}(\Delta t)\Vert \). A crucial observation is that the \(\varepsilon \)-dependence of the matrix entries \(F_{j,k}\) can be written explicitly in several different ways that involve terms which contain \(\varepsilon \)-independent wavepackets. The fast decay at infinity of these wavepackets and the particular \(\varepsilon \)-dependence let us establish appropriate bounds on the matrix entries \(F_{j,k}\), as well as on their time-derivatives. Also important is a bound on the error when using approximate wavepackets \(\tilde{\varphi }_{k}\) instead of \(\varphi _{k}\). The details of the proof follow; let us now start with the first step.

Our proof relies on the following elementary lemma that is Lemma 2.8 of [7]. We restate it here for completeness and to make the notation consistent with this paper.

Lemma 1

Suppose \(H(\varepsilon )\) is a family of self-adjoint operators for \(\varepsilon >0\). Suppose \(\psi (t,\varepsilon )\) belongs to the domain of \(H(\varepsilon )\), is continuously differentiable in \(t\), and approximately solves the Schrödinger equation in the sense that

where \(\zeta (t,\varepsilon )\) satisfies

Then

Proof

By the unitarity of the propagator \(e^{-itH(\varepsilon )/\varepsilon ^2}\) and the fundamental theorem of calculus, the quantity on the left hand side of (7) can be estimated as

This proves the lemma. \(\square \)
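The estimate used in this proof can be written out along the following lines. This is a sketch under the assumption that the approximate equation has the form \(i\varepsilon ^{2}\partial _{t}\psi = H(\varepsilon )\psi + \zeta (t,\varepsilon )\) and that the residual is bounded as \(\Vert \zeta (t,\varepsilon )\Vert \le \mu (t,\varepsilon )\); the symbol \(\mu \) is notation assumed here.

```latex
\begin{aligned}
\bigl\| e^{-itH(\varepsilon)/\varepsilon^{2}}\psi(0,\varepsilon) - \psi(t,\varepsilon) \bigr\|
  &= \bigl\| \psi(0,\varepsilon) - e^{itH(\varepsilon)/\varepsilon^{2}}\psi(t,\varepsilon) \bigr\| \\
  &= \Bigl\| \int_{0}^{t} \frac{\partial}{\partial s}
       \Bigl( e^{isH(\varepsilon)/\varepsilon^{2}}\psi(s,\varepsilon) \Bigr)\, ds \Bigr\| \\
  &= \Bigl\| \int_{0}^{t} e^{isH(\varepsilon)/\varepsilon^{2}}\,
       \frac{i}{\varepsilon^{2}}
       \Bigl( H(\varepsilon)\psi(s,\varepsilon)
              - i\varepsilon^{2}\,\partial_{s}\psi(s,\varepsilon) \Bigr)\, ds \Bigr\| \\
  &\le \varepsilon^{-2} \int_{0}^{t} \| \zeta(s,\varepsilon) \| \, ds
   \;\le\; \varepsilon^{-2} \int_{0}^{t} \mu(s,\varepsilon)\, ds .
\end{aligned}
```

The first equality uses the unitarity of the propagator, the second the fundamental theorem of calculus, and the last line uses unitarity again together with the assumed bound on the residual.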

Theorem 2

The approximation error is bounded by the Galerkin error:

Under the hypotheses of Theorem 1 (or the remark after it), the integrand here is bounded by

Proof

Corollary 2.6 of [7] ensures that each \(\varphi _{k}[\Pi ,S]\) satisfies the time dependent Schrödinger equation with the Hamiltonian \(T+U\). Using (6), we see that

where \(P_{K}\) denotes the \(L^{2}\)-orthogonal projection onto the space spanned by the basis functions \(\varphi _{0}[\Pi ],\ldots ,\varphi _{K-1}[\Pi ]\).

We shall apply Lemma 1, so we only have to find an upper bound for the Galerkin error

We note that \(\Vert P_{K}Wu - Wu\Vert \) is decreasing in \(K\), so when \(N=1\), it suffices to prove \(\Vert P_{K_1}Wu - Wu\Vert =O(\varepsilon ^3)\). However, \(\Vert P_{K}Wu - Wu\Vert \le \Vert Wu\Vert \), and since \(W\) is locally cubic near \(x=q\), estimate (2.68) of [7] immediately proves the result.

When \(N=2\), it suffices to prove \(\Vert P_{K_1+3}Wu - Wu\Vert =O(\varepsilon ^4)\). To show this, we first note that \(\Vert (1-P_{K_1})u\Vert =O(\varepsilon )\) as in the proof of Theorem 2.10 of [7]. Next,

So, we have

The first term on the right hand side is zero since \((P_{K_1+3}-1)(x-q)^3P_{K_1}=0\). The second term is \(O(\varepsilon ^4)\) as in the proof of Theorem 2.10 of [7]. The third term is bounded by \(\Vert W(1-P_{K_1})u\Vert \). This quantity is \(O(\varepsilon ^4)\) because \(W\) is locally cubic as in the \(N=1\) case and \(\Vert (1-P_{K_1})u\Vert =O(\varepsilon )\).

The \(N=3\) case is similar, but with the next order Taylor series estimates. The \(N\le l-2\) case is similar under the hypotheses of the remark, but with higher order Taylor series estimates.\(\square \)

The first term on the right hand side of estimate (5) in Theorem 1 arises from the estimate in Theorem 2. The rest of this paper concerns the second term on the right hand side of (5).

We concentrate on the local time-error when approximating \(u(\Delta t)\); if we show that it can be bounded by \(\varepsilon (\Delta t)^{3}\), then standard arguments and Theorem 2 prove Theorem 1.

The first step in Algorithm 3 produces approximations \(\tilde{\Pi }(\frac{\Delta t}{2})\) of \({\Pi }(\frac{\Delta t}{2})\) and \(\tilde{S}(\frac{\Delta t}{2})\) of \({S}(\frac{\Delta t}{2})\), both with errors \(\mathcal{C}(\delta t)^{4}\frac{\Delta t}{2}\). Given these approximate parameters, the coefficients \(\tilde{c}_{k}\) solve the system (6) with the constant matrix \(\tilde{F}=F[\tilde{\Pi }(\frac{\Delta t}{2})]\) on the right hand side, as in (4). They enter in the expression of the numerical solution at the end of the Strang-splitting

In practice, they are computed via expensive (Padé or Arnoldi) iterations, but here we assume they are computed exactly.

In order to shorten the formulas, we denote \(\varphi _{k}=\varphi _{k}[{\Pi }]\) and \(\tilde{\varphi }_{k}=\varphi _{k}[\tilde{\Pi }]\).

To facilitate the proof, we introduce \(u_{1}(t)\), constructed via the parameters \(\Pi (t)\) from the exact solution of (3) and coefficients \(c^{1}\) from the linear system with constant matrix \(F^{1} = F[\Pi ^{1}]\), where \(\Pi ^{1} = \Pi (\frac{\Delta t}{2} )\):

With this we construct

which has the parameters of \(u\), but the coefficients \(c^{1}\). The local error then decomposes as

The next two theorems give upper bounds for these two last terms, ensuring the desired bound on the local error.

We now take a more careful look at the Galerkin matrix \(F\). A crucial observation is that as in section 4.1 of [5], the change of variables \(x=q+ \varepsilon y\) allows us to represent

in terms of \(\varepsilon \)-independent, but orthogonal functions \(\phi _{k}\), given by the recurrence relation

By this change of variables, all of the dependence on \(\varepsilon \) has been moved out of the wavepackets and put into the operator \(W(q+\varepsilon y)\), which is

Note that \(Q\) is not present in the expression for \(W\), while the functions \(\phi _{k}\) are wavepackets that depend on \(Q\) only: \(\phi _{k} = \varphi _{k}^{\varepsilon =1}[0,0,Q,i(\overline{Q})^{-1}]\). We use this observation in the proofs of the following lemmas.
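The effect of this change of variables can be observed numerically. The sketch below assumes the special case \(Q=1\), in which the \(\varepsilon \)-independent functions \(\phi _{k}\) are taken to be the orthonormal Hermite functions, and it assumes the matrix entries have the form \(F_{j,k}=\int \phi _{j}(y)\,W(q,q+\varepsilon y)\,\phi _{k}(y)\,dy\) with \(W\) the remainder of \(V(x)=1-\cos (x)\) after its quadratic Taylor polynomial at \(q\). A plain sum on a uniform grid replaces the Gauss–Hermite quadrature used in the experiments, and all names are hypothetical.

```python
import math


def hermite_functions(y, K):
    """Orthonormal functions phi_0..phi_{K-1} at y for the assumed case Q = 1."""
    vals = [math.pi ** -0.25 * math.exp(-0.5 * y * y)]
    for k in range(K - 1):
        prev = vals[k - 1] if k > 0 else 0.0
        vals.append((math.sqrt(2.0) * y * vals[k] - math.sqrt(k) * prev) / math.sqrt(k + 1))
    return vals


def remainder(q, x):
    """W(q, x) for V(x) = 1 - cos(x): V minus its quadratic Taylor polynomial at q."""
    d = x - q
    taylor = (1 - math.cos(q)) + math.sin(q) * d + 0.5 * math.cos(q) * d * d
    return (1 - math.cos(x)) - taylor


def galerkin_matrix(q, eps, K, n=2401, half_width=12.0):
    """Approximate F_{j,k} = int phi_j(y) W(q, q + eps*y) phi_k(y) dy on a uniform grid."""
    h = 2 * half_width / (n - 1)
    F = [[0.0] * K for _ in range(K)]
    for i in range(n):
        y = -half_width + i * h
        phi = hermite_functions(y, K)
        w = h * remainder(q, q + eps * y)
        for j in range(K):
            for k in range(K):
                F[j][k] += w * phi[j] * phi[k]
    return F


def max_entry(F):
    return max(abs(v) for row in F for v in row)


F_coarse = galerkin_matrix(1.0, 0.05, 8)
F_fine = galerkin_matrix(1.0, 0.025, 8)
ratio = max_entry(F_coarse) / max_entry(F_fine)
```

Halving \(\varepsilon \) should reduce the largest entry by a factor close to \(2^{3}=8\), because \(W\) is locally cubic near \(x=q\) and all of the \(\varepsilon \)-dependence sits in \(W(q+\varepsilon y)\).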

Lemma 2

Suppose \(g\) and \(Z\) are functions on \(\mathbb{R }\) that satisfy \(g\in L^2(\mathbb{R },dy)\) and \(|Z(y)|\le P(y) e^{C \varepsilon ^2y^2/2}\), where \(P\) is a non-negative polynomial. Then there exists a constant \(\mathcal{C}\) depending only on \(j\) and \(Q\), such that

for all \(\varepsilon <\frac{|Q|^{-1}}{\sqrt{2 C}}\) if \(C>0\). If \(C=0\), then the estimate is valid for \(\varepsilon \in (0, D]\) for any fixed positive \(D\).

Proof

Let us assume the more difficult situation \(C>0\). By the Schwarz inequality, \(|\langle \phi _j,Z g\rangle |\le \Vert Z\phi _j\Vert \Vert g\Vert \), so it suffices to prove that \(\Vert Z \phi _j\Vert \) is finite. However,

where \(p\) is a polynomial. Under our assumptions, elementary estimates show that this integral is bounded by a constant, uniformly for \(\varepsilon ^2<\frac{|Q|^{-2}}{2 C}\). \(\square \)

Lemma 3

The entries of the matrix \(F[\Pi ]\) and their first two time-derivatives are bounded by constants times \(\varepsilon ^{3}\):

where the constants \(\mathcal{C}_l\) depend only on \(j,k,Q,\dot{Q},\ddot{Q},\dot{q},\ddot{q}\), and bounds on the third, fourth, and fifth derivatives of \(V\).

Proof

We use expression (10) to write

We then let \(z=q(t)+\sigma \varepsilon y\) and rewrite the inner integral as

Next, we interchange the order of integration to obtain

Lemma 2 with \(Z(y) = y^3 V^{\prime \prime \prime }(q(t)+\sigma \varepsilon y)\) gives the result for \(F_{j,k}\).

To study \(\dot{F}_{j,k}\), we take the time derivative of expression (12). The only time dependent quantities here are \(\phi _j,\phi _k\), and \(V^{\prime \prime \prime }(q(t)+\sigma \varepsilon y)\). The time dependence in the \(\phi \)’s comes only from \(Q(t)\) and its conjugate, while the time dependence in \(V^{\prime \prime \prime }(q(t)+\sigma \varepsilon y)\) comes only from \(q(t)\). The result for \(\dot{F}_{j,k}\) then follows by several applications of Lemma 2.

The result for \(\ddot{F}_{j,k}\) follows from the same arguments applied to the second time derivative of (12). \(\square \)
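The \(\varepsilon ^{3}\) scaling can be checked numerically. The sketch below assumes that \(W(q+\varepsilon y)\) is the remainder of the quadratic Taylor polynomial of \(V\) around \(q\), which is the form consistent with the \(\sigma \)-integral over \(V^{\prime \prime \prime }\) used in the proof. The test potential \(V=\cos \) and all function names are hypothetical.

```python
import math

# Hypothetical smooth test potential and its derivatives (not from the paper).
V = math.cos
dV = lambda x: -math.sin(x)
d2V = lambda x: -math.cos(x)
d3V = math.sin

def W(q, h):
    """Remainder of the quadratic Taylor polynomial of V around q, evaluated at q + h."""
    return V(q + h) - V(q) - h * dV(q) - 0.5 * h * h * d2V(q)

def W_integral_form(q, h, n=2000):
    """Same remainder as (h^3 / 2) * int_0^1 (1 - s)^2 V'''(q + s h) ds, via the midpoint rule."""
    ds = 1.0 / n
    acc = 0.0
    for i in range(n):
        s = (i + 0.5) * ds
        acc += (1.0 - s) ** 2 * d3V(q + s * h)
    return 0.5 * h**3 * acc * ds

q, y = 0.7, 1.3
for eps in (0.2, 0.1, 0.05, 0.025):
    h = eps * y
    print(f"eps={eps:<6} W/eps^3={W(q, h) / eps**3:+.6f}  "
          f"integral form/eps^3={W_integral_form(q, h) / eps**3:+.6f}")
print("limit y^3 V'''(q) / 6 =", y**3 * d3V(q) / 6.0)
```

Both columns should agree and approach \(y^{3}V^{\prime \prime \prime }(q)/6\) as \(\varepsilon \rightarrow 0\), which is the pointwise version of the bound that Lemma 2 turns into an estimate for \(F_{j,k}\).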

Corollary 1

where \(\mathcal{C}\) has the same dependence as in Lemma 3 and depends additionally on \(K\), but is independent of \(\varepsilon \).

Lemma 4

The error caused by using the approximate parameters \(\tilde{\Pi }\) in the wavepacket \(\varphi _{k}\) satisfies

Proof

Using a homotopy between \(\Pi \) and \(\tilde{\Pi }\), one can prove (see Note 2) via careful calculations that

The fact that the Y-splitting with time step \(\delta t\) for \(\Pi \) on an interval of length \(\Delta t\) is of fourth order then concludes this proof.

Let us briefly sketch the proof of the above inequality, which is the key to this lemma. We can construct a homotopy \(\Pi [\theta ] = (q[\theta ], p[\theta ], Q[\theta ], P[\theta ])\) for \(\theta \in [0,1]\) with the properties

such that the compatibility conditions for \(P[\theta ]\) and \(Q[\theta ]\) are satisfied. These parameters then give a corresponding homotopy \(\varphi _{0}^{\varepsilon }[\theta ] = \varphi _{0}^{\varepsilon }(\Pi [\theta ])\), for the raising and lowering operators and \(\varphi _{k}^{\varepsilon }[\theta ]\) for every \(k\). Using this homotopy, we have

An induction argument together with lengthy careful calculations gives an expression for \( \varphi _{k}^{\prime }[\theta ]\) as a linear combination of slightly more basis functions. In the one-dimensional case this reads simply

with the complex coefficients satisfying

which completes the proof. In higher dimensions, the above sum extends over basis functions having \(m_{j} < K_{j}+1\) for the \(j\)th coordinate. \(\square \)
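The fourth-order property used in this proof can be observed numerically. The sketch below assumes that the Y-splitting is Yoshida's three-stage composition of a symmetric second-order step, and applies it only to the classical pair \(\dot{q}=p\), \(\dot{p}=-V^{\prime }(q)\) with the hypothetical test potential \(V(q)=q^{2}/2\), for which the exact flow is known. It does not propagate \(Q\) and \(P\), and the function names are illustrative.

```python
import math

def dV(q):
    """Gradient of the hypothetical test potential V(q) = q^2 / 2."""
    return q

def strang_step(q, p, h):
    """Symmetric second-order step: half drift, full kick, half drift."""
    q += 0.5 * h * p
    p -= h * dV(q)
    q += 0.5 * h * p
    return q, p

# Yoshida's triple-jump weights: W1 + W0 + W1 = 1 and 2 W1^3 + W0^3 = 0.
W1 = 1.0 / (2.0 - 2.0 ** (1.0 / 3.0))
W0 = 1.0 - 2.0 * W1

def yoshida_step(q, p, h):
    """Fourth-order step composed of three Strang steps with weights W1, W0, W1."""
    for w in (W1, W0, W1):
        q, p = strang_step(q, p, w * h)
    return q, p

def error_at(T, n):
    """Error of n Yoshida steps on [0, T] against the exact solution q = cos t, p = -sin t."""
    q, p = 1.0, 0.0
    for _ in range(n):
        q, p = yoshida_step(q, p, T / n)
    return math.hypot(q - math.cos(T), p + math.sin(T))

errors = [error_at(1.0, n) for n in (10, 20, 40, 80)]
for coarse, fine in zip(errors, errors[1:]):
    print(f"error {fine:.3e}, observed order {math.log2(coarse / fine):.2f}")
```

Halving the step should reduce the error by a factor close to 16, which is the behaviour behind the \((\delta t)^{4}\) factor in the estimate.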

Lemma 5

The error caused by using the approximate parameters \(\tilde{\Pi }\) in the matrix \(F\) satisfies

with the constant \(\mathcal{C}\) depending on \(Q^{1},\tilde{Q}\), and \(V\) and its derivatives up to third order.

Proof

The representation (8) of the entries of the matrix \(F\) that depends only on \(Q\) is again the key idea: it allows us to write \(F^{1}_{j,k} - \tilde{F}_{j,k}\) as the sum of the following three terms

where

and

Note that \(\phi ^{1}_{j} = \phi _{j}[Q]\) and \(\tilde{\phi }_{j}=\phi _{j}[\tilde{Q}]\) do not depend on \(q, p\), or \(\varepsilon \). Lemma 2 now applies with \(Z=W^{1}/\varepsilon ^{3}\) and \(g= \phi ^{1}_{k} - \tilde{\phi }_{k}\) or \(Z=\tilde{W}/\varepsilon ^{3}\) and \(g = \phi ^{1}_{j} - \tilde{\phi }_{j}\) to estimate the first and the last term, respectively, by \(\mathcal{C}\varepsilon ^{3} \Vert \phi ^{1}_{k} - \tilde{\phi }_{k}\Vert \) or \(\mathcal{C}\varepsilon ^{3} \Vert \phi ^{1}_{j} - \tilde{\phi }_{j}\Vert \). As in the previous lemma, the last terms are both bounded by \(\mathcal{C}\varepsilon (\delta t)^{4}\Delta t\), which is one order (in \(\varepsilon \)) smaller than the stated result. The largest error arises from the middle term. We bound it using (9) with the corresponding \(q^{1}\) and \(\tilde{q}\) and Lemma 2 again. This yields a bound of \(\mathcal{C}(2+\varepsilon +\frac{1}{2}\varepsilon ^{2}) |q^{1}-\tilde{q}|\). Combining all these estimates, we get the upper bound for the quantity in the lemma:

which is bounded by \(\mathcal{C}(\delta t)^{4} \Delta t\) as in the previous lemma. \(\square \)

Theorem 3

The difference between the approximate solution and the intermediate solution is

where \(\mathcal{C}\) depends on \(K\) and \(V\) and its derivatives up to 5th order, on \(Q\) for \(t\) between 0 and \(T\), but is independent of \(\varepsilon \) and \(\Delta t\).

Proof

Denoting the (unitary) propagator for (6) by \(\mathcal{U}(t,s)\), we can express the difference between the approximate solution and the intermediate solution as

We abbreviate \(F(s):=F[\Pi (s)]\), and observe that since \(F^{1} = F[\Pi (\frac{\Delta t}{2})]\), the expression in the second norm above is the integral from 0 to \(\Delta t\) of

Thus, we have

Standard arguments from the proof of the convergence order of the midpoint quadrature rule then show that

with the remainder \(R\) involving the Peano kernel and a factor containing the second derivative with respect to \(s\) of the integrand in the above formula. The first derivative of the integrand is

The second derivative has an even longer expression, but has the same character as the first one: every term containing the factor \(1/\varepsilon ^{2}\) also contains a factor of \(F^{1}\) or \(F(s)\) or \(\dot{F}(s)\), which are of order \(\varepsilon ^{3}\), according to Corollary 1. Hence, the leading order terms are those involving only \(\dot{F}(s)\) and \(\ddot{F}(s)\), i.e., similar to the middle term in the first derivative. Those terms are themselves of order \(\varepsilon ^{3}\), which shows that the remainder \(R\) is bounded by \(\mathcal{C}\varepsilon ^{3}\). \(\square \)
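The midpoint-rule argument can be illustrated on a scalar integrand. The sketch below uses the hypothetical test function \(f(s)=e^{s}\) in place of the operator-valued integrand of the proof, and compares the one-point midpoint error on \([0,\Delta t]\) with the standard bound \(\frac{(\Delta t)^{3}}{24}\max |f^{\prime \prime }|\). The function names are illustrative.

```python
import math

def midpoint_error(dt):
    """Error of the one-point midpoint rule on [0, dt] for the test integrand f(s) = exp(s)."""
    exact = math.expm1(dt)              # integral of exp(s) over [0, dt]
    approx = dt * math.exp(0.5 * dt)    # dt * f(dt / 2)
    return abs(exact - approx)

def peano_bound(dt):
    """Bound dt^3 / 24 * max |f''| on [0, dt]; here f'' = exp, which is largest at s = dt."""
    return dt**3 / 24.0 * math.exp(dt)

for dt in (0.4, 0.2, 0.1, 0.05):
    err = midpoint_error(dt)
    print(f"dt={dt:<5} error={err:.3e}  bound={peano_bound(dt):.3e}  "
          f"error/dt^3={err / dt**3:.5f}")
```

The ratio error\(/(\Delta t)^{3}\) tends to \(f^{\prime \prime }(0)/24\). In the theorem, the role of \(f^{\prime \prime }\) is played by the second \(s\)-derivative of the integrand, whose size is what the \(\varepsilon ^{3}\) bound on \(R\) controls.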

Theorem 4

The difference between the intermediate solution and the numerical solution is

Proof

By the triangle inequality,

We rewrite Eq. (4) as

Lemmas 1 and 5 give \(\Vert \mathbf{c }(t)-\tilde{\mathbf{c }}(t)\Vert \le \mathcal{C}\frac{(\delta t)^{4}}{\varepsilon ^{2}}(\Delta t)^{2}\). Lemma 4 and the error in the splitting of (3) then yield the result. \(\square \)

Finally, Theorems 3, 4, and the triangle inequality give us an estimate of the local error:

if we choose

This shows that the number \(N\) of time-steps for solving (3) via a splitting must satisfy \(N\ge \sqrt{\Delta t}\varepsilon ^{-3/4}\) for the semiclassical splitting Algorithm 3. With such a choice of \(N\), standard arguments and Theorem 2 imply the result on the global error in our main Theorem 1.
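The step-count condition is easy to evaluate in practice. The sketch below assumes that \(N\) counts the substeps of length \(\delta t = \Delta t/N\) taken on one interval of length \(\Delta t\); the helper name `min_substeps` is hypothetical and is not taken from WaveBlocksND.

```python
import math

def min_substeps(Delta_t, eps):
    """Smallest integer N with N >= sqrt(Delta_t) * eps**(-3/4), and at least 1."""
    if Delta_t <= 0.0 or eps <= 0.0:
        raise ValueError("Delta_t and eps must be positive")
    bound = math.sqrt(Delta_t) * eps ** (-0.75)
    # Small tolerance so that exact integer bounds are not rounded up by floating-point noise.
    return max(1, math.ceil(bound - 1e-12))

# epsilon values quoted in the introduction for H+H2 and IBr
for eps in (0.1528, 0.0577):
    for Delta_t in (1.0, 0.1, 0.01):
        N = min_substeps(Delta_t, eps)
        print(f"eps={eps}  Delta_t={Delta_t}  N>={N}  delta_t<={Delta_t / N:.4f}")
```

Because the bound grows only like \(\varepsilon ^{-3/4}\) and shrinks with \(\sqrt{\Delta t}\), a handful of substeps per interval suffices for moderately small \(\varepsilon \) under this assumption.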

Notes

1. The reader is welcome to experiment with the open-source library based on the wavepackets: https://github.com/raoulbq/WaveBlocksND. All the integrators mentioned in this paper are already implemented there.

2. Private communication from G. Kallert (now Gauckler) in 2010.

References

Bader, P., Iserles, A., Kropielnicka, K., Singh, P.: Effective approximation for the linear time-dependent Schrödinger equation. Tech. rep., DAMTP (2012)

Balakrishnan, N., Kalyanaraman, C., Sathyamurthy, N.: Time-dependent quantum mechanical approach to reactive scattering and related processes. Phys. Rep. 280(2), 79–144 (1997). doi:10.1016/S0370-1573(96)00025-7

Bao, W., Jin, S., Markowich, P.A.: On time-splitting spectral approximations for the Schrödinger equation in the semiclassical regime. J. Comput. Phys. 175(2), 487–524 (2002). doi:10.1006/jcph.2001.6956

Descombes, S., Thalhammer, M.: An exact local error representation of exponential operator splitting methods for evolutionary problems and applications to linear Schrödinger equations in the semi-classical regime. BIT 50(4), 729–749 (2010). doi:10.1007/s10543-010-0282-4

Faou, E., Gradinaru, V., Lubich, C.: Computing semiclassical quantum dynamics with Hagedorn wavepackets. SIAM J. Sci. Comput. 31(4), 3027–3041 (2009). doi:10.1137/080729724

Feit, M.D., Fleck Jr, J.A., Steiger, A.: Solution of the Schrödinger equation by a spectral method. J. Comput. Phys. 47(3), 412–433 (1982). doi:10.1016/0021-9991(82)90091-2

Hagedorn, G.A.: Raising and lowering operators for semiclassical wave packets. Ann. Phys. 269(1), 77–104 (1998). doi:10.1006/aphy.1998.5843

Jin, S., Wu, H., Yang, X.: Gaussian beam methods for the Schrödinger equation in the semi-classical regime: Lagrangian and Eulerian formulations. Commun. Math. Sci. 6(4), 995–1020 (2008)

Jin, S., Wu, H., Yang, X.: Semi-Eulerian and high order Gaussian beam methods for the Schrödinger equation in the semiclassical regime. Commun. Comput. Phys. 9(3), 668–687 (2011). doi:10.4208/cicp.091009.160310s

Kyza, I., Makridakis, C., Plexousakis, M.: Error control for time-splitting spectral approximations of the semiclassical Schrödinger equation. IMA J. Numer. Anal. 31(2), 416–441 (2011). doi:10.1093/imanum/drp044

Lee, S.Y., Heller, E.J.: Exact time-dependent wave packet propagation: application to the photodissociation of methyl iodide. J. Chem. Phys. 76(6), 3035–3044 (1982). doi:10.1063/1.443342

Lubich, C.: From Quantum to Classical Molecular Dynamics: Reduced Models and Numerical Analysis. European Mathematical Society, Zürich (2008)

Puzari, P., Sarkar, B., Adhikari, S.: A quantum-classical approach to the molecular dynamics of pyrazine with a realistic model Hamiltonian. J. Chem. Phys. 125(19), 194316 (2006). doi:10.1063/1.2393228

Qian, J., Ying, L.: Fast Gaussian wavepacket transforms and Gaussian beams for the Schrödinger equation. J. Comput. Phys. 229(20), 7848–7873 (2010). doi:10.1016/j.jcp.2010.06.043

Yoshida, H.: Construction of higher order symplectic integrators. Phys. Lett. A 150(5–7), 262–268 (1990). doi:10.1016/0375-9601(90)90092-3

Acknowledgments

We thank Professor Christian Lubich for helpful discussions. Raoul Bourquin deserves special credit for his professional implementation of the described methods (also in many dimensions) in the publicly available open-source library WaveBlocksND.

Additional information

GAH was partially supported by US National Science Foundation Grants DMS-0907165 and DMS-1210982.

About this article

Cite this article

Gradinaru, V., Hagedorn, G.A. Convergence of a semiclassical wavepacket based time-splitting for the Schrödinger equation. Numer. Math. 126, 53–73 (2014). https://doi.org/10.1007/s00211-013-0560-6

DOI: https://doi.org/10.1007/s00211-013-0560-6