Abstract

Purpose

Virtual reality simulator training has become important for acquiring arthroscopic skills. A new simulator for knee arthroscopy, the ArthroS™, has been developed. The purpose of this study was to assess its face and construct validity, using a protocol previously applied to validate arthroscopic simulators.

Methods

Twenty-seven participants were divided into three groups with different levels of arthroscopic experience. Participants answered questions about general information and, for face validity, about the outer appearance of the simulator. Construct validity was assessed with one standardized navigation task. Face validity, educational value and user friendliness were further assessed by having participants perform three exercises and complete a questionnaire.

Results

Construct validity was demonstrated between experts and beginners. Median task times did not differ significantly between novices and intermediates, or between intermediates and experts, for any repetition. Median face validity was 8.3 for the outer appearance, 6.5 for the intra-articular joint and 4.7 for the surgical instruments. Educational value and user friendliness were perceived as unsatisfactory, especially because of the lack of tactile feedback.

Conclusion

The ArthroS™ demonstrated construct validity between novices and experts, but did not demonstrate full face validity. Future improvements should focus mainly on the development of tactile feedback. A newly introduced simulator must be validated to prove that it actually contributes to skill proficiency.


Introduction

Arthroscopic surgery has developed into an important operative therapy. As arthroscopic skills are complex, learning them is challenging and time-consuming [24]. Traditionally, arthroscopic training is performed in the operating room using a one-to-one apprentice model, in which the resident is permitted to perform actions under supervision. This is not an ideal learning environment: the resident cannot make mistakes and learn from them, because such mistakes would compromise patient safety [1, 6, 30]. Inspired by the aerospace industry, virtual reality simulation has been successfully deployed in medicine to train a variety of skills [20, 26]. Owing to reductions in training time and increasing demands for patient safety and quality control, virtual reality simulators have also been introduced in the education of orthopaedic residents [6, 18, 25]. The potential advantages of arthroscopic knee simulators have been demonstrated repeatedly [5, 6, 8, 18, 24, 30]. However, objective validation of individual simulators that are new to the market remains necessary to confirm their contribution to the improvement of skills.

A new virtual reality simulator for knee arthroscopy has been launched (ArthroS™, VirtaMed AG, www.virtamed.com). It was developed jointly by medical experts from the Balgrist University Hospital and ETH Zurich. The simulator aims to provide a realistic virtual training environment, because other virtual reality simulators evaluated for training of knee arthroscopy [3, 4, 9, 11, 13–15, 23, 31, 33] showed only a marginal level of realism [7, 16, 17, 32]. Like other simulators, it offers training in diagnostic and therapeutic arthroscopy as well as objective performance feedback. However, collisions of the instruments with the anatomic structures are detected by a different method, which is meant to enhance the sense of realistic tactile feedback. Additionally, irrigation flow and bleeding are simulated to improve realism. The purpose of this study is to determine the face and construct validity of this simulator. To allow comparison, this is done according to a protocol that has previously been used to validate arthroscopic knee simulators [30].

Materials and methods

Simulator characteristics

The simulator uses an original arthroscope and original instruments (palpation hook, grasper, cutting punch and shaver), modified so that they connect to the virtual environment of the simulator. The arthroscope features in- and outlet valves for fluid handling and three virtual cameras (0°, 30° and 70°), including a focus wheel. The simulator provides a patient knee model and a high-end PC with a touch screen. The VirtaMed ArthroS™ training software consists of six fully guided procedures for basic skill training, four virtual patients for diagnostic arthroscopy, eight virtual patients for various surgical operations, and courses designed for beginners, intermediate trainees and advanced arthroscopists. An anatomical model of the knee can be displayed that shows the real-time orientation of the instruments. Registration devices are integrated to provide objective feedback reports and to record training sessions.

Participants

Twenty-seven participants were recruited for the validity test. In line with the protocol [30], they were divided into three groups with different levels of arthroscopic experience: beginners, who had never performed an arthroscopic procedure; intermediates, who had performed up to 59 arthroscopies; and experts, who had performed 60 or more arthroscopies (Table 1). This boundary of 60 arthroscopies was based on the average estimate of fellowship directors who were asked how many operations a trainee should perform before being allowed to perform unsupervised meniscectomies [22]. We investigated whether the simulator could discriminate between the three groups.
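The grouping rule can be expressed as a small function. This is a minimal sketch: the function name `experience_group` is hypothetical, and it assumes that a participant with exactly 60 arthroscopies counts as an expert, in line with the stated boundary of 60.

```python
def experience_group(arthroscopies_performed: int) -> str:
    """Return the experience group for a participant (hypothetical helper).

    Assumed boundaries: 0 arthroscopies -> beginner, 1-59 -> intermediate,
    60 or more -> expert (boundary of 60 based on fellowship directors [22]).
    """
    if arthroscopies_performed < 0:
        raise ValueError("number of arthroscopies cannot be negative")
    if arthroscopies_performed == 0:
        return "beginner"
    if arthroscopies_performed < 60:
        return "intermediate"
    return "expert"


# Usage with three hypothetical participants
for n in (0, 25, 120):
    print(n, experience_group(n))  # prints: 0 beginner / 25 intermediate / 120 expert
```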

Study design

To ensure a similar level of familiarity with the simulator, participants were not allowed to use it before the experiment. A previously developed test protocol was used to determine face and construct validity [30]. First, participants were asked to answer questions regarding general information (Fig. 1) and to give their opinion on the outer appearance of the simulator for face validity [30]. Construct validity was assessed with one navigation task [29], which was repeated five times. The time limit for completing all repetitions was 10 min [30]. Task time was used as the outcome measure, as it enables objective comparison between the experience groups [24]. Task time was determined from a separate video recording of the simulator's monitor, which displays the intra-articular view of the joint. This recording also enabled verification that the nine anatomic landmarks were probed in the correct sequence. Because the video recordings had a frame rate of 25 images per second, task time could be determined accurately, to within 0.04 s.
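The timing arithmetic can be illustrated with a short sketch. At 25 frames per second one frame lasts 1/25 = 0.04 s, which bounds the timing accuracy; the task time of a trial is the sum of the durations needed to probe each landmark (as described under Statistical analysis). The helper names and the input format (one frame index per probed landmark) are hypothetical and do not represent the software used in the study.

```python
from typing import Sequence

FRAME_RATE = 25                  # frames per second of the video recordings
FRAME_DURATION = 1 / FRAME_RATE  # 0.04 s: the timing resolution


def frame_to_seconds(frames: int) -> float:
    """Convert a number of video frames to seconds."""
    return frames / FRAME_RATE


def task_time(start_frame: int, landmark_frames: Sequence[int]) -> float:
    """Sum the per-landmark probing durations of one trial.

    landmark_frames: frame at which each landmark was probed, in the
    required order (hypothetical input format).
    """
    total = 0.0
    previous = start_frame
    for frame in landmark_frames:
        if frame < previous:
            raise ValueError("landmark frames must be in chronological order")
        total += frame_to_seconds(frame - previous)  # duration for this landmark
        previous = frame
    return total


# Nine landmarks, each probed 50 frames (2 s) after the previous one
print(task_time(0, [50 * k for k in range(1, 10)]))  # 18.0
```

Because consecutive durations telescope, the total equals the time between the start frame and the last landmark; keeping the per-landmark breakdown is still useful for checking the probing sequence.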

The participant population per experience group. Age (years) and the number of attended arthroscopies (“Observation”) are expressed as median (range). The number of participants who had previously used a simulator (“Simulator”) or had experience playing computer games (“Games”) is also shown.

Subsequently, participants performed three exercises representative of the capabilities of this particular simulator. The first was a triangulation task to train hand–eye coordination. The second was a guided navigation task for a complete inspection of the knee (Fig. 2). The third was a medial meniscectomy, during which joint irrigation could be performed. For these three exercises, no task time was recorded; instead, the participants' impressions of the simulator's capabilities were collected. After every task, an objective feedback report and before-and-after pictures were shown to demonstrate how the simulator provides feedback.

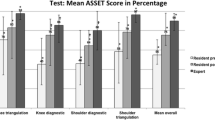

After the three tasks, the participants filled out the remainder of the questionnaire [30]. The questionnaire comprised questions concerning face validity, educational value and user friendliness. Face Validity was determined based on several aspects of the simulator (Table 1). Educational Value I concerned the variation and level of exercises; Educational Value II indicated to what extent the simulator serves as a good way to prepare for real-life arthroscopic operations. User Friendliness I comprised questions concerning the quality of the instructions given by the simulator and the presentation of the performance and results; User Friendliness II indicated whether the participants needed a manual before operating the simulator [30].

Questions were answered on a numerical rating scale (NRS) from 0 to 10 (e.g. 0 = completely unrealistic and 10 = completely realistic) or dichotomously with a yes/no answer [30]. A score of seven or greater was considered sufficient. The “not applicable (N/A)” answer option was intended for beginners only. Furthermore, only the answers of the expert and intermediate groups were used to determine Face Validity and Educational Value I. Participants could also provide free-text suggestions for improving the simulator.
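The scoring rules can be summarised in a short sketch. It assumes (this is not specified in the text) that answers are stored as (group, score) pairs with `None` for N/A, that N/A answers are simply skipped, and that a scale's summary score is the median of the remaining answers; the names `summary_score` and `is_sufficient` are hypothetical.

```python
from statistics import median
from typing import AbstractSet, Iterable, Optional, Tuple

SUFFICIENT = 7  # NRS scores of 7 or greater count as sufficient
# Only intermediates and experts count for Face Validity and Educational Value I
FACE_VALIDITY_GROUPS = frozenset({"intermediate", "expert"})


def summary_score(
    answers: Iterable[Tuple[str, Optional[float]]],
    groups: AbstractSet[str] = FACE_VALIDITY_GROUPS,
) -> float:
    """Median NRS score of one questionnaire scale.

    answers: (group, score) pairs; score is None for an "N/A" answer.
    Assumption: N/A answers are skipped and the summary is the median of
    all remaining answers from the selected groups.
    """
    scores = [s for g, s in answers if g in groups and s is not None]
    if not scores:
        raise ValueError("no eligible answers for this scale")
    return median(scores)


def is_sufficient(score: float) -> bool:
    """Apply the sufficiency threshold of 7 on the 0-10 NRS."""
    return score >= SUFFICIENT


# Hypothetical answers to one face-validity scale
answers = [("expert", 8), ("expert", 9), ("intermediate", 7),
           ("intermediate", 6), ("beginner", 3), ("beginner", None)]
score = summary_score(answers)
print(score, is_sufficient(score))  # 7.5 True
```

For scales to which all groups contribute (for example User Friendliness I), a different `groups` set would be passed.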

IRB approval

Academic Medical Centre, Amsterdam; reference number: W13_262 # 1.17.0326.

The Medical Research Involving Human Subjects Act does not apply to the current study; therefore, official approval of this study by the Medical Ethical Commission was not required.

Statistical analysis

Data processing and statistical analysis followed the study protocol of a previous study [30]. One observer determined all navigation task times from the recorded arthroscopic view by timing each identified landmark consecutively. The sum of the durations needed to probe all landmarks in one trial was taken as one complete task time. Statistical analysis was performed using SPSS 20 (SPSS Inc., Chicago, IL, USA). The Shapiro–Wilk test indicated a skewed distribution; therefore, nonparametric tests were used, and values are presented as median (minimum–maximum). Construct validity was assessed by testing, for each trial, whether task times differed significantly among the three groups with the Kruskal–Wallis test, followed by Mann–Whitney U tests to identify which groups differed. The significance level was adjusted for multiple comparisons with the Bonferroni–Holm procedure (α = 0.05). The results of Face Validity, Educational Value I and User Friendliness I were expressed as median summary scores of the corresponding questions.

Results

One beginner completed only one task trial within the time limit; all other participants completed all five trials. The median task times and ranges of each trial are shown in Fig. 3. The median task time for the fifth trial was 41 s (3–50 s) for the beginners, 35 s (18–120 s) for the intermediates and 31 s (17–42 s) for the experts (Fig. 3).

Task times of the beginners and the intermediates did not differ significantly for any repetition (n.s.), nor did those of the intermediates and the experts (n.s.). The beginners were significantly slower than the experts in all trials (Fig. 3).

The outer appearance was rated as satisfactory by the experts and intermediates (Table 1, median > 7). Intra-articular face validity and instrument face validity were not sufficient (Table 1, median < 7), because the texture of the structures, the motion of the instruments, and tissue probing and cutting were perceived as not realistic (Table 2). The respondents' written remarks were in line with these results: 14 participants noted a small delay between the motion of the instruments and the virtual image, and 4 participants noted that markers were sometimes placed inside the femoral condyle.

The quality of the instructions was rated well (Table 1, User Friendliness I). Seventy-one per cent of the participants did not feel the need to read the manual (User Friendliness II). Fifty-nine per cent of the participants indicated that the simulator is most valuable as a training modality during the first year of the residency curriculum. The level of the exercises was rated 2 out of 5 (range 1–5) (Educational Value I); however, their variation was judged adequate. In fact, the extensive training programme, which allows a wide range of procedures to be practised (especially hand–eye coordination), was considered an important asset of the simulator. Fifty per cent of the intermediates and experts agreed that training on this simulator is a good way to prepare for arthroscopic operations (Educational Value II).

Important suggestions for improvement included height adjustment of the lower leg (3 participants), replacement of the tibia with a complete lower leg for better varus–valgus stressing (10 participants), more realistic use and movement of the instruments (6 participants) and improved tactile feedback (18 participants).

Discussion

The most important finding of the present study was that the simulator showed construct validity between beginners and experts for the navigation task (Fig. 3) and that face validity was partly achieved. No significant differences were found between beginners and intermediates, or between intermediates and experts, in any trial (p > 0.05). Several factors could have contributed to this. Overall, task times were relatively short, which could indicate that the navigation task was fairly easy to perform on this simulator. Because all participants, including the experts, had to become familiar with the simulator, the learning pace might have been similar across experience levels. Another noticeable result is the relatively large variance of the task times in the intermediate group. This group was the most heterogeneous in terms of skill level (some intermediates had performed only a few knee arthroscopies, whereas others had performed almost sixty), which explains the large variance. Other studies, however, did show significant differences between experience groups [24, 27]. Both stratified their subjects into three groups (beginners, intermediates and experts). In the study of Pedowitz et al., larger numbers of participants per experience group (35, 22 and 21, respectively) were included, which could have increased the chance of detecting significant differences. However, they did not classify their participants by a specific number of performed arthroscopies [24]. The study of Srivastava et al. [27] is more comparable to the current study, since it included a similar number of participants and used a comparable expert boundary (50 arthroscopies). As there was no correlation between task times and experience in playing computer games, it is unlikely that gaming experience contributed to the lack of significant differences.

Almost all participants indicated that the outer appearance of the simulator was sufficient. All essential intra-articular anatomical structures were present and were considered realistic in size. However, knee stressing and the colour and texture of the structures were regarded as less realistic, which is remarkable because this virtual reality simulator uses high-end graphics and is the first to simulate irrigation. Irrigation is meant to provide an additional sense of realism, as was found by Tuijthof et al. [29]. The joint-stressing issue could easily be solved by adopting the participants' suggestion to replace the rather short partial lower leg with a full-sized one. Although a new method to simulate tissue probing and cutting was implemented in the system, the participants felt that the haptic feedback they experienced did not adequately imitate clinical practice. This was reflected in the low scores for surgical instrument use (Tables 1, 2) and in the perceived educational value.

Questionnaire results showed that tissue probing and cutting, the texture of the structures and irrigation were perceived as not realistic. As a consequence, proper training of joint inspection and therapeutic interventions was not considered feasible (Educational Value I). Eighteen participants explicitly mentioned the absence of realistic tactile feedback as a limitation of the simulator as a training modality. Detailed analysis revealed that this might have been caused by an offset in the system's calibration that connects the real to the virtual world, as the markers of one of the tasks were occasionally positioned inside the femoral condyle. The importance of realistic tactile feedback has been raised previously [2, 10, 19, 28], and the lack of (realistic) feedback has been considered a limitation [23]. The current and previous studies demonstrate that simulators without realistic tactile feedback are perceived as less appropriate for training arthroscopic skills [13, 30]. Simulators that provide (partly) realistic tactile feedback are considered more suitable for training and have been shown to improve arthroscopic skills in the operating room [1, 12, 21].

Compared with other virtual reality simulators tested in the literature, this simulator yields results similar to those of the GMV simulator, offering acceptable face validity and a wide variety of exercises via a user-friendly interface [1, 30].

A limitation of this study was the relatively small number of participants in each experience group, so significant differences in the navigation trials may have gone undetected. For logistical reasons, this was the maximum number of participants we could include. In addition, the heterogeneity of the intermediate group is likely to have contributed to the absence of significant differences. Another limitation is that construct validity was tested for only one task instead of the complete curriculum of exercises. This was not feasible within the set time frame of 30 min, but this time limit made it possible to include a sufficient number of experts.

Acquiring arthroscopic skills is challenging. Especially at the start of their learning curve, trainees must be able to make mistakes that do not compromise patient safety. Therefore, traditional arthroscopic training in the operating room is not ideal [1, 6, 30]. Simulators can play a valuable role in training residents, but this has to be verified for each individual simulator through objective validation protocols. This study is clinically relevant because its findings provide important information for educators who intend to use this simulator to train residents; a newly presented simulator must demonstrate validity. The strength of this study is the application of a previously introduced study design that allows objective relative comparison and highlights areas for improvement. Overall, the simulator was considered a reasonable preparation for real-life arthroscopy, its main advantages being the large variety of exercises, the extensive theoretical section and the realistic intra-articular anatomy. However, essential improvements are necessary, mainly in the development of realistic tactile feedback.

Conclusion

The simulator demonstrated construct validity between beginners and experts. Face validity was not fully achieved. The most important shortcoming of the simulator is the lack of sufficient tactile feedback, which participants considered essential for proper arthroscopic training. According to most participants, the ArthroS™ simulator has the potential to become a valuable training modality.

References

Bayona S, Fernández-Arroyo JM, Martín I, Bayona P (2008) Assessment study of insight ARTHRO VR® arthroscopy virtual training simulator: face, content, and construct validities. Am J Robot Surg 2:151–158

Botden SMBI, Torab F, Buzink SN, Jakimowicz JJ (2008) The importance of haptic feedback in laparoscopic suturing training and the additive value of virtual reality simulation. Surg Endosc 22:1214–1222

Brehmer M, Swartz R (2005) Training on bench models improves dexterity in ureteroscopy. Eur Urol 48:458–463

Brehmer M, Tolley DA (2002) Validation of a bench model for endoscopic surgery in the upper urinary tract. Eur Urol 42:175–180

Gomoll AH, O’Toole RV, Czarnecki J, Warner JJP (2007) Surgical experience correlates with performance on a virtual reality simulator for shoulder arthroscopy. Am J Sports Med 35:883–888

Gomoll AH, Pappas G, Forsythe B, Warner JJP (2008) Individual skill progression on a virtual reality simulator for shoulder arthroscopy: a 3-year follow-up study. Am J Sports Med 36:1139–1142

Haluck RS, Satava M, Fried G, Lake C, Ritter EM, Sachdeva K, Seymour NE, Terry ML, Wilks D (2007) Establishing a simulation center for surgical skills: what to do and how to do it. Surg Endosc 21:1223–1232

Howells HR, Gill HS, Carr J, Price J, Rees JL (2008) Transferring simulated arthroscopic skills to the operating theatre: a randomised blinded study. J Bone Joint Surg Br 90:494–499

Jacomides L, Ogan K, Cadeddu J, Pearle MS (2004) Use of a virtual reality simulator for ureteroscopy training. J Urol 171:320–323

Kim HK, Rattner DW, Srinivasan M (2004) Virtual-reality-based laparoscopic surgical training: the role of simulation fidelity in haptic feedback. Comput Aided Surg 9:227–234

Knoll T, Trojan L, Haecker A, Alken P, Michel MS (2005) Validation of computer-based training in ureterorenoscopy. BJU Int 95:1276–1279

Korndorffer JR, Dunne B, Sierra R, Stefanidis D, Touchard CL, Scott DJ (2005) Simulator training for laparoscopic suturing using performance goals translates to the operating room. J Am Coll Surg 201:23–29

Macmillan AIM, Cuschieri A (1999) Assessment of innate ability and skills for endoscopic manipulations by the Advanced Dundee Endoscopic Psychomotor Tester: predictive and concurrent validity. Am J Surg 177:274–277

Matsumoto ED, Hamstra SJ, Radomski SB, Cusimano MD (2001) A novel approach to endourological training: training at the surgical skills center. J Urol 166:1261–1266

Matsumoto ED, Pace KT, D’A Honey RJ (2006) Virtual reality ureteroscopy simulator as a valid tool for assessing endourological skills. Int J Urol 13:896–901

Maura A, Galatà G, Rulli F (2008) Establishing a simulation center for surgical skills. Surg Endosc 22:564

McClusky DA, Smith CD (2008) Design and development of a surgical skills simulation curriculum. World J Surg 32:171–181

Modi CS, Morris G, Mukherjee R (2010) Computer-simulation training for knee and shoulder arthroscopic surgery. Arthroscopy 26:832–840

Moody L, Waterworth A, McCarthy AD, Harley PJ, Smallwood RH (2007) The feasibility of a mixed reality surgical training environment. Virtual Real 12:77–86

Michelson JD (2006) Simulation in orthopaedic education: an overview of theory and practice. J Bone Joint Surg Am 88:1405–1411

Munz Y, Almoudaris AM, Moorthy K, Dosis A, Liddle AD, Darzi AW (2007) Curriculum-based solo virtual reality training for laparoscopic intracorporeal knot tying: objective assessment of the transfer of skill from virtual reality to reality. Am J Surg 193:774–783

O’Neill PJ, Cosgarea AJ, Freedman JA, Queale WS, McFarland EG (2002) Arthroscopic proficiency: a survey of orthopaedic sports medicine fellowship directors and orthopaedic surgery department chairs. Arthroscopy 18:795–800

Ogan K, Jacomides L, Shulman MJ, Roehrborn CG, Cadeddu J, Pearle MS (2004) Virtual ureteroscopy predicts ureteroscopic proficiency of medical students on a cadaver. J Urol 172:667–671

Pedowitz R, Esch J, Snyder S (2002) Evaluation of a virtual reality simulator for arthroscopy skills development. Arthroscopy 18:1–6

Reznek M, Harter P, Krummel T (2002) Virtual reality and simulation: training the future emergency physician. Acad Emerg Med 9:78–87

Satava RM (1997) Virtual reality and telepresence for military medicine. Ann Acad Med Singap 26:118–120

Srivastava S, Youngblood PL, Rawn C, Hariri S, Heinrichs WL, Ladd AL (2004) Initial evaluation of a shoulder arthroscopy simulator: establishing construct validity. J Shoulder Elbow Surg 13:196–205

Ström P, Hedman L, Särnå L, Kjellin A, Wredmark T, Felländer-Tsai L (2006) Early exposure to haptic feedback enhances performance in surgical simulator training: a prospective randomized crossover study in surgical residents. Surg Endosc 20:1383–1388

Tuijthof GJM, van Sterkenburg MN, Sierevelt IN, van Oldenrijk J, van Dijk CN, Kerkhoffs GMMJ (2010) First validation of the PASSPORT training environment for arthroscopic skills. Knee Surg Sports Traumatol Arthrosc 18:218–224

Tuijthof GJM, Visser P, Sierevelt IN, van Dijk CN, Kerkhoffs GMMJ (2011) Does perception of usefulness of arthroscopic simulators differ with levels of experience? Clin Orthop Relat Res 469:1701–1708

Watterson JD, Beiko DT, Kuan JK, Denstedt JD (2002) Randomized prospective blinded study validating acquisition of ureteroscopy skills using computer based virtual reality endourological simulator. J Urol 168:1928–1932

White MA, Dehaan AP, Stephens DD, Maes A, Maatman TJ (2010) Validation of a high fidelity adult ureteroscopy and renoscopy simulator. J Urol 183:673–677

Wilhelm DM, Ogan K, Roehrborn CG, Cadeddu J, Pearle MS (2002) Assessment of basic endoscopic performance using a virtual reality simulator. J Am Coll Surg 195:675–681

Acknowledgments

This research was funded by the Marti-Keuning Eckhart Foundation, Lunteren, the Netherlands. This research was performed at the Academic Medical Centre, Amsterdam.

Conflict of interest

None.


About this article

Cite this article

Stunt, J.J., Kerkhoffs, G.M.M.J., van Dijk, C.N. et al. Validation of the ArthroS virtual reality simulator for arthroscopic skills. Knee Surg Sports Traumatol Arthrosc 23, 3436–3442 (2015). https://doi.org/10.1007/s00167-014-3101-7
