Abstract

Multiple models of a physical phenomenon are sometimes available with different levels of approximation. The high fidelity model is more computationally demanding than the coarse approximation. In this context, including information from the lower fidelity model to build a surrogate model is desirable. Here, the study focuses on the design of a miniaturized photoacoustic gas sensor which involves two numerical models. First, a multifidelity metamodeling method based on Radial Basis Function, the co-RBF, is proposed. This surrogate model is compared with the classical co-kriging method on two analytical benchmarks and on the photoacoustic gas sensor. Then an extension to the multifidelity framework of an already existing RBF-based optimization algorithm is applied to optimize the sensor efficiency. The co-RBF method does not bring better results than co-kriging but can be considered as an alternative for multifidelity metamodeling.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the micro-electronics industry, as well as in other fields, products are nowadays usually designed using computer models. Physical experimentation with prototypes are too costly compared to computer simulations for the development of new concepts. However, numerical models are often built with complex mathematical codes involving multiple disciplines that are very expensive to evaluate. For example a single Finite Element analysis of a gas sensor can require a computational time of several hours.

A solution that has attracted intensive attention during the last decades is to replace the physics based computer simulation by a metamodel, which emulates the statistical input-output relationship. The advantage of using a metamodel is the reduction of the computational cost necessary to approximate the output of the numerical model. The methodology implies that a sampling plan is evaluated first, in order to train the metamodel. Then, the response surface is updated using an adaptive sampling algorithm, in order to reduce the prediction uncertainty at new points. Surrogate models are useful for sensitivity analysis or optimization purpose because they limit the cost of function calls compared to a direct evaluation of the simulation. The reader is referred to Wang and Shan (2007) for a non-exhaustive review of different metamodeling methods.

Kriging-based surrogate models (Krige 1951; Santner et al. 2003) are mainstream in the metamodeling community because of their accuracy, the availability of the prediction variance and their use in sequential design and optimization (Schonlau and Welch 1996). Sometimes multiple models of a physical phenomenon are available with different levels of approximation, the high fidelity model being more expensive in terms of computational time than the coarse approximation. Thus, Kriging models have recently been improved to take advantage of the use of both the coarse and the precise versions of the computer simulation, resulting in an enhanced prediction accuracy at a reduced computational cost (Kennedy and O’Hagan 2000). Then, several studies dealing with co-kriging (multifidelity version of Kriging) and its use in optimization have been exposed (Forrester et al. 2007; Dong et al. 2015; Le Gratiet and Cannamela 2015).

Another possible choice in this context is the Radial Basis Functions (RBF) based interpolation method (Powell 1987; Dyn et al. 1986). Among metamodeling approaches, it is one of the most effective multidimensional approximation methods (Jin et al. 2001), as the dimension of the input space does not alter its performance (Powell 2001). It is also suitable for optimization since an adaptive sampling strategy has been developed by Gutmann (2001) to sequentially enrich the training database of the RBF metamodel, in order to find an optimal design of the model simulated.

The present work focuses on the optimization of a photoacoustic gas sensor using two different simulation models based on more or less sophisticated physicals approaches. A possible way to solve this problem involves the use of RBF multifidelity metamodeling as an alternative to co-kriging. The use of RBF in multifidelity framework has already been proposed (Sun et al. 2010), but the methodology described here is different. The optimization problem of the photoacoustic gas sensor is explained in Section 2, where the reason for the choice of RBF metamodeling instead of kriging is also discussed. The RBF metamodel is described in Section 3. The new co-RBF methodology (multifidelity version of RBF metamodeling) is detailed in Section 4, followed by a proposition of an extension of the Gutmann optimization algorithm to the multifidelity framework in Section 5. A comparison between co-kriging and co-RBF metamodels is presented in Section 6, where two different analytical test problems are solved. The efficiency of the new multifidelity surrogate model is then confirmed on the design optimization of a photoacoustic gas sensor in Section 7.

2 Miniaturized photoacoustic gas sensor test problem

The design of a miniaturized photoacoustic gas sensor is the principal motivation of the methodology proposed. The physical behaviour of this component and the optimization problem are explained in the following section.

2.1 Theory and models

Photoacoustic (PA) spectroscopy is employed to detect gas traces with a high sensitivity, sometimes below the part per billion by volume (ppbv) level. The principle of PA spectroscopy relies on the excitation of a molecule of interest by a light source emitting at the wavelength of an absorption line of the molecule. The light source, usually a laser in the mid-infrared range, is modulated at the acoustic frequency of a resonant cell, containing the gas mixture. During the molecules collisional relaxation, the kinetic energy exchange with the surrounding gas creates local temperature modulation, and thus acoustic waves in the chamber (Miklós et al. 2001). The cell used in this work is of the differential Helmholtz resonator (DHR) type (Zéninari et al. 1999). It is composed of two chambers linked by two capillaries (Fig. 1). The gas excitation is ensured by illuminating one chamber with a laser source. At the Helmholtz resonance of the cavity, acoustic signals in the two chambers are in phase opposition. The signals provided by two microphones measuring the pressure into each chamber are subtracted to provide the PA signal. As it is inversely proportional to the volume of the resonant cell (Miklós et al. 2001), an effort for reducing the size of PA cells has been initiated during the last decade (Holthoff et al. 2010; Bauer et al. 2014; Rouxel et al. 2016).

Assuming no viscous and thermal losses and a harmonic heat source, the non homogeneous Helmholtz (1) can be used to compute the pressure field in the cell, and thus the differential PA signal:

In (1), ω is the laser modulation frequency, c the speed of sound, γ the Laplace coefficient of the gas, k = ω/c the wave number and \(\mathcal {H}\) the Fourrier transform of the power density of the heat source. This pressure acoustic model is computationally efficient and accurate at the macro-scale but fails at the micro-scale (Glière et al. 2014). In fact, various volume and surface dissipation processes, at work in the bulk of the propagation medium and close to the walls, cannot be neglected in miniaturized devices, where boundary layers occupy a non-negligible part of the overall cell volume. Numerous approximate models have been adapted from the pressure acoustic model to take into account the dissipation effects. For instance, Kreuzer (1977) rely on eigenmode expansion of the pressure field and a correction by quality factors. The latter model is fast and faithful enough to constitute the coarse approximation used in our multifidelity approach. On the other hand, the high fidelity, but CPU time and memory consuming model relies on the full linearized Navier-Stokes formulation (FLNS), that accounts for viscous and thermal dissipation effects. In that approach, small harmonic variations are assumed to linearize the Navier-Stokes equation. The PDE equations system (2) is composed of the continuity equation, incorporating the ideal gas equation of state p = ρ R M T, and the momentum and energy conservation laws:

where \(\tilde {p}\), \(\tilde {T}\) and \(\tilde {\mathbf {u}}\) are respectively the pressure, temperature and velocity fields in the gas, p 0, T 0 and ρ 0 are the mean values of the pressure, temperature and density fields, λ and μ are the bulk viscosity and the shear viscosity. Q h is the heat source.

2.2 Multi-fidelity metamodeling and optimization problem

In the framework of the PA sensor test case, the FLNS model constitutes the high fidelity model and the Kreuzer model the coarse version. Both models are solved using the commercial software package COMSOL multiphysics (COMSOL AB, Sweden), based on the finite element method. The computational time for the FLNS model is around one hour and ten minutes on a twenty-core cluster node cadenced at 3 GHz. For the Kreuzer model, the computational time on the same computer is reported at 3 minutes.

The PA cell has a chamber diameter of 1 mm and three design parameters are involved: chamber length, diameter and length of capillaries. Parameters ranges are available in Table 1. The cell resonance frequency and the maximum photoacoustic signal detected are the two outputs. The resonance frequency vary between 1000 Hz and 10000 Hz and the signal is around 1 Pa. In order to optimize the efficiency of the gas sensor, the signal must be maximized. The resonance frequency must lie in a range limited for the low frequency by the 1/f noise and for high frequency, by the inverse of the molecules relaxation time.

2.3 Single-fidelity metamodels comparison

A first study has been initiated to compare the prediction accuracy of Kriging and RBF metamodel on the photoacoustic gas sensor models. The purpose was to select the best metamodel for our test case and use it in an optimization sequence. The high fidelity and coarse model have been evaluated using a latin hypercube sampling maximin optimized of respectively 20 points and 100 points. The signal and the resonance frequency are surrogated and the prediction accuracy of metamodels are assessed using 10 test points. The prediction error is computed as the mean absolute percentage error between predicted values and the output from the numerical models. Results in Table 2 are average over 5 different training sample.

The low fidelity model (Kreuzer) is easier to surrogate since both metamodels are accurate (the prediction error is arround 0.1%). The signal is more difficult to approximate than the resonance frequency for the high fidelity model. RBF is more accurate than Kriging on 3 out of 4 cases in Table 7, but it is noteworthy that both metamodels performances are similar. This result motivates the development of a RBF-based multifidelity metamodel, described in the next sections, to offer an alternative to co-kriging that might better address the photoacoustic cell optimization problem. The differences between the two methods in terms of prediction error are discussed in Section 6, where a benchmark is performed.

3 Radial basis function

Before explaining the multifidelity metamodel proposed in this work, the analytical basis of Radial Basis Function surrogate model is first recalled. We consider a real-valued function, formally defined by:

A surrogate model is built from a set of n input vectors \(\mathcal {X} = \left \{ {{{\mathbf {x}}_{1},{\mathbf {x}}_{2}, {\dots } ,{\mathbf {x}}_{n}}} \right \}\) and a vector of corresponding scalar evaluations \(\mathbf {z} = \left \{ {{{z}_{1}},{{z}_{2}}, {\dots } ,{{z}_{n}}} \right \}\). The dimension of the input space is d. Multiple outputs are approximated one by one. Once the training of the surrogate model is achieved, the prediction \(\widehat {y}\) of the process output is obtained at new sample points, with a highly reduced computational cost. The RBF is defined as a linear combination of basis functions φ that depend on the distance between the training points and the evaluation point:

where ∥.∥ denotes the Euclidean norm in \( \mathbb {R}^{d} \), the β i coefficients are real numbers, Q is in the linear space π l of polynomials of degree at most l in \(\mathbb {R}^{d}\) and the hat denotes the prediction value of the function. It has been initially developed for scattered multivariate data interpolation by Dyn et al. (1986). The polynomial is given by the following general form where \(\hat {l}\) is the polynomial degree, α is a vector of real coefficients and p k (x) are the monomial components:

The basis function, the RBF coefficients β and the polynomial parameters (degree and coefficients) must be selected in order to build the RBF approximation. The interpolation condition, \(\hat {Y}(\mathcal {X})=\mathbf {z}\), leads to the linear system of equation, z = Φ β, where:

The linear system is not sufficient to completely define the RBF. A solution proposed by Micchelli (1986) is to ensure that the basis function matrix Φ is conditionally positive definite, which reduces numerical errors when solving the system. The following inequality is obtained, where the polynomial order l 0 depends on the basis function:

where \(\mathcal {V}_{l_{0}} \subset \mathbb {R}^{n}\) is the linear space containing all \({\boldsymbol {\beta }} \in \mathbb {R}^{n}\), that satisfy:

The RBF φ used in this work and the corresponding polynomial form are given in Table 3, where x[i] is the i-th component of x. The polynomial order l 0 is set at 1 for the Cubic case and − 1 for the Gaussian one to fulfill the inequality in (7). For a more complete list of RBF, the reader is referred to Gutmann (2001).

The unknown parameters β and α are now completely determined by the interpolation condition \(\hat {Y}(\mathcal {X})=\mathbf {z}\) and the positive definite condition in (8) for the basis function matrix. Then the following system allows to estimate these parameters:

where \({\mathbf {F}} = \left ({{p_{1}}({\mathcal {X}}), {\ldots } ,{p_{{l_{0}} + 1}}(\mathcal {X})} \right )\). If the rank of matrix F is equal to d + 1, the matrix system is nonsingular and the resulting RBF interpolant is unique. The essential step of the unknown parameter estimation is the inversion of the nonsingular matrix. If the dataset is large, this is the most time demanding part of the surrogate model training. It is also noteworthy that the matrix is ill-conditioned if points in \(\mathcal {X}\) are close to each other.

Beyond determining the vector of basic parameters \({\left ({\begin {array}{cc}{\boldsymbol {\beta }^{T}}&{\boldsymbol {\alpha }^{T}} \end {array}} \right )}\), the parameter γ introduced in the case of Gaussian RBF must also be estimated. The correct estimation of this parameter enables to minimize the generalization error of the model. It can be interpreted as a scaling factor which expresses the spacial influence in each direction of the basis function central point. Forrester et al. (2008) suggests to use the cross-validation error estimate in order to determine the value of γ. First, γ is selected from candidate values and then basic parameters are determined for each subset of the cross-validation population. Once a value with the minimal cross-validation error is found, basic parameters are built on the whole training dataset with the optimized γ. Rippa (1999) derived a leave-one-out (LOO) formula (10) that estimates the model error by determining basic parameter only once by γ value. This method is used in the present work.

The LOO criterion optimization was solved using the Covariance Matrix Adaptation Evolution Strategy (CMA-ES) (Hansen and Kern 2004). CMA-ES is a second-order evolutionary search strategy based on the propagation of a covariance matrix, which has produced excellent results on multimodal and high dimensional test problems.

Now the prediction capability is studied. A standard uncertainty measure is derived by Jakobsson et al. (2009), inspired by the interpretation of the native space norm as a “bumpiness” measure by Gutmann (2001). This quantity \(s_{\hat {Y}}\), also called power function, describes the interpolation error at a point t of the input space:

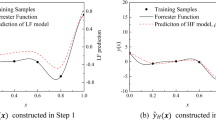

where \({\mathbf {u}_{\mathbf {x}}} = {\left ({\varphi \left ({\left \| {\mathbf {x} - \mathbf {x}_{1}} \right \|} \right ), {\ldots } ,\varphi \left ({\left \| {\mathbf {x} - \mathbf {x}_{n}} \right \|} \right )} \right )^{T}}\) and \({\mathbf {F}_{\mathbf {x}}} = \left ({{p_{1}}({{\mathbf {x}}}), {\ldots } ,{p_{{l_{0}} + 1}}({{\mathbf {x}}})} \right )\). An example of Cubic and Gaussian RBF interpolant is available on Fig. 2, together with the bumpiness measure of Gutmann. For both basis functions, the prediction error measure indicates to add a new point at x = 1 in order to reduce the uncertainty in this area. This prediction error has also large values in area where training points are sparse, for instance close to x = 0.4.

Even though the Kriging model framework is not detailed here, it is important to recall that RBF is similar to the Kriging model, as stated by Costa et al. (1999) and Mackman et al. (2013). The basis function and the polynomial term can be the same for both metamodels, which lead to identical interpolants if the parameters are estimated with the same method. The difference between these formulations is more conceptual, since they were derived from different branches of mathematics. For example, Kriging assumes that the process Y to surrogate is the realization of a Gaussian process (Santner et al. 2003), which is not the case for RBF. The main difference between the two methods lies in the parameters optimization method, maximum likelihood estimation (MLE) is used here for Kriging and LOO for RBF. The performances of these two approaches are discussed in the analytical benchmark at Section 6.

4 Co-RBF

Designing a component such as a mechanical structure or optical planar integrated circuit may involve a computationally-demanding and detailed numerical simulation. In many cases, it is feasible to obtain a simpler version of the model using approximations that is qualitatively similar to the full model. For example, Vitali et al. (2002) used a finite element model of a stiffened panel with a crack for the high fidelity model to capture accurately the stress next to the crack tip, and a low fidelity model without the crack to compute nominal stresses and strains. Here, it is assumed that different levels of modeling, in terms of accuracy, are available for our physical problem. It is possible to generalize the approach to multiple sets of data with different fidelity levels but, for readability sake, the present method description is limited to two datasets. The most accurate set, which is also the more demanding in terms of computational time, is represented by training points \(\mathcal {X}_{e}\) and their corresponding outputs z e . The coarse version of the simulation model is represented by \(\mathcal {X}_{c}\) and z c . It is assumed that the high fidelity sampling points are also in the training dataset of the low fidelity model. With this condition, the multifidelity approximation is built using the auto-regressive model of Kennedy and O’Hagan (2000):

where ρ is a scaling factor and y d the function representing the difference between the expensive process and the scaled coarse process. This formulation allows different levels of fidelity to have different correlation structures. This implies that the RBF parameters for the coarse process are not be the same as the parameters for the expensive one. In order to estimate the RBF of the coarse process, the coarse dataset is considered independently of the expensive one and this leads to the parameters estimation described by (4). Once \({\left ({\begin {array}{cc}{\boldsymbol {\beta }_{c}}^{T}&{\boldsymbol {\alpha }_{c}}^{T} \end {array}} \right )}\)are obtained, the RBF parameters related to the difference function have to be estimated. With the auto-regressive model, the assumption is made that, given the point x i evaluated on the coarse model, no more information can be learnt about z e i from the coarse model. This indicates that only \({y_{c}}\left (\mathbf {x}_{e} \right )\) is considered in this estimation. If the coarse process has not already been evaluated at these points, the values predicted by the previously built RBF are used, which relax the condition that \(\mathcal {X}_{e} \subset \mathcal {X}_{c}\). Then the following linear system has to be solved, where \({\left ({\begin {array}{cc}{\boldsymbol {\beta }_{d}}^{T}&{\boldsymbol {\alpha }_{d}}^{T} \end {array}} \right )}\) corresponds to the RBF parameters of the difference model:

This linear system depends on the scaling factor ρ. As for the Gaussian RBF parameter, the scaling factor may be determined using the leave-one-out error estimation on the expensive dataset. If both γ and ρ have to be estimated, the minimization of the LOO error has to be done regarding both parameters. Once optimal parameters have been determined, the surrogate model of the expensive process is computed using (12).

An example of co-RBF metamodel is available on Fig. 3 left, in an analytical test case defined by Forrester et al. (2007). Four expensive points and eleven coarse points are evaluated to build the Gaussian co-RBF. The resulting interpolant is the dashed line and it is close the real model (solid line). The evolution of the LOO depending on γ d and ρ is also plotted on the right. The global minimum of the map is located at γ d = 1 and ρ = 1.74. The LOO error decreases sharply toward the optimal scaling factor, but it has a quite flat shape in the γ d dimension.

5 Co-RBF based optimization

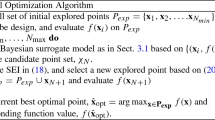

Gutmann (2001) developed an adaptive sampling method for optimization in a single fidelity RBF approach, which is similar to the Efficient Global Optimization (EGO) method of Jones et al. (1998). It is based on the standard uncertainty measure of (11) and an estimate of the real function minimum value. This algorithm has then been modified by Regis and Shoemaker (2006) to improve its local search property. Here the so-called original Gutmann-RBF algorithm is derived for the multifidelity RBF framework.

The estimated global minimum of the high fidelity function is denoted as z ∗. The co-RBF interpolation is re-built with the modified accurate training set containing \((\mathcal {X}_{e},\mathbf {z}_{e})\) and \(({x_{e}}^{*},z^{*})\) where \({x_{e}}^{*} \notin \mathcal {X}_{e}\). The point \({x_{e}}^{*}\) to be evaluated and added to training dataset, is the one that leads to a re-built co-RBF which is most ”reasonable”. An example of what reasonable means is available on Fig. 4 with a single fidelity approach. The target value chosen is represented by the dashed line and two different locations are tested. The upper right plot is the negative log value of the Gutmann criterion. The lower right plot is the best infill points to evaluate than the one on the lower left plot according to this criterion. This point is also in the area of the true global optimum of the real function.

Gutmann derived an infill criterion that indicates the next evaluation point to be added to the RBF metamodel training dataset. After multiple iterations, the minimum of the RBF converges toward the global minimum of the function. The following assumptions are needed to transpose this method into the co-RBF framework. First, the evaluation time of the coarse model is considered negligible compare to the accurate model, then it can be evaluated a large number of times. Thus, it is possible to build an accurate metamodel of this function and there is no need to add points to the coarse training dataset during optimization. The expensive function is the only one that is called during the refinement process. Second, since the difference model is the one built using accurate model evaluation, the infill criterion of Gutmann is computed using the prediction error of RBF model φ d . The formula derived in (14) is the one used in the multifidelity framework:

where \({u_{n}}\left ({\mathbf {x}} \right ): = {\left ({\varphi \left ({\left \| {{\mathbf {x}} - {{\mathbf {x}}_{1}}} \right \|} \right ), {\ldots } ,\varphi \left ({\left \| {{\mathbf {x}} - {{\mathbf {x}}_{n}}} \right \|} \right )} \right )^{T}}\) and \({\mathbf {F}_{\mathbf {x}}} = \left [ {\begin {array}{cc} {{{\mathbf {x}}^{T}}}&1 \end {array}} \right ]\). The selection of the goal value z ∗ for the global optimum has to be explained. In Gutmann approach, it is cyclically defined in the interval \(\left [-\infty , \min (\hat y_{e}) \right ]\), where low values corresponds to global search while values close to the actual minimum of the metamodel leads to local search. The convergence of the whole optimization algorithm to the global minimum is proven (Gutmann 2001) in the linear, cubic and thin plate spline cases if the goal value is selected using the following procedure. Let N be the cycle length and p be a permutation of \(\{1,\dots ,n\}\) such that \(y(x_{p(1)}) \le {\dots } \le y(x_{p(n)})\). The number of points in the initial training set is n 0 and n is the current number of points in the training dataset during optimization. The goal value is set as follow:

where

The values of W n are decreasing during a cycle so that it allows moving from a global to a local infill criterion. At each iteration, the quantity \(-\log (h_{n})\) is minimized in order to avoid numerical difficulties. Once again, the CMA-ES algorithm is used to get the optimum of the Gutmann criterion since it is a multi-modal objective function. The cycle length is set to 5 in this work.

6 Analytical benchmark

A benchmark of two analytical metamodeling test problems, with different characteristics, has been defined to compare multifidelity surrogate modeling methods. The problems have been used by Xiong et al. (2013) for illustration on multifidelity sequential design. The first one is a simple two-dimensional problem where the basic co-RBF prediction capability is tested. The second problem allows checking if higher dimensional problems are better approximated by co-RBF or by co-kriging. The co-kriging model is built using the ooDACE Toolbox (Couckuyt et al. 2014) and the co-RBF code is implemented using the commercial software package MATLAB (The MathWorks Inc., U.S.A.). For each problem, the co-RBF prediction error is compared to the co-kriging one. The accuracy is analyzed on different sampling plan sizes and results are averaged on one hundred initial random space-filling designs. The evolution of the prediction error over the combination of different expensive and coarse evaluations is plotted. The mean absolute percentage error (MAPE) on one hundred test points of the expensive code is computed for both metamodels as the measure of accuracy. The Gaussian correlation function is used to build both metamodels and the correlation length parameter γ is optimized by MLE for co-kriging and by LOO for co-RBF. Its bounds are set by the minimal and maximal distances (d i s t m i n and d i s t m a x ) between the points in the training dataset: γ ∈ [1/(2 × d i s t m a x );1/(2 × d i s t m i n )]. This heuristic rule is often employed to simplify the correlation length estimation by resticting the optimization space. Those bounds allow sometimes a better conditionning of the correlation matrix.

6.1 Test function 1

The first test problem is based on a function from Currin et al. (1991). The high fidelity function and the coarse version are detailed in (17).

The results obtained for this problem are globally the same for both methods. The average of the prediction error on Figs. 5 and 6 is 2.07% ± 0.53 for co-kriging and 2.16% ± 0.58 for co-RBF. As expected, if the number of expensive or coarse model evaluations in the training sample increases, the accuracy of both models is improved.

Mean value of the prediction error on Currin et al. (1991) problem over 100 random initial designs

Standard deviation of the prediction error on Currin et al. (1991) problem over 100 random initial designs

The analysis of scaling factor estimation on Fig. 7 (right) reveals that the LOO provides a higher value of ρ when the number of expensive points increases. As an indicator, the mean value of the ratio between the low and high fidelity model is arround 1.55 . The LOO leads to a higher value of the co-RBF scaling factor than this indicative ratio as the number of expensive points increases. At end, the coRBF is a little more accurate for different combinations of High fidelity - Low fidelity evaluations in Table 4 compared to co-kriging. The correlation length estimation is also depicted on Fig. 7 (left) for γ d2. It reveals that the MLE for co-kriging brings higher value of γ d2 than LOO for co-RBF. This is also observable for all the correlation lengths.

Left- Correlation length γ d2. Right - Scaling factor ρ. These two parameters are estimated for co-kriging and co-RBF over 100 random initial design on Currin et al. (1991) problem

6.2 Test function 2

The second test is a larger dimensional problem based on the borehole model (Morris et al. 1993) and is derived by Xiong et al. (2013) as a multi-fidelity model. It describes the flow of water through a borehole drilled from the ground surface through two aquifers (18). The eight inputs and their ranges are detailed in Table 5.

The mean value of the prediction error for each multifidelity metamodel is plotted on Fig. 8. The average of the mean prediction error is 0.94% ± 0.66 for co-kriging and 2.04% ± 1.12 for co-RBF. Co-kriging prediction error is clearly lower than co-RBF on this example (see Table 6). The accuracy of both methods increases with the number of expensive and coarse evaluations. The standard deviation of the error on Fig. 9 reveals the same behavior as for the mean value.

The small results disparity between the two methods lies once again in the parameters estimation. The same behavior as test function 1 is observed on Fig. 10: the correlation length γ d for the difference model is underestimated with the LOO criterion and the scaling factor ρ is less stable for co-RBF compared to co-kriging. The mean of the ratio between the two models is arround 0.4 and the LOO gives a higher value of the scaling factor as the number of expensive evaluations increases.

7 Metamodeling and optimization of the photoacoustic cell

Multi-fidelity metamodels are built, with Gaussian basis functions, using five to twenty expensive function calls and ten to one hundred coarse function calls. Each combination of expensive and coarse evaluations are built over five different initial samples. The prediction accuracy is computed using the MAPE on the same ten extra points of the expensive code for all results. The MAPE is then averaged over the five different results by combination of expensive and coarse model.

The mean prediction error of the approximation of the cell resonance frequency is plotted on Fig. 11. Here co-kriging globally brings a better approximation than

co-RBF. As a quantitative measure, the average of

the mean prediction error of the co-kriging is 0.45% ± 0.20 and the one for co-RBF is 0.73% ± 0.42 (Fig. 12). The accuracy of co-RBF is improved when the number of coarse and expensive evaluations increases but this improvement is less clear for co-kriging. At end, the co-RBF is as accurate as co-kriging for the combination of 20-100 points with 0.34% of prediction error compared to 0.36% for co-kriging.

The optimization of the correlation length γ d for the difference model seems to be underestimated with the LOO criterion for co-RBF. On Fig. 13 (left), the MLE optimization with co-kriging leads to higher values of γ d3. This result is also observed for the other correlation length and may explain the difference in terms of accuracy between the two metamodels. The estimation of the scaling factor leads to the same results for both method with a little more variance in the co-RBF data, on Fig. 13 (right).

The second output, i.e. the maximum signal detected by the PA cell, is now approximated. The mean prediction error is presented on Fig. 14. The co-kriging gives better results than co-RBF on the overall combination map but there is not a clear correlation between more accuracy and larger number of coarse evaluations points. The accuracy improvement is clear for co-RBF only when the number of expensive points increases. The average of the mean prediction error of the co-kriging is 2.80 ± 0.07 and the one for co-RBF is 3.80 ± 0.15 (Fig. 15).

The coarse model does not seem to bring more information to the approximation of the signal with co-RBF. Thus, it may not be useful to use the Kreuzer model in a multifidelity metamodeling of the signal. The number of expensive points has to be increased in order to improve significantly the prediction accuracy. On Fig. 16 the LOO underestimates the scaling factor value compared to MLE. This may explain the higher prediction error with co-RBF metamodel. Correlation lengths estimated for co-RBF are also still lower than the ones for co-kriging.

In order to assess the benefit of using a multi-fidelity approach for the approximation of both outputs, single-fidelity and multi-fidelity RBF metamodels are compared. Table 7 summarizes the prediction error when RBF model is built on the same expensive datasets that are used for the multi-fidelity problem. The table includes a reminder of co-RBF and co-kriging accuracy obtained with 100 coarse evaluations. For each number of expensive calls, the results are once again averaged over the same five different initial samples.

The accuracy on the resonance frequency prediction is clearly improved with multi-fidelity modeling compared to the single fidelity one. The RBF prediction leads to results similar to those of the multi-fidelity method, as the number of expensive evaluations increases. Results on the detected signal show that multifidelity and classic RBF prediction errors are not very different. The Kreuzer model for the signal adds information to multifidelity model but it does not improve accuracy as much as for the resonance frequency output.

Here, the improvement brought by the multifidelity approach on the prediction accuracy of the resonance frequency can be used to reduce the computational time of the FLNS model. Since the result brought by this time consuming model depends on a frequency sweep, it is possible to reduce the search range if an estimate of the cell resonance frequency is available. The actual sweep is 50 values between 1000 Hz and 10000 Hz, then the resonance is estimated by spline interpolation of the dataset. By using the prediction of the resonance frequency of the co-RBF, the sweep range is reduced to 5 values and thus, the computational time of the FLNS to 40 minutes. This strategy is now implemented when the FLNS model is called and a co-RBF on the resonance frequency has already been built (for example during a sequential optimization of the photoacoustic cell using co-RBF).

7.1 Optimization of the gas sensor geometry

Since a multifidelity metamodel of the signal detected by the sensor is now available, an adaptative sampling strategy to get the optimum value is set up. The method described in Section 5 is used as an infill criterion for the co-RBF. It is compared to the traditional Efficient Global Optimization (EGO) algorithm adjusted to the multifidelity framework (Forrester et al. 2007). Five different initial DOE are tested with 100 points from the coarse model and 5 from the accurate one. The number of additional calls to the accurate simulation model is arbitrarily set to 16. The results are presented in Table 8, where the value of the minimum obtained and its location are compared for each method.

Both methods lead to similar results. Two optimal locations are found, the first one when the capillaries and the chambers length are equal and the second when the capillaries and chambers diameter are equal. No significant difference in sensor performance is noted in the two solutions obtained.

The photoacoustic cell could be approximated by a spring-mass system, where capillaries are the mass and chambers the springs. The two optimal solutions give a close equivalent mass, which may explain that the sensor behavior is nearly the same for both geometries. Optimums lie close to the parameters space boundaries, but it is not possible to relax them, as the bounds have been chosen to fulfill some integration requirements of the gas sensor in a higher level system. In terms of algorithm performances, Gutmann method adapted to multifidelity produced equivalent results to the well known EGO and somewhat more accurate minimum location. This first comparison would interestingly be extended to a higher dimensional problem, where the co-RBF seems to be more accurate.

8 Conclusion

A methodology for multifidelity surrogate model based on RBF has been proposed. The metamodel is constructed using an auto-regressive model that was first introduced for co-kriging. A comparison on an analytical benchmark shows that co-kriging and co-RBF bring similar results on low dimensional problems. In higher dimension, co-kriging prediction error is lower compared to co-RBF when the number of sampling points is small on our test function. The results presented on Figs. 7, 10, 13 and 16 show a clear difference between leave-one-out and maximum likelihood estimation for metamodels parameters optimization. The LOO tends to understimate the correlation lengths in comparison to the ones obtained with MLE. The LOO procedure used for co-RBF builds an interpolant that is less sensitive to a loss of information in the training dataset. But the variance in the scaling factor, as the number of expensive point increases, indicates that the result may depend too much on the training sample. The MLE brings a more stable value of the scaling factor.

The new method has also been validated on a real engineering problem: the design of a photoacoustic based gas sensor. The benefit of a multifidelity approach to approximate the resonance frequency of the cell with co-RBF has been demonstrated. Then an extension of the Gutmann algorithm to multifidelity framework has been applied to optimize the photoacoustic cell. A benchmark between co-kriging and co-RBF based optimization on the gas sensor design led to two optimal solutions. The efficiency of the co-RBF based method is close to that of EGO procedure, but performed somewhat better on optimums location. In the near future, these promising results will be completed by studying the optimization of photonic planar integrated circuits involving a larger number of parameters.

References

Bauer R, Stewart G, Johnstone W, Boyd E, Lengden M (2014) 3D-printed miniature gas cell for photoacoustic spectroscopy of trace gases. Opt Lett 39(16):4796–4799. doi:10.1364/OL.39.004796

Costa JP, Pronzato L, Thierry E (1999) A comparison between Kriging and radial basis function networks for nonlinear prediction. In: Nonlinear signal and image processing, pp 726–730

Currin C, Mitchell T, Morris M, Ylvisaker D (1991) Bayesian prediction of deterministic functions, with applications to the design and analysis of computer experiments. J Amer Stat Assoc 86(416):953. doi:10.2307/2290511

Couckuyt I, Dhaene T, Demeester P (2014) ooDACE toolbox: a flexible object-oriented kriging implementation. J Mach Learn Res 15(1):3183–3186

Dong H, Song B, Wang P, Huang S (2015) Multi-fidelity information fusion based on prediction of kriging. Struct Multidiscip Optim 51(6):1267–1280. doi:10.1007/s00158-014-1213-9

Dyn N, Levin D, Rippa S (1986) Numerical procedures for surface fitting of scattered data by radial functions. SIAM J Sci Stat Comput 7(2):639–659. doi:10.1137/0907043

Forrester AI, Sóbester A, Keane AJ (2007) Multi-fidelity optimization via surrogate modelling. Proc R Soc A: Math Phys Eng Sci 463(2088):3251–3269. doi:10.1098/rspa.2007.1900

Forrester AI, Sóbester A, Keane AJ (2008) Engineering design via surrogate modelling a practical guide. Wiley, Chichester, West Sussex, England; Hoboken, NJ

Glière A, Rouxel J, Brun M, Parvitte B, Zéninari V, Nicoletti S (2014) Challenges in the design and fabrication of a lab-on-a-chip photoacoustic gas sensor. Sensors 14(1):957–974. doi:10.3390/s140100957

Gutmann HM (2001) A radial basis function method for global optimization. J Global Optim

Hansen N, Kern S (2004) Evaluating the CMA evolution strategy on multimodal test functions. In: Yao X et al (eds) Parallel problem solving from nature PPSN VIII, vol 3242. Springer, LNCS, pp 282–291

Holthoff E, Heaps D, Pellegrino P (2010) Development of a mems-scale photoacoustic chemical sensor using a quantum cascade laser. Sens J IEEE 10(3):572–577. doi:10.1109/JSEN.2009.2038665

Jakobsson S, Andersson B, Edelvik F (2009) Rational radial basis function interpolation with applications to antenna design. J Comput Appl Math 233(4):889–904. doi:10.1016/j.cam.2009.08.058

Jin R, Chen W, Simpson T (2001) Comparative studies of metamodelling techniques under multiple modelling criteria. Struct Multidiscip Optim 23(1):1–13. doi:10.1007/s00158-001-0160-4

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Global Optim 13(4):455–492

Kennedy MC, O’Hagan A (2000) Predicting the output from a complex computer code when fast approximation are available. Biometrika 87(1):1–13

Kreuzer LB (1977) The physics of signal generation and detection. In: Pao YH (ed) Optoacoustic spectroscopy and detection. Academic Press, pp 1–25

Krige DG (1951) A statistical approach to some basic mine valuation problems on the witwatersrand. J Chem Metallurg Min Soc South Africa 52(6):119–139

Le Gratiet L, Cannamela C (2015) Cokriging-Based sequential design strategies using fast cross-validation techniques for multi-fidelity computer codes. Technometrics 57(3):418–427. doi:10.1080/00401706.2014.928233

Mackman TJ, Allen CB, Ghoreyshi M, Badcock KJ (2013) Comparison of adaptive sampling methods for generation of surrogate aerodynamic models. AIAA J 51(4):797–808. doi:10.2514/1.J051607

Micchelli CA (1986) Interpolation of scattered data: distance matrices and conditionally positive definite functions. Construct Approx 2(1):11–22. doi:10.1007/BF01893414

Miklós A, Hess P, Bozóki Z (2001) Application of acoustic resonators in photoacoustic trace gas analysis and metrology. Rev Sci Instrum 72(4):1937–1955. doi:10.1063/1.1353198

Morris MD, Mitchell TJ, Ylvisaker D (1993) Bayesian design and analysis of computer experiments: use of derivatives in surface prediction. Technometrics 35(3):243–255

Powell MJD (1987) Radial basis functions for multivariable interpolation: a review. In: Mason J C, Cox M G (eds) Algorithms for approximation. Clarendon Press, New York, pp 143–167

Powell MJD (2001) Radial basis function methods for interpolation to functions of many variables. In: HERCMA, pp 2–24

Regis RG, Shoemaker CA (2006) Improved strategies for radial basis function methods for global optimization. J Glob Optim 37(1):113–135. doi:10.1007/s10898-006-9040-1

Rippa S (1999) An algorithm for selecting a good value for the parameter c in radial basis function interpolation. Adv Comput Math 11(2–3):193–210

Rouxel J, Coutard JG, Gidon S, Lartigue O, Nicoletti S, Parvitte B, Vallon R, Zéninari V, Glière A (2016) Miniaturized differential Helmholtz resonators for photoacoustic trace gas detection. Sens Actuat B: Chem 10.1016/j.snb.2016.06.074

Santner TJ, Williams BJ, Notz WI (2003) The design and analysis of computer experiments. Springer-Verlag, Berlin-Heidelberg

Schonlau M, Welch WJ (1996) Global optimization with nonparametric function fitting. In: Proceedings of the ASA, section on physical and engineering sciences, pp 183–186

Sun G, Li G, Stone M, Li Q (2010) A two-stage multi-fidelity optimization procedure for honeycomb-type cellular materials. Comput Mater Sci 49(3):500–511. doi:10.1016/j.commatsci.2010.05.041

Vitali R, Haftka R, Sankar B (2002) Multi-fidelity design of stiffened composite panel with a crack. Struct Multidiscip Optim 23(5):347–356. doi:10.1007/s00158-002-0195-1

Wang GG, Shan S (2007) Review of metamodeling techniques in support of engineering design optimization. J Mech Des 129(4):370–380

Xiong S, Qian PZG, Wu CFJ (2013) Sequential design and analysis of high-accuracy and low-accuracy computer codes. Technometrics 55(1):37–46. doi:10.1080/00401706.2012.723572

Zéninari V, Kapitanov VA, Courtois D, Ponomarev YN (1999) Design and characteristics of a differential helmholtz resonant photoacoustic cell for infrared gas detection. Infrared Phys Technol 40(1):1–23

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Durantin, C., Rouxel, J., Désidéri, JA. et al. Multifidelity surrogate modeling based on radial basis functions. Struct Multidisc Optim 56, 1061–1075 (2017). https://doi.org/10.1007/s00158-017-1703-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-017-1703-7