Abstract

We study the causal effect of state-mandated (central) exit examinations (CEEs) on student performance in Germany and find a small positive effect. We also investigate what actually drives this effect. We find that the teachers’ main reaction to CEEs is to increase the amount of homework and to check and discuss homework more often. Students report increased learning pressure, which has sizeable negative effects on student attitudes toward learning. Students who take central exit exams in mathematics like mathematics less, think it is less easy, and are more likely to find it boring.

1 Introduction

Since the publication of the Third International Mathematics and Science Study (TIMSS) and Programme for International Student Assessment (PISA) test results, school reform has attracted renewed interest among the German public. In particular, the results of PISA have sparked intense political discussions about the need to reform the German school system. Part of the discussion has focused on insufficient financial resources flowing into the school system, as exemplified by repeated complaints about excessively large class sizes. Although increasing financial inputs into the education system might raise outputs (measured, e.g., as average student performance) to some degree, it has to be kept in mind that the education system operates under decreasing marginal returns. In a developed country like Germany, it is at best unclear whether the returns are sufficiently high to warrant a general increase in the education budget. In fact, estimated effects of school resources on student achievement are often small and sometimes even inconsistent (as exemplified by the class size discussion). Increasing resources alone does not appear to be a very promising approach, especially when dealing with a broad target population (e.g., Hanushek 1996; Hoxby 2000).

An alternative to an input-oriented approach is to change the institutional setup of the school system (or the education system in general). From an economist’s point of view, creating the right incentives for students and schools can increase the average performance with given financial inputs. Thus, changing the institutional setup appears to be a more cost-efficient approach. But which institutions provide the right incentives in schools? In general, economists favor output-oriented governance of the public school system: define the goals of education, give incentives for attainment of these goals, and allow schools to choose the appropriate means to reach these goals. The main thrust is to introduce more competition into the school system and to develop indicators that allow comparing the performance of schools (e.g., standardized tests).

German education policy has reacted to the “PISA shock” with what is sometimes termed a “paradigm shift” (see Kultusministerkonferenz 2005): the move from the old input-oriented to a more output-oriented governance. One key element of this new paradigm is a set of national performance standards, which became mandatory in the school year 2005/2006 and which define expected competencies and performance levels for students at different ages and in different secondary school tracks (see Section 2 for a description of the German school system). A closely related issue that has received a great deal of attention is setting common standards by establishing state-mandated or central exit examinations (CEEs) throughout the country. This discussion is of particular interest in Germany because federal states that already employed CEEs in the past generally outperformed non-CEE states in standardized achievement tests. In response to PISA, all but one of the German federal states that did not previously have CEEs have introduced them. Furthermore, a group of seven German federal states has recently introduced regular standardized tests of student skills at different grades in primary and secondary schools.

The implicit assumption behind these policy changes is that states with CEEs (or standardized tests in general) outperform non-CEE states because of the beneficial effects of CEEs and not because of some other omitted variable at the state level. The theoretical literature almost unanimously shows that CEEs and central standards improve student performance and might even raise welfare (Costrell 1997; Effinger and Polborn 1999; Jürges et al. 2005a). CEEs are purported to function better as incentives for students, teachers, and schools than decentralized examinations (e.g., Bishop 1997, 1999). Students, for example, benefit because the results of CEEs are more valuable signals on the job market than the results of noncentral examinations, simply because the former are comparable. Furthermore, students who have to meet an external standard at the end of their school career have no incentive to establish a low-achievement cartel in class, possibly with the tacit consent of the teachers.

Much of the existing empirical literature has been devoted to estimating the effect of CEEs on student performance without trying to figure out how exactly CEEs work. In this paper, we aim to look inside the black box and study the possible channels through which central exams raise performance. In particular, we look at differences in teacher and student effort as well as attitudes toward learning that might have a beneficial effect on the learning process. For instance, teachers whose students have to face standardized examinations at the end of secondary school might adopt more efficient teaching styles, but they might also simply increase the students’ workload.

In this paper, we focus on the effect of exit exams at the end of lower secondary education where a “natural experiment” provided in the German school system helps to infer the causal effect of CEEs on performance and teaching practices. In CEEs, students are generally examined in only one of the two subjects tested in TIMSS, namely, mathematics. We calculate the between-state differences in the mathematics/science differences in test scores, teaching practices (as perceived by students and teachers), student behavior and attitudes. Under fairly weak identifying assumptions described below, these differences-in-differences can be interpreted as the causal effect of CEEs on outcomes.

The paper proceeds as follows: in Section 2, we describe the relevant features of the German school system. Section 3 gives a schematic overview of our conceptual framework of the learning process and Section 4 explains our identification strategy in detail. In Section 5, we give a brief description of the German TIMSS 1995 data and Section 6 contains the estimation results for differences-in-differences in a large number of education process outcomes. Finally, we draw some conclusions in Section 7.

2 Institutional background

We now give a concise description of the German school system, trying to emphasize those aspects that are most relevant for understanding CEEs in the German context (a detailed description of the German school system can be found in Jonen and Eckardt 2006). Figure 1 gives a stylized overview of primary and secondary education in Germany.

All children in Germany attend primary school, which covers grades 1 to 4 or, in some states, grades 1 to 6. There is no formal exit examination at the end of primary school. Rather, students are generally allocated to one of the three secondary school tracks on the basis of their ability and performance in primary school (for an empirical analysis of biases in the secondary school track allocation process, see Jürges and Schneider 2007).

The three main types of secondary school tracks are the basic, intermediate, and academic track, each leading to a specific leaving certificate. The basic track provides its students with basic general education and usually comprises grades 5 to 9 (or 10 in some states). The intermediate track provides a more extensive general education, usually comprising grades 5 to 10. The academic track provides an in-depth general education covering both lower and upper secondary levels and usually comprises grades 5 to 13 (or 12 in some former German Democratic Republic [GDR] states). Depending on their academic performance, students can—at least theoretically—switch between school types.Footnote 1

At the end of lower secondary level, basic or intermediate track students who complete grade 9 or 10 successfully are awarded a leaving certificate. They are only required to take CEEs in some states (Table 1 describes the situation in 1995, the year in which the TIMSS data were collected). Six states had CEEs at the end of the intermediate track, and only four had them at the end of basic track.Footnote 2 Students leaving basic or intermediate track usually embark on vocational training in the “dual” system, so-called because it combines part-time education in a vocational school with on-the-job training with a private or public sector employer.

Academic track students are not issued a leaving certificate after completing lower secondary level, but are admitted to the upper level of secondary education, which eventually leads to a university-entrance diploma. CEEs are most common at the end of upper secondary education. However, as Table 1 shows, decentralized systems of exit examinations at the end of upper secondary education exist as well. In the absence of central exit examinations, teachers devise their own exams, subject to the approval of the school supervisory authority.

German exit examinations never cover all of the subjects taught at school. For the university-entrance diploma, students can choose four or five subjects (the choice is limited and varies from state to state). This leads to self-selection problems, which are unlikely to be solved convincingly with the available TIMSS data. At basic or intermediate track, German and mathematics are always tested in the exit examinations, i.e., mathematics is compulsory for all students in these two school types taking exit examinations. In order to assess the causal effect of CEEs, we will thus concentrate on the mathematics performance, teaching practices, student behavior, and attitudes in basic or intermediate track as the main outcome variables to be affected by CEEs.

3 Conceptual framework

A stylized conceptual model of the education process underlying our study is shown in Fig. 2. Student achievement is typically viewed as the main outcome of the education process and education policy is often evaluated based on this outcome only. What is missing is an analysis of how education policy and institutions are affecting the process of teaching and learning. In the case of CEEs, the earlier empirical literature has mainly analyzed the causal effect of CEEs on student achievement. Theoretical models, however, also consider the channels through which CEEs work in more detail. For instance, CEEs are thought to affect students’ and teachers’ effort and thereby improve student achievement. But raising effort is also costly for students and teachers, as more effort reduces utility. This could result in a more negative attitude of students toward school. However, a better knowledge of, for instance, mathematics might well increase the student’s interest in mathematics and result in a more positive attitude, and teachers might find it more enjoyable to teach more motivated students. If this is the case, CEEs promise to grant a “free lunch.” In this paper, we try to find out how CEEs affect effort, motivation, and attitudes of students and teachers and shed more light on the costs and benefits of CEEs.

While it is widely accepted that the main determinant of individual educational success is parental background, the influence of the parents, i.e., the electorate, on institutions is of importance as well. We indicate this by the (dashed) arrow from parental background to institutions. For estimating the causal effect of CEEs, this constitutes an important potential source of endogeneity of institutions, which we discuss in the next section.

4 Identification

The most basic approach to identify the effect of CEEs on any outcome would be to estimate simple differences between average outcomes in CEE states and non-CEE states, controlling for student background and other variables of interest. Simple differences in outcomes across CEE and non-CEE states are of limited value, however, because they ignore two potentially confounding effects: a composition effect and endogeneity of CEEs. The first problem, the composition effect, stems from the fact that, in CEE states, more students attend basic or intermediate track and fewer students attend academic track than in non-CEE states. Since students are selected into secondary schools mainly on the basis of their achievement in primary school, student achievement in CEE states (conditional on school type) will be higher simply because there are, on average, relatively more able students in each type of school. We will use information on the proportion of students in each school type to account for this kind of composition effect. Different compositions of the student body in German secondary schools across states are interpreted as the result of different critical ability levels α chosen to sort students. As a proxy for α, we will use \(\Phi^{-1}(1 - a)\), the \((1 - a)\) quantile of the standard normal distribution, where a is the proportion of eighth grade students aspiring to a high school diploma (see Table 1).
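The selectivity proxy is a one-line computation. The following minimal sketch, which uses only the Python standard library, shows how the proxy falls as the share a of students aspiring to the high school diploma rises. The function name `selectivity_proxy` and the example shares are hypothetical and are not taken from Table 1.

```python
from statistics import NormalDist


def selectivity_proxy(a: float) -> float:
    """Return Phi^{-1}(1 - a), the standard normal quantile used as a proxy for alpha.

    a is the proportion of students aspiring to the high school diploma (0 < a < 1).
    """
    if not 0.0 < a < 1.0:
        raise ValueError("a must lie strictly between 0 and 1")
    return NormalDist().inv_cdf(1.0 - a)


# Hypothetical shares for illustration only (not the values in Table 1).
for share in (0.25, 0.35, 0.50):
    print(f"a = {share:.2f} -> alpha proxy = {selectivity_proxy(share):.3f}")
```

A smaller share a implies a higher critical ability level, i.e., a more selective academic track and a relatively more able student body in the basic and intermediate tracks.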

Besides the difficulties due to a composition effect, the attempt to estimate the effect of CEEs is subject to the fundamental problem of causal inference, namely, that it is impossible to observe the individual treatment effect (Holland 1986). One cannot observe the same teacher or student simultaneously in a state with and in a state without CEEs. This poses no problem only if selection into treatment is purely random. However, self-selection into treatment is one of the most frequent problems encountered by researchers trying to evaluate the causal effects of policy measures. In our context, this can happen if parents vote with their feet and move to another state in order to send their children to schools with a CEE (or to avoid CEEs). Parents in non-CEE states who live near a CEE state may choose to send their children to school in the neighboring state. However, this will not apply to many parents. In the short run, the treatment status might be considered exogenous, given the institutional arrangement in each state. In the long run, however, institutions can change and affect all parents. But clearly, not only parents can vote with their feet; teachers might well be more mobile than parents when deciding where to work. However, the between-state mobility of teachers, who are mostly state civil servants, is rather limited. As an example, consider the mobility between Bavaria (one of the large southern CEE states) and the rest of Germany (see Table 2). In 2001, only 102 teachers applied to be transferred from a non-Bavarian school to a Bavarian school (this was less than 0.2‰ of all non-Bavarian teachers). Only 22 teachers were granted the transfer. Of about 87,500 Bavarian teachers, only 38 applied to be transferred to another German state. Moreover, the observed mobility has been mainly between Bavaria and neighboring Baden-Württemberg, which is another large CEE state.

Even if mobility of parents and teachers is low, the existence of CEEs might reflect unobserved variables such as the importance attached to education by the electorate of a particular state, i.e., parental attitudes toward education and achievement in school (see the dashed arrow in Fig. 2). If CEEs are correlated with such attitudes, simple differences between CEE and non-CEE states will be a biased measure of the causal CEE effect.

Our strategy to isolate CEE effects from differential parental attitudes and other unobserved variables draws on variation within states. As explained above, the fact that CEEs only apply to a narrow range of subjects offers a source of exogenous variation that can be used to identify the causal effect of CEEs. When mathematics is a CEE subject but science is not and if CEEs have a causal effect, the observed outcome differences should be larger in mathematics than in science.

Formally, our estimator can be described as follows (for simplicity, let us assume for a moment that all outcomes are measured continuously). Consider two regressions: one to explain outcomes related to mathematics \(y_i^m\), measured at the student level (the index i denotes the student):

\[ y_i^m = \mu_i + X_i \beta^m + \delta C_i + \varepsilon_i^m \qquad (1) \]

and another to explain outcomes related to science \(y_i^s\):

\[ y_i^s = \mu_i + X_i \beta^s + \varepsilon_i^s \qquad (2) \]

where \(\mu_i\) is some student-specific characteristic (e.g., general ability), \(X_i\) is a vector of covariates that might affect mathematics and science outcomes differently, \(C_i\) is a dummy variable for central exams in mathematics, and \(\varepsilon_i^k,\; k = m, s\) are i.i.d. error terms. Subtracting Eq. 2 from Eq. 1 yields:

\[ y_i^m - y_i^s = X_i \left( \beta^m - \beta^s \right) + \delta C_i + \left( \varepsilon_i^m - \varepsilon_i^s \right) \qquad (3) \]

where δ is the parameter of interest. The main advantage of this estimator is that each student serves as her own control group. By taking differences, \(\mu_i\) is swept out of the regression. Estimating Eq. 3 allows us to control for a great deal of unobserved heterogeneity at the individual level, such as general ability, general attitudes toward learning and academic success, or socioeconomic background. When looking at “subjective” outcome variables, the difference-in-difference estimator has another important advantage: it will sweep out all differences between CEE and non-CEE states that are due to differences in survey response styles across both types of states.
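The differencing argument can be illustrated with simulated data. The sketch below is not the authors' estimation code: it assumes a linear model in which a student effect enters mathematics and science outcomes identically, omits covariates, and uses hypothetical parameter values. The student effect is deliberately correlated with the CEE dummy so that a simple cross-state comparison of mathematics scores is biased. Without covariates, the regression of the within-student difference on the dummy reduces to a difference in group means.

```python
import random
from statistics import mean


def simulate(n=20000, delta=0.11, seed=0):
    """Simulate math and science outcomes that share a student effect mu_i.

    All parameter values are hypothetical. mu_i is correlated with the CEE
    dummy on purpose, so that the naive comparison is confounded.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        cee = 1 if rng.random() < 0.4 else 0
        mu = rng.gauss(0.5 * cee, 1.0)  # unobserved, higher on average in CEE states
        y_math = mu + delta * cee + rng.gauss(0.0, 0.5)
        y_sci = mu + rng.gauss(0.0, 0.5)
        rows.append((cee, y_math, y_sci))
    return rows


def group_gap(rows, value):
    """Difference in the mean of value(row) between CEE and non-CEE students."""
    treated = [value(r) for r in rows if r[0] == 1]
    control = [value(r) for r in rows if r[0] == 0]
    return mean(treated) - mean(control)


rows = simulate()
naive = group_gap(rows, lambda r: r[1])       # math scores only: confounded by mu_i
did = group_gap(rows, lambda r: r[1] - r[2])  # math minus science: mu_i cancels
print(f"naive gap: {naive:.3f}, differenced gap: {did:.3f}")
```

In this setup the naive gap absorbs the cross-state difference in the student effect, whereas the differenced gap should be close to the simulated effect of 0.11.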

In order for δ to identify the causal effect of CEEs on outcomes, we need identifying assumptions, specifically \(E\left[ {C_i \left( {\varepsilon _i^m -\varepsilon _i^s } \right)} \right]=0\). There are several ways in which this assumption might be violated, depending on the outcome variable. For instance, in the case of student test scores, there could be systematic indirect effects in the form of spillover from mathematics (more general skills) to science (more specific knowledge and skills). Negative spillovers from mathematics to science are also conceivable if students divert resources away from learning science to learning mathematics because the latter is tested against an external, and possibly higher, standard. If mathematics teachers also teach science, spillover can be thought of as teachers transferring more successful teaching strategies from one subject to another. In the analysis of test scores and student attitudes, the above assumption can be violated if CEE and non-CEE states differ systematically in their relative preference for mathematics rather than science. Also, unobserved student background (e.g., innate mathematics and science skills) must not differ between federal states. Usually, one can plausibly assume that such characteristics are equally distributed across German states. But as was mentioned in the discussion of the composition effect, we use selective subsamples of the student population. Mathematics skills may be more important than science skills when students are allocated to secondary school types. If the academic track skims off the students with the best mathematics skills (and mathematics ability is not perfectly correlated with science ability), students in states with a high proportion of students in basic or intermediate track (high α, see above) may have better mathematics skills than their peers in low-α states, but comparable science skills. 
Finally, it is also important that mathematics and science outcomes are comparable.
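To make the role of spillovers explicit, consider a purely illustrative extension. Assume that mathematics and science outcomes are linear in the CEE dummy, and that CEEs in mathematics also shift science outcomes by an amount γ. The symbol γ is hypothetical and introduced here only for illustration.

```latex
\begin{align*}
y_i^m &= \mu_i + X_i \beta^m + \delta C_i + \varepsilon_i^m, \\
y_i^s &= \mu_i + X_i \beta^s + \gamma C_i + \varepsilon_i^s, \\
y_i^m - y_i^s &= X_i \left( \beta^m - \beta^s \right) + (\delta - \gamma)\, C_i
                 + \left( \varepsilon_i^m - \varepsilon_i^s \right).
\end{align*}
```

Under these assumptions, the coefficient on the CEE dummy in the differenced regression is δ − γ. A positive spillover (γ > 0) would lead the estimate to understate the direct effect on mathematics, whereas a negative spillover (γ < 0), e.g., from students diverting effort away from science, would lead it to overstate that effect.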

The ideal comparison subject for our difference-in-difference strategy is one (a) for which we have TIMSS test scores and a lot of ancillary information about the teaching process, (b) that is “unrelated” to mathematics, to avoid problems of knowledge spillover or of teachers teaching both subjects, and (c) that is not tested in central exams. Unfortunately, this subject does not exist. The subject that, in our opinion, comes closest to meeting all three requirements simultaneously is biology. However, biology test scores and separate information on hours spent learning biology at home are not available in the TIMSS data. Thus, we use the corresponding information for science in general for our comparisons of outcomes across exam types.

Jürges et al. (2005b) give a detailed discussion of the plausibility of our identifying assumptions with respect to student achievement as the outcome variable. They argue that spillover from good mathematics skills to good performance in the TIMSS science test is likely to be very small because, of the 87 (released) science items, only four require mathematics skills, such as dividing by a fraction (see IEA TIMSS 1998). Negative spillover is likely, so that, strictly speaking, we are only able to measure the size of the effect of a partial introduction of CEEs (which includes the effect of students diverting time away from nontested to tested subjects). Sizeable spillover at the teacher level is probably less of a problem: fewer than 15% of the teachers teach both mathematics and biology. Relative preferences for mathematics versus science are most likely to be very similar in CEE and non-CEE states. Mathematics is a core subject in every state, accounting for roughly one-fifth of teaching time in primary schools and about one-seventh of teaching time in lower secondary schools, and there are no significant differences in relative teaching time between CEE and non-CEE states (Frenck 2001). Finally, we can account for the possibility of relative composition or selection effects by controlling for α in our differences-in-differences framework.

5 Data description

The international data set of TIMSS Germany contains data on a total of 5,763 seventh and eighth grade students and 566 teachers in 137 schools, collected in the 1994/1995 school year. Data were collected in 14 of the 16 German states (Baden-Württemberg and Bremen did not participate) and from all major types of secondary schools. However, for reasons explained above, we consider only basic or intermediate track students. Moreover, we deleted data from Saxony (where both mathematics and biology are tested centrally) from our sample.Footnote 3 Our working sample consists of 1,976 students in non-CEE states and 1,219 students in CEE states. In addition to the actual test results in mathematics and science, the TIMSS data contain a wide range of context variables on student backgrounds and attitudes, as well as on teachers and schools.

Despite the wealth of data available, we take a rather parsimonious approach and select a limited number of control variables for student and school background that have proven to have sizeable explanatory power for student achievement. Table 3 contains variable definitions and descriptive statistics, by the type of exit examination, for these variables. Student background, measured in terms of the number of books at home, differs only slightly by exit examination type—the proportion of students within each range is very similar in CEE and non-CEE states. There are far more students with an immigrant background in the non-CEE group than in the CEE group. This is largely attributable to the relatively low rates of immigration to eastern Germany where most states have CEEs (a legacy of the former GDR education system). Another major difference between students in CEE and non-CEE states is that, in the latter, a larger proportion of students have repeated class at least once.

Table 4 contains descriptive statistics for our dependent variables. Exact definitions and operationalizations are shown in Table 7 in the Appendix. The most notable difference between students in states with and without CEEs is their achievement in mathematics and science (scores were standardized to have a mean of 0 and a variance of 1; differences can thus be interpreted in terms of standard deviations). In mathematics, students in states with CEEs score on average nearly 0.6 standard deviations higher than those in states without CEEs. In science, the difference is somewhat less than 0.5 standard deviations. In both types of states, roughly three quarters of the students agree or agree strongly with the statement that they are usually good in mathematics or biology.

There are a number of statistically significant differences between CEE and non-CEE states in teaching practices, as reported by the students. For instance, in mathematics, it appears that CEE students more often copy notes from the board but less often work from textbooks or worksheets on their own. Teachers also appear to give homework less often, but homework is more often checked. Overall, however, the percentage differences are relatively small. In biology, the differences are much larger, in particular with respect to giving, checking, and discussing homework. While about half of the students in non-CEE states say that teachers give, check, and discuss homework pretty often or always, only 25% to 37% of the students in CEE states do so. Such large differences cast some doubt on the cross-state comparability of ordinal response scales such as the one used for this question. Rather, it seems that differential item functioning is at work, i.e., “pretty often” may mean different things in absolute terms depending on whether a student lives in a CEE or a non-CEE state.

Another noteworthy difference between students in CEE and non-CEE states is their attitude toward mathematics. CEE students are consistently less likely to like or enjoy mathematics, or to find it an easy subject, but they are more likely to find it boring. Differences with respect to biology are smaller and less often statistically significant. Again, differential item functioning might be an issue here. However, for our difference-in-difference analysis of causal effects of CEEs, this is less of a problem than it might seem at first sight. Since we use intrastudent variation in ordinal judgements, the only measurement assumption we make is that of response consistency, i.e., that students use the same response categories in the same way, independently of the subject they refer to (mathematics or biology/science). Students may differ in the way they use these answer categories: the construction of our dependent variables allows, for example, “once in a while” to mean the same frequency to one student as “pretty often” to another. Individual students’ response styles may also differ across questions. For instance, “pretty often” may mean a different frequency when used with the statement “We have a quiz or test” rather than with “The teacher gives us homework.” We only require that “pretty often” means the same when used for the same questions related to mathematics classes and to biology classes.

6 Regression results

Regression results are shown in Table 5. We only report the coefficients for the CEE dummy variable, which measure the effect of CEEs on various dimensions of student achievement, teacher and student behavior, and student attitudes. In other words, Table 5 shows the results of 24 regressions with different dependent variables but the same set of explanatory variables. Besides CEE, we use the explanatory variables described in Table 3 above: the number of books in the student’s home, student’s sex, grade, and immigration background, whether a student repeated class, region (East/West Germany), type of track (intermediate/basic), and α, the variable that reflects the selectivity of the student body in intermediate or basic tracks in the respective federal state. With the exception of class behavior, each dependent variable measures achievement, behavior, and attitudes in mathematics relative to biology (or in some instances, science in general). Thus, the CEE coefficient identifies differences-in-differences, as explained above.

Before actually discussing our results, a note on the interpretation of the effects shown for multinomial logit models might be helpful. All models are three-category models. The values shown in Table 5 are relative risks and their standard errors (computed with the delta method). Significance levels are based on t tests using the original logit coefficients, however.
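The conversion from logit coefficients to relative risks can be sketched as follows. The sketch assumes that the reported relative risks are exponentiated multinomial logit coefficients, in which case the delta method gives a standard error of approximately exp(b) times the standard error of b. The function names and the numerical inputs are hypothetical and are not taken from Table 5.

```python
import math


def relative_risk_with_se(coef: float, se_coef: float) -> tuple[float, float]:
    """Convert a multinomial logit coefficient into a relative risk.

    Delta method: if RR = exp(b), then se(RR) is approximately exp(b) * se(b).
    """
    rr = math.exp(coef)
    return rr, rr * se_coef


def t_statistic(coef: float, se_coef: float) -> float:
    """Test statistic on the original logit scale (null hypothesis: coefficient = 0)."""
    return coef / se_coef


# Hypothetical coefficient and standard error, for illustration only.
b, se_b = 0.30, 0.12
rr, se_rr = relative_risk_with_se(b, se_b)
print(f"RR = {rr:.3f} (se {se_rr:.3f}), t = {t_statistic(b, se_b):.2f}")
```

Testing on the logit scale corresponds to the null hypothesis that the relative risk equals 1, which is why a relative risk can be significant even when a naive interval built from its delta-method standard error looks wide.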

The three categories of the dependent variable are defined in the same generic way. With two four-category outcome variables, there are 16 different combinations of answers. Take self-rated performance as an example. The original items read: “I usually do well in mathematics” and “I usually do well in biology,” respectively. Students are asked whether they “strongly disagree,” “disagree,” “agree,” or “strongly agree” with each statement. We reduce the 16 possible combinations to three:

(1) Agree less with the mathematics statement than with the biology statement (put differently: state that one does worse in mathematics than in biology).

(2) Agree equally with the mathematics and the biology statement (put differently: state that one does about equally well in mathematics and in biology).

(3) Agree more with the mathematics statement than with the biology statement (put differently: state that one does better in mathematics than in biology).

For example, a student who “disagreed” with both statements is assigned to the second category, a student who “disagreed” with the mathematics item but “agreed” with the corresponding biology item is assigned to the first category, and a student who “strongly agreed” with the mathematics statement but “disagreed” with the biology statement is assigned to the third category, etc.
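The reduction from 16 answer combinations to three categories can be written as a small function. This is a minimal sketch: the numeric coding in `SCALE` and the function name `relative_category` are assumptions made for illustration, and only the ordering of the four response options matters.

```python
# Assumed ordinal coding of the four response options (illustrative only).
SCALE = {"strongly disagree": 1, "disagree": 2, "agree": 3, "strongly agree": 4}


def relative_category(math_answer: str, biology_answer: str) -> int:
    """Collapse a pair of four-point answers into the three-category outcome.

    Returns 1 if the student agrees less for mathematics than for biology,
    2 if both answers are equal, and 3 if the student agrees more for mathematics.
    """
    m, b = SCALE[math_answer], SCALE[biology_answer]
    if m < b:
        return 1
    if m == b:
        return 2
    return 3


# The three examples from the text.
print(relative_category("disagree", "disagree"))        # 2
print(relative_category("disagree", "agree"))           # 1
print(relative_category("strongly agree", "disagree"))  # 3
```

Of the 16 combinations, four fall into the middle category and six into each of the other two.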

In our multinomial regressions, the middle category is always the baseline category. In Table 5, we show two relative risks: the first mirrors the effect of CEEs on the probability of thinking one does worse in mathematics than in biology relative to the probability of thinking that one does about equally well in mathematics and in biology; the second reflects the effect on the probability of thinking one does better in mathematics than in biology relative to the probability of thinking that one does about equally well in both subjects. To illustrate, consider the bivariate relationship between central exit exams and the relative self-evaluation in mathematics versus biology shown in Table 6.

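The relative risk of those in CEE states to judge themselves worse in math than in biology can be computed as:

\[ RR(1) = \frac{P(\text{worse} \mid \text{CEE}) \,/\, P(\text{equal} \mid \text{CEE})}{P(\text{worse} \mid \text{non-CEE}) \,/\, P(\text{equal} \mid \text{non-CEE})} \]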

A multinomial regression of the differences in self-ratings on CEE without covariates would yield exactly the same result. Since RR(1) is larger than 1, this means that students in CEE states have a higher risk of thinking they do worse in mathematics than in biology relative to non-CEE students. RR(2) equals 0.829, i.e., students in CEE states have a lower relative risk of thinking they do better in mathematics than in biology than non-CEE students. As a shorthand, we will simply state that students in CEE states are less likely to think they do well in mathematics than students in non-CEE states, bearing in mind that this need not be true in absolute terms but relative to biology or science in general. Although the differences-in-differences risk ratios are admittedly a bit cumbersome to interpret, they have the advantage that, under fairly weak assumptions, we have no differential item functioning problem.
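The computation of the two relative risks from a two-by-three cross-tabulation can be sketched as follows. The counts below are hypothetical and are not the values in Table 6. The function name is an assumption, and the middle category (index 1) is taken as the baseline, as in the regressions.

```python
def relative_risk_ratio(cee_counts, non_cee_counts, category, baseline=1):
    """Relative risk of `category` versus `baseline`, CEE relative to non-CEE.

    Each count sequence is ordered as
    (worse in math, about equal, better in math); index 1 is the baseline.
    """
    risk_cee = cee_counts[category] / cee_counts[baseline]
    risk_non_cee = non_cee_counts[category] / non_cee_counts[baseline]
    return risk_cee / risk_non_cee


# Hypothetical counts for illustration only, NOT the values in Table 6.
cee = (300, 500, 200)
non_cee = (400, 800, 400)
rr1 = relative_risk_ratio(cee, non_cee, category=0)
rr2 = relative_risk_ratio(cee, non_cee, category=2)
print(f"RR(1) = {rr1:.3f}, RR(2) = {rr2:.3f}")
```

With these made-up counts, RR(1) exceeds 1 and RR(2) falls below 1, the same qualitative pattern as described in the text.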

6.1 Objective and self-perceived student achievement

We now discuss our results, starting with student achievement. TIMSS test scores were rescaled to have a mean of 0 and a standard deviation of 1. The estimate thus implies that the difference in mathematics scores between CEE and non-CEE states is 0.11 standard deviations larger than the same difference in science scores. CEE state students thus do relatively better than non-CEE state students, which indicates that there is some causal effect of CEEs on achievement. Comparing this to the one-grade-year difference in mathematics scores of 0.28 shows that the effect amounts to a little more than one-third of a school year.
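The conversion into school-year equivalents is simple arithmetic on the two figures quoted above:

```latex
\[
\frac{\text{CEE effect}}{\text{one-grade-year difference}}
  = \frac{0.11}{0.28} \approx 0.39 > \tfrac{1}{3}.
\]
```

This reading assumes that the one-grade-year difference is measured on the same standardized scale as the CEE effect.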

One potential objection against this result is that the regressions include a small proportion of students (from Bavaria) who will take CEEs in science. Earlier studies (Jürges et al. 2005b) have addressed this issue by excluding students who are likely to take a CEE in science, e.g., those who (a) say they do well in science or (b) the 40% basic track and 25% intermediate track students who do best in science as measured by their TIMSS test score (see note 4). We have tried both sample restrictions. It turns out that imposing the restrictions results in an increased estimate of the effect of CEE on test scores. This is of course expected as we essentially censor the left-hand side variable. However, the results for the other dependent variables in this paper (discussed below) are very similar to those in the basic regressions including all Bavarian students.

Returning to the students' self-assessments, controlling for covariates changes the results presented above only slightly. Thus, despite the fact that the relative mathematics performance of students in CEE states is superior to that of their peers in non-CEE states, students themselves appear to think the opposite. One explanation for this seemingly contradictory finding is that relative expectations are higher in CEE states, for example, because teachers put more pressure on their students to perform well, knowing that the centralized exams are due in only 1 or 2 years.

6.2 Teaching practices

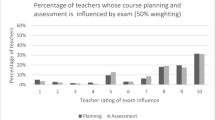

The results for teaching practices (as reported by the students) show major differences between CEE and non-CEE states for all homework-related items. Risk ratios smaller than 1 in the column labeled “RR(1)” indicate that it is less common in CEE states to give less homework in mathematics than to give the same amount of homework in mathematics and biology. Risk ratios greater than 1 in the column labeled “RR(2)” indicate that it is more common in CEE states to give more homework in mathematics than to give the same amount of homework in mathematics and biology. Both effects go in the same direction. It is thus much more common for teachers in CEE states to give, check, and discuss homework in class. Moreover, effect sizes (relative risks) for these items are substantial.

Overall, it seems as if the importance of homework is the main systematic difference between CEE and non-CEE states. Of the other nine items, only two show significant differences: how often teachers let students copy notes from the board and how often they start a new topic by solving an example. The risk ratios smaller than 1 in the “RR(1)” column show that these practices are less common in non-CEE states’ mathematics lessons than in CEE states’ mathematics lessons.

6.3 Class behavior (student discipline)

The items concerning the behavior of the students in class can only be analyzed in terms of simple differences between CEE and non-CEE states. This is because there are no corresponding items for science or biology classes in the data. Hence, we use ordered logit models to compare levels of discipline in mathematics classes across states. The results do not suggest that there are any systematic differences between CEE and non-CEE states in student discipline in mathematics classes. However, differential item functioning across the two types of states could mask actual differences in discipline.

6.4 Student effort and motivation

TIMSS asked students how much time they spend outside school learning mathematics and science. Our results indicate that students in CEE states spend relatively more time learning mathematics at home than their peers in non-CEE states. Two variables that aim at capturing the general motivation for learning mathematics and science are how much students agree with the statement that mathematics/biology is important in everyone’s life and whether students would like a job that involves mathematics/biology. Here, we only find weak and/or inconsistent relationships with the presence of CEEs. Students in CEE states are slightly less likely to think that mathematics is important in everyone’s life, but the relationship is not significant. There is also a higher probability that students in CEE states want to get a job that involves mathematics rather than biology, but they are also more likely to want to have it the other way round.

6.5 Student attitudes

The final set of items measures the difference in individual attitudes toward mathematics and science. Here, we find strong and consistent evidence for causal effects of CEEs, and this evidence clearly points in the direction that CEEs impose costs on students. Students in CEE states are consistently less likely to like mathematics, to enjoy doing mathematics, and to find that mathematics is an easy subject. They are also more likely to find mathematics boring. Thus, despite the better performance, CEE state students have a worse attitude toward mathematics.

7 Summary and conclusion

This paper studies the costs and benefits of CEEs at the end of lower secondary school in Germany. The theoretical literature almost exclusively focuses on the benefits of central examinations, which arise in the form of higher student achievement. The costs, however, have been neglected so far by the economic literature. By costs, we mean potentially negative effects on students’ and teachers’ morale and attitudes toward learning.

The identification of (positive or negative) causal effects of CEEs is by no means easy. Caution is warranted when interpreting observed differences between jurisdictions with and without CEEs as the effect of CEEs on student achievement because CEEs are most likely the outcome of a political process (reflecting the preferences of the electorate) and thus potentially endogenous.

In this paper, we make use of some unique regional variation in Germany that enables us to develop a differences-in-differences identification strategy to estimate the causal effect of CEEs on academic performance, teaching practices, and student attitudes. In the German school system, only some states have CEEs and these exams are restricted to core subjects such as German, mathematics, and the first foreign language (mostly English). We use data from TIMSS 1995 to exploit this institutional variation and uncover the causal effect of CEEs on student achievement in mathematics, teaching practices, and students’ attitudes toward mathematics by comparing a range of outcome variables across subjects and types of exit examination. The fundamental idea is that a CEE affects only mathematics-related outcomes but not science-related outcomes. In most of our analyses, we use biology as our main comparison subject. Biology is almost never examined centrally and there is no mathematics involved in lower secondary school biology topics.

There are three main insights from this study. First, CEEs have a small but statistically significant causal effect on student test scores. Second, teachers in CEE states are more likely to give, check, and discuss homework. Third, students in CEE states like mathematics less, find it less easy, and find it more boring than those in non-CEE states. They are also somewhat more diligent in learning mathematics at home. We find little difference in (student-reported) teaching practices other than those that are homework-related, little difference in student behavior in class, and little difference in general student motivation to learn mathematics.

Broadly speaking, this evidence is consistent with the view that the main effect of CEEs is that teachers increase the pressure on students rather than employ more sophisticated or innovative teaching methods. However, giving, checking, and discussing homework certainly also involves increased teacher effort. But all in all, achievement gains in mathematics appear to result largely from increased student effort. One (certainly unintended) consequence is that students in CEE states less often think that mathematics is fun to do. This might actually offset some of the positive achievement effects of CEEs. Working harder but being less motivated could be less efficient than working hard but at the same time enjoying it.

Notes

A fourth type of track, the comprehensive school, does not appear in our figures. This type offers all lower secondary level leaving certificates, as well as providing upper secondary education. It only plays a minor role in most federal states with less than 10% of all students in grade 8 attending a comprehensive school.

As mentioned in Section 1, CEEs have now been introduced in Saarland (2001), Hamburg (2005), Brandenburg (2005), Hesse (2006), Lower Saxony (2006), Berlin (2007), North Rhine-Westphalia (2007), Bremen (2007), and Schleswig-Holstein (2008).

As has been pointed out by one referee, Saxony would make a good comparison state to corroborate our results if students in Saxony (taking central exams in mathematics and science) are compared to those with CEEs only in mathematics. The main problem with this strategy is that the number of independent observations (classes, not students) in Saxony is very low (12). However, there is evidence that, in Saxony, biology homework is taken more seriously relative to mathematics homework than in other CEE states, which supports our hypothesis.

In Bavaria, between 25% (intermediate track) and 40% (basic track) of the students take the CEEs in science.

References

Bishop JH (1997) The effect of national standards and curriculum-based exams on achievement. Am Econ Rev 87(2):260–264

Bishop JH (1999) Are national exit examinations important for educational efficiency? Swed Econ Policy Rev 6(2):349–401

Costrell RM (1997) Can educational standards raise welfare? J Public Econ 65(3):271–293

Effinger MR, Polborn MK (1999) A model of vertically differentiated education. J Econ 69(1):53–69

Frenck I (2001) Stundentafeln der Primar- und Sekundarstufe I im Ländervergleich-eine empirische Studie am Beispiel der Fächer Deutsch und Mathematik. Unpublished master thesis, University of Essen

Hanushek EA (1996) School resources and student performance. In: Burtless G (ed) Does money matter? The effect of school resources on student achievement and adult success. Brookings Institution, Washington, DC, pp 43–73

Holland PW (1986) Statistics and causal inference. J Am Stat Assoc 81(396):945–960

Hoxby CM (2000) The effects of class size on student achievement: new evidence from population variation. Q J Econ 115(4):1239–1285

IEA TIMSS (1998) TIMSS science items: released set for population 2 (seventh and eighth grades). Available at http://timss.bc.edu/timss1/timsspdf/bsitems.pdf. Accessed 1 March 2002

Jonen G, Eckardt T (2006) The education system in the Federal Republic of Germany 2004. Secretariat of the Standing Conference of the Ministers of Education (KMK). Available at http://www.kmk.org/dossier/dossier_en_ebook.pdf. Accessed 12 Jan 2007

Jürges H, Schneider K (2007) What can go wrong will go wrong: birthday effects and early tracking in the German school system. MEA Discussion Paper 138-07, University of Mannheim

Jürges H, Richter WF, Schneider K (2005a) Teacher quality and incentives, theoretical and empirical effects of standards on teacher quality. Finanzarchiv 61(3):298–326

Jürges H, Schneider K, Büchel F (2005b) The effect of central exit examinations on student achievement: quasi-experimental evidence from TIMSS Germany. J Eur Econ Assoc 3(5):1134–1155

Kultusministerkonferenz (ed) (2005) Bildungsstandards der Kultusministerkonferenz Erläuterungen zur Konzeption und Entwicklung. Luchterhand, München/Neuwied

Acknowledgements

We are grateful to Helen Ladd and two anonymous referees for their helpful comments.

Additional information

Responsible editor: Christian Dustmann

Cite this article

Jürges, H., Schneider, K. Central exit examinations increase performance... but take the fun out of mathematics. J Popul Econ 23, 497–517 (2010). https://doi.org/10.1007/s00148-008-0234-3
