Abstract

This paper tests adverse selection in the market for child care. A unique data set containing quality measures of various characteristics of child care provided by 746 rooms in 400 centers, as well as the evaluation of the same attributes by 3,490 affiliated consumers (parents) in the U.S., is employed. Comparisons of consumer evaluations of quality to actual quality show that after adjusting for scale effects, parents are weakly rational. The hypothesis of strong rationality is rejected, indicating that parents do not utilize all available information in forming their assessment of quality. The results demonstrate the existence of information asymmetry and adverse selection in the market, which provide an explanation for low average quality in the U.S. child care market.

1 Introduction

In his seminal paper, Akerlof (1970) shows that in a market with asymmetric information between buyers and sellers, adverse selection is likely to result. If it is difficult for buyers to assess the quality of the product, and if quality is costly to produce, sellers of high-quality products will not be able to command higher prices for higher quality. As a result, high-quality products will withdraw from the market, leaving the “lemons” behind. Although Akerlof’s paper was followed by a number of theoretical articles that extended the idea (e.g., Leland 1979; Heinkel 1981; Wolinsky 1983; von Ungern-Sternberg and von Weizsacker 1985; Shapiro 1986), only a handful of papers have tested for the presence of this type of market failure. Bond (1982) and Genesove (1993) analyzed the market for used cars, Greenwald and Glasspiegel (1983) investigated the New Orleans slave market, and Chezum and Wimmer (1997) and Rosenman and Wilson (1991) examined the markets for thoroughbred yearlings and cherries, respectively. A paucity of data prevented these papers from employing direct measures of product quality [Footnote 1]. Consequently, researchers used indirect methods to test for the presence of lemon markets.

The main empirical procedure to test for information asymmetry-based adverse selection has been to investigate the link between the price of the good in question and observable characteristics of the seller, which may provide a quality signal to the buyer. For example, Genesove (1993) suggested that new car dealers differed from used car dealers in the propensity to sell trade-ins on the wholesale market. Used car dealers are expected to keep high-quality used cars and take low-quality ones to block auctions. Thus, if buyers can distinguish between different dealer types, they should pay a price premium for those used cars sold by new car dealers. Similarly, Chezum and Wimmer (1997) investigated the relationship between a yearling’s auction price and observable seller characteristics, and Rosenman and Wilson (1991) analyzed the link between wholesale cherry prices and seller characteristics that may signal quality.

The average quality of center-based child care provided in the U.S. is thought to be mediocre (Whitebook et al. 1990; Mocan 1997; Bergmann 1996). The issue is important because in 2004 about 8.7 million children were enrolled in nursery school, preschool, and kindergarten (U.S. Bureau of the Census 2004). This number is expected to be larger now given the recent welfare reform and increased female labor force participation [Footnote 2]. It is argued that high-quality child care programs reduce the likelihood of enrolling in special education programs (Lazar and Darlington 1982), improve the academic outcomes of children (Ramey and Campbell 1991), and in general are positively associated with children’s well-being (Waldfogel 2002; Love et al. 1996). Another argument in favor of policies targeted to increase child care quality is that child care has aspects of a “public good” or “merit good.” This means that high-quality child care benefits not only its private consumers but also society as a whole through positive externalities. For example, if high-quality care increases the cognitive skills of children and their labor market opportunities as young adults, high-quality child care today would benefit society tomorrow by helping create more educated and productive individuals with more earning power, who are also less welfare-dependent and less crime-prone [Footnote 3].

This paper focuses on the market for child care, where the model of information asymmetry between the producer and the consumer described above is particularly applicable. It is documented that the price elasticity and income elasticity of quality (as defined in this paper) are low in child care (Blau and Mocan 2002; Blau 2001, Chapter 4). This suggests a low degree of willingness to pay for quality on the part of the parents. It is plausible to hypothesize that the provider (child care center) is informed about the level of quality of its service, but the consumers (parents) have difficulty in distinguishing between the quality levels of alternative centers. The reason for parents’ lack of information on center quality may be their inability to spend significant amounts of time at the center to observe various dimensions of the operation. Mocan (1995, 1997) shows that it costs $243 to $324 per child per year (in 1993 dollars) to increase the quality of child care services from “mediocre” to “good.” Given that it costs more to produce higher quality, providers would not have an incentive to increase the quality of their services if they cannot charge higher fees. If parents cannot distinguish between high-quality and low-quality centers, they would not be prepared to pay higher fees. Under this scenario, high quality centers exit the market, average quality falls, and eventually the market is filled primarily with “lemons” that provide mediocre quality.

The paper improves upon earlier empirical studies on information asymmetry and adverse selection in a number of ways. First, a direct measure of product quality is used. As described in the Data and the measurement of quality section, the paper employs a unique data set that contains well-developed measures of the quality of child care services. This allows for a direct comparison of the quality of services produced by the provider, with the quality assessment of consumers (parents) to test hypotheses about consumers’ weak and strong rationality. Second, the paper investigates whether or not consumers’ characteristics impact the accuracy of their assessments of quality. Third, firm-specific determinants of consumers’ errors in quality assessment are analyzed. This allows for an investigation as to whether provider characteristics are taken as signals of quality by consumers, which is the primary vehicle to test for adverse selection. Fourth, the detail of the data set enables us to entertain a number of important questions. For example, easy-to-observe and difficult-to-observe aspects of the services can be identified. An example of the former is the cleanliness of the reception area of the child care center, and an example of the latter is the quality of teacher–child interaction. This information allows for an investigation of the extent to which consumers have difficulty in extracting information due to “unobservability.” Similarly, it is tested whether race-matching between parents and the classroom teacher creates a “misplaced trust” for the parents that would inflate their quality ratings, and whether the avenues through which consumers gather information have an impact on the accuracy of their quality assessments. Fifth, estimation of quality production functions enables an analysis as to whether consumer perceptions are consistent with reality.

The investigation of these issues is significant, not only because they provide insights into information asymmetry-based market failure in this particular market, but they can also be helpful for understanding similar markets. The Data and the measurement of quality section describes the data and the measure of quality. The Descriptive statistics of the data section presents the descriptive statistics of the data. The Weak rationality and Strong rationality sections investigate consumers’ weak and strong rationality, respectively. The Determinants of parent prediction error section analyzes the determinants of prediction errors, and the Summary and conclusions section is the conclusion.

2 Data and the measurement of quality

As described by Hayes et al. (1990), Lamb (1998), and Love et al. (1996), there are two distinct concepts of quality in child care. The first one is referred to as “structural quality,” which describes the child care environment as measured by such variables as the child–staff ratio, group size, and the average education of the staff. These structural measures of quality are thought of as inputs to the production of “process quality,” which measures, among other things, the nature of the interactions between the care provider and the child, and activities to which the child is exposed. This paper employs widely-used measures of process quality designed by psychologists, as well as various structural measures of quality as explained below.

The data were compiled during the first half of 1993 through the collaboration of economists, psychologists, and child development experts from the University of Colorado at Denver, Yale University, University of North Carolina at Chapel Hill, and UCLA. They cover a stratified random sample of approximately 100 programs in each participating state, evenly split between for-profit and nonprofit centers. The data set includes information on child care centers located in metropolitan regions in four states: Los Angeles County in California, the Front Range of Colorado, the New Haven-Hartford corridor in Connecticut, and the Piedmont Triad in North Carolina. These regions were selected for their regional, demographic, and child care program diversity. The data set includes only state-licensed child care centers serving infant-toddlers and/or preschoolers that offered services at least 6 hours per day, 30 hours per week, and 11 months per year. To be used in the sample, a center had to have been in operation at least 1 full fiscal year, and the majority of children had to attend at least 30 hours and 5 days per week [Footnote 4].

Data collectors obtained in-depth information on centers through on-site interviews with center administrators and owners, and reviews of center payroll and other records. Also, two observers visited each center for 1 day to gather data on classroom and center structural and process quality. As a result, the extraordinary detail of the data allows one to measure classroom quality and other variables with more precision than was possible before.

At each center, two classrooms were randomly chosen, one preschool and one infant-toddler. Infant-toddler rooms were defined as those where the majority of children were less than 2 1/2 years old. Preschool classrooms were defined as those where the majority of children were at least 2 1/2 years old but not yet in kindergarten. If a center did not serve infant-toddlers, two preschool rooms were observed. Data were collected in a total of 228 infant-toddler rooms and 518 preschool rooms.

Trained observers used two instruments to comprehensively assess the process quality of care provided to children: the Early Childhood Environment Rating Scale (ECERS) (Harms and Clifford 1980) and its infant-toddler version, the Infant-Toddler Environment Rating Scale (ITERS) (Harms et al. 1990). The instruments contain questions that measure the quality of personal care routines, furnishings and display for children, language-reasoning experience, fine and gross motor activities, creative activities, social development, and adult needs. Each question is scored on a seven-point scale from inadequate to excellent. These are objective measures and do not involve other possibly intangible aspects of quality parents may value, such as religious affiliation or proximity to home or work. Specific questions included in ECERS and ITERS measure conditions such as the structure of the arrival and leaving times, meals and snacks, nap and rest time, room decoration, keeping children neat and clean, equipment for active play, and block play. The same questions are given to the parents, and their evaluations of each of these items are recorded [Footnote 5].

Observers’ ratings of the individual questions in ECERS and ITERS are averaged to obtain the room-level measure of process quality for preschool and infant-toddler rooms, respectively. These are standard aggregate measures of process quality for infant-toddler and preschool rooms, which are argued by developmental psychologists to impact child outcomes such as cognitive development (Hayes et al. 1990). Averaging parents’ answers to the same questions provides a measure of parents’ overall rating. Some questions in ECERS and ITERS pertain to aspects of care that are more difficult for parents to observe. For example, it is easy for a parent to assess whether the center staff provided friendly greetings for all parents and children during arrival and departure, whether the departure was organized, and whether parents and teachers shared information during arrival and departure. On the other hand, it may be difficult for a parent to determine the quality of the interaction between the child and the teacher in the classroom. Parents’ ability to accurately assess the quality of a given aspect of the child care services may depend upon whether they can easily observe that particular aspect.

For this reason, two other measures of quality are created: one that pertains to easy-to-observe aspects of center operation, and another that pertains to difficult-to-observe aspects. The questions in ECERS and ITERS are classified as easy-to-observe or difficult-to-observe in the following way. Parent surveys allow parents to indicate that they “don’t know” enough about a particular question to provide a rating. In those instances, instead of rating the question from 1 to 7, the parent chooses the option of “don’t know” on the survey. If at least 10% of all parents answered “don’t know” to a particular question, that question is classified as difficult-to-observe (unobservable). Using this algorithm, easy- and difficult-to-observe items are identified and their individual ratings are averaged. The results were insensitive to the cutoff value. In addition, a subjective classification of the questions generated very similar results (Mocan 2000). Finally, analyses are performed using individual quality items in the ECERS and ITERS instruments.
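The classification rule can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual procedure: the survey layout (one dictionary per parent, with `None` standing for “don’t know”), the item names, and the function names are all assumptions made for the example.

```python
DONT_KNOW = None  # assumed encoding of a "don't know" answer


def dont_know_share(surveys, item):
    """Share of parents who answered "don't know" on one survey item."""
    answers = [s[item] for s in surveys if item in s]
    return sum(a is DONT_KNOW for a in answers) / len(answers)


def classify_items(surveys, items, cutoff=0.10):
    """Label an item difficult-to-observe when its "don't know" share
    reaches the cutoff (10% in the text), easy-to-observe otherwise."""
    return {
        item: "difficult" if dont_know_share(surveys, item) >= cutoff else "easy"
        for item in items
    }


def parent_average(survey, items):
    """Average one parent's 1-7 ratings over the given items, skipping
    items the parent did not rate."""
    rated = [survey[i] for i in items if survey.get(i) is not DONT_KNOW]
    return sum(rated) / len(rated) if rated else None


# Hypothetical responses from five parents on two items.
surveys = [
    {"greeting": 7, "teacher_child_interaction": 6},
    {"greeting": 6, "teacher_child_interaction": DONT_KNOW},
    {"greeting": 7, "teacher_child_interaction": 7},
    {"greeting": 5, "teacher_child_interaction": 6},
    {"greeting": 6, "teacher_child_interaction": 5},
]
labels = classify_items(surveys, ["greeting", "teacher_child_interaction"])
easy_items = [i for i, lab in labels.items() if lab == "easy"]
print(labels)
print(parent_average(surveys[0], easy_items))
```

Changing `cutoff` and re-running `classify_items` is one simple way to check how sensitive the grouping is to the threshold.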

2.1 What does quality measure?

It can be argued that parents may find certain center characteristics more valuable than quality as measured by child development experts. For example, even if a particular classroom in a given center receives a low-quality rating by child development specialists, if that center is close to a parent’s place of work, the classroom may be of high quality to the parent because the parent could visit the center easily during the day, or can get to the child quickly in case of an emergency. Similarly, if parents care only about a “bare-bones” child care service and a “warm body as a teacher” whose main task is to keep the children safe in the classroom, then information asymmetry might not be a major factor for market failure, because under this scenario, parents do not care about information on quality in the first place. Another way to put this issue is to state that the quality of the services provided for children has dimensions that include parents’ preferences concerning the child care arrangements, such as the travel distance between home and the center, and whether the provider shares the same religion and values of the parents. This suggests that a particular parent’s perception of the quality of a given classroom may diverge from the child care experts’ evaluation.

The surveys given out to the parents include questions on how important parents think particular aspects of ECERS and ITERS are for their children. Parents can choose three alternatives: 1, 2, and 3; 1 indicating “not important,” and 3 indicating “very important.” An overwhelming majority of the parents chose “very important” for most of the questions. For example, for all the questions in ECERS, at least 60% of parents of preschool children indicated that those questions were very important for their children, with the following exceptions: only 53% of the preschool parents indicated that block play was very important for their children; 37% indicated sand and water play were very important; and 59% thought space for child to be alone was very important. For infant-toddler parents, the particular items of the ITERS, which were of the lowest importance for the parents, were: sand and water play, where 54% of the parents indicated that this was very important; and activities for different cultures, where 58% of the parents said this was very important for their children. Thus, parents seem to care about the various dimensions of the classroom operation as measured by ITERS and ECERS. More importantly, there is no reason to believe that parents’ rating of very specific aspects of the classrooms would be confounded by other dimensions parents may find valuable, such as travel distance. For example, there is no reason to think that parents would believe that the quality of meals/snacks is mediocre, but they would, nevertheless, inflate their rating on meal/snack quality because the center is close to their home.

It is reasonable to argue that if the quality of care measured this way has no impact on child outcomes, then there is little reason to worry about provision of low-quality care and the problem of information asymmetry. The literature on child development has not provided conclusive evidence on the impact of quality of care on child development, primarily because of the methods employed. While there is robust positive association between quality as defined by child development experts and various child outcomes, more work is needed to conclusively determine the magnitude of the cause-and-effect relationship [Footnote 6]. However, regardless of the strength of the relationship between quality of child care and child outcomes, information asymmetry is an issue because, as discussed above, parents believe that quality measured this way is important to them. Thus, parents’ willingness to pay for child care in general, or for certain aspects of it (e.g., well-supervised nap time), would be curtailed if they could not determine the quality level of the product accurately.

If there were no relationship between quality of care and child outcomes, the problem of information asymmetry would be analogous to that found in other markets, such as used cars. Just as a consumer may think that a good car engine is important for her, the evidence presented above shows that parents think an overwhelming majority of the items listed in the questionnaire are important for them. Thus, similar to the market for used cars, if parents cannot determine the level of quality of these items, adverse selection in the market will result, and average quality of care will go down. Even if quality had no impact on child outcomes, this would be a market failure. If, in addition, quality of care has a positive impact on child outcomes, then the problem has a “public good” dimension in addition to its “private consumption” dimension because, in this case, high-quality child care creates positive externalities for society.

Data on socio-economic and labor market characteristics of 1,035 teachers and assistant teachers from these 400 centers as well as data on 3,134 parents whose children attended the centers were collected. These data are used to create classroom-specific variables such as average teacher experience, group size, and staff–child ratios as well as parent information. The details of the data are presented in the next section.

3 Descriptive statistics of the data

The descriptive statistics of parents’ assessment of individual items in ITERS and ECERS surveys, and the corresponding actual rating (assigned by trained observers), are displayed in Table 1. The table reports the 19 questions that are identically worded between ECERS and ITERS surveys. Other questions revealed very similar patterns (Mocan 2000). The way the questions are phrased in the surveys given to parents is displayed under “description.” Because of space limitations, parent surveys included shortened descriptions of individual questions in comparison with the ones seen by trained observers. Potential implications of this are discussed below. Immediately evident in Table 1 is the fact that parents overestimate the quality of their children’s classrooms as compared to the rating given by trained observers. This behavior was reported in previous research (Cryer and Burchinal 1997).

Table 2 presents the characteristics of the parents. The Same race variable indicates the matching of race between the parent and classroom teacher. This variable takes the value of 1 if the parent and teacher(s) in the classroom are of the same race, and 0 otherwise. It will enable us to test the hypothesis that race-matching between the parent and the teacher has an impact on parents’ quality assessments. Seventy-four percent of mothers work either part-time or full-time. Note that these variables (Part Time, Full Time) pertain to the mother of the child, even when the parent who responded to the survey is the father. The last six variables in Table 2 pertain to the way in which parents gather information about their child’s classroom. In the survey given to parents, they were asked how they “find out what happens in their child’s care.” The alternatives were: talking to the teacher, talking to the director, talking to other parents, watching the classroom at drop-off and pick-up times, dropping in on the classroom unexpectedly, and what the child says or does. These variables are not mutually exclusive.

Table 3 presents classroom characteristics. These are the classrooms affiliated with respective parents. If there is one teacher in the classroom, Teacher age is the age of that teacher. If there is more than one teacher, it is the average age of the teachers in the room. Note that the age, experience, and race information presented in Table 3 pertains to teachers only, and does not include assistant teachers. Each classroom is observed throughout the day by data collectors, and the group size and the child–staff ratio are recorded five different times. The variable Group size is the average value of the recorded group size of the classroom, and Staff–child ratio stands for average staff–child ratio.

Table 4 presents the characteristics of the centers. Publicly regulated is 1 if the center receives public money, either from the state or federal government, tied to higher standards (above and beyond normal licensing regulations), and 0 otherwise. This group includes Head Start programs, centers where 20% or more of their enrollment constitute special needs children, special preschool programs sponsored by the State or Federal Department of Education, and other special programs in Connecticut and California. Publicly owned is set to 1 for centers that are owned and operated by public agencies. Examples include public colleges, hospitals, and city departments of family services.

The variables listed in Table 4 allow for critical tests. For example, adverse selection hypothesis suggests that parents would rely on observable center attributes that are not functionally related to the child care quality as signals of quality (Chezum and Wimmer 1997; Genesove 1993). Thus, it will be investigated whether parents take as signals of quality such center characteristics as being for-profit, church-sponsored, publicly owned, etc. In addition, some of the variables presented in Table 4 are designed to gauge the relationship between parents’ perception of quality and certain aspects of the center that may be thought of as reliable proxies of quality. Examples are Articulate director, Clean entrance, and Coffee and cookies. Data collectors were asked to rate the director’s articulateness from 1 (poor) to 5 (very good). Articulate director is a dummy variable, which takes the value of 1 if the director received 5 from data collectors, and 0 otherwise. Somewhat surprisingly, these three variables are not highly correlated. The simple correlation between Coffee and cookies and Clean entrance is 0.18. The simple correlation between Coffee and cookies and Articulate director is 0.16, and it is 0.19 between Clean entrance and Articulate director. Clean entrance and Articulate director may contain measurement error, as they necessarily involve a judgement on the part of the data collectors. These variables will be employed as explanatory (independent) variables in the analyses; thus, measurement error in these variables would generate a bias in their estimated coefficients toward 0 (towards finding no impact). The results should be interpreted with this caveat in mind.

4 Weak rationality

Parents’ assessment of quality is unbiased if parents do not make systematic errors in their assessment and, on average, predict the true quality accurately. This notion of unbiased prediction corresponds to weak rationality. Note that even if the individuals cannot assess the quality of the good, in equilibrium they know the resultant quality. That is, models of adverse selection satisfy weak rationality. Let PA stand for the parent’s assessment of a particular aspect of the classroom’s operation, and Q be the observer’s rating of the same aspect. Following Keane and Runkle (1998), Mocan and Azad (1995), Feenberg et al. (1989) and the literature they cite, a test for weak rationality can be performed by estimating the regression

$$PA_{kij} = \beta_0 + \beta_1 Q_i + \varepsilon_{kij} \qquad (1)$$

where $PA_{kij}$ is the rating of the $k$th aspect of quality by the $j$th parent in the $i$th classroom, $Q_i$ stands for the rating of the same aspect of quality in classroom $i$, and $\varepsilon_{kij}$ is the white noise error term that affects parents’ perceptions. Under the hypothesis of weak rationality, $\beta_0 = 0$ and $\beta_1 = 1$; that is, parents do not make systematic errors and predict the true quality on average. This scenario is represented by points along line A in Fig. 1 [Footnote 7]. Observer ratings (true quality) are measured on the horizontal axis, and parents’ assessments are measured on the vertical axis. Unbiased assessment ($\beta_0 = 0$, $\beta_1 = 1$) implies that the observations should be scattered around the 45-degree line.
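As a rough illustration of the test, the sketch below fits a regression of PA on Q by ordinary least squares on simulated data and computes the F statistic for the joint hypothesis that the intercept is 0 and the slope is 1. It is a simplification under stated assumptions: errors are taken to be homoskedastic and independent, parents are not clustered within centers, no instruments are used, and the function names and simulated parameter values are hypothetical.

```python
import random


def ols(x, y):
    """Intercept and slope of a simple least-squares regression of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return my - b1 * mx, b1


def joint_f_stat(x, y, b0_null=0.0, b1_null=1.0):
    """F statistic for H0: intercept = b0_null and slope = b1_null,
    assuming homoskedastic, independent errors (a simplification)."""
    n = len(x)
    b0, b1 = ols(x, y)
    s2 = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2)
    d0, d1 = b0 - b0_null, b1 - b1_null
    # (b - r)' X'X (b - r) with X = [1, x], written out term by term
    quad = n * d0 ** 2 + 2 * sum(x) * d0 * d1 + sum(xi ** 2 for xi in x) * d1 ** 2
    return quad / (2 * s2)


rng = random.Random(0)
q = [rng.uniform(1, 7) for _ in range(500)]                    # observer ratings
pa_unbiased = [qi + rng.gauss(0, 0.5) for qi in q]             # generated under H0
pa_inflated = [4 + 0.3 * qi + rng.gauss(0, 0.5) for qi in q]   # intercept 4, slope 0.3

print(joint_f_stat(q, pa_unbiased))   # data consistent with weak rationality
print(joint_f_stat(q, pa_inflated))   # data that overrate and compress quality
```

The statistic would be compared with an F(2, n − 2) critical value; a large value rejects weak rationality.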

Figures 2, 3 and 4 display parent ratings of selected aspects of the classrooms. The graphs for all other questions, which are not reported in the interest of space, are very similar (see Mocan 2000). As is evident from the graphs, the expert rating–parent rating pairs are not scattered around the 45-degree line. Rather, parents overestimate actual quality. Figure 2 displays parents’ average rating of all the questions given to them as a function of the classroom averages provided by experts. Figure 3 presents the same information for observable aspects of the classroom quality. Similarly, Fig. 4 plots parents’ average ratings of the unobservable aspects of classroom quality against experts’ rating of the classrooms on the same dimension [Footnote 8].

Consistent with the literature on child development, I assume that the ratings provided by trained observers are generated by the following mechanism.

$$Q = a + bI + e_1 \qquad (2)$$

where I represents the information set utilized by trained observers in creating their ratings, $e_1$ is a white noise error term, and a and b are parameters (the subscripts are suppressed for simplicity). I consists of various classroom and center characteristics that impact experts’ ratings. The same factors are observed by the parents in generating parent ratings. That is,

Solving for I in Eq. 2 and substituting in Eq. 3 yields

Note that Eq. 4 is the same as Eq. 1, where PA is the dependent variable, and Q is the independent variable. However, in this framework the error term u in Eq. 4 is negatively correlated with the right-hand side variable Q, generating a downward bias for the estimated coefficient of Q. This means that to investigate weak rationality, Eq. 1 should be estimated with instrumental variables. The literature on child care quality production functions provides guidance on potential instruments. The formulation depicted in Eq. 2 is a production function of classroom quality, where I consists of classroom and center characteristics (Blau 1997; Mocan et al. 1995). Thus, Eq. 1 is estimated where Q is instrumented by the variables listed in Tables 3 and 4, which are: teacher experience, teacher age, percent black teachers, percent white teachers, percent Asian teachers, staff–child ratio, group size, for-profit status, on-site, publicly regulated, publicly supported, publicly owned, church, national chain, percent subsidized children, percent white children, infant-toddler, and state dummies. Because there is evidence of state-specific variation in quality as a function of for-profit status, For-profit is interacted with state dummies. This formulation of the quality production function is also used as the benchmark in evaluating the accuracy of parent assessments, as will be explained in the Determinants of parent prediction error section below.
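The argument can be written out explicitly. The sketch below assumes linear forms for both rating equations; the symbols $c$, $d$, and $e_2$ for the parents’ intercept, slope, and error are labels chosen here for illustration and are not given in the text, and $e_1$ and $e_2$ are assumed to be uncorrelated with $I$ and with each other.

```latex
\begin{align*}
Q  &= a + bI + e_1 && \text{(observers, Eq. 2)}\\
PA &= c + dI + e_2 && \text{(parents, Eq. 3; $c$, $d$, $e_2$ are assumed names)}\\
I  &= \frac{Q - a - e_1}{b} && \text{(Eq. 2 solved for $I$)}\\
PA &= \Bigl(c - \frac{ad}{b}\Bigr) + \frac{d}{b}\,Q + u,
      \qquad u = e_2 - \frac{d}{b}\,e_1 && \text{(Eq. 4)}\\
\operatorname{Cov}(Q, u) &= -\frac{d}{b}\,\operatorname{Var}(e_1) < 0
      && \text{(when $d/b > 0$)}
\end{align*}
```

Under these assumptions the OLS slope converges to $\frac{d}{b}\cdot\frac{b^2\operatorname{Var}(I)}{b^2\operatorname{Var}(I)+\operatorname{Var}(e_1)}$, which is smaller in magnitude than $d/b$. Instruments that move $Q$ through $I$ but are unrelated to $e_1$ and $e_2$ remove this bias.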

The instrumental variables estimates of the coefficients are obtained for each individual quality item listed in Table 1. Robust standard errors are adjusted to account for multiple parents being affiliated with a given center [Footnote 9]. The point estimates of the slope coefficients were smaller than one. The F statistics for the hypothesis $\beta_1 = 1$ and for the joint hypothesis $\beta_0 = 0$ and $\beta_1 = 1$ were large, strongly rejecting the hypotheses in each case. This indicates that parent ratings and expert ratings of quality do not have a one-to-one correspondence ($\beta_1 \neq 1$), that they are not scattered around the 45-degree line ($\beta_0 \neq 0$ and $\beta_1 \neq 1$), and that the hypothesis of weak rationality is rejected [Footnote 10].
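To see why instrumenting matters, the following simulation sketch generates observer and parent ratings from a common set of inputs and compares the OLS slope with a just-identified IV estimate. It is illustrative only: a single scalar instrument stands in for the many classroom and center characteristics used in the paper, clustering is ignored, and all parameter values and function names are assumptions.

```python
import random


def cov(u, v):
    """Sample covariance of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (n - 1)


def ols_slope(x, y):
    """Least-squares slope from regressing y on x."""
    return cov(x, y) / cov(x, x)


def iv_slope(z, x, y):
    """Just-identified IV estimator of the slope, with instrument z for x."""
    return cov(z, y) / cov(z, x)


rng = random.Random(1)
n = 5000
info = [rng.gauss(0, 1) for _ in range(n)]        # I: classroom/center inputs
q = [4 + i + rng.gauss(0, 1) for i in info]       # observer rating, slope 1 on I
pa = [6 + i + rng.gauss(0, 0.5) for i in info]    # parent rating, slope 1 on I

print(ols_slope(q, pa))       # pulled below 1 by the noise in q
print(iv_slope(info, q, pa))  # close to the ratio of the two slopes, which is 1
```

With unit variances for the inputs and for the observer noise, the OLS slope tends toward 0.5 even though the underlying relationship has slope 1, while the IV estimate recovers it.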

It can be argued that parent ratings may contain measurement error because the surveys given to parents are abbreviated versions of the instruments used by observers; the condensed nature of the survey may have generated noise in parent ratings. Measurement error in parent ratings (PA) would not bias the estimates of $\beta_0$ and $\beta_1$, as the noise in parent ratings will be absorbed by the error term $\varepsilon$ in Eq. 1. On the other hand, because parents had the opportunity to observe the center repeatedly before they were given the questionnaire, it can be argued that their evaluations may be more accurate than those of the professional evaluators, who observed the center only once. This argument suggests that observers’ ratings would contain more noise than those of the parents [Footnote 11]. If this kind of random noise in observer ratings is prevalent, it would create an attenuation bias for the estimated parameter $\beta_1$ of Eq. 1, biasing it towards zero. This would mean that one would incorrectly conclude that parent and observer ratings are systematically different. It would be surprising to face the same type of noise in all the questions listed in Table 1. Nevertheless, the issue is important and warrants further investigation.

To test for the potential bias created by noise in observer ratings, Eq. 4 is reversed and observers’ ratings are regressed on parents’ ratings. In this specification, if the expert rating (Q) contains significant measurement error, the noise would be absorbed by the error term u, and the coefficient of PA would remain unbiased. The results indicate that although the hypothesis $\beta_1 = 1$ cannot be rejected in most cases, the joint hypothesis that the intercept is zero and the slope is one is strongly rejected for all quality items except “sand and water play.” Thus, reverse regression estimates also lead to the rejection of the hypothesis of parents’ weak rationality.
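The logic of the reverse regression can be checked on simulated data. The sketch below takes an extreme case as an assumption: parents observe true quality exactly, while the observer's one-day visit adds classical noise. The noise level and all names are hypothetical.

```python
import random


def slope(x, y):
    """Least-squares slope from regressing y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


rng = random.Random(2)
true_quality = [rng.uniform(1, 7) for _ in range(5000)]
pa = list(true_quality)                             # parents see quality exactly (assumed)
q = [t + rng.gauss(0, 1.5) for t in true_quality]   # one-day visit adds noise (assumed)

forward = slope(q, pa)   # PA on Q: noisy regressor, slope attenuated
reverse = slope(pa, q)   # Q on PA: noise sits in the dependent variable
print(forward, reverse)
```

In this setup the forward slope falls well below 1 while the reverse slope stays near 1, which is why a rejection that survives the reverse regression cannot be attributed to observer noise alone.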

5 The scale effect

One reason for the lack of support for weak rationality may be that parents’ ratings are higher on average than the ratings assigned by observers but are independent of actual quality. This hypothesis is rejected because, although the estimated coefficients of Q in Eq. 1 are smaller than 1, they are significantly different from 0. In other words, parent ratings are not independent of actual quality. The two are positively correlated, although the relationship is not as strong as required by weak rationality. Another possibility is that parents neglect the lower portion of the 1–7 scale and use only the upper range. Their ratings may then differ from those of the observers such that PA=k+Q, where PA is the parent rating, Q is the actual (observer) rating, and k>0. In this case, β 0 ≠ 0 but β 1=1 in Eq. 1, and one would observe a relationship between parent assessment and true quality such as the one displayed by the dots around line B in Fig. 1. For example, line B in Fig. 1 depicts a situation in which parents overrate quality by 2 points in comparison with trained observers. In this example, because the scale has an upper bound of 7, parent ratings would be equal to 7 whenever the actual quality is equal to or greater than 5.
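The shifted-scale relationship with a ceiling can be written out as a short function. This is a minimal sketch of the line-B example only; the function name and the default k=2 are illustrative choices, not part of the paper.

```python
def parent_rating(actual_quality, k=2.0, top=7.0):
    """Parent rating under a pure scale shift PA = k + Q, capped at the scale maximum."""
    return min(top, actual_quality + k)

# Ratings implied by a 2-point overrating at each whole-number quality level.
ratings = {q: parent_rating(q) for q in range(1, 8)}
for q, pa in ratings.items():
    print(q, pa)
```

For actual quality of 5 or higher the function returns 7, which is the horizontal segment of line B.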

The hypothesis that parents use a different scale can be tested by estimating the following equation.

where the notation is the same as before, and D is a dichotomous variable that equals 1 when actual quality is at or above the threshold level beyond which parent ratings are always equal to 7.

Equation 5 is estimated by instrumental variables to test the hypotheses that parents overrate actual quality by 1, 2, 3, or 4 points. For example, if parents overrate by 1 point, β 0=1 and the line becomes horizontal once actual quality reaches 6 [(β 1+δ)=0 when Q≥6 and D=1]. If parents overrate by 2 points (D=1 when Q≥5), β 0=2 and (β 1+δ)=0 when Q≥5, and so on. For each question given to the parents, using the notation of Eq. 5, the following six tests are conducted: (1) β 1=1; (2) β 0=4, 3, 2, or 1; (3) joint test for (1) and (2); (4) (β 1+δ)=1; (5) (β 1+δ)=0; and (6) joint test for (5) and (β 0+γ)=0. The first test investigates whether the pre-break slope is equal to 1 (see line B in Fig. 1). The fourth test investigates whether the post-break slope is equal to 1, and the fifth test investigates whether the post-break slope is zero (such as the horizontal segment of line B in Fig. 1). The results, which are not reported in the interest of space, demonstrate that for about 70% of the attributes examined, we cannot reject the hypothesis that β 1=1 in the pre-cutoff region. Similarly, for the majority of the questions, we cannot reject the hypothesis that (β 1+δ)=0, indicating that beyond the threshold quality parents assign ratings that are independent of actual quality. (This is the horizontal segment of line B in Fig. 1.)

To present this information visually, Fig. 5 displays the predicted values of Colorado preschool parents’ total quality assessment as a function of actual quality obtained from the instrumental variables estimates of Eq. 5 with a threshold of actual quality equal to 4. Thus, the analysis performed in this section provides evidence that parents use a different scale from that of trained observers and that, after adjusting for the scale effect, there is evidence of weak rationality on the part of parents.
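The slope tests can be illustrated on synthetic data. The sketch below assumes a break specification of the form PA = β0 + β1·Q + γ·D + δ·(D×Q), with D=1 when Q is at or above the threshold; this parameterization is an assumption and may not match the paper's Eq. 5 exactly, so only the pre-break and post-break slopes are compared. It also uses plain OLS on noiseless data, not the instrumental variables estimation used in the paper.

```python
import numpy as np

def fit_break_model(q, pa, threshold):
    """OLS fit of PA = b0 + b1*Q + g*D + d*(D*Q), with D = 1 when Q >= threshold."""
    d = (q >= threshold).astype(float)
    X = np.column_stack([np.ones_like(q), q, d, d * q])
    coef, *_ = np.linalg.lstsq(X, pa, rcond=None)
    b0, b1, g, dl = coef
    return {"beta0": b0, "beta1": b1, "gamma": g, "delta": dl}

# Synthetic ratings: parents overrate by 2 points and hit the ceiling of 7.
q = np.linspace(1.0, 7.0, 61)
pa = np.minimum(7.0, q + 2.0)

est = fit_break_model(q, pa, threshold=5.0)
pre_slope = est["beta1"]                  # slope below the threshold
post_slope = est["beta1"] + est["delta"]  # slope at or above the threshold
print(est["beta0"], pre_slope, post_slope)
```

On this constructed example the fit recovers an intercept of 2, a pre-break slope of 1, and a post-break slope of 0, which is the pattern that tests (1), (2), and (5) look for.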

6 Strong rationality

Consider Eq. 6 below.

which states that classroom quality, Q, depends on structural room characteristics, S (e.g., staff–child ratio, group size, teacher experience, etc.), and the characteristics of the center, R (e.g., for-profit vs nonprofit, publicly regulated, publicly owned, percent subsidized children, etc.). Equation 6 is a standard quality production function (e.g., Blau 1997; Mocan et al. 1995).

Equation 7 below postulates that parents’ assessment of center quality (PA) depends on the same factors as in Eq. 6 as well as parent characteristics, Z, such as age, race and education.

Thus, Eqs. 6 and 7 are more general formulations of the relationships depicted by Eqs. 2 and 3.

A test for strong rationality of parent assessment of quality can be performed by investigating whether the actual quality (Q) and its assessment by parents (PA) are governed by the same regression relationship. Note that parameterizing Eqs. 6 and 7 and subtracting Eq. 6 from Eq. 7 yields

where the subscript icj represents the jth parent affiliated with the ith room in the cth center.
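The subtraction step can be written out under a linear parameterization. The display equations are not reproduced in this text, so the following is a sketch under assumptions: the coefficient names a and b, the error terms u and v, and the linear functional forms are illustrative and not taken from the paper.

```latex
\begin{align*}
Q_{ic}   &= a_0 + a_1 S_{ic} + a_2 R_{c} + u_{ic}, \\
PA_{icj} &= b_0 + b_1 S_{ic} + b_2 R_{c} + b_3 Z_{icj} + v_{icj}, \\
PA_{icj} - Q_{ic} &= \underbrace{(b_0 - a_0)}_{\delta_0}
  + \underbrace{(b_1 - a_1)}_{\delta_1} S_{ic}
  + \underbrace{(b_2 - a_2)}_{\delta_2} R_{c}
  + b_3 Z_{icj} + (v_{icj} - u_{ic}).
\end{align*}
```

Under this assumed form, each δ is the gap between the weight parents place on a characteristic and the weight that characteristic carries in the production of quality, so all δ terms are zero when the two relationships coincide.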

The left-hand side of Eq. 8 is the difference between parent assessment of quality and actual quality, which is the prediction error. A rationality test can be performed by testing the null hypothesis that (δ 0=δ 1=δ 2=0) in Eq. 8. That is, classroom and center characteristics should be orthogonal to parental prediction errors if parents are strongly rational. To give an example, if religious centers are of high quality as rated by trained observers in Eq. 6, and if parents believe that this is the case in Eq. 7, then the difference between parent rating and observer rating (parent error) in Eq. 8 should not depend on the religious affiliation of the center. This procedure, which is employed by Mullineaux (1978), Feenberg et al. (1989), Mocan and Azad (1995), and Keane and Runkle (1998), is a test of strong rationality. Failure to reject the null hypothesis gives support to strong rationality and implies that parents utilize all available information efficiently in forming their assessments of center quality.
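The orthogonality test can be sketched as a joint F test on a regression of the prediction error on characteristics. This is a simplified illustration on simulated data: the two regressors stand in for S and R, parent characteristics and clustering are omitted, and the function name is hypothetical.

```python
import numpy as np

def joint_zero_f_stat(error, regressors):
    """F statistic for H0: intercept and all slopes are zero in
    error = d0 + regressors @ d + noise."""
    n = len(error)
    X = np.column_stack([np.ones(n), regressors])
    k = X.shape[1]
    coef, *_ = np.linalg.lstsq(X, error, rcond=None)
    rss_unrestricted = float(np.sum((error - X @ coef) ** 2))
    rss_restricted = float(np.sum(error ** 2))  # under H0 the fitted value is 0
    return ((rss_restricted - rss_unrestricted) / k) / (rss_unrestricted / (n - k))

rng = np.random.default_rng(1)
n = 2000
chars = rng.normal(size=(n, 2))   # hypothetical stand-ins for S and R
noise = rng.normal(size=n)

# Errors unrelated to characteristics (consistent with strong rationality).
f_rational = joint_zero_f_stat(noise, chars)
# Errors with a nonzero mean that also load on the first characteristic.
f_irrational = joint_zero_f_stat(1.0 + 0.5 * chars[:, 0] + noise, chars)
print(f_rational, f_irrational)
```

A small F statistic in the first case corresponds to a failure to reject the null; the large value in the second case corresponds to the kind of rejection reported for the paper's data.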

Estimation of Eq. 8 separately for all quality items listed in Table 1 using OLS revealed that in all cases the hypothesis of strong rationality is soundly rejected.Footnote 12 Specifically, for individual questions such as “furniture for routine care,” and “softness in room,” as well as aggregate quality indices, the hypothesis that center and classroom characteristics are orthogonal to parent prediction error is strongly rejected. The extent of parent overprediction is negatively correlated with the level of quality. That is, as Figs. 2, 3 and 4 demonstrate, the tendency to overestimate quality gets smaller as the level of actual quality gets higher. To account for this potential censoring, regressions are estimated with the inclusion of actual quality as an additional control variable. The results remained the same. Alternatively, tobit specifications are estimated where observations are considered censored when parents assign a rating of 7 (which is the upper limit), or when actual quality is greater than 4, 5, or 6.Footnote 13 In each case, the hypothesis of strong rationality is rejected.

Finally, parent prediction errors are classified into three categories based on their relative accuracy. The first category consists of prediction errors that are more than one-half standard deviation below the mean error. The second category consists of errors that are within one-half standard deviation of the mean, and the third category contains errors that are more than one-half standard deviation above the mean. Put differently, the first category consists of inaccurately conservative predictions, the second category contains errors that are relatively more accurate, and the third category consists of highly inaccurate overpredictions. For total quality, the mean prediction error is 1.14 and its standard deviation is 1.42. The first category consists of parents with prediction errors ranging from −5.21 to 0.43. The range of the errors in the second category is 0.43 to 1.85, and the third category contains prediction errors ranging from 1.85 to 5.43. About 30% of the parents fall in the first category, 40% are in the second category, and 30% are in the third category (I call this classification Model 1). Using this classification, ordered-probit models are estimated separately for each question, and likelihood-ratio tests are applied to test the hypothesis that the prediction error is independent of the explanatory variables. To control for the impact of the level of quality, each regression also includes the corresponding measure of actual quality. Standard errors are corrected for clustering at the center level. The results are highly consistent with those reported earlier; that is, the hypothesis of strong rationality is rejected in all cases.
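The Model 1 cutoffs follow directly from the mean and standard deviation quoted above. The sketch below reproduces that arithmetic; the function names are illustrative, and the treatment of values falling exactly on a cutoff is an assumption, since the text does not specify it.

```python
def model1_cutoffs(mean_error, sd_error):
    """Lower and upper cutoffs: one-half standard deviation around the mean error."""
    return mean_error - 0.5 * sd_error, mean_error + 0.5 * sd_error

def model1_category(error, mean_error, sd_error):
    """Return 1 (conservative), 2 (relatively accurate), or 3 (overprediction)."""
    low, high = model1_cutoffs(mean_error, sd_error)
    if error < low:
        return 1
    if error <= high:  # boundary handling is an assumption
        return 2
    return 3

# Total-quality values quoted in the text: mean 1.14, standard deviation 1.42.
low, high = model1_cutoffs(1.14, 1.42)
print(round(low, 2), round(high, 2))
```

The computed cutoffs, 0.43 and 1.85, match the category boundaries reported for total quality.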

Ordered-probit models are also estimated where parent prediction errors are classified using an absolute criterion. Specifically, I classified parents into the first category if their rating was more than 1/2 standard deviation below the actual quality. They belonged to the second category if their rating was within 1/2 standard deviation (plus or minus) of actual quality, and they were assigned to the third category if their rating was more than 1/2 standard deviation above the rating of that particular quality item. (This will be termed Model 2.) Under this algorithm, about 12% of the parents fell into the first category, 21% were in the second category, and 67% were in the third category. The results were very consistent with those obtained from the Model 1 specification, rejecting the hypothesis of strong rationality. These results imply that the explanatory variables contain information that parents could extract to improve the accuracy of their evaluations.

7 Determinants of parent prediction error

Given that the hypothesis of strong rationality is rejected, it is important to investigate the determinants of parents’ prediction error. To that end, models are estimated where the difference between individual parent ratings and actual quality (parent prediction error) is explained by parent, classroom, and center characteristics. Following previous empirical literature on asymmetric information, adverse selection in the market for child care suggests that parents rely on center characteristics as predictors of quality. More precisely, detection of a relationship between parent quality assessment and center attributes (e.g., nonprofit status, being a national chain, or being a publicly owned center) is an indication of adverse selection.

Because the quality scale is bounded from above at 7, and in light of the results presented in the Weak rationality section, it is reasonable to assume that parent errors are likely to be censored from above. Put differently, some parents who would have liked to assign a higher rating were forced to assign a rating of 7. For such parents, the observed prediction errors are smaller than the ones that would have been observed otherwise. For example, if parents overestimate quality by 2 on average (as in line B of Fig. 1), then parent prediction errors will have a mean of 2 when actual quality is 5 or less. When actual quality is 6, parents who would have liked to assign 8 can only assign 7 (because 7 is the top of the scale), and when actual quality is 7, there is no room for parent overestimation as the maximum value they can assign is also 7. More specifically, consider Eq. 9 below, where e* stands for the difference between the rating a parent would like to assign (P*) and actual quality (Q).

Thus, e* may be called the parent’s “desired” error, and X is a determinant of it. Based on the discussion above, it can be argued that the observed parent rating (P) is equal to the desired parent rating (P*) only if the level of actual quality is low enough. Thus, P=P* if Q≤m, where 1<m<7. For those observations where Q>m, it is assumed that P≠P*. To account for such potential censoring in the dependent variable of Eq. 9, tobit models are estimated by maximum likelihood with various censoring levels corresponding to different levels of actual quality, ranging from 3 to 6. In most cases, the likelihood function was maximized when the censoring level of actual quality was 4.1. This means that parent prediction errors are treated as right-censored when the corresponding actual quality is 4.1 or higher (in which case prediction errors can be at most 2.9).
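The censoring logic can be sketched as a log-likelihood function. Eq. 9 is not reproduced in this text, so the sketch assumes a linear model e* = Xβ + u with normal errors, treats an observation as right-censored at its observed error whenever Q ≥ m, and uses hypothetical function names; it is not the estimation code behind the paper's results.

```python
import math

def norm_logpdf(z):
    """Log density of the standard normal at z."""
    return -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)

def norm_sf(z):
    """Upper-tail probability of the standard normal, P(Z >= z)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def tobit_loglik(errors, fitted, actual_q, sigma, m):
    """Log-likelihood with right-censoring for observations where Q >= m."""
    total = 0.0
    for e, xb, q in zip(errors, fitted, actual_q):
        z = (e - xb) / sigma
        if q >= m:
            total += math.log(norm_sf(z))             # desired error is at least e
        else:
            total += norm_logpdf(z) - math.log(sigma)  # error observed exactly
    return total

def max_possible_error(actual_q, top=7.0):
    """Largest overestimate the 7-point scale allows at a given actual quality."""
    return top - actual_q

print(max_possible_error(4.1))
print(tobit_loglik([1.0], [1.0], [3.0], sigma=1.0, m=4.1))
print(tobit_loglik([1.0], [1.0], [5.0], sigma=1.0, m=4.1))
```

Evaluating such a function over a grid of candidate values of m is one way to carry out the comparison of censoring levels described above.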

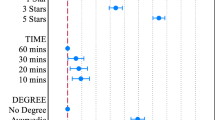

Table 5 displays the determinants of parent prediction error for total quality, observable (easy-to-observe) quality and unobservable (difficult-to-observe) quality in censored-regression models. The results for individual questions are discussed below. Table 5 pertains to the analysis of positive prediction errors. Therefore, it represents the analyses of parents who overestimated the true quality of their children’s classrooms. Thus, a positive coefficient for a particular variable in the table indicates that an increase in that variable generates an increase in the prediction error. The results for the analysis of negative prediction error were consistent with those of positive error; thus, they are not presented in the interest of space.Footnote 14

There are alternative ways to estimate Eq. 9. First, Eq. 9 can be estimated by OLS, without entertaining the possibility of censoring in the dependent variable. This assumes that parent ratings are always equal to their desired ratings (P i =P i *). Second, one can consider the value of the dependent variable as censored whenever the parent assigned the maximum score of 7, and estimate tobit models for this type of censoring. Both models are estimated, and the results are displayed in Tables 9 and 10 in the Appendix. The results are similar between OLS and tobit with censoring at 7, and they are similar to those presented in Table 5, with some differences in magnitudes and significance but not in signs. Alternatively, ordered-probit models are estimated where parent prediction errors are classified into three categories using two different algorithms (Models 1 and 2) as described in the previous section.

As explained above, estimation of quality production functions reveals between-state variation in quality by for-profit status. This means that, controlling for all other factors, for-profit centers in a particular state may have higher (or lower) levels of quality in comparison with for-profit centers in a different state. Thus, quality production functions estimated later in the paper include state-for-profit interactions. For consistency, the same specification is used in the analyses of parent prediction errors.

To investigate whether less-educated parents react differently to signals coming from centers, the variable High school or less, which identifies parents who have high school education or less, is interacted with Clean entrance, Articulate director, and Coffee and cookies. Similarly, to test whether parent perceptions of the link between quality and Clean entrance, Articulate director, and Coffee and cookies differ by profit status, these variables are interacted with For-profit. Preliminary analyses indicated that the impact of race-matching differs between minority and White parents. Thus, the variable Same race is interacted with Minority, where Minority is a dummy variable that identifies minority parents.

7.1 The impact of parent characteristics

Table 5 shows that being married decreases the prediction error by 0.26 points (on a scale from 0 to 6), and each additional child in the family who attends the center increases the prediction error by about 0.13 points. Parents whose children receive nine or more hours of care per week are more accurate predictors. This may be because these parents have more exposure to the center as the child stays there longer. Education has a significant impact on parents’ prediction error. Parents with at least some college education have more accurate assessments in comparison with parents with high school education or less. This could be because more educated parents are better evaluators of their environment. It could also be because parents who have no high school education may have a poor home environment. Thus, the classroom environment of their children may constitute an improvement in comparison with their home environment, which may lead such parents to give a more generous rating.

Asian parents have more accurate assessments in comparison with parents of other races. An interesting result is the positive and significant coefficient of Same race* minority. This means that minority parents’ assessment of quality is inflated if the parent and all teachers in the classroom are minority. This could be because minority parents prefer their children to be taught by a teacher of the same race, or there could simply be a “misplaced trust.”

To verify that this result is race-dependent, the models are estimated separately for White parents and for minority parents. The results, which are not reported, provided a striking contrast. The impact of the Same race variable was 0 for White parents, while it was positive and significant for minority parents for total quality, observed quality, as well as unobserved quality. This means that if a classroom consists of all White teachers, this does not affect the quality rating of White parents. On the other hand, if a classroom’s teachers consist exclusively of a minority group (Black, Asian, etc.) and if the parent is of the same race, then the parent overestimates total quality by 0.83 points, observed quality by 0.60 points, and unobserved quality by 0.61 points (see Table 5). Regressions for individual quality items revealed that for minority parents a match with teacher race generates an overestimation in the following items: furniture for routine care, room decoration, meals and snacks, nap time, talking with children, art activities, pretend play, activities for different cultures, and daily schedule. Six of these nine items are difficult to observe.

It is possible that the apparent overestimation in minority parents’ rating as a function of teacher’s race is because of some bias in observers’ ratings. More specifically, it may be the case that minority parents do not overestimate the quality when the classroom staff is of the same race, but the observers underrate all-minority-teacher classrooms in comparison with the classrooms with all-White or mixed-race teachers. Almost all observers who gathered data for this project were White females. Thus, it is not possible to investigate directly the impact of observer race on classroom ratings. However, it is possible to analyze whether classrooms where the staff consists exclusively of minorities received lower observer ratings in comparison with other classrooms, holding constant various standard determinants of quality (such as staff–child ratio, group size, teacher education, etc.). I estimated quality production functions for total quality, observable quality, and unobservable quality, which included room and center characteristics, as well as a variable indicating whether the classroom consists of all-minority staff. In no case was the variable representing all-minority staff significant. Dropping the race of the teacher from the models and keeping the “all minority staff” variable did not change the results. Thus, there is no evidence of a bias on the part of the observers pertaining to the rating received by all-minority staff classrooms.Footnote 15

7.2 Sources of information

It is interesting to note that parents who indicate that they drop in on the classroom unexpectedly tend to overestimate total quality by 0.27 points, observable quality by 0.22 points, and unobservable quality by 0.19. No other source of information has a significant impact on parents’ prediction error. Interaction of the age group of the child (the Infant-toddler dummy) with variables measuring sources of information did not produce significant coefficients.

7.3 Center characteristics and signal extraction

The results reveal significant signal extraction from center characteristics, which underscores adverse selection. For example, parents with children at church-based centers and at publicly regulated centers have more conservative quality ratings, while publicly supported centers produce an overrating. The proportion of White children at the center is associated with a perception of higher quality, while the proportion of subsidized children generates a lower parent quality rating. If the director of a nonprofit center is very articulate, this increases parent prediction error by 0.31 points for total quality, 0.26 points for easy-to-observe quality, and 0.52 points for difficult-to-observe quality.

7.4 Room characteristics

The racial composition of classroom teachers has an impact. Keeping the percentage of Black and White teachers the same, an increase in the percentage of Asian classroom teachers (which implies a switch from Hispanic teachers and teachers of other races to Asian teachers) generates a decrease in parents’ overestimation. Holding the staff–child ratio constant, an increase in group size increases parents’ prediction error. To give an example, consider a classroom with ten children and two staff, with a staff–child ratio of 0.2. Consider a second arrangement with 20 children and four staff. This second room has the same staff–child ratio, but the group size is larger by ten. This second arrangement generates an increase in the prediction error by 0.15 points in comparison with the first arrangement. It can be argued that the positive relationship between group size and parents’ overrating of total quality is a reflection of parental preferences. That is, parents simply prefer their children to be in larger classrooms as long as the staff–child ratio remains the same. One possible reason for this may be having access to greater choice of social diversity. Another possibility may be concerns over safety of children if larger classrooms promote heightened feelings of security. When the model was estimated separately for each of the 19 questions listed in Table 1, the group size had a positive impact on parent prediction error for the following five items: room arrangement, diapering and toileting, small muscle activities, music activities, and activities for different cultures. With the exception of the first, these are unobservable items, confirming that a larger group size makes parents overrate classroom quality mostly in unobservable dimensions of classroom operation.
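The two-room comparison can be checked with a few lines of arithmetic. The per-child figure in the sketch assumes that the group-size effect is linear, which the text does not state; the variable names are illustrative.

```python
def staff_child_ratio(staff, children):
    """Number of staff per child in a room."""
    return staff / children

room_a = {"children": 10, "staff": 2}
room_b = {"children": 20, "staff": 4}

ratio_a = staff_child_ratio(room_a["staff"], room_a["children"])
ratio_b = staff_child_ratio(room_b["staff"], room_b["children"])
extra_children = room_b["children"] - room_a["children"]

# 0.15 points is the increase in prediction error reported for the larger room.
# Dividing by the extra children assumes a linear group-size effect.
effect_per_child = 0.15 / extra_children

print(ratio_a, ratio_b, extra_children, effect_per_child)
```

Both rooms have a ratio of 0.2, so the 0.15-point difference in prediction error is attributable to group size alone, or roughly 0.015 points per additional child under the linearity assumption.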

7.5 Ordered-probit estimates

For each of the quality items listed in Table 1, two ordered-probit models are estimated: one based on the classification of Model 1, the other based on the classification of Model 2, as described in the Strong rationality section. The results were similar between the specifications, and they were also consistent with those reported in Table 5, with some differences. For example, marital status and the hours of care provided for children were not significant determinants of parent prediction error in ordered-probit models. On the other hand, sources of information provided a more precise picture. Prediction error is inflated if the parent finds out about the child’s care by talking to the teacher or director, and the prediction is more accurate if the parent finds out about the child’s care from what the child says or does. The coefficient of Church was negative but not quite significant in ordered-probit models.

7.6 Comparison with production function estimates

The results presented in Table 5 are summarized in Table 6 for total quality, observable quality, and unobservable quality. The table displays the relationships between prediction errors and their determinants. A zero in a given cell indicates no statistically significant relationship, a (+) signifies a positive relationship, and a (−) indicates a negative relationship. For example, in the first column of Table 5 we observe that there is a negative relationship between Publicly regulated and parent perception of quality. This information is presented again in Table 6 with a (−) sign in the first column. Similarly, Table 5 displays a positive relationship between group size and parents’ prediction error, which is depicted by a (+) in column I of Table 6 in the Group size row. Table 6 also displays information on “Reality.” The signs (0, +, or −) under this column are based upon estimation of standard quality production functions (e.g., Blau 1997; Mocan et al. 1995), where quality (total, observable, and unobservable) is explained by staff and center characteristics as explained earlier. More specifically, using observed classrooms as the unit of observation, production functions are estimated using the same explanatory variables that are used in explaining parent perceptions. Parent-specific variables, such as parent age, parent race, etc., are not included because quality is measured at the classroom level. The production function estimates are reported in Table 8.Footnote 16

Table 6 allows the comparison of parent perceptions to “Reality.” For example, column II of Table 6 shows that all else being the same, for-profit centers in California and North Carolina have lower total quality scores. On the other hand, column I of Table 6 shows that parents do not consider for-profit status as a signal of quality. Similarly, production function estimates indicate that for-profit national chains, publicly owned, and publicly-regulated centers produce higher quality. Parents do not consider the first two of these attributes as signals of quality, and they consider the last one as a negative signal of quality. Similarly, parents believe that a larger group size is associated with higher quality, when group size has no statistically significant impact on total classroom quality. On the other hand, parents do not believe a higher staff–child ratio to be a positive signal for quality, when in fact, it is associated with higher quality. The shaded cells highlight the mismatched cases between parent beliefs and reality. Similar patterns are detected for observable as well as unobservable quality. For example, as column VI of Table 6 demonstrates, keeping all else constant, being a for-profit, publicly regulated, publicly supported, publicly owned, or church-sponsored center contains information about difficult-to-observe quality. However, the interpretations of the signals are mostly incorrect.
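The sign coding and the belief-versus-reality comparison can be expressed compactly. In the sketch below, the 5% significance level and the function name are assumptions, and an ASCII hyphen stands in for the (−) sign; the four example rows use only the total-quality signs stated in the text above.

```python
def sign_code(coef, p_value, alpha=0.05):
    """Code a coefficient as '+', '-', or '0' (not statistically significant)."""
    if p_value >= alpha:
        return "0"
    return "+" if coef > 0 else "-"

# (parent belief, "Reality") for total quality, as described in the text.
total_quality = {
    "Publicly regulated": ("-", "+"),
    "Publicly owned": ("0", "+"),
    "For-profit national chain": ("0", "+"),
    "Group size": ("+", "0"),
}

# A cell is "mismatched" when the parents' sign differs from the production-function sign.
mismatched = [name for name, (belief, reality) in total_quality.items()
              if belief != reality]
print(mismatched)
```

All four of these attributes are flagged, which corresponds to the shaded cells for these rows.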

In addition to the incorrect interpretation of the signals, another source of information problem is the lack of reaction to signals. For example, column II of Table 6 demonstrates that the presence of coffee and cookies, the cleanliness of the reception area, or the articulateness of the director are positive signals of total classroom quality in for-profit centers, and an articulate director is a positive quality signal in nonprofit centers. However, parents with high school education or less do not consider these as signals of quality, and parents with more than high school education take only the director’s articulateness in nonprofit centers as a signal of quality.

One way to summarize Table 6 is to count the center attributes that are related to quality and to compare that count with the number of attributes that parents consider to be quality signals. For example, there are 14 signals that are provided by the centers and classrooms pertaining to unobservable quality. Parents with a high school education or less do not consider 11 of these as appropriate signals. They interpret two of these signals correctly and one incorrectly. Furthermore, they believe that four additional center characteristics are signals of quality, when they are not (being a publicly supported center, the proportion of White children, percent Asian teachers and group size; see columns V and VI of Table 6). Parents with more than high school education do not consider 8 of these 14 signals. They interpret four signals correctly and two signals incorrectly. They consider four additional characteristics as signals when they are not. The same pattern of making an attempt, but failing to read signals properly, emerges in easy-to-observe and total quality as well.
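The counts for unobservable quality can be tabulated and checked for internal consistency. The dictionary layout and the function name below are illustrative; the numbers are those given in the paragraph above.

```python
SIGNALS_AVAILABLE = 14  # signals pertaining to unobservable quality

tally = {
    "high school or less": {"ignored": 11, "correct": 2, "incorrect": 1, "spurious": 4},
    "more than high school": {"ignored": 8, "correct": 4, "incorrect": 2, "spurious": 4},
}

def share_correct(group):
    """Fraction of the available signals that a group interprets correctly."""
    return tally[group]["correct"] / SIGNALS_AVAILABLE

for group, counts in tally.items():
    used_or_ignored = counts["ignored"] + counts["correct"] + counts["incorrect"]
    print(group, used_or_ignored, round(share_correct(group), 2))
```

In both groups the ignored, correctly read, and incorrectly read signals add up to 14, and the share read correctly is 2 of 14 for less-educated parents versus 4 of 14 for more-educated parents.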

Table 6 demonstrates that in the case of observable quality, there are three center attributes that parents believe are related to quality: publicly regulated status, church sponsorship, and the director’s articulateness. The corresponding number is eight for unobservable quality, indicating that parents try to extract signals from center characteristics more heavily for difficult-to-observe aspects of quality.

It is also informative to analyze the determinants of parent prediction errors and to compare them to the results obtained from production function estimates for individual questions. As described earlier, Table 1 displays the 19 questions that were worded identically between ITERS and ECERS. Of these 19 questions, ten are unobservable items, and the remaining nine are observable. Table 7 displays the summary results obtained from 16 individual quality items, eight unobservable and eight observable, which depict the same pattern shown in Table 6. Therefore, taken together, these results indicate that parents are trying, unsuccessfully, to extract signals from center characteristics.

7.7 Prices as signals of quality

There is evidence in the marketing literature that consumers believe that higher prices are associated with higher quality, especially for new products (e.g., Monroe 1973; Buzzell et al. 1972 and Gerstner 1985). The economics literature also contains papers on product quality signaling, where prices may serve as signals that differentiate between the available quality levels (e.g., Wolinsky 1983; Shapiro 1982; Milgrom and Roberts 1986). To test this hypothesis, fees paid by parents are included in the equations that investigate the determinants of parent prediction error. In no case were the coefficients of fees significant, indicating that after controlling for parent, classroom and center characteristics, fees do not contain additional information on quality. This is consistent with Cooper and Ross (1984), who show that factors that limit the entry of firms, such as steeply shaped average cost curves and a relative abundance of informed buyers, improve the revelation property of prices in a competitive environment. The results of this paper and those of Mocan (1997) show that such conditions are not satisfied in the child care market, and, therefore, prices would not convey significant information on quality.

8 Summary and conclusions

This paper investigates whether the low quality in the center-based child care market can be the result of a market failure due to information asymmetry between child care providers and consumers (parents) regarding the quality of services. The paper uses detailed data collected from 400 child care centers in California, Colorado, Connecticut, and North Carolina in the United States. The data contain information on 228 infant-toddler and 518 preschool classrooms and the characteristics of 3,490 parents. Classroom quality is assessed by trained observers using well-established measures, and individual aspects of services provided for children are classified as difficult-to-observe and easy-to-observe quality. The quality measure employed in this paper is widely used as the indicator of child care quality in child development literature as well as economics literature (Blau and Mocan 2002; Mocan 1997 and Love et al. 1996), and is more closely related to child development than structural quality measures such as staff–child ratio and group size.

Parents are asked to evaluate quality using the same measures. Analyses are conducted using overall quality scores, individual scores that make up the total score, as well as the ratings for easy-to-observe and difficult-to-observe dimensions of classroom quality.

A comparison of parent and observer ratings indicates that parents significantly overestimate quality. However, a closer look suggests that, adjusting for the scale effect, parent ratings parallel observer ratings fairly closely. Put differently, parents use a different scale than trained observers, and adjusting for that scale effect shows that parent irrationality (parents making systematic errors in prediction and not assessing quality accurately) does not have strong support in the data. On the other hand, we soundly reject the hypothesis that parents utilize all available information when forming their assessments of quality.

If parents cannot evaluate the level of quality of the services provided for their children, they will not be willing to pay a premium for high quality. Because it is costly to produce quality (Mocan 1997), centers will not have an incentive to produce high quality in the absence of the demand for it. Thus, high-quality centers cannot exist, and the result is adverse selection, where the market is filled with low-quality providers. If consumers associate observable provider characteristics with the quality of services, this is considered evidence of adverse selection.

The results present strong and interesting evidence on this issue. The analysis of parents’ prediction errors demonstrates that parents believe that certain center and room characteristics are indicators of quality. The paper also investigates the determinants of true quality by estimating room-level quality production functions. Comparisons of parent beliefs and the factors that impact classroom quality and its components reveal interesting patterns. For example, parents in publicly regulated centers underestimate quality when production function estimates reveal that these centers have higher quality. Similarly, parents do not consider publicly owned status as a positive or negative signal of quality, while these centers actually produce higher levels of quality. These patterns are summarized in Tables 6 and 7.

The evidence that emerges from the project is that: (1) Parents are weakly, but not strongly, rational. (2) Parents are trying to extract quality signals from room and center characteristics. These attempts are, for the most part, unsuccessful. Such results provide evidence for adverse selection in the market. (3) Parent characteristics, such as education and marital status, affect the accuracy of the predictions. (4) Parent race, and race-matching with classroom teachers, have a significant impact on quality assessment. For example, minority parents have significantly higher quality assessments if the classroom teacher is of the same race, suggesting that race-matching between minority parents and teachers generates an unfounded trust for minority parents. This result is not due to trained observers’ negative bias towards classrooms with minority teachers. There is no race-matching impact for white parents. (5) Parents attempt to associate provider attributes with child care quality more so for difficult-to-observe items of quality.

These results indicate that the market for center-based child care has aspects of a “market for lemons.” The information asymmetry between the parents and the centers regarding the quality of services forces parents to try to extract signals from observable center and classroom characteristics. These attempts are, for the most part, unsuccessful: parents associate certain center characteristics with quality when these characteristics are not related to it, and they fail to read other signals of quality.

This body of evidence suggests that the low average quality of child care may be attributable to information asymmetry between consumers and producers, and, therefore, points to specific policy remedies. Given that parents strongly reveal their beliefs on the importance of individual items in quality instruments, and given their unsuccessful attempts to extract signals from classroom and center characteristics, making information on quality obtained by expert observers available to parents has the potential to remedy this market failure.

Notes

Bond (1982) investigated whether or not there was a difference between the maintenance records of pickup trucks that were purchased and used and those that were original-owner trucks. To the extent that maintenance records are proxies of quality, this paper is an exception.

See Blau (2001), Chapter 2 for a detailed overview of the child care market.

See Poterba (1995) for a detailed discussion of the reasons for government intervention in health care and education markets.

Most of the data collectors were individuals who were involved in early childhood education, such as former child care teachers, assistant teachers, or center directors. After a week-long training program, data collectors were required to carry out practice observations and data collection in actual centers. During these practice sessions, inter-rater reliability was evaluated, and site coordinators, who were individuals with experience in administering the survey instruments, provided additional training if the agreement between observers was less than 80%. It should be noted that this is the standard procedure to train data collectors in child development research, and this study arguably provided some of the best training to the data collectors in terms of the duration of the training, emphasis on inter-rater reliability, and providing in-person training (as opposed to training through videos, etc.).

There were no statistically significant differences between centers that participated in the study and centers that declined to participate with respect to such characteristics as the legal capacity of the center, age of the center, auspice type, enrollment, and the age group of children served. Similarly, no systematic state and auspice differences are found in the return rates for parent surveys. Details can be found in Mocan (1997) and Cryer and Burchinal (1997).

Line B is discussed below.

Easy-to-observe aspects for infant-toddler include the following: furniture for routine care; furniture for play and learning; softness in room; room arrangement; arriving and leaving times; keeping children clean and neat; talking with children; small muscle activities; active play activities; chances for children to make friends; teacher’s behavior with children; discipline; and daily schedule. Difficult-to-observe aspects for infant-toddlers include: room decoration; meals and snacks; nap time; diapering and toileting; healthful caring; health rules; safe caring; safety rules; books and pictures activities; art activities for toddlers; music activities; activities with blocks; pretend play; sand and water play; and activities for different cultures. Preschool observable quality includes: arriving and leaving; diapering and toileting; keeping children clean; furniture for routine care; furniture for play and learning; furnishing for relaxation and comfort; room arrangement; room decoration; small muscle activities; space for active play; how teacher supervises active play; pretend play; schedule; how teacher supervises play activities; free-choice play activities; and how pleasant the room feels. Preschool unobservable quality includes: meals and snacks; nap or rest time; helping children to understand talk; helping children learn to talk well; helping children learn to think and reason; teacher’s talking; supervision of small muscle activities; equipment for active play; time for active play; art activities; music activities; block play; sand and water play; space for child to be alone; group times; and activities about different cultures.

The first-stage regressions had explanatory power; the mean R-squared across all models was 0.29.

When Eq. 1 was estimated by OLS, the estimated coefficients of Q were smaller than the ones obtained from instrumental variables estimation, and the hypothesis of weak rationality was rejected in each case. All of these results are available upon request.

For example, consider the question on napping, which includes aspects of the nap schedule, adult supervision, and the quality of the nap area. Imagine that the accurate rating of this question is a 5 on the scale from 1 to 7, and parents who have the opportunity to observe the center repeatedly give this question a rating of 5 on average. Imagine further, that when the trained observers visited the center, it was a “bad” day for whatever reason, and the center received a rating of 4.
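The napping example, read together with the note on OLS versus instrumental variables estimates, can be illustrated with a small simulation. The sketch below is not the estimation used in the paper: the noise levels, the sample size, the continuous (unbounded) ratings, and the use of a second independent noisy measurement as the instrument are all assumptions made for illustration, and the names `slope`, `iv_slope`, `observed_q`, and `second_q` are hypothetical. It shows only the general mechanism: when the observer rating measures true quality with "good day / bad day" noise, the OLS coefficient on observed quality is pulled toward zero, while a simple IV estimator is not.

```python
import random


def slope(x, y):
    """OLS slope of y on x: cov(x, y) / var(x)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov_xy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    return cov_xy / var_x


def iv_slope(x, y, z):
    """Simple IV slope of y on x with instrument z: cov(z, y) / cov(z, x)."""
    n = len(x)
    mx, my, mz = sum(x) / n, sum(y) / n, sum(z) / n
    cov_zy = sum((c - mz) * (b - my) for c, b in zip(z, y))
    cov_zx = sum((c - mz) * (a - mx) for c, a in zip(z, x))
    return cov_zy / cov_zx


rng = random.Random(0)  # fixed seed so the run is reproducible
n = 20000               # assumed sample size, chosen only for stability

# Assumed data-generating process (illustrative, not from the paper).
true_q = [rng.gauss(4.0, 1.0) for _ in range(n)]       # true quality
observed_q = [q + rng.gauss(0.0, 1.0) for q in true_q]  # one-visit observer rating
second_q = [q + rng.gauss(0.0, 1.0) for q in true_q]    # hypothetical instrument
parent = [q + rng.gauss(0.0, 0.5) for q in true_q]      # parent rating, true slope 1

ols_true = slope(true_q, parent)          # benchmark: regress on true quality
ols_observed = slope(observed_q, parent)  # attenuated by measurement error
iv_estimate = iv_slope(observed_q, parent, second_q)

print("OLS on true quality:    ", round(ols_true, 3))
print("OLS on observed quality:", round(ols_observed, 3))
print("IV estimate:            ", round(iv_estimate, 3))
```

Under these assumptions the OLS slope on observed quality converges to var(Q) / (var(Q) + var(noise)) = 0.5 rather than 1, which is the direction of the difference between OLS and instrumental variables coefficients reported in the preceding note.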

Robust standard errors are adjusted for clustering at the center level.

In the light of the results of the previous section, this formulation conjectures that parents may overestimate by 3, 2, or 1 points. For example, if parents overestimate by 3 points, then an average parent is going to assign a rating of 7 when actual quality is equal to 4. In this case, when actual quality is 5, the parent is forced to assign 7, despite the fact that she is willing to assign 8. In this example, censoring exists when actual quality is 4 or greater.
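The arithmetic of this censoring example can be written out as a short sketch. The function names `reported_rating` and `censoring_threshold` are hypothetical, and the only facts taken from the text are the 1-to-7 scale and the conjectured overestimates of 3, 2, or 1 points.

```python
SCALE_MAX = 7  # top of the 1-to-7 rating scale described in the text


def reported_rating(actual, overestimate):
    """Rating a parent can record: the desired rating, capped at the scale maximum."""
    desired = actual + overestimate
    return min(desired, SCALE_MAX)


def censoring_threshold(overestimate):
    """Lowest actual quality at which the desired rating reaches the scale maximum."""
    return SCALE_MAX - overestimate


# For each conjectured overestimate, show the threshold and the ratings
# a parent would record for actual quality levels 1 through 7.
for k in (3, 2, 1):
    ratings = [reported_rating(q, k) for q in range(1, SCALE_MAX + 1)]
    print("overestimate", k, "threshold", censoring_threshold(k), "ratings", ratings)
```

With an overestimate of 3 points the threshold is 4, matching the example in the note; with overestimates of 2 and 1 points the same logic puts the threshold at 5 and 6, respectively.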

The percentage of negative prediction errors was 20 in total quality, 16 in observable quality, and 24 in unobservable quality.

The amount of information parents extract from centers and classrooms may depend on their children’s tenure at the center. However, the data set does not contain information on time spent at the center, so this aspect cannot be analyzed.

References

Akerlof GA (1970) The market for ‘Lemons’: quality uncertainty and the market mechanism. Q J Econ 84(3):488–500

Beckman SR (1992) The sources of forecast errors: experimental evidence. J Econ Behav Organ 19:237–244

Bergmann BR (1996) Saving our children from poverty: what the United States can learn from France. Russell Sage Foundation, New York

Blau DM (1991) The economics of child care. Russell Sage Foundation, New York

Blau DM (1997) The production of quality in child care centers. J Hum Resour 32(2):354–387

Blau DM (2001) The child care problem: an economic analysis. Russell Sage Foundation, New York

Blau DM, Mocan HN (2002) The supply of quality in child care centers. Rev Econ Stat 84(3):483–496

Bond EW (1982) A direct test of the “Lemons” model: the market for used pickup trucks. Am Econ Rev 72(4):836–840

Buzzell RD, Nourse REM, Mathews JB, Levitt T (1972) Marketing: a contemporary analysis. McGraw-Hill, New York

Chezum B, Wimmer B (1997) Roses or lemons: adverse selection in the market for thoroughbred yearlings. Rev Econ Stat 79(3):521–526

Cooper R, Ross TW (1984) Prices, product qualities and asymmetric information: the competitive case. Rev Econ Stud 51(2):197–207

Cryer D, Burchinal M (1997) Parents as child care consumers. Early Child Res Q 12(1):35–58

Feenberg DR, Gentry W, Gilroy D, Rosen HS (1989) Testing the rationality of state revenue forecasts. Rev Econ Stat 42:429–440

Genesove D (1993) Adverse selection in the wholesale used car market. J Polit Econ 101(4):644–665

Gerstner E (1985) Do higher prices signal higher quality? J Mark Res 22(2):209–215

Greenwald BC, Glasspiegel RR (1983) Adverse selection in the market for slaves: New Orleans, 1830–1860. Q J Econ 98(3):479–499

Harms T, Clifford R (1980) Early childhood environment rating scale. Teachers College, New York

Harms T, Cryer D, Clifford R (1990) Infant/toddler environment rating scale. Teachers College, New York

Hayes CD, Palmer JL, Zaslow ML (eds) (1990) Who cares for America’s children? Child care policy for the 1990s. National Academy, Washington, DC

Heinkel R (1981) Uncertain product quality: the market for lemons with an imperfect testing technology. Bell J Econ 12(2):625–636

Keane MP, Runkle DE (1998) Are financial analysts’ forecasts of corporate profits rational? J Polit Econ 106(4):768–805

Lamb ME (1998) Nonparental child care: context, quality, correlates, and consequences. In: Sigel I, Renninger K (eds) Damon W (series ed) Child psychology in practice. Handbook of child psychology, 5th edn. Wiley, New York

Lazar I, Darlington R (1982) Lasting effects of early education: a report from the consortium for longitudinal studies. The University of Chicago Press, Chicago

Leland HE (1979) Quacks, lemons and licensing: a theory of minimum quality standards. J Polit Econ 87(6):1328–1346

Love JM, Schochet PZ, Meckstroth AL (1996) Are they in any real danger? What research does - and doesn’t - tell us about child care quality and children’s well-being. Manuscript: Mathematica Policy Research, Princeton, New Jersey

Milgrom P, Roberts J (1986) Price and advertising signals of product quality. J Polit Econ 94(4):796–821

Mocan HN (1995) Quality adjusted cost functions for child care centers. Am Econ Rev 85(2):409–413

Mocan HN (1997) Cost functions, efficiency, and quality in day care centers. J Hum Resour 32(4):861–891

Mocan HN (2000) Information asymmetry in the market for child care. Final Report, Smith Richardson Foundation

Mocan HN, Azad S (1995) Accuracy and rationality of state general fund revenue forecasts: evidence from panel data. Int J Forecast 11:417–427

Mocan HN, Burchinal M, Morris JR, Helburn SW (1995) Models of quality in center child care. In: Helburn SW (ed) Cost, quality, and child outcomes in child care centers, technical report. Department of Economics, Center for Research in Economic and Social Policy, University of Colorado at Denver, Denver, Colorado

Monroe KB (1973) Buyers’ subjective perceptions of price. In: Kassarjian HH, Robertson TS (eds) Perspectives in consumer behavior. Scott Foresman, Glenview, Illinois, pp 23–42

Mullineaux DJ (1978) On testing for rationality: another look at the Livingston price expectations data. J Polit Econ 86:329–336

Poterba J (1995) Government intervention in markets for education and health: how and why. In: Fuchs (ed) Individual and social responsibility. University of Chicago Press, Chicago, Illinois, pp 277–304

Ramey C, Campbell F (1991) Poverty, early childhood education and academic competence: the Abecedarian experiment. In: Houston (ed) Children and poverty: child development and public policy. Cambridge University Press, New York, pp 190–221

Rosenman RE, Wilson WW (1991) Quality differentials and prices: are cherries lemons? J Ind Econ 39(6):649–658

Shapiro C (1982) Consumer information, product quality and seller reputation. Bell J Econ 13:20–35

Shapiro C (1986) Investment, moral hazard, and occupational licensing. Rev Econ Stud 53(5):843–862

Smith K (2002) Who’s minding the kids? Child care arrangements: spring 1997. Current Population Reports P70-86. U.S. Census Bureau, Washington, DC

U.S. Bureau of the Census (2004) American community survey. Washington DC

von Ungern-Sternberg T, von Weizsacker CC (1985) The supply of quality on a market for “Experienced Goods”. J Ind Econ 33(4):531–540

Waldfogel J (2002) Child care, women’s employment and child outcomes. J Popul Econ 15:527–548

Weisbrod BA (1988) The nonprofit economy. Harvard University Press, Cambridge, Massachusetts

Whitebook M, Howes C, Phillips D (1990) Who cares? Child care teachers and the quality of care in America. Final report of the National Child Care Staffing Study. Child Care Employee Project, Oakland, California