Abstract

The recognition of forest species is a very challenging task that generally requires well-trained human specialists. However, few reach good classification accuracy because of the time their training takes, so there are not enough of them to meet industry demands. Computer vision systems are a very interesting alternative in this case. The construction of a reliable classification system is not a trivial task, though. In the case of forest species, one must deal with great intra-class variability and also with the lack of a publicly available database for training and testing the classifiers. To cope with such variability, in this work we propose a two-level divide-and-conquer classification strategy in which the image is first divided into several sub-images that are classified independently. At the lower level, the decisions of the different classifiers, trained with different features, are combined through a fusion rule to generate a decision for each sub-image. The higher-level fusion combines these partial decisions for the sub-images to produce a final decision. Besides the classification system, we also extended our previous database, which is now composed of 41 species of Brazilian flora. It is available upon request for research purposes. A series of experiments shows that the proposed strategy achieves compelling results. Compared to the best single classifier, which is an SVM trained with a texture-based feature set, the divide-and-conquer strategy improves the recognition rate by about 9 percentage points, while the mean improvement observed with SVMs trained on different descriptors was about 19 percentage points. The best recognition rate achieved in this work was 97.77 %.


1 Introduction

In the past decade, most applications of computer vision in the wood industry have been related to quality control, grading, and defect detection [1–5]. More recently, however, the industry and supervisory agencies have presented another demand to academia: the automatic classification of forest species. Such a classification is needed in many industrial sectors, since it can provide relevant information concerning the features and characteristics of the final product [6].

Supervisory agencies have to certify that the wood has not been extracted illegally from the forests. Besides, the industry spends a considerable amount of money to prevent frauds in which wood traders might mix a noble species with cheaper ones. As stated by Paula et al. in [7], identifying a wood log or timber outside of the forest is not a straightforward task, since one cannot count on flowers, fruits, and leaves. Usually this task is performed by well-trained specialists, but few reach good classification accuracy because of the time their training takes; hence there are not enough of them to meet industry demands.

To overcome such difficulties, some researchers have begun to investigate the problem of automatic forest species recognition. In the literature this problem is addressed through two main approaches: spectrum-based processing systems and image-based processing systems.

In the first case, a suitable source of radiation is used to excite the wood surface so that the emitted spectrum can be analyzed. The techniques are mainly based on vibrational spectroscopy methods, such as near-infrared (NIR) [8], mid-IR [9, 10], Fourier-transform Raman spectroscopy [11], and fluorescence spectroscopy [6]. The acquisition system of such methods is usually composed of a spectrometer (e.g., Ocean Optics USB2000), a laser source, and an optical filter. All these devices should be placed at an angle \(\alpha \) and distance \(z\) that maximize the overall power of the signal captured by the spectrometer, according to the focal length of its objective lens.

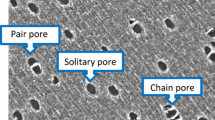

In the second case, the characteristics (color, texture, morphology, etc.) of the wood are acquired by a microscope or a camera with an illumination system. The acquisition protocol for microscopic images is quite complex: the wood must be boiled to make it softer, and then the wood sample is cut with a sliding microtome to a thickness of about 25 \(\upmu \) (\(1 \upmu = 1\times 10^{-6}{\hbox {m}}\)). Thereafter, the veneer is colored using the triple staining technique, which uses acridine red, chrysoidine, and astra blue. Finally, the sample is dehydrated in an ascending alcohol series and the image is acquired from sheets of wood using a microscope. This process produces an image full of details that can be used for classification. Figure 1a shows an example of a microscopic image of the Pinaceae Pinus taeda.

Martins et al. [12] introduced a database of microscopic images composed of 112 forest species and reported recognition rates ranging from 70 to 80 % using different classifiers with Local Binary Patterns as features, and about 85 % using Local Phase Quantization (LPQ) [14]. One advantage of the microscopic approach is that it allows the human expert to label the database at several different levels of the botanical taxonomy, for example, Family, Sub-family, Genus, and Species.

The complexity of the acquisition protocol used in the microscopic approach makes it unsuitable for use in the field, where one needs less expensive and more robust hardware. To overcome this problem, some authors [15–19] have investigated the use of macroscopic images to identify forest species. Figure 1b shows a macroscopic image of the same Pinaceae Pinus taeda species acquired in the laboratory. As one may see, the macroscopic image presents a significant loss of information related to specific features of the forest species when compared to the microscopic sample. Moreover, when the whole acquisition process is done in the field, as proposed in this paper, an additional loss of quality can be observed (see Fig. 1c). Basically, it results from the use of less expensive devices and rough tools (saws, sandpaper) in the cutting process. These tools are usually responsible for additional noise in the form of different kinds of marks on the wood.

Tou et al. [15–17] have reported two forest species classification experiments using macroscopic images in which texture features are used to train a neural network classifier. They report recognition rates ranging from 60 to 72 % for five different forest species. Khalid et al. [18] have proposed a system to recognize 20 different Malaysian forest species. Image acquisition is performed with a high-performance industrial camera and LED array lighting. Like Tou et al., the recognition process is based on a neural network trained with textural features. The database used in their experiments contains 1,753 images for training, and only 196 for testing. They report a recognition rate of 95 %. In our preliminary work [7], we have proposed a database composed of 22 different species of Brazilian flora and used Gray-Level Co-occurrence Matrix (GLCM) and color-based features to classify forest species. Our best result, 80.8 % of recognition rate, was achieved by combining GLCM and color-based features. A major challenge to pursue research involving forest species classification is the lack of a consistent and reliable database. Part of this gap was filled by Martins et al. [12] by making available a database of microscopic images composed of 112 species.

In light of this, the contribution of this work is twofold. First, we extend the database of macroscopic images used in [7], which now contains 41 species of the Brazilian flora. This database has been built in collaboration with the Laboratory of Wood Anatomy at the Federal University of Parana (UFPR) in Curitiba, Brazil, and it is available upon request for research purposes. The database introduced in this work makes future benchmarking and evaluation possible. Second, we propose a two-level divide-and-conquer classification strategy in which the image is first divided into several sub-images that are classified independently. At the lower level, the decisions of the different classifiers, trained with several different families of textural descriptors (structural, spectral, and statistical), are combined through a fusion rule to generate a decision for each sub-image. Thereafter, the higher-level fusion combines these partial decisions for the sub-images to produce a final decision.

The proposed classification scheme is inspired by different ensemble methods available in the literature [20–24]; its novelty is the two-level divide-and-conquer strategy. The rationale is to deal better with the complexity of the classification problem. To this end, the first level reduces that complexity by submitting only sub-problems, i.e., sub-images representing small regions of the forest species, to the ensemble of classifiers. Local decisions are made by diverse classifiers trained on different feature sets, while in the second level these local decisions are combined, which characterizes a bottom-up solution to the problem.

Comprehensive experiments using a Support Vector Machine (SVM) classifier show that the proposed strategy achieves compelling results. To better understand each level of the system, the two levels were evaluated independently. Compared to the best single classifier, which is an SVM trained with a texture-based feature set, the divide-and-conquer strategy improves the recognition rate by about 9 percentage points. The best recognition rate achieved in this work was 97.77 %.

This paper is structured as follows: Sect. 2 introduces the proposed database. Section 3 describes the different families of features we have used to train the classifiers. Section 4 outlines the proposed method to classify forest species, while Sect. 5 reports our experiments and discusses our results. Finally, Sect. 6 concludes the work.

2 Database

The database introduced in this work contains 41 different forest species of the Brazilian flora which were cataloged by the Laboratory of Wood Anatomy at the Federal University of Parana (UFPR) in Curitiba, Brazil.

Our goal was to define an acquisition protocol that could be used in the field. In this way, one could rely on the automatic identification system to get the results on the fly without sending the wood samples to the laboratory. After some attempts, we arrived at a simple design, which is depicted in Fig. 2a. It contains two halogen lamps positioned on the sides while the wood sample is positioned at the bottom of the box. This configuration provides indirect light and highlights the characteristics of the wood sample (Fig. 2b).

The camera is positioned perpendicularly to the wood sample. The distance from the lens to the sample is \(\approx 1\,{\hbox {cm}}\). The database was collected using a Sony DSC T20 with the macro function activated. The resulting images are then saved in JPG format with no compression and a resolution of \(3{,}264 \times 2{,}448\) pixels.

To date, 2,942 macroscopic images have been acquired and carefully labeled by experts in wood anatomy. Table 1 describes the 41 species in the database as well as the number of images available for each species. The proposed database is presented in such a way as to allow work to be performed on different problems with different numbers of classes. For the experimental protocol, we suggest the following: 35 % for training, 15 % for validation, and 50 % for testing.

Figure 3 shows one example of each species of the database. Differently from the microscopic database image presented in [12], where the color could not be used because of the dye used to produce contrast, in this case color is a useful characteristic that we can rely on to better discriminate the species.

3 Features

In this section, we briefly describe all the feature sets we used to train the classifiers. It can be observed from Fig. 3 that texture and color seem to be the most discriminative features for these images. They are represented here by the use of statistical, structural, and spectral descriptors usually suggested in the literature as the most suitable approaches to represent texture and color. The rationale behind that is the possible combination of complementary information from different families of features. Table 2 summarizes all the features used in our experiments. All feature vectors were normalized in the interval \([-1, 1]\) according to the min–max rule.
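The min–max rule mentioned above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, and it assumes that each feature (column) is scaled independently and that a constant feature is mapped to the midpoint of the interval. In practice the minimum and maximum would typically be estimated on the training set only and reused for validation and test vectors.

```python
def minmax_scale(column, lo=-1.0, hi=1.0):
    """Linearly map the values of one feature to the interval [lo, hi]."""
    cmin, cmax = min(column), max(column)
    if cmax == cmin:
        # Constant feature (assumption): map every value to the midpoint.
        return [(lo + hi) / 2.0 for _ in column]
    scale = (hi - lo) / (cmax - cmin)
    return [lo + (v - cmin) * scale for v in column]


def normalize_features(vectors):
    """Scale each feature (column) of a list of feature vectors independently."""
    columns = [minmax_scale(list(col)) for col in zip(*vectors)]
    return [list(row) for row in zip(*columns)]
```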

3.1 Color features

Three different color representations were assessed in this work. The first two were based on the work presented by Zhu et al. [25]. In such a case, the image is partitioned into \(3 \times 3\) grids. For each grid, we extract three kinds of color moments: mean, variance, and skewness from each color channel (R, G, and B), respectively. Thus, an 81-dimensional grid color moment vector is adopted as feature set. The same procedure is carried out using the Lab color model generating a second feature vector, which is also composed of 81 components.
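As an illustration of how the 81 components arise (9 grid cells × 3 channels × 3 moments), the sketch below computes grid color moments for an image stored as rows of `(R, G, B)` tuples. It is only a sketch under stated assumptions: the function names are hypothetical, skewness is taken as the standardized third central moment (other definitions exist), and cell borders are obtained by integer division.

```python
def moments(values):
    """Mean, variance and skewness (standardized third moment) of a list."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    if var == 0:
        return mean, 0.0, 0.0  # flat region: skewness is defined as 0 here
    skew = sum((v - mean) ** 3 for v in values) / n / var ** 1.5
    return mean, var, skew


def grid_color_moments(image, grid=3):
    """image[y][x] is a 3-channel pixel tuple; returns grid*grid*3*3 features."""
    h, w = len(image), len(image[0])
    features = []
    for gy in range(grid):
        for gx in range(grid):
            rows = range(gy * h // grid, (gy + 1) * h // grid)
            cols = range(gx * w // grid, (gx + 1) * w // grid)
            for ch in range(3):
                vals = [image[y][x][ch] for y in rows for x in cols]
                features.extend(moments(vals))
    return features
```

The same function applies to the Lab representation once the pixels have been converted, since it does not depend on the meaning of the three channels.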

The third is similar to the one presented in [7]. To calculate it, we have used a more perceptive approach to define the color features. The choice of which features to be used was done by an empirical analysis of histograms collected from channels of different color spaces. The idea was to choose those channels that maximize the inter-class and minimize the intra-class variance, respectively. By the end of this analysis, we have chosen to use the following color channels: green (G) from RGB, saturation (S) from HSV, and luminance (L) from CIELUV.

We also have noticed that different classes have the information concentrated in certain zones of the histogram. To take advantage of this fact, we decided to implement a local analysis by dividing the histogram into two zones and then extracting one feature vector from each zone. The first zone covers those pixels from 0 to 200 while the second zone covers those from 201 to 255. From each histogram three characteristics based on first-order statistics were calculated: average, kurtosis, and skewness. Considering the three color channels mentioned before and the zoning strategy used, in the end we have a feature vector composed of 18 (3 features \(\times \) 3 histograms \(\times \) 2 zones) components.
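The zoning strategy can be sketched as below. The text does not fully specify how the statistics are computed, so this sketch assumes that they are taken over the pixel intensities that fall into each zone, that every channel has been rescaled to 0–255, and that an empty or constant zone yields zeros. All function names are hypothetical.

```python
def zone_stats(values):
    """Average, kurtosis and skewness of the intensities in one zone."""
    n = len(values)
    if n == 0:
        return [0.0, 0.0, 0.0]
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    if var == 0:
        return [mean, 0.0, 0.0]
    kurt = sum((v - mean) ** 4 for v in values) / n / var ** 2
    skew = sum((v - mean) ** 3 for v in values) / n / var ** 1.5
    return [mean, kurt, skew]


def zoned_color_features(channels, split=200):
    """channels: three flat lists of intensities (e.g. G, S and L).

    Zone 1 covers values 0..split, zone 2 covers split+1..255.
    Returns 3 statistics x 2 zones per channel (18 values for 3 channels).
    """
    features = []
    for pixels in channels:
        low = [p for p in pixels if p <= split]
        high = [p for p in pixels if p > split]
        features += zone_stats(low) + zone_stats(high)
    return features
```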

3.2 GLCM

The Gray-Level Co-occurrence Matrix (GLCM) is a widely used approach to textural analysis. A GLCM is the joint probability of occurrence of gray levels \(i\) and \(j\) within a defined spatial relation in an image. That spatial relation is defined in terms of a distance \(d\) and an angle \(\theta \). Given a GLCM, some statistical information can be extracted from it. The most common descriptors were proposed by Haralick [26] and have been successfully used in several application domains. In this work, we have used the following seven descriptors, which provided the best preliminary results: energy, contrast, entropy, homogeneity, maximum probability, correlation, and third-order moment. Considering that these features were computed for four different angles (\(\theta = 0^{\circ }, 45^{\circ }, 90^{\circ }, 135^{\circ }\)) and \(d=1\), the resulting feature vector is composed of 28 attributes (7 descriptors \(\times \) 4 angles).
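A minimal sketch of the GLCM computation for \(d=1\) and the four angles is given below. It is illustrative only: it assumes a non-symmetric matrix, an image already quantized to `levels` gray levels, and one common form of each descriptor (for example, homogeneity as the inverse difference moment). Correlation and the third-order moment are omitted for brevity, so the sketch yields 5 × 4 = 20 values rather than the 28 used in this work.

```python
import math

# (row, col) steps for distance d = 1 at each angle, in degrees.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm(image, angle, levels):
    """Normalized co-occurrence matrix of a 2D list of integer gray levels."""
    dy, dx = OFFSETS[angle]
    h, w = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for y in range(h):
        for x in range(w):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < h and 0 <= x2 < w:
                counts[image[y][x]][image[y2][x2]] += 1
                total += 1
    return [[c / total for c in row] for row in counts]


def glcm_descriptors(p):
    """Energy, contrast, entropy, homogeneity and maximum probability."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    energy = sum(v * v for _, _, v in cells)
    contrast = sum((i - j) ** 2 * v for i, j, v in cells)
    entropy = -sum(v * math.log(v) for _, _, v in cells if v > 0)
    homogeneity = sum(v / (1 + (i - j) ** 2) for i, j, v in cells)
    max_prob = max(v for _, _, v in cells)
    return [energy, contrast, entropy, homogeneity, max_prob]


def glcm_features(image, levels):
    """Concatenate the descriptors of the four angle-specific matrices."""
    feats = []
    for angle in (0, 45, 90, 135):
        feats += glcm_descriptors(glcm(image, angle, levels))
    return feats
```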

3.3 Gabor filters

Like GLCM, Gabor filters are among the most widely used textural descriptors in the literature, especially for face and facial expression recognition [13, 27]. In this work, the Gabor wavelet transform is applied to the scaled image with 5 scales and 8 orientations using a mask of \(64 \times 64\) pixels (Fig. 4), which results in 40 sub-images. For each sub-image, 3 moments are calculated: mean, variance, and skewness. Thus, a 120-dimensional vector (40 sub-images \(\times \) 3 moments) is used for Gabor textural features.
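The structure of this descriptor (5 scales × 8 orientations × 3 moments = 120 values) can be sketched as follows. The filter parameters are not given in the text, so the wavelength and sigma schedule below are assumptions, as are the function names, the use of the response magnitude, and zero padding at the borders. A small odd mask size is used instead of \(64 \times 64\) so that the pure-Python loops stay fast.

```python
import cmath
import math


def gabor_kernel(scale, orientation, size, n_orientations=8):
    """Complex Gabor mask; wavelength and sigma schedule are assumptions."""
    theta = orientation * math.pi / n_orientations
    wavelength = 4.0 * math.sqrt(2.0) ** scale
    sigma = 0.5 * wavelength
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            along = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            row.append(envelope * cmath.exp(2j * math.pi * along / wavelength))
        kernel.append(row)
    return kernel


def response_magnitudes(image, kernel):
    """Magnitude of the filter response at every pixel (zero padding)."""
    h, w, k = len(image), len(image[0]), len(kernel)
    half = k // 2
    out = []
    for y in range(h):
        for x in range(w):
            acc = 0j
            for ky in range(k):
                for kx in range(k):
                    yy, xx = y + ky - half, x + kx - half
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += image[yy][xx] * kernel[ky][kx]
            out.append(abs(acc))
    return out


def three_moments(values):
    """Mean, variance and skewness (0 for a constant response)."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    if var == 0:
        return [mean, 0.0, 0.0]
    skew = sum((v - mean) ** 3 for v in values) / n / var ** 1.5
    return [mean, var, skew]


def gabor_features(image, scales=5, orientations=8, size=5):
    """3 moments for each of the scales * orientations filtered images."""
    feats = []
    for s in range(scales):
        for o in range(orientations):
            kernel = gabor_kernel(s, o, size, orientations)
            feats.extend(three_moments(response_magnitudes(image, kernel)))
    return feats
```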

3.4 Local binary patterns (LBP)

The original LBP proposed by Ojala et al. [28] labels the pixels of an image by thresholding a \(3 \times 3\) neighborhood of each pixel with the center value and considering the results as a binary number. The 256-bin histogram of the LBP labels computed over a region is used as texture descriptor. Figure 5 illustrates this process.
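The basic operator can be sketched in a few lines. The bit ordering of the eight neighbours and the use of `>=` for the threshold are conventions assumed here (implementations differ on both), and border pixels are simply skipped.

```python
# Clockwise neighbour offsets (row, col), starting at the top-left pixel.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, y, x):
    """8-bit LBP label of pixel (y, x) from its 3x3 neighbourhood."""
    center = image[y][x]
    code = 0
    for bit, (dy, dx) in enumerate(NEIGHBOURS):
        if image[y + dy][x + dx] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP labels over all interior pixels."""
    hist = [0] * 256
    for y in range(1, len(image) - 1):
        for x in range(1, len(image[0]) - 1):
            hist[lbp_code(image, y, x)] += 1
    return hist
```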

The limitation of the basic LBP operator is its small neighborhood, which cannot capture the dominant features of large-scale structures. To overcome this problem, the operator was extended to cope with bigger neighborhoods [29]. Using circular neighborhoods and bilinearly interpolating the pixel values allows any radius and any number of pixels in the neighborhood. Figure 6 exemplifies the extended LBP operator, where \((P,R)\) stands for a neighborhood of \(P\) equally spaced sampling points on a circle of radius \(R\) that form a circularly symmetric neighbor set.

The LBP operator LBP\(_{P,R}\) produces \(2^P\) different output values, corresponding to the \(2^P\) different binary patterns that can be formed by the \(P\) pixels in the neighbor set. However, certain bins contain more information than others; hence, it is possible to use only a subset of the \(2^P\) LBPs. Those fundamental patterns are known as uniform patterns. An LBP is called uniform if it contains at most two bitwise transitions from 0 to 1 or vice versa when the binary string is considered circular. For example, 00000000, 00111000 and 11100001 are uniform patterns. It is observed that uniform patterns account for nearly 90 % of all patterns in the (8, 1) neighborhood and for about 70 % in the (16, 2) neighborhood in texture images [29].

Accumulating the patterns that have more than two transitions into a single bin yields an LBP operator, denoted LBP\(^{u2}_{P,R}\), with fewer than \(2^P\) bins. For example, the number of labels for a neighborhood of 8 pixels is 256 for the standard LBP but 59 for LBP\(^{u2}\). Thereafter, a histogram of the frequency of the different labels produced by the LBP operator can be built.
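The bin counts quoted for the uniform operator can be checked with a short sketch. A pattern is compared with its own circular rotation by one bit, and the number of differing positions is the number of circular transitions. The function names are hypothetical.

```python
def transitions(pattern, bits):
    """Number of 0/1 changes when the bit string is read circularly."""
    rotated = (pattern >> 1) | ((pattern & 1) << (bits - 1))
    return bin(pattern ^ rotated).count("1")


def is_uniform(pattern, bits):
    """True if the pattern has at most two circular transitions."""
    return transitions(pattern, bits) <= 2


def u2_bins(bits):
    """Uniform patterns get one bin each; all others share one extra bin."""
    return sum(1 for p in range(2 ** bits) if is_uniform(p, bits)) + 1
```

With these definitions, `u2_bins(8)` gives 59 and `u2_bins(16)` gives 243, which matches the feature vector sizes of the \(P=8\) and \(P=16\) configurations.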

In our experiments, five different configurations of the LBP operator were considered: LBP\(^{u2}_{8,1}\), LBP\(^{u2}_{8,2}\), LBP\(^{u2}_{16,1}\), LBP\(^{u2}_{16,2}\), and LBP-HF (Histogram Fourier) [30]. The first two produce a feature vector of 59 components, while the third and fourth produce a feature vector of 243 components. The last one, LBP-HF, which is computed from discrete Fourier transforms, is composed of 38 components.

3.5 Fractals

The fractal concept introduced by Mandelbrot [31] provides an interesting textural descriptor since it is able to capture the raggedness of natural surfaces. Many fractal features have been defined in the literature. The three most common are: fractal dimension [32], lacunarity [33], and succolarity [34].

The fractal dimension [31, 32] is the simplest measure in the fractal theory and it can be computed in several different ways. In this work, we use the fractal box counting dimension, which represents the growth in the number of grid cubes intersected by \(X\) as a function of the grid size.
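To make the idea concrete, the sketch below estimates a box-counting dimension for a binary 2D mask as the slope of \(\log N(s)\) against \(\log (1/s)\), where \(N(s)\) is the number of boxes of side \(s\) that contain at least one foreground pixel. This is a simplification and an assumption on our part: gray-level images are usually handled with cubes in the intensity dimension, and the box sizes and function names used here are only illustrative.

```python
import math


def box_count(mask, size):
    """Number of size x size boxes containing at least one non-zero pixel."""
    h, w = len(mask), len(mask[0])
    count = 0
    for y0 in range(0, h, size):
        for x0 in range(0, w, size):
            if any(mask[y][x]
                   for y in range(y0, min(y0 + size, h))
                   for x in range(x0, min(x0 + size, w))):
                count += 1
    return count


def box_counting_dimension(mask, sizes=(1, 2, 4)):
    """Least-squares slope of log N(s) versus log(1/s).

    The mask must contain at least one foreground pixel.
    """
    xs = [math.log(1.0 / s) for s in sizes]
    ys = [math.log(box_count(mask, s)) for s in sizes]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den
```

As a sanity check, a completely filled square gives a dimension of 2 and a single straight line of pixels gives a dimension of 1.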

Voss [33] demonstrated that the fractal dimension characterizes only part of the information available in the object. To fill this gap, the author proposed another measure, called lacunarity, which is a measure that characterizes the way in which the fractal set occupies the available topological space. It is a mass distribution function by definition.

Still looking for some complementary information in the fractal theory, Melo and Conci [34] proposed the measure of succolarity, which gives the percolation degree of an image, i.e., how much a given fluid can flow through this image.

The fractal features were extracted using the same channels used for the color features, i.e., green (G) from RGB, saturation (S) from HSV, and luminance (L) from CIELUV. In the case of the fractal dimension, it was computed for three different sizes (2, 3, and 5). This gives us 9 features (three for each channel). The lacunarity was computed for each channel (three components) and the succolarity was computed in four different directions for each channel (top–down, bottom–up, left–right and right–left), summing up 12 components. The final feature vector contains 24 components.

3.6 Edge histograms

An edge orientation histogram is extracted for each image. For this purpose, the color space of the input macroscopic image is first reduced to 256 gray levels. Then, Sobel operators (\(K_x\) and \(K_y\)) are used to detect the horizontal and vertical edges, as defined by Eq. 1. With the results, it is possible to calculate the orientation of each edge using Eq. 2. After that, a threshold (\(T\)) is applied to \(G(x,y)\) as a filter to eliminate irrelevant information, as defined by Eq. 3. Finally, as in [35], the orientations are divided into \(K\) bins and the value of each bin is computed through Eq. 4.

In this work, the edge orientation histogram is quantized into 36 bins of 10 degrees each. An additional bin is used to count the number of pixels without edge information. Hence, a 37-dimensional vector is used for shape features.
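The steps above can be sketched as follows for a gray-level image stored as a 2D list. The sketch assumes the usual \(3 \times 3\) Sobel masks, the Euclidean gradient magnitude for \(G(x,y)\), orientations measured over the full \(360^{\circ }\) range (which is what 36 bins of 10 degrees imply), and raw counts without normalization; the exact forms used in Eqs. 1–4 may differ. Border pixels are skipped.

```python
import math

KX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient
KY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient


def edge_orientation_histogram(gray, threshold, bins=36):
    """Returns bins + 1 counts; the last bin holds pixels without an edge."""
    hist = [0] * (bins + 1)
    width = 360.0 / bins
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            gx = sum(KX[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(KY[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            if math.hypot(gx, gy) <= threshold:
                hist[bins] += 1          # magnitude too small: no edge
            else:
                angle = math.degrees(math.atan2(gy, gx)) % 360.0
                hist[min(int(angle // width), bins - 1)] += 1
    return hist
```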

3.7 Local phase quantization

The Local phase quantization (LPQ) [36] is based on the blur invariance property of the Fourier phase spectrum. It has been shown to be robust in terms of blur and according to [37] it outperforms LBP in texture classification. The local phase information of an \(N \times N\) image \(f(x)\) is extracted by the 2D DFT [short-term Fourier transform (STFT)]

The filter \(\Phi _\mathbf{u _i}\) is a complex valued \(m \times m\) mask, defined in the discrete domain by

where \(r= (m-1)/2\), and \(\mathbf u _i\) is a 2D frequency vector. In LPQ only four complex coefficients are considered, corresponding to 2D frequencies \(\mathbf u _1 = [a,0]^T\), \(\mathbf u _2 = [0,a]^T\), \(\mathbf u _3 = [a,a]^T\), and \(\mathbf u _4 = [a,-a]^T\), where \(a = 1/m\). For the sake of convenience, the STFT presented in Eq. 5 is expressed using the vector notation presented in Eq. 7

where \(\mathbf w _\mathbf{u }\) is the basis vector of the STFT at frequency \(\mathbf u \) and \(\mathbf f (\mathbf x )\) is a vector of length \(m^2\) containing the image pixel values from the \(m \times m\) neighborhood of \(\mathbf x \).

Let

denote an \(m^2 \times N^2\) matrix that comprises the neighborhoods for all the pixels in the image and let

where \(\mathbf w _R = Re\{[\mathbf w _\mathbf{u _1}, \mathbf w _\mathbf{u _2},\mathbf w _\mathbf{u _3},\mathbf w _\mathbf{u _4}]\}\) and \(\mathbf w _I = Im\{[\mathbf w _\mathbf{u _1}, \mathbf w _\mathbf{u _2},\mathbf w _\mathbf{u _3},\mathbf w _\mathbf{u _4}]\}\). In this case, \(Re\{\cdot \}\) and \(Im\{\cdot \}\) return the real and imaginary parts of a complex number, respectively.

The corresponding \(8 \times N^2\) transformation matrix is given by

In [36], the authors assume that the image function \(f(\mathbf x )\) is the result of a first-order Markov process, where the correlation coefficient between two pixels \(x_i\) and \(x_j\) is exponentially related to their \(L^2\) distance. Without loss of generality, they define each pixel to have unit variance. For the vector \(\mathbf f \), this leads to an \(m^2 \times m^2\) covariance matrix \(C\) with elements given by

where \(||\cdot ||\) stands for the \(L_2\) norm. The covariance matrix of the Fourier coefficients can be obtained from

Since \(D\) is not a diagonal matrix, i.e., the coefficients are correlated, they can be decorrelated using the whitening transformation \(E = V^T \hat{F}\) where V is an orthogonal matrix derived from the singular value decomposition (SVD) of the matrix D that is

The whitened coefficients are then quantized using

where \(e_{i,j}\) are the components of \(E\). The quantized coefficients are represented as integer values from 0–255 using binary coding

Finally, a histogram of these integer values from all the image positions is composed and used as a 256-dimensional feature vector in classification.
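For reference, the chain of operations described in this section can be written compactly as below. This is a sketch that assumes the standard LPQ formulation of [36] and the notation of the surrounding text; the symbol \(\rho \) for the correlation coefficient between adjacent pixels and the one-based bit index in the binary coding are assumptions. Here \(F\) is \(m^2 \times N^2\), \(\mathbf w \) is \(8 \times m^2\), and \(D\) is \(8 \times 8\).

```latex
\begin{align*}
\hat{f}_{\mathbf{u}_i}(\mathbf{x}) &= (f * \Phi_{\mathbf{u}_i})(\mathbf{x})
  = \mathbf{w}_{\mathbf{u}_i}^{T}\,\mathbf{f}(\mathbf{x}), \\
F &= [\mathbf{f}(\mathbf{x}_1), \ldots, \mathbf{f}(\mathbf{x}_{N^2})], \qquad
  \mathbf{w} = [\mathbf{w}_R, \mathbf{w}_I]^{T}, \qquad
  \hat{F} = \mathbf{w} F, \\
C_{i,j} &= \rho^{\,\|x_i - x_j\|}, \qquad
  D = \mathbf{w}\, C\, \mathbf{w}^{T} = U \Sigma V^{T}, \qquad
  E = V^{T} \hat{F}, \\
q_{i,j} &= \begin{cases} 1, & e_{i,j} \ge 0 \\ 0, & \text{otherwise} \end{cases}
  \qquad
  b_j = \sum_{i=1}^{8} q_{i,j}\, 2^{\,i-1}.
\end{align*}
```

Since each pixel position \(j\) yields eight binary values \(q_{1,j}, \ldots , q_{8,j}\), the code \(b_j\) ranges over 0 to 255, which is consistent with the 256-bin histogram.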

3.8 Completed local binary pattern

Different variants of the LBP have been proposed in the literature with the objective of improving this local texture descriptor. The derivative-based LBP [38], the dominant LBP [39], the center-symmetric LBP [40] and the completed LBP (CLBP) [41] are recent and good examples of contributions in this direction. In particular, the CLBP method provides a completed modeling of the LBP, which is based on three components extracted from a local region and coded by proper operators. Similar to LBP, for a given local region, it calculates the difference between the center pixel \(g_c\) and each of its \(P\) circularly and evenly spaced neighbors \(g_p\) located on a circle of radius \(R\), defined as \(d_p = g_p - g_c\). However, here the difference \(d_p\) is decomposed into two components: sign, which is defined as \(s_p = sign(d_p)\), and magnitude, which is defined as \(m_p = \left| {d_p}\right| \). Finally, the complete descriptor is composed of the center gray level (\(C\)) and of these two components derived from the local difference, sign (\(S\)) and magnitude (\(M\)).

The operator related to the component \(C\), named CLBP_C, is defined in Eq. 16, where \(c_I\) corresponds to the average gray level of the whole image.

The operator defined to code the component \(S\) (named CLBP_S) corresponds to the original LBP operator, while the operator defined to code the component \(M\) (CLBP_M) is presented in Eq. 17. In this case, the threshold \(c\) is originally defined as the mean value of \(m_p\) from the whole image.
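The three codes can be sketched for a single neighborhood as follows. The sketch assumes the thresholding convention \(t(x, c) = 1\) if \(x \ge c\) and 0 otherwise for all three operators, takes the already sampled neighbors \(g_p\) as input (interpolation on the circle is left out), and uses a hypothetical function name.

```python
def clbp_codes(center, neighbours, magnitude_threshold, image_mean):
    """Return (CLBP_S, CLBP_M, CLBP_C) for one local region.

    center:              gray level g_c of the center pixel
    neighbours:          gray levels g_p of the P sampled neighbors
    magnitude_threshold: threshold c, e.g. the mean of m_p over the image
    image_mean:          average gray level c_I of the whole image
    """
    s_code = 0
    m_code = 0
    for p, g in enumerate(neighbours):
        d = g - center                       # local difference d_p
        if d >= 0:                           # sign component s_p
            s_code |= 1 << p
        if abs(d) >= magnitude_threshold:    # magnitude component m_p
            m_code |= 1 << p
    c_code = 1 if center >= image_mean else 0
    return s_code, m_code, c_code
```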

See [41] for more details about the rotation invariant version of CLBP_M and different schemes to combine the histograms of codes provided by each operator CLBP_C, CLBP_S, and CLBP_M. After evaluating the different configurations suggested in [41], the best results observed in our experiments were obtained with the combination of all components (SMC) using a 3D histogram, while the best values for the parameters P and R, were 24 and 5, respectively.

4 Proposed method

Figure 7 shows a general overview of the proposed method, in which the forest species classification is done based on a divide-and-conquer idea. For this purpose, the input image is divided into \(n\) non-overlapping sub-images with identical sizes, which are themselves smaller instances of the same type of problem. These \(n\) sub-images are then classified by \(k\) different classifiers and then two different levels of fusion, which we call low- and high-level, are used to produce a final decision. Basically, the proposed method classifies the whole image sample throughout the classification of its parts or sub-images.
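The splitting step can be sketched as below for an image stored as a 2D list. The sketch assumes that \(n\) is a perfect square, that the sub-images form a regular \(\sqrt{n} \times \sqrt{n}\) grid, and that leftover rows and columns are discarded when the image size is not divisible by the grid side; the function name is hypothetical.

```python
def split_image(image, n):
    """Divide an image into n non-overlapping sub-images of identical size."""
    side = int(round(n ** 0.5))
    if side * side != n:
        raise ValueError("n must be a perfect square (4, 9, 25, ...)")
    h, w = len(image), len(image[0])
    th, tw = h // side, w // side            # sub-image height and width
    tiles = []
    for gy in range(side):
        for gx in range(side):
            rows = image[gy * th:(gy + 1) * th]
            tiles.append([row[gx * tw:(gx + 1) * tw] for row in rows])
    return tiles
```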

The fusion scheme based on two levels is used to explore the combination of diverse classifiers trained on complementary color and textural information in the low level, and to deal with the inherent intra-class problem variability by combining the results of the different sub-images in the high level.

Examples of this intra-class variability are shown in Fig. 8. They usually represent local problems in the image samples. For instance, Fig. 8a shows changes in color intensity caused by transitions between heartwood and sapwood, or by growth rings in the wood. Figure 8b shows some marks produced by improper handling of the wood samples, while Fig. 8c shows an ill-prepared sample in which one may see the saw marks.

The motivation behind the proposed strategy is that splitting the images into smaller ones is an interesting way to cope with such variability, since smaller images tend to be more homogeneous and hence pose an easier classification problem. The best number of sub-images is a parameter of the system and is investigated in our experiments. The low-level fusion combines the outputs of the \(k\) different classifiers. The literature has shown that such a combination can considerably improve the results when complementary classifiers are used [21]. In our case, the complementarity comes from the different families of descriptors used to characterize the texture and colors of the forest species.

In this paper, we are dealing with a 41-class classification problem. Multiple experts, based on different descriptors, produce as output an estimate of the a posteriori probabilities \(P(\omega _k|x_i)\), where \(\omega _k\) represents a possible class and \(x_i\) is the input pattern of the \(i\)-th expert. We tried out several fixed fusion rules when developing our framework, but the best results were always achieved using the Sum rule [20]. This is represented by Eq. 18, where \(N\) and \(m\) represent the number of classifiers (\(N=12\)) and the number of possible classes (\(m=41\)), respectively.
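As a sketch of the low-level fusion, the Sum rule can be implemented as below, assuming its usual form: the winning class is the one that maximizes \(\sum _{i=1}^{N} P(\omega _k|x_i)\) over \(k = 1, \ldots , m\). The function name and the toy probabilities in the usage note are hypothetical.

```python
def sum_rule(posteriors):
    """Combine classifier outputs with the Sum rule.

    posteriors[i][k] is the estimate of P(w_k | x_i) given by classifier i.
    Returns the index of the winning class and the summed scores.
    """
    m = len(posteriors[0])
    scores = [sum(p[k] for p in posteriors) for k in range(m)]
    winner = max(range(m), key=scores.__getitem__)
    return winner, scores
```

For instance, with three classifiers and three classes, `sum_rule([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.5, 0.1, 0.4]])` selects class index 0, whose summed score (about 1.3) is the largest, even though the second classifier alone prefers class index 1.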

Finally, we perform the high-level fusion, where the decisions for the \(n\) pieces of the original image are combined to produce a final decision. We exemplify this process in Fig. 9a, where the image was divided into 9 sub-images. In this example, we assume that we have a 3-class problem. The result of the low-level fusion is a list of classes with their estimated a posteriori probabilities (Fig. 9b). In this example, the sample depicted in Fig. 9a belongs to class 1; therefore, sub-images 1, 8, and 9 were misclassified. As we may notice, sub-image 1 is lighter than the average while sub-images 8 and 9 are darker, which could contribute to the misclassification. But since most of the sub-images were correctly classified, the high-level fusion is able to produce the correct decision (class 1). In this case, the Sum rule was used, but other rules would produce the same result.
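The high-level fusion can be sketched in the same way, this time summing the fused scores of the sub-images. The values used in the usage note are hypothetical and are not those of Fig. 9b; a simple majority vote is included only to illustrate that, in a case like this one, another rule leads to the same decision.

```python
from collections import Counter


def high_level_sum(sub_image_scores):
    """Sum rule over sub-images; sub_image_scores[s][k] is the fused score
    of class k for sub-image s. Returns the index of the winning class."""
    totals = [sum(column) for column in zip(*sub_image_scores)]
    return max(range(len(totals)), key=totals.__getitem__)


def high_level_vote(sub_image_scores):
    """Majority vote over the per-sub-image winners (alternative rule)."""
    winners = [max(range(len(s)), key=s.__getitem__) for s in sub_image_scores]
    return Counter(winners).most_common(1)[0][0]
```

With six sub-images scored `[0.7, 0.2, 0.1]` and three misclassified sub-images scored `[0.2, 0.5, 0.3]`, both functions return class index 0, i.e., the errors on the three sub-images are outvoted by the remaining six.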

5 Experimental results and discussion

In all experiments, Support Vector Machines (SVM) were used as classifiers. As stated in Sect. 2, the database was divided into training (35 %), validation (15 %), and testing (50 %). Different kernels were tried out, but the best results were achieved using a Gaussian kernel. Parameters \(C\) and \(\gamma \) were determined through a grid search with hold-out validation, using the training set to train SVM parameters and the validation set to evaluate the performance.

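For all reported results, we used the following definitions of the recognition rate and error rate. Let \(B\) be a test set with \(N_B\) images. If the recognition system classifies \(N_{rec}\) images correctly and misclassifies the remaining \(N_{err}\), then

\[ \hbox {Recognition rate} = \frac{N_{rec}}{N_B} \times 100, \qquad \hbox {Error rate} = \frac{N_{err}}{N_B} \times 100 . \]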

One of the limitations with SVMs is that they do not work in a probabilistic framework. There are several situations where it would be very useful to have a classifier producing a posteriori probability \(P(class|input)\). In our case, as depicted in Fig. 7, we are interested in the estimation of probabilities because we want to try different fusion strategies like sum, max, min, average, and median. Due to the benefits of having classifiers estimating probabilities, many researchers have been working on the problem of estimating probabilities with SVM classifiers [42]. In this work, we have adopted the strategy proposed by Platt in [42].
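The core of Platt's method is a sigmoid that maps the raw SVM decision value \(f\) to a probability, \(P(y=1|f) = 1/(1+\exp (Af+B))\). A minimal sketch is given below; the parameters \(A\) and \(B\) are fitted on held-out data, which is not shown, and the values in the usage note are hypothetical. For a multi-class problem such as ours, the pairwise probabilities must additionally be coupled into class probabilities, which is also outside this sketch.

```python
import math


def platt_probability(decision_value, a, b):
    """Map an SVM decision value to P(y = 1 | f) with fitted parameters a, b."""
    return 1.0 / (1.0 + math.exp(a * decision_value + b))
```

With a negative slope such as `a = -2.0` and `b = 0.0`, a decision value of 0 maps to 0.5, and increasingly positive decision values map to probabilities approaching 1.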

To gain better insight into the proposed methodology, we divided the experiments into two parts. In the first part, which we call the baseline, the system contains only the low-level modules, i.e., the image is not divided into sub-images. In the second part, we added the high-level modules and analyzed the impact of the divide-and-conquer strategy.

5.1 Baseline system

Our baseline system is a traditional pattern recognition system composed of the following modules: Acquisition, Feature Extraction, and Classification. Table 3 reports the performance of the baseline system for all the feature sets presented in Sect. 3.

As one may see in Table 3, the most promising results were achieved by the classifiers trained with the color RGB, color LAB, and CLBP descriptors. The classifier trained on CLBP SMC with 24 neighbors and a radius equal to 5 provided the best recognition rate (88.60 %).

Following the strategy depicted in Fig. 7, we performed the low-level fusion, i.e., the results of the individual classifiers were combined. In this experiment, all classifiers were combined using brute force. As stated before, the best result was provided by the Sum rule. Table 4 reports the Top-5 combination results.
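A brute-force search of this kind can be sketched as below, assuming that "brute force" means evaluating every subset of two or more classifiers. The `evaluate` callback is hypothetical: it stands for any function that fuses the given classifiers with the Sum rule and returns a recognition rate on held-out data.

```python
from itertools import combinations


def candidate_ensembles(classifiers, min_size=2):
    """Yield every subset of the classifiers with at least min_size members."""
    for size in range(min_size, len(classifiers) + 1):
        yield from combinations(classifiers, size)


def best_ensemble(classifiers, evaluate):
    """Return the subset with the highest score given by evaluate(subset)."""
    return max(candidate_ensembles(classifiers), key=evaluate)
```

For the 12 classifiers mentioned in Sect. 4 this amounts to \(2^{12} - 12 - 1 = 4083\) candidate ensembles, which is small enough to enumerate exhaustively.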

Table 4 shows that the feature sets offer a certain level of complementarity. Their combination brought an improvement of more than 5.5 percentage points when compared to the best individual classifier (CLBP\(_{24,5}\)). It is worth remarking that such complementarity does not necessarily involve the classifiers with the highest individual recognition rates. A clear example is the Fractal feature set, which performs poorly on its own (about 46 %) but is present in three of the Top-5 ensembles.

5.2 Divide-and-conquer strategy

Having analyzed the performance of the baseline classifiers and their combination, let us focus on the two high-level modules (Image Splitting and Image Fusion) illustrated in Fig. 7. An important issue in this strategy is the number of sub-images that the Image Splitting module should generate. In this work we consider the following numbers of non-overlapping sub-images: \(\{4,9,25,36,49,64,100\}\). Table 5 presents the size in pixels of the sub-images for each configuration.

Following the same idea of assessing the modules step by step, in this experiment we first evaluate the impact of the high-level modules using single classifiers (\(k=1\)). Are the high-level modules capable of improving the results using a single classifier? To address this question we selected three different feature sets: a color-based one (Color RGB), a spectral one (Gabor), and a structural one (LBP\(_{8,1}\)). The classifiers were then retrained using larger training sets (original training set \(\times \) number of sub-images). The testing images are also divided into \(n\) sub-images and the final decision is obtained using the Sum rule.
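The splitting and high-level fusion steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: the image is a plain list of pixel rows whose dimensions are divisible by the grid size, and `toy_classifier` is a hypothetical stand-in for a trained classifier that returns one probability per class. None of these names come from the system described in this paper.

```python
def split_image(image, grid):
    """Split a 2-D image (list of rows) into grid x grid non-overlapping tiles.

    Assumes height and width are divisible by `grid`; any remainder
    pixels would be dropped.
    """
    height, width = len(image), len(image[0])
    tile_h, tile_w = height // grid, width // grid
    tiles = []
    for r in range(grid):
        rows = image[r * tile_h:(r + 1) * tile_h]
        for c in range(grid):
            tiles.append([row[c * tile_w:(c + 1) * tile_w] for row in rows])
    return tiles


def classify_image(image, grid, classify_tile):
    """Sum the per-tile class probabilities and return the winning class."""
    tile_scores = [classify_tile(tile) for tile in split_image(image, grid)]
    fused = [sum(column) for column in zip(*tile_scores)]
    return max(range(len(fused)), key=fused.__getitem__)


def toy_classifier(tile):
    """Hypothetical two-class stand-in: class 1 means 'bright' tile."""
    pixels = [p for row in tile for p in row]
    bright = sum(pixels) / (255 * len(pixels))
    return [1.0 - bright, bright]


# 6 x 6 toy image: four dark columns followed by two bright columns.
image = [[0] * 4 + [255] * 2 for _ in range(6)]
tiles = split_image(image, 3)  # n = 9 sub-images of 2 x 2 pixels
print(len(tiles), classify_image(image, 3, toy_classifier))
```

Because every sub-image casts its own vote, a few atypical regions of the image are outvoted by the majority, which is the intuition behind using the Sum rule at the higher level.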

Figure 10 shows the performance on the testing set for the three selected classifiers. The recognition rate of the color-based classifier increases with the number of sub-images. Its best performance, 91.2 %, was achieved when the original image was divided into 100 sub-images (10 \(\times \) 10 matrix). The other two classifiers reached their best performances using fewer sub-images. The Gabor-based classifier produced its best recognition rate with nine sub-images (3 \(\times 3\) matrix), while the LBP-based classifier achieved its best performance using 25 sub-images (5 \(\times 5\) matrix). Independently of the classifier, Fig. 10 clearly shows that the proposed strategy succeeds in improving the results of all classifiers. In addition, there is a best \(n\) for each feature set.

Our final experiment uses the full framework proposed in Sect. 4. To this end, we combined the outputs of the three aforementioned classifiers, i.e., \(k=3\), which yields four possible ensembles (Color RGB + Gabor; Color RGB + Gabor + LBP; Color RGB + LBP; Gabor + LBP). Figure 11 compares the performance of these ensembles for different numbers of sub-images. In this case, the best results were achieved using \(n=25\) and \(n=36\).

Regarding performance, all ensembles achieve a similar recognition rate of about 95 %, and there is no statistically significant difference among them. In that case, one should select a smaller ensemble with \(n=25\), thus reducing the computational cost of classification. Figure 11 also shows that beyond a certain point the performance of the system drops as \(n\) increases.

Table 6 shows the contribution of the proposed two-level divide-and-conquer scheme for each individual descriptor. The mean gain obtained with the proposed scheme is 18.65 percentage points. As expected, the most significant contributions of the proposed methodology were observed for the weak classifiers, e.g., the classifier trained with Edge descriptors, whose performance increased by about 41 percentage points. On the other hand, we also observed compelling improvements for our best classifier, the CLBP, where the gain was more than 7 percentage points (from 88.60 to 96.22 %).

The results for the combination of the classifiers trained on different descriptors are shown in Table 7. The recognition rate improves by 1.55 percentage points (from 96.22 to 97.77 %), which indicates some complementarity among the evaluated descriptors. It is worth emphasizing that CLBP, LBP, and Color RGB are present in all promising ensembles.

Finally, Table 8 summarizes the works on forest species recognition using macroscopic images that we have found in the literature. A direct comparison is not possible since a different database was used in each work. However, the table gives an idea of the state of the art in this field and shows that our results compare favorably to the literature, even though we use a low-cost acquisition process designed for direct use in the field.

6 Conclusion

In this work we have discussed the problem of automatic classification of forest species based on macroscopic images. We have introduced a two-level divide-and-conquer classification strategy where the input image is first divided into several sub-images which are classified independently. In the lower level, the decisions produced by the different classifiers are combined to generate a decision for each sub-image; in the higher level, these partial decisions are combined to yield a final decision. Our experiments have shown that the proposed method is able to deal with the great intra-class variability presented by the forest species, and that it increases the recognition rate by 9.17 percentage points when compared with the best single classifier, which is based on the CLBP descriptor. The mean gain in recognition rate observed for classifiers based on different descriptors was about 19 percentage points.

In addition, we extended our previous database to 41 species of the Brazilian flora. As stated before, it is available upon request for research purposes to make future benchmarking and evaluation possible. As future work, we plan to investigate other strategies for combining experts, including the dynamic selection of classifiers, as well as the selection of the best subset of features to perform the classification task.

References

Thomas, L., Milli, L.: A robust gm-estimator for the automated detection of external defects on barked hardwood logs and stems. IEEE Trans Sig Proc 55, 3568–3576 (2007)

Huber, H.A.: A computerized economic comparison of a conventional furniture rough mill with a new system of processing. For Prod J 21(2), 34–39 (1971)

Buechler DN, Misra DK (2001) Subsurface detection of small voids in low-loss solids. In: First ISA/IEEE conference sensor for industry, pp 281–284

Cavalin PR, Oliveira LS, Koerich A, Britto AS (2006) Wood defect detection using grayscale images and an optimized feature set. In: 32nd Annual conference of the IEEE industrial electronics society, Paris, France, pp 3408–3412

Vidal, J.C., Mucientes, M., Bugarin, A., Lama, M.: Machine scheduling in custom furniture industry through neuro-evolutionary hybridization. Appl Soft Comput 11, 1600–1613 (2011)

Piuri, V., Scotti, F.: Design of an automatic wood types classification system by using fluorescence spectra. IEEE Trans Syst Man Cybern Part C 40(3), 358–366 (2010)

Paula PL, Oliveira LS, Britto AS, Sabourin R (2010) Forest species recognition using color-based features. Int Conf Pattern Recognit, pp 4178–4181

Tsuchikawa, S., Hirashima, Y., Sasaki, Y., Ando, K.: Near-infrared spectroscopic study of the physical and mechanical properties of wood with meso- and micro-scale anatomical observation. Appl Spectrosc 59(1), 86–93 (2005)

Nuopponen, M.H., Birch, G.M., Sykes, R.J., Lee, S.J., Stewart, D.J.: Estimation of wood density and chemical composition by means of diffuse reflectance mid-infrared Fourier transform (drift-mir) spectroscopy. Agric Food Chem 54, 34–40 (2006)

Orton, C.R., Parkinson, D.Y., Evans, P.D.: Fourier transform infrared studies of heterogeneity, photodegradation, and lignin/hemicellulose ratios within hardwoods and softwoods. Appl Spectrosc 583, 1265–1271 (2004)

Lavine, B.K., Davidson, C.E., Moores, A.J., Griffiths, P.R.: Raman spectroscopy and genetic algorithms for the classification of wood types. Appl Spectrosc 55, 960–966 (2001)

Martins, J., Oliveira, L.S., Nisgoski, S., Sabourin, R.: A database for automatic classification of forest species. Mach Vis Appl 24(3), 567–578 (2013)

Zavaschi, T., Oliveira, L.S., Souza Jr, A.B., Koerich, A.: Fusion of feature sets and classifiers for facial expression recognition. Expert Syst Appl 40(2), 646–655 (2013)

Cavalin PR, Oliveira LS, Koerich A, Britto AS (2012) Combining textural descriptors for forest species recognition. In: 32nd Annual conference of the IEEE industrial electronics society, Montreal, Canada

Tou JY, Lau PY, Tay YH (2007) Computer vision-based wood recognition system. In: Proceedings of the international workshop on advanced image technology

Tou JY, Tay YH, Lau PY (2008) One-dimensional grey-level co-occurrence matrices for texture classification. In: International symposium on information technology (ITSim 2008), pp 1–6

Tou, J.Y., Tay, Y.H., Lau, P.Y.: A comparative study for texture classification techniques on wood species recognition problem. Int Conf Nat Comput 5, 8–12 (2009)

Khalid M, Lee ELY, Yusof R, Nadaraj M (2008) Design of an intelligent wood species recognition system. IJSSST 9(3)

Nasirzadeh M, Khazael AA, Khalid MB (2010) Woods recognition system based on local binary pattern. In: Second international conference on computational intelligence, communication systems and networks, pp 308–313

Kittler, J., Hatef, M., Duin, R.P.W., Matas, J.: On combining classifiers. IEEE Trans Pattern Anal Mach Intell 20, 226–239 (1998)

Kuncheva L (2004) Combining pattern classifiers: methods and algorithms. Wiley, New York

Džeroski, S., Ženko, B.: Is combining classifiers with stacking better than selecting the best one? Mach Learn 54(3), 255–273 (2004)

Dong Y-S, Han K-S (2005) Boosting SVM classifiers by ensemble. In: Special interest tracks and posters of the 14th International Conference on World Wide Web. ACM, p 1073

Rokach, L.: Ensemble-based classifiers. Artif Intell 33(1–2), 1–39 (2010)

Zhu J, Steven CH, Michael R, Shuicheng Y (2008) Near duplicate keyframe retrieval by nonrigid image matching. ACM Multimedia, pp 41–50

Haralick RM (1979) Statistical and structural approaches to texture. Proc IEEE, 67(5)

Franco, A., Nanni, L.: Fusion of classifiers for illumination robust face recognition. Expert Syst Appl 36, 8946–8954 (2009)

Ojala, T., Pietikainen, M., Harwood, D.: A comparative study of texture measures with classification based on featured distribution. Pattern Recognit 29(1), 51–59 (1996)

Ojala, T., Pietikainen, M., Mäenpää, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell 24(7), 971–987 (2002)

Zhao, G., Ahonen, T., Matas, J., Pietikainen, M.: Rotation-invariant image and video description with local binary pattern features. IEEE Trans Image Process 21, 1465–1477 (2012)

Mandelbrot, B.B.: The fractal geometry of nature. W. H. Freeman and Co, New York (1982)

Chen WS, Yuan SY, Hsiao H, Hsieh CM (2001) Algorithms to estimating fractal dimension of textured images. In: IEEE international conferences on acoustics, speech and signal processing, pp 1541–1544

Voss, R.F.: Characterization and measurement of random fractals. Physica Scripta 27(13), 27–32 (1986)

Melo RHC, Conci A (2008) Succolarity: defining a method to calculate this fractal measure. In: 15th International conference on systems, signals and image processing, pp 291–294

Levi K, Weiss Y (2004) Learning object detection from a small number of examples: the importance of good features. In: Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), 27 June–2 July 2004, vol 2, pp II-53–II-60

Ojansivu V, Heikkila J (2008) Blur insensitive texture classification using local phase quantization. In: Proc. of the image and signal processing (ICISP 2008), pp 236–243

Ojansivu V, Rahtu E, Heikkila J (2008) Rotation invariant local phase quantization for blur insensitive texture analysis. In: International conference on pattern recognition, pp 1–4

Huang X, Li SZ, Wang Y (2004) Shape localization based on statistical method using extended local binary pattern. In: Proc. of the international conference on image and graphics, pp 184–187

Liao, S., Law, M.W.K., Chung, A.C.S.: Dominant local binary patterns for texture classification. IEEE Trans Image Process 18(5), 1107–1118 (2009)

Heikkilä, M., Pietikäinen, M., Schmid, C.: Description of interest regions with local binary patterns. Pattern Recognit 42(3), 425–436 (2009)

Guo, Z., Zhang, L., Zhang, D.: A completed modeling of local binary pattern operator for texture classification. IEEE Trans Image Process 19(6), 1657–1663 (2010)

Platt, J.: Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. In: Smola, A. (ed.) Advances in Large Margin Classifiers, pp. 61–74. MIT Press, Cambridge (1999)

Yusof R, Rosli NR, Khalid M (2010) Using gabor filters as image multiplier for tropical wood species recognition system. In: 12th International conference on computer modelling and simulation, pp 284–289

Acknowledgments

This research has been supported by The National Council for Scientific and Technological Development (CNPq) grant 301653/2011-9.

Cite this article

Filho, P.L.P., Oliveira, L.S., Nisgoski, S. et al. Forest species recognition using macroscopic images. Machine Vision and Applications 25, 1019–1031 (2014). https://doi.org/10.1007/s00138-014-0592-7

DOI: https://doi.org/10.1007/s00138-014-0592-7