Abstract

Objective

We present a score for assessing the quality of ICU care in terms of structure and process, based on bibliographic review, expert consultations, field test, analysis, and final consensus, and analyze its initial application in the field.

Design and setting

This feasibility and observational study was conducted within the framework of a French regional clinical research project (NosoQual); 40 ICUs were visited and assessed between November 2002 and March 2003 according to standardized procedures.

Measurements and results

The grid consisted of 95 variables. The overall score was derived from seven independent quality dimensions: human resources, architecture, safety and environment, management of documentation, patient care management, risk management of infections, and evaluation and surveillance. The average level of achievement of the dimensional scores varied from 48% to 63% of the theoretical maxima. Variability in the individual dimensional subscores was greater than that of the overall score (CV = 0.15).

Conclusions

Evaluation of this scoring system is limited by the absence of a “gold standard.” However, this is counterbalanced by the rigorous design methodology and the characteristic strengths of the quality dimensions. The survey also highlights the feasibility of, and the potential interest in, specific tools for the assessment of ICUs.

Introduction

Quality assessment in healthcare was started in 1918 in the United States and Canada by the American College of Surgeons, organized as on-site hospital inspections aimed at verifying a “minimum standard” [1]. Over the next 30 years quality standards were developed, leading to the establishment of organizations dedicated to the assessment of quality of healthcare, the oldest (1951) and probably the most well known being the Joint Commission on Accreditation of Healthcare Organizations (JCAHO). In the United Kingdom the National Health Service, created in 1948, established its own official framework for evaluating quality of care in the 1980s [2]. During the same period an enormous effort was also made by the Australian authorities; the Australian Council on Healthcare Standards was established in 1974 to assess the performance of healthcare facilities. In 1991 France included assessment requirements in hospital law [3] and in 1996 created the ANAES (now “Haute Autorité de Santé”), as a scientific organization responsible for accreditation in the healthcare sector [4].

Most quality assessment models have taken as a starting point the reference research work of Donabedian [5]. In 1980 he introduced to the healthcare sector the analytical framework “structure–process–result”, used to describe and evaluate quality in other fields [6]. The intensive care unit (ICU) is one of the major targets of the quality assessment process in hospitals (particularly in relation to healthcare-associated infections) [7]. ICUs are complex organizations requiring various fields of knowledge, technical devices, and diagnostic and therapeutic methods. Their history has been marked by many technological advances in the fight to save lives threatened by serious illnesses [8]. In most countries intensive care is expanding (in terms of number and size of units, budget), and new technologies are constantly being introduced. The evolving accreditation and evaluation in the healthcare sector have manifested the need to develop specific quality assessment tools for ICUs. In 1997 the European Society of Intensive Care Medicine (ESICM) produced general guidelines for continuous quality improvement and recommendations on minimal requirements for ICUs [9, 10]. Later a safety checklist (58 standards for nursing, respiratory therapy, and maintenance) was initiated in 1999 by the Veterans Affairs Ann Arbor Health System (VAAHS) [11]. In 2005 the JCAHO released a new manual for national hospital quality measures related to both ICU processes and outcomes [12], and the Spanish Society of Intensive and Critical Care and Coronary Units published its first edition of the quality indicators in critically ill patients (as yet only published in draft format).

NosoQual is a clinical research project (PHRC) funded by the French Health Ministry and carried out by three university hospitals (Lyon, Nice, and Montpellier) in collaboration with the regional coordination center for infection control C.CLIN Sud-Est (Lyon). Its objective is to test the correlation between standard quality measures in ICUs and surgical units (in terms of structure and process) and rates of healthcare-associated infections (HAI) that several European healthcare authorities consider as potential indicators of hospital performance.

The first phase of the project consisted of developing specific quality assessment tools for intensive care and for surgery. These tools were presented as sets/grids of selected criteria allowing the calculation and the attribution of quality scores. The objective of this phase was to design a global score independent of the outcome indicators (HAI) and based on structure and process criteria only. The present contribution describes the method of constructing the ICU quality assessment grid and presents the results obtained (in terms of correlation and variability of the scores across ICUs) following an on-site visit. The second step of the project will be to analyze the correlation between HAI and the scores expressed by these grids as resource-process performances. If validated, the scores could also be used both for evaluating the impact of changes in processes and structures and for assessing and comparing quality in different ICUs and surgical units.

Materials and methods

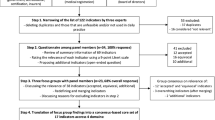

The score was developed between April 2001 and March 2005, and the process consisted of five phases: (a) bibliographical review, (b) experts' reconsideration, (c) field test (pilot test and on-site visits), (d) descriptive analysis, and (e) final consensus. The bibliographical review used intensive care, quality indicators, performance, assessment, and risk management as basic key words, followed by brainstorming by the project investigators. This led to a definition of “quality” in terms of structure and process for the design of the score. This primary step led to the creation of a first version of the score consisting of recommended measures, regulations, and factors that could potentially affect the work organization, management, and ultimately performance of the unit. These elements were classified into several dimensions of quality focusing on structure and process indicators.

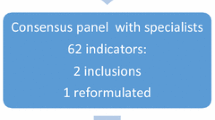

Experts' reconsideration took place in two stages. First, a committee of reviewers including five experienced intensive care physicians was asked to give an opinion on the selected items and their classification. The grid was considered globally relevant, and some consensual suggestions were subsequently implemented. Secondly, a national group of 25 experts from numerous fields was selected to review the questionnaire through a Delphi investigation. This group consisted of professionals and experts in intensive care, quality assessment, and/or infection control. Two consecutive reviews were necessary to arrive at a consensus. The experts were asked to rank the selected criteria according to three statements: (a) level of association between the criteria and the quality of care in ICU (ranked from 0/no link to 3/strong link), (b) availability of information (ranked from 0/not available to 3/always available), and (c) formulation of the criteria (not ranked, but suggestions for modifications were solicited). Only criteria which achieved the highest rank (3) were integrated into the grid, which in the end consisted of seven quality dimensions related to the ICU and a general component of the global descriptors of the healthcare facility.

A pilot test of the consensual grid in the field was carried out in two ICUs to demonstrate the acceptability and feasibility of the investigations. An ICU team represented each unit during the on-site visits, consisting of an intensivist and an intensive care nurse from the unit together with a member of the Infection Control Committee, the Risk Management Team, or the Quality Department of the hospital. Preparation of the visit by the ICU team was recognized to be crucial for completing the ICU visit appropriately in 1 day. The in situ data collection took place over a 5-month period (November 2002–March 2003) via interviews (using standardized questionnaires for each of the members of the ICU team) and observations (single or multiple observations to report the presence/absence or the cumulative measure of a criterion in the unit). All the ICUs participating in “REA Sud-Est,” a large regional network for the surveillance of HAI in ICUs, were invited to participate. Forty units agreed to receive investigators on a voluntary basis for a 1-day visit; a list of the participating ICUs is presented under “Acknowledgements.”

Descriptive analyses were produced using the SPSS package (version 12). These consisted essentially of (a) frequency tables aimed at identifying and deleting homogeneous variables and those with missing values, and (b) correlation matrices leading to the reformulation, combination, or suppression of variables for which the Pearson test identified a significant correlation with another variable expressing the same or a similar quality measure. These analyses led to the deletion of 85 and 45 variables, respectively.
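The two screening steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the study used SPSS and a Pearson significance test, whereas the sketch flags pairs with a fixed cut-off on |r| (`r_threshold`, a hypothetical value), and all variable names and data are invented.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def screen_variables(data, r_threshold=0.8):
    """Screen variables; data maps a variable name to its per-ICU values.

    A value of None marks a missing observation.
    r_threshold is a hypothetical cut-off, not taken from the study.
    Returns (kept, dropped, correlated_pairs).
    """
    kept, dropped = [], []
    for name, values in data.items():
        has_missing = any(v is None for v in values)
        is_homogeneous = len(set(values)) <= 1  # same answer in every unit
        (dropped if has_missing or is_homogeneous else kept).append(name)
    pairs = []
    for i, a in enumerate(kept):
        for b in kept[i + 1:]:
            r = pearson_r(data[a], data[b])
            if abs(r) >= r_threshold:
                pairs.append((a, b, round(r, 3)))
    return kept, dropped, pairs

# Invented example data for five units.
example = {
    "written_protocols": [1, 1, 1, 1, 1],        # homogeneous
    "nurse_ratio": [0.4, 0.5, None, 0.6, 0.5],   # has a missing value
    "beds": [8, 10, 12, 14, 16],
    "rooms": [4, 5, 6, 7, 8],                    # proportional to beds
    "audits_per_year": [2, 0, 3, 1, 2],
}
kept, dropped, pairs = screen_variables(example)
```

In this toy data set the first two variables are dropped, and `beds` and `rooms` are flagged as a correlated pair that would then be reformulated, combined, or suppressed by the reviewers.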

Finalization was carried out jointly by some of the experts of the Delphi investigation and the investigators of the project. They considered the results of the analysis and decided consensually whether to retain the remaining discriminating variables in the final quality assessment grid. Some criteria were reformulated or combined to generate ratios, giving the score a quantitative assessment ability. For each selected variable a weight and a threshold were defined.

Results

The preliminary instrument after bibliographical review and brainstorming included 234 variables; this grew to 331 after the experts' consultation. This was the questionnaire that was used during the field test (November 2002–March 2003). The subsequent analysis led to the removal of nondiscriminating and intercorrelated variables, leaving 146 variables, and the present ICU quality assessment grid consisting of 95 variables emerged from the stage of final consensus. These were classified into the following seven dimensions (Table 1): human resources (quotas, organization, training of ICU staff), architecture (unit structure and architectural characteristics), safety and environment (security requirements and environmental ICU specifications), management of documentation (management of protocols, medical and nursing files), patient care management (various procedures and standards related to patient care), risk management of infections, and evaluation and surveillance.

Each dimension included variables which were considered during the final phase to be discriminating, uncorrelated, nonredundant, and therefore representative of quality of care in ICUs. For each of the 95 variables a ruling modality and a weighting from 0.5 to 3 were attributed according to the importance and the relevance of the variable in terms of quality of care. Thus a theoretical maximum score was calculated for each of the seven dimensions. The highest scores were those on dimensions 1–3 (score 1 = 24, score 2 = 20, score 3 = 21.5) and the lowest those on dimensions 5–7 (score 5 = 13, score 6 = 16, score 7 = 14). The sum of the “dimensional scores” generated a “maximum theoretical score” of 126.
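A minimal sketch of how such a weighted score could be computed is given below. It assumes that a unit earns the full weight of a criterion when its observed value meets the threshold, which is one plausible reading of the scoring rule rather than the published one; the criterion names, thresholds, and observed values are hypothetical. The last lines use only the figures reported above to derive the maximum implied for dimension 4, which is not stated explicitly.

```python
from dataclasses import dataclass

@dataclass
class Criterion:
    name: str         # hypothetical label
    weight: float     # the grid uses weights from 0.5 to 3
    threshold: float  # hypothetical cut-off for awarding the weight

def dimension_score(criteria, observed):
    """Sum the weights of the criteria whose observed value meets the threshold."""
    return sum(c.weight for c in criteria if observed.get(c.name, 0) >= c.threshold)

def theoretical_maximum(criteria):
    """Score obtained when every criterion is met."""
    return sum(c.weight for c in criteria)

# Invented criteria and observations for one dimension of one unit.
criteria = [
    Criterion("nurse_to_bed_ratio", 3, 0.5),
    Criterion("hand_rub_at_bedside", 1.5, 1),
    Criterion("staff_training_sessions", 0.5, 2),
]
observed = {"nurse_to_bed_ratio": 0.6, "hand_rub_at_bedside": 1, "staff_training_sessions": 1}

unit_score = dimension_score(criteria, observed)  # 3 + 1.5 = 4.5
unit_max = theoretical_maximum(criteria)          # 3 + 1.5 + 0.5 = 5.0

# Maxima reported in the text for six of the seven dimensions.
reported_maxima = {1: 24, 2: 20, 3: 21.5, 5: 13, 6: 16, 7: 14}
overall_maximum = 126
implied_dim4_maximum = overall_maximum - sum(reported_maxima.values())  # 126 - 108.5 = 17.5
```

Keeping the weight and threshold on each criterion makes the theoretical maximum a by-product of the grid itself, so it does not need to be maintained separately when criteria are added or reweighted.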

Using the grid presented in Table 1, an individual score was calculated for the 40 ICUs assessed by the investigators (one score for each dimension as well as a total score for each unit). Fig. 1 shows the wide variability in scores between the participating units. This variability was observed particularly for the dimensions of “human resources,” “architecture,” and “patient care” (coefficient of variation over 0.3). However, the total score expressed a relative homogeneity (coefficient of variation 0.15); it ranged from 36.5 to 89.5, and 45% of the units reached the mean value of 68.6 (Fig. 2).
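For reference, the coefficient of variation is the standard deviation divided by the mean. The sketch below assumes the sample standard deviation (the text does not say which estimator was used) and uses invented scores; the final line back-calculates the standard deviation implied by the reported total-score figures.

```python
from statistics import mean, stdev

def coefficient_of_variation(scores):
    """Sample standard deviation divided by the mean (assumed estimator)."""
    return stdev(scores) / mean(scores)

# Invented total scores for five units, for illustration only.
example_scores = [50, 60, 70, 80, 90]
example_cv = coefficient_of_variation(example_scores)

# Reported for the total score: CV = 0.15 and mean = 68.6,
# which implies a standard deviation of about 10.3 points.
implied_sd = 0.15 * 68.6
```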

Since the various dimensions had different theoretical maxima, the levels of performance (average and maximum levels) were measured in terms of percentage of achievement of the maxima: The average level of achievement of the score varied from 48% (dimension 7) to 63% (dimension 6) of the theoretical maximum score, whereas the maximum observed score attained 75–100% of the theoretical maximum (Fig. 3).
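The same normalization can be applied to the total score. The short sketch below uses only the total-score figures reported in this section; the resulting percentages are derived here for illustration and are not values stated by the authors.

```python
def percent_of_maximum(score, maximum):
    """Express a raw score as a percentage of its theoretical maximum."""
    return 100.0 * score / maximum

OVERALL_MAXIMUM = 126  # reported maximum theoretical score

# Lowest, mean, and highest total scores reported for the 40 units.
overall = {"lowest": 36.5, "mean": 68.6, "highest": 89.5}

overall_pct = {
    label: round(percent_of_maximum(value, OVERALL_MAXIMUM), 1)
    for label, value in overall.items()
}
# overall_pct == {"lowest": 29.0, "mean": 54.4, "highest": 71.0}
```

Expressing every dimension on the same 0–100 scale is what makes the levels of achievement comparable across dimensions with different maxima.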

The relationship between the scores was tested using a Pearson correlation matrix. The scores were largely independent; few significant correlations were observed: “management of documentation” was significantly correlated with both “human resources” (r = 0.09, p = 0.01) and “environment” (r = 0.389, p = 0.05; see Table 2).
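The significance of a Pearson coefficient is usually assessed with the statistic t = r·√(n − 2)/√(1 − r²), which follows a t distribution with n − 2 degrees of freedom under the null hypothesis of no correlation. The sketch below assumes that all 40 units contributed to each correlation, which the text does not state, and takes the two-sided 5% critical value for 38 degrees of freedom (about 2.024) from standard tables.

```python
from math import sqrt

def t_statistic(r, n):
    """t statistic for testing zero correlation, given r and n paired observations."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)

def critical_r(t_crit, n):
    """Smallest |r| that reaches the critical t value with n paired observations."""
    df = n - 2
    return t_crit / sqrt(t_crit ** 2 + df)

N_UNITS = 40       # assumption: every unit contributes to the correlation
T_CRIT_05 = 2.024  # two-sided 5% critical t for 38 df, from standard tables

t_environment = t_statistic(0.389, N_UNITS)  # about 2.60
r_min_05 = critical_r(T_CRIT_05, N_UNITS)    # about 0.31
```

Under these assumptions a coefficient needs an absolute value of roughly 0.31 or more to be significant at the 5% level with 40 units.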

The consensual grid was sent to the participating units together with a summary table of the descriptive results. Individual scores were highlighted for each ICU to allow them to compare their position to the sample. The intensivists were invited to express their opinion of the tool and the degree to which it reflects the reality of their units. Apart from the units which had been moved after the investigation, the participants were satisfied with their results; two units asked for a return visit from investigators, and two others asked for authorization to use the grid for self-evaluation.

Discussion

Evaluation of the NosoQual system for scoring the “quality” of ICUs is subject to some limitations. The major one is the absence of a “gold standard” against which to evaluate other tools. Nevertheless some elements in the design can be used to assess and discuss this score. The data were collected by trained investigators during the on-site visit using standard questionnaires and checklists to perform interviews and observations. This process helped to ensure accurate data collection. However, reservations can be raised regarding the duration of the on-site visits and the level of specialization of the investigators, which may have limited the observation of practices; intensive care specific practices were not included in the survey protocol. Furthermore, biases may have occurred in relation to the previous experiences of the experts involved in the different phases of the score conception. Experts were enrolled on a voluntary basis according to their interest in quality management and ICU performance. This selection may have led to the over- or underscoring of some of the quality dimensions. Concerning representativeness, the score was designed in consideration of the descriptive results of 40 ICUs in a regional surveillance network, a sample representing 60% of the ICU network. A comparison between the characteristics of participating ICUs and the others within the network revealed a similarity regarding the type of ICU but a difference in relation to the type of the participating healthcare facilities. One of the elements that could not be tested in this study was sensitivity (in relation to time). The ability of the tool to represent slight modifications over time has not been checked after the grid conception. If tested, relevance could be one of the added values of this tool. Finally, the score was not tested after its finalization; such testing might have provided a strong argument for the dissemination of the tool among professionals.

Despite these limitations, several reasons support the idea of a potential usefulness of the score for evaluating “quality” of ICUs. (a) The methodology of developing the score was respected throughout all the phases of the process. (b) During both the Delphi investigation and the stage of the final consensus only variables which reached 100% of agreement were retained in the score. (c) The survey was supported by direct observations, which helped to ensure the on-site situation of the units was recorded. In addition, the modalities of performance of these observations guaranteed that most subjective interpretations were eliminated. (d) The total score covered almost entirely the components of quality of care in intensive care, using discriminating uncorrelated variables. (e) The high variability and the independence (poor intercorrelation) of the quality dimensions allow the level of performance in each component to be revealed separately. (f) The feedback from participating ICUs, which expressed interest in further uses of this tool, particularly for self-assessment and for performance comparison within a specific network, was encouraging. (g) Compared to some available standards for evaluating quality of care in ICUs, the NosoQual grid displays some equivalence: the safety checklist of the VAAHS [11] was organized into various components (e.g., medications, environment) and included a set of standards some of which were common to our model (e.g., adherence to isolation protocols, medication administration record signed, clear passage in hallway); on the other hand, the latest JCAHO manual of ICU specifications [12] consists of six measures, two of which are very similar to some variables of the sixth dimension of the NosoQual score “risk management of infection” (central line-associated bloodstream infection, ventilator-associated pneumonia prevention, patient positioning). Regarding the quality indicators proposed by the Spanish Society of Intensive and Critical Care and Coronary Units, 7 of the 15 sections classifying the 120 indicators also showed interesting parallels with the NosoQual indicators (acute respiratory insufficiency, infectious diseases, nursing, bioethics, planning and management, internet and training).

In conclusion, this survey highlights the feasibility of, and the potential interest in, designing specific tools for the assessment of quality in ICUs. The tool developed in this study was oriented to structure and process evaluation and targeted at unit level, but it could also be associated with standards and existing supports for assessing quality at hospital level. Furthermore, such a score could be used in multicenter collaborative projects and networks to check the relative “quality” of participating ICUs using benchmarking. In a different scientific approach this score could be used to test the strength of its correlation with outcome indicators used to measure the clinical performance of ICUs.

References

Nora PF (2000) Improving safety for surgical patients: suggested strategies. Bull Am Coll Surg 85:11–14

Greengross P, Grant K, Collini E (1999) The history and development of the UK National Health Service 1948–1999. HSRC

Ministère de la Santé (1991) Loi n°91–748 du 31 juillet 1991 portant réforme hospitalière. J Officiel, Paris

Ministère de la Santé (1996) Ordonnance n°96–346 du 24 avril 1996 portant réforme de l'hospitalisation publique et privée. J Officiel, Paris

Donabedian A (1990) Contributions of epidemiology to quality assessment and monitoring. Infect Control Hosp Epidemiol 11:117–121

Donabedian A (1980) The Definition of quality and approaches to its assessment. Health Administration Press, Ann Arbor

Brun-Buisson C (1996) Practice guidelines for prevention of infection in European ICU's: a call for standards. Intensive Care Med 22:847–848

Wenzel RP (1992) Assessing quality health care: perspective for clinicians. Williams and Wilkins, pp 267–296

Thijs LG (1997) Continuous quality improvement in the ICU: general guidelines. Task Force European Society of Intensive Care Medicine. Intensive Care Med 23:125–127

Ferdinande P (1997) Recommendations on minimal requirements for intensive care departments. Members of the Task Force of the European Society of Intensive Care Medicine. Intensive Care Med 23:226–232

Piotrowski MM, Hinshaw DB (2002) The safety checklist program: creating a culture of safety in intensive care units. Jt Comm J Qual Improv 28:306–315

Joint Commission (2005) Specifications manual for national hospital quality measures-ICU. Joint Commission on Accreditation of Health Care Organizations, Oakbrook Terrace, Illinois

Miranda DR, Williams A, Loirat P (1990) Management of intensive care: guidelines for the better use of resources. Kluwer

Depasse B, Pauwels D, Somers Y, Vincent JL (1998) A profile of European ICU nursing. Intensive Care Med 24:939–945

American College of Critical Care Medicine, Society of Critical Care Medicine (1999) Critical care services and personnel: recommendations based on a system of categorization into two levels of care. Crit Care Med 27:422–426

Norrenberg M, Vincent JL (2000) A profile of European intensive care unit physiotherapists. Intensive Care Med 26:988–994

Guidelines/Practice Parameters Committee of the American College of Critical Care Medicine, Society of Critical Care Medicine (1995) Guidelines for intensive care unit design. Crit Care Med 23:582–588

Ministère de la santé, de la famille et des personnes handicapées (2003) Circulaire DHOS/SDO/N° 2003/413 du 27 août 2003 relative aux établissements de santé publics et privés pratiquant la réanimation, les soins intensifs et la surveillance continue. Bull Officiel, Paris

O'Connell NH, Humphreys H (2000) Intensive Care unit design and environmental factors in the acquisition of infection. J Hosp Infect 45:255–262

Francioli P, Muehlemann K (1999) Prévention des infections nosocomiales en réanimation. Swiss-Noso 6:13–15

Moro ML, Jepsen OB (1996) Infection control practices in intensive care units of 14 European countries. The EURO.NIS Study Group. Intensive Care Med 22:872–879

Harvey MA (1998) Critical-care-unit bedside design and furnishing: impact on nosocomial infections. Infect Control Hosp Epidemiol 19:597–601

Hoet T (1996) Conception des services de soins intensifs. Hygienes 12:50–54

O'Hara JF Jr, Higgins TL (1992) Total electrical power failure in a cardiothoracic intensive care unit. Crit Care Med 20:840–845

Agence nationale d'accréditation et d'évaluation en santé (2004) Manuel d'accréditation des établissements de santé. Ressources transversales—qualité et sécurité de l'environnement. ANAES, Paris, référence 16–20:37–382

Ministère de l'emploi et de la solidarité (1998) Élimination des déchets d'activités de soins à risques—guide technique. Ministère de l'emploi et de la solidarité, Paris

Agence nationale d'accréditation et d'évaluation en santé (2004) Manuel d'accréditation des établissements de santé. Ressources transversales—organisation de la qualité et de la gestion des risques. ANAES, Paris, référence 13–15:34–35

Comité Technique National des Infections Nosocomiales (2003) Désinfection des dispositifs médicaux en anesthésie et en réanimation. Ministère de la santé, de la famille et des personnes handicapées, Paris

Spaulding EH (1972) Chemical disinfection and antisepsis in the hospital. J Hosp Res 9:5–31

Centre de Coordination de la Lutte contre les Infections Nosocomiales du Sud-Ouest (2005) Entretien des locaux des établissements de soins. CCLIN Sud-Ouest, Bordeaux

Agence nationale d'accréditation et d'évaluation en santé (2004) Manuel d'accréditation des établissements de santé. Ressources transversales, Système d'information. ANAES, Paris, référence 21–24:41–42

Agence nationale d'accréditation et d'évaluation en santé (1994) Evaluation de la tenue du dossier du malade. ANAES, Paris

REANIS (n.d.) Guide de prévention des infections nosocomiales en réanimation, 2nd edn, EDK Glaxo Wellcome, Paris

Labadie JC, Kampf G, Lejeune B, Exner M, Cottron O, Girard R, Orlick M, Goetz ML, Darbord JC, Kramer A (2002) Recommendations for surgical hand disinfection—requirements, implementation and need for research. A proposal by representatives of the SFHH, DGHM and DGKH for a European discussion. J Hosp Infect 51:312–315

Hughes MG, Evans HL, Chong TW, Smith RL, Raymond DP, Pelletier SJ, Pruett TL, Sawyer RG (2004) Effect of an intensive care unit rotating empiric antibiotic schedule on the development of hospital-acquired infections on the non-intensive care unit ward. Crit Care Med 32:53–60

Timsit JF (2005) Updating of the 12th consensus conference of the Société de Réanimation de Langue Française (SRLF): catheter related infections in the intensive care unit. Ann Fr Anesth Reanim 24:315–322

Long MN, Wickstrom G, Grimes A, Benton CF, Belcher B, Stamm AM (1996) Prospective, randomized study of ventilator-associated pneumonia in patients with one versus three ventilator circuit changes per week. Infect Control Hosp Epidemiol 17:14–19

Lepelletier D (2006) Meticillin-resistant Staphylococcus aureus: incidence, risk factors and interest of systematic screening for colonization in intensive-care unit. Ann Fr Anesth Reanim 25:626–632

Troche G, Joly LM, Guibert M, Zazzo JF (2005) Detection and treatment of antibiotic-resistant bacterial carriage in a surgical intensive care unit: a 6-year prospective survey. Infect Control Hosp Epidemiol 26:161–165

Acknowledgements

Participating ICUs from the REA Sud-Est network, L. Aysac and B. Tressières from the C.CLIN Sud-Est, the expert consultation group, investigation teams, Hospices Civils de Lyon, CHRU de Montpellier, CHU de Nice and the French Ministry of Health. The ICUs participating in the survey were the following: Département Réanimation Urgences-SMUR, Centre Hospitalier, Ales; Réanimation polyvalente, Centre Hospitalier Juan-les-Pins, Antibes; Réanimation polyvalente, Centre Hospitalier, Arles; Réanimation, Centre Hospitalier Général, Aubagne; Réanimation, Centre Hospitalier, Aubenas; Réanimation médico-chirurgicale, Centre Hospitalier du Dr Récamier, Belley; Réanimation, Infirmerie Protestante, Caluire; Réanimation polyvalente, Centre Hospitalier, Cannes; Réanimation, Centre Hospitalier Antoine Gayraud, Carcassonne; Réanimation, Clinique du Parc, Castelnau-le-Lez; Réanimation polyvalente, Centre Hospitalier, Chambery; Réanimation polyvalente, Centre Hospitalier, Draguignan; Réanimation, Centre Hospitalier Fréjus–St. Raphaël, Fréjus; Réanimation polyvalente, Centre Hospitalier, Gap; Réanimation cardio-vasculaire et thoracique, Centre Hospitalier Universitaire, Grenoble; Réanimation chirurgicale 1 (URC), Centre Hospitalier Universitaire, Grenoble; Réanimation, Centre Hospitalier, La Ciotat; Réanimation–Pavillon N, CHU Lyon–Hôpital Edouard Herriot, Lyon; Soins Intensifs Post-Opératoires–Unité 800, CHU Lyon–Hôpital Pierre Wertheimer, Lyon; Réanimation, Institut Paoli Calmette, Marseille; Réanimation, Clinique Vert Coteau, Marseille; Réanimation polyvalente, APHM–Hôpital Nord, Marseille; Réanimation, Centre Hospitalier, Martigues; Réanimation / USI, Polyclinique Saint Jean, Mimet; Réanimation polyvalente, Centre Hospitalier, Montelimar; Département d'Anesthésie Réanimation DAR-C, CHU Montpellier–Hôpital Gui de Chauliac, Montpellier; Département d'Anesthésie Réanimation DAR-B, CHU Montpellier–Hôpital Saint Eloi, Montpellier; Réanimation médicale et assistance respiratoire, CHU Montpellier–Hôpital Gui de Chauliac, Montpellier; Réanimation polyvalente, Centre Hospitalier, Narbonne; Réanimation polyvalente, CHU Nice–Hôpital Saint Roch, Nice; Réanimation, CHU Nice–Hôpital Archet 2, Nice; Réanimation, Centre Hospitalier, Privas; Réanimation polyvalente, Centre Hospitalier de Roanne, Roanne; Réanimation médicale, CHU Saint-Étienne–Hôpital Bellevue, Saint Etienne; Réanimation polyvalente, CHU Saint-Étienne–Hôpital Nord, Saint Etienne; Réanimation médico-chirurgicale, Hôpitaux du Pays de Mont-Blanc, Sallanches; Réanimation, Hôpitaux du Léman–Hôpital Georges Pianta, Thonon les Bains; Réanimation polyvalente, Hôpital Font-Pré, Toulon; Réanimation médico-chirurgicale, Centre Hospitalier Lucien Hussel, Vienne; Réanimation polyvalente, Centre Hospitalier, Villefranche sur Saone; Réanimation, Centre Hospitalier Princesse Grace, Monaco; Réanimation chirurgicale–Réa Nord, CHU Lyon–Centre Hospitalier Lyon Sud, Lyon

Cite this article

Najjar-Pellet, J., Jonquet, O., Jambou, P. et al. Quality assessment in intensive care units: proposal for a scoring system in terms of structure and process. Intensive Care Med 34, 278–285 (2008). https://doi.org/10.1007/s00134-007-0883-9

DOI: https://doi.org/10.1007/s00134-007-0883-9