Abstract

Enhanced geothermal systems (EGS) are an attractive source of low-carbon electricity and heating. Consequently, a number of tests of this technology have been made during the past couple of decades, and various projects are being planned or under development. EGS work by the injection of fluid into deep boreholes to increase permeability and hence allow the circulation and heating of fluid through a geothermal reservoir. Permeability is irreversibly increased by the generation of microseismicity through the shearing of pre-existing fractures or fault segments. One aspect of this technology that can cause public concern and consequently could limit the widespread adoption of EGS within populated areas is the risk of generating earthquakes that are sufficiently large to be felt (or even to cause building damage). Therefore, there is a need to balance stimulation and exploitation of the geothermal reservoir through fluid injection against the pressing requirement to keep the earthquake risk below an acceptable level. Current strategies to balance these potentially conflicting requirements rely on a traffic light system based on the observed magnitudes of the triggered earthquakes and the measured peak ground velocities from these events. In this article we propose an alternative system that uses the actual risk of generating felt (or damaging) earthquake ground motions at a site of interest (e.g. a nearby town) to control the injection rate. This risk is computed by combining characteristics of the observed seismicity of the previous 6 h with a (potentially site-specific) ground motion prediction equation to obtain a real-time seismic hazard curve; this is then convolved with the derivative of a (potentially site-specific) fragility curve. 
Depending on how the computed risk compares with pre-defined acceptable risk thresholds, the injection rate is increased if the risk is below the amber level, decreased if the risk is between the amber and red levels, or stopped completely if the risk is above the red level. Simulations using a recently developed model of induced seismicity in geothermal systems, which is checked here against observations from the Basel EGS, show that the proposed procedure could lead to both acceptable levels of risk and increased permeability.

1 Introduction

Current strategies for enhanced geothermal systems (EGS) stimulation and exploitation seek to avoid potentially damaging earthquake ground motions by keeping the magnitude of the largest event (or perhaps the largest peak ground velocity, PGV) below a certain level through the so-called ‘traffic light’ procedure (Bommer et al. 2006). Rather than use these hazard parameters, it would be better to seek to keep the risk, calculated by convolving the hazard curve and an appropriate fragility curve, below an acceptable threshold. An appropriate fragility curve could be one characterising the probability of feeling an earthquake given a certain ground-motion level. The local population is concerned with the risk that they are exposed to rather than the hazard level, which is difficult to appreciate and is not a direct measure of the potential impact of seismicity on people. This article presents how this could be done using a recently developed physical model of induced seismicity from fluid injection (Aochi et al. 2014), a ground-motion prediction equation (GMPE) developed for EGS applications (Douglas et al. 2013a), and an example fragility curve expressing the chance of feeling earthquake shaking. The proposed protocol is tested for a hypothetical reservoir.

Bachmann et al. (2011), extended by Mena et al. (2013), proposed a similar approach based on observations from the induced Basel 2006 earthquake sequence coupled to a macroseismic intensity prediction equation (IPE, e.g. Cua et al. 2010). These studies were based on epidemic-type aftershock sequence models of seismicity rather than full physical models. Bachmann et al. (2011) and Mena et al. (2013) used the actual injection history as an input to their calculations, but they did not try altering the injection (flow) rate, although this could have been done. Convertito et al. (2012) undertook similar analyses (although they did not go as far as computing the risk) for The Geysers (California) geothermal field over a period of roughly 3 years, but, as it is an observational study, the effect of varying the injection rate to control the hazard (or risk) was not studied.

In the following section the proposed approach is outlined. Testing of this approach relies on being able to simulate induced seismicity and the influence of fluid injection on this seismicity and the permeability of the reservoir. Therefore, in the subsequent section the simulation procedure developed by Aochi et al. (2014) is checked using the injection history and associated earthquake catalogue from the Basel EGS experiment of December 2006. In Sect. 4 an injection protocol is proposed following the philosophy adopted here in which the risk is controlled and tested for various scenarios. The article ends with a discussion and some brief conclusions.

2 Proposed Approach

As shown by, for example, Kennedy (2011), the seismic risk can be obtained by the so-called risk integral, in which the seismic hazard curve (derived by probabilistic seismic hazard assessment, PSHA) and the derivative of the fragility curve, which is often modelled as a lognormal probability distribution function, are convolved. As discussed by Douglas et al. (2013b), the technique of ‘risk targeting’, based on the risk integral, is being used to develop the next generation of seismic design maps in some countries (e.g. the USA). In this approach the fragility curve, expressing the probability of collapse given a certain level of ground motion, is moved left (implying weaker buildings) or right (implying stronger buildings) to obtain a design map (representing the ground motions that designs should protect against) that would lead to a uniform level of risk nationally. Because these maps consider natural seismicity, for which the hazard cannot be changed, it is the vulnerability that is altered (by imposing stricter or laxer building requirements) to change the risk level. For EGS, the vulnerability of the neighbouring buildings (or sensitivity of the local population) cannot be altered (see Notes). However, because the hazard can potentially be controlled by the operator, in this case, it is the hazard curve that is modified by adopting a different reservoir stimulation/exploitation scheme. This observation and the risk integral are the basis of the procedure proposed here.

In the proposed procedure the hazard curve is iteratively updated as time progresses and earthquakes occur (or do not occur). PSHA for a point source (reservoir) and a single site (e.g. centre of the local town, roughly the situation of interest for EGS) is straightforward. It can be conducted in a couple of simple integration loops based on a and b values of the Gutenberg–Richter relation for the earthquake catalogue of the reservoir (or extrapolated into the future since these parameters change with time) and an appropriate GMPE for PGV, e.g. those recently developed by Douglas et al. (2013a). Next, the fragility curve expressing the probability of the population feeling earthquake shaking given a level of PGV is required. This could be taken from the literature, or it could be developed to produce site-specific curves based on felt/non-felt reports (macroseismic intensity reports) and the maximum-likelihood method (e.g. Shinozuka et al. 2000), for example. These could even be updated as data becomes available for a specific EGS. Here we use the modified-Mercalli intensity (MMI)-PGV correlations of Worden et al. (2012) as the fragility curve. The PGVs given by this curve for 10, 75 and 95 % probability of being felt roughly correspond to the thresholds of ‘Just perceptible’ (0.1 cm/s), ‘Clearly perceptible’ (0.65 cm/s) and ‘Disturbing’ (1.3 cm/s), respectively, of the traffic light system of Bommer et al. (2006).

A one-step method of estimating the earthquake risk is to use IPEs, which directly predict the macroseismic intensity, rather than GMPEs and fragility curves. This approach is followed by Bachmann et al. (2011) and Mena et al. (2013), for example, to obtain curves that express the annual frequency of exceedance for different macroseismic intensities. We do not follow this direct approach here for two reasons. Firstly, it is less flexible than the two-step process and, secondly, it is more difficult to make it site-specific since IPEs are generally developed for entire countries or even tectonic regimes (e.g. active crustal seismicity), whereas GMPEs can be tailored to a specific site and fragility curves can be developed for a certain structure type or population. Also the lack of robust IPEs means that accounting for epistemic uncertainties through a logic tree (Kulkarni et al. 1984) is more difficult since there are fewer candidate IPEs than GMPEs and fragility curves.

By convolving the seismic hazard curve and the derivative of the fragility curve, the seismic risk at that time step, expressed in terms of the annual probability of feeling an earthquake, is computed. This annual probability could be converted to a daily probability, which is more relevant for EGS operations and felt/non-felt shaking. Next, this probability is compared to the level of acceptable risk for the local population. If the computed risk is less than the acceptable risk, then the EGS stimulation can continue (and perhaps be increased); but if it is higher, then actions should be taken, e.g. a reduction in injection rate. Details of the protocol developed here are given in Sect. 4.
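The risk integral described above can be sketched numerically as follows. This is a minimal illustration rather than the implementation used for this article: the function names, the power-law hazard curve, and the lognormal fragility parameters (median 0.3, log-standard deviation 0.8) are hypothetical placeholders, and the annual-to-daily conversion assumes independent, identically distributed days.

```python
import math


def lognormal_cdf(x, median, beta):
    """Lognormal fragility: P(outcome | ground motion x)."""
    z = math.log(x / median) / beta
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def risk_integral(hazard, median, beta, x_min=1e-6, x_max=1e2, n=4000):
    """Approximate the integral of H(x) dF(x) on a log-spaced grid.

    `hazard` is any callable returning the exceedance frequency (or
    probability) of ground-motion level x.
    """
    log_lo = math.log(x_min)
    step = (math.log(x_max) - log_lo) / n
    total = 0.0
    for i in range(n):
        x0 = math.exp(log_lo + i * step)
        x1 = math.exp(log_lo + (i + 1) * step)
        # Increment of the fragility curve over the cell (its derivative times dx).
        d_frag = lognormal_cdf(x1, median, beta) - lognormal_cdf(x0, median, beta)
        # Hazard evaluated at the geometric midpoint of the cell.
        total += hazard(math.sqrt(x0 * x1)) * d_frag
    return total


def annual_to_daily(p_annual):
    """Convert an annual probability to a daily one (assumes independent days)."""
    return 1.0 - (1.0 - p_annual) ** (1.0 / 365.0)


def example_hazard(pgv):
    """Hypothetical power-law hazard curve, for illustration only."""
    return 1e-3 * pgv ** -2.0


risk = risk_integral(example_hazard, median=0.3, beta=0.8)
```

Any hazard curve can be passed in as a callable, so the same routine could be re-evaluated each time the hazard curve is updated.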

3 Checking the Simulation Approach of Aochi et al. (2014)

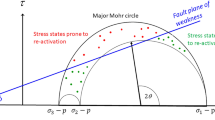

Testing of the approach proposed here relies on the ability to simulate earthquake catalogues for induced seismicity that are a function of the injection characteristics and other properties of the geothermal reservoir. Various models have been recently developed to simulate such catalogues. For example, Bruel (2007) presents a physical model to predict the seismicity of a geothermal reservoir, as do Hakimhashemi et al. (2013), who go the extra step and use their procedure to compute time-dependent seismic hazard. Goertz-Allmann and Wiemer (2013) also simulate induced seismicity due to pressure-driven stress changes (a linear diffusion model of pore pressure and a Mohr–Coulomb criterion), and they discuss the characteristics of the generated seismicity. In this article we use the recently developed simulation technique presented by Aochi et al. (2014). Making use of a ‘fault lubrication approximation’, in this technique the equations within the volumetric fault core are projected onto the 2D fault interface along which seismicity takes place, within a finite permeable zone of variable width. The seismicity is then modelled through a system of equations describing fluid migration, fault rheology, fault thickness, and stress redistribution from shear rupturing, triggered either by shear loading or by fluid injection.

Since the reliability of the stimulation protocol presented below strongly depends on the ability of the adopted simulations to model observed behaviour in geothermal reservoirs and the induced seismicity, in this section we check the approach of Aochi et al. (2014) against observations from an EGS. The observations from the 2006 Basel stimulation experiment (Häring et al. 2008) were selected for this test. We impose the injection history and check the calculated pressure and earthquake catalogue against the observations. Only earthquakes with moment magnitudes larger than 1 are simulated because of the size of the smallest fractures considered. The moment magnitudes of the observed earthquakes are those recomputed by Douglas et al. (2013a) from the seismic waveforms. For the earthquake catalogue, the comparison is made in the time domain and also in terms of the magnitude-frequency distribution (Gutenberg–Richter plots).

We make two modifications to the simulation procedure presented by Aochi et al. (2014). Firstly we introduce a normal stress (\( \sigma_{n} \)) on which the constitutive relation of permeability \( \kappa \) (Miller et al. 2004) is dependent:

where \( \kappa_{0} \) and \( \sigma_{0} \) are constants. Secondly, we introduce a multi-scale heterogeneity in fault strength in analogy to the approach of Ide and Aochi (2005) and Aochi and Ide (2009). We consider a set of circular patches of different sizes (radii \( r_{n} = 2^{n} r_{0} \) for rank n) whose number follows this scaling relation:

where \( r_{0} \), D, and \( N_{0} \) are constants and the integer n = 0–6.

Details of the seismicity are dependent on the asperities randomly generated in the model. As the positions and sizes of fault asperities cannot, with current technology and procedures, be measured before injection, there is always uncertainty in the seismicity that will be induced. To characterise this uncertainty, earthquake catalogues for the 2006 Basel injection have been simulated a hundred times for different initial generated asperities (Figs. 1, 2). The parameters used for all these examples are: \( \kappa_{0} = 3 \times 10^{-10}\ \mathrm{m}^{2} \), \( \sigma_{0} = 6 \times 10^{6} \) MPa, D = 1.5, and \( N_{0} = 14 \); the other parameters are the same as in Aochi et al. (2014).

Fig. 1 Comparison between the observed and 100 simulated earthquake catalogues and pore pressure changes for the 2006 Basel injection. The imposed injection rate is shown as a grey line in each panel. 100 sets of simulated seismicity (red dots) based on different distributions of randomly-generated asperities are shown as a function of time and magnitude. The observed earthquakes (\( M_{w} > 1 \)) for the 2006 Basel injection are marked by blue dots in the bottom left panel. The wellhead pressure is compared between observations (blue) and simulations (red) in the bottom right panel for a single simulation because the wellhead pressure is only weakly dependent on the seismicity in this example.

Fig. 2 Comparison between the observed (red line) and simulated (grey lines) Gutenberg–Richter plots for the 2006 Basel injection. Simulation 83 (black line; ninth row, third column on Fig. 1) appears to be the simulation providing seismicity closest to the observations.

Comparing the 100 simulated catalogues (Fig. 1) shows the importance of the random distribution of the asperities at all magnitudes. The size of the largest earthquake is controlled by the largest asperities (see below). The difference in temporal evolution originates from the spatial distribution of the asperities. The simulated seismicity shows great variation; sometimes the seismicity begins at the start of the injection and many earthquakes are generated, whilst in other cases few events are induced. The largest event occurs during the period of the highest injection rate in some cases, or it happens following the reduction in the injection rate, as was observed in the 2006 Basel experiment. These scenarios clearly demonstrate that it is important to take into account many possible scenarios within a risk assessment for induced seismicity. In the magnitude-frequency relation (Fig. 2) the observations are roughly in the middle of the simulations, although the simulations show large variations on either side.

4 Application of Proposed Method

In this section we develop a protocol for the stimulation of the EGS reservoir that seeks to keep the risk of felt shaking below a certain threshold. This protocol is developed using the induced seismicity simulation technique presented in Aochi et al. (2014) and tested above. The different input components required for these simulations are presented in the following subsections. Because of the short time scales considered, all the analyses are conducted for daily rather than annual exceedance frequencies, which are standard for PSHA of natural seismicity.

In all these examples it is assumed that the hypocentral distance (R hyp) between the reservoir and the site is 5 km, which roughly corresponds to a site directly above a reservoir being exploited as part of an EGS and a reservoir that is small enough to be assumed as a point source. The impact of changing this distance is simply to scale up or down the hazard, and consequently, the risk. The source-to-site distance has a strong impact on the ground motions experienced at a site. Therefore, if the seismicity was particularly shallow, it could be difficult to keep the risk of felt shaking below an acceptable level.

4.1 Length of Time Used for PSHA

Standard PSHA relies on the assumption that the seismic hazard is stationary in time. This assumption is, however, clearly violated in the case of induced seismicity. In this case it is necessary to assume a period over which the seismicity can be considered stationary. We have selected 6 h for this period because, as noted below, this was the interval between potential increases or decreases in the injection rate within our procedure. Using a shorter period (e.g. 1 h) would make the risk change more rapidly, whereas using a longer interval (e.g. 24 h) would lead to more gradual changes in the risk. Because in each time interval there are often too few earthquakes to compute a robust estimate of the slope (b) of the Gutenberg–Richter relation, this value is fixed a priori, and the activity rate (a) is computed based on the number of earthquakes larger than M w 1 simulated during the previous 6 h. As shown in Fig. 2, the magnitude-frequency relations of the simulated catalogues show considerable variation. Therefore, we consider the impact of b on the results by using values of 0.5, 1.0, and 1.5, which cover the range observed for the simulations; a is then normalized to give the number of earthquakes per day (assuming stationarity) so that the daily risk is obtained. After each time-step of the simulations (1 s) these parameters are updated, and the hazard and the risk are subsequently recalculated.
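As a sketch of the bookkeeping described in this subsection, the activity rate can be obtained from the number of events in the preceding 6-h window once b is fixed. The function name and the convention \( \log_{10} N(\geq M) = a - bM \), with N the daily number of events, are assumptions made for this illustration.

```python
import math


def daily_rate_and_a(n_events, window_hours=6.0, b=1.0, m_min=1.0):
    """Daily rate of events with magnitude >= m_min, and the matching a value.

    Assumes stationarity over the window, so the count is simply scaled
    from `window_hours` to 24 h. Convention: log10 N(>= M) = a - b * M.
    """
    daily_rate = n_events * 24.0 / window_hours
    if daily_rate == 0.0:
        # No events in the window: the a value is undefined (rate of zero).
        return 0.0, float("-inf")
    a = math.log10(daily_rate) + b * m_min
    return daily_rate, a


# Example: 25 events with Mw >= 1 in the previous 6 h, with b fixed at 1.
rate, a_value = daily_rate_and_a(25, window_hours=6.0, b=1.0, m_min=1.0)
```

With 25 events in 6 h, the daily rate is 100 events and, for b = 1, the a value is 3.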

4.2 M max

M max is the magnitude of the largest earthquake that can occur in the reservoir. It is used to truncate the relation defining the magnitude-frequency distribution (e.g. Gutenberg–Richter relation). Kijko (2004) presents three approaches to estimate M max based on earthquake catalogues. The equations for his Case I ("Use of the Generic Formula when earthquake magnitudes follow the Gutenberg–Richter magnitude distribution") were tested here for the catalogues in each interval. Because for many simulations there are few (or even no) events in a given 6-h interval, the estimates of M max obtained using this approach show great variation from one interval to the next. In general, they are poor estimates of the maximum sizes of future earthquakes because the seismicity is non-stationary.

Therefore, estimating M max from the ongoing simulations would not account for the occurrence of events much larger than had already been observed during the injection. Thus, we choose to define this parameter a priori. M max is difficult to define for induced seismicity (e.g. Bachmann et al. 2011); although McGarr (1976) and Shapiro et al. (2013) present formulae to estimate this parameter based on gross characteristics of the reservoir and injected volume. Here, we firstly use M max = 5, which as shown below, is a reasonable assumption, given the sizes of the randomized fractures within the reservoir, with respect to the magnitude-frequency plot shown in Fig. 2. Subsequently, we discuss the impact of this choice on the computed risk. We use the doubly-truncated Gutenberg–Richter relation as the model of earthquake occurrence.
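The doubly-truncated Gutenberg–Richter relation adopted here can be written down in a few lines. The sketch below uses the standard form of the truncated distribution; the function name and default arguments are illustrative choices, with the lower bound set to the minimum simulated magnitude of 1 and the upper bound to the M max of 5 used in this article.

```python
def truncated_gr_exceedance(m, b, m_min=1.0, m_max=5.0):
    """Fraction of events (with magnitude >= m_min) whose magnitude exceeds m.

    Standard doubly-truncated Gutenberg-Richter form: equal to 1 at m_min
    and 0 at m_max.
    """
    if m <= m_min:
        return 1.0
    if m >= m_max:
        return 0.0
    tail = 10.0 ** (-b * (m_max - m_min))
    return (10.0 ** (-b * (m - m_min)) - tail) / (1.0 - tail)


# Share of events above magnitude 3 for the three b values considered here.
shares = {b: truncated_gr_exceedance(3.0, b) for b in (0.5, 1.0, 1.5)}
```

Multiplying this fraction by the daily rate of events above the minimum magnitude gives the daily rate of events above magnitude m, which is the quantity entering the hazard calculation. A lower b gives a larger share of large events, which is why b = 0.5 leads to higher risk estimates.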

4.3 GMPE

Douglas et al. (2013a) developed a set of GMPEs using the stochastic method to account for epistemic uncertainties and to allow site-specific PSHA to be conducted. The selection and weighting of the stochastic GMPEs for site-specific analyses is discussed by Edwards and Douglas (2013) using the example of Cooper Basin EGS. For simplicity, within this article the empirical PGV GMPE (model 1, corrected for site effects) of Douglas et al. (2013a) is used, i.e.: \( \ln \mathrm{PGV} = -9.999 + 1.964 M_{w} - 1.405 \ln \sqrt{R_{hyp}^{2} + 2.933^{2}} - 0.035 R_{hyp} \) (where PGV is in m/s). Because in the case considered there is a single source (reservoir) and a single site, the aleatory variability (\( \sigma \)) to be used with the GMPE is given by: \( \sigma = \sqrt{\phi_{SS}^{2} + \tau_{ZS}^{2}} \), where \( \phi_{SS} \) is the single-station within-event variability and \( \tau_{ZS} \) is the zone-specific between-event variability. Using \( \phi_{SS} \) and \( \tau_{ZS} \) reported by Douglas et al. (2013a) gives a \( \sigma \) of 0.81 (natural logarithms).
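For illustration, the GMPE quoted above can be evaluated as in the following sketch. The coefficients and the value of σ are those given in the text; the function names are hypothetical, the hypocentral distance is assumed to be in kilometres, and the ground-motion residuals are assumed to be lognormally distributed.

```python
import math

SIGMA_LN = 0.81  # aleatory variability in natural-log units, as quoted in the text


def median_pgv(mw, r_hyp_km):
    """Median PGV in m/s from the GMPE quoted in the text.

    Assumes the hypocentral distance is expressed in kilometres.
    """
    ln_pgv = (-9.999
              + 1.964 * mw
              - 1.405 * math.log(math.sqrt(r_hyp_km ** 2 + 2.933 ** 2))
              - 0.035 * r_hyp_km)
    return math.exp(ln_pgv)


def prob_exceed(pgv, mw, r_hyp_km, sigma=SIGMA_LN):
    """P(PGV > pgv | mw, r_hyp), assuming lognormal ground-motion variability."""
    z = (math.log(pgv) - math.log(median_pgv(mw, r_hyp_km))) / sigma
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Example: a Mw 3 event at the 5 km hypocentral distance assumed in Sect. 4.
pgv_m3 = median_pgv(3.0, 5.0)
```

Under these assumptions, a \( M_{w} \) 3 event at 5 km gives a median PGV of roughly 0.12 cm/s, close to the ‘Just perceptible’ threshold of 0.1 cm/s mentioned in Sect. 2.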

4.4 Fragility Curve

With respect to seismic risk, the first goal of EGS exploitation is not to induce earthquakes that are felt by the local population. Therefore, the measure of risk considered in this article is the probability that earthquake ground motions will be felt. As shaking widely felt by the local population corresponds to macroseismic intensity III (on the majority of scales, including the Modified Mercalli), the fragility curve used here is that modelled by the relations of Worden et al. (2012) for this intensity.

The exceedance probabilities computed by Bachmann et al. (2011) and Mena et al. (2013) for the Basel sequence using macroseismic intensities show that the probability of intensity III (felt shaking) is close to unity throughout the injection period. Therefore, it may be better to base the thresholds on the risk of higher intensities that show greater sensitivity to the injection rate. As shown by Eads et al. (2013), for collapse risk, the majority of the risk calculated by convolving the hazard curve and derivative of the fragility curve comes from the lower half of the curve, i.e. small but frequent ground motions. Therefore, it is important to constrain the lowest level for which shaking can be felt, but currently this threshold is poorly known, particularly for induced shaking.

4.5 Acceptable Risk

The question of what seismic risk is acceptable to the local population plays a central role in the procedure, but it is probably the most difficult parameter to constrain since it depends on the local population (e.g. their reaction to EGS in general, their fear of a future large earthquake, the background seismicity, and whether the number of felt earthquakes was increasing or decreasing), and there are very few published studies on the acceptability of EGS. Is one felt earthquake a year acceptable? What about one felt earthquake a month? Or a week? Analysis of local populations’ reaction to previous EGS operations and earthquake swarms (e.g. Basel 2006) could be made to define this acceptable risk by, for example, retrospectively estimating the risk at which the decision was made to halt injections. Because of the lack of previous studies on this parameter, we conducted a sensitivity analysis for two risk thresholds: one above which the risk is clearly unacceptable and injection must cease (red) and one below which the EGS operator should aim to remain (amber).

4.6 Exploitation Strategy

The fluid injection rates and the steps between different rates for the exploitation strategy proposed here are based on those employed in recent geothermal projects (see Fig. 1 for the injection history used at Basel). For the Cooper Basin (Australia), Baisch et al. (2006) showed that the rates were less than about 40 L/s with steps of about 5 L/s between different levels; for Berlin (El Salvador), rates on average were 15 L/s according to Bommer et al. (2006). At Soultz, rates were generally lower than 50 L/s with steps of about 10 L/s (Charlety et al. 2007), and at Basel, the maximum rate was about 60 L/s with steps of around 10 L/s (Häring et al. 2008). Injection rates were generally kept constant over a certain period (e.g. roughly 12 h for Basel) before being increased or decreased.

The following injection strategy was developed after much trial and error. It was difficult to choose risk thresholds that led to a sustainable injection history (i.e. one that did not increase to large rates that are unrealistic with respect to recent geothermal projects or one where the rates decreased to zero). We recognise that other strategies are possible—the purpose of this article is to demonstrate the feasibility of using computed risk to control injection.

At the beginning of the simulation the fluid injection rate is set to 10 L/s, which is well below the maximum rates used for EGS stimulation and unlikely to cause much seismicity. Consequently, the risk should stay below the amber threshold during the initial injection period. The seismicity is then simulated using the same approach as used in the previous section, and subsequently, the hazard and risk are computed using the input parameters discussed above. Once the risk has been computed, there are two possibilities: either the risk is lower than the (amber) acceptable risk threshold or it is higher. In the first case the injection rate could be increased, and in the second it should be decreased (or if the risk is above the red threshold, then the exploitation should be completely halted and the regulator informed). The simple algorithm used to define the exploitation strategy is shown in Fig. 3. It was decided not to allow the injection rate to be changed too often so as to let the seismicity stabilize, and because it may be difficult from an operator’s viewpoint to constantly change the injection rate. Using the a (converted to a daily rate) and b values given by Bachmann et al. (2011) for the injection period, in conjunction with the other input parameters used here [e.g. the PGV GMPE and the fragility curve for intensity III of Worden et al. (2012)], a daily risk of felt motions was computed as 0.7 for Basel, which informed the value we chose for the amber threshold. Following various tests, we finally selected 0.8 for the amber threshold and 0.9 for the red threshold.
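The decision rule described in this subsection can be sketched as follows, using the amber and red thresholds selected above. The step size of 10 L/s and the handling of a risk exactly equal to a threshold are assumptions made for this illustration; they are not taken from the algorithm of Fig. 3.

```python
def next_injection_rate(rate, risk, amber=0.8, red=0.9, step=10.0):
    """Return the injection rate (L/s) to apply for the next interval.

    - risk above red: stop injection completely;
    - risk between amber and red: decrease the rate by one step;
    - risk at or below amber: the rate may be increased by one step.
    The step size is a hypothetical value chosen for illustration.
    """
    if risk > red:
        return 0.0
    if risk > amber:
        return max(rate - step, 0.0)
    return rate + step


# Example: starting from the initial rate of 10 L/s.
rate_low_risk = next_injection_rate(10.0, risk=0.5)    # below amber
rate_mid_risk = next_injection_rate(30.0, risk=0.85)   # between amber and red
rate_high_risk = next_injection_rate(30.0, risk=0.95)  # above red
```

In practice such a rule would be evaluated only at the end of each interval (6 h here), so that the injection rate is not changed too often.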

4.7 Simulations

Firstly, we conduct six simulations assuming b = 1 and M max = 5, where the influence of the randomly-generated asperities is studied (Fig. 4). The exploitation strategy described above is implemented and allowed to run until either the injection is brought to an end because the risk surpasses the red threshold, or the seismicity ends because all the asperities have ruptured.

Fig. 4 Simulations of the injection and induced seismicity using the exploitation strategy of Fig. 3 and assuming b = 1. We ran six different simulations whose heterogeneity was randomly generated. The simulated seismicity is shown by black circles as a function of time and magnitude, the number of earthquakes per hour by grey histograms, the risk of felt shaking by yellow curves, the injection rate by blue lines and simulated well-head pressure by red curves.

As noted above, there is uncertainty over the value of b to use for these simulations, and therefore, we repeat the same simulations using b = 0.5 and 1.5 (Figs. 5, 6). The same heterogeneity map is assumed for each panel, e.g. the same map for Figs. 4a, 5a, 6a. Assuming b = 0.5 (Fig. 5) leads to a higher estimated risk (because there are more large earthquakes predicted), and consequently, the injection is stopped in all the cases. In contrast, assuming b = 1.5 (Fig. 6) leads to much lower risk estimates, and hence, higher injection rates are allowed.

When M max is reduced to 4 or even to 3, it is expected that the estimated risk would greatly reduce, and hence, higher injection rates would be authorised by the exploitation strategy. However, using low values of M max is not justified for many of the simulated reservoirs because of the large asperities that are present. Only if the reservoir could be sufficiently well imaged so that the size of the largest possible earthquakes caused by the injection could be accurately estimated would using low values of M max be justified. Given current techniques for reservoir imaging, this is not possible. As an example of what could be envisaged if such imaging techniques were available, the largest earthquakes possible for the six simulated asperity networks are computed using the size of the largest asperity to roughly estimate M max. The largest patch that can be potentially ruptured has a radius (r) of 960 m. Initially, without any change in pore pressure, the possible stress drop is 3.5 MPa, computed using a difference between static and dynamic frictional coefficients of 0.05 and an effective normal stress of 70 MPa. The relation between slip (D) and stress drop (\( \Delta \tau \)) on a circular crack is given by \( \Delta \tau = \frac{7\pi }{16}\mu \frac{D}{r} \) (Madariaga 1979), where \( \mu \) is the rigidity of the medium. Therefore, we estimate D = 0.08 m in this case. This corresponds, then, to an event of M w = 4.5, which could be used as M max.
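The arithmetic of this estimate can be reproduced with the short sketch below. The rigidity of 30 GPa is an assumption (it is not stated in the text, but is consistent with the quoted slip of 0.08 m), as is the use of the standard moment-magnitude definition with the seismic moment in N·m; the function name is hypothetical.

```python
import math


def slip_and_mw(radius_m, stress_drop_pa, rigidity_pa=30e9):
    """Slip and moment magnitude for a circular crack of the given radius.

    Uses stress_drop = (7 * pi / 16) * rigidity * slip / radius, as in the
    text, and Mw = (2 / 3) * (log10(M0) - 9.1) with M0 in N*m.
    The default rigidity is an assumed value.
    """
    slip = 16.0 * stress_drop_pa * radius_m / (7.0 * math.pi * rigidity_pa)
    moment = rigidity_pa * math.pi * radius_m ** 2 * slip  # seismic moment, N*m
    mw = (2.0 / 3.0) * (math.log10(moment) - 9.1)
    return slip, mw


# Stress drop from the friction drop (0.05) and effective normal stress (70 MPa).
stress_drop = 0.05 * 70e6
slip, mw = slip_and_mw(960.0, stress_drop)
```

With these inputs the slip is about 0.08 m and the magnitude about 4.5, matching the values quoted above. The seismic moment reduces to \( (16/7) \Delta \tau r^{3} \), so the magnitude estimate does not depend on the assumed rigidity.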

5 Discussion

The slope of the Gutenberg–Richter plot (b) computed at each time-step does not show much variation over the entire period of injection, and hence, it is the activity rate (a) that is the principal driver of variations in the hazard and, consequently, the risk. In addition, plotting the hazard curves obtained at each time-step shows that they are generally almost straight in log–log space over many orders of magnitude. Kennedy (2011), amongst others, shows that if the hazard curve can be simplified as a straight line in log–log space, then, for the case of fragility curves expressed in terms of a lognormal distribution, the risk can be calculated analytically. Working backwards, assuming a constant b, allows thresholds on the rate of earthquakes to be defined. These could be used to control the injection rate rather than requiring the actual risk to be calculated. Alternatively, this approach would also allow the relation between the magnitude of the largest observed earthquake and the level of risk to be analytically defined, thereby returning to a classic traffic light system.
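The analytical result referred to above can be stated as follows. The symbols are introduced here for illustration only: the hazard curve is assumed to be \( H(x) = k_{0} x^{-k} \), and the fragility curve \( F(x) \) is assumed to be lognormal with median \( \theta \) and logarithmic standard deviation \( \beta \). The risk \( \lambda \) is then

```latex
\[
\lambda = \int_{0}^{\infty} H(x) \, \frac{\mathrm{d}F(x)}{\mathrm{d}x} \, \mathrm{d}x
        = k_{0} \, \theta^{-k} \exp\left( \tfrac{1}{2} k^{2} \beta^{2} \right)
        = H(\theta) \exp\left( \tfrac{1}{2} k^{2} \beta^{2} \right).
\]
```

If b, M max and the GMPE are held fixed, the hazard curve scales linearly with the daily rate of earthquakes \( \nu \), i.e. \( H(x) = \nu \, h(x) \), where \( h(x) \) is the hazard per unit rate. Under these assumptions an acceptable risk \( \lambda_{acc} \) translates into a threshold on the rate of earthquakes:

```latex
\[
\nu \leq \frac{\lambda_{acc}}{h(\theta) \exp\left( \tfrac{1}{2} k^{2} \beta^{2} \right)}.
\]
```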

How could the protocol presented above be used by an EGS operator for a specific site? One way in which this study could help guide EGS stimulation would be to run this type of analysis using site-specific inputs (e.g. R hyp, fragility curves, GMPEs, and reservoir parameters) to provide guidance on the rates of injection that are ‘safe’ from the risk viewpoint. However, many of the parameters used to characterise the reservoir and control the seismicity are difficult to define and, therefore, it is unlikely that this guidance would provide strong constraints on the acceptable injection strategy. The other way in which the protocol could be useful is to implement such real-time risk calculations using the observed earthquake catalogue induced by the injection and then to adjust the injection rate in the same way as it was in the simulations. Such an application would rely, however, on the detection, localisation, and characterisation of the microseismicity in near real-time. A more sophisticated approach would be to simulate an earthquake catalogue for the next, say, 6 h using the planned injection rate and check whether this would lead to an acceptable level of risk; if not, the planned injection rate could be altered and the simulations re-run until the risk is acceptable.

6 Conclusions

This article proposes a new approach to define the injection strategy for use when stimulating an EGS for power production. This approach is based on the calculation of risk via the risk integral that convolves a (site-specific) real-time hazard curve with a (site-specific) fragility function. The proposed protocol then seeks to keep the calculated risk below certain acceptable thresholds but at the same time increasing the reservoir’s permeability. Using a method which was checked in this article against observations for an actual EGS to simulate earthquake catalogues of induced seismicity that are a function of the fluid injection rate, we demonstrate that this protocol enables these two requirements to be balanced.

Notes

Another way of reducing the risk would be to reduce the exposure by developing EGS away from populated areas. However, because of the dual use of these systems to produce heat for buildings (in addition to electricity), it is often preferable, from an energy efficiency point of view, for them to be developed close to a town that can use the generated heat.

References

Aochi, H. and Ide, S. (2009), Complexity in earthquake sequences controlled by multi-scale heterogeneity in fault fracture energy, Journal of Geophysical Research, 114, B03305, doi:10.1029/2008JB006034.

Aochi, H., Poisson, B., Toussaint, R., Rachez, X. and Schmittbuhl, J. (2014), Self-induced seismicity due to fluid circulation along faults, Geophysical Journal International, in press, doi:10.1093/gji/ggt356.

Bachmann, C. E., Wiemer, S., Woessner, J. and Hainzl, S. (2011), Statistical analysis of the induced Basel 2006 earthquake sequence: Introducing a probability-based monitoring approach for Enhanced Geothermal Systems, Geophysical Journal International, 186, 793–807, doi:10.1111/j.1365-246X.2011.05068.x.

Baisch, S., Weidler, R., Vörös, R., Wyborn, D. and de Graaf, L. (2006), Induced seismicity during the stimulation of a geothermal HFR reservoir in the Cooper Basin, Australia, Bulletin of the Seismological Society of America, 96(6), 2242–2256.

Bommer, J. J., Oates, S., Cepeda, J. M., Lindholm, C., Bird, J., Torres, R., Marroquin, G. and Rivas, J. (2006), Control of hazard due to seismicity induced by a hot fractured rock geothermal project, Engineering Geology, 83, 287–306, doi:10.1016/j.enggeo.2005.11.002.

Bruel, D. (2007), Using the migration of the induced seismicity as a constraint for fractured Hot Dry Rock reservoir modelling, International Journal of Rock Mechanics and Mining Sciences, 44(8), 1106–1117.

Charlety, J., Cuenot, N., Dorbath, L., Dorbath, C., Haessler, H. and Frogneux, M. (2007), Large earthquakes during hydraulic stimulations at the geothermal site of Soultz-sous-Forêts, International Journal of Rock Mechanics and Mining Sciences, 44(8), 1091–1105, doi:10.1016/j.ijrmms.2007.06.003.

Convertito, V., Maercklin, N., Sharma, N. and Zollo, A. (2012), From induced seismicity to direct time-dependent seismic hazard, Bulletin of the Seismological Society of America, 102(6), 2563–2573, doi:10.1785/0120120036.

Cua, G., Wald, D. J., Allen, T. I., Garcia, D., Worden, C. B., Gerstenberger, M., Lin, K. and Marano, K. (2010), “Best practices” for using macroseismic intensity and ground motion intensity conversion equations for hazard and loss models in GEM1, GEM Technical Report 2010–4. GEM Foundation, Pavia, Italy.

Douglas, J., Edwards, B., Convertito, V., Sharma, N., Tramelli, A., Kraaijpoel, D., Mena Cabrera, B., Maercklin, N. and Troise, C. (2013a), Predicting ground motion from induced earthquakes in geothermal areas, Bulletin of the Seismological Society of America, 103(3), 1875–1897, doi:10.1785/0120120197.

Douglas, J., Ulrich, T. and Negulescu, C. (2013b), Risk-targeted seismic design maps for mainland France, Natural Hazards, 65(3), 1999–2013, doi:10.1007/s11069-012-0460-6.

Eads, L., Miranda, E., Krawinkler, H., and Lignos, D. G. (2013), An efficient method for estimating the collapse risk of structures in seismic regions, Earthquake Engineering and Structural Dynamics, 42(1), 25–41, doi:10.1002/eqe.2191.

Edwards, B. and Douglas, J. (2013), Selecting ground-motion models developed for induced seismicity in geothermal areas, Geophysical Journal International, 195(2), 1314–1322, doi:10.1093/gji/ggt310.

Goertz-Allmann, B. P. and Wiemer, S. (2013), Geomechanical modeling of induced seismicity source parameters and implications for seismic hazard assessment, Geophysics, 78(1), KS25–KS39.

Hakimhashemi, A. H., Yoon, J. S., Heidbach, O., Zang, A. and Grünthal, G. (2013), FISHA – Forward induced seismic hazard assessment application to synthetic seismicity catalog generated by hydrulic (sic) stimulation modeling, Proceedings of Thirty-Eighth Workshop on Geothermal Reservoir Engineering, Stanford, California, February 11–13, SGP-TR-198.

Häring, M. O., Schanz, U., Ladner, F. and Dyer, B. C. (2008), Characterisation of the Basel 1 enhanced geothermal system, Geothermics, 37, 469–495, doi:10.1016/j.geothermics.2008.06.002.

Ide, S. and Aochi, H. (2005), Earthquakes as multiscale dynamic ruptures with heterogeneous fracture surface energy, Journal of Geophysical Research, 110, B11303, doi:10.1029/2004JB003591.

Kennedy, R. P. (2011), Performance-goal based (risk informed) approach for establishing the SSE site specific response spectrum for future nuclear power plants, Nuclear Engineering and Design, 241, 648–656, doi:10.1016/j.nucengdes.2010.08.001.

Kijko, A. (2004), Estimation of the maximum earthquake magnitude, m_max, Pure and Applied Geophysics, 161, 1655–1681, doi:10.1007/s00024-004-2531-4.

Kulkarni, R. B., Youngs, R. R. and Coppersmith, K. J. (1984), Assessment of confidence intervals for results of seismic hazard analysis, Proceedings of Eighth World Conference on Earthquake Engineering, 1, 263–270.

Madariaga, R. (1979), On the relation between seismic moment and stress drop in the presence of stress and strength heterogeneity, Journal of Geophysical Research, 84, 2243–2250.

McGarr, A. (1976), Seismic moments and volume changes, Journal of Geophysical Research, 81(8), 1487–1494, doi:10.1029/JB081i008p01487.

Mena, B., Wiemer, S. and Bachmann, C. (2013), Building robust models to forecast the induced seismicity related to geothermal reservoir enhancement, Bulletin of the Seismological Society of America, 103(1), 383–392, doi:10.1785/0120120102.

Miller, S. A., Collettini, C., Chiaraluce, L., Cocco, M., Barchi, M., and Kaus, B. (2004), Aftershocks driven by a high pressure CO2 source at depth, Nature, 427, 724–727.

Shapiro, S. A., Krüger, O. S., and Dinske, C. (2013), Probability of inducing given-magnitude earthquakes by perturbing finite volumes of rocks, Journal of Geophysical Research: Solid Earth, 118(7), 3557–3575, doi:10.1002/jgrb.50264.

Shinozuka, M., Feng, Q., Lee, J., and Naganuma, T. (2000), Statistical analysis of fragility curves, Journal of Engineering Mechanics, 126(12), 1224–1231.

Worden, C. B., Gerstenberger, M. C., Rhoades, D. A., and Wald, D. J. (2012), Probabilistic relationships between ground-motion parameters and Modified Mercalli intensity in California, Bulletin of the Seismological Society of America, 102(1), 204–221, doi:10.1785/0120110156.

Acknowledgments

This study was mainly funded by the Geothermal Engineering Integrating Mitigation of Induced Seismicity in Reservoirs (GEISER) project under contract 241321 of the European Commission Seventh Framework Programme (FP7). We also benefited from internal research funding of BRGM. We thank Anne Lemoine for help at the beginning of this study and Xavier Rachez for his comments on an earlier version of this manuscript. Finally, we thank two anonymous reviewers whose detailed comments led to significant improvements to this study.

Cite this article

Douglas, J., Aochi, H. Using Estimated Risk to Develop Stimulation Strategies for Enhanced Geothermal Systems. Pure Appl. Geophys. 171, 1847–1858 (2014). https://doi.org/10.1007/s00024-013-0765-8

DOI: https://doi.org/10.1007/s00024-013-0765-8