Abstract

The medium for recording data and information in the present world is paper, magnetic tapes, hard disks, pen drives, etc., whereas about 700 years ago palm leaves were used for this purpose. To recognize the palm leaf text, a novel concept of using a 3D inherent feature, i.e., (depth of incision) is proposed in the current study. This proposed depth sensing approach is used for background subtraction on palm leaf manuscripts. For various features extracted from the palm leaf characters, an improved recognition accuracy is also reported with the help of this 3D feature. To improve the predictive recognition accuracy and to reduce the memory needed, investigations are carried out by implementing optimization techniques.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

India is a diverse nation and rich in the literature. As of today, there are 33 languages and 2000 dialects, of which 22 are recognized under the constitution. The popular South Indian recognized languages are Telugu, Tamil, Kannada, Malayalam, Tulu, etc [1]. Telugu script, which is an offshoot of Brahmi script, has complex structural characteristics and makes character recognition a difficult task. It has 16 vowels and 36 consonants. Consonants sometimes combine with the vowels or another consonant to form a complex or compound character [2, 3]. Recognition of compound characters is a challenging task to researchers.

Palm leaves were used as a writing material to record popular literature, scientific treaties and history [4,5,6]. Countries like India, Thailand, Philippines, and Indonesia became home to large collections of palm leaf manuscripts. These are recognized as the key to knowledge in diverse fields that have been passed through generations [4,5,6]. The current work acquires information using a 3D profiler to resolve the issues related to palm leaf manuscripts. The pressure applied by the scribe on such scripts to incise characters is measured in the current work. The depth of incision, a 3D feature, is used to perform background subtraction and to improve character recognition rate.

2 Related work

Sastry et al. [7,8,9] contributed their work on palm leaf documents by extracting the 3D feature, depth of indentation which is proportional to the pressure applied by the scriber, for basic isolated Telugu characters. Only at selected points like bends, start, and end points of the character the three coordinates (X, Y, Z) were measured using a human and mechanical interface system. The two coordinates (X, Y) were measured using a mechanical instrument called measuroscope. The third coordinate Z, the depth of indentation was measured, using a dial gauge indicator having a resolution of 0.01 microns. In [7,8,9] investigations were done whether the depth of indentation or pressure applied by the scriber contributes in improving character recognition, without considering the background. There is a tendency of human errors while selecting the points from character to character in their work. It is because using two different instruments, the measurements were taken by physically removing the sample from one instrument to the other at manually selected points.

Several other authors [6, 10,11,12,13,14] have contributed their work in removing noise from various handwritten historical documents like Thai, Ottoman and Patrimonial.

3 Proposed methodology

The flowchart of the proposed recognition scheme for palm leaf scribed text is shown in Fig. 1. It is divided into four major components: 3D data acquisition, preprocessing, feature extraction, and feature selection. The detailed description of all these components is explained in the following subsections.

3.1 Data acquisition

In the current study, data have been acquired using a computer controlled XP-200 series profilometer of AMBiOS Technology [16]. A diamond point stylus profiler of radius 2.5 microns having lateral resolution of 100 nm is used to measure the pressure applied by the scribe on the palm leaf manuscripts. The movement of the stylus in lateral direction (Z direction) generates an analog signal which is converted into digital data.

The procedure for raster scanning is described with the help of Fig. 2. The profiler can be programmed for vertical raster scanning by specifying the following arguments to measure three coordinates (X, Y and Z) for the palm leaf text:

-

1.

Scan length (L)

-

2.

Scan width (W)

-

3.

Step size (S) between two consecutive scans/profiles

The scan length and width are specified for the text line as shown in Fig. 2a. Each vertical line in Fig. 2b indicates a scan profile. The difference between two consecutive profiles is the step size (S is set to 0.2 mm). For each scan, the up/down movement of the diamond point stylus along vertical direction (for specified scan width W) is captured by the high-resolution camera. The depth of indentation, Z, (a 3D feature) is recorded, converted into digital form and stored in the computer, attached to the profilometer. Each scan profile contains recorded Y and Z values, where Y is measured in microns and Z is measured in Angstrom units. The number of profiles (N) generated is given by L/S. The extreme left profile in Fig. 2b is used as reference to measure X coordinate of the text line. For every profile, X is incremented by the step size as depicted in equation (1).

where p is profile number.

A total of 67 palm leaf manuscripts were considered for experimentation. The number of Telugu character samples considered for recognition is 8745 belonging to 53 different classes. Each character class contains 165 samples. These character samples are divided into five folds (each fold contain 1749 samples).

3.2 Preprocessing

A sample analog scan profile generated for a scan width of 4mm is shown in Fig. 3. The x-axis in Fig. 3 represents the data points along vertical direction (Y) in microns and y-axis represents the corresponding depth points along Z direction in Angstroms. The blue line indicates the base of the sample. The manuscripts considered in our experiments are 300 years old; hence, they are crinkled and the same is recorded. The red line indicates the threshold applied to subtract the background / to extract the foreground text. The threshold applied is 40% of the average of all the minimum valley points \( V_\mathrm{min} \) encountered from N profiles. Provided the minimum valley point’s height \( V_{h} \) should be greater than or equal to the predefined lateral height \( L_{h} \), to avoid the errors due to the crinkled surface texture of the palm leaf scripts. The number of minimum valley points encountered in the profile shown in Fig. 3 is 2, as indicated in Fig. 3. If no minimum valley points encountered in a profile then it indicates spacing between the strokes / characters. This adaptive threshold computed from every N profiles is used to subtract the background, even when the scripts are written by multiple writers.

The foreground text extracted after background subtraction is segmented into characters using vertical projection profile algorithm. Selecting two coordinates at a time, from the segmented data points, character patterns are generated in different planes of projection, viz., XY, YZ, and XZ. For every segmented character image /pattern, the minimum boundary of either width or height of the character (whichever is greater) is normalized to a fixed value M (M=50 in the current work). Preserving its aspect ratio further these images are resized to \(\hbox {M} \times \hbox {M}\).

3.3 Feature extraction

The two features extracted for the characters /patterns generated in all the three planes of projection are as follows:

-

1.

Histogram of gradients (HoG)

-

2.

Cell-based directional distribution

For classification, k-NN classifier is employed for both these approaches.

3.3.1 Histogram of gradients

The features are extracted from the normalized palm leaf character image by slicing it into cells. Further cells are grouped to frame a block. The size of the cell selected is \(10 \times 10\), and size of the block is \(2 \times 2\) cell. By overlapping 50% of the blocks, in each cell histogram of gradient directions is computed using a 1D derivative mask [\(-\)1 0 1] along x-axis and its transpose along y-axis. The quantized gradient orientations are mapped into one of the 9 bins, spaced at \( 2\pi /9 \) intervals. The normalized group of histogram of the corresponding gradient magnitudes represents the histogram descriptor of a character. The advantage of HoG descriptor is invariant to geometric transformations as it operates on local cells [15].

The feature vector size is the product of the number of bins, the size of a block and the number of blocks in the image. The number of blocks for a \(50 \times 50\) normalized image is \(4 \times 4\), as 50% of the blocks are overlapped. Hence, the size of the feature vector is \(9 \times 2 \times 2 \times 4 \times 4 = 576\).

3.3.2 Cell-based directional distribution

In this algorithm, the object pixels are distributed based on the directional information in each cell by masking 8 directional masks [17, 18] (size of each mask is \(3\times 3 \)). The size of the cell is \( 10\times 10 \) in this work. In each cell, the pixels distributed in a particular direction are accumulated and mapped into their respective bins. Hence for 25 cells, a total of \(8 \times 25 = 200\) features represent a pattern /character.

3.4 Feature selection techniques

Reducing dimensionality or removing irrelevant features is one of the major assignments in any recognition system [19, 20]. In the current work, feature dimensionality reduction is carried out by implementing optimization techniques such as Differential Evolution (DE) [19, 21] and Particle Swarm Optimization (PSO) [22, 23]. Reducing the features needed for learning improves predictive accuracy and memory requirement [24]. Let the randomly generated initial population for all these techniques be \( POP_{A} \) defined for \( Q_{p} \) individuals / patterns with D dimensions. Let \( \overrightarrow{A}_{i,g} \) be the \( i_{th} \) individual / pattern and \( a_{j,i,g} \) be its \( j_{th} \) dimension parameter in \( g_{th} \) generation. The brief details of these techniques are given below.

3.4.1 Differential evolution

It is a population-based stochastic approach introduced by Price and Storn in 1995. The four steps involved in DE are population initialization, mutation, crossover, and selection. Let the randomly generated \( i_{th} \) individual be \( \overrightarrow{A}_{i,g} \) and \( a_{j,i,g} \) be its \( j_{th} \) dimension feature in \( g_{th} \) generation. The fitness is measured for all the individuals in the population. In the current work, the classification error computed using k-NN (k-Nearest Neighbor) classifier is used as fitness function. Let the fitness measured for \( \overrightarrow{A}_{i,g} \) individual be \(f(\overrightarrow{A}_{i,g})\). The mutant individual \( \overrightarrow{B}_{i,g} \) is defined as follows [19]:

where \( r_{0} \), \( r_{1} \) and \( r_{2} \) are three random numbers generated. F \( \epsilon \) (0,1) controls the rate of population to evolve and is inversely proportional to max(\(a_{j,r_{1},g} \),\( a_{j,r_{2},g} \)). This allows the second term in equation (2) to oscillate within limits without crossing optimal solutions. DE crosses every individual vector with the mutant vector to define a trial vector \( \overrightarrow{U}_{i,g} \) as depicted in equation (3).

where \(\varnothing \) is a random value and \(C_{r} ~ \epsilon \) (0,1) defines the crossover rate. In the current work, crossover is set to 0.5. DE algorithm [19] employs roulette wheel to replace the duplicated features in the trial vector. The roulette wheel is designed by computing positive and negative distribution factors of the features [19].

The selection process in DE chooses either the original or the trial individual for the next generation, based on the fitness \( f(\overrightarrow{A}_{i,g})\), as depicted in equation (4), to produce the next generation individual \(\overrightarrow{A}_{i,g+1}\).

The four steps of DE are repeated for the new individuals in the population until the stopping criteria is reached, to find an optimum feature subset.

3.4.2 Particle swarm optimization

PSO developed by Kennedy and Eberhart [25] motivated from the behavior of the particles in the swarm. PSO starts with an initial swarm of particles in the search domain. Let the random position and velocity vectors of an \( i_{th} \) particle /individual be \( \overrightarrow{A}_{i,g} \) and \( \overrightarrow{V}_{i,g} \), respectively, at \( g_{th} \) generation. Each particle in the swarm flies with these initial positions and velocities in the search domain to attain the best fitness. In the current work, classification error is considered as the fitness function for PSO. At generation g, \( \overrightarrow{A}_{\mathrm{best}_{i}} \) and \( \overrightarrow{G}_{\mathrm{best}} \) are the best fitness positions achieved by the \( i_{th} \) particle/individual and the swarm/group, respectively.

For every generation, the velocities and positions of the particles are computed [22] using Eqs. (5) and (6), respectively, with linearly time varying inertia weight (\( \omega \)) over generations [26]:

where \( \varnothing _{1} \) and \( \varnothing _{2} \) are two numbers generated randomly over the range [0 1], \( c_{1} \) and \( c_{2} \) are cognitive and social acceleration constants, respectively, and \( \omega \) is given by

where \( \omega _{1} \) and \( \omega _{2} \) are the inertia weights, g is the current generation and \( g_{max} \) is the maximum number of generations. The optimum values [26] set for \( \omega _{1} \) and \( \omega _{2} \) are 0.9 and 0.4, respectively. A constant value 2 is set to \( c_{1} \) and \( c_{2} \) acceleration coefficients.

For every generation, the \( \overrightarrow{A}_{best_{i}} \) and \( \overrightarrow{G}_{best} \) positions of the \( i_{th} \) particle and the swarm are updated, respectively, based on the fitness computed for the updated positions of the particles until an optimum solution is achieved.

4 Experimental results

Traditionally scanned and camera-captured documents are prone to several distortions like uneven illumination, low resolution, perspective, and motion blur. These distortions may elevate the noise levels in degraded documents. The actual textual information gets effected, while suppressing these noise levels using the conventional noise removal approaches. However, no additional noise is added using the proposed depth sensing approach. With the specified stylus force (10mg) applied on the sample document, no damage caused to it.

4.1 Preprocessing

The proposed background subtraction algorithm extracts the foreground text even from stained documents. As the pressure applied by the scriber varies from line to line and from scriber to scriber, the threshold is computed for every line separately considering all the profiles in a line, as discussed in Sect. 3.2. The sample background-subtracted images are shown in Fig. 4b for the input text strings considered in Fig. 4a. Though there is a stain in string 1, the text is extracted properly after background subtraction.

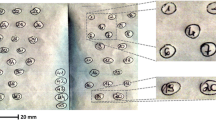

Each segmented character consists of X, Y, and Z data points. Selecting two coordinates at a time, patterns are generated in three different planes of projection viz., XY, YZ, and XZ. The patterns generated in all three planes are shown in Fig. 5a–d for some similar characters of Telugu. These Telugu characters have dissimilar patterns in YZ and XZ planes of projection which lead to improve the recognition accuracy.

4.2 Comparison of palm leaf character recognition approaches

The patterns generated in three planes of projection are trained and tested separately, to identify patterns generated in a plane that offers recognition of character patterns. Hence, the experiment is repeated for 3 times, for each plane of patterns. The number of characters considered for experimentation is 8745 from 53 different classes. All the characters are tested once by five-fold cross-validation.

With two different features extracted from the patterns, discussed in Sect. 3.3, the characters are classified using k-NN classifier (k=1). The cross-validated results obtained are compared with the existing and state-of-the-art methods which are tabulated in Table 1. The noise from the flat-bed scanned palm leaf documents in state-of-the-art method is removed using morphological operations such as dilation and erosion. The ‘HoG’ feature set yielded better classification accuracies in all three planes of projection. The best recognition rate obtained is 93.1% in YZ plane of projection using ‘HoG’ feature set. The maximum variations found for Telugu similar characters are in Y direction [7,8,9]. This inherent characteristic of Telugu script in combination with the 3D feature (depth of incision), Z, yielded better recognition accuracies in YZ plane of projection compared to the other planes of projection for all the cases. The recognition accuracies achieved in XZ plane of projection are better than that of in XY plane of projection. This is due to the contribution of Z in XZ plane of projection, which yielded better results compared to XY plane of projection. This proves that the recognition rate is improved using the 3D feature (depth).

Sastry et al. [7, 8] worked on basic isolated Telugu palm leaf characters, whereas the proposed work is done not only on basic characters but also on compound characters. These compound characters are obtained by combining vowels, consonants or both. In [7, 8] the data from palm manuscripts were acquired using a ‘Human–mechanical interface system,’ whereas in the current work the data are acquired using a sophisticated profiler.

In [7] radon transform was used to extract the features from the characters. Sastry et al. [8] recognized the palm leaf characters in two levels. In the first level, the characters were classified by extracting 2D-FFT features. The mis-recognized characters from the first level were again classified by extracting 2D-DCT features in the second level. They reported the best recognition rate of 92.8% in YZ plane of projection. This approach is computationally expensive as two sets of features were extracted for the characters.

The results reported in [7] are in line with the results obtained using the proposed approaches. The recognition accuracies are better in XY and XZ planes of projection using the proposed approaches compared to the one reported in [8]. When compared to the conventional or state-of-the-art method the character recognition results obtained with the proposed system (considering the Z feature) are better in both YZ and XZ planes.

4.3 Feature selection results

The recognition results obtained are better in YZ plane of projection, as discussed in the previous subsection for both the proposed and existing approaches. Hence in this subsection, optimization of features is carried out only for patterns obtained in YZ plane of projection. The two feature sets extracted from the patterns are concatenated and utilized to check the performance of the optimization techniques, such as DE and PSO. The subset size / number of dimensions (D) is varied from 5 to 100 features in the experiment, as the proper subset size is unpredictable. This is done because for larger subset sizes no improvement is achieved [19].

Each optimization technique is made to run with the same initial population \( POP_{A} \). The size of the population, \( Q_{p} \), is set to 40 and the maximum number of generations /iterations, \( g_\mathrm{max} \), is set to 100 for all the experiments. The fitness function is the classification error rate computed using k-NN classifier. The experiment is repeated for 20 times, for each subset size. The average classification rates for various subset sizes are shown in Fig. 6.

It has been reported in [19, 27] that the performance of DE is better than PSO and GA optimization techniques for various medical datasets. Even in the current work, it is observed that due to the exploitation and exploration capability associated with DE, it outperformed even for smaller subset sizes.

The best classification rate obtained with the combination of features extracted and PSO is 89.5% for an optimum feature subset size of 85. No improvement in recognition rate is found using PSO. The performance of DE with the combination of features extracted yielded the best recognition accuracy of 94.68% for an optimum feature subset size of 60. Thereby, the predictive classification accuracy is improved by reducing the features needed for learning [24].

5 Conclusion

In conventional scanned or camera-captured images, distortions like low resolution, perspective, and uneven illumination are common. For noisy or historical documents, these distortions may elevate the noise levels. The proposed 3D depth sensing approach subtracts the background and extracts the text from degraded palm manuscripts, even if the document has stains on it. No damage caused to the sample document, though the measurements are taken using a contact type stylus profiler.

Recognizing the similar Telugu characters is resolved using the depth information, ‘Z’. Patterns are developed in various planes of projection viz., XY, YZ, and XZ. The best recognition rate obtained is 93.1% in YZ plane of projection using ‘Histogram of Gradients’ feature set. The attribute of Telugu script in conjunction with the 3D feature, ‘Z’, yielded better recognition accuracies in YZ plane of projection compared to the other planes of projection.

To reduce feature dimensionality, optimization techniques such as Differential Evolution and Particle Swarm Optimization are implemented. The experiments are conducted by concatenating two sets of features and by varying the subset sizes. With reduced subset of features, the predictive accuracy is improved and the memory requirement is also reduced. The best recognition accuracy obtained for an optimum feature subset size of 60 is 94.68%, with Differential Evolution technique, in YZ plane of projection. Hence, there is a significant improvement in recognition rate for the reduced feature subsets considered using Differential Evolution technique.

The limitation of the proposed study is high scan time, due to this all the character samples were used in five-fold cross-validation. In future, the work will be extended for independent test set by acquiring more data. The other limitation of the proposed system is that it is applicable only for palm leaf manuscripts.

References

Bag, S., Harit, G., Bhowmick, P.: Recognition of Bangla compound characters using structural decomposition. Pattern Recognit. 47(3), 1187–1201 (2014)

Prabhakar, K., Kannan, R.J.: A comparative study of optical character recognition for Tamil script. J. Sci. Res. 35(4), 570–582 (2009)

Kannan, B., Jomy, J., Pramod, K.: Handwritten character recognition of South Indian scripts: A review. Computing Research Repository, vol. abs/1106.0107

Vijaya Lakshmi, T.R., Narahari Sastry, P., Rajinikanth, T.V.: A novel 3D approach to recognize Telugu palm leaf text. Int. J. Eng. Sci. Technol. 20(1), 143–150 (2017)

Narahari Sastry, P., Vijaya Lakshmi, T.R., Krishnan, R.K., Koteswara Rao, N.V.: Modeling of palm leaf character recognition using transform based techniques. Pattern Recognit. Lett. 84, 29–34 (2016)

Chamchong, C.R., Fung, C.: Generation of optimal binarisation output from ancient Thai manuscripts on palm leaves. Adv. Decis. Sci. 2015(925935), 1–7 (2015)

Sastry, P.N., Krishnan, R.: Isolated Telugu palm leaf character recognition using radon transform, a novel approach. In: World Congress on Information and Communication Technologies (WICT), pp. 795–802 (2012)

Sastry, P.N., Vijaya Lakshmi, T.R., Krishnan, R., Rao, N.: Analysis of Telugu palm leaf characters using multi-level recognition approach. J. Appl. Eng. Sci. 10(20), 9258–9264 (2015)

Vijaya Lakshmi, T.R., Sastry, P.N., Krishnan, R., Rao, N., Rajinikanth, T.V.: Analysis of Telugu palm leaf character recognition using 3D feature. In: International Conference on Computational Intelligence and Networks (CINE), pp. 36–41 (2015)

Garain, U., Paquet, T., Heutte, L.: On foreground–background separation in low quality document images. Int. J. Doc. Anal. Recognit. (IJDAR) 8(1), 47–63 (2006)

Chen, J., Lopresti, D.: Model-based ruling line detection in noisy handwritten documents. Pattern Recognit. Lett. 35, 34–45 (2014)

Eglin, V., Bres, S., Rivero, C.: Hermite and Gabor transforms for noise reduction and handwriting classification in ancient manuscripts. Int. J. Doc. Anal. Recognit. (IJDAR) 9(2–4), 101–122 (2007)

Şaykol, E., Sinop, A.K., Güdükbay, U., Özgür, U., Çetin, A.E.: Content-based retrieval of historical Ottoman documents stored as textual images. IEEE Trans. Image Process. 13(3), 314–325 (2004)

Kefali, A., Sari, T., Bahi, H.: Foreground–background separation by feed-forward neural networks in old manuscripts. Informatica (Slovenia) 38(4), 329–338 (2014)

Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), vol. 1. San Diego, CA, USA, pp. 886–893 (2005)

Sastry, P.N., Vijaya Lakshmi, T.R., Rao, N.V.K., Rajinikanth, T.V., Wahab, A.: Telugu handwritten character recognition using zoning features. In: International Conference on IT Convergence and Security (ICITCS), Beijing, pp. 1–4 (2014)

Vijaya Lakshmi, T.R., Sastry, P.N., Rajinikanth, T.V.: Hybrid approach for Telugu handwritten character recognition using k-NN and SVM classifiers. Int. Rev. Comput. Softw. 10(9), 923–929 (2015)

Khushaba, R.N., Al-Ani, A., Al-Jumaily, A.: Feature subset selection using differential evolution and a statistical repair mechanism. Expert Syst. Appl. 38(9), 515–526 (2011)

Vijaya Lakshmi, T.R., Sastry, P.N., Rajinikanth, T.V.: Feature optimization to recognize Telugu handwritten characters by implementing DE and PSO techniques. In: International conference on Frontiers in Intelligent Computing Theory and Applications, pp. 397–405 (2016)

Bharathi, P.T., Subashini, P.: Optimal feature subset selection using differential evolution with sequential extreme learning machine for river ice images. In: IEEE Region 10 Conference TENCON, Nov 2015, pp. 1–6 (2015)

Mirjalili, S., Hashim, S.Z.M., Sardroudi, H.M.: Training feed forward neural networks using hybrid particle swarm optimization and gravitational search algorithm. Appl. Math. Comput. 218(22), 125–137 (2012)

Mirjalili, S., Hashim, S.: A new hybrid PSOGSA algorithm for function optimization. In: International Conference on Computer and Information Application (ICCIA), Dec 2010, pp. 374–377 (2010)

Liu, H., Dougherty, E., Dy, J.G., Torkkola, K., Tuv, E., Peng, H., Ding, C., Long, F., Berens, M., Parsons, L., Zhao, Z., Yu, L.: Evolving feature selection. IEEE Intell. Syst. 20(6), 64–76 (2005)

Kennedy, J., Eberhartt, R.: Particle swarm optimization. In: Proceedings of IEEE International Conference on Neural Network, pp. 1942–1948 (1995)

Shi, Y., Eberhart, R.C.: Empirical study of particle swarm optimization. In: Proceedings of the 1999 Congress on Evolutionary Computation, vol. 3, p. 1950 (1999)

Das, S., Suganthan, P.N.: Differential evolution: a survey of the state-of-the-art. IEEE Trans. Evolut. Comput. 15(1), 4–31 (2011)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Vijaya Lakshmi, T.R., Sastry, P.N. & Rajinikanth, T.V. Feature selection to recognize text from palm leaf manuscripts. SIViP 12, 223–229 (2018). https://doi.org/10.1007/s11760-017-1149-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-017-1149-9