Abstract

Argument is a critical element of science instruction, as it serves as a tool for building student conceptual understanding and is the fundamental component of the knowledge construction process in science. The past two decades have witnessed a significant increase in research related to science argument. This chapter explores how scientific argument is fostered in science classrooms. In doing so, the authors will juxtapose embedded forms of argument with methods that teach argument as an external component to doing science. Specific examples from the research literature will be cited to highlight these critical differences and serve as a platform for the exploration of key questions such as: What is the role of language in science? And, what is the relationship between data and evidence? Additionally, the authors will provide evidence supporting an approach that scaffolds embedded argument within scientific investigations.



Introduction

Evolving understandings of the nature of science and the role of language in student learning have led to an increase in the emphasis placed on argument and argumentation in educational contexts, particularly in science education. The wave of argument-based interventions comes with a great deal of diversity in the characteristic nature of the interventions. Not only are the interventions diverse, but so are the methods by which they are studied. The diversity of interventions and methods for assessment is positive, but also has the potential to create a nebulous sea of argument. That is, much like the notion of inquiry, argument has the potential for becoming an instructional strategy that is undefined and therefore underutilized, yet always considered a positive technique to improve student learning. Like inquiry, the effectiveness of argument is dependent on the goal of instruction. To prevent argument from becoming an ill-defined instructional strategy in the translation from research to practice, researchers need to move toward understanding the particular aspects that are critical to moving students toward specific goals. This process seems to be underway at the level of intervention, but this is likely not enough. After all, there are particular interventions for inquiry that have been shown to move students toward defined goals, yet the translation to classroom application has still broken down. One method for identifying the critical components of argument would be to deconstruct interventions to isolate particular areas that prove to be effective. This method is possible in a controlled setting but becomes increasingly difficult in most classroom contexts. An alternative would be to continue to define aspects of argument and begin comparing key characteristics of the various argument-based interventions. This chapter will compare the use of language in argument-based interventions in an effort to stimulate further discussion about the variations in the argument in science education literature.

Language in Science

The focus on language, as viewed in terms of the concept of science literacy, has shifted many times over the last century. Much of the early focus on science literacy was ensuring that a learner could read the science textbook and use the words of science correctly. However, as suggested by a number of international standards and publications (Australian Curriculum, Assessment and Reporting Authority, 2009; National Research Council (NRC), 2007; Organisation for Economic Co-Operation and Development, 2003; The United Kingdom Department for Children, Schools, and Families, n.d.) the emphasis has changed from replication of terminology to a focus on the ability to use the language to build understanding of the topic and the ability to communicate to a broad audience the science knowledge gained from studying the topic. This shift in emphasis has meant that much more attention is now focused on the relationship between language and science. Students are now expected to do much more than simply remember lists of facts, be able to spell words correctly, or recognize and define the bold words in the science text. These international documents appropriately, although not explicitly, situate language as central to science with their emphasis on the epistemic nature of science.

Norris and Phillips (2003) are much more explicit about the role of language in scientific literacy when they define the two essential senses of literacy that frame science. The first is the derived sense of literacy in which “reading and writing do not stand only in a functional relationship with respect to science, as simply tools for the storage and transmission of science. Rather, the relationship is a constitutive one, wherein reading and writing are constitutive parts of science” (p. 226). For Norris and Phillips this is critical because these constituents are the “essential elements of the whole” (p. 226), that is, remove these language elements and there is no science. Science is not something that can be done without language. For the authors, Norris’ and Phillips’ derived sense of science literacy should also include the different modes of representation. While this is implicit within reading and writing, there is a need to understand that different modes of science are integral to the concept of reading and writing. Science is more than just text, as evidenced by the other modes used by scientists to construct understanding (e.g., graphs, equations, tables, diagrams, and models).

The second essential sense of literacy is the fundamental sense of science literacy. For Norris and Phillips the fundamental sense involves the “reasoning required to comprehend, interpret, analyse, and criticise any text” (p. 237). Importantly, they argue that science has to move past oracy and the oral traditions because “without text, the social practices that make science possible could not be engaged with” (p. 233). The important recording, presentation, and re-presentation of ideas, and debates and arguments that constitute the nature of the discipline are not possible without text. These two essential senses of literacy are critical to the development of scientific literacy. Simply viewing the acquisition of science content knowledge (the derived sense) as success denies the importance of being able to apply the reasoning structures of science (the fundamental sense) required for reading and writing about science. This emphasis on language is critical when discussing science argument and the learning of science. The vehicle for advancing science knowledge is argument, and argument is only advanced through the use of language. Without language science cannot advance.

Orientations Toward Learning Argument

A recent review of argument-based interventions by the first author suggests different positions have been adopted for learning science argument (Cavagnetto, 2010). The review of 54 articles that reported on argument interventions categorized argument-based interventions with regard to (a) when argument is used in the intervention, (b) what the interventions are designed to stimulate argument about, and (c) what aspects of science are present in the interventions. The author found three orientations toward argument were present in the research literature. The orientations are: (a) immersion in science for learning scientific argument (immersion), (b) learning the structure of argument to learn and apply scientific argument (structure), and (c) experiencing the interaction between science and society to learn scientific argument (socioscientific).

As suggested in Cavagnetto (2010), a number of the interventions are guided by the notion that it is best to learn scientific argument by embedding argument within investigative contexts. From this immersion orientation, argument serves as a tool for the construction of understanding of both the epistemic practices of science and scientific concepts. These interventions accomplish this through the use of scaffolding prompts and cognitive conflict. One such example is the ExplanationConstructor, a computer program that scaffolds students’ understanding of the relationship between investigation and explanation. A second example, which will be elaborated on in more detail later in the chapter, is the Science Writing Heuristic (SWH) approach (Hand & Keys, 1999). The SWH utilizes questions to prompt student construction and critique of arguments. Other interventions such as the use of personally seeded discussions (Clark and Sampson, 2007) and concept cartoons (Keogh & Naylor, 1999) attempt to establish cognitive conflict as a way to foster argument. Personally seeded discussions use a computer program to match opposing student explanations of natural phenomena. The students then engage in dialogue to determine which explanation captures the phenomena best. Concept cartoons use cartoons that depict scenarios centered on common misconceptions as a means to generate dialogue and interest in understanding the science principle. While argument is important in these interventions, it is in pursuit of understanding science content.

Another way to facilitate argument competence is by explicitly teaching a structure for argument and subsequently asking students to apply the structure. The structure orientation has been advanced primarily by the work published as part of the IDEAS project (Erduran, Simon, & Osborne, 2004; Osborne, Erduran, & Simon, 2004; Simon, Erduran, & Osborne, 2006; and von Aufschnaiter, Erduran, Osborne, & Simon, 2008) and the Claims, Evidence, and Reasoning structure identified in McNeill (2009); McNeill, Lizotte, Krajcik, and Marx (2006); and McNeill and Krajcik (2008). In the IDEAS project, students are taught Toulmin’s (1958) argument structure and then gain experience with its application across nine argument topics. In the published studies to date, students were most often asked to generate explanations by evaluating evidence for competing mechanisms for a phenomenon. Similarly, the claims, evidence, and reasoning structure reported by McNeill et al. was developed as a more digestible version of Toulmin’s structure. These interventions emphasize the structure of argument as a scaffold to critical thinking and a product of inquiry.

A third orientation toward learning scientific argument emphasizes the interaction between science and society, including moral, ethical, and political influences on decision making in scientific contexts. In these interventions, socioscientific and science, technology, society issues-based interventions are used as contexts for engaging students in argument. Argument then serves as a vehicle for students to gain an understanding of the social and cultural elements that influence science. This orientation has been advanced by Zeidler, Sadler, and colleagues. For example, Walker and Zeidler (2007) report on a policy-making debate about genetically modified foods. Students participated in explorations using web-based investigative software and applied their conceptual understandings in the policy-making debate. A similar intervention occurred in Sadler, Chambers, and Zeidler (2004). These interventions have primarily focused on realizing moral, ethical, and political considerations associated with the application of science knowledge rather than argument for constructing an understanding of scientific principles. However, Zeidler and Sadler now appear to be moving toward using socioscientific issues as a curricular context for courses of study (Fowler, Zeidler, & Sadler, 2009).

When looking across these three orientations, the diversity among argument-based interventions is evident. The diversity of the characteristics and goals of the interventions certainly illustrates a clear movement by the science education community to broaden the instructional goals from those historically found in school curricula or emphasized in textbooks. In addition to highlighting the diversity of these interventions, and for the authors more importantly, Cavagnetto’s characterization of the three orientations allows researchers to gain a clearer understanding of the perspective of language in which these orientations are founded.

There has been an ongoing debate about the best approach to introducing language instruction within classrooms, particularly science classrooms. The work of Halliday and Martin (1993) clearly emphasized the need for students to engage with the structure of the genres of science as a precursor to doing science. This position adopts the view that there is a need to learn to use the language prior to learning the science. For example, students need to learn the structure of the laboratory report prior to using the format to engage with laboratory activities. Gee (2004) has argued for the opposite position, contending that language must be embedded in the learning experience in order for students to gain a rich understanding of the disciplinary language. From Gee’s stance, language is viewed as a learning tool. That is, learning how to use the language is not separated from learning science.

While there is much debate about the relative merits at the extremes of these positions, Hand and Prain (2006) have argued for the need for some convergence of these positions. They believe there is a continuum of positions such that while there is a requirement for students to engage with the language of the discipline as a learning tool in order to learn the content, they also believe students need to understand the structure of the genres used within science. Klein (2006), in discussing the relative importance of first- and second-order cognitive science with respect to science literacy, also argues that there is no one position in terms of language that should be adopted. He suggests that in

the middle of the spectrum are practices that integrate expressive features of human thought and language with denotative features of authentic science text, such as concept mapping, graphing and the SWH. The result is that contemporary reforms in science literacy education accommodate students’ cognition and language, while preparing them to participate in disciplinary knowledge construction. Furthermore, the central hypothesis overarching these interpretations is that enhanced science literacy in the fundamental sense will result in improved understanding of the big ideas of science and fuller participation in the public debate about science, technology, society, and environment issues—the derived sense of science literacy. (p. 171)

The importance of recognizing the need to have some middle ground also applies to the concept of science argument. Much of the structure orientation (e.g., work done by Osborne et al. (2004)) is based on the work of Halliday and Martin. The work has focused on promoting argument as a structure to be learnt prior to using argument within class. As they suggest, “argument is a discourse that needs to be explicitly taught, through the provision of suitable activity, support, and modeling” (Simon et al., 2006, p. 237).

Conversely, the emphasis of the immersion orientation is to embed science argument within the context of doing inquiry, that is, students need to use science argument as a critical component of building understanding of the content. By using the scaffolds as a guide for completing the science inquiry, students are required to both construct understanding and build their understanding around an argument framework. Building on the writing to learn framework (Prain & Hand, 1996), there is a need for students to engage with the language of the science, the language of argument and all the negotiation of meaning that is required in moving between the various elements of the argument structure. Having students use science argument within the context of the topics that they have to build understanding of means that students are not separating the concept of argument from how knowledge is constructed in science.

Ford recognized this consistency among building understanding of science concepts and understanding of the epistemology of science. In his work related to the basis of authority in science, Ford (2008) argues for moving students toward a grasp of scientific practice because it facilitates the learning of scientific principles. As Ford contends, “a grasp of practice is necessary for scientists to participate in the creation of new knowledge because it provides an overview of its architecture and how to navigate it. A grasp of practice motivates and guides a search within this architecture for the informational content, indeed the meaning, of canonical scientific knowledge” (p. 406). For Ford, the epistemological nature of science serves as a framework for negotiating content.

This continuum of positions for introducing language has implications not only for broad characteristics of argument-based instructional interventions, but also for the way in which terms associated with science and argument are used within the argument-based interventions. One area that appears to illustrate these implications is the relationship between data and evidence. One example of the turbidity of the relationship between data and evidence is found in the National Research Council’s Ready, Set, Science! (p. 133). The book highlights a claim, evidence, and reasoning structure and provides the following explanation:

- Claim: What happened, and why did it happen?

- Evidence: What information or data support the claim?

- Reasoning: What justification shows why the data count as evidence to support the claim?

The NRC follows these points by suggesting that students who utilize this framework (and the curriculum of which this framework is a fundamental component) “make sense of the phenomena under study (claim), articulate that understanding (evidence), and defend that understanding to their peers” (p. 133). While we recognize that the claim, evidence, and reasoning structure is more clearly defined in McNeill et al. (2006), we would suggest that as characterized by the NRC, reasoning is undervalued. That is, the characterization suggests that reasoning occurs only at a defined point of inquiry rather than throughout as a critical aspect of the entire process. While we suspect that McNeill and colleagues may not have intended such an interpretation, practitioners are most likely to work from the NRC compilation rather than directly excavating ideas from the research literature. Therefore, this claim, evidence, and reasoning framework warrants discussion. Does this oversight stem from a mechanistic view of language? From a mechanistic perspective, the argument structure must be mastered and is therefore the focus. Argument appears to be a product of inquiry rather than a means of inquiry. To be instructed in the structure of argument, students do not need to interpret or analyze data. While we acknowledge that some of the structure-oriented interventions do include data analysis, many do not. In many instances, evidence is provided and students need to make a claim based on the evidence. This is found not only in some of the Investigating and Questioning our World through Science and Technology (IQWST) materials but also in the commonly cited Ideas, Evidence, and Argument in Science (IDEAS) materials. Importantly, we are not suggesting that these materials do not hold value; rather, we are contending that the structures that drive these interventions blur the lines between data and evidence.

Data and Evidence

What is the relationship between data and evidence? In framing this discussion we recognize that there are two orientations that are generally used within classrooms. The first is prepared arguments that are given to students, that is, examples that scientists have been involved with or textbook examples that students are required to use. The second is the argument-based inquiry activities that students undertake as part of the work in which they have to generate an argument from the activity. Our discussions are focused on the second orientation. In other words, we are focused on how students move from an inquiry activity to frame an argument as a consequence of the investigation. As such we need to address the use of such words as data and evidence.

Before moving to discuss the use of the words, we would state that for us the concept of immersion within the process of argument is a critical component of the learning process. Using language as a learning tool as the conceptual frame for this work means that using argument as a learning tool becomes a subset of this concept given that arguments are language based. That is, arguments are based in language where language is represented across all the modes necessary to frame the argument (e.g., mathematical, symbolic, textual, etc.). Arguments do not exist until we create them. The production of any argument involves a learner/participant in negotiating publicly and personally across a variety of settings. These include observations, written text (reading), oral text (debate), and the construction of written text (written report).

Data

What constitutes data? When doing an inquiry what is considered data? For most of the work we do in school science inquiries, and in science generally, data is taken as being the observations completed for the investigation. What is seen and recorded is generally taken as being the data obtained from the inquiry. We constantly encourage children to write down everything they observe—colors, smells, changes in position, and so forth. We try where possible to get them to be as diligent as they can be and to record these data in some systematic manner. This recording can be in a table format or in the form of accurate notes. Thus at the end of the investigation and before the write up takes place, we are anticipating that students will have a rich source of data to use as they move forward to complete the write up.

However, are data to be gained only from the hands-on activities where observation skills dominate? Does information from sources other than hands-on activities provide data? That is, when reading to see what others say about the investigation, whether the authors are scientists or other students, is this serving as evidence or data? When collecting information from these sources the learner is trying to build support for his/her argument. The information acts as data—the learner uses the information as part of the support for a particular line of argument.

The immediate question that needs to be asked is how does one use this data? We are constantly saying or are constantly told data does not speak! That is, there is no neon sign from nature explaining its thinking. So if data does not speak the question goes—how do we move from data to evidence?

Evidence

If data does not speak, does not tell us what is useful or not useful, does not tell us which points are related to each other, how do we generate evidence? What is necessary to move from data to evidence? Who makes decisions about what is good evidence? Sufficient evidence? Appropriate evidence? While there are a number of different teaching/learning strategies that can be discussed, theoretically we have to deal with the cognitive perspective.

Shifting from data to evidence requires us to engage in cognitive work. A student has to analyze and synthesize the data points into some coherent series. There are critical decisions that need to be made such as what to keep, what to discard, and how well the data points are connected. That is, data does not speak and so the learner has to apply some critical thinking and reasoning to be able to make decisions to produce the required evidence he/she needs to make an argument. Thus, data plus reasoning result in evidence.

This distinction is important because we know that students have difficulty in moving from a series of observation points to constructing a logical line of reasoning that allows them to connect the data points in some coherent manner. The learner has to negotiate between their prior ideas and beliefs and what they have collected as observations, that is, their data. Evidence is not separate from reasoning. The problem with a structure that highlights a claim, evidence, and reasoning approach is that evidence construction appears to occur separately from reasoning.

This concept of reasoning is further highlighted when we ask students to move to determine what scientists may say about the ideas they are exploring. Students have to negotiate with the text they are reading to understand what is being described, and then negotiate with themselves in terms of what knowledge they can associate with that being described in text. We need to be constantly asking them why they use particular bits of information (data) from text in support of the claim they wish to make.

Students do not choose which information or data point to use and then reason about it. They choose the point because they have to think critically and reason in the process of choosing. While this may be taken as a given, a structure that appears to separate reasoning from evidence has great potential to lead teachers and learners to think that providing an answer for each is a separate process. First you provide evidence and then you supply the reasoning. The question is how they can be separate.

Argument-Based Inquiry: An Immersion Experience

After a chance meeting at the NARST conference in Chicago in 1997 one of the authors (Hand) collaborated with Carolyn Keys to explore the idea of building a framework that would link inquiry, argumentation, and an emphasis on language. The result was the development of the SWH approach. The SWH approach consists of a framework to guide activities as well as a metacognitive support to prompt student reasoning about data. Similar to Gowin’s vee heuristic (1981, p. 157), the SWH provides learners with a heuristic template to guide science activity and reasoning in writing. Further, the SWH provides teachers with a template of suggested strategies to enhance learning from laboratory activities (see Table 3.1). As a whole, the activities and metacognitive scaffolds seek to provide authentic meaning-making opportunities for learners. As suggested in the teacher template, the negotiation of meaning occurs across multiple formats for discussion and writing. The approach is conceptualized as a bridge between informal, expressive writing modes that foster personally constructed science understandings, and more formal, public modes that focus on canonical forms of reasoning in science. In this way the heuristic scaffolds learners in both understanding their own lab activity and connecting this knowledge to other science ideas. The template for student thinking (see Table 3.1) prompts learners to generate questions, claims, and evidence for claims. It also prompts them to compare their laboratory findings with others, including their peers and information in the textbook, Internet, or other sources. The template for student thinking also prompts learners to reflect on how their own ideas have changed during the experience of the laboratory activity. The SWH can be understood as an alternative format for laboratory reports, as well as an enhancement of learning possibilities of this science genre. Instead of responding to the five traditional sections (purpose, methods, observations, results, and conclusions), students are expected to respond to prompts eliciting questioning, knowledge claims, evidence, description of data and observations, and methods, and to reflect on changes to their own thinking.

While the SWH recognizes the need for students to conduct laboratory investigations that develop their understanding of scientific practice, the teachers’ template also seeks to provide a stronger pedagogical focus for this learning. In other words, the SWH is based on the assumption that science writing genres in school should reflect some of the characteristics of scientists’ writing, but also be shaped as pedagogical tools to encourage students to “unpack” scientific meaning and reasoning. The SWH is intended to promote both scientific thinking and reasoning in the laboratory, as well as metacognition, where learners become aware of the basis of their knowledge and are able to monitor more explicitly their learning. Because the SWH focuses on canonical forms of scientific thinking, such as the development of links between claims and evidence, it also has the potential to build learners’ understandings of the nature of science, strengthen conceptual understandings, and engage them in authentic argumentation processes of science.

The SWH emphasizes the collaborative nature of scientific activity, that is, scientific argumentation, where learners are expected to engage in a continuous cycle of negotiating and clarifying meanings and explanations with their peers and teacher. In other words, the SWH is designed to promote classroom discussion where students’ personal explanations and observations are tested against the perceptions and contributions of the broader group. Learners are encouraged to make explicit and defensible connections between questions, observations, data, claims, and evidence. When students state a claim for an investigation, they are expected to describe a pattern, make a generalization, state a relationship, or construct an explanation.

The SWH promotes students’ participation in setting their own investigative agenda for laboratory work, framing questions, proposing methods to address these questions, and carrying out appropriate investigations. Such an approach to laboratory work is advocated in many national science curriculum documents on the grounds that this freedom of choice will promote greater student engagement and motivation with topics. However, in practice much laboratory work follows a narrow teacher agenda that does not allow for broader questioning or more diverse data interpretation. When procedures are uniform for all students, when data are similar, and when claims match expected outcomes, the reporting of results and conclusions often lacks opportunities for deeper student learning about the topic or for developing scientific reasoning skills. To address these issues the SWH is designed to provide scaffolding for purposeful thinking about the relationships between questions, evidence, and claims.

Research on the SWH Approach

A number of quasi-experimental studies have been conducted to test the efficacy and impact of the SWH approach. These include the following:

A comparison between traditional teaching approaches and the SWH approach. Traditional teaching refers to the approaches that the teachers were using at the time of the study. In the first study this involved didactic teaching and some laboratory activities, while in the second study this involved using student recipe-type laboratory activities and report formats.

-

A study by Akkus, Gunel, and Hand (2007) examined if there was a difference in student test performance between high levels of traditional science teaching and high-quality SWH implementation, with seven teachers. The results from the teacher-generated tests were very interesting in that the study looked at the difference between high achievers and low achievers in each group. The difference between high achievers in each group was not significant—they were essentially equal. However, when comparing the effect size difference between high and low achievers the following results were obtained: for the high traditional teaching the effect size difference was 1.23 between high and low achievers, while for the high SWH teaching the effect size difference was only 0.13. These results are very encouraging and indicate that the SWH approach is effective for all learners in the classroom.

- A study by Rudd, Greenbowe, and Hand (2007) was carried out to determine the effectiveness of using the SWH approach in university freshman general chemistry laboratory activities compared to the traditional formats used, with particular focus on the topic of equilibrium. To determine whether students in the SWH or standard sections exhibited better understanding of the concept of chemical equilibrium, the student explanations on the lecture exam problem were analyzed using mean explanation scores. Using the baseline knowledge score as a covariate, the ANCOVA results (F = 4.913; df = 1, 49; p = 0.031) indicated a statistically significant association between higher explanation scores and the SWH format. The SWH sections demonstrated a greater ability than the standard sections to identify the equilibrium condition and to explain aspects of equilibrium, despite having a lower baseline knowledge score.

- A study by Hohenshell and Hand (2006) examined the difference in performance between Year 10 biology students who completed laboratory activities using traditional approaches and those who used the SWH approach. The study examined students’ test performance immediately after completing all the laboratory activities and then again after completing a written summary report of the unit of study. Results from the first round of testing indicated that there were no significant differences on recall and conceptual question scores between the control group (traditional laboratory approaches and report format) and the treatment group (SWH approach). However, on the second round of testing, after completing the summary writing activities, the SWH students scored significantly better on conceptual questions than the control students (F(1, 43) = 5.53, p = 0.023, partial η² = 0.114).
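As a quick consistency check on the statistics reported for the Hohenshell and Hand (2006) study, partial η² can be recovered from an F ratio and its degrees of freedom as (F × df_effect) / (F × df_effect + df_error). The sketch below is illustrative only and is not part of the original analysis; the function name is hypothetical, and it assumes the reported degrees of freedom are read as 1 and 43.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    if df_effect <= 0 or df_error <= 0:
        raise ValueError("degrees of freedom must be positive")
    # F * df_effect equals SS_effect / MS_error, so the ratio below
    # simplifies to SS_effect / (SS_effect + SS_error).
    scaled_effect = f_value * df_effect
    return scaled_effect / (scaled_effect + df_error)


# Values as reported above, reading the degrees of freedom as (1, 43).
eta_p2 = partial_eta_squared(5.53, 1, 43)
print(round(eta_p2, 3))  # 0.114
```

Under that reading of the degrees of freedom, the computed value rounds to the reported partial η² of 0.114.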

Studies examining the impact of the quality of implementation of the SWH approach on student success on examinations. The purpose of these studies was to begin the process of determining the importance of adopting the particular strategies required when using the SWH approach. Rather than compare the SWH approach to traditional approaches, these studies compared student performance resulting from different levels of implementation of the SWH approach.

- A National Science Foundation-funded project that adapted the SWH approach to freshman general chemistry laboratory activities for science and engineering majors demonstrated that the quality of implementation affects performance on standardized tests and positively affects the performance of females and low-achieving students, two groups that are viewed as disadvantaged in science classrooms. Comparing low and high implementation of the SWH approach yielded the following results for students’ scores on American Chemical Society (ACS) standardized tests. On the pretest (ACS California diagnostic test), the difference between students with high-implementing teaching assistants (TAs) and students with low-implementing TAs, measured as Cohen’s d, was 0.07. At the end of the semester, the difference between the two groups on the ACS end of semester 1 test was 0.45 (medium effect size). The gap between males and females decreased from 0.45 (medium effect) on the pretest to 0.04 (no effect) on the posttest, while the gap between high- and low-achieving students decreased from 2.7 (large effect) to 0.7 (large effect) (Poock, Burke, Greenbowe, & Hand, 2007).

- In a study by Mohammad (2007) of a one-semester freshman chemistry course for students in the “soft” sciences (agricultural science, food science, etc.) at the same university, similar results were obtained, particularly the benefits to females under high implementation of the SWH approach.

-

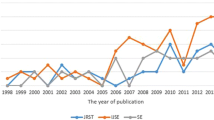

In a study of six middle/secondary school science teachers, Gunel (2006) tracked the impact of implementation of the SWH approach on students’ performance on the Iowa Test of Basic Skills (ITBS)/Iowa Test of Educational Development science tests across a 3-year period. His results show that for teachers who remained low across the period, the magnitude of effect size change in students’ scores ranged from 0 to 0.4. For the teacher who shifted from traditional instruction to high-level implementation, there was an effect size change in his students’ scores of 1.0 across the 3-year time period.
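Several of the comparisons above are reported as effect sizes, and the ACS comparison names Cohen’s d explicitly. For readers less familiar with the statistic, the following is a minimal sketch of one common form of Cohen’s d, the difference in group means divided by the pooled sample standard deviation. It is an assumption that this form matches the calculations in the cited studies; the function name and the scores are hypothetical and invented purely for illustration.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    if pooled_var == 0:
        raise ValueError("pooled variance is zero; d is undefined")
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical scores, not data from any study cited in this chapter.
high_implementation = [2, 4, 6]
low_implementation = [1, 3, 5]
print(cohens_d(high_implementation, low_implementation))  # 0.5
```

By Cohen’s conventional benchmarks, values near 0.2, 0.5, and 0.8 are usually described as small, medium, and large, which is consistent with the chapter labeling 0.45 a medium effect.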

Studying the impact at the elementary level. Results from work done at the elementary level reflect those obtained from the other studies at middle/secondary and college level.

Results from a 3-year State of Iowa-funded project involving 32 K-6 teachers who implemented the SWH approach for teaching science again supported the previous results on the quality of implementation. The results reported are for the first 2 years of the project. Teachers were rated as low, medium, or high in their implementation (no teacher was rated as high), and their students’ performance on the ITBS science test was tracked. Grade equivalency growth scores were calculated and used so that teacher ranking could serve as the dependent variable, given that the analysis collapsed teachers into the low or medium level regardless of grade level. The results show significant differences in ITBS science scores between students whose teachers were low implementers and students whose teachers were medium implementers of the SWH approach. This gap increased from an effect size of 0.073 in year 1 to an effect size of 0.268 in year 2. The gap between low SES students in low and medium implementation classrooms in year 1 (effect size 0.291) was almost the same as in year 2 (effect size 0.284). The gap between IEP students in low and medium implementation classrooms grew from year 1 (effect size 0.158) to year 2 (effect size 0.229).

The importance of such studies is that they begin to provide evidence for the claim that argument-based inquiry practices are valuable in promoting learning opportunities within science classrooms. The studies described above provide evidence that the SWH is an argument-based inquiry approach that can be used across a broad range of ages. While we recognize that the sophistication of constructed arguments varies across grade levels, the critical point for the authors is that children can be involved in the formation of arguments at an early age. This is important: we can involve all children in building science arguments by introducing a question, claims, and evidence approach to doing science. Students can be involved in constructing and critiquing arguments and in differentiating between data and evidence, and as a consequence can improve their understanding of science concepts.

Conclusion

For the authors, argument should be central to school science primarily because it is a vehicle for students to develop their understandings of scientific principles. We find this important because we feel the current move toward argument has taken argument structure as its end goal. While we acknowledge that rhetorical structures are important for participation in science, we believe that the structural level offered by models such as Toulmin’s (1958) is simply too defined to be a practical scaffold for learning science. We align with Ford (2008), who suggests that understanding the practice of science is important because it allows students to understand scientific principles as scientists understand them.

As suggested by the previously cited work on the SWH approach, public negotiations require students to engage with science principles at a level not achieved by traditional instruction. Consequently, argument has the potential to increase student achievement on standardized metrics such as national and state exams. While there is some evidence of student achievement on standardized metrics with the use of the SWH approach, most argument interventions choose not to focus on this politically critical aspect of achievement. As such, it is difficult to make broad empirical claims about the benefits of argument for learning science content. This is a critical point for science educators because the lack of recognition of argument as a vehicle for learning content reduces the potential policy impact of argument in science. While argument has been recognized as an important goal for science education in documents like Ready, Set, Science, it is not recognized as an important goal by school administrators and teachers, who are judged on students’ abilities to pass content-based standardized exams. Being armed with numbers indicating increased student performance on such measures allows a stronger case to be made for its inclusion. Using standardized exams as metrics for measuring argument-based interventions would also allow the research community to benefit more from individual contributions. Currently, it is difficult to conduct any meta-analysis of current findings because each group of researchers tends to use different outcome measures. While we recognize that research has specific questions that require unique methods of analysis, we feel it is important to highlight that content matters. That is, the level of sophistication of argument is linked not only to rhetorical structure but also to student content understanding.

References

Akkus, R., Gunel, M., & Hand, B. (2007). Comparing an inquiry based approach known as the Science Writing Heuristic to traditional science teaching practices: Are there differences? International Journal of Science Education, 29, 1745–1765.

Australian Curriculum, Assessment and Reporting Authority. (2009, May). Shape of the Australian curriculum: Science. Retrieved December 28, 2009, from http://www.acara.edu.au

Cavagnetto, A. R. (2010). Argument to foster scientific literacy: A review of argument interventions in K-12 science contexts. Review of Educational Research, 80(3), 336–371.

Clark, D. B., & Sampson, V. D. (2007). Personally-seeded discussions to scaffold online argumentation. International Journal of Science Education, 29(3), 253–277.

Erduran, S., Simon, S., & Osborne, J. (2004). TAPping into argumentation: Developments in the application of Toulmin’s argument pattern for studying science discourse. Science Education, 88(6), 915–933.

Ford, M. J. (2008). Disciplinary authority and accountability in scientific practice and learning. Science Education, 92(3), 404–423.

Fowler, S., Zeidler, D., & Sadler, T. (2009). Moral sensitivity in the context of socioscientific issues in high school science students. International Journal of Science Education, 31(2), 279–296.

Gee, J. P. (2004). Language in the science classroom: Academic social languages as the heart of school-based literacy. In E. W. Saul (Ed.), Crossing borders in literacy and science instruction: Perspectives on theory and practice (pp. 13–32). Arlington, VA: NSTA Press.

Gowin, D. B. (1981). Educating. Ithaca, NY: Cornell University Press.

Gunel, M. (2006). Investigating the impact of teachers’ implementation practices on academic achievement in science during a long-term professional development program on the Science Writing Heuristic. Unpublished PhD thesis, Iowa State University.

Halliday, M. A. K., & Martin, J. R. (1993). Writing science: Literacy and discursive power. London: Falmer Press.

Hand, B., & Prain, V. (2006). Moving from crossing borders to convergence of understandings in promoting science literacy. International Journal of Science Education, 28, 101–107.

Hand, B., & Keys, C. (1999). Inquiry investigation. The Science Teacher, 66(4), 27–29.

Hohenshell, L., & Hand, B. (2006). Writing-to-learn strategies in secondary school cell biology. International Journal of Science Education, 28, 261–289.

Keogh, B., & Naylor, S. (1999). Concept cartoons, teaching and learning in science: An evaluation. International Journal of Science Education, 21(4), 431–446.

Klein, P. D. (2006). The challenges of scientific literacy: From the viewpoint of second-generation cognitive science. International Journal of Science Education, 28, 143–178.

McNeill, K. L. (2009). Teachers’ use of curriculum to support students in writing scientific arguments to explain phenomena. Science Education, 93(2), 233–268.

McNeill, K. L., & Krajcik, J. (2008). Scientific explanations: Characterizing and evaluating the effects of teachers’ instructional practices on student learning. Journal of Research in Science Teaching, 45(1), 53–78.

McNeill, K., Lizotte, D., Krajcik, J., & Marx, R. (2006). Supporting students’ construction of scientific explanations by fading scaffolds in instructional materials. Journal of the Learning Sciences, 15(2), 153–191.

Mohammad, E. G. (2007). Using the science writing heuristic approach as a tool for assessing and promoting students’ conceptual understanding and perceptions in the general chemistry laboratory. PhD thesis, Iowa State University. Dissertation Abstracts International, 68(07), Section A, 2792 (Publication No. AAI3274832).

National Research Council. (2007). Taking science to school: Learning and teaching science in grades K-8. Washington, DC: The National Academies Press.

Norris, S. P., & Phillips, L. M. (2003). How literacy in its fundamental sense is central to scientific literacy. Science Education, 87(2), 224–240.

Organisation for Economic Co-Operation and Development. (2003). The PISA 2003 assessment framework. Retrieved December 28, 2009, from http://www.pisa.oecd.org

Osborne, J., Erduran, S., & Simon, S. (2004). Enhancing the quality of argumentation in school science. Journal of Research in Science Teaching, 41(10), 994–1020.

Poock, J. A., Burke, K. A., Greenbowe, T. J., & Hand, B. M. (2007). Using the Science Writing Heuristic to improve students’ academic performance. Journal of Chemical Education, 84, 1371–1379.

Prain, V., & Hand, B. (1996). Writing for learning in secondary science: Rethinking practices. Teaching and Teacher Education, 12(6), 609–626.

Rudd, J. A., II, Greenbowe, T. J., & Hand, B. M. (2007). Using the Science Writing Heuristic to improve students’ understanding of general equilibrium. Journal of Chemical Education, 84, 2007–2011.

Sadler, T., Chambers, F., & Zeidler, D. (2004). Student conceptualizations of the nature of science in response to a socioscientific issue. International Journal of Science Education, 26(4), 387–409.

Simon, S., Erduran, S., & Osborne, J. (2006). Learning to teach argumentation: Research and development in the science classroom. International Journal of Science Education, 28(2–3), 235–260.

Toulmin, S. (1958). The uses of argument. Cambridge: Cambridge University Press.

United Kingdom Department for Children, Schools, and Families. (n.d.). The national strategies. Retrieved December 28, 2009, from http://nationalstrategies.standards.dcsf.gov.uk/

von Aufschnaiter, C., Erduran, S., Osborne, J., & Simon, S. (2008). Arguing to learn and learning to argue: Case studies of how students’ argumentation relates to their scientific knowledge. Journal of Research in Science Teaching, 45(1), 101–131.

Walker, K. A., & Zeidler, D. L. (2007). Promoting discourse about socioscientific issues through scaffolded inquiry. International Journal of Science Education, 29(11), 1387–1410.

© 2012 Springer Science+Business Media B.V.

About this chapter

Cite this chapter

Cavagnetto, A., Hand, B. (2012). The Importance of Embedding Argument Within Science Classrooms. In: Khine, M. (eds) Perspectives on Scientific Argumentation. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-2470-9_3

DOI: https://doi.org/10.1007/978-94-007-2470-9_3

Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-2469-3

Online ISBN: 978-94-007-2470-9
