Abstract

Rapid depletion of global fertilizer and fossil fuel reserves, combined with concerns about global warming, has resulted in increased interest in alternative strategies for sustaining agricultural production. Moreover, many farmers are being caught in a vicious spiral of unsustainability related to depletion and degradation of land and water resources, increasing labor and input costs, and decreasing profit margins. To reduce their dependence on external inputs and to enhance inherent soil fertility, farmers may, thus, opt to employ farm-generated renewable resources, including cover crops. However, the perceived risks and complexity of cover-crop-based systems may prevent their initial adoption and long-term use. In this review article, we provide a historic perspective on cover-crop use, discuss its current revival in the context of the promotion of green technologies, and outline key selection and management considerations for the effective use of cover crops.

Based on reports in the literature, we conclude that cover crops can contribute to carbon sequestration, especially in no-tillage systems, whereas such benefits may be minimal for frequently tilled sandy soils. By providing a natural soil cover, they reduce erosion while enhancing the retention and availability of both nutrients and water. Moreover, cover-crop-based systems provide a renewable N source and can also be instrumental in weed suppression and pest management in organic production systems. Selection of species that provide multiple benefits, design of sound crop rotations, and improved synchronization of nutrient-release patterns with the demands of subsequent crops are among the most critical technical factors for enhancing the overall performance of cover-crop-based systems. Especially under adverse conditions, the use of mixtures with complementary traits enhances their functionality and resilience. Since traditional research and extension approaches tend to be unfit for developing cover-crop-based systems adapted to local production settings, other technology development and transfer approaches are required. The demonstration of direct benefits and the active participation of farmers during the system design, technology development, and transfer phases were shown to be critical for effective adaptation and diffusion of cover-crop-based innovations within and across farm boundaries. In conclusion, more widespread adoption of cover crops will require policies that provide farmers with technical support and financial incentives to reward them for providing ecological services.

Keywords

- Cover crops

- green technologies

- management

- sustainable agrosystems

- carbon sequestration

- Americas

- pest control

- tillage

- rotation

- weeds

- nematode

- crimson clover

- winter rye

- black oats

- living mulch

- citrus

- broccoli

- forage

- ecological service

- adaptation

- green manure

2.1 Introduction

“Cover crops” are herbaceous plants that alternate with commercial crops during fallow periods to provide a favorable soil microclimate, minimize soil degradation, suppress weeds, and enhance inherent soil fertility (Sarrantonio and Gallandt 2003; Sullivan 2003; Anderson et al. 2001; Giller 2001). “Green manures” are cover crops used primarily as a soil amendment and nutrient source for a subsequent crop (Giller 2001). “Living mulches” are cover crops grown simultaneously with commercial crops to provide a living mulch layer throughout the season (Hartwig and Ammon 2002). For the purpose of this review, we will not distinguish among these uses and will instead use the term cover crop in its broadest sense.

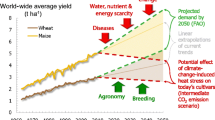

Historically, cover crops have been effective in closing nutrient cycles and were an integral part of the food production systems that gave rise to modern agriculture (Drinkwater and Snapp 2007; McNeill and Winiwarter 2004; Pieters 1927). However, during the second half of the twentieth century, the “contemporary agricultural revolution” resulted in an uncoupling of C and N cycles (Drinkwater and Snapp 2007; Mazoyer and Roudart 2006). As an integral part of this revolution, the use of inorganic fertilizer greatly increased, since these materials provide growers with a concentrated and custom-designed nutrient source (Smil 2001). The contemporary agricultural revolution, thus, directly contributed to an erosion of traditional techniques for sustaining inherent soil fertility, including the use of cover crops (Baligar and Fageria 2007; Sarrantonio and Gallandt 2003; Gliessman et al. 1981). Farmers throughout the Americas are increasingly being caught in a vicious spiral of unsustainability related to depletion and degradation of land and water resources, increasing labor and input costs, and decreasing profit margins (Cherr et al. 2006b; Dogliotti et al. 2005). In many cases, farmers were forced either to increase family income via intensification, specialization, and production of cash crops, or to abandon their operations (Dogliotti et al. 2005).

Given current concerns about global warming and the rapid depletion of fertilizer and fossil fuel reserves, agriculture is required to provide more diverse ecological services and to make more efficient use of natural and renewable resources (van der Ploeg 2008; Cherr et al. 2006b). Within this context, improved integration of cover crops may once again become the cornerstone of sustainable agroecosystems (Baligar and Fageria 2007). However, the development of functional cover-crop-based systems will require a more integrated, system-based approach rather than a reinstatement of traditional production practices. The scope of this paper is to (i) provide a historic perspective on the use of cover-crop-based systems in agroecosystems; (ii) document specific services and benefits provided by cover crops, with special reference to their use in the Americas; (iii) discuss selection procedures for cover crops; (iv) outline key management aspects that facilitate the integration and performance of cover crops in agrosystems; and (v) discuss potential limitations and challenges during the design and implementation of cover-crop-based systems.

2.2 Historic Perspective

Starting at the cradle of agriculture in southwest Asia, farmers utilized leguminous crops, including peas and lentils, to restore inherent soil fertility and to sustain grain crop production (McNeill and Winiwarter 2004). In England, fallows were replaced by clovers in grain–turnip production systems to improve soil fertility, whereas in the Americas, beans were used for this purpose (Russell 1913). During the early 1800s, continuous population growth and urbanization in Western Europe and the New World required more concentrated forms of fertilizer, such as mined guano deposits, to offset declining inherent soil fertility, but this resource was both scarce and relatively expensive (McNeill and Winiwarter 2004). During the 1870s, mucuna (Mucuna pruriens) was introduced in Florida as a forage crop, and by 1897 hundreds of citrus growers were using it both as an affordable means of improving soil fertility and as forage (Crow et al. 2001; Buckles et al. 1998; Tracey and Coe 1918). Mucuna was introduced in Guatemala during the 1920s as a forage source and as a rotational crop for maize-based systems; its use then spread spontaneously to farmers in neighboring countries (Giller 2001). In Uruguay, vetch (Vicia villosa Roth) and oats (Avena sativa L.) were introduced as green manures in vineyards around 1960, but this practice was discontinued due to the increased supply of inexpensive fertilizer and the lack of suitable cultivation tools (Selaya Garvizu 2000).

Annual winter cover crops were an integral part of many North American cropping systems during the first part of the last century (Pieters 1927). However, their use was gradually abandoned after inexpensive synthetic fertilizer became available during the 1950s, providing growers with concentrated nutrient sources that could be easily managed (Tonitto et al. 2006; Smil 2001). As a result, soil fertility strategies shifted from building SOM and inherent soil fertility via sound crop rotations and supplementary use of (in)organic nutrient sources to a system dominated by external inputs used to boost labile nutrient pools and crop yields (Drinkwater and Snapp 2007). Moreover, externalities associated with the excessive use of agrochemicals were typically ignored while inherent system functions and services were gradually lost (Cherr et al. 2006b). Additionally, the shift toward large-scale and highly specialized operations diminished the inherent diversity and resilience of local agricultural production systems (van der Ploeg 2008; Shennan 2008; Baligar and Fageria 2007; Cherr et al. 2006b).

In terms of awareness of the potential negative aspects of industrialized agriculture, the “great dust bowl” that occurred in the USA in the 1930s gave rise to increased emphasis on soil conservation, including the use of cover crops (Hartwig and Ammon 2002). During the 1970s, externalities associated with maintaining large labile nutrient pools became a major concern, and practices were proposed to reduce environmental impacts, including the use of cover crops (Drinkwater and Snapp 2007; Mays et al. 2003; Dabney et al. 2001). Although agricultural development resulted in an unprecedented increase in productivity, it also promoted increased specialization and required substantial capital investments, while “real” prices of agricultural commodities dropped by a factor of 2–4 between 1950 and 2000. Small farmers in particular were unable to adapt to this transition, and most of them were forced to abandon farming (Mazoyer and Roudart 2006). Moreover, in many developing regions, green revolution technologies were less effective in more adverse, risk-prone, and resource-limited production environments (Shennan 2008; El-Hage Scialabba and Hattam 2008). During the 1960s, a modified form of the agricultural revolution occurred in Latin America, which involved investments in local infrastructure, access to loans, improved inputs, and price subsidies (Mazoyer and Roudart 2006). However, in Brazil, increased mechanization and intensification of agriculture in hilly regions resulted in rampant erosion and soil degradation, which undermined the inherent production capacity of local production systems (Prado Wildner et al. 2004).

During the 1980s, adoption of cover crops as part of conservation technologies by farmers in southern Brazil increased exponentially (Calegari 2003; Landers 2001). This process has gradually reversed the degradation of the natural production base, since farmers were able to partially restore SOM levels and also reduce their dependence on external inputs. This success story inspires confidence in the potential of cover-crop-based technology to reverse the downward spiral of unsustainability that still prevails in many regions. The unprecedented expansion of no-tillage technology in this region was clearly driven by farmers who actively engaged in technology development and transfer; combined with favorable government policies, this farmer-driven process greatly facilitated scaling out at the regional level. In contrast, the use of no-tillage and/or cover crops by commercial vegetable growers in the southeastern USA was limited, owing to the high crop value and the risk-averse behavior of conventional producers (Phatak et al. 2002). However, increased concerns related to environmental quality, energy use, and global warming have resulted in a shift toward resource preservation, with an increased focus on sustainability and/or ecologically based (organic) production systems (Shennan 2008; Ngouajio et al. 2003; Hartwig and Ammon 2002; Lu et al. 2000). In summary, although cover crops were largely abandoned with the advent of green revolution technologies, the current interest in green technologies is once more making them a cornerstone of sustainable agrosystems (Baligar and Fageria 2007; Cherr et al. 2006b; Sullivan 2003; Phatak et al. 2002; Shennan 1992).

2.3 Services and Benefits

Regarding the use of cover crops, it is important to distinguish “ecosystem goods” from “ecosystem services” (Shennan 2008). From a producer’s perspective, cultivating a cover crop may yield direct forage benefits and improved grain yields in integrated systems, while from a policy perspective it also provides environmental benefits, e.g., erosion control and clean drinking water. Adoption of cover-crop-based systems tends to be strongly influenced by how different stakeholders perceive the (direct) benefits that cover crops will provide under local conditions; increased awareness of such services is, thus, critical (Anderson et al. 2001). An overview of a number of these direct and indirect services is provided in Fig. 2.1, while specific aspects are discussed in more detail below.

2.3.1 Soil Organic Matter

Maintaining soil organic matter (SOM) is critical for sustaining soil quality and crop productivity, especially in the absence of external inputs (Fageria et al. 2005; Sarrantonio and Gallandt 2003). Cover crops may increase the SOM content of the soil provided that the rate of SOM addition exceeds the rate of SOM breakdown (Calegari 2003; Sullivan 2003). The use of cover crops and the presence of crop residues and SOM have all been linked to improved soil aggregation and structure and to enhanced water infiltration, retention, drainage, and soil aeration, thus reducing runoff and erosion (Sainju et al. 2007; Fageria et al. 2005; Dabney et al. 2001; Miyao and Robins 2001; Creamer et al. 1996b; Gulick et al. 1994; Derpsch et al. 1986). Increasing SOM also favors root growth, available water capacity (AWC), effective soil water storage, and potential yield in water-limited environments (Sustainable Agriculture Network 2007; Fageria et al. 2005; Anderson et al. 2001; Derpsch et al. 1986). Hudson (1994) reviewed historic data sets on the effect of SOM on AWC and showed that AWC increased by 2.2–3.5% for each percent increase in SOM. Increased SOM also greatly improves cation retention and, through complexation and mineralization of nutrients, greatly improves crop nutrient availability (Anderson et al. 2001). Cover-crop residues were shown to enhance the benefits of no-tillage on aggregate stability, microbial biomass, SOM, and soil enzymes (Roldan et al. 2003; Zotarelli et al. 2005a, b, 2007; Fageria et al. 2005; Calegari 2003). Amado et al. (2006) emphasized the importance of including leguminous cover crops in no-tillage systems as a strategy for increasing carbon sequestration in tropical and subtropical regions. However, in systems that are not nutrient-limited and under adverse growth conditions, growing nonleguminous cover crops with more recalcitrant residues and greater biomass production may be more effective in boosting SOM (Barber and Navarro 1994a).

Overall dry-matter production and nutrient accumulation by cover crops affect their potential to increase SOM. The production capacity of cover crops is dictated by genetic traits, including C3 versus C4 photosynthetic pathways, the ability of roots to form symbiotic associations, canopy characteristics, tissue composition, and growth duration. These traits, in turn, govern the radiation-, water-, and nutrient-use efficiencies of the crop and thereby set limits on how cover-crop-based systems will perform. A review by Cherr et al. (2006b) showed that under optimal conditions, annual cover crops may accumulate up to 4.4–5.6 Mg C ha−1 during a period of 3–5 months.

Overall decomposition of cover-crop residues is affected by (1) the amount applied; (2) biochemical composition; (3) physical properties as related to crop development stage and/or termination practices; (4) soil texture, temperature, and moisture conditions; (5) soil contact; and (6) nutrient availability and fertilizer addition (Balkcom and Reeves 2005; Sullivan 2003; Berkenkamp et al. 2002; Ma et al. 1999; Honeycutt and Potaro 1990; Schomberg et al. 1994). The base temperature for decomposition is assumed to be on the order of −2°C to 0°C, while decomposition rates double for every 9°C increase in soil temperature (Yang and Janssen 2002). Decomposition is fastest at high temperatures (30–35°C), adequate moisture (e.g., at field capacity), adequate tissue N levels (e.g., C:N ratios <25), and favorable lignin:N ratios (Cherr et al. 2006b; Quemada et al. 1997). Under hot and humid conditions, decomposition rates may be four to five times greater than in temperate settings (Lal et al. 2000). Decomposition rates are on the order of 0.2, 0.05, and 0.0095 day−1 for glucose-, cellulose-, and lignin-based carbon pools, respectively (Quemada et al. 1997). Stems, which contain less N and more lignin and cellulose than leaves, thus, may decompose up to five times more slowly (Cherr et al. 2006b).
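The temperature and pool-composition effects described above can be combined into a small first-order decay sketch. It assumes that each carbon pool decays exponentially at the rates quoted from Quemada et al. (1997), that these rates apply at a hypothetical reference temperature of 20°C, that rates double for every 9°C above or below that reference, and that decomposition stops at an assumed base temperature of 0°C. The leaf and stem pool fractions are illustrative placeholders, not measured values.

```python
import math

# First-order decay constants (day^-1) for residue carbon pools, as quoted in the text.
POOL_RATES = {"glucose": 0.2, "cellulose": 0.05, "lignin": 0.0095}

BASE_TEMP_C = 0.0       # assumption: no decomposition at or below 0 degrees C
REF_TEMP_C = 20.0       # hypothetical temperature at which POOL_RATES apply
DOUBLING_STEP_C = 9.0   # rates double for every 9 degrees C increase

# Hypothetical pool fractions (shares of residue C); illustrative only.
LEAF = {"glucose": 0.5, "cellulose": 0.4, "lignin": 0.1}
STEM = {"glucose": 0.2, "cellulose": 0.5, "lignin": 0.3}


def temperature_factor(temp_c: float) -> float:
    """Multiplier on the reference rates; zero at or below the base temperature."""
    if temp_c <= BASE_TEMP_C:
        return 0.0
    return 2.0 ** ((temp_c - REF_TEMP_C) / DOUBLING_STEP_C)


def fraction_remaining(pools: dict, days: float, temp_c: float) -> float:
    """Fraction of residue C left after `days` at a constant soil temperature."""
    factor = temperature_factor(temp_c)
    # Each pool decays independently: share * exp(-k * factor * t).
    return sum(
        share * math.exp(-POOL_RATES[name] * factor * days)
        for name, share in pools.items()
    )


if __name__ == "__main__":
    for label, pools in (("leaf", LEAF), ("stem", STEM)):
        left = fraction_remaining(pools, days=60, temp_c=25.0)
        print(f"{label}: {left:.2f} of residue C remains after 60 days at 25 C")
```

Because the pools decay independently, the slow lignin pool dominates what remains after a few months in this sketch, which is consistent with the observation above that lignin- and cellulose-rich stems persist longer than leaves.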

The fraction remaining after 1 year (the effective SOM addition rate) may be relatively small (e.g., from <0.1 to 0.4), depending on pedo-climatic conditions and residue properties (Yang and Janssen 2000). The contribution of cover-crop residues to the stable SOM pool, thus, may be small relative to the size of that pool, since a soil with 1% SOM already contains about 24 Mg C ha−1 in the upper 0–30 cm alone. Additionally, as discussed above, only a small fraction of the C from cover crops may be converted to effective SOM, whereas most of it is lost during the decomposition process.
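The 24 Mg C ha−1 figure can be reproduced with a short calculation. The sketch assumes a bulk density of 1.4 Mg m−3 and the conventional factor of 0.58 g C per g SOM; neither value is given in the text, and both vary between soils. It then compares that stock with the residue C that survives one year, using the <0.1–0.4 retention range quoted above and a hypothetical input of 5 Mg C ha−1.

```python
def soil_carbon_stock(som_percent: float, depth_m: float,
                      bulk_density: float = 1.4, c_fraction: float = 0.58) -> float:
    """Organic C stock (Mg C ha^-1) in a soil layer.

    bulk_density (Mg m^-3) and c_fraction (g C per g SOM) are assumed defaults.
    """
    soil_mass = 10_000 * depth_m * bulk_density  # Mg of soil per hectare
    return soil_mass * (som_percent / 100) * c_fraction


def relative_stock_increase(c_input: float, retained: float, stock: float) -> float:
    """Effective one-year C addition expressed as a fraction of the existing stock."""
    return c_input * retained / stock


stock = soil_carbon_stock(som_percent=1.0, depth_m=0.3)
for retained in (0.1, 0.4):
    gain = relative_stock_increase(c_input=5.0, retained=retained, stock=stock)
    print(f"retained {retained:.1f}: +{gain:.1%} of a {stock:.1f} Mg C/ha stock")
```

Under these assumptions, one year of residue additions raises the 0–30 cm stock by only about 2–8%, which helps explain why SOM changes are slow to detect in the field.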

In Brazil, soil C enrichment, even under no-tillage, was only 10% of the C-addition rate (Metay et al. 2007). In a cover-crop-based no-tillage tomato system on a clay loam soil in California, cover crops generated 1.8–2.3 Mg C ha−1 year−1. After 5 years, overall soil C sequestration was 4.5 Mg C ha−1, compared to 3.8 Mg C ha−1 for standard tillage systems, while systems without cover crops showed a net loss of 0.1–0.4 Mg C ha−1 (Veenstra et al. 2007). Under these conditions, the crop carbon addition rate was more important than tillage management in terms of SOM accumulation, whereas in Brazil the opposite may be true (Metay et al. 2007; Amado et al. 2006). Use of leguminous cover crops and/or fertilizers will result in a lower C:N ratio of recently formed SOM compared to monocultures of gramineous cover crops (Ding et al. 2006). A biculture of hairy vetch (Vicia villosa Roth) and rye (Secale cereale L.) was more effective in sequestering C than cover-crop monocultures, while adding fertilizer enhanced overall SOM accumulation (Sainju et al. 2006). In India, continuous use of perennial cover crops in a coconut plantation for 12 years greatly enhanced basal respiration and microbial C and N and reduced the C:N ratio of the microbial biomass, while SOM values in the upper 20 cm increased by a factor of 2–3 (Dinesh 2004; Dinesh et al. 2006).

Under the hot, humid weather conditions and sandy soils prevailing in Florida, the use of annual cover crops in a no-tillage sweet corn production system generated up to 7.2–9.6 Mg C ha−1 year−1. However, despite these high C addition rates, SOM still declined from 1.4% (year 1) to 1.3% (year 2), which was attributed to the site's previous use as pasture (Cherr 2004). After 4 years, SOM in the cover-crop-based systems had reached an equilibrium of about 1.2%, while not adding any crop residues, combined with frequent tillage, resulted in a decline in SOM to 0.8%. Under these conditions, alternating vegetable production with semipermanent pastures, which tend to have higher effective C addition rates, may be required to boost SOM values. This is in agreement with reports that sod-forming grass–legume leys are more effective in enhancing SOM than annual green manures (Hansen et al. 2005; Sullivan 2003). However, in production settings with more fine-textured soils, whose texture favors the occlusion (protection) of soil organic matter (Zotarelli et al. 2007), the integrated use of cover crops and no-tillage was shown to increase SOM in annual cropping systems as well (Matus et al. 2008; Roldan et al. 2003; Sanchez et al. 2007; Amado et al. 2006; Luna-Orea and Wagger 1996; Barber and Navarro 1994b).

Since changes in SOM are slow and may be masked by inherent variability, models may be useful. Simulations with models such as NDICEA (Van der Burgt et al. 2006) allow improved assessment of how cover crops may affect SOM trends over time. Using this model, it was shown that SOM values in traditional vegetable cropping systems in Uruguay decreased by 420–700 kg ha−1 year−1. With erosion rates of 18–19 Mg ha−1 year−1, total SOM losses amounted to 800–1,170 kg ha−1 year−1 (Selaya Garvizu 2000). In cover-crop-based systems, approximately 4–5 Mg ha−1 year−1 of crop residues were added, SOM levels could be maintained, and soil erosion was reduced by 67% (Selaya Garvizu 2000).
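NDICEA itself is a multi-process model and is not reproduced here. As a much simpler stand-in, the sketch below uses a one-pool SOM balance in the spirit of the classic Hénin–Dupuis model: a fixed humification coefficient converts residue inputs to SOM, a first-order rate mineralizes SOM, and erosion removes SOM in proportion to its concentration in the topsoil. The humification coefficient, mineralization rate, topsoil mass, and starting stock are hypothetical; the residue input (4.5 Mg ha−1), erosion rate (18.5 Mg ha−1 year−1), and 67% erosion reduction are only loosely based on the Uruguayan figures above.

```python
def simulate_som(som0, residue_input, years, h=0.2, k=0.02,
                 soil_loss=0.0, soil_mass=4200.0):
    """Annual one-pool SOM balance (all stocks in Mg SOM ha^-1).

    h: humification coefficient (hypothetical); k: mineralization rate, yr^-1 (hypothetical);
    soil_loss: eroded soil, Mg ha^-1 yr^-1; soil_mass: topsoil mass, Mg ha^-1 (assumed 0-30 cm).
    """
    som = som0
    trajectory = [som]
    for _ in range(years):
        humified = h * residue_input          # new SOM formed from residues
        mineralized = k * som                 # SOM lost by decomposition
        eroded = soil_loss * som / soil_mass  # SOM carried away with eroded soil
        som += humified - mineralized - eroded
        trajectory.append(som)
    return trajectory


def equilibrium_som(residue_input, h=0.2, k=0.02, soil_loss=0.0, soil_mass=4200.0):
    """Stock at which gains and losses balance: h*I = (k + soil_loss/soil_mass) * SOM."""
    return h * residue_input / (k + soil_loss / soil_mass)


SOM0 = 42.0  # hypothetical starting stock, roughly 1% SOM in 0-30 cm
conventional = simulate_som(SOM0, residue_input=0.0, years=10, soil_loss=18.5)
cover_crop = simulate_som(SOM0, residue_input=4.5, years=10, soil_loss=18.5 * 0.33)
print(f"no residues: {conventional[-1]:.1f} Mg SOM/ha after 10 years")
print(f"cover crops: {cover_crop[-1]:.1f} Mg SOM/ha after 10 years")
print(f"cover-crop equilibrium: {equilibrium_som(4.5, soil_loss=18.5 * 0.33):.1f}")
```

In this simplified model, a constant input drives the stock toward h·I/(k + e), where e is the erosion loss rate; raising inputs or cutting erosion shifts the equilibrium rather than producing indefinite accumulation, which mirrors the SOM plateau reported for the Florida systems.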

2.3.2 Physical Functions

Cover crops modify the microclimate by reducing the kinetic energy of rainfall, soil temperature fluctuations, wind speed, and crop damage associated with sand blasting (Fageria et al. 2005; Bravo et al. 2004; Anderson et al. 2001; Dabney et al. 2001; Masiunas 1998). Their canopy and residues diminish the impact of raindrops, thereby reducing soil crusting and erosion, while their stems and root systems also provide a physical barrier that can prevent sheet erosion and gully formation (Sarrantonio and Gallandt 2003; Masiunas 1998; Derpsch et al. 1986). Their use on sloping lands can provide a viable and labor-efficient alternative to the construction of stone embankments and terraces (Bunch 1996). Erosion control (as shown in Fig. 2.2), thus, is one of the core services that cover crops provide (Prado Wildner et al. 2004). Leguminous live mulches, for example, may reduce runoff and soil erosion by 50% and 97%, respectively (Hartwig and Ammon 2002). Cover crops such as deep-rooted radish can penetrate compacted subsoil layers, and the root channels they leave behind can enhance soil water infiltration, root penetration, soil water-holding capacity, and, thus, the water use efficiency of subsequent crops (Weil and Kremen 2007; Sarrantonio and Gallandt 2003; Giller 2001). Cover crops provide a structural habitat for both beneficial insects and birds, and by reducing light levels and soil temperature fluctuations at the soil surface, they also reduce weed germination (Bottenberg et al. 1997; Bugg and Waddington 1994). Moreover, cover-crop residues conserve soil moisture and favor beneficial fungi producing glomalin, which, in turn, enhances the formation of stable soil aggregates, water percolation, and retention (Sustainable Agriculture Network 2007; Altieri 2002).

2.3.3 Soil Fertility

Cover crops and their residues accumulate and/or retain nutrients by symbiotic N fixation, uptake during growth, or immobilization after crop senescence. Thereby, they enhance nutrient retention and recycling and reduce the risk of nutrient-leaching losses by functioning as a “catch crop” (Cherr et al. 2006b; Dabney et al. 2001). Cover-crop-derived nutrients are typically released gradually over time, which may reduce the risks of toxicity and leaching and, thus, enhance nutrient-use efficiency compared to the use of highly soluble inorganic fertilizers (Cherr et al. 2006b). In many cases, actual yield benefits exceed those expected based merely on cover-crop-derived nutrients, which may be related to cover crops providing a much broader array of ecological services than synthetic fertilizer alone (Bhardwaj 2006). However, utilization of N released by cover crops can also be poor if nutrient release is not synchronized with the demand of the subsequent crop (Baijukya et al. 2006).
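The synchronization problem can be illustrated with a toy daily N balance. The sketch assumes that residue N is released by first-order kinetics, that the following crop's cumulative N demand follows a logistic curve starting at planting, and that released N not yet taken up accumulates in a mineral pool that is exposed to leaching. The residue N content, release rate, and demand-curve parameters are hypothetical placeholders, not values from the cited studies.

```python
import math


def cumulative_release(day, residue_n=120.0, k=0.03):
    """N (kg ha^-1) released from residues by `day`; first-order kinetics, hypothetical k."""
    return residue_n * (1.0 - math.exp(-k * day))


def cumulative_demand(crop_day, n_max=150.0, midpoint=50.0, steepness=0.1):
    """Logistic cumulative crop N demand (kg ha^-1) from planting; hypothetical parameters."""
    return n_max / (1.0 + math.exp(-steepness * (crop_day - midpoint)))


def synchrony_balance(days, planting_delay=0):
    """Daily mineral-N pool fed by residue release and drained by crop uptake."""
    pool = peak_pool = uptake_total = demand_total = 0.0
    for d in range(1, days + 1):
        pool += cumulative_release(d) - cumulative_release(d - 1)
        crop_day = d - planting_delay
        demand = 0.0
        if crop_day > 0:  # no demand before the crop is planted
            demand = cumulative_demand(crop_day) - cumulative_demand(crop_day - 1)
        uptake = min(pool, demand)  # crop can only take up N that is already mineral
        pool -= uptake
        uptake_total += uptake
        demand_total += demand
        peak_pool = max(peak_pool, pool)
    return {
        "peak_pool_kg": peak_pool,  # largest unused mineral N pool (leaching exposure)
        "demand_met": uptake_total / demand_total if demand_total else 0.0,
    }


for delay in (0, 30):
    result = synchrony_balance(days=120, planting_delay=delay)
    print(delay, {key: round(value, 2) for key, value in result.items()})
```

With these placeholder values, delaying planting after cover-crop termination lets more mineral N build up before the crop can use it, which is the kind of release-demand mismatch associated above with poor N utilization and leaching risk.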

Leguminous crops provide supplementary nitrogen via symbiotic N fixation, and their relatively low C:N ratio also increases mineralization, which reduces the risk of N deficiency for subsequent and/or companion crops (Sanchez et al. 2007; Cherr et al. 2006b; Schroth et al. 2001). Use of leguminous cover crops, thus, provides an on-farm renewable form of N, thereby reducing the energy costs associated with the production and transport of fertilizers (Cherr et al. 2006b). In organic systems, leguminous cover crops can also help avoid the P accumulation and potential environmental risks associated with the excessive use of animal manures (Cherr et al. 2006b), while the use of a soil-building cover crop may also be required to meet certification requirements (Delate et al. 2003). Under favorable conditions, cover crops may accumulate substantial amounts of N (150–328 kg N ha−1), of which 31–93% is derived from biological N fixation, (partially) offsetting N removal via harvesting of commercial crops (Cherr et al. 2006b; Giller 2001). Giller (2001) provided an overview of reported N accumulation and the fraction of N derived via symbiotic N fixation for commonly used tropical legumes. Similar information for other cover crops and/or nutrients may be obtained elsewhere (Cherr et al. 2006b; Fageria et al. 2005; Calegari 2003).
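Only the fixed fraction of cover-crop N is a net addition to the farm; the remainder is soil N that the cover crop scavenged and recycles. The sketch below splits the totals quoted above (150–328 kg N ha−1, 31–93% from fixation) accordingly. Pairing the extremes gives only outer bounds, since the highest N accumulation and the highest fixed fraction need not occur in the same system.

```python
def split_cover_crop_n(total_n, fixed_fraction):
    """Split cover-crop N (kg ha^-1) into newly fixed N and recycled soil N."""
    if not 0.0 <= fixed_fraction <= 1.0:
        raise ValueError("fixed_fraction must be between 0 and 1")
    fixed = total_n * fixed_fraction
    return fixed, total_n - fixed


# Outer bounds from the ranges in the text (extremes paired on purpose).
low_fixed, low_recycled = split_cover_crop_n(150.0, 0.31)
high_fixed, high_recycled = split_cover_crop_n(328.0, 0.93)
print(f"fixed N: {low_fixed:.0f}-{high_fixed:.0f} kg N/ha")
print(f"recycled soil N: {low_recycled:.0f} and {high_recycled:.0f} kg N/ha at those extremes")
```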

Roots of cover crops also exude organic compounds that can enhance soil microbial activity, mycorrhizal activity, soil structure, and nutrient availability (Pegoraro et al. 2005; Calegari 2003; Dabney et al. 2001). Prolonged use of grass–clover mixtures or annual cover crops, in combination with no-tillage, may reduce runoff and erosion, thereby reducing the loss of fertile topsoil and SOM, which, in turn, can further enhance soil water retention and nutrient use efficiency (Sanchez et al. 2007; Bunch 1996). Deep-rooted types such as rye (Secale cereale L.) and sunn hemp (Crotalaria juncea L.) can effectively scavenge nutrients from deep soil layers and render them more readily available for subsequent crops (Wang et al. 2006; Fageria et al. 2005; Calegari 2003; Sullivan 2003). Fast-growing and deep-rooting cover crops such as winter rye, radish, and brassicas deplete labile residual N pools and are very effective in retaining nutrients (Vidal and Lopez 2005; Isse et al. 1999; Dabney et al. 2001; Wyland et al. 1996). In Maryland, brassicas depleted residual soil N down to a depth of 180 cm and took up more N than rye (Weil and Kremen 2007). Because leguminous cover crops have low C:N ratios and can release large amounts of N rapidly, their use may result in excessive N leaching, especially on sandy soils (Avila 2006; Sainju et al. 2006).

2.3.4 Pest Management

2.3.4.1 Soil Ecology

In balanced ecosystems, pests are regulated internally by natural enemies, and management practices should, therefore, be geared toward favoring beneficial organisms rather than eradicating pests (Sustainable Agriculture Network 2007) and toward promoting disease-suppression mechanisms (van Bruggen and Semenov 2000). During the past decades, there has been increased concern about pesticide use in agriculture, especially in intensively managed vegetable crops (Abdul-Baki et al. 2004; Masiunas 1998). Effective use of cover crops may reduce herbicide use and the costs associated with soil fumigation (Abdul-Baki et al. 2004; Carrera et al. 2004), and cover crops can reduce potential leaching of both nutrients and pesticides (Masiunas 1998; Wyland et al. 1996). Moreover, cover-crop-based systems enhance the ecological diversity, productivity, and stability of agrosystems (Shennan 2008; Cherr et al. 2006b). Several cultural practices, such as crop rotation, cover crops, mulches, composts, and animal manures, affect SOM and the disease suppressiveness of soils and can, thus, minimize both the incidence and severity of soil-borne diseases.

2.3.4.2 Diseases

Cover crops suppress diseases in a number of ways by interfering with phases of the disease cycle such as dispersal, host infection, disease development, propagation, population buildup, and survival of the pathogen. The presence of cover-crop mulches minimizes pathogen dispersal via splashing, water runoff, and/or wind (Cantonwine et al. 2007; Everts 2002; Ntahimpera et al. 1998; Ristaino et al. 1997). Organic residues also reduce the incidence and severity of soil-borne diseases by inducing inherent soil suppressiveness, while excessive use of inorganic fertilizers may cause nutrient imbalances and lower pest resistance (Altieri and Nicholls 2003). When selecting cover crops, information is needed on whether they host or suppress pathogens (Abawi and Widmer 2000). Interactions among host crops, nonhost crops, and the tillage system should, thus, be considered carefully (Colbach et al. 1997). Saprophytic pathogens can survive on cover-crop residues, and their survival is also greatly affected by tillage. In some cases, a cover crop can host a pathogen without developing any disease symptoms itself. Fusarium oxysporum f.sp. phaseoli may persist in leguminous cover crops when these are rotated with beans (Dhingra and Coelho Netto 2001). When cover crops are not properly decomposed, populations of pathogens such as Pythium spp. may increase, causing severe epidemics (Manici et al. 2004). Incorporation of crop residues greatly affects soil microbial populations and can increase pathogen-inhibitory activity, as was shown for Phytophthora root rot in alfalfa, Verticillium wilt in potato, and Rhizoctonia solani root rot, via changes in the resident Streptomyces spp. community (Mazzola 2004; Wiggins and Kinkel 2005a, b). Incorporation of crop residues, with or without tarping, can provide soil disinfestation via biochemical mechanisms (Blok et al. 2000; Gamliel et al. 2000). For example, breakdown of Brassica residues containing glucosinolates results in the formation of biotoxins, including isothiocyanates, that provide (partial) control of diseases, weeds, and parasitic nematodes (Weil and Kremen 2007). Cover crops also promote disease suppressiveness by favoring certain groups of the resident soil microbial community, owing to the interactive effects of root exudates and the affinity of different crops' roots for beneficial organisms (van Elsas et al. 2002; Mazzola 2004).

However, in some cases, cover crops increase disease incidence, as was shown for pathogens with a wide host range such as Sclerotium rolfsii (Gilsanz et al. 2004; Jenkins and Averre 1986; Taylor and Rodríguez-Kábana 1999b; Widmer et al. 2002). The use of cover-crop residues for mulching enhanced disease suppression in the Sclerotinia sclerotiorum–bean pathosystem (Ferraz et al. 1999), whereas in a no-tillage small-grain system, residues promoted Rhizoctonia solani (Chung et al. 1988). Brassica species were quite effective in controlling Sclerotinia diseases in lettuce, whereas oats and broad beans did not provide any disease control (Chung et al. 1988). Verticillium wilt incidence in potato was lower when potato was grown after corn or sudangrass than after rape or winter peas (Davis et al. 1996). Cover-crop-based systems (leguminous vs. brassica) had no effect on Fusarium incidence in processing tomatoes in California compared to control systems. Although they provided yield benefits compared to controls without cover crops, yields were still lower than with Metham, which was more effective in controlling Fusarium (Miyao et al. 2006).

2.3.4.3 Insects

Cover crops and their residues also reduce insect pest populations, as was reported for a range of insects, including aphids, beetles, caterpillars, leafhoppers, moths, and thrips (detailed reviews are provided by Sarrantonio and Gallandt 2003; Masiunas 1998). This is related to changes in biophysical soil conditions, formation of protective niches for beneficial organisms, release of allelochemicals, and changes in soil ecology (Tillman et al. 2004; Sarrantonio and Gallandt 2003). Cover crops also increase biodiversity by creating more favorable conditions for free-living bacterivores, fungivores, and other predators. In addition to reducing pest proliferation, cover crops and their residues interfere with the visual and olfactory cues emitted by host crops, thus resulting in more effective insect pest suppression (Tillman et al. 2004; Masiunas 1998). However, in other cases, cover crops can also provide shelter for insect pests (Masiunas 1998).

2.3.4.4 Nematodes

Reduction of nematodes by cover crops is well documented (Abawi and Widmer 2000; Taylor and Rodríguez-Kábana 1999a; Widmer et al. 2002). Several cover crops, including grasses, legumes such as Crotalaria and Mucuna species, and Tagetes species, were shown to be nonhosts or suppressors of selected parasitic nematodes (Wang et al. 2007; Crow et al. 2001; McSorley 2001). Crop rotations including such species disrupt the life cycle of parasitic nematodes and reduce the risk of breakdown of the nematode resistance bred into commercial crops (McSorley 2001). In some cases, nematode suppression is related to the beneficial effects of cover-crop residues on predatory nematodes and nematode-trapping fungi (Cherr et al. 2006b). However, no cover crop will function as a nonhost for all parasitic nematodes, and in several cases, cover crops were shown to favor parasitic nematodes (Isaac et al. 2007; Sanchez et al. 2007; Crow et al. 2001; Cherr et al. 2006b). Reports on whether a crop is a host or a nonhost may conflict at times, which can be related to differences in pedo-climatic conditions, nematode races, and cover-crop cultivars; results thus may need to be verified for local production settings.

2.3.4.5 Weeds

In organic systems, the lack of cost-effective weed control is the foremost production factor hampering a successful transition (Kruidhof 2008; Linares et al. 2008). Well-designed cover-crop systems can reduce herbicide and labor use for weed control; cover crops thus offer farmers a cost-effective weed-control strategy, which is a key deciding factor in their adoption (Gutierrez Rojas et al. 2004; Anderson et al. 2001; Neill and Lee 2001; Teasdale and Abdul-Baki 1998; Bunch 1996; Barber and Navarro 1994a). Cover crops suppress weeds via resource competition, niche disruption, and release of phytotoxins from both root exudates and decomposing residues, thereby reducing weed seed banks and weed germination, growth, and reproduction (Kruidhof 2008; Moonen and Barberi 2006; Fageria et al. 2005; Sarrantonio and Gallandt 2003). Their effectiveness in suppressing weeds is affected by plant density, initial growth rate, aboveground biomass, leaf area duration, persistence of residues, and the planting time of the subsequent crop (Kruidhof 2008; Linares et al. 2008; Sarrantonio and Gallandt 2003; Cassini 2004; Dabney et al. 2001).

Although cover crops greatly reduced weed growth in conventional vegetable-cropping systems, in some cases herbicide application may still be required to minimize the risk of yield reductions (Teasdale and Abdul-Baki 1998). Annual cover crops such as mucuna may also be used to control perennial weeds, provided that they effectively shade out these weeds just before the weeds start replenishing their storage organs (e.g., rhizomes) with assimilates (Teasdale et al. 2007). Repeated use of annual cover crops combined with no-tillage in organic systems did not control grassy weeds (Treadwell et al. 2007). Their continuous use can also result in a shift toward perennial weeds, which can be addressed by alternating cropping systems with pastures (M. Altieri, 2008). Cover-crop mixtures with complementary canopy characteristics (e.g., rye and clover, as shown in Fig. 2.3) and differing root traits (e.g., fibrous vs deep taproots) can provide superior cover-crop performance and thus more effective weed control (Linares et al. 2008; Drinkwater and Snapp 2007; Masiunas 1998). A “cover crop weed index” (the ratio of cover-crop to weed aboveground dry weight) was shown to be a useful tool for assessing the weed-suppression capacity of cover crops (Linares et al. 2008).
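Because the cover crop weed index is a simple ratio, it is easy to compute and compare across treatments. The minimal Python sketch below is illustrative only: the function name and the plot values are hypothetical, not data from Linares et al. (2008), and returning infinity for a weed-free sample is an arbitrary convention chosen here.

```python
def cover_crop_weed_index(cover_crop_dw: float, weed_dw: float) -> float:
    """Ratio of cover-crop to weed aboveground dry weight (same units, e.g., g m-2)."""
    if cover_crop_dw < 0 or weed_dw < 0:
        raise ValueError("dry weights must be non-negative")
    if weed_dw == 0:
        # Weed-free sample: treated as unbounded suppression (arbitrary convention)
        return float("inf")
    return cover_crop_dw / weed_dw


# Hypothetical (cover crop, weed) dry weights in g m-2
plots = {
    "rye": (420.0, 35.0),
    "clover": (250.0, 100.0),
    "rye+clover": (510.0, 20.0),
}
# Higher index = more cover-crop biomass per unit weed biomass
ranked = sorted(plots, key=lambda name: cover_crop_weed_index(*plots[name]), reverse=True)
print(ranked)
```

With these made-up numbers the mixture ranks first, which mirrors the qualitative point about complementary mixtures but is not evidence for it.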

In orchards, mowing can provide more effective weed control when combined with grassy vegetation than with annual legumes (Matheis and Victoria Filho 2005). Mowed leguminous live mulches in an organic wheat system reduced weed growth by 65–86%, but grain yields were only a fraction of those in weed-free controls, possibly due to resource competition between the cover crops and the wheat crop (Hiltbrunner et al. 2007). Leguminous cover crops may have a competitive edge over weeds under N-limiting conditions, whereas repeated mowing may be effective for controlling taller weeds at more fertile production sites.

Weed suppression by cover-crop residues is related to effective soil coverage, which may be sustained for 30–75 days (as shown in Fig. 2.4). This depends on decomposition as related to residue amount and biochemical properties, rainfall, soil temperature, and weed pressure/vigor (Teasdale et al. 2004; Ruffo and Bollero 2003; Masiunas 1998; Creamer et al. 1996). Incorporation of residues reduces their weed-suppression capacity because it increases light levels, brings dormant seeds to the soil surface, and accelerates the breakdown and dilution of allelochemicals (Masiunas 1998). Rye and barley residues were effective in suppressing broadleaf weeds, whereas hairy vetch residues enhanced weed growth (Creamer et al. 1996), which may be related to nutrient release from the vetch residues (Teasdale et al. 2007). Rye, however, was less effective in suppressing grassy weeds (Masiunas 1998).
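How long residues keep the soil covered can be illustrated with two common simplifying assumptions, which are not taken from the studies cited above: residue mass declines by first-order (exponential) decay, M(t) = M0·exp(−kt), and the covered soil fraction is 1 − exp(−Am·M), where Am is an area-to-mass ratio. The sketch below uses hypothetical values for M0, k, Am, and a 60% cover threshold for effective suppression; all names are illustrative.

```python
import math


def residue_cover(mass_kg_ha: float, area_to_mass_ha_kg: float) -> float:
    """Fraction of soil covered by residue, assuming cover = 1 - exp(-Am * M)."""
    return 1.0 - math.exp(-area_to_mass_ha_kg * mass_kg_ha)


def days_above_cover(m0_kg_ha: float, k_per_day: float,
                     area_to_mass_ha_kg: float, threshold: float) -> float:
    """Days until cover falls below `threshold`, assuming first-order mass loss
    M(t) = m0 * exp(-k * t)."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be between 0 and 1")
    # Residue mass at which cover exactly equals the threshold
    critical_mass = -math.log(1.0 - threshold) / area_to_mass_ha_kg
    if m0_kg_ha <= critical_mass:
        return 0.0  # cover is already below the threshold at the start
    return math.log(m0_kg_ha / critical_mass) / k_per_day


# Hypothetical: 5 t/ha residue, k = 0.02 per day, Am = 0.0004 ha/kg
print(round(residue_cover(5000.0, 0.0004), 2))                 # initial cover fraction
print(round(days_above_cover(5000.0, 0.02, 0.0004, 0.6), 1))   # days with >= 60% cover
```

A faster decay constant (e.g., low C:N residues, warm and wet conditions) shortens the period of effective cover proportionally, which is one way to read the 30–75 day range.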

2.3.5 Food and Forage Production

Although food and forage production may not be the main purpose of cover-crop use, some systems also provide products for human consumption, grazing, and/or fodder, as was reported for, e.g., Canavalia ensiformis, Dolichos lablab, Avena strigosa, and Vicia villosa (Nyende and Delve 2004; Pieri et al. 2002; Anderson et al. 2001). Examples of cover crops suited for human consumption include Cajanus cajan (pigeon pea), Dolichos lablab, and cowpea (Vigna sinensis). Integrating livestock components into cover-crop-based systems can improve bio-economic efficiency, profits, and human health. Potential applications include the use of cover crops to regenerate degraded pasture land, improve animal diets, and support the intensification of small-scale farming systems (Anderson et al. 2001). Interestingly, mucuna may have been introduced in Central America as a forage crop for mules employed on banana plantations (Anderson et al. 2001). In such integrated systems, (leguminous) cover crops may also provide (high-quality) forage, but unless manures are internally recycled, this may reduce the soil-improvement services and yield benefits provided by the cover crops (Anderson et al. 2001). For example, cattle grazing of mucuna prior to corn planting reduced its effectiveness in suppressing weeds and improving corn yields (Bernandino-Hernandez et al. 2006).

2.3.6 Economic Benefits

In terms of conventional economics, seed and labor costs tend to be the largest cost factors of cover-crop-based systems (Sullivan 2003; Lu et al. 2000). Seed costs of leguminous cover crops are twice as high as those of small grains, but small-grain residues have high C:N ratios and may require an additional 25–35 kg N ha−1 to reduce the risk of N immobilization, which may offset potential seed-cost savings (Sustainable Agriculture Network 2007). In southern Georgia, self-reseeding crimson clover systems were developed in rotation with cotton. In this case, the absence of additional tillage and seed costs, combined with the natural senescence of the cover crop prior to the maturation of the cotton crop, resulted in cost-effective systems (Cherr et al. 2006b; Dabney et al. 2001).
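The trade-off between higher legume seed costs and the supplemental N needed after small grains is simple arithmetic. The sketch below takes the 2:1 seed-cost ratio and the 25–35 kg N ha−1 range from the text; the seed and N prices are hypothetical placeholders, and the function names are illustrative only.

```python
def grain_cost_advantage(grain_seed: float, legume_seed: float,
                         n_rate_kg_ha: float, n_price_per_kg: float) -> float:
    """Per-hectare cost advantage of a small-grain cover crop over a legume.
    Positive = small grain is still cheaper after paying for supplemental N."""
    grain_total = grain_seed + n_rate_kg_ha * n_price_per_kg
    return legume_seed - grain_total


def break_even_n_price(grain_seed: float, legume_seed: float, n_rate_kg_ha: float) -> float:
    """N price at which supplemental N exactly cancels the seed-cost savings."""
    return (legume_seed - grain_seed) / n_rate_kg_ha


GRAIN_SEED = 40.0             # hypothetical, USD/ha
LEGUME_SEED = 2 * GRAIN_SEED  # "twice as high", per the text
N_PRICE = 1.20                # hypothetical, USD/kg N

for n_rate in (25.0, 35.0):   # supplemental N range from the text, kg N/ha
    adv = grain_cost_advantage(GRAIN_SEED, LEGUME_SEED, n_rate, N_PRICE)
    be = break_even_n_price(GRAIN_SEED, LEGUME_SEED, n_rate)
    print(f"{n_rate:.0f} kg N/ha: advantage {adv:+.2f} USD/ha, break-even N price {be:.2f} USD/kg")
```

Under these assumed prices the seed savings are largely or fully offset within the stated N range, and any rise in N price (as discussed below for 1998–2008) shifts the balance further toward legumes.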

Several studies documented significant yield benefits from the use of cover crops (Sanchez et al. 2007; Avila 2006; Cherr et al. 2006b, c; Fontanetti et al. 2006; Abdul-Baki et al. 2004; Neill and Lee 2001; Derpsch and Florentin 1992). These yield increases may be related to N benefits, improved soil structure and water retention, and reduced pest incidence. Cover crops may also enhance crop quality; for example, winter rye interplanted with melon protects the young fruits from sandblasting and scarring. Combined with reduced fertilizer and pesticide costs, this may offset the additional seed and cultivation costs of cover-crop-based systems (Weil and Kremen 2007; Bergtold et al. 2005; Fageria et al. 2005; Sullivan 2003). Besides reducing fertilizer costs on poor or compacted soils, cover crops (and their residues) may also improve aeration and intrinsic yield potential and/or reduce crop risk under water-limited conditions (Sustainable Agriculture Network 2007; Villarreal-Romero et al. 2006; Bergtold et al. 2005; Schroth et al. 2001; Masiunas 1998). Lu et al. (2000) reviewed several studies and noted that many covered only relatively short production cycles. Typically, yield differences were either absent or small. In a number of cases, however, cover-crop-based systems showed appreciable yield fluctuations and higher labor and fuel costs. Lu et al. (2000) also noted that many systems and technologies were still being developed, and that system performance and yields were either inconsistent or suboptimal. System design and adaptation thus may take several years and require a number of design and evaluation cycles.
This is evident from Abdul-Baki's research over the past decades on developing an integrated technology package that includes no-tillage, mixed cover crops, mechanical termination of cover crops, and cover-crop-mulched vegetable systems. After initial design and development in Maryland, this system was refined, adapted, and successfully used for different crops, regions, and production systems (Abdul-Baki et al. 1996, 1999, 2004; Carrera et al. 2004, 2005, 2007; Teasdale and Abdul-Baki 1998; Wang et al. 2005).

However, many leguminous cover-crop systems may not provide adequate N to meet crop demand, and supplemental N fertilizer is still required to reduce the risk of yield reductions in subsequent commercial crops (Cherr et al. 2007; Lu et al. 2000). This is supported by a meta-analysis of cropping systems in temperate regions, which showed that legume-fertilized systems had 10% lower yields than N-fertilized systems unless N accumulation in cover-crop residues exceeded 110 kg N ha−1 (Tonitto et al. 2006). A similar analysis for North America showed that grass cover crops did not affect subsequent maize yields, whereas legumes increased these yields by 37% compared to unfertilized controls, although the yield benefit decreased as the N-fertilizer rate increased (Miguez and Bollero 2005). In the past, limited use of cover crops in high-value commodities was often related to the low cost of inorganic fertilizers. Moreover, most conventional and some organic nutrient sources have relatively constant and predictable nutrient contents and release patterns, whereas for cover crops, both nutrient accumulation potential and release patterns tend to be highly variable. Due to this added complexity, integrating cover crops into conventional systems requires farmers to become better managers to ensure optimal system performance (Shennan 2008; Cherr et al. 2006b). However, the steep (800%) increase in fossil fuel prices between 1998 and 2008 resulted in N- and P-fertilizer price increases of 226% and 307%, respectively. This unprecedented increase in energy and fertilizer prices, along with the rapid depletion of mineral nutrient reserves, underlines the need for alternative nutrient sources (Wilke and Snapp 2008).

2.3.7 Ecological Services

In the past, externalities and the actual replacement costs of nonrenewable resources were not included in production costs. Moreover, in many countries, including India and Mexico, fertilizers are heavily subsidized to improve national food security (Cherr et al. 2006b). This undermines the viability of green technologies and more sustainable development options, and places a heavy burden on local economies. In terms of ecological services, cover-crop-based systems greatly reduce erosion-related sediment losses; sediments are the main agricultural pollutant, and their loss also reduces the inherent production capacity of agroecosystems, especially in regions such as Brazil and Uruguay (Dogliotti et al. 2004; Prado Wildner et al. 2004; Dabney et al. 2001). Although cover crops should be an integral part of organic production systems, commercial organic growers may still depend largely on animal manures, waste products of other sectors, and allowable synthetic compounds. Pursuing such an “input substitution” approach hampers the closing of energy and nutrient cycles and runs counter to the farm-based, integrated organic approach (Cherr et al. 2006b).

For conventional systems, cover-crop-derived mulches may reduce the need for plastic mulches and/or soil fumigants (Abdul-Baki et al. 1996, 2004). Replacing a bare fallow with cover crops may also enhance nutrient retention and reduce N leaching by up to 70% (Wyland et al. 1996). A meta-analysis of cropping-system studies showed that nitrate leaching in legume-based systems was 40% lower than in conventional systems (Tonitto et al. 2006). However, late planting and slow initial growth will hamper the effectiveness of cover crops in retaining residual soil nutrients (Mays et al. 2003). Poor system design and/or lack of synchronization result in inefficient N use and poor yields (Cherr 2004; Avila 2006). Therefore, for cover-crop-based systems to be ecologically sound and economically viable, it is essential to develop integrated systems that provide multiple benefits to offset potential risks and investment costs (Cherr et al. 2006b). In the USA, the Natural Resources Conservation Service (NRCS) rewards growers for the environmental services associated with cover-crop use (Bergtold et al. 2005). However, improved assessment of true fertilizer costs will be required, and farmers growing cover crops should also receive carbon credits (Sainju et al. 2006).

In summary, steady-state SOM values and the C-addition rates required to sustain SOM vary widely depending on pedo-climatic conditions and actual management practices. Models may provide an effective tool for assessing the potential benefits of cover crops in enhancing SOM (Dogliotti et al. 2005; Lal et al. 2000). Although cover crops can enhance inherent soil fertility and improve profits, inadequate management skills, poor system design, and lack of synchronization will greatly reduce such benefits. Especially in organic systems, cover crops can provide cost-effective weed suppression, whereas in conventional systems, cover crops may not be viable unless they provide multiple benefits and farmers are rewarded for the ecological capital generated by growing cover crops.

2.4 Selection

The selection of cover crops is based on pedo-climatic conditions, the set of services required, current crop rotation schemes, and alternative management options (Sustainable Agriculture Network 2007; Cherr et al. 2006b; Anderson et al. 2001). An example of the steps taken during the screening of a large number of cover crops (mixes) in Ohio was discussed by Creamer et al. (1997). Although cover crops provide a myriad of services, the “perfect” cover crop simply does not exist. Consequently, priorities among the critical services that a cover crop (mixture) should offer need to be determined first. These may include (i) providing nitrogen; (ii) retaining/recycling nutrients and soil moisture; (iii) reducing soil degradation/erosion; (iv) sustaining/increasing SOM levels; (v) reducing the incidence of pests; and (vi) providing products and income (Sustainable Agriculture Network 2007; Cherr et al. 2006b). To this end, a detailed field-level analysis of the current crop management system is required, including crop rotations, duration of commercial crops, inter-crop/fallow periods, and tillage systems, along with an assessment of the potential risk of pests and diseases of commercial crops. Some additional practical selection and screening considerations include the following:

- Adaptation to drought, flooding, low pH, nutrient limitations, and shading (live mulch)
- Combining species with complementary growth cycles, canopy traits, and root functionality
- Lack of adverse traits, such as:
  - Unfavorable residue properties (e.g., excessively high C:N ratio; coarse and recalcitrant residues hampering seedbed preparation; allelopathic properties that hamper initial germination and growth of subsequent commercial crops)
  - Competition with cash crops for light, land, water, nutrients, labor, and capital
  - Weediness and/or excessive vigor/regrowth after mowing or mechanical killing
  - Ability to promote (host) pests and diseases
- Availability of affordable seeds, suitable equipment, techniques, and information to ensure optimal cover-crop growth, termination, and overall system performance

Following these steps, an initial assessment may be made of perceived benefits and risks, which may be used to rank potential cover-crop (mixture) candidates and/or cultivars; this will typically be based on expert knowledge, since actual data may not be available. The next step is to assess the services actually rendered by such systems (either via field measurements/observations or using computer simulations) to further refine the crop rotation design and cover-crop management (Altieri et al. 2008; Cherr et al. 2006b). In practice, this may be a process of “trial and error” to properly integrate all relevant information on local pedo-climatic conditions into the decision-making process. Developing management practices and a suitable site-specific cover-crop-based cropping system that is relevant within the local context thus may require several years and a number of experimental learning cycles, and cover-crop-based systems may continue to evolve over time. The use of expert systems, such as GreenCover (Cherr et al. unpublished; http://lyra.ifas.ufl.edu/GreenCover) and ROTAT (Dogliotti et al. 2003), may facilitate the first selection step in designing suitable cover-crop systems. Some of the most pertinent aspects of cover-crop selection are discussed in more detail below.
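The expert-based ranking step can be sketched as a weighted sum of scores for the services (i)–(vi) listed earlier in this section. This is a minimal illustration under stated assumptions: the weights, the 1–5 scores, and the function names are hypothetical and do not come from GreenCover, ROTAT, or any cited study. Risks (the adverse traits above) could be added as further criteria with negative weights.

```python
from typing import Dict, List

# Hypothetical priority weights for services (i)-(vi); they sum to 1
WEIGHTS: Dict[str, float] = {
    "nitrogen": 0.3,
    "nutrient_retention": 0.2,
    "erosion_control": 0.2,
    "som": 0.1,
    "pest_reduction": 0.1,
    "products": 0.1,
}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of expert scores (e.g., 1 = poor ... 5 = excellent)."""
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    return sum(weights[s] * scores[s] for s in weights)


def rank_candidates(candidates: Dict[str, Dict[str, float]],
                    weights: Dict[str, float]) -> List[str]:
    """Candidate names ordered from highest to lowest weighted score."""
    return sorted(candidates, key=lambda name: weighted_score(candidates[name], weights),
                  reverse=True)


# Hypothetical expert scores, not measured data
candidates = {
    "hairy vetch": {"nitrogen": 5, "nutrient_retention": 2, "erosion_control": 3,
                    "som": 2, "pest_reduction": 2, "products": 1},
    "cereal rye": {"nitrogen": 1, "nutrient_retention": 5, "erosion_control": 5,
                   "som": 4, "pest_reduction": 3, "products": 2},
    "rye + vetch": {"nitrogen": 4, "nutrient_retention": 4, "erosion_control": 5,
                    "som": 4, "pest_reduction": 3, "products": 2},
}
print(rank_candidates(candidates, WEIGHTS))
```

Changing the weights to reflect different grower priorities can reorder the candidates, which is the point of fixing priorities before screening.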

2.4.1 Adaptation

Adaptation may involve day length, temperature, radiation, rainfall, soil, pests, and crop duration. Cover crops can be grouped as adapted to “cold/temperate” versus “warm/tropical” growth environments (Anderson et al. 2001). The first group may survive freezes down to −10°C, while their growth may be hampered under hot conditions (>25–30°C). Leguminous species within this group include Lupinus, Trifolium, and Vicia species, which grow well in temperate climates, during the winter season in subtropical climates, or in the tropical highlands (Cherr et al. 2006b; Giller 2001). The second group does not tolerate freezes (<−2°C) but may thrive under hot (>35°C) conditions. Key leguminous species within this group include the genera Canavalia (e.g., jack bean, C. ensiformis), Crotalaria (e.g., sunn hemp, C. juncea), and Mucuna (e.g., velvet bean). Tropical species can also be grown during the summer months toward the subtropics, or even year-round in tropical regions. Both temperature and day length affect crop development and growth duration. Simple phenology models facilitate the selection of suitable species for different production environments, which can be particularly important in hillside environments (Keatinge et al. 1998). Over-sowing cover crops into existing crops (e.g., maize) requires species that are adapted to low initial light regimes (Anderson et al. 2001).
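The temperature thresholds quoted above can serve as a first, coarse screen. The sketch below is a hypothetical helper, not a published tool: it checks only the expected seasonal temperature extremes against those thresholds and ignores day length, rainfall, soils, and pests; the 25°C default is simply the conservative end of the 25–30°C range.

```python
from typing import List


def temperature_groups(season_min_c: float, season_max_c: float,
                       temperate_heat_limit_c: float = 25.0) -> List[str]:
    """Cover-crop groups whose temperature tolerances fit the expected season.

    Thresholds from the text: cold/temperate types survive freezes to -10 C but
    growth suffers above ~25-30 C; warm/tropical types do not tolerate freezes
    below -2 C.
    """
    groups = []
    if season_min_c >= -10.0 and season_max_c <= temperate_heat_limit_c:
        groups.append("cold/temperate")
    if season_min_c >= -2.0:
        groups.append("warm/tropical")
    return groups


# Hypothetical seasonal extremes (degrees C)
print(temperature_groups(-8.0, 18.0))   # temperate winter fallow
print(temperature_groups(18.0, 36.0))   # subtropical summer
print(temperature_groups(5.0, 22.0))    # tropical highlands
```

Sites where both groups pass (such as the highland example) are where phenology models and the other selection criteria become decisive.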

Adaptation to local soil conditions, including soil drainage, texture, pH, and, for leguminous crops, the presence of compatible rhizobial strains, is critical (Cherr et al. 2006b; Giller 2001). On soils with adequate moisture storage capacity, cover crops may be grown during the dry season, while in other cases, growth may be limited by rainfall, since adequate soil moisture is required during initial growth. Water requirements of cover crops depend on crop type and growth duration, but in many cases, cumulative water use may be comparable to that of commercial crops; in water-limited systems, cover crops may also deplete residual soil moisture reserves and may have to be killed prematurely (Cherr et al. 2006b). Leguminous crops in particular may be poorly adapted to extremely acidic or alkaline soils or to poorly drained soils (Cherr et al. 2006b; Giller 2001). When introducing new non-promiscuous leguminous types, the presence of suitable inoculum is critical, since poor nodulation hampers crop growth and N accumulation (Giller 2001). Although leguminous crops are adapted to N-limiting conditions, they may have appreciable needs for other nutrients (including K, P, and Mo), and due to their slow initial growth, they are not very efficient in utilizing residual soil nutrients.

2.4.2 Vigor and Reproduction

Initial growth of small-seeded cover crops, e.g., clovers, may be less vigorous than that of larger-seeded types, which have more reserves and can be planted deeper; this matters especially when rainfall during initial growth is erratic (Cherr et al. 2006b). Self-seeding types, e.g., crimson clover, may provide an ample seed bank, so germination may be triggered automatically when conditions are favorable (Cherr et al. 2006b). However, reseeding types may also become pests themselves, especially hard- and/or large-seeded types. In this case, timely mowing prior to seed set may be required, although the originally planted crop may itself become a dormant seed bank unless it is stratified appropriately. Over time, cover crops such as sunn hemp may become rather tall (>3 m), with very thick and recalcitrant stems that can hamper bed formation and thus pose serious problems in subsequent vegetable crops. In this case, repeated mowing may be required (N. Roe, personal communication). Other cover crops, e.g., cowpea and velvet bean, have a viny and rather aggressive growth habit that can interfere with commercial crops when used as green mulch, as was reported in citrus (Linares et al. 2008).

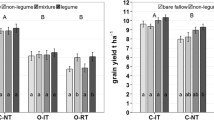

2.4.3 Functionality and Performance

In many hilly regions of Latin America, cover crops are an integral component of no-tillage systems, since they can reduce soil erosion, labor, and herbicide costs and can also increase yields (Prado Wildner et al. 2004). In organic systems, they can be a critical component of integrated weed management strategies (Linares et al. 2008). The actual performance of cover crops depends on system design, inherent soil fertility, pedo-climatic conditions, management (including the use of well-adapted species), and crop duration (Cherr et al. 2006b; Giller 2001). Although potential cover-crop production may be highest in warm, high-rainfall environments, SOM breakdown and potential nutrient losses also tend to be much greater under such conditions, so net benefits may actually be lower than in more temperate climates. Information on adaptation, growth, and performance may be obtained from the literature (Baligar and Fageria 2007; Sustainable Agriculture Network 2007; Cherr et al. 2006b). Even within cover-crop species, there may be appreciable differences in specific traits (e.g., cold and drought tolerance; shoot:root ratio) that can greatly affect their adaptation and functionality in specific production settings, as was shown for hairy vetch (Wilke and Snapp 2008). Cover-crop mixes with complementary traits may enhance the functionality, productivity, resilience, and adaptability of cover-crop-based systems and thus facilitate more efficient resource capture and use under adverse conditions (Malézieux et al. 2009; Altieri et al. 2008; Linares et al. 2008; Drinkwater and Snapp 2007; Weil and Kremen 2007; Teasdale et al. 2004; Dabney et al. 2001; Creamer et al. 1997). Moreover, a combination of several species may provide the benefits of each included species within a single year (Calegari 2003), whereas no single cover-crop species consistently performs best across different years and field sections (Linares et al. 2008; Carrera et al. 2005).

Typically, cover crops are neither irrigated nor fertilized. Cover-crop growth may be superior on finer-textured soils, since these soils often have higher SOM values, greater inherent soil fertility, and better water and nutrient-retention capacities. This may result in a positive feedback mechanism that, in turn, can further boost cover-crop performance over time (Cherr et al. 2006b). However, on very heavy soils, limited drainage may also result in poor aeration and increased disease incidence, resulting in poor stands and suboptimal cover-crop performance. In organic tomato production systems in California, mixtures of grasses with leguminous cover crops accumulated more biomass but less N, and their residues had higher C:N ratios, which delayed mineralization (Madden et al. 2004). On very sandy soils, low inherent soil fertility, among other factors, may limit cover-crop growth, while nutrients accumulated in the residues may also be readily lost through leaching before the peak nutrient demand of a subsequent commercial crop (Cherr et al. 2007). As a result, in adverse production environments, the growth and benefits of cover crops may be limited, and integrated soil fertility management practices may be required to enhance overall system performance (Tittonell 2008; Giller 2001). In summary, designing an appropriate cover-crop system based on key desired ecological functions is critical for system performance. Expert knowledge and computer-based evaluation tools can facilitate initial screening, while optimal system design may require numerous design cycles to tailor systems to local management conditions.

2.5 Management

2.5.1 Rotation

Developing suitable crop rotation schemes is critical for enhancing system performance. The design of both spatial and temporal crop arrangements at the farm level will be based on meeting a set of grower-defined production objectives along with adhering to site-specific phytosanitary guidelines. Growers typically allocate cover crops to underutilized temporal and/or spatial components of their cropping system, e.g., fallow periods or row middles, which constrains their use. The growing season of cover crops is thus defined by the cropping season of commercial crops, which, in turn, is dictated by rainfall or temperature patterns. Although it requires special equipment, undersowing a cover crop into an existing crop may be desirable, since it facilitates more efficient resource use while reducing potential nutrient losses and erosion risks (Hartwig and Ammon 2002; Sullivan 2003). In the southern USA, e.g., in Florida, cover crops such as sorghum, sudangrass, or sunn hemp may be grown during periods when it is too hot to grow commercial crops (Avila 2006). For more complex arable cropping systems, software tools that explore such options and generate viable alternatives greatly facilitate the design process (Bachinger and Zander 2007; Dogliotti et al. 2003).

2.5.2 Biomass Production and Residue Quality

Most cover crops follow a “logistic” or “expo-linear” growth pattern: after an initial “lag phase” prior to canopy closure, biomass accumulation rates tend to be relatively constant before leveling off toward crop maturity (Kruidhof 2008; Yin et al. 2003). Although there is a wealth of information on cover-crop biomass and N accumulation at maturity, narrow windows of opportunity for planting commercial crops may require cover crops to be killed prematurely (Cherr et al. 2006b). In this case, simple linear equations may be developed to estimate the amount of residues as a function of crop yield (Steiner et al. 1996). Alternatively, degree-day-based models may be used to predict biomass and N accumulation of cover crops as a function of accumulated temperature units (Schomberg et al. 2007; Cherr et al. 2006c).
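The combination of thermal time and a logistic growth curve can be sketched as follows. This is not the model of Schomberg et al. (2007) or Cherr et al. (2006c): it uses the simple daily-average degree-day method and a plain logistic curve (the text also mentions expo-linear forms), and the base temperature, maximum biomass, rate, and midpoint are hypothetical values chosen for illustration.

```python
import math
from typing import Iterable, Tuple


def growing_degree_days(daily_min_max: Iterable[Tuple[float, float]],
                        t_base_c: float = 4.0) -> float:
    """Accumulated thermal time (degree days), daily-average method."""
    return sum(max(0.0, (t_min + t_max) / 2.0 - t_base_c) for t_min, t_max in daily_min_max)


def logistic_biomass(gdd: float, b_max_kg_ha: float, rate: float, gdd_half: float) -> float:
    """Biomass on a logistic curve driven by thermal time rather than calendar days."""
    return b_max_kg_ha / (1.0 + math.exp(-rate * (gdd - gdd_half)))


# Hypothetical: 60 days at 6/20 C with base 4 C gives 9 degree days per day
gdd = growing_degree_days([(6.0, 20.0)] * 60)
biomass = logistic_biomass(gdd, b_max_kg_ha=6000.0, rate=0.01, gdd_half=600.0)
print(gdd, round(biomass))  # biomass if the cover crop is killed at this point
```

Driving the curve with degree days rather than calendar days lets one parameter set be reused across planting dates and years, which is the practical appeal of such models when cover crops must be killed early.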

The carbon content of most plant material is relatively constant over time, with values on the order of 40–44% (Avila 2006; Dinesh et al. 2006). Overall plant N concentration typically declines over time as plants grow (“N dilution curve”), and final tissue N concentration is thus a function of crop type, crop age, and N supply (Lemaire and Gaston 1997). In terms of N accumulation and subsequent N release of cover crops, N concentrations calculated from data outlined by Cherr et al. (2006b) are on the order of 1.9–3.6% for temperate legumes and 2.6–4.8% for tropical legumes, compared to 0.7–2.5% for nonleguminous crops; assuming a C content of about 40%, these translate into C:N ranges of about 11–21, 8–15, and 16–57, respectively. Calegari (2003) provided a detailed overview of the mineral composition and C:N ratios of different cover crops grown in Brazil. Such information provides insight into the overall nutrient supply capacity of cover-crop residues, though values may differ depending on local soil fertility regimes. As cover crops mature, dry matter allocation gradually shifts toward structural and reproductive parts (Cherr et al. 2006c). With aging, both the leaf fraction and the N content of leaves and stems decrease, whereas more recalcitrant compounds and seed proteins may accumulate (Cherr 2004; Cherr et al. 2006b; Lemaire and Gaston 1997). Increasing plant density will result in earlier canopy closure, higher initial biomass accumulation rates, and dry matter allocation to less recalcitrant, high-N plant parts, while excessively high plant densities may reduce growth due to crowding (Cherr et al. 2006b). Repeated mowing of sod-forming or indeterminate cover crops can delay the shift toward more recalcitrant plant parts, enhance N content, and increase total biomass production (Cherr et al. 2006b; Snapp and Borden 2005).
Planting density and the timing of “mowing” or “killing” cover crops thus affect both residue quantity and quality and may be used to manipulate system dynamics.
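The conversion from tissue N concentration to C:N ratio is a single division, C:N = C% / N%. The sketch below assumes a C content of 40%, the lower end of the 40–44% range given above (with 44% C, every ratio would be 10% higher); the N ranges are those quoted in the text, and the function name is illustrative.

```python
def c_to_n_ratio(n_percent: float, c_percent: float = 40.0) -> float:
    """C:N ratio from tissue C and N concentrations (% of dry matter)."""
    if n_percent <= 0:
        raise ValueError("N concentration must be positive")
    return c_percent / n_percent


# N concentration ranges (% N) quoted in the text
n_ranges = {
    "temperate legumes": (1.9, 3.6),
    "tropical legumes": (2.6, 4.8),
    "nonleguminous crops": (0.7, 2.5),
}
for group, (n_low, n_high) in n_ranges.items():
    # Higher N concentration gives a lower C:N, so the range bounds swap
    print(f"{group}: C:N {c_to_n_ratio(n_high):.0f}-{c_to_n_ratio(n_low):.0f}")
```

Because C varies little while N varies several-fold, the C:N ratio of a residue is driven almost entirely by its N concentration, which is why crop age and plant density matter so much for residue quality.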

2.5.3 Cover-Crop Termination and Residue Management

At the end of the fallow season, cover crops may be killed by herbicide, mowing, flaming, or a crimper (Sustainable Agriculture Network 2007; Calegari 2003; Sullivan 2003; Lu et al. 2000; Masiunas 1998). Mowing may result in the formation of a compact mulch layer, which, in turn, may help to conserve soil moisture and reduce soil erosion (Fig. 2.4). Rolled residues decompose more slowly than mowed or herbicide-killed residues, and the residue layer also tends to persist longer and provide more effective long-term erosion control (Lu et al. 2000). The timing of mowing relative to cover-crop development stage is critical for maximizing biomass and N accumulation while reducing the risk that cover crops regrow or set seed and thereby interfere with the subsequent commercial crop (Prado Wildner et al. 2004; Sullivan 2003). The optimal time of killing also depends on the cover crop's main function. If soil conservation and SOM buildup are priorities, older and more lignified residues may be preferable. However, delayed killing may hamper the effectiveness of rollers/crimpers, the residues are more likely to interfere with planting equipment, and the resulting higher C:N ratio can increase the risk of initial N immobilization. Mowing and herbicide use, on the other hand, will increase residue decomposition and subsequent mineralization (Snapp and Borden 2005). Many farmers may opt to delay planting after residue kill to reduce the risk of herbivores feeding on the residues moving to the new crop, to allow residues to settle adequately, which facilitates planting operations, and to prevent negative effects of allelopathic compounds on the emerging crop (Prado Wildner et al. 2004). Alternatively, placing seeds below the residue layer can reduce the risk of allelopathic substances hampering initial growth (Altieri et al. 2008).

2.5.4 Tillage

Soil incorporation of cover crops enhances soil-residue contact and buffers residue moisture content, both of which tend to speed up decomposition, whereas surface-applied residues may have a greater capacity for N immobilization (Cherr et al. 2006b). Surface application of residues also favors saprophytic decomposition by fungi, whereas bacterial decomposition predominates in incorporated residues, and repeated tillage tends to greatly enhance mineralization (Lal et al. 2000). In a lettuce production system, leaving cowpea cover-crop residues as a surface mulch suppressed weeds much more effectively than incorporating them, but it also reduced lettuce yields by 20% (Ngouajio et al. 2003). No-tillage may reduce labor costs, energy use, and erosion risk while increasing carbon sequestration, biodiversity, and soil moisture conservation (Triplett and Dick 2008; Peigné et al. 2007; Giller 2001). In Brazil, the integrated use of cover crops with no-tillage proved critical for enhancing and sustaining SOM (Calegari 2003). These techniques are complementary and work synergistically, whereas conventional tillage promotes rapid breakdown of SOM, which may partially offset cover-crop benefits (Phatak et al. 2002). However, in organic production systems, no-tillage may increase the incidence of grassy and perennial weeds, while on poorly drained or poorly structured soils and under excessively wet conditions it may adversely affect soil tilth, crop growth, and the incidence of plant pathogens, and may also increase the risk of N immobilization (Peigné et al. 2007). Although no-tillage and the presence of crop residues near the surface may reduce soil evaporation, they can promote root proliferation near the soil surface, thus rendering subsequent commercial crops more vulnerable to prolonged drought stress (Cherr et al. 2006a).

2.5.5 Synchronization

Residue decomposition rates depend on cover-crop composition, management, and pedo-climatic conditions (Snapp and Borden 2005). Release patterns tend to be highly variable in both space and time. The release of readily available nutrients from cover-crop residues, therefore, may not coincide with peak nutrient requirements of the subsequent crop (poor synchrony). This problem is evident from the large number of studies reviewed by Sarrantonio and Gallandt (2003) in which nutrient release was either premature or too late. Residue C:N ratios largely determine initial decomposition rates, together with factors such as the content of water-soluble and intermediately available carbon compounds in the residue (Ma et al. 1999). Nitrogen allocation to root systems may be on the order of 7–32%, and 20–25% of its N may be released to the soil prior to crop senescence (Cherr et al. 2006b). Moreover, under hot and humid conditions, nutrient release from low-C:N residues may be premature, and N-leaching losses can be very high (Cherr et al. 2007; Giller 2001). Conversely, cold and/or dry conditions, more recalcitrant residues, and N-limited conditions will delay initial release, and net N immobilization may hamper initial growth of commercial crops (Cherr et al. 2006b; Sarrantonio and Gallandt 2003). Better synchronization, however, requires improved understanding of residue decomposition and net mineralization. Since these processes are affected by a large number of biotic, pedo-climatic, and management factors, decomposition models may be needed to provide better insight into how interactions among management factors come into play. Such models may then be integrated into decision-support tools for farm managers and advisors, which was the rationale for developing the NDICEA model (van der Burgt et al. 2006).
Such tools can thus be used to improve the synchronization of nutrient-release patterns with crop demand, which should facilitate the successful integration of cover crops into conventional systems. Based on model predictions, management options such as different spatial and/or temporal crop arrangements, cover-crop mixtures to modify initial C:N ratios, time and method of killing, and method of incorporation, among others, may be used to enhance synchronization (Weil and Kremen 2007; Cherr et al. 2006b; Balkcon and Reeves 2005; Sullivan 2003). For example, modifying the seed-mixture ratio of a rye and vetch biculture can improve synchronization: increasing the vetch:rye ratio will speed up initial mineralization and reduce the risk of initial immobilization, but may increase N-leaching risks (Kuo and Sainju 1997; Teasdale and Abdul-Baki 1998). In summary, poor synchronization causes inefficiencies and increases potential nutrient losses, and it is one of the key factors deterring conventional farmers from adopting cover-crop-based systems. The use of cover-crop mixes, improved timing of mowing and/or incorporation, and decision-support tools such as NDICEA are among the key options to enhance synchronization.
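The effect of the mixture ratio on residue quality can be illustrated with simple arithmetic. The sketch below computes the C:N ratio of a rye–vetch residue mixture as total C divided by total N, which is generally lower than the simple mean of the two component ratios because the N-rich vetch dominates the denominator. The carbon and nitrogen concentrations, the 6000 kg/ha total biomass, and the 25:1 immobilization threshold are illustrative assumptions, not values from the studies cited above, and the vetch share refers to residue biomass rather than seeding rate.

```python
# Illustrative C:N arithmetic for a rye-vetch residue mixture.
# All concentrations and the threshold below are assumed, illustrative values.

RYE = (0.42, 0.009)    # assumed (carbon fraction, nitrogen fraction) of rye dry matter, C:N ~ 47
VETCH = (0.40, 0.036)  # assumed (carbon fraction, nitrogen fraction) of vetch dry matter, C:N ~ 11
IMMOBILIZATION_THRESHOLD = 25.0  # approximate rule of thumb for net N immobilization (assumption)


def mixture_cn(components):
    """Return the C:N ratio of a residue mixture.

    components: iterable of (dry_matter_kg_ha, carbon_fraction, nitrogen_fraction).
    The ratio is total C / total N, not the average of the component ratios.
    """
    components = list(components)
    total_c = sum(dm * c for dm, c, _ in components)
    total_n = sum(dm * n for dm, _, n in components)
    return total_c / total_n


def rye_vetch_cn(vetch_share, total_dm_kg_ha=6000.0):
    """C:N of a rye-vetch mixture; `vetch_share` is vetch's fraction of residue biomass."""
    vetch_dm = total_dm_kg_ha * vetch_share
    rye_dm = total_dm_kg_ha - vetch_dm
    return mixture_cn([(rye_dm, *RYE), (vetch_dm, *VETCH)])


if __name__ == "__main__":
    for share in (0.0, 0.25, 0.5, 0.75, 1.0):
        cn = rye_vetch_cn(share)
        side = "above" if cn > IMMOBILIZATION_THRESHOLD else "below"
        print(f"vetch share {share:.2f}: C:N = {cn:.1f} ({side} the assumed 25:1 threshold)")
```

With these assumed values, a 50:50 biomass mix gives a C:N of about 18, well below the roughly 29 obtained by averaging the component ratios. This illustrates why even modest vetch additions can shift residues out of the likely immobilization range, while the larger pool of readily mineralizable N is also what raises the leaching risk.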

2.6 Limitations and Challenges

2.7 Information and Technology Transfer

Although cover crops provide a myriad of services, their adoption by conventional farmers has typically been slow (Sarrantonio and Gallandt 2003). In Brazil, they were introduced during the 1970s, but wide-scale adoption took several decades (Prado Wildner et al. 2004). Potential challenges include additional production costs (in terms of land, labor, and inputs), the complexity of cover-crop-based systems, the lack of pertinent information and suitable technology transfer methods, the uncertainty of nutrient-release patterns from cover-crop residues, and lack of secure land tenure (Singer and Nusser 2007; Cherr et al. 2006b; Nyende and Delve 2004; Sarrantonio and Gallandt 2003; Lu et al. 2000). This additional complexity, combined with a lack of information on suitable management practices and the perceived risks associated with cover-crop-based systems, deters growers from adopting them (Shennan 2008; Sarrantonio and Gallandt 2003).

Regarding information on cover crops, a search of the CAB citation index for “cover crops” indicated increased interest in cover crops during the past decades. The annual number of papers on this topic decreased from 74 (1961–1970) to 37 (1971–1980), but then increased again to 56 (1981–1990), 160 (1991–2000), and 221 (2001–2007). Despite this impressive increase in publication numbers, producers still cite a lack of useful information about cover crops as one of the greatest barriers to their use (Singer and Nusser 2007). Although most farmers routinely used cover crops during the first half of the last century, this traditional knowledge base has gradually been lost. Even among research and extension faculty, there was a complete erosion of knowledge and experience as faculty members with a more traditional farm background retired. Moreover, during the past decades, academic interest has shifted toward genetic engineering, typically resulting in the recruitment of scientists lacking basic agronomic knowledge. Furthermore, most conventional farmers cannot afford the economic risk associated with experimentation and exploration of suitable cover-crop technologies and thus increasingly depend on external information sources (Weil and Kremen 2007; Cherr et al. 2006b). Therefore, a lack of appropriate information and technology transfer approaches continues to be among the key factors hampering the adoption of cover-crop-based systems (Bunch 2000).

The traditional “top-down” approach, in which research and development institutes provide technical solutions to farmers without a thorough understanding of local socioeconomic conditions and agroecosystems, appears to be especially ineffective for cover-crop-based systems (Anderson et al. 2001). Establishing “innovation groups” (technology development and exchange structures in which farmers play a key role) and/or “farmer-to-farmer” training networks (in which innovative farmers act as educators) may be more appropriate for propagating cover-crop-based technologies (Anderson et al. 2001; Horlings 1998). Because most university programs are still poorly equipped to address the specific needs of organic farmers, this producer group, which appears to benefit greatly from the use of cover crops, may still be forced to engage in on-farm experimentation with cover-crop-based systems (Linares et al. 2008).

2.7.1 Resource Management

The growth and nutrient accumulation of cover crops may vary greatly between fields and years, and subsequent nutrient-release patterns are also affected by a large number of pedo-climatic and management factors. Limited knowledge of these processes at the field scale will result in poor synchronization between nutrient release by cover crops and the demand of subsequent commercial crops, thereby increasing the risk of inefficient N use and poor system performance (Cherr et al. 2006b). Although simulation models could capture some of this complexity, most were developed for scientists and are difficult to implement, whereas models for informed decision-making and improved management of cover crops must combine a sound scientific basis with a practice-oriented design (van der Burgt et al. 2006).
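To illustrate how even a very simple model can expose poor synchrony, the sketch below assumes first-order decay of residue N, N_rel(t) = N0 * (1 - exp(-k t)), with the rate constant k scaled by a Q10 temperature response, and a logistic curve for cumulative crop N uptake. It reports the largest excess of released over absorbed N, a crude indicator of N exposed to leaching. This is a generic textbook-style formulation, not the NDICEA model, and all parameter values (120 kg N/ha in residues, k = 0.03 per day at 20 °C, Q10 = 2, crop uptake of 150 kg N/ha centered around day 50) are hypothetical. Losses, immobilization, and moisture effects are ignored.

```python
import math


def temperature_adjusted_k(k_ref, temp_c, ref_temp_c=20.0, q10=2.0):
    """Scale a reference decay constant (per day) with an assumed Q10 temperature response."""
    return k_ref * q10 ** ((temp_c - ref_temp_c) / 10.0)


def residue_n_released(n0, k, day):
    """Cumulative residue N released (kg N/ha) after `day` days under first-order decay."""
    return n0 * (1.0 - math.exp(-k * day))


def crop_n_uptake(n_max, rate, t_mid, day):
    """Cumulative crop N uptake (kg N/ha), modelled as a logistic curve (assumption)."""
    return n_max / (1.0 + math.exp(-rate * (day - t_mid)))


def peak_surplus(n0, k, n_max, rate, t_mid, days):
    """Return (day, kg N/ha) of the largest excess of released N over crop uptake.

    The excess is mineral N not yet taken up by the crop, i.e. N exposed to loss.
    Losses and immobilization are ignored, so this is only an indicator of asynchrony.
    """
    best_day, best = None, float("-inf")
    for d in days:
        surplus = residue_n_released(n0, k, d) - crop_n_uptake(n_max, rate, t_mid, d)
        if surplus > best:
            best_day, best = d, surplus
    return best_day, best


if __name__ == "__main__":
    # Hypothetical scenario: 120 kg N/ha in legume residue, k_ref = 0.03/day at 20 C,
    # and a crop taking up 150 kg N/ha with its steepest uptake around day 50.
    for label, temp in (("cool (12 C)", 12.0), ("warm (28 C)", 28.0)):
        k = temperature_adjusted_k(0.03, temp)
        day, surplus = peak_surplus(120.0, k, 150.0, 0.1, 50.0, range(121))
        print(f"{label}: k = {k:.3f}/day, peak surplus {surplus:.0f} kg N/ha on day {day}")
```

With these hypothetical settings, the warm scenario releases residue N well ahead of crop demand and produces an early surplus more than twice that of the cool scenario, mirroring the premature release under hot, humid conditions noted earlier. A decision-support tool would add site-specific weather, soil, and loss processes on top of such a core.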

Combining cover crops with no-tillage provides multiple benefits but also poses several additional challenges. Cover crops grown as live mulches, or annual cover crops such as ryegrass or vetch that are not killed effectively, can compete with cash crops and reduce yields (Hiltbrunner et al. 2007; Teasdale et al. 2007; Madden et al. 2004). Cover-crop residues can hamper soil cultivation and initial germination (due to inconsistent seed cover), delay planting operations (since residues need some time to decompose or die), harbor pests and diseases, decrease initial crop growth (due to N immobilization, release of growth-inhibiting compounds, or crop competition), and/or reduce soil temperatures (Peigné et al. 2007; Teasdale et al. 2007; Weil and Kremen 2007; Avila 2006; Cherr et al. 2006b; Masiunas 1998). In sweet maize, however, a reduction in initial plant stands in no-till rye–vetch cover-crop-based systems was offset by improved growth, and yields were still higher than in a bare-soil control (Carrera et al. 2004). For other vegetable crops, by contrast, the use of cover crops delayed crop maturation and/or reduced both initial growth and final yield (Avila 2006; Sarrantonio and Gallandt 2003; Abdul-Baki et al. 1996, 1999; Creamer et al. 1996). Especially for high-value commodities such as vegetables, where earliness may translate into significant price premiums, such effects may strongly reduce profitability (Avila 2006; Creamer et al. 1996). Only after researchers became aware of these issues and redesigned the system (e.g., by using strip-till) could this problem be addressed (Phatak et al. 2002). Such adaptive learning and innovation cycles should be an integral part of training programs to enhance the efficiency of technology transfer (Douthwaite et al. 2002).

Under water-limiting conditions, cover crops will also deplete residual soil moisture and can thereby reduce yields of subsequent crops (Sustainable Agricultural Network 2007). Under semiarid conditions in California, winter cover crops reduced soil water storage by 65–74 mm, thereby affecting the preirrigation needs and/or performance of subsequent annual crops (Michell et al. 1999). In perennial systems (e.g., vineyards), perennial cover crops were shown to have both higher root densities and deeper root systems, resulting in more pronounced soil water depletion, which affects both the spatial and temporal availability of water. Grapevines may adapt their rooting pattern to minimize water stress, while supplemental irrigation mainly benefits the cover crops (Celette et al. 2008). Although cover crops may provide a slow-release source of N, which is often perceived to be more efficient than inorganic N, poor synchronization can result in large N losses, greatly reducing the use efficiency of residue-derived N and increasing the risk of N leaching (Cherr et al. 2007).
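To put the reported soil-water reduction into irrigation terms: a depth of 1 mm of water over 1 ha corresponds to 10 m³, so the 65–74 mm reduction reported for California equals roughly 650–740 m³ of water per hectare. The sketch below performs this conversion; the 40-ha field and the 75% application efficiency used in the example are hypothetical values chosen only for illustration.

```python
M3_PER_MM_PER_HA = 10.0  # 1 mm of water over 10,000 m^2 equals 10 m^3


def depth_to_volume_m3(depth_mm, area_ha=1.0):
    """Net water volume (m^3) corresponding to a water depth (mm) over an area (ha)."""
    return depth_mm * M3_PER_MM_PER_HA * area_ha


def gross_irrigation_m3(depth_mm, area_ha=1.0, application_efficiency=1.0):
    """Water that must be applied to replace `depth_mm`, given an efficiency in (0, 1]."""
    if not 0.0 < application_efficiency <= 1.0:
        raise ValueError("application_efficiency must be in (0, 1]")
    return depth_to_volume_m3(depth_mm, area_ha) / application_efficiency


if __name__ == "__main__":
    for depth in (65.0, 74.0):
        per_ha = depth_to_volume_m3(depth)
        # Hypothetical 40-ha field irrigated at an assumed 75% application efficiency.
        field = gross_irrigation_m3(depth, area_ha=40.0, application_efficiency=0.75)
        print(f"{depth:.0f} mm -> {per_ha:.0f} m3/ha net; {field:,.0f} m3 gross for 40 ha")
```

Dividing by the application efficiency reflects that, in practice, more water must be applied than the soil profile ultimately retains; the efficiency value itself depends on the irrigation method and is not given in the source.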

2.7.2 Socioeconomic Constraints