Abstract

We show how scaled Bregman distances can be used for the goal-oriented design of new outlier- and inlier-robust statistical inference tools. These extend several known distance-based robustness (respectively, stability) methods at once. Numerous special cases are illustrated, including 3D computer graphical comparison methods. For the discrete case, some universally applicable results on the asymptotics of the underlying scaled-Bregman-distance test statistics are derived as well.

Dedicated to the Indian Statistical Institute and to the People of India.

1 Introduction

It is well known that density-based distances (also known as divergences, disparities, (dis)similarity measures, proximity measures) between two probability distributions serve as useful tools for parameter estimation, testing for goodness-of-fit, homogeneity or independence, clustering, change point detection, Bayesian decision procedures, as well as for other research fields such as information theory, signal processing including image and speech processing, pattern recognition, feature extraction, machine learning, econometrics, and statistical physics. For some comprehensive surveys on the distance approach to statistics and probability, the reader is referred to the insightful books of, e.g. Liese and Vajda (1987), Read and Cressie (1988), Vajda (1989), Csiszar and Shields (2004), Stummer (2004), Pardo (2006), Liese and Miescke (2008), Basu et al. (2011), Voinov et al. (2013), and the references therein; see also the survey papers of, e.g. Maasoumi (1993), Golan (2003), Liese and Vajda (2006), Vajda and van der Meulen (2010). Distance-based bounds of Bayes risks (e.g. in finance) can be found, e.g. in Stummer and Vajda (2007), see also Stummer and Lao (2012). Amongst others, some important density-based distribution-distance classes are:

- the Csiszar-Ali-Silvey divergences CASD (C. 1963, A.-S. 1966): this includes, e.g. the total variation distance, exponentials of Renyi cross-entropies (Hellinger integrals), and the power divergences (also known as \(\alpha {-}\)entropies, Cressie-Read measures, Tsallis cross-entropies); the latter cover, e.g. the Kullback–Leibler information divergence (relative entropy), the (squared) Hellinger distance, the Pearson chi-square divergence;

- the “classical” Bregman distances CBD (see, e.g. Bregman (1967), Csiszar (1991), Csiszar (1994), Csiszar (1995), Pardo and Vajda (1997), Pardo and Vajda (2003)): this includes, e.g. the density power divergences DPD (also known as Basu-Harris-Hjort-Jones BHHJ distances, cf. Basu et al. (1998)) with the squared \(L_2{-}\)norm as special case.

The modern use of density-based distances between distributions with a view towards robustness investigations started with the seminal paper of Beran (1977), which was considerably extended by Lindsay (1994), Basu and Lindsay (1994); the latter two articles develop insightful comparisons between the robustness performance of some (in effect) CASD distances, in terms of the important concept of the residual adjustment function RAF (having the Pearson residual between the data and the candidate model as its argument). In contrast, the robustness properties of the above-mentioned DPDs were studied by Basu et al. (1998). The growing literature of these two research lines is comprehensively summarized in the book of Basu et al. (2011); more recently, Basu et al. (2013, 2015a) develop the asymptotic distribution of DPD test statistics, Ghosh and Basu (2013, 2014) apply the DPD family for robust and efficient parameter estimation for linear regressions and for censored data, whereas Basu et al. (2015b) use the DPD for the development of robust tests for the equality of two normal means. Concerning some recent progress of divergences, Stummer (2007) as well as Stummer and Vajda (2012) introduced the concept of scaled Bregman divergences/distances SBD, which enlarges all the above-mentioned (nearly disjoint) CASD and CBD divergence classes at once. Hence, the SBD class constitutes a broad framework for dealing with a wide range of data analyses in a well-structured way; for each concrete data analysis, the free choice of the two major SBD building blocks (generator, scaling measure) implies much flexibility for interdisciplinary situation-based inference, see e.g. Kißlinger and Stummer (2013, 2015a, b) for corresponding exemplary contexts of applicability for parameter estimation, goodness-of-fit testing, model search, change detection, etc. 
Combining the insights of the preceding explanations suggests that all the above-mentioned robustness and efficiency investigations can be covered at once and further extended by means of SBDs. Accordingly, the main goals of this paper are (i) to build up an SBD-based bivariate statistical-engineering paradigm for the goal-oriented design of new outlier- and inlier-robust statistical inference tools, and (ii) to derive asymptotic distributions of the corresponding SBD test statistics. To achieve this, we first develop in Sect. 2 some new classes of scale connectors. Subsequently, in order to obtain a comfortable interpretability of the underlying robustness structure through 3D visualization, we introduce in Sect. 3 the concept of density-pair adjustment functions DAF(0) and DAF(1) of zeroth and first order; the latter is a flexible bivariate extension of the above-mentioned (univariate) RAF. Finally, for the finite discrete case the asymptotics (ii) are investigated in Sect. 4. Throughout this paper, we present numerous 3D plots in order to illustrate the immediate applicability of our methods.

2 Scaled Bregman Divergences

Suppose that we want to measure the divergence (distance, dissimilarity, proximity) D(P, Q) between two probability distributions P, Q on a space \(\mathscr {X}\) (with \(|\mathscr {X}|\ge 2\)) equipped with a \(\sigma {-}\)algebra \(\mathscr {A}\). Typically, one has the following constellations:

- P and Q are both “theoretical distributions”, e.g. \(P=Poisson(a_1)\) and \(Q=Poisson(a_2)\);

- P and Q are both (random sample versions of) “data-derived distributions”, which appear, e.g. in the context of change detection, two-sample testing; for instance, \(P:= P_{N}^{emp} := \frac{1}{N} \cdot \sum _{i=1}^{N} \delta _{X_{i}}[\cdot ]\) is the histogram-conform empirical distribution [Footnote 1] of an iid sample \(X_1, \ldots , X_N\) of size N from \(P_{\theta _1}\) and \(Q:= P_{M}^{emp}\) is the empirical distribution of an iid sample \(\widetilde{Y}_1, \ldots , \widetilde{Y}_M\) from \(P_{\theta _2}\);

- P is a data-derived distribution and Q is a theoretical distribution; e.g. in the context of minimum distance estimation or goodness-of-fit testing, \(P = P_{N}^{emp}\) and \(Q=P_{\theta }\) is a hypothetical candidate for the unknown underlying true sampling distribution \(P_{\theta _{1}}\). Thus, \(D(P_{N}^{emp},P_{\theta })\) measures the discrepancy between the “pattern” of the observed data and the “pattern” predicted by the candidate model.

Since the ultimate purposes of a (divergence-based) statistical inference may vary from case to case, some goal-oriented situation-based well-structured flexibility can be obtained by using a toolbox \(\mathscr {D} := \{D_{\phi ,M}(P,Q) : \phi \in \varPhi , M \in \mathscr {M} \}\) of divergences which is far-reaching due to various different choices of a “generator” \(\phi \in \varPhi \) and a “scaling measure” \(M \in \mathscr {M}\). In particular, this should also cover robustness issues (in a wide sense). To find good choices of \(\mathscr {D}\) is one of the purposes of “statistical engineering”. One possible candidate for a (density-based) wide divergence family \(\mathscr {D}\) is the concept of scaled Bregman divergences SBD of Stummer (2007), Stummer and Vajda (2012). To describe this, for the sake of brevity we deal with the generator class \(\varPhi =\varPhi _{C_1}\) of functions \(\phi :(0,\infty )\mapsto {\mathbb {R}}\) which are continuously differentiable with derivative \(\phi ^{\prime }\), strictly convex and continuously extended to \(t=0\), and (w.l.o.g.) satisfy \(\phi (1)=0\), \(\phi ^{\prime }(1)=0\). Furthermore, for a fixed \(\sigma \)-finite (“reference”) measure \(\lambda \) on \(\mathscr {X}\) we denote by \(\mathscr {M}_{\lambda }\) resp. \(\mathscr {P}_{\lambda }\) the family of all \(\sigma {-}\)finite measures M on \(\mathscr {X}\) resp. all probability measures (distributions) P on \(\mathscr {X}\) having densities \(m={\frac{\mathrm {d}M}{\mathrm {d}\lambda }} \ge 0\) resp. \(p={\frac{\mathrm {d}P}{\mathrm {d}\lambda }} \ge 0\) with respect to \(\lambda \). For \(Q \in \mathscr {P}_{\lambda }\), we write \(q={\frac{\mathrm {d}Q}{\mathrm {d}\lambda }} \ge 0\). Within such a context (and even for non-differentiable generators \(\phi \)), Stummer (2007), Stummer and Vajda (2012) introduced the following framework of statistical distances:

Definition 1

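Let \(\phi \in \varPhi _{C_1}\) and let \(\lambda \) be a \(\sigma \)-finite measure on \(\mathscr {X}\). Then the Bregman divergence of \(P,Q \in \mathscr {P}_{\lambda }\) scaled by \(M \in \mathscr {M}_{\lambda }\) is defined by

$$\begin{aligned} B_{\phi }\left( P,Q\,|\,M\right) := \int _{{\mathscr {X}}} \left[ \phi \left( {\frac{p(x)}{m(x)}}\right) -\phi \left( {\frac{q(x)}{m(x)}}\right) -\phi ^{\prime }\left( {\frac{q(x)}{m(x)}}\right) \cdot \left( {\frac{p(x)}{m(x)}}-{\frac{q(x)}{m(x)}}\right) \right] \mathrm {d}M(x) \end{aligned}$$ (1)

$$\begin{aligned} = \int _{{\mathscr {X}}} \left[ \phi \left( {\frac{p(x)}{m(x)}}\right) -\phi \left( {\frac{q(x)}{m(x)}}\right) -\phi ^{\prime }\left( {\frac{q(x)}{m(x)}}\right) \cdot \left( {\frac{p(x)}{m(x)}}-{\frac{q(x)}{m(x)}}\right) \right] m(x) \; \mathrm {d}\lambda (x) \ . \end{aligned}$$ (2)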

To guarantee the existence of the integrals in (1), (2) (with possibly infinite values), the zeros of p, q, m have to be combined by proper conventions (see, e.g. Kißlinger and Stummer (2013) for some discussions; the full details will appear elsewhere).

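Notice that, for fixed M, one gets \(B_{\phi }\left( P,Q\,|\,M\right) = B_{\tilde{\phi }}\left( P,Q\,|\,M\right) \) for any \(\tilde{\phi }(t):= \phi (t) + c_1 + c_2 \cdot t\) (\(t \in ]0,\infty [\)) with constants \(c_1,c_2 \in \mathbb {R}\). Moreover, there exist “essentially different” pairs \((\phi ,M)\) and \((\breve{\phi },\breve{M})\) (where \(\phi (t) - \breve{\phi }(t)\) is nonlinear in t) for which \(B_{\phi }\left( P,Q\,|\,M\right) = B_{\breve{\phi }}\left( P,Q\,|\,\breve{M}\right) \), cf. Remark 1a below. For power functions

$$\begin{aligned} \phi _{\alpha }(t) := \frac{t^{\alpha }-1}{\alpha (\alpha -1)} - \frac{t-1}{\alpha -1} \ , \quad t \in ]0,\infty [ \ , \ \alpha \in \mathbb {R}\backslash \{0,1\} \ , \end{aligned}$$ (3)

one obtains from (2) the scaled Bregman power distances

$$\begin{aligned} B_{\phi _{\alpha }}\left( P,Q\,|\,M\right) = \int _{{\mathscr {X}}} \frac{m(x)^{1-\alpha }}{\alpha -1} \left[ \frac{p(x)^{\alpha }}{\alpha } + \frac{(\alpha -1) \, q(x)^{\alpha }}{\alpha } - p(x) \, q(x)^{\alpha -1} \right] \mathrm {d}\lambda (x) \end{aligned}$$ (4)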

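(cf. Stummer and Vajda (2012), Kißlinger and Stummer (2013)), especially

$$\begin{aligned} B_{\phi _2}\left( P,Q\,|\,M\right) = \frac{1}{2} \int _{{\mathscr {X}}} {\frac{(p(x)-q(x))^2}{m(x)}} \; \mathrm {d}\lambda (x) \ . \end{aligned}$$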

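In the discrete case, where \(\mathscr {X} = \{x_1, x_2, \ldots \}\) is finite or countable and \(\lambda := \lambda _{count}\) is the counting measure (i.e., \(\lambda _{count}[\{x_k\}] =1\) for all k), the densities p, q, m are (probability) mass functions and (2) becomes

$$\begin{aligned} B_{\phi }\left( P,Q\,|\,M\right) = \sum _{x \in {\mathscr {X}}} m(x) \left[ \phi \left( {\frac{p(x)}{m(x)}}\right) -\phi \left( {\frac{q(x)}{m(x)}}\right) -\phi ^{\prime }\left( {\frac{q(x)}{m(x)}}\right) \cdot \left( {\frac{p(x)}{m(x)}}-{\frac{q(x)}{m(x)}}\right) \right] \ . \end{aligned}$$ (5)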

For instance, if \(P:= P_{N}^{emp} := \frac{1}{N} \cdot \sum _{i=1}^{N} \delta _{X_{i}}[\cdot ]\) is the above-mentioned data-derived empirical distribution of an iid sample \(X_1, \ldots , X_N\) of size N, the corresponding probability mass functions are the relative frequencies \(p(x) = p_{N}^{emp}(x) = \frac{1}{N} \cdot \# \{ i \in \{ 1, \ldots , N\}: X_i =x \} \); if \(Q=P_{\theta }\) is a hypothetical candidate model, then \(q(x) = p_{\theta }(x)\). In contrast, for \(\mathscr {X}=\mathbb {R}\) and Lebesgue measure \(\lambda := \lambda _{Leb}\), one gets p, q, m as “classical” (probability) densities and (2) reads as a “classical” Lebesgue (Riemann) integral. As an example, take the standard Gaussian density \(p(x) = \frac{1}{\sqrt{2\pi }} \exp \{-x^2/2\}\).
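As a minimal sketch of the discrete case, the following Python/NumPy snippet evaluates the sum form of the scaled Bregman divergence for mass functions p, q, m. It assumes the standard Bregman summand \(m \cdot [\phi (p/m) - \phi (q/m) - \phi ^{\prime }(q/m)(p/m - q/m)]\), the power generator \(\phi _{\alpha }(t) = (t^{\alpha }-1)/(\alpha (\alpha -1)) - (t-1)/(\alpha -1)\) for \(\alpha \notin \{0,1\}\), and strictly positive m. The function names `phi_alpha`, `dphi_alpha`, `scaled_bregman` and `empirical_pmf`, as well as the sample and the candidate model, are illustrative and not taken from the paper.

```python
import numpy as np

def phi_alpha(t, alpha):
    """Power generator phi_alpha (assumed form), valid for alpha not in {0, 1}."""
    return (t**alpha - 1.0) / (alpha * (alpha - 1.0)) - (t - 1.0) / (alpha - 1.0)

def dphi_alpha(t, alpha):
    """Derivative of phi_alpha with respect to t."""
    return (t**(alpha - 1.0) - 1.0) / (alpha - 1.0)

def scaled_bregman(p, q, m, alpha=2.0):
    """Discrete scaled Bregman divergence of p and q, scaled by m (m > 0 assumed)."""
    p, q, m = (np.asarray(a, dtype=float) for a in (p, q, m))
    s, t = p / m, q / m
    terms = m * (phi_alpha(s, alpha) - phi_alpha(t, alpha)
                 - dphi_alpha(t, alpha) * (s - t))
    return float(terms.sum())

def empirical_pmf(sample, support):
    """Relative frequencies of the sample on the given finite support."""
    sample = np.asarray(sample)
    return np.array([(sample == x).mean() for x in support])

# Hypothetical data: a sample of size N = 8 and a candidate model q on {0, 1, 2}.
p = empirical_pmf([0, 0, 1, 2, 1, 0, 0, 1], support=[0, 1, 2])
q = np.array([0.5, 0.3, 0.2])

print(scaled_bregman(p, q, m=q, alpha=2.0))           # scaling by Q
print(scaled_bregman(p, q, m=np.ones(3), alpha=2.0))  # no scaling
```

In this sketch, scaling by m = q with \(\alpha =2\) reproduces one half of the Pearson chi-square sum, while m = 1 gives one half of the squared Euclidean distance between the two mass functions.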

Returning to the general context, for applicability purposes we aim here for a wide class \(\mathscr {M}\) of scaling measures M such that the resulting SBDs \(B_{\phi }\left( P,Q\,|\,M\right) \)

- cover much more than the frequently used concepts of Csiszar-Ali-Silvey divergences CASD and classical Bregman distances CBD,

- can be used to tackle robustness (and many other) issues in a well-structured, finely nuanced way,

- lead (despite the generality involved) to concrete explicit asymptotic results for data analyses where the sample size grows to infinity.

Correspondingly, in the following we confine ourselves to the special subframework \(B_{\phi }\left( P,Q\,|\,W(P,Q)\right) \) with scaling measures of the form \(M=W(P,Q)\) in the sense that \(m(x)=w(p(x),q(x)) \ge 0\) (\(\lambda {-}\)a.a. \(x \in \mathscr {X}\)) for some (measurable) “scale-connector” \(w:[0,\infty [ \times [0,\infty [ \mapsto [0,\infty ]\) between the densities p(x) and q(x) (where w is strictly positive on \(]0,\infty [ \times ]0,\infty [\)). In the discrete case we shall restrict w to \([0,1]\times [0,1]\) (take, e.g. \(P:= P_{N}^{emp}\) and \(Q=P_{\theta }\) as a running example); accordingly, the underlying construction principle and 3D plotting technique of \(B_{\phi _{\alpha }}\left( P,Q\,|\,W_{\beta }(P,Q)\right) \) is illustrated in Fig. 1, where we allow w to depend on a parameter \(\beta \). Within such a context, the following special choices of scale connectors \(w(\cdot ,\cdot )\) are of particular interest:

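1. no scaling: \(M=1\), i.e. \(w(u,v) := w_{no}(u,v) =1\) for all \(u,v \in [0,\infty [\). Then,

$$\begin{aligned} B_{\phi }\left( P,Q\,|\,1\right) = \int _{{\mathscr {X}}} \left[ \phi (p(x)) - \phi (q(x)) - \phi ^{\prime }(q(x)) \cdot (p(x)-q(x)) \right] \mathrm {d}\lambda (x) \end{aligned}$$ (6)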

is the classical Bregman distance CBD between P and Q, generated by \(\phi \). For the particular choice \(\phi =\phi _{\alpha }\) (cf. (3)), \(B_{\phi _{\alpha }}\left( P,Q\,|\,1\right) \) (cf. (4)) is a multiple of the \(\alpha {-}\)order density power divergence DPD\(_{\alpha }\) (also known as BHHJ divergence) of Basu et al. (1998); see also Basu et al. (2011, 2013, 2015a, b), Ghosh and Basu (2013, 2014), for recent applications. Notice that, e.g. for finite space \(\mathscr {X}\), \(B_{\phi _{2}}\left( P,Q\,|\,1\right) \) corresponds to the squared \(L_2{-}\)norm.

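2. multiple idempotency scaling: the scale connector \(w(\cdot ,\cdot )\) is arbitrary with the only constraint that

$$\begin{aligned} w(v,v) = c \cdot v \quad \text {for all}\, v \in [0,\infty [ \, \text {and some constant}\, c \in ]0,\infty [ \ . \end{aligned}$$ (7)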

For instance, this will turn out to be important for obtaining a “straight” (i.e., unmixtured) chi-square distribution for the asymptotics of corresponding scaled Bregman distances in an i.i.d. sample context, see Subcase 3 in Sect. 4 below. Notice that for two multiple idempotency scalings \(w_1\) and \(w_2\), also \(c_1 w_1 + c_2 w_2\) (\(c_1, c_2 \ge 0\) with \(c_1+c_2 >0\)), \(\min \{w_1,w_2\}\) and \(\max \{w_1,w_2\}\) are multiple idempotency scalings; however, the latter two may not inherit the differentiability properties of \(w_1\) and \(w_2\) which may lead to complications in asymptoticity assertions. Let us mention some important special cases (see the figures in Fig. 2 for illustration in the discrete case where \(u,v \in [0,1]\)):

Fig. 2 Some scale connectors; \(c=1\). (a) \(w_{0,1}(u,v) = v\) (all Csiszar-Ali-Silvey divergences). (b) \(w_{0.45,1}(u,v) = 0.45 \cdot u + 0.55 \cdot v\). (c) \(w_{0.45,0.5}(u,v) = (0.45 \sqrt{u} + 0.55 \sqrt{v})^2\). (d) \(w_{0.45,0}(u,v) =u^{0.45} \cdot v^{0.55}\). (e) \(w_{0.45,\tilde{f}_{6}}(u,v)=\frac{1}{6} \log \left( 0.45 e^{6 u} + 0.55 e^{6 v}\right) \). (f) \(w_{E}(u,v) = \dfrac{1+uv-\sqrt{(1-u^2)(1-v^2)}}{u+v}\). (g) \(w_{0.45}^{med}(u,v) = \text {med}\{\min \{u,v\}, 0.45, \max \{u,v\} \}\). (h) \(w_{adj}(u,v)\) with \(h_{in}=-0.33\), \(h_{out}=-0.25\), etc. (i) \(w_{adj}(u,v)\) with \(h_{in}=0\), \(h_{out}=0\), etc. (j) \(w_{adj}^{smooth}(u,v)\) with \(h_{in}=-0.5\), \(h_{out}=0.3\), \(\delta =10^{-7}\), etc. (k) Parameter description for \(w_{adj}(u,v)\), cf. (h), (i), (j). (l) Classical outlier/inlier areas \(A_{out}^{cl}\) resp. \(A_{in}^{cl}\).

2a. Scalings of the form

$$\begin{aligned} w(u,v):= w_{\beta ,f}(u,v):=c\cdot f^{-1}\left( \beta \cdot f(u)+(1-\beta )\cdot f(v)\right) \ , u\in [0,\infty [\ , v\in [0,\infty [ \end{aligned}$$ (8)

for some strictly monotone function \(f:[0,\infty [\rightarrow [0,\infty [\), \(\beta \in [0,1]\). This means that the scale connector \(w_{\beta ,f}\) is a positive multiple of a weighted generalized quasi-linear mean WGQLM (between u and v); for a general comprehensive study on WGQLMs, see e.g. Grabisch et al. (2009). For fixed \(\beta \in [0,1]\) and \(u,v \in [0,\infty [\), we derive from (8) the following useful subsubcases:

2ai. multiple of weighted rth-power mean WRPM, \(r\in \mathbb {R}\backslash \{0\}\):

$$\begin{aligned} w_{\beta ,f_{r}}(u,v):= w_{\beta ,r}(u,v) := c \cdot \left( \beta \cdot u^r+(1-\beta )\cdot v^r\right) ^\frac{1}{r}, \quad \text {where}\, f_{r}(z) := z^r. \nonumber \end{aligned}$$

Notice that there is a one-to-one correspondence between \(w_{\beta ,r}(u,v)\) and \(w_{\beta _1,\beta _2,r}(u,v) := \left( \beta _1\cdot u^r+\beta _2\cdot v^r\right) ^{1/r}\) with \(\beta _1,\beta _2 \ge 0\) such that \(\beta _1 +\beta _2 >0\). For the rest of this paragraph 2ai, we assume \(c=1\). The case \(r=1\) corresponds to weighted-arithmetic-mean scaling (mixture scaling) \(w_{\beta ,1}(u,v) = \beta \cdot u {+}(1-\beta )\cdot v\) (cf. Fig. 2b). In particular, with \(w_{0,1}(u,v) = v\) (cf. Fig. 2a) one obtains the corresponding Csiszar-Ali-Silvey \(\phi \) divergence CASD

$$\begin{aligned} B_{\phi }\left( P,Q\,|\,W_{0,1}(P,Q)\right) = B_{\phi }\left( P,Q\,|\,Q\right) =\int _{{\mathscr {X}}} q(x) \ \phi \left( {\frac{p(x)}{q(x)}}\right) \mathrm {d}\lambda (x) \end{aligned}$$ (9)

(cf. Stummer (2007), Stummer and Vajda (2012)). Hence, in our context every CASD appears as a subsubcase. For \(\phi (t) := \phi _1(t) := t\log t + 1- t\) one arrives at the Kullback–Leibler information divergence KL (relative entropy)

$$\begin{aligned} B_{\phi _1}\left( P,Q\,|\,W_{0,1}(P,Q)\right) = B_{\phi _1}\left( P,Q\,|\,Q\right) =\int _{{\mathscr {X}}} p(x) \ \log \left( {\frac{p(x)}{q(x)}}\right) \mathrm {d}\lambda (x) \ . \nonumber \end{aligned}$$

Moreover, the choice \(\phi (t) := \phi _{1/2}(t)\) (cf. (3)) leads to the (squared) Hellinger distance \(B_{\phi _{1/2}}\left( P,Q\,|\,W_{0,1}(P,Q)\right) = 2 \int _{{\mathscr {X}}} (\sqrt{p(x)}-\sqrt{q(x)})^2 \; \mathrm {d}\lambda (x) \), whereas for \(\phi (t) := \phi _2(t)\) (cf. (3)) we end up with Pearson’s chi-square divergence

$$\begin{aligned} B_{\phi _2}\left( P,Q\,|\,W_{0,1}(P,Q)\right) = B_{\phi _2}\left( P,Q\,|\,Q\right) = \frac{1}{2} \int _{{\mathscr {X}}} {\frac{(p(x)-q(x))^2}{q(x)}} \; \mathrm {d}\lambda (x) \ . \nonumber \end{aligned}$$

For general \(\beta \in [0,1]\), we deduce

$$\begin{aligned}&B_{\phi _2}\left( P,Q\,|\,W_{\beta ,1}(P,Q)\right) = \frac{1}{2} \int _{{\mathscr {X}}} {\frac{(p(x)-q(x))^2}{\beta p(x) + (1-\beta ) q(x)}} \; \mathrm {d}\lambda (x) \nonumber \end{aligned}$$

which is (the general-space-form of) the blended weight chi-square divergence BWCD of Lindsay (1994); in particular, \(B_{\phi _2}\left( P,Q\,|\,W_{1,1}(P,Q)\right) \) is Neyman’s chi-square divergence.

The more general divergences \(B_{\phi _{\alpha }}\left( P,Q\,|\,W_{\beta ,1}(P,Q)\right) \) were used in Kißlinger and Stummer (2013). As far as r is concerned, other interesting special cases (in addition to \(r=1\)) of \(B_{\phi }\left( P,Q\,|\,W_{\beta ,r}(P,Q)\right) \) are the scaling by (multiple of) weighted quadratic mean (\(r=2\)), weighted harmonic mean (\(r=-1\)), and weighted square root mean (\(r=1/2\), cf. Fig. 2c). For instance, the divergence

$$ B_{\phi _2}\left( P,Q\,|\,W_{\beta ,1/2}(P,Q)\right) = \frac{1}{2} \int \limits _{{\mathscr {X}}} {\frac{(p(x)-q(x))^2}{\left( \beta \sqrt{p(x)} +(1-\beta )\sqrt{q(x)}\right) ^2}} \; \mathrm {d}\lambda (x) $$

corresponds to (the general-space-form of) the blended weight Hellinger distance of Lindsay (1994), Basu and Lindsay (1994).

2aii. multiple of weighted geometric mean WGM:

$$\begin{aligned} w_{\beta ,f_{0}}(u,v):= w_{\beta ,0}(u,v) := c \cdot u^{\beta } \cdot v^{1-\beta }, \quad \text {where}\, f_{0}(z) := \log (z) \nonumber \end{aligned}$$

(cf. Fig. 2d). Notice that \(\lim _{r \rightarrow 0} w_{\beta ,r}(u,v) = w_{\beta ,0}(u,v)\). For an exemplary application of \(B_{\phi _{\alpha }}\left( P,Q\,|\,W_{\beta ,0}(P,Q)\right) \) to model search for (auto) regressions, see Kißlinger and Stummer (2015b).

2aiii. multiple of weighted exponential mean WEM, \(r\in \mathbb {R}\backslash \{0\}\) (cf. Fig. 2e):

$$\begin{aligned} w_{\beta ,\tilde{f}_{r}}(u,v):= \frac{c}{r} \cdot \log \left( \beta e^{r u} + (1-\beta ) e^{rv}\right) , \quad \text {where}\, \tilde{f}_{r}(z) := \exp (rz). \end{aligned}$$ (10)

2aiv.

multiple of transformed Einstein sum: if u, v are restricted to [0,1]—as it is the case of probability mass functions in (5)—then for \(u+v >0\)

$$\begin{aligned} w_{E}(u,v) = c \cdot \dfrac{1+uv-\sqrt{(1-u^2)(1-v^2)}}{u+v} \quad \text {where}\, f(z) := \log \left( \frac{1+z}{1-z} \right) \nonumber \end{aligned}$$(cf. Fig. 2f). Within a context not concerned with probability distances, the special case \(c=1\) can be found, e.g. in Grabisch et al. (2009).

2b. (further) limits of weighted rth-power means: for any \(\beta \in ]0,1[\)

$$\begin{aligned}&w_{\beta ,\infty }(u,v) := \lim _{r \rightarrow \infty } w_{\beta ,r}(u,v) = c \cdot \max \{u,v\} \nonumber \\&w_{\beta ,-\infty }(u,v) := \lim _{r \rightarrow -\infty } w_{\beta ,r}(u,v) = c \cdot \min \{u,v\} \ . \nonumber \end{aligned}$$

Notice the following bounds:

$$\begin{aligned} \forall c \in ]0,\infty [ \ \forall \beta \in [0,1] : \ \ c \cdot \min \{u,v\} \le w_{\beta ,r}(u,v) \le c \cdot \max \{u,v\} \ , \quad u,v \in [0,\infty [. \nonumber \end{aligned}$$

2c.

\(\beta {-}\)median BM, \(\beta \in [0,\infty [\) (cf. Fig. 2g):

$$\begin{aligned} w_{\beta }^{med} := c \cdot \text {med}\{\min \{u,v\}, \beta , \max \{u,v\} \} \ \end{aligned}$$(11)where \(med(x_1,x_2,x_3)\) denotes the second smallest of the three numbers \(x_1,x_2,x_3\).

2d. flexible robustness-adjustable scale connector (“robustness adjuster”): here, we confine ourselves to \(u,v \in [0,1]\) which holds for the discrete case where \(u=p(x)\), \(v=q(x)\) are probability masses (cf. (5)). For parameters \(\underline{\varepsilon }_0\), \(\underline{\varepsilon }_1\), \(\overline{\varepsilon }_0\), \(\overline{\varepsilon }_1\), \(u_{in}\), \(v_{in}\), \(u_{out}\), \(v_{out}\), \(h_{in}\), \(h_{out}\) satisfying the constraints \(0\le u_{in} \le 1- \underline{\varepsilon }_1 \le 1\), \(0\le \overline{\varepsilon }_0 \le 1- u_{out} \le 1\), \(0\le \underline{\varepsilon }_0 \le 1- v_{in} \le 1\), \(0\le v_{out} \le 1-\overline{\varepsilon }_1 \le 1\), \(h_{in} > -1\), \(h_{out} > -1\), we define \(\overline{\varepsilon }_v:=\overline{\varepsilon }_0+v(\overline{\varepsilon }_1-\overline{\varepsilon }_0)\), \(\underline{\varepsilon }_v:=\underline{\varepsilon }_0+v(\underline{\varepsilon }_1-\underline{\varepsilon }_0)\) and

$$\begin{aligned} w(u,v):= & {} w_{adj}(u,v) \ := \ w_{mid}(u,v) + w_{in}(u,v) + w_{out}(u,v) \nonumber \\:= & {} 1+(1-v)\cdot \left\{ |u-v|\cdot \left( \dfrac{1}{\overline{\varepsilon }_v} \mathbbm {1}_{[0,\overline{\varepsilon }_v]}(u-v)+\dfrac{1}{\underline{\varepsilon }_v}\mathbbm {1}_{[-\underline{\varepsilon }_v,0)}(u-v)\right) \right. \nonumber \\&\left. -\mathbbm {1}_{[-\underline{\varepsilon }_v;\overline{\varepsilon }_v]}(u-v)\right\} \nonumber \\&+ sgn(h_{in})\cdot \max \left( |h_{in}|\left( 1-\frac{1}{v_{in}}-\frac{u}{u_{in}}+\frac{v}{v_{in}}\right) ,0\right) \nonumber \\&+sgn(h_{out})\cdot \max \left( |h_{out}|\left( 1-\frac{1}{u_{out}}+\frac{u}{u_{out}}-\frac{v}{v_{out}}\right) ,0\right) \ , \end{aligned}$$ (12)

cf. Fig. 2k. Notice that \(w(\cdot ,\cdot )\) takes the form of the sum of a “plateau \(w_{mid}(u,v)\) of height 1 with increasing rift valley around the diagonal (v, v)”, a “pyramid \(w_{in}(u,v)\) of (possibly negative) height \(h_{in}\) around \((u,v)=(0,1)\)” and a “pyramid \(w_{out}(u,v)\) of (possibly negative) height \(h_{out}\) around \((u,v)=(1,0)\)”; depending on the values of the parameters, this may look like an edged version of a butterfly, starship or sailplane; see, e.g. Fig. 2h (where here and for (i), (j) we have fixed the parameters \(\overline{\varepsilon }_0=0.12,\,\overline{\varepsilon }_1=0.15,\underline{\varepsilon }_0=0.13,\,\underline{\varepsilon }_1=0.1\), \(v_{in}=v_{out}=u_{in}=u_{out}=0.1\)). Furthermore, \(w_{adj}(v,v) = v\) for all \(v \in [0,1]\). If \(h_{in} = h_{out} = 0\) (see Fig. 2i) and additionally \(\underline{\varepsilon }_0 = \underline{\varepsilon }_1 = \overline{\varepsilon }_0 = \overline{\varepsilon }_1 =: \varepsilon \) with extremely small \(\varepsilon >0\) (e.g. less than the machine epsilon that governs the rounding errors of your computer), then for all practical purposes \(w_{adj}(\cdot ,\cdot )\) is “equal” to the no-scaling connector \(w_{no}(u,v)\) and thus \(B_{\phi }\left( P,Q\,|\,W_{adj}(P,Q)\right) \) is “computationally indistinguishable” from the unscaled classical Bregman divergence \(B_{\phi }\left( P,Q\,|\,1\right) \) (e.g. for \(\phi = \phi _{\alpha }\), the latter are the DPD\(_{\alpha }\)). Of course, the scale connector \(w_{adj}(\cdot ,\cdot )\) is generally non-smooth, which may be inconvenient for asymptotic properties (see Sect. 4 below). However, one can generate a smoothed version \(w_{adj}^{smooth}(u,v) = \int _{\mathbb {R}^2} w_{adj}(\xi _1,\xi _2) \ g_{\delta }(u-\xi _1,v-\xi _2) \ \mathrm {d}(\xi _1,\xi _2)\) in terms of a mollifier \(g_{\delta }(\cdot ,\cdot )\) with some small tuning parameter \(\delta >0\), e.g. \(g_{\delta }(z_1,z_2) := \frac{c}{\delta ^2} \exp \{ -1 /(1-(z_1^2+z_2^2)/\delta ^2)\} \mathbbm {1}_{[0,\delta ^2[}(z_1^2+z_2^2)\) where \(c>0\) is the normalizing constant; see Fig. 2j where we have used \(\delta =10^{-7}\), together with parameters \(\overline{\varepsilon }_0=0.6,\,\overline{\varepsilon }_1=0.2,\underline{\varepsilon }_0=0.3,\,\underline{\varepsilon }_1=0.1\), \(v_{in}=0.4\), \(v_{out}=u_{in}=0.1\), \(u_{out}=0.2\). For “practical purposes”, \(w_{adj}^{smooth}(\cdot ,\cdot )\) “coincides” with \(w_{adj}(\cdot ,\cdot )\). An analogous smoothing can be done for the scale connectors in 2b and 2c.

2e. Following the lines of Grabisch et al. (2009) on general aggregation functions, one can construct scale connectors which satisfy (7) by means of \(w(u,v) = c \cdot \varUpsilon _{H}^{-1}(H(u,v))\) for any (measurable) function \(H:[0,\infty [ \times [0,\infty [ \mapsto [0, \infty ]\) for which \(z \mapsto \varUpsilon _{H}(z) := H(z,z)\) is strictly increasing. For scale connectors where u, v are restricted to [0,1], this applies analogously.
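The scale connectors of 2a to 2d can be sketched in a few lines of Python/NumPy, which also makes it easy to check the multiple idempotency property \(w(v,v) = c \cdot v\) numerically. The function names below are hypothetical, the default parameters of `w_adj` are those quoted for Fig. 2h, and strictly positive \(\overline{\varepsilon }_v\), \(\underline{\varepsilon }_v\) are assumed so that the divisions in (12) are well defined. The median in (11) is computed by clipping \(\beta \) to \([\min \{u,v\},\max \{u,v\}]\), which is an equivalent way of taking the second smallest of the three numbers.

```python
import numpy as np

def w_power_mean(u, v, beta, r, c=1.0):
    """Multiple of the weighted r-th power mean (2ai); r = 0 is the geometric mean (2aii)."""
    u, v = np.asarray(u, dtype=float), np.asarray(v, dtype=float)
    if r == 0:
        return c * u**beta * v**(1.0 - beta)
    return c * (beta * u**r + (1.0 - beta) * v**r) ** (1.0 / r)

def w_exp_mean(u, v, beta, r, c=1.0):
    """Multiple of the weighted exponential mean, cf. (10)."""
    u, v = np.asarray(u, dtype=float), np.asarray(v, dtype=float)
    return (c / r) * np.log(beta * np.exp(r * u) + (1.0 - beta) * np.exp(r * v))

def w_median(u, v, beta, c=1.0):
    """beta-median connector, cf. (11): median of {min(u,v), beta, max(u,v)}."""
    return c * np.clip(beta, np.minimum(u, v), np.maximum(u, v))

def w_adj(u, v, eps_up=(0.12, 0.15), eps_lo=(0.13, 0.10),
          u_in=0.1, v_in=0.1, u_out=0.1, v_out=0.1, h_in=0.0, h_out=0.0):
    """Robustness adjuster, cf. (12), for u, v in [0, 1]."""
    u, v = np.asarray(u, dtype=float), np.asarray(v, dtype=float)
    d = u - v
    e_up = eps_up[0] + v * (eps_up[1] - eps_up[0])  # upper epsilon at v
    e_lo = eps_lo[0] + v * (eps_lo[1] - eps_lo[0])  # lower epsilon at v
    above = (d >= 0) & (d <= e_up)                  # indicator of [0, e_up]
    below = (d >= -e_lo) & (d < 0)                  # indicator of [-e_lo, 0)
    rift = np.abs(d) * (above / e_up + below / e_lo) - (above | below)
    w_mid = 1.0 + (1.0 - v) * rift
    w_in = np.sign(h_in) * np.maximum(
        abs(h_in) * (1 - 1 / v_in - u / u_in + v / v_in), 0.0)
    w_out = np.sign(h_out) * np.maximum(
        abs(h_out) * (1 - 1 / u_out + u / u_out - v / v_out), 0.0)
    return w_mid + w_in + w_out

# On the diagonal every connector should return c * v (here c = 1).
v = np.linspace(0.05, 0.95, 10)
for w in (w_power_mean(v, v, 0.45, 0.5), w_exp_mean(v, v, 0.45, 6.0),
          w_median(v, v, 0.45), w_adj(v, v, h_in=-0.33, h_out=-0.25)):
    print(np.allclose(w, v))
```

At the corners \((u,v)=(0,1)\) and \((u,v)=(1,0)\) the sketch of `w_adj` returns \(1+h_{in}\) and \(1+h_{out}\), respectively, in line with the description of the two pyramids.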

Remark 1

(a) Notice that the scale connectors w in 2ai, 2aii, 2b can be written in the form \(w(u,v) = c \cdot v \cdot h\left( \frac{u}{v}\right) \) for some function \(h: [0,\infty ] \mapsto [0,\infty ]\) (i.e. the plot of \((u,v) \mapsto w(u,v)/v\) is constant along every line through the origin) and hence the corresponding scaled Bregman divergences satisfy \(B_{\phi _{\alpha }}\left( P,Q\,|\,W(P,Q)\right) = B_{\widetilde{\phi }_{\alpha }}\left( P,Q\,|\,Q\right) \) (\(\alpha \in \mathbb {R}\backslash \{0,1\}\)), i.e. they can be interpreted as a CASD with non-obvious generator \(\widetilde{\phi }_{\alpha }(t) := \frac{1}{\alpha -1} \left[ h(t)^{1-\alpha } \cdot \left\{ \frac{t^{\alpha }}{\alpha } -t + \frac{\alpha -1}{\alpha } \right\} \right] \). For instance, the BWCD is representable as \(B_{\phi _2}\left( P,Q\,|\,W_{\beta ,1}(P,Q)\right) = B_{\widetilde{\phi }_2}\left( P,Q\,|\,Q\right) \) where \(\widetilde{\phi }_2(t) = (1-t)^2/(2 \cdot ((1-\beta )+\beta \cdot t))\) turns out to be Rukhin’s generator (cf. Rukhin (1994), see, e.g. also Marhuenda et al. (2005), Pardo (2006)). Moreover, for the (squared) Hellinger distance one has \(B_{\phi _2}\left( P,Q\,|\,W_{1/2,1/2}(P,Q)\right) = B_{\phi _{1/2}}\left( P,Q\,|\,W_{0,1}(P,Q)\right) \).

(b) The scale connectors w(u, v) in 2aiii, 2aiv, 2c, 2d cannot be written in the form of \(c \cdot v \cdot h\left( \frac{u}{v}\right) \), and hence the CASD-connection of Remark 1a does not apply.

(c) In case of symmetric scale connectors \(w(u,v)=w(v,u)\), one can produce symmetric divergences by means of either

\(B_{\phi }\left( P,Q\,|\,W(P,Q)\right) + B_{\phi }\left( Q,P\,|\,W(P,Q)\right) \),

\(\max \{B_{\phi }\left( P,Q\,|\,W(P,Q)\right) , B_{\phi }\left( Q,P\,|\,W(P,Q)\right) \}\),

\(\min \{B_{\phi }\left( P,Q\,|\,W(P,Q)\right) ,B_{\phi }\left( Q, P\,|\,W(P,Q)\right) \}\);

this also works for \(\phi (t)= \phi _1(t)\) together with arbitrary scale-connectors w.

(d) Instead of (robustly) measuring the distance between “full probability distributions” P, Q—scaled by a “full non-probability distribution” M—with our new method one can analogously measure (robustly) the distance between the families \((P[E_z])_{z \in \mathscr {I}}\), \((Q[E_z])_{z \in \mathscr {I}}\)—scaled by \((M[E_z])_{z \in \mathscr {I}}\)—of probabilities of some selected concrete (e.g. increasing) events \((E_z)_{z \in \mathscr {I}} \subset \mathscr {A}\) of interest.
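The two identities stated in Remark 1a can be checked numerically in the discrete case. The following Python/NumPy sketch compares the blended weight chi-square divergence with the CASD generated by Rukhin's generator \(\widetilde{\phi }_2\), and the \(\phi _2\) divergence under \(W_{1/2,1/2}\) scaling with the squared Hellinger distance. The helper names and the two mass functions are made up for illustration, and strictly positive masses are assumed.

```python
import numpy as np

def bwcd(p, q, beta):
    """phi_2 divergence with mixture scaling beta*p + (1-beta)*q (BWCD)."""
    return 0.5 * np.sum((p - q) ** 2 / (beta * p + (1.0 - beta) * q))

def casd(p, q, phi):
    """Csiszar-Ali-Silvey divergence sum_x q(x) * phi(p(x)/q(x)), cf. (9)."""
    return np.sum(q * phi(p / q))

def rukhin(beta):
    """Rukhin's generator from Remark 1a."""
    return lambda t: (1.0 - t) ** 2 / (2.0 * ((1.0 - beta) + beta * t))

def phi2_sqrt_mean_scaled(p, q, beta=0.5):
    """phi_2 divergence scaled by the weighted square root mean (r = 1/2)."""
    w = (beta * np.sqrt(p) + (1.0 - beta) * np.sqrt(q)) ** 2
    return 0.5 * np.sum((p - q) ** 2 / w)

def hellinger_sq(p, q):
    """Squared Hellinger distance in the normalization used in the text."""
    return 2.0 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)

p = np.array([0.5, 0.375, 0.125])   # hypothetical relative frequencies
q = np.array([0.5, 0.3, 0.2])       # hypothetical candidate model

for beta in (0.0, 0.3, 1.0):
    print(beta, bwcd(p, q, beta), casd(p, q, rukhin(beta)))
print(phi2_sqrt_mean_scaled(p, q), hellinger_sq(p, q))
```

For each \(\beta \) the two printed values in a row should agree up to rounding, and so should the two values in the last line.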

3 Robustness

In the previous section, we have introduced a toolbox \(\mathscr {D}_{sc} := \{B_{\phi }\left( P,Q\,|\, M \right) : \phi \in \varPhi , M \in \mathscr {M}_{sc} \}\) of divergences where the class of generators is (say) \(\varPhi =\varPhi _{C_1}\) and the class \(\mathscr {M}_{sc}\) of scalings consists of those measures \(M=W(P,Q)\) which have \(\lambda {-}\)density \(m(x)=w(p(x),q(x)) \ge 0\) with some “scale-connector” \(w(\cdot ,\cdot )\) between the \(\lambda {-}\)densities p(x) and q(x). In the following, as a part of statistical engineering, we discuss some criteria of how to find good choices of \(\phi \) and w, having in mind robustness, stability or sensitivity (in a wide sense). For the sake of brevity, this will be done only [Footnote 2] in the above-mentioned context of (finite or countable) discrete spaces \(\mathscr {X} = \{x_1, x_2, \ldots \}\) of outcomes \(x_i\) where \(\lambda _{count}[\{x_i\}] =1\) for all i, \(p(x):= P[\{x\}]\) and \(q(x):= Q[\{x\}]\) are probability mass functions; accordingly, \(w:[0,1] \times [0,1] \mapsto [0,\infty ]\) and (5) becomes

$$\begin{aligned} B_{\phi }\left( P,Q\,|\,W(P,Q)\right) = \sum _{x \in {\mathscr {X}}} w(p(x),q(x)) \left[ \phi \left( {\frac{p(x)}{w(p(x),q(x))}}\right) -\phi \left( {\frac{q(x)}{w(p(x),q(x))}}\right) -\phi ^{\prime }\left( {\frac{q(x)}{w(p(x),q(x))}}\right) \cdot {\frac{p(x)-q(x)}{w(p(x),q(x))}} \right] \ . \end{aligned}$$

Since the ultimate purposes of a statistical inference based on \(\mathscr {D}_{sc}\) may vary from case to case—e.g. it may serve as a basis for some desired decision/action of quite heterogeneous nature in finance, medicine, biology, signal-processing, etc.—the flexibility in choosing generators \(\phi \) and scale-connectors w should be narrowed down in a well-structured, goal-oriented way:

-

Step 1. Declare (or identify) areas A of specific interest for \((u,v):=(p(x),q(x))\): for instance, the “outlier area”

$$\begin{aligned} A_{out} := \{ (u,v) \in [0,1]^2: u\, \text { is much larger than}\, v, \text { and } v \, \text { is small} \} \ , \end{aligned}$$the “inlier area”

$$\begin{aligned} A_{in} := \{ (u,v) \in [0,1]^2: u \,\text { is much smaller than }\, v, \text { and } v \text { is large} \} \ , \end{aligned}$$the “midlier area”

$$\begin{aligned} A_{mid} := \{ (u,v) \in [0,1]^2: u \text { is approximately (but not exactly) equal to } v \} \ , \end{aligned}$$and the “matching area”

$$\begin{aligned} A_{mat} := \{(v,v): v\in [0,1]\} \ . \end{aligned}$$The verbal names of \(A_{out}\), \(A_{in}\) are given in accordance with applications where \(P:= P_{N}^{emp} := \frac{1}{N} \cdot \sum _{i=1}^{N} \delta _{X_{i}}[\cdot ]\) is the above-mentioned empirical distribution of an iid sample \(X_1, \ldots , X_N\) of size N, \(u:= p(x) = p_{N}^{emp}(x) = \frac{1}{N} \cdot \# \{ i \in \{ 1, \ldots , N\}: X_i =x \} \), and \(Q=P_{\theta }\) is a discrete candidate model distribution with \(v:= q(x) = p_{\theta }(x)\); we suggest to take this frequent situation as a running example for the robustness considerations in the whole Sect. 3. Then, \(A_{out}\) corresponds to outcomes x which appear in the sample much more often than described by the model where x is rare. Moreover, \(A_{in}\) corresponds to outcomes x which appear in the sample much less often than described by the model where x is a very frequent. In other words, \(A_{out}\), \(A_{in}\) represent “high unusualnesses” (“surprising observations”)—in terms of probabilities rather than geometryFootnote 3—in the sampled data as compared to the candidate model. Of course, one has to define \(A_{out}\), \(A_{in}\), \(A_{mid}\) more quantitatively, adapted to the task to be solved. Notice that our formulation of \(A_{out}\) allows for more flexibility (e.g. expressed by its boundary) than the more restrictive “classical” definition (for some large constant \(\tilde{c} >0\) and Pearson residual \(\delta := \frac{u}{v} -1\)) \(A_{out}^{cl} := \{ (u,v) \in [0,1]^2: \frac{u}{v} \ge \tilde{c} \} = \{ (u,v) \in [0,1]^2: \delta \ge \tilde{c} -1 \} = \{ (u,v) \in [0,1]^2: v \le \frac{u}{\tilde{c}} \,\text { if}\, u >0\, \text {and } \ldots \text { if}\, u=0\} \). 
Analogously, our \(A_{in}\) allows for more flexibility than the classical quantification (for some small constant \(\breve{c} >0)\) \(A_{in}^{cl} := \{ (u,v) \in [0,1]^2: \frac{u}{v} \le \breve{c} \} = \{ (u,v) \in [0,1]^2: \delta \le \breve{c} -1 \} = \{ (u,v) \in [0,1]^2: v \ge \frac{u}{\breve{c}}\, \text { if}\, u >0\, \text {and }\, \ldots \text { if}\, u=0\} \); see Fig. 2l.
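The classical areas \(A_{out}^{cl}\), \(A_{in}^{cl}\) and the matching area \(A_{mat}\) can be turned into a small membership check. The following is a minimal sketch; the function name and the threshold values `c_out` (for \(\tilde{c}\)) and `c_in` (for \(\breve{c}\)) are hypothetical illustration choices, and pairs with \(v=0\) are rejected because their treatment is not spelled out above.

```python
def classify_density_pair(u: float, v: float,
                          c_out: float = 5.0, c_in: float = 0.2) -> str:
    """Classify (u, v) by the classical ratio rules u/v >= c_out resp. u/v <= c_in.

    c_out (a "large" constant) and c_in (a "small" constant) are hypothetical
    illustration values; they have to be adapted to the task at hand.
    """
    if not (0.0 <= u <= 1.0 and 0.0 < v <= 1.0):
        raise ValueError("need 0 <= u <= 1 and 0 < v <= 1")
    if u == v:
        return "matching"          # the diagonal A_mat
    ratio = u / v                  # equals the Pearson residual delta plus one
    if ratio >= c_out:
        return "outlier"           # classical outlier area
    if ratio <= c_in:
        return "inlier"            # classical inlier area
    return "neither"
```

For instance, with these illustrative thresholds the pair \((u,v)=(0.5,0.05)\) is classified as an outlier and \((0.02,0.5)\) as an inlier.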


Step 2. On the areas A determined in Step 1, specify desired “adjustments” on the involved generator \(\phi \) and scale connector w which amount to dampening (downweighting) respectively amplification (highlighting) on A, to be quantified in absolute values and/or relative to a given benchmark.

In the following, we explain Step 2 in detail where for the sake of brevity we mainly concentrate on robustness (arguing on \(A_{out}\), \(A_{in}\)); for sufficiency studies, the behaviour on \(A_{mid}\) should be focused on. To gain better insights, with the following investigations we aim for a comfortable interpretability and comparability of the underlying robustness structures, also supported through geometric 3D visualizations. To start with, we have seen in Sect. 2 that all Csiszar-Ali-Silvey divergences CASD and all classical Bregman divergences CBD are special cases of our scaled Bregman divergences SBD. Hence, we can extend all the known robustness results on CASD and CBD to a more general, unifying analysis. For the sake of brevity, this will be done only in extracts; the full details will appear elsewhere.

Fig. 3 Some density-pair adjustment functions DAF(0) of zeroth order (cf. (a)–(h)), and comparisons of DAF(0) (cf. (i)–(l)). (a) \(b_{\phi _1,w_{0,1}}(u,v)=u\log \frac{u}{v}-(u-v)\) (KL case). (b) \(b_{\phi _{1/2},w_{0,1}}(u,v)=2(\sqrt{u}-\sqrt{v})^2\) (HD case). (c) \(b_{\phi _{2},w_{0,1}}(u,v)=\frac{v}{2}\left( \frac{u}{v}-1\right) ^2\) (PCS case). (d) \(b_{\phi _{NED},w_{0,1}}(u,v)=v \cdot \phi _{NED}\left( \frac{u}{v}\right) \) (NED case). (e) \(b_{\phi _{2},w_{no}}(u,v)=\frac{(u-v)^2}{2}\) (DPD\(_2\) case). (f) \(b_{\phi _{2},w_{0.45,\tilde{f}_{6}}}(u,v)=6\frac{(u-v)^2}{2\log \left( 0.45e^{6u}+0.55e^{6v}\right) }\) (cf. (10)). (g) \(b_{\phi _{2},w_{0.45}^{med}}(u,v)=\frac{(u-v)^2}{2\text {med}\{\min \{u,v\}, \beta , \max \{u,v\} \}}\) (cf. (11)). (h) \(b_{\phi _{2},w_{adj}}(u,v)\) (cf. (12)) with parameters taken as in Fig. 2j. (i) \(w_{0.45}^{med}(u,v) - w_{0.45,0}(u,v)=w_{0.45}^{med}(u,v)-u^{0.45}v^{0.55}\). (j) \(\mu _{w_{no}}(u,v)=1-\frac{0.5v\left( \frac{u}{v}-1\right) ^2}{\frac{u}{v}\log \frac{u}{v}+1-\frac{u}{v}}\) (DPD\(_{2}\) vs. KL case). (k) \(\mu _{w}(u,v)\) for \(w(u,v) = w_{0.45}^{med}(u,v)\). (l) \(\mu _{w}(u,v)\) for \(w(u,v) = \min (w_{0.42,1}(u,v),w_{0.25,1}(u,v))\)

Case 1: Robustness without derivatives (“zeroth order”). For goodness-of-fit testing, the detection of distributional changes in data streams, two-sample tests, fast crude model search in time series (see, e.g. Kißlinger and Stummer (2015b)), and other tasks, the magnitude of \(B_{\phi }\left( P,Q\,|\,W(P,Q)\right) \) itself is of major importance. To evaluate its performance “microscopically”, one can take an absolute view and inspect the magnitude of the “summand builder” (cf. (13)) \(b_{\phi ,w}(u,v) := w(u,v) \cdot b_{\phi }(\frac{u}{w(u,v)},\frac{v}{w(u,v)})\) on the areas A of interest. For instance, depending on the data-analytic goals, one may like \(b_{\phi ,w}(u,v)\) to have “small (resp. large) values” on \(A_{out}\) and \(A_{in}\). In Fig. 3 we present \(b_{\phi ,w_{0,1}}(u,v)\) of the following CASD (see the representation in (16) below): (a) the Kullback–Leibler divergence KL (cf. \(\phi =\phi _1\)), (b) the (squared) Hellinger distance HD (cf. \(\phi =\phi _{1/2}\)), (c) the Pearson chi-square divergence PCS (cf. \(\phi =\phi _{2}\)), as well as (d) the negative exponential disparity NED of Lindsay (1994) with \(\phi (t):= \phi _{NED}(t) := \exp (1-t) + t -2\). Concerning the non-CASD case (cf. Remark 1a above), we show in Fig. 3 \(b_{\phi _2,w}(u,v)\) for the weights (e) \(w=w_{no}\) (no scaling, leading to DPD\(_2\)), (f) \(w=w_{0.45,\tilde{f}_{6}}\) (WEM scaling, cf. (10)), (g) \(w=w_{0.45}^{med}\) (\(\beta {-}\)median scaling, cf. (11)), and (h) \(w=w_{adj}\) with the same parameters as in Fig. 2j (cf. (12)). In (a)–(h), notice the partially large differences in the outlier area \(A_{out}\) which corresponds to the left corner. In addition to the above-mentioned absolute view, one can also take a relative view and compare the performance of the scaled Bregman divergence \(B_{\phi }\left( P,Q\,|\,W(P,Q)\right) \) with that of an “overall” benchmark (respectively, an alternative) \(B_{\widetilde{\phi }}( P,Q\,|\,\widetilde{W}(P,Q))\). 
For instance, one may like to check for \(B_{\phi }( P,Q\,|\,W(P,Q)) \gtreqqless B_{\widetilde{\phi }}( P,Q\,|\,\widetilde{W}(P,Q))\), or even more “microscopically” (cf. (13)) for
$$\begin{aligned} b_{\phi ,w}(u,v) \ \gtreqqless \ b_{\widetilde{\phi },\widetilde{w}}(u,v) \end{aligned} \tag{14}$$

for all \((u,v) \in [0,1]^2\) and especially for \((u,v) \in A_{out}, A_{in}, A_{mid}\), \(A_{mat}\). The corresponding visual comparison can be done by overlaying \((u,v,b_{\phi ,w}(u,v))\) and \((u,v,b_{\widetilde{\phi },\widetilde{w}}(u,v))\) in the same 3D plot, or by overlaying \((u,v,b_{\phi ,w}(u,v) - b_{\widetilde{\phi },\widetilde{w}}(u,v))\) and (u, v, 0) in another 3D plot; the latter has the advantage of an “eye-stabilizing” reference plane. Intuitively, the inequality < in (14) means “point-wise” dampening (downweighting) with respect to the benchmark, whereas > amounts to amplification (highlighting) with respect to the benchmark. In the light of this, one can interpret \((u,v) \mapsto b_{\phi ,w}(u,v)\) as a density-pair adjustment function DAF(0) of zeroth order. In principle, for fixed \((\widetilde{\phi }\), \(\widetilde{w})\) one can encounter three situations in the relative quality assessment of some candidate \((\phi \), w). Firstly, the scaling is the same, i.e. \(w(u,v) = \widetilde{w}(u,v)\), and thus (14) turns into
$$\begin{aligned} w(u,v) \cdot b_{\phi }\Big (\frac{u}{w(u,v)},\frac{v}{w(u,v)}\Big ) \ \gtreqqless \ w(u,v) \cdot b_{\widetilde{\phi }}\Big (\frac{u}{w(u,v)},\frac{v}{w(u,v)}\Big ) \end{aligned} \tag{15}$$

and in case of \(w(u,v)>0\) one can further simplify by dividing by \(w(u,v)\). For instance, if one compares two CASD \(B_{\phi }\left( P,Q\,|\,Q\right) \gtreqqless B_{\widetilde{\phi }}\left( P,Q\,|\,Q\right) \) then, due to \(w(u,v) = \widetilde{w}(u,v) = w_{0,1}(u,v) = v\), the inequality (15) simplifies to
$$\begin{aligned} v \cdot \phi \left( \frac{u}{v}\right) \ \gtreqqless \ v \cdot \widetilde{\phi }\left( \frac{u}{v}\right) \end{aligned} \tag{16}$$

which on \(\{(u,v)\in [0,1]^2: v>0 \}\) amounts to \(\phi \left( \frac{u}{v}\right) \ \gtreqqless \ \widetilde{\phi }\left( \frac{u}{v}\right) \). Consistently, for the outlier area one can, e.g. use the classical variant \(A_{out}^{cl}\) and for the inlier area \(A_{in}^{cl}\). For classical unscaled Bregman divergences CBD, \(B_{\phi }\left( P,Q\,|\,1\right) \gtreqqless B_{\widetilde{\phi }}\left( P,Q\,|\,1\right) \) leads to \(b_{\phi }\left( u,v\right) \gtreqqless b_{\widetilde{\phi }}\left( u,v\right) \) (cf. (15)). As a second situation in comparative quality assessment, the generator functions coincide, i.e. \(\phi = \widetilde{\phi }\), and hence (14) becomes
$$\begin{aligned} w(u,v) \cdot b_{\phi }\Big (\frac{u}{w(u,v)},\frac{v}{w(u,v)}\Big ) \ \gtreqqless \ \widetilde{w}(u,v) \cdot b_{\phi }\Big (\frac{u}{\widetilde{w}(u,v)},\frac{v}{\widetilde{w}(u,v)}\Big ) \end{aligned} \tag{17}$$

Within such a context, one can compare a CASD with its classical unscaled Bregman divergence CBD “counterpart”. As an example, one can take \(\phi (t) = \widetilde{\phi }(t) = \phi _2(t) = \frac{(t-1)^2}{2}\) which simplifies (17) to
$$\begin{aligned} \frac{(u-v)^2}{2 \, w(u,v)} \ \gtreqqless \ \frac{(u-v)^2}{2 \, \widetilde{w}(u,v)} \end{aligned} \tag{18}$$

The choice \(w(u,v)= w_{0,1}(u,v)= v\) leads to the PCS \(B_{\phi _2}\left( P,Q\,|\,Q\right) \) and \(\widetilde{w}(u,v)=1\) corresponds to the DPD\(_{2}\) \(B_{\phi _2}\left( P,Q\,|\,1\right) \). Further examples of w(u, v) can be drawn from Sect. 2. Clearly, (18) amounts to \(w(u,v) \lesseqqgtr \widetilde{w}(u,v)\) respectively \(w(u,v) - \widetilde{w}(u,v) \lesseqqgtr 0\) or \(\frac{w(u,v)}{\widetilde{w}(u,v)} \lesseqqgtr 1\) (with special care for possible zeros); see Fig. 3i for the difference comparison \(w_{0.45}^{med}(u,v) - w_{0.45,0}(u,v)\) between the \(\beta {-}\)median BM \(w_{0.45}^{med}(u,v)\) and the weighted geometric mean WGM \(w_{0.45,0}(u,v)\). Notice that the case \(w(u,v) = w_{adj}(u,v) \lesseqqgtr 1= w_{no}(u,v) = \widetilde{w}(u,v)\) of the robustness adjuster against the CBD-relevant unit scaling is especially easy to compare visually. The third situation is the “crossover” case where \(\phi \) is different from \(\widetilde{\phi }\) and w is different from \(\widetilde{w}\). Then, (14) needs to be treated more individually. The most fundamental example for a benchmark is clearly the Kullback–Leibler divergence KL \(B_{\widetilde{\phi }}\left( P,Q\,|\,\widetilde{W}(P,Q)\right) = B_{\phi _1}\left( P,Q\,|\,Q\right) \) with generator \(\widetilde{\phi }(t) = \phi _1(t) = t \, \log t + 1 -t \ge 0\) (with \(\phi _1(1)=\phi _1^{\prime }(1)=0\), \(\phi _1^{\prime \prime }(1)=1\)), as well as scale connector \(\widetilde{w}(u,v)=w_{0,1}(u,v)=v\), yielding \(b_{\widetilde{\phi },\widetilde{w}}(u,v) = v \left( \frac{u}{v} \log \left( \frac{u}{v} \right) +1 - \frac{u}{v} \right) \). Hence, the comparison (14) specializes to

For \(\phi = \phi _{2}\) we get

see, e.g. Fig. 3j for the no-scaling case \(w=w_{no}\) (i.e., the DPD\(_{2}\) vs. KL comparison) and (k) for the \(\beta {-}\)median BM case \(w_{0.45}^{med}\). The check for (19) simplifies considerably if \(w(u,v) = v \cdot h\left( \frac{u}{v} \right) \) for some function h (see, e.g. Fig. 3l for the scale connector \(w(u,v) = \min (w_{0.42,1}(u,v),w_{0.25,1}(u,v))\) being the minimum of two weighted arithmetic means). Then the left-hand side can be rewritten as a CASD (cf. Remark 1a above) and hence context (16) applies, too.
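The zeroth-order comparisons of Case 1 can be retraced numerically. The following minimal sketch evaluates the summand builder \(b_{\phi ,w}(u,v) = w(u,v) \cdot b_{\phi }(\frac{u}{w(u,v)},\frac{v}{w(u,v)})\) under the assumption that \(b_{\phi }(s,t) = \phi (s) - \phi (t) - \phi ^{\prime }(t)(s-t)\) is the usual pointwise Bregman distance; all function names are hypothetical.

```python
import math

def bregman(phi, dphi, s, t):
    """Pointwise Bregman distance phi(s) - phi(t) - phi'(t) * (s - t) (assumed form)."""
    return phi(s) - phi(t) - dphi(t) * (s - t)

def summand_builder(phi, dphi, w, u, v):
    """b_{phi,w}(u, v) = w(u, v) * b_phi(u / w(u, v), v / w(u, v)); needs w(u, v) > 0."""
    scale = w(u, v)
    return scale * bregman(phi, dphi, u / scale, v / scale)

def phi_1(t):            # KL generator
    return t * math.log(t) + 1.0 - t

def dphi_1(t):
    return math.log(t)

def phi_2(t):            # generator of PCS and DPD_2
    return 0.5 * (t - 1.0) ** 2

def dphi_2(t):
    return t - 1.0

def w_01(u, v):          # CASD scaling w_{0,1}(u, v) = v
    return v

def w_no(u, v):          # no scaling (classical Bregman divergence)
    return 1.0

def kl(u, v):
    return summand_builder(phi_1, dphi_1, w_01, u, v)

def pcs(u, v):
    return summand_builder(phi_2, dphi_2, w_01, u, v)

def dpd2(u, v):
    return summand_builder(phi_2, dphi_2, w_no, u, v)

def daf0_difference(candidate, benchmark, u, v):
    """Negative: pointwise dampening w.r.t. the benchmark; positive: amplification."""
    return candidate(u, v) - benchmark(u, v)
```

At the outlier-type pair \((u,v)=(0.6,0.05)\), `daf0_difference(pcs, kl, 0.6, 0.05)` is positive (amplification relative to KL), whereas `daf0_difference(dpd2, kl, 0.6, 0.05)` is negative (dampening).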

Case 2: Robustness with first derivatives (“first order”). In many cases, one of the two distributions P, Q depends on a multidimensional parameter, say \(Q \in \mathscr {Q}_{\varTheta } := \{ Q_{\theta }: \theta \in \varTheta \}\), \(\varTheta \subset \mathbb {R}^d\). Then, not only the magnitude of \(B_{\phi }\left( P,Q_{\theta }\,|\,W(P,Q_{\theta })\right) \) but also its derivative \(\nabla _{\theta } \ B_{\phi }\left( P,Q_{\theta } \,|\,W(P,Q_{\theta })\right) \) with respect to \(\theta \) may be of major importance. The most well-known context is minimum distance estimation, where P is a data-derived probability-measure-valued statistical functional and one wants to find the member \(Q_{\widehat{\theta }}\) of \(\mathscr {Q}_{\varTheta }\) which has the shortest distance to P (if this exists), i.e. \(\widehat{\theta } = \text {argmin}_{\theta \in \varTheta } B_{\phi }\left( P,Q_{\theta }\,|\,W(P,Q_{\theta })\right) \); for instance, \(P:= P_{N}^{emp} := \frac{1}{N} \cdot \sum _{i=1}^{N} \delta _{X_{i}}[\cdot ]\) is the above-mentioned empirical distribution of an iid sample \(X_1, \ldots , X_N\) of size N. If \(\phi \) and w are smooth enough, the corresponding optimization leads to the estimating equation

provided that one can interchange the sum and the derivative. In the same spirit as in Step 1, it makes sense to study the density-pair adjustment function DAF(1) of first order \((u,v) \mapsto a_{\phi ,w}(u,v)\) defined by

where we have used the definition of \(b_{\phi }\) given in (13). Notice that for arbitrary CASD \(B_{\phi }\left( P,Q\,|\,Q\right) \) (which corresponds to the special choice \(w(u,v)=w_{0,1}(u,v) =v\)), the first-order DAF(1) defined in (20) reduces by use of \(\phi (1) = \phi ^{\prime }(1) = 0\), \(C_{\phi }(t) := \phi (t+1)\) (\(t\in [-1,\infty [\)), and the Pearson residual \(\delta = \frac{u}{v} -1 \in [-1,\infty [\) to

Here, \(\check{a}_{C_{\phi }}(\cdot )\) is the residual adjustment function RAF of Lindsay (1994), Basu and Lindsay (1994), defined for \(C_{\phi }{-}\)disparities which are nothing but alternative representations of CASD divergences with generator \(\phi \). In other words, (21) shows that our framework of first-order density-pair adjustment functions DAF(1) \(a_{\phi ,w}(u,v)\) is a bivariate generalization of the concept of univariate residual adjustment functions RAFs. This extension allows in particular for comfortable direct comparison between the robustness structures of the (nearly disjoint) CASD and the CBD worlds, as it will be demonstrated below. Also notice that the RAF has unbounded domain, whereas the first-order DAF has bounded domain which is advantageous for plotting purposes (of bounded a). The inspection of the function \(a_{\phi ,w}(\cdot ,\cdot )\) for the two effects dampening or amplification—especially on the areas \(A_{out}\), \(A_{in}\), \(A_{mid}\), \(A_{mat}\) of interest—can be performed analogously to the inspection of \(b_{\phi ,w}(\cdot ,\cdot )\), in absolute values and/or relative to a benchmark. For instance, for outlier and inlier dampening, \(|a_{\phi ,w}(\cdot ,\cdot )|\) should be close to zero on \(A_{out}\), \(A_{in}\), and closer to zero than the benchmark. The latter may be the Kullback–Leibler divergence case

Fig. 4 Some first-order density-pair adjustment functions DAF(1) (cf. (a)–(h)), and comparisons of DAF(1) (cf. (i)–(s)). OUT refers to outlier performance (for (a) in absolute terms, for (b)–(h) relative to the benchmark (a)), IN refers to inlier performance, ASYMP refers to the asymptotics in Corollary 1 (= nice) resp. the more complicated Theorem 1 (= compl). (a) \(a_{\phi _1,w_{0,1}}(u,v) = \frac{u}{v} -1\), KL (benchmark): OUT = bad, IN = ok, ASYMP = nice. (b) \(a_{\phi _{1/2},w_{0,1}}(u,v) = 2\cdot (\sqrt{u/v}-1)\), HD: OUT = less bad, IN = worse, ASYMP = nice. (c) \(a_{\phi _2,w_{0,1}}(u,v) = ((u^2/v^2)-1)/2\), PCS: OUT = worse, IN = better, ASYMP = nice. (d) \(a_{\phi _{NED},w_{0,1}}(u,v) = 2-(1+\frac{u}{v})\cdot \exp (1-(u/v))\), NED: OUT = much better, IN = better, ASYMP = nice. (e) \(a_{\phi _2,w_{no}}(u,v) = u-v\), DPD\(_2\): OUT = much better, IN = better, ASYMP = compl; also inefficient. (f) \(a_{\phi _{\alpha },w_{no}}(u,v) = (u-v) \cdot v^{\alpha -2}\), DPD\(_{1.67}\): OUT = much better, IN = better, ASYMP = compl. (g) \(a_{\phi _2,w_{0.45,\tilde{f}_{6}}}(u,v)\), \(\phi = \phi _2\), \(w = WEM\): OUT = much better, IN = better, ASYMP = nice. (h) \(a_{adj}^{des}(u,v)\) (designed) \(\phi = \phi _2\), w cf. (23): OUT = much better, IN = much better. (i) \(\rho _{\phi _{1/2},w_{0,1}}(u,v)=-\left( \sqrt{\frac{u}{v}}-1\right) ^2\) (HD vs. KL). (j) \(\rho _{\phi _{2},w_{0,1}}(u,v)=\frac{1}{2}\left( \frac{u}{v}-1\right) ^2\) (PCS vs. KL). (k) \(\rho _{\phi _{NED},w_{0,1}}(u,v)=2-\left( 1+\frac{u}{v}\right) \exp \left( 1-\frac{u}{v}\right) -\frac{u}{v}+1\) (NED vs. KL). (l) \(\rho _{\phi _{2},w_{0.45,\tilde{f}_{6}}}(u,v)\). (m) \(\rho _{\phi _{2},w^{des}}(u,v)= a_{adj}^{des}(u,v) - \frac{u}{v} +1\). (n) \(\rho _{\phi _{2},w_{no}}(u,v)=\left( \frac{u}{v}-1\right) (v-1)\) (DPD\(_2\) vs. KL). (o) \(a_{\phi _{1/2},w_{0,1}}(u,v) - a_{\phi _2,w_{no}}(u,v)=2\left( \sqrt{\frac{u}{v}}-1\right) -(u-v)\) (HD vs. DPD\(_2\)). (p) \(\zeta _{w_{0,1}}(u,v)=\left( \frac{u}{v}-1\right) \cdot \left( 1+\frac{1}{2}\left( \frac{u}{v}-1\right) -v\right) \) (PCS vs. DPD\(_2\)). (q) \(a_{\phi _{NED},w_{0,1}}(u,v) - a_{\phi _2,w_{no}}(u,v)=2-\left( 1+\frac{u}{v}\right) \exp \left( 1-\frac{u}{v}\right) -(u-v)\) (NED vs. DPD\(_2\)). (r) \(a_{\phi _{2},w_{0.45,\tilde{f}_{6}}}(u,v) - a_{\phi _{NED},w_{0,1}}(u,v)\). (s) \(a_{\phi _{2},w_{0.45,\tilde{f}_{6}}}(u,v) - a_{\phi _2,w_{no}}(u,v)\)

which is positive on the area \(A_{sub} := \{(u,v)\in [0,1]^2: v < u\}\) and especially highly positive on \(A_{out} \subset A_{sub}\); in contrast, \(a_{\phi _1,w_{0,1}}(u,v)\) is negative on \(A_{sup} := \{(u,v)\in [0,1]^2: v > u\}\) and especially moderately negative on \(A_{in} \subset A_{sup}\) (see also Fig. 2l for an illustration of \(A_{sub}\), \(A_{sup}\) together with the classical outlier and inlier areas \(A_{out}^{cl}\), \(A_{in}^{cl}\) which are less flexible than our \(A_{out}\), \(A_{in}\)). In Fig. 4, we have plotted the DAF(1) \(a_{\phi ,w_{0,1}}(u,v)\) (cut-off at height 6) of some prominent CASD, namely of (a) the Kullback–Leibler divergence KL (cf. \(\phi =\phi _1\)), (b) the (squared) Hellinger distance HD (cf. \(\phi =\phi _{1/2}\)), (c) the Pearson chi-square divergence PCS (cf. \(\phi =\phi _2\)), and (d) the negative exponential disparity NED (cf. \(\phi = \phi _{NED}\)). Notice that on \(A_{sub}\) (and especially on the outlier area \(A_{out}\) in the left corner) the DAF(1) of HD resp. PCS resp. NED is closer to zero resp. farther from zero resp. much closer to zero than the DAF(1) of the KL; moreover, on \(A_{sup}\) (and especially on the inlier area \(A_{in}\) in the right corner) the DAF(1) of HD resp. PCS resp. NED is farther from zero resp. closer to zero resp. closer to zero than the DAF(1) of the KL (see also the corresponding difference plots (i), (j), (k) described below). This indicates that the outlier robustness of HD and NED (resp. PCS) is better (resp. worse) than that of the KL, and the inlier robustness of PCS and NED (resp. HD) is better (resp. worse) than that of the KL. Hence, these 3D plots of the bivariate DAF(1) confirm and extend the well-known results deduced from the 2D Plots of the univariate residual adjustment functions RAF. 
In contrast to the CASD world above, for unscaled classical Bregman divergences CBD \(B_{\phi }\left( P,Q\,|\,1\right) \) one obtains from (20) with \(w(u,v) = w_{no}(u,v) =1\) the first-order DAF(1) \(a_{\phi ,w_{no}}(u,v) = \phi ^{\prime \prime }\left( v\right) \cdot \left( u - v\right) \). The linear dependence in u shows that all CBD have restricted flexibility in robustness modelling, compared with our SBD. To continue with other special cases, for \(\phi (t) = \phi _2(t) = \frac{(t-1)^2}{2}\) we get from (20)

Some examples for (22) are presented in Fig. 4, namely (e) \(a_{\phi _2,w_{no}}(u,v) = u-v\) (DPD\(_2\) case), (f) \(a_{\phi _{\alpha },w_{no}}(u,v) = (u-v) \cdot v^{\alpha -2}\) (DPD\(_{\alpha }\) case, \(\alpha =1.67\)) and (g) \(a_{\phi _2,w_{\beta ,\tilde{f}_{r}}}(u,v)\) with WEM scale connector \(w_{\beta ,\tilde{f}_{r}}(u,v)\); the inspections of the left resp. right corners indicate that the performance of (e), (g) for outliers resp. inliers is comparable to, and (mostly) even better than, the already very good corresponding performance of the NED (d). The behaviour in the midlier area indicates that the efficiency of (g) (but not of (e)) is similar to that of (d).
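The link between the zeroth-order and the first-order adjustment functions can be checked numerically. The following minimal sketch assumes that the definition (20) amounts to \(a_{\phi ,w}(u,v) = -\frac{\partial }{\partial v}\, b_{\phi ,w}(u,v)\); this reading is an assumption of the sketch, chosen because it is consistent with the closed forms quoted for Fig. 3 and Fig. 4. The helper names and the finite-difference step are hypothetical.

```python
import math

def neg_partial_v(b, u, v, h=1e-6):
    """Central finite-difference approximation of -d/dv b(u, v)."""
    return -(b(u, v + h) - b(u, v - h)) / (2.0 * h)

def phi_ned(t):
    """Generator of the negative exponential disparity."""
    return math.exp(1.0 - t) + t - 2.0

# (name, zeroth-order summand b(u, v), quoted first-order form a(u, v))
CASES = [
    ("KL", lambda u, v: u * math.log(u / v) - (u - v),
           lambda u, v: u / v - 1.0),
    ("HD", lambda u, v: 2.0 * (math.sqrt(u) - math.sqrt(v)) ** 2,
           lambda u, v: 2.0 * (math.sqrt(u / v) - 1.0)),
    ("PCS", lambda u, v: 0.5 * v * (u / v - 1.0) ** 2,
            lambda u, v: 0.5 * ((u / v) ** 2 - 1.0)),
    ("NED", lambda u, v: v * phi_ned(u / v),
            lambda u, v: 2.0 - (1.0 + u / v) * math.exp(1.0 - u / v)),
    ("DPD2", lambda u, v: 0.5 * (u - v) ** 2,
             lambda u, v: u - v),
]

def max_gaps(points):
    """Largest |a(u, v) - (-db/dv)(u, v)| per divergence over the given points."""
    return {name: max(abs(a(u, v) - neg_partial_v(b, u, v)) for u, v in points)
            for name, b, a in CASES}
```

Calling `max_gaps([(0.6, 0.1), (0.05, 0.7), (0.3, 0.3)])` returns gaps at the level of the discretization error only, which supports the assumed reading for these five cases.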

One can also carry out some “reverse statistical engineering” by first fixing a first-order density-pair adjustment function \(a^{des}(u,v)\) with desired properties on the areas A of interest (e.g. on \(A_{out}\), \(A_{in}\), \(A_{mid}\)); integrability with respect to \(v\in [0,1]\) should hold, too. Thereafter, one wants to deduce a corresponding scale connector w which satisfies \(a_{\phi _2,w}(u,v)= a^{des}(u,v)\) for all \((u,v) \in [0,1]^2\); this amounts to solving the differential equation produced by (22) under some designable constraint, e.g. the “boundary” condition \(w(\cdot ,c)=h(\cdot )\) for some arbitrary strictly positive function \(h(\cdot )\) on \([0,1]\) and some arbitrary constant \(c \in [0,1]\). Accordingly (with special care for possible zeros), (22) is satisfied by

For instance, one can take the “first-order DAF(1) robustness adjuster”

which is a robustified version of the first-order DAF(1) \(a_{\phi _1,w_{0,1}}(u,v) = \frac{u}{v} -1\) of the Kullback–Leibler divergence KL. The range and meaning of the constants \(u_{in}\), \(v_{in}\), \(u_{out}\), \(v_{out}\), \(h_{in}\), \(h_{out}\) in (24) are the same as in Sect. 2.2d above (with the exception that \(h_{in}\), \(h_{out}\) can be smaller than \(-1\)), and the cut-off constant \(cut\) is strictly positive; in Fig. 4h one can find a plot for the choice \(u_{in}=v_{in}=u_{out}=v_{out}=0.2\), \(h_{in}=+0.9\), \(h_{out}=-1.6\), \(cut=1.6\). As a less flexible alternative to (23), if \(\varphi (u,v) := \frac{a^{des}(u,v)}{u-v} =: \varphi (v)\) does not depend on u, then with the generator \(\widetilde{\phi }(t) := \int _{c_1}^{t} \int _{c_2}^{z} \varphi (s) \ \mathrm {d}s \ \mathrm {d}z\) (with arbitrary constants \(c_1,c_2 \in [0,1]\)) the classical Bregman divergence CBD \(B_{\widetilde{\phi }}\left( P,Q\,|\,1\right) \) has the desired DAF(1), since \(\widetilde{\phi }^{\prime \prime } = \varphi \) and hence \(a_{\widetilde{\phi },w_{no}}(u,v) = \widetilde{\phi }^{\prime \prime }\left( v\right) \cdot \left( u - v\right) = a^{des}(u,v)\).

For relative-performance analysis, we compare whether \(- \nabla _{\theta } \ B_{\phi }( P,Q_{\theta } \,|\,W(P,Q_{\theta }))\) is \(\ \gtreqqless \ \) the benchmark \(- \nabla _{\theta } \ B_{\widetilde{\phi }}\left( P,Q_{\theta } \,|\,\widetilde{W}(P,Q_{\theta })\right) \). Accordingly, (20) leads to

for all \((u,v) \in [0,1] \times [0,1]\) and especially for \((u,v) \in A_{out}, A_{in}, A_{mid}\), \(A_{mat}\). The corresponding visual comparison can be achieved by overlaying \((u,v,a_{\phi ,w}(u,v))\) and \((u,v,a_{\widetilde{\phi },\widetilde{w}}(u,v))\) in the same 3D plot, or (for magnitude inspection) by overlaying \(\Big (u,v,\frac{a_{\phi ,w}(u,v)}{a_{\widetilde{\phi },\widetilde{w}}(u,v)}\Big )\) and (u, v, 1) in another 3D plot. Also, the overlay of \((u,v,a_{\phi ,w}(u,v) - a_{\widetilde{\phi },\widetilde{w}}(u,v))\) and (u, v, 0) is a natural choice; in accordance with the above-mentioned “nearness-to-zero” robustness-quality criteria for \(a_{\phi ,w}\), the difference \(a_{\phi ,w}(u,v) - a_{\widetilde{\phi },\widetilde{w}}(u,v)\) should be negative on \(A_{sub}\) or at least on \(A_{out} \subset A_{sub}\) (i.e. in the left corner of the figures) and positive on \(A_{sup}\) or at least on \(A_{in} \subset A_{sup}\) (i.e. in the right corner).

For the special case \(w(u,v) = \widetilde{w}(u,v) = w_{0,1}(u,v) =v\) of CASD, the corresponding 3D overlay of \((u,v,a_{\phi ,w_{0,1}}(u,v))\) (cf. (21)) and \((u,v,a_{\widetilde{\phi },w_{0,1}}(u,v))\) serves as an alternative to the 2D overlay of the RAF plots \((\delta ,\check{a}_{C_{\phi }}(\delta ))\) and \((\delta ,\check{a}_{C_{\widetilde{\phi }}}(\delta ))\). However, this kind of 3D overlay is hard to convey in greyscale pictures and in a few words; thus we prefer to show the a-difference-plots.

For the Kullback–Leibler divergence benchmark, with \(a_{\phi _1,w_{0,1}}(u,v) = \frac{u}{v}-1\) we get

and more generally

Concerning (26), in Fig. 4 we present \(\rho _{\phi ,w}(u,v)\) for the CASD cases of (i) (squared) Hellinger distance HD, (j) Pearson chi-square divergence PCS, and (k) negative exponential disparity NED, as well as for the non-CASD cases of (l) \(\phi =\phi _2\), WEM \(w= w_{\beta ,\tilde{f}_{r}}\), (m) \(\phi =\phi _2\), \(w=w^{des}\) of the “first-order DAF(1) robustness adjuster” \(a_{adj}^{des}\), and (n) \(\phi =\phi _2\), \(w=w_{no}\) (DPD\(_2\) vs. KL).
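The sign criterion for the difference to the Kullback–Leibler benchmark can be applied pointwise. The following minimal sketch uses the first-order forms quoted for Fig. 4a, b, c, e and the difference \(\rho (u,v) = a(u,v) - (\frac{u}{v}-1)\) as in Fig. 4m; the function names and the verdict labels are hypothetical, and the verdict relies on the sign only, not on the magnitude.

```python
import math

def a_kl(u, v):
    return u / v - 1.0                           # KL benchmark

def a_hd(u, v):
    return 2.0 * (math.sqrt(u / v) - 1.0)        # squared Hellinger distance

def a_pcs(u, v):
    return 0.5 * ((u / v) ** 2 - 1.0)            # Pearson chi-square divergence

def a_dpd2(u, v):
    return u - v                                 # density power divergence DPD_2

def rho(a, u, v):
    """Difference of a first-order DAF to the KL benchmark at (u, v)."""
    return a(u, v) - a_kl(u, v)

def verdict(a, u, v):
    """Sign criterion: rho < 0 is wanted for v < u, rho > 0 is wanted for v > u."""
    r = rho(a, u, v)
    if u == v or r == 0.0:
        return "equal"
    wanted_sign = r < 0.0 if u > v else r > 0.0
    return "better" if wanted_sign else "worse"
```

At the outlier-type pair \((0.6,0.05)\) this gives "better" for HD and DPD\(_2\) and "worse" for PCS; at the inlier-type pair \((0.05,0.7)\) it gives "worse" for HD and "better" for PCS and DPD\(_2\), in line with the OUT and IN entries of Fig. 4.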

Another interesting line of comparison is amongst the \(\phi _2{-}\)family

which for \(u\ne v\) can be further simplified. Alternatively, \(\frac{a_{\phi _2,w}(u,v)}{a_{\phi _2,w_{no}}(u,v)} \ \gtreqqless \ 1\) is useful for the quantification of the “relative magnitude” of the method. Thus, now the benchmark is of non-CASD type, namely DPD\(_2\). An example for the applicability of (27) can be found in Fig. 4p, where \(\zeta _{w}(u,v)\) is given for the CASD case of Pearson’s chi-square divergence PCS. In “crossover” contexts, some comparisons along the right-hand side of (25) between CASDs (other than PCS) and the DPD\(_2\) are represented in Fig. 4o for the (squared) Hellinger distance HD, and Fig. 4q for the negative exponential disparity NED. As the NED is known to be highly robust against outliers and inliers, it makes sense to use it as a benchmark itself. In Fig. 4r we plotted \(a_{\phi _{2},w_{0.45,\tilde{f}_{6}}}(u,v) - a_{\phi _{NED},w_{0,1}}(u,v)\) which shows that our new divergence \(B_{\phi _2}( P,Q\,|\,W_{0.45,\tilde{f}_{6}}(P,Q))\) has even better robustness properties than the NED which can be written in SBD form as \(B_{\phi _{NED}}\left( P,Q\,|\,Q\right) = B_{\phi _{NED}}\left( P,Q\,|\,W_{0,1}(P,Q)\right) \). The comparison of the robustness of \(B_{\phi _2}( P,Q\,|\,W_{0.45,\tilde{f}_{6}}(P,Q))\) with that of the DPD\(_2\) \(B_{\phi _2}\left( P,Q\,|\,1\right) \) can be found in Fig. 4s by means of \(a_{\phi _{2},w_{0.45,\tilde{f}_{6}}}(u,v) - a_{\phi _2,w_{no}}(u,v)\). One can conclude that both divergences are similarly robust; however, by changing the WEM scale connector \(w_{0.45,\tilde{f}_{6}}\) to \(w_{\beta ,\tilde{f}_{r}}\) with some other parameters \((\beta ,r)\ne (0.45,6)\), one can even outperform the density power divergence DPD\(_2\). For the sake of brevity, this will appear elsewhere.

As a final remark, let us mention that for fixed area A of interest (e.g. \(A_{out}\), \(A_{in}\)), the behaviour of the first-order DAF(1) \(a_{\phi ,w}(\cdot ,\cdot )\) may differ from that of the zeroth-order DAF(0) \(b_{\phi ,w}(\cdot ,\cdot )\). For instance, the dampening (respectively, amplification) may be considerably weaker or stronger, or may even switch from dampening to amplification, or vice versa. Quantifying this effect is important for avoiding undesired consequences if, e.g. one uses \(B_{\phi }\left( P,Q_{\theta }\,|\,W(P,Q_{\theta })\right) \) simultaneously for minimum distance estimation and goodness-of-fit testing.

As a corresponding quantifier we suggest the adjustment propagation function APF

For example, for \(\phi (t) = \phi _2(t) = \frac{(t-1)^2}{2}\) one obtains from (18) and (22) the APF \(\eta _{\phi _2,w}(u,v) = \frac{2}{u-v} + \frac{\partial }{\partial v} \log w(u,v)\).
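The stated formula can be retraced in a few lines. The following worked derivation is a sketch under two assumptions made only here: the first-order DAF(1) is read as \(a_{\phi ,w} = -\frac{\partial }{\partial v} b_{\phi ,w}\), and the APF is read as the ratio \(\eta _{\phi ,w} = a_{\phi ,w}/b_{\phi ,w}\) (for \(u \ne v\) and \(w(u,v)>0\)). Both readings reproduce the formula above.

$$\begin{aligned} b_{\phi _2,w}(u,v)&= \frac{(u-v)^2}{2 \, w(u,v)} \ , \\ a_{\phi _2,w}(u,v)&= - \frac{\partial }{\partial v} \, b_{\phi _2,w}(u,v) = \frac{u-v}{w(u,v)} + \frac{(u-v)^2}{2 \, w(u,v)^2} \cdot \frac{\partial w}{\partial v}(u,v) \ , \\ \eta _{\phi _2,w}(u,v)&= \frac{a_{\phi _2,w}(u,v)}{b_{\phi _2,w}(u,v)} = \frac{2}{u-v} + \frac{1}{w(u,v)} \cdot \frac{\partial w}{\partial v}(u,v) = \frac{2}{u-v} + \frac{\partial }{\partial v} \log w(u,v) \ . \end{aligned}$$

Two special cases follow directly: for the unit scaling \(w=w_{no}=1\) (DPD\(_2\)) the logarithmic term vanishes and \(\eta _{\phi _2,w_{no}}(u,v) = \frac{2}{u-v}\), whereas for \(w=w_{0,1}\), i.e. \(w(u,v)=v\) (PCS), one gets \(\eta _{\phi _2,w_{0,1}}(u,v) = \frac{2}{u-v} + \frac{1}{v}\).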

4 General Asymptotic Results for Finite Discrete Case

In this section, we assume additionally that the function \(\phi (\cdot ) \in \varPhi _{C_1}\) is thrice continuously differentiable on \(]0,\infty [\) (which implies \(\phi ^{\prime \prime }(1) >0\)), as well as that all three functions w(u, v), \(w_{1}(u,v) := \frac{\partial w}{\partial u} (u,v)\) and \(w_{11}(u,v) := \frac{\partial ^2 w}{\partial u^2} (u,v)\) are continuous in all (u, v) of some (maybe tiny) neighbourhood of the diagonal \(\{(t,t) : t \in ]0,1[ \}\) (cf. Footnote 4). In such a setup, we deal with the following context: for \(i \in \mathbb {N}\) let the observation of the ith data point be represented by the random variable \(X_i\) which takes values in some finite space \(\mathscr {X} := \{x_1, \ldots , x_s\}\) (cf. Footnote 5) which has \(s:= |\mathscr {X}|\ge 2\) outcomes (and thus, we choose \(\lambda := \lambda _{count}\) as reference measure). Accordingly, let \(X_1, \ldots , X_N\) represent a random sample of independent and identically distributed observations generated from an unknown true distribution \(P_{\theta _{true}}\) which is supposed to be a member of a parametric family \(\mathscr {P}_{\varTheta } := \{ P_{\theta } : \theta \in \varTheta \,\text {and}\, P_{\theta }\, \text {has the probability mass function}\, p_{\theta }(\cdot ) \,\text {with respect to}\, \lambda \}\) of hypothetical, potential candidate distributions. Here, \(\varTheta \subset \mathbb {R}^{\ell }\) is an \(\ell {-}\)dimensional parameter set. Moreover, we denote by \(P:= P_{N}^{emp} := \frac{1}{N} \cdot \sum _{i=1}^{N} \delta _{X_{i}}[\cdot ]\) the corresponding empirical distribution for which the probability mass function \(p_{N}^{emp}(\cdot )\) consists of the relative frequencies \(p(x) = p_{N}^{emp}(x) = \frac{1}{N} \cdot \# \{ i \in \{ 1, \ldots , N\}: X_i =x \} \) (i.e. the “histogram entries”). Notice that \(P_{N}^{emp}\) is a probability-measure-valued statistical functional (statistic). 
If the sample size N becomes large enough, it is intuitively plausible that the scaled Bregman divergence (cf. (13))

between the data-derived empirical distribution \(P_{N}^{emp}\) and the candidate model \(P_{\theta }\) converges to zero, provided that we have found the correct model in the sense that \(P_{\theta }\) is equal to the true data generating distribution \(P_{\theta _{true}}\). In the same line of argumentation, \(B_{\phi }\left( P_{N}^{emp},P_{\theta }\,|\,W(P_{N}^{emp},P_{\theta })\right) \) becomes close to zero, provided that \(P_{\theta }\) is close to \(P_{\theta _{true}}\). Notice that (say, for strictly positive probability mass functions \(p_{N}^{emp}(\cdot )\) and \(p_{\theta }(\cdot )\)) the Kullback–Leibler divergence KL case

is nothing but the (multiple of the) very prominent likelihood ratio test statistics (likelihood disparity); minimizing it over \(\theta \) produces the maximum likelihood estimate \(\widehat{\theta }^{MLE}\). Moreover,

represents the (multiple of the) Pearson chi-square test statistics. Concerning the above-mentioned conjectures where the sample size N tends to infinity, in case of \(P_{\theta _{true}} = P_{\theta }\) one can even derive the limit distribution of the scaled-Bregman-divergence test statistics \(T_{N}^{\phi ,w}(P_{N}^{emp},P_{\theta })\) in quite “universal generality”:

Theorem 1

Under the null hypothesis “\(H_0\): \(P_{\theta _{true}} = P_{\theta }\) with \(p_{\theta }(x) >0\) for all \(x\in \mathscr {X}\)”, the asymptotic distribution (as \(N \rightarrow \infty \)) of

has the following density \(f_{s^*}\) (cf. Footnote 6):

where \(s^*:=rank(\varvec{\varSigma }{} \mathbf A \varvec{\varSigma })\) is the number of the strictly positive eigenvalues \((\gamma ^{\phi ,\theta }_i)_{i=1,...,s^*}\) of the matrix \(\mathbf A \varvec{\varSigma }=\left( \bar{c}_i\cdot (\delta _{ij}-p_\theta (x_{j}))\right) _{i,j=1,...,s}\) consisting of

Here we have used Kronecker’s delta \(\delta _{ij}\) which is 1 iff \(i=j\) and 0 else.

In particular, the asymptotic distribution (as \(N \rightarrow \infty \)) of \(T_{N}^{\phi ,w}(P_{N}^{emp},P_{\theta })\) coincides with the distribution of a weighted linear combination of standard-chi-square-distributed random variables where the weights are the \(\gamma ^{\phi ,\theta }_i\) (\(i=1,\ldots ,s^*\)).
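The limit law described in Theorem 1 can be explored by simulation. The following minimal sketch draws from a weighted sum of chi-square variables, assuming (as an interpretation made for this sketch) that the summands are independent with one degree of freedom each; the weight values passed in are hypothetical placeholders for the eigenvalues \(\gamma ^{\phi ,\theta }_i\), and the function names are illustrative.

```python
import random

def sample_limit_law(weights, n_draws, seed=0):
    """Draws of sum_i weights[i] * Z_i**2 with independent standard normal Z_i."""
    rng = random.Random(seed)
    return [sum(g * rng.gauss(0.0, 1.0) ** 2 for g in weights)
            for _ in range(n_draws)]

def empirical_quantile(draws, level):
    """Simple order-statistic estimate of the quantile at the given level."""
    ordered = sorted(draws)
    index = min(len(ordered) - 1, int(level * len(ordered)))
    return ordered[index]
```

The empirical quantiles of such draws can serve as approximate critical values when the weights are not all equal; for equal weights the draws are a scaled chi-square sample, which gives a quick plausibility check.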

The proof of Theorem 1 is given in the appendix. Furthermore, let us mention that we can also study the asymptotics of (i) the statistics \(2N \cdot B_{\phi }\left( P_{\widehat{\theta }_{N}},P_{\theta }\,|\,W(P_{\widehat{\theta }_{N}},P_{\theta })\right) \) with scaled-Bregman-divergence minimum distance estimator \(\widehat{\theta }_{N}\), as well as (ii) the two-sample statistics \(2\cdot \dfrac{N_1\cdot N_2}{N_1+N_2}\cdot B_\phi \Big (P_{N_1},P_{N_2}|W(P_{N_1},P_{N_2})\Big )\). The corresponding theorems about the latter two have a structure which is similar to that of Theorem 1, with (partially) different matrices \(\mathbf A \) and \(\varvec{\varSigma }\). The details will appear elsewhere.

From the structure of Theorem 1, one can see that the asymptotic density \(f_{s^*}\) of the scaled-Bregman-divergence test statistics \(2N \cdot B_{\phi }\left( P_{N}^{emp},P_{\theta }\,|\,W(P_{N}^{emp},P_{\theta })\right) \) depends in general on the parameter \(\theta \). However, for a very large subclass we end up with a parameter-free chi-square limit distribution:

Corollary 1

Let the assumptions of Theorem 1 be satisfied. If \(\bar{c}_j\equiv \bar{c} >0\) does not depend on \(j \in \{1,\ldots ,s\}\), then \(2N \cdot B_{\phi }\left( P_{N}^{emp},P_{\theta }\,|\,W(P_{N}^{emp},P_{\theta })\right) /\bar{c}\) is asymptotically chi-square-distributed with \(s-1\) degrees of freedom.

Having found the asymptotic distribution of the test statistics explicitly, one can derive corresponding goodness-of-fit tests in a straightforward manner (chi-square-distribution quantiles, etc.). To prove Corollary 1 from Theorem 1, it is straightforward to see that \(s^*=s-1\) and \(\gamma ^{\phi ,\theta }_i =\bar{c}\) for all \(i=1,\ldots ,s-1\). Plugging this into the definitions of \(c_0\) and \(c_k\), one can show inductively that \(c_k = \dfrac{\varGamma (k+(s^*/2))}{k ! \cdot \varGamma (s^*/2)\cdot \bar{c}^{k+(s^*/2)}}\). Hence, \( f_{s^*}(y;\,\varvec{\gamma }^{\phi ,\theta }) = \widetilde{g}(y/\bar{c}) /\bar{c}\) where \(\widetilde{g}(\cdot )\) is the density of a chi-square distribution with \(s^*\) degrees of freedom.
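A goodness-of-fit test along these lines can be sketched for \(s=3\) outcomes. The following minimal example computes the statistics \(2N \cdot B_{\phi }(P_{N}^{emp},P_{\theta }\,|\,Q)\) for the KL and the PCS case, for both of which \(\bar{c}=1\), and compares them with the 0.95-quantile 5.991 of the chi-square distribution with 2 degrees of freedom. The function names, the significance level and the convention \(0 \cdot \log 0 = 0\) are assumptions of this sketch.

```python
import math
from collections import Counter

CHI2_Q95_DF2 = 5.991  # 0.95-quantile of the chi-square distribution with 2 degrees of freedom

def empirical_pmf(sample, outcomes):
    """Relative frequencies p_N^emp(x) for each x in outcomes."""
    counts = Counter(sample)
    return [counts[x] / len(sample) for x in outcomes]

def kl_statistic(p_emp, p_model, n):
    """2N * sum_x [u * log(u / v) - (u - v)]; uses the assumed convention 0 * log(0) = 0."""
    total = 0.0
    for u, v in zip(p_emp, p_model):
        total += (u * math.log(u / v) if u > 0.0 else 0.0) - (u - v)
    return 2.0 * n * total

def pearson_statistic(p_emp, p_model, n):
    """2N * sum_x (u - v)**2 / (2 * v)."""
    return 2.0 * n * sum((u - v) ** 2 / (2.0 * v) for u, v in zip(p_emp, p_model))

def reject_h0(statistic, c_bar=1.0, critical_value=CHI2_Q95_DF2):
    """Reject H0 if statistic / c_bar exceeds the chi-square quantile (here s = 3)."""
    return statistic / c_bar > critical_value
```

For a sample of size 100 with counts 30, 35, 35 and the uniform model on three outcomes, neither statistic leads to rejection, whereas counts 70, 20, 10 lead to rejection with both statistics.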

The following important contexts are covered by Corollary 1:

Let the true distribution \(P_{\theta _{true}}\) be the uniform distribution on \(\mathscr {X}=\{1,\cdots ,s\}\), i.e. under \(H_0\) one has \(p_\theta (x_{i})=1/s\) for all \(i=1,\ldots ,s\). Accordingly, the factor \(\bar{c}_j\) becomes \(\bar{c}_j=\bar{c}= \phi ''\left( 1/(s\cdot w\left( \frac{1}{s},\frac{1}{s}\right) )\right) /(s\cdot w\left( \frac{1}{s},\frac{1}{s}\right) )\). Hence, our Corollary 1 generalizes Corollary 5 of Pardo and Vajda (2003), who used the uniform distribution together with the unit scaling \(w(u,v)=w_{no}(u,v)=1\).

. Let the true distribution \(P_{\theta _{true}}\) be the uniform distribution on \(\mathscr {X}=\{1,\cdots ,s\}\), i.e. under \(H_0\) one has \(p_\theta (x_{i})=1/s\) for all \(i=1,...,s\). Accordingly, the factor \(\bar{c_j}\) becomes \(\bar{c}_j=\bar{c}= \phi ''\left( 1/(s\cdot w\left( \frac{1}{s},\frac{1}{s}\right) )\right) /(s\cdot w\left( \frac{1}{s},\frac{1}{s}\right) )\). Hence, our Corollary 1 generalizes the Corollary 5 of Pardo and Vajda (2003) who used the uniform distribution together with the unit scaling \(w(u,v)=w_{no}(u,v)=1\).

- For the corresponding generator \(\phi (t)=\phi _{1}(t)=t\cdot \log (t)+1-t\) one gets \(\phi ''(t)=\frac{1}{t}\) and hence \(\bar{c}_j=\bar{c}=t\cdot \phi ''(t)\equiv 1\) for any scale connector \(w(\cdot ,\cdot )\). Thus, Corollary 1 is much more general than the classical result that the 2N-fold of the likelihood ratio statistic (28) is asymptotically chi-square-distributed with \(s-1\) degrees of freedom.
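The two contexts above can be checked numerically with a small sketch. It evaluates \(\bar{c}_j=\phi ''(r_j)\cdot r_j\) with \(r_j = p_\theta (x_j)/w(p_\theta (x_j),p_\theta (x_j))\); the helper names and the four connectors in `connectors` are hypothetical choices made only for illustration, and `d2phi_cubic` (with \(\phi ''(t)=t\)) is an assumed example of a generator other than \(\phi _1\).

```python
import math

def c_bar(p_j, w, d2phi):
    """Factor c_bar_j = phi''(r) * r with r = p_j / w(p_j, p_j)."""
    r = p_j / w(p_j, p_j)
    return d2phi(r) * r

def d2phi_kl(t):
    """Second derivative of phi_1(t) = t*log(t) + 1 - t."""
    return 1.0 / t

def d2phi_cubic(t):
    """Illustrative generator with phi''(t) = t (not taken from the text)."""
    return t

# Hypothetical scale connectors, chosen only for illustration.
connectors = {
    "unit": lambda u, v: 1.0,
    "second argument": lambda u, v: v,
    "arithmetic mean": lambda u, v: 0.5 * (u + v),
    "geometric mean": lambda u, v: math.sqrt(u * v),
}

p_skewed = [0.1, 0.2, 0.7]

# phi_1: the factor equals 1 for every connector and every cell.
kl_factors = {
    name: [c_bar(p, w, d2phi_kl) for p in p_skewed]
    for name, w in connectors.items()
}

# Uniform null with unit scaling: the factor is constant in j.
s = 5
uniform_factors = [c_bar(1.0 / s, connectors["unit"], d2phi_cubic) for _ in range(s)]

# Non-uniform null with unit scaling: the factor varies with j,
# so Corollary 1 does not apply in this configuration.
skewed_factors = [c_bar(p, connectors["unit"], d2phi_cubic) for p in p_skewed]

print(kl_factors)
print(uniform_factors)
print(skewed_factors)
```

A constant list signals that the parameter-free chi-square limit of Corollary 1 is available; a non-constant list means one has to fall back on the general limit of Theorem 1.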

However, one can go far beyond:

- Let \(w(\cdot ,\cdot )\) be an arbitrary scale connector which satisfies the condition \(\exists c >0 \ \forall v \in [0,\infty [ \ \ \ w(v,v)=c \cdot v \ \) (cf. (7)) and which is twice continuously differentiable in its first component in some neighborhood of the diagonal. Then we deduce \(\bar{c}_j=\phi ''\left( \dfrac{p_\theta (x_{j})}{w(p_\theta (x_{j}),p_\theta (x_{j}))}\right) \cdot \dfrac{p_\theta (x_{j})}{w(p_\theta (x_{j}),p_\theta (x_{j}))}=\frac{\phi ''\left( \frac{1}{c}\right) }{c}\). Hence, from Corollary 1 we see that for all (possibly sufficiently smoothed) scale connectors w of Sect. 2.2 and all generators \(\phi \), the corresponding scaled-Bregman-divergence test statistic \(2N \cdot B_{\phi }\left( P_{N}^{emp},P_{\theta }\,|\,W(P_{N}^{emp},P_{\theta })\right) /\bar{c}\) is asymptotically chi-square-distributed with \(s-1\) degrees of freedom. From this general assertion one can immediately deduce the well-known result (see, e.g. Zografos et al. (1990), Basu and Sarkar (1994) for the one-to-one concept of disparities, Pardo (2006)) about the asymptotic chi-square distribution (with \(s-1\) degrees of freedom) of all \(2N/\phi ''(1)\)-folds of Csiszar-Ali-Silvey divergence (CASD) test statistics, since they are embedded as (cf. (9) from Sect. 2.2.ai)

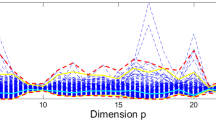
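The embedding of CASD test statistics can be illustrated with a short numerical check. The sketch assumes that the embedding uses the scale connector \(w(u,v)=v\) (so that \(c=1\)) and the discrete form \(\sum _j w_j \left[ \phi (p_j/w_j)-\phi (q_j/w_j)-\phi '(q_j/w_j)(p_j/w_j-q_j/w_j)\right] \) of the scaled Bregman divergence; function names and counts are hypothetical. Under these assumptions the \(2N\)-fold statistic with \(\phi _2(t)=(t-1)^2/2\) coincides with Pearson's chi-square statistic, and with \(\phi _1\) it coincides with the likelihood ratio statistic (both generators have \(\phi ''(1)=1\)).

```python
import math

def scaled_bregman(p, q, w, phi, dphi):
    """Assumed discrete form of the scaled Bregman divergence B_phi(P, Q | W)."""
    total = 0.0
    for pj, qj in zip(p, q):
        m = w(pj, qj)
        a, b = pj / m, qj / m
        total += m * (phi(a) - phi(b) - dphi(b) * (a - b))
    return total

def phi_pearson(t):
    return 0.5 * (t - 1.0) ** 2

def dphi_pearson(t):
    return t - 1.0

def phi_kl(t):
    return t * math.log(t) + 1.0 - t

def dphi_kl(t):
    return math.log(t)

def w_second(u, v):
    """Connector w(u, v) = v, assumed here to realize the CASD embedding."""
    return v

counts = [18, 22, 30, 30]   # made-up observed frequencies (all positive)
q = [0.25] * 4              # hypothesized distribution
n = sum(counts)
p_emp = [k / n for k in counts]

# Classical statistics computed directly from counts.
pearson = sum((o - n * qj) ** 2 / (n * qj) for o, qj in zip(counts, q))
g_stat = 2.0 * sum(o * math.log(o / (n * qj)) for o, qj in zip(counts, q))

# The same statistics obtained as 2N-folds of scaled Bregman divergences.
breg_pearson = 2.0 * n * scaled_bregman(p_emp, q, w_second, phi_pearson, dphi_pearson)
breg_kl = 2.0 * n * scaled_bregman(p_emp, q, w_second, phi_kl, dphi_kl)

print(pearson, breg_pearson)
print(g_stat, breg_kl)
```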

To continue, notice that the case of classical-Bregman-distance test statistics \(2N \cdot B_{\phi }\left( P_{N}^{emp},P_{\theta }\,|\,1\right) \) (cf. (6)) is not covered by Corollary 1. However, from the more general Theorem 1 one can deduce its parameter-dependent asymptotic distribution by plugging \(w(u,v) =w_{no}(u,v)=1\) into (33), which leads to \(\bar{c}_j = p_\theta (x_{j}) \cdot \phi ''\left( p_\theta (x_{j})\right) \). For the special case of the DPD\(_{\alpha }\) test statistic \(2N \cdot B_{\phi _{\alpha }}\left( P_{N}^{emp},P_{\theta }\,|\,1\right) \) (\(\alpha \in \mathbb {R}\backslash \{0,1\}\)) this reduces to \(\bar{c}_j = p_\theta (x_{j})^{\alpha -1}\). A similar asymptotic result can be found in Basu et al. (2013), with the following main differences: they use \(2 \alpha N \cdot B_{\phi _{\alpha }}( P_{\widehat{\theta }_{\beta }^{(N)}},P_{\theta }\,|\,1)\) where \(\alpha >1\), \(P_{\theta }\) need not (but is allowed to) be discrete, and \(\widehat{\theta }_{\beta }^{(N)}\) is the parameter \(\vartheta \) which minimizes \(\beta \cdot B_{\phi _{\beta }}\left( P_{N}^{emp},P_{\vartheta }\,|\,1\right) \) for \(\beta >1\).
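The parameter dependence in the unscaled case can be made visible with a small simulation. The sketch below is only an illustration under assumptions: it takes the power generator \(\phi _{\alpha }(t)=(t^{\alpha }-\alpha t+\alpha -1)/(\alpha (\alpha -1))\) with \(\alpha =2\), for which \(2N \cdot B_{\phi _{2}}(P_{N}^{emp},P_{\theta }\,|\,1)=N\sum _j (p_{N}^{emp}(x_j)-p_\theta (x_j))^2\) exactly, so that its mean equals \(\sum _j \bar{c}_j\,(1-p_\theta (x_j))\) with \(\bar{c}_j=p_\theta (x_j)\). This mean formula is a standard multinomial-variance computation rather than a statement taken from Theorem 1; the distribution `p_true`, the sample size and the function names are hypothetical.

```python
import random

ALPHA = 2.0  # illustrative DPD parameter

def phi(t):
    """Assumed power generator phi_alpha."""
    return (t ** ALPHA - ALPHA * t + ALPHA - 1.0) / (ALPHA * (ALPHA - 1.0))

def dphi(t):
    """First derivative of phi_alpha."""
    return (t ** (ALPHA - 1.0) - 1.0) / (ALPHA - 1.0)

def classical_bregman(p, q):
    """Unscaled Bregman distance, i.e. scale connector w(u, v) = 1."""
    return sum(phi(a) - phi(b) - dphi(b) * (a - b) for a, b in zip(p, q))

def simulate_mean(p, n, reps, seed=0):
    """Monte Carlo mean of 2N * B_phi(P_emp, P | 1) under multinomial sampling from p."""
    rng = random.Random(seed)
    s = len(p)
    total = 0.0
    for _ in range(reps):
        counts = [0] * s
        for x in rng.choices(range(s), weights=p, k=n):
            counts[x] += 1
        p_emp = [k / n for k in counts]
        total += 2.0 * n * classical_bregman(p_emp, p)
    return total / reps

p_true = [0.5, 0.3, 0.2]                          # hypothetical null distribution
c_bar = [pj ** (ALPHA - 1.0) for pj in p_true]    # c_bar_j = p_j^(alpha - 1)
predicted_mean = sum(c * (1.0 - pj) for c, pj in zip(c_bar, p_true))
mc_mean = simulate_mean(p_true, n=200, reps=2000)

print(c_bar)
print(predicted_mean, mc_mean)
```

Since the factors in `c_bar` differ across cells, no single rescaling turns the statistic into a chi-square variable with \(s-1\) degrees of freedom; the simulated mean tracks the \(\theta \)-dependent value `predicted_mean` instead.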

As a modelling alternative to \(2N \cdot B_{\phi }\left( P_{N}^{emp},P_{\theta }\,|\,1\right) \), one can work with the smoothed robustness-adjusted scale connector \(w_{adj}^{smooth}(u,v)\) of Sect. 2.2d, with \(h_{in} = h_{out} = 0\) (see Fig. 2i) and \(\underline{\varepsilon }_0 = \underline{\varepsilon }_1 = \overline{\varepsilon }_0 = \overline{\varepsilon }_1 =: \varepsilon \) for an extremely small \(\varepsilon >0\) (e.g. smaller than the machine epsilon that governs the rounding errors of your computer). Then, for all practical purposes at reasonable sample sizes, \(\widetilde{B}_N := 2N B_{\phi }(P_{N}^{emp},P_{\theta }\,|\,W_{adj}^{smooth}(P_{N}^{emp},P_{\theta }))\) is “computationally indistinguishable” from \(2N B_{\phi }(P_{N}^{emp}, P_{\theta }\,|\,1)\), but \(\widetilde{B}_N/\phi ''(1)\) is asymptotically chi-square-distributed with \(s-1\) degrees of freedom (and thus parameter-free).

Let us finally remark that all our concepts can also be performed for non-probability measures P, Q, and for similar functions. This will appear in a forthcoming paper.

Notes

- 1. Notice that \(\delta _{y}\) is Dirac’s one-point distribution at y (i.e. \(\delta _{y}[A] = 1\) iff \(y \in A\) and \(\delta _{y}[A] = 0\) else).

- 2. The general case follows analogously and will appear elsewhere.

- 3. Which in view of the prevailing model uncertainty is a very reasonable thing; think of outliers in the lifetime data of patients infected by a lethal disease: with better modelling they may not be outliers anymore.

- 4.

- 5. (Equipped with some \(\sigma {-}\)algebra \(\mathscr {A}\)).

- 6. (With respect to the one-dimensional Lebesgue measure).

References

Ali SM, Silvey SD (1966) A general class of coefficients of divergence of one distribution from another. J Roy Stat Soc B 28:131–142

Basu A, Lindsay BG (1994) Minimum disparity estimation for continuous models: efficiency, distributions and robustness. Ann Inst Statis Math 46:683–705

Basu A, Sarkar S (1994) On disparity based goodness-of-fit tests for multinomial models. Statis Probab Lett 19:307–312

Basu A, Harris IR, Hjort N, Jones M (1998) Robust and efficient estimation by minimising a density power divergence. Biometrika 85(3):549–559

Basu A, Shioya H, Park C (2011) Statistical inference: the minimum distance approach. CRC, Boca Raton

Basu A, Mandal A, Martin N, Pardo L (2013) Testing statistical hypotheses based on the density power divergence. Ann Inst Statis Math 65(2):319–348

Basu A, Mandal A, Martin N, Pardo L (2015a) Density power divergence tests for composite null hypotheses. arXiv:1403.0330v2

Basu A, Mandal A, Martin N, Pardo L (2015b) Robust tests for the equality of two normal means based on the density power divergence. Metrika 78:611–634

Beran RJ (1977) Minimum Hellinger distance estimates for parametric models. Ann Stat 5:445–463

Bregman LM (1967) The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming. USSR Comput Math Math Phys 7(3):200–217

Csiszar I (1963) Eine informationstheoretische Ungleichung und ihre Anwendung auf den Beweis der Ergodizität von Markoffschen Ketten. Publ Math Inst Hungar Acad Sci A 8:85–108

Csiszar I (1991) Why least squares and maximum entropy? An axiomatic approach to inference for linear inverse problems. Ann Stat 19(4):2032–2066

Csiszar I (1994) Maximum entropy and related methods. In: Transactions 12th Prague Conference Information Theory, Statistical Decision Functions and Random Processes, Czech Acad Sci Prague, pp 58–62

Csiszar I (1995) Generalized projections for non-negative functions. Acta Mathematica Hungarica 68:161–186

Csiszar I, Shields PC (2004) Information theory and statistics: a tutorial. now Publishers, Hanover, Mass

Dik JJ, de Gunst MCM (1985) The distribution of general quadratic forms in normal variables. Statistica Neerlandica 39:14–26

Ghosh A, Basu A (2013) Robust estimation for independent non-homogeneous observations using density power divergence with applications to linear regression. Electron J Stat 7:2420–2456

Ghosh A, Basu A (2014) Robust and efficient parameter estimation based on censored data with stochastic covariates. arXiv:1410.5170v2

Golan A (2003) Information and entropy econometrics: editor's view. J Econometrics 107:1–15

Grabisch M, Marichal JL, Mesiar R, Pap E (2009) Aggregation functions. Cambridge University Press

Kißlinger AL, Stummer W (2013) Some decision procedures based on scaled Bregman distance surfaces. In: Nielsen F, Barbaresco F (eds) GSI 2013, Lecture Notes in Computer Science LNCS, 8085. Springer, Berlin, pp 479–486

Kißlinger AL, Stummer W (2015a) A new information-geometric method of change detection. Preprint

Kißlinger AL, Stummer W (2015b) New model search for nonlinear recursive models, regressions and autoregressions. In: Nielsen F, Barbaresco F, SCSL (eds) GSI 2015, Lecture Notes in Computer Science LNCS 9389. Springer, Switzerland, pp 693–701

Kotz S, Johnson N, Boyd D (1967) Series representations of distributions of quadratic forms in normal variables. I. Central case. Ann Math Stat 38(3):823–837

Liese F, Miescke KJ (2008) Statistical Decision Theory: Estimation, Testing, and Selection. Springer, New York

Liese F, Vajda I (1987) Convex statistical distances. Teubner, Leipzig

Liese F, Vajda I (2006) On divergences and informations in statistics and information theory. IEEE Trans Inf Theory 52(10):4394–4412

Lindsay BG (1994) Efficiency versus robustness: the case for minimum Hellinger distance and related methods. Ann Statis 22(2):1081–1114

Maasoumi E (1993) A compendium to information theory in economics and econometrics. Econom Rev 12(2):137–181

Marhuenda Y, Morales D, Pardo JA, Pardo MC (2005) Choosing the best Rukhin goodness-of-fit statistics. Comp Statis Data Anal 49:643–662

Pardo L (2006) Statistical inference based on divergence measures. Chapman & Hall/CRC, Taylor & Francis Group

Pardo MC, Vajda I (1997) About distances of discrete distributions satisfying the data processing theorem of information theory. IEEE Trans Inf Theory 43(4):1288–1293

Pardo MC, Vajda I (2003) On asymptotic properties of information-theoretic divergences. IEEE Trans Inf Theory 49(7):1860–1868

Read TRC, Cressie NAC (1988) Goodness-of-fit statistics for discrete multivariate data. Springer, New York

Rukhin AL (1994) Optimal estimator for the mixture parameter by the method of moments and information affinity. In: Transactions 12th Prague Conference Information Theory, Statistical Decision Functions and Random Processes. Czech Acad Sci, Prague, pp 214–216

Serfling RJ (1980) Approximation theorems of mathematical statistics. Wiley Series in Probability and Mathematical Statistics

Stummer W (2004) Exponentials, diffusions, finance, entropy and information. Shaker, Aachen

Stummer W (2007) Some Bregman distances between financial diffusion processes. Proc Appl Math Mech (PAMM) 7:1050503–1050504

Stummer W, Lao W (2012) Limits of Bayesian decision related quantities of binomial asset price models. Kybernetika 48(4):750–767

Stummer W, Vajda I (2007) Optimal statistical decisions about some alternative financial models. J Econometrics 137:441–471

Stummer W, Vajda I (2012) On Bregman distances and divergences of probability measures. IEEE Trans Inf Theory 58(3):1277–1288

Vajda I (1989) Theory of statistical inference and information. Kluwer, Dordrecht

Vajda I, van der Meulen EC (2010) Goodness-of-fit criteria based on observations quantized by hypothetical and empirical percentiles. In: Karian Z, Dudewicz E (eds) Handbook of Fitting statistical distributions with R. CRC, Heidelberg, pp 917–994

Vapnik VN, Chervonenkis AY (1968) On the uniform convergence of frequencies of occurrence of events to their probabilities. Sov Math Doklady 9(4):915–918, corrected reprint in: Schölkopf B et al (eds) (2013) Empirical Inference. Springer, Berlin, pp 7–12

Voinov V, Nikulin M, Balakrishnan N (2013) Chi-squared goodness of fit tests with applications. Academic Press

Zografos K, Ferentinos K, Papaioannou T (1990) Phi-divergence statistics: sampling properties and multinomial goodness of fit and divergence tests. Commun Statist A - Theory Meth 19(5):1785–1802

Acknowledgments

We are indebted to Ingo Klein for inspiring suggestions and remarks. The second author would like to thank the authorities of the Indian Statistical Institute for their great hospitality. Furthermore, we are grateful to both anonymous referees for useful suggestions.

Appendix

Proof of Theorem 1 Let us start by rewriting

where the function \(\psi : [0,1] \times ]0,1] \mapsto [0,\infty [\) is defined by

(with the proper extension for \(u=0\)). As an ingredient for the below-mentioned Taylor expansion, we compute the first two partial derivatives of \(\psi (\cdot ,\cdot )\) with respect to its first argument: