Abstract

Many different parameter choice methods have been proposed for regularization in both deterministic and stochastic settings. The performance of a particular method in a specific setting and its comparison to other methods is sometimes hard to predict. This chapter reviews many of the existing parameter choice methods and evaluates and compares them in a large simulation study for spectral cutoff and Tikhonov regularization.

The numerical tests deal with downward continuation, i.e., an exponentially ill-posed problem, which is found in many geoscientific applications, in particular those involving satellite measurements. A wide range of scenarios for these linear inverse problems are covered with regard to both white and colored stochastic noise. The results show some marked differences between the methods, in particular, in their stability with respect to the noise and its type. We conclude with a table of properties of the methods and a summary of the simulation results, from which we identify the best methods.

Access provided by Autonomous University of Puebla. Download reference work entry PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction and Inverse Problems Considered

1.1 Introduction

Inverse problems are an important part of geomathematics, especially now in the age of satellite measurement technologies. Most applications want to use measurements made at satellite altitude (e.g., by satellite-to-satellite tracking (SST), satellite gravity gradiometry (SGG), magnetic field measurements) to determine a geoscientific quantity such as the gravitational potential or the magnetic field on the Earth’s surface. This process involves some variant of downward continuation, which is known to be an exponentially ill-posed problem requiring regularization. For further details, we refer to Freeden (1999), Freeden and Michel (2004), Freeden and Gerhards (2013), Lu and Pereverzev (2014), and the references therein.

These ill-posed problems can be expressed mathematically as a linear inverse problem

where A is a linear compact operator mapping between two separable Hilbert spaces \(\mathcal{X}\) and \(\mathcal{Y}\). In practical situations, only a noisy version y δ of y is available as data. Because of the compactness of A, solving (1) for x is unstable, and one needs to regularize the problem to obtain a reasonable approximate solution (see, e.g., Engl et al. 1996).

The two most popular regularization methods are spectral cutoff regularization (also called truncated singular value decomposition) and Tikhonov regularization (also called ridge regression or Wiener filtering in certain contexts). Spectral cutoff uses projection onto a subspace spanned by singular vectors corresponding to the larger singular values of A, while Tikhonov regularization is a penalized variational approach which shrinks the contribution of the smaller singular values of A. For both regularization methods, the choice of the regularization parameter is crucial. Many different methods for choosing this parameter have been proposed, often with analytical justification in mind. However, this usually requires a particular setting, and it may not be known how the method performs in all practical situations and under general conditions.

The paper of Bauer and Lukas (2011) provides a comprehensive review of most of the existing parameter choice methods and compares them through a large simulation study. Other more limited comparative studies can be found in Wahba (1985), Kohn et al. (1991), Thompson et al. (1991), Hanke and Hansen (1993), Hansen (1994, 1998, Chap. 7), Lukas (1998b), Farquharson and Oldenburg (2004), Abascal et al. (2008), Åkesson and Daun (2008), Rust and O’Leary (2008), Correia et al. (2009), Hämarik and Raus (2009), Hämarik et al. (2009, 2011, 2012), Palm (2010), and Reichel and Rodriguez (2013).

Bauer and Lukas (2011) consider synthetic inverse problems with singular values having power-type decay. This paper is based on the survey of Bauer and Lukas (2011), but specifically treats exponentially ill-posed problems closely related to real applications in geomathematics. For these inverse problems, a thorough and up-to-date comparative study of parameter choice methods for spectral cutoff and Tikhonov regularization is provided, taking into account deterministic and stochastic settings. In particular, we investigate the variability of each method both for white noise and for colored noise. For ease of reference, we list below the subsections for the parameter choice methods that are considered. Further methods are briefly discussed in Sect. 4.19 where we also explain why they are left out in this paper.

-

4.1

Discrepancy principle

-

4.2

Transformed discrepancy principle

-

4.3

Modified Discrepancy Principle (MD rule)

-

4.4

Monotone error (ME) rule

-

4.5

Varying discrepancy principle

-

4.6

Balancing principle, balancing principle (white), fast balancing principle

-

4.7

Hardened balancing principle, hardened balancing principle (white)

-

4.8

Quasi-optimality criterion

-

4.9

L-curve method

-

4.10

Extrapolated error method

-

4.11

Modified discrepancy partner (MDP) rule

-

4.12

Normalized cumulative periodogram method

-

4.13

Residual method

-

4.14

Generalized maximum likelihood

-

4.15

Generalized cross-validation

-

4.16

Robust generalized cross-validation

-

4.17

Strong robust GCV

-

4.18

Modified generalized cross-validation

We introduce the downward continuation problem in Sect. 1.2. Section 2 summarizes regularization of linear inverse problems. In Sect. 3, the design of our numerical experiments and the main algorithms used for their generation are presented in brief; we refer to Bauer and Lukas (2011) for a more detailed explanation of those parts that are similar. All the parameter choice methods are briefly described in Sect. 4 and their corresponding results are displayed and commented on there. A summary and conclusions can be found in Sect. 5.

1.2 Inverse Problems Considered

Due to its compactness, the operator A of (1) admits a singular value decomposition (SVD) \(\{\sigma _{k},u_{k},v_{k}\}_{k\in \mathbb{N} }\), where \(\{u_{k}\}_{k\in \mathbb{N} }\) and \(\{v_{k}\}_{k\in \mathbb{N} }\) are orthonormal in \(\mathcal{X}\) and \(\mathcal{Y}\), respectively, \(Au_{k} =\sigma _{k}v_{k}\), \(A^{{\ast}}v_{k} =\sigma _{k}u_{k}\), and \(\sigma _{k} > 0\) are in decreasing order. The decay rate of the singular values \(\sigma _{k}\) determines the degree of ill-posedness of the inverse problem. For the inverse problem of downward continuation, we have \(\mathcal{X} = L^{2}(\Omega _{R})\), i.e., the space of square-integrable functions on the sphere \(\Omega _{R}\) of radius R (radius of a spherical Earth), and \(\mathcal{Y} = L^{2}(\Omega _{R+h})\), i.e., the space of square-integrable functions on the sphere of radius R + h, where h is the satellite altitude (assuming a simplified spherical orbit). Then x stands for, e.g., the gravitational potential on the Earth’s surface and y denotes the same potential at the satellite’s orbit. The orthonormal systems are given in terms of spherical harmonics Y m, j of degree \(m \in \mathbb{N}_{ 0}\) and order \(j = -m,\ldots,m\) which are adjusted to the corresponding radii. Thus, \(u_{k} = u_{k(m,j)} = \tfrac{1} {R}Y _{m,j}\), \(v_{k} = v_{k(m,j)} = \tfrac{1} {R+h}Y _{m,j}\) and the singular values of the operator \(A: \mathcal{X} \rightarrow \mathcal{Y}\) of (1) are given by

Note that this operator is the so-called upward continuation, its (generalized) inverse is the downward continuation. Obviously, the singular values in (2) are exponentially decreasing, i.e., downward continuation is an exponentially ill-posed problem. Since there are 2m + 1 spherical harmonics of degree m and the singular values do not depend on the order j, the singular values occur with this multiplicity. Note that we use the notation k(m, j) in (2) to indicate the renumbering, which is given by \(k(m,j) = m^{2} + m + 1 + j\). It is also well known that there are (M + 1)2 spherical harmonics of degree M or less (see, e.g., Freeden and Gutting (2013) for a detailed introduction of spherical harmonics and their properties). It should also be noted that instead of L 2-spaces the theory of spherical Sobolev spaces can be applied.

The SST and SGG problems that are mentioned in the introduction can be investigated in simplified form such that y is the first or second radial derivative of the gravitational potential instead of the potential itself. This provides the following singular values for \(m \in \mathbb{N}\), \(j = -m,\ldots,m\):

In both cases, the singular values are still exponentially decreasing as k or m tend to infinity. Further details can be found in, e.g., Freeden (1999), Freeden and Michel (2004), Freeden and Gerhards (2013), Freeden and Schreiner (2015), Lu and Pereverzev (2014), and the references therein. Another ill-posed problem closely related to downward continuation is the inverse gravimetric problem (see Freeden and Michel (2004), Michel (2014), and the references therein). While the problems discussed above are exponentially ill-posed in a formal sense, in practice, where there is a bound on the degree of the spherical harmonics, the problems may exhibit behavior of more moderately ill-posed problems. In particular, it is argued in Lu and Pereverzev (2014) that the SGG problem for the GOCE mission, where the degree is bounded by 300, can be considered as mildly (polynomially) ill-posed, with \(\sigma _{k(m,j)}^{\mathrm{SGG}} \approx (m + 1/2)^{-5.5}\) for 100 ≤ m ≤ 300. This observation also applies to the finite dimensional problems to be considered in our numerical experiments. For example, with R = 6, 371 km and h = 300 km, the eigenvalues \(\sigma _{k(m,j)}\) in (2) satisfy \(0.5R^{4}(m + 1/2)^{-8.5} \leq \sigma _{k(m,j)} \leq 3R^{4}(m + 1/2)^{-8.5}\) for 100 ≤ m ≤ 300.

2 Preliminaries

In practice, an inverse problem is often discretized (either as the model or for computation) and/or only a finite set of discrete data is available. Starting with (1), we distinguish the following three cases which are considered in one common framework:

- Case C1.:

-

Infinite dimensional situation, where A is a compact linear operator mapping between two separable Hilbert spaces \(\mathcal{X}\) and \(\mathcal{Y}\).

- Case C2.:

-

Finite dimensional situation, where A is a matrix with large condition number mapping between \(\mathcal{X} = \mathbb{R}^{ p}\) and \(\mathcal{Y} = \mathbb{R}^{ q}\), p ≤ q. Assume that rank(A) = p. This case is often called a discrete ill-posed problem.

- Case C3.:

-

Discrete data situation, where the underlying problem is still infinite dimensional, but we only have measurements y i = y(t i ) at the q points {t i } i = 1, …, q . Then A is a finite rank operator between \(\mathcal{X}\) and \(\mathcal{Y} = \mathbb{R}^{ q}\) with the correspondence \((Ax)_{i} = (A_{\infty }x)(t_{i})\), i = 1, …, q. Assume that rank(A) = q. This case is known as a semi-discrete model.

In all cases, the element \(y \in \mathcal{Y}\) is perturbed by noise, giving the data y δ.

2.1 Regularization Methods

In this paper, we will concentrate on the two main regularization methods that are used for solving linear inverse problems – spectral cutoff and Tikhonov regularization. Detailed accounts of these methods can be found, e.g., in Groetsch (1984), Hofmann (1986), and Engl et al. (1996).

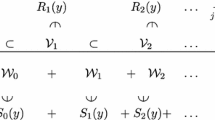

By the use of the singular value decomposition, we obtain

where R is \(\infty \), p, and q, respectively, in cases C1, C2, and C3 above. Spectral cutoff regularization is defined by

where l( ⋅ ) is an ascending integer-valued function. The traditional choice is l(n) = n, but a general l( ⋅ ) allows us to restrict the regularized solutions to an appropriate subset, thereby reducing the computation time significantly without affecting the results.

Tikhonov regularization has a continuous regularization parameter α, but in practice, one often searches over a discrete set. Here we use a geometric sequence of parameter values α n = α 0 q α n, where 0 < q α < 1 and \(n \in \mathbb{N}\). Tikhonov regularization is defined by the variational formulation

or, equivalently, by

where A ∗ is the adjoint of A. Here and throughout, the norm meant by \(\|\!\cdot \!\|\) refers to the Hilbert space in use and will be clear from the context.

Note that, for both spectral cutoff and Tikhonov regularization, a larger value of the index n corresponds to less smoothing. For both methods, let x n 0 be the regularized solution in the case of noise-free data and let A n −1 be the linear regularization operator that maps y δ to x n δ, i.e., it holds that

2.2 Deterministic and Stochastic Noise

Several kinds of additive noise models are used in the study of inverse problems (cf. Eggermont et al. 2014 and the references therein). To describe the major ones, we will denote \(y^{\delta } = y+\delta \xi\), where \(\xi\) is a normalized noise element and δ > 0 is the noise level.

The most common noise model in the classical inverse problems literature is deterministic noise (cf. Engl et al. 1996), where \(\xi \in \mathcal{Y}\) with \(\|\xi \|\leq 1\), so \(\|y^{\delta } - y\| \leq \delta\). This noise model is quite suitable to represent discretization errors, but it is rather poor for describing random measurement errors arising in practice.

A practical noise model for a discrete data vector \(y^{\delta } \in \mathbb{R}^{ q}\) (for cases C2 and C3, see, e.g., Wahba 1990) is \(y^{\delta } = y+\delta \xi\), where the components \(\xi _{i}\) are i.i.d. random variables with mean \(\mathbb{E}\xi _{i} = 0\) and variance \(\mathbb{E}\xi _{i}^{2} = 1\). Then δ is the standard deviation of each error component \(\delta \xi _{i}\) and \(\mathbb{E}\|y^{\delta } - y\|^{2} =\delta ^{2}\mathbb{E}\|\xi \|^{2} = q\delta ^{2}\). This model can be extended to one involving correlated errors, where \(\varepsilon _{i} =\delta \xi _{i}\) has covariance matrix \(C = [\mathbb{E}(\varepsilon _{i}\varepsilon _{\!j})]\).

A stochastic noise model can also be defined in an infinite dimensional setting (case C1) by using the singular value decomposition of A. Suppose that the Fourier coefficients \(\left < y,v_{k}\right >\) are known only as the sequence data

where \(\xi _{k} = \left < \xi,v_{k}\right >\) are independent normal \(\mathcal{N}(0,1)\) random variables and \(\xi\) is a zero-mean weak Gaussian random element. This is called a continuous Gaussian white noise model (cf. Lepskij 1990). Note there is no bound for the error in \(\mathcal{Y}\) here, since \(\mathbb{E}\sum (y_{k}^{\delta } -\left < y,v_{k}\right >)^{2} =\sum \delta ^{2}\) is infinite.

Colored noise can be defined by introducing a covariance matrix K for the random variables \(\xi _{k}\) so that \(\mathbb{E}(\xi _{k}\xi _{l}) = K_{kl}\), in which case we have \(\mathbb{E}\left < \xi,f\right >\left < \xi,g\right > =\sum _{kl}f_{k}K_{kl}g_{l}\) for any pair \(f,g \in \mathcal{Y}\) with \(f_{k} = \left < f,v_{k}\right >\) and \(g_{k} = \left < g,v_{k}\right >\). A simple choice is to assume K to be diagonal. Then, if the entries K kk are increasing, it is called blue noise, and, if they are decreasing, it is called red noise.

For the finite dimensional case C2, if \(y^{\delta } = y+\delta \xi\) with \(\xi \sim N(0,I)\), then, clearly, using the orthonormal singular vectors \(v_{k} \in \mathbb{R}^{ q}\) of A, the model can be written equivalently as a Gaussian white noise model with finite sequence data.

2.3 Assumptions on the Solution x (Source Condition)

In most of the literature on regularization, it is assumed that x is a fixed (nonrandom) element of \(\mathcal{X}\). It is known (see, e.g., Cox 1988; Lukas 1988; Engl et al. 1996) that the error \(\|x - x_{n}^{\delta }\|\) or the expected squared error \(\mathbb{E}\|x - x_{n}^{\delta }\|^{2}\) (with respect to the noise distribution) in the regularized solution (3) or (4) depends on the abstract smoothness of the unknown solution x. The smoothness assumption made on x is called a source condition and is usually of the form \(x \in \mathcal{R}((A^{{\ast}}A)^{s})\) for some s > 0 (called Hölder type). However, this form is not suitable for a severely ill-posed problem, where the singular values of the operator have exponential decay, while the unknown solution has relatively low smoothness, in particular for downward continuation. For such problems, it is natural to use the logarithmic source condition \(x \in \{ u: u =\ln ^{-p}(A^{{\ast}}A)^{-1}v,\|v\| \leq K\}\) (cf. Mair 1994; Hohage 2000; Pereverzev and Schock 2000). General source conditions are also used in Mathé and Pereverzev (2003) and Nair et al. (2003).

In the Bayesian approach to inverse problems (cf. Tarantola 1987; Evans and Stark 2002; Kaipio and Somersalo 2005; Hofinger and Pikkarainen 2007), it is assumed that x is a random element of \(\mathcal{X}\) with some prior distribution. These models can be formulated in any of the cases C1, C2, or C3 above. It is known (cf. Larkin 1972; Hofmann 1986; Wahba 1990; Fitzpatrick 1991) that, with a Gaussian prior and independent Gaussian error distribution, the posterior mean given the data is the solution of a certain Tikhonov regularization problem and the appropriate choice for the regularization parameter is determined by the variance of x and the noise level δ. However, in practice both are usually unknown.

2.4 Parameter Choice Method

A parameter choice method is a rule that assigns a value for the regularization parameter. In the case of a discrete set of parameters, the method selects a value for the index, which will be denoted by n ∗. Parameter choice methods can be classified according to the input used to make the choice. There are three basic types (see, e.g., Engl et al. 1996; Bauer and Kindermann 2009):

-

A priori method, i.e., n ∗ is a function of δ and information about the smoothness of x. Since information about x is required, but in practice not known, such methods are not discussed here.

-

A posteriori method, i.e., n ∗ = n ∗(δ, y δ). The noise level δ is required and has to be estimated if it is not known.

-

Data-driven method, i.e., n ∗ = n ∗(y δ). The method only requires the data y δ as input. In the literature on deterministic noise, these methods are sometimes called “heuristic methods”, but this has a negative connotation that is not generally deserved.

We will consider several methods of each of the second and third types. For these methods, if y δ contains stochastic noise, then n ∗ is a random variable. Each method computes an associated function F(n), and n ∗ is defined as either the point at which F falls below a threshold (type 1) or the minimizer of F (type 2). Most methods of type 1 originate from a deterministic setting and use a (sensitive) tuning parameter. The methods of type 2 mostly come from a stochastic framework or from heuristic ideas and work without (sensitive) tuning parameters.

2.5 Optimal Regularization Parameter

For the problem Ax = y with data y δ, we define the optimal regularization parameter (index) by

If y δ contains stochastic noise, then n opt is a random variable. In our numerical experiments of each parameter choice method, we will assess the accuracy of the choice n ∗ by computing the inefficiency defined by

The closer this is to 1, the better is the parameter choice. Using stochastic noise with a large number of replicates of the problem, we can estimate the distribution of the inefficiencies and hence determine the performance of the method.

It is clear that, since x is unknown, a practical parameter choice method must use some other known or easily computed/estimated quantities. Many methods use the norm of the residual defined as \(\|y^{\delta } - Ax_{n}^{\delta }\|\). If the data are finite and the norm is the Euclidean norm, this is the square root of the usual residual sum of squares, and so it is easily computed.

Splitting the error \(\|x - x_{n}^{\delta }\|\) such that

the first term (regularization error) is bounded by a decreasing function \(\varphi (n)\) which depends on the source condition and the regularization method. A higher smoothness s for \(x \in \mathcal{R}((A^{{\ast}}A)^{s})\) improves the rate of decrease. If this is only possible up to a maximal value s 0, this value is the so-called qualification and the method exhibits saturation. Tikhonov regularization has qualification s 0 = 1, while spectral cutoff has infinite qualification (see, e.g., Engl et al. 1996). Under a logarithmic source condition, slower (logarithmic) rates of decrease are obtained for standard regularizations, in particular for Tikhonov and spectral cutoff regularization (Mair 1994; Hohage 2000; Pereverzev and Schock 2000). It follows that Tikhonov regularization does not exhibit saturation here.

The second term (propagated noise error) on the right-hand side of (7) can often be bounded for regularization methods as

where ϱ is a known increasing function of n, indicating that, with less smoothing, there is more influence of the data noise. For spectral cutoff regularization, (8) holds with \(\varrho (n) =\sigma _{ l(n)}^{-1}\) and, for Tikhonov regularization, (8) holds with \(\varrho (n) =\alpha _{ n}^{-1/2}\) (cf. Engl et al. 1996).

In the case of stochastic noise, the risk, i.e., the expected squared error \(\mathbb{E}\|x - x_{n}^{\delta }\|^{2}\), is considered. For noise with zero mean, instead of (7), the risk can be decomposed exactly into a sum of squared bias \(\|x - x_{n}^{0}\|^{2}\) and variance terms \(\mathbb{E}\|x_{n}^{0} - x_{n}^{\delta }\|^{2}\), i.e.,

The squared bias can be bounded as before and, under suitable assumptions, the variance can be expressed as \(\delta ^{2}\varrho ^{2}(n)\) for some increasing function \(\varrho (n)\). For white noise, the spectral cutoff solution (3) has variance

and the Tikhonov regularized solution (4) has variance

A much more detailed discussion of the above errors (including minimax results) in various situations can be found in Cox (1988), Lukas (1988), Engl et al. (1996), Mair and Ruymgaart (1996), Mathé and Pereverzev (2003), Cavalier et al. (2004), Bauer (2007), and Hofmann and Mathé (2007).

It should be pointed out that Bakushinskii (1984) states that, for an ill-posed problem, a parameter choice rule that does not explicitly use the noise level (e.g., data-driven rules) cannot yield a regularization method such that the worst-case error converges to 0 as δ → 0. This Bakushinskii veto is important for deterministic noise, but it is not really appropriate for stochastic noise (cf. Bauer and Kindermann 2009; Becker 2011). In this situation, as we shall see, there are data-driven rules yielding regularization methods that converge with respect to the risk and perform very well in practice (see also Bauer and Lukas 2011).

For some methods, there are stronger results involving oracle inequalities (see Cavalier et al. 2002; Candès 2006; Bauer and Reiß 2008; Cavalier 2008), which provide, for any noise level, a bound on the risk \(\mathbb{E}\|x - x_{n}^{\delta }\|^{2}\) relative to the smallest possible value of the risk and allow the classification of methods as asymptotically optimal.

Similar results exist for some methods with a discrete noisy data vector \(y^{\delta } \in \mathbb{R}^{ q}\) in case C3, where the asymptotic analysis is as \(q \rightarrow \infty \) with fixed variance δ 2. There are connections between results for the continuous white noise model and for discrete sampled data. In particular, for function estimation (A = I) and other problems, it is known that, under certain conditions, asymptotic results for the continuous white noise model as δ → 0 can be translated into asymptotic results for discrete data as \(q \rightarrow \infty \) (cf. Brown and Low 1996; Pensky and Sapatinas 2010).

3 Evaluation Process and Experiments

This section presents the design of the evaluation process and numerical experiments that will be used for each parameter choice method described in Sect. 4.

3.1 Construction of Our Numerical Experiments

Each parameter choice method will be assessed using the same large set of test problems corresponding to downward continuation. The results will be shown for all situations, independent of any prior knowledge about situations where the method does not work. For each parameter choice method, the experiments used the same random seed, so every method had exactly the same set of operators, solutions, and noisy data to deal with. The experiments were implemented in MATLABⓇ whose notation we also use to describe the algorithms in this section.

The test problems are finite dimensional problems (case C2), where \(A: \mathcal{X} \rightarrow \mathcal{Y}\) and \(\mathcal{X} = \mathcal{Y} = \mathbb{R}^{ q}\) with Euclidean norms. The problems are characterized by the following parameters:

-

\(M_{\max }\): maximal spherical harmonic degree, i.e., the number of singular values of A is \(q = (M_{\max } + 1)^{2}\);

-

h: varying heights in (2) (with R = 6, 371 km) determining the speed of the exponential decay behavior;

-

ν: (polynomial) decay behavior (smoothness) of x;

-

\(\log (N2S)\): \(\log _{10}\) of the noise-to-signal ratio \(N2S = (\mathbb{E}\|y^{\delta } - y\|^{2})^{1/2}/\|y\|\);

-

ω: noise behavior (corresponds to the color).

3.1.1 Generation of the Operators

The operator A will be taken to be a random diagonal q × q matrix, with diagonal elements (i.e., eigenvalues or singular values) decaying like

where \(k(m,j) = m^{2} + m + 1 + j\). A larger value of h corresponds to a more ill-posed problem, all of which are exponentially ill-posed. The diagonal vector is generated by the following procedure:

-

\(\mathop{\mathrm{for}}\nolimits \:deg = 0: M_{\max } + 10\)

-

\(\mathit{OrderVector}(\mathit{deg}^{\wedge }2 + 1: (\mathit{deg} + 1)^{\wedge }2) = \mathit{deg};\)

-

\(\mathop{\mathrm{end}}\nolimits\)

-

\(\mathit{NumberEigenvalues} = (M_{\max } + 1)^{\wedge }2;\)

-

\(\mathit{HelpVar} = (6,371/(6,371 + h)).^{\wedge }\mathit{OrderVector};\)

-

\(\mathit{Perturb} =\mathop{ \mathrm{exp}}\nolimits (0.5 {\ast}\mathop{\mathrm{randn}}\nolimits (\mathop{\mathrm{length}}\nolimits (HelpV ar),1) - 0.5^{2}/2);\)

-

\(HelpOp =\mathop{ \mathrm{sort}}\nolimits (HelpV ar\,\,. {\ast}\,\, Perturb,\mathop{\mathrm{`descend'}}\nolimits );\)

-

A = HelpOp(1: NumberEigenvalues);

For each parameter choice method, more than 160,000 trials are done using about 40,000 up to 120,000 eigenvalues each time, so speed and memory usage are very important issues. Note that for the maximal degree \(M_{\max }\) we have to deal with \((M_{\max } + 1)^{2}\) eigenfunctions and \(M_{\max }\) can be up to 350 (see Tables 1 and 2) in our tests. For this reason, we use the simplest possible form of an inverse problem, i.e., diagonal matrices. Because of the singular value decomposition, these diagonal problems are no less or more ill-posed than other discrete inverse problems. Furthermore, this approach enables us to see the effects of ill-posedness with almost no side effects originating from numerical errors due to machine precision and other machine-dependent errors. In addition, for Gaussian white noise, there is no difference at all in using diagonal matrices to investigate stochastic behavior. This follows from the invariance of white noise under the orthogonal transformation involved in the SVD.

We also wanted to ensure that we do not use, even accidently, specific features of the operator that would help the inversion, but cannot be found in practice (called “inverse crimes”; see, e.g., Colton and Kress 1998) . Therefore, we used a slight random perturbation of the sequence \(\sigma _{k}\). The procedure balances retaining the overall exponential decay behavior of the operator including the multiplicity of its singular values while providing some randomness in this component.

3.1.2 Solution Generation

Each time a solution x is generated, we use the following procedure (the variables OrderVector and NumberEigenvalues are the same as in Sect. 3.1:

-

\(HelpV ar = (\mathit{OrderVector(1: NumberEigenvalues}) + 1).^{\wedge }(-\nu );\)

-

\(\mathit{Sign} = 2 {\ast}\mathop{\mathrm{ceil}}\nolimits (2. {\ast}\mathop{\mathrm{rand}}\nolimits (\mathit{NumberEigenvalues},1)) - 3;\)

-

\(\mathit{Perturb} = 1 + 0.1 {\ast}\mathop{\mathrm{randn}}\nolimits (\mathit{NumberEigenvalues},1)\)

-

x = Sign . ∗ Perturb . ∗ HelpVar;

This can be interpreted as generating random Fourier coefficients x k with decay behavior \(\vert x_{k}\vert = \vert x_{k(m,j)}\vert \approx m^{-\nu }\) and random sign of equal probability. A larger value of ν gives a smoother solution x.

For such polynomial decay rates, the Sobolev lemma allows an interpretation in terms of differentiability, i.e., smoothness. In our setting with 2m + 1 eigenfunctions (spherical harmonics) of polynomial degree m, this means that we require a decay of the order of \(m^{-1-\varepsilon }\), for some \(\varepsilon > 0\) (i.e., ν > 1), to guarantee x is square integrable. Moreover, it is known (see, e.g., Freeden et al. 1998; Freeden 1999; Freeden and Michel 2004) that for ν > r + 2 the function defined by the Fourier coefficients x k , \(\vert x_{k}\vert = \vert x_{k(m,j)}\vert \approx m^{-\nu }\), corresponds to an x ∈ C (r), \(r \in \mathbb{N}_{ 0}\). Therefore, we only consider ν ≥ 2 in our tests.

An alternative way to generate solutions of different smoothness is through a source condition. However, using the standard source condition to define \(x = (A^{{\ast}}A)^{s}x_{0}\) for some \(x_{0} \in \mathcal{X}\) is not suitable for the downward continuation problem as it would imply \(x \in C^{(\infty )}\) which is of less interest in practice. A more appropriate logarithmic source condition could be used to generate the solutions, but it is easier to use the direct approach above. Furthermore, our approach also allows encapsulation in the software design, i.e., the solution should not “see” the operator and vice versa. Note that, because the theory of saturation is based on source conditions, it does not apply directly for our assumed form of x. Nevertheless, we see similar results and will call these saturation effects.

In our evaluation of the methods, we want to identify the effect of the smoothness of the solution, determined by the decay behavior of x and characterized by the parameter ν, and also the effect of the noise-to-signal ratio N2S. To avoid too much variability in these features of x for different replicates of x, we chose not to use a colored Gaussian variable for x as in Kaipio and Somersalo (2005) and Bauer (2007). For a Gaussian random variable, both the norm of x and the decay behavior of x vary over a large scale, which also affects the noise-to-signal ratio.

3.1.3 Noise Generation

We use a finite stochastic noise model. First, for each replicate y = Ax, the noise level δ is defined from the input noise-to-signal ratio N2S as \(\delta = N2S {\ast}\| y\|/\sqrt{q}\). Then, each time a noise vector is generated, we employ the same procedure as in Bauer and Lukas (2011): we transform y via the discrete cosine transform to the time domain, add (possibly serially correlated) noise with standard deviation δ, and transform back. The degree of correlation and color is determined by ω. By adding the noise in a different space from the one used to generate y = Ax, we avoided potential inverse crimes.

For ω = 0, the noise is simply Gaussian white noise. For ω ≠ 0, the noise is defined by a moving average process in which both the weights and order depend on ω. The noise has higher correlation for larger ω, and it has color red for ω > 0 and color blue for ω < 0 (the algorithm can be found in Bauer and Lukas 2011). This type of correlation is observed in many practical applications, for example, in geodesy (cf. Ditmar et al. 2003, 2007).

For our experiments, we considered two scenarios for the noise:

-

White noise, i.e., ω = 0

-

Colored noise of unknown random color, i.e., ω is chosen as a pseudorandom variate, uniformly distributed in the interval [−0. 5, 0. 5].

3.1.4 Regularization Operators

For the Tikhonov regularization parameter sequence α n = α 0 q α n, \(n \in \mathbb{N}\), in (4), we used 100 values from 1 to 10−14 with logarithmic equal spacing, i.e., α 0 = 1. 3849 and q α = 0. 7221. The set of test cases for Tikhonov regularization is given in Table 1. The parameter values for these cases were chosen to achieve a balance between speed and the representation of a reasonably wide set of problems. In addition, the parameter values are constrained so that the optimal regularization parameter lies clearly between 1 and 10−14, i.e., it is not near an endpoint.

For the sequence l(n) of cutoff points in (3), i.e., for spectral cutoff regularization, we used the same procedure as in Bauer and Lukas (2011). The cutoff points are chosen such that there is a minimum spacing of 3 and, more importantly, the cutoff point n + 1 is the first to possess an eigenvalue \(\sigma _{k(n+1)} <\sigma _{k(n)}/1.07\), where \(\sigma _{k(n)}\) is the eigenvalue corresponding to the cutoff point n. By this, we can avoid in most cases that there are two cutoff points corresponding to the same spherical harmonic degree m. This method is referred to as exponential spectral cutoff (ExpCutOff) , and it also achieves the minimax rate for optimal parameter choice (cf. Bauer and Pereverzev 2005).

The set of test cases we used for ExpCutOff is given in Table 2. Again, the parameter values for these cases are constrained so that the optimal parameter n opt has l(n opt ) clearly not near an endpoint.

3.1.5 Maximal Regularization Parameter

For most parameter choice methods, the use of a discrete set of regularization parameters with a fine enough resolution, as chosen above, does not alter the behavior of the method. Clearly, for the efficient implementation of these methods, it is useful to have a bound on the value of n opt (i.e., a maximal regularization parameter), especially if the method minimizes some function.

For a few parameter choice methods, e.g., the balancing principle (Sect. 4.6), a maximal index N is an essential input in the algorithm itself. The actual value of N is not crucial so long as n opt < N and, for the sake of computational efficiency, N is not too large. For both spectral cutoff and Tikhonov regularization in a stochastic setting, it is reasonable to define the maximal index as

where \(\mathbb{E}\|x_{n}^{0} - x_{n}^{\delta }\|^{2} =\delta ^{2}\varrho ^{2}(n)\) and \(\delta ^{2}\varrho ^{2}(\infty )\) is the supremum of the variance. We expect that n opt < N because if n > N, then it follows from (9) that \(\mathbb{E}\|x - x_{n}^{\delta }\|^{2} > 0.5\mathbb{E}\|x - x_{\infty }^{\delta }\|^{2}\), but we would expect \(\mathbb{E}\|x - x_{n_{opt}}^{\delta }\|^{2}\) to be much smaller than the right-hand side.

To obtain N in practice, one either has to have an analytic expression for \(\delta ^{2}\varrho ^{2}(n)\), as in (10) and (11) for white noise, or a good estimate of it. For any noise color, if several independent data sets are available, then a good estimate is

In the experiments, we use four data sets to obtain N for the methods that require a maximal parameter. Often, two sets of data are sufficient (see Bauer 2007 for further details).

Furthermore, so that all the parameter choice methods can be compared on an equal basis, we use the same maximal index N for all the methods. For many methods, the usage of N has almost no effect on the results, but for some methods, it has the beneficial effect of reducing the number of severely under-smoothed solutions (see also Bauer and Lukas 2011).

If, in practice, only a single data set is available, then it may not be possible to estimate \(\delta ^{2}\varrho ^{2}(n)\) if the noise is correlated with unknown covariance. Then one can define a maximal index N 1 by l(N 1) = q for spectral cutoff and by \(\alpha _{N_{1}} \approx \sigma _{q}^{2}\) for Tikhonov regularization. For the methods that perform much worse without the use of the maximal index N, the results for N and N 1 may be quite different. However, for the methods that perform essentially the same with or without the use of N, the results for N and N 1 will be very similar (see Bauer and Lukas 2011).

3.1.6 Runs and Organization of the Results

For each parameter choice method, we performed exactly the same experiments, constructed as follows:

-

For each of the cases in Tables 1 and 2, and for both white noise and random colored noise, we generated 4 operators A as described in Sect. 3.1.

-

For each operator A, we generated 8 solutions x as described in Sect. 3.1.

-

For each pair of (A, x), we generated 8 times 8 different noisy data vectors y δ as described in Sect. 3.1. In the colored noise scenario, each group of 8 data vectors has a different, randomly chosen color, and within the group, the color is the same.

This means that, for each test case and noise scenario, there are 2,048 inverse problems that need to be solved. The hierarchical structure was chosen in order to considerably reduce the computational cost. In total, this chapter is based on the solution of more than 3. 4 million inverse problems each with an operator of at least 40,000 singular values, in most cases more than twice that number.

For each parameter choice method, we will display the simulation results, i.e., the inefficiencies as defined by (6), in one figure, with four panels corresponding to Tikhonov and ExpCutOff regularization under both the white noise and colored noise scenarios. Each panel has the following features:

-

For each test case (denoted by \((h,\nu,\log (N2S))\), with \(M_{\max }\) determined from Tables 1 and 2), a box plot (marking the lower and upper quartiles) shows the distribution of computed inefficiencies for the method, with blue whiskers showing the range. The whiskers have maximum length of 4 times the interquartile range, and outliers beyond this are marked with a red + symbol.

-

For each test case, the red middle band in the box shows the median of the computed inefficiencies, and an open green dot shows the sample mean.

3.2 Error Comparison in Case of Optimal Solutions

To conclude this section, Fig. 1 shows the distribution of the optimal errors \(\|x - x_{n_{opt}}^{\delta }\|\) for Tikhonov and ExpCutOff regularization under both the white and colored noise scenarios. The test cases and replicates are the same as those used for the simulations in the next section (see Tables 1 and 2). The box plots are constructed in the same way as described in Sect. 3.1.

The optimal errors differ by several orders of magnitude across the test cases, mostly because of the different noise levels. In addition, there can be significant variability within one test case, especially for the colored noise scenario. Depending on whether the colored noise is at the blue end or red end, both regularization methods will find it easier or harder, respectively, to extract the solution x, compared to the white noise situation.

Furthermore, we can see some saturation effects for Tikhonov regularization. The errors for the parameter sets (300, 4, −8) and (300, 5, −8) or (500, 4, −8) and (500, 5, −8) (to a lesser degree also (300, 4, −10) and (300, 5, −10)) indicate there is no improvement as the smoothness increases beyond a certain value. Therefore, for our finite dimensional exponentially ill-posed problems, Tikhonov regularization behaves in a similar way as for a polynomially ill-posed problem, in line with the discussion at the end of Sect. 1.2.

4 Description and Evaluation of Methods

In this section, we will review most of the available parameter choice methods and evaluate them according to the process outlined in Sect. 3. For each method, we will start by describing the origin and rationale of the method. Then we will state the input requirements of the method and the algorithm that we use. This will be followed by a brief discussion of known theoretical and practical issues about the method, including whether the method works for other regularization methods. Finally, we will present the results of the numerical experiments for the method.

The methods are ordered as follows:

-

The first group requires knowledge of the noise level δ. We provide the correct δ in all our tests even though this is usually not available in practice. An estimation of the noise level might induce further errors.

-

The second group requires at least two independent sets of data as input.

-

The third group requires no knowledge about the noise.

Many of the methods use a tuning parameter or some other parameter that must be chosen. For each of these methods, we have used the tuning parameters of Bauer and Lukas (2011) to allow an easier comparison of the results. However, the setting might not be optimal for the problems here, and the optimal settings for different problems might vary significantly. Section 4.19 contains a list of methods that were not included in this study.

4.1 Discrepancy Principle

The discrepancy principle, which was originally proposed by Phillips (1962) and then developed and analyzed by Morozov (1966, 1984), is one of the oldest and most widely used parameter choice procedures (cf. Engl et al. 1996 and references therein). The rationale is simply that for a good regularized solution, the norm of the residual should match the noise level δ of the data. Although the method was originally developed in a deterministic setting, it has also been studied in a discrete, stochastic setting (see, e.g., Davies and Anderssen 1986; Lukas 1995; Vogel 2002) and a continuous stochastic setting (see Blanchard and Mathé 2012).

Method The method needs the following input:

-

Norms of residuals \(\{Ax_{n}^{\delta } - y^{\delta }\}_{n\leq N}\) until a certain bound is satisfied

-

Noise level δ

-

Tuning parameter τ

In a deterministic setting with \(\|y^{\delta } - y\| \leq \delta\), the parameter choice n ∗ is the first n such that \(\|Ax_{n}^{\delta } - y^{\delta }\| \leq \tau \delta\), where τ ≥ 1 is a tuning parameter. In our stochastic setting, with the error in each element of \(y^{\delta } \in \mathbb{R}^{ q}\) having standard deviation δ, the choice n ∗ is the first n such that

where we use τ = 1. 5.

Known Issues There has been a lot of work done on the convergence properties of this method (see, e.g., Groetsch 1984; Morozov 1984; Engl et al. 1996). The convergence rate in the deterministic setting for Tikhonov regularization is at the optimal order \(O(\delta ^{2s/(2s+1)})\) if s ∈ (0, 1∕2], but at the suboptimal order O(δ 1∕2) if s > 1∕2. For spectral cutoff, the discrepancy principle gives the optimal order for any value of s. Under a logarithmic source condition, the discrepancy principle attains the optimal order for both Tikhonov and spectral cutoff regularization (see Hohage 2000, Pereverzev and Schock 2000; Nair et al. 2003; Mathé 2006). In the stochastic setting with a data vector \(y^{\delta } \in \mathbb{R}^{ q}\) containing uncorrelated errors of variance δ 2, for Tikhonov regularization, it is known (cf. Davies and Anderssen 1986; Lukas 1995; Vogel 2002) that as the sample size \(q \rightarrow \infty \), the “expected” discrepancy principle estimate has the optimal rate for the prediction risk \(\mathbb{E}\|Ax_{n}^{\delta } - y\|^{2}\) (though, if τ = 1, the constant makes it over-smoothing). It is also order optimal for the \(\mathcal{X}\)-norm risk \(\mathbb{E}\|x_{n}^{\delta } - x\|^{2}\) if x is not too smooth relative to the operator A, but otherwise it is order suboptimal (under-smoothing). Lukas (1998b) shows that for τ = 1, the actual estimate is asymptotically unstable in a relative sense. For spectral cutoff, the “expected” estimate is order optimal for both the prediction risk and the \(\mathcal{X}\)-norm risk (cf. Vogel 2002). For a preconditioned Gaussian white noise model and general linear regularization, Blanchard and Mathé (2012) show that the discrepancy principle yields suboptimal rates as δ → 0.

The discrepancy principle is one of the fastest methods available, since one only needs to compute the residuals until the bound (14) is satisfied. However, it has the serious drawback that it needs an accurate estimate of the noise level; even small misestimations can lead to very poor solutions (see Hansen 1998, Chap. 7). The discrepancy principle has also been applied to and analyzed for various iterative regularization methods for linear and nonlinear problems in the deterministic setting (see Hanke and Hansen 1993; Engl et al. 1996; Engl and Scherzer 2000; Bakushinsky and Smirnova 2005; Kaltenbacher et al. 2008; Jin and Tautenhahn 2009; and the references therein). It has also been analyzed for conjugate gradient iteration in a continuous stochastic setting by Blanchard and Mathé (2012).

Results From Fig. 2, for white noise, the discrepancy principle performs reasonably well for spectral cutoff regularization, as well as for Tikhonov regularization with less smooth solutions. However, significant saturation effects can be seen for ν ≥ 4. The results for colored noise in Fig. 2 are mediocre, with quite a bit of variation. However, the performance for Tikhonov regularization is not much worse than it is for white noise.

4.2 Transformed Discrepancy Principle

Motivated by the instability of the discrepancy principle to an incorrect noise level, Raus (1990, 1992) and Hämarik and Raus (2006) developed a parameter choice method in a deterministic setting where the noise level in the data y δ is known only approximately as \(\hat{\delta }\).

Method The transformed discrepancy principle needs the following input:

-

Norms of transformed residuals \(\{C(Ax_{n}^{\delta } - y^{\delta })\}_{n\leq N}\) where the operator C depends on A, the regularization method, and its qualification

-

Rough estimate \(\hat{\delta }\) of the noise level δ

-

Tuning parameter b

For the stochastic case with \(y^{\delta } \in \mathbb{R}^{ q}\), it is assumed that a rough estimate \(\hat{\delta }\) of the error standard deviation δ is known. For Tikhonov regularization, one computes n ∗ as the least integer n for which

where \(\alpha _{n} =\alpha _{0}q_{\alpha }^{n}\) and b is some constant satisfying \(b >\gamma = ((1/4)^{1/4}(3/4)^{3/4})^{2} \approx 0.3248\). For spectral cutoff, one computes n ∗ as the least integer n for which

where b is some constant satisfying \(b >\gamma = 1/2\). We choose b = 1. 5γ for both regularization methods.

Known Issues Note that the right-hand side of (15) is an approximate scaled bound of the propagated noise error \(\|x_{n}^{0} - x_{n}^{\delta }\| \leq \delta /\sqrt{\alpha _{n}}\). On the left-hand side of (15) is the norm of the residual transformed to the domain space under the approximate inverse A n −1 of A. For this reason, we refer to this parameter choice method as the transformed discrepancy principle.

It was shown in Raus (1992) that, for deterministic noise, the method leads to optimal convergence rates when the noise level is known exactly, and it also converges under the assumption that \(\|y - y^{\delta }\| = O(\hat{\delta })\) as \(\hat{\delta }\rightarrow 0\). Consequently, it is more stable than the discrepancy principle in this case. No knowledge of the solution smoothness is required. A stronger result involving a deterministic oracle inequality was shown in Raus and Hämarik (2007) for the usual noise assumption. The method was also defined and shown to be convergent for problems where the operator is only known approximately as A η, where \(\|A^{\eta } - A\| \leq \eta\) (Raus 1992). Like the discrepancy principle, the method can be applied easily to iterative regularization methods.

Results Figure 3 shows that the transformed discrepancy principle has good to acceptable performance for all cases with white noise. Consistent with the theory, there is no saturation effect for Tikhonov regularization, so in many cases the results are significantly better than those for the discrepancy principle. However, there is substantial variation for some cases in the colored noise scenario. The results for spectral cutoff are slightly better than those of the discrepancy principle. In all situations, the results are very similar to those of the ME rule (Sect. 4.4).

4.3 Modified Discrepancy Principle (MD Rule)

The modified discrepancy principle (MD rule) was developed by Gfrerer (1987) and Engl and Gfrerer (1988) and independently by Raus (1984, 1985) for Tikhonov regularization and general regularization methods in a continuous, deterministic setting (see also Engl 1993 and Sects. 4.4 and 5.1 in Engl et al. 1996). The basic idea of the method is to minimize a bound on the squared error of the regularized solution derived from (7) to yield a practical a posteriori method with optimal convergence rates. It is also known as the Raus–Gfrerer rule or the minimum bound method. In Lukas (1998a), the method was adapted to the discrete, stochastic setting for Tikhonov regularization.

The method was developed for regularization methods defined using the spectrum of A ∗ A by \(x_{\alpha }^{\delta } = g_{\alpha }(A^{{\ast}}A)A^{{\ast}}y^{\delta }\), where \(\lim _{\alpha \rightarrow 0}g_{\alpha }(\lambda ) = 1/\lambda\). This includes Tikhonov regularization, for which \(g_{\alpha }(\lambda ) = 1/(\lambda +\alpha )\). For such methods, one can derive from (7) a bound on the squared error of the form

The minimizer of (17) is defined by

By using y δ in place of y, the parameter choice is defined by f(α, y δ) = δ 2 or f(α, y δ) = τ 2 δ 2 for a tuning parameter τ.

Method To use the MD rule, we need to be able to compute \((\varphi ^{2})'(\alpha,y)\) and \((\varrho ^{2})'(\alpha )\) which can be done effectively for Tikhonov and other regularization methods (see Engl and Gfrerer 1988 and Engl et al. 1996, Sect. 5.1). The method can also be applied to regularization methods with a discrete parameter, including spectral cutoff, with the derivatives above replaced by differences. The required input consists of the following:

-

All regularized solutions \(\{x_{n}^{\delta }\}_{n\leq N}\) until a certain bound is satisfied

-

Noise level δ

-

Tuning parameter τ

For Tikhonov regularization, the function \(\varrho ^{2}(\alpha )\) in the bound (17) is \(\varrho ^{2}(\alpha ) =\alpha ^{-1}\), and the parameter choice is defined by

Using the discrete set \(\{\alpha _{n} =\alpha _{0}q_{\alpha }^{n}\}\), we can approximate the derivative \(dx_{\alpha }^{\delta }/d\alpha\) on the left-hand side with \((x_{n}^{\delta } - x_{n+1}^{\delta })(-\alpha _{n}\log q_{\alpha })^{-1}\). For spectral cutoff regularization, the term \(\delta ^{2}\varrho ^{2}(\alpha )\) in (17) is \(\delta ^{2}\sigma _{l(n)}^{-2}\), and the method can be adapted by using differences. Thus, the parameter choice n ∗ is defined as the first n such that

where

For the tuning parameter, we use τ = 1. 5 for Tikhonov regularization and τ = 0. 5 for spectral cutoff regularization. Note that, for spectral cutoff, this rule is different from the MD rule defined in Raus and Hämarik (2007) since the latter is just the usual discrepancy principle for this regularization method.

Known Issues The MD rule was a significant advance on the discrepancy principle because it achieves the optimal rate of convergence as δ → 0 for deterministic noise, and it does so without any knowledge of the smoothness of the solution x (cf. Gfrerer 1987; Engl and Gfrerer 1988). The method also yields optimal rates for finite dimensional implementations (cf. Groetsch and Neubauer 1989) and when the operator is only known approximately (cf. Neubauer 1988). A deterministic oracle inequality is known for the MD rule with Tikhonov regularization (Raus and Hämarik 2007; Hämarik et al. 2012).

The discrete, stochastic version of the method, with a particular tuning constant, is asymptotically (as \(q \rightarrow \infty \)) equivalent to an unbiased risk method (see Lukas 1998a; Cavalier et al. 2002), and, in expectation, it yields the optimal convergence rate. The unbiased risk method chooses the parameter by minimizing an unbiased (for white noise) estimate of the risk, i.e., the expected squared error. However, in Lukas (1998b), it is shown asymptotically and by simulations that both of these methods are unstable and have high variability (see also Cavalier and Golubev (2006) for the unbiased risk method). By changing the tuning parameter, it is possible to improve the stability of the method.

The modified discrepancy principle can also be applied to iterative regularization methods for linear problems, and it achieves optimal convergence rates in a deterministic setting (cf. Engl and Gfrerer 1988). For nonlinear problems, the method has been extended for Tikhonov regularization and shown to yield optimal rates (see Scherzer et al. 1993; Jin and Hou 1999). In addition, it has been proposed in Jin (2000) as the stopping rule for the iteratively regularized Gauss–Newton method yielding again optimal rates.

Results As seen in Fig. 4, the MD rule performs very well in the white noise situation, especially for spectral cutoff regularization. However, it does not perform quite so well for colored noise; in particular, test cases with smoother solutions show a lot of variability. Consistent with the theory, the median results are much better than those of the discrepancy principle in the cases with smoother solutions.

4.4 Monotone Error (ME) Rule

The monotone error (ME) rule was proposed in Alifanov and Rumyantsev (1979), Hämarik and Raus (1999), and Tautenhahn and Hämarik (1999) for various regularization methods in a deterministic setting, and it was extensively discussed along with other similar parameter choice rules by Hämarik and Tautenhahn (2001, 2003). The rule is based on the observation that if n is too small (i.e., too much smoothing), then the error \(\|x - x_{n}^{\delta }\|\) (like the regularization error \(\|x - x_{n}^{0}\|\)) decreases monotonically as n increases.

Method As input one needs the following:

-

All regularized solutions \(\{x_{n}^{\delta }\}_{n\leq N}\) until a certain bound is satisfied

-

Noise level δ

-

Tuning parameter τ

In contrast to similar methods, no tuning parameter is required. However, in order to gain some security in the case that δ is only known roughly, a tuning parameter τ ≥ 1 is advisable. For continuous regularization methods, the algorithm is formulated by differentiating with respect to the regularization parameter α. The parameter choice α ∗ is the largest α such that

In order to use it in our framework, we have generated a simple discretized version by replacing the differentials with adjacent differences. Then, in the stochastic setting with \(y^{\delta } \in \mathbb{R}^{ q}\) containing errors of standard deviation δ, the parameter choice n ∗ is the first n such that

We take τ = 1. 5 for Tikhonov regularization and τ = 0. 75 for spectral cutoff regularization.

Known Issues For Tikhonov regularization (and iterated Tikhonov regularization) in a deterministic setting, the ME rule has some favorable properties (cf. Tautenhahn and Hämarik 1999). If α > α ∗, then the error \(\|x - x_{\alpha }^{\delta }\|\) decreases monotonically as α is decreased and so \(\|x - x_{\alpha _{{\ast}}}^{\delta }\| <\| x - x_{\alpha }^{\delta }\|\), which provides a useful bound for parameter selection. Unlike the discrepancy principle (Sect. 4.1), the ME rule is order optimal for the maximal range of the smoothness index (up to the qualification). In addition, for any noise level δ, it leads to smaller errors than the modified discrepancy rule (Sect. 4.3) for the same tuning parameter. With precisely known δ, the optimal tuning parameter is τ = 1. In the case of spectral cutoff regularization, no optimality results are known.

In Hämarik and Raus (2009) an alternative discretized version to (20) defines n ∗ as the first n such that

This version has the advantage that, like the continuous version, the error in x n δ decreases monotonically as n is increased for n < n ∗.

The ME rule can also be applied to iterative regularization methods, in particular Landweber iteration, for which it is order optimal (see Hämarik and Tautenhahn 2001, 2003).

Results Figure 5 shows that the ME rule has mostly good to acceptable performance for all cases with white noise. There is substantial variation for some cases in the colored noise scenario. The performance for Tikhonov regularization is slightly better than that of the modified discrepancy rule in Fig. 4, which is consistent with the theory. For both Tikhonov and spectral cutoff regularization, the performance is almost identical to that of the transformed discrepancy principle in Sect. 4.2.

4.5 Varying Discrepancy Principle

This method, due to Lu and Mathé (2014) and based on Blanchard and Mathé (2012), was developed by modifying the usual discrepancy principle to take into account stochastic noise and to achieve optimal convergence rates in a continuous stochastic setting. The modification involves a certain weighted form of the discrepancy with a varying weight.

Method The varying discrepancy principle needs the following input:

-

Norms of weighted residuals \(\{(\lambda _{n}I + A^{{\ast}}A)^{-1/2}A^{{\ast}}(Ax_{n}^{\delta } - y^{\delta })\}_{n\leq N}\)

-

The effective dimension, i.e., the trace of \((\lambda _{n}I + A^{{\ast}}A)^{-1}A^{{\ast}}A\)

-

The noise level δ, where the noise is assumed to be Gaussian white

-

Tuning parameter τ

The parameter choice is the first n such that

where \(\lambda _{n} =\alpha _{n}\) for Tikhonov regularization and \(\lambda _{n} =\sigma _{ l(n)}^{2}\) for spectral cutoff. For the tuning parameter τ > 1, we use τ = 4 for Tikhonov regularization and τ = 1. 1 for spectral cutoff.

Known Issues The rule was developed for general linear regularization methods as well as conjugate gradient iteration. In Blanchard and Mathé (2012) the discrepancy is weighted using a fixed weight parameter \(\lambda\), and it is shown that this weighted discrepancy principle is order optimal as δ → 0 for an appropriate choice of \(\lambda\) depending on the solution smoothness. Based on this optimality result, Lu and Mathé (2014) proposed varying \(\lambda\) yielding the a posteriori method above. It is shown that the method is order optimal provided that the solution has a certain self-similarity property.

In Blanchard and Mathé (2012) and Lu and Mathé (2014), the regular methods are combined with an “emergency stop” condition, which defines a maximal regularization parameter. We have not implemented this condition here, but we have used the maximal regularization parameter that is common to all the methods evaluated.

Results As seen in Fig. 6, there are significant saturation effects for ν ≥ 4 in the Tikhonov case. Apart from that, the performance with white noise is good, almost as good as for the MD rule in Sect. 4.3. For colored noise, the varying discrepancy principle does not perform quite so well; in particular, test cases with smoother solutions show a lot of variability.

4.6 Balancing Principle

The balancing principle, due to Lepskij (1990), was originally derived for statistical estimation from direct observations in a white noise model. Since then, it has been developed further for regularization of linear inverse problems (see, e.g., Goldenshluger and Pereverzev 2000; Tsybakov 2000; Mathé and Pereverzev 2003; Bauer and Pereverzev 2005; Mathé and Pereverzev 2006) and nonlinear inverse problems (cf. Bauer and Hohage 2005; Bauer et al. 2009) in deterministic and stochastic settings. The principle aims to balance the known propagated noise error bound \(\delta \varrho (n)\) in (8) with the unknown regularization error (7) by an adaptive procedure that employs a collection of differences of regularized solutions.

Method As input one needs the following:

-

Maximal index N.

-

All regularized solutions \(\{x_{n}^{\delta }\}_{n\leq N}\) up to N.

-

An upper bound \(\delta \varrho (n)\) for the propagated noise error \(\|x_{n}^{0} - x_{n}^{\delta }\|\); bounds are known for spectral cutoff and Tikhonov regularization (see after (8)). In the stochastic setting, a bound or estimate \(\delta ^{2}\varrho ^{2}(n)\) of the variance \(\mathbb{E}\|x_{n}^{0} - x_{n}^{\delta }\|^{2}\).

-

Noise level δ (and the covariance in the stochastic setting if known). Then one can use known expressions for \(\delta \varrho (n)\). Alternatively, if one has two or more independent sets of data y i δ, then \(\mathbb{E}\|x_{n}^{0} - x_{n}^{\delta }\|^{2}\) can be estimated by (13).

-

Tuning constant κ.

Define the balancing functional by

The smoothed balancing functional (which is monotonically decreasing) is defined as \(B(n) =\max \nolimits _{n\leq k\leq N}\left \{b(k)\right \}\). Then the parameter choice n ∗ is the first n such that B(n) ≤ κ.

In our implementation, we take κ = 1. We will consider two input scenarios:

-

1.

There is one data set with δ as the only known property of the noise, in which case we use the known expressions (10) and (11) of \(\delta ^{2}\varrho ^{2}(n)\) for the white noise model; we refer to this method as the balancing principle (white). These results are given in Fig. 7.

-

2.

Four independent sets of data y i δ are available, in which case we use the estimate (13) for \(\delta ^{2}\varrho ^{2}(n)\). Figure 8 shows the results for the fast balancing variant that is described in the next paragraph.

In each situation, we use the maximal index N as defined in (12).

Known Issues The balancing principle is one of the few parameter choice methods for which oracle inequalities for the error are known (cf. Raus and Hämarik 2007; Bauer et al. 2009), i.e., there are stronger results than rates of convergence alone. In particular, the balancing principle does not have a saturation problem, and, for stochastic noise, it is optimal up to at most a \(\log (1/\delta )\) factor (which has not been observed in practice).

According to the theory (cf. Mathé and Pereverzev 2003), the constant κ should be 1 in the deterministic setting. In the stochastic setting with continuous Gaussian white noise, κ should be \(O((\log \delta ^{-1})^{1/2})\) for mildly ill-posed problems and \(O((\log \log \delta ^{-1})^{1/2})\) for severely ill-posed problems (cf. Bauer and Pereverzev 2005).

The constant κ acts as a stability parameter. The method is very stable when κ is chosen sufficiently large, but it can be quite unstable if κ is chosen too small. A choice of κ ∈ [0. 5, 1. 5] appears to give good results, independent of the noise situation and the inverse problem.

Instead of the balancing functional in (21), one can use the modified version

where ℓ(n) is an increasing sequence for which \(\varrho (\ell(n))/\varrho (n) \geq \beta > 1\) for some constant β. This definition requires fewer evaluation steps while also giving a convergent method (see Mathé and Pereverzev 2003; Bauer and Munk 2007). Our experience is that, provided β is big enough so that ℓ(n) − n ≥ 4, the results are not distinguishable from those of the original version. In the experiments reported here, we used ℓ(n) defined by \(\varrho (\ell(n))/\varrho (n) = 2\). The choice of n ∗ as the first n with b(n) ≤ κ (b(n) as in (22)) is called the fast balancing principle. This method has the advantage of requiring a much lower number of computations than the original version. For the fast balancing principle with \(\ell(n) = n + 1\) in (22), optimality results are known (cf. Hämarik and Raus 2009) for Tikhonov and other regularization methods with deterministic noise, given either the actual or an approximate noise level.

For a white noise model, instead of using several independent data sets or the analytic expressions (10) and (11) of \(\delta ^{2}\varrho ^{2}(n)\), one can use the Monte Carlo estimate \(\varrho ^{2}(n) \approx \mathrm{Mean}\|A_{n}^{-1}\hat{\xi }_{i}\|^{2}\), where \(\hat{\xi }_{i}\) are synthetic vectors of independent standard normal pseudorandom variates, together with a known or estimated value of the noise variance δ 2. The same estimate applies to any regularization method with linear regularization operator A n −1.

Results In our experiments, the results for the balancing principle and its fast version with the same input are very hard to distinguish, and therefore we included only the figure for the fast variant (Fig. 8). Both are listed in Table 4 in our conclusion (Sect. 5).

The results in Figs. 7 and 8 for the white noise situation are almost identical for both Tikhonov and spectral cutoff regularization (slightly better for balancing principle (white) in Fig. 7). The method is stable and performs quite well. In Fig. 8, the results for colored noise are quite similar (with only slightly more variation) to those for white noise, since here the method adapts automatically to the noise color. On the other hand, in Fig. 7, there are a considerable number of outliers for the colored noise scenario, in particular for smoother solutions x.

4.7 Hardened Balancing Principle

The hardened balancing principle is a modified version of the balancing principle of Sect. 4.6 in the stochastic setting and was first proposed in Bauer (2007). It has the advantage that it does not require a tuning parameter.

Method The input is the same as for the balancing principle in Sect. 4.6 without the tuning parameter and the noise level, so it can be computed parallel to this one. Furthermore, an expression or approximation of the scaled variance \(\varrho ^{2}(n) =\delta ^{-2}\mathbb{E}\|x_{n}^{0} - x_{n}^{\delta }\|^{2}\), or any scalar multiple of this (so δ can be unknown), is required. Define the balancing functional b(n) as in (21) and smoothed balancing functional \(B(n) =\max \nolimits _{n\leq k\leq N}\left \{b(k)\right \}\). The parameter choice is

Obviously, the same choice is obtained if any scalar multiple of \(\varrho (n)\) is used in its place. We use the same two inputs for (scaled) \(\varrho (n)\) as for the balancing principle:

-

1.

To address the situation of one data set with no knowledge about the noise, we use the known expressions (10) and (11) of \(\delta ^{2}\varrho ^{2}(n)\) for the white noise model. We refer to this method as the hardened balancing principle (white), and its results are depicted in Fig. 9.

-

2.

We estimate \(\delta ^{2}\varrho ^{2}(n)\) using (13) with four independent sets of data y i δ. Figure 10 shows the results.

In each situation, we use the maximal index N in (12). For better computational efficiency, as for the balancing principle, one can use the modified version of the balancing functional defined in (22) instead of (21). In our experiments, we used the modified version with ℓ(n) defined by \(\varrho (\ell(n))/\varrho (n) = 2\).

Known Issues For spectral cutoff regularization, a version of the hardened balancing principle has been analyzed by Bauer and Reiß (2008) in a Bayesian framework, with stochastic x and noise, and sequence data. It is shown that the parameter choice satisfies an oracle inequality and is optimal (up to a constant independent of the noise level) with respect to the risk for some error moment. As a consequence, the method is not prone to saturation. Because the basis of the proofs is the same as for the quasi-optimality criterion, it is very likely that similar results hold for Tikhonov regularization, even with deterministic source conditions (cf. Bauer and Kindermann 2008).

Numerical experiments in Bauer (2007) and Bauer and Reiß (2008) indicate that the method is very stable. Some care needs to be taken when the Tikhonov regularization parameter becomes smaller than the smallest eigenvalue of A ∗ A. However, this situation can be easily (and automatically) detected in practice by choosing N as in (12).

All remarks in Sect. 4.6 concerning the choice of \(\varrho (n)\) also hold true for the hardened balancing principle. In particular, for the white noise model, one can use the Monte Carlo estimate of \(\varrho ^{2}(n)\) as for the balancing principle in Sect. 4.6.

Results Figure 9 displays the results for the hardened balancing principle (white), which uses the variance expressions (10) and (11) for the white noise model. As expected, it performs very well in the white noise situation, with results that are almost identical to those in Fig. 10. In the colored noise situation, there is much more variability – note that there are extreme outliers that lead to some large means in the spectral cutoff case. The performance for Tikhonov regularization is better in the cases with less smooth solutions.

Figure 10 shows that the hardened balancing principle using four independent data sets for variance estimation is extremely stable for both white and colored noise, and it has excellent efficiency values in all the test cases for both Tikhonov and spectral cutoff regularization.

4.8 Quasi-optimality Criterion

The quasi-optimality criterion (see, e.g., Tikhonov and Arsenin 1977; Hofmann 1986) is one of the oldest and simplest available parameter choice methods. It was originally introduced by Tikhonov and Glasko (1965) and became better known from the continuous version proposed by Tikhonov and Arsenin (1977). A good overview of the method and its history can be found in Bauer and Kindermann (2009).

Method As input for a certain minimization, one needs the following:

-

Maximal index N, a suitable value is defined in (12)

-

All regularized solutions \(\{x_{n}^{\delta }\}_{n\leq N}\) up to N

The noise level does not need to be known, and there is no tuning parameter. The parameter choice n ∗ is defined simply as

The continuous version for Tikhonov regularization defines the parameter choice by \(\alpha _{{\ast}} =\mathop{ \mathrm{{\ast}}}\nolimits argmin\left \|\alpha \frac{d} {d\alpha }x_{\alpha }^{\delta }\right \|\). Using a difference quotient in place of the derivative for the discrete parameters \(\alpha _{n} =\alpha _{0}q_{\alpha }^{n}\), it is clear that these versions are consistent.

Known Issues For a discrete set of regularization parameters, the use of a suitable maximal index is essential. This is because the method is based on a discrete evaluation of a differential and hence is very sensitive to a situation where the regularization operators A n −1 are formally different, but are practically the same due to the finiteness of the considered problem. This happens especially when the regularization parameter α n for Tikhonov regularization falls below the smallest eigenvalue of the operator A ∗ A. This issue does not apply to infinite dimensional inverse problems (case C1) and, therefore, is rarely considered in the inverse problems literature. In order for the quasi-optimality criterion to be successful for spectral cutoff regularization, the cutoff points l(n) need to be chosen carefully and far enough apart (cf. Bauer and Reiß 2008).

The noise can be stochastic (white or colored) or deterministic, but more care is advised in the deterministic setting because of the conditions on the error (see Bauer and Kindermann 2008).

Convergence of Tikhonov regularization with the quasi-optimality criterion was shown in Leonov (1979) in the discrete (i.e., not ill-posed, but only ill-conditioned) case with deterministic noise. The question of convergence in infinite dimensional spaces was discussed in Glasko and Kriksin (1984), where abstract conditions were given.

Although it has been used successfully in a number of practical situations (see, e.g., Pohl et al. 2001), the first more concrete proofs for the quasi-optimality criterion were provided only quite recently – in Bauer and Reiß (2008) for spectral cutoff regularization in a Bayesian setting and in Bauer and Kindermann (2008) for Tikhonov regularization with either deterministic or stochastic noise. In both papers, oracle inequalities are derived under certain conditions, which show that the method is near optimal and is not prone to saturation. One of the conditions is that the noise has weight in all frequency components (so, e.g., it is not band limited), which is usually true in practice. A more theoretical analysis, including convergence properties, in some deterministic settings was derived in Kindermann and Neubauer (2008) and Neubauer (2008) and generalized in Kindermann (2011).

For iterative regularization methods, less is known about the behavior of the quasi-optimality criterion. There are some convergence results in Kindermann and Neubauer (2008), Neubauer (2008), and Kindermann (2011), for deterministic noise, in particular a slow convergence rate for Landweber iteration.

Results As seen in Fig. 11, the quasi-optimality criterion has excellent performance across all the test cases for spectral cutoff and Tikhonov regularization with either white or colored noise. Keeping in mind that it is computationally the simplest of the available methods and that it does not require the noise level, the performance is remarkable.

4.9 L-Curve Method

The L-curve method, proposed by Hansen (1992, 1998) and Hansen and O’Leary (1993), is based on the long-known fact that a log-log parametric plot of \((\|Ax_{n}^{\delta } - y^{\delta }\|,\|x_{n}^{\delta }\|)\) often has a distinct L-shape (cf. Lawson and Hanson 1974). Points on the vertical part correspond to large n (under-smoothed solutions) and those on the horizontal part correspond to small n (over-smoothed solutions), which suggests that the “corner point” of the L-curve should define a good value of the parameter n. Due to its simplicity and intuitive appeal, the method became popular in a number of application areas.

Method As input to minimize a certain function, one needs the following:

-

Norms of all residuals \(\{Ax_{n}^{\delta } - y^{\delta }\}_{n\leq N}\)

-

Norms of the regularized solutions \(\{x_{n}^{\delta }\}_{n\leq N}\)

The noise level does not need to be known. The parameter choice is defined by the product of the norms of the residual and regularized solution, i.e.,

Here the “corner point” is defined by the slope of its “tangent” being − 1 as in Reginska (1996) (see also Engl et al. 1996). The generalizations minimize \(\|Ax_{n}^{\delta } - y^{\delta }\|\|x_{n}^{\delta }\|^{\tau }\) (see Reginska 1996; Lu and Mathé 2013), where τ is a tuning constant, but we will not consider this here.

Known Issues One of the problems with the L-curve approach is that the “corner point” is not a well-defined notion, and several algorithms have evolved with different definitions. The first algorithm for Tikhonov regularization used the maximum curvature of the L-curve (see Hansen and O’Leary 1993; Calvetti et al. 2002). The above algorithm by Reginska (1996) is a simpler alternative, which can also be applied with discrete regularization parameters. Our experience (see also Johnstone and Gulrajani 2000) is that these algorithms have similar performance. Other algorithms are given in Hansen et al. (2007) and the references therein.

Recently, Lu and Mathé (2013) derived the first rigorous optimality result for the L-curve criterion. They showed, in the deterministic setting, that if the L-curve functional defined above has an interior global minimizer, then this choice satisfies a certain optimality property. However, there is no guarantee that there will be such a minimizer. In many (but not all) problems, the L-curve method (in its various forms) has been observed to give a reasonably good and robust parameter choice, and it can cope with correlated errors (see, e.g., Hanke and Hansen 1993; Hansen and O’Leary 1993; Hansen 1998; Hansen et al. 2007; Abascal et al. 2008; Åkesson and Daun 2008; Correia et al. 2009).

However, it is known theoretically that the L-curve method (from Hansen and O’Leary 1993) has serious limitations (cf. Engl et al. 1996; Yagola et al. 2002): the L-curve corner may not even exist and it is not convergent, neither in the deterministic setting (under-smoothing) nor in the stochastic setting (over-smoothing). These effects have been observed in numerical experiments (see, e.g., Johnstone and Gulrajani 2000; Hansen 2001). Moreover, finding the L-curve corner is hard to automate, in particular with discrete regularization parameters. For more details, we refer to Hanke (1996), Reginska (1996), and Vogel (1996, 2002).

The L-curve method can also be extended to various iterative regularization methods (see Hanke and Hansen 1993, Hansen 1998, Kilmer and O’Leary 2001, Farquharson and Oldenburg 2004, Kindermann 2011).

Results As seen in Fig. 12, the L-curve method has very poor performance across all the test cases for both Tikhonov and spectral cutoff regularization. Note that “missing” parts in the panels indicate that the inefficiency is very large!

4.10 Extrapolated Error Method

This method, developed by Brezinski et al. (2008, 2009) for discrete ill-posed problems, chooses the regularization parameter by minimizing an estimate of the 2-norm error \(\|x - x_{n}^{\delta }\|\) found by an extrapolation procedure.

Method The input required for a certain minimization is:

-

Norms of all residuals \(\{r_{n} = y^{\delta } - Ax_{n}^{\delta }\}_{n\leq N}\)

-

Norms of transformed residuals \(\{A^{{\ast}}r_{n}\}_{n\leq N}\)

The parameter choice is