Abstract

In this paper, an interactive version of the ParEGO algorithm is introduced for identifying most preferred solutions for computationally expensive multiobjective optimization problems. It enables a decision maker to guide the search with her preferences and change them in case new insight is gained about the feasibility of the preferences. At each interaction, the decision maker is shown a subset of non-dominated solutions and she is assumed to provide her preferences in the form of preferred ranges for each objective. Internally, the algorithm samples reference points within the hyperbox defined by the preferred ranges in the objective space and uses a DACE model to approximate an achievement (scalarizing) function as a single objective to scalarize the problem. The resulting solution is then evaluated with the real objective functions and used to improve the DACE model in further iterations. The potential of the proposed algorithm is illustrated via a four-objective optimization problem related to water management with promising results.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

- Surrogate-based optimization

- Interactive multiobjective optimization

- Preference information

- Computational cost

- Visualization

1 Introduction

Multiobjective optimization problems have several conflicting objective functions to be optimized simultaneously. Due to the conflict, optimal solutions have inherent trade-offs between the objectives and are called Pareto optimal solutions where none of the objectives can be improved without impairing some other one [15]. Without any additional information, all the Pareto optimal solutions are equally good. On a general level, one can see two approaches for solving multiobjective optimization problems: (1) approximate the whole Pareto front, that is, the set of all Pareto optimal solutions or (2) identify the most preferred Pareto optimal solution(s) utilizing preference information from a human decision maker. In this paper, we concentrate on the latter approach.

Decision maker preferences are nowadays widely used to find most preferred solutions in both multiple criteria decision making and evolutionary multiobjective optimization communities (see e.g. [2, 4, 15, 21]). Preferences can be used before optimization, after optimization, or iteratively during the optimization process [15]. The methods belonging to the latter approach are called interactive multiobjective optimization methods where the decision maker is iteratively involved in the solution process. The benefits of interactive methods include learning about the interdependencies between the conflicting objectives and ones preferences especially for problems with a high number of objectives. This new knowledge gained during the interactive solution process may necessitate adjusting decision maker’s preferences. Interactive methods indeed allow the decision maker to change her preferences which is not possible if the preferences are asked before optimization (see, e.g., [22]). Therefore, they have been found promising for solving real-world problems [17, 18].

In computationally expensive multiobjective optimization problems, a single evaluation of the objective and/or constraint functions takes considerable amount of time. To be able to tackle such problems, surrogate-based approaches are commonly used where the idea is to approximate the problem with simpler functions that are faster to evaluate [10, 13, 24, 26]. There are different ways of using surrogates in multiobjective optimization [24], for example, (1) to approximate each objective function separately and apply any suitable multiobjective optimization algorithm by using the approximated functions (the most widely used approach, see e.g. [3, 10, 24]), (2) to approximate a scalarizing function that converts multiple objectives together with decision maker preferences into a single objective optimization problem [7, 12], or (3) to approximate directly the Pareto front [6, 9, 19]. However from decision making point of view, providing most preferred solutions to the decision maker for computationally expensive multiobjective optimization problems was identified as a future research challenge in a recent survey [24].

The contribution of this paper is to introduce an interactive multiobjective optimization method for computationally expensive multiobjective optimization problems to partly answer the challenge mentioned above. It is based on the ParEGO algorithm [12] developed for approximating the whole Pareto front for computationally expensive multiobjective optimization problems. ParEGO uses a weighted Tchebycheff function to convert multiple objectives into a single objective optimization problem and randomly generates weights at each iteration to be able to approximate the whole Pareto front. Since ParEGO uses a surrogate to approximate a scalarization function, it is convenient to add also decision maker preferences and, thus, try to approximate only preferred parts of the Pareto front. This is done by replacing the weighted Tchebycheff function with an achievement scalarizing function and using reference points instead of weights to include decision maker preferences. The decision maker guides the search by specifying preferred ranges for each objective function. By specifying ranges, the decision maker deals directly with objective function values (as opposed to e.g. weights that have no clear meaning) and it allows the decision maker to explore more if she does not have exact values in mind. When compared to approaches that use surrogates to approximate directly the whole Pareto front (e.g. [6, 9, 19]), the benefit of interactive ParEGO is that it does not need any pre-computed Pareto optimal solutions as an input. On the other hand, when compared to the approaches that use surrogates for each objective separately, the number of surrogates used is always one independently of the number of objectives used.

The rest of the paper is organized as follows. Section 2 presents the problem formulation along with brief introduction of the basics of the ParEGO algorithm. In Sect. 3, ideas of incorporating decision maker preferences into ParEGO are discussed and the interactive ParEGO algorithm is introduced. Section 4 illustrates the potential and ability of interactive ParEGO to utilize changing preference information through a four-objective example related to water management. Finally, the paper ends with conclusions and future research ideas presented in Sect. 5.

2 Basics of ParEGO

We consider a multiobjective optimization problem

where all k objective functions \(f_i: \mathbb {R}^{n} \rightarrow \mathbb {R}^{} \), \(i=1,\dots ,k\), are to be minimized. The feasible region \(S\subset \mathbb {R}^{n} \) denotes the set of feasible decision variable values \( \varvec{x} =(x_1,\dots ,x_n)^T\). Here we assume that the function evaluations are costly in the sense of taking a long time.

The ParEGO algorithm [12] was developed for computationally expensive multiobjective optimization problems and it is based on the efficient global optimization (EGO) algorithm [11]. In ParEGO, the multiobjective problem (1) is converted into a single objective optimization problem by using the augmented Tchebycheff scalarizing function

where \( \varvec{w} =(w_1,\dots ,w_k)^T\), \(w_i\ge 0\) and \(\sum _{i=1,\dots ,k}w_i=1\). The term containing \(\rho >0\) is called an augmentation term and it is used to guarantee that the solutions of problem (2) are Pareto optimal [15]. An important reason for choosing the Tchebycheff scalarization was that it can find Pareto optimal solutions also in the non-convex part of the Pareto front. The overall goal in ParEGO is to find an approximation for the whole Pareto front. Next, the basic idea of ParEGO is briefly described.

ParEGO starts by using the latin hypercube sampling to find \(11n-1\) points in the decision space which are then evaluated by the real functions. Those points are then used to train a Kriging-based design and analysis of computer experiments (DACE) model to approximate the objective function in problem (2). To train the DACE model, Nelder and Mead downhill simplex algorithm is used to maximize the likelihood. Then at each iteration of ParEGO, a new point to be evaluated with the real functions is determined by using a single objective genetic algorithm to maximize the expected improvement. After finding the new point, it is evaluated with the real functions and, then, the DACE model is re-trained by considering also the new point. The updated DACE model is then used to find the next point and this iteration continues until the budget for function evaluations with the real functions is exhausted.

We can make some important observations of ParEGO. Note that at each iteration, one new point is evaluated with the real functions. It is possible to use all the points evaluated so far with the real functions to train the DACE model. However, when the number of the points increases, the more time training takes. It is also possible to use only some subset of the points in training in order to reduce the training time while compromising the accuracy if needed [5]. Further, for each iteration, the weight vector \( \varvec{w} \) used in problem (2) is randomly generated in order to finally cover the whole Pareto front. In practice, ParEGO does not set any limitations for the number of objective functions to be considered since it only affects on the generation of the weight vectors. However, the current implementation found in https://github.com/CristinaCristescu/ParEGO_Eigen supports only problems up to four objective functions [5]. More details of ParEGO can be found in [12].

3 Preferences with ParEGO

Next we discuss how to include decision maker preferences into ParEGO. A natural way for this would be to apply the ideas of the interactive weighted Tchebycheff procedure introduced in [23] where the idea is to reduce the space of feasible weight vectors based on preferences from the decision maker. At each iteration, the decision maker sees some Pareto optimal solutions and is asked to select the most preferred one. Based on the selection, the weight vector space is reduced and the new Pareto optimal solutions are generated by using the reduced space of weight vectors. The limitations of the interactive weighted Tchebycheff approach include that one can not go back to the part of the space that has been eliminated (i.e. change preferences). It means that an important property of interactive approaches, a possibility to change one’s mind, is not available. For that reason, we do not go towards that path but take another approach utilizing achievement (scalarizing) function (ACH) [25] and preferred ranges for the objective function values as a form of preference information that will enable the decision maker to change her mind during the interactive decision making process. Next, our approach is discussed in more details.

3.1 A Priori Preferences

We start by discussing how to include a priori preferences to ParEGO, that is, how to handle fixed preferences expressed before optimization. That will also serve as a building block for interactive ParEGO described later. As mentioned above, we chose to use preferred ranges for objectives as decision maker preferences since dealing directly with objective function values is found cognitively easy way of expressing preferences for the decision maker [14]. Preferred ranges will result in a k-dimensional hyperbox \(H = \{ \varvec{z} \in \mathbb {R}^{k} |a_i\le z_i \le b_i\}\) in the objective space. In practice, this hyperbox can be considered as either (1) optimistic which means that it does not contain any feasible solutions, (2) pessimistic where all the solutions are dominated or (3) neither optimistic nor pessimistic which means that it intersects with the Pareto front [7]. Different types of hyperboxes are illustrated in Fig. 1.

Further, multiple objectives are scalarized by using ACH instead of the weighted Tchebycheff scalarizing function used in original ParEGO. The mathematical formulation of the augmented ACH used here is

The weights typically used with ACH are \(w=( \varvec{z} ^{nad}- \varvec{z} ^{ideal})^{-1}\) where \( \varvec{z} ^{ideal}\) and \( \varvec{z} ^{nad}\) denote vectors containing the best and the worst values for the objectives in the Pareto front, respectively. Note that the weights used in the weighted Tchebycheff scalarizing function (2) are used as a preference information to get different Pareto optimal solutions as opposed to the weights in ACH (3) that are used to normalize the scales of the objective function values. In the case of ParEGO, the objective functions are normalized based on the set of evaluated solutions and, therefore, weights \(w_i=1, i=1,\dots ,k\) are used. For the augmentation term, we here set \(\rho =0.05\) as has been done in original ParEGO. The solution of problem (3) is Pareto optimal [15] and different Pareto optimal solutions can be obtained by varying the reference point \( \varvec{\bar{z}} \in \mathbb {R}^{k} \). Thus, the reference point \( \varvec{\bar{z}} \) is the way to include preference information to ACH and, in our approach, to ParEGO. These reference points will be sampled from the hyperbox H determined based on the decision maker preferences in analogy of sampling the weights for the augmented weighted Tchebycheff function in the original ParEGO.

The basic idea here is that decision maker fixes the preferred ranges for the objectives resulting into a hyperbox H in the objective space. Then, ParEGO with ACH is run by using reference points sampled within H. Note that it is not needed to sample reference points from the whole H since it is known that all the reference points along the direction specified by the weight vector \( \varvec{w} \) give the same Pareto optimal solution. Therefore, it is enough to sample reference points in H such that they lie in the plane orthogonal to \( \varvec{w} \).

To demonstrate how the modified ParEGO can handle a priori preferences and how our basic building block for the interactive ParEGO works, Figs. 2 and 3 show two example runs for the three-objective DTLZ2 problem used in [12], which has the following formulation:

where \(\alpha =1\). Figure 2 illustrates a case where ParEGO was run for 100 real function evaluations by using the preferred ranges \(0.4\le f_1\le 0.5\), \(0.5\le f_2\le 0.6\), and \(0.4\le f_3\le 0.5\). Parallel coordinate plot of the non-dominated points obtained after the initial sampling together with the preferred ranges are shown in Fig. 2 by using blue and red color (together with symbol X), respectively. Similarly, Fig. 3 shows a case where the preferred ranges \(0.1\le f_1\le 0.3\), \(0.7\le f_2\le 0.9\), and \(0.0\le f_3\le 0.2\) were used. In both the figures, one can observe that the obtained solutions follow the given preferences quite well, although not perfectly since the given ranges are not feasible.

3.2 Interactive ParEGO

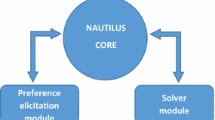

In this paper, we are not satisfied with fixed preferences since our overall goal is to develop an interactive version of ParEGO where the decision maker is able to change her mind if needed to better steer the solution process towards preferred solutions. Therefore, we will use progressive preference articulation that is used in interactive multiobjective optimization approaches [15, 17, 18]. Algorithm 1 shows the steps of the interactive ParEGO algorithm which has three input parameters. The first is the budget of function evaluations \(FE_{max}\) available for the decision making process. The second parameter fixes the frequency of interaction, that is, how many iterations to run ParEGO with given preferences before the next interaction. The last parameter determines how many solutions the decision maker wants to see at each interaction. The output of the algorithm will be the solution most preferred by the DM.

After initialization step 1, \(11n-1\) points are sampled in the decision space by using latin hypercube sampling in the same way than in the original ParEGO algorithm in step 2. The resulting points are evaluated by using the real functions and added to an archive A that will contain all the points evaluated with the real functions. In step 3, non-dominated solutions of A are determined and shown to the decision maker. If there are more than \(N_S\) non-dominated solutions in A, the number will be reduced to \(N_S\). This can be done e.g. by using clustering. Otherwise, all the non-dominated solutions are shown to decision maker. The solutions can be visualized to the decision maker with parallel coordinate plots or some other suitable visualization technique depending on the number of objectives [16]. Step 4 is the termination step of the algorithm and it involves two different criteria: stopping if decision maker so desires or if the budget of function evaluations is about to be exceeded. Thus in order to have any interactions, the budget for function evaluations \(FE_{max}\) should be more than \(11n-1 + F_{IA}\). If the decision maker wants to continue and there is budget for function evaluations remaining, then the decision maker is asked to provide preferred ranges for all the objective functions in step 5. The given ranges are then treated as preference information in a way described in Sect. 3.1. Next in step 6, \(F_{IA}\) iterations of ParEGO are run with the given preference information resulting into the same amount of new points evaluated with the real functions. Then in step 7, those points are added to the archive A, the function evaluations used and the number of interactions are updated, and the algorithm continues from step 3.

4 Numerical Example

To illustrate the performance and potential of the interactive ParEGO algorithm, we here describe an interactive solution process for the four-objective optimization problem related to water management. We chose this problem because the objective functions in that have some real meaning unlike the objectives in the synthetic test problems often used to evaluate the performance of multiobjective optimization methods. In this way, the interactive solution process becomes more meaningful and easier to follow. In addition, although the example problem is not computationally expensive, it enables faster testing and the limited budget of available function evaluations reflects the challenge with computationally expensive problems. Before describing the water management problem along with the interactive solution process, we illustrate how the interactive ParEGO reacts to changing preferences through the three-objective DTLZ4 problem.

4.1 DTLZ4

Here, we consider the three-objective DTLZ4 problem which is a modification of the DTLZ2 problem described in Eq. (4) by using \(\alpha =100\). The Pareto front for both DTLZ2 and DTLZ4 is one eighth of a sphere of radius 1, centred on (0, 0, 0) but using \(\alpha =100\) severely biases the density distribution of solutions. To demonstrate the effect of changing preferences, four different preferred ranges (shown in Table 1) were consequently used after initialization.

Parameter values used were \(FE_{max} = 200\) and \(F_{IA} = 30\). The resulting non-dominated solutions of a single run are shown as a scatter plot in Fig. 4 where different colors/symbols denote solutions obtained after different interactions, i.e., with different ranges. As can be seen, the solutions obtained follow the ranges given despite the limited number of function evaluations used.

4.2 Water Management Problem

The problem deals with water management in the (hypothetical) Fast Water Valley on a stretch of 100 river miles presented in [20]. There is a Fresh Fishery situated near the head of the valley causing pollution to the river. The city of Fortuna having population of 300 000 produces municipal waste pollution and is located 50 miles downstream from the fishery. The measure for water quality is expressed in terms of dissolved oxygen (DO) concentration and both the pollutants are described in pounds of biochemical oxygen demanding material (BOD). There exist primary treatment facilities that reduce the BOD in the gross discharge of the fishery and the city by 30 percent. Additional treatment facilities would increase the tax rate in Fortuna and decrease the return on investment (ROI) from the Fresh Fishery. The decision maker is interested in four objective functions: \(f_1=\) water quality in the fishery, \(f_2=\) water quality in the city, \(f_3=\) ROI from the fishery, and \(f_4=\) addition to the city tax rate resulting in to the following optimization problem

The units for \(f_1\) and \(f_2\) are \([mg/L\text { of DO}]\) while the unit for \(f_3\) is \([\%]\). The unit for the addition to the city tax rate \(f_4\) is \([1/1000\text { of }\$]\), i.e., \(f_4=\delta \) results in the tax rate of about \(\$\delta \) per \(\$1000\) assessed value at Fortuna. The decision variables \(x_1\) and \(x_2\) describe the proportionate amount of BOD removed from water discharge at the fishery and at the city, respectively.

4.3 Interactive Solution Process

Parameter values used in this study were \(FE_{max} = 100\), \(F_{IA} = 20\), and \(N_S = 5\). These values were chosen just to illustrate the performance and a study on their effect in the performance of the method is left as a topic for future study. In addition, k-means clustering with squared Euclidean distance was used to reduce the number of produced solutions if it was more than \(N_S\) and, from each \(N_S\) cluster identified, the solution closest to cluster centroid was chosen. An alternative for k-means would be to use multiobjective clustering techniques where the number of clusters is not fixed but determined by the clustering algorithm [8]. Exploring this option is left for future research.

An important aspect related to interactive multiobjective optimization methods is the graphical user interface that implements the interaction between the decision maker and the method. In this example, we have used the parallel coordinate plot tool developed in Prof. Patrick Reed’s group in Cornell [1] to visualize the non-dominated solutions to the decision maker and for her/him to analyze them in order to adjust the preferred ranges if necessary. It is important to note that the figures of parallel coordinate plots used to illustrate the solutions in this paper do not really provide the same possibilities to analyze them as the tool itself. In other words, by using the tool the decision maker can interact with the visualizations and get a better understanding by e.g. filtering the solutions or changing the positions of different objectives to have better understanding of the trade-offs. Developing a more enhanced user interface is one of the future research topics.

To start with, latin hypercube sampling for this problem corresponded to 21 points in the two dimensional decision space and a set of five representative non-dominated solutions among those is shown in Fig. 5. From the figure, the decision maker could also observe the initial ranges for the objectives that help in determining preferred ranges.

Our aim here was to show how changes in preferences during the solution process can be taken into account with the interactive ParEGO and we illustrate this by considering preferences from different stakeholders’ points of view. We start from a citizen’s perspective who is living in the city of Fortuna. The first goal is to try to maximize the water quality in the city and, thus, the preferred ranges \(5.5\le f_1\le 6.2\), \(3.3\le f_2\le 4.0\), \(4.0\le f_3\le 6.0\), and \(3.0\le f_4\le 5.0\) were used.

The resulting non-dominated solutions after clustering are shown in Fig. 6 which also shows the preferred ranges from the first interaction (in red and with symbol X). One can observe that it was not possible to improve water quality in the city for more than around 3.45 which resulted in the city tax rate increase of over 9. This was not found satisfactory and, therefore, the aim was to find satisfactory water quality levels in the city without too large tax rate increase. To continue with, the decision maker gave new ranges \(5.5\le f_1\le 6.2\), \(3.0\le f_2\le 3.5\), \(2.0\le f_3\le 6.0\), and \(5.0\le f_4\le 7.0\).

The set of five non-dominated solutions together with the preferred ranges are shown in Fig. 7. Of the solutions shown to the decision maker, there were two within the preferred ranges for the city tax increase: (5.00, 3.32, 7.44, 5.70) and (6.09, 3.34, 5.82, 5.70). Both the solutions have similar values for \(f_2\) and \(f_4\) while the values differed for the other objectives. Thus, with these levels for the water quality in the city and the city tax increase, there exists a clear trade-off between the water quality in the fishery and the fishery ROI. For the next interaction, we looked at the problem from the fishery shareholder perspective and the aim was to maximize the ROI from the fishery and see what happens to the other objectives. Thus, the new ranges given were \(4.0\le f_1\le 6.5\), \(2.5\le f_2\le 3.5\), \(6.0\le f_3\le 8.0\), and \(0.0\le f_4\le 10.0\).

The resulting solutions are shown in Fig. 8. One can observe, that in the solution with the best ROI, (5.34, 2.86, 7.30, 0.00), the tax rate in the city does not increase since no additional water treatment is used. On the other hand, the water quality in both the city and the fishery is degraded. Thus, in order to keep the ROI from the fishery at a relatively high level and, simultaneously, not to compromise the water quality too much, the city tax rate should be increased.

Finally, we looked at the problem from perspective of the mayor of Fortuna. From her point of view, all the objectives are important since the quality of the water in the city directly affects to the living conditions, the fishery brings jobs and food to people in the city, and the tax rate should be kept at a relatively low level in order to keep the citizens happy. Therefore, she aims at a balanced solution between all the objectives and the preferred ranges \(4.0\le f_1\le 6.5\), \(2.5\le f_2\le 3.5\), \(6.0\le f_3\le 8.0\), and \(0.0\le f_4\le 10.0\) were taken around the middle of the ranges of each objective obtained among all solutions evaluated so far.

The resulting set of five representative non-dominated solutions along with the preferred ranges are shown in Fig. 9. The first observation is that there is not a single solution that satisfies all the preferred ranges and it might be the case that such a solution does not exist. The closest one has the values (5.95, 3.27, 6.45, 4.11) which seems to balance all the objectives quite nicely although it has quite high ROI from the fishery. However, if one looks at all the non-dominated solutions found during the solution process shown in Fig. 10, there are not many solutions having ROI from the fishery below 5.0. At this point, the interactive solution process ends.

5 Conclusions

In this paper, an interactive ParEGO algorithm utilizing decision maker preferences in an iterative manner is proposed. As opposed to the original ParEGO algorithm, the aim is not to approximate the whole Pareto front but to help the decision maker to find her most preferred solution. The key modifications to the existing ParEGO algorithm are (1) incorporating decision maker preferences to focus the search, (2) interacting with the decision maker by showing her/him intermediate non-dominated solutions and asking for preferred ranges for the objective functions, and (3) replacing the Tchebycheff scalarization with the achievement scalarizing function enabling the usage of reference points inside the algorithm. The potential of the proposed algorithm was demonstrated through the four objective optimization problem in water management and its ability to consider preference information that changes during the solution process seems promising. The topics for future research include a study on the influence of the three parameters of the algorithm, that is, the budget for real function evaluations, the frequency of interaction, and the number of solutions shown to the decision maker, building a more enhanced graphical user interface for implementing the interaction, using multiobjective clustering to reduce the number of solutions shown to the decision maker, and finally, applying the method to computationally expensive real-world problems.

References

Web-based parallel coordinate plot. https://reed.cee.cornell.edu/parallel-axis-categories/parallel/. Accessed 14 Oct 2016

Branke, J., Deb, K., Miettinen, K., Słowiński, R. (eds.): Multiobjective Optimization: Interactive and Evolutionary Approaches. LNCS, vol. 5252. Springer, Heidelberg (2008)

Chugh, T., Jin, Y., Miettinen, K., Hakanen, J., Sindhya, K.: A surrogate-assisted reference vector guided evolutionary algorithm for computationally expensive many-objective optimization. IEEE Trans. Evol. Comput. (2017, to appear)

Coello, C.: Handling preferences in evolutionary multiobjective optimization: a survey. In: 2000 IEEE Congress on Evolutionary Computation (CEC), pp. 30–37. IEEE (2000)

Cristescu, C., Knowles, J.: Surrogate-based multiobjective optimization: ParEGO update and test. Workshop on Computational Intelligence (UKCI) (2015)

Eskelinen, P., Miettinen, K., Klamroth, K., Hakanen, J.: Pareto navigator for interactive nonlinear multiobjective optimization. OR Spectr. 32, 211–227 (2010)

Haanpää, T.: Approximation method for computationally expensive nonconvex multiobjective optimization problems. Jyväskylä Studies in Computing 157, PhD thesis, University of Jyväskylä, Jyväskylä (2012). http://urn.fi/URN:ISBN:978-951-39-4968-6

Handl, J., Knowles, J.: An evolutionary approach to multiobjective clustering. IEEE Trans. Evol. Comput. 11(1), 56–76 (2007)

Hartikainen, M., Miettinen, K., Wiecek, M.M.: PAINT: Pareto front interpolation for nonlinear multiobjective optimization. Comput. Optim. Appl. 52(3), 845–867 (2012)

Jin, Y.: Surrogate-assisted evolutionary computation: recent advances and future challenges. Swarm Evol. Comput. 1(2), 61–70 (2011)

Jones, D.R., Schonlau, M., Welch, W.J.: Efficient global optimization of expensive black-box functions. J. Global Optim. 13(4), 455–492 (1998)

Knowles, J.: ParEGO: a hybrid algorithm with on-line landscape approximation for expensive multiobjective optimization problems. IEEE Trans. Evol. Comput. 10(1), 50–66 (2005)

Knowles, J., Nakayama, H.: Meta-Modeling in Multiobjective Optimization. In: Branke, J., Deb, K., Miettinen, K., Słowiński, R. (eds.) Multiobjective Optimization. LNCS, vol. 5252, pp. 245–284. Springer, Heidelberg (2008). doi:10.1007/978-3-540-88908-3_10

Larichev, O.: Cognitive validity in design of decision aiding techniques. J. Multi-Criteria Decis. Anal. 1(3), 127–138 (1992)

Miettinen, K.: Nonlinear Multiobjective Optimization. Kluwer Academic Publishers, Boston (1999)

Miettinen, K.: Survey of methods to visualize alternatives in multiple criteria decision making problems. OR Spectr. 36(1), 3–37 (2014)

Miettinen, K., Hakanen, J., Podkopaev, D.: Interactive nonlinear multiobjective optimization methods. In: Greco, S., Ehrgott, M., Figueira, J. (eds.) Multiple Criteria Decision Analysis: State of the Art Surveys. International Series in Operations Research & Management Science, vol. 233, 2nd edn, pp. 927–976. Springer, New York (2016). doi:10.1007/978-1-4939-3094-4_22

Miettinen, K., Ruiz, F., Wierzbicki, A.P.: Introduction to multiobjective optimization: interactive approaches. In: Branke, J., Deb, K., Miettinen, K., Słowiński, R. (eds.) Multiobjective Optimization. LNCS, vol. 5252, pp. 27–57. Springer, Heidelberg (2008). doi:10.1007/978-3-540-88908-3_2

Monz, M., Kufer, K.H., Bortfeld, T.R., Thieke, C.: Pareto navigation - algorithmic foundation of interactive multi-criteria IMRT planning. Phys. Med. Biol. 53(4), 985–998 (2008)

Narula, S.C., Weistroffer, H.R.: A flexible method for nonlinear multicriteria decision-making problems. IEEE Trans. Syst. Man Cybern. 19(4), 883–887 (1989)

Purshouse, R., Deb, K., Mansor, M., Mostaghim, S., Wang, R.: A review of hybrid evolutionary multiple criteria decision making methods. In: 2014 IEEE Congress on Evolutionary Computation (CEC), pp. 1147–1154. IEEE (2014)

Sindhya, K., Ojalehto, V., Savolainen, J.: Niemistö, H., Hakanen, J., Miettinen, K.: Coupling dynamic simulation and interactive multiobjective optimization for complex problems: an APROS-NIMBUS case study. Expert Syst. Appl. 41, 2546–2558 (2014)

Steuer, R.E., Choo, E.U.: An interactive weighted Tchebycheff procedure for multiple objective programming. Math. Program. 26(3), 326–344 (1983)

Tabatabaei, M., Hakanen, J., Hartikainen, M., Miettinen, K., Sindhya, K.: A survey on handling computationally expensive multiobjective optimization problems using surrogates: non-nature inspired methods. Struct. Multi. Optim. 52(1), 1–25 (2015)

Wierzbicki, A.P.: Reference point approaches. In: Gal, T., Stewart, T.J., Hanne, T. (eds.) Multicriteria Decision Making: Advances in MCDM Models, Algorithms, Theory, and Applications, pp. 9-1–9-39. Kluwer Academic Publishers, Boston (1999)

Zhou, A., Qu, B.Y., Li, H., Zhao, S.Z., Suganthan, P.N., Zhang, Q.: Multiobjective evolutionary algorithms: a survey of the state of the art. Swarm Evol. Comput. 1(1), 32–49 (2011)

Acknowledgment

This work was supported by the FiDiPro project DeCoMo funded by TEKES, The Finnish Funding Agency for Innovation. The authors want to thank Mr. Tinkle Chugh, Prof. Yaochu Jin, Prof. Kaisa Miettinen, and Dr. Karthik Sindhya for discussions related to the ideas presented in the paper. In addition, the authors would like to acknowledge Prof. Patrick Reed’s group Decision Analytics for Complex Systems (Cornell University) related to their parallel coordinate plotting tool.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Hakanen, J., Knowles, J.D. (2017). On Using Decision Maker Preferences with ParEGO. In: Trautmann, H., et al. Evolutionary Multi-Criterion Optimization. EMO 2017. Lecture Notes in Computer Science(), vol 10173. Springer, Cham. https://doi.org/10.1007/978-3-319-54157-0_20

Download citation

DOI: https://doi.org/10.1007/978-3-319-54157-0_20

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-54156-3

Online ISBN: 978-3-319-54157-0

eBook Packages: Computer ScienceComputer Science (R0)