Abstract

Much of the world’s population health, public health and clinical information is based on self-reported data. However, significant and meaningful bias exists across a broad range of health indicators when self-reported data are compared to direct measures. This bias can lead to over- and underestimation of risk factor and disease prevalence and burden. Understanding the implications of such bias for health surveillance, research, clinical practice and policy development may provoke adjustments to current epidemiological practice and may assist in understanding and improving the health of populations.

14.1 Introduction

Measuring the state of health within a population is crucial for health surveillance, research, clinical practice and policy development. It provides a current picture of a population’s status, allows for monitoring changes over time and indicates inequities between population sub-groups and among countries. Adequate measurement strategies are essential to ensure that evidence upon which resources will be allocated and interventions designed is reliable and valid.

Occasionally, epidemiologists who seek to relate physical activity and health may have access to relatively accurate data, such as clinical measurements of height, weight, and systemic blood pressure. But much of our health information is based on subjective or self-reported measures of health, because most population data come from surveys that rely on participants' reports of their health status and disease experience. Self-reports are often used because of their practicality, low cost, low participant burden, and general acceptance in the population [1]. Increasingly, however, the accuracy of self-reported data has been called into question, and there has been a push to include more objective measures in our health information system [2]. This trend is facilitated by technological advances that make direct measurement more feasible. This brief analysis examines the bias in self-reported information across a range of population, public health and clinical conditions. Using obesity as an example, it then discusses the implications of this bias for Canadian policy and practice.

14.2 Self-Report vs. Direct Measures Bias

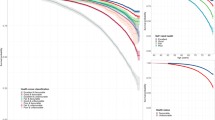

A recent series of systematic reviews has highlighted the bias in self-reported measurements for a variety of health conditions and determinants in both children and adults. Reviews have examined the relationship between reported and measured height, body mass, and body mass index (BMI) (64 studies) [3], smoking (67 studies) [4], hypertension (144 studies) [5] and physical activity in adults (173 studies) [6] and in children (83 studies) [7]. These reviews consistently showed that reported data under- or overestimated measured values (Table 14.1). For example, self-reported height was consistently overestimated, while body mass and BMI were consistently underestimated in adults. This led to an underestimation of obesity prevalence [3]. Smoking [4] and hypertension [5] prevalence were also underestimated when data were based on individuals' self-reports. Furthermore, the hypertension studies together included data on more than 1 million people. When hypertension was defined by the standard clinical threshold of 140/90 mmHg, just over half of the hypertensive respondents in these studies were aware of their hypertensive status [5].
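BMI is body mass in kilograms divided by the square of height in metres. Because of that formula, overestimated height and underestimated body mass both push BMI downward. The minimal sketch below uses hypothetical values for one adult, not data from the reviews cited above. It applies the conventional adult obesity cut-point of BMI ≥ 30 kg/m². The helper names `bmi` and `is_obese` are illustrative only.

```python
def bmi(mass_kg: float, height_m: float) -> float:
    """Body mass index in kg/m^2: mass divided by height squared."""
    return mass_kg / height_m ** 2


def is_obese(bmi_value: float, cutoff: float = 30.0) -> bool:
    """Adult obesity classification using the conventional BMI >= 30 cut-point."""
    return bmi_value >= cutoff


# Hypothetical respondent: values are illustrative, not study data.
measured = {"mass_kg": 95.0, "height_m": 1.75}
reported = {"mass_kg": 91.0, "height_m": 1.78}  # height over-, mass under-reported

measured_bmi = bmi(**measured)
reported_bmi = bmi(**reported)

print(f"measured BMI {measured_bmi:.1f}, obese: {is_obese(measured_bmi)}")
print(f"reported BMI {reported_bmi:.1f}, obese: {is_obese(reported_bmi)}")
```

In this example, modest errors in the two self-reported inputs move the respondent from the obese category (about 31.0) to the non-obese category (about 28.7). Aggregated over a survey, this is how the prevalence underestimation arises.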

Low to moderate correlations were found between direct measures of physical activity (e.g. accelerometers, doubly labelled water) and self-reports (e.g. surveys, questionnaires, diaries) [6, 7]. In pediatric populations (under 19 years of age), self-reported measures overestimated children's activity levels, implying that children and youth were much less active than they believed (overall mean percent difference of 147 %) [7]. In adults, both under- and over-reporting were present. The direction and size of the error varied with the sex of the participants and with the level of physical activity measured, and discrepancies were greater at higher levels of exertion or with more vigorous exercise [6].
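The "mean percent difference" summarizes how far self-reports sit from the direct measure. The minimal sketch below assumes the following method: each participant's percent difference is computed as (self-report − direct) / direct × 100, and these values are then averaged. The exact formula used in the pediatric review [7] may differ. The function names and the minutes of activity are hypothetical.

```python
from statistics import mean


def percent_difference(self_report: float, direct: float) -> float:
    """Signed percent difference of a self-report relative to a direct measure.

    Positive values mean the self-report overestimates the direct measure.
    (Assumed definition for illustration.)
    """
    if direct == 0:
        raise ValueError("direct measure must be non-zero")
    return (self_report - direct) / direct * 100.0


def mean_percent_difference(pairs: list[tuple[float, float]]) -> float:
    """Average the per-participant percent differences."""
    return mean(percent_difference(s, d) for s, d in pairs)


# Hypothetical (self-reported, accelerometer-measured) minutes of
# moderate-to-vigorous activity per day; illustrative only.
pairs = [(90.0, 40.0), (60.0, 30.0), (45.0, 25.0)]
print(f"{mean_percent_difference(pairs):.1f} %")
```

Under this assumed definition, a mean percent difference of 147 % would mean the ratio of self-report to direct measure averaged about 2.5.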

Katzmarzyk and Tremblay [8] discussed an apparent contradiction in Canadian health surveillance data. These data indicated a temporal decrease in physical inactivity and in food intake, yet an increase in obesity and in obesity- and inactivity-related chronic disease. The authors concluded that inherent shortcomings of self-report data and inconsistencies in data analyses likely contribute to these contradictory findings, and they recommended the use of direct measures. Recent reports on the fitness of the nation from the Canadian Health Measures Survey (CHMS) [9, 10] strongly suggest that the physical inactivity trend data are misleading and likely incorrect.

Other recent Canadian data have confirmed the bias between reported and measured health conditions such as obesity. For instance, Shields and colleagues [11] found that the prevalence of obesity based on measured data was 7.4 percentage points higher than the estimate based on self-reported data (22.6 % versus 15.2 %). They also found that the extent of under-reporting rises as BMI increases, so the greatest bias was seen in individuals who were overweight or obese [11].

14.3 Implications for Health Surveillance

Underestimating disease prevalence is one consequence of the reporting bias discussed above. The misclassification that results from using reported data can also distort our understanding of the burden associated with specific health conditions. Obesity again serves as an example. A study using data from the 2005 Canadian Community Health Survey examined adults aged 40 years and older. Among those classified as obese on the basis of self-reported data, 360,000 were also classified as having diabetes. If, however, measured data were used to classify respondents as obese, then 530,000 obese adults (nearly 50 % more) had diabetes [12]. With self-reported data, therefore, the burden of disease due to obesity is substantially underestimated.
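The pair of counts from [12] can be compared in two directions, and these should be kept distinct. Relative to the self-report estimate, the measured estimate is about 47 % larger. Relative to the measured estimate, the self-report estimate falls short by about 32 %. The short sketch below does this arithmetic. The helper name `relative_excess` is illustrative.

```python
def relative_excess(reference: float, other: float) -> float:
    """How much larger `other` is than `reference`, as a fraction of `reference`."""
    return (other - reference) / reference


# Obese adults aged 40+ who also had diabetes, 2005 CCHS, from [12].
with_self_report = 360_000
with_measured = 530_000

excess = relative_excess(with_self_report, with_measured)     # measured vs. reported
shortfall = relative_excess(with_measured, with_self_report)  # reported vs. measured

print(f"measured estimate is {excess:.0%} higher than the self-report estimate")
print(f"self-report estimate is {-shortfall:.0%} lower than the measured estimate")
```

Quoting the 47 % figure as an "underestimate" would overstate the shortfall. Measured against the measured count, self-report misses roughly one-third of cases.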

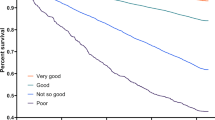

Research has also shown that when estimates of obesity are based on self-reported data, the relationship between obesity and obesity-related health conditions is substantially exaggerated [12–14]. Such conditions include diabetes, hypercholesterolemia, hypertension, arthritis and heart disease. One study [12] examined the associations of measured overweight, obesity class I and obesity class II or III with diabetes. The odds ratios were 1.4, 2.2, and 7.0, respectively. When reported BMI was used to classify respondents into the same categories, the odds ratios increased to 2.6, 3.2, and 11.8. This distortion occurs because fewer respondents are classified as overweight or obese when the classification is based on reported data, since many people are placed in a lower weight category than their measured values warrant. Those who are nonetheless classified as overweight or obese from self-reported data are, on average, heavier than those whose measured data place them in these categories. As a result, a stronger association with morbidity is observed when the overweight and obese categories are based on self-reported data, because the respondents in these categories are actually heavier (Fig. 14.1).
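The mechanism can be reproduced with a toy example. For illustration only, the sketch below assumes a reporting rule in which reported BMI understates measured BMI by 0.1 kg/m² for every unit above 20. This rule is hypothetical; it was chosen only so that under-reporting rises with BMI, as described in [11]. The measured BMI values are also invented. The example shows that the self-report "obese" group is smaller than the measured "obese" group and, on average, heavier.

```python
from statistics import mean

OBESE_CUTOFF = 30.0


def reported_bmi(measured: float) -> float:
    """Hypothetical reporting rule: under-reporting grows with measured BMI."""
    bias = max(0.0, 0.1 * (measured - 20.0))
    return measured - bias


# Invented measured BMIs for eight respondents (kg/m^2).
measured_bmis = [22.0, 26.0, 29.0, 31.0, 33.0, 35.0, 38.0, 42.0]

obese_by_measure = [b for b in measured_bmis if b >= OBESE_CUTOFF]
obese_by_report = [b for b in measured_bmis if reported_bmi(b) >= OBESE_CUTOFF]

print("prevalence (measured):", len(obese_by_measure) / len(measured_bmis))
print("prevalence (reported):", len(obese_by_report) / len(measured_bmis))
# Mean *measured* BMI of each group: the self-report group is heavier.
print("mean measured BMI, measured group:", mean(obese_by_measure))
print("mean measured BMI, reported group:", mean(obese_by_report))
```

In this example, the respondent with a measured BMI of 31 reports about 29.9 and drops out of the self-report obese group. The remaining group is concentrated at higher true BMI, so any morbidity that rises with BMI will appear more strongly associated with it.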

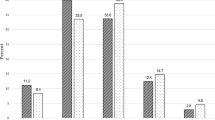

Researchers have attempted to correct self-reported data statistically to determine whether the reported numbers could be adjusted to approximate the measured values more closely [15–18] (Fig. 14.2). This was accomplished in a Canadian study in which the reported prevalence of excess body mass was corrected sufficiently that the prevalence of overweight and obesity was no longer statistically different from the corresponding measured estimates [18]. The correction also improved sensitivity, defined here as the proportion of respondents obese according to measured data who were also classified as obese. In males, sensitivity increased from 59 % using reported data to 74 % using corrected data. In females, it increased from 69 % to 86 %.
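Sensitivity, as used above, treats the measured classification as the reference standard. The minimal sketch below computes it from paired classifications. The respondents are hypothetical and are not data from [18], and the function name `sensitivity` is illustrative.

```python
def sensitivity(reference: list[bool], test: list[bool]) -> float:
    """Share of reference-positive cases that the test also flags as positive.

    `reference` is the measured classification (True = obese);
    `test` is the classification from reported or corrected data.
    """
    if len(reference) != len(test):
        raise ValueError("classifications must be paired")
    positives = [t for r, t in zip(reference, test) if r]
    if not positives:
        raise ValueError("no reference-positive cases")
    return sum(positives) / len(positives)


# Hypothetical respondents: obese by measurement vs. by self-report.
measured_obese = [True, True, True, True, False, False, True, False]
reported_obese = [True, False, True, False, False, False, True, False]

print(f"sensitivity: {sensitivity(measured_obese, reported_obese):.0%}")
```

Here three of the five respondents who are obese by measurement are also obese by self-report, giving a sensitivity of 60 %. A successful correction raises this share by moving more of the truly obese above the cut-point.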

The generalizability of these correction equations, however, is questionable. The reporting bias in Canada, for example, has varied over time, doubling in the last decade [19]. If the bias were constant, or at least changed systematically over time as it has in the United States, a standardized statistical adjustment would be more likely to succeed. Therefore, the most effective way to deal with reporting bias may not be post-collection data correction. A better approach may be to increase epidemiologists' capacity to collect directly measured data.

14.4 Implications for Research

Though self-report methods of assessing health indicators are convenient for research purposes, they must be employed with caution, especially when they concern socially desirable behaviours. Due consideration should also be given to the possibility that the results lack reliability and validity. Accordingly, future research should:

- further compare self-report and direct measures across different variables and in different populations;
- where possible, use directly measured data to reassess behaviour–health relationships that have previously been examined using self-report data;
- work to advance direct measurement methods to reduce their cost, respondent burden and reactivity; and
- if subjective measures are used, ensure that a subset of research respondents is assessed by both self-report and direct measurement, allowing study-specific correction factors to be developed and used.
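The last recommendation can be sketched as a simple calibration step. In the subset measured both ways, regress measured BMI on reported BMI. Then apply the fitted line to respondents who only self-reported. Using ordinary least squares with a single predictor is an assumption made here for brevity. Published correction equations [15–18] may use other predictors, such as sex and age, and other functional forms. All values and names below are hypothetical.

```python
from statistics import mean


def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


# Hypothetical calibration subsample: same respondents, both methods (kg/m^2).
reported_sub = [22.0, 25.0, 28.0, 31.0, 34.0]
measured_sub = [22.5, 26.0, 29.5, 33.0, 36.5]

intercept, slope = fit_line(reported_sub, measured_sub)


def correct(reported: float) -> float:
    """Study-specific corrected BMI for a respondent with self-report only."""
    return intercept + slope * reported


print(f"corrected BMI for a reported 29.0: {correct(29.0):.2f}")
```

In this example, a respondent who reports a BMI of 29.0 is corrected to about 30.67 and so moves into the obese category. The fitted line reflects the reporting behaviour of this particular calibration sample, which is why the generalizability concern raised in Sect. 14.3 applies.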

14.5 Implications for Clinical Practice

Standard clinical practice regularly uses a variety of biomarkers to inform diagnoses and monitor treatment progress. These measurements are generally collected using carefully validated procedures and analytical techniques that are both accurate and precise. Such quality assurance is not mirrored when behavioural information is collected. The systematic reviews summarized above [3–7] give clear cause for concern when self-reported data are relied on to assess health-related behaviours and their outcomes. Consequently, indicators related to common chronic diseases should be tracked with direct measurements (e.g. height, body mass, systemic blood pressure). Pedometers or accelerometers can be used to measure daily activity objectively. Because there is as yet no equivalent direct measurement procedure for diet, developing an appropriate technique should be a high priority for future research. Assessing behaviour modification treatments on the basis of self-report or subjective data may yield misleading findings and suboptimal clinical care.

14.6 Implications for Policy

Research based on data with measurement biases such as those described above may not contribute meaningfully to the health research literature. Indeed, it may use finite research resources ineffectively, misinform policy directions and even cause harm. These side-effects of poor measurement can occur at the level of the individual patient or respondent (misinforming, misdiagnosing, misadvising). They can also occur at the population level (misinforming policy, expenditure allocations and burden-of-disease planning). For example, when obesity status was determined from self-reported data, the number of obese Canadian adults aged 40 and older with diabetes was underestimated by about one-third (360,000 rather than 530,000). Put the other way, the measured figure is nearly 50 % higher [12].

14.7 Limitations of Direct Measurements

Direct measures are not without their limitations. Physical activity is a case in point. Relatively low-cost objective monitoring devices have replaced questionnaires, and this has made it practical for epidemiologists to estimate the intensity and total volume of weekly energy expenditure. Step counting has become progressively more sophisticated, with the ability to classify the intensity of impulses and accumulate activity data over long periods. Pedometers and accelerometers yield precise data for standard laboratory exercise. In groups where steady, moderately paced walking is the main form of energy expenditure, they can provide very useful epidemiological data. Nevertheless, such instruments remain vulnerable to external vibration. They also fail to reflect adequately the energy expended in hill climbing and isometric activity, as well as in many of the everyday activities of children and younger adults. Multiphasic devices hold promise as a means of assessing atypical activities, but appropriate and universally applicable algorithms for such equipment have yet to be developed. Moreover, multiphasic equipment is at present too costly and complex for epidemiological use.

14.8 Conclusions

Much of the world's epidemiological research and evidence is based on self-reported data. Such data have systematic biases and limitations, and the reported values often deviate significantly and meaningfully from more robust direct (objective) measurements. This bias can lead to both over- and underestimation of risk factor and disease prevalence and burden. Understanding the implications of such bias for health surveillance, research, clinical practice and policy development may provoke adjustments to current epidemiological practice that can help us understand and improve the health of populations.

References

1. Singleton RA, Straits BC, Straits MM. Approaches to social research. 2nd ed. New York: Oxford University Press; 1993.

2. Tremblay MS. The need for directly measured health data in Canada. Can J Public Health. 2004;95:165–6.

3. Connor Gorber S, Tremblay M, Moher D, et al. A comparison of direct vs. self-report measures for assessing height, weight and body mass index: a systematic review. Obes Rev. 2007;8:307–26.

4. Connor Gorber S, Schofield-Hurwitz S, Hardt J, et al. The accuracy of self-reported smoking: a systematic review of the relationship between self-reported and objectively assessed smoking status. Nicotine Tob Res. 2009;11:12–24.

5. Connor Gorber S, Tremblay M, Campbell N, et al. The accuracy of self-reported hypertension: a systematic review and meta-analysis. Curr Hypertens Rev. 2008;4:36–62.

6. Prince SA, Adamo K, Hamel ME, et al. A comparison of direct versus self-report measures for assessing physical activity in adults: a systematic review. Int J Behav Nutr Phys Act. 2008;5:56.

7. Adamo KB, Prince SA, Tricco AC, et al. A comparison of indirect vs. direct measures for assessing physical activity in the pediatric population: a systematic review. Int J Pediatr Obes. 2009;4:2–27.

8. Katzmarzyk PT, Tremblay MS. Limitations of Canada's physical activity data: implications for monitoring trends. Appl Physiol Nutr Metab. 2007;32 Suppl 2:S185–94.

9. Shields M, Tremblay MS, Laviolette M, et al. Fitness of Canadian adults: results from the 2007–2009 Canadian Health Measures Survey. Health Rep. 2010;21(1):21–36.

10. Tremblay MS, Shields M, Laviolette M, et al. Fitness of Canadian children: results from the Canadian Health Measures Survey. Health Rep. 2010;21(1):7–20.

11. Shields M, Connor Gorber S, Tremblay MS. Estimates of obesity based on self-report versus direct measures. Health Rep. 2008;19(2):61–76.

12. Shields M, Connor Gorber S, Tremblay MS. Effects of measurement on obesity and morbidity. Health Rep. 2008;19(2):77–84.

13. Chiolero A, Peytremann-Bridevaux I, Paccaud F. Associations between obesity and health conditions may be overestimated if self-reported body mass index is used. Obes Rev. 2007;8:373–4.

14. Shields M, Connor Gorber S, Tremblay MS. Associations between obesity and morbidity: effects of measurement methods. Obes Rev. 2008;9:501–2.

15. Rowland ML. Self-reported weight and height. Am J Clin Nutr. 1990;52:1125–33.

16. Kuskowska-Wolk A, Bergstrom R, Bostrom G. Relationship between questionnaire data and medical records of height, weight and body mass index. Int J Obes. 1992;16:1–9.

17. Plankey MW, Stevens J, Flegal KM, et al. Prediction equations do not eliminate systematic error in self-reported body mass index. Obes Res. 1997;5:308–14.

18. Connor Gorber S, Shields M, Tremblay MS, et al. The feasibility of establishing correction factors to adjust self-reported estimates of obesity in the Canadian community health survey. Health Rep. 2008;19(3):71–82.

19. Connor Gorber S, Tremblay MS. The bias in self-reported obesity from 1976 to 2005, Canada–U.S. comparison. Obesity. 2010;18:354–61.

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Gorber, S.C., Tremblay, M.S. (2016). Self-Report and Direct Measures of Health: Bias and Implications. In: Shephard, R., Tudor-Locke, C. (eds) The Objective Monitoring of Physical Activity: Contributions of Accelerometry to Epidemiology, Exercise Science and Rehabilitation. Springer Series on Epidemiology and Public Health. Springer, Cham. https://doi.org/10.1007/978-3-319-29577-0_14

DOI: https://doi.org/10.1007/978-3-319-29577-0_14

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-29575-6

Online ISBN: 978-3-319-29577-0
