Abstract

We propose two expressive and complementary techniques for the verification of safety properties of infinite-state BIP models. Both our techniques deal with the full BIP specification, while the existing approaches impose considerable restrictions: they either verify finite-state systems or they do not handle the transfer of data on the interactions and priorities.

Firstly, we propose an instantiation of the ESST (Explicit Scheduler Symbolic Thread) framework to verify BIP models. The key insight is to apply symbolic reasoning to analyze the behavior of the system described by the BIP components, and an explicit-state search to analyze the behavior of the system induced by the BIP interactions and priorities. The combination of symbolic and explicit exploration techniques allow to benefit from abstraction, useful when reasoning about data, and from partial order reduction, useful to mitigate the state space explosion due to concurrency.

Secondly, we propose an encoding from a BIP model into a symbolic, infinite-state transition system. This technique allows us to leverage the state of the art verification algorithms for the analysis of infinite-state systems.

We implemented both techniques and we evaluated their performance against the existing approaches. The results show the effectiveness of our approaches with respect to the state of the art, and their complementarity for the analysis of safe and unsafe BIP models.

This work was carried out within the D-MILS project, which is partially funded under the European Commission’s Seventh Framework Programme (FP7).

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Partial Order Reduction

- Symbolic Transition Systems (STS)

- Scheduler State

- DFinder

- Lazy Predicate Abstraction

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

BIP [2, 4] is a framework for the component-based design of complex concurrent systems that is being actively used in many industrial settings [3, 5]. The verification of BIP plays a crucial role in the Rigorous System Design methodology [28], where a correct implementation of the system is obtained by a series of transformations from its high-level model; proving that a property holds in the model will ensure that it holds in the implementation.

Despite the importance of verifying BIP models, the existing approaches (e.g. implemented in tools like DFinder [7],VCS [20] and Bip2Uppaal [29]) impose considerable restrictions on the models that can be analyzed. In particular, only DFinder verifies models with infinite-state data variables. However, DFinder does not consider the data transfer on interactions, an essential feature to express that data is exchanged among the components (consider that in BIP the components cannot share variables), and the priorities among interactions.

In this paper, we focus on the safety property verification of infinite-state BIP models, and we propose two techniques that are: (i) expressive enough to capture all the features of infinite-state BIP models (e.g. data transfer, priorities); (ii) complementary, with respect to the performance, for verifying safe and unsafe models.

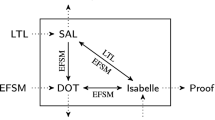

The first solution is a novel verification algorithm based on the Explicit Scheduler, Symbolic Threads (ESST) framework [16]. The ESST extends lazy predicate abstraction and refinement [21, 22] to verify concurrent programs formed by a set of cooperative threads and a non-preemptive scheduler; the main characteristic of the approach is to use lazy predicate abstraction to explore threads and explicit-state techniques to explore the scheduler. The choice of ESST is motivated by the clear separation of computations and coordination in the BIP language, which is similar to the separation of threads and scheduler in the ESST, and by the ESST efficiency, since the ESST outperforms the verification techniques based on sequentialization (e.g. in the context of SystemC and Fair Threads programs [16]). In our work, we show an efficient instantiation of the ESST framework in an algorithm that verifies \(\textsc {BIP}\) models (\(\textsc {ESST} _\textsc {BIP} \)). The instantiation is not trivial, and consists of defining a suitable interaction model between the threads and the scheduler, the consequent mapping of \(\textsc {BIP}\) components into threads, and of implementing the scheduler. Moreover, we improve the performance of our approach with several optimizations, which are justified by the \(\textsc {BIP}\) semantic.

In our second solution we explore a conceptually simple, but still novel, encoding of a \(\textsc {BIP}\) model into an infinite-state transition system. This alternative flow is motivated by the recent advancements in the verification of infinite-state systems (e.g. see [14, 23]). Also this technique supports all the \(\textsc {BIP}\) features, like priorities and data transfer on interactions.

We provide an implementation of both approaches: the \(\textsc {ESST} _\textsc {BIP} \) is implemented in the Kratos [13] software model checker; the translational approach is performed using the \(\textsc {BIP}\) framework and then verified with the nuXmv [12] model checker. We performed a thorough experimental evaluation comparing the performance of the two techniques and of DFinder (in this case, only on the models without data transfers). The results show that the proposed approaches always perform better than DFinder, and that \(\textsc {ESST} _\textsc {BIP} \) and the translational approach using nuXmv are complementary, with \(\textsc {ESST} _\textsc {BIP} \) being more efficient in finding counterexamples for unsafe models, while the translational approach using nuXmv is more efficient in proving correctness of safe models.

This paper is structured as follows. We first provide the background of the \(\textsc {BIP}\) language in Sect. 2. Then in Sect. 3 we describe the \(\textsc {ESST} _\textsc {BIP} \) algorithm, as well as its optimizations. In Sect. 4 we show the encoding of \(\textsc {BIP}\) into a symbolic transition system. Then, in Sect. 5 we review the related work and in Sect. 6 we present the experimental evaluation. Finally, in Sect. 7 we draw some conclusions and outline directions for future work.

2 The BIP Model

We denote by Var a set of variables with domain \(\mathbb {Z}\) Footnote 1, (i.e. for all \(x \in Var\), \(Dom(x)=\mathbb {Z}\)). An assignment is of the form \(x := exp\), where \(x\in Var\) and exp is a linear expression over Var. An assumption is of the form [bexp], where bexp is a Boolean combination of predicates over Var. Let \(BExp({Var})\) be the set of assumptions and \(Exp({Var})\) be the set of assignments. Let \(Ops(Var)=BExp({Var}) \cup Exp({Var}) \cup \{{ skip }\}\) be the set of edge operations, where \({ skip }\) denotes an operation without effects on Var. A state \(s: Var \rightarrow \mathbb {Z}\) is a mapping from variables to their valuations; we use \({ State}\) to denote the set of all possible states. We define an evaluation function \([\![\cdot ]\!]_{\mathcal {E}}: exp \rightarrow ({ State}\rightarrow \mathbb {Z})\) for assignments and \([\![\cdot ]\!]_{\mathcal {B}}: bexp \rightarrow ({ State}\rightarrow \{true,false\})\) for assumptions. We refer to [16] for the definition of \([\![\cdot ]\!]_{\mathcal {E}}\) and \([\![\cdot ]\!]_{\mathcal {B}}\). We denote by \(s[x:=e]\) the substitution of x by e in expression s.

The BIP Syntax. An atomic component is a tuple \(B_{i} = {\langle {Var_{i}, Q_{i}, P_{i}, E_{i}, l_{0_i}}\rangle }\) where \(Var_{i}\) is a set of variables, \(Q_{i}\) is a set of locations, \(P_{i}\) is a set of ports, \(E_{i} \subseteq Q_{i} \times P_{i} \times BExp({Var_{i}}) \times Exp({Var_{i}}) \times Q_{i}' \) is a set of edges extended with guards and operations and \(l_{0_i} \in Q_{i}\) is the initial location.

We assume that, for each location, every pair of outgoing edges labeled with the same port has disjoint guards. This can be achieved by simply renaming the ports and imposes no restrictions on the \(\textsc {BIP}\) expressiveness. We also identify a set of error locations, \(Q_{err_{i}} \subseteq Q_{i}\) to encode the safety propertyFootnote 2.

Let \(\mathcal {B} = \{B_{1}, \ldots , B_{n}\}\) be a set of atomic components. An interaction \(\gamma \) for \(\mathcal {B}\) is a tuple \({\langle {Act, g, op}\rangle }\) such that: \(Act\subseteq \bigcup _{i=1}^n P_{i}\), \(Act\ne \emptyset \), and for all \(i \in [1, n]\), \(|Act\cap P_{i}| \le 1\) , \(g \in BExp({\bigcup _{B{j}\in \gamma _{\mathcal {B}}} Var_{j}})\) and \(op \in Exp({\bigcup _{B{j}\in \gamma _{\mathcal {B}}} Var_{j}})\), where \(\gamma _{\mathcal {B}} = \{B{j} | B{j} \in \mathcal {B}, Act\cap P_{j} \ne \emptyset \}\).

We assume that the sets of ports of the components in a \(\textsc {BIP}\) model and the sets of local variables are disjoint (i.e. for all \(i\ne j\), \(P_{i} \cap P_{j} = \emptyset \) and \(Var_{i} \cap Var_{j} = \emptyset \)). For a port \(\alpha \in P_{i}\), we identify with \({id({\alpha })}\) the index i of the component \(B_{i}\).

Let \(\varGamma \) be a set of interactions, a priority model \(\pi \) of \(\varGamma \) is a strict partial order of \(\varGamma \). For \(\gamma _1, \gamma _2 \in \varGamma \), \(\gamma _1\) has a lower priority than \(\gamma _2\) if and only if \((\gamma _1, \gamma _2) \in \pi \). For simplicity, we write \(\gamma _1 < \gamma _2\) in this case.

A BIP model \(\mathcal {P}_{\textsc {BIP}}\) is a tuple \({\langle {\mathcal {B},\varGamma ,\pi }\rangle }\), where \(\mathcal {B} = {\langle {B_{1}, \ldots , B_{n}}\rangle }\) is a set of atomic components, \(\varGamma \) is a set of interactions over \(\mathcal {B}\) and \(\pi \) is a priority model for \(\varGamma \).

We assume that each component \(B_{i}\) of \(\mathcal {P}_{\textsc {BIP}}\) has at most an error location, without outgoing edges and such that the port of all its incoming edges is \(error_i\); each \(error_i\) appears in a unique singleton interaction and all such interactions have the highest priority in \(\mathcal {P}_{\textsc {BIP}}\). Any \(\textsc {BIP}\) model can be put into such form (see [10]).

The BIP Semantics. A configuration c of a \(\textsc {BIP}\) model \(\mathcal {P}_{\textsc {BIP}}\) is a tuple \({\langle {{\langle {l_1,s_1}\rangle }, \ldots , {\langle {l_n,s_n}\rangle }}\rangle }\) such that for all \(i \in [1,n]\), \(l_i \in Q_{i}\) and \(s_i: Var_{i} \rightarrow \mathbb {Z}\) is a state of \(B_i\). Let \(\mathcal {P}_{\textsc {BIP}}={\langle {\mathcal {B},\varGamma ,\pi }\rangle }\) be a \(\textsc {BIP}\) model and \(c = {\langle {{\langle {l_1,s_1}\rangle }, \ldots , {\langle {l_n,s_n}\rangle }}\rangle }\) be a configuration. The interaction \(\gamma ={\langle {Act, g, op}\rangle } \in \varGamma \) is enabled in c if, for all the components \(B_i \in \mathcal {B}\) such that \(Act\cap P_{i} \ne \emptyset \), there exists an edge \({\langle {l_i, Act\cap P_{i}, g_i, op_i, l_i'}\rangle } \in E_{i}\) and \([\![g_i]\!]_{\mathcal {B}}(s_i) = true\), and \([\![g]\!]_{\mathcal {B}}(s_1, \ldots , s_n) = true\).

A \(\textsc {BIP}\) model \(\mathcal {P}_{\textsc {BIP}}={\langle {\mathcal {B},\varGamma ,\pi }\rangle }\) can take an edge from the configuration \(c={\langle {{\langle {l_1,s_1}\rangle }, \ldots , {\langle {l_n,s_n}\rangle }}\rangle }\) to the configuration \(c'={\langle {{\langle {l_1',s_1'}\rangle }, \ldots , {\langle {l_n',s_n'}\rangle }}\rangle }\) if there exists an interaction \(\gamma = {\langle {Act, g, op}\rangle }\) such that: (i) \(\gamma \) is enabled in c; (ii) there does not exist an enabled interaction \(\gamma '\in \varGamma \) in c such that \(\gamma ' > \gamma \); (iii) for all \(B_i \in \mathcal {B}\) such that \(Act\cap P_{i} \ne \emptyset \), there exists \({\langle {l_i, Act\cap P_{i}, g_i, op_i,l_i'}\rangle } \in E_{i}\) and if \(op = x:= exp\), \(op_i = y:= exp_i\) then \(s_i'' = s_i[x:= [\![exp]\!]_{\mathcal {E}}(s_i)]\), \(s_i' = s_i''[y:= [\![exp_i]\!]_{\mathcal {E}}(s_i'')]\); (iv) for all \(B_i \in \mathcal {B} \) such that \(Act\cap P_{i} = \emptyset \), \(l_i' = l_i\) and \(s_i' = s_i \).

We use the notation \(c \overset{\gamma }{\rightarrow }c'\) to denote that there exists an edge from the configuration c to the configuration \(c'\) on the interaction \(\gamma \). A configuration \(c_0={\langle {{\langle {l_1,s_1}\rangle },\ldots , {\langle {l_n,s_n}\rangle }}\rangle }\) is an initial configuration if, for some \(i \in [1,n]\), \(l_i = l_{0_i}\) and, for all \(i \in [1,n]\), \(s_i\) is a valuation for \(Var_{i}\) Footnote 3. A configuration c is reachable if and only if there exists a sequence of configurations \(c_0 \xrightarrow {\gamma _1} c_1 \xrightarrow {\gamma _2} \ldots \xrightarrow {\gamma _k} c_k\), such that \(c_0\) is an initial configuration and \(c_k = c\). A \(\textsc {BIP}\) model is safe if no error locations are reachable.

3 ESST for BIP (\(\textsc {ESST} _\textsc {BIP} \))

3.1 The ESST Framework

In this subsection we provide the necessary background on the ESST framework, following the presentation of [16, 17].

Programming Model.

The ESST framework analyzes a multi-threaded program \(\mathcal {P} = {\langle {{\mathcal {T}}, \textsc {Sched}}\rangle }\), consisting of a set of cooperative threads \({\mathcal {T}}= {\langle {{T_{1}}, \ldots , {T_{n}}}\rangle }\) and a non-preemptive scheduler \(\textsc {Sched} \). A non-preemptive scheduler cannot interrupt the execution of a thread, while a cooperative thread is responsible for suspending its execution and releasing the control to the scheduler.

A thread \({T_{i}}={\langle {G_{i}, LVar_{i}}\rangle }\) is a sequential program with a set of local variables \(LVar_{i}\) and is represented by a control-flow graph (CFG) \(G_{i}=(L_i,E_i,l_{0_i},L_{err_i})\), where: (i) \(L_i\) is the set of locations; (ii) \(E_i \subseteq L_i \times Ops(LVar_{i}) \times L_i\) is the set of edges; (iii) \(l_{0_i} \in L_i\) is the entry location; (iv) \(L_{err_i} \subseteq L_i\) is the set of error locations.

A scheduler \(\textsc {Sched} ={\langle {{ SVar}, F_S }\rangle }\) has a set of variables \({ SVar}\) and a scheduling function \( F_S \). For each thread \({T_{i}}\), the scheduler maintains a variable \(st_{{T_{i}}} \in { SVar}\) to keep track of its status (i.e. Running, Runnable, Waiting). A scheduler state \(\mathbb {S}\) is an assignment to all the variables \({ SVar}\). Given a scheduler state \(\mathbb {S}\) where no thread is Running, \( F_S (\mathbb {S})\) generates the set of scheduler states that describes the next thread to be run. We denote by \( SState \) the set of all possible scheduler states, and by \( SState _{One}\) the set of scheduler states, where only one thread is Running. A thread can change the scheduler state by calling a primitive function. For example, the call to a primitive function can change the thread status from Running to Waiting to release the control to the scheduler.

The intuitive semantics of a multi-threaded program is the following: the program executes the thread in the Running status (note that there is at most one running thread); the running thread \({T_{i}}\) can suspend its execution, setting the variable \(st_{{T_{i}}}\) to a value different from Running, by calling an appropriate primitive function; when there are no running threads, the scheduler executes its scheduling function to generate a set of running threads. The next thread to run is picked non-deterministically. See [10] for a formal definition of the semantic.

The ESST Algorithm.

The ESST algorithm [16, 17] performs a reachability analysis of a multi-threaded program \(\mathcal {P} ={\langle {{\mathcal {T}}, F_S }\rangle }\) using explicit-state techniques to explore the possible executions of the scheduler and lazy predicate abstraction [22] to explore the executions of the threads. In the following, we rely on the extended version of the ESST where the scheduler execution is semi-symbolic [17], since we will need a scheduler that reads and writes the local state of the threads. We provide a concise description of the reachability analysis algorithm, and refer to [16, 17] for the details.

The ESST constructs an abstract reachability forest (ARF) to represent the reachable states. An ARF node is a tuple \({\langle {\langle l_1,\varphi _1 \rangle ,\ldots ,\langle l_n,\varphi _n \rangle ,\varphi ,\mathbb {S}}\rangle }\), where for all \(i \in [1,n]\), \(l_i \in L_i\) is a location of \({T_{i}}\) and \(\varphi _i\) is a local region (a formula over \(LVar_{i}\)), \(\varphi \) is a global regionFootnote 4 (a formula over \(\bigcup _{i \in [1,n]}LVar_{i}\)) and \(\mathbb {S}\) is a scheduler state. The ARF is constructed by expanding the ARF nodes. An ARF node can be expanded as long as it is not covered (no other nodes in the ARF include the set of states denoted by this node) or if it is not an error node (the node does not contain any error location). If ESST terminates and all the nodes in the ARF are covered then \(\mathcal {P}\) is safe. If the expansion of the ARF reaches an error node, the ESST builds an abstract counterexample (a path in the ARF from the initial node to the error node), which is simulated in the concrete program; if the simulation succeeds, we find a real counter-example and the program is unsafe. Otherwise the counter-example is spurious, the ESST refines the current abstraction, and restarts the expansion (see [16] for details).

The node expansion uses three basic operations: the symbolic execution of a thread (based on the abstract strongest post-condition), the execution of a primitive function, and the semi-symbolic execution of the scheduling function \( F_S \). The abstract strongest post-condition \({{ SP}_{op}^{\delta }}(\varphi )\) is the predicate abstraction of the set of states reachable from any of the states in the region \(\varphi \) after executing the operation op, using the set of predicates \(\delta \). ESST associates a set of predicates to thread locations (\(\delta _{l'_i}\)), as well as to the global region (\(\delta \)). The primitive functions are executed by the primitive executor \( \textsc {Sexec}: ( SState \times PrimitiveCall ) \rightarrow (\mathbb {Z}\times SState ) \) to update the scheduler state. The scheduler function \( F_S \) is implemented by a function \( F_S : ARFNodes \rightarrow (2^{ SState _{One}\times LFProg }) \), where \( SState _{One}\) is the set of scheduler states with only one running thread, \( ARFNodes \) is the set of \(ARF \) nodes and \( LFProg \) is the set of loop-free programs (programs that contains assignments and conditional statements, but not loops) over the variables of \(\mathcal {P} \) Footnote 5. A ARF node \(\eta = (\langle l_1,\varphi _1 \rangle ,\ldots ,\langle l_n,\varphi _n \rangle ,\varphi ,\mathbb {S})\) is expanded by the following two rules:

-

E1.

If \(\mathbb {S}(st_{{T_{i}}}) = Running\) and there exists an edge \((l_i,op,l'_i) \in E_{i}\), create a successor node \(( \langle l_1,\varphi '_1 \rangle , \ldots , \langle l'_i,\varphi '_i \rangle , \ldots , \langle l_n,\varphi '_n \rangle , \varphi ', \mathbb {S}' )\), where:

-

\({\langle {\mathbb {S}', \hat{op}}\rangle } = {\left\{ \begin{array}{ll} {\langle {\mathbb {S}, op}\rangle } &{} \text {if } \, op \, \text { is not a primitive function call}\\ {\langle {\mathbb {S}'', x := v}\rangle } &{} \text {if} \, op \, \text {is} \, x := f(\mathbf {y}) \, \text {and}\, (v, \mathbb {S}'')=\textsc {Sexec} (\mathbb {S},f(\mathbf {y}))\\ \end{array}\right. }\)

-

\(\varphi '_i = {{ SP}_{\hat{op}}^{\delta _{l'_i}}}(\varphi _i \wedge \varphi )\), \(\varphi '_j = \varphi _j\), for \(i \ne j\), and \(\varphi ' = {{ SP}_{\hat{op}}^{\delta }}(\varphi )\). (\(\delta _{l'_i}\) and \(\delta \) are the precisions associated to the location \(l_i\) and to the global region respectively).

-

-

E2.

If there are no running threads, for each \({\langle {\mathbb {S}',P^{lf}}\rangle } \in F_S (\eta )\) create a successor node \(( \langle l_1,\varphi _1' \rangle , \ldots , \langle l_n,\varphi _n' \rangle , \varphi ', \mathbb {S}' )\), where \(\varphi '_j = {{ SP}_{P^{lf}}^{\delta _{l'_j}}}(\varphi _j \wedge \varphi )\), for \(j \in [1,n]\) and \(\varphi ' = {{ SP}_{\hat{P^{lf}}}^{\delta }}(\varphi )\).

The rule E1 expands the ARF node by unfolding the CFG edge \({\langle {l, op,l'}\rangle } \) of the running thread \({T_{i}}\). If the operation op is not a primitive function, then the scheduler state is unchanged (i.e. \(\mathbb {S}'=\mathbb {S}\)). Otherwise, if the operation op is a primitive function, (e.g. \(x:= f(\mathbf {y})\)), the algorithm executes the primitive executor \(\textsc {Sexec} \) to change the scheduler state and collect the return value of the function (i.e. \((v, \mathbb {S}'')=\textsc {Sexec} (\mathbb {S},f(\mathbf {y}))\)). In both cases, the state of the running thread and the global region are updated by computing the abstract strongest post condition. The rule E2 executes the scheduling function to create a new ARF node for each output state of the scheduling function when all the threads are not running. A detailed illustration of the execution of scheduling function will be give in Sect. 3.2.

3.2 Instantiation of ESST for BIP

To instantiate ESST for BIP, there are two naïve approaches. One is to translate a BIP model to a SystemC program, hence relying on the SystemC primitive functions and the SystemC scheduler (i.e. the existing instantiation of ESST [16]). This approach is inefficient, since one has to encode the \(\textsc {BIP}\) semantics with additional threads. Another approach is to reuse the SystemC primitive functions as in [16, 17], modifying the scheduler to mimic the BIP semantics. This approach is not efficient either, since the primitive functions in SystemC only allow threads to notify and wait for events. This has the effect of introducing additional variables in the scheduler to keep track of the sent and received events, which considerably increases the state space to be explored.

In this paper, we provide a novel instantiation of the ESST framework to analyze BIP models, it consists of: (i) a mapping from BIP to multi-threaded programs and the definition of a new primitive function wait() used by threads to interact with the scheduler; (ii) a new semi-symbolic scheduler that respects the BIP operational semantics and preserves the reachability of error locations.

We use the ESST version with a semi-symbolic scheduler, instead of using a purely explicit one, allowing the scheduler to read and write the state of the threads. This feature is important to analyse BIP models because, in BIP, interaction guards and effects are expressed over the global state of the system. Moreover, the semi-symbolic scheduler is also needed to correctly enforce the BIP priorities.

In each scheduling loop, the scheduler performs two tasks: (i) it computes the set of possible interactions and chooses one to be run; (ii) it schedules the execution of each thread that participates in the chosen interaction. When all the threads are in the Waiting state, the scheduler computes the set of possible interactions and chooses one interaction to be run by setting the status of the participating threads to Runnable, and by setting the value of a local variable in the thread. The variable is used in the guards of the thread edges and encode the \(\textsc {BIP}\) ports. Moreover, the scheduler is also responsible for executing the global effects of the interaction. Whithin each scheduling cycle, the scheduler picks the Runnable threads one by one, until no such threads are available.

Primitive Functions and Threads. In our \(\textsc {BIP}\) instantiation of ESST we introduce a primitive function wait(), which suspends the execution of the calling thread and releases the control back to the scheduler (thus, we have only one primitive function). The function does not change the state of the thread, but changes the status of the thread in the scheduler state to Waiting. Since the return value of wait() is of no interest, we will write wait() instead of \(x:= wait()\). Formally, the semantics of wait() is defined by the primitive executor \(\textsc {Sexec} \), that is \([\![wait()]\!]_{\mathcal {E}}(s, \mathbb {S}) = \textsc {Sexec} ({\langle {\mathbb {S},wait()}\rangle }) = {\langle {*,\mathbb {S}'}\rangle }\), where \(*\) denotes a dummy return value, \(s\) is the state of \({T_{i}}\), and \(\mathbb {S}'=\mathbb {S}[st_{{T_{i}}}:= Waiting]\), if \({T_{i}}\) is the caller of wait().

Given an atomic component \(B_{i} = {\langle {Var_{i}, Q_{i}, E_{i}, l_{0_i}, Q_{err_{i}}}\rangle }\) of \(\mathcal {P}_{\textsc {BIP}}\), we define the thread \({T_{i}}={\langle {G_{i}, LVar_{i}}\rangle }\), where \(LVar_{i} = Var_{i} \cup \{{evt^i}\}\), \( Dom ({evt^i})=\mathbb {Z}\) and \(G_{i}=(L_i,E_i,l'_{0_i},L_{err_i})\), where:

We introduce an additional integer variable \({evt^i}\) for each thread and we associate every port \(\alpha \) to a distinct integer value; for clarity, we use the notation \({evt^i}= \alpha \) instead of \({evt^i}= i\), where \(i \in \mathbb {Z}\) is the value we associated to the port \(\alpha \). The CFG \(G_{i}\) of the thread is obtained from a transformation of the \(\textsc {BIP}\) atomic component \(B_{i}\): (i) adding a location \(l_{wait}\) and an edge from l to \(l_{wait}\) for each \(l \in Q_{i}\); (ii) for each edge \(e={\langle {l, \alpha , g, op,l'}\rangle } \in E_{i}\), add an intermediate location \({l}_{e}\), and an edge from \(l_{wait}\) to \({l}_{e}\), labelled with \({evt^i}= \alpha \), and an edge from \({l}_{e}\) to \(l'\), labelled with op Footnote 6.

The edge to the location \(l_{wait}\) labelled by the primitive function wait() ensures that the thread releases the control to the scheduler, waiting that the scheduler chooses an interaction to be run. The subsequent edge labelled by \({evt^i}= \alpha \) ensures that the thread only executes the edge chosen by the scheduler and constrained by the value of the variable \({evt^i}\). Notice that the edge guard will be taken into account by the \(\textsc {BIP}\) scheduler.

Semi-symbolic BIP Scheduler. For analyzing \(\textsc {BIP}\) models, we design the semi-symbolic \(\textsc {BIP}\) scheduler \(\textsc {Sched} (\mathcal {P}_{\textsc {BIP}})={\langle { F_S , { SVar}}\rangle }\), where \({ SVar}= \{st_{{T_{1}}}, \ldots , st_{{T_{n}}}\}\), and \( F_S \) is the scheduling function that respects the \(\textsc {BIP}\) semantics. As required by the ESST, the scheduler keeps the status of each thread \({T_{i}}\) in a variable \(st_{{T_{i}}}\), with values \(\{Running\), Runnable, \(Waiting\}\). Initially all \(st_{{T_{i}}}\) are Runnable.

Given an ARF node \(\eta = {\langle {\langle l_1,\varphi _1 \rangle ,\ldots ,\langle l_n,\varphi _n \rangle ,\varphi ,\mathbb {S}}\rangle }\), we say that an interaction \(\gamma = {\langle {\{\alpha _1, \ldots , \alpha _k\}, g, op}\rangle }\) is enabled if there exists a set of edges \(\{t_{\alpha _1}, \ldots , t_{\alpha _k}\}\) such that, for all \(i \in [1,k]\), \(t_{\alpha _i} \in E_{{id({\alpha _i})}}\), \(t_{\alpha _i} = {\langle {l_{{id({\alpha _i})}}, \alpha _i, g_{t_{\alpha _i}}, op_{t_{\alpha _i}}, l'_{t_{\alpha _i}}}\rangle }\). In that case, we write \(enabled({\eta }, {\gamma })\) and we denote with \(EnabledSet(\eta )\) the set of all the enabled interactions in \(\eta \). Notice that the concept of enabled interaction on an ARF node is different from the one we had on a BIP configuration: we do not check the satisfiability of the guards in the ARF node to determine the set of enabled interactions. Instead, interaction guards and effects are accounted for by the symbolic execution of the scheduling function.

\( F_S \) alternates two different phases: (i) scheduling of new interactions; (ii) execution of edges participating in the chosen interaction. Given an ARF node \(\eta =\langle \langle l_1,\varphi _1 \rangle ,\ldots ,\langle l_n,\varphi _n \rangle ,\varphi ,\mathbb {S}\rangle \), \( F_S (\eta )\) is defined as follows:

-

F1.

If for all \(st_{{T_{i}}} \in { SVar}\), such that \(\mathbb {S}(st_{{T_{i}}}) = Waiting \) and \(EnabledSet({\eta }) = \{\gamma _1, \ldots , \gamma _k\}\), \( F_S (\eta ) = \{{\langle {\mathbb {S}_1, P^{lf}_1}\rangle }, \ldots ,{\langle {\mathbb {S}_k, P^{lf}_k}\rangle } \}\), where for all \(i \in [1,k]\):

-

\(\gamma _i = {\langle { \{\alpha _i^{1}, \ldots , \alpha _i^{l}\}, g_i, op_i}\rangle }\);

-

\(\mathbb {S}_i=\mathbb {S}[st_{{T_{{id({\alpha _i^{1}})}}}}:=Runnable,\ldots ,st_{{T_{{id({\alpha _i^{l}})}}}}:=Runnable]\);

-

\( P^{lf}_i = p; g_i; g_e; op_i; {evt^{1}_{{id({\alpha _i^{1}})}}}:=\alpha _i^{1}; \ldots ; {evt^{l}_{{id({\alpha _i^{l}})}}}:=\alpha _i^{l}\text {, where}\quad \quad \quad \quad \) \( p = \bigwedge _{{\langle {\gamma _i,\gamma '}\rangle } \in \pi , \alpha \in \gamma '} \bigwedge _{{\langle {l, \alpha , g_\alpha , op_\alpha , l'}\rangle } \in E_{{id({\alpha })}}} \lnot g_\alpha \quad \quad \) and \( g_e = \bigwedge _{\alpha \in \gamma _i}\bigvee _{{\langle {l, \alpha , g_\alpha , op_\alpha , l'}\rangle } \in E_{{id({\alpha })}}} g_\alpha \).

-

-

F2.

If there exists a thread \({T_{i}}\), such that \(\mathbb {S}(st_{{T_{i}}})=Runnable\), then \( F_S (\eta ) = \{{\langle {\mathbb {S}[ st_{{T_{i}}}:=Running], { skip }}\rangle }\}\).

In rule F1, the formula p encodes the priority constraints (there are no enabled interactions with a higher priority than \(\gamma _i\)), and the formula \(g_e\) imposes that, in each thread that participates in the interaction, there is at least one enabled edge labeled with the corresponding interaction port. Thus, the loop free program \(P^{lf}_i\) ensures that the interaction \(\gamma _i\) will be scheduled, according to the \(\textsc {BIP}\) semantics, and also imposes the correct ports that must be executed by the threads. The rule F2 just picks the next thread to be run.

Correctness of \(\mathbf{ESST}_\mathbf{BIP}\) .

Theorem 1

Let \(\mathcal {P}_{\textsc {BIP}}={\langle {\mathcal {B},\varGamma ,\pi }\rangle }\) be a \(\textsc {BIP}\) model and \(\mathcal {P} = {\langle {{\mathcal {T}},\textsc {Sched} (\mathcal {P}_{\textsc {BIP}})}\rangle }\) be the corresponding multi-threaded program with semi-symbolic \(\textsc {BIP}\) scheduler \(\textsc {Sched} (\mathcal {P}_{\textsc {BIP}})\). If the \(\textsc {ESST} _\textsc {BIP} \) algorithm terminates on \(\mathcal {P} \), then the \(\textsc {ESST} _\textsc {BIP} \) returns safe iff the \(\textsc {BIP}\) model \(\mathcal {P}_{\textsc {BIP}}\) is safe.

For lack of space, we provide the proofs in the extended technical report [10].

3.3 Optimizations

In this section we present some optimizations aiming to reduce the number of the ARF nodes that must be explored during the reachability analysis.

Partial Order Reduction for BIP. The application of POR to the \(\textsc {ESST} _\textsc {BIP} \) is based on the following idea: when the \(\textsc {ESST} _\textsc {BIP} \) executes the scheduling function \( F_S \) on a node \(\eta \), it creates the successor nodes only for a representative subset of the set of all the enabled interactions \(EnabledSet({\eta })\). To compute the independence relation between interactions, we define the following valid dependence relation [16] for \(\textsc {BIP}\) models: two interactions are dependent if they share a common component. This valid dependent relation can be computed statically from the \(\textsc {BIP}\) model. We have implemented both persistent set and sleep set POR approaches. The use of POR in \(\textsc {ESST} _\textsc {BIP} \) is correct since the application of POR to the general ESST framework is sound, provided a valid dependence relation [16].

Simultaneous Execution of the Edges Participating in an Interaction. In the basic \(\textsc {ESST} _\textsc {BIP} \), we serialize the edges participating in the same interaction since we use a scheduling function that allows only one thread to run at a time. Consider an ARF node \(\eta = {\langle {\langle l_1,\varphi _1 \rangle ,\ldots ,\langle l_n,\varphi _n \rangle ,\varphi ,\mathbb {S}}\rangle }\), and an interaction \(\gamma = {\langle {\{\alpha _1, \ldots , \alpha _k\}, g, op}\rangle }\) enabled in \(\eta \). Let \(\{t_{\alpha _1}, \ldots , t_{\alpha _k}\}\) be the set of participating edges in \(\gamma \) and \(op_{\alpha _1}, \ldots , op_{\alpha _k}\) be their respective effects. When we expand \(\eta \), we will create the following sequence of successor nodes:

where the label E2 denotes the execution of the \(\textsc {ESST} _\textsc {BIP} \) scheduler function. The intermediate nodes \(\eta _{1},\ldots , \eta _{2+4k-1}\) are due to the sequentialization of the execution of the edges participating in the interaction. These intermediate nodes increase the complexity of the reachability analysis. They do not correspond to any state reachable in the \(\textsc {BIP}\) model, where all the edges involved in an interaction are executed simultaneously, and are an artefact of the encoding of the \(\textsc {BIP}\) model into the ESST framework. We can modify the search discussed in Sect. 3 in order to avoid the generation of these intermediate states by (i) extending the primitive execution function \(\textsc {Sexec} \) to simultaneously evaluate a sequence of primitive functions, (ii) changing the node expansion rule E1 of ESST as follows:

-

E1’.

If \(\mathbb {S}(st_{{T_{i}}}) = Running\), let \(T_{R}=\{{T_{i}} \in T | \mathbb {S}(st_{{T_{i}}}) \ne Waiting\}\) be the set of threads not in the Waiting state. Let \(op=op_1; \ldots ; op_k\) be a sequential composition (in arbitrary order) of the operations labeling the outgoing edges \((l_i, op_i,l'_i) \in E_i\), for \(T_i \in T_{R}\) Footnote 7. The successor node is\( ( \langle l'_1,\varphi '_1 \rangle , \ldots , \langle l'_n,\varphi '_n \rangle , \varphi ', \mathbb {S}' ) \), where:

-

\({\langle {\mathbb {S}', \hat{op}}\rangle } = {\left\{ \begin{array}{ll} \small {\langle {\mathbb {S}, op}\rangle } &{} \small \text {if none of the} \, op_i \, \text {in} \, op \, \text {is a call to}\, wait() \\ \small {\langle {\mathbb {S}'', skip}\rangle } &{} \small \text {if all }\, op_i \, \text {in} \, op \, \text {is a}\, wait() \, \text {and} (*, \mathbb {S}'')=\textsc {Sexec} (\mathbb {S},op)\\ \end{array}\right. }\)

-

\(\varphi '_i = {{ SP}_{\hat{op_i}}^{\delta _{l'_i}}}(\varphi _i \wedge \varphi )\) for each thread \(T_i \in T_{R}\), \(\varphi '_j = \varphi _j\) and \(l'_j = l_j\) for each thread \(T_j \notin T_{R}\), and \(\varphi ' = {{ SP}_{\hat{op}}^{\delta }}(\varphi )\), where \(\hat{op_i}\) is the projection of \(\hat{op}\) on the instructions of thread \(T_i\).

-

We remark that, in BIP, we do not have shared variables. Thus, all the \(op_i\) are local to the corresponding components, and executing a sequence of \(op_i\) altogether will not create any conflict. The correctness of this optimization can be easily justified since it respects \(\textsc {BIP}\) operational semantics.

Implicit Primitive Functions. The previous optimization does not remove all the intermediate ARF nodes \(\eta _{1},\ldots ,\eta _{2+4k-1}\) visited by the \(\textsc {ESST} _\textsc {BIP} \) that do not have a corresponding configurations in the \(\textsc {BIP}\) operational semantics. In particular, we can avoid the creation of the intermediate ARF nodes created by calls to wait() noting that: (i) wait() is always executed immediately after the execution of some edge \(t_{\alpha _{i}}\) labeled by \(\alpha _{i}\), i.e. \(\eta \xrightarrow {\alpha _i} \eta ' \xrightarrow {wait()} \eta ''\) (see the description of the sequence of the ARF nodes visited after the scheduling of an interaction in the previous optimization); (ii) wait() only modifies the scheduler states of an ARF node. Thus, we can combine the execution of wait() with the execution of its preceding edge \(t_{\alpha _i}\). This optimization can be integrated in the \(\textsc {ESST} _\textsc {BIP} \) framework by modifying rule E1 as follows.

-

E1”.

If \(\mathbb {S}(st_{{T_{i}}}) = Running\), and \(\{(l_i,op,l^1_i), (l^1_i,wait(),l'_i)\} \subseteq E_i\), then the successor node is \(( \langle l_1,\varphi '_1 \rangle , \ldots , \langle l_n,\varphi '_n \rangle , \varphi ', \mathbb {S}')\), where:

-

\({\langle {\mathbb {S}', \hat{op}}\rangle } = {\langle {\mathbb {S}'', op;skip}\rangle } \text { if } \, op \text { is not } \, wait() \, and \,(*, \mathbb {S}'')=\textsc {Sexec} (\mathbb {S}, wait())\)

-

\(\varphi ' = {{ SP}_{\hat{op}}^{\delta }}(\varphi )\), \(\varphi '_i = {{ SP}_{\hat{op}}^{\delta _{l'_i}}}(\varphi _i \wedge \varphi )\), and \(\varphi '_j = \varphi _j\), for \(i \ne j\),

-

This optimization is correct with respect to BIP semantics since \(\textsc {ESST} _\textsc {BIP} \) will still visit all the reachable states of the original \(\textsc {BIP}\) model \(\mathcal {P}_{\textsc {BIP}}\). To see this, notice that there are no interactions to be scheduled in the intermediate sequence of ARF nodes created while executing an interaction, and after the execution of the edge \(t_{\alpha _i}\) the thread \({T_{i}}\) will always stop its execution.

We remark that the optimization for the implicit execution of primitive functions and the optimization for the simultaneous execution of the edges of an interaction can be combined together, to further reduce the search space of the basic \(\textsc {ESST} _\textsc {BIP} \).

4 Encoding BIP into Transition System

In this section, we show how to encode a \(\textsc {BIP}\) model into a Symbolic Transition System, thus enabling a direct application of state-of-the-art model checkers for infinite state systems, such as the nuXmv [12] symbolic model checker.

A Symbolic Transition System (STS) is a tuple \(S={\langle {V,I,Tr}\rangle }\), where: (i) \(V\) is a finite set of variables, (ii) \(I\) is a first-order formula over \(V\) (called initial condition), and (iii) \(Tr\) is a first-order formula over \(V\cup V'\) (called transition condition Footnote 8). The semantic of an STS can be given in terms of an explicit transition systems (see for example [26]).

The encoding of a \(\textsc {BIP}\) model \(\mathcal {P}_{\textsc {BIP}}={\langle {\mathcal {B},\varGamma ,\pi }\rangle }\) as an STS \(S_{P_{\textsc {BIP}}}={\langle {V,I,Tr}\rangle }\) is the following. The set of variables is defined as:

where for all \(i \in [1,n]\), we preserve the domain of each var \(x \in Var_{i}\), \( Dom (loc_{i}) = Q_{i}\); for all \(\alpha \in P_{i}\), \( Dom (v_{\alpha }) = \{true, false\}\); and \( Dom (v_\varGamma ) = \varGamma \).

The initial condition is \(I= \bigwedge _{i}^{n} (loc_{i} = l_{0_i})\), since we do not have initial predicates in \(\mathcal {P}_{\textsc {BIP}}\). The transition condition is \( Tr= (\bigwedge _{i=1}^n (Tr_{e_{i}} \wedge Tr_{p_{i}}) \wedge Tr_{\varGamma } \wedge Tr_{\pi } \), where \(Tr_{e_{i}}\) encodes the edges of the component \(B_{i}\), \(Tr_{p_{i}}\) determines when the variable \(v_{\alpha }\) for port \(\alpha \) is true, \(Tr_{\varGamma }\) encodes when an interaction is enabled, and \(Tr_{\pi }\) encodes the priorities.

In the following, let \(\varGamma _{B_i} = \{{\langle {Act,g,op\} | Act\cap P_{i} \ne \emptyset }\rangle }\) be the set of all the interactions on which \(B_i\) participates and \(\varGamma _{e} = \{{\langle {Act,g,op}\rangle } | e={\langle {l_i, \alpha , g_e, op_e, l_i'}\rangle }, \alpha \in Act\}\), with \(e \in E_{i}\), be the set of interactions that contain the port that labels e.

The encoding of an edge e of a component \(B_{i}\) is defined as:

and \(replace({e,\gamma })\) is a function that replaces all the occurrences of a variables y in e with \(e_\gamma \), if \(op_\gamma = y := e_\gamma \) and \(\gamma ={\langle {Act, g_\gamma , op_\gamma }\rangle }\) Footnote 9. \(Tr_{p_{i}}\) is defined as \( \bigwedge _{\alpha \in \varGamma } \big (v_{\alpha } \leftrightarrow \bigvee _{{\langle {l_i, \alpha , g_e, op_e, l_i'}\rangle } \in E_{i}} \big (loc_{i} = l_i \wedge g_e \big ) \big ) \). Finally, the conditions that constraint the interactions to their ports and the priorities among the interactions are defined as:

Theorem 2

The transition system \(S_{P_{BIP}}\) for a BIP model \(\mathcal {P}_{\textsc {BIP}}\) preserves reachability of any configuration of the BIP model.

The proof relies on the fact that the state space of the \(\textsc {BIP}\) model is preserved. The initial configuration is preserved by formula \(I\), where \(loc_{i}\) is constrained to the initial locations of the corresponding component. The transition relation is also preserved, since the variable \(v_\varGamma \) can be assigned to the value representing an interaction \(\gamma \), enabling the corresponding edges, if and only if \(\gamma \) is enabled in the corresponding state of the \(\textsc {BIP}\) model. The valuations of the additional variables \(v_{\alpha }\) and \(v_\varGamma \) do not alter the state space: their valuations are constrained by formula the \(Tr\) to reflect the \(\textsc {BIP}\) semantics.

5 Related Work

Several approaches to the verification of \(\textsc {BIP}\) models have been explored in the literature. DFinder [7] is a verification tool for \(\textsc {BIP}\) models that relies on compositional reasoning for identifying deadlocks and verifying safety properties. The tool has several limitations: it is unsound in the presence of data transfers among components (it assumes that the involved variables do not exchange values); its refinement procedure is not effective for infinite-state systems, since it consists only in removing the found unreachable deadlock states from the next round of the algorithm; finally, it can only handle \(\textsc {BIP}\) models with finite domain variables or integers. Our approaches instead are sound in the presence of data transfer, they exploit standard refinement mechanisms (e.g. refinement based on interpolation) and can handle \(\textsc {BIP}\) models with real variables.

The VCS [20] tool supports the verification of \(\textsc {BIP}\) models with data transfer among components, using specialized BDD- and SAT-based model checking algorithms for \(\textsc {BIP}\). Differently from our approach, VCS is only able to deal with finite domain variables, and priority is ignored.

Our encoding in transition system is related to works in [25, 29]. In [29], a timed \(\textsc {BIP}\) model is translated into Timed Automata and then verified with Uppaal [6]. The translation handles data transfers, but it is limited to \(\textsc {BIP}\) models with finite domain data variables and without priorities. In [25], the authors show an encoding of a \(\textsc {BIP}\) models into Horn Clauses. They do not handle data transfers on interactions and do not describe how to handle priorities. We remark that, any transition system can be encoded into Horn Clauses and then verified with tools such as Z3 [23] or Eldarica [24].

With respect to the verification of multi-threaded programs, the works most related to ours are [16, 17, 30]. In [16, 17], the authors present the ESST framework, instantiating it for SystemC [27] and FairThreads [11]. They neither consider instantaneous synchronizations nor priorities among interactions. Instead, in this work we instantiate the ESST framework for the analysis of \(\textsc {BIP}\) models, which encompasses instantaneous synchronizations and priorities. The semi-symbolic scheduler in [17] is also different from ours: while they use the semi-symbolic scheduler to handle parameters of the primitive functions, we use it to change the status of the local threads. We also apply and adapt several optimizations sound w.r.t. the \(\textsc {BIP}\) operational semantics. The work in [30] combines lazy abstraction and POR for the verification of generic multi-threaded programs with pointers. They do not leverage on the separation between coordination and computation which is the core of our \(\textsc {ESST} _\textsc {BIP} \) approach. Moreover, because of the pointers, they rely on a dynamic dependence relation for applying POR.

6 Experimental Evaluation

We implemented \(\textsc {ESST} _\textsc {BIP} \) extending the Kratos [13] software model checker. We implemented the encoding from \(\textsc {BIP}\) to transition system in a tool based on the \(\textsc {BIP}\) framework [2]. Our tool generates models in the input language of nuXmv, allowing us to reuse its model checking algorithms.

In the experimental evaluation, we used several benchmarks taken and adapted from the literature, including the temperature control system model and ATM transaction model used in [7], the train gate control system model used in [25], and several other consensus and voting algorithm models. Every benchmark is scalable with respect to the number of components. In total, we created 379 instances of both safe and unsafe models, and verified different invariant properties. All the benchmarks are infinite-state, due to integer variables, and some of them feature data transfer on interaction. Due to lack of space, we do not provide the details of each benchmark, but refer to our webpageFootnote 10 for more information.

We run several configurations of \(\textsc {ESST} _\textsc {BIP} \): EsstBip, EsstBiP+P, EsstBip+S, EsstBip+S+P, EsstBip+S+I and EsstBip+S+I+P, where \(\textsc {EsstBip} \) is the base version without any optimization, \(\textsc {P}\) denotes the use of partial order reduction, \(\textsc {S}\) denotes the use of the simultaneous execution of the interaction edges and \(\textsc {I}\) denotes the implicit execution of the primitives functions. After the encoding into transition systems, we run two algorithms implemented in nuXmv: (Ic3) an implementation of the IC3 algorithm integrated with predicate abstraction [14]; (Bmc) an implementation of Bounded Model Checking [9] via SMT [1] solving. For the benchmarks that do not exhibit data transfer, we also compared our approaches against DFinder (version 2) [7].

All the experiments have been performed on a cluster of 64-bit Linux machines with a 2.7 Ghz Intel Xeon X5650 CPU, with a memory limit set to 8 Gb and a time limit of 900 s. The tools and benchmarks used in the experiments are available in our webpage.

Comparison with DFINDER. We first compare on the subset of the benchmarks (100 instances) that DFinder can handle (these benchmarks do not have data transfer and are safe). We compare DFinder and Ic3 in the scatter plot of Fig. 2: DFinder is able to solve only 4 of our instances, while Ic3 solves all the 100 instances. The best configuration of \(\textsc {ESST} _\textsc {BIP} \) (EsstBip+S+I+P) shows a similar trend (solving 75 instances). For lack of space we do not show the respective plot. DFinder requires about 142 s to solve the four benchmarks, while both Ic3 and EsstBip+S+I+P solve all of them in a fraction of a second. The main explanations for these results are: (i) DFinder cannot prove 60 instances since it cannot find strong enough invariants to prove the property; (ii) it exceeds the memory limits for the remaining 36 instances.

Comparison of NUXMV and \(\mathbf{ESST}_\mathbf{BIP}\) . We show the results of the comparison among our approaches on the full set of instances in Fig. 1, where we plot the cumulative time to solve an increasing number of instances. Ic3 clearly outperforms all the other approaches, while the version of \(\textsc {ESST} _\textsc {BIP} \) with all the optimization outperforms all the other \(\textsc {ESST} _\textsc {BIP} \) configurations. In Fig. 3 we focus only on the safe instances: the plot shows that Ic3 is more efficient than \(\textsc {ESST} _\textsc {BIP} \). Ic3 is much more effective than \(\textsc {ESST} _\textsc {BIP} \) on a subset of the instances, where Ic3 can easily find an inductive invariant (for this subset, the number of frames needed by Ic3 to prove the property does not increase when increasing the number of components in each benchmark). In these cases instead, \(\textsc {ESST} _\textsc {BIP} \) still has to visit several nodes before succeeding in the coverage check. In Fig. 4, we focus on the unsafe properties. Both all the \(\textsc {ESST} _\textsc {BIP} \) approaches that enable the implicit primitive function execution and Ic3 outperform Bmc. The main reason is that BMC is not effective on long counterexamples, while in our benchmarks the length of the counterexamples grows with the number of components. We also observe that, for the unsafe cases, the approach EsstBip+S+I+P is faster than Ic3. Thus, the experiments show that Ic3 and \(\textsc {ESST} _\textsc {BIP} \) are complementary, with Ic3 being more efficient in the safe case, and \(\textsc {ESST} _\textsc {BIP} \) being more efficient for the unsafe ones. This can be also seen in Fig. 1, where we plot the virtual best configuration (VirtualBest) (i.e. the configuration obtained taking the lower run time for each benchmark), which shows the results that we would obtain running all our approaches in parallel (in a portfolio approach).

Evaluation of the \(\mathbf{ESST}_\mathbf{BIP}\) Optimization. In Fig. 5a and b we show two scatter plots to compare the results obtained with and without partial order reduction. The plot 5a shows how POR improves the performance when applied to EsstBip (for EsstBip+S we get similar results), while the plot 5b shows the same for EsstBip+S+I. The plots show that POR is effective on almost all benchmarks, even if in some cases the POR bookkeeping introduces some overhead. In Fig. 5c and d we show the results of applying the simultaneous execution of the edges participating in an interaction to the basic configuration with and without partial order reduction enabled (EsstBip and EsstBiP+P). In both cases, the improvements to the run times brought by the concurrent execution of edges is consistent, since the run times are always lower and the number of solved instances higher. Finally, in Fig. 5e and f we show the plots that compares EsstBip+S with EsstBip+S+I and EsstBip+S+P with EsstBip+S+I+P. In both cases the implicit execution of the primitives functions always brings a performance improvement.

7 Conclusions and Future Work

In this paper, we described two complementary approaches for the verification of infinite-state \(\textsc {BIP}\) models that, contrary to the existing techniques, consider all the features of \(\textsc {BIP}\) such as the global effects on the interactions and priorities. First, we instantiated for \(\textsc {BIP}\) the ESST framework and we integrated several optimization sound w.r.t. the \(\textsc {BIP}\) semantics. Second, we provided an encoding of \(\textsc {BIP}\) models into symbolic transition systems, enabling us to exploit the existing state of the art verification algorithms. Finally, we implemented the proposed techniques and performed an experimental evaluation on several benchmarks. The results show that our approaches are complementary, and that they outperform DFinder w.r.t performance and also w.r.t. the coverage of the \(\textsc {BIP}\) features. As future work we would like extend the proposed techniques to support timed \(\textsc {BIP}\) [4] (e.g. the symbolic encoding could be extended to HyDI [15]) and, in the case of ESST we would improve its performance in finding bugs using direct model checking [19]. Finally, we will investigate the possibility to exploit the invariants computed by DFinder in all our approaches.

Notes

- 1.

We also consider finite domain variables (e.g. Boolean), which can be easily encoded in \(\mathbb {Z}\).

- 2.

We can express any safety property using additional edges, interactions and error locations.

- 3.

While we did not add initial predicates for \(Var_{i}\), this can be encoded with an additional initial location and an edge that has as guard the initial predicates.

- 4.

Whereas in the general ESST framework the global region is used to track both local and global variables, we use it to only track the relations among the local variables due to the data transfer on interactions.

- 5.

The ESST framework does not allow the scheduler to produce programs with loops.

- 6.

Note that, while the formal presentation introduces intermediate locations and edges, in practice these are collapsed in a single edge since we use the large block encoding [8].

- 7.

Note that there is no non-determinism on the outgoing edge to be executed by each thread \(T_i\) after the scheduling of the interaction \(\gamma \).

- 8.

Hereby and below, we denote with \(V'=\{x' | x \in V\}\) the set of primed variables of \(V\).

- 9.

Note that, while in our definition \(op_\gamma \) is a single assignment, the approach can be easily generalized to sequential programs applying a single-static assignment (SSA) transformation [18].

- 10.

References

Barrett, C.W., Sebastiani, R., Seshia, S.A., Tinelli, C.: Satisfiability modulo theories. Handb. Satisfiability 185, 825–885 (2009)

Basu, A., Bensalem, S., Bozga, M., Combaz, J., Jaber, M., Nguyen, T.H., Sifakis, J.: Rigorous component-based system design using the BIP framework. IEEE Softw. 28(3), 41–48 (2011)

Basu, A., Bensalem, S., Bozga, M., Caillaud, B., Delahaye, B., Legay, A.: Statistical abstraction and model-checking of large heterogeneous systems. In: Hatcliff, J., Zucca, E. (eds.) FMOODS 2010, Part II. LNCS, vol. 6117, pp. 32–46. Springer, Heidelberg (2010)

Basu, A., Bozga, M., Sifakis, J.: Modeling heterogeneous real-time components in BIP. In: SEFM (2006)

Basu, A., Gallien, M., Lesire, C., Nguyen, T.H., Bensalem, S., Ingrand, F., Sifakis, J.: Incremental component-based construction and verification of a robotic system. ECAI 178, 631–635 (2008)

Behrmann, G., David, A., Larsen, K.G., Håkansson, J., Pettersson, P., Yi, W., Hendriks, M.: UPPAAL 4.0. In: QEST (2006)

Bensalem, S., Bozga, M., Nguyen, T.-H., Sifakis, J.: D-Finder: a tool for compositional deadlock detection and verification. In: Bouajjani, A., Maler, O. (eds.) CAV 2009. LNCS, vol. 5643, pp. 614–619. Springer, Heidelberg (2009)

Beyer, D., Cimatti, A., Griggio, A., Keremoglu, M.E., Sebastiani, R.: Software model checking via large-block encoding. In: FMCAD (2009)

Biere, A., Cimatti, A., Clarke, E., Zhu, Y.: Symbolic model checking without BDDs. In: Cleaveland, W.R. (ed.) TACAS 1999. LNCS, vol. 1579, p. 193. Springer, Heidelberg (1999)

Bliudze, S., Cimatti, A., Jaber, M., Mover, S., Roveri, M., Saab, W., Wang, Q.: Formal verification of infinite-state bip models. Technical report. https://es-static.fbk.eu/people/mover/paper/fvbip.pdf

Boussinot, F.: FairThreads: mixing cooperative and preemptive threads in C. Concurrency Comput. Pract. Experience 18(5), 445–469 (2006)

Cavada, R., Cimatti, A., Dorigatti, M., Griggio, A., Mariotti, A., Micheli, A., Mover, S., Roveri, M., Tonetta, S.: The nuXmv Symbolic Model Checker. In: Biere, A., Bloem, R. (eds.) CAV 2014. LNCS, vol. 8559, pp. 334–342. Springer, Heidelberg (2014)

Cimatti, A., Griggio, A., Micheli, A., Narasamdya, I., Roveri, M.: Kratos – a software model checker for systemC. In: Gopalakrishnan, G., Qadeer, S. (eds.) CAV 2011. LNCS, vol. 6806, pp. 310–316. Springer, Heidelberg (2011)

Cimatti, A., Griggio, A., Mover, S., Tonetta, S.: IC3 modulo theories via implicit predicate abstraction. In: Ábrahám, E., Havelund, K. (eds.) TACAS 2014 (ETAPS). LNCS, vol. 8413, pp. 46–61. Springer, Heidelberg (2014)

Cimatti, A., Mover, S., Tonetta, S.: HyDI: a language for symbolic hybrid systems with discrete interaction. In: SEAA (2011)

Cimatti, A., Narasamdya, I., Roveri, M.: Software model checking with explicit scheduler and symbolic threads. Log. Methods Comput. Sci. 8(2), 1–42 (2012)

Cimatti, A., Narasamdya, I., Roveri, M.: Verification of parametric system designs. In: FMCAD (2012)

Cytron, R., Ferrante, J., Rosen, B.K., Wegman, M.N., Zadeck, F.K.: Efficiently computing static single assignment form and the control dependence graph. ACM Trans. Program. Lang. Syst. 13(4), 451–490 (1991)

Edelkamp, S., Schuppan, V., Bošnački, D., Wijs, A., Fehnker, A., Aljazzar, H.: Survey on directed model checking. In: Peled, D.A., Wooldridge, M.J. (eds.) MoChArt 2008. LNCS, vol. 5348, pp. 65–89. Springer, Heidelberg (2009)

He, F., Yin, L., Wang, B.-Y., Zhang, L., Mu, G., Meng, W.: VCS: a verifier for component-based systems. In: Van Hung, D., Ogawa, M. (eds.) ATVA 2013. LNCS, vol. 8172, pp. 478–481. Springer, Heidelberg (2013)

Henzinger, T.A., Jhala, R., Majumdar, R., McMillan, K.L.: Abstractions from proofs. ACM SIGPLAN Not. 39, 232–244 (2004). ACM

Henzinger, T.A., Jhala, R., Majumdar, R., Sutre, G.: Lazy abstraction. In: POPL (2002)

Hoder, K., Bjørner, N.: Generalized property directed reachability. In: Cimatti, A., Sebastiani, R. (eds.) SAT 2012. LNCS, vol. 7317, pp. 157–171. Springer, Heidelberg (2012)

Hojjat, H., Konečný, F., Garnier, F., Iosif, R., Kuncak, V., Rümmer, P.: A verification toolkit for numerical transition systems. In: Giannakopoulou, D., Méry, D. (eds.) FM 2012. LNCS, vol. 7436, pp. 247–251. Springer, Heidelberg (2012)

Hojjat, H., Rümmer, P., Subotic, P., Yi, W.: Horn clauses for communicating timed systems. In: HCVS (2014)

Manna, Z., Pnueli, A.: The Temporal Logic of Reactive and Concurrent Systems: Specification. Springer, New York (1992)

IEEE 1666: SystemC language Reference Manual (2005)

Sifakis, J.: Rigorous system design. Found. Trends Electron. Des. Autom. 6(4), 293–362 (2013)

Su, C., Zhou, M., Yin, L., Wan, H., Gu, M.: Modeling and verification of component-based systems with data passing using BIP. In: ICECCS (2013)

Wachter, B., Kroening, D., Ouaknine, J.: Verifying multi-threaded software with Impact. In: FMCAD (2013)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Bliudze, S. et al. (2015). Formal Verification of Infinite-State BIP Models. In: Finkbeiner, B., Pu, G., Zhang, L. (eds) Automated Technology for Verification and Analysis. ATVA 2015. Lecture Notes in Computer Science(), vol 9364. Springer, Cham. https://doi.org/10.1007/978-3-319-24953-7_25

Download citation

DOI: https://doi.org/10.1007/978-3-319-24953-7_25

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-24952-0

Online ISBN: 978-3-319-24953-7

eBook Packages: Computer ScienceComputer Science (R0)