Abstract

Although predictive power and explanatory insight are both desiderata of scientific models, these features are often in tension with each other and cannot be simultaneously maximized. In such situations, scientists may adopt what I term a ‘division of cognitive labor’ among models, using different models for the purposes of explanation and prediction, respectively, even for the exact same phenomenon being investigated. Adopting this strategy raises a number of issues, however, which have received inadequate philosophical attention. More specifically, while one implication may be that it is inappropriate to judge explanatory models by the same standards of quantitative accuracy as predictive models, there still needs to be some way of either confirming or rejecting these model explanations. Here I argue that robustness analyses have a central role to play in testing highly idealized explanatory models. I illustrate these points with two examples of explanatory models from the field of geomorphology.


1 Introduction

Prediction and explanation have long been recognized as twin goals of science, yet the relations – and tensions – between these two goals remain poorly understood. When it comes to scientific modeling, there are two well-known problems with any close marriage of prediction and explanation. First, there are phenomenological models that are highly useful for generating predictions yet offer no explanatory insight; hence, predictive power simpliciter cannot be taken as a hallmark of a good explanation. Second, as a matter of fact, explanatory power and predictive accuracy seem to be competing virtues in scientific modeling: a gain in explanatory power often requires sacrificing predictive accuracy, and vice versa.

In the philosophy of biology, the tradeoffs scientists seem to face between explanatory and predictive models have received some attention, beginning with Richard Levins's (1966) article “The Strategy of Model Building in Population Biology” and the various responses to it (e.g., Orzack and Sober 1993; Matthewson and Weisberg 2008). However, these tensions remain under-explored in the philosophy of science, and more cases need to be examined.

In what follows I will examine models from geomorphology, the field concerned with understanding how landforms change over time. There have been a number of interesting recent debates in the geomorphology literature about how to properly model geomorphic systems for the purposes of explanation and prediction. I shall use this work to address the following issues in the philosophy of science. First, I shall argue that there is what I call a “division of cognitive labor” among scientific models; that is, even for the same natural phenomenon, different scientific models better serve different modeling goals. Although this division of cognitive labor among models might seem obvious upon reflection, it is rarely articulated explicitly. Making this modeling strategy explicit, however, has important implications, in that it can forestall certain types of criticism and reveal others. More specifically, it raises the possibility that a model designed for the purpose of scientific explanation may fail to make quantitatively accurate predictions (a different cognitive goal). Hence, recognizing that there is a division of cognitive labor among models might suggest that criticisms targeting the quantitative accuracy of an explanatory model are misguided. This, however, raises the second question that I wish to explore in this paper: How are prima facie explanatory models to be tested, and either accepted or rejected? Here I shall argue that robustness analyses have a central role to play in testing and validating explanatory models. I shall illustrate these points using two examples of reduced complexity models that are being used to explain phenomena in geomorphology.¹

2 Reduced Complexity Models: “Reductionism” Versus “Synthesism”

Geomorphology is, most broadly, the science of the Earth’s surface. More specifically, it is concerned with how landscapes change over time, and it includes the study of land-water interfaces, such as coastal processes. Understanding how and why landscapes change over time involves synthesizing information from many different fields, including geology, hydrology, biology, geochemistry, oceanography, and climatology. Landscape change is strongly influenced by the presence, absence, and kind of vegetation, as well as by the behavior of both human and non-human animals.

Complexities of this sort have led to a number of interesting debates about the proper way to model geomorphic systems. One of the central debates concerns the appropriate scale at which to model geomorphic systems. It has given rise to two broad approaches to modeling within geomorphology: the “explicit numerical reductionist” approach and the “synthesist” approach.

The traditional approach, which its opponents term “explicit numerical reductionism” – or just “reductionist modeling” – tries to remain as firmly grounded in classical mechanics as possible, invoking laws such as conservation of mass, conservation of momentum, classical gravitation, entropy, etc. Moreover, it seeks to represent in the model as many of the physical processes known to be operating as possible, in as much detail as is computationally feasible. Models developed in the reductionist approach are termed “simulation models.”² As Brad Murray describes them, “Simulation models are designed to reproduce a natural system as completely as possible; to simulate as wide a range of behaviors, in as much detail, and with as much quantitative accuracy as can be achieved” (Murray 2003, p. 151).

By contrast, the so-called “synthesist” school of modeling in geomorphology argues that complex phenomena do not always require complex models. As Chris Paola, one of the founders of the synthesist approach, explains,

The crux of the new approach to modelling complex, multi-scale systems is that behaviour at a given level in the hierarchy of scales may be dominated by only a few crucial aspects of the dynamics at the next level below. Crudely speaking, it does not make sense to model 100% of the lower-level dynamics if only 1% of it actually contributes to the dynamics at the (higher) level of interest. (Paola 2001, p. 2)

Rather than appealing to the fundamental laws, this approach tries to represent the effects of the lower-level dynamics by a set of simplified rules or equations. These simplifications are not seen as an “unfortunate necessity”, but rather as the proper way to model such complex systems. Indeed, synthesists such as Brad Murray argue that understanding how the many small-scale processes give rise to the large-scale variables in the phenomenon of interest is a separate scientific endeavor from modeling that large-scale phenomenon (Murray 2003; Werner 1999).

This division in geomorphology between the “reductionists” and the “synthesists” was arguably precipitated by the introduction of a new breed of models in geomorphology termed “reduced complexity models” (abbreviated RCM). Geomorphologists Nicholas and Quine note that,

In one sense, the classification of a model as a ‘reduced-complexity’ approach appears unnecessary since, by definition, all models represent simplifications of reality. However, in the context of fluvial geomorphology, such terminology says much about both the central position of classical mechanics within theoretical and numerical modelling, and the role of the individual modeller in defining what constitutes an acceptable representation of the natural environment. (Nicholas and Quine 2007, p. 319)

An important class of RCMs are the so-called “cellular models”, which are distant descendants of cellular automata models (Wolfram 1984). Many geomorphologists point to Murray and Paola’s 1994 cellular model of braided rivers, published in Nature, as “pioneering” (Nicholas 2010, p. 1) and as marking a “paradigm shift” (Coulthard et al. 2007, p. 194) in geomorphic modeling. In the next section I will very briefly outline Murray and Paola’s model of river braiding as an illustration of these reduced complexity models and their controversial successes and failures. I will argue that a proper evaluation of reduced complexity models requires attending to the scientific uses to which they are put. More specifically, reduced complexity models tend to be most useful for generating scientific explanations, not for more detailed predictions regarding specific systems.

3 Case #1: The MP Model Explanation of Braided Rivers

Rivers come with several different morphologies: some are relatively straight, others are meandering, and still others are braided. A braided river is one in which there is a number of interwoven channels and bars that are dynamically shifting and rearranging over time, while maintaining a roughly constant channel width (as seen below in Fig. 1).

Murray and Paola succinctly describe the goal and results of their reduced complexity model as follows:

Many processes are known to operate in a braided river, but it is unclear which of these are essential to explain the observed dynamics. We describe here a simple, deterministic numerical model of water flow over a cohesionless bed that captures the main spatial and temporal features of real braided rivers. The patterns arise from local scour and deposition caused by a nonlinear dependence of bedload sediment flux on water discharge. . . . our results suggest the only factors essential for braiding are bedload sediment transport and laterally unconstrained free-surface flow. (Murray and Paola 1994, p. 54)

Note, in this quotation, that the goal of the Murray-Paola (or MP) model is explanation – to explain why, in general, rivers braid – not to predict the specific braided pattern of any given river. The guiding assumption behind this model is that, although there are many processes operating, only a small number of relatively simple mechanisms are needed to produce these complex dynamics.

The MP model is essentially a type of “coupled lattice model.” In geomorphology, such models represent the landscape – here the river channel – with a grid of cells, and the development of the landscape is determined by the interactions between the cells – here fluxes of water and sediment – using rules that are highly simplified and abstracted representations of the governing physics. Figure 2 shows a schema of the MP model and the patterns this model produces.

(a) Schematic illustration of the rules in the cellular reduced complexity model of river braiding. The white arrows represent water and sediment routing and the black arrows show lateral sediment transport (From Murray and Paola 1994, p. 55 reproduced with the permission of the author). (b) Three successive times in one run of the model showing 20 × 200 cells with flow from top to bottom. The l.h.s. of each pair is the topography and the r.h.s. is the discharge (From Murray and Paola 1994, p. 56; reproduced with the permission of the author)
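To make the idea of a coupled lattice model concrete, the following minimal Python sketch implements only a water-routing step of the kind indicated by the white arrows in Fig. 2a. It is a hypothetical illustration, not Murray and Paola's published code. Several elements are assumptions made for the example: the function name `route_discharge`, the slope exponent `n`, the even split for cells with no lower neighbour, and the closed side walls. Each cell passes its discharge to the (up to) three cells in the next row downstream, weighting each neighbour by its positive elevation drop raised to the power `n`.

```python
def route_discharge(bed, q_top, n=0.5):
    """Route water down a grid of cells, one row at a time (hypothetical sketch).

    bed   -- list of rows (upstream to downstream) of bed elevations
    q_top -- discharge entering each cell of the first row
    n     -- assumed exponent applied to each positive elevation drop
    Returns a list of rows giving the discharge in every cell.
    """
    rows, cols = len(bed), len(bed[0])
    q = [list(q_top)] + [[0.0] * cols for _ in range(rows - 1)]
    for i in range(rows - 1):
        for j in range(cols):
            if q[i][j] == 0.0:
                continue  # no water to pass on
            # Down-left, straight down, down-right; side walls are closed.
            targets = [k for k in (j - 1, j, j + 1) if 0 <= k < cols]
            weights = [max(bed[i][j] - bed[i + 1][k], 0.0) ** n for k in targets]
            total = sum(weights)
            if total == 0.0:
                # No downhill neighbour: split the water evenly (an assumption).
                weights, total = [1.0] * len(targets), float(len(targets))
            for k, w in zip(targets, weights):
                q[i + 1][k] += q[i][j] * w / total
    return q


# Usage: a single inflow cell above a lower (deeper) middle cell.
bed = [[1.0, 1.0, 1.0],
       [0.5, 0.0, 0.5]]
q = route_discharge(bed, [0.0, 1.0, 0.0])
```

Because each cell's outflow weights are normalized, the discharge crossing every row equals the inflow, and the deeper cell captures the largest share. In a model of the MP kind, the morphodynamic feedback appears only once a sediment flux that depends nonlinearly on these discharges is allowed to alter `bed`, which in turn changes the next routing step.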

On the one hand these reduced complexity models can generate braided rivers with realistic patterns and statistical properties. On the other hand, the simplified rules used to route the water and sediment in the MP model have been called a “gross” simplification of the physics that “neglect most of the physics known to govern fluvial hydraulics (i.e., they do not solve a form of the Navier-Stokes equations)” (Nicholas 2010, p. 1).

It should be noted that there are models of river braiding in geomorphology that do adopt the rival “reductionist” approach, and try to simulate the river in as much accurate detail as is computationally feasible. The so-called DELFT3D model, for example, tries to solve the Navier-Stokes equations in three dimensions and includes many other processes such as the effects of wind and waves on flow and sediment transport. As Murray recounts, “DELFT3D is intended to be as close to a simulation model . . . as is practical, and is probably the best tool available for predicting or simulating fluvial [flow] . . . and bathymetric evolution [i.e., variations in the depth of the river or sea bed]” (Murray 2003, p. 159). However, such models are so complex that they yield very little insight into why the patterns emerge as they do.

Murray and Paola defend their highly idealized cellular model by emphasizing that the goal or purpose of their model is not to have a realistic simulation of braided rivers in all their complex detail, but rather to identify the fundamental mechanisms that cause a river to braid. Here their model was prima facie successful:

This simple model showed that feedback between topographical routed flow and nonlinear sediment transport alone presents a plausible explanation for the basic phenomenon of braiding (with lateral transport playing a key secondary role in perpetuating behavior). The model does not include details of flow or sediment-transport processes, such as secondary flow in confluences, and does not resolve distributions of flow and sediment transport on scales very much smaller than a channel width, suggesting that these aspects of the processes are not critical in producing braiding—that they are not a ‘fundamental’ part of the explanation. (Murray 2003, p. 158)

In other words, the purpose of the reduced complexity model is explanatory – to provide an explanation for why, in general, rivers braid by isolating the crucial mechanism.

Moreover, the mechanism for braiding seems to exhibit a sort of representational robustness – that is, it is not sensitive to the details of how the sediment-flux “law” or rule is represented: As Murray explains, “[b]raiding is a robust instability in the cellular model, which occurs for any set of rules and parameters that express the non-linear nature of the relationship between flow strength and sediment transport” (Murray and Paola 2003, p. 132). This sort of “insensitivity” of the explanandum phenomenon to the details of the rules or values of the parameters is discovered by performing what geomorphologists call sensitivity experiments, which can be thought of as a kind of robustness analysis (e.g., Weisberg 2006a).
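The logic of such a sensitivity experiment can be sketched with a deliberately crude toy model, which is not the MP model. In the toy, two parallel channels share a fixed discharge, and the deeper channel draws a larger share of the water. Sediment supply is split in proportion to the water, and each channel's transport capacity is some function of its discharge. Everything in the sketch is a hypothetical assumption chosen for illustration: the two-channel set-up, the linear water-split rule with coefficient `a`, the two capacity rules, and the parameter grid. The question it asks parallels Murray's claim: does a small initial difference between the channels grow, for every rule form and parameter set in which capacity rises faster than linearly with discharge?

```python
import itertools


def power_law(K, m):
    """Hypothetical capacity rule: K * q**m."""
    return lambda q: K * q ** m


def threshold_law(K, m, theta):
    """Hypothetical capacity rule with a motion threshold: K * (q - theta)**m."""
    return lambda q: K * max(q - theta, 0.0) ** m


def perturbation_growth(capacity, a=1.0, Q=1.0, rate=0.5, dz0=1e-3, steps=200):
    """Return the final bed-elevation difference between two channels divided by dz0.

    A value above 1 means the initial difference grew (one channel wins);
    a value below 1 means it decayed back toward the symmetric state.
    """
    supply = 2.0 * capacity(Q / 2.0)  # makes the symmetric split an equilibrium
    z1, z2 = -dz0, 0.0                # channel 1 starts slightly deeper
    for _ in range(steps):
        f1 = min(max(0.5 + a * (z2 - z1), 0.0), 1.0)  # water share of channel 1
        f2 = 1.0 - f1
        # Supply above capacity deposits (bed rises); below capacity erodes.
        z1 += rate * (f1 * supply - capacity(f1 * Q))
        z2 += rate * (f2 * supply - capacity(f2 * Q))
        if f1 in (0.0, 1.0):
            break  # one channel has captured all the flow
    return abs(z2 - z1) / dz0


def sensitivity_sweep():
    """Run the toy model over a grid of rule forms and parameter values."""
    results = {}
    for K, a, m in itertools.product((0.5, 1.0, 2.0), (0.5, 1.0, 2.0), (1.5, 2.0, 3.0)):
        results[("power", K, a, m)] = perturbation_growth(power_law(K, m), a=a)
        results[("threshold", K, a, m)] = perturbation_growth(threshold_law(K, m, 0.2), a=a)
    return results


growth = sensitivity_sweep()
robust = all(g > 1.0 for g in growth.values())      # instability in every run?
control = perturbation_growth(power_law(1.0, 0.8))  # sublinear rule, for contrast
```

In this toy, every superlinear run amplifies the initial difference, whereas the sublinear control damps it. The qualitative outcome therefore tracks the nonlinearity rather than any particular `K`, `a`, or rule form. A genuine sensitivity experiment would, of course, vary the rules and parameters of the actual model and examine braiding statistics rather than a single channel difference.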

In response to the challenge that the availability of these more detailed, physics-based simulation models displaces the need for reduced complexity models such as the MP model, Paola muses,

Ironically, the debate between synthesism and reductionism has arisen just as the increasing power of relatively cheap computers seems set to make it irrelevant. If we can solve the complete set of primitive equations with a computer, why not just do it? But this debate is far more fundamental than mere computing efficiency; it really goes to the heart of what science is about . . . CPU speed may double every 18 months, but the grasp of human intelligence does not. (Paola 2001, p. 5)

There is, however, another issue here that goes beyond the point of limited computing power – human or otherwise – and that is the issue of what makes something a good explanation. Arguably a good explanation is one that only includes the essential features needed to account for the phenomenon (for the purpose/context in question).³ An explanation that includes far more than what is really needed to account for the phenomenon of interest (that is, an explanation that includes the proverbial kitchen sink) is arguably an inferior explanation, quite apart from whether or not the human mind is capable of seeing through those excessive details. The role of reduced complexity models in geomorphology is precisely to isolate just those fundamental mechanisms that are required to produce – and hence explain – a poorly understood phenomenon in nature.

The way the debate has played out between the “reductionists” and “synthesists” suggests that the proper question is not “What is the best way to model braided rivers?”, but rather “What is the best way to model braided rivers for a given purpose?”, where that purpose can be either explanatory insight or predictive power (or indeed something else). As Ron Giere reminds us, “There is no best scientific model of anything; there are only models more or less good for different purposes” (Giere 2001, p. 1060). I want to build on this insight and argue that, in geomorphology at least, we can nonetheless identify different kinds of models as being better for different kinds of goals or purposes. Very roughly, if one’s goal is explanation, then reduced complexity models will be more likely to yield explanatory insight than simulation models; whereas if one’s goal is quantitative predictions for concrete systems, then simulation models are more likely to be successful. I shall refer to this as the division of cognitive labor among models.

Recognizing that there is a division of cognitive labor among models in scientific practice, however, raises its own set of philosophical issues, which have not yet received adequate attention in the literature. For example, a model that was designed for the purpose of generating explanatory insight may fail to make quantitatively accurate predictions for specific systems (a different cognitive goal). This failure in predictive accuracy need not mean that the basic mechanism hypothesized in the explanatory model is incorrect. Nonetheless, explanatory models need to be tested to determine whether the explanatory mechanism represented in the model is in fact the real mechanism operating in nature. To bring this issue of testing explanatory models into focus, let me introduce one more example of a reduced-complexity model explanation in geomorphology.

4 Case #2: The Model-Explanation of Rip Currents

Another enigmatic physical phenomenon that geomorphologists are using reduced complexity models to explain is rip currents. These are strong, isolated, offshore-directed flows that appear abruptly at apparently random locations and last only for tens of minutes before disappearing. These “flash rips”, as lifeguards call them, are not produced by channels in the sea bed, as other, better understood rip currents are. Rather, flash rips appear to be hydrodynamic in origin. Rip current velocities can be as fast as 1 m/s, and rip currents claim the lives of many beachgoers every year.

In order to explain the origin of these rip currents, Murray and Reydellet begin with the long-known observation that in a very strong rip current, a gap in wave breaking can extend through the surf zone. They furthermore observe that “the waves are not generally larger when they reach the shore than they are in adjacent areas, suggesting that some process other than breaking dissipates wave energy” (Murray and Reydellet 2001, p. 518). They hypothesize that the mechanism for this dissipation could also be part of the mechanism that is responsible for the formation of rip currents on planar (i.e., relatively flat) beaches.

As in our other example, they use a cellular “reduced complexity model” to propose an explanation for how and why such rip currents occur. A schematic diagram of the hypothesized mechanisms leading to rip currents is pictured in Fig. 3 below.

Schematic illustration of hypothesized wave-current interaction in the reduced complexity model of rip currents. In (a) a weak offshore flow decreases wave heights locally, allowing offshore slope to accelerate current. In (b) removal of water from surf zone causes alongshore surface slopes that drive alongshore currents feeding the rip (Reproduced from Murray et al. 2003, p. 270 with permission)

The basic idea is that a weak offshore flow begins to decrease wave heights locally, which allows the offshore slope to accelerate the current. Then, as seen in Fig. 3b, the removal of water from the surf zone locally creates alongshore surface slopes that drive alongshore currents feeding the rip current (Murray et al. 2003, p. 270). They show how the interactions between these few simple mechanisms lead to the formation of rip currents in their model simulations. Moreover, they note how a number of unanticipated features of real rip currents emerge in their model, such as the typical narrowness of the rip current and the wide spacing between adjacent rip currents along a beach.

Once again we see these scientists emphasizing a division of cognitive labor among models, and defending the explanatory function of such reduced complexity models; they write,

In this numerical model some processes have been intentionally omitted, some treated in abstracted ways (e.g., the cross-shore currents) and some represented by simplest first-guess parameterizations (e.g., the newly hypothesized wave/current interaction) in an effort to determine the essential mechanisms causing flash-rip behaviors. The purpose of such a highly simplified model is to find the most concise explanation for a poorly understood phenomenon, not to reproduce the natural system with maximal quantitative accuracy. (Murray et al. 2003, p. 271)

One of the implications of the above quotation is that it is inappropriate to judge such an explanatory model by the quantitative accuracy of its predictions. As Murray has argued elsewhere,

for a highly simplified model in which many of the processes known to operate in the natural system have been intentionally left out, and others might be represented by simplest-first-guess parameterizations . . . accurate numerical predictions might not be expected. In such a case, the failure of a numerical model to closely match observations would not warrant rejecting the basic hypotheses represented by the model. (Murray 2003, p. 162)

Nonetheless, explanatory models need to be tested, and the conditions under which the ‘basic hypotheses’ of the model can be rejected need to be specified; otherwise, such model-based explanations would carry little force.

These geomorphologists are very much aware of what philosophers refer to broadly as the problem of underdetermination. In the geomorphology literature, underdetermination is typically discussed under the rubric of “equifinality”, following the terminology of the hydrologist Keith Beven (1996). Beven defines equifinality as the problem that, “in modeling . . . good fits to the available data can be obtained with a wide variety of parameter sets that usually are dispersed throughout the parameter space” (Beven 1996, p. 289). More specifically, in the present context there is the worry that these reduced complexity models might just be phenomenological models that are able to reproduce the right phenomenon, but not for the right reasons. If the mechanisms producing the phenomenon in the model do not correspond to the mechanisms producing the phenomenon in the real world, then such models cannot be counted as genuinely explanatory.
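Beven's notion of equifinality can be seen in miniature with a hypothetical two-parameter model whose output depends only on the product of its parameters. In the Python sketch below, the model form, the synthetic “observations”, and the parameter grid are all invented for illustration. In this deliberately degenerate case, a grid search turns up perfect fits scattered across the parameter space. The fit alone therefore cannot tell us which parameter values are the real ones.

```python
def model(a, b, x):
    """Hypothetical model in which only the product a * b affects the output."""
    return a * b * x


def sum_squared_error(a, b, xs, ys):
    """Misfit between model output and observations."""
    return sum((model(a, b, x) - y) ** 2 for x, y in zip(xs, ys))


# Synthetic "observations" generated with a * b = 2 (made up for this example).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]

# Search a grid of candidate values 0.25, 0.5, ..., 10.0 for each parameter.
grid = [0.25 * i for i in range(1, 41)]
best_fits = [(a, b) for a in grid for b in grid
             if sum_squared_error(a, b, xs, ys) < 1e-9]

print(best_fits)
# → [(0.25, 8.0), (0.5, 4.0), (1.0, 2.0), (2.0, 1.0), (4.0, 0.5), (8.0, 0.25)]
```

Parameter sets that differ by a factor of 32 in each coordinate fit the data equally well, which is the pattern Beven describes.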

How are such highly idealized explanatory models to be tested and validated? Robustness analyses seem to play an important role at two junctures in validating these models. First, as in the case of the model explanation of river braiding, robustness analyses in the form of sensitivity experiments are performed to ensure that the phenomenon in the model is not an artifact of the idealizing assumptions or of an arbitrary choice of parameters. Here Murray and Reydellet note,

The results show that strong, narrow, widely spaced rip currents can result robustly from some relatively simple interactions between a small number of processes. Model experiments have shown that this qualitative result does not depend sensitively on model parameters, or the details of the treatments of the processes in the model. (Murray and Reydellet 2001, p. 528)

There is, however, a second juncture at which robustness plays a role that is more directly relevant to the issue of confirmation. Murray and Reydellet note that their rip current model can produce quantitatively accurate predictions: specifically, it produces rip currents with realistic spacings, and with typical velocities, widths, and durations that match field data from Doppler-sonar observations. Interestingly, however, they deny that this traditional kind of confirmation carries much epistemic weight. Instead, they note that this

quantitative realism relies on the tuning of two poorly constrained parameters, and in a model that represents some of the processes in ways that do not have a track record of use in other models or comparison with independent measurements, being able to tune parameters or adjust the formal way interactions are treated to produce a match might not provide impressive evidence in favor of the model. (Murray et al. 2003, p. 271)

In other words, there are situations in which quantitative accuracy can be bought cheaply and so should not be considered the be-all and end-all of confirmation. Here, instead, they argue that certain kinds of robust qualitative predictions carry much more epistemic weight:

For such a highly simplified model, a different kind of prediction needs to be tested—a prediction that arises robustly from the basic interactions in the model, and does not depend on parameter values or the details of how the interactions are treated in the model. (Murray et al. 2003, p. 271)

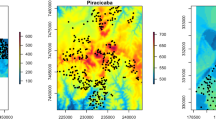

They identify two such qualitative tests for this reduced-complexity model explanation of rip currents. The first involves the qualitative prediction that the prevalence of rip currents, which they quantify with a parameter called “rip activity” (RA), decreases with increasing variation in incident wave heights. The second involves the prediction that rip currents should be less frequent (and weaker) on steeper beaches. In both cases they show that these predictions derive from the fundamental mechanism hypothesized in the model. They then compare these model predictions to field observations of real rip currents on Torrey Pines Beach near San Diego. As Fig. 4 indicates, the video footage shows the same trend of decreasing rip activity with increasing wave-height variability that the model displays.

To test the second prediction, regarding beach slope, they compare rip activity at Torrey Pines with rip activity at two other beaches in southern California with steeper slopes (Carlsbad Beach and San Onofre Beach), on days when wave conditions were similar at all three beaches. As Fig. 5 shows, the field observations once again display the same trend as the model predictions.

They conclude that,

Extensive model experiments indicate that the trends in the model results shown in Figures [4] and [5] do not vary; they result inexorably from the essential interactions and feedbacks in the model. Field observations that did not show the predicted trends could have falsified the model. (Murray et al. 2003, p. 276)

Indeed they note that there are rival models of rip currents that predict trends that are inconsistent with these field observations. They conclude that with these highly idealized explanatory models, qualitative predictions involving robust trends can be a more reliable form of model validation.
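A qualitative test of this kind compares the direction of a trend rather than its magnitude. One simple way to express such a comparison is sketched in Python below. It computes a rank statistic, Kendall's tau, for the model output and for the field data, and then asks whether both have the same sign. This choice of statistic is an illustrative assumption: Murray et al. compare plotted trends. The numbers are placeholders, not their Torrey Pines measurements.

```python
from itertools import combinations


def kendall_tau(xs, ys):
    """Kendall's tau-a: (concordant - discordant pairs) / total pairs."""
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        s = (xs[j] - xs[i]) * (ys[j] - ys[i])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


def same_trend(model_xy, field_xy):
    """True when model and field data show a trend in the same direction."""
    return kendall_tau(*model_xy) * kendall_tau(*field_xy) > 0


# Placeholder values: wave-height variability (x) vs. rip activity (y).
model = ([0.1, 0.2, 0.3, 0.4], [0.9, 0.7, 0.4, 0.2])
field = ([0.15, 0.25, 0.35, 0.45, 0.5], [0.8, 0.75, 0.5, 0.55, 0.3])
print(kendall_tau(*model), kendall_tau(*field), same_trend(model, field))
# → -1.0 -0.8 True
```

Because the test looks only at the sign of the trend, it ignores quantitative mismatch, yet it can still fail. A field data set with a positive tau would have counted against the model.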

5 Conclusion: Some Philosophical Lessons from Geomorphology

Several of the themes I have reviewed here in geomorphology relate to similar debates that have occurred in the philosophy of biology. In the context of population biology, for example, Richard Levins (1966) famously argued that there are tradeoffs between the modeling goals of generality, realism, and precision, and that robustness analyses play an important role in the validation of models. Both of these claims have been challenged by Steven Orzack and Elliott Sober (1993), who question whether there is any necessary conflict between generality, realism, and precision, and who reject robustness analyses as a highly suspect, non-empirical form of confirmation.

The tradeoff I have described here is not between generality, realism, and precision specifically, but rather between quantitative predictive accuracy and explanatory insight. My aim has not been to argue that there is a necessary tradeoff between these scientific goals, but rather to point out that – as a matter of fact – geomorphologists tend to use different kinds of models to achieve different kinds of goals, even when it comes to the same phenomenon in nature. Moreover, we saw intuitively plausible reasons why reduced complexity models tend to be better for isolating explanatory mechanisms but worse for generating quantitatively accurate predictions for specific systems, while, conversely, the more detailed “reductionist” simulation models tend to be more useful for concrete quantitative predictions but are often too complex to offer much in the way of explanatory insight.

Despite these important differences, geomorphologists note that “reduced complexity” and “simulation” models mark a difference of degree, not a difference of kind, and that there is a continuous spectrum of models between them. Nonetheless, rather than thinking that there is one “best” scientific model in this spectrum that is simultaneously optimal for prediction and explanation, geomorphologists instead seem to embrace what I have termed a “division of cognitive labor” among models, routinely employing different models of the same phenomenon to achieve different epistemic ends.

Turning finally to the issue of robustness, there were two junctures at which geomorphologists were deploying robustness analyses in these examples. The first was in the context of so-called “sensitivity experiments,” which were used to determine whether the effect in the model was a robust result of the mechanism(s) hypothesized, or whether it was an artifact, depending sensitively on the values of the parameters. Such sensitivity experiments can be thought of as revealing a kind of representational robustness: the phenomenon of interest arises robustly from the mechanism(s) represented in the model, and does not depend on the other idealizations or the particular way in which it is represented in the model.

It is noteworthy that even Orzack and Sober grant the utility of this sort of robustness analysis. They write,

So far we have considered robustness to be a property . . . across models. It is also worth considering the concept as it applies within a single model. A numerical prediction of a model is said to be robust if its value does not depend much (or at all) on variation in the value of the input parameters. . . . This type of ‘internal robustness’ is meaningful and can be very useful. (Orzack and Sober 1993, p. 540)

Orzack and Sober go on to warn that such internal robustness is “no sure sign of truth”, but in the geomorphology examples we considered, it was never meant to be. As Michael Weisberg argues in his defense of Levins, “Levins was not offering an alternative to empirical confirmation; rather, he was explaining a procedure used in conjunction with empirical confirmation in situations where one is relying on highly idealized models” (Weisberg 2006b, p. 642). Indeed, this is the way geomorphologists were using robustness analyses at the second juncture: not in isolation, but as a way to help compare the predictions of the reduced complexity model to nature.

As we saw, one of the challenges facing reduced complexity models is that they are often designed for the purpose of uncovering explanatory mechanisms, not for producing quantitatively accurate predictions. Hence, it is typically not appropriate to test them by a brute comparison of their quantitative predictions with observations. The explanatory mechanisms identified in the model can be the correct fundamental mechanisms operating in nature, even if the model fails to provide quantitatively accurate predictions. Hence, robustness analyses also play an important role at this second stage of identifying those qualitative predictions or trends in the model that can appropriately be compared with observations. Thus robustness analyses, while not themselves a direct form of confirmation, can be an important step in the extended process of validating highly idealized scientific models.⁴

Notes

- 1.

- 2.

The term ‘simulation’ is meant here in the sense of imitating the processes in the real-world system as closely as possible, not in the sense of a model run as a computer simulation. This choice of term is somewhat unfortunate in that both the “simulation” models and the rival “reduced-complexity” models are run as computer simulations.

- 3.

A full discussion of what distinguishes a good explanation from a poor one is outside the scope of this paper.

- 4.

To be clear, I fully accept the point cogently made by Oreskes et al. (1994) that models can never be “verified” or proven true, and that confirmation is inherently partial. I am using model “validation” in the looser sense of establishing that the model is acceptable for the purposes for which it is being deployed given our best available scientific evidence.

References

Beven, K. (1996). Equifinality and uncertainty in geomorphological modeling. In B. Rhoads & C. Thorn (Eds.), The scientific nature of geomorphology: Proceedings of the 27th Binghamton symposium in geomorphology, September 27–29, 1996 (pp. 289–313). New Jersey: Wiley.

Bokulich, A. (2008). Reexamining the quantum-classical relation: Beyond reductionism and pluralism. Cambridge: Cambridge University Press.

Bokulich, A. (2011). How scientific models can explain. Synthese, 180, 33–45.

Bokulich, A. (2012). Distinguishing explanatory from non-explanatory fictions. Philosophy of Science (Proceedings), 79(5), 725–737.

Coulthard, T., Hicks, D., & Van De Wiel, M. (2007). Cellular modeling of river catchments and reaches: Advantages, limitations, and prospects. Geomorphology, 90, 192–207.

Giere, R. (2001). The nature and function of models. Behavioral and Brain Sciences, 24(6), 1060.

Levins, R. (1966). The strategy of model building in population biology. American Scientist, 54(4), 421–431.

Matthewson, J., & Weisberg, M. (2008). The structure of tradeoffs in model building. Synthese, 170(1), 169–190.

Murray, A. B. (2003). Contrasting the goals, strategies, and predictions associated with simplified numerical models and detailed simulations. In P. Wilcock & R. Iverson (Eds.), Prediction in geomorphology (pp. 151–165). Washington, DC: American Geophysical Union.

Murray, A. B., & Paola, C. (1994). A cellular model of braided rivers. Nature, 371, 54–57.

Murray, A. B., & Paola, C. (2003). Modeling the effect of vegetation on channel pattern in bedload rivers. Earth Surface Processes and Landforms, 28, 131–143.

Murray, A. B., & Reydellet, G. (2001). A rip current model based on a hypothesized wave/current interaction. Journal of Coastal Research, 17(3), 517–530.

Murray, A. B., LeBars, M., & Guillon, C. (2003). Tests of a new hypothesis for non-bathymetrically driven rip currents. Journal of Coastal Research, 19(2), 269–277.

Nicholas, A. (2010). Reduced-complexity modeling of free bar morphodynamics in alluvial channels. Journal of Geophysical Research, 115, F04021.

Nicholas, A., & Quine, T. (2007). Crossing the divide: Representation of channels and processes in reduced-complexity river models at reach and landscape scales. Geomorphology, 90, 318–339.

Oreskes, N., Shrader-Frechette, K., & Belitz, K. (1994). Verification, validation, and confirmation of numerical models in the earth sciences. Science, 263, 641–646.

Orzack, S., & Sober, E. (1993). A critical assessment of Levins’s The strategy of model building in population biology (1966). The Quarterly Review of Biology, 68(4), 533–546.

Paola, C. (2001). Modeling stream braiding over a range of scales. In M. Mosley (Ed.), Gravel-bed rivers V (pp. 11–38). Wellington: New Zealand Hydrological Society.

Weisberg, M. (2006a). Robustness analysis. Philosophy of Science, 73, 730–742.

Weisberg, M. (2006b). Forty years of ‘The strategy’: Levins on model building and idealization. Biology and Philosophy, 21(5), 623–645.

Werner, B. T. (1999). Complexity in natural landform patterns. Science, 284, 102–104.

Wolfram, S. (1984). Universality and complexity in cellular automata. Physica D, 10, 1–35.

Acknowledgement

I would like to express my deep gratitude to Brad Murray for stimulating discussions about these issues and for generously sharing his expertise with me.

I am also grateful to the National Science Foundation, grant 1230676, for their support of this research.

Copyright information

© 2013 Springer International Publishing Switzerland

About this paper

Cite this paper

Bokulich, A. (2013). Explanatory Models Versus Predictive Models: Reduced Complexity Modeling in Geomorphology. In: Karakostas, V., Dieks, D. (eds) EPSA11 Perspectives and Foundational Problems in Philosophy of Science. The European Philosophy of Science Association Proceedings, vol 2. Springer, Cham. https://doi.org/10.1007/978-3-319-01306-0_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-01305-3

Online ISBN: 978-3-319-01306-0

eBook Packages: Humanities, Social Sciences and Law; Philosophy and Religion (R0)